This browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

Language and voice support for the Speech service

- 5 contributors

The following tables summarize language support for speech to text , text to speech , pronunciation assessment , speech translation , speaker recognition , and more service features.

You can also get a list of locales and voices supported for each specific region or endpoint via:

- Speech to text REST API

- Speech to text REST API for short audio

- Text to speech REST API

Supported languages

Language support varies by Speech service functionality.

See Speech Containers and Embedded Speech separately for their supported languages.

Choose a Speech feature

- Speech to text

- Text to speech

- Pronunciation assessment

- Speech translation

- Language identification

- Speaker recognition

- Custom keyword

- Intent Recognition

The table in this section summarizes the locales supported for Speech to text. See the table footnotes for more details.

More remarks for Speech to text locales are included in the custom speech section of this article.

Try out the real-time speech to text tool without having to use any code.

1 The model is bilingual and also supports English.

Custom speech

To improve Speech to text recognition accuracy, customization is available for some languages and base models. Depending on the locale, you can upload audio + human-labeled transcripts, plain text, structured text, and pronunciation data. By default, plain text customization is supported for all available base models. To learn more about customization, see custom speech .

These are the locales that support the display text format feature : da-DK, de-DE, en-AU, en-CA, en-GB, en-HK, en-IE, en-IN, en-NG, en-NZ, en-PH, en-SG, en-US, es-ES, es-MX, fi-FI, fr-CA, fr-FR, hi-IN, it-IT, ja-JP, ko-KR, nb-NO, nl-NL, pl-PL, pt-BR, pt-PT, sv-SE, tr-TR, zh-CN, zh-HK.

The table in this section summarizes the locales and voices supported for Text to speech. See the table footnotes for more details.

More remarks for text to speech locales are included in the voice styles and roles , prebuilt neural voices , Custom neural voice , and personal voice sections in this article.

Check the Voice Gallery and determine the right voice for your business needs.

1 The neural voice is available in public preview. Voices and styles in public preview are only available in these service regions : East US, West Europe, and Southeast Asia.

2 Phonemes , custom lexicon , and visemes aren't supported. For details about supported visemes, see viseme locales .

3 The neural voice is a multilingual voice in Azure AI Speech.

4 The OpenAI text to speech voices in Azure AI Speech are in public preview and only available in North Central US ( northcentralus ) and Sweden Central ( swedencentral ).

Multilingual voices

Multilingual voices can support more languages. This expansion enhances your ability to express content in various languages, to overcome language barriers and foster a more inclusive global communication environment.

Use this table to understand all supported speaking languages for each multilingual neural voice. If the voice doesn’t speak the language of the input text, the Speech service doesn’t output synthesized audio. The table is sorted by the number of supported languages in descending order. The primary locale for each voice is indicated by the prefix in its name, such as the voice en-US-AndrewMultilingualNeural , its primary locale is en-US .

2 The neural voice is a multilingual voice in Azure AI Speech. All multilingual voices can speak in the language in default locale of the input text without using SSML . However, you can still use the <lang xml:lang> element to adjust the speaking accent of each language to set preferred accent such as British accent ( en-GB ) for English. Check the full list of supported locales through SSML.

3 The OpenAI text to speech voices in Azure AI Speech are in public preview and only available in North Central US ( northcentralus ) and Sweden Central ( swedencentral ). Locales not listed for OpenAI voices aren't supported by design.

Voice styles and roles

In some cases, you can adjust the speaking style to express different emotions like cheerfulness, empathy, and calm. All prebuilt voices with speaking styles and multi-style custom voices support style degree adjustment. You can optimize the voice for different scenarios like customer service, newscast, and voice assistant. With roles, the same voice can act as a different age and gender.

To learn how you can configure and adjust neural voice styles and roles, see Speech Synthesis Markup Language .

Use the following table to determine supported styles and roles for each neural voice.

1 The neural voice is available in public preview. Voices and styles in public preview are only available in three service regions : East US, West Europe, and Southeast Asia.

2 The advertisement-upbeat style for this voice is in preview and only available in three service regions: East US, West Europe, and Southeast Asia.

3 The sports-commentary style for this voice is in preview and only available in three service regions: East US, West Europe, and Southeast Asia.

4 The sports-commentary-excited style for this voice is in preview and only available in three service regions: East US, West Europe, and Southeast Asia.

This table lists all the locales supported for Viseme . For more information about Viseme, see Get facial position with viseme and Viseme element .

Prebuilt neural voices

Each prebuilt neural voice supports a specific language and dialect, identified by locale. You can try the demo and hear the voices in the Voice Gallery .

Pricing varies for Prebuilt Neural Voice (see Neural on the pricing page) and custom neural voice (see Custom Neural on the pricing page). For more information, see the Pricing page.

Each prebuilt neural voice model is available at 24kHz and high-fidelity 48kHz. Other sample rates can be obtained through upsampling or downsampling when synthesizing.

Note that the following neural voices are retired.

- The English (United Kingdom) voice en-GB-MiaNeural is retired on October 30, 2021. All service requests to en-GB-MiaNeural will be redirected to en-GB-SoniaNeural automatically as of October 30, 2021. If you're using container Neural TTS, download and deploy the latest version. All requests with previous versions won't succeed starting from October 30, 2021.

- The en-US-JessaNeural voice is retired and replaced by en-US-AriaNeural . If you were using "Jessa" before, convert to "Aria."

- The Chinese (Mandarin, Simplified) voice zh-CN-XiaoxuanNeural is retired on Feburary 29, 2024. All service requests to zh-CN-XiaoxuanNeural will be redirected to zh-CN-XiaoyiNeural automatically as of Feburary 29, 2024. If you're using container Neural TTS, download and deploy the latest version. All requests with previous versions won't succeed starting from Feburary 29, 2024.

Custom neural voice

Custom neural voice lets you create synthetic voices that are rich in speaking styles. You can create a unique brand voice in multiple languages and styles by using a small set of recording data. Multi-style custom neural voices support style degree adjustment. There are two custom neural voice (CNV) project types: CNV Pro and CNV Lite (preview).

Select the right locale that matches your training data to train a custom neural voice model. For example, if the recording data is spoken in English with a British accent, select en-GB .

With the cross-lingual feature, you can transfer your custom neural voice model to speak a second language. For example, with the zh-CN data, you can create a voice that speaks en-AU or any of the languages with Cross-lingual support. For the cross-lingual feature, we categorize locales into two tiers: one includes source languages that support the cross-lingual feature, and the other tier comprises locales designated as target languages for cross-lingual transfer. Within the following table, distinguish locales that function as both cross-lingual sources and targets and locales eligible solely as the target locale for cross-lingual transfer.

Personal voice

Personal voice is a feature that lets you create a voice that sounds like you or your users. The following table summarizes the locales supported for personal voice.

The table in this section summarizes the 27 locales supported for pronunciation assessment, and each language is available on all Speech to text regions . Latest update extends support from English to 26 more languages and quality enhancements to existing features, including accuracy, fluency and miscue assessment. You should specify the language that you're learning or practicing improving pronunciation. The default language is set as en-US . If you know your target learning language, set the locale accordingly. For example, if you're learning British English, you should specify the language as en-GB . If you're teaching a broader language, such as Spanish, and are uncertain about which locale to select, you can run various accent models ( es-ES , es-MX ) to determine the one that achieves the highest score to suit your specific scenario.

1 The language is in public preview for pronunciation assessment.

The table in this section summarizes the locales supported for Speech translation. Speech translation supports different languages for speech to speech and speech to text translation. The available target languages depend on whether the translation target is speech or text.

Translate from language

To set the input speech recognition language, specify the full locale with a dash ( - ) separator. See the speech to text language table . All languages are supported except jv-ID and wuu-CN . The default language is en-US if you don't specify a language.

Translate to text language

To set the translation target language, with few exceptions you only specify the language code that precedes the locale dash ( - ) separator. For example, use es for Spanish (Spain) instead of es-ES . See the speech translation target language table below. The default language is en if you don't specify a language.

The table in this section summarizes the locales supported for Language identification .

Language Identification compares speech at the language level, such as English and German. Do not include multiple locales of the same language in your candidate list.

The table in this section summarizes the locales supported for Speaker recognition. Speaker recognition is mostly language agnostic. The universal model for text-independent speaker recognition combines various data sources from multiple languages. We tuned and evaluated the model on these languages and locales. For more information on speaker recognition, see the overview .

The table in this section summarizes the locales supported for custom keyword and keyword verification.

The table in this section summarizes the locales supported for the Intent Recognizer Pattern Matcher.

- Region support

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback .

Submit and view feedback for

Additional resources

- Español – América Latina

- Português – Brasil

- Cloud Speech-to-Text

- Documentation

Speech-to-Text supported languages

This page lists all languages supported by Cloud Speech-to-Text. Language is specified within a recognition request's languageCode parameter. For more information about sending a recognition request and specifying the language of the transcription, see the how-to guides about performing speech recognition. For more information about the class tokens available for each language, see the class tokens page .

Try it for yourself

If you're new to Google Cloud, create an account to evaluate how Speech-to-Text performs in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

The table below lists the models available for each language. Cloud Speech-to-Text offers multiple recognition models , each tuned to different audio types. The default and command_and_search recognition models support all available languages. The command_and_search model is optimized for short audio clips, such as voice commands or voice searches. The default model can be used to transcribe any audio type.

Some languages are supported by additional models, optimized for additional audio types: enhanced phone_call , and enhanced video . These models can recognize speech captured from these audio sources more accurately than the default model. See the enhanced models page for more information. If any of these additional models are available for your language, they will be listed with the default and command_and_search models for your language. If only the default and command_and_search models are listed with your language, no additional models are currently available.

Use only the language codes shown in the following table. The following language codes are officially maintained and monitored externally by Google. Using other language codes can result in breaking changes.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License , and code samples are licensed under the Apache 2.0 License . For details, see the Google Developers Site Policies . Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2024-03-26 UTC.

Search code, repositories, users, issues, pull requests...

Provide feedback.

We read every piece of feedback, and take your input very seriously.

Saved searches

Use saved searches to filter your results more quickly.

To see all available qualifiers, see our documentation .

- Notifications

🐸💬 - a deep learning toolkit for Text-to-Speech, battle-tested in research and production

coqui-ai/TTS

Code of conduct, releases 98, contributors 149.

- Python 92.0%

- Jupyter Notebook 7.5%

- Makefile 0.1%

- Cython 0.0%

IBM Watson Text to Speech is an API cloud service that enables you to convert written text into natural-sounding audio in a variety of languages and voices within an existing application or within watsonx Assistant . Give your brand a voice and improve customer experience and engagement by interacting with users in their native language. Increase accessibility for users with different abilities, provide audio options to avoid distracted driving, or automate customer service interactions to eliminate hold times.

IBM Watson Text to Speech is now available as a containerized library for IBM partners to embed AI technology in their commercial applications.

Help all customers comprehend your message by translating written text to audio.

Solve customer issues faster by providing key information in their native language.

Enjoy the security of IBM’s world-class data governance practices.

Built to support global languages and deployable on any cloud—public, private, hybrid, multicloud, or on-premises.

Provide multilingual, natural-sounding support.

Create a branded voice with Premium.

Benefit from IBM Research in AI and machine learning.

Benefit from our deep neural networks trained on human speech to automatically produce smooth and natural sounding voice quality.

Design your own unique branded neural voice modeled after your chosen speaker using as little as one hour of recordings. Premium feature.

Easily adjust pronunciation, volume, pitch, speed and other attributes using Speech Synthesis Markup Language.

Clarify the pronunciation of unusual words with the help of IPA or the IBM SPR.

Control tone of voice by choosing a specific speaking style: GoodNews, Apology, and Uncertainty.

Personalize voice quality by specifying attributes such as strength, pitch, breathiness, rate, timbre, and more.

Accelerate your business growth as an Independent Software Vendor (ISV) by innovating with IBM. Partner with us to deliver enhanced commercial solutions embedded with AI to better address clients’ needs.

Build AI-based solutions faster with IBM embeddable AI

CodeObjects eliminates hold times by eliminating policyholder requests and transactions.

Get started for free or explore a demo

Everything you need to get started. Use 10,000 characters per month at no cost.

As low as USD 0.02 per thousand characters

Ideal for businesses. Gain unlimited characters, high-value features and guaranteed uptime.

Contact us for pricing

Provides large and security-sensitive firms with more capacity and data protection. The Premium version includes custom-branded neural voice and a 99.9% high availability and service level uptime guarantee.

Deploy Anywhere

Deploy behind your firewall or on any cloud with the flexibility of IBM Cloud Pak for Data . The Deploy Anywhere version includes unlimited characters per month, 35 neural voices, and 16 supported languages and dialects.

Leverage our enhanced security features to ensure that your data is isolated and encrypted end-to-end while in transit and at rest.

The Watson SDK repository in GitHub.

See documentation about our enhanced security features that ensure your data is isolated and encrypted end-to-end, while in transit and at rest.

Neural Voices improve customer experience with a clear, crisp, natural sound, powered by deep neural networks.

Use the text-to-speech service to convert incoming text from watsonx Assistant to a voice response for the user to hear over the phone.

Walk through the steps to install a customizable text-to-speech service in Red Hat OpenShift

Transform voice into written text with powerful machine learning technology.

Solve customer issues the first time using an AI virtual assistant across any application, device, or channel.

Infuse powerful natural language AI into commercial applications with a containerized library designed to empower IBM partners with greater flexibility.

See Watson Text to Speech capabilities in action.

Equipping machines with the ability to recognize and produce speech can make information accessible to many more people, including those who rely entirely on voice to access information. However, producing good-quality machine learning models for these tasks requires large amounts of labeled data — in this case, many thousands of hours of audio, along with transcriptions. For most languages, this data simply does not exist. For example, existing speech recognition models only cover approximately 100 languages — a fraction of the 7,000+ known languages spoken on the planet. Even more concerning, nearly half of these languages are in danger of disappearing in our lifetime.

RECOMMENDED READS

- Meta’s new AI-powered translation system for Hokkien pioneers new approach for unwritten languages

- MuAViC: The first audio-video speech translation benchmark

- Advancing direct speech-to-speech modeling with discrete units

In the Massively Multilingual Speech (MMS) project, we overcome some of these challenges by combining wav2vec 2.0 , our pioneering work in self-supervised learning, and a new dataset that provides labeled data for over 1,100 languages and unlabeled data for nearly 4,000 languages. Some of these, such as the Tatuyo language, have only a few hundred speakers, and for most of these languages, no prior speech technology exists. Our results show that the Massively Multilingual Speech models outperform existing models and cover 10 times as many languages. Meta is focused on multilinguality in general: For text, the NLLB project scaled multilingual translation to 200 languages , and the Massively Multilingual Speech project scales speech technology to many more languages.

Today, we are publicly sharing our models and code so that others in the research community can build upon our work. Through this work, we hope to make a small contribution to preserve the incredible language diversity of the world.

Our approach

Collecting audio data for thousands of languages was our first challenge because the largest existing speech datasets cover at most 100 languages. To overcome it, we turned to religious texts, such as the Bible, that have been translated in many different languages and whose translations have been widely studied for text-based language translation research. These translations have publicly available audio recordings of people reading these texts in different languages. As part of this project, we created a dataset of readings of the New Testament in over 1,100 languages, which provided on average 32 hours of data per language.

By considering unlabeled recordings of various other Christian religious readings, we increased the number of languages available to over 4,000. While this data is from a specific domain and is often read by male speakers, our analysis shows that our models perform equally well for male and female voices. And while the content of the audio recordings is religious, our analysis shows that this does not overly bias the model to produce more religious language. We believe this is because we use a Connectionist Temporal Classification approach, which is far more constrained compared with large language models (LLMs) or sequence to-sequence models for speech recognition.

We preprocessed the data to improve quality and to make it usable by our machine learning algorithms. To do so, we trained an alignment model on existing data in over 100 languages and used this model together with an efficient forced alignment algorithm that can process very long recordings of about 20 minutes or more. We applied multiple rounds of this process and performed a final cross-validation filtering step based on model accuracy to remove potentially misaligned data. To enable other researchers to create new speech datasets, we added the alignment algorithm to PyTorch and released the alignment model.

Thirty-two hours of data per language is not enough to train conventional supervised speech recognition models. This is why we built on wav2vec 2.0 , our prior work on self-supervised speech representation learning, which greatly reduced the amount of labeled data needed to train good systems. Concretely, we trained self-supervised models on about 500,000 hours of speech data in over 1,400 languages — this is nearly five times more languages than any known prior work. The resulting models were then fine-tuned for a specific speech task, such as multilingual speech recognition or language identification.

To get a better understanding of how well models trained on the Massively Multilingual Speech data perform, we evaluated them on existing benchmark datasets, such as FLEURS .

We trained multilingual speech recognition models on over 1,100 languages using a 1B parameter wav2vec 2.0 model. As the number of languages increases, performance does decrease, but only very slightly: Moving from 61 to 1,107 languages increases the character error rate by only about 0.4 percent but increases the language coverage by over 18 times.

In a like-for-like comparison with OpenAI’s Whisper , we found that models trained on the Massively Multilingual Speech data achieve half the word error rate, but Massively Multilingual Speech covers 11 times more languages. This demonstrates that our model can perform very well compared with the best current speech models.

Next, we trained a language identification (LID) model for over 4,000 languages using our datasets as well as existing datasets, such as FLEURS and CommonVoice, and evaluated it on the FLEURS LID task. It turns out that supporting 40 times the number languages still results in very good performance.

We also built text-to-speech systems for over 1,100 languages. Current text-to-speech models are typically trained on speech corpora that contain only a single speaker. A limitation of the Massively Multilingual Speech data is that it contains relatively few different speakers for many languages, and often only a single speaker. However, this is an advantage for building text-to-speech systems, and so we trained such systems for over 1,100 languages. We found that the speech produced by these systems is of good quality, as the examples below show.

We are encouraged by our results, but as with all new AI technologies, our models aren’t perfect. For example, there is some risk that the speech-to-text model may mistranscribe select words or phrases. Depending on the output, this could result in offensive and/or inaccurate language. We continue to believe that collaboration across the AI community is critical to the responsible development of AI technologies.

Toward a single speech model supporting thousands of languages

Many of the world’s languages are in danger of disappearing, and the limitations of current speech recognition and speech generation technology will only accelerate this trend. We envision a world where technology has the opposite effect, encouraging people to keep their languages alive since they can access information and use technology by speaking in their preferred language.

The Massively Multilingual Speech project presents a significant step forward in this direction. In the future, we want to increase the language coverage to support even more languages, and also tackle the challenge of handling dialects, which is often difficult for existing speech technology. Our goal is to make it easier for people to access information and to use devices in their preferred language. There are also many concrete use cases for speech technology — such as VR/AR technology — which can be used in a person’s preferred language - to messaging services that can understand everyone’s voice.

We also envision a future where a single model can solve several speech tasks for all languages. While we trained separate models for speech recognition, speech synthesis, and language identification, we believe that in the future, a single model will be able to accomplish all these tasks and more, leading to better overall performance.

This blog post was made possible by the work of Vineel Pratap, Andros Tjandra, Bowen Shi, Paden Tomasello, Arun Babu, Ali Elkahky, Zhaoheng Ni, Sayani Kundu, Maryam Fazel-Zarandi, Apoorv Vyas, Alexei Baevski, Yossef Adi, Xiaohui Zhang, Wei-Ning Hsu, Alexis Conneau, and Michael Auli.

Our latest updates delivered to your inbox

Subscribe to our newsletter to keep up with Meta AI news, events, research breakthroughs, and more.

Join us in the pursuit of what’s possible with AI.

Latest Work

Our Actions

Meta © 2024

Lifelike Text to Speech for Your Users

Make your content and products more engaging with our digital voice solutions

Select your options below to hear samples of ReadSpeaker's TTS voices

Apologies. You've reached the demo usage limit.

We've limited the number of sessions. Please request a full dynamic demo.

Request a full demo

Terms of Service - This demo is for evaluation purpose only; commercial use is strictly forbidden. No static audio files may be produced, downloaded, or distributed. The background music in the voice demo is not included with the purchased product.

Benefits of Text to Speech

Text to speech enables brands, companies, and organizations to deliver enhanced end-user experience, while minimizing costs. Whether you’re developing services for website visitors, mobile app users, online learners, subscribers or consumers, text to speech allows you to respond to the different needs and desires of each user in terms of how they interact with your services, applications, devices, and content.

See All Benefits of Text to Speech

TTS gives access to your content to a greater population, such as those with literacy difficulties, learning disabilities, reduced vision and those learning a language. It also opens doors to anyone else looking for easier ways to access digital content.

If flawless customer experience is at the heart of your business DNA, high-quality TTS voices or exclusive custom voices are both highly effective approaches to increasing your visibility in the voice user interface. TTS helps to enhance the customer journey across different touchpoints, fostering loyalty and setting your company apart from competitors.

Integrators and developers building services, apps, and devices across markets and verticals (e.g. telecoms, utilities, manufacturing, OEM, finance, etc.), benefit from adding speech output to services and applications. Text to speech enables a wider-reaching, more consumer-oriented end-user experience, helping reduce costs and increasing automation while providing personalized customer interactions.

ReadSpeaker is leading the way in text to speech.

ReadSpeaker offers a range of powerful text-to-speech solutions for instantly deploying lifelike, tailored voice interaction in any environment.

With more than 20 years’ experience, ReadSpeaker is “Pioneering Voice Technology” .

customers worldwide

market-leading own-brand voices

voices in 50 languages available in our SaaS solutions

countries with a local office

ReadSpeaker’s Blog

ReadSpeaker’s blog covers a wide variety of topics related to online and offline text to speech, mobile, and web accessibility.

ReadSpeaker’s industry-leading voice expertise leveraged by leading Italian newspaper to enhance the reader experience Milan, Italy. – 19 October, 2023 – ReadSpeaker, the most trusted,…

Accessibility overlays have gotten a lot of bad press, much of it deserved. So what can you do to improve web accessibility? Find out here.

Struggling to produce a worthwhile voice over for your podcast? One (or more!) of these three production methods is sure to work for you.

Learn everything you need to know about voice overs for e-learning content. Our FAQ has everything, including expert tips!

Learn how the STEM Olympiades made STEM assessments inclusive and accessible with text to speech.

Edtech is changing the way we run assessments in education. How do we get the benefit for all of our students equally? Learn from the experts.

- ReadSpeaker webReader

- ReadSpeaker docReader

- ReadSpeaker TextAid

- Assessments

- Text to Speech for K12

- Higher Education

- Corporate Learning

- Learning Management Systems

- Custom Text-To-Speech (TTS) Voices

- Voice Cloning Software

- Text-To-Speech (TTS) Voices

- ReadSpeaker speechMaker Desktop

- ReadSpeaker speechMaker

- ReadSpeaker speechCloud API

- ReadSpeaker speechEngine SAPI

- ReadSpeaker speechServer

- ReadSpeaker speechServer MRCP

- ReadSpeaker speechEngine SDK

- ReadSpeaker speechEngine SDK Embedded

- Accessibility

- Automotive Applications

- Conversational AI

- Entertainment

- Experiential Marketing

- Guidance & Navigation

- Smart Home Devices

- Transportation

- Virtual Assistant Persona

- Voice Commerce

- Customer Stories & e-Books

- About ReadSpeaker

- TTS Languages and Voices

- The Top 10 Benefits of Text to Speech for Businesses

- Learning Library

- e-Learning Voices: Text to Speech or Voice Actors?

- TTS Talks & Webinars

Make your products more engaging with our voice solutions.

- Solutions ReadSpeaker Online ReadSpeaker webReader ReadSpeaker docReader ReadSpeaker TextAid ReadSpeaker Learning Education Assessments Text to Speech for K12 Higher Education Corporate Learning Learning Management Systems ReadSpeaker Enterprise AI Voice Generator Custom Text-To-Speech (TTS) Voices Voice Cloning Software Text-To-Speech (TTS) Voices ReadSpeaker speechCloud API ReadSpeaker speechEngine SAPI ReadSpeaker speechServer ReadSpeaker speechServer MRCP ReadSpeaker speechEngine SDK ReadSpeaker speechEngine SDK Embedded

- Applications Accessibility Automotive Applications Conversational AI Education Entertainment Experiential Marketing Fintech Gaming Government Guidance & Navigation Healthcare Media Publishing Smart Home Devices Transportation Virtual Assistant Persona Voice Commerce

- Resources Resources TTS Languages and Voices Learning Library TTS Talks and Webinars About ReadSpeaker Careers Support Blog The Top 10 Benefits of Text to Speech for Businesses e-Learning Voices: Text to Speech or Voice Actors?

- Get started

Search on ReadSpeaker.com ...

All languages.

- Norsk Bokmål

- Latviešu valoda

Generative Voice AI

Convert text to speech online for free with our AI voice generator. Create natural AI voices instantly in any language - perfect for video creators, developers, and businesses.

Click on a language to convert text to speech :

Natural Text to Speech & AI Voice Generator

Whether you're a content creator or a short story writer, our AI voice generator lets you design captivating audio experiences.

Stories with emotions

Immerse your players in rich, dynamic worlds with our AI voice generator. From captivating NPC dialogue to real-time narration, our tool brings your game’s audio to the next level.

Immersive gaming

Bring stories to life by converting long-form content to engaging audio. Our AI voice generator lets you create audiobooks with a natural voice and tone, making it the perfect tool for authors and publishers.

Every book deserves to be heard

Ai chatbots.

Create a more natural and engaging experience for your users with our AI voice generator. Our tool lets you create AI chatbots with human-like voices.

AI assistants with personality

Experience advanced ai text to speech.

Generate lifelike speech in any language and voice with the most powerful text to speech (TTS) technology that combines advanced AI with emotive capabilities.

Indistinguishable from Human Speech.

Turn text into lifelike audio across 29 languages and 120 voices. Ideal for digital creators, get high-quality TTS streaming instantly.

Precision Tuning.

Adjust voice outputs effortlessly through an intuitive interface. Opt for a blend of vocal clarity and stability, or amplify vocal stylings for more animated delivery.

Online Text Reader.

Use our deep learning-powered tool to read any text aloud, from brief emails to full PDFs, while cutting costs and time.

AI Voice Generator in 29 Languages

Generate ai voices with voicelab.

Create new and unique synthetic voices in minutes using advanced Generative AI technology. Create lifelike voices to use in videos, podcasts, audiobooks, and more.

Clone Your Voice

Create a digital voice that sounds like a real human. Whether you're a content creator or a short story writer, our AI voice generator lets you design captivating audio experiences.

Find Voices

Share the unique synthetic voices you've created with our vibrant community and discover voices crafted by others, opening a world of auditory opportunity.

Multiple languages.

Clone your voice from a recording in one language and use it to generate speech in another.

Instant Results.

Generate new voices in seconds, not hours with our state-of-the-art AI voice generator.

Find the perfect voice for any project; be it a video, audiobook, video game or blog.

Dubbing Studio

Localize videos with precise control over transcript, translation, timing, and more. Create a perfect voiceover in any language, with any voice, in minutes. Explore AI Dubbing

Transcript editing.

Manually edit the dialogue of your translated script to get the perfect audio output.

Sequence timing.

Change the speaker’s timing by clicking and dragging the clips.

Adjust voice settings.

Click on the gear icon next to a speaker’s name to open more voice options.

Add more languages.

When you’re ready to add more languages, hit the “+” icon to instantly translate your script.

Change Your Voice With Speech To Speech

Edit and fine-tune your voiceovers using Speech to Speech. Get consistent, clear results that keep the feel and nuance of your original message. Change your voice

Emotional Range

Maintain the exact emotions of your content with our diverse range of voice profiles.

Nuance Preservation

Ensure that every inflection, pause and modulation is captured and reproduced perfectly.

Consistent Quality

Use Speech to Speech to create complex audio sequences with consistent quality.

Long-form voice generation with Projects

Our innovative workflow for directing and editing audio, providing you with complete control over the creative process for the production of audiobooks, long-form video and web content. Learn more about Projects

Conversion of whole books.

Import in a variety of formats, including .epub, .txt, and .pdf, and convert entire books into audio.

Text-inputted pauses.

Manually adjust the length of pauses between speech segments to fine-tune pacing.

Multiple languages and voices.

Choose from a wide range of languages and voices to create the perfect audio experience.

Regenerate selected fragments

Recreate specific audio fragments if you're not satisfied with the output.

Save progress.

Save your progress and return to your project at any time.

Single click conversion.

Convert your written masterpieces into captivating audiobooks, reaching listeners on the go.

Powered by cutting-edge research

Introducing Dubbing Studio

Introducing Speech to Speech

Turbo v2: Our Fastest Model Yet

Frequently asked questions, how do i make my own ai voice.

To create your own AI voice at ElevenLabs, you can use VoiceLab. Voice Design allows you to customize the speaker's identityfor unique voices in your scripts, while Voice Cloning mimics real voices. This ensures variety and exclusivity in your generated voices, as they are entirely artificial and not linked to real people.

How much does using ElevenLabs AI voice generator cost?

ElevenLabs provides a range of AI voice generation plans suitable for various needs. Starting with a Free Plan, which includes 10,000 characters monthly, up to 3 custom voices, Voice Design, and speech generation in 29 languages. The Starter Plan is $5 per month, offering 30,000 characters and up to 10 custom voices. For more extensive needs, the Creator Plan at $22 per month provides 100,000 characters and up to 30 custom voices. The Pro Plan costs $99 per month with a substantial 500,000 characters and up to 160 custom voices. Larger businesses can opt for the Scale Plan at $330 per month, which includes 2,000,000 characters and up to 660 custom voices. Lastly, the Enterprise Plan offers custom pricing for tailored quotas, PVC for any voice, priority rendering, and dedicated support. Each plan is crafted to support different levels of usage and customization requirements.

Can I use ElevenLabs AI voice generator for free?

Yes, you can use ElevenLabs prime AI voice generator for free with our Free Plan. It includes 10,000 characters per month, up to 3 custom voices, Voice Design, and speech generation in 29 languages.

What is the best AI voice generator?

ElevenLabs offers the best and highest quality AI voice generator software online. Our AI voice generator uses advanced deep learning models to provide high-quality audio output, emotion mapping, and a wide range of vocal choices. It's perfect for content creators and writers looking to create captivating audio experiences.

Who should use ElevenLabs’ AI voice generator and prime voice AI services?

ElevenLabs' AI voice generator is ideal for a variety of users, including content creators on YouTube and TikTok, audiobook producers for Audible and Google Play Books, presenters using PowerPoint or Google Docs, businesses with IVR systems, and podcasters on Spotify or Apple Podcasts. These services provide a natural-sounding voice across different platforms, enhancing user engagement and accessibility.

How many languages does ElevenLabs support?

ElevenLabs supports speech synthesis in 29 languages, making your content accessible to a global audience. Supported languages include Chinese, English, Spanish, French, and many more.

What is an AI voice generator?

ElevenLabs' AI voice generator transforms text to spoken audio that sounds like a natural human voice, complete with realistic intonation and accents. It offers a wide range of voice options across various languages and dialects. Designed for ease of use, it caters to both individuals and businesses looking for customizable vocal outputs.

How do I use AI voice generators to turn text into audio?

Step 1 involves selecting a voice and adjusting settings to your liking. In Step 2, you input your text into the provided box, ensuring it's in one of the supported languages. For Step 3, you simply click 'Generate' to convert your text into audio, listen to the output, and make any necessary adjustments. After that, you can download the audio for use in your project.

What is text to speech?

Text to speech is a technology that converts written text into spoken audio. It is also known as speech synthesis or TTS. The technology has been around for decades, but recent advancements in deep learning have made it possible to generate high-quality, natural-sounding speech.

What is the best text to speech software?

ElevenLabs is the best text to speech software. We offer the most advanced AI voices, with the highest quality and most natural-sounding speech. Our platform is easy to use and offers a wide range of customization options.

How much does text to speech cost?

ElevenLabs offers a free plan which includes 10,000 characters per month. Our paid plans start at $1 for 30,000 characters per month.

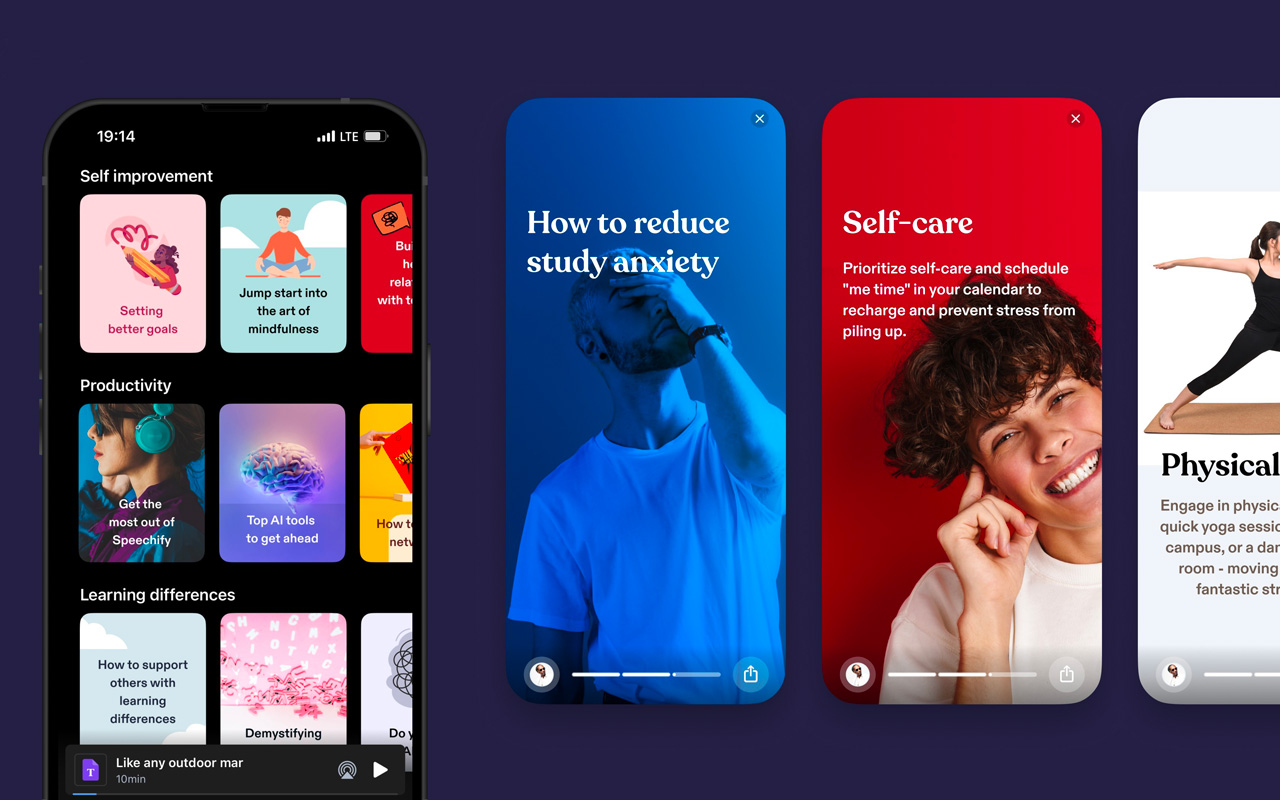

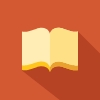

What Speechify languages are available?

Table of contents.

What Speechify languages are available? If you plan on using this incredible TTS app, you should know about available languages and how to choose them.

Speechify is among the best text-to-speech apps you can find today, and it is highly versatile. And one of the many reasons for that is that you can choose different languages and accents.

The app was launched in 2017, and it was developed by Cliff Weitzman, a dyslexic college student.

Languages supported

Speechify offers versatility like no other app. And one of many reasons for it is the number of different languages you can use. Currently, Speechify.com is offering over thirty different languages, as well as various accents.

This includes English, Arabic, Chinese, Czech, Danish, Dutch, Finnish, French, German, Greek, Hebrew, Hindi, Hungarian, Indonesian, Italian, Japanese, Korean, Norwegian Bokmål, Polish, Portuguese, Romanian, Russian, Slovak, Spanish, Swedish, Thai, Turkish, & Ukrainian.

And the majority of these languages come with different voice skins (such as male and female voices), and it is a nice way to explore additional functionalities.

How do I select a different language?

Knowing that Speechify supports numerous languages is great. But you should also know how to pick the languages you want to use. Fortunately, the process is quite simple. The first thing you will need to do is open the document you want to listen to.

Once you find it in the Speechify library and open it, you can click on the head icon, and you will see that language is one of the available options. Just pick the one you want to use, and press “done.” It is as simple as that.

Voice selection

The next thing on the list is an option to choose different voices or voice skins. Speechify allows you to further customize the app, and pick one of many available voices. And in the majority of cases, you will get to choose between male and female voices.

You can also use celebrity voices such as Gwyneth Paltrow and Snoop Dogg, which will make the entire experience even more fun. Currently, Speechify has over 130 different AI voices you can use.

Learning a new language with Speechify

One of the many things you can do with Speechify is to start learning a new language. Speechify is an excellent choice for eLearning, and with new languages, you will be able to hear proper pronunciations.

The app can easily convert the text to audio files, and all you need to do is open the page in the app, and it will read aloud any text you want. And thanks to so many options, you will be able to improve your language skills in no time.

Best text to speech option with multiple languages

If you are interested in using TTS apps for learning a new language, there are plenty of different options you can find today. And each mobile app offers new unique features you can explore.

And if you want the best option, you can check one of the following apps. It is also possible to adjust the reading speed, which will allow you to go through the content even faster.

Amazon Polly

Those that are familiar with Amazon Polly are well aware of why this app is on the list. The reason why it is such a good option for learning a new language is that it already serves a similar purpose.

Amazon Polly is used by Duolingo, which is one of the biggest language-learning platforms today. The only downside with Polly is that it is unavailable as a standalone app, and you will need to use the API that is a part of Amazon Web Services.

Murf is a common option for content creators and those looking to add narration and voiceovers to their videos with ease. Murf offers natural-sounding speech voices and supports different languages, and there are several subscription plans you can check out.

And while there is a free option you can use, it is quite limiting. You will get only ten minutes of voice generation for free, and after that, you will need to pick one of the pricing plans. However, the free plan will allow you to see what this app has to offer.

Speechelo is the next TTS app on the list, and it gives you a chance to pick one of several languages and accents. Speechelo is easy to use, and the quality of AI voices is quite good. Naturally, there are several subscription plans you can check out as well.

This will allow you to find a plan that’s suitable for your needs, and it can be quite versatile. There is also a variety of voice skins, and the majority of languages include both male and female voices you can use.

Speechify is one of the best text-to-speech software you can find today. It is easy to use, and it offers multiple human-like voices. The app is designed to improve the accessibility of any device and help people with dyslexia, ADHD , and other reading difficulties or reading disabilities.

The app is available on Windows, Mac, Apple iOS (both iPhone and iPad), and Android, and you can use it as a Google Chrome extension as well. Speechify is an excellent option if you want to focus on learning a new language since the quality is exceptional.

These high-quality voices can rival audiobooks and real human voice actors. You can also use celebrity voices such as Gwyneth Paltrow and Snoop Dogg , and there is an option to order custom voices as well.

Speechify works with PDF, txt, Google Docs, docx, and many other file formats and web pages.

Can TTS read other languages?

Yes. Apps such as Speechify are available in multiple languages, and you will be able to convert nearly any type of text into a digital voice. This will allow you to hear proper pronunciations and improve your language skills with ease.

You can use Speechify, Natural Reader, Amazon Polly, Murf, Speechelo, and many others.

What languages are available on Speechify?

Speechify supports English, Italian, French, Polish, Portuguese, Hindu, Arabic, Russian, Spanish, Norwegian, Finnish, and many others.

What is Speechify?

Speechify is a voice generator or speech synthesis app, and it can convert text into a synthetic voice. This also includes physical pages as well since the Speechify app is equipped with OCR.

- Previous Text to speech software (TTS) computer voice

- Next Text to speech Fandom

Cliff Weitzman

Cliff Weitzman is a dyslexia advocate and the CEO and founder of Speechify, the #1 text-to-speech app in the world, totaling over 100,000 5-star reviews and ranking first place in the App Store for the News & Magazines category. In 2017, Weitzman was named to the Forbes 30 under 30 list for his work making the internet more accessible to people with learning disabilities. Cliff Weitzman has been featured in EdSurge, Inc., PC Mag, Entrepreneur, Mashable, among other leading outlets.

Recent Blogs

Ultimate guide to ElevenLabs

Voice changer for Discord

How to download YouTube audio

Speechify 3.0 is the Best Text to Speech App Yet.

Voice API: Everything You Need to Know

Best text to speech generator apps

The best AI tools other than ChatGPT

Top voice over marketplaces reviewed

Speechify Studio vs. Descript

Everything to Know About Google Cloud Text to Speech API

Source of Joe Biden deepfake revealed after election interference

How to listen to scientific papers

How to add music to CapCut

What is CapCut?

VEED vs. InVideo

Speechify Studio vs. Kapwing

Voices.com vs. Voice123

Voices.com vs. Fiverr Voice Over

Fiverr voice overs vs. Speechify Voice Over Studio

Voices.com vs. Speechify Voice Over Studio

Voice123 vs. Speechify Voice Over Studio

Voice123 vs. Fiverr voice overs

HeyGen vs. Synthesia

Hour One vs. Synthesia

HeyGen vs. Hour One

Speechify makes Google’s Favorite Chrome Extensions of 2023 list

How to Add a Voice Over to Vimeo Video: A Comprehensive Guide

How to Add a Voice Over to Canva Video: A Comprehensive Guide

What is Speech AI: Explained

How to Add a Voice Over to Canva Video

Speechify text to speech helps you save time

Popular blogs.

The Best Celebrity Voice Generators in 2024

YouTube Text to Speech: Elevating Your Video Content with Speechify

The 7 best alternatives to Synthesia.io

Everything you need to know about text to speech on tiktok, the 10 best text-to-speech apps for android.

How to convert a PDF to speech

The top girl voice changers.

How to use Siri text to speech

Obama text to speech.

Robot Voice Generators: The Futuristic Frontier of Audio Creation

PDF Read Aloud: Free & Paid Options

Alternatives to fakeyou text to speech, all about deepfake voices, tiktok voice generator, text to speech goanimate, the best celebrity text to speech voice generators, pdf audio reader, how to get text to speech indian voices, elevating your anime experience with anime voice generators, best text to speech online, top 50 movies based on books you should read, download audio, how to use text-to-speech for quandale dingle meme sounds.

Only available on iPhone and iPad

To access our catalog of 100,000+ audiobooks, you need to use an iOS device.

Coming to Android soon...

Join the waitlist

Enter your email and we will notify you as soon as Speechify Audiobooks is available for you.

You’ve been added to the waitlist. We will notify you as soon as Speechify Audiobooks is available for you.

Find answers to your questions and learn more!

Get lots of tips and advice to get the most from typecast

- Customer Support

- Contact Sales

- June 25, 2023

Need a Voice Actor?

Recommended articles.

How to Use an Android Text to Speech

Hear the Difference: Typecast SSFM Redefines Text-to-Speech

How to Make Explainer Videos With AI Voice Actors

How to Get a Kanye West Text to Speech

The evolution of human society was made possible by language and communication, so it’s reasonable for us to want the same level of advancement for computers. However, we struggle with the massive amounts of language data we encounter daily. If computers could handle large-scale text and voice data with precision, they could revolutionize our lives. Natural Language Processing (NLP) has led to many innovations like Alexa and Siri.

Training a machine model to understand human languages is challenging due to the complexity of languages. In addition, countless nuances, dialects, and regional variations take much work to standardize. The latest breakthrough in natural language processing is Text-to-Speech (TTS) – a form of NLP that can convert written data into audio files with excellent speech quality. This blog post will examine how text-to-speech revolutionizes natural language processing and its applications.

What is natural language processing?

Natural language processing is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence that deals with the ability of computers to understand, interpret, and generate human language. It analyzes large amounts of natural language data to understand how humans communicate. Natural language processing has existed since the early 1990s , but it has become increasingly important as technology advances and more data becomes available.

Natural language processing allows computers to interpret and manipulate human language, making it possible to understand what people are saying or writing and respond accordingly. NLP has become increasingly important due to its potential applications in various fields, like healthcare, finance, and education. In addition, it can be used for AI tools and to automate tasks like chatbots, voice generators , and more.

How does natural language processing work?

NLP analyzes the structure and meaning of natural language to extract useful information from it. NLP also uses syntax to assess and determine the significance of a language based on grammatical rules. Parsing is a syntax technique that involves analyzing the grammar of a sentence.

Using syntax techniques involves breaking down the text into smaller components, such as words or phrases, and then using algorithms to identify patterns in the data. Once these patterns are identified, they can be used to generate output, such as a text-to-speech model or lifelike voices.

What is text-to-speech technology?

Text-to-Speech technology is a type of speech synthesis that transforms written text into spoken words using computer algorithms. It enables machines to communicate with humans in a natural-sounding voice by processing text into synthesized speech. TTS systems typically use a combination of linguistic rules and statistical models to generate synthetic speech.

What is speech synthesis?

Speech synthesis refers to the process of using a computer to produce artificial human speech. It’s a generative model commonly used to convert written text into audio information and is utilized in voice-enabled services and mobile applications.

How do TTS tools work?

Natural language processing helps address these challenges by providing tools for understanding how humans communicate through their choice of words and phrases when speaking or writing. TTS systems can then use this understanding to generate more accurate synthetic speech reflecting the input text’s intended meaning. As a result, TTS technology has become increasingly important in modern communication as it allows machines to interact with humans more effectively than ever before.

Applications of natural language processing

NLP can be applied in various fields, such as sentiment analysis, chatbots, language translation, etc. Here are some examples:

- Sentiment Analysis : This type uses algorithms to analyze text data for sentiment or opinion expressed by the author. Businesses can use this to gain insights into customers’ views about their products or services.

- Voice Assistants and Chatbots : These computer programs use natural language processing technology to respond to commands by users. They can be used to play music, set reminders, or answer questions about products or services. Chatbots are similar but interact with users through text messages instead of voice commands.

- Email Filtering : This involves sorting emails according to specific criteria, such as sender address or subject line, using natural language processing algorithms. This can help reduce spam emails and make it easier for users to find relevant emails quickly without manually sorting them all individually.

- Language Translation : this application enables computers to automatically translate text from one language into another using algorithms trained on large datasets of translated sentences from different languages. This can help people communicate with each other across languages without having to learn multiple languages firsthand.

Is speech synthesis related to NLP?

There’s nothing like a good old conversation about speech synthesis and NLP. But to answer the burning question, yes, speech synthesis is indeed related to NLP. Speech synthesis, also a subfield of NLP, deals with converting text into spoken language.

Without NLP, speech synthesis would be nothing more than a robot monotone voice reciting words on a page. So, next time you listen to Siri, Alexa, or hear any other virtual assistant speak, you can thank NLP for enabling that human-like tone to be achieved.

Can NLP help create synthetic voices for content creation?

Natural language processing can create synthetic voices for content creation. NLP can generate speech almost indistinguishable from authentic human voices using sophisticated algorithms and models. This technology is becoming increasingly popular, allowing businesses to save time and money instead of hiring voice actors or recording real-life audio.

Furthermore, NLP enables personalized speech customized to the user’s preferences. This can help create a more immersive, personal, and engaging customer experience when interacting with digital content.

How does NLP apply to text-to-speech technology?

The text-to-speech technology utilizes algorithms that process natural language and speech synthesis to automatically convert written text into spoken words without a human intervening. Using NLP technologies and TTS tools together allows people with difficulty reading due to physical disabilities to access written material without having trouble understanding it. In addition, this technology provides easy access to educational materials for people facing financial constraints who need help to purchase books.

NLP techniques help TTS tools understand written words and convert them into natural-sounding speech. With an advanced NLP framework for high-quality TTS synthesis systems, developers can create more realistic synthesized speech. However, two essential components are needed to make this system function properly: a stage for natural language processing and speech synthesis.

Does an AI voice cloner use NLP?

Yes, an AI voice cloner does use NLP. Voice cloning is a technology that uses AI and TTS technology to clone a recorded human voice. It mimics a speaker’s intonation, pronunciation, and other characteristics to create a clone or a virtual copy of the original voice.

To achieve this, the AI voice cloner must first analyze and record the audio input using an NLP algorithm. This allows it to extract information about other vocal characteristics of the speaker. This information is then used to create a virtual clone or a replica of the original voice. By combining AI and NLP, this technology can create realistic synthetic voices that sound just like the natural person.

Voice cloning is another powerful tool for content creators, allowing them to easily create voices for their digital content without hiring voice actors or recording audio.

Can NLP be used to create a deepfake voice?

Yes, NLP can be used to create a deepfake voice. Deepfakes are AI-generated audio clips that mimic the sound of a natural person’s voice. They can generate realistic-sounding audio clips of the target voice that can easily be mistaken for an authentic voice using natural language processing, audio synthesis, and AI algorithms.

An excellent example is the Barrack Obama voice generator, which uses NLP and AI algorithms to generate a voice resembling that of the former US president. People often use cloned voices to have fun, create original content, or play pranks on their loved ones. Specific AI software lets you use the cloned voice as-is or modify it with tone, intonation, and rhythm variations to produce a slightly different custom voice-over.

Although there is no legislation regarding the voice cloning of famous people and other public figures, creators should still be careful and ensure they work with files not protected by copyright.

Pros And Cons Of TTS

Everything technology has pros and cons, and TTS technology is no different. However, there are many advantages of TTS technology, including:

- Its ability to save time by automating tasks that would typically require manual labor.

- Your content can reach visually impaired people.

- Using TTS tools is cost-effective compared to hiring professional voice actors.

- TTS tools are more flexible when creating different types of voices for other purposes.

Most TTS tools have a library of male and female voices or can emulate different accents and languages. However, traditional challenges in TTS include generating natural-sounding voices that accurately reflect the intended meaning of the input text. There are also some issues, such as awkward generations when speaking, which can make conversations seem robotic, and difficulty understanding complex sentences, context, emotions, etc.

There are limitations to NLP systems in TTS tools. Computers can have a hard time understanding the context of natural language data. They may need help interpreting slang words or idioms. Moreover, they might not be able to identify when someone is being sarcastic or ironic.

How to create memorable audio files for your content needs

Text-to-speech technology has come a long way over the years, thanks partly to advances in natural language processing algorithms that allow computers to understand human language inputs better. These advancements bring new opportunities for businesses and consumers who want access to powerful, easy-to-use communication tools. At Typecast, we create and harness the power of NLP and TTS systems to enable our customers to quickly create memorable audio files for various content needs.

Our platform offers a wide range of features allowing you to create engaging audio files from scratch or use existing text. In addition, you can customize how your audio file sounds by selecting different voices, accents, and languages. If you want to make fun and exciting content, you could also use our new Joe Biden voice generator to create audio recordings or clips that sound like the current US president.

Use your own voice with NLP and text-to-speech with Typecast

Text-to-speech technology has come a long way and is now essential to content creation. With the help of natural language processing algorithms, Typecast makes it easy for businesses and individuals to create engaging audio files from scratch or by using existing text.

If you’re not into creating memes or using celebrity impersonations, text-to-speech technology can also create audio files that feature your unique voice. Our text-to-speech system makes creating audio files that sound just like you easy. We use natural language processing and machine and deep learning algorithms to understand your voice and generate audio files that accurately represent it.

Our platform offers various customization options for voiceovers, allowing you to create unique audio files with just the right tone. You can adjust the speed, intonation, pitch, and more to make your audio files sound exactly like you. In addition, with our platform, you can create custom voices and accents to boost your channel’s traffic and stand out from other creators.

Type your script and cast AI voice actors & avatars

The ai generated text-to-speech program with voices so real it's worth trying, related articles.

How AI Can Improve Customer Experience

The Impact of AI Actors on Virtual Storytelling

AI Voice Technology in Mobile Apps for Enhanced User Experience

- We're hiring 🚀

- Press/Media

- Brand resource

- Typecast characters

- Usage policy

- Attribution guidelines

- Talk to sales

- Terms of Use

- Privacy Policy

- Copyright © 2024 Typecast US Inc. All Rights Reserved.

- 400 Concar Dr, San Mateo, CA 94402, USA

Exploring the Benefits of Text to Speech Technology

Imagine a world where words transcend the confines of the written page and come alive, resonating through the air with a captivating cadence. This isn’t an excerpt from a fantastical novel but a glimpse into the transformative power of text to speech (TTS) technology.

TTS is more than a tool that converts written text to spoken words; it’s a gateway to inclusivity, accessibility, and efficiency in communication. It helps break down barriers, ensuring information, stories, and ideas reach everyone, regardless of their abilities or limitations. It eliminates the need for voice actors and cumbersome recording sessions.

From empowering visually impaired individuals to access information online to providing a dynamic solution for a time-strapped professional to create AI voiceovers , text to speech is reshaping how we interact with content.

Table of Contents

Learning and education, efficient content consumption , personal productivity, enhanced language learning , customization and personalization, integration with smart devices, reducing reading fatigue, natural and expressive voices, advanced customization options, multi-language support , here are the top benefits of using text to speech technology: .

By converting written text into spoken words in audio format, text to speech technology accommodates auditory learners, offering an alternative and engaging method for information absorption. Instead of reading through their notebooks, students can listen to their online elearning modules. This helps with knowledge retention and comprehension skills, too.

TTS technology enables educators to produce lessons in multiple languages, allowing students to learn in their native spoken language. This enhances inclusivity, comprehension, and cultural relevance, fostering equitable access to education and accommodating diverse linguistic preferences in learning environments.

By transforming written content into spoken words, text to speech technology enables users to absorb information while commuting, exercising, or engaging in other activities. For instance, a commuter can listen to articles while driving, or a fitness enthusiast absorb research papers during a workout. TTS makes multitasking effortless, maximizing productivity by enabling users to consume information hands-free.

Professionals and students alike can leverage text to speech to enhance productivity. TTS has become a valuable tool for proofreading and editing audio content. The auditory feedback helps identify errors, ensuring a higher quality of written work. The time-saving benefits are also evident, allowing individuals to expedite their writing and editing processes with increased accuracy. Additionally, it allows for easy updates by editing text rather than re-recording voiceovers , saving both time and money, especially regarding large volumes of content.

Text to speech technology plays a pivotal role in honing pronunciation , comprehension, and overall language skills. Learners can listen to native speakers or practice challenging phonetics, enhancing their linguistic proficiency.

TTS becomes a virtual language companion, providing continuous exposure to correct pronunciation and aiding in the mastery of diverse language nuances.

Text to speech doesn’t merely provide a one-size-fits-all experience; it offers a realm of customization and personalization. Users can tailor their text to speech experience by choosing different voices, adjusting speeds, and selecting preferred accents. This customization ensures a more engaging interaction.

The seamless integration of TTS in smart devices marks a significant advancement in voice-guided interactions. From car navigation systems to assistive technology to virtual assistants in smart homes, TTS enhances the user experience by providing information audibly.

This integration adds convenience and contributes to developing more intuitive and accessible voice-enabled smart devices. Consider a smart home where occupants receive weather updates or schedule reminders audibly, enhancing accessibility and convenience.

For those who spend extended periods reading, text becomes a welcome respite from the strain of visual engagement in the learning process. Text to speech tools allow users to listen instead of reading, reducing the likelihood of reading fatigue. TTS offers a welcome alternative for students or professionals who spend hours with text-based materials, reducing strain and improving focus.

Murf: Elevating the Text to Speech Experience to Unprecedented Heights

In the ever-expanding landscape of TTS software, Murf stands as the pinnacle, redefining the user experience with its exceptional features and capabilities. Tailored for efficiency and user satisfaction, Murf takes TTS to unprecedented heights, offering a seamless blend of human voices, innovation, and accessibility. Some of the key features and benefits of using Murf are:

Murf boasts diverse, natural-sounding voices that breathe life into the text. These voices eliminate the robotic monotony often associated with synthesized speech or computer generated voices. The nuanced intonation and rhythm in Murf’s AI voices contribute to better engagement. Users can also select from various voice styles like happy, sad, promo, fury, meditative, inspirational, and more to create high quality audio content across different use cases.

With Murf, users can customize their TTS experience according to personal preferences. They can fine-tune the pitch, reading speed, and pause, ensure accurate pronunciation, and emphasize key points. This level of customization creates a genuinely personalized and enjoyable auditory experience, enhancing engagement and comprehension.

Murf breaks language barriers with its robust support in 20 languages, facilitating seamless communication and content consumption for a broader audience. This feature is especially beneficial for language learners, international professionals, and anyone seeking information in their native language.

Murf emerges as the epitome of excellence in text to speech, offering a harmonious synthesis of natural voices, advanced customization, and broad language support. Elevate your TTS experience with Murf and immerse yourself in the next generation of auditory interaction.

Try Murf for free today and discover the transformative power of cutting-edge TTS technology!

What are the advantages of text to speech systems?

Text to speech systems offer an array of advantages that extend beyond mere convenience. At the forefront is increased accessibility. Other benefits of TTS include productivity, multitasking, language learning, personalization, and more.

How does text to speech benefit students?

Beyond serving as an alternative method for information absorption, TTS aids in crucial tasks such as proofreading and editing. The auditory feedback provided by TTS enhances overall productivity, making it an invaluable tool in educational settings where catering to different learning preferences is critical.

How can text to speech enhance your business?

In business, TTS becomes a catalyst for elevated efficiency. Professionals can multitask effectively, utilizing TTS for proofreading documents, enhancing communication, and contributing to a streamlined workflow. This not only saves time but also improves overall content quality, making TTS an indispensable asset for businesses seeking operational optimization. Companies can use TTS to create ads, product demos, marketing campaigns for social media, explainer videos, and so on to improve productivity and create engaging audio content at scale.

How does text to speech technology specifically benefit individuals with visual impairments?

Specifically designed to empower individuals with visual impairments, TTS technology becomes a beacon of inclusivity. Through converting written content into spoken words, TTS, coupled with screen readers, ensures digital content is accessible to all. This empowerment fosters independence and emphasizes the importance of equal access to information for all.

In what ways can text to speech aid language learners in their studies?

For language learners, TTS is a dynamic companion in their academic journey. Beyond offering auditory exposure to correct pronunciation, TTS enhances comprehension and provides a versatile learning experience. Language learners can actively practice listening skills, refine pronunciation, and deepen their understanding of a new language, promoting a more immersive and compelling learning process.

How can text to speech enhance the reading experience for avid book lovers?

For avid book lovers, text to speech heralds a transformative shift in the reading experience, offering the option to convert books into audiobooks. TTS alleviates reading fatigue and introduces a more immersive method of consuming literature. By enabling individuals to listen instead of reading text files, TTS accommodates various lifestyles and preferences, especially during activities like commuting or exercising, where visual engagement may be limited. This technology serves as the audiophile’s gateway to a more versatile and enjoyable literary journey, providing flexibility and accessibility to indulge in the richness of storytelling in a dynamic and engaging format.

You should also read:

How to create engaging videos using TikTok text to speech

An in-depth guide on how to use Text to Speech on Discord

Medical Text to Speech: Changing Healthcare for the Better

Help | Advanced Search

Electrical Engineering and Systems Science > Audio and Speech Processing

Title: voicecraft: zero-shot speech editing and text-to-speech in the wild.

Abstract: We introduce VoiceCraft, a token infilling neural codec language model, that achieves state-of-the-art performance on both speech editing and zero-shot text-to-speech (TTS) on audiobooks, internet videos, and podcasts. VoiceCraft employs a Transformer decoder architecture and introduces a token rearrangement procedure that combines causal masking and delayed stacking to enable generation within an existing sequence. On speech editing tasks, VoiceCraft produces edited speech that is nearly indistinguishable from unedited recordings in terms of naturalness, as evaluated by humans; for zero-shot TTS, our model outperforms prior SotA models including VALLE and the popular commercial model XTTS-v2. Crucially, the models are evaluated on challenging and realistic datasets, that consist of diverse accents, speaking styles, recording conditions, and background noise and music, and our model performs consistently well compared to other models and real recordings. In particular, for speech editing evaluation, we introduce a high quality, challenging, and realistic dataset named RealEdit. We encourage readers to listen to the demos at this https URL .

Submission history

Access paper:.

- HTML (experimental)

- Other Formats

References & Citations

- Google Scholar

- Semantic Scholar

BibTeX formatted citation

Bibliographic and Citation Tools

Code, data and media associated with this article, recommenders and search tools.

- Institution

AWS Machine Learning Blog

Best practices for building secure applications with amazon transcribe.

Amazon Transcribe is an AWS service that allows customers to convert speech to text in either batch or streaming mode. It uses machine learning–powered automatic speech recognition (ASR), automatic language identification, and post-processing technologies. Amazon Transcribe can be used for transcription of customer care calls, multiparty conference calls, and voicemail messages, as well as subtitle generation for recorded and live videos, to name just a few examples. In this blog post, you will learn how to power your applications with Amazon Transcribe capabilities in a way that meets your security requirements.

Some customers entrust Amazon Transcribe with data that is confidential and proprietary to their business. In other cases, audio content processed by Amazon Transcribe may contain sensitive data that needs to be protected to comply with local laws and regulations. Examples of such information are personally identifiable information (PII), personal health information (PHI), and payment card industry (PCI) data. In the following sections of the blog, we cover different mechanisms Amazon Transcribe has to protect customer data both in transit and at rest. We share the following seven security best practices to build applications with Amazon Transcribe that meet your security and compliance requirements:

- Use data protection with Amazon Transcribe

- Communicate over a private network path

- Redact sensitive data if needed

- Use IAM roles for applications and AWS services that require Amazon Transcribe access

- Use tag-based access control

- Use AWS monitoring tools

- Enable AWS Config

The following best practices are general guidelines and don’t represent a complete security solution. Because these best practices might not be appropriate or sufficient for your environment, use them as helpful considerations rather than prescriptions.