Anonymous and Non-anonymous User Behavior on Social Media: A Case Study of Jodel and Instagram

2018, Journal of Information Science Theory and Practice

Anonymity plays an increasingly important role on social media. This is reflected by more and more applications enabling anonymous interactions. However, do social media users behave differently when they are anonymous? In our research, we investigated social media services meant for solely anonymous use (Jodel) and for widely used non-anonymous sharing of pictures and videos (Instagram). This study examines the impact of anonymity on the behavior of users on Jodel compared to their non-anonymous use of Instagram, as well as the differences between the user types: producer, consumer, and participant. Our approach is based on the uses and gratifications theory (U&G) by E. Katz, specifically on the sought gratifications (motivations) of self-presentation, information, socialization, and entertainment. Since Jodel is mostly used in Germany, we developed an online survey in German. The questions addressed the three different user types and were subdivided according to the four motivatio...

Related Papers

Information, Communication and Society

Atte Oksanen

The dominance of computer-mediated communication in the online relational landscape continues to affect millions of users; yet, few studies have identified and analyzed characteristics shared by those specifically valuing its anonymous aspects for self-expression. This article identifies and investigates key characteristics of such users through an online survey of two samples of Finnish users of social networking sites aged 15–30 years (n = 1013; 544). Various characteristics espoused by those especially valuing anonymity for self-expression online were identified and analyzed in relation to the users in question. Favoring anonymity was positively correlated with both grandiosity, a component of narcissism, and low self-esteem. In addition, users with a stronger anonymity preference tended to be younger and highly trusting, and to have strong ties to online communities while having few offline friends. Findings emphasize the significance of a deeper understanding of how anonymity affects and attracts users seeking its benefits, while also providing new insights into how user characteristics interact depending on motivation.

Anna Alexandra Ndegwa

Christina Frederick

This research presents several aspects of anonymous social media postings using an anonymous social media application (i.e., Yik Yak) that is GPS-linked to college campuses. Anonymous social media have been widely criticized for postings containing threats/harassment, vulgarity and suicidal intentions. However, little research has empirically examined the content of anonymous social media postings and whether they contain a large quantity of negative social content. To better understand this phenomenon, an analysis of the content of anonymous social media posts was conducted in accordance with Deindividuation Theory (Reicher, Spears, & Postmes, 1995). Deindividuation Theory predicts that group behavior is congruent with group norms. Therefore, if a group norm is antisocial in nature, then so too will be group behavior. In other words, individuals relinquish their individual identity to a group identity while they are part of that group. Since the application used in this study is limited to ...

Loyiso C Ngcongo

This research explores the uses and gratifications of social media among the residents of Erand Court in Midrand, Johannesburg, Gauteng. Social media is used among friends and loved ones as another form of communication that provides instantaneous feedback. Social media is sometimes used to strengthen relationships, both personal and professional. As a result, the evolution of social media and its trends have transformed communication tremendously. In the case of the Erand Court complex in Midrand, the main stakeholders of the complex include the residents, property owners, the estate agency, the body corporate and the municipality. The Erand Court body corporate is responsible for keeping these stakeholders informed of its activities and for receiving their feedback on the services they receive. In addition, the residents in particular have family-like living arrangements and vibrant lifestyles that mostly revolve around their circle of friends. Social media is therefore important to them for keeping up to date with their friends and with the lives of their loved ones and colleagues. The Erand Court complex has not yet adopted alternative forms of communication and still relies on traditional forms such as letters to convey key messages. It was therefore important to investigate which social media the residents use and why, so that the body corporate can develop an alternative form of communication that provides instant feedback. To achieve this, the body corporate has to ensure that the communication methods it uses keep the conversation going between the residents and other key stakeholders.
This research study explores the uses and gratifications of social media among the tenants in order to help the body corporate find a better way to communicate with stakeholders, ensuring that messages are delivered on time and that residents with concerns or suggestions about issues in the complex can give feedback instantly. Social media is often taken for granted without recognizing its power to influence opinion and circulate news; hence it is vital for any organization or business to include social media in its communication strategy. After all, communication by its nature has to be a two-way symmetrical activity in which the message sender can get feedback swiftly, and vice versa. Social media helps communication participants achieve this amicably. However, social media users have the responsibility to educate themselves about the platforms they choose to feature on, including their advantages and disadvantages. In most cases social media has become a threat to society, such as by promoting hate speech, violence, and expression. This is a challenge most people face because they choose not to educate themselves, and this study explains how people feel about social media privacy settings.

kandie joseph

Nicole Ellison

Online Journal of Communication and Media Technologies

Neslihan Özmelek Taş

Proceedings of HCI Korea 2013

Tasos Spiliotopoulos

Social networks are commonplace tools, accessed every day by millions of users. However, despite their popularity, we still know relatively little about why they are so appealing and what specifically they are used for, that is, what novel needs they meet. This paper presents two studies that extend work on applying Uses and Gratification theory to answer such questions. The first study explores motivations for using a content community on a social network service: a music video sharing group on Facebook. In a two-stage experiment, 20 users generated words or phrases to describe how they used the group and what they enjoyed about their use. These phrases were coded into 34 questionnaire items that were then completed by 57 new participants. Factor analysis on this data revealed four gratifications: contribution, discovery, social interaction and entertainment. These factors are interpreted and discussed. The second study explores the links between motives for using a social network service and numerical measures of that activity. Specifically, it identified motives for Facebook use and then investigated the extent to which these motives can be predicted through a range of usage and network metrics collected automatically via the Facebook API. Results showed that all three variable types in this expanded U&G frame of analysis (covering social antecedents, usage metrics, and personal network metrics) effectively predicted motives and highlighted interesting behaviours. Together these studies extend the methodological framework of Uses and Gratification theory and show how it can be effectively used to better understand and appreciate the complexity of online social behaviors.

Visual Studies

Elisa Serafinelli

Liberato Camilleri

Sendit, Yolo, NGL: anonymous social apps are taking over once more, but they aren’t without risks

Alexia Maddox, Research Fellow, Blockchain Innovation Hub, RMIT University

Disclosure statement

Alexia Maddox does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

RMIT University provides funding as a strategic partner of The Conversation AU.


Have you ever told a stranger a secret about yourself online? Did you feel a certain kind of freedom doing so, specifically because the context was removed from your everyday life? Personal disclosure and anonymity have long been a potent mix laced through our online interactions.

We’ve recently seen this through the resurgence of anonymous question apps targeting young people, including Sendit and NGL (which stands for “not gonna lie”). The latter has been installed 15 million times globally, according to recent reports.

These apps can be linked to users’ Instagram and Snapchat accounts, allowing them to post questions and receive anonymous answers from followers.

Although they’re trending at the moment, it’s not the first time we’ve seen them. Early examples include ASKfm, launched in 2010, and Spring.me, launched in 2009 (as “Formspring”).

These platforms have a troublesome history. As a sociologist of technology, I’ve studied human-technology encounters in contentious environments. Here’s my take on why anonymous question apps have once again taken the internet by storm, and what their impact might be.

Why are they so popular?

We know teens are drawn to social platforms. These networks connect them with their peers, support their journeys towards forming identity, and provide them space for experimentation, creativity and bonding.

We also know they manage online disclosures of their identity and personal life through a technique sociologists call “audience segregation”, or “code switching”. This means they’re likely to present themselves differently online to their parents than they are to their peers.

Digital cultures have long used online anonymity to separate real-world identities from online personas, both for privacy and in response to online surveillance. And research has shown online anonymity enhances self-disclosure and honesty.

For young people, having online spaces to express themselves away from the adult gaze is important. Anonymous question apps provide this space. They promise to offer the very things young people seek: opportunities for self-expression and authentic encounters.

Risky by design

We now have a generation of kids growing up with the internet. On one hand, young people are hailed as pioneers of the digital age – and on the other, we fear for them as its innocent victims.

A recent TechCrunch article chronicled the rapid uptake of anonymous question apps by young users, and raised concerns about transparency and safety.

NGL exploded in popularity this year, but hasn’t solved the issue of hate speech and bullying. Anonymous chat app Yik Yak was shut down in 2017 after becoming littered with hateful speech – but has since returned.

These apps are designed to hook users in. They leverage certain platform principles to provide a highly engaging experience, such as interactivity and gamification (wherein a form of “play” is introduced into non-gaming platforms).

Also, given their experimental nature, they’re a good example of how social media platforms have historically been developed with a “move fast and break things” attitude. This approach, first articulated by Meta CEO Mark Zuckerberg, has arguably reached its use-by date.

Breaking things in real life is not without consequence. Similarly, breaking away from important safeguards online is not without social consequence. Rapidly developed social apps can have harmful consequences for young people, including cyberbullying, cyber dating abuse, image-based abuse and even online grooming.

In May 2021, Snapchat suspended integrated anonymous messaging apps Yolo and LMK, after being sued by the distraught parents of teens who committed suicide after being bullied through the apps.

Yolo’s developers overestimated the capacity of their automated content moderation to identify harmful messages.

In the wake of these suspensions, Sendit soared through the app store charts as Snapchat users sought a replacement.

Snapchat then banned anonymous messaging from third-party apps in March this year, in a bid to limit bullying and harassment. Yet it appears Sendit can still be linked to Snapchat as a third-party app, so the implementation conditions are variable.

Are kids being manipulated by chatbots?

It also seems these apps may feature automated chatbots parading as anonymous responders to prompt interactions – or at least that’s what staff at TechCrunch found.

Although chatbots can be harmless (or even helpful), problems arise if users can’t tell whether they’re interacting with a bot or a person. At the very least it’s likely the apps are not effectively screening bots out of conversations.

Users can’t do much either. If responses are anonymous (and don’t even have a profile or post history linked to them), there’s no way to know if they’re communicating with a real person or not.

It’s difficult to confirm whether bots are widespread on anonymous question apps, but we’ve seen them cause huge problems on other platforms – opening avenues for deception and exploitation.

For example, in the case of Ashley Madison, a dating and hook-up platform that was hacked in 2015, bots were used to chat with human users to keep them engaged. These bots used fake profiles created by Ashley Madison employees.


What can we do?

Despite all of the above, some research has found many of the risks teens experience online pose only brief negative effects, if any. This suggests we may be overemphasising the risks young people face online.

At the same time, implementing parental controls to mitigate online risk is often in tension with young people’s digital rights.

So the way forward isn’t simple. And just banning anonymous question apps isn’t the solution.

Rather than avoid anonymous online spaces, we’ll need to trudge through them together – all the while demanding as much accountability and transparency from tech companies as we can.

For parents, there are some useful resources on how to help children and teens navigate tricky online environments in a sensible way.



Published: 20 March 2024

Persistent interaction patterns across social media platforms and over time

Michele Avalle, Niccolò Di Marco, Gabriele Etta, Emanuele Sangiorgio, Shayan Alipour, Anita Bonetti, Lorenzo Alvisi, Antonio Scala, Andrea Baronchelli, Matteo Cinelli & Walter Quattrociocchi

Nature (2024)


Growing concern surrounds the impact of social media platforms on public discourse [1–4] and their influence on social dynamics [5–9], especially in the context of toxicity [10–12]. Here, to better understand these phenomena, we use a comparative approach to isolate human behavioural patterns across multiple social media platforms. In particular, we analyse conversations in different online communities, focusing on identifying consistent patterns of toxic content. Drawing from an extensive dataset that spans eight platforms over 34 years, from Usenet to contemporary social media, our findings show consistent conversation patterns and user behaviour, irrespective of the platform, topic or time. Notably, although long conversations consistently exhibit higher toxicity, toxic language does not invariably discourage people from participating in a conversation, and toxicity does not necessarily escalate as discussions evolve. Our analysis suggests that debates and contrasting sentiments among users significantly contribute to more intense and hostile discussions. Moreover, the persistence of these patterns across three decades, despite changes in platforms and societal norms, underscores the pivotal role of human behaviour in shaping online discourse.


The advent and proliferation of social media platforms have not only transformed the landscape of online participation [2] but have also become integral to our daily lives, serving as primary sources for information, entertainment and personal communication [13,14]. Although these platforms offer unprecedented connectivity and information exchange opportunities, they also present challenges by entangling their business models with complex social dynamics, raising substantial concerns about their broader impact on society. Previous research has extensively addressed issues such as polarization, misinformation and antisocial behaviours in online spaces [5,7,12,15–17], revealing the multifaceted nature of social media’s influence on public discourse. However, a considerable challenge in understanding how these platforms might influence inherent human behaviours lies in the general lack of accessible data [18]. Even when researchers obtain data through special agreements with companies like Meta, it may not be enough to clearly distinguish between inherent human behaviours and the effects of the platform’s design [3,4,8,9]. This difficulty arises because the data, deeply embedded in platform interactions, complicate separating intrinsic human behaviour from the influences exerted by the platform’s design and algorithms.

Here we address this challenge by focusing on toxicity, one of the most prominent aspects of concern in online conversations. We use a comparative analysis to uncover consistent patterns across diverse social media platforms and timeframes, aiming to shed light on toxicity dynamics across various digital environments. In particular, our goal is to gain insights into inherently invariant human patterns of online conversations.

The lack of non-verbal cues and physical presence on the web can contribute to increased incivility in online discussions compared with face-to-face interactions [19]. This trend is especially pronounced in online arenas such as newspaper comment sections and political discussions, where exchanges may degenerate into offensive comments or mockery, undermining the potential for productive and democratic debate [20,21]. When exposed to such uncivil language, users are more likely to interpret these messages as hostile, which influences their judgement and leads them to form opinions based on their beliefs rather than on the information presented; this may foster polarized perspectives, especially among groups with differing values [22]. Indeed, there is a natural tendency for online users to seek out and align with information that echoes their pre-existing beliefs, often ignoring contrasting views [6,23]. This behaviour may result in the creation of echo chambers, in which like-minded individuals congregate and mutually reinforce shared narratives [5,24,25]. These echo chambers, along with increased polarization, vary in their prevalence and intensity across different social media platforms [1], suggesting that the design and algorithms of these platforms, intended to maximize user engagement, can substantially shape online social dynamics. This focus on engagement can inadvertently highlight certain behaviours, making it challenging to differentiate between organic user interaction and the influence of the platform’s design. A substantial portion of current research is devoted to examining harmful language on social media and its wider effects, online and offline [10,26]. This examination is crucial, as it reveals how social media may reflect and amplify societal issues, including the deterioration of public discourse.
The growing interest in analysing online toxicity through massive data analysis coincides with advancements in machine learning capable of detecting toxic language [27]. Although numerous studies have focused on online toxicity, most concentrate on specific platforms and topics [28,29]. Broader, multiplatform studies are still limited in scale and reach [12,30]. Research fragmentation complicates understanding whether perceptions about online toxicity are accurate or misconceptions [31]. Key questions include whether online discussions are inherently toxic and how toxic and non-toxic conversations differ. Clarifying these dynamics and how they have evolved over time is crucial for developing effective strategies and policies to mitigate online toxicity.

Our study involves a comparative analysis of online conversations, focusing on three dimensions: time, platform and topic. We examine conversations from eight different platforms, totalling about 500 million comments. For our analysis, we adopt the toxicity definition provided by the Perspective API, a state-of-the-art classifier for the automatic detection of toxic speech. This API considers toxicity as “a rude, disrespectful or unreasonable comment likely to make someone leave a discussion”. We further validate this definition by confirming its consistency with outcomes from other detection tools, ensuring the reliability and comparability of our results. The concept of toxicity in online discourse varies widely in the literature, reflecting its complexity, as seen in various studies [32–34]. The efficacy and constraints of current machine-learning-based automated toxicity detection systems have recently been debated [11,35]. Despite these discussions, automated systems are still the most practical means for large-scale analyses.
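To make the scoring step concrete, here is a rough sketch of how a single comment could be scored with Perspective API. It assumes the public `comments:analyze` REST endpoint and the request/response layout as we understand them from Google's documentation (verify against the current docs before relying on it); the API key is a placeholder, and the request is only built, not sent.

```python
import json
import urllib.request

# Assumed endpoint of Perspective's comments:analyze method; check current docs before use.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a request asking for the TOXICITY attribute of one comment."""
    body = {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }
    return urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def toxicity_score(response: dict) -> float:
    """Extract the summary TOXICITY probability in [0, 1] from a parsed JSON response."""
    return float(response["attributeScores"]["TOXICITY"]["summaryScore"]["value"])


# Sending the request would look roughly like this (requires a real key and network access):
# with urllib.request.urlopen(build_request("some comment", "YOUR_API_KEY")) as resp:
#     score = toxicity_score(json.load(resp))
```

Keeping request construction and response parsing separate makes it easy to cache raw responses and re-threshold them later, which matters when a robustness check uses a different cut-off.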

Here we analyse online conversations, challenging common assumptions about their dynamics. Our findings reveal consistent patterns across various platforms and different times, such as the heavy-tailed nature of engagement dynamics, a decrease in user participation and an increase in toxic speech in lengthier conversations. Our analysis indicates that, although toxicity and user participation in debates are independent variables, the diversity of opinions and sentiments among users may have a substantial role in escalating conversation toxicity.

To obtain a comprehensive picture of online social media conversations, we analysed a dataset of about 500 million comments from Facebook, Gab, Reddit, Telegram, Twitter, Usenet, Voat and YouTube, covering diverse topics and spanning over three decades (a dataset breakdown is shown in Table 1 and Supplementary Table 1 ; for details regarding the data collection, see the ‘Data collection’ section of the Methods ).

Our analysis aims to comprehensively compare the dynamics of diverse social media platforms, accounting for human behaviours and how they have evolved. In particular, we first characterize conversations at a macroscopic level by means of their engagement and participation, and we then analyse the toxicity of conversations both after and during their unfolding. We conclude the paper by examining potential drivers for the emergence of toxic speech.

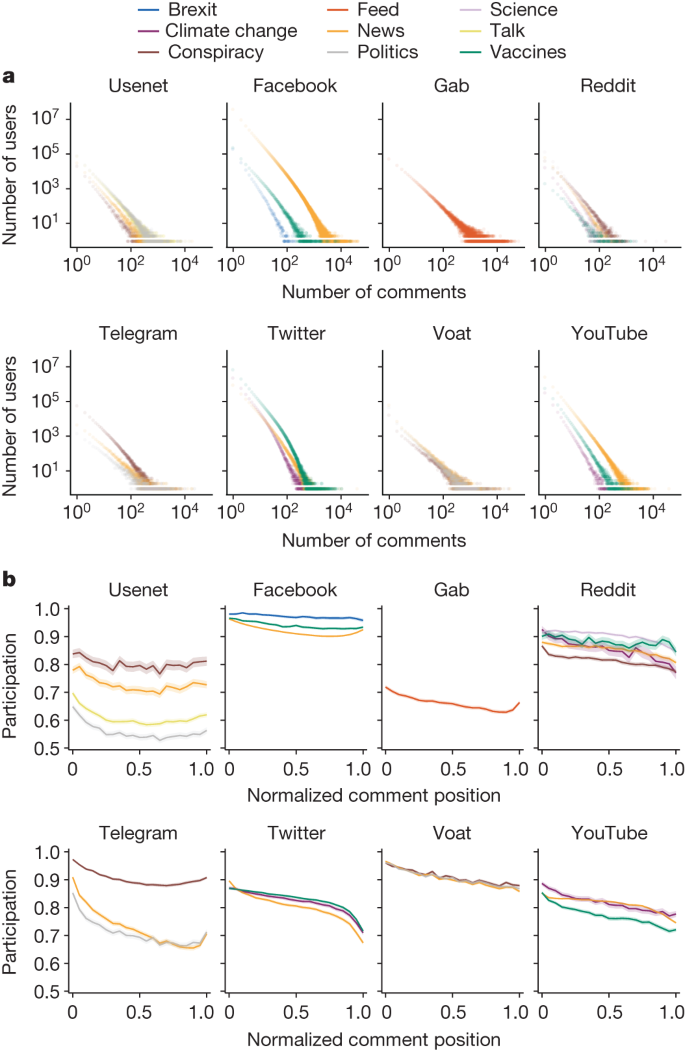

Conversations on different platforms

This section provides an overview of online conversations by considering user activity and thread size metrics. We define a conversation (or a thread) as a sequence of comments that follow chronologically from an initial post. In Fig. 1a and Extended Data Fig. 1, we observe that, across all platforms, both user activity (defined as the number of comments posted by the user) and thread length (defined as the number of comments in a thread) exhibit heavy-tailed distributions. The summary statistics about these distributions are reported in Supplementary Tables 1 and 2.
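Both user activity and thread length are counts per key, so one helper covers both. The sketch below (plain Python; the function names and the toy input are hypothetical, and comments are assumed to be available as a list of author IDs or thread IDs) computes those counts and an empirical complementary CDF, P(X ≥ x), which is a common way to inspect heavy tails on log-log axes.

```python
from collections import Counter


def counts_per_key(keys: list[str]) -> list[int]:
    """Occurrences of each key: pass comment authors to get user activity,
    or the thread ID of every comment to get thread lengths."""
    return list(Counter(keys).values())


def ccdf(values: list[int]) -> list[tuple[int, float]]:
    """Empirical CCDF: for each distinct value x, the fraction of observations >= x."""
    n = len(values)
    counts = Counter(values)
    points = []
    remaining = n  # number of observations with value >= the current x
    for x in sorted(counts):
        points.append((x, remaining / n))
        remaining -= counts[x]
    return points


# Hypothetical toy input: one author ID per comment.
authors = ["a", "a", "a", "b", "c", "c"]
activity = counts_per_key(authors)  # a posted 3, b posted 1, c posted 2
print(ccdf(activity))
```

On real data, a heavy tail shows up as a CCDF that decays slowly (roughly linearly on log-log axes) rather than dropping off sharply.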

a, The distributions of user activity in terms of comments posted for each platform and each topic. b, The mean user participation as conversations evolve. For each dataset, participation is computed for the threads belonging to the size interval [0.7–1] (Supplementary Table 2). Trends are reported with their 95% confidence intervals. The x axis represents the normalized position of comment intervals in the threads.

Consistent with previous studies [36,37], our analysis shows that the macroscopic patterns of online conversations, such as the distribution of users/threads activity and lifetime, are consistent across all datasets and topics (Supplementary Tables 1–4). This observation holds regardless of the specific features of the diverse platforms, such as recommendation algorithms and moderation policies (described in the ‘Content moderation policies’ section of the Methods), as well as other factors, including the user base and the conversation topics. We extend our analysis by examining another aspect of user activity within conversations across all platforms. To do this, we introduce a metric for the participation of users as a thread evolves. In this analysis, threads are filtered to ensure sufficient length, as explained in the ‘Logarithmic binning and conversation size’ section of the Methods.

The participation metric, defined over different conversation intervals (that is, 0–5% of the thread arranged in chronological order, 5–10%, and so on), is the ratio of the number of unique users to the number of comments in the interval. Considering a fixed number of comments c, smaller values of participation indicate that fewer unique users are producing c comments in a segment of the conversation. In turn, a value of participation equal to 1 means that each user is producing one of the c comments, therefore obtaining the maximal homogeneity of user participation. Our findings show that, across all datasets, the participation of users in the evolution of conversations, averaged over almost all considered threads, is decreasing, as indicated by the results of the Mann–Kendall test (a nonparametric test assessing the presence of a monotonic upward or downward tendency) shown in Extended Data Table 1. This indicates that fewer users tend to take part in a conversation as it evolves, but those who do are more active (Fig. 1b). Regarding patterns and values, the trends in user participation for various topics are consistent across each platform. According to the Mann–Kendall test, the only exceptions were Usenet Conspiracy and Talk, for which an ambiguous trend was detected. However, we note that their regression slopes are negative, suggesting a decreasing trend, even if with a weaker effect. Overall, our first set of findings highlights the shared nature of certain online interactions, revealing a decrease in user participation over time but an increase in activity among participants. This insight, consistent across most platforms, underscores the dynamic interplay between conversation length, user engagement and topic-driven participation.
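A minimal sketch of the participation metric and of the Mann–Kendall test, assuming a thread is given as its chronological list of author IDs and split into 20 equal slices (5% each). The Mann–Kendall function uses the textbook normal approximation with a tie correction; the paper does not say which variant or library it used, so treat this as illustrative rather than as the authors' code. All function names are hypothetical.

```python
import math
from collections import Counter


def participation(thread_authors: list[str], n_bins: int = 20) -> list[float]:
    """Unique users / comments in each chronological slice of a thread (20 bins = 5% each)."""
    n = len(thread_authors)
    if n < n_bins:
        raise ValueError("thread too short: every interval needs at least one comment")
    bins: list[list[str]] = [[] for _ in range(n_bins)]
    for i, author in enumerate(thread_authors):
        bins[i * n_bins // n].append(author)  # slice index from the comment's position
    return [len(set(b)) / len(b) for b in bins]


def mann_kendall(series: list[float]) -> tuple[float, float]:
    """Mann-Kendall trend test: returns (Z statistic, two-sided p-value).

    Normal approximation with the standard tie correction; Z < 0 suggests a
    monotonic downward trend, Z > 0 an upward one.
    """
    n = len(series)
    # S counts concordant minus discordant pairs (later value vs earlier value).
    s = sum(
        (series[j] > series[i]) - (series[j] < series[i])
        for i in range(n - 1)
        for j in range(i + 1, n)
    )
    ties = Counter(series).values()
    var_s = (n * (n - 1) * (2 * n + 5) - sum(t * (t - 1) * (2 * t + 5) for t in ties)) / 18
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))  # 2 * (1 - Phi(|Z|))
    return z, p
```

To mirror Fig. 1b, one would compute `participation` for every sufficiently long thread, average the curves position by position, and pass the averaged curve to `mann_kendall`; a negative Z with a small p-value corresponds to the decreasing trend described above.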

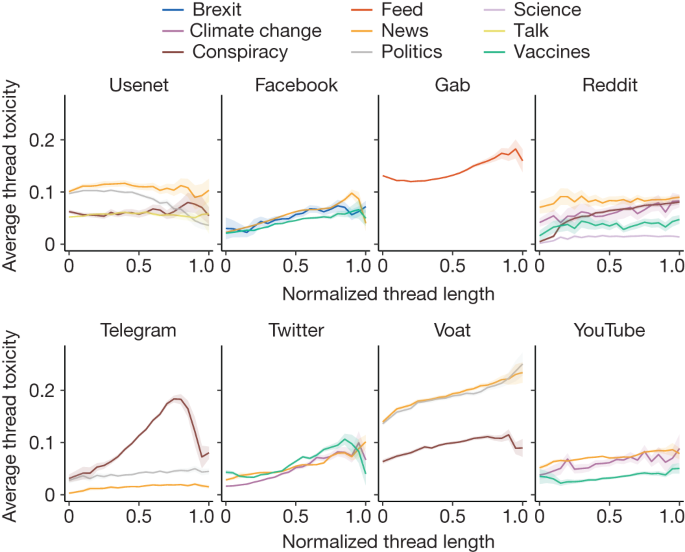

Conversation size and toxicity

To detect the presence of toxic language, we used Google’s Perspective API [34], a state-of-the-art toxicity classifier that has been used extensively in recent literature [29,38]. Perspective API defines a toxic comment as “A rude, disrespectful, or unreasonable comment that is likely to make people leave a discussion”. On the basis of this definition, the classifier assigns a piece of text a toxicity score in the [0,1] range, which can be interpreted as an estimate of the likelihood that a reader would perceive the comment as toxic (https://developers.perspectiveapi.com/s/about-the-api-score). To define an appropriate classification threshold, we draw from the existing literature [39], which uses 0.6 as the threshold for considering a comment toxic. A robustness check of our results using different thresholds and classification tools is reported in the ‘Toxicity detection and validation of employed models’ section of the Methods, together with a discussion of potential shortcomings of automatic classifiers.

To further investigate the interplay between toxicity and conversation features across various platforms, our study first examines the prevalence of toxic speech in each dataset. We then analyse the occurrence of highly toxic users and conversations. Lastly, we investigate how the length of conversations correlates with the probability of encountering toxic comments. First of all, we define the toxicity of a user as the fraction of toxic comments that she/he left. Similarly, the toxicity of a thread is the fraction of toxic comments it contains.

We begin by observing that, although some toxic datasets exist on unmoderated platforms such as Gab, Usenet and Voat, the prevalence of toxic speech is generally low. Indeed, the percentage of toxic comments in each dataset is mostly below 10% (Table 1). Moreover, the complementary cumulative distribution functions illustrated in Extended Data Fig. 2 show that the fraction of extremely toxic users is very low for each dataset (in the range between 10⁻⁴ and 10⁻³), and the majority of active users wrote at least one toxic comment, as reported in Supplementary Table 5. This suggests that the overall volume of toxicity is not a phenomenon limited to the activity of very few users and localized in few conversations. Indeed, the number of users decreases sharply, following an exponential trend, as user toxicity increases. The toxicity of threads follows a similar pattern.

To understand the association between the size and toxicity of a conversation, we start by grouping conversations according to their length to analyse their structural differences [40]. The grouping is implemented by means of logarithmic binning (see the ‘Logarithmic binning and conversation size’ section of the Methods), and the evolution of the average fraction of toxic comments in threads versus the thread size intervals is reported in Fig. 2. Notably, the resulting trends are almost all increasing, showing that, independently of the platform and topic, the longer the conversation, the more toxic it tends to be.

The mean fraction of toxic comments in conversations versus conversation size for each dataset. Trends represent the mean toxicity over each size interval and their 95% confidence interval. Size ranges are normalized to enable visual comparison of the different trends.
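The user and thread toxicity measures defined above reduce to simple counting once each comment has a score. The sketch below is illustrative, not the authors' code. It assumes a hypothetical record layout `(user_id, thread_id, score)` with Perspective API scores already retrieved, and it treats a score of exactly 0.6 as toxic; whether the cited threshold is inclusive is our assumption. The `ccdf` helper computes an empirical complementary CDF of the kind shown in Extended Data Fig. 2.

```python
from collections import defaultdict

TOXICITY_THRESHOLD = 0.6  # threshold from the cited literature; ">=" is an assumption


def toxicity_fractions(comments, threshold=TOXICITY_THRESHOLD):
    """Return per-user and per-thread fractions of toxic comments.

    `comments` is an iterable of (user_id, thread_id, score) tuples, a
    hypothetical layout where `score` is a Perspective API toxicity score.
    """
    user_counts = defaultdict(lambda: [0, 0])    # user -> [toxic, total]
    thread_counts = defaultdict(lambda: [0, 0])  # thread -> [toxic, total]
    for user, thread, score in comments:
        toxic = int(score >= threshold)
        for counts in (user_counts[user], thread_counts[thread]):
            counts[0] += toxic
            counts[1] += 1
    user_tox = {u: t / n for u, (t, n) in user_counts.items()}
    thread_tox = {th: t / n for th, (t, n) in thread_counts.items()}
    return user_tox, thread_tox


def ccdf(values):
    """Empirical complementary CDF: (x, fraction of values >= x) for each distinct x."""
    xs = sorted(values)
    n = len(xs)
    return [(x, (n - i) / n) for i, x in enumerate(xs) if i == 0 or x != xs[i - 1]]
```

For instance, a user with one toxic and one non-toxic comment gets a toxicity of 0.5, and `ccdf` applied to all user toxicities gives the curve whose tail measures how rare extremely toxic users are.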

We assessed the increase in the trends both by performing linear regression and by applying the Mann–Kendall test to ensure the statistical significance of our results (Extended Data Table 2 ). To further validate these outcomes, we shuffled the toxicity labels of comments, finding that trends are almost always non-increasing when data are randomized. Furthermore, the z-scores of the regression slopes indicate that the observed trends deviate from the mean of the distributions resulting from randomizations, being at least 2 s.d. greater in almost all cases. This provides additional evidence of a remarkable difference from randomness. The only decreasing trend is Usenet Politics. Moreover, we verified that our results are not influenced by the specific number of bins: after estimating the same trends again with different intervals, we found that the qualitative nature of the results remains unchanged. These findings are summarized in Extended Data Table 2 . These analyses have been validated on the same data using a different threshold for identifying toxic comments and on a new dataset labelled with three different classifiers, obtaining similar results (Extended Data Fig. 5 , Extended Data Table 5 , Supplementary Fig. 1 and Supplementary Table 8 ). Finally, using a similar approach, we studied the toxicity of conversations versus their lifetime, that is, the time elapsed between the first and last comment. In this case, most trends are flat, and there is no indication that toxicity is generally associated either with the duration of a conversation or with the lifetime of user interactions (Extended Data Fig. 4 ).
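The three checks described above (regression slope, Mann–Kendall test and a z-score against shuffled labels) can be sketched with the standard library alone. This is a sketch under stated assumptions, not the authors' implementation: the Mann–Kendall variance omits the tie correction, each thread is a list of 0/1 toxicity labels with a precomputed size bin, and the number of shuffles (200) and the seed are hypothetical choices.

```python
import math
import random
import statistics


def mann_kendall(values):
    """Mann-Kendall trend test without tie correction; returns (S, z, two-sided p)."""
    n = len(values)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = values[j] - values[i]
            s += (diff > 0) - (diff < 0)  # sign of each later-minus-earlier pair
    var_s = n * (n - 1) * (2 * n + 5) / 18
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)  # continuity correction
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return s, z, p


def ols_slope(xs, ys):
    """Least-squares slope of ys regressed on xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def binned_trend(labels_per_thread, bin_of_thread):
    """Mean fraction of toxic comments over the threads of each size bin."""
    per_bin = {}
    for labels, b in zip(labels_per_thread, bin_of_thread):
        per_bin.setdefault(b, []).append(sum(labels) / len(labels))
    bins = sorted(per_bin)
    return bins, [statistics.fmean(per_bin[b]) for b in bins]


def shuffle_z_score(labels_per_thread, bin_of_thread, n_shuffles=200, seed=0):
    """z-score of the observed trend slope against slopes obtained from shuffled labels."""
    rng = random.Random(seed)
    observed = ols_slope(*binned_trend(labels_per_thread, bin_of_thread))
    flat = [label for labels in labels_per_thread for label in labels]
    sizes = [len(labels) for labels in labels_per_thread]
    null_slopes = []
    for _ in range(n_shuffles):
        rng.shuffle(flat)  # permute labels across comments, keeping thread sizes fixed
        shuffled, start = [], 0
        for size in sizes:
            shuffled.append(flat[start:start + size])
            start += size
        null_slopes.append(ols_slope(*binned_trend(shuffled, bin_of_thread)))
    return (observed - statistics.fmean(null_slopes)) / statistics.stdev(null_slopes)
```

Because shuffling preserves thread sizes, the bin of each thread does not change; only the association between length and toxicity is destroyed, which is what the null distribution is meant to capture.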

Conversation evolution and toxicity

In the previous sections, we analysed the toxicity level of online conversations after their conclusion. We next focus on how toxicity evolves during a conversation and its effect on the dynamics of the discussion. The common beliefs that (1) online interactions inevitably devolve into toxic exchanges over time and (2) once a conversation reaches a certain toxicity threshold, it naturally concludes are not modern notions; they were already prevalent in the early days of the World Wide Web 41 . Assumption 2 aligns with the Perspective API’s definition of toxic language, suggesting that increased toxicity reduces the likelihood of continued participation in a conversation. However, this assumption should be reconsidered, as it is not only the peak levels of toxicity that might influence a conversation but also, for example, a consistent rate of toxic content. To test these common assumptions, we used a method similar to that used for measuring participation: we selected sufficiently long threads, divided each of them into a fixed number of equal intervals, computed the fraction of toxic comments for each of these intervals, averaged it over all threads and plotted the toxicity trend through the unfolding of the conversations. We find that the average toxicity level remains mostly stable throughout, without showing a distinctive increase around the final part of threads (Fig. 3a (bottom) and Extended Data Fig. 3 ). Note that a similar observation was made previously 41 , but referring only to Reddit. Our findings challenge the assumption that toxicity discourages people from participating in a conversation, even though this notion is part of the definition of toxicity used by the detection tool. This can be seen by checking the relationship between toxicity and trends in user participation, a quantity related to the number of users in a discussion at some point. 
The fact that the former typically decreases while the latter remains stable during conversations indicates that toxicity is not associated with participation in conversations (an example is shown in Fig. 3a ; box plots of the slopes of participation and toxicity for the whole dataset are shown in Fig. 3b ). This suggests that, on average, people may leave discussions regardless of the toxicity of the exchanges. To support this hypothesis, we calculated Pearson’s correlation between user participation and toxicity trends for each dataset. As shown in Fig. 3d , the resulting correlation coefficients are very heterogeneous, indicating no consistent pattern across datasets. To further validate this analysis, we tested the differences in the participation of users commenting on either toxic or non-toxic conversations. To split such conversations into two disjoint sets, we first compute the toxicity distribution \(T_i\) of long threads in each dataset \(i\), and we then label a conversation \(j\) in dataset \(i\) as toxic if its toxicity satisfies \(t_{ij} \geq \mu(T_i) + \sigma(T_i)\), where \(\mu(T_i)\) is the mean and \(\sigma(T_i)\) the standard deviation of \(T_i\); all other conversations are considered non-toxic. After splitting the threads, for each dataset, we compute Pearson’s correlation of user participation between the two sets and find strongly positive coefficients in all cases (Fig. 3c,e ). A different analysis, reported in Supplementary Table 8 , confirms this result: no significant difference between slopes in toxic and non-toxic threads can be found. Thus, user behaviour in toxic and non-toxic conversations shows almost identical patterns in terms of participation. This reinforces our finding that toxicity, on average, does not appear to affect the likelihood of people participating in a conversation. These analyses were repeated with a lower toxicity classification threshold (Extended Data Fig. 5 ) and on additional datasets (Supplementary Fig. 2 and Supplementary Table 11 ), finding consistent results.

a , Examples of a typical trend in averaged user participation (top) and toxicity (bottom) versus the normalized position of comment intervals in the threads (Twitter news dataset). b , Box plot distributions of toxicity ( n = 25, minimum = −0.012, maximum = 0.015, lower whisker = −0.012, quartile 1 (Q1) = −0.004, Q2 = 0.002, Q3 = 0.008, upper whisker = 0.015) and participation ( n = 25, minimum = −0.198, maximum = −0.022, lower whisker = −0.198, Q1 = −0.109, Q2 = −0.071, Q3 = −0.049, upper whisker = −0.022) trend slopes for all datasets, as resulting from linear regression. c , An example of user participation in toxic and non-toxic thread sets (Twitter news dataset). d , Pearson’s correlation coefficients between user participation and toxicity trends for each dataset. e , Pearson’s correlation coefficients between user participation in toxic and non-toxic threads for each dataset.
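The toxic/non-toxic split used above (a thread is toxic when \(t_{ij} \geq \mu(T_i) + \sigma(T_i)\)) and the Pearson correlation between two trends can be sketched as follows. This is a minimal sketch, not the authors' code: it assumes thread toxicities are given as a dictionary for the long threads of one dataset, and it uses the population standard deviation, which is our assumption since the text does not say which estimator was used. The participation measure itself is defined elsewhere in the Article and is not reproduced here.

```python
import math
import statistics


def split_toxic_threads(thread_toxicity):
    """Split the long threads of one dataset into toxic and non-toxic sets.

    `thread_toxicity` maps thread id -> fraction of toxic comments (t_ij).
    A thread is toxic when t_ij >= mu(T_i) + sigma(T_i); the population s.d.
    is used here as an assumption.
    """
    values = list(thread_toxicity.values())
    cutoff = statistics.fmean(values) + statistics.pstdev(values)
    toxic = {t for t, v in thread_toxicity.items() if v >= cutoff}
    non_toxic = set(thread_toxicity) - toxic
    return toxic, non_toxic


def pearson(xs, ys):
    """Pearson's correlation coefficient between two equal-length trends."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)
```

In use, one would average the participation trend separately over the toxic and non-toxic sets and pass the two resulting vectors to `pearson`; values close to 1 correspond to the "almost identical patterns" reported in Fig. 3e.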

Controversy and toxicity

In this section, we aim to explore why people participate in toxic online conversations and why longer discussions tend to be more toxic. Several factors could be at play. First, controversial topics might lead to longer, more heated debates with increased toxicity. Second, the endorsement of toxic content by other users may act as an incentive to increase the discussion’s toxicity. Third, engagement peaks, due to factors such as reduced discussion focus or the intervention of trolls, may bring a higher share of toxic exchanges. Pursuing this line of inquiry, we identified proxies to measure the level of controversy in conversations and examined how these relate to toxicity and conversation size. In parallel, we investigated the relationship between toxicity, endorsement and engagement.

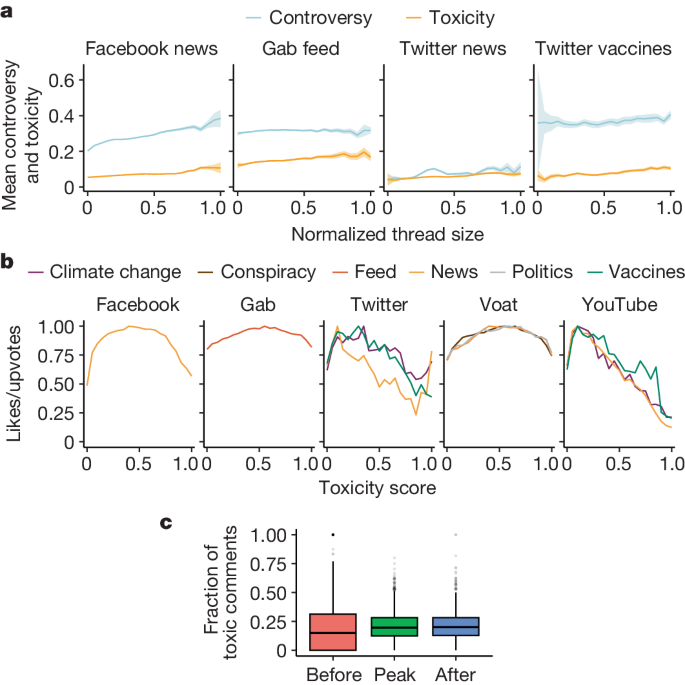

As shown previously 24 , 42 , controversy is likely to emerge when people with opposing views engage in the same debate. Thus, the presence of users with diverse political leanings within a conversation could be a valid proxy for measuring controversy. We operationalize this definition as follows. Exploiting the peculiarities of our data, we can infer the political leaning of a subset of users in the Facebook News, Twitter News, Twitter Vaccines and Gab Feed datasets. This is achieved by examining the endorsement (for example, in the form of likes) expressed towards news outlets whose political inclinations have been independently assessed by news rating agencies (see the ‘Polarization and user leaning attribution’ section of the Methods ). Extended Data Table 3 shows a breakdown of the datasets. As a result, we label users with a leaning score \(l \in [-1, 1]\), with −1 being left leaning and +1 being right leaning. We then select threads with at least ten different labelled users, in which at least 10% of comments (with a minimum of 20) are produced by such users, and assign to each of these comments the leaning score of its author. In this setting, the level of controversy within a conversation is assumed to be captured by the spread of the political leaning of its participants. A natural way to measure such a spread is the s.d. \(\sigma(l)\) of the leaning scores of the labelled comments: the higher \(\sigma(l)\), the greater the level of ideological disagreement and therefore of controversy in a thread. We analysed the relationship between controversy and toxicity in online conversations of different sizes. Figure 4a shows that controversy increases with the size of conversations in all datasets, and its trends are positively correlated with the corresponding trends in toxicity (Extended Data Table 3 ). This supports our hypothesis that controversy and toxicity are closely related in online discussions.

a , The mean controversy (\(\sigma(l)\)) and mean toxicity versus thread size (log-binned and normalized) for the Facebook news, Twitter news, Twitter vaccines and Gab feed datasets. Here toxicity is calculated in the same conversations in which controversy could be computed (Extended Data Table 3 ); the corresponding Pearson’s, Spearman’s and Kendall’s correlation coefficients are also provided in Extended Data Table 3 . Trends are reported with their 95% confidence interval. b , Likes/upvotes versus toxicity (linearly binned). c , An example (Voat politics dataset) of the distributions of the frequency of toxic comments in threads before ( n = 2,201, minimum = 0, maximum = 1, lower whisker = 0, Q1 = 0, Q2 = 0.15, Q3 = 0.313, upper whisker = 0.769), at the peak ( n = 2,798, minimum = 0, maximum = 0.8, lower whisker = 0, Q1 = 0.125, Q2 = 0.196, Q3 = 0.282, upper whisker = 0.513) and after the peak ( n = 2,791, minimum = 0, maximum = 1, lower whisker = 0, Q1 = 0.129, Q2 = 0.200, Q3 = 0.282, upper whisker = 0.500) of activity, as detected by Kleinberg’s burst detection algorithm.
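The thread-selection rule and the controversy measure \(\sigma(l)\) described above can be sketched as a single function. This is a sketch under assumptions: the `(user_id, leaning)` layout is hypothetical, unlabelled users carry `None`, and we use the population standard deviation because the text does not specify the estimator.

```python
import statistics


def thread_controversy(comments, min_users=10, min_fraction=0.10, min_comments=20):
    """Controversy sigma(l) of one thread, or None if the thread does not qualify.

    `comments` is a list of (user_id, leaning) pairs in a hypothetical layout,
    with leaning in [-1, 1] for labelled users and None otherwise.
    """
    labelled = [(user, leaning) for user, leaning in comments if leaning is not None]
    if len({user for user, _ in labelled}) < min_users:
        return None  # fewer than ten distinct labelled users
    if len(labelled) < max(min_comments, min_fraction * len(comments)):
        return None  # labelled users wrote fewer than 10% of comments (or fewer than 20)
    # every comment carries its author's leaning; sigma(l) is their spread
    return statistics.pstdev([leaning for _, leaning in labelled])
```

A thread split evenly between users at −1 and +1 reaches the maximum spread of 1, whereas a thread whose labelled users all share the same leaning scores 0.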

As a complementary analysis, we draw on previous results 43 . In that study, using a definition of controversy operationally different from but conceptually related to ours, a link was found between a greater degree of controversy of a discussion topic and a wider distribution of sentiment scores attributed to the set of its posts and comments. We quantified the sentiment of comments using a pretrained BERT model available from Hugging Face 44 , also used in previous studies 45 . The model predicts the sentiment of a sentence through a scoring system ranging from 1 (negative) to 5 (positive). We define the sentiment attributed to a comment \(c\) as the weighted mean \(s(c)=\sum_{i=1}^{5} x_i p_i\), where \(x_i \in \{1, \dots, 5\}\) is an output score of the model and \(p_i\) is the probability associated with that score. Moreover, we normalize the sentiment score \(s\) for each dataset between 0 and 1. We observe that the trends of the mean s.d. of sentiment in conversations, \(\bar{\sigma}(s)\), and of toxicity are positively correlated on moderated platforms such as Facebook and Twitter but negatively correlated on Gab (Extended Data Table 3 ). The positive correlation observed on Facebook and Twitter indicates that greater discrepancies in the sentiment of conversations can, in general, be linked to toxic conversations and vice versa. Instead, on unregulated platforms such as Gab, highly conflicting sentiments seem more likely to emerge in less toxic conversations.
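The weighted-mean sentiment \(s(c)\) and the per-conversation spread \(\bar{\sigma}(s)\) can be computed as sketched below. The classifier is not reproduced: the sketch assumes its five class probabilities (for scores 1 to 5) are already available for each comment. The per-dataset normalization to [0, 1] is implemented as min–max scaling, which is our assumption; rescaling the fixed range [1, 5] via \((s-1)/4\) would be an equally plausible reading.

```python
import statistics


def expected_sentiment(probs):
    """s(c) = sum_i x_i p_i, with x_i = 1..5 and p_i the class probabilities."""
    if len(probs) != 5:
        raise ValueError("expected probabilities for the five scores 1..5")
    return sum(score * p for score, p in zip(range(1, 6), probs))


def minmax_normalize(values):
    """Rescale one dataset's sentiment scores to [0, 1] (min-max is an assumption)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def mean_sentiment_spread(threads):
    """Mean over threads of the s.d. of normalized sentiment, i.e. sigma-bar(s)."""
    return statistics.fmean([statistics.pstdev(t) for t in threads if len(t) > 1])
```

Computing `mean_sentiment_spread` separately for the threads of each size bin yields the trend that is then correlated with the toxicity trend.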

As anticipated, another factor that may be associated with the emergence of toxic comments is the endorsement they receive. Indeed, such positive reactions may motivate users to post even more comments of the same kind. Using the mean number of likes/upvotes as a proxy for endorsement, we find indications that this may not be the case. Figure 4b shows that the trend in likes/upvotes versus comment toxicity never increases past the toxicity threshold (0.6).
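The likes/upvotes-versus-toxicity curve of Fig. 4b rests on linear binning of the toxicity score. A minimal sketch follows; the number of bins (10) is a hypothetical choice, since the text does not state it.

```python
def mean_likes_by_toxicity(scores, likes, n_bins=10):
    """Average likes/upvotes per linear toxicity bin over [0, 1].

    Returns (left bin edge, mean likes) for each non-empty bin; the number of
    bins is a hypothetical choice.
    """
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for score, n_likes in zip(scores, likes):
        b = min(int(score * n_bins), n_bins - 1)  # a score of 1.0 goes into the last bin
        sums[b] += n_likes
        counts[b] += 1
    return [(b / n_bins, sums[b] / counts[b]) for b in range(n_bins) if counts[b]]
```

The claim in the text concerns the bins whose left edge is at or above 0.6: if mean likes do not rise across those bins, endorsement does not appear to reward more toxic comments.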

Finally, to complement our analysis, we inspect the relationship between toxicity and user engagement within conversations, measured as the intensity of the number of comments over time. To do so, we used a method for burst detection 46 that, after reconstructing the density profile of a temporal stream of elements, separates the stream into different levels of intensity and assigns each element to the level to which it belongs (see the ‘Burst analysis’ section of the Methods ). We computed the fraction of toxic comments at the highest intensity level of each conversation and for the levels right before and after it. Comparing the distributions of the fraction of toxic comments for the three intervals, we find that these distributions are statistically different in almost all cases (Fig. 4c and Extended Data Table 4 ). In all datasets but one, distributions are consistently shifted towards higher toxicity at the peak of engagement compared with the previous phase. Likewise, in most cases, the peak shows higher toxicity even when compared with the following phase, which in turn is mostly more toxic than the phase before the peak. These results suggest that toxicity is likely to increase together with user engagement.
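The burst detection method cited here is Kleinberg’s algorithm (named in the Fig. 4 caption). The sketch below implements its standard k-state formulation (exponential inter-arrival gaps, rate ratio `s`, cost `gamma * ln n` per upward level change, Viterbi decoding) and then extracts the toxic fractions before, at and after the peak. Several choices are our assumptions rather than the authors’ settings: `s = 2`, `gamma = 1`, zero gaps replaced by half the smallest positive gap, each comment inheriting the level of the gap preceding it, and “before”/“after” taken as the runs adjacent to the first run at the highest level. The statistical comparison of Extended Data Table 4 is not reproduced.

```python
import math


def kleinberg_levels(timestamps, s=2.0, gamma=1.0):
    """Kleinberg's k-state burst detection; returns one level per inter-arrival gap."""
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    n = len(gaps)
    positive = [g for g in gaps if g > 0]
    if not positive:
        return [0] * n
    eps = min(positive) / 2
    gaps = [g if g > 0 else eps for g in gaps]  # assumption for simultaneous comments
    total = sum(gaps)
    k = max(1, math.ceil(1 + math.log(total / min(gaps), s)))
    rates = [n / total * s ** i for i in range(k)]  # state i fires at rate alpha_0 * s**i
    up_cost = gamma * math.log(n)  # cost of moving up one level; moving down is free

    def emit(i, x):  # negative log-likelihood of gap x under an exponential with rates[i]
        return rates[i] * x - math.log(rates[i])

    cost = [emit(j, gaps[0]) + j * up_cost for j in range(k)]  # start in state 0
    back = []
    for x in gaps[1:]:
        new_cost, ptr = [], []
        for j in range(k):
            candidates = [cost[i] + max(j - i, 0) * up_cost for i in range(k)]
            best = min(range(k), key=candidates.__getitem__)
            new_cost.append(candidates[best] + emit(j, x))
            ptr.append(best)
        cost = new_cost
        back.append(ptr)
    state = min(range(k), key=cost.__getitem__)
    levels = [state]
    for ptr in reversed(back):  # backtrack the optimal state sequence
        state = ptr[state]
        levels.append(state)
    return levels[::-1]


def peak_toxicity(timestamps, toxic_flags):
    """Toxic fractions (before, at, after) the first run of comments at the top level.

    Inputs are chronologically ordered; comment i >= 1 takes the level of the gap
    preceding it and comment 0 copies comment 1 (a hypothetical convention).
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two comments")
    gap_levels = kleinberg_levels(timestamps)
    levels = gap_levels[:1] + gap_levels
    runs, start = [], 0  # contiguous runs as (level, start, end)
    for i in range(1, len(levels) + 1):
        if i == len(levels) or levels[i] != levels[start]:
            runs.append((levels[start], start, i))
            start = i
    top = max(level for level, _, _ in runs)
    p = next(idx for idx, run in enumerate(runs) if run[0] == top)

    def fraction(run):
        _, a, b = run
        return sum(toxic_flags[a:b]) / (b - a)

    before = fraction(runs[p - 1]) if p > 0 else None
    after = fraction(runs[p + 1]) if p + 1 < len(runs) else None
    return before, fraction(runs[p]), after
```

For a thread with sparse comments, a dense flurry and then sparse comments again, the flurry is assigned the top level, and the three returned fractions correspond to the before/peak/after distributions shown in Fig. 4c.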

Here we examine one of the most prominent and persistent characteristics of online discussions: toxic behaviour, defined here as rude, disrespectful or unreasonable conduct. Our analysis suggests that toxicity is neither a deterrent to user involvement nor an engagement amplifier; rather, it tends to emerge when exchanges become more frequent and may be a product of opinion polarization. Our findings suggest that the polarization of user opinions, intended as the degree of opposed partisanship of users in a conversation, may have a more crucial role than toxicity in shaping the evolution of online discussions. Thus, monitoring polarization could inform early interventions in online discussions. However, it is important to acknowledge that the dynamics at play in shaping online discourse are probably multifaceted and require a nuanced approach for effective moderation. Other factors may influence toxicity and engagement, such as the specific subject of the conversation, the presence of influential users or ‘trolls’, the time and day of posting, as well as cultural or demographic aspects, such as users’ average age or geographical location. Furthermore, even though extremely toxic users are rare (Extended Data Fig. 2 ), the relationship between participation and toxicity of a discussion may in principle also be affected by small groups of highly toxic and engaged users driving the conversation dynamics. Although the analysis of such subtler aspects is beyond the scope of this Article, they are certainly worth investigating in future research.

When people encounter views that contradict their own, they may react with hostility and contempt, consistent with previous research 47 . This, in turn, may create a cycle of negative emotions and behaviours that fuels toxicity. We also show that some features of online conversations have remained consistent over the past three decades despite the evolution of platforms and social norms.

Our study has some limitations that we acknowledge and discuss. First, we use political leaning as a proxy for general leaning, which may capture only some of the nuances of online opinions. However, political leaning represents a broad spectrum of opinions across different topics, and it correlates well with other dimensions of leaning, such as news preferences, vaccine attitudes and stance on climate change 48 , 49 . We could not assign a political leaning to users on all platforms, so the analysis of controversy is limited to a subset of them. Still, the platforms considered (Facebook, Gab and Twitter) represent different populations and moderation policies, and the combined data account for nearly 90% of the content in our entire dataset. Our analysis approach is based on breadth and heterogeneity. As such, it may raise concerns about potential reductionism due to the comparison of different datasets from different sources and time periods. We acknowledge that each discussion thread, platform and context has unique characteristics and complexities that might be diminished when homogenizing data. However, our aim is not to capture the full depth of every discussion but to identify and highlight general patterns and trends in online toxicity across platforms and time. The quantitative approach used in our study is similar to that of numerous other studies 15 and enables us to uncover overarching principles and patterns that may otherwise remain hidden. Of course, it is not possible to account for the behaviours of passive users. This entails, for example, that even if toxicity does not seem to make people leave conversations, it could still be a factor that discourages them from joining. Our study leverages an extensive dataset to examine the intricate relationship between persistent online human behaviours and the characteristics of different social media platforms. 
Our findings challenge the prevailing assumption by demonstrating that toxic content, as traditionally defined, does not necessarily reduce user engagement, thereby questioning the assumed direct correlation between toxic content and negative discourse dynamics. This highlights the necessity for a detailed examination of the effect of toxic interactions on user behaviour and the quality of discussions across various platforms. Our results, showing user resilience to toxic content, indicate the potential for creating advanced, context-aware moderation tools that can accurately navigate the complex influence of antagonistic interactions on community engagement and discussion quality. Moreover, our study sets the stage for further exploration into the complexities of toxicity and its effect on engagement within online communities. Advancing our grasp of online discourse necessitates refining content moderation techniques grounded in a thorough understanding of human behaviour. Thus, our research adds to the dialogue on creating more constructive online spaces, promoting moderation approaches that are effective yet nuanced, facilitating engaging exchanges and reducing the tangible negative effects of toxic behaviour.

Through the extensive dataset presented here, critical aspects of the online platform ecosystem and fundamental dynamics of user interactions can be explored. Moreover, our results indicate that a comparative approach such as the one followed here can prove invaluable in discerning human behaviour from platform-specific features. This may be used to investigate further sensitive issues, such as the formation of polarization and misinformation. The resulting outcomes have multiple potential impacts. Our findings reveal consistent toxicity patterns across platforms, topics and time, suggesting that future research in this field should prioritize the concept of invariance. Recognizing that toxic behaviour is a widespread phenomenon that is not limited by platform-specific features underscores the need for a broader, unified approach to understanding online discourse. Furthermore, the participation of users in toxic conversations suggests that simply removing toxic comments may not be sufficient to prevent user exposure to such phenomena. This indicates a need for more sophisticated moderation techniques to manage conversation dynamics, including early interventions in discussions that show signs of becoming toxic. In addition, our findings support the idea that examining content pieces in connection with others could enhance the effectiveness of automatic toxicity detection models. The observed homogeneity suggests that models trained using data from one platform may also be applicable to other platforms. Future research could further explore the role of controversy and its interaction with other elements contributing to toxicity. Moreover, comparing platforms could enhance our understanding of invariant human factors related to polarization, disinformation and content consumption. 
Such studies would be instrumental in capturing the drivers of the effect of social media platforms on human behaviour, offering valuable insights into the underlying dynamics of online interactions.

Data collection

In our study, data collection from the various social media platforms was designed to encompass a range of topics, ensuring maximal heterogeneity in the discussion themes. For each platform, where feasible, we focused on gathering posts related to diverse areas such as politics, news, environment and vaccinations. This approach aims to capture a broad spectrum of discourse, providing a comprehensive view of conversation dynamics across different content categories.

For Facebook, we used datasets from previous studies that covered discussions about vaccines 50 , news 51 and Brexit 52 . For the vaccines topic, the resulting dataset contains around 2 million comments retrieved from public groups and pages in a period ranging from 2 January 2010 to 17 July 2017. For the news topic, we selected a list of pages from the Europe Media Monitor that reported the news in English. The obtained dataset contains around 362 million comments between 9 September 2009 and 18 August 2016. Furthermore, we collected a total of about 4.5 billion likes that users put on posts and comments concerning these pages. Finally, for the Brexit topic, the dataset contains around 460,000 comments from 31 December 2015 to 29 July 2016.

For Gab, we collected data from the Pushshift.io archive ( https://files.pushshift.io/gab/ ) concerning discussions taking place from 10 August 2016, when the platform was launched, to 29 October 2018, when Gab went temporarily offline after the Pittsburgh shooting 53 . In total, we collected around 14 million comments.

Reddit data were collected from the Pushshift.io archive ( https://pushshift.io/ ) for the period from 1 January 2018 to 31 December 2022. For each topic, whenever possible, we manually identified and selected the subreddits that best represented it. In this way, we obtained about 800,000 comments from the r/conspiracy subreddit for the conspiracy topic. For the vaccines topic, we collected about 70,000 comments from the r/VaccineDebate subreddit, focusing on the COVID-19 vaccine debate. For the news topic, we collected around 400,000 comments from the r/News subreddit. For the climate change topic, we collected about 70,000 comments from the r/environment subreddit. Finally, for the science topic, we collected around 550,000 comments from the r/science subreddit.

For Telegram, we created a list of 14 channels, associating each with one of the topics considered in the study. For each channel, we manually collected messages and their related comments. From the four channels associated with the news topic (news notiziae, news ultimora, news edizionestraordinaria, news covidultimora), we obtained around 724,000 comments from posts between 9 April 2018 and 20 December 2022. For the politics topic, the two corresponding channels (politics besttimeline, politics polmemes) produced a total of around 490,000 comments between 4 August 2017 and 19 December 2022. Finally, the eight channels assigned to the conspiracy topic (conspiracy bennyjhonson, conspiracy tommyrobinsonnews, conspiracy britainsfirst, conspiracy loomeredofficial, conspiracy thetrumpistgroup, conspiracy trumpjr, conspiracy pauljwatson, conspiracy iononmivaccino) produced a total of about 1.4 million comments between 30 August 2019 and 20 December 2022.

For Twitter, we used datasets from previous studies that include discussions about vaccines 54 , climate change 49 and news 55 . For the vaccines topic, we collected around 50 million comments from 23 January 2010 to 25 January 2023. For the news topic, we extended the dataset used previously 55 by collecting all threads composed of fewer than 20 comments, obtaining a total of about 9.5 million comments for a period ranging from 1 January 2020 to 29 November 2022. Finally, for the climate change topic, we collected around 9.7 million comments between 1 January 2020 and 10 January 2023.

We collected data for the Usenet discussion system by querying the Usenet Archive ( https://archive.org/details/usenet?tab=about ). We selected topics considered likely to contain a large, broad and heterogeneous set of discussions involving active and populated newsgroups, which led us to choose conspiracy, politics, news and talk as topic candidates for our analysis. For the conspiracy topic, we collected around 280,000 comments between 1 September 1994 and 30 December 2005 from the alt.conspiracy newsgroup. For the politics topic, we collected around 2.6 million comments between 29 June 1992 and 31 December 2005 from the alt.politics newsgroup. For the news topic, we collected about 620,000 comments between 5 December 1992 and 31 December 2005 from the alt.news newsgroup. Finally, for the talk topic, we collected all of the conversations from the newsgroup of the same name over a period ranging from 13 February 1989 to 31 December 2005, for around 2.1 million items.

For Voat, we used a dataset presented previously 56 that covers the entire lifetime of the platform, from 9 January 2018 to 25 December 2020, including a total of around 16.2 million posts and comments shared by around 113,000 users in about 7,100 subverses (the equivalent of a subreddit on Voat). As for the previous platforms, we associated each topic with a specific subverse. For the conspiracy topic, we collected about 1 million comments from the greatawakening subverse between 9 January 2018 and 25 December 2020. For the politics topic, we collected around 1 million comments from the politics subverse between 16 June 2014 and 25 December 2020. Finally, for the news topic, we collected about 1.4 million comments from the news subverse between 21 November 2013 and 25 December 2020.

For YouTube, we used a dataset proposed in previous studies that collected conversations about climate change 49 , which we extended, consistently with the other platforms, with conversations about vaccines and news. Data were collected using the YouTube Data API ( https://developers.google.com/youtube/v3 ). For the climate change topic, we collected around 840,000 comments between 16 March 2014 and 28 February 2022. For the vaccines topic, we collected conversations between 31 January 2020 and 24 October 2021 containing keywords about COVID-19 vaccines, namely Sinopharm, CanSino, Janssen, Johnson&Johnson, Novavax, CureVac, Pfizer, BioNTech, AstraZeneca and Moderna, gathering a total of around 2.6 million comments on videos. Finally, for the news topic, we collected about 20 million comments between 13 February 2006 and 8 February 2022 from the videos of a list of news outlets limited to the UK and provided by Newsguard (see the ‘Polarization and user leaning attribution’ section).

Content moderation policies

Content moderation policies are guidelines that online platforms use to monitor the content that users post on their sites. Platforms have different goals and audiences, and their moderation policies may vary greatly, with some placing more emphasis on free expression and others prioritizing safety and community guidelines.

Facebook and YouTube have strict moderation policies prohibiting hate speech, violence and harassment 57 . To address harmful content, Facebook follows a ‘remove, reduce, inform’ strategy and uses a combination of human reviewers and artificial intelligence to enforce its policies 58 . YouTube has a similar set of community guidelines on hate speech, covering a wide range of behaviours such as vulgar language 59 and harassment 60 , and, in general, does not allow hate speech and violence against individuals or groups based on various attributes 61 . To ensure that these guidelines are respected, the platform uses a mix of artificial intelligence algorithms and human reviewers 62 .

Twitter also has a comprehensive content moderation policy and specific rules against hateful conduct 63 , 64 . It uses automation 65 and human review in the moderation process 66 . As of the date of submission, Twitter’s content policies had remained unchanged since Elon Musk’s takeover, except that it ceased enforcing its COVID-19 misleading information policy on 23 November 2022. Its policy enforcement has faced criticism for inconsistency 67 .

Reddit falls somewhere in between in terms of moderation strictness. Reddit’s content policy has eight rules, including prohibiting violence, harassment and promoting hate based on identity or vulnerability 68 , 69 . Reddit relies heavily on user reports and volunteer moderators; thus, it could be considered more lenient than Facebook, YouTube and Twitter in enforcing rules. In October 2022, Reddit announced that it intended to update its enforcement practices to apply automation in content moderation 70 .

By contrast, Telegram, Gab and Voat take a more hands-off approach with fewer restrictions on content. Telegram’s guidelines are ambiguous, as they rely on broad or subjective terms that can lead to different interpretations 71 . Although Telegram mentions that it may use automated algorithms to analyse messages, it relies mainly on users to report a range of content, such as violence, child abuse, spam, illegal drugs, personal details and pornography 72 . According to Telegram’s privacy policy, reported content may be checked by moderators and, if it is confirmed to violate their terms, temporary or permanent restrictions may be imposed on the account 73 . Gab’s Terms of Service allow all speech protected under the First Amendment to the US Constitution, and unlawful content is removed. Gab states that it does not review material before it is posted on its website and cannot guarantee prompt removal of illegal content after it has been posted 74 . Voat was once known as a ‘free-speech’ alternative to Reddit and allowed content even if it could be considered offensive or controversial 56 .

Usenet is a decentralized online discussion system created in 1979. Owing to its decentralized nature, Usenet has been difficult to moderate effectively, and it has a reputation for being a place where controversial and even illegal content can be posted without consequence. Each individual group on Usenet can have its own moderators, who are responsible for monitoring and enforcing their group’s rules, and there is no single set of rules that applies to the entire platform 75 .

Logarithmic binning and conversation size

Owing to the heavy-tailed distributions of conversation length (Extended Data Fig. 1), we used logarithmic binning to plot the figures and perform the analyses. Each thread of each dataset is thus assigned, according to its length, to 1 of 21 bins. To ensure a minimal number of points in each bin, we iteratively change the left bound of the last bin so that it contains at least N = 50 elements (we set N = 100 for Facebook News, owing to its larger size). Specifically, considering threads ordered by increasing length, the size of the largest thread is changed to that of the second largest one, and the binning is recalculated accordingly until the last bin contains at least N points.
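The iterative adjustment of the last bin can be sketched in Python as follows. This is a minimal illustration, not the authors’ code: it assumes thread lengths of at least 1, edges equally spaced in log10 between the shortest thread and the current upper bound, and half-open bins with a closed last bin; the function names are hypothetical. Threads longer than the current upper bound are clipped to it, mirroring the replacement of the largest size by the second largest one.

```python
import math


def log_bin_edges(lo, hi, n_bins=21):
    """n_bins + 1 edges equally spaced in log10 between lo and hi (lo >= 1)."""
    a, b = math.log10(lo), math.log10(hi)
    step = (b - a) / n_bins
    return [10 ** (a + i * step) for i in range(n_bins + 1)]


def bin_index(x, edges):
    """Index of the half-open bin [e_i, e_{i+1}) holding x; the last bin is closed."""
    for i in range(len(edges) - 2):
        if x < edges[i + 1]:
            return i
    return len(edges) - 2


def log_binning(lengths, n_bins=21, min_last=50):
    """Assign each thread length to a log bin, lowering the upper bound
    (largest size -> next largest size) until the last bin holds at least
    min_last threads. Returns (edges, bin index per input length)."""
    desc = sorted(lengths, reverse=True)
    k = 0  # desc[k] is the current upper bound
    while True:
        upper = desc[k]
        edges = log_bin_edges(desc[-1], upper, n_bins)
        # Threads longer than the bound are clipped to it, so they fall in the last bin.
        bins = [bin_index(min(x, upper), edges) for x in lengths]
        if bins.count(n_bins - 1) >= min_last or k == len(desc) - 1:
            return edges, bins
        k += 1
```

For Facebook News, the text uses N = 100, which corresponds to `min_last=100` here. Recomputing all edges at every step keeps the sketch close to the description; a faster version could locate the stopping bound directly in the sorted lengths.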

For visualization purposes, we normalize the outcome of the logarithmic binning by mapping the 21 discrete bins onto x-axis coordinates so that they correspond to {0, 0.05, 0.1, ..., 0.95, 1}.

For part of the analysis, we select conversations belonging to the [0.7, 1] interval of the normalized logarithmic binning of thread length. This interval ensures that the conversations are sufficiently long while still retaining a substantial number of threads. Participation and toxicity trends are then obtained by applying a linear binning of 21 elements to the chronologically ordered sequence of comments of each selected thread. A breakdown of the resulting datasets is provided in Supplementary Table 2.
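The selection step and the per-thread linear binning can be sketched in Python. The sketch assumes each comment is a `(user_id, is_toxic)` pair in chronological order and that selected threads have at least 21 comments (shorter ones would leave empty segments, returned as `None`). Measuring participation as distinct users divided by comments within a segment is an assumption made for illustration, since this section does not define it; the function names are hypothetical.

```python
def normalized_position(bin_idx, n_bins=21):
    """Map a log-bin index 0..n_bins-1 onto {0, 0.05, ..., 1} (for n_bins = 21)."""
    return bin_idx / (n_bins - 1)


def is_long(bin_idx, n_bins=21, lo=0.7):
    """True if the thread's length bin falls in the [lo, 1] normalized interval."""
    return normalized_position(bin_idx, n_bins) >= lo


def linear_segments(comments, n_segments=21):
    """Split a chronologically ordered list into n_segments contiguous chunks
    of (almost) equal size, by position in the thread."""
    n = len(comments)
    segments = [[] for _ in range(n_segments)]
    for pos, comment in enumerate(comments):
        segments[pos * n_segments // n].append(comment)
    return segments


def segment_trends(comments, n_segments=21):
    """(participation, toxic fraction) for each segment of one thread.

    ASSUMED participation measure: distinct users / comments in the segment."""
    trends = []
    for seg in linear_segments(comments, n_segments):
        if not seg:
            trends.append((None, None))
            continue
        users = {user for user, _ in seg}
        participation = len(users) / len(seg)
        toxicity = sum(toxic for _, toxic in seg) / len(seg)
        trends.append((participation, toxicity))
    return trends
```

Averaging the per-segment values over all selected threads of a dataset then yields one participation curve and one toxicity curve with 21 points each.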

Finally, to assess the equality of the growth rates of participation values in toxic and non-toxic threads (see the ‘Conversation evolution and toxicity’ section), we implemented the following linear regression model:

where the term β2 accounts for the effect that being a toxic conversation has on the growth of participation. Our results show that β2 is not significantly different from 0 in most original and validation datasets (Supplementary Tables 8 and 11).
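As an illustrative sketch only, the Python code below assumes a full-interaction specification, y = b0 + b1·x + b2·(x·T) + b3·T, where x is the bin index, y the participation value and T equals 1 for toxic threads; this form is an assumption, not necessarily the exact model used in the study. Under it, b2 is the difference between the participation slopes of toxic and non-toxic threads, and because each group has its own intercept and slope, the joint least-squares fit coincides with two separate per-group fits. The function names are hypothetical, and the sketch gives point estimates only; testing whether b2 differs significantly from 0 requires standard errors, for example from a statistics package such as statsmodels with a formula like `y ~ x * toxic`.

```python
def ols_fit(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def interaction_coefficients(xs, ys, toxic):
    """OLS estimates (b0, b1, b2, b3) for the ASSUMED model
    y = b0 + b1*x + b2*x*T + b3*T, with T = 1 for toxic threads.

    With separate intercepts and slopes per group, the joint OLS fit equals
    one fit per group, which this function exploits."""
    x0 = [x for x, t in zip(xs, toxic) if not t]
    y0 = [y for y, t in zip(ys, toxic) if not t]
    x1 = [x for x, t in zip(xs, toxic) if t]
    y1 = [y for y, t in zip(ys, toxic) if t]
    a0, s0 = ols_fit(x0, y0)  # non-toxic intercept and slope
    a1, s1 = ols_fit(x1, y1)  # toxic intercept and slope
    return a0, s0, s1 - s0, a1 - a0
```

Identical slopes in the two groups give b2 = 0 even when the intercepts differ, which is the situation the reported result describes.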

Toxicity detection and validation of the models used

The problem of detecting toxicity is highly debated, to the point that there is currently no agreement on the very definition of toxic speech 64 , 76 . A toxic comment can be regarded as one that includes obscene or derogatory language 32 , that uses harsh, abusive language and personal attacks 33 , or that contains extremism, violence and harassment 11 , to give just a few examples. Although toxic speech should, in principle, be distinguished from hate speech, which is more commonly related to targeted attacks that denigrate a person or a group on the basis of attributes such as race, religion, gender, sex, sexual orientation and so on 77 , the former is sometimes also used as an umbrella term 78 , 79 . This lack of agreement directly reflects the challenging and inherently subjective nature of the concept of toxicity. The complexity of the topic makes it particularly difficult to assess the reliability of natural language processing models for automatic toxicity detection, despite the impressive improvements in the field. Modern natural language processing models, such as Perspective API, are deep learning models that leverage word-embedding techniques to represent words as vectors in a high-dimensional space, in which a metric distance should reflect the conceptual distance among words, thereby providing linguistic context. A primary concern regarding toxicity detection models is their limited ability to contextualize conversations 11 , 80 . These models often struggle to incorporate factors beyond the text itself, such as the participants’ personal characteristics, motivations, relationships and group memberships, and the overall tone of the discussion 11 . Moreover, what is considered to be toxic content can vary significantly among different groups, such as ethnicities or age groups 81 , leading to potential biases.
These biases may stem from the annotators’ backgrounds and from training datasets that might not adequately represent cultural heterogeneity. In addition, subtle forms of toxic content, such as indirect allusions, memes and inside jokes targeted at specific groups, can be particularly challenging to detect. Word embeddings equip current classifiers with a rich linguistic context, enhancing their ability to recognize a wide range of patterns characteristic of toxic expression. However, understanding the broader context of a conversation, such as personal characteristics, motivations and group dynamics, remains beyond the scope of automatic detection models. We acknowledge these inherent limitations in our approach. Nonetheless, reliance on automatic detection models is essential for large-scale analyses of online toxicity such as the one conducted in this study. We use the Perspective API for this task, as it represents the state of the art in automatic toxicity detection, offering a balance between linguistic nuance and scalable analysis. To define an appropriate classification threshold, we draw on the existing literature 64 , which uses 0.6 as the threshold for considering a comment to be toxic. This threshold is also reasonable because, according to the developer guidelines offered by Perspective, a score of 0.6 indicates that the majority of a sample of readers, namely 6 out of 10, would perceive that comment as toxic. Owing to the limitations mentioned above (for a criticism of Perspective API, see ref. 82 ), we validate our results through a comparative analysis using two other toxicity detectors: Detoxify ( https://github.com/unitaryai/detoxify ), which is similar to Perspective, and IMSYPP, a classifier developed for a European project on hate speech 16 ( https://huggingface.co/IMSyPP ).
Supplementary Table 14 reports the percentages of agreement among the three models in classifying 100,000 comments sampled at random from each of our datasets. For Detoxify, we used the same binary toxicity threshold (0.6) as with Perspective. Although IMSYPP operates on a distinct definition of toxicity, as outlined previously 16 , our comparative analysis shows general agreement in the results. This alignment, despite the differences in underlying definitions and methodologies, underscores the robustness of our findings across toxicity detection frameworks. Moreover, we perform the core analyses of this study using all classifiers on a further large and heterogeneous dataset. As shown in Supplementary Figs. 1 and 2, the results regarding the increase of toxicity with conversation size, and regarding user participation and toxicity, are quantitatively very similar across classifiers. Furthermore, we verify the stability of our findings under different toxicity thresholds. Although the main analyses in this paper use the threshold value recommended by the Perspective API, set at 0.6, to minimize false positives, our results remain consistent even when applying a less conservative threshold of 0.5, as shown in Extended Data Fig. 5, confirming the robustness of our observations across toxicity levels. For this study, we used the Perspective API’s support for languages prevalent in Europe and the Americas, including English, Spanish, French, Portuguese, German, Italian, Dutch, Polish, Swedish and Russian. Detoxify also offers multilingual support. However, IMSYPP is limited to English and Italian text, a factor considered in our comparative analysis.
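The thresholding and the agreement comparison can be sketched in Python. The request body and response path follow the publicly documented format of Perspective’s `comments:analyze` method as we understand it; the HTTP call and API-key handling are omitted, and the response in the test is a hand-written stand-in rather than real output. Treating a score exactly equal to the threshold as toxic (>=) is an assumption, as is representing IMSYPP’s output as binary labels; all function names are hypothetical. Both pairwise and three-way agreement are computed, since either reading of agreement among three models is possible.

```python
import json
from itertools import combinations

# Perspective endpoint; a real request also needs "?key=<API key>" (omitted here).
PERSPECTIVE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"
TOXICITY_THRESHOLD = 0.6  # threshold used in the main analyses


def build_request(text, language="en"):
    """JSON body requesting the TOXICITY attribute for a single comment."""
    return json.dumps({
        "comment": {"text": text},
        "languages": [language],
        "requestedAttributes": {"TOXICITY": {}},
    })


def toxicity_score(response):
    """Summary TOXICITY probability from a parsed (dict) response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def is_toxic(score, threshold=TOXICITY_THRESHOLD):
    # Assumption: a score equal to the threshold counts as toxic.
    return score >= threshold


def toxic_fraction(scores, threshold=TOXICITY_THRESHOLD):
    """Share of comments classified as toxic at the given threshold."""
    return sum(is_toxic(s, threshold) for s in scores) / len(scores)


def pairwise_agreement(labels):
    """Percentage agreement for each pair of classifiers.

    `labels` maps a classifier name to binary labels for the same comments,
    listed in the same order."""
    if len({len(v) for v in labels.values()}) != 1:
        raise ValueError("all classifiers must label the same comments")
    result = {}
    for a, b in combinations(sorted(labels), 2):
        same = sum(x == y for x, y in zip(labels[a], labels[b]))
        result[(a, b)] = 100.0 * same / len(labels[a])
    return result


def full_agreement(labels):
    """Percentage of comments on which all classifiers give the same label."""
    rows = list(zip(*labels.values()))
    return 100.0 * sum(len(set(r)) == 1 for r in rows) / len(rows)
```

Calling `toxic_fraction(scores, threshold=0.5)` mirrors the robustness check at the less conservative threshold.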

Polarization and user leaning attribution

Our approach to measuring controversy in a conversation is based on estimating the degree of political partisanship among the participants. This measure is closely related to the political science concept of political polarization, the process by which political attitudes diverge from moderate positions and gravitate towards ideological extremes, as described previously 83 . By quantifying the level of partisanship within discussions, we aim to provide insights into the extent and nature of polarization in online debates. In this context, it is important to distinguish between ‘ideological polarization’ and ‘affective polarization’. Ideological polarization refers to divisions based on political viewpoints, whereas affective polarization is characterized by positive emotions towards members of one’s own group and hostility towards those of opposing groups 84 , 85 . Here we focus specifically on ideological polarization, as the following description of our procedure for attributing user political leanings clarifies. On social media, the leaning of an individual user toward a topic can be inferred from the content they produce or from the endorsement they show toward specific content. In this study, we consider users’ endorsement of news outlets whose political leaning has been evaluated by trustworthy external sources. Although not without limitations, which we address below, this is a standard approach that has been used in several studies and has become a common, established practice in social media analysis owing to its practicality and effectiveness in providing a broad understanding of political dynamics on these platforms 1 , 43 , 86 , 87 , 88 . We label news outlets with a political score based on the information reported by Media Bias/Fact Check (MBFC) ( https://mediabiasfactcheck.com ), supplemented with the equivalent information from Newsguard ( https://www.newsguardtech.com/ ).
MBFC is an independent fact-checking organization that rates news outlets on the basis of the reliability and the political bias of the content that they produce and share. Similarly, Newsguard is a tool created by an international team of journalists that provides trust and political bias scores for news outlets. Following standard methods used in the literature 1 , 43 , we calculated the individual leaning of a user, l ∈ [−1, 1], as the average of the leaning scores l_c ∈ [−1, 1] attributed to each piece of content the user produced or shared, where l_c results from mapping the political scores of news organizations provided by MBFC and Newsguard, respectively: [left, centre-left, centre, centre-right, right] to [−1, −0.5, 0, 0.5, 1], and [far left, left, right, far right] to [−1, −0.5, 0.5, 1]. Because our datasets have different structures, we evaluate user leanings in different ways. For Facebook News, we assign a leaning score to users who left a like at least three times and commented at least three times under news outlet pages that have a political score. For Twitter News, a leaning is assigned to users who posted at least 15 comments under scored news outlet pages. For Twitter Vaccines and Gab, we consider users who shared content produced by scored news outlet pages at least three times. A limitation of our approach is that engaging with content of a given political leaning does not always imply agreement; users may interact with opposing viewpoints for critical discussion. However, research indicates that users predominantly share content aligned with their own views, especially in politically charged contexts 87 , 89 , 90 . Moreover, our method captures only users who actively express their political leanings, omitting ‘passive’ ones, owing to the lack of available data on users who do not explicitly state their opinions. Nevertheless, analysing active users offers valuable insights into the discourse of those most engaged and influential on social media platforms.
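The leaning attribution can be sketched in Python as below. The label strings mirror the two mappings given in the text; giving MBFC precedence when an outlet has both ratings is an assumption (the text only says the two sources are integrated), as are the function names. The `min_items` argument stands in for the per-dataset activity thresholds, for example 3 shared items for Twitter Vaccines and Gab or 15 comments for Twitter News; the Facebook News rule, which counts likes and comments separately, would need two such counts.

```python
# Mappings from the text: MBFC and Newsguard labels to leaning scores in [-1, 1].
MBFC_SCORES = {"left": -1.0, "centre-left": -0.5, "centre": 0.0,
               "centre-right": 0.5, "right": 1.0}
NEWSGUARD_SCORES = {"far left": -1.0, "left": -0.5, "right": 0.5, "far right": 1.0}


def content_leaning(mbfc_label=None, newsguard_label=None):
    """Leaning l_c of one piece of content from its outlet's rating(s).

    Assumption: MBFC takes precedence; Newsguard is used only as a fallback."""
    if mbfc_label in MBFC_SCORES:
        return MBFC_SCORES[mbfc_label]
    if newsguard_label in NEWSGUARD_SCORES:
        return NEWSGUARD_SCORES[newsguard_label]
    return None  # outlet has no political score


def user_leaning(items, min_items=3):
    """Average leaning l of a user, or None if there are too few scored items.

    `items` holds one (mbfc_label, newsguard_label) pair per piece of content
    the user produced, shared or commented under."""
    scores = [s for s in (content_leaning(m, g) for m, g in items) if s is not None]
    if len(scores) < min_items:
        return None
    return sum(scores) / len(scores)
```

Content from unscored outlets is ignored rather than counted as neutral, so only scored items contribute to both the average and the activity threshold.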

Burst analysis

We applied the Kleinberg burst detection algorithm 46 (see the ‘Controversy and toxicity’ section) to all conversations with at least 50 comments in a dataset. In our analysis, we randomly sample up to 5,000 conversations, each containing a specific number of comments. To ensure the reliability of our data, we exclude conversations with an excessive number of double timestamps, defined as more than 10 consecutive or over 100 within the first 24 h. This criterion helps to mitigate the influence of bots, which could distort the patterns of human activity. Furthermore, we focus on the first 24 h of each thread to analyse streams of comments during their peak activity period. Consequently, Usenet was excluded from this analysis: its unique usage characteristics render such a time-constrained analysis inappropriate, as its activity patterns do not align with those of the other platforms under consideration. By reconstructing the density profile of the comment stream, the algorithm divides the entire stream’s interval into subintervals on the basis of their level of intensity. Levels of burstiness are labelled as discrete positive values, with higher levels representing higher-activity segments. To avoid considering flat-density phases, threads with a maximum burst level equal to 2 are excluded from this analysis. To assess whether a higher intensity of comments results in higher comment toxicity, we perform a Mann–Whitney U-test 91 with Bonferroni correction for multiple testing between the distributions of the fraction of toxic comments t_i in three intensity phases: during the peak of engagement and at the highest levels before and after it. Extended Data Table 4 shows the corrected P values of each test, at a 0.99 confidence level, with the alternative hypothesis H1 indicated in the column header. An example of the distribution of the frequency of toxic comments in threads at the three phases of a conversation considered (pre-peak, peak and post-peak) is reported in Fig. 4c.
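The burst-level assignment can be illustrated with a simplified Python version of Kleinberg’s model. This is a sketch under stated assumptions, not the implementation used in the study: it uses a fixed number of states (`n_states`), illustrative parameters s = 2 and γ = 1, and numbers levels from 0 (the base rate), whereas the text labels levels as positive values, so its thresholds may be offset by one. Gaps between consecutive timestamps are modelled as exponential with a rate that grows by a factor s per level, moving up one level costs γ ln n, moving down is free, and the cheapest level sequence is found by dynamic programming (the Viterbi algorithm). The function name is hypothetical.

```python
import math


def kleinberg_levels(timestamps, s=2.0, gamma=1.0, n_states=4):
    """Burst level (0 = base rate) for each gap between consecutive comments."""
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    n = len(gaps)
    total = ts[-1] - ts[0]
    if n == 0 or total <= 0:
        raise ValueError("need at least two distinct timestamps")
    base = n / total                          # average arrival rate
    rates = [base * s ** i for i in range(n_states)]
    up_cost = gamma * math.log(n)             # cost of rising one level

    def emission(i, x):
        # Negative log-likelihood of gap x under an exponential with rates[i].
        return rates[i] * x - math.log(rates[i])

    cost = [0.0] + [math.inf] * (n_states - 1)  # the stream starts at level 0
    backptr = []
    for x in gaps:
        new_cost, ptr = [], []
        for j in range(n_states):
            # Upward moves are charged per level; downward moves are free.
            cand = [cost[i] + max(j - i, 0) * up_cost for i in range(n_states)]
            best = min(range(n_states), key=cand.__getitem__)
            new_cost.append(cand[best] + emission(j, x))
            ptr.append(best)
        cost = new_cost
        backptr.append(ptr)

    # Trace the cheapest path back from the best final state.
    state = min(range(n_states), key=cost.__getitem__)
    levels = []
    for ptr in reversed(backptr):
        levels.append(state)
        state = ptr[state]
    return levels[::-1]
```

In a toy stream where regular 10-unit gaps bracket a run of half-unit gaps, `kleinberg_levels([0, 10, 20, 30, 40, 41, 41.5, 42, 42.5, 43, 43.5, 44, 54, 64, 74])` returns `[0, 0, 0, 0, 2, 2, 2, 2, 2, 2, 2, 0, 0, 0]`, raising the level only for the dense run; the peak and the highest levels before and after it can then be read off such a sequence.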

Toxicity detection on Usenet