Google Scholar: the ultimate guide

What is Google Scholar?

- Why is Google Scholar better than Google for finding research papers?

- The Google Scholar search results page

- The first two lines: core bibliographic information

- Quick full-text access options

- "Cited by" count and other useful links

- Tips for searching Google Scholar

- Tip 1: Google Scholar searches are not case sensitive

- Tip 2: Use keywords instead of full sentences

- Tip 3: Use quotes to search for an exact match

- Tip 4: Add the year to the search phrase to get articles published in a particular year

- Tip 5: Use the side bar controls to adjust your search result

- Tip 6: Use Boolean operators to better control your searches

- Google Scholar advanced search interface

- Customizing search preferences and options

- Using the "My Library" feature in Google Scholar

- The scope and limitations of Google Scholar

- Alternatives to Google Scholar

- Country-specific Google Scholar sites

- Frequently asked questions about Google Scholar

Google Scholar (GS) is a free academic search engine that can be thought of as the academic version of Google. Rather than searching all of the indexed information on the web, it searches repositories of:

- universities

- scholarly websites

This is generally a smaller subset of the pool that Google searches. It's all done automatically, but most of the search results tend to be reliable scholarly sources.

However, Google Scholar is typically less selective about what it includes in search results than more curated, subscription-based academic databases like Scopus and Web of Science. As a result, it is important to take some time to assess the credibility of the resources linked through Google Scholar.

One advantage of using Google Scholar is that the interface is comforting and familiar to anyone who uses Google. This lowers the learning curve of finding scholarly information.

There are a number of useful differences from a regular Google search. Google Scholar allows you to:

- copy a formatted citation in different styles including MLA and APA

- export bibliographic data (BibTeX, RIS) to use with reference management software

- explore other works that have cited the listed work

- easily find full text versions of the article

Although it is free to search in Google Scholar, most of the content is not freely available. Google does its best to find copies of restricted articles in public repositories. If you are at an academic or research institution, you can also set up a library connection that allows you to see items that are available through your institution.

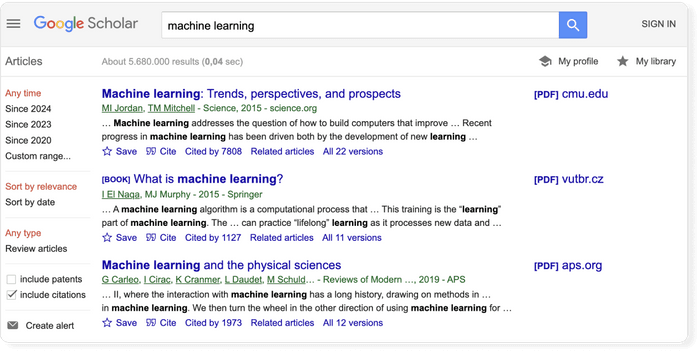

The Google Scholar results page differs from the Google results page in a few key ways, so it is worth becoming familiar with the different pieces of information it shows. Let's have a look at the results for the search term "machine learning".

- The first line of each result provides the title of the document (e.g. of an article, book, chapter, or report).

- The second line provides the bibliographic information about the document, in order: the author(s), the journal or book it appears in, the year of publication, and the publisher.

Clicking on the title link will bring you to the publisher’s page where you may be able to access more information about the document. This includes the abstract and options to download the PDF.

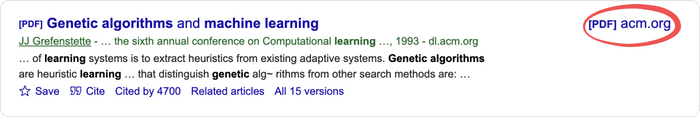

To the far right of the entry are more direct options for obtaining the full text of the document. In this example, Google has also located a publicly available PDF of the document hosted at umich.edu. Note that this is not guaranteed to be the version of the article that was finally published in the journal.

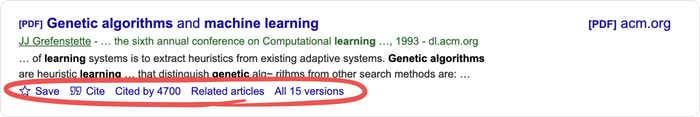

Below the text snippet/abstract you can find a number of useful links.

- Cited by: the "cited by" link shows other articles that have cited this resource. This is useful in two ways. First, it is a good way to track more recent research that has referenced the article. Second, the fact that other researchers cited the document lends it greater credibility. Be aware, however, that there is a publication lag: it takes a minimum of 6 months for most articles to get published, so a newer article that uses the source may not have appeared yet. A recently published article will therefore not yet have an extensive number of "cited by" results.

- Versions: this link will display other versions of the article or other databases where the article may be found, some of which may offer free access to the article.

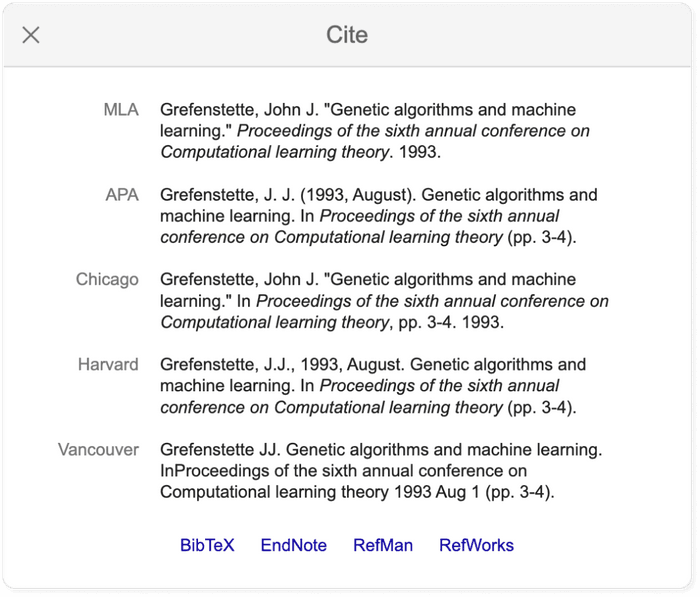

- Quotation mark icon: this will display a popup with commonly used citation formats such as MLA, APA, Chicago, Harvard, and Vancouver that may be copied and pasted. Note, however, that the Google Scholar citation data is sometimes incomplete, so it is often a good idea to check this data at the source. The "cite" popup also includes links for exporting the citation data as BibTeX or RIS files that any major reference manager can import.

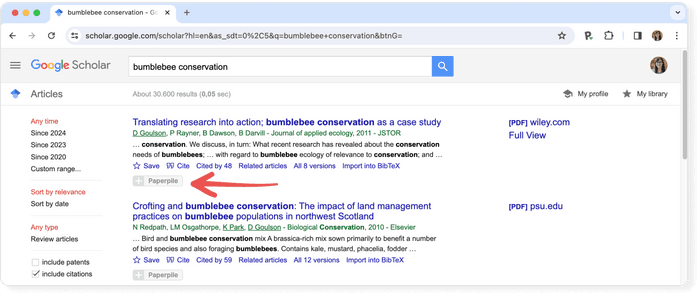

Although Google Scholar limits each search to a maximum of 1,000 results, that is still too many to explore, and you need an effective way of locating the relevant articles. Here’s a list of pro tips that will help you save time and search more effectively.

You don’t need to worry about case sensitivity when you’re using Google Scholar. In other words, a search for "Machine Learning" will produce the same results as a search for "machine learning".

Let's say your research topic is self-driving cars. For a regular Google search, you might enter something like "what is the current state of the technology used for self driving cars". In Google Scholar, you will see less than ideal results for this query.

The trick is to build a list of keywords, such as self-driving cars, autonomous vehicles, or driverless cars, and search for those. Google Scholar will help you with that: if you start typing in the search field, you will see related queries suggested by Scholar.

If you put your search phrase into quotes you can search for exact matches of that phrase in the title and the body text of the document. Without quotes, Google Scholar will treat each word separately.

This means that if you search for national parks without quotes, the two words will not necessarily appear together in the results. Grouped words and exact phrases should be enclosed in quotation marks.

To find articles published in a particular year, add the year to the search phrase. A search for self-driving cars 2015, for example, will return articles or books published in 2015.

Using the options in the left-hand panel, you can further restrict the search results by limiting the years covered by the search and by including or excluding patents. You can also sort the results by relevance or by date.

Searches are not case sensitive. However, there are a number of Boolean operators you can use to control the search, and these must be capitalized.

- AND requires both of the words or phrases on either side to be somewhere in the record.

- NOT can be placed in front of a word or phrase to exclude results that include it.

- OR will give equal weight to results which match just one of the words or phrases on either side.

In case you got overwhelmed by the above options, here are some illustrative examples:

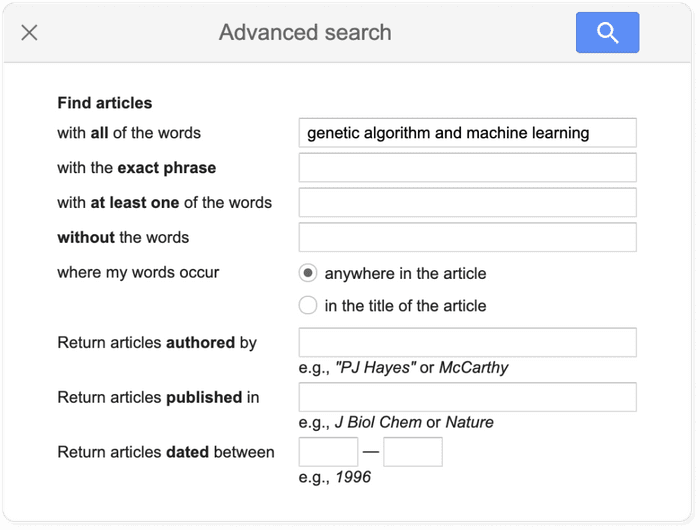
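The search tips above (exact phrases in quotes, capitalized Boolean operators, a year restriction) can also be combined programmatically. The following is a minimal sketch, not an official Google Scholar API: the helper names are hypothetical, and the `q`, `as_ylo`, and `as_yhi` URL parameters are assumptions based on what appears in the address bar after a manual search with a custom year range. It only builds a URL that you can open in a browser.

```python
from urllib.parse import urlencode


def quote_phrase(term: str) -> str:
    """Wrap multi-word terms in double quotes so they match as exact phrases."""
    term = term.strip()
    return f'"{term}"' if " " in term else term


def build_query(any_of, exclude=()):
    """Join alternative keywords with OR and prefix excluded terms with NOT.

    The operators are capitalized, as the guide says they must be.
    """
    query = " OR ".join(quote_phrase(t) for t in any_of)
    for term in exclude:
        query += f" NOT {quote_phrase(term)}"
    return query


def scholar_url(query: str, year=None) -> str:
    """Build a search URL; the parameter names are assumptions (see above)."""
    params = {"q": query}
    if year is not None:
        params["as_ylo"] = year  # assumed: earliest publication year
        params["as_yhi"] = year  # assumed: latest publication year
    return "https://scholar.google.com/scholar?" + urlencode(params)


query = build_query(["self-driving cars", "autonomous vehicles"], exclude=["patents"])
url = scholar_url(query, year=2015)
print(query)
print(url)
```

Keeping the query string separate from the URL means the same string can be pasted directly into the Google Scholar search box.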

Tip: Use the advanced search features in Google Scholar to narrow down your search results.

You can gain even more fine-grained control over your search by using the advanced search feature. This feature is available by clicking on the hamburger menu in the upper left and selecting the "Advanced search" menu item.

Adjusting the Google Scholar settings is not necessary for getting good results, but offers some additional customization, including the ability to enable the above-mentioned library integrations.

The settings menu is found in the hamburger menu located in the top left of the Google Scholar page. The settings are divided into five sections:

- Collections to search: by default Google Scholar searches articles and includes patents, but this default can be changed if you are not interested in patents or if you wish to search case law instead.

- Bibliography manager: you can export relevant citation data via the “Bibliography manager” subsection.

- Languages: if you wish for results to return only articles written in a specific subset of languages, you can define that here.

- Library links: as noted, Google Scholar allows you to get the full text of articles through your institution’s subscriptions, where available. Search for, and add, your institution here to have the relevant link included in your search results.

- Button: the Scholar Button is a Chrome extension which adds a dropdown search box to your toolbar. This allows you to search Google Scholar from any website. Moreover, if you have any text selected on the page when you click the button, it will display results from a search on those words.

When signed in, Google Scholar adds some simple tools for keeping track of and organizing the articles you find. These can be useful if you are not using a full academic reference manager.

All the search results include a “save” button at the end of the bottom row of links. Clicking it adds the result to "My Library".

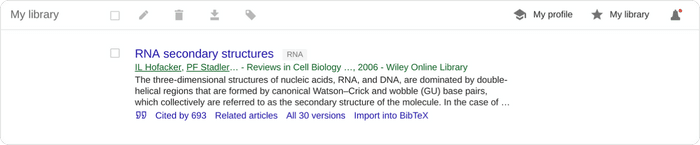

To give your library some structure, you can create and apply labels to the items in it. Appended labels will appear at the end of the article titles. For example, an article might be assigned an “RNA” label.

Within your Google Scholar library, you can also edit the metadata associated with titles. This will often be necessary, as Google Scholar citation data is frequently faulty.

There is no official statement about how big the Scholar search index is, but unofficial estimates are in the range of about 160 million documents, and it is expected to continue to grow by several million each year.

Yet Google Scholar does not return all the resources that you may get from a search of your local library catalog. For example, a library database could return podcasts, videos, articles, statistics, or special collections. For now, Google Scholar has only the following publication types:

- Journal articles: articles published in journals. It's a mixture of articles from peer-reviewed journals, predatory journals and pre-print archives.

- Books: links to the Google limited version of the text, when possible.

- Book chapters: chapters within a book. Sometimes they are also electronically available.

- Book reviews: reviews of books, but it is not always apparent from the search result that it is a review.

- Conference proceedings: papers written as part of a conference, typically used as part of a presentation at the conference.

- Court opinions.

- Patents: Google Scholar only searches patents if the option is selected in the search settings described above.

The information in Google Scholar is not cataloged by professionals. The quality of the metadata will depend heavily on the source that Google Scholar is pulling the information from. This is very different from how information is collected and indexed in scholarly databases such as Scopus or Web of Science.

Google Scholar is by far the most frequently used academic search engine, but it is not the only one. Other academic search engines include:

- Science.gov

- Semantic Scholar

Country-specific Google Scholar sites:

- scholar.google.fr: Sur les épaules d'un géant

- scholar.google.es (Google Académico): A hombros de gigantes

- scholar.google.pt (Google Académico): Sobre os ombros de gigantes

- scholar.google.de: Auf den Schultern von Riesen

Frequently asked questions about Google Scholar

Google Scholar is not a database: it is a bibliographic search engine rather than a bibliographic database. In order to qualify as a database, Google Scholar would need to have stable identifiers for its records.

Google Scholar is not itself a scholarly source: it is an academic search engine, although the records found in Google Scholar are scholarly sources.

Google Scholar is not limited to peer-reviewed work: it collects research papers from all over the web, including grey literature and non-peer-reviewed papers and reports.

Google Scholar does not provide any full text content itself, but links to the full text article on the publisher page, which can either be open access or paywalled content. Google Scholar tries to provide links to free versions, when possible.

The easiest way to access Google Scholar is by using the Google Scholar Button. This is a browser extension that allows you to easily access Google Scholar from any web page. You can install it from the Chrome Web Store.

Effective presentation skills

Robert Dolan, Effective presentation skills, FEMS Microbiology Letters, Volume 364, Issue 24, December 2017, fnx235, https://doi.org/10.1093/femsle/fnx235

Most PhDs will face a presentation component during the interview process and will also present their work at conferences. This article provides guidance on how to develop relevant content and deliver it effectively to your audience.

Most organizations list communication skills as one of their most critical issues, and presentation skills are a large component of communications. Presentation skills are crucial to almost every aspect of academic and business life, from meetings, interviews and conferences to trade shows and job fairs. Leadership and presentation skills often go hand in hand. In the NACE 2016 survey, the ability to communicate verbally (internally and externally) was rated 4.63 out of 5.0 and was the #1 skill employers want. This article provides tips and strategies for delivering an effective presentation, one that aligns the speaker with the audience.

What type of speaker are you?

Avoider: You do everything possible to escape from having to get in front of an audience.

Resister: You may have to speak, but you never encourage it.

Accepter: You’ll give presentations but don’t seek those opportunities. Sometimes you feel good about a presentation you gave.

Seeker: You look for opportunities to speak. You find the anxiety a stimulant that fuels enthusiasm during a presentation.

Facts and fears of public speaking

Public speaking can create anxiety and fear in many people. Dale Carnegie has a free e-book that provides tips and advice on how to minimize these fears: www.dalecarnegie.com/Free-eBook

People are caught between their fear and the fact that many employers expect them to demonstrate good verbal communication skills.

Most interviews for PhDs have a presentation component.

Academic interviews always have a presentation component.

If your job doesn’t demand presentation skills, odds are that you’ll need them in your next job.

Your blueprint for delivery

Information by itself can be boring, unless it's unique or unusual. Conveying it through stories, gestures and analogies makes it interesting. A large portion of the impact of communications rests on how you look and sound, not only on what you say. Having good presentation skills allows you to make the most of your first impression, especially at conferences and job interviews. As you plan your presentation, put yourself in the shoes of the audience.

Values: What is important to them?

Needs: What information do they want?

Constraints: Understand their level of knowledge on the subject and target them appropriately.

Demographics: Size of audience and location may influence the presentation. For example, a large auditorium may be more formal and less personal than a presentation to your team or lab mates in a less formal setting.

Structure: Introduction, Content and Conclusion

Body Language and Movement

Verbal Delivery

Introduction

Build rapport with audience (easier in a smaller less formal setting).

State preference for questions—during or after?

Set stage: provide agenda, objective and intended outcomes

Introduce yourself providing your name, role and function. Let the audience know the agenda, your objectives and set their expectations. Give them a reason to listen and make an explicit benefit statement, essentially what's in it for them. Finally, let them know how you will accomplish your objective by setting the agenda and providing an outline of what will be covered.

Content

Deliver your message logically and in a structured way.

Use appropriate anecdotes and examples.

Illustrate and emphasize key points by using color schemes or animations.

Establish credibility, possibly citing references or publications.

Structure your presentation to maximize delivery. Deliver the main idea and communicate to the audience what your intended outcome will be. Transition well through the subject matter and move through your presentation by using phrases such as ‘now we will review…’ or ‘if there are no more questions, we will now move on to…’. Be flexible but stay on course. If needed, use examples not in the presentation to emphasize a point, but don’t get sidetracked. Stay on course by using phrases such as ‘let's get back to…’. Occasionally, reiterate the benefits of the content and the main idea of your presentation.

Conclusion

Restate the main objective and key supporting points.

For Q&A: ‘Who wants more details?’ (Not, ‘any questions?’)

Prompting for questions: ‘A question I often hear is…’

Summarize the main elements of your presentation as they relate to the original objective. If applicable, highlight a key point or crucial element for the audience to take away. Signal that the end is near with phrases such as ‘to wrap up’ or ‘to sum up’. Clearly articulate the next steps, actions or practical recommendations. Thank the audience and solicit final questions.

Body language and movement

Your non-verbal communications are key elements of your presentation. They comprise open body posture, eye contact, facial expressions, hand gestures and the space between you and the audience.

Stand firmly and move deliberately. Do not sway or shift.

Move at appropriate times during presentation (e.g. move during transitions or to emphasize a point).

Stand where you can see everyone and do not block the visuals/screen.

Decide on a resting position for hands (should feel and look comfortable).

Gestures should be natural and follow what you are saying.

Hand movement can emphasize your point.

Make gestures strong and crisp…ok to use both arms/hands.

Keep hands away from face.

When pointing to the screen, do so deliberately. Do not wave, and face the audience when you speak.

Look at audience's faces, not above their heads.

In an interview or business meeting, look at the decision makers as well as everyone else.

Look at faces for 3–5 seconds and then move on to the next person.

Do not look away from the audience for more than 10 seconds.

Looking at a person keeps them engaged.

Looking at their faces tells you how your delivery and topic are being received by the audience. The audience's body language may show interest, acceptance, openness, boredom, hostility, disapproval or neutrality. Read the audience and adjust where appropriate to keep them engaged. For example, if they seem bored, inject an interesting anecdote or story to trigger more interest. If they appear to disapprove, ask for questions or comments to better understand how you might adjust your delivery and content.

Verbal delivery

Use active rather than passive verbs.

Avoid technical terms, unless you know the audience is familiar with them.

Always use your own words and phrases.

Cut out jargon/slang words.

Look at your audience and use vocal techniques to catch their attention. Consider changing your pace or volume, use a longer than normal pause between key points, and change the pitch or inflection of your voice if needed. Consider taking a drink of water to force yourself to pause or slow down. View the audience as a group of individual people, and address them as if they were a single person.

Tips for reducing anxiety

If you experience nervousness before your presentation, as most people do, consider the following.

Be organized: Knowing that your presentation and thoughts are well organized will give you confidence.

Visualize: Imagine delivering your presentation with enthusiasm and leaving the room knowing that you did a good job.

Practice: All successful speakers rehearse their presentations. Rehearse alone or with your team, or videotape yourself and review your performance afterwards. Another tip is to make contact before your talk. If possible, speak with the audience before your presentation begins, although this is not always possible with a large audience. Walk up to them and thank them in advance for inviting you to speak.

Movement: Speakers who stand in one spot may experience tension. In order to relax, move in a purposeful manner and use upper body gestures to make points.

Eye contact: Make your presentation a one-on-one conversation. Build rapport by making it personal and personable. Use words such as ‘we’, ‘our’ and ‘us’. Eye contact helps you relax because you become less isolated from the audience.

Personal appearance

Clothes should fit well, not too tight. Consider wearing more professional business-like attire. Find two to three colors that work well for you. Conservative colors, such as black, blue, gray and brown, seem to be the safest bet when presenting or meeting someone for the first time in a professional setting. Depending upon the audience, a sport coat and well-matched dress slacks are fine. Generally, try to avoid bright reds, oranges and whites, since these tend to draw attention away from your face. Avoid jewelry that sparkles, dangles or makes noise. Use subtle accessories to complement your outfit.

Other resources:

- www.toastmasters.org

- https://www.skillsyouneed.com/present/presentation-tips.html

- https://www.ag.ndsu.edu/evaluation/documents/effective-presentations-a-toolkit-for-engaging-an-audience

Does a presentation’s medium affect its message? PowerPoint, Prezi, and oral presentations

Affiliations Department of Psychology, Harvard University, Cambridge, Massachusetts, United States of America, Harvard Initiative for Learning and Teaching, Harvard University, Cambridge, Massachusetts, United States of America

Affiliation Harvard Initiative for Learning and Teaching, Harvard University, Cambridge, Massachusetts, United States of America

Affiliation Minerva Schools at the Keck Graduate Institute, San Francisco, California, United States of America

- Samuel T. Moulton,

- Selen Türkay,

- Stephen M. Kosslyn

- Published: July 5, 2017

- https://doi.org/10.1371/journal.pone.0178774

12 Oct 2017: The PLOS ONE Staff (2017) Correction: Does a presentation's medium affect its message? PowerPoint, Prezi, and oral presentations. PLOS ONE 12(10): e0186673. https://doi.org/10.1371/journal.pone.0186673

Despite the prevalence of PowerPoint in professional and educational presentations, surprisingly little is known about how effective such presentations are. All else being equal, are PowerPoint presentations better than purely oral presentations or those that use alternative software tools? To address this question we recreated a real-world business scenario in which individuals presented to a corporate board. Participants (playing the role of the presenter) were randomly assigned to create PowerPoint, Prezi, or oral presentations, and then actually delivered the presentation live to other participants (playing the role of corporate executives). Across two experiments and on a variety of dimensions, participants evaluated PowerPoint presentations comparably to oral presentations, but evaluated Prezi presentations more favorably than both PowerPoint and oral presentations. There was some evidence that participants who viewed different types of presentations came to different conclusions about the business scenario, but no evidence that they remembered or comprehended the scenario differently. We conclude that the observed effects of presentation format are not merely the result of novelty, bias, experimenter-, or software-specific characteristics, but instead reveal a communication preference for using the panning-and-zooming animations that characterize Prezi presentations.

Citation: Moulton ST, Türkay S, Kosslyn SM (2017) Does a presentation’s medium affect its message? PowerPoint, Prezi, and oral presentations. PLoS ONE 12(7): e0178774. https://doi.org/10.1371/journal.pone.0178774

Editor: Philip Allen, University of Akron, UNITED STATES

Received: November 2, 2016; Accepted: May 18, 2017; Published: July 5, 2017

Copyright: © 2017 Moulton et al. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All data files are available from the Open Science Framework https://osf.io/fgf7c/.

Funding: This research was supported by a grant from Prezi (http://www.prezi.com) to SMK. In the sponsored research agreement (which we are happy to provide) and in our conversations with Prezi leadership, they agreed to let us conduct the study as we wished and publish it no matter what the results revealed. Aside from funding the research, the only role that any employees of Prezi played was (as documented in the manuscript) 1) to provide us with a distribution list of Boston-area Prezi customers (8 of whom participated in the first experiment) and 2) as experts in Prezi, review the background questionnaire to ensure that we were accurately describing Prezi’s purported benefits and features (just as PowerPoint and oral presentation experts did the same). No employees at Prezi had any role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript. None of the authors have any professional or financial connection to Prezi or personal relationships with any Prezi employees. We do not plan to conduct any follow-up research on this topic or obtain future funding from Prezi. As evident in the manuscript, we took special care not to allow bias or demand characteristics to influence this research.

Competing interests: This research was supported by a grant to SMK from Prezi (http://www.prezi.com), a commercial funder. This does not alter our adherence to PLOS ONE policies on sharing data and materials.

Introduction

How do the characteristics of a communication medium affect its messages? This question has been the subject of much philosophical and empirical inquiry, with some (e.g., [1]) claiming that the medium determines the message (“the medium is the message”), others (e.g., [2]) claiming that characteristics of a medium affect the message, and others claiming that the medium and message are separable (e.g., [3, 4]). As psychologists, we ask: What mental mechanisms underlie effective communication and how can presenters leverage these mechanisms to communicate better? These questions, at the intersection of psychology and communication practice, motivate this research.

That said, the relative efficacy of different communication media or technologies informs the primary questions of interest. If we can demonstrate that oral presentations are less or more effective than those that rely on presentation software, or that presenters who use one type of presentation software tend to be more effective than those who use another, then we advance our psychological and practical understanding of effective communication. Thus, in the tradition of use-inspired basic research [5], and as a means to an end rather than an end unto itself, we compare the effectiveness of three commonly-used formats for communication: oral, PowerPoint, and Prezi presentations.

We focused on presentations because they populate our academic, professional, and even personal lives in the form of public speeches, academic lectures, webinars, class presentations, wedding toasts, courtroom arguments, sermons, product demonstrations, and business presentations [6–8], and because basic questions remain about how to present effectively. Should we present with or without presentation software? If we should present with software, which software? We examined PowerPoint and Prezi because they are popular and psychologically interesting alternatives: Whereas PowerPoint’s linear slide format might reduce cognitive load, focus attention, and promote logical analysis, Prezi’s map-like canvas format and heavy reliance on animation (see the Background section and https://prezi.com for examples) might facilitate visuospatial processing, conceptual understanding, and narrative storytelling.

To inform the present research, we explore the methodological challenges of media research and review past research on presentation formats.

Methodological challenges of media research

To research the efficacy of different communication formats fairly and accurately, one must overcome two stubborn methodological challenges. First, because correlation is not causation and the variables that underlie media usage are heavily confounded, such research requires true experimentation. To study whether a blended learning “flipped classroom” is a more effective instructional medium than traditional lecturing, for example, researchers gain little insight by comparing outcomes for students who enroll in one type of course versus the other. To control for audience (in this case, student) self-selection effects, researchers need to 1) randomly assign audience members to different communication conditions (in this case, pedagogies) or 2) manipulate format within participants. Moreover, the same methodological controls need to be applied to presenters (in this case, instructors). Instructors who choose to teach with emerging, innovative methods probably differ in numerous other respects (e.g., motivation) from those who teach with more traditional methods. If students assigned randomly to a flipped classroom format perform better than those assigned randomly to a traditional classroom format, we risk drawing inferences about confounds instead of causes unless instructors are also assigned randomly to instructional media. To make strong, accurate inferences, therefore, researchers interested in communication must control for audience and presenter self-selection effects. Such control introduces new complexities; when randomly assigning presenters to formats, for example, one must ensure that all presenters receive sufficient training in the relevant format. Moreover, such control is often cumbersome, sometimes impractical, and occasionally unethical (e.g., randomly assigning students in actual courses to hypothetically worse instructional conditions). But there are no adequate methodological substitutes for proper experimental control.
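The double random assignment described above (presenters and audience members each assigned to a format by chance rather than by choice) can be sketched in a few lines. This is an illustrative sketch only, not the authors' actual procedure: the function name, participant IDs, and group sizes are hypothetical, and the three conditions are simply the formats compared in this article.

```python
import random
from collections import Counter

# The three formats compared in this article.
FORMATS = ["oral", "PowerPoint", "Prezi"]


def assign_conditions(people, conditions, seed=None):
    """Randomly assign each person to one condition, keeping group sizes balanced."""
    rng = random.Random(seed)  # seeded only so the example is reproducible
    shuffled = list(people)
    rng.shuffle(shuffled)  # random order, so nobody selects their own condition
    # Deal the shuffled people out to the conditions in turn (round-robin).
    return {p: conditions[i % len(conditions)] for i, p in enumerate(shuffled)}


# Hypothetical participant IDs.
presenters = [f"presenter_{i}" for i in range(6)]
audience = [f"audience_{i}" for i in range(9)]

# Randomize both sides, so that neither presenter nor audience
# self-selection can be confounded with format.
presenter_format = assign_conditions(presenters, FORMATS, seed=1)
audience_format = assign_conditions(audience, FORMATS, seed=2)

print(Counter(presenter_format.values()))
print(Counter(audience_format.values()))
```

Shuffling and then dealing round-robin is one simple way to get equal group sizes; assigning each person independently at random would also be valid but can leave the groups unbalanced.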

A second thorny methodological challenge inherent in conducting media research concerns how to draw general inferences about formats instead of specific inferences about exemplars of those formats. For example, if one advertising expert is assigned randomly to design a print ad and another expert a television ad—and a hundred consumers are assigned randomly to view the television or print ad—can we actually infer anything about print versus television ads in general when the two groups of consumers behave differently? Arguably not, because such a finding is just as easily explained by other (confounding) differences between the ads or their creators (e.g., ratio of print to graphics, which sorts of people—if any—are shown, and so forth). In other words, even with proper random assignment, researchers who intend to study different forms of communication risk merely studying different instances of communication. Statistically speaking, one should assume a random not fixed effect of the communication objects of interest (e.g., presentations, lectures, advertisements). To overcome this challenge and draw generalizable inferences, one must (at the very least) sample a sufficiently large set of examples within each medium.
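The exemplar problem can be illustrated with a small simulation. This is a sketch under stated assumptions: the true difference between the two formats is set to zero, exemplar quality and viewer noise are drawn from normal distributions with arbitrary standard deviations, and all function names are hypothetical.

```python
import random
import statistics

def observed_difference(rng, n_exemplars, viewers_per_format,
                        exemplar_sd=1.0, noise_sd=1.0):
    """Mean response gap between two formats whose TRUE effect is zero."""
    format_means = []
    for _ in range(2):  # two formats, e.g. print vs. television
        # Each exemplar (one ad, one lecture) has its own random quality.
        qualities = [rng.gauss(0.0, exemplar_sd) for _ in range(n_exemplars)]
        # Viewers are spread evenly over the exemplars of their format.
        responses = [
            qualities[viewer % n_exemplars] + rng.gauss(0.0, noise_sd)
            for viewer in range(viewers_per_format)
        ]
        format_means.append(statistics.fmean(responses))
    return format_means[0] - format_means[1]

def typical_spurious_gap(n_exemplars, viewers_per_format=60, reps=500, seed=0):
    """Average absolute gap across many replications of the same null study."""
    rng = random.Random(seed)
    gaps = [abs(observed_difference(rng, n_exemplars, viewers_per_format))
            for _ in range(reps)]
    return statistics.fmean(gaps)

gap_one = typical_spurious_gap(n_exemplars=1)    # one exemplar per format
gap_many = typical_spurious_gap(n_exemplars=30)  # thirty exemplars per format
```

Under these assumptions `gap_many` comes out well below `gap_one`: with a single exemplar per format, the apparent "format effect" mostly reflects which two exemplars happened to be compared.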

Research on presentation software

Methodological shortcomings.

Considerable research has been conducted on how different presentation formats (particularly PowerPoint) convey information (for review, see [ 9 ]). However, much of this research is anecdotal or based on case studies. For example, Tufte [ 10 ] claims that PowerPoint’s default settings lead presenters to create bulleted lists and vacuous graphs that abbreviate arguments and fragment thought. And Kjeldsen [ 11 ] used Al Gore’s TED talk on climate change as a positive example of how visuals can be used to effectively convey evidence and enhance verbal communication.

Research that goes beyond mere anecdote or case study is plagued by the aforementioned methodological shortcomings: failure to control for audience self-selection effects (71% of studies), failure to control for presenter self-selection effects (100% of studies), and a problematic assumption of fixed effects across content and presenters (91% of studies). As is evident in Table 1 , no studies overcame two of these shortcomings, let alone all three. For example, in one of the most heavily cited publications on this topic, Szabo and Hasting [ 12 ] investigated the efficacy of PowerPoint in undergraduate education. In the first study, they examined whether students who received lectures with PowerPoint performed better on a test than students who received traditional lectures. Students were not assigned randomly to lecture conditions, however; rather, the comparison was across time, between two cohorts of students enrolled in different iterations of the same course. Any observed outcome difference could have been caused by student or instructor variables (e.g., preparedness), not lecture format. The fact that no such differences were found does not obviate this concern: Such differences may in fact have been present, but were overshadowed by confounding characteristics of students or instructors. In the second study, the authors varied presentation format within the same cohort of students, but confounded format with order, time, content, and performance measure: student performance was compared between lectures on different days, on different topics, and using different tests. As the authors themselves note, the observed differences may have had nothing to do with PowerPoint. In the third study, they counterbalanced lecture order and content; some students received a PowerPoint lecture first and others a traditional lecture first, and the same topics were presented in both formats. However, students were assigned to conditions based on their course enrollment rather than randomly. More importantly, the study included only four presentations, all by one presenter. Any advantages of the two PowerPoint lectures (none were found) might have been particular to those instances or that presenter and not representative of the format more generally.

https://doi.org/10.1371/journal.pone.0178774.t001

Most studies, even those that control experimentally for audience self-selection, relied on only a single self-selected presenter, and some relied on only one presentation per format. In one study ([ 13 ]: Experiment 1), for example, one of the authors varied the format of his lecture instruction randomly across the semester, using transparencies or PowerPoint slides. In another study [ 14 ], students who were enrolled in one of the authors’ courses were assigned randomly to a PowerPoint or Prezi e-lecture that contained identical audio narration and written text. In a third study [ 15 ], one of the researchers gave the same lecture over the course of the year to rotating medical students, using PowerPoint on odd months and overhead slides on even months. What reason is there to think that we can make general claims about presentation format based on studies of single lectures or single presenters? That is, how can we reasonably assume fixed as opposed to random effects? If the use of presentation software does meaningfully influence student learning or experience, surely that effect is not constant across all presenters or presentations: some instructors use it more effectively than others, and within any format some presentations are more effective than others (see [ 16 ]). And how can we assume that presenters who select both the content and format of their presentations are not designing them in ways that favor one format over another?

Research on the efficacy of presentation software has numerous other flaws, most notably the failure to control for experimenter effects or demand characteristics. In 82% of studies we identified, for example, the researchers investigated their own instruction and studied their own students. It is difficult to imagine that one would make these instructional and research efforts (e.g., creating new course material, conducting a field experiment) without a strong belief in the efficacy of one format over the other, and it is plausible (if not likely) that such beliefs would influence students or confound instructional format with instructional effort and enthusiasm.

Another common issue is the confounding of lecture format with access to study materials—in studies that contrast PowerPoint with traditional lecturing (e.g., [ 17 – 19 ]), students in the PowerPoint condition (but not the control condition) sometimes have access to PowerPoint slides as study material. This access could bias student motivation, behavior (e.g., attendance), course satisfaction, and performance (see [ 20 ]).

PowerPoint: Performance, perception, and persuasion.

Despite their methodological shortcomings, what are the findings of this research literature? The majority of studies examined the use of PowerPoint in higher education and measured both objective and subjective outcomes (see Table 1 ). They typically involved students enrolled in one or more of the researchers’ courses, and contrasted the efficacy of lectures (or whole lecture courses) that used PowerPoint with those that used a more traditional technology (e.g., blackboards, overhead projectors). In terms of student performance, their findings were notably mixed: Of the 28 studies we identified, 17 found no effect of PowerPoint lectures relative to traditional lectures ([ 12 ]: Experiments 1,3; [ 13 , 15 , 21 – 33 ]), 9 found a performance benefit of PowerPoint over traditional instruction ([ 12 ]: Experiment 2; [ 17 – 19 , 34 – 38 ]), and 2 found a performance benefit of traditional over PowerPoint instruction [ 39 , 40 ].

There is near consensus in the literature, however, when it comes to student perception: Of the 26 studies we identified, 21 found that students preferred PowerPoint over traditional instruction ([ 12 ]: Experiment 1; [ 13 , 17 – 19 , 21 , 23 , 25 , 26 , 28 , 29 , 31 – 33 , 35 , 39 , 41 – 45 ]), 2 found that students preferred traditional over PowerPoint instruction [ 40 , 46 ], and 3 found no preference for one format or the other [ 15 , 22 , 37 ]. As one example, Tang and Austin [ 45 ] surveyed 215 undergraduates in business courses about their general perceptions of different lecture formats; on measures of enjoyment, learning, motivation, and career relevance, they found that students rated lectures with PowerPoint slides more favorably than lectures with overheads or without visual aids. An additional 7 studies did not contrast student perceptions of PowerPoint with another technology but simply surveyed students about PowerPoint; these studies all found that students had, on average, favorable impressions of PowerPoint-based instruction [ 36 , 47 – 52 ].
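To make the contrast between the "mixed" performance findings and the "near consensus" on perception concrete, the tallies reported above can be converted into shares. This is only an arithmetic sketch. The dictionary keys are shorthand labels introduced here, and the counts are taken directly from the text.

```python
# Study counts as reported in the text (28 performance, 26 perception studies).
performance = {"no effect": 17, "favors PowerPoint": 9, "favors traditional": 2}
perception = {"prefers PowerPoint": 21, "prefers traditional": 2, "no preference": 3}

def shares(tally):
    """Convert raw study counts into percentages of the tally's total."""
    total = sum(tally.values())
    return {outcome: round(100 * count / total, 1)
            for outcome, count in tally.items()}

performance_shares = shares(performance)
perception_shares = shares(perception)

for label, result in (("performance", performance_shares),
                      ("perception", perception_shares)):
    print(label, result)
```

On these counts, roughly three in five performance studies found no effect, whereas about four in five perception studies found a preference for PowerPoint.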

In addition to these studies of how presentation software impacts student performance and perception, two studies examined PowerPoint’s impact on audience persuasion. Guadagno, Sundie, Hardison, and Cialdini [ 53 ] argue that we heuristically use a presentation’s format to evaluate its content, particularly when we lack the expertise to evaluate the content on its merits. To test this hypothesis, they presented undergraduates with key statistics about a university football recruit and asked them to evaluate the recruit’s career prospects. The same statistics were presented in one of three formats: a written summary, a graphical summary via printed-out PowerPoint slides, or a graphical summary via animated PowerPoint slides (self-advanced by the participant). Participants shown the computer-based PowerPoint presentation tended to rate the recruit more positively than other participants, and there was some evidence that this effect was more pronounced for football novices than for experts. The findings of this study suggest that some presentation formats may be more persuasive than others, perhaps because audience members conflate a sophisticated medium with a sophisticated message.

In the second study to examine the impact of PowerPoint on persuasion, Park and Feigenson [ 54 ] examined the impact of video-recorded presentations on mock juror decision-making. Participants were more persuaded by attorneys on either side of a liability case when the attorney used PowerPoint slides as opposed to merely oral argument. They also remembered more details from PowerPoint than oral presentations, and evaluated both attorneys as more persuasive, competent, credible, and prepared when they presented with PowerPoint. Based on mediation analyses, the researchers argue that the decision-making benefit of PowerPoint results from both deliberative and heuristic processing (“slow” and “fast” thinking, respectively, see [ 55 ]).

Both of these studies, however, share the methodological limitations of the educational research on PowerPoint. The first study [ 53 ] used only one PowerPoint presentation, and the second [ 54 ] used only two. The presentations used were not selected at random from a larger stimulus pool but instead were created by researchers who hypothesized that PowerPoint would enhance presentations. But even if the presentations had been sampled randomly, the sample is too small to allow one to generalize to a broader population. In studying performance, perception, or persuasion, one cannot reasonably assume that all presentation effects are equal.

Prezi: A zoomable user interface.

Released in 2009, Prezi has received generally favorable reviews by researchers, educators, and professional critics [ 56 – 60 ]. With a purported 75 million users worldwide, it is increasingly popular but still an order of magnitude less so than PowerPoint (with as many as one billion users; [ 61 ]). Like PowerPoint and other slideware, Prezi allows users to arrange images, graphics, text, audio, video and animations, and to present them alongside aural narration to an in-person or remote audience. In contrast to PowerPoint and other slideware in which users create presentations as a deck of slides, Prezi users create presentations on a single visuospatial canvas. In this regard, Prezi is much like a blackboard and chalk. But unlike a physical blackboard, the Prezi canvas is infinite (cf. [ 62 ]) and zoomable: in designing presentations, users can infinitely expand the size of their canvas and can zoom in or out. When presenting, users define paths to navigate their audience through the map-like presentation, zooming and panning from a fixed-angle overhead view.

Like Google Maps or modern touchscreens, Prezi is an example of what scholars of human-computer interaction label a zoomable user interface (ZUI). These interfaces are defined by two features: They present information in a theoretically infinite two-dimensional space (i.e., an infinite canvas) and they enable users to animate this virtual space through panning and zooming. Some of the original ZUIs were used to visualize history, navigate file systems, browse images, and—in the Prezi predecessor CounterPoint—create presentations [ 63 , 64 ].

As communication and visualization tools, ZUIs in general and Prezi in particular are interesting psychologically for several reasons. First, they may take advantage of our mental and neural architecture, specifically the fact that we process information through dissociable visual and spatial systems. Whereas the so-called “ventral” visual system in the brain processes information such as shape and color, the “dorsal” spatial system processes information such as location and distance [ 65 – 68 ]. When working in concert, these systems result in vastly better memory and comprehension than when they work in isolation. For example, in the classic “method of loci” individuals visualize objects in specific locations; when later trying to recall the objects, they visualize navigating through the space, “seeing” each object in turn. This method typically doubles retention, compared to other ways of trying to memorize objects [ 69 , 70 ]. Similarly, in research on note-taking, students learned more when they used spatial methods than when they used linear methods (e.g., [ 71 ]). Mayer’s multimedia learning principles and evidence in their favor also highlight the importance of spatial contiguity [ 72 ].

Thus, by encouraging users to visualize and process information spatially, ZUIs such as Prezi may confer an advantage over traditional tools such as PowerPoint that do not encourage such visuospatial integration. As Good and Bederson [ 64 ] write: “Because they employ a metaphor based on physical space and navigation, ZUIs offer an additional avenue for exploring the utilization of human spatial abilities during a presentation.”

Furthermore, ZUIs may encourage a particularly efficacious type of spatial processing, namely graphical processing. In graphical processing, digital objects (or groups of objects) are not just arranged in space, they are arranged or connected in a way that makes their interrelationships explicit. Randomly placing animal stickers on a blank page, for example, engages mere spatial processing; drawing connecting lines between animals of the same genus or arranging the animals into a phylogenetic tree, however, engages graphical processing. Because ZUIs force users to “see the big picture,” they may prompt deeper processing than software that segments content into separate spatial canvases. By facilitating such processing, ZUIs may leverage the same learning benefits as concept maps and other graphical organizers, which have been studied extensively. For example, in their meta-analysis of the use of concept maps in education, Nesbit and Adesope [ 73 ] found that these graphical representations (especially when animated) were more effective than texts, lists, and outlines. By requiring one to organize the whole presentation on a single canvas instead of a slide deck, therefore, Prezi may prompt presenters (and their audiences) to connect component ideas with each other, contextualize them in a larger narrative, and remember, understand, and appreciate this larger narrative. Slideware, on the other hand, may do just the opposite:

PowerPoint favours information that can be displayed on a single projected 4:3 rectangle. Knowledge that requires more space is disadvantaged … How to include a story on a slide? Distributing the associated text over several slides literally breaks it into fragments, disturbing its natural cohesion and thus coherence … PowerPoint renders obsolete some complex narrative and data forms in favour of those that are easily abbreviated or otherwise lend themselves to display on a series of slides [ 74 ] (p399)

Of course, these arguments are speculative, and one can also speculate on the psychological costs of ZUIs or the benefits of standard slideware. Perhaps PowerPoint does confer some of the same spatial processing benefits as Prezi (after all, slides are spatial canvases, and they must be arranged to form a narrative), but in a way that better manages the limited attentional resources of the presenter or audience. Our point here is simply that Prezi, as a ZUI presentation tool, offers a psychologically interesting alternative to standard deck-based slideware, with a range of possible advantages that could be explored empirically to discover the psychological mechanisms of effective communication.

Like the PowerPoint literature, most of the published literature on Prezi is limited to observational reports or case studies. Brock and Brodahl [ 75 ] evaluated Prezi favorably based on their review and students’ ratings of course presentations. Conboy, Fletcher, Russell, and Wilson [ 76 ] interviewed 6 undergraduates and 3 staff members about their experiences with Prezi in lecture instruction and reported generally positive experiences. Masood and Othman [ 77 ] measured the eye movements and subjective judgments of ten participants who viewed a single Prezi presentation; participants attended to the presentation’s text more than to its other components (e.g., images, headings), and favorably judged the presentation. Ballentine [ 78 ] assigned students to use Prezi to design text adventure games and reported benefits of using the medium. Two other studies [ 79 , 80 ] surveyed college students about their course experiences with Prezi, and both reported similarly positive perceptions.

All of these studies, however, suffer from major demand characteristics, due to the fact that the researchers observed or asked leading questions of their own students about their own instruction (e.g., “Do you find lectures delivered with Prezi more engaging then[sic] other lectures?”, from [ 79 ]). Moreover, all suffer from the methodological limitations discussed earlier.

Other literature that addresses Prezi is purely theoretical and speculative: In discussing the pedagogical implications of various presentation software, Harris [ 81 ] mostly just describes Prezi’s features, but does suggest that some of these features provide useful visual metaphors (e.g., zooming in to demonstrate otherwise hidden realities). Bean [ 82 ] offers a particularly compelling analysis of PowerPoint and Prezi’s histories, user interfaces, and visual metaphors, and argues that Prezi is the optimal tool for presenting certain types of information (e.g., wireflow diagrams).

The experimental literature on Prezi is limited to three published studies. Castelyn, Mottart and Valcke [ 14 ] investigated whether a Prezi e-lecture with graphic organizers (e.g., concept maps) was more effective than a PowerPoint e-lecture without graphic organizers. Claiming that Prezi encourages the use of graphic organizers, they purposefully confounded the type of presentation software with the presence of graphic organizers. Undergraduates randomly assigned to the different e-lectures did not differ in their knowledge or self-efficacy gains, but did prefer the graphically-organized Prezi lecture over the PowerPoint control lecture. In a follow-up study [ 83 ], the same researchers assigned undergraduates to create Prezi presentations that did or did not use graphic organizers, and found no effects of this manipulation on students’ self-reported motivation or self-efficacy. Chou, Chang, and Lu [ 24 ] compared the effects of Prezi, PowerPoint and traditional blackboard instruction on 5th graders’ learning of geography. Whereas the Prezi group performed better than the control group (which received blackboard instruction) in formative quizzes and a summative test, the PowerPoint group did not; however, on a delayed summative test, both Prezi and PowerPoint students performed better than those in the control group. In direct comparisons of PowerPoint and Prezi, there were no differences in any of the learning measures. Taken together, the studies are not just limited in number: They present uncompelling findings and suffer from the same methodological shortcomings as the PowerPoint research.

The current study

In short, the extant literature does not clarify whether presenters should present with or without visual aids and, if with them, whether they should use standard deck-based slideware such as PowerPoint or a ZUI such as Prezi. One of the reasons why these basic questions remain unanswered is the methodological challenges inherent in comparing different presentation formats. We designed the current study to overcome these challenges.

To control for individual differences among presenters, we randomly assigned presenters to different presentation conditions. To control for individual differences among audience members, we used a counterbalanced, within-participants design in the first experiment and between-participants random assignment in the second experiment. And to draw general inferences about the impact of presentation format (instead of specific inferences about particular presenters or presentations), we sampled from a large number of presentations, each created by a different presenter. Our methods have their own challenges, such as recruiting participants sufficiently trained in all presentation methods, allowing presenters adequate preparation time and context, approximating the psychological conditions of real-world presentations, and measuring the “signal” of presentation format among the added “noise” of so many presenters and presentations. In addition, the studies had to be double-blind: Neither presenters nor audience members could be aware of any hypotheses, and both had to be free from any sort of confirmation bias conveyed by the investigators.
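One common way to counterbalance a within-participants design is to rotate through every possible presentation order. The sketch below illustrates that general idea only. It is not a description of the scheduling procedure actually used in the study, and the function name and session count are hypothetical.

```python
from collections import Counter
from itertools import permutations

FORMATS = ("oral", "PowerPoint", "Prezi")

def counterbalanced_orders(n_sessions):
    """Cycle through all orderings of the formats, one ordering per session."""
    orders = list(permutations(FORMATS))  # 3! = 6 possible orders
    return [orders[i % len(orders)] for i in range(n_sessions)]

schedule = counterbalanced_orders(12)  # hypothetical: two full cycles

# With a whole number of cycles, each format occupies each serial
# position equally often, so order effects are spread evenly over formats.
first_position_counts = Counter(order[0] for order in schedule)
```

When the number of sessions is not a multiple of six, the balance is only approximate, which is one reason such schedules are often combined with random assignment.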

To focus on presentations as a form of presenter-audience communication and limit the number of confounded variables, we purposefully controlled for other possible impacts of presentation software on professional practices or outcomes, including 1) the use of presentation artifacts (e.g., PowerPoint files, printed-out slides, online Prezis), and 2) facilitated collaboration among presentation designers. Unlike other research (e.g., [ 32 , 33 ]) we did allow for the possibility that presentation format not only affects how audiences perceive presentations, but also how presenters design or deliver them (e.g., by increasing their conceptual understanding of the topic, or decreasing their cognitive load during live narration; cf. [ 84 ]). In other words, presentation technologies might affect the cognition of both the audience and the presenter, so we designed the present studies to accommodate both sets of mechanisms.

To maximize the real-world relevance of this research, we relied on multimedia case materials from Harvard Business School [ 85 ]; these materials recreate the actual professional circumstances in which presentations are typically used. Because presentations are commonly designed both to inform and to convince audiences, we examined outcome measures of learning as well as persuasion. And to minimize demand characteristics, we avoided the typical flaws of existing research (e.g., researcher-designed presentations, the researchers’ students as research participants) and adopted several countermeasures (e.g., recruitment language and participant instructions that obscured the research hypotheses, between-participant manipulation).

We adopted a two-phased approach in this research. In the first phase, participants with sufficient experience in oral, PowerPoint, and Prezi presentation formats were randomly assigned to create a presentation in one of those formats. We provided the necessary context, instruction, and time to create a short but realistic presentation. Participants then presented live to an actual audience, who judged each presentation’s efficacy. In the second phase, recorded versions of these presentations were presented to a larger online audience, affording us greater statistical power and allowing us to measure the impact of presentation format on decision-making and learning.

Experiment 1

Participants.

We recruited presenter participants via online postings (on Craigslist, the Harvard Psychology Study Pool, the Harvard Decision Science Lab Study Pool), email solicitations to the local Prezi community, and campus flyers. To create the fairest comparison between PowerPoint and Prezi, we recruited individuals who “have expertise in using both PowerPoint and Prezi presentation software.” Interested individuals were directed to a prescreening survey in which they reported their experience with and preference for giving different types of presentations. Only individuals who reported that they were “not at all experienced” with PowerPoint, Prezi, or giving oral presentations were excluded from research participation. Of the 681 respondents who completed the prescreening survey, 456 were eligible and invited to sign up for an available timeslot. Of this group, 146 individuals (105 from the Harvard study pools, 33 from Craigslist, and 8 from the Prezi community) participated as presenters in the study and were compensated $40 for approximately two hours of their time. There were no significant differences between the three presentation groups on any demographic variables.
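The screening rule and recruitment funnel can be sketched as follows. The sketch assumes that a respondent was excluded if they chose “not at all experienced” for any one of the three formats, which is one reading of the sentence above. All rating labels other than that quoted one are hypothetical, as are the example respondents.

```python
EXCLUDING_RATING = "not at all experienced"  # wording quoted in the text

def is_eligible(ratings):
    """ratings maps each format to a self-reported experience label.

    Assumption: a single "not at all experienced" rating is disqualifying.
    """
    return all(label != EXCLUDING_RATING for label in ratings.values())

# Hypothetical respondents; labels other than EXCLUDING_RATING are invented.
respondent = {"PowerPoint": "very experienced",
              "Prezi": "slightly experienced",
              "oral": "moderately experienced"}
novice = dict(respondent, Prezi=EXCLUDING_RATING)

# Recruitment funnel, using the counts reported in the text.
screened, eligible = 681, 456
presenters_by_source = {"Harvard study pools": 105,
                        "Craigslist": 33,
                        "Prezi community": 8}
presenters = sum(presenters_by_source.values())

eligibility_rate = eligible / screened      # share of respondents eligible
participation_rate = presenters / eligible  # share of eligible who took part
```

On the reported counts, about two thirds of respondents passed screening and about one third of those eligible went on to present.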

We also recruited 153 audience participants from the Harvard Decision Science Lab Study Pool and Craigslist using the following announcement:

Do you use Skype? Does your computer have a large screen (13 inches or larger)? If so, you may be eligible to participate in a 45 minute long online study. In this study, you will watch professional presentations over Skype from home on your personal computer.

Anyone who responded to the recruitment notice was eligible, provided that they were available during one of the prescheduled testing sessions. Audience participants were compensated $10 for approximately 45 minutes of their time. Table 2 presents demographic information for the presenter and audience participants. This study was approved by the Harvard Committee on the Use of Human Subjects (Study #IRB14-1427), and all participants in both experiments provided written consent.

https://doi.org/10.1371/journal.pone.0178774.t002

Presenter procedure.

Presenter participants completed a survey remotely before attending the in-person, group sessions with other participants. In the online pre-survey, presenters first answered basic demographic questions (gender, age, education level, English fluency, and occupation). Next, they answered questions about their prior experience with, opinions about, and understanding of the different presentation formats (oral, Prezi, and PowerPoint). This section was prefaced with the following note:

A note on language: When we use the term "presentation," we mean a formal, planned, and oral presentation of any duration, including a public speech, an academic lecture, a webinar, a class presentation, a wedding toast, a sermon, a product demonstration, a business presentation, and so on. Examples of things we do NOT mean are: a theatrical performance, an impromptu toast at dinner, and any presentation with no audience. When we say PowerPoint presentations, we mean presentations that were made using Microsoft PowerPoint, not other software such as Apple's Keynote. When we say Prezi presentations, we mean presentations that were made using Prezi presentation software. Also, when we refer to "oral presentation", we mean a presentation that is only spoken and does not include any visual aids or the use of presentation software.

Participants were asked the following questions for each type of presentation:

- How experienced are you at making the following types of presentations? [5-level rating]

- When you give a presentation, how effective are the following types of presentations for you? [5-level rating, with “not applicable” option]

- When somebody else gives a presentation, how effective are the following types of presentations for you? [5-level rating, with “not applicable” option]

- How difficult is it for you to make the following types of presentations? [5-level rating, with “not applicable” option]

- In the last year, approximately how many of the following types of presentations did you make? [free response]

- In your lifetime, approximately how many of the following types of presentations have you made? [free response]

- For approximately how many years have you been making the following types of presentations? [free response]

As part of the expertise-related measures, we also asked the participants to identify the purported advantages and disadvantages of each presentation format, according to its proponents and critics, respectively. For PowerPoint and Prezi, we asked participants to identify whether or not it had particular functionalities (e.g., the capacity to record narration, create custom backgrounds, print handouts). Finally, participants viewed three sets of four short Prezi presentations and rank-ordered them from best to worst. In each set we manipulated a key dimension of Prezi effectiveness, according to its designers: the use of zooming, the connection of ideas, and the use of visual metaphor.

Presenter participants were tested in person at the Harvard Decision Science Lab, and randomly assigned to one of the three groups: Prezi, PowerPoint, or oral presentation. A total of 50 data collection sessions were held. In each session, there were typically three presenter participants (one for each presentation format); because some participants failed to arrive and some sessions were overbooked, there were ten sessions with only two presenters and six sessions with four presenters.
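As a quick consistency check, the session counts above can be reconciled with the number of presenters reported earlier. The sketch assumes that every session other than the sixteen exceptions had exactly three presenters, which the text implies but does not state outright.

```python
total_sessions = 50
sessions_with_two = 10
sessions_with_four = 6
# Assumption: all remaining sessions had exactly three presenters.
sessions_with_three = total_sessions - sessions_with_two - sessions_with_four

implied_presenters = (2 * sessions_with_two
                      + 3 * sessions_with_three
                      + 4 * sessions_with_four)

reported_presenters = 146  # from the Participants section
print(implied_presenters == reported_presenters)
```

Under that assumption the implied total matches the 146 presenters reported in the Participants section.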

After providing informed consent, participants completed an online survey (in the lab) in which they rank-ordered three sets of recorded example PowerPoint and oral presentations. Identical in form to the example Prezi presentations they judged in the pre-survey, these short presentations were designed to assess their understanding of effective presentation design by manipulating a key aspect specific to each format. For PowerPoint presentations, we manipulated the use of text, use of extraneous “bells and whistles,” and graph design; for oral presentations, the three dimensions were verbal behavior, nonverbal behavior (other than eye contact), and eye contact. In selecting these dimensions (and those for Prezi), we consulted with a variety of experts, including software designers, speaking coaches, and researchers.

Next, presenters were shown material from a multimedia case created for and used by the Harvard Business School. Specifically, they were told the following (the company featured in the business case will be referred to anonymously here as “Company X” to respect their contractual agreement with the school):

For the next two hours, you are going to pretend to be the chief marketing officer of i-Mart, a large chain of retail stores. i-Mart recently made an offer to [Company X] to sell their products in i-Mart stores. Your boss, the CEO of i-Mart, has asked you to make a presentation to [Company X]’s leadership that persuades them to accept i-Mart’s offer. In your presentation, you will need to argue that accepting i-Mart’s offer is in [Company X]’s strategic interests, and address any concerns they may have about how accepting the offer might affect their corporate identity.

As a participant in this study, your primary job today is to prepare and then deliver this presentation. The presentation will be very short (less than 5 minutes) and made live (via Skype) to an audience of participants who are playing the part of [Company X] executives. Before you start planning your presentation, you will first learn more about [Company X] and how they’re thinking about i-Mart’s offer.

On their own computer workstation, participants studied the multimedia case for 30 minutes and were invited to take notes on blank paper provided for them. The multimedia case material included video and textual descriptions of Company X’s corporate culture, business model, and constituent communities.

Following this study period, participants were given 45 minutes to create a presentation in one of three randomly assigned presentation formats: PowerPoint, Prezi, or oral. To assist participants in the PowerPoint and Prezi conditions, we provided them with a set of digital artifacts including text, data, and graphics related to the case. Participants were not told that other participants were asked to present in different formats, and the workstations were separated from each other to prevent participants from discovering this manipulation.

After this preparation period, participants were taken individually (in a counterbalanced order) to another room to present to a live audience via Skype. For PowerPoint and Prezi presentations, we shared each participant’s presentation with the audience via screen sharing; thus they viewed both the presenter and the presentation. For those presenters who consented, we also recorded their presentations for future research purposes. After making their presentations, presenters completed a final survey about their presentation (e.g., “How convincing do you think your presentation will be to [Company X’s] board members”), the corporate scenario (e.g., “What do you think [Company X] should do?”), and their presentation format (e.g., “How likely are you to recommend the presentation tool or presentation format you used to others to make professional presentations?”).

Audience procedure.

Audience participants completed the entire experiment remotely and online. Their participation was scheduled for the end of the presenter sessions so that the in-lab presenters could present live to a remote audience via Skype. We recruited between three and six audience participants per session, although participants who failed to arrive or Skype connectivity issues resulted in some sessions with only one or two audience participants: Five sessions had one participant, twelve sessions had two participants, sixteen sessions had three participants, eleven sessions had four participants, four sessions had five participants, and two sessions had six participants.

Individuals who responded to the recruitment notice completed a consent form and three online surveys prior to their scheduled Skype session. The first survey was a slightly modified form of the presenter pre-survey (demographics, background on presentation formats, rank-ordering of example Prezis) in which they also scheduled their Skype session. In the second survey, audience participants were told that they were “going to play the role of a corporate executive listening to several short business presentations,” and that their task was “to evaluate the quality of these presentations, each made by another participant engaged in a similar role-playing scenario.” They were then shown a brief video and textual description of the fictionalized corporate scenario (an abridged version of what presenter participants studied), and told the following:

You are a board member for [Company X], an innovative clothing company. Another company, i-Mart, wants to sell [Company X’s products] in its stores. You and your fellow board members must decide whether or not to accept i-Mart's offer.

And in the third survey they rank-ordered the three sets of recorded example PowerPoint and oral presentations.

At the time of the scheduled session, the audience participants logged into Skype using a generic account provided by the research team, and were instructed to turn on their webcams and put on headphones. Once the first presenter participant was ready to present, the experimenter initiated the group Skype call, confirmed that the software was functioning properly, invited the presenter into the room to begin, left the room before the start of the presentation, monitored the presentation remotely via a closed-circuit video feed, and re-entered the room at the presentation’s conclusion. For Prezi and PowerPoint presentations, Skype’s built-in screen-sharing function was used to share the visual component of the presentation; audience participants viewing these presentations were instructed to use the split-screen view, with windows of equal size showing the presenter and the accompanying visuals.

Immediately after viewing each presentation, participants evaluated it via an online survey. They rated each presentation on how organized, engaging, realistic, persuasive, and effective it was using a five-level scale with response options of not at all, slightly, somewhat, very, and extremely. They were also invited to offer feedback to the presenter on how the presentation could be improved. After the final presentation, participants rank-ordered the presentations on the same dimensions (e.g., effectiveness, persuasiveness). Halfway through the experiment we added a final question in which we asked participants to rank-order PowerPoint, Prezi, and oral presentation formats “in terms of their general effectiveness, ignoring how well individual presenters (including today's) use that format,” and to explain their rank-ordering.

Prior experience and pre-existing beliefs.

Participants’ prior experience with and pre-existing beliefs about each presentation format provide a baseline that informs the research findings. If presenter participants had more experience with and more positive beliefs about one format than the others—and those assigned to that format induced more positive assessments from the audience members than did those assigned to the other formats—then the results are less compelling than if there was no correlation between these baseline measures and the experimental outcomes. The same applies to audience participants: Are they merely judging presentations according to their initial biases? Conversely, the results are most compelling if there is a negative association between the baseline measures and the experimental findings. For this reason—and to check that presenters assigned to the different formats did not happen to differ in these baseline measures—we analyzed participants’ prior experience with and pre-existing beliefs about PowerPoint, Prezi, and oral presentation formats.

Both audience and presenter participants were least experienced with Prezi and most experienced with oral presentations. At the outset, they rated PowerPoint as the most effective and easiest to use to present material and Prezi as the least effective and most difficult to use to present. For watching presentations, audience participants rated PowerPoint most effective and oral presentations least effective, but rated Prezi as more enjoyable than other formats. For watching presentations, presenter participants did not find any format more effective than the others. Table 3 presents full descriptive and inferential statistics for all self-reported measures of prior experience with and pre-existing beliefs about Prezi, PowerPoint, and oral presentations.

https://doi.org/10.1371/journal.pone.0178774.t003

Presenters assigned to different formats did not differ in their experience with or pre-existing beliefs about presentation formats. They also did not differ in how well they identified the purported advantages and disadvantages of each presentation format, how well they identified the software features of PowerPoint and Prezi, or how accurately they could identify effective presentations of each format.

Audience ratings.

In terms of their prior experience with and pre-existing beliefs about presentation formats, both audience and presenter participants were biased in favor of oral and PowerPoint presentations and against Prezi. After presenters were randomly assigned to these different formats, how did the audience evaluate their presentations?

In examining how presentation format affected the audience’s ratings of the presentations, two complications arose. First, sessions with two presentations were missing one presentation format, and sessions with four presentations had two presentations of the same format. To address this complexity we only conducted pairwise comparisons of different formats (e.g., PPT versus oral) instead of omnibus tests, and—for those sessions with four presentations—we averaged ratings for the two same-format presentations. To be certain that the differing number of presentations per session did not somehow bias the results even after adopting these measures, we also conducted an analysis on the subset of sessions that had exactly three presentations.

Second, the number of audience participants per session ranged from one to six. In calculating descriptive statistics, some sessions would be weighted more heavily than others unless ratings were first averaged across participants within the same session, then averaged across sessions. In calculating inferential statistics, averaging across ratings from different participants within the same session who received presentations in the same format was necessary to ensure that the sampling units were independent of each other, an assumption of all parametric and most nonparametric tests. In other words, for both descriptive and inferential statistics, we treated session (instead of participant) as the sampling unit.

As an empirical matter, this multi-step averaging—within participants across identical presentation formats, then across participants within the same session—had little impact on the condition means (i.e., the average ratings of PowerPoint, Prezi, or oral presentations on each dimension). Compared to the simplest, raw averaging of all ratings in one step, the maximum absolute difference between these two sets of means was .07 (on a 1–5 scale) and the mean absolute difference was .04.
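The multi-step averaging described above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the ratings, the session and participant identifiers, and the function names are all hypothetical. It averages within participant across same-format presentations, then across participants within a session, then across sessions, and compares the result with a one-step raw average.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings: (session_id, participant_id, format, rating on a 1-5 scale).
ratings = [
    (1, "a", "Prezi", 4), (1, "a", "PowerPoint", 3), (1, "a", "oral", 3),
    (1, "b", "Prezi", 5), (1, "b", "PowerPoint", 3), (1, "b", "oral", 2),
    # Session 2 has four presentations (two PowerPoint) and one audience member.
    (2, "c", "Prezi", 3), (2, "c", "PowerPoint", 2), (2, "c", "PowerPoint", 4),
    (2, "c", "oral", 3),
]


def session_level_means(rows):
    """Return {(session, format): mean rating}, with session as the sampling unit."""
    # Step 1: average within participant across presentations of the same format.
    per_participant = defaultdict(list)
    for session, participant, fmt, rating in rows:
        per_participant[(session, participant, fmt)].append(rating)
    # Step 2: average across participants within the same session.
    per_session = defaultdict(list)
    for (session, _participant, fmt), values in per_participant.items():
        per_session[(session, fmt)].append(mean(values))
    return {key: mean(values) for key, values in per_session.items()}


def condition_means(rows):
    """Step 3: average the session-level means across sessions, per format."""
    per_format = defaultdict(list)
    for (_session, fmt), value in session_level_means(rows).items():
        per_format[fmt].append(value)
    return {fmt: mean(values) for fmt, values in per_format.items()}


def raw_means(rows):
    """One-step average of all ratings per format, ignoring session structure."""
    per_format = defaultdict(list)
    for _session, _participant, fmt, rating in rows:
        per_format[fmt].append(rating)
    return {fmt: mean(values) for fmt, values in per_format.items()}


stepwise = condition_means(ratings)
raw = raw_means(ratings)
for fmt in stepwise:
    print(fmt, stepwise[fmt], raw[fmt], abs(stepwise[fmt] - raw[fmt]))
```

In this toy data the two approaches differ because session 1 contributes two raters and session 2 only one; the session-level approach gives each session equal weight.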

To test whether the presentations’ format affected their ratings, therefore, we conducted paired t-tests for each rating dimension, with presentation format as the repeated measure and mean session rating as the dependent variable. Because we conducted three tests for each dimension (pairing each format with every other), we controlled for multiple comparisons by dividing our significance threshold by the number of tests (i.e., α = .05/3 = .017). Results revealed that presentation format influenced audience ratings. In particular, the audience rated Prezi presentations as significantly more organized, engaging, persuasive, and effective than both PowerPoint and oral presentations; on a five-level scale, the average participant rated Prezi presentations over half a level higher than other presentations. The audience did not rate PowerPoint presentations differently than oral presentations on any dimension. Table 4 and Fig 1 present these results.
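As a rough sketch of the test just described, the snippet below computes the corrected threshold and a paired t statistic on session-level means. The session means and function names are hypothetical, chosen only for illustration; converting the t statistic into a p-value requires the t distribution (for example from a statistics library), which is left out here to keep the example dependency-free.

```python
from math import sqrt
from statistics import mean, stdev


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance threshold after dividing by the number of tests."""
    return alpha / n_tests


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two matched samples."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # t = mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical session-level mean ratings (one value per session, same order).
prezi = [4.0, 3.5, 4.5, 3.0, 4.0]
powerpoint = [3.0, 3.5, 3.5, 2.5, 3.0]

alpha = bonferroni_alpha(0.05, 3)  # three pairwise tests per dimension
t, df = paired_t(prezi, powerpoint)
print(f"corrected alpha = {alpha:.3f}, t({df}) = {t:.2f}")
```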

https://doi.org/10.1371/journal.pone.0178774.t004

Audience members rated presentations on each dimension on a 5-level scale (1 = “not at all,” 5 = “extremely”). The figure shows session-level means from all available data, including those from sessions with two or four presentations.

https://doi.org/10.1371/journal.pone.0178774.g001

By limiting the analysis to the 34 sessions with exactly three presentations (one of each format), we could ensure that the sessions with two or four presentations did not somehow bias the results. Moreover, this procedure enabled us to conduct omnibus tests of presentation format for each rating dimension. These omnibus tests revealed significant effects for organization, F(2,66) = 12.9, p < .0001, engagement, F(2,66) = 4.6, p = .01, persuasion, F(2,66) = 3.9, p = .03, and effectiveness, F(2,66) = 7.2, p = .001. The results from post-hoc tests (Fisher’s LSD) aligned with the original pairwise comparisons: On all dimensions, the audience rated Prezi presentations higher than PowerPoint and oral presentations, ps < .05; PowerPoint and oral presentations were not rated differently on any dimension, ps > .05. (Note: All p-values for pairwise tests here and elsewhere are two-tailed.)
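The session count and degrees of freedom reported above can be checked with a few lines of arithmetic. This sketch assumes the omnibus test is a one-way repeated-measures ANOVA with session as the sampling unit, which is what the reported F(2,66) suggests; the variable names are illustrative.

```python
# Counts taken from the text: 50 sessions in total, ten with two presenters,
# six with four presenters, and three presentation formats.
total_sessions = 50
sessions_with_two = 10
sessions_with_four = 6
n_formats = 3

# Sessions with exactly three presentations (one of each format).
complete_sessions = total_sessions - sessions_with_two - sessions_with_four

# Assumed repeated-measures design: df_effect = k - 1, df_error = (k - 1)(n - 1).
df_effect = n_formats - 1
df_error = (n_formats - 1) * (complete_sessions - 1)

print(complete_sessions, df_effect, df_error)
```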

To explore whether the obtained results were somehow the result of demand characteristics, we analyzed ratings from only the first presentation in each session. This analysis yielded the same pattern of findings, with a to-be-expected reduction in statistical significance due to the loss of power. On all four dimensions, a one-way, independent-measures ANOVA yielded significant or marginally significant results: organized, F(2,49) = 5.1, p = .01; engaging, F(2,49) = 2.5, p = .09; persuasive, F(2,49) = 2.6, p = .09; and effective, F(2,49) = 5.8, p = .006. In all cases, Prezi was rated higher than oral and PowerPoint presentations (post-hoc LSD ps ≤ .08).