Hypothesis Testing | A Step-by-Step Guide with Easy Examples

Published on November 8, 2019 by Rebecca Bevans. Revised on June 22, 2023.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is most often used by scientists to test specific predictions, called hypotheses, that arise from theories.

There are 5 main steps in hypothesis testing:

- State your research hypothesis as a null hypothesis (H0) and an alternate hypothesis (Ha or H1).

- Collect data in a way designed to test the hypothesis.

- Perform an appropriate statistical test.

- Decide whether to reject or fail to reject your null hypothesis.

- Present the findings in your results and discussion section.

Though the specific details might vary, the procedure you will use when testing a hypothesis will always follow some version of these steps.

Step 1: State your null and alternate hypothesis

After developing your initial research hypothesis (the prediction that you want to investigate), it is important to restate it as a null (H0) and alternate (Ha) hypothesis so that you can test it mathematically.

The alternate hypothesis is usually your initial hypothesis that predicts a relationship between variables. The null hypothesis is a prediction of no relationship between the variables you are interested in.

- H0: Men are, on average, not taller than women.

- Ha: Men are, on average, taller than women.

Step 2: Collect data

For a statistical test to be valid, it is important to perform sampling and collect data in a way that is designed to test your hypothesis. If your data are not representative, then you cannot make statistical inferences about the population you are interested in.

Step 3: Perform a statistical test

There are a variety of statistical tests available, but they are all based on the comparison of within-group variance (how spread out the data is within a category) versus between-group variance (how different the categories are from one another).

If the between-group variance is large enough that there is little or no overlap between groups, then your statistical test will reflect that by showing a low p-value. This means it is unlikely that the differences between these groups came about by chance.

Alternatively, if there is high within-group variance and low between-group variance, then your statistical test will reflect that with a high p-value. This means that any difference you measure between groups could plausibly be due to chance.
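The comparison of between-group and within-group variance can be made concrete with the F ratio from a one-way analysis of variance. The sketch below is only one instance of the idea (not every test takes this exact form), and the two small data sets are invented for illustration.

```python
from statistics import mean

def f_ratio(groups):
    """One-way ANOVA F statistic: between-group mean square / within-group mean square."""
    k = len(groups)                                  # number of groups
    n_total = sum(len(g) for g in groups)            # total number of observations
    grand_mean = mean([x for g in groups for x in g])
    # Between-group variation: how far each group mean sits from the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group variation: how spread out the values are inside each group
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))

# Invented data: the first pair of groups barely overlaps, the second overlaps heavily
well_separated = [[1, 2, 3], [11, 12, 13]]
overlapping = [[1, 6, 11], [3, 8, 13]]

print(f_ratio(well_separated))   # large ratio, which corresponds to a low p-value
print(f_ratio(overlapping))      # small ratio, which corresponds to a high p-value
```

A large ratio says the groups differ by much more than the scatter inside each group would explain; a ratio well below 1 says the opposite.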

Your choice of statistical test will be based on the type of variables and the level of measurement of your collected data.

For example, a statistical test comparing the heights of men and women would give you:

- an estimate of the difference in average height between the two groups.

- a p-value showing how likely you are to see this difference if the null hypothesis of no difference is true.
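Both outputs can be produced with a simple permutation test, sketched below using only the Python standard library. The height values are made up for illustration, and the permutation approach is an assumption of this sketch; a t-test would be an equally reasonable choice.

```python
import random

def permutation_test(a, b, n_perm=5000, seed=0):
    """One-sided permutation test for mean(a) > mean(b).

    Returns (observed difference in means, approximate p-value).
    """
    rng = random.Random(seed)
    observed = sum(a) / len(a) - sum(b) / len(b)
    pooled = list(a) + list(b)
    at_least_as_large = 0
    for _ in range(n_perm):
        # Under the null hypothesis the group labels are interchangeable
        rng.shuffle(pooled)
        new_a, new_b = pooled[:len(a)], pooled[len(a):]
        if sum(new_a) / len(new_a) - sum(new_b) / len(new_b) >= observed:
            at_least_as_large += 1
    # Adding 1 to both counts keeps the estimate from being exactly zero
    return observed, (at_least_as_large + 1) / (n_perm + 1)

# Hypothetical heights in centimetres, for illustration only
men = [178, 182, 175, 180, 171, 185, 177, 174]
women = [165, 170, 162, 168, 160, 172, 166, 163]

diff, p_value = permutation_test(men, women)
print(f"estimated difference: {diff:.2f} cm, p-value: {p_value:.4f}")
```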

Step 4: Decide whether to reject or fail to reject your null hypothesis

Based on the outcome of your statistical test, you will have to decide whether to reject or fail to reject your null hypothesis.

In most cases you will use the p-value generated by your statistical test to guide your decision. And in most cases, your predetermined level of significance for rejecting the null hypothesis will be 0.05 – that is, when there is a less than 5% chance that you would see these results if the null hypothesis were true.

In some cases, researchers choose a more conservative level of significance, such as 0.01 (1%). This reduces the risk of incorrectly rejecting the null hypothesis (Type I error).
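The decision rule itself is a one-line comparison. The helper below is a hypothetical illustration (the function name and return strings are not from any library); it shows how the same p-value can lead to different decisions at the 0.05 and 0.01 levels.

```python
def decide(p_value, alpha=0.05):
    """Reject H0 when the p-value falls below the predetermined significance level."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    return "reject H0" if p_value < alpha else "fail to reject H0"

print(decide(0.03))              # compared against the usual 0.05 level
print(decide(0.03, alpha=0.01))  # compared against the more conservative 0.01 level
```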

Step 5: Present your findings

The results of hypothesis testing will be presented in the results and discussion sections of your research paper, dissertation or thesis.

In the results section you should give a brief summary of the data and a summary of the results of your statistical test (for example, the estimated difference between group means and associated p-value). In the discussion, you can discuss whether your initial hypothesis was supported by your results or not.

In the formal language of hypothesis testing, we talk about rejecting or failing to reject the null hypothesis. You will probably be asked to do this in your statistics assignments.

However, when presenting research results in academic papers we rarely talk this way. Instead, we go back to our alternate hypothesis (in this case, the hypothesis that men are on average taller than women) and state whether the result of our test did or did not support the alternate hypothesis.

If your null hypothesis was rejected, this result is interpreted as “supported the alternate hypothesis.”

These are superficial differences; you can see that they mean the same thing.

You might notice that we don’t say that we reject or fail to reject the alternate hypothesis. This is because hypothesis testing is not designed to prove or disprove anything. It is only designed to test whether a pattern we measure could have arisen spuriously, or by chance.

If we reject the null hypothesis based on our research (i.e., we find that it is unlikely that the pattern arose by chance), then we can say our test lends support to our hypothesis. But if the pattern does not pass our decision rule, meaning that it could have arisen by chance, then we say the test is inconsistent with our hypothesis.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Bevans, R. (2023, June 22). Hypothesis Testing | A Step-by-Step Guide with Easy Examples. Scribbr. Retrieved April 9, 2024, from https://www.scribbr.com/statistics/hypothesis-testing/

An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors

Priya Ranganathan

1 Department of Anesthesiology, Critical Care and Pain, Tata Memorial Hospital, Mumbai, Maharashtra, India

2 Department of Surgical Oncology, Tata Memorial Centre, Mumbai, Maharashtra, India

The second article in this series on biostatistics covers the concepts of sample, population, research hypotheses and statistical errors.

How to cite this article

Ranganathan P, Pramesh CS. An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors. Indian J Crit Care Med 2019;23(Suppl 3):S230–S231.

Two papers quoted in this issue of the Indian Journal of Critical Care Medicine report the results of studies that aim to prove that a new intervention is better than (superior to) an existing treatment. In the ABLE study, the investigators wanted to show that transfusion of fresh red blood cells would be superior to standard-issue red cells in reducing 90-day mortality in ICU patients (1). The PROPPR study was designed to prove that transfusion of a lower ratio of plasma and platelets to red cells would be superior to a higher ratio in decreasing 24-hour and 30-day mortality in critically ill patients (2). These studies are known as superiority studies (as opposed to noninferiority or equivalence studies, which will be discussed in a subsequent article).

SAMPLE VERSUS POPULATION

A sample represents a group of participants selected from the entire population. Since studies cannot be carried out on entire populations, researchers choose samples, which are representative of the population. This is similar to walking into a grocery store and examining a few grains of rice or wheat before purchasing an entire bag; we assume that the few grains that we select (the sample) are representative of the entire sack of grains (the population).

The results of the study are then extrapolated to generate inferences about the population. We do this using a process known as hypothesis testing. This means that the results of the study may not always be identical to the results we would expect to find in the population; i.e., there is the possibility that the study results may be erroneous.

HYPOTHESIS TESTING

A clinical trial begins with an assumption or belief, and then proceeds to either prove or disprove this assumption. In statistical terms, this belief or assumption is known as a hypothesis. Counterintuitively, what the researcher believes in (or is trying to prove) is called the “alternate” hypothesis, and the opposite is called the “null” hypothesis; every study has a null hypothesis and an alternate hypothesis. For superiority studies, the alternate hypothesis states that one treatment (usually the new or experimental treatment) is superior to the other; the null hypothesis states that there is no difference between the treatments (the treatments are equal). For example, in the ABLE study, we start by stating the null hypothesis: there is no difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. We then state the alternate hypothesis: there is a difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. It is important to note that we have stated that the groups are different, without specifying which group will be better than the other. This is known as a two-tailed hypothesis and it allows us to test for superiority on either side (using a two-sided test). This is because, when we start a study, we are not 100% certain that the new treatment can only be better than the standard treatment; it could be worse, and if so, the study should detect that as well. One-tailed hypotheses and one-sided statistical tests are used for non-inferiority studies, which will be discussed in a subsequent paper in this series.

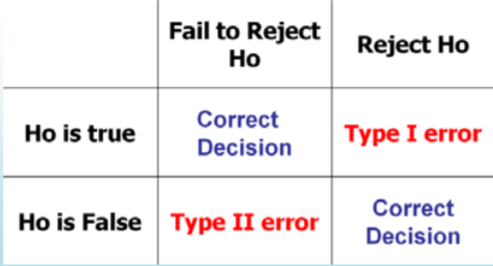

STATISTICAL ERRORS

There are two possibilities to consider when interpreting the results of a superiority study. The first possibility is that there is truly no difference between the treatments but the study finds that they are different. This is called a Type 1 error or false-positive error or alpha error. This means falsely rejecting the null hypothesis.

The second possibility is that there is a difference between the treatments and the study does not pick up this difference. This is called a Type 2 error or false-negative error or beta error. This means falsely accepting the null hypothesis.

The power of the study is the ability to detect a difference between groups and is the converse of the beta error; i.e., power = 1 - beta error. Alpha and beta errors are finalized when the protocol is written and form the basis for sample size calculation for the study. In an ideal world, we would not like any error in the results of our study; however, we would need to do the study in the entire population (infinite sample size) to be able to get a 0% alpha and beta error. Accepting small alpha and beta errors enables us to do studies with realistic sample sizes, with the compromise that there is a small possibility that the results may not always reflect the truth. The basis for this will be discussed in a subsequent paper in this series dealing with sample size calculation.

Conventionally, type 1 or alpha error is set at 5%. This means that, if there is truly no difference between groups, we allow only a 5% probability that the study will find a difference purely by chance (false positive). Type 2 or beta error is usually set between 10% and 20%; therefore, the power of the study is 90% or 80%. This means that if there is a true difference between groups, we want to be 80% (or 90%) certain that the study will detect that difference. For example, in the ABLE study, sample size was calculated with a type 1 error of 5% (two-sided) and power of 90% (type 2 error of 10%) (1).
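To show how alpha and power feed into a sample size, the sketch below uses the standard normal-approximation formula for a two-sided comparison of two means. This is an illustrative assumption, not the calculation used in the ABLE study (which compared mortality rates); the function name and the standardized effect size of 0.5 are hypothetical.

```python
import math
from statistics import NormalDist

def n_per_group(effect_size, alpha=0.05, power=0.90):
    """Approximate sample size per group for a two-sided comparison of two means.

    effect_size is the difference to detect divided by the common standard deviation.
    """
    z = NormalDist()                       # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)     # two-sided type 1 error
    z_beta = z.inv_cdf(power)              # power = 1 - type 2 error
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)

print(n_per_group(0.5, alpha=0.05, power=0.90))
print(n_per_group(0.5, alpha=0.05, power=0.80))
```

Raising the power from 80% to 90% at the same alpha increases the required sample size, which is the trade-off the paragraph above describes.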

Table 1 gives a summary of the two types of statistical errors with an example

Statistical errors

In the next article in this series, we will look at the meaning and interpretation of the p value and confidence intervals for hypothesis testing.

Source of support: Nil

Conflict of interest: None

14.3: Design Research Hypotheses and Experiment

- Maurice A. Geraghty

- De Anza College

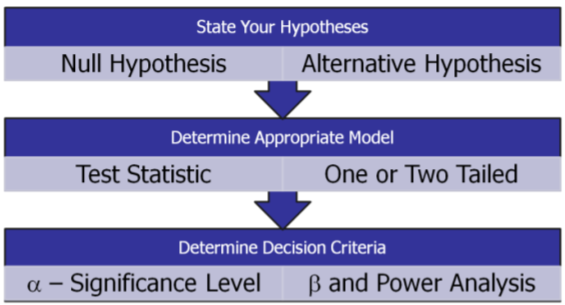

After developing a general question and having some sense of the data that is available or that is collected, we then design an experiment and a set of hypotheses.

Hypotheses and Hypothesis Testing

For purposes of testing, we need to design hypotheses that are statements about population parameters. Some examples of hypotheses:

- At least 20% of juvenile offenders are caught and sentenced to prison.

- The mean monthly income for college graduates is over $5000.

- The mean standardized test score for schools in Cupertino is the same as the mean scores for schools in Los Altos.

- The lung cancer rates in California are lower than the rates in Texas.

- The standard deviation of the New York Stock Exchange today is greater than 10 percentage points per year.

These same hypotheses could be written in symbolic notation:

- \(p \geq 0.20\)

- \(\mu>5000\)

- \(\mu_{1}=\mu_{2}\)

- \(p_{1}<p_{2}\)

- \(\sigma>10\)

Hypothesis Testing is a procedure, based on sample evidence and probability theory, used to determine whether the hypothesis is a reasonable statement and should not be rejected, or is unreasonable and should be rejected. The hypothesis that is tested is called the Null Hypothesis and is designated by the symbol \(H_o\). If the Null Hypothesis is unreasonable and needs to be rejected, then the research supports an Alternative Hypothesis designated by the symbol \(H_a\).

Definition: Null Hypothesis (\(H_o\))

A statement about the value of a population parameter that is assumed to be true for the purpose of testing.

Definition: Alternative Hypothesis (\(H_a\))

A statement about the value of a population parameter that is assumed to be true if the Null Hypothesis is rejected during testing.

From these definitions it is clear that the Alternative Hypothesis will necessarily contradict the Null Hypothesis; both cannot be true at the same time. Some other important points about hypotheses:

- Hypotheses must be statements about population parameters, never about sample statistics.

- In most hypothesis tests, equality (\(=, \leq, \geq\)) will be associated with the Null Hypothesis while non‐equality (\(\neq,<,>\)) will be associated with the Alternative Hypothesis.

- It is the Null Hypothesis that is always tested in an attempt to “disprove” it and support the Alternative Hypothesis. This process is analogous in concept to a “proof by contradiction” in Mathematics or Logic, but supporting a hypothesis with a level of confidence is not the same as an absolute mathematical proof.

Examples of Null and Alternative Hypotheses:

- \(H_{o}: p \leq 0.20 \qquad H_{a}: p>0.20\)

- \(H_{o}: \mu \leq 5000 \qquad H_{a}: \mu>5000\)

- \(H_{o}: \mu_{1}=\mu_{2} \qquad H_{a}: \mu_{1} \neq \mu_{2}\)

- \(H_{o}: p_{1} \geq p_{2} \qquad H_{a}: p_{1}<p_{2}\)

- \(H_{o}: \sigma \leq 10 \qquad H_{a}: \sigma>10\)

Statistical Model and Test Statistic

To test a hypothesis we need to use a statistical model that describes the behavior of the data and the type of population parameter being tested. Because of the Central Limit Theorem, many statistical models are from the Normal Family, most importantly the \(Z, t, \chi^{2}\), and \(F\) distributions. Other models that are used when the Central Limit Theorem is not appropriate are called non‐parametric models and will not be discussed here.

Each chosen model has requirements of the data called model assumptions that should be checked for appropriateness. For example, many models require that the sample mean have approximately a Normal Distribution, something that may not be true for some smaller or heavily skewed data sets.

Once the model is chosen, we can then determine a test statistic, a value derived from the data that is used to decide whether to reject or fail to reject the Null Hypothesis.

Examples of Statistical Models and Test Statistics

Errors in Decision Making

Whenever we make a decision or support a position, there is always a chance we make the wrong choice. The hypothesis testing process requires us either to reject the Null Hypothesis and support the Alternative Hypothesis or to fail to reject the Null Hypothesis. This creates the possibility of two types of error:

- Type I Error: Rejecting the null hypothesis when it is actually true.

- Type II Error: Failing to reject the null hypothesis when it is actually false.

In designing hypothesis tests, we need to carefully consider the probability of making either one of these errors.

Example: Pharmaceutical research

Recall the two news stories discussed earlier. In the first story, a drug company marketed a suppository that was later found to be ineffective (and often dangerous) in treatment. Before marketing the drug, the company determined that the drug was effective in treatment, meaning that the company rejected a Null Hypothesis that the suppository had no effect on the disease. This is an example of a Type I error.

In the second story, research was abandoned when the testing showed Interferon was ineffective in treating a lung disease. The company in this case failed to reject a Null Hypothesis that the drug was ineffective. What if the drug really was effective? Did the company make a Type II error? Possibly, but since the drug was never marketed, we have no way of knowing the truth.

These stories highlight the problem of statistical research: errors can be analyzed using probability models, but there is often no way of identifying specific errors. For example, there are unknown innocent people in prison right now because a jury made a Type I error in wrongfully convicting defendants. We must be open to the possibility of modification or rejection of currently accepted theories when new data is discovered.

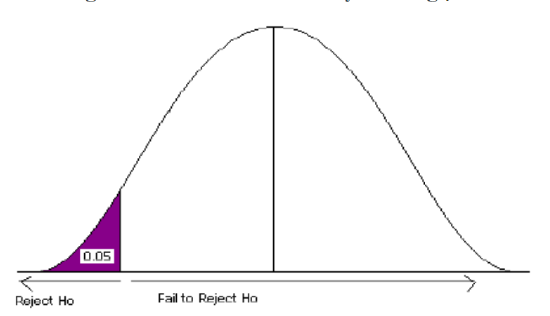

In designing an experiment, we set a maximum probability of making a Type I error. This probability is called the level of significance or significance level of the test and is designated by the Greek letter \(\alpha\), read as alpha. The analysis of Type II error is more problematic since there are many possible values that would satisfy the Alternative Hypothesis. For a specific value of the Alternative Hypothesis, the design probability of making a Type II error is called Beta (\(\beta\)), which will be analyzed in detail later in this section.
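As a rough illustration of how \(\beta\) depends on the specific alternative value, the sketch below computes it for a right‐tailed \(Z\) test of \(H_{o}: \mu \leq 5000\). The standard deviation of 1000, the sample size of 100, and the alternative means are assumptions chosen only for the example.

```python
from math import sqrt
from statistics import NormalDist

def type_ii_error(mu0, mu_alt, sigma, n, alpha=0.05):
    """Beta for a right-tailed z-test of Ho: mu <= mu0 when the true mean is mu_alt."""
    se = sigma / sqrt(n)                                   # standard error of the sample mean
    # Ho is rejected when the sample mean exceeds this cutoff
    critical_mean = mu0 + NormalDist().inv_cdf(1 - alpha) * se
    # Beta = probability the sample mean stays below the cutoff when mu = mu_alt
    return NormalDist(mu_alt, se).cdf(critical_mean)

# Hypothetical design values: sigma = 1000, n = 100
beta_near = type_ii_error(mu0=5000, mu_alt=5200, sigma=1000, n=100)
beta_far = type_ii_error(mu0=5000, mu_alt=5400, sigma=1000, n=100)
print(f"beta if true mean is 5200: {beta_near:.3f}")
print(f"beta if true mean is 5400: {beta_far:.3f}")
```

The further the true mean lies from the hypothesized value, the smaller \(\beta\) becomes, which is why \(\beta\) can only be stated for a specific alternative.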

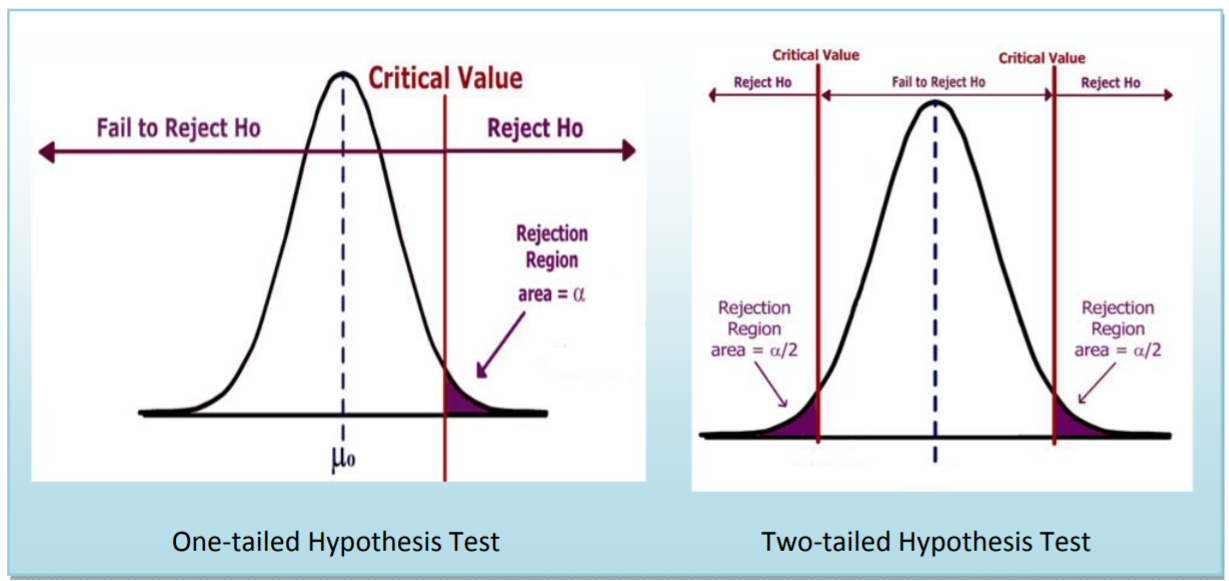

Critical Value and Rejection Region

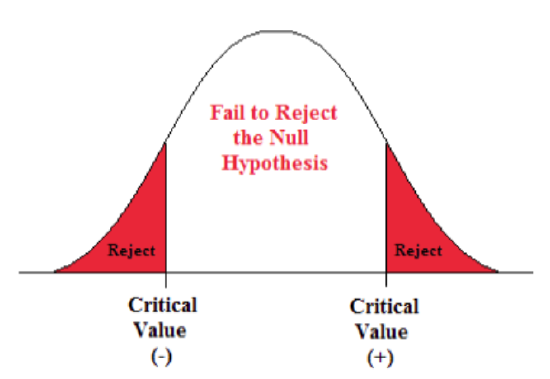

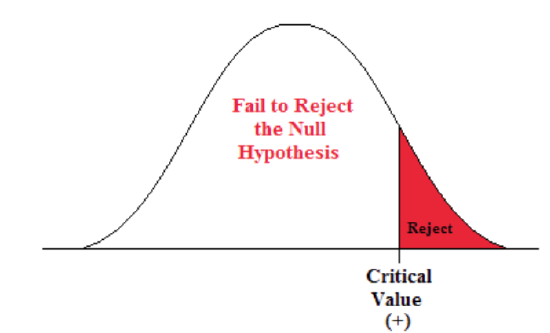

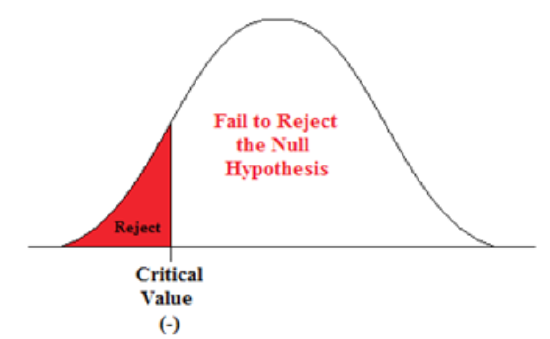

Once the significance level of the test is chosen, it is then possible to find the region(s) of the probability distribution function of the test statistic that would allow the Null Hypothesis to be rejected. This is called the Rejection Region, and the boundary between the Rejection Region and the “Fail to Reject” region is called the Critical Value.

There can be more than one critical value and rejection region. What matters is that the total area of the rejection region equals the significance level \(\alpha\).

One and Two tailed Tests

A test is one‐tailed when the Alternative Hypothesis, \(H_{a}\), states a direction, such as:

\(H_{o}\): The mean income of females is less than or equal to the mean income of males.

\(H_{a}\): The mean income of females is greater than that of males.

Since equality is usually part of the Null Hypothesis, it is the Alternative Hypothesis which determines which tail to test.

A test is two‐tailed when no direction is specified in the Alternative Hypothesis, \(H_{a}\), such as:

\(H_{o}\): The mean income of females is equal to the mean income of males.

\(H_{a}\): The mean income of females is not equal to the mean income of the males.

In a two‐tailed test, the significance level is split into two parts since there are two rejection regions. In hypothesis testing in which the statistical model is symmetrical (e.g., the Standard Normal \(Z\) or Student’s \(t\) distribution), these two regions would be equal. There is a relationship between a confidence interval and a two‐tailed test: if the level of confidence for a confidence interval is equal to \(1-\alpha\), where \(\alpha\) is the significance level of the two‐tailed test, the critical values would be the same.
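The link between a two‐tailed test and a confidence interval can be checked numerically. The sketch below assumes a \(Z\) model with known \(\sigma\); the sample mean, \(\sigma\), and sample size are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_tailed_z_test(xbar, mu0, sigma, n, alpha=0.05):
    """Return (reject Ho?, (1 - alpha) confidence interval for the mean)."""
    se = sigma / sqrt(n)
    # One critical value serves both the test and the interval
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    z_stat = (xbar - mu0) / se
    reject = abs(z_stat) > z_crit
    interval = (xbar - z_crit * se, xbar + z_crit * se)
    return reject, interval

# Hypothetical sample: mean 52, sigma 10, n = 25
for mu0 in (47, 50, 55, 57):
    reject, (low, high) = two_tailed_z_test(52, mu0, 10, 25)
    print(f"mu0={mu0}: reject={reject}, interval=({low:.2f}, {high:.2f})")
```

In each case the test rejects exactly when the hypothesized value falls outside the interval.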

Here are some examples for testing the mean \(\mu\) against a hypothesized value \(\mu_{0}\):

\(H_{a}: \mu>\mu_{0}\) means test the upper tail and is also called a right‐tailed test.

\(H_{a}: \mu<\mu_{0}\) means test the lower tail and is also called a left‐tailed test.

\(H_{a}: \mu \neq \mu_{0}\) means test both tails.
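The choice of tail changes how the p‐value is read off the distribution. A minimal sketch for a \(Z\) test statistic follows; the function name and the `tail` labels are hypothetical.

```python
from statistics import NormalDist

def z_p_value(z_stat, tail):
    """p-value of a Z statistic.

    tail: 'right' for Ha: mu > mu0, 'left' for Ha: mu < mu0, 'two' for Ha: mu != mu0.
    """
    cdf = NormalDist().cdf
    if tail == "right":
        return 1 - cdf(z_stat)             # area in the upper tail
    if tail == "left":
        return cdf(z_stat)                 # area in the lower tail
    if tail == "two":
        return 2 * (1 - cdf(abs(z_stat)))  # area in both tails
    raise ValueError("tail must be 'right', 'left', or 'two'")

for tail in ("right", "left", "two"):
    print(tail, round(z_p_value(1.8, tail), 4))
```

The same statistic gives a two‐tailed p‐value that is twice the one‐tailed value in the direction of the observed effect, so a result can be significant in a one‐tailed test and not in a two‐tailed test.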

Deciding when to conduct a one‐ or two‐tailed test is often controversial, and many authorities even go so far as to say that only two‐tailed tests should be conducted. Ultimately, the decision depends on the wording of the problem. If we want to show that a new diet reduces weight, we would conduct a lower‐tailed test, since we don’t care if the diet causes weight gain. If instead we wanted to determine if the mean crime rate in California was different from the mean crime rate in the United States, we would run a two‐tailed test, since different implies greater than or less than.

What Is Hypothesis Testing in Statistics? Types and Examples

Lesson 10 of 24 By Avijeet Biswal

In today’s data-driven world, decisions are based on data all the time. Hypothesis testing plays a crucial role in that process, whether in business decisions, the health sector, academia, or quality improvement. Without hypotheses and hypothesis tests, you risk drawing the wrong conclusions and making bad decisions. In this tutorial, you will look at hypothesis testing in statistics.

What Is Hypothesis Testing in Statistics?

Hypothesis testing is a type of statistical analysis in which you put your assumptions about a population parameter to the test. It is often used to assess whether the data provide evidence of a relationship between two variables.

Let's discuss a few real-life examples of statistical hypotheses:

- A teacher assumes that 60% of his college's students come from lower-middle-class families.

- A doctor believes that 3D (Diet, Dose, and Discipline) is 90% effective for diabetic patients.

Now that you know about hypothesis testing, look at the two types of hypothesis testing in statistics.

Hypothesis Testing Formula

Z = ( x̅ – μ0 ) / (σ /√n)

- Here, x̅ is the sample mean,

- μ0 is the hypothesized population mean (the value stated in the null hypothesis),

- σ is the population standard deviation,

- n is the sample size.

How Does Hypothesis Testing Work?

An analyst performs hypothesis testing on a statistical sample to present evidence of the plausibility of the null hypothesis. Measurements and analyses are conducted on a random sample of the population to test a theory. Analysts use a random population sample to test two hypotheses: the null and alternative hypotheses.

The null hypothesis is typically an equality hypothesis between population parameters; for example, a null hypothesis may claim that the population mean return equals zero. The alternate hypothesis is essentially the inverse of the null hypothesis (e.g., the population mean return is not equal to zero). As a result, they are mutually exclusive, and only one can be correct. One of the two possibilities, however, will always be correct.

Null Hypothesis and Alternate Hypothesis

The Null Hypothesis is the assumption that the event will not occur. A null hypothesis has no bearing on the study's outcome unless it is rejected.

H0 is the symbol for it, and it is pronounced H-naught.

The Alternate Hypothesis is the logical opposite of the null hypothesis. The acceptance of the alternative hypothesis follows the rejection of the null hypothesis. H1 is the symbol for it.

Let's understand this with an example.

A sanitizer manufacturer claims that its product kills 95 percent of germs on average.

To put this company's claim to the test, create a null and alternate hypothesis.

H0 (Null Hypothesis): The average is 95%.

H1 (Alternative Hypothesis): The average is less than 95%.

Another straightforward example is determining whether or not a coin is fair. The null hypothesis states that the probability of heads is equal to the probability of tails. In contrast, the alternate hypothesis states that the probabilities of heads and tails are different.
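The coin example can be tested exactly with the binomial distribution. The sketch below is one possible way to do it; the counts of 60 heads in 100 flips are made up for illustration, and the two-sided p-value is defined here as the total probability of all outcomes that are no more likely than the observed one.

```python
from math import comb

def binomial_two_sided_p(heads, flips, p_heads=0.5):
    """Exact two-sided p-value for H0: P(heads) = p_heads."""
    # Probability of every possible number of heads under the null hypothesis
    pmf = [comb(flips, k) * p_heads**k * (1 - p_heads)**(flips - k)
           for k in range(flips + 1)]
    observed = pmf[heads]
    # Sum the outcomes that are as unlikely as, or less likely than, the observed one
    # (the small tolerance guards against floating-point ties)
    return min(1.0, sum(p for p in pmf if p <= observed * (1 + 1e-9)))

p_value = binomial_two_sided_p(heads=60, flips=100)
print(f"p-value for 60 heads in 100 flips: {p_value:.4f}")
```

With these made-up counts the p-value lands slightly above 0.05, so at the 5% level the null hypothesis of a fair coin would not be rejected.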

Hypothesis Testing Calculation With Examples

Let's consider a hypothesis test for the average height of women in the United States. Suppose our null hypothesis is that the average height is 5'4". We gather a sample of 100 women and determine that their average height is 5'5". The population standard deviation is 2 inches.

To calculate the z-score, we would use the following formula:

z = ( x̅ – μ0 ) / (σ /√n)

z = (5'5" - 5'4") / (2" / √100)

z = 1" / 0.2"

z = 5

We will reject the null hypothesis, as a z-score of 5 is very large (well beyond the two-sided 5% critical value of 1.96), and conclude that there is evidence to suggest that the average height of women in the US is greater than 5'4".
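The arithmetic can be checked with a few lines of Python. Working in inches (5'5" = 65 and 5'4" = 64), the difference is 1 inch and the standard error is 2 / √100 = 0.2 inches, so the statistic works out to 5. The function name below is a hypothetical helper, not part of any library.

```python
from math import sqrt
from statistics import NormalDist

def z_statistic(sample_mean, mu0, sigma, n):
    """z = (sample mean - hypothesized mean) / (sigma / sqrt(n))."""
    return (sample_mean - mu0) / (sigma / sqrt(n))

# Heights converted to inches: 5'5" = 65, 5'4" = 64
z = z_statistic(sample_mean=65, mu0=64, sigma=2, n=100)
p_right = 1 - NormalDist().cdf(z)   # right-tailed p-value

print(f"z = {z:.2f}")
print(f"right-tailed p-value = {p_right:.2e}")
```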

Steps of Hypothesis Testing

Step 1: Specify Your Null and Alternate Hypotheses

It is critical to rephrase your original research hypothesis (the prediction that you wish to study) as a null (Ho) and alternative (Ha) hypothesis so that you can test it quantitatively. Your first hypothesis, which predicts a link between variables, is generally your alternate hypothesis. The null hypothesis predicts no link between the variables of interest.

Step 2: Gather Data

For a statistical test to be legitimate, sampling and data collection must be done in a way that is meant to test your hypothesis. You cannot draw statistical conclusions about the population you are interested in if your data is not representative.

Step 3: Conduct a Statistical Test

A variety of statistical tests are available, but they all compare within-group variance (how spread out the data are inside a category) against between-group variance (how different the categories are from one another). If the between-group variance is big enough that there is little or no overlap between groups, your statistical test will display a low p-value to represent this. This suggests that the differences between these groups are unlikely to have occurred by chance. Alternatively, if there is a large within-group variance and a low between-group variance, your statistical test will show a high p-value. Any difference you find across groups could then plausibly be attributable to chance. The types of variables and the level of measurement of your collected data will influence your choice of statistical test.

Step 4: Determine Rejection Of Your Null Hypothesis

Your statistical test results must determine whether your null hypothesis should be rejected or not. In most circumstances, you will base your judgment on the p-value provided by the statistical test. In most circumstances, your preset level of significance for rejecting the null hypothesis will be 0.05 - that is, when there is less than a 5% likelihood that these data would be seen if the null hypothesis were true. In other circumstances, researchers use a lower level of significance, such as 0.01 (1%). This reduces the possibility of wrongly rejecting the null hypothesis.

Step 5: Present Your Results

The findings of hypothesis testing are presented in the results and discussion sections of your research paper, dissertation, or thesis. In the results section, you should include a concise overview of the data and a summary of the findings of your statistical test. In the discussion section, you can talk about whether your results supported your initial hypothesis. "Rejecting" or "failing to reject" the null hypothesis is the formal wording used in hypothesis testing, and it is usually required in statistics assignments.

Types of Hypothesis Testing

A z-test can be used in hypothesis testing to determine whether a finding or relationship is statistically significant. It usually checks whether two means are the same (the null hypothesis). A z-test can be applied only when the population standard deviation is known and the sample size is 30 data points or more.
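As a rough illustration of the z-test described above, the following Python sketch compares one sample mean with a hypothesized mean when the population standard deviation is known. The function name `one_sample_z_test` and all numbers are assumptions made for the example.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z_test(sample_mean, mu0, sigma, n):
    """Return (z, two-sided p-value) for H0: mean = mu0 with known sigma."""
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Assumed numbers: n = 36, sample mean 103, hypothesized mean 100, known sigma 9
z, p = one_sample_z_test(sample_mean=103, mu0=100, sigma=9, n=36)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these assumed values the p-value falls just below 0.05, so the null hypothesis would be rejected at the 5% level but not at the 1% level.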

A statistical test called a t-test is employed to compare the means of two groups. To determine whether two groups differ or if a procedure or treatment affects the population of interest, it is frequently used in hypothesis testing.

Chi-Square

You utilize a Chi-square test for hypothesis testing concerning whether your data is as predicted. To determine if the expected and observed results are well-fitted, the Chi-square test analyzes the differences between categorical variables from a random sample. The test's fundamental premise is that the observed values in your data should be compared to the predicted values that would be present if the null hypothesis were true.
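The comparison of observed and expected values can be sketched in a few lines of Python. The counts below are invented, and the p-value line relies on the fact that, for exactly 2 degrees of freedom, the chi-square upper-tail probability equals exp(-x / 2); other degrees of freedom would need a statistics library or a table.

```python
from math import exp

def chi_square_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Assumed data: 90 customers choose among three products; H0 says equal preference
observed = [40, 30, 20]
expected = [30, 30, 30]

chi2 = chi_square_statistic(observed, expected)
# Three categories give 2 degrees of freedom, where the tail area is exp(-x / 2)
p_value = exp(-chi2 / 2)
print(f"chi-square = {chi2:.3f}, p = {p_value:.4f}")
```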

Hypothesis Testing and Confidence Intervals

Both confidence intervals and hypothesis tests are inferential techniques that depend on approximating the sampling distribution. Confidence intervals use data from a sample to estimate a population parameter. Hypothesis tests use data from a sample to examine a given hypothesis. To conduct a hypothesis test, we must have a hypothesized parameter value.

Bootstrap distributions and randomization distributions are created using comparable simulation techniques. The observed sample statistic is the focal point of a bootstrap distribution, whereas the null hypothesis value is the focal point of a randomization distribution.

A confidence interval contains a range of plausible values for the population parameter. In this lesson, we created only two-tailed confidence intervals. There is a direct connection between two-tailed confidence intervals and two-tailed hypothesis tests: they typically lead to the same conclusion. In other words, a hypothesis test at the 0.05 level will virtually always fail to reject the null hypothesis if the 95% confidence interval contains the hypothesized value. A hypothesis test at the 0.05 level will nearly always reject the null hypothesis if the 95% confidence interval does not contain the hypothesized value.
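The link between a 95% confidence interval and a two-tailed test at the 0.05 level can be checked numerically. The sketch below uses a z-based interval with a known standard deviation, for which the agreement is exact; the function name and the numbers are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist

def z_interval_and_test(sample_mean, sigma, n, mu0, alpha=0.05):
    """Return the (1 - alpha) confidence interval and the two-sided p-value."""
    se = sigma / sqrt(n)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    ci = (sample_mean - z_crit * se, sample_mean + z_crit * se)
    z = (sample_mean - mu0) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return ci, p_value

# Assumed sample: mean 103, sigma 9, n = 36; two different hypothesized values
for mu0 in (100, 102):
    ci, p = z_interval_and_test(sample_mean=103, sigma=9, n=36, mu0=mu0)
    inside = ci[0] <= mu0 <= ci[1]
    print(f"mu0 = {mu0}: inside interval = {inside}, fail to reject = {p >= 0.05}")
```

For each hypothesized value, the two printed flags agree: a value inside the interval is not rejected, and a value outside it is.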

Simple and Composite Hypothesis Testing

Depending on the population distribution, you can classify the statistical hypothesis into two types.

Simple Hypothesis: A simple hypothesis specifies an exact value for the parameter.

Composite Hypothesis: A composite hypothesis specifies a range of values.

A company is claiming that their average sales for this quarter are 1000 units. This is an example of a simple hypothesis.

Suppose the company claims that the sales are in the range of 900 to 1000 units. Then this is a case of a composite hypothesis.

One-Tailed and Two-Tailed Hypothesis Testing

The one-tailed test, also called a directional test, considers a critical region in only one direction of the sampling distribution. If the test statistic falls into this region, the null hypothesis is rejected in favor of the alternate hypothesis.

In a one-tailed test, the critical region is one-sided, meaning the test checks whether the sample statistic is either greater than or less than a specific value.

In a two-tailed test, the critical region is two-sided: the test checks whether the sample statistic falls above or below a range of values.

If the sample statistic falls outside this range, the null hypothesis is rejected in favor of the alternate hypothesis.


Right Tailed Hypothesis Testing

If the greater-than sign (>) appears in your alternate hypothesis, you are using a right-tailed test, also known as an upper-tailed test. In other words, the rejection region lies in the right tail of the distribution. For instance, you can compare battery life before and after a change in production. If you want to know whether the battery life is longer than the original (let's say 90 hours), your hypothesis statements can be the following:

- The null hypothesis is that battery life has not increased (H0: mean <= 90).

- The alternate hypothesis is that battery life has increased (H1: mean > 90).

The crucial point in this situation is that the alternate hypothesis (H1), not the null hypothesis, decides whether you get a right-tailed test.

Left Tailed Hypothesis Testing

Alternative hypotheses asserting that the true value of a parameter is lower than the value stated in the null hypothesis are tested with a left-tailed test; they are indicated by the less-than sign "<".

Suppose H0: mean = 50 and H1: mean not equal to 50

According to the H1, the mean can be greater than or less than 50. This is an example of a Two-tailed test.

In a similar manner, if H0: mean >=50, then H1: mean <50

Here H1 states that the mean is less than 50. This is a one-tailed (left-tailed) test.
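The choice of tail changes how the p-value is read off the distribution. As a small sketch, assuming a z statistic has already been computed (the value -1.8 is made up, and `p_value_from_z` is a hypothetical helper):

```python
from statistics import NormalDist

def p_value_from_z(z, tail):
    """p-value for a z statistic; tail is 'left', 'right', or 'two'."""
    cdf = NormalDist().cdf
    if tail == "left":      # H1: parameter < null value
        return cdf(z)
    if tail == "right":     # H1: parameter > null value
        return 1 - cdf(z)
    if tail == "two":       # H1: parameter != null value
        return 2 * (1 - cdf(abs(z)))
    raise ValueError("tail must be 'left', 'right', or 'two'")

z = -1.8  # assumed test statistic
for tail in ("left", "right", "two"):
    print(tail, round(p_value_from_z(z, tail), 4))
```

The same statistic is significant at the 0.05 level in a left-tailed test but not in a two-tailed test, which is why the direction must be fixed before looking at the data.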

Type 1 and Type 2 Error

A hypothesis test can result in two types of errors.

Type 1 Error: A Type I error occurs when the sample results lead you to reject the null hypothesis even though it is true.

Type 2 Error: A Type II error occurs when the null hypothesis is not rejected even though it is false.

Suppose a teacher evaluates the examination paper to decide whether a student passes or fails.

H0: Student has passed

H1: Student has failed

Type I error will be the teacher failing the student [rejects H0] although the student scored the passing marks [H0 was true].

Type II error will be the case where the teacher passes the student [do not reject H0] although the student did not score the passing marks [H1 is true].

Level of Significance

The alpha value is a criterion for determining whether a test statistic is statistically significant. In a statistical test, Alpha represents an acceptable probability of a Type I error. Because alpha is a probability, it can be anywhere between 0 and 1. In practice, the most commonly used alpha values are 0.01, 0.05, and 0.1, which represent a 1%, 5%, and 10% chance of a Type I error, respectively (i.e. rejecting the null hypothesis when it is in fact correct).
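The statement that alpha is the probability of a Type I error can be checked by simulation. The sketch below repeatedly draws samples from a population in which the null hypothesis is true and counts how often a two-tailed z-test rejects it. The sample size, number of trials, and seed are arbitrary assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist

def type_i_error_rate(alpha, n=25, trials=4000, seed=0):
    """Fraction of samples, drawn with H0 true, in which H0 is rejected."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        # H0 (mean = 0) is true by construction: data come from normal(0, 1)
        sample = [rng.gauss(0, 1) for _ in range(n)]
        z = (sum(sample) / n) / (1 / sqrt(n))
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials

for alpha in (0.01, 0.05, 0.10):
    print(alpha, type_i_error_rate(alpha))
```

Each printed rate should land close to the alpha that was used, up to simulation noise.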


A p-value is the probability of observing a difference at least as large as the one in your sample if the null hypothesis were true. As the p-value decreases, the statistical significance of the observed difference increases. If the p-value is lower than your chosen significance level, you reject the null hypothesis.

Consider an example in which you test whether a new advertising campaign has changed the product's sales. The null hypothesis states that the campaign caused no change in sales. The p-value is not the probability that this null hypothesis is true; it is the probability of seeing a change in sales at least as large as the observed one if the campaign had no effect. If the p-value is 0.30, a change this large would occur about 30% of the time by chance alone, so the data give little evidence of an effect. If the p-value is 0.03, a change this large would occur only about 3% of the time by chance alone. The lower the p-value, the stronger the evidence against the null hypothesis and in favor of the alternate hypothesis that the campaign increased or decreased sales.

Why is Hypothesis Testing Important in Research Methodology?

Hypothesis testing is crucial in research methodology for several reasons:

- Provides evidence-based conclusions: It allows researchers to make objective conclusions based on empirical data, providing evidence to support or refute their research hypotheses.

- Supports decision-making: It helps make informed decisions, such as accepting or rejecting a new treatment, implementing policy changes, or adopting new practices.

- Adds rigor and validity: It adds scientific rigor to research using statistical methods to analyze data, ensuring that conclusions are based on sound statistical evidence.

- Contributes to the advancement of knowledge: By testing hypotheses, researchers contribute to the growth of knowledge in their respective fields by confirming existing theories or discovering new patterns and relationships.

Limitations of Hypothesis Testing

Hypothesis testing has some limitations that researchers should be aware of:

- It cannot prove or establish the truth: Hypothesis testing provides evidence to support or reject a hypothesis, but it cannot confirm the absolute truth of the research question.

- Results are sample-specific: Hypothesis testing is based on analyzing a sample from a population, and the conclusions drawn are specific to that particular sample.

- Possible errors: During hypothesis testing, there is a chance of committing type I error (rejecting a true null hypothesis) or type II error (failing to reject a false null hypothesis).

- Assumptions and requirements: Different tests have specific assumptions and requirements that must be met to accurately interpret results.

After reading this tutorial, you should have a much better understanding of hypothesis testing, one of the most important concepts in the field of data science. The majority of hypotheses are based on speculation about observed behavior, natural phenomena, or established theories.


1. What is hypothesis testing in statistics with example?

Hypothesis testing is a statistical method used to determine if there is enough evidence in a sample data to draw conclusions about a population. It involves formulating two competing hypotheses, the null hypothesis (H0) and the alternative hypothesis (Ha), and then collecting data to assess the evidence. An example: testing if a new drug improves patient recovery (Ha) compared to the standard treatment (H0) based on collected patient data.

2. What is hypothesis testing and its types?

Hypothesis testing is a statistical method used to make inferences about a population based on sample data. It involves formulating two hypotheses: the null hypothesis (H0), which represents the default assumption, and the alternative hypothesis (Ha), which contradicts H0. The goal is to assess the evidence and determine whether there is enough statistical significance to reject the null hypothesis in favor of the alternative hypothesis.

Types of hypothesis testing:

- One-sample test: Used to compare a sample to a known value or a hypothesized value.

- Two-sample test: Compares two independent samples to assess if there is a significant difference between their means or distributions.

- Paired-sample test: Compares two related samples, such as pre-test and post-test data, to evaluate changes within the same subjects over time or under different conditions.

- Chi-square test: Used to analyze categorical data and determine if there is a significant association between variables.

- ANOVA (Analysis of Variance): Compares means across multiple groups to check if there is a significant difference between them.

3. What are the steps of hypothesis testing?

The steps of hypothesis testing are as follows:

- Formulate the hypotheses: State the null hypothesis (H0) and the alternative hypothesis (Ha) based on the research question.

- Set the significance level: Determine the acceptable level of error (alpha) for making a decision.

- Collect and analyze data: Gather and process the sample data.

- Compute test statistic: Calculate the appropriate statistical test to assess the evidence.

- Make a decision: Compare the test statistic with critical values or p-values and determine whether to reject H0 in favor of Ha or not.

- Draw conclusions: Interpret the results and communicate the findings in the context of the research question.

4. What are the 2 types of hypothesis testing?

- One-tailed (or one-sided) test: Tests for the significance of an effect in only one direction, either positive or negative.

- Two-tailed (or two-sided) test: Tests for the significance of an effect in both directions, allowing for the possibility of a positive or negative effect.

The choice between one-tailed and two-tailed tests depends on the specific research question and the directionality of the expected effect.

5. What are the 3 major types of hypothesis?

The three major types of hypotheses are:

- Null Hypothesis (H0): Represents the default assumption, stating that there is no significant effect or relationship in the data.

- Alternative Hypothesis (Ha): Contradicts the null hypothesis and proposes a specific effect or relationship that researchers want to investigate.

- Nondirectional Hypothesis: An alternative hypothesis that doesn't specify the direction of the effect, leaving it open for both positive and negative possibilities.


About the author.

Avijeet is a Senior Research Analyst at Simplilearn. Passionate about Data Analytics, Machine Learning, and Deep Learning, Avijeet is also interested in politics, cricket, and football.


Teach yourself statistics

Hypothesis Test for a Mean

This lesson explains how to conduct a hypothesis test of a mean, when the following conditions are met:

- The sampling method is simple random sampling .

- The sampling distribution is normal or nearly normal.

Generally, the sampling distribution will be approximately normally distributed if any of the following conditions apply.

- The population distribution is normal.

- The population distribution is symmetric , unimodal , without outliers , and the sample size is 15 or less.

- The population distribution is moderately skewed , unimodal, without outliers, and the sample size is between 16 and 40.

- The sample size is greater than 40, without outliers.

This approach consists of four steps: (1) state the hypotheses, (2) formulate an analysis plan, (3) analyze sample data, and (4) interpret results.

State the Hypotheses

Every hypothesis test requires the analyst to state a null hypothesis and an alternative hypothesis . The hypotheses are stated in such a way that they are mutually exclusive. That is, if one is true, the other must be false; and vice versa.

The table below shows three sets of hypotheses. Each makes a statement about how the population mean μ is related to a specified value M . (In the table, the symbol ≠ means " not equal to ".)

The first set of hypotheses (Set 1) is an example of a two-tailed test , since an extreme value on either side of the sampling distribution would cause a researcher to reject the null hypothesis. The other two sets of hypotheses (Sets 2 and 3) are one-tailed tests , since an extreme value on only one side of the sampling distribution would cause a researcher to reject the null hypothesis.

Formulate an Analysis Plan

The analysis plan describes how to use sample data to decide whether to reject or fail to reject the null hypothesis. It should specify the following elements.

- Significance level. Often, researchers choose significance levels equal to 0.01, 0.05, or 0.10; but any value between 0 and 1 can be used.

- Test method. Use the one-sample t-test to determine whether the hypothesized mean differs significantly from the observed sample mean.

Analyze Sample Data

Using sample data, conduct a one-sample t-test. This involves finding the standard error, degrees of freedom, test statistic, and the P-value associated with the test statistic.

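- Standard error. Compute the standard error (SE) of the sampling distribution, where s is the standard deviation of the sample, n is the sample size, and N is the population size:

SE = s * sqrt{ ( 1/n ) * [ ( N - n ) / ( N - 1 ) ] }

When the population is much larger than the sample, the factor ( N - n ) / ( N - 1 ) is close to 1 and the standard error is approximately:

SE = s / sqrt( n )

- Degrees of freedom. The degrees of freedom (DF) is equal to the sample size (n) minus one. Thus, DF = n - 1.

- Test statistic. The test statistic is a t statistic (t), where x is the sample mean and μ is the hypothesized population mean:

t = ( x - μ ) / SE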

- P-value. The P-value is the probability of observing a sample statistic as extreme as the test statistic. Since the test statistic is a t statistic, use the t Distribution Calculator to assess the probability associated with the t statistic, given the degrees of freedom computed above. (See sample problems at the end of this lesson for examples of how this is done.)
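The quantities in this step can be sketched in Python as follows. The function names and the example values are assumptions for illustration; passing a population size `N` applies the finite population correction, and omitting it uses the simpler approximation.

```python
from math import sqrt

def standard_error(s, n, N=None):
    """SE of the mean; applies the finite population correction when N is given."""
    if N is None:
        return s / sqrt(n)
    return s * sqrt((1 / n) * ((N - n) / (N - 1)))

def t_statistic(x_bar, mu, s, n, N=None):
    """Return (t, degrees of freedom) for a one-sample t-test."""
    se = standard_error(s, n, N)
    return (x_bar - mu) / se, n - 1

# Assumed values: a sample of 25 with s = 4, optionally from a population of 500
print(standard_error(s=4, n=25))          # approximation without correction
print(standard_error(s=4, n=25, N=500))   # slightly smaller with correction
print(t_statistic(x_bar=52, mu=50, s=4, n=25))
```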


Interpret Results

If the sample findings are unlikely, given the null hypothesis, the researcher rejects the null hypothesis. Typically, this involves comparing the P-value to the significance level , and rejecting the null hypothesis when the P-value is less than the significance level.

Test Your Understanding

In this section, two sample problems illustrate how to conduct a hypothesis test of a mean score. The first problem involves a two-tailed test; the second problem, a one-tailed test.

Problem 1: Two-Tailed Test

An inventor has developed a new, energy-efficient lawn mower engine. He claims that the engine will run continuously for 5 hours (300 minutes) on a single gallon of regular gasoline. From his stock of 2000 engines, the inventor selects a simple random sample of 50 engines for testing. The engines run for an average of 295 minutes, with a standard deviation of 20 minutes. Test the null hypothesis that the mean run time is 300 minutes against the alternative hypothesis that the mean run time is not 300 minutes. Use a 0.05 level of significance. (Assume that run times for the population of engines are normally distributed.)

Solution: The solution to this problem takes four steps: (1) state the hypotheses, (2) formulate an analysis plan, (3) analyze sample data, and (4) interpret results. We work through those steps below:

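- State the hypotheses. The first step is to state the null hypothesis and an alternative hypothesis.

Null hypothesis: μ = 300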

Alternative hypothesis: μ ≠ 300

- Formulate an analysis plan . For this analysis, the significance level is 0.05. The test method is a one-sample t-test .

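- Analyze sample data. Using sample data, we compute the standard error (SE), degrees of freedom (DF), and the t statistic (t).

SE = s / sqrt(n) = 20 / sqrt(50) = 20/7.07 = 2.83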

DF = n - 1 = 50 - 1 = 49

t = ( x - μ) / SE = (295 - 300)/2.83 = -1.77

where s is the standard deviation of the sample, x is the sample mean, μ is the hypothesized population mean, and n is the sample size.

Since we have a two-tailed test, the P-value is the probability that a t statistic having 49 degrees of freedom is less than -1.77 or greater than 1.77. We use the t Distribution Calculator to find that P(t < -1.77) is about 0.04.

- If you enter 1.77 as the t statistic in the t Distribution Calculator, you will find that P(t < 1.77) is about 0.96. Therefore, P(t > 1.77) is 1 minus 0.96, or 0.04. Thus, the P-value = 0.04 + 0.04 = 0.08.

- Interpret results . Since the P-value (0.08) is greater than the significance level (0.05), we cannot reject the null hypothesis.

Note: If you use this approach on an exam, you may also want to mention why this approach is appropriate. Specifically, the approach is appropriate because the sampling method was simple random sampling, the population was normally distributed, and the sample size was small relative to the population size (less than 5%).
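The arithmetic in Problem 1 can be reproduced without an online calculator. The sketch below is one possible approach, not the method used by the t Distribution Calculator: it integrates the t density numerically with Simpson's rule. The helper names `t_pdf` and `t_cdf` are hypothetical.

```python
from math import gamma, pi, sqrt

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def t_cdf(t, df, steps=2000):
    """P(T <= t), integrating the density from 0 to |t| with Simpson's rule."""
    a = abs(t)
    h = a / steps
    total = t_pdf(0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3                  # probability between 0 and |t|
    return 0.5 + area if t >= 0 else 0.5 - area

# Problem 1 values from the text
n, x_bar, mu, s = 50, 295, 300, 20
se = s / sqrt(n)
t = (x_bar - mu) / se
df = n - 1
p_two_tailed = 2 * t_cdf(-abs(t), df)
print(f"SE = {se:.2f}, t = {t:.2f}, DF = {df}, P-value = {p_two_tailed:.3f}")
```

Working with unrounded tail areas gives a P-value slightly above the 0.08 obtained from rounded values, and the conclusion at the 0.05 level is the same.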

Problem 2: One-Tailed Test

Bon Air Elementary School has 1000 students. The principal of the school thinks that the average IQ of students at Bon Air is at least 110. To prove her point, she administers an IQ test to 20 randomly selected students. Among the sampled students, the average IQ is 108 with a standard deviation of 10. Based on these results, should the principal accept or reject her original hypothesis? Assume a significance level of 0.01. (Assume that test scores in the population of students are normally distributed.)

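- State the hypotheses. The first step is to state the null hypothesis and an alternative hypothesis.

Null hypothesis: μ >= 110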

Alternative hypothesis: μ < 110

- Formulate an analysis plan . For this analysis, the significance level is 0.01. The test method is a one-sample t-test .

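- Analyze sample data. Using sample data, we compute the standard error (SE), degrees of freedom (DF), and the t statistic (t).

SE = s / sqrt(n) = 10 / sqrt(20) = 10/4.472 = 2.236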

DF = n - 1 = 20 - 1 = 19

t = ( x - μ) / SE = (108 - 110)/2.236 = -0.894

Here is the logic of the analysis: Given the alternative hypothesis (μ < 110), we want to know whether the observed sample mean is small enough to cause us to reject the null hypothesis.

The observed sample mean produced a t statistic of -0.894. We use the t Distribution Calculator to find that P(t < -0.894) is about 0.19.

- This means we would expect to find a sample mean of 108 or smaller in 19 percent of our samples, if the true population IQ were 110. Thus the P-value in this analysis is 0.19.

- Interpret results . Since the P-value (0.19) is greater than the significance level (0.01), we cannot reject the null hypothesis.
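The same decision can be reached with a critical value instead of a P-value. The sketch below recomputes the Problem 2 statistic and compares it with a one-tailed critical value; the value 2.539 for 19 degrees of freedom at the 0.01 level is taken from a standard t table and is an assumption of this example, not something stated in the problem.

```python
from math import sqrt

# Problem 2 values from the text
n, x_bar, mu0, s = 20, 108, 110, 10
alpha = 0.01

se = s / sqrt(n)
t = (x_bar - mu0) / se
df = n - 1

# Assumption: one-tailed critical value for df = 19 at alpha = 0.01 from a
# standard t table (about 2.539), made negative because the test is left-tailed.
t_critical = -2.539

reject_null = t < t_critical
print(f"t = {t:.3f}, DF = {df}, critical value = {t_critical}, reject H0: {reject_null}")
```

Because the t statistic is far from the rejection region, the critical-value approach agrees with the P-value approach: the null hypothesis is not rejected.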


3.1: The Fundamentals of Hypothesis Testing


- Diane Kiernan

- SUNY College of Environmental Science and Forestry via OpenSUNY

The previous two chapters introduced methods for organizing and summarizing sample data, and using sample statistics to estimate population parameters. This chapter introduces the next major topic of inferential statistics: hypothesis testing.

A hypothesis is a statement or claim about a property of a population.

The Fundamentals of Hypothesis Testing

When conducting scientific research, typically there is some known information, perhaps from some past work or from a long accepted idea. We want to test whether this claim is believable. This is the basic idea behind a hypothesis test:

- State what we think is true.

- Quantify how confident we are about our claim.

- Use sample statistics to make inferences about population parameters.

For example, past research tells us that the average life span for a hummingbird is about four years. You have been studying the hummingbirds in the southeastern United States and find a sample mean lifespan of 4.8 years. Should you reject the known or accepted information in favor of your results? How confident are you in your estimate? At what point would you say that there is enough evidence to reject the known information and support your alternative claim? How far from the known mean of four years can the sample mean be before we reject the idea that the average lifespan of a hummingbird is four years?

Definition: hypothesis testing

Hypothesis testing is a procedure, based on sample evidence and probability, used to test claims regarding a characteristic of a population.

A hypothesis is a claim or statement about a characteristic of a population of interest to us. A hypothesis test is a way for us to use our sample statistics to test a specific claim.

Example 1:

The population mean weight is known to be 157 lb. We want to test the claim that the mean weight has increased.

Example 2:

Two years ago, the proportion of infected plants was 37%. We believe that a treatment has helped, and we want to test the claim that there has been a reduction in the proportion of infected plants.

Components of a Formal Hypothesis Test

The null hypothesis is a statement about the value of a population parameter, such as the population mean (µ) or the population proportion ( p ). It contains the condition of equality and is denoted as H 0 (H-naught).

H0: µ = 157 or H0: p = 0.37

The alternative hypothesis is the claim to be tested, the opposite of the null hypothesis. It contains the value of the parameter that we consider plausible and is denoted as H1.

H1: µ > 157 or H1: p ≠ 0.37

The test statistic is a value computed from the sample data that is used in making a decision about the rejection of the null hypothesis. The test statistic converts the sample mean ( x̄ ) or sample proportion ( p̂ ) to a Z- or t-score under the assumption that the null hypothesis is true. It is used to decide whether the difference between the sample statistic and the hypothesized claim is significant.

The p-value is the area under the curve to the left or right of the test statistic. It is compared to the level of significance (α).

The critical value is the value that defines the rejection zone (the test statistic values that would lead to rejection of the null hypothesis). It is defined by the level of significance.

The level of significance (α) is the probability that the test statistic will fall into the critical region when the null hypothesis is true. This level is set by the researcher.

The conclusion is the final decision of the hypothesis test. The conclusion must always be clearly stated, communicating the decision based on the components of the test. It is important to realize that we never prove or accept the null hypothesis. We are merely saying that the sample evidence is not strong enough to warrant the rejection of the null hypothesis. The conclusion is made up of two parts:

1) Reject or fail to reject the null hypothesis, and 2) there is or is not enough evidence to support the alternative claim.

Option 1) Reject the null hypothesis (H0). This means that you have enough statistical evidence to support the alternative claim (H 1 ).

Option 2) Fail to reject the null hypothesis (H0). This means that you do NOT have enough evidence to support the alternative claim (H 1 ).

Another way to think about hypothesis testing is to compare it to the US justice system. A defendant is innocent until proven guilty (Null hypothesis—innocent). The prosecuting attorney tries to prove that the defendant is guilty (Alternative hypothesis—guilty). There are two possible conclusions that the jury can reach. First, the defendant is guilty (Reject the null hypothesis). Second, the defendant is not guilty (Fail to reject the null hypothesis). This is NOT the same thing as saying the defendant is innocent! In the first case, the prosecutor had enough evidence to reject the null hypothesis (innocent) and support the alternative claim (guilty). In the second case, the prosecutor did NOT have enough evidence to reject the null hypothesis (innocent) and support the alternative claim of guilty.

The Null and Alternative Hypotheses

There are three different pairs of null and alternative hypotheses:

Table 1: The three pairs of null and alternative hypotheses.

- Two-sided: H0: μ = c vs. H1: μ ≠ c

- Right-sided: H0: μ = c vs. H1: μ > c

- Left-sided: H0: μ = c vs. H1: μ < c

where c is some known value.

A Two-sided Test

This tests whether the population parameter is equal to, versus not equal to, some specific value.

H0: μ = 12 vs. H1: μ ≠ 12

The critical region is divided equally into the two tails and the critical values are ± values that define the rejection zones.

Example 3:

A forester studying diameter growth of red pine believes that the mean diameter growth will be different if a fertilization treatment is applied to the stand.

- H0: μ = 1.2 in./year

- H1: μ ≠ 1.2 in./year

This is a two-sided question, as the forester doesn’t state whether population mean diameter growth will increase or decrease.

A Right-sided Test

This tests whether the population parameter is equal to, versus greater than, some specific value.

H0: μ = 12 vs. H1: μ > 12

The critical region is in the right tail and the critical value is a positive value that defines the rejection zone.

Example 4:

A biologist believes that there has been an increase in the mean number of lakes infected with milfoil, an invasive species, since the last study five years ago.

- H0: μ = 15 lakes

- H1: μ > 15 lakes

This is a right-sided question, as the biologist believes that there has been an increase in population mean number of infected lakes.

A Left-sided Test

This tests whether the population parameter is equal to, versus less than, some specific value.

H0: μ = 12 vs. H1: μ < 12

The critical region is in the left tail and the critical value is a negative value that defines the rejection zone.

Example 5:

A scientist’s research indicates that there has been a change in the proportion of people who support certain environmental policies. He wants to test the claim that there has been a reduction in the proportion of people who support these policies.

- H0: p = 0.57

- H1: p < 0.57

This is a left-sided question, as the scientist believes that there has been a reduction in the true population proportion.
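A left-sided test of a proportion such as the one in Example 5 could be carried out along the following lines. The survey counts are invented for illustration, the function name is hypothetical, and the normal approximation used here assumes a reasonably large sample.

```python
from math import sqrt
from statistics import NormalDist

def one_proportion_z_test_left(successes, n, p0):
    """Left-sided test of H0: p = p0 against H1: p < p0."""
    p_hat = successes / n
    se = sqrt(p0 * (1 - p0) / n)      # standard error computed under H0
    z = (p_hat - p0) / se
    p_value = NormalDist().cdf(z)     # area in the left tail
    return z, p_value

# Assumed survey: 520 of 1000 respondents support the policies
z, p_value = one_proportion_z_test_left(successes=520, n=1000, p0=0.57)
print(f"z = {z:.2f}, p = {p_value:.5f}")
```

With these assumed counts the test statistic falls deep in the left tail, so the null hypothesis p = 0.57 would be rejected at any of the usual significance levels.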

Statistically Significant

When the observed results (the sample statistics) are unlikely (a low probability) under the assumption that the null hypothesis is true, we say that the result is statistically significant, and we reject the null hypothesis. This result depends on the level of significance, the sample statistic, sample size, and whether it is a one- or two-sided alternative hypothesis.

Types of Errors

When testing, we arrive at a conclusion of rejecting the null hypothesis or failing to reject the null hypothesis. Such conclusions are sometimes correct and sometimes incorrect (even when we have followed all the correct procedures). We use incomplete sample data to reach a conclusion and there is always the possibility of reaching the wrong conclusion. There are four possible conclusions to reach from hypothesis testing. Of the four possible outcomes, two are correct and two are NOT correct.

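Table 2. Possible outcomes from a hypothesis test.

- H0 is true and we fail to reject H0: correct conclusion.

- H0 is true and we reject H0: Type I error.

- H0 is false and we reject H0: correct conclusion.

- H0 is false and we fail to reject H0: Type II error.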

A Type I error occurs when we reject the null hypothesis when it is true. The symbol α (alpha) represents the probability of a Type I error. This is the same alpha we use as the level of significance. By setting alpha as low as reasonably possible, we try to control the Type I error through the level of significance.

A Type II error occurs when we fail to reject the null hypothesis when it is false. The symbol β (beta) represents the probability of a Type II error.

In general, Type I errors are considered more serious. One step in the hypothesis test procedure involves selecting the significance level ( α ), which is the probability of rejecting the null hypothesis when it is correct. So the researcher can select the level of significance that minimizes Type I errors. However, there is a mathematical relationship between α, β, and n (sample size).

- As α increases, β decreases

- As α decreases, β increases

- As the sample size (n) increases, β decreases for a fixed α, so both α and β can be made smaller

The natural inclination is to select the smallest possible value for α, thinking to minimize the possibility of causing a Type I error. Unfortunately, this forces an increase in Type II errors. By making the rejection zone too small, you may fail to reject the null hypothesis, when, in fact, it is false. Typically, we select the best sample size and level of significance, automatically setting β.
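The relationship between α, β, and n can be made concrete for one simple case. As a sketch, assume a right-tailed z-test of H0: μ = μ0 with known standard deviation σ, and suppose the true mean is a specific alternative value μ1 > μ0 (these symbols are introduced here for illustration). The test rejects H0 when the sample mean exceeds \(\mu_0 + z_{1-\alpha}\,\sigma/\sqrt{n}\), so the probability of a Type II error is

\[
\beta = P\left(\bar{X} \le \mu_0 + z_{1-\alpha}\frac{\sigma}{\sqrt{n}} \,\middle|\, \mu = \mu_1\right)
= \Phi\left(z_{1-\alpha} - \frac{(\mu_1 - \mu_0)\sqrt{n}}{\sigma}\right)
\]

where \(\Phi\) is the standard normal cumulative distribution function and \(z_{1-\alpha}\) is its \(1-\alpha\) quantile. Decreasing α raises \(z_{1-\alpha}\) and therefore raises β, while increasing n makes the subtracted term larger and therefore lowers β.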

Power of the Test

The probability of a Type II error (β) is the probability of failing to reject a false null hypothesis. It follows that 1-β is the probability of rejecting a false null hypothesis. This probability is identified as the power of the test, and is often used to gauge the test’s effectiveness in recognizing that a null hypothesis is false.

Definition: power of the test

The probability that a fixed level α significance test will reject H0 when a particular alternative value of the parameter is true is called the power of the test.

Power is also directly linked to sample size. For example, suppose the null hypothesis is that the mean fish weight is 8.7 lb. Given sample data, a level of significance of 5%, and an alternative weight of 9.2 lb., we can compute the power of the test to reject μ = 8.7 lb. If we have a small sample size, the power will be low. However, increasing the sample size will increase the power of the test. Increasing the level of significance will also increase power. A 5% test of significance will have a greater chance of rejecting the null hypothesis than a 1% test because the strength of evidence required for the rejection is less. Decreasing the standard deviation has the same effect as increasing the sample size: there is more information about μ.
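The fish-weight example can be sketched numerically. The code below assumes a right-tailed z-test with a known population standard deviation; the value σ = 1.5 lb and the sample sizes are hypothetical, since the text does not give them, and `power_right_tailed_z` is a name chosen for this illustration.

```python
from math import sqrt
from statistics import NormalDist

def power_right_tailed_z(mu0, mu1, sigma, n, alpha=0.05):
    """Power of a right-tailed z-test of H0: mu = mu0 when the true mean is mu1."""
    z_crit = NormalDist().inv_cdf(1 - alpha)   # critical value for level alpha
    shift = (mu1 - mu0) * sqrt(n) / sigma      # true effect in standard-error units
    beta = NormalDist().cdf(z_crit - shift)    # probability of a Type II error
    return 1 - beta

# H0: mu = 8.7 lb, alternative 9.2 lb; sigma = 1.5 lb is an assumed value.
for n in (10, 30, 100):
    print(n, round(power_right_tailed_z(8.7, 9.2, sigma=1.5, n=n), 3))
```

Calling the function with a larger `n`, a larger `alpha`, or a smaller `sigma` returns a higher power, which matches the three effects described above.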

What is Hypothesis Testing in Statistics? Types and Examples

Varun Saharawat is a seasoned professional in the fields of SEO and content writing. With a profound knowledge of the intricate aspects of these disciplines, Varun has established himself as a valuable asset in the world of digital marketing and online content creation.

Hypothesis testing in statistics involves testing an assumption about a population parameter using sample data.

What exactly is hypothesis testing, and how does it work in statistics? Can I find practical examples and understand the different types from this blog?

Hypothesis Testing : Ever wonder how researchers determine if a new medicine actually works or if a new marketing campaign effectively drives sales? They use hypothesis testing! It is at the core of how scientific studies, business experiments and surveys determine if their results are statistically significant or just due to chance.

Hypothesis testing allows us to make evidence-based decisions by quantifying uncertainty and providing a structured process to make data-driven conclusions rather than guessing. In this post, we will discuss hypothesis testing types, examples, and processes!


Hypothesis Testing

Hypothesis testing is a statistical method used to evaluate the validity of a hypothesis using sample data. It involves assessing whether observed data provide enough evidence to reject a specific hypothesis about a population parameter.

Hypothesis Testing in Data Science

Hypothesis testing in data science is a statistical method used to evaluate two mutually exclusive population statements based on sample data. The primary goal is to determine which statement is more supported by the observed data.

Hypothesis testing assists in supporting the certainty of findings in research and data science projects. This statistical inference aids in making decisions about population parameters using sample data.


What is the Hypothesis Testing Procedure in Data Science?

The hypothesis testing procedure in data science involves a structured approach to evaluating hypotheses using statistical methods. Here’s a step-by-step breakdown of the typical procedure:

1) State the Hypotheses:

- Null Hypothesis (H0): This is the default assumption or a statement of no effect or difference. It represents what you aim to test against.

- Alternative Hypothesis (Ha): This is the opposite of the null hypothesis and represents what you want to prove.

2) Choose a Significance Level (α):

- Decide on a threshold (commonly 0.05) before looking at the data. You will reject the null hypothesis if the p-value falls below this threshold. This is your significance level.

3) Select the Appropriate Test:

- Depending on your data type (e.g., continuous, categorical) and the nature of your research question, choose the appropriate statistical test (e.g., t-test, chi-square test, ANOVA, etc.).

4) Collect Data:

- Gather data from your sample or population, ensuring that it’s representative and sufficiently large (or as per your experimental design).

5) Compute the Test Statistic:

- Using your data and the chosen statistical test, compute the test statistic that summarizes the evidence against the null hypothesis.

6) Determine the Critical Value or P-value:

- Based on your significance level and the test statistic’s distribution, determine the critical value from a statistical table or compute the p-value.

7) Make a Decision:

- If the p-value is less than α: Reject the null hypothesis.

- If the p-value is greater than or equal to α: Fail to reject the null hypothesis.

8) Draw Conclusions:

- Based on your decision, draw conclusions about your research question or hypothesis. Remember, failing to reject the null hypothesis doesn’t prove it true; it merely suggests that you don’t have sufficient evidence to reject it.

9) Report Findings:

- Document your findings, including the test statistic, p-value, conclusion, and any other relevant details. Ensure clarity so that others can understand and potentially replicate your analysis.
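The steps above can be sketched in a few lines of code. This is a minimal illustration rather than a general-purpose routine: it assumes a two-sided one-sample z-test with a known population standard deviation, and the sample values, `mu0 = 12.0`, and `sigma = 0.5` are made up for the example.

```python
from math import sqrt
from statistics import NormalDist, mean

def one_sample_z_test(sample, mu0, sigma, alpha=0.05):
    """Two-sided z-test of H0: mu = mu0 vs. Ha: mu != mu0 (sigma assumed known)."""
    n = len(sample)
    z = (mean(sample) - mu0) / (sigma / sqrt(n))     # step 5: test statistic
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))     # step 6: two-sided p-value
    # Step 7: compare the p-value with the significance level chosen in step 2.
    decision = "reject H0" if p_value < alpha else "fail to reject H0"
    return z, p_value, decision

# Step 4: hypothetical measurements collected from a sample.
sample = [12.1, 11.8, 12.4, 12.9, 12.6, 12.2, 12.7, 12.5, 12.3, 12.8]
z, p_value, decision = one_sample_z_test(sample, mu0=12.0, sigma=0.5)

# Step 9: report the statistic, the p-value, and the decision together.
print(f"z = {z:.3f}, p = {p_value:.4f}, decision: {decision}")
```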


How Does Hypothesis Testing Work?

Hypothesis testing is a fundamental concept in statistics that aids analysts in making informed decisions based on sample data about a larger population. The process involves setting up two contrasting hypotheses, the null hypothesis and the alternative hypothesis, and then using statistical methods to determine which hypothesis provides a more plausible explanation for the observed data.

The Core Principles:

- The Null Hypothesis (H0): This serves as the default assumption or status quo. Typically, it posits that there is no effect or no difference, often represented by an equality statement regarding population parameters. For instance, it might state that a new drug’s effect is no different from a placebo.

- The Alternative Hypothesis (H1 or Ha): This is the counter assumption or what researchers aim to prove. It’s the opposite of the null hypothesis, indicating that there is an effect, a change, or a difference in the population parameters. Using the drug example, the alternative hypothesis would suggest that the new drug has a different effect than the placebo.

Testing the Hypotheses:

Once these hypotheses are established, analysts gather data from a sample and conduct statistical tests. The objective is to determine whether the observed results are statistically significant enough to reject the null hypothesis in favor of the alternative.

Examples to Clarify the Concept:

- Null Hypothesis (H0): The sanitizer’s average efficacy is 95%.

- By conducting tests, if evidence suggests that the sanitizer’s efficacy is significantly less than 95%, we reject the null hypothesis.

- Null Hypothesis (H0): The coin is fair, meaning the probability of heads and tails is equal.

- Through experimental trials, if results consistently show a skewed outcome, indicating a significantly different probability for heads and tails, the null hypothesis might be rejected.
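The coin example lends itself to a short sketch. The code below computes an exact two-sided binomial p-value for H0: the coin is fair. The observed result (62 heads in 100 flips) and the 0.05 threshold are hypothetical, and `binomial_two_sided_p` is a helper written for this illustration.

```python
from math import comb

def binomial_two_sided_p(heads, flips, p=0.5):
    """Exact two-sided p-value for H0: P(heads) = p.

    Sums the probabilities of every outcome that is no more likely
    than the observed count under H0.
    """
    pmf = [comb(flips, k) * p**k * (1 - p) ** (flips - k) for k in range(flips + 1)]
    observed = pmf[heads]
    # The small tolerance guards against floating-point ties between symmetric outcomes.
    return sum(q for q in pmf if q <= observed * (1 + 1e-9))

# Hypothetical experiment: 62 heads in 100 flips.
p_value = binomial_two_sided_p(heads=62, flips=100)
print(f"p-value = {p_value:.4f}")
print("reject H0" if p_value < 0.05 else "fail to reject H0")
```

A result close to 50 heads would give a large p-value, so the fairness hypothesis would not be rejected.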

What Are the 3 Types of Hypothesis Tests?

Hypothesis testing is a cornerstone in statistical analysis, providing a framework to evaluate the validity of assumptions or claims made about a population based on sample data. Within this framework, several specific tests are utilized based on the nature of the data and the question at hand. Here’s a closer look at the three fundamental types of hypothesis tests:

1. Z-Test:

The z-test is a statistical method primarily employed when comparing means from two datasets, particularly when the population standard deviation is known. Its main objective is to ascertain whether the difference between the means is statistically significant.

A crucial prerequisite for the z-test is that the sample size should be relatively large, typically 30 data points or more. This test aids researchers and analysts in determining the significance of a relationship or discovery, especially in scenarios where the data’s characteristics align with the assumptions of the z-test.
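A two-sample z-test can be sketched as follows, assuming both population standard deviations are known and both samples are large. All of the summary numbers passed in (means, standard deviations, sample sizes) are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_sample_z_test(mean1, mean2, sigma1, sigma2, n1, n2):
    """Two-sided z-test of H0: mu1 = mu2 with known population standard deviations."""
    se = sqrt(sigma1**2 / n1 + sigma2**2 / n2)     # standard error of the difference
    z = (mean1 - mean2) / se                       # test statistic
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))   # two-sided p-value
    return z, p_value

# Hypothetical summary statistics for two groups.
z, p_value = two_sample_z_test(mean1=52.5, mean2=50.3,
                               sigma1=4.0, sigma2=4.5, n1=40, n2=45)
print(f"z = {z:.3f}, p = {p_value:.4f}")
```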

2. T-Test:

The t-test is a versatile statistical tool used extensively in research and various fields to compare means between two groups. It’s particularly valuable when the population standard deviation is unknown or when dealing with smaller sample sizes.

By evaluating the means of two groups, the t-test helps ascertain if a particular treatment, intervention, or variable significantly impacts the population under study. Its flexibility and robustness make it a go-to method in scenarios ranging from medical research to business analytics.
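As an illustration, the sketch below computes the test statistic for one common variant, Welch's t-test for two independent samples, together with its approximate degrees of freedom. The two samples are invented, and the choice of Welch's variant is an assumption of this example. Turning the statistic into a p-value requires the t distribution, which is not in Python's standard library, so that step is left to a t table or a statistics package.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(sample1, sample2):
    """Welch's t statistic and approximate degrees of freedom for two independent samples."""
    n1, n2 = len(sample1), len(sample2)
    # Squared standard error of each sample mean, using the sample variance.
    v1, v2 = variance(sample1) / n1, variance(sample2) / n2
    t = (mean(sample1) - mean(sample2)) / sqrt(v1 + v2)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df

# Hypothetical measurements for a treatment group and a control group.
treatment = [5.1, 4.8, 6.0, 5.7, 5.4, 6.2]
control = [4.2, 4.9, 4.4, 5.0, 4.1, 4.6]
t, df = welch_t(treatment, control)
print(f"t = {t:.3f}, df = {df:.1f}")
```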

3. Chi-Square Test:

The Chi-Square test stands distinct from the previous tests, primarily focusing on categorical data rather than means. This statistical test is instrumental when analyzing categorical variables to determine if observed data aligns with expected outcomes as posited by the null hypothesis.

By assessing the differences between observed and expected frequencies within categorical data, the Chi-Square test offers insights into whether discrepancies are statistically significant. Whether used in social sciences to evaluate survey responses or in quality control to assess product defects, the Chi-Square test remains pivotal for hypothesis testing in diverse scenarios.
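The comparison of observed and expected frequencies can be sketched as a goodness-of-fit calculation. The counts below are hypothetical survey responses across three categories. With three categories the test has 2 degrees of freedom, and for that case the chi-square upper-tail probability has the closed form exp(-x / 2), which is why this example can compute a p-value without a statistics package.

```python
from math import exp

def chi_square_gof(observed, expected):
    """Pearson chi-square statistic: sum of (O - E)^2 / E over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

observed = [48, 35, 17]          # hypothetical survey counts
expected = [40.0, 40.0, 20.0]    # counts expected under H0 (same total of 100)

chi2 = chi_square_gof(observed, expected)
# Valid only for 2 degrees of freedom (3 categories, no estimated parameters).
p_value = exp(-chi2 / 2)
print(f"chi-square = {chi2:.3f}, p = {p_value:.4f}")
print("reject H0" if p_value < 0.05 else "fail to reject H0")
```

For other degrees of freedom the p-value would come from a chi-square table or a library routine instead of this shortcut.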


Hypothesis Testing in Statistics

Hypothesis testing is a fundamental concept in statistics used to make decisions or inferences about a population based on a sample of data. The process involves setting up two competing hypotheses, the null hypothesis H0 and the alternative hypothesis H1.

Through various statistical tests, such as the t-test, z-test, or Chi-square test, analysts evaluate sample data to determine whether there’s enough evidence to reject the null hypothesis in favor of the alternative. The aim is to draw conclusions about population parameters or to test theories, claims, or hypotheses.

Hypothesis Testing in Research

In research, hypothesis testing serves as a structured approach to validate or refute theories or claims. Researchers formulate a clear hypothesis based on existing literature or preliminary observations. They then collect data through experiments, surveys, or observational studies.

Using statistical methods, researchers analyze this data to determine if there’s sufficient evidence to reject the null hypothesis. By doing so, they can draw meaningful conclusions, make predictions, or recommend actions based on empirical evidence rather than mere speculation.

Hypothesis Testing in R

R, a powerful programming language and environment for statistical computing and graphics, offers a wide array of functions and packages specifically designed for hypothesis testing. Here’s how hypothesis testing is conducted in R:

- Data Collection : Before conducting any test, you need to gather your data and ensure it’s appropriately structured in R.

- Choose the Right Test : Depending on your research question and data type, select the appropriate hypothesis test. For instance, use the t.test() function for a t-test or chisq.test() for a Chi-square test.

- Set Hypotheses : Define your null and alternative hypotheses. Using R’s syntax, you can specify these hypotheses and run the corresponding test.

- Execute the Test : Utilize built-in functions in R to perform the hypothesis test on your data. For instance, if you want to compare two means, you can use the t.test() function, providing the necessary arguments like the data vectors and type of t-test (one-sample, two-sample, paired, etc.).

- Interpret Results : Once the test is executed, R will provide output, including test statistics, p-values, and confidence intervals. Based on these results and a predetermined significance level (often 0.05), you can decide whether to reject the null hypothesis.