- Published: 25 January 2021

Online education in the post-COVID era

- Barbara B. Lockee 1

Nature Electronics volume 4, pages 5–6 (2021)

- Science, technology and society

The coronavirus pandemic has forced students and educators across all levels of education to rapidly adapt to online learning. The impact of this — and the developments required to make it work — could permanently change how education is delivered.

The COVID-19 pandemic has forced the world to engage in the ubiquitous use of virtual learning. And while online and distance learning has been used before to maintain continuity in education, such as in the aftermath of earthquakes 1 , the scale of the current crisis is unprecedented. Speculation has now also begun about what the lasting effects of this will be and what education may look like in the post-COVID era. For some, an immediate retreat to the traditions of the physical classroom is required. But for others, the forced shift to online education is a moment of change and a time to reimagine how education could be delivered 2 .

Looking back

Online education has traditionally been viewed as an alternative pathway, one that is particularly well suited to adult learners seeking higher education opportunities. However, the emergence of the COVID-19 pandemic has required educators and students across all levels of education to adapt quickly to virtual courses. (The term ‘emergency remote teaching’ was coined in the early stages of the pandemic to describe the temporary nature of this transition 3 .) In some cases, instruction shifted online, then returned to the physical classroom, and then shifted back online due to further surges in the rate of infection. In other cases, instruction was offered using a combination of remote delivery and face-to-face: that is, students can attend online or in person (referred to as the HyFlex model 4 ). In either case, instructors just had to figure out how to make it work, considering the affordances and constraints of the specific learning environment to create learning experiences that were feasible and effective.

The use of varied delivery modes does, in fact, have a long history in education. Mechanical (and then later electronic) teaching machines have provided individualized learning programmes since the 1950s and the work of B. F. Skinner 5 , who proposed using technology to walk individual learners through carefully designed sequences of instruction with immediate feedback indicating the accuracy of their response. Skinner’s notions formed the first formalized representations of programmed learning, or ‘designed’ learning experiences. Then, in the 1960s, Fred Keller developed a personalized system of instruction 6 , in which students first read assigned course materials on their own, followed by one-on-one assessment sessions with a tutor, gaining permission to move ahead only after demonstrating mastery of the instructional material. Occasional class meetings were held to discuss concepts, answer questions and provide opportunities for social interaction. A personalized system of instruction was designed on the premise that initial engagement with content could be done independently, then discussed and applied in the social context of a classroom.

These predecessors to contemporary online education leveraged key principles of instructional design — the systematic process of applying psychological principles of human learning to the creation of effective instructional solutions — to consider which methods (and their corresponding learning environments) would effectively engage students to attain the targeted learning outcomes. In other words, they considered what choices about the planning and implementation of the learning experience can lead to student success. Such early educational innovations laid the groundwork for contemporary virtual learning, which itself incorporates a variety of instructional approaches and combinations of delivery modes.

Online learning and the pandemic

Fast forward to 2020, and various further educational innovations have occurred to make the universal adoption of remote learning a possibility. One key challenge is access. Here, extensive problems remain, including the lack of Internet connectivity in some locations, especially rural ones, and the competing needs among family members for the use of home technology. However, creative solutions have emerged to provide students and families with the facilities and resources needed to engage in and successfully complete coursework 7 . For example, school buses have been used to provide mobile hotspots, and class packets have been sent by mail and instructional presentations aired on local public broadcasting stations. The year 2020 has also seen increased availability and adoption of electronic resources and activities that can now be integrated into online learning experiences. Synchronous online conferencing systems, such as Zoom and Google Meet, have allowed experts from anywhere in the world to join online classrooms 8 and have allowed presentations to be recorded for individual learners to watch at a time most convenient for them. Furthermore, the importance of hands-on, experiential learning has led to innovations such as virtual field trips and virtual labs 9 . A capacity to serve learners of all ages has thus now been effectively established, and the next generation of online education can move from an enterprise that largely serves adult learners and higher education to one that increasingly serves younger learners, in primary and secondary education and from ages 5 to 18.

The COVID-19 pandemic is also likely to have a lasting effect on lesson design. The constraints of the pandemic provided an opportunity for educators to consider new strategies to teach targeted concepts. Though the rethinking of instructional approaches was forced and hurried, the experience has served as a rare chance to reconsider strategies that best facilitate learning within the affordances and constraints of the online context. In particular, greater variance in teaching and learning activities will continue to question the importance of ‘seat time’ as the standard on which educational credits are based 10 . Lengthy Zoom sessions are seldom instructionally necessary and are not aligned with the psychological principles of how humans learn. Interaction is important for learning, but forced interaction among students for the sake of interaction is neither motivating nor beneficial.

While the blurring of the lines between traditional and distance education has been noted for several decades 11 , the pandemic has quickly advanced the erasure of these boundaries. Less single mode, more multi-mode (and thus more educator choices) is becoming the norm due to enhanced infrastructure and developed skill sets that allow people to move across different delivery systems 12 . The well-established best practices of hybrid or blended teaching and learning 13 have served as a guide for new combinations of instructional delivery that have developed in response to the shift to virtual learning. The use of multiple delivery modes is likely to remain, and will be a feature employed with learners of all ages 14 , 15 . Future iterations of online education will no longer be bound to the traditions of single teaching modes, as educators can support pedagogical approaches from a menu of instructional delivery options, a mix that has been supported by previous generations of online educators 16 .

Also significant are the changes to how learning outcomes are determined in online settings. Many educators have altered the ways in which student achievement is measured, eliminating assignments and changing assessment strategies altogether 17 . Such alterations include determining learning through strategies that leverage the online delivery mode, such as interactive discussions, student-led teaching and the use of games to increase motivation and attention. Specific changes that are likely to continue include flexible or extended deadlines for assignment completion 18 , more student choice regarding measures of learning, and more authentic experiences that involve the meaningful application of newly learned skills and knowledge 19 , for example, team-based projects that involve multiple creative and social media tools in support of collaborative problem solving.

In response to the COVID-19 pandemic, technological and administrative systems for implementing online learning, and the infrastructure that supports its access and delivery, had to adapt quickly. While access remains a significant issue for many, extensive resources have been allocated and processes developed to connect learners with course activities and materials, to facilitate communication between instructors and students, and to manage the administration of online learning. Paths for greater access and opportunities to online education have now been forged, and there is a clear route for the next generation of adopters of online education.

Before the pandemic, the primary purpose of distance and online education was providing access to instruction for those otherwise unable to participate in a traditional, place-based academic programme. As its purpose has shifted to supporting continuity of instruction, its audience, as well as the wider learning ecosystem, has changed. It will be interesting to see which aspects of emergency remote teaching remain in the next generation of education, when the threat of COVID-19 is no longer a factor. But online education will undoubtedly find new audiences. And the flexibility and learning possibilities that have emerged from necessity are likely to shift the expectations of students and educators, diminishing further the line between classroom-based instruction and virtual learning.

References

Mackey, J., Gilmore, F., Dabner, N., Breeze, D. & Buckley, P. J. Online Learn. Teach. 8, 35–48 (2012).

Sands, T. & Shushok, F. The COVID-19 higher education shove. Educause Review https://go.nature.com/3o2vHbX (16 October 2020).

Hodges, C., Moore, S., Lockee, B., Trust, T. & Bond, M. A. The difference between emergency remote teaching and online learning. Educause Review https://go.nature.com/38084Lh (27 March 2020).

Beatty, B. J. (ed.) Hybrid-Flexible Course Design Ch. 1.4 https://go.nature.com/3o6Sjb2 (EdTech Books, 2019).

Skinner, B. F. Science 128, 969–977 (1958).

Keller, F. S. J. Appl. Behav. Anal. 1, 79–89 (1968).

Darling-Hammond, L. et al. Restarting and Reinventing School: Learning in the Time of COVID and Beyond (Learning Policy Institute, 2020).

Fulton, C. Information Learn. Sci. 121, 579–585 (2020).

Pennisi, E. Science 369, 239–240 (2020).

Silva, E. & White, T. Change The Magazine Higher Learn. 47, 68–72 (2015).

McIsaac, M. S. & Gunawardena, C. N. in Handbook of Research for Educational Communications and Technology (ed. Jonassen, D. H.) Ch. 13 (Simon & Schuster Macmillan, 1996).

Irvine, V. The landscape of merging modalities. Educause Review https://go.nature.com/2MjiBc9 (26 October 2020).

Stein, J. & Graham, C. Essentials for Blended Learning Ch. 1 (Routledge, 2020).

Maloy, R. W., Trust, T. & Edwards, S. A. Variety is the spice of remote learning. Medium https://go.nature.com/34Y1NxI (24 August 2020).

Lockee, B. J. Appl. Instructional Des. https://go.nature.com/3b0ddoC (2020).

Dunlap, J. & Lowenthal, P. Open Praxis 10, 79–89 (2018).

Johnson, N., Veletsianos, G. & Seaman, J. Online Learn. 24, 6–21 (2020).

Vaughan, N. D., Cleveland-Innes, M. & Garrison, D. R. Assessment in Teaching in Blended Learning Environments: Creating and Sustaining Communities of Inquiry (Athabasca Univ. Press, 2013).

Conrad, D. & Openo, J. Assessment Strategies for Online Learning: Engagement and Authenticity (Athabasca Univ. Press, 2018).

Author information

Authors and affiliations.

School of Education, Virginia Tech, Blacksburg, VA, USA

Barbara B. Lockee

Corresponding author

Correspondence to Barbara B. Lockee.

Ethics declarations

Competing interests.

The author declares no competing interests.

About this article

Cite this article.

Lockee, B.B. Online education in the post-COVID era. Nat Electron 4, 5–6 (2021). https://doi.org/10.1038/s41928-020-00534-0

Issue Date: January 2021

How Online Learning Is Reshaping Higher Education

As the pandemic eases, many institutions are realizing that properly planned online platforms will allow them to better serve all students, including nontraditional learners.

Two years ago, as COVID-19 caused campuses to close, some institutions were able to shift their students to already robust online learning programs. But many other colleges and universities scrambled to build online education curricula from scratch. Students and faculty often found themselves logging onto Zoom or other platforms for the first time, with little knowledge of how to navigate a new world of virtual learning.

“When the pandemic hit, it was a provocation, as well as a demand for innovation,” said Caroline Levander, the vice president for global and digital strategy at Rice University in Houston, during a recent webinar on the future of online learning hosted by U.S. News & World Report.

While the changes were challenging for many, faculty members at Rice and elsewhere embraced the new opportunities that online learning offered. Levander shared an example of a Rice physics professor, Jason Hafner, who capitalized on the virtual environment to find compelling new ways to teach concepts to students.

“He had been innovating with online delivery in our non-credit offerings before the pandemic,” said Levander. But once COVID-19 spread, Hafner moved beyond the walls of his classroom and took advantage of Rice’s physical campus to enhance his teaching with video-recorded experiments conducted outside of normal class times. For example, in one lesson, he climbed atop a rock edifice in Rice’s engineering quad to drop two equally sized spheres – one made of aluminum and the other of steel – to demonstrate that they would fall with the same acceleration despite their different densities.

Now, many educators are reassessing how virtual learning can further enhance the student experience by offering greater flexibility than in-class options, particularly for hybrid and all-virtual instruction models. During the early days of the pandemic, “people stood up Zoom classrooms” and “they put a lot of video lectures up online,” said Jeff Borden, the chief academic officer for D2L, a company that creates online learning software. “That’s fine. That was important to get people through.” Now, however, Borden stressed, colleges and universities have the opportunity to move beyond these makeshift models. They can work to build more durable online learning platforms that meet the needs of a range of learners who must access coursework at different times and in different formats to suit their particular goals and lifestyles.

While a four-year college education can be thought of as a default for many, there are a lot of people for whom “that’s not the right path,” said Borden. In fact, some students may be looking simply to gain credentials or to upskill, rather than get traditional degrees. “There are tens of millions of other people in our society who have needs that are other than that, who have desires that are different than that,” Borden noted. Online learning now enables older students, working adults, people from nontraditional backgrounds and those who might be neurodiverse to access content more easily than ever before, Borden added.

The multitude of options also extends to graduate and professional schools, many of which have rolled out fully or partially online programs in recent years. In fact, applicants to Rice’s fully online master’s degree program are “much more diverse in every way than students who apply to the residential counterpart,” Levander said, because the online format is easier to access and more compatible with the lives of students who may be juggling work and family obligations.

“The nice thing about online education is that it can actually escape geographical boundaries,” said Don Kilburn, the CEO of UMass Online, which has offerings across the five University of Massachusetts schools. Kilburn agreed with his fellow panelists that online learning models play a critical role in broadening access. He also emphasized the potential added benefit of lessening the financial burden on students, since online programs can often cost a fraction of in-person ones. “Part of accessibility is affordability,” he said. “I do think there are ways to actually deliver fully online programs that have a lower cost structure and may actually reduce the cost of education significantly.”

Part of serving the needs of those who choose to attend classes online means understanding why they do so and how their needs differ from those who choose traditional, in-person options, said Nancy Gonzales, the executive vice president and university provost at Arizona State University, whose online programs will reach approximately 84,000 students this year.

Many online students choose to take fewer courses at a time and may take semesters off to accommodate other aspects of their lives like taking care of children or work responsibilities – part of why the flexibility of online learning is so appealing, Gonzales said. “We’ve been trying to really try to understand what is the cadence of attendance and how do we meet the needs of students, because they are a very different population,” said Gonzales.

At the same time, for Gonzales, part of what makes an online education model successful is providing students with comparable support and services to what they might receive through in-person instruction. Such services might range from financial aid counseling to ensuring that students can interact with their peers on discussion boards, in order to ensure that interactions with classmates are not lost when attending class online.

But the promise of online education, the panelists agreed, is great. “I think we are just at the beginning of the digital transformation,” said Kilburn. “I can’t tell you when, but at some point you will see a revolution in education like you will in everything else.”

Systematic Review Article

A systematic review of the effectiveness of online learning in higher education during the COVID-19 pandemic period

- 1 Department of Basic Education, Beihai Campus, Guilin University of Electronic Technology Beihai, Beihai, Guangxi, China

- 2 School of Sports and Arts, Harbin Sport University, Harbin, Heilongjiang, China

- 3 School of Music, Harbin Normal University, Harbin, Heilongjiang, China

- 4 School of General Education, Beihai Vocational College, Beihai, Guangxi, China

- 5 School of Economics and Management, Beihai Campus, Guilin University of Electronic Technology, Guilin, Guangxi, China

Background: The effectiveness of online learning in higher education during the COVID-19 pandemic period is a debated topic but a systematic review on this topic is absent.

Methods: The present study implemented a systematic review of 25 selected articles to comprehensively evaluate online learning effectiveness during the pandemic period and identify factors that influence such effectiveness.

Results: It was concluded that past studies failed to achieve a consensus over online learning effectiveness and that research results are largely determined by how learning effectiveness was assessed, e.g., self-reported online learning effectiveness, longitudinal comparison, and RCT. Meanwhile, a set of factors that positively or negatively influence the effectiveness of online learning were identified, including infrastructure factors, instructional factors, the lack of social interaction, negative emotions, flexibility, and convenience.

Discussion: Although the effectiveness of online learning during the pandemic period is debated, it is generally believed that the pandemic brought many challenges and difficulties to higher education, and that these challenges and difficulties are more prominent in developing countries. In addition, this review critically assesses limitations in past research, develops pedagogical implications, and proposes recommendations for future research.

1 Introduction

1.1 Research background

The COVID-19 pandemic, which first broke out in early 2020, has considerably shaped the higher education landscape globally. To restrain viral transmission, universities worldwide locked down, and teaching and learning activities were transferred to online platforms. Although online learning is a relatively mature learning model and is increasingly integrated into higher education, the sudden and unprepared transition to wholly online learning caused by the pandemic posed formidable challenges to higher education stakeholders, e.g., policymakers, instructors, and students, especially at the early stage of the pandemic (García-Morales et al., 2021; Grafton-Clarke et al., 2022). Correspondingly, the effectiveness of online learning during the pandemic period is still questionable, as online learning during this period had some unique characteristics, e.g., the lack of preparation, the sudden transition, the huge scale of implementation, and social distancing policies (Sharma et al., 2020; Rahman, 2021; Tsang et al., 2021; Hollister et al., 2022; Zhang and Chen, 2023). This question is more prominent in developing or underdeveloped countries because of insufficient Internet access, network problems, the lack of electronic devices, and poor network infrastructure (Adnan and Anwar, 2020; Muthuprasad et al., 2021; Rahman, 2021; Chandrasiri and Weerakoon, 2022).

Learning effectiveness is a key consideration of education as it reflects the extent to which learning and teaching objectives are achieved and learners’ needs are satisfied (Joy and Garcia, 2000; Swan, 2003). Online learning was generally proven to be effective within a higher education context (Kebritchi et al., 2017) prior to the pandemic. ICTs have fundamentally shaped the process of learning as they allow learners to learn anywhere and anytime, interact with others efficiently and conveniently, and freely acquire a large volume of learning materials online (Kebritchi et al., 2017; Choudhury and Pattnaik, 2020). Such benefits may be offset by the challenges brought about by the pandemic. Many empirical studies worldwide have investigated the effectiveness of online learning, but a systematic review that synthesizes these studies to comprehensively evaluate online learning effectiveness and identify the factors that influence it is currently lacking.

At present, although the vast majority of countries have adopted reopening policies and higher education institutions have resumed in-person teaching and learning, assessing the effectiveness of online learning during the pandemic period via a systematic review is still essential. First, it is necessary to summarize, learn from, and reflect on the lessons and experiences of online learning practices during the pandemic period to offer implications for future practices and research. Second, the review of online learning research carried out during the pandemic period is likely to generate interesting knowledge because of the unique research context. Third, higher education institutions still need a contingency plan for emergency online learning to deal with potential crises in the future, e.g., wars, pandemics, and natural disasters. A systematic review of research on the effectiveness of online learning during the pandemic period offers valuable knowledge for designing such a contingency plan.

1.2 Related concepts

1.2.1 Online learning

Online learning should not be simply understood as learning on the Internet or the integration of ICTs with learning because it is a systematic framework consisting of a set of pedagogies, technologies, implementations, and processes (Kebritchi et al., 2017; Choudhury and Pattnaik, 2020). Choudhury and Pattnaik (2020, p. 2) summarized prior definitions of online learning and provided a comprehensive and up-to-date definition, i.e., online learning refers to “the transfer of knowledge and skills, in a well-designed course content that has established accreditations, through an electronic media like the Internet, Web 4.0, intranets and extranets.” Online learning differs from traditional learning because of not only technological differences, but also differences in social development and pedagogies (Camargo et al., 2020). Online learning has also considerably shaped the patterns by which knowledge is stored, shared, and transferred and skills are practiced, as well as the way in which stakeholders (e.g., teachers and students) interact (Desai et al., 2008; Anderson and Hajhashemi, 2013). In addition, online learning has altered educational objectives and learning requirements. Memorizing knowledge was traditionally viewed as vital to learning, but it is now less important since required knowledge can be conveniently searched and acquired on the Internet, while the reflection on and application of knowledge have become more important (Gamage et al., 2023). Online learning also demands more of learners’ self-regulated learning ability than traditional learning does because the online learning environment imposes less external regulation and provides more autonomy and flexibility (Barnard-Brak et al., 2010; Wong et al., 2019). The above differences imply that traditional pedagogies may not apply to online learning.

There are a variety of online learning models, which differ in learning methods, processes, outcomes, and the application of technologies (Zeitoun, 2008). As ICTs can be used as either the foundation of learning or an auxiliary means, online learning can be classified into assistant, blended, and wholly online models. Here, assistant online learning refers to the scenario where online learning technologies are used to supplement and support traditional learning; blended online learning refers to the integration or mixture of online and offline methods; and wholly online learning refers to the exclusive use of the Internet for learning (Arkorful and Abaidoo, 2015). The present review focuses on wholly online learning because it is concerned with the COVID-19 pandemic context, in which learning activities were fully switched to online platforms.

1.2.2 Learning effectiveness

Learning effectiveness can be broadly defined as the extent to which learning and teaching objectives have been effectively and efficiently achieved via educational activities (Swan, 2003) or the extent to which learners’ needs are satisfied by learning activities (Joy and Garcia, 2000). It is a multi-dimensional construct because learning objectives and needs are always diversified (Joy and Garcia, 2000; Swan, 2003). Assessing learning effectiveness is a key challenge in educational research, and researchers generally use a set of subjective and objective indicators to assess it, e.g., examination scores, assignment performance, perceived effectiveness, student satisfaction, learning motivation, engagement in learning, and learning experience (Rajaram and Collins, 2013; Noesgaard and Ørngreen, 2015). Prior research on the effectiveness of online learning examined varied learning outcomes, e.g., satisfaction, perceived effectiveness, motivation, and learning engagement, and there is no consensus over which outcomes are valid indicators of learning effectiveness. The present study adopts a broad definition of learning effectiveness and considers various learning outcomes that are closely associated with learning objectives and needs.

1.3 Previous review research

Up to now, online learning during the COVID-19 pandemic period has attracted considerable attention from academia and there is a lot of related review research. Some review research analyzed the trends and major topics in related research. Pratama et al. (2020) tracked the trend of using online meeting applications in online learning during the pandemic period based on a systematic review of 12 articles. It was reported that the use of these applications kept rising and that this use helped promote learning and teaching processes. However, this review was descriptive and failed to identify the problems and limitations of these applications. Zhang et al. (2022) implemented a bibliometric review to provide a holistic view of research on online learning in higher education during the COVID-19 pandemic period. They concluded that the majority of research focused on identifying the use of strategies and technologies, psychological impacts brought by the pandemic, and student perceptions. Meanwhile, collaborative learning, hands-on learning, discovery learning, and inquiry-based learning were the most frequently discussed instructional approaches. In addition, chemical and medical education were found to be the most investigated disciplines. This review hence offered a relatively comprehensive landscape of related research in the field. However, since it was a bibliometric review, it merely analyzed the superficial characteristics of past articles in the field without a detailed analysis of their research contributions. Bughrara et al. (2023) categorized the major research topics in the field of online medical education during the pandemic period via a scoping review. A total of 174 articles were included in the review and seven major topics were found, including students’ mental health, stigma, student vaccination, use of telehealth, students’ physical health, online modifications and educational adaptations, and students’ attitudes and knowledge. Overall, the review comprehensively reveals the major topics in its field of focus.

Some scholars believed that online learning during the pandemic period has brought about many problems and that both students and teachers have encountered many challenges. García-Morales et al. (2021) implemented a systematic review to identify the challenges encountered by higher education in an online learning scenario during the pandemic period. A total of seven studies were included and it was found that higher education suddenly transferred to online learning and that many technologies and platforms were used to support online learning. However, this transition was hasty and forced by the extreme situation. Thus, various stakeholders in learning and teaching (e.g., students, universities, and teachers) encountered difficulties in adapting to this sudden change. To deal with these challenges, universities need to utilize the potential of technologies, improve learning experience, and meet students’ expectations. The major limitation of García-Morales et al.’s (2021) review is its small sample. Meanwhile, the review also failed to systematically categorize the various types of challenges. Stojan et al. (2022) investigated, via a systematic review, the changes to medical education brought about by the shift to online learning in the COVID-19 pandemic context as well as the lessons and impacts of these changes. A total of 56 articles were included in the analysis, and it was reported that small groups and didactics were the most prevalent instructional methods. Although learning engagement was always interactive, teachers mainly integrated technologies to amplify and replace, rather than transform, learning. Based on this, they argued that the use of asynchronous and synchronous formats promoted online learning engagement and offered self-directed and flexible learning. The major limitation of this review is that it is somewhat descriptive and lacks a critical evaluation of the problems of online learning.

Review research has also focused on the changes and impacts brought by online learning during the pandemic period. Camargo et al. (2020) implemented a meta-analysis on seven empirical studies regarding online learning methods during the pandemic period to evaluate feasible online learning platforms, effective online learning models, and the optimal duration of online lectures, as well as the perceptions of teachers and students in the online learning process. Overall, it was concluded that the shift from offline to online learning is feasible, and that effective online learning needs a well-trained and integrated team to identify students’ and teachers’ needs, respond in a timely manner, and support them via digital tools. In addition, the pandemic has brought difficulties of varying degrees to online learning. An obvious limitation of this review is its very small sample ( N = 7), which offers limited information even though the review attempts to answer four questions. Grafton-Clarke et al. (2022) investigated the innovations/adaptations implemented, their impacts, and the reasons for their selection in the shift to online learning in medical education during the pandemic period via a systematic review of 55 articles. The major adaptations implemented include the rapid shift to the virtual space, pre-recorded videos or live streaming of surgical procedures, remote adaptations for clinical visits, and multidisciplinary ward rounds and team meetings. Major challenges encountered by students and teachers include the need for technical resources, faculty time, and devices, the shortage of standardized telemedicine curricula, and the lack of personal interactions. Based on this, they criticized the quality of online medical education. Tang (2023) explored the impact of the pandemic on primary, secondary, and tertiary education via a systematic review of 41 articles. It was reported that the majority of these impacts are negative, e.g., learning loss among learners, challenges in assessment and experiential learning in the virtual environment, limitations in instruction, technology-related constraints, the lack of learning materials and resources, and deteriorated psychosocial well-being. These negative impacts are amplified by the unequal distribution of resources and by disparities in socioeconomic status, ethnicity, gender, physical conditions, and learning ability. Overall, this review comprehensively criticizes the problems brought about by online learning during the pandemic period.

Very little review research evaluated students’ responses to online learning during the pandemic period. For instance, Salas-Pilco et al. (2022) evaluated the engagement in online learning in Latin American higher education during the COVID-19 pandemic period via a systematic review of 23 studies. They considered three dimensions of engagement, including affective, cognitive, and behavioral engagement. They described the characteristics of learning engagement and proposed suggestions for enhancing engagement, including improving Internet connectivity, providing professional training, transforming higher education, ensuring quality, and offering emotional support. A key limitation of the review is that these authors focused on describing the characteristics of engagement without identifying factors that influence engagement.

A synthesis of previous review research offers some implications. First, although learning effectiveness is an important consideration in educational research, review research is scarce on this topic and hence there is a lack of comprehensive knowledge regarding the extent to which online learning is effective during the COVID-19 pandemic period. Second, according to past review research that summarized the major topics of related research, e.g., Bughrara et al. (2023) and Zhang et al. (2022) , the effectiveness of online learning is not a major topic in prior empirical research and hence the author of this article argues that this topic has not received due attention from researchers. Third, some review research has identified a lot of problems in online learning during the pandemic period, e.g., García-Morales et al. (2021) and Stojan et al. (2022) . Many of these problems are caused by the sudden and rapid shift to online learning as well as the unique context of the pandemic. These problems may undermine the effectiveness of online learning. However, the extent to which these problems influence online learning effectiveness is still under-investigated.

1.4 Purpose of the review research

The research is carried out based on a systematic review of past empirical research to answer the following two research questions:

Q1: To what extent is online learning in higher education effective during the COVID-19 pandemic period?

Q2: What factors shape the effectiveness of online learning in higher education during the COVID-19 pandemic period?

2 Research methodology

2.1 Literature review as a research methodology

Regardless of discipline, all academic research activities should be related to and based on existing knowledge. As a result, scholars must identify related research on the topic of interest, critically assess the quality and content of existing research, and synthesize available results ( Linnenluecke et al., 2020 ). However, this task is increasingly challenging for scholars because of the exponential growth of academic knowledge, which makes it difficult to be at the forefront and keep up with state-of-the-art research ( Snyder, 2019 ). Correspondingly, the literature review as a research methodology is more relevant than before ( Snyder, 2019 ; Linnenluecke et al., 2020 ). A well-implemented review provides a solid foundation for facilitating theory development and advancing knowledge ( Webster and Watson, 2002 ). Here, a literature review is broadly defined as a more or less systematic way of collecting and synthesizing past studies ( Tranfield et al., 2003 ). It allows researchers to integrate perspectives and results from many past studies and to address research questions that a single study cannot answer ( Snyder, 2019 ).

There are generally three types of literature review: meta-analysis, bibliometric review, and systematic review ( Snyder, 2019 ). A meta-analysis refers to a statistical technique for integrating results from a large volume of empirical research (mainly quantitative research) to compare, identify, and evaluate patterns, relationships, agreements, and disagreements generated by research on the same topic ( Davis et al., 2014 ). This study does not adopt a meta-analysis for two reasons. First, research on the effectiveness of online learning in the context of the COVID-19 pandemic has been published only since 2020 and there is currently a limited volume of empirical evidence. If the study adopted a meta-analysis, the sample size would be small, resulting in limited statistical power. Second, as mentioned above, there are a variety of indicators, e.g., motivation, satisfaction, experience, test score, and perceived effectiveness ( Rajaram and Collins, 2013 ; Noesgaard and Ørngreen, 2015 ), that reflect different aspects of online learning effectiveness. The use of such diverse effectiveness indicators makes it difficult to pool results in a meta-analysis.

A bibliometric review refers to the analysis of a large volume of empirical research in terms of publication characteristics (e.g., year, journal, and citation), theories, methods, research questions, countries, and authors ( Donthu et al., 2021 ) and it is useful in tracing the trend, distribution, relationship, and general patterns of research published on a focused topic ( Wallin, 2005 ). A bibliometric review does not fit the present study for two reasons. First, at present, research on online learning effectiveness during the pandemic has a history of fewer than 4 years. Hence the volume of relevant research is limited and the publication trend is currently unclear. Second, this study is interested in the inner content and results of the articles published, rather than their external characteristics.

A systematic review is a method and process of critically identifying and appraising research in a specific field based on predefined inclusion and exclusion criteria to test a hypothesis, answer a research question, evaluate problems in past research, identify research gaps, and/or point out the avenue for future research ( Liberati et al., 2009 ; Moher et al., 2009 ). This type of review is particularly suitable to the present study as there are still a lot of unanswered questions regarding the effectiveness of online learning in the pandemic context, a need for indicating future research direction, a lack of summary of relevant research in this field, and a scarcity of critical appraisal of problems in past research.

Adopting a systematic review methodology brings multiple benefits to the present study. First, it is helpful for distinguishing what needs to be done from what has been done, identifying major contributions made by past research, finding out gaps in past research, avoiding fruitless research, and providing insights for future research in the focused field ( Linnenluecke et al., 2020 ). Second, it is also beneficial for finding out new research directions, needs for theory development, and potential solutions for limitations in past research ( Snyder, 2019 ). Third, this methodology helps scholars to efficiently gain an overview of valuable research results and theories generated by past research, which inspires their research design, ideas, and perspectives ( Callahan, 2014 ).

Commonly, a systematic review can be either author-centric or theme-centric ( Webster and Watson, 2002 ) and the present review is theme-centric. Specifically, an author-centric review focuses on works published by a certain author or a group of authors and summarizes the major contributions made by the author(s) ( Webster and Watson, 2002 ). This type of review is problematic because it covers the research conclusions of a specific field incompletely and is descriptive in nature ( Linnenluecke et al., 2020 ). A theme-centric review is more common: the researcher guides readers through themes, concepts, and interesting phenomena according to a certain logic ( Callahan, 2014 ). A theme in this type of review can be further structured into several related sub-themes, which helps researchers to gain a comprehensive understanding of relevant academic knowledge ( Papaioannou et al., 2016 ).

2.2 Research procedures

This study follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guideline ( Liberati et al., 2009 ) to implement a systematic review. The guideline indicates four phases of performing a systematic review, including (1) identifying possible research, (2) abstract screening, (3) assessing full-text for eligibility, and (4) qualitatively synthesizing included research. Figure 1 provides a flowchart of the process and the number of articles excluded and included in each phase.

Figure 1 . PRISMA flowchart concerning the selection of articles.

This study uses multiple academic databases to identify possible research, e.g., Academic Search Complete, IGI Global, ACM Digital Library, Elsevier (SCOPUS), Emerald, IEEE Xplore, Web of Science, Science Direct, ProQuest, Wiley Online Library, Taylor and Francis, and EBSCO. Since the COVID-19 pandemic broke out in January 2020, this study limits the literature search to articles published from January 2020 to August 2023. During this period, online learning was highly prevalent in schools globally and a considerable volume of articles were published to investigate various aspects of online learning in this period. Keywords used for searching possible research include pandemic, COVID, SARS-CoV-2, 2019-nCoV, coronavirus, online learning, e-learning, electronic learning, higher education, tertiary education, universities, learning effectiveness, learning satisfaction, learning engagement, and learning motivation. Aside from searching from databases, this study also manually checks the reference lists of relevant articles and uses Google Scholar to find out other articles that have cited these articles.
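As an illustration only, the keywords listed above could be combined into a Boolean search string. The sketch below assumes a hypothetical grouping of the keywords into four concept groups (pandemic, learning mode, education level, and outcome), with OR inside each group and AND across groups. The grouping, the function name, and the query syntax are assumptions made for illustration and are not the query reported by the study; individual databases may also require different syntax.

```python
# Hypothetical grouping of the keywords listed in Section 2.2.
# The OR-within / AND-across structure is an assumption, not the
# query actually submitted to the databases.
KEYWORD_GROUPS = {
    "pandemic": ["pandemic", "COVID", "SARS-CoV-2", "2019-nCoV", "coronavirus"],
    "mode": ["online learning", "e-learning", "electronic learning"],
    "level": ["higher education", "tertiary education", "universities"],
    "outcome": [
        "learning effectiveness",
        "learning satisfaction",
        "learning engagement",
        "learning motivation",
    ],
}


def build_query(groups):
    """Join terms with OR inside each group and AND across groups."""
    clauses = []
    for terms in groups.values():
        # Quote multi-word terms so they are treated as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


query = build_query(KEYWORD_GROUPS)
print(query)
```

Keeping the groups in a dictionary makes it straightforward to add or drop a concept group and regenerate the string for each database.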

2.3 Inclusion and exclusion criteria

Articles included in the review must meet the following criteria. First, articles have to be written in English and published in peer-reviewed journals. English was chosen as the academic language because it is in the Q zone of the specified search engines. Second, the research must be carried out in an online learning context. Third, the research must have collected and analyzed empirical data. Fourth, the research should be implemented in a higher education context and during the pandemic period. Fifth, the outcome variable must relate to learning effectiveness, and included studies must have reported quantitative results for online learning effectiveness. The outcome variable should be measured by data collected from students, rather than other individuals (e.g., instructors). For instance, the research of Rahayu and Wirza (2020) used teacher perception as a measurement of online learning effectiveness and was hence excluded from the sample. According to the above criteria, a total of 25 articles were included in the review.
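The criteria above can be read as a conjunction of checks applied to each candidate article. The following is a minimal sketch of such a screening step. The `Record` fields and the `is_eligible` function are hypothetical names introduced for illustration; the study does not describe any software used for screening.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical screening fields for one candidate article."""
    language: str
    peer_reviewed: bool
    online_context: bool
    empirical_data: bool
    higher_education: bool
    during_pandemic: bool
    quantitative_effectiveness: bool
    data_source: str  # e.g. "students" or "instructors"


def is_eligible(r: Record) -> bool:
    """Return True only if every inclusion criterion in Section 2.3 holds."""
    return (
        r.language == "English"
        and r.peer_reviewed
        and r.online_context
        and r.empirical_data
        and r.higher_education
        and r.during_pandemic
        and r.quantitative_effectiveness
        and r.data_source == "students"
    )


# Two made-up records: one meets all criteria, the other measures
# effectiveness through instructor reports and is therefore excluded.
student_survey = Record("English", True, True, True, True, True, True, "students")
teacher_survey = Record("English", True, True, True, True, True, True, "instructors")

print(is_eligible(student_survey))
print(is_eligible(teacher_survey))
```

The second record fails only the data-source check, which is the same ground on which a teacher-perception study is excluded in the text above.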

2.4 Data extraction and analysis

Content analysis is performed on included articles and an inductive approach is used to answer the two research questions. First, to understand the basic characteristics of the 25 articles/studies, the researcher summarizes their types, research designs, and samples and categorizes them into several groups. The researcher carefully reads the full-text of these articles and codes valuable pieces of content. In this process, an inductive approach is used, and key themes in these studies have been extracted and summarized. Second, the researcher further categorizes these studies into different groups according to their similarities and differences in research findings. In this way, these studies are broadly categorized into three groups, i.e., (1) ineffective (2) neutral, and (3) effective. Based on this, the research answers the research question and indicates the percentage of studies that evidenced online learning as effective in a COVID-19 pandemic context. The researcher also discusses how online learning is effective by analyzing the learning outcomes brought by online learning. Third, the researcher analyzes and compares the characteristics of the three groups of studies and extracts key themes that are relevant to the conditional effectiveness of online learning from these studies. Based on this, the researcher identifies factors that influence the effectiveness of online learning in a pandemic context. In this way, the two research questions have been adequately answered.

3 Research results and discussion

3.1 Study characteristics

Table 1 shows the statistics of the 25 studies while Table 2 shows a summary of these studies. Overall, these studies varied greatly in terms of research design, research subjects, contexts, measurements of learning effectiveness, and eventually research findings. Approximately half of the studies were published in 2021 and the number of studies declined in 2022 and 2023, which may be attributed to the fact that universities gradually reopened after 2020. China contributed the largest number of studies ( N = 5), followed by India ( N = 4) and the United States ( N = 3). The sample sizes of the majority of studies (88.0%) ranged between 101 and 500. As this review excluded qualitative studies, all included studies adopted either a purely quantitative design (88.0%) or a mixed design (12.0%). The majority of the studies were cross-sectional (72%) and a few studies ( N = 2; 8%) were experimental.

Table 1 . Statistics of studies included in the review.

Table 2 . A summary of studies reviewed.

3.2 The effectiveness of online learning

Overall, the 25 studies generated mixed results regarding the effectiveness of online learning during the pandemic period. Nine studies (36%) reported online learning as effective, 13 studies (52%) reported online learning as ineffective, and the remaining 3 studies (12%) produced neutral results. However, it should be noted that the results generated by these studies are not directly comparable as they used different approaches to evaluate the effectiveness of online learning. According to the approach of evaluating online learning effectiveness, these studies are categorized into four groups: (1) cross-sectional evaluation of online learning effectiveness without a comparison with offline learning, without a control group ( N = 14; 56%), (2) cross-sectional comparison of the effectiveness of online learning with offline learning, without a control group ( N = 7; 28%), (3) longitudinal comparison of the effectiveness of online learning with offline learning, without a control group ( N = 2; 8%), and (4) randomized controlled trial (RCT), with a control group ( N = 2; 8%).

The first group of studies asked students to report the extent to which they perceived online learning as effective, had achieved expected learning outcomes through online learning, or were satisfied with the online learning experience or outcomes, without a comparison with offline learning. Six out of 14 studies reported online learning as ineffective, including Adnan and Anwar (2020) , Hong et al. (2021) , Mok et al. (2021) , Baber (2022) , Chandrasiri and Weerakoon (2022) , and Lalduhawma et al. (2022) . Five out of 14 studies reported online learning as effective, including Almusharraf and Khahro (2020) , Sharma et al. (2020) , Mahyoob (2021) , Rahman (2021) , and Haningsih and Rohmi (2022) . In addition, 3 out of 14 studies reported neutral results, including Cranfield et al. (2021) , Tsang et al. (2021) , and Conrad et al. (2022) . It should be noted that this measurement approach is problematic in three aspects. First, researchers used various survey instruments to measure learning effectiveness without reaching a consensus on a widely accepted instrument. As a result, these studies measured different aspects of learning effectiveness and hence their results may not be comparable. Second, these studies relied on students’ self-reports to evaluate learning effectiveness, which are subjective and may be inaccurate. Third, even if students perceived online learning as effective, this does not imply that online learning is more effective than offline learning, because no comparison was made.

The second group of studies asked students to compare online learning with offline learning to evaluate learning effectiveness. Interestingly, all 7 studies, including Alawamleh et al. (2020) , Almahasees et al. (2021) , Gonzalez-Ramirez et al. (2021) , Muthuprasad et al. (2021) , Selco and Habbak (2021) , Hollister et al. (2022) , and Zhang and Chen (2023) , reported that online learning was perceived by participants as less effective than offline learning. It should be noted that these results were specific to the COVID-19 pandemic context where strict social distancing policies were implemented. Consequently, these results should be interpreted as showing that online learning during the school lockdown period was perceived by participants as less effective than offline learning during the pre-pandemic period. A key problem of the measurement of learning effectiveness in these studies is subjectivity, i.e., students’ self-reported online learning effectiveness relative to offline learning may be subjective and influenced by many factors caused by the pandemic, such as negative emotions (e.g., fear, loneliness, and anxiety).

Only two studies implemented a longitudinal comparison of the effectiveness of online learning with offline learning, i.e., Chang et al. (2021) and Fyllos et al. (2021) . Interestingly, both studies reported that participants perceived online learning as more effective than offline learning, which contradicts the results of the second group of studies. In the two studies, the same group of students participated in offline learning and online learning successively and rated the effectiveness of the two learning approaches, respectively. The two studies arose from a coincidence of timing, i.e., the researchers unexpectedly encountered the pandemic and the subsequent school lockdown while they were investigating learning effectiveness. This coincidence enabled them to compare the effectiveness of offline and online learning. However, this research design has three key problems. First, the content of learning in the online and offline learning periods was different and hence the evaluations of learning effectiveness of the two periods are not comparable. Second, self-reported learning effectiveness is subjective. Third, students are likely to obtain better scores in online examinations than in offline examinations because online examinations are more susceptible to cheating and are less fair than offline examinations. As reported by Fyllos et al. (2021) , the examination score after online learning was significantly higher than after offline learning. Chang et al. (2021) reported that participants generally believed that offline examinations are fairer than online examinations.

Lastly, only two studies, i.e., Jiang et al. (2023) and Shirahmadi et al. (2023) , implemented an RCT design, which is more persuasive, objective, and accurate than the designs of the studies reviewed above. Indeed, implementing an RCT to evaluate the effectiveness of online learning was a formidable challenge during the pandemic period because of viral transmission and social distancing policies. Both studies reported that online learning is more effective than offline learning during the pandemic period. However, the extent to which such results are affected by health/safety-related issues is open to question. It is reasonable to infer that online learning was perceived by students as safer than offline learning during the pandemic period and such perceptions may affect learning effectiveness.
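The counts reported for the four groups in this section can be cross-checked against the overall percentages with simple arithmetic. The sketch below transcribes the per-group counts of effective, ineffective, and neutral studies from the text; the variable and function names are illustrative only.

```python
# Counts transcribed from Section 3.2 as (effective, ineffective, neutral).
GROUPS = {
    "cross-sectional, no comparison with offline": (5, 6, 3),
    "cross-sectional comparison with offline": (0, 7, 0),
    "longitudinal comparison with offline": (2, 0, 0),
    "randomized controlled trial": (2, 0, 0),
}

total = sum(sum(counts) for counts in GROUPS.values())
effective = sum(counts[0] for counts in GROUPS.values())
ineffective = sum(counts[1] for counts in GROUPS.values())
neutral = sum(counts[2] for counts in GROUPS.values())


def pct(n):
    """Share of all reviewed studies, as a whole-number percentage."""
    return round(100 * n / total)


print(total)                          # 25
print(effective, pct(effective))      # 9 36
print(ineffective, pct(ineffective))  # 13 52
print(neutral, pct(neutral))          # 3 12

# Share of studies in each evaluation approach.
for name, counts in GROUPS.items():
    print(name, sum(counts), pct(sum(counts)))
```

The totals reproduce the 36%, 52%, and 12% split reported at the start of the section, and the group sizes reproduce the 56%, 28%, 8%, and 8% shares.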

Overall, it is difficult to conclude whether online learning is effective during the pandemic period. Nevertheless, it is possible to identify factors that shape the effectiveness of online learning, which is discussed in the next section.

3.3 Factors that shape online learning effectiveness

Infrastructure factors were reported as the most salient factors that determine online learning effectiveness. It seems that research from developed countries generated more positive results for online learning than research from less developed countries. This view was supported by the cross-country comparative study of Cranfield et al. (2021) . Indeed, online learning depends on the support of ICT infrastructure, and hence ICT-related factors, e.g., Internet connectivity, technical issues, network speed, and accessibility of digital devices, considerably influence the effectiveness of online learning ( García-Morales et al., 2021 ; Grafton-Clarke et al., 2022 ). Prior review research, e.g., Tang (2023) , also suggested that the unequal distribution of resources and unfair socioeconomic status intensified the problems brought about by online learning during the pandemic period. Salas-Pilco et al. (2022) recommended improving Internet connectivity to increase students’ engagement in online learning during the pandemic period.

Adnan and Anwar’s (2020) study is one of the most cited works in this field. They reported that online learning is ineffective in Pakistan because of problems of Internet access caused by monetary and technical issues. These problems hinder students from participating in online learning activities, making online learning ineffective. Likewise, Lalduhawma et al.’s (2022) research from India indicated that online learning is ineffective because of poor network interactivity, slow data speed, low data limits, and the high cost of devices. As a result, online learning during the COVID-19 pandemic may have widened the education gap between developed and developing countries because of developing countries’ infrastructure disadvantages. More attention to online learning infrastructure problems in developing countries is needed.

Instructional factors, e.g., course management and design, instructor characteristics, instructor-student interaction, assignments, and assessments, were found to affect online learning effectiveness ( Sharma et al., 2020 ; Rahman, 2021 ; Tsang et al., 2021 ; Hollister et al., 2022 ; Zhang and Chen, 2023 ). Although these instructional factors have been well documented as significant drivers of learning effectiveness in the traditional learning literature, they have some unique characteristics in the pandemic period. Neither students nor teachers were well prepared for wholly online instruction and learning in 2020 and hence they encountered many problems in course management and design, learning interactions, assignments, and assessments ( Stojan et al., 2022 ; Tang, 2023 ). García-Morales et al.’s (2021) review also suggested that various stakeholders in learning and teaching encountered difficulties in adapting to the sudden, hasty, and forced transition from offline to online learning. Consequently, these instructional factors become salient in terms of affecting online learning effectiveness.

The negative role of the lack of social interaction caused by social distancing in affecting online learning effectiveness was highlighted by a lot of studies ( Almahasees et al., 2021 ; Baber, 2022 ; Conrad et al., 2022 ; Hollister et al., 2022 ). Baber (2022) argued that people give more importance to saving lives than socializing in the online environment and hence social interactions in learning are considerably reduced by social distancing norms. The negative impact of the lack of social interaction on online learning effectiveness is reflected in two aspects. First, according to a constructivist view, interaction is an indispensable element of learning because knowledge is actively constructed by learners in social interactions ( Woo and Reeves, 2007 ). Consequently, online learning effectiveness during the pandemic period is reduced by the lack of social interaction. Second, the lack of social interaction brings a lot of negative emotions, e.g., feelings of isolation, loneliness, anxiety, and depression ( Alawamleh et al., 2020 ; Gonzalez-Ramirez et al., 2021 ; Selco and Habbak, 2021 ). Such negative emotions undermine online learning effectiveness.

Negative emotions caused by the pandemic and school lockdown were also found to be detrimental to online learning effectiveness. In this context, it was reported that many students experience a lot of negative emotions, e.g., feelings of isolation, exhaustion, loneliness, and distraction ( Alawamleh et al., 2020 ; Gonzalez-Ramirez et al., 2021 ; Selco and Habbak, 2021 ). Such negative emotions, as mentioned above, reduce online learning effectiveness.

Several factors were also found to increase online learning effectiveness during the pandemic period, e.g., convenience and flexibility ( Hong et al., 2021 ; Muthuprasad et al., 2021 ; Selco and Habbak, 2021 ). Students with strong self-regulated learning abilities gain more benefits from convenience and flexibility in online learning ( Hong et al., 2021 ).

Overall, although the effectiveness of online learning during the pandemic period is debated, it is generally believed that the pandemic brings many challenges and difficulties to higher education. Meanwhile, the majority of students prefer offline learning to online learning. The above challenges and difficulties are more prominent in developing countries than in developed countries.

3.4 Pedagogical implications

The results generated by the systematic review offer several pedagogical implications. First, online learning depends on the support of ICT infrastructure, and infrastructure defects strongly undermine learning effectiveness ( García-Morales et al., 2021 ; Grafton-Clarke et al., 2022 ). Given that online learning is increasingly integrated into higher education ( Kebritchi et al., 2017 ) regardless of the presence of the pandemic, governments globally should increase investment in learning-related ICT infrastructure in higher education institutes. Meanwhile, schools should consider whether students can afford digital devices and network fees when implementing online learning activities. It is important to offer material support for students with poor economic status. Infrastructure issues are more prominent in developing countries because of the lack of monetary resources and a poor infrastructure base. Thus, international collaboration and aid are recommended to address these issues.

Second, since the lack of social interaction is a key factor that reduces online learning effectiveness, it is important to increase social interactions during the implementation of online learning activities. On the one hand, both students and instructors are encouraged to utilize network technologies to promote inter-individual interactions. On the other hand, the two parties are also encouraged to engage in offline interaction activities if the risk is acceptable.

Third, special attention should be paid to students’ emotions during the online learning process as online learning may bring a lot of negative emotions to students, which undermine learning effectiveness ( Alawamleh et al., 2020 ; Gonzalez-Ramirez et al., 2021 ; Selco and Habbak, 2021 ). In addition, higher education institutes should prepare a contingency plan for emergency online learning to deal with potential crises in the future, e.g., wars, pandemics, and natural disasters.

3.5 Limitations and suggestions for future research

Past research on online learning effectiveness during the pandemic period has several limitations. The first is the lack of rigor in assessing learning effectiveness. There is a scarcity of empirical research with an RCT design, which is considered to be accurate, objective, and rigorous in assessing pedagogical models (Torgerson and Torgerson, 2001). The scarcity of RCT research makes it difficult to accurately assess the effectiveness of online learning and to compare it with offline learning. Second, widely accepted criteria for assessing learning effectiveness are absent, and past empirical studies used diverse procedures, techniques, instruments, and criteria for measuring online learning effectiveness, which makes their results difficult to compare. Third, learning effectiveness is a multi-dimensional construct, but its multidimensionality was largely ignored by past research. Therefore, it is difficult to evaluate which dimensions of learning effectiveness are promoted or undermined by online learning, and it is also difficult to compare the results of different studies. Finally, there is very limited knowledge about how online learning effectiveness differs between subjects. It is likely that subjects that depend on lab-based work (e.g., experimental physics, organic chemistry, and cell biology) are less appropriate for online learning than subjects that depend on desk-based work (e.g., economics, psychology, and literature).

To address the above limitations, there are several recommendations for future research on online learning effectiveness. First, future research is encouraged to adopt an RCT design and collect a large sample to objectively, rigorously, and accurately quantify the effectiveness of online learning. Second, scholars are encouraged to develop a new framework to assess learning effectiveness comprehensively. This framework should cover multiple dimensions of learning effectiveness and have strong generalizability. Finally, it is recommended that future research compare the effectiveness of online learning between different subjects.

4 Conclusion

This study carried out a systematic review of 25 empirical studies published between 2020 and 2023 to evaluate the effectiveness of online learning during the COVID-19 pandemic period. According to how online learning effectiveness was assessed, these 25 studies were categorized into four groups. The first group of studies employed a cross-sectional design and assessed online learning based on students’ perceptions without a control group. Fewer than half of these studies reported online learning as effective. The second group of studies also employed a cross-sectional design and asked students to compare the effectiveness of online learning with offline learning. All these studies reported that online learning is less effective than offline learning. The third group, which includes only two studies, employed a longitudinal design and compared the effectiveness of online learning with offline learning but without a control group. These studies reported that online learning is more effective than offline learning. The fourth group, which also includes only two studies, employed an RCT design. Both studies reported online learning as more effective than offline learning.

Overall, it is difficult to conclude whether online learning is effective during the pandemic period because of the diverse research contexts, methods, and approaches in past research. Nevertheless, the review identifies a set of factors that positively or negatively influence the effectiveness of online learning, including infrastructure factors, instructional factors, the lack of social interaction, negative emotions, flexibility, and convenience. Although the effectiveness of online learning during the pandemic period remains debated, it is generally believed that the pandemic has brought many challenges and difficulties to higher education. Meanwhile, the majority of students prefer offline learning to online learning. In addition, developing countries face more challenges and difficulties in online learning because of monetary and infrastructure issues.

The findings of this review offer significant pedagogical implications for online learning in higher education institutes, including enhancing the development of ICT infrastructure, providing material support for students with poor economic status, enhancing social interactions, paying attention to students’ emotional status, and preparing a contingency plan for emergency online learning.

The review also identifies several limitations in past research regarding online learning effectiveness during the pandemic period, including the lack of rigor in assessing learning effectiveness, the absence of accepted criteria for assessing learning effectiveness, the neglect of the multidimensionality of learning effectiveness, and limited knowledge about the difference in online learning effectiveness between different subjects.

To address these limitations, the review recommends that future research adopt RCT designs with large samples, develop a comprehensive framework that covers the multiple dimensions of learning effectiveness, and compare the effectiveness of online learning between different subjects.

It should be noted that this review has its own limitations. First, only studies that quantitatively measured online learning effectiveness were included in the review; many other studies (e.g., qualitative studies) that investigated factors influencing online learning effectiveness were therefore excluded, resulting in a relatively small sample and an incomplete synthesis of past research contributions. Second, since this review was qualitative, it was difficult to accurately quantify the level of online learning effectiveness.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

WM: Writing – original draft, Writing – review & editing. LY: Writing – original draft, Writing – review & editing. CL: Writing – review & editing. NP: Writing – review & editing. XP: Writing – review & editing. YZ: Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adnan, M., and Anwar, K. (2020). Online learning amid the COVID-19 pandemic: students' perspectives. J. Pedagogical Sociol. Psychol. 1, 45–51. doi: 10.33902/JPSP.2020261309

Alawamleh, M., Al-Twait, L. M., and Al-Saht, G. R. (2020). The effect of online learning on communication between instructors and students during Covid-19 pandemic. Asian Educ. Develop. Stud. 11, 380–400. doi: 10.1108/AEDS-06-2020-0131

Almahasees, Z., Mohsen, K., and Amin, M. O. (2021). Faculty’s and students’ perceptions of online learning during COVID-19. Front. Educ. 6:638470. doi: 10.3389/feduc.2021.638470

Almusharraf, N., and Khahro, S. (2020). Students satisfaction with online learning experiences during the COVID-19 pandemic. Int. J. Emerg. Technol. Learn. (iJET) 15, 246–267. doi: 10.3991/ijet.v15i21.15647

Anderson, N., and Hajhashemi, K. (2013). Online learning: from a specialized distance education paradigm to a ubiquitous element of contemporary education. In 4th international conference on e-learning and e-teaching (ICELET 2013) (pp. 91–94). IEEE.

Arkorful, V., and Abaidoo, N. (2015). The role of e-learning, advantages and disadvantages of its adoption in higher education. Int. J. Instructional Technol. Distance Learn. 12, 29–42.

Baber, H. (2022). Social interaction and effectiveness of the online learning–a moderating role of maintaining social distance during the pandemic COVID-19. Asian Educ. Develop. Stud. 11, 159–171. doi: 10.1108/AEDS-09-2020-0209

Barnard-Brak, L., Paton, V. O., and Lan, W. Y. (2010). Profiles in self-regulated learning in the online learning environment. Int. Rev. Res. Open Dist. Learn. 11, 61–80. doi: 10.19173/irrodl.v11i1.769

Bughrara, M. S., Swanberg, S. M., Lucia, V. C., Schmitz, K., Jung, D., and Wunderlich-Barillas, T. (2023). Beyond COVID-19: the impact of recent pandemics on medical students and their education: a scoping review. Med. Educ. Online 28:2139657. doi: 10.1080/10872981.2022.2139657

Callahan, J. L. (2014). Writing literature reviews: a reprise and update. Hum. Resour. Dev. Rev. 13, 271–275. doi: 10.1177/1534484314536705

Camargo, C. P., Tempski, P. Z., Busnardo, F. F., Martins, M. D. A., and Gemperli, R. (2020). Online learning and COVID-19: a meta-synthesis analysis. Clinics 75:e2286. doi: 10.6061/clinics/2020/e2286

Choudhury, S., and Pattnaik, S. (2020). Emerging themes in e-learning: a review from the stakeholders’ perspective. Comput. Educ. 144:103657. doi: 10.1016/j.compedu.2019.103657

Chandrasiri, N. R., and Weerakoon, B. S. (2022). Online learning during the COVID-19 pandemic: perceptions of allied health sciences undergraduates. Radiography 28, 545–549. doi: 10.1016/j.radi.2021.11.008

Chang, J. Y. F., Wang, L. H., Lin, T. C., Cheng, F. C., and Chiang, C. P. (2021). Comparison of learning effectiveness between physical classroom and online learning for dental education during the COVID-19 pandemic. J. Dental Sci. 16, 1281–1289. doi: 10.1016/j.jds.2021.07.016

Conrad, C., Deng, Q., Caron, I., Shkurska, O., Skerrett, P., and Sundararajan, B. (2022). How student perceptions about online learning difficulty influenced their satisfaction during Canada's Covid-19 response. Br. J. Educ. Technol. 53, 534–557. doi: 10.1111/bjet.13206

Cranfield, D. J., Tick, A., Venter, I. M., Blignaut, R. J., and Renaud, K. (2021). Higher education students’ perceptions of online learning during COVID-19—a comparative study. Educ. Sci. 11, 403–420. doi: 10.3390/educsci11080403

Desai, M. S., Hart, J., and Richards, T. C. (2008). E-learning: paradigm shift in education. Education 129, 1–20.

Davis, J., Mengersen, K., Bennett, S., and Mazerolle, L. (2014). Viewing systematic reviews and meta-analysis in social research through different lenses. SpringerPlus 3, 1–9. doi: 10.1186/2193-1801-3-511

Donthu, N., Kumar, S., Mukherjee, D., Pandey, N., and Lim, W. M. (2021). How to conduct a bibliometric analysis: an overview and guidelines. J. Bus. Res. 133, 264–269. doi: 10.1016/j.jbusres.2021.04.070

Fyllos, A., Kanellopoulos, A., Kitixis, P., Cojocari, D. V., Markou, A., Raoulis, V., et al. (2021). University students perception of online education: is engagement enough? Acta Informatica Medica 29, 4–9. doi: 10.5455/aim.2021.29.4-9

Gamage, D., Ruipérez-Valiente, J. A., and Reich, J. (2023). A paradigm shift in designing education technology for online learning: opportunities and challenges. Front. Educ. 8:1194979. doi: 10.3389/feduc.2023.1194979

García-Morales, V. J., Garrido-Moreno, A., and Martín-Rojas, R. (2021). The transformation of higher education after the COVID disruption: emerging challenges in an online learning scenario. Front. Psychol. 12:616059. doi: 10.3389/fpsyg.2021.616059

Gonzalez-Ramirez, J., Mulqueen, K., Zealand, R., Silverstein, S., Mulqueen, C., and BuShell, S. (2021). Emergency online learning: college students' perceptions during the COVID-19 pandemic. Coll. Stud. J. 55, 29–46.

Grafton-Clarke, C., Uraiby, H., Gordon, M., Clarke, N., Rees, E., Park, S., et al. (2022). Pivot to online learning for adapting or continuing workplace-based clinical learning in medical education following the COVID-19 pandemic: a BEME systematic review: BEME guide no. 70. Med. Teach. 44, 227–243. doi: 10.1080/0142159X.2021.1992372

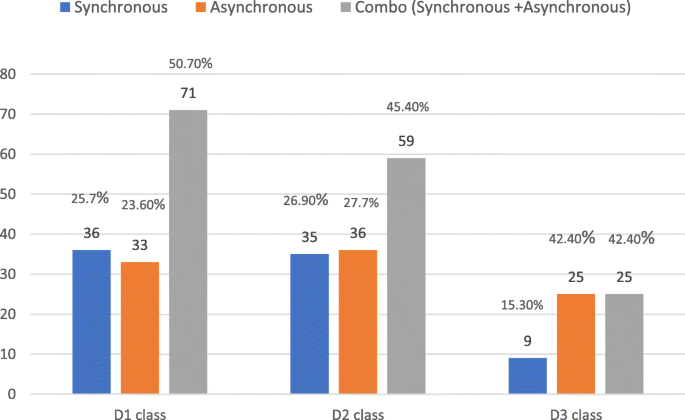

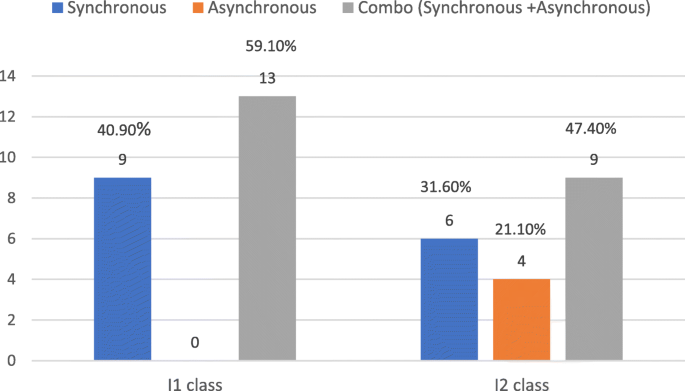

Haningsih, S., and Rohmi, P. (2022). The pattern of hybrid learning to maintain learning effectiveness at the higher education level post-COVID-19 pandemic. Eurasian J. Educ. Res. 11, 243–257. doi: 10.12973/eu-jer.11.1.243