M.Tech/Ph.D Thesis Help in Chandigarh | Thesis Guidance in Chandigarh


What is Digital Image Processing?

Digital image processing is the use of computer algorithms to process digital images. The latest research and thesis topics in digital image processing are based on these algorithms. As a subcategory of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and avoids problems such as the build-up of noise and signal distortion during processing. Because images are defined over two or more dimensions, digital image processing can be treated as “a model of multidimensional systems”. The history of digital image processing dates back to the early 1920s, when its first application made the news. Many students choose this field for their M.Tech or Ph.D. thesis, and there are various thesis topics in digital image processing for M.Tech, M.Phil and Ph.D. students. A list of thesis topics in image processing is given here. Before going into the topics, you should have some basic knowledge of image processing.

Latest research topics in image processing for research scholars:

- The hybrid classification scheme for plant disease detection in image processing

- The edge detection scheme in image processing using ant and bee colony optimization

- To improve PNLM filtering scheme to denoise MRI images

- The classification method for the brain tumor detection

- The CNN approach for the lung cancer detection in image processing

- The neural network method for the diabetic retinopathy detection

- The copy-move forgery detection approach using textural feature extraction method

- Design face spoof detection method based on eigen feature extraction and classification

- The classification and segmentation method for the number plate detection


Formation of Digital Images

First, a camera captures the image, using a light source such as sunlight as the source of energy. A sensor array is used to acquire the image. The sensors measure the amount of light reflected by the object when light falls on it, and they generate a continuous voltage signal as the data is sensed. The collected data is then converted into a digital format by applying sampling and quantization. The result is a 2-dimensional array of numbers, which is the digital image.
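The sampling and quantization steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `digitize`, the idea of modeling the sensor signal as a function `scene(x, y)` returning a voltage between 0 and 1, and the tiny grid size are all hypothetical and not taken from any particular camera pipeline.

```python
def digitize(scene, rows, cols, levels=256):
    """Sample a continuous scene(x, y) on a rows x cols grid and quantize each sample."""
    image = []
    for r in range(rows):
        line = []
        for c in range(cols):
            value = scene(c / cols, r / rows)       # sampling: read the signal at a grid position
            value = min(max(value, 0.0), 1.0)       # clip to the assumed 0..1 voltage range
            line.append(min(int(value * levels), levels - 1))  # quantization to an integer level
        image.append(line)
    return image


# Hypothetical scene: brightness ramps from dark (left) to bright (right).
image = digitize(lambda x, y: x, rows=2, cols=4, levels=4)
print(image)
```

With more rows and columns the spatial detail improves (finer sampling), and with more levels the brightness resolution improves (finer quantization).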

Why is Image Processing Required?

Image processing serves the following main purposes:

- Visualization of the hidden objects in the image.

- Enhancement of the image through sharpening and restoration.

- Seek valuable information from the images.

- Measuring different patterns of objects in the image.

- Distinguishing different objects in the image.

Applications of Digital Image Processing

There are various applications of digital image processing, any of which can also be a good thesis topic. The main applications of image processing are as follows:

- Image Processing is used to enhance the image quality through techniques like image sharpening and restoration. The images can be altered to achieve the desired results.

- Digital Image Processing finds its application in the medical field for gamma-ray imaging, PET Scan, X-ray imaging, UV imaging.

- It is used for transmission and encoding.

- It is used in color processing in which processing of colored images is done using different color spaces.

- Image Processing finds its application in machine learning for pattern recognition.

List of topics in image processing for thesis and research

There are various topics in digital image processing for thesis and research. Here is the list of the latest thesis and research topics in digital image processing:

- Image Acquisition

- Image Enhancement

- Image Restoration

- Color Image Processing

- Wavelets and Multi Resolution Processing

- Compression

- Morphological Processing

- Segmentation

- Representation and Description

- Object recognition

- Knowledge Base

1. Image Acquisition:

Image acquisition is the first and an essential step of digital image processing. It can be as simple as being given an image that is already in digital form, and it generally involves preprocessing such as scaling. It starts with a sensor (such as a monochrome or color TV camera) capturing an image, which is then digitized. If the output of the camera or sensor is not already in digital form, an analog-to-digital converter (ADC) digitizes it. If the image is not properly acquired, the later processing tasks cannot achieve the results you want. Customized hardware is used for advanced image acquisition techniques, and 3D image acquisition is one such advanced method. Students can choose this method for their master’s thesis and research.

2. Image Enhancement:

Image enhancement is one of the easiest and most important areas of digital image processing. The core idea behind image enhancement is to bring out information that is obscured or to highlight specific features of an image according to the requirements, for example by changing brightness and contrast. It involves manipulating an image so that the result is more suitable than the original for a specific application. Many algorithms have been designed to change an image’s contrast, brightness, and similar properties. Image enhancement aims to improve how humans perceive the image. Image enhancement techniques are of two types: spatial domain and frequency domain.
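As a small spatial-domain illustration, brightness and contrast changes can be written as simple per-pixel operations. The sketch below assumes 8-bit grayscale images stored as nested Python lists; the function names and the sample values are made up for the example, and a real project would more likely use NumPy arrays or a library such as OpenCV.

```python
def adjust_brightness_contrast(image, alpha=1.0, beta=0):
    """Apply g = alpha * f + beta to every pixel, clipped to the 8-bit range."""
    return [[max(0, min(255, int(round(alpha * p + beta)))) for p in row] for row in image]


def stretch_contrast(image):
    """Linearly map the darkest pixel to 0 and the brightest pixel to 255."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:                       # a flat image has no contrast to stretch
        return [row[:] for row in image]
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in image]


dull = [[100, 110], [120, 150]]
brighter = adjust_brightness_contrast(dull, alpha=1.0, beta=40)   # beta shifts brightness
stretched = stretch_contrast(dull)                                # uses the full 0..255 range
print(brighter, stretched)
```

Here `alpha` scales contrast and `beta` shifts brightness; contrast stretching is one of the simplest ways to make a washed-out image use the full intensity range.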

3. Image Restoration:

Image restoration involves improving the appearance of an image. Unlike image enhancement, which is subjective, image restoration is objective in the sense that restoration techniques are based on mathematical or probabilistic models of image degradation. Image restoration aims to remove blur and noise from an image to recover an estimate of the clean original image. It can be a good choice for an M.Tech thesis on image processing. The image information lost during blurring is recovered through a reversal process, which is what distinguishes restoration from enhancement. Deconvolution is commonly used for this and is often performed in the frequency domain. The main defects that degrade an image are corrected here.

4. Color Image Processing:

Color image processing has attracted great interest because of the significant increase in the use of digital images on the Internet. It includes color modeling and processing in the digital domain. Various color models are used to specify a color using a 3D coordinate system, including the RGB, CMY, HSI, and YIQ models. Color image processing is worthwhile because humans can perceive thousands of colors. There are two areas of color image processing: full-color processing and pseudo-color processing. In full-color processing, the image is processed in full color, while in pseudo-color processing, colors are assigned to the gray levels of a grayscale image. It is an interesting topic in image processing.
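Two of the ideas above are easy to illustrate: converting between color models and pseudo-coloring a grayscale image. The sketch below assumes 8-bit pixel values; `rgb_to_cmy` uses the standard complement relation between RGB and CMY, while the blue-to-red ramp in `pseudo_color` is a hypothetical colormap chosen only for the example.

```python
def rgb_to_cmy(pixel):
    """Convert an 8-bit (R, G, B) pixel to (C, M, Y): each channel is the complement."""
    r, g, b = pixel
    return (255 - r, 255 - g, 255 - b)


def pseudo_color(gray):
    """Map a gray level in 0..255 to a color on a simple blue-to-red ramp."""
    return (gray, 0, 255 - gray)


gray_image = [[0, 128], [200, 255]]
colored = [[pseudo_color(p) for p in row] for row in gray_image]
print(rgb_to_cmy((255, 0, 0)))   # pure red expressed in CMY
print(colored)
```

Dark pixels come out blue and bright pixels come out red, which makes small intensity differences easier to see than in the original grayscale image.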

Important Digital Image Processing Terminologies

- Stereo Vision and Super Resolution

- Multi-Spectral Remote Sensing and Imaging

- Digital Photography and Imaging

- Acoustic Imaging and Holographic Imaging

- Computer Vision and Graphics

- Image Manipulation and Retrieval

- Quality Enrichment in Volumetric Imaging

- Color Imaging and Bio-Medical Imaging

- Pattern Recognition and Analysis

- Imaging Software Tools, Technologies and Languages

- Image Acquisition and Compression Techniques

- Mathematical Morphological Image Segmentation

Image Processing Algorithms

In general, image processing techniques are used to perform certain operations on input images and to extract the desired information from them. The input is an image, and the result is an improved image or another output suited to the task. Image processing algorithms play a crucial role in current real-time applications. Various algorithms are used for purposes such as the following:

- Digital Image Detection

- Image Reconstruction

- Image Restoration

- Image Enhancement

- Image Quality Estimation

- Spectral Image Estimation

- Image Data Compression

For the above image processing tasks, algorithms are tuned to the number of training and testing samples and can also be used for real-time/online processing. Filtering techniques are widely used for image processing and enhancement, and their main functions are as follows:

- Brightness Correction

- Contrast Enhancement

- Resolution and Noise Level of Image

- Contouring and Image Sharpening

- Blurring, Edge Detection and Embossing

Some of the commonly used techniques for image processing can be classified into the following,

- Low-Level Image Processing Techniques – Noise Elimination and Color Contrast Enhancement

- Medium-Level Image Processing Techniques – Binarization and Compression

- Higher-Level Image Processing Techniques – Image Segmentation

- Recognition and Detection Image Processing Algorithms – Semantic Analysis

Next, let’s look at some of the traditional image processing algorithms.

Types of Digital Image Processing Algorithms

- Hough Transform Algorithm

- Canny Edge Detector Algorithm

- Scale-Invariant Feature Transform (SIFT) Algorithm

- Generalized Hough Transform Algorithm

- Speeded Up Robust Features (SURF) Algorithm

- Marr–Hildreth Algorithm

- Connected-component labeling algorithm: Identify and label the connected regions of an image

- Histogram equalization algorithm: Enhance the contrast of an image by redistributing its intensity histogram

- Adaptive histogram equalization algorithm: Equalize histograms computed over local regions of the image to improve local contrast

- Error Diffusion Algorithm

- Ordered Dithering Algorithm

- Floyd–Steinberg Dithering Algorithm

- Riemersma Dithering Algorithm

- Richardson–Lucy deconvolution algorithm: An iterative deblurring algorithm that recovers the original image from one blurred by a known point spread function

- Seam carving algorithm: Also known as content-aware image resizing; it resizes an image by removing or inserting seams (connected paths of low-importance pixels)

- Region Growing Algorithm

- GrowCut Algorithm

- Watershed Transformation Algorithm

- Random Walker Algorithm

- Elser difference-map algorithm: A search-based algorithm for general constraint satisfaction problems, originally used for X-ray diffraction microscopy

- Blind deconvolution algorithm: Like Richardson–Lucy deconvolution, it deblurs an image to recover a sharp one, but it does so without knowing the blur (the point spread function) in advance
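To make one entry from the list above concrete, here is a minimal sketch of global histogram equalization. It assumes an 8-bit grayscale image stored as nested lists and uses the common cumulative-distribution mapping; the function name and the sample image are illustrative only, and integer floor division is used where other implementations round.

```python
def equalize_histogram(image, levels=256):
    """Spread the gray levels of an image using its cumulative histogram."""
    flat = [p for row in image for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1

    # Cumulative distribution function of the gray levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)    # CDF value at the darkest level present
    total = len(flat)
    if total == cdf_min:                       # only one gray level: nothing to equalize
        return [row[:] for row in image]

    # Lookup table mapping each old level to its equalized level.
    lut = [max(0, (c - cdf_min) * (levels - 1) // (total - cdf_min)) for c in cdf]
    return [[lut[p] for p in row] for row in image]


low_contrast = [[52, 52], [53, 55]]
equalized = equalize_histogram(low_contrast)
print(equalized)
```

The input uses only levels 52 to 55, while the output spans the whole 0 to 255 range. The adaptive variant applies the same idea to local regions instead of the whole image.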

Nowadays, various industries also use digital image processing, developing customized procedures to satisfy their requirements. These may be built from scratch or by combining existing algorithms into hybrids. As a result, image processing has brought major changes to many information technology sectors and applications.

Digital Image Processing Techniques

- Smooth the image by replacing each pixel value with the median or mean of its neighbors. This comes at the cost of weaker edge sharpness and a blurring effect.

- Eliminate the distortion in an image by scaling, warping, translation, and rotation

- Differentiate the image content in depth to uncover hidden data, or convert the color image into a gray-scale image

- Segment the image into multiple regions based on certain constraints, for instance foreground and background

- Enhance the image display through pixel-based threshold operations

- Reduce the noise in an image by averaging multiple images of the same scene

- Sharpen the image by boosting the pixel values at the edges

- Extract specific features to remove noise from an image

- Perform arithmetic operations (addition, subtraction, multiplication, and division) to identify the differences between images
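The first technique in the list, smoothing with a neighborhood median, can be sketched as follows. This assumes a grayscale image stored as nested lists and handles the borders by replicating the nearest edge pixel, which is one of several reasonable choices; the function name and test image are illustrative.

```python
from statistics import median


def median_filter(image, size=3):
    """Replace each pixel with the median of its size x size neighborhood."""
    height, width = len(image), len(image[0])
    k = size // 2
    out = []
    for r in range(height):
        row = []
        for c in range(width):
            # Clamp coordinates so border pixels reuse the nearest valid neighbor.
            window = [
                image[min(max(r + dr, 0), height - 1)][min(max(c + dc, 0), width - 1)]
                for dr in range(-k, k + 1)
                for dc in range(-k, k + 1)
            ]
            row.append(int(median(window)))
        out.append(row)
    return out


noisy = [
    [10, 10, 10],
    [10, 255, 10],   # a single "salt" noise pixel in the middle
    [10, 10, 10],
]
smoothed = median_filter(noisy)
print(smoothed)
```

The median removes the isolated bright pixel completely, whereas a mean filter would smear it over its neighbors.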

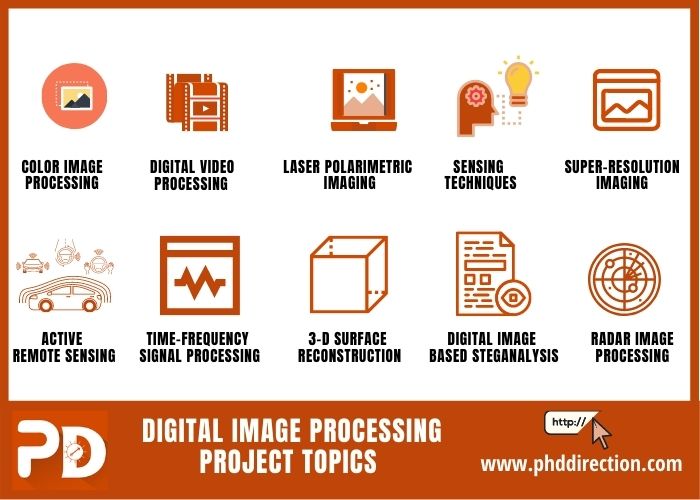

Beyond this, the field offers numerous digital image processing project topics for current and upcoming scholars. Below are some research ideas that help you classify, analyze, represent, and display images or particular characteristics of an image.

Latest 11 Interesting Digital Image Processing Project Topics

- Acoustic and Color Image Processing

- Digital Video and Signal Processing

- Multi-spectral and Laser Polarimetric Imaging

- Image Processing and Sensing Techniques

- Super-resolution Imaging and Applications

- Passive and Active Remote Sensing

- Time-Frequency Signal Processing and Analysis

- 3-D Surface Reconstruction using Remote Sensed Image

- Digital Image based Steganalysis and Steganography

- Radar Image Processing for Remote Sensing Applications

- Adaptive Clustering Algorithms for Image processing


20+ Image Processing Projects Ideas in Python with Source Code

Image Processing Projects Ideas in Python with Source Code for Hands-on Practice to develop your computer vision skills as a Machine Learning Engineer.

Perhaps the great French military leader Napoleon Bonaparte wasn't too far off when he said, “A picture is worth a thousand words.” Ignoring the poetic value, if just for a moment, the facts have since been established to prove this statement's literal meaning. Humans, the truly visual beings we are, respond to and process visual data better than any other data type. The human brain is said to process images 60,000 times faster than text. Further, 90 percent of the information transmitted to the brain is reportedly visual. These stats alone are enough to show the importance images have to humans.


Therefore, the domain of image processing, which deals with enhancing images and extracting useful information from them, has been growing rapidly since its inception. This growth has spurred the development of popular platforms and libraries like MATLAB, scikit-image, and OpenCV. Technology forerunners and world-renowned conglomerates such as Google, Apple, Microsoft, and Amazon all work on image processing. Hands-on experience with image processing projects can therefore be an invaluable skill.

Table of Contents

- Image processing projects for beginners

- 1) Grayscaling images

- 2) Image smoothing

- 3) Edge detection

- 4) Skew correction

- 5) Image compression using MATLAB

- 6) Image effect filters

- 7) Face detection

- 8) Image to text conversion using MATLAB

- 9) Watermarking

- 10) Image classification using MATLAB

- 11) Background subtraction

- 12) Instance segmentation

- 13) Pose recognition using MATLAB

- 14) Medical image segmentation

- 15) Image fusion

- 16) Sudoku solver

- 17) Bar-code detection

- 18) Automatically correcting images’ exposure

- 19) Quilting images and synthesising texture

- 20) Signature verifying system

- What is image processing with example

- What can be done with image processing

- Which is the best software for image processing

- 20+ image processing projects ideas

With the vast expectations the domain bears on its shoulders, getting started with Image Processing can unsurprisingly be a little intimidating. As if to make matters worse for a beginner, the myriad of high-level functions implemented can make it extremely hard to navigate. Since one of the best ways to get an intuitive understanding of the field can be to deconstruct and implement these commonly used functions yourself, the list of image processing projects ideas presented in this section seeks to do just that!


This section has easy image processing projects ideas for novices in Image processing. You will find this section most helpful if you are a student looking for image processing projects for the final year.

Grayscaling is among the most commonly used preprocessing techniques as it allows for dimensionality reduction and reduces computational complexity. This process is almost indispensable even for more complex algorithms like Optical Character Recognition, around which companies like Microsoft have built and deployed entire products (i.e., Microsoft OCR).

The output image shown above has been grayscaled using the rgb2gray function from scikit-image. (Image used from Image Processing Kaggle)

There are plenty of readily available functions in OpenCV, MATLAB, and other popular image processing tools to implement a grayscaling algorithm. For this image processing project, you could import the color image of your choice using the Pillow library and then transform the array using NumPy . For this project, you are advised to use the Luminosity Method, which uses the formula 0.21*R+0.72*G+0.07*B. The results look similar to the Grayscale image in the figure with minor variations in contrast because of the difference in the formula used. Alternatively, you could attempt to implement other Grayscaling algorithms like the Lightness and the Average Method.
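The Luminosity Method described above can be sketched directly from its formula. To keep the example dependency-free, the image is a nested list of (R, G, B) tuples rather than a Pillow image or NumPy array as the project suggests; the function name and the four sample pixels are made up for the illustration.

```python
def to_grayscale(rgb_image):
    """Grayscale an RGB image with the luminosity weights 0.21, 0.72 and 0.07."""
    return [
        [int(round(0.21 * r + 0.72 * g + 0.07 * b)) for (r, g, b) in row]
        for row in rgb_image
    ]


rgb = [
    [(255, 0, 0), (0, 255, 0)],        # pure red, pure green
    [(0, 0, 255), (255, 255, 255)],    # pure blue, white
]
gray = to_grayscale(rgb)
print(gray)
```

Green contributes most to the result and blue least, which reflects how sensitive human vision is to each channel. Swapping the weighted sum for `(r + g + b) / 3` gives the Average Method mentioned above.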


Image smoothing ameliorates the effect of high-frequency spatial noise from an image. It is also an important step used even in advanced critical applications like medical image processing, making operations like derivative computation numerically stable.

For this beginner-level image processing project, you can implement Gaussian smoothing. To do so, you will need to create a 2-dimensional Gaussian kernel (possibly from one-dimensional kernels using the outer product) with the NumPy library and then convolve it with a padded version of the image of your choice. The above output has been obtained from scikit-image, with the multi-dimensional Gaussian filter used for smoothing. Observe how the ‘sharpness' of the edges is lost after the smoothing operation in this image processing project. The smoothing process can also be performed on the RGB image. However, a grayscale image has been used here for simplicity.
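The kernel construction and convolution described above might look like the following sketch. It is written with plain Python lists so that it runs without any dependencies, whereas the project itself suggests NumPy; the function names, the 3-by-3 kernel size, and the use of zero padding are assumptions made for the example.

```python
import math


def gaussian_kernel_1d(size, sigma):
    """Normalized 1-D Gaussian weights centred on the middle element."""
    half = size // 2
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_kernel_2d(size, sigma):
    """Build the 2-D kernel as the outer product of two 1-D kernels."""
    k = gaussian_kernel_1d(size, sigma)
    return [[a * b for b in k] for a in k]


def convolve(image, kernel):
    """Same-size 2-D convolution; pixels outside the image count as zero."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    rr, cc = r + i - kh // 2, c + j - kw // 2
                    if 0 <= rr < h and 0 <= cc < w:
                        # Index the kernel in reverse: convolution flips the kernel.
                        acc += image[rr][cc] * kernel[kh - 1 - i][kw - 1 - j]
            out[r][c] = acc
    return out


kernel = gaussian_kernel_2d(3, sigma=1.0)
impulse = [[0.0] * 5 for _ in range(5)]
impulse[2][2] = 100.0                      # a single bright pixel
blurred = convolve(impulse, kernel)
```

Convolving a single bright pixel spreads it into a copy of the kernel, which is a convenient way to check an implementation before running it on a real image.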


Edge detection helps segment images to allow for data extraction. An edge in an image is essentially a discontinuity (or a sharp change) in the pixel intensity values of an image. You must have witnessed edge detection at play in software like Kingsoft WPS or your own smartphone scanners and, therefore, should be familiar with its significance.

For this project, you can implement the Sobel operator for edge detection. For this, you can use OpenCV to read the image, NumPy to create the masks, perform the convolution operations, and combine the horizontal and vertical mask outputs to extract all the edges.
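A minimal sketch of the Sobel step is shown below. It uses plain Python lists instead of OpenCV and NumPy so that it stays self-contained, leaves the one-pixel border at zero, and combines the two mask outputs as the gradient magnitude; the helper names and the test image are illustrative assumptions.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]    # responds to horizontal edges


def apply_mask(image, mask, r, c):
    """Weighted sum of the 3x3 neighborhood centred on (r, c)."""
    return sum(mask[i][j] * image[r + i - 1][c + j - 1] for i in range(3) for j in range(3))


def sobel_magnitude(image):
    """Gradient magnitude from the horizontal and vertical Sobel masks."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]            # border pixels stay at 0
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = apply_mask(image, SOBEL_X, r, c)
            gy = apply_mask(image, SOBEL_Y, r, c)
            out[r][c] = math.hypot(gx, gy)
    return out


# A dark-to-bright vertical step edge between the second and third columns.
step = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_magnitude(step)
flat = sobel_magnitude([[80] * 4 for _ in range(4)])
```

The step image produces a strong response along the edge, while the uniform image produces none, since there is no change in intensity to detect.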

The above image demonstrates the results obtained by applying the Sobel filter to the smoothed image.

NOTE: On comparing this to the results obtained by applying the Sobel filter directly to the Grayscaled image (without smoothing) as shown below, you should be able to understand one of the reasons why smoothing is essential before edge detection.


Skew correction is beneficial in applications like OCR. The pain of skew correction is entirely avoided by the artificial intelligence-enabled features built into applications like Kingsoft WPS.

You can try using OpenCV to read and grayscale the image to implement your skew correction program. To eliminate the skew, you will need to compute the bounding box containing the text and adjust its angle. An example of the results of the skew correction operation has been shown. You can try to replicate the results by using this Kaggle dataset ImageProcessing .
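Estimating the skew angle is the core of this project. The sketch below does not compute a rotated bounding box as described above; as a simpler stand-in, it estimates the dominant orientation of the text pixels from their second-order moments. It assumes a binarized image in which text pixels are non-zero, and the function name is hypothetical.

```python
import math


def estimate_skew_degrees(binary):
    """Estimate the orientation of the foreground pixels from image moments."""
    points = [(c, r) for r, row in enumerate(binary) for c, v in enumerate(row) if v]
    n = len(points)
    if n < 2:
        return 0.0
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points) / n
    var_y = sum((y - mean_y) ** 2 for _, y in points) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Angle of the principal axis of the pixel cloud, in degrees.
    return math.degrees(0.5 * math.atan2(2 * cov_xy, var_x - var_y))


level_line = [[0, 0, 0, 0], [1, 1, 1, 1], [0, 0, 0, 0]]
tilted_line = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
print(estimate_skew_degrees(level_line), estimate_skew_degrees(tilted_line))
```

Because row indices grow downward, a positive angle here means the text line descends toward the right. Rotating the image by the opposite angle would then remove the skew.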


Quoting Stephen Hawking, “A picture is worth a thousand words...and uses up a thousand times the memory.” Despite the advantages images have over text data, there is no denying the complexities that the extra bytes they eat up can bring. Optimization, therefore, becomes the only way out.

If words alone haven't made the case, consider Deep Render, a startup that applies machine learning to image compression and raised £1.6 million in seed funding. That should underline the importance of this domain.

For this MATLAB Image Processing project, you can implement the discrete cosine transform (DCT) approach to achieve image compression. It is based on the property that most of the critical information in an image can be described by just a few DCT coefficients. You can use the Image Processing Toolbox software for DCT computation. The input image is divided into 8-by-8 or 16-by-16 blocks, the DCT coefficients of each block are computed, and the coefficients with values close to zero are discarded without seriously degrading image quality. You can use the standard ‘cameraman.tif' image as input for this purpose.
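The block-wise idea can be illustrated outside MATLAB as well. The following Python sketch implements an orthonormal DCT-II and its inverse by hand for a single block, zeroes the coefficients whose magnitude falls below a threshold, and reconstructs the block. The function names and the threshold value are assumptions for the example, and it does not use the Image Processing Toolbox functions the project refers to.

```python
import math


def dct_1d(x):
    """Orthonormal DCT-II of a 1-D sequence."""
    n = len(x)
    return [
        math.sqrt((1 if k == 0 else 2) / n)
        * sum(x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n))
        for k in range(n)
    ]


def idct_1d(coeffs):
    """Inverse of dct_1d (orthonormal DCT-III)."""
    n = len(coeffs)
    return [
        sum(
            math.sqrt((1 if k == 0 else 2) / n) * coeffs[k]
            * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
            for k in range(n)
        )
        for i in range(n)
    ]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def apply_2d(block, transform):
    """Apply a 1-D transform to every row, then to every column."""
    rows = [transform(row) for row in block]
    return transpose([transform(col) for col in transpose(rows)])


def compress_block(block, threshold):
    """Zero DCT coefficients below the threshold; return (reconstruction, kept count)."""
    coeffs = apply_2d(block, dct_1d)
    kept = [[v if abs(v) >= threshold else 0.0 for v in row] for row in coeffs]
    n_kept = sum(1 for row in kept for v in row if v != 0.0)
    return apply_2d(kept, idct_1d), n_kept


flat_block = [[100.0] * 8 for _ in range(8)]
restored, n_kept = compress_block(flat_block, threshold=1.0)
```

For a uniform 8-by-8 block, only the single DC coefficient survives the threshold, yet the block is reconstructed almost exactly. Real image blocks need more coefficients, but usually far fewer than 64.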

Image Credit: Mathworks.in

Intermediate Image Processing Projects Ideas

In the previous section, we introduced simple image processing projects for beginners. We will now move ahead with projects on image processing that are slightly more difficult but equally interesting to attempt.

You must have come across several off-the-shelf software capable of cartooning and adding an artistic effect to your images. These features are enabled on popular social media platforms like Instagram and Snapchat. Producing images with effects of your liking is possible by using Neural Style Transfer.

To implement a model to achieve Neural Style Transfer, you need to choose a style image that will form the ‘effect' and a content image. (You can use this dataset: Tamil Neural Style Transfer Dataset for this image processing project.) The feature portion of a pre-trained VGG-19 can be used for this purpose, and the weighted addition of the style and content loss function is to be optimized during backpropagation.
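The weighted sum of content and style losses mentioned above can be sketched without a deep learning framework. The example assumes feature maps are already available as a list of channels, each a flat list of activations, and it compares style through Gram matrices as is common in neural style transfer. The function names, the default weights, and the use of a single feature layer for both losses are simplifying assumptions, and normalization conventions differ between implementations.

```python
def gram_matrix(features):
    """Channel-by-channel inner products; captures which features co-occur."""
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]


def mean_squared_error(a, b):
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def total_loss(generated, content, style, content_weight=1.0, style_weight=1000.0):
    """Weighted sum of content loss (features) and style loss (Gram matrices)."""
    content_loss = mean_squared_error(generated, content)
    style_loss = mean_squared_error(gram_matrix(generated), gram_matrix(style))
    return content_weight * content_loss + style_weight * style_loss


# Hypothetical feature maps: 2 channels x 2 spatial positions.
features = [[1.0, 2.0], [3.0, 4.0]]
print(gram_matrix(features))
print(total_loss(features, features, features))
```

In a full implementation, the features would come from the pre-trained VGG-19 layers, and the generated image would be updated by backpropagating this loss.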


Seldom would you find a smartphone or a camera without face detection still in use. This feature has become so mainstream that most major smartphone manufacturers, like Apple and Samsung, wouldn't explicitly mention its presence in product specifications.

For this project, you could choose one of the following two ways to implement face detection .

Image Processing Projects using OpenCV Python

For this approach, you could use the pre-trained classifier files for the Haar classifier. While this is not particularly hard to implement, there is much to learn from precisely understanding how the classifier works.

Deep Learning Approach

While dlib's CNN-based face detector is slower than most other methods, its superior performance compensates for its longer execution time. To implement this, you can simply use the pre-trained model from //dlib.net/files/mmod_human_face_detector.dat.bz2.

Irrespective of the approach you choose to go about with for the face detection task, you could use this Image Processing Random Faces Dataset on Kaggle.

Image-to-text conversion, or Optical Character Recognition (OCR), has been the basis of many popular applications such as Microsoft Office Lens and a feature of others such as Google Docs. The prevalence of OCR systems is only rising as the world becomes increasingly digitized. Therefore, this digital image processing project will involve familiarizing yourself with accomplishing image-to-text conversion using MATLAB!

While converting image to text by Optical Character Recognition can be pretty easy with other programming languages like Python (for instance, using pyTesseract), the MATLAB implementation of this project can seem slightly unfamiliar. But unfamiliarity is all there is to this otherwise simple application. You can simply use the Computer Vision Toolbox to perform Optical Character Recognition. Additionally, you can use the pre-trained language data files in the OCR Language Data support files from the OCR Engine page, Tesseract Open Source OCR Engine. Further, as an extension of this project, you could try training your own OCR model using the OCR Trainer application for a specific set of characters, such as handwritten characters, mathematical characters, and so on.

Some publicly available datasets you could use for training on handwritten characters include Digits 0-9: MNIST , A-Z in CSV format , and Math symbols.


Watermarking is a helpful way to protect proprietary images and data; it essentially signs your work the way painters do to their artwork. For digital watermarking to be effective, it should be imperceivable to the human eye and robust to withstand image manipulations like warping, rotation, etc.

For this project, you can combine the Discrete Cosine Transform (DCT) and the Discrete Wavelet Transform (DWT) for watermarking. You can implement an effective watermarking algorithm by changing the wavelet coefficients of selected DWT sub-bands and then applying the DCT. Operations like the DCT can be accomplished in Python using the scipy library.


Image classification finds wide use in digital applications (for instance, Airbnb uses it for room type classification) and in industrial settings such as assembly lines, where it helps find faults.

One way to achieve image classification with MATLAB using the Computer Vision Toolbox function is by employing a visual bag of words. This involves extracting feature descriptors from the training data to train the classifier. The training essentially consists of converting the extracted features into an approximated feature histogram based on the likeness or closeness of the descriptors using clustering to arrive at the image's feature vector. This classifier is then used for prediction. To start this task, you could use this (adorable!) cat, dog, and panda classifier dataset .
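The step that turns extracted descriptors into an image's feature vector can be sketched independently of MATLAB. The example below assumes a visual vocabulary (the cluster centres) has already been learned, assigns each descriptor to its nearest visual word, and returns a normalized histogram. The two-dimensional descriptors, the two-word vocabulary, and the function names are all made up for illustration.

```python
def nearest_word(descriptor, vocabulary):
    """Index of the visual word closest to the descriptor (squared Euclidean distance)."""
    distances = [sum((a - b) ** 2 for a, b in zip(descriptor, word)) for word in vocabulary]
    return distances.index(min(distances))


def bovw_histogram(descriptors, vocabulary):
    """Normalized count of how often each visual word occurs in one image."""
    counts = [0] * len(vocabulary)
    for descriptor in descriptors:
        counts[nearest_word(descriptor, vocabulary)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(vocabulary)


vocabulary = [(0.0, 0.0), (10.0, 10.0)]                # hypothetical cluster centres
descriptors = [(1.0, 0.5), (9.0, 11.0), (0.2, 0.1), (0.0, 1.0)]
histogram = bovw_histogram(descriptors, vocabulary)
print(histogram)
```

Each image ends up with a fixed-length histogram regardless of how many descriptors it produced, which is what allows a standard classifier to be trained on the result.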


Advanced Python Image Processing Projects with Source Code

It is time to level up your game in image processing. After working on the above mentioned projects, we suggest you try out the following digital image processing projects using Python .

Background subtraction is an important technique used in applications like video surveillance. As video analysis involves frame-wise processing, this technique naturally finds its place in the image processing domain. It essentially separates the elements in the foreground from the background by generating a mask.

To model the background, you will need to initialize a model to represent the background characteristics and update it to represent the changes such as lighting during different times of the day or the change in seasons. The Gaussian Mixture Model is one of the many algorithms you can use for this image processing project. (Alternatively, you can use the OpenCV library, which has some High-level APIs which will significantly simplify the task. However, it is advised to understand their working before using them.) The dataset made available on: http://pione.dinf.usherbrooke.ca/ can be used with due acknowledgments.
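The initialize-and-update loop described above can be shown with a much simpler model than a Gaussian Mixture Model. The sketch below keeps a running average of each pixel as the background and marks pixels that differ from it by more than a threshold as foreground. The learning rate, the threshold, and the function names are illustrative assumptions, and frames are plain nested lists of grayscale values.

```python
def foreground_mask(background, frame, threshold=30):
    """Mark pixels that differ from the background model by more than the threshold."""
    return [
        [1 if abs(f - b) > threshold else 0 for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def update_background(background, frame, learning_rate=0.05):
    """Blend the new frame into the model so slow changes (e.g. lighting) are absorbed."""
    return [
        [(1 - learning_rate) * b + learning_rate * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


background = [[50.0, 50.0], [50.0, 50.0]]     # initial model, e.g. the first frame
frame = [[52, 200], [49, 51]]                 # one pixel changed sharply
mask = foreground_mask(background, frame)
background = update_background(background, frame)
print(mask)
```

A small learning rate lets the model follow gradual lighting changes while still flagging sudden ones. A Gaussian Mixture Model extends this by keeping several weighted distributions per pixel instead of a single average.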

While object detection involves finding the location of an object and creating bounding boxes, instance segmentation goes a step beyond by identifying the exact pixels representing an instance of every class of object that the machine learning model has been trained on. Instance segmentation finds its use in numerous state-of-the-art use cases like self-driving cars.

It is advised to use Mask RCNN for this image segmentation problem. You can use the pre-trained mask_rcnn_coco.h5 model and then provide an annotated dataset. The following miniature traffic dataset is annotated in COCO format and should aid transfer learning.

Source Code: Image Segmentation using Mask RCNN Data Science Project

Pose estimation finds use in augmented reality, robotics, and even gaming. Wrnch, a computer vision and deep learning company, built its product around estimating human pose and motion and digitally reconstructing human shape as two- or three-dimensional characters.

Using the OpenPose algorithm, you can implement pose estimation with the Deep Learning Toolbox in MATLAB. The pre-trained model can be used from //ssd.mathworks.com/supportfiles/vision/data/human-pose-estimation.zip. For this project, you can use the MPII Human Pose Dataset (human-pose.mpi-inf.mpg.de/).

Image processing in the medical field is a topic whose benefits and scopes need no introduction. Healthcare product giants like Siemens Healthineers and GE Healthcare have already headed into this domain by introducing AI-Rad Companion and AIRx (or Artificial Intelligence Prescription), respectively.

To accomplish the medical image segmentation task, you can consider implementing the famous U-Net architecture, a convolutional neural network developed to segment biomedical images, using the TensorFlow API. While there have been many advancements since U-Net, the popularity of this architecture and the likely availability of pre-trained models should help you get started.

Using the two chest X-ray datasets from Montgomery County and Shenzhen Hospital, you can attempt lung image segmentation: hncbc.nlm.nih.gov/LHC-downloads/dataset.html. Alternatively, you can use the masks for the Shenzhen Hospital dataset.

Source Code: Medical Image Segmentation

Image fusion combines or fuses multiple input images to obtain new or more precise knowledge. In medical image fusion, combining multiple images from single or multiple modalities reduces redundancy and augments the usefulness and capabilities of medical images in diagnosis. Many companies (like ImFusion) provide expert services, and others develop in-house solutions for their image processing use cases, such as image fusion.

For your image fusion project, you can consider multifocus image fusion. You can use the method described in Slavica Savić's paper titled “Multifocus Image Fusion Based on Empirical Mode Decomposition” for this. The following publicly available multi-focus image dataset can be used to build and evaluate the solution: github.com/sametaymaz/Multi-focus-Image-Fusion-Dataset.

Image-Processing Projects using Python with Source Code on GitHub

This section is particularly for those readers who want solved projects on image processing using Python. We have mentioned the GitHub repository for each project so that you can understand the implementation of the projects deeply.

During summer holidays in childhood, most of us would have tried the exciting game of Sudoku at least once. It is common to get stuck when playing the game for the first time. But the most challenging part is going back and working out where one went wrong.

Well, if you are into image processing, you can build a project to solve the sudoku.

The project idea is to build an intelligent sudoku solver that can pull out the sudoku puzzle from an input image and solve it. The first step in creating such an application is to apply a Gaussian blur filter to the image. After that, use adaptive Gaussian thresholding, invert the colors, dilate the image, and use a convolutional neural network to recognize the puzzle's digits. The last step is to solve the puzzle algorithmically.
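The final solving step can be sketched independently of the image pipeline. The example below is a plain backtracking search in Python, which is one common way to solve a sudoku grid and not necessarily the method used in the linked repository. It assumes the recognition stage has already produced a 9x9 grid with zeros for empty cells.

```python
def is_valid(grid, row, col, digit):
    """Return True if `digit` can be placed at (row, col) without a clash."""
    if digit in grid[row]:
        return False
    if any(grid[r][col] == digit for r in range(9)):
        return False
    top, left = 3 * (row // 3), 3 * (col // 3)
    return all(grid[r][c] != digit
               for r in range(top, top + 3)
               for c in range(left, left + 3))

def solve(grid):
    """Fill empty cells (zeros) in place; return True once the grid is solved."""
    for row in range(9):
        for col in range(9):
            if grid[row][col] == 0:
                for digit in range(1, 10):
                    if is_valid(grid, row, col, digit):
                        grid[row][col] = digit
                        if solve(grid):
                            return True
                        grid[row][col] = 0  # undo and try the next digit
                return False  # no digit fits here, so backtrack
    return True  # no empty cell left

# Example grid as it might come out of the digit-recognition stage.
puzzle = [
    [5, 3, 0, 0, 7, 0, 0, 0, 0],
    [6, 0, 0, 1, 9, 5, 0, 0, 0],
    [0, 9, 8, 0, 0, 0, 0, 6, 0],
    [8, 0, 0, 0, 6, 0, 0, 0, 3],
    [4, 0, 0, 8, 0, 3, 0, 0, 1],
    [7, 0, 0, 0, 2, 0, 0, 0, 6],
    [0, 6, 0, 0, 0, 0, 2, 8, 0],
    [0, 0, 0, 4, 1, 9, 0, 0, 5],
    [0, 0, 0, 0, 8, 0, 0, 7, 9],
]
solved = solve(puzzle)
```

Because the search only ever places digits that pass `is_valid`, a misrecognized digit from the image stage typically shows up as `solve` returning `False`, which is a useful sanity check on the recognition step.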

FUN FACT: Sudoku is an abbreviation for “suuji wa dokushin ni kagiru” (Japanese), which means “the numbers must remain single.”

Source Code on GitHub: neeru1207/AI_Sudoku

This is one of the fun digital image processing projects you should try. It will introduce you to exciting and intriguing image processing techniques while guiding you on building a system that can detect bar codes from an image.

The idea behind this project is simple: make the computer locate the area of an image with the most contours. To get started, convert the image to grayscale. After that, apply image processing methods such as gradient calculation, blurring, binary thresholding, and morphology, and finally find the area with the highest number of contours. Then, label that area as a bar code. Additionally, you can use OpenCV to decode the barcode.

Source Code on GitHub: pyxploiter/Barcode-Detection-and-Decoding

Instagram is one of the top 6 social networks with more than a billion users, and we hope you are not surprised. It's the era of social media platforms, and photographs/digital images are one of the best ways to convey what's going on in your life. And, where there are images, there are filters to beautify them. In this project, you will build a system that can automatically correct the exposure of an input image. This project will help you understand image processing techniques like Histogram Equalisation, Bi-Histogram based Histogram Equalisation, Contrast Limited Adaptive Histogram Equalisation, Gamma Transformation, Adaptive Gamma Transformation, Weighted Adaptive Gamma Transformation, Improved Adaptive Gamma Transformation, and Adaptive non-linear Stretching.
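Two of the techniques named above, histogram equalisation and the gamma transformation, can be sketched with NumPy alone. This is a minimal illustration for 8-bit grayscale images, not the implementation from the linked repository, and the function names are hypothetical.

```python
import numpy as np

def equalize_histogram(image):
    """Histogram equalisation for an 8-bit grayscale image (2-D uint8 array)."""
    hist = np.bincount(image.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest intensity present
    total = image.size
    if total == cdf_min:
        return image.copy()  # constant image: nothing to stretch
    # Map each intensity so that the output CDF is roughly linear.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image]

def gamma_transform(image, gamma):
    """Apply out = 255 * (in / 255) ** gamma; gamma < 1 brightens the image."""
    normalised = image.astype(float) / 255.0
    return np.round(255.0 * normalised ** gamma).astype(np.uint8)

# An under-exposed toy image whose intensities sit in a narrow dark range.
dark = np.array([[10, 10, 20], [20, 30, 30], [40, 40, 40]], dtype=np.uint8)
equalized = equalize_histogram(dark)
brightened = gamma_transform(dark, 0.5)
```

Equalisation spreads the occupied intensities over the full 0 to 255 range, while the gamma transformation applies a fixed curve; the adaptive variants listed above choose that curve from the image statistics instead.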

Source Code on GitHub: GitHub - 07Agarg/Automatic-Exposure-Correction

While creating images on platforms like Canva, one often comes across images that have great texture but not a high resolution. Through image processing techniques, you can create a solution for such images.

This project will create an application that will take a textured image as input and extend that texture to form a higher resolution image. Additionally, you will use the texture and overlap it over another image, referred to as image quilting. This project will use various image processing methods to pick the right texture and create the desired images. You will understand how different mathematical functions like root-mean-square are utilized over pixels for images.

Source Code on GitHub: GitHub - ani8897/Image-Quilting-and-Texture-Synthesis

Can you recall those childhood days when you'd request your siblings to sign your leave application on your parents' behalf by forging their signatures? Forging signatures sounds like a funny thing when you are a kid but not as an adult. Someone can withdraw money from your bank account without you knowing it by forging your signature. So, how do banks make sure it's you and nobody else? You guessed it right; they use image processing.

In this project, you evaluate the score difference between two images of signatures; one would be the original, and the other would be the test image. You will learn how to apply deep learning models like CNN, SigNet, etc., on processed images to build the signature verification application.

Source Code on GitHub: GitHub - DefUs3r/Automatic-Signature-Verification

Concluding with a quote from George Bernard Shaw, “The only way to learn something is to do something.” While (digital) image processing and machine learning were far from established in his time, that doesn't make his advice any less applicable. Projects, short and fun as they are, are a great way to improve your skills in any domain. So, if you've made it up to here, make sure you don't leave without taking up an image processing project or two, and before you know it, you'll have the skills and the project portfolio to show for it!

FAQs on Image Processing Projects

Image processing is a method for applying operations to an image to enhance it or extract relevant information. It is a form of signal processing in which the input is an image and the output is either an image or its features. Example: grayscaling is a popular image processing technique that reduces computational complexity by reducing the dimensionality of the image from three color channels to one.
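As a small illustration of the grayscaling example, the sketch below converts an RGB array to a single channel with NumPy. It assumes the widely used ITU-R BT.601 luma weights; other weightings exist, and the function name is hypothetical.

```python
import numpy as np

def to_grayscale(rgb):
    """Convert an (H, W, 3) RGB array to an (H, W) grayscale array."""
    # BT.601 luma weights for the red, green, and blue channels.
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(float) @ weights

rgb = np.zeros((2, 2, 3), dtype=np.uint8)
rgb[0, 0] = [255, 255, 255]  # white pixel
rgb[0, 1] = [255, 0, 0]      # pure red pixel
gray = to_grayscale(rgb)
```

The output has one value per pixel instead of three, which is the dimensionality reduction the answer refers to.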

Image processing has various applications such as Pattern Recognition, Video processing, Machine/Robot Vision, Image sharpening and restoration, Color processing, Microscopic Imaging, etc.

Here is a list of the best software for image processing:

Adobe Photoshop

About the Author

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,

© 2024 Iconiq Inc.

A list of completed theses and new thesis topics from the Computer Vision Group.

Are you about to start a BSc or MSc thesis? Please read our instructions for preparing and delivering your work.

Below we list possible thesis topics for Bachelor and Master students in the areas of Computer Vision, Machine Learning, Deep Learning and Pattern Recognition. The project descriptions leave plenty of room for your own ideas. If you would like to discuss a topic in detail, please contact the supervisor listed below and Prof. Paolo Favaro to schedule a meeting. Note that for MSc students in Computer Science it is required that the official advisor is a professor in CS.

AI deconvolution of light microscopy images

Level: master.

Background Light microscopy has become an indispensable tool in life sciences research. Deconvolution is an important image processing step for improving the quality of microscopy images: it removes out-of-focus light and yields higher resolution and a better signal-to-noise ratio. Currently, classical deconvolution methods, such as regularisation or blind deconvolution, are implemented in numerous commercial software packages and widely used in research. Recently, AI deconvolution algorithms have been introduced and are being actively developed, as they have shown high application potential.

Aim Adaptation of available AI algorithms for deconvolution of microscopy images. Validation of these methods against state-of-the-art commercially available deconvolution software.

Material and Methods The student will implement and further develop available AI deconvolution methods and acquire test microscopy images of different modalities. Performance of the developed AI algorithms will be validated against available commercial deconvolution software.

- AI algorithm development and implementation: 50%.

- Data acquisition: 10%.

- Comparison of performance: 40%.

Requirements

- Interest in imaging.

- Solid knowledge of AI.

- Good programming skills.

Supervisors Paolo Favaro, Guillaume Witz, Yury Belyaev.

Institutes Computer Vision Group, Digital Science Lab, Microscopy Imaging Center.

Contact Yury Belyaev, Microscopy Imaging Center, [email protected] , + 41 78 899 0110.

Instance segmentation of cryo-ET images

Level: bachelor/master.

In the 1600s, a pioneering Dutch scientist named Antonie van Leeuwenhoek embarked on a remarkable journey that would forever transform our understanding of the natural world. Armed with a simple yet ingenious invention, the light microscope, he delved into uncharted territory, peering through its lens to reveal the hidden wonders of microscopic structures. Fast forward to today, where cryo-electron tomography (cryo-ET) has emerged as a groundbreaking technique, allowing researchers to study proteins within their natural cellular environments. Proteins, functioning as vital nano-machines, play crucial roles in life and understanding their localization and interactions is key to both basic research and disease comprehension. However, cryo-ET images pose challenges due to inherent noise and a scarcity of annotated data for training deep learning models.

Credit: S. Albert et al./PNAS (CC BY 4.0)

To address these challenges, this project aims to develop a self-supervised pipeline utilizing diffusion models for instance segmentation in cryo-ET images. By leveraging diffusion models, which learn to reverse a gradual noising process and thereby capture the underlying data distribution, the pipeline aims to refine and accurately segment cryo-ET images. Self-supervised learning, which relies on unlabeled data, reduces the dependence on extensive manual annotations. Successful implementation of this pipeline could revolutionize the field of structural biology, facilitating the analysis of protein distribution and organization within cellular contexts. Moreover, it has the potential to alleviate the limitations posed by limited annotated data, enabling more efficient extraction of valuable information from cryo-ET images and advancing biomedical applications by enhancing our understanding of protein behavior.

Methods The segmentation pipeline for cryo-electron tomography (cryo-ET) images consists of two stages: training a diffusion model for image generation and training an instance segmentation U-Net using synthetic and real segmentation masks.

1. Diffusion Model Training:
   a. Data Collection: Collect and curate cryo-ET image datasets from the EMPIAR database (https://www.ebi.ac.uk/empiar/).
   b. Architecture Design: Select an appropriate architecture for the diffusion model.
   c. Model Evaluation: Cryo-ET experts will help assess image quality and fidelity through visual inspection and quantitative measures.

2. Building the Segmentation Dataset:
   a. Synthetic and real mask generation: Use the trained diffusion model to generate synthetic cryo-ET images. The diffusion process will be seeded from either a real or a synthetic segmentation mask. This will yield pairs of cryo-ET images and segmentation masks.

3. Instance Segmentation U-Net Training:
   a. Architecture Design: Choose an appropriate instance segmentation U-Net architecture.
   b. Model Evaluation: Evaluate the trained U-Net using precision, recall, and F1 score metrics.
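The evaluation metrics named in the last step can be sketched as follows. This is a minimal pixel-wise version for binary masks in Python with NumPy; the project may well compute these per matched instance instead, so treat the function and its inputs as illustrative assumptions.

```python
import numpy as np

def precision_recall_f1(pred, target):
    """Pixel-wise precision, recall, and F1 score for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.sum(pred & target)    # predicted and truly foreground
    fp = np.sum(pred & ~target)   # predicted but actually background
    fn = np.sum(~pred & target)   # foreground that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

target = np.array([[1, 1, 0], [0, 1, 0]])
pred = np.array([[1, 0, 0], [0, 1, 1]])
precision, recall, f1 = precision_recall_f1(pred, target)
```

In this toy case there are two true positives, one false positive, and one false negative, so all three scores come out at two thirds.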

By combining the diffusion model for cryo-ET image generation and the instance segmentation U-Net, this pipeline provides an efficient and accurate approach to segment structures in cryo-ET images, facilitating further analysis and interpretation.

References

1. Kwon, Diana. "The secret lives of cells-as never seen before." Nature 598.7882 (2021): 558-560.

2. Moebel, Emmanuel, et al. "Deep learning improves macromolecule identification in 3D cellular cryo-electron tomograms." Nature Methods 18.11 (2021): 1386-1394.

3. Rice, Gavin, et al. "TomoTwin: generalized 3D localization of macromolecules in cryo-electron tomograms with structural data mining." Nature Methods (2023): 1-10.

Contacts Prof. Thomas Lemmin Institute of Biochemistry and Molecular Medicine Bühlstrasse 28, 3012 Bern ( [email protected] )

Prof. Paolo Favaro Institute of Computer Science Neubrückstrasse 10 3012 Bern ( [email protected] )

Adding and removing multiple sclerosis lesions in MRI images with diffusion networks

Background Multiple sclerosis lesions are the result of demyelination: they appear as dark spots on T1-weighted MRI imaging and as bright spots on FLAIR MRI imaging. Image analysis for MS patients requires both the accurate detection of new and enhancing lesions, and the assessment of atrophy via local thickness and/or volume changes in the cortex. Detection of new and growing lesions is possible using deep learning, but is made difficult by the relative lack of training data. Meanwhile, cortical morphometry can be affected by the presence of lesions, meaning that removing lesions prior to morphometry may be more robust. Existing ‘lesion filling’ methods are rather crude, yielding unrealistic-appearing brains where the borders of the removed lesions are clearly visible.

Aim: Denoising diffusion networks are the current gold standard in MRI image generation [1]. We aim to leverage this technology to remove and add lesions to existing MRI images. This will allow us to create realistic synthetic MRI images for training and validating MS lesion segmentation algorithms, and for investigating the sensitivity of morphometry software to the presence of MS lesions at a variety of lesion load levels.

Materials and Methods: A large, annotated, heterogeneous dataset of MRI data from MS patients, as well as images of healthy controls without white matter lesions, will be available for developing the method. The student will work in a research group with a long track record in applying deep learning methods to neuroimaging data, as well as experience training denoising diffusion networks.

Nature of the Thesis:

Literature review: 10%

Replication of Blob Loss paper: 10%

Implementation of the sliding window metrics: 10%

Training on MS lesion segmentation task: 30%

Extension to other datasets: 20%

Results analysis: 20%

Fig. Results of an existing lesion filling algorithm, showing inadequate performance

Requirements:

Interest/Experience with image processing

Python programming knowledge (Pytorch bonus)

Interest in neuroimaging

Supervisor(s):

PD. Dr. Richard McKinley

Institutes: Diagnostic and Interventional Neuroradiology

Center for Artificial Intelligence in Medicine (CAIM), University of Bern

References: [1] Brain Imaging Generation with Latent Diffusion Models, Pinaya et al., accepted in the Deep Generative Models workshop @ MICCAI 2022, https://arxiv.org/abs/2209.07162

Contact: PD Dr Richard McKinley, Support Centre for Advanced Neuroimaging ( [email protected] )

Improving metrics and loss functions for targets with imbalanced size: sliding window Dice coefficient and loss.

Background The Dice coefficient is the most commonly used metric for segmentation quality in medical imaging, and a differentiable version of the coefficient is often used as a loss function, in particular for small target classes such as multiple sclerosis lesions. The Dice coefficient has the benefit that it is applicable in instances where the target class is in the minority (for example, when segmenting small lesions). However, if lesion sizes are mixed, the loss and metric are biased towards performance on large lesions, leading smaller lesions to be missed and harming overall lesion detection. A recently proposed loss function (blob loss [1]) aims to combat this by treating each connected component of a lesion mask separately, and claims improvements over Dice loss on lesion detection scores in a variety of tasks.

Aim: The aim of this thesis is twofold. First, to benchmark blob loss against a simple, potentially superior loss for instance detection: sliding window Dice loss, in which the Dice loss is calculated over a sliding window across the area/volume of the medical image. Second, we will investigate whether a sliding window Dice coefficient is better correlated with lesion-wise detection metrics than the Dice coefficient and may serve as an alternative metric capturing both global and instance-wise detection.
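The bias towards large lesions, and how a windowed score exposes it, can be illustrated with a small 2D sketch in Python with NumPy. The project text does not specify how the sliding window Dice is defined, so the choices here (non-overlapping windows, skipping windows with no foreground, averaging the window scores) are assumptions made purely for illustration.

```python
import numpy as np

def dice(pred, target, eps=1e-7):
    """Dice coefficient 2|A∩B| / (|A| + |B|) for two binary masks."""
    pred = pred.astype(float)
    target = target.astype(float)
    intersection = np.sum(pred * target)
    return (2.0 * intersection + eps) / (np.sum(pred) + np.sum(target) + eps)

def sliding_window_dice(pred, target, window=4, stride=4):
    """Mean Dice over windows containing predicted or true foreground."""
    scores = []
    height, width = target.shape
    for top in range(0, height - window + 1, stride):
        for left in range(0, width - window + 1, stride):
            p = pred[top:top + window, left:left + window]
            t = target[top:top + window, left:left + window]
            if p.any() or t.any():  # assumption: ignore empty windows
                scores.append(dice(p, t))
    return float(np.mean(scores)) if scores else 1.0

# One large lesion (16 pixels) and one small lesion (1 pixel).
target = np.zeros((8, 8), dtype=bool)
target[0:4, 0:4] = True
target[6, 6] = True

# The prediction finds the large lesion but misses the small one.
pred = np.zeros((8, 8), dtype=bool)
pred[0:4, 0:4] = True

global_score = dice(pred, target)
window_score = sliding_window_dice(pred, target)
```

Here the global Dice is 32/33, about 0.97, even though half of the lesions were missed, while the windowed score is about 0.5 because the window containing the missed lesion scores close to zero.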

Materials and Methods: A large, annotated, heterogeneous dataset of MRI data from MS patients will be available for benchmarking the method, as well as our existing codebases for MS lesion segmentation. Extension of the method to other diseases and datasets (such as covered in the blob loss paper) will make the method more plausible for publication. The student will work alongside clinicians and engineers carrying out research in multiple sclerosis lesion segmentation, in particular in the context of our running project supported by the CAIM grant.

Fig. An annotated MS lesion case, showing the variety of lesion sizes

References: [1] blob loss: instance imbalance aware loss functions for semantic segmentation, Kofler et al, https://arxiv.org/abs/2205.08209

Idempotent and partial skull-stripping in multispectral MRI imaging

Background Skull stripping (or brain extraction) refers to the masking of non-brain tissue from structural MRI imaging. Since 3D MRI sequences allow reconstruction of facial features, many data providers supply data only after skull-stripping, making this a vital tool in data sharing. Furthermore, skull-stripping is an important pre-processing step in many neuroimaging pipelines, even in the deep-learning era: while many methods could now operate on data with skull present, they have been trained only on skull-stripped data and therefore produce spurious results on data with the skull present.

High-quality skull-stripping algorithms based on deep learning are now widely available: the most prominent example is HD-BET [1]. A major downside of HD-BET is its behaviour on datasets to which skull-stripping has already been applied: in this case the algorithm falsely identifies brain tissue as skull and masks it. A skull-stripping algorithm F not exhibiting this behaviour would be idempotent: F(F(x)) = F(x) for any image x. Furthermore, legacy datasets from before the availability of high-quality skull-stripping algorithms may still contain images which have been inadequately skull-stripped: currently the only solution to improve the skull-stripping on this data is to go back to the original datasource or to manually correct the skull-stripping, which is time-consuming and prone to error.

Aim: In this project, the student will develop an idempotent skull-stripping network which can also handle partially skull-stripped inputs. In the best case, the network will operate well on a large subset of the data we work with (e.g. structural MRI, diffusion-weighted MRI, Perfusion-weighted MRI, susceptibility-weighted MRI, at a variety of field strengths) to maximize the future applicability of the network across the teams in our group.

Materials and Methods: Multiple datasets, both publicly available and internal (encompassing thousands of 3D volumes) will be available. Silver standard reference data for standard sequences at 1.5T and 3T can be generated using existing tools such as HD-BET: for other sequences and field strengths semi-supervised learning or methods improving robustness to domain shift may be employed. Robustness to partial skull-stripping may be induced by a combination of learning theory and model-based approaches.

Dataset curation: 10%

Idempotent skull-stripping model building: 30%

Modelling of partial skull-stripping: 10%

Extension of model to handle partial skull: 30%

Results analysis: 10%

Fig. An example of failed skull-stripping requiring manual correction

References: [1] Isensee, F, Schell, M, Pflueger, I, et al. Automated brain extraction of multisequence MRI using artificial neural networks. Hum Brain Mapp. 2019; 40: 4952–4964. https://doi.org/10.1002/hbm.24750

Automated leaf detection and leaf area estimation (for Arabidopsis thaliana)

Correlating plant phenotypes such as leaf area or number of leaves to the genotype (i.e. changes in DNA) is a common goal for plant breeders and molecular biologists. Such data can not only help to understand fundamental processes in nature, but also can help to improve ecotypes, e.g., to perform better under climate change, or reduce fertiliser input. However, collecting data for many plants is very time consuming and automated data acquisition is necessary.

The project aims at building a machine learning model to automatically detect plants in top-view images (see examples below), segment their leaves (see Fig C) and to estimate the leaf area. This information will then be used to determine the leaf area of different Arabidopsis ecotypes. The project will be carried out in collaboration with researchers of the Institute of Plant Sciences at the University of Bern. It will also involve the design and creation of a dataset of plant top-views with the corresponding annotation (provided by experts at the Institute of Plant Sciences).

Contact: Prof. Dr. Paolo Favaro ( [email protected] )

Master Projects at the ARTORG Center

The Gerontechnology and Rehabilitation group at the ARTORG Center for Biomedical Engineering is offering multiple MSc thesis projects to students who are interested in working with real patient data, artificial intelligence and machine learning algorithms. The goal of these projects is to transfer the findings to the clinic in order to solve today’s healthcare problems and thus to improve the quality of life of patients.

- Assessment of Digital Biomarkers at Home by Radar

- Comparison of Radar, Seismograph and Ballistocardiography to Monitor Sleep at Home

- Sentimental Analysis in Speech

Contact: Dr. Stephan Gerber ( [email protected] )

Internship in Computational Imaging at Prophesee

A 6-month internship at Prophesee, Grenoble is offered to a talented Master's student.

The topic of the internship is working on burst imaging following the work of Sam Hasinoff, and exploring ways to improve it using event-based vision.

A compensation to cover the expenses of living in Grenoble is offered. Only students that have legal rights to work in France can apply.

Anyone interested can send an email with the CV to Daniele Perrone ( [email protected] ).

Using machine learning applied to wearables to predict mental health

This Master’s project lies at the intersection of psychiatry and computer science and aims to use machine learning techniques to improve health. Using sensors to detect sleep and waking behavior has as yet unexplored potential to reveal insights into health. In this study, we make use of a watch-like device, called an actigraph, which tracks motion to quantify sleep behavior and waking activity. Participants in the study are healthy and depressed adolescents who wear actigraphs for a year, during which time we query their mental health status monthly using online questionnaires. For this Master’s thesis we aim to use machine learning methods to predict mental health based on the data from the actigraph. The ability to predict mental health crises based on sleep and wake behavior would provide an opportunity for intervention, significantly impacting the lives of patients and their families. This Master’s thesis is a collaboration between Professor Paolo Favaro at the Institute of Computer Science ( [email protected] ) and Dr Leila Tarokh at the Universitäre Psychiatrische Dienste (UPD) ( [email protected] ). We are looking for a highly motivated individual interested in bridging disciplines.

Bachelor or Master Projects at the ARTORG Center

The Gerontechnology and Rehabilitation group at the ARTORG Center for Biomedical Engineering is offering multiple BSc and MSc thesis projects to students who are interested in working with real patient data, artificial intelligence and machine learning algorithms. The goal of these projects is to transfer the findings to the clinic in order to solve today’s healthcare problems and thus to improve the quality of life of patients.

- Machine Learning Based Gait-Parameter Extraction by Using Simple Rangefinder Technology

- Detection of Motion in Video Recordings

- Home-Monitoring of Elderly by Radar

- Gait feature detection in Parkinson's Disease

- Development of an arthroscopic training device using virtual reality

Contact: Dr. Stephan Gerber ( [email protected] ), Michael Single ( [email protected] )

Dynamic Transformer

Level: bachelor.

Visual Transformers have obtained state-of-the-art classification accuracies [ViT, DeiT, T2T, BoTNet]. A mixture of experts can increase the capacity of a neural network by learning instance-dependent execution pathways in the network [MoE]. In this research project we aim to push transformers to their limit and combine their dynamic attention with MoEs. Compared to the Switch Transformer [Switch], we will use a much more efficient formulation of mixing [CondConv, DynamicConv], and we will apply this idea in the attention part of the transformer rather than in the fully connected layer.

- Input dependent attention kernel generation for better transformer layers.

Publication Opportunity: Dynamic Neural Networks Meets Computer Vision (a CVPR 2021 Workshop)

Extensions:

- The same idea could be extended to other ViT/Transformer based models [DETR, SETR, LSTR, TrackFormer, BERT]

Related Papers:

- Visual Transformers: Token-based Image Representation and Processing for Computer Vision [ViT]

- DeiT: Data-efficient Image Transformers [DeiT]

- Bottleneck Transformers for Visual Recognition [BoTNet]

- Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet [T2TViT]

- Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer [MoE]

- Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity [Switch]

- CondConv: Conditionally Parameterized Convolutions for Efficient Inference [CondConv]

- Dynamic Convolution: Attention over Convolution Kernels [DynamicConv]

- End-to-End Object Detection with Transformers [DETR]

- Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers [SETR]

- End-to-end Lane Shape Prediction with Transformers [LSTR]

- TrackFormer: Multi-Object Tracking with Transformers [TrackFormer]

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding [BERT]

Contact: Sepehr Sameni

Visual Transformers have obtained state-of-the-art classification accuracies for 2D images [ViT, DeiT, T2T, BoTNet]. In this project, we aim to extend the same ideas to 3D data (videos), which requires a more efficient attention mechanism [Performer, Axial, Linformer]. To accelerate the training process, we could use the [Multigrid] technique.

- Better video understanding by attention blocks.

Publication Opportunity: LOVEU (a CVPR workshop), Holistic Video Understanding (a CVPR workshop), ActivityNet (a CVPR workshop)

- Rethinking Attention with Performers [Performer]

- Axial Attention in Multidimensional Transformers [Axial]

- Linformer: Self-Attention with Linear Complexity [Linformer]

- A Multigrid Method for Efficiently Training Video Models [Multigrid]

GIRAFFE is a newly introduced GAN that can generate scenes via composition with minimal supervision [GIRAFFE]. Generative methods can implicitly learn interpretable representations, as can be seen in GAN image interpretations [GANSpace, GanLatentDiscovery]. Decoding GIRAFFE could give us per-object interpretable representations that could be used for scene manipulation, data augmentation, scene understanding, semantic segmentation, pose estimation [iNeRF], and more.

In order to invert a GIRAFFE model, we will first train the generative model on Clevr and CompCars datasets, then we add a decoder to the pipeline and train this autoencoder. We can make the task easier by knowing the number of objects in the scene and/or knowing their positions.

Goals:

Scene Manipulation and Decomposition by Inverting the GIRAFFE

Publication Opportunity: DynaVis 2021 (a CVPR workshop on Dynamic Scene Reconstruction)

Related Papers:

- GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields [GIRAFFE]

- Neural Scene Graphs for Dynamic Scenes

- pixelNeRF: Neural Radiance Fields from One or Few Images [pixelNeRF]

- NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis [NeRF]

- Neural Volume Rendering: NeRF And Beyond

- GANSpace: Discovering Interpretable GAN Controls [GANSpace]

- Unsupervised Discovery of Interpretable Directions in the GAN Latent Space [GanLatentDiscovery]

- Inverting Neural Radiance Fields for Pose Estimation [iNeRF]

Quantized ViT

Visual Transformers have obtained state of the art classification accuracies [ViT, CLIP, DeiT], but the best ViT models are extremely compute heavy and running them even only for inference (not doing backpropagation) is expensive. Running transformers cheaply by quantization is not a new problem and it has been tackled before for BERT [BERT] in NLP [Q-BERT, Q8BERT, TernaryBERT, BinaryBERT]. In this project we will be trying to quantize pretrained ViT models.
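To make the idea of quantization concrete, here is a minimal sketch of symmetric per-tensor 8-bit quantization of a weight matrix in Python with NumPy. It is a generic illustration of the basic mechanism, not the scheme used by any of the cited BERT methods, and the function names and the random weights are assumptions.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantisation of float weights to int8."""
    max_abs = np.max(np.abs(weights))
    # One scale for the whole tensor; the largest weight maps to +/-127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return q.astype(np.float32) * scale

# Stand-in for one pretrained weight matrix (random values for illustration).
rng = np.random.default_rng(0)
weights = rng.normal(size=(4, 4)).astype(np.float32)

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = np.max(np.abs(weights - restored))
```

Each weight is stored in one byte instead of four, and the rounding error per weight is bounded by half the scale; lower bit widths such as ternary or binary weights shrink the model further at the cost of larger error.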

Goals:

Quantizing ViT models for faster inference and smaller models without losing accuracy

Publication Opportunity: Binary Networks for Computer Vision 2021 (a CVPR workshop)

Extensions:

- A fast pipeline for image inference with ViT will allow us to dig deep into the attention of ViT and analyze it. We might be able to prune some attention heads or replace them with static patterns (like local convolution or dilated patterns). We might even be able to replace the transformer with a Performer and increase the throughput further [Performer].

- The same idea could be extended to other ViT based models [DETR, SETR, LSTR, TrackFormer, CPTR, BoTNet, T2TViT]

Related Papers:

- Learning Transferable Visual Models From Natural Language Supervision [CLIP]

- Visual Transformers: Token-based Image Representation and Processing for Computer Vision [ViT]

- DeiT: Data-efficient Image Transformers [DeiT]

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding [BERT]

- Q-BERT: Hessian Based Ultra Low Precision Quantization of BERT [Q-BERT]

- Q8BERT: Quantized 8Bit BERT [Q8BERT]

- TernaryBERT: Distillation-aware Ultra-low Bit BERT [TernaryBERT]

- BinaryBERT: Pushing the Limit of BERT Quantization [BinaryBERT]

- Rethinking Attention with Performers [Performer]

- End-to-End Object Detection with Transformers [DETR]

- Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers [SETR]

- End-to-end Lane Shape Prediction with Transformers [LSTR]

- TrackFormer: Multi-Object Tracking with Transformers [TrackFormer]

- CPTR: Full Transformer Network for Image Captioning [CPTR]

- Bottleneck Transformers for Visual Recognition [BoTNet]

- Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet [T2TViT]

Multimodal Contrastive Learning

Contrastive learning has recently gained a lot of attention for self-supervised image representation learning [SimCLR, MoCo]. It can be extended to multimodal data, such as videos (images and audio) [CMC, CoCLR]. Most contrastive methods require large batch sizes (or large memory pools), which makes them expensive to train. In this project we are going to use contrastive methods that do not depend on the batch size [SwAV, BYOL, SimSiam] to train multimodal representation extractors.
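To make the batch-size point concrete, here is a minimal sketch of a symmetric negative cosine similarity loss in the spirit of SimSiam, applied to one pair of embeddings from two modalities. It uses no negative examples, so the loss for a pair does not depend on what else is in the batch. The function names and the way the two modalities are paired are assumptions for illustration. In a real framework the targets would be wrapped in a stop-gradient, which plain Python cannot express.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cross_modal_loss(pred_a, target_b, pred_b, target_a):
    """Symmetric negative cosine similarity for one (modality A, modality B) pair.

    pred_*   : predictor outputs for each modality
    target_* : projections of the other modality, treated as constants
    """
    return -0.5 * (cosine_similarity(pred_a, target_b)
                   + cosine_similarity(pred_b, target_a))


if __name__ == "__main__":
    # Perfectly aligned embeddings give the minimum loss of -1.
    print(cross_modal_loss([1.0, 0.0], [2.0, 0.0], [0.0, 3.0], [0.0, 1.0]))
```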

Our main goal is to compare the proposed method with the CMC baseline, so we will be working with STL10, ImageNet, UCF101, HMDB51, and NYU Depth-V2 datasets.

Inspired by recent work on smaller datasets [ConVIRT, CPD], we could speed up training by starting with two pretrained single-modal models and finetuning them with the proposed method.

Goals:

- Extending SwAV to multimodal datasets

- Gaining a better understanding of BYOL

Publication Opportunity: MULA 2021 (a CVPR workshop on Multimodal Learning and Applications)

Extensions:

- Most knowledge distillation methods for contrastive learners also use large batch sizes (or memory pools) [CRD, SEED]. The proposed method could be extended to knowledge distillation.

- One could easily extend this idea to multiview learning. For example, one could have two different networks working on the same input and train them with contrastive learning. This may lead to better models [DeiT] through the exchange of inductive biases across models.

Related Papers:

- Self-supervised Co-training for Video Representation Learning [CoCLR]

- Learning Spatiotemporal Features via Video and Text Pair Discrimination [CPD]

- Audio-Visual Instance Discrimination with Cross-Modal Agreement [AVID-CMA]

- Self-Supervised Learning by Cross-Modal Audio-Video Clustering [XDC]

- Contrastive Multiview Coding [CMC]

- Contrastive Learning of Medical Visual Representations from Paired Images and Text [ConVIRT]

- A Simple Framework for Contrastive Learning of Visual Representations [SimCLR]

- Momentum Contrast for Unsupervised Visual Representation Learning [MoCo]

- Bootstrap your own latent: A new approach to self-supervised Learning [BYOL]

- Exploring Simple Siamese Representation Learning [SimSiam]

- Unsupervised Learning of Visual Features by Contrasting Cluster Assignments [SwAV]

- Contrastive Representation Distillation [CRD]

- SEED: Self-supervised Distillation For Visual Representation [SEED]

Robustness of Neural Networks

Neural Networks have been found to achieve surprising performance in several tasks such as classification, detection and segmentation. However, they are also very sensitive to small (controlled) changes to the input. It has been shown that some changes to an image that are not visible to the naked eye may lead the network to output an incorrect label. This thesis will focus on studying recent progress in this area and aim to build a procedure for a trained network to self-assess its reliability in classification or one of the popular computer vision tasks.
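The sensitivity described above can be reproduced on a tiny linear classifier. The sketch below uses the fast gradient sign method (FGSM), a well-known attack that this project description does not name. It is included only as one example of a small, controlled perturbation, and the weights and inputs are made up for illustration.

```python
def predict(w, b, x):
    """Linear classifier: returns +1 or -1."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score > 0 else -1


def sign(v):
    """Sign of a number as -1, 0 or +1."""
    return (v > 0) - (v < 0)


def fgsm(w, x, y, eps):
    """Shift every input coordinate by eps in the direction that raises the
    logistic loss of a linear classifier for the true label y (+1 or -1).

    For this model the input gradient of the loss has the sign of -y * w.
    """
    return [xi - eps * y * sign(wi) for xi, wi in zip(x, w)]


if __name__ == "__main__":
    w = [1.0, -1.0, 0.5, -0.5]
    x = [0.1, -0.1, 0.1, -0.1]
    x_adv = fgsm(w, x, y=1, eps=0.15)
    print(predict(w, 0.0, x), predict(w, 0.0, x_adv))
```

No coordinate moves by more than `eps`, yet the predicted label flips. In high-dimensional inputs such as images, a much smaller `eps` is typically enough, because many small per-pixel changes add up in the score.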

Contact: Paolo Favaro

Masters projects at sitem center

The Personalised Medicine Research Group at the sitem Center for Translational Medicine and Biomedical Entrepreneurship is offering multiple MSc thesis projects to biomedical engineering MSc students that may also be of interest to computer science students:

- Automated quantification of cartilage quality for hip treatment decision support

- Automated quantification of massive rotator cuff tears from MRI

- Deep learning-based segmentation and fat fraction analysis of the shoulder muscles using quantitative MRI

- Unsupervised Domain Adaption for Cross-Modality Hip Joint Segmentation

Contact: Dr. Kate Gerber

Internships/Master thesis @ Chronocam

3-6 month internships on event-based computer vision. Chronocam is a rapidly growing startup developing event-based technology, with more than 15 PhDs working on problems like tracking, detection, classification, SLAM, etc. Event-based computer vision has the potential to solve many long-standing problems in traditional computer vision, and this is a super exciting time, as this potential is becoming more and more tangible in many real-world applications. For next year we are looking for motivated Master and PhD students with good software engineering skills (C++ and/or Python) and preferably a good background in computer vision and deep learning. PhD internships will be more research focused and may lead to a publication. Each intern receives compensation to cover the expenses of living in Paris. Some of the topics we want to explore:

- Photo-realistic image synthesis and super-resolution from event-based data (PhD)

- Self-supervised representation learning (PhD)

- End-to-end Feature Learning for Event-based Data

- Bio-inspired Filtering using Spiking Networks

- On-the-fly Compression of Event-based Streams for Low-Power IoT Cameras

- Tracking of Multiple Objects with a Dual-Frequency Tracker

- Event-based Autofocus

- Stabilizing an Event-based Stream using an IMU

- Crowd Monitoring for Low-power IoT Cameras

- Road Extraction from an Event-based Camera Mounted in a Car for Autonomous Driving

- Sign detection from an Event-based Camera Mounted in a Car for Autonomous Driving

- High-frequency Eye Tracking

Email your CV to Daniele Perrone at [email protected].

Contact: Daniele Perrone

Object Detection in 3D Point Clouds

Today we have many 3D scanning techniques that allow us to capture the shape and appearance of objects. It is easier than ever to scan real 3D objects and transform them into a digital model for further processing, such as modeling, rendering or animation. However, the output of a 3D scanner is often a raw point cloud with little to no annotation. The unstructured nature of the point cloud representation makes further processing, e.g. surface reconstruction, difficult. One application is the detection and segmentation of an object of interest. In this project, the student is challenged to design a system that takes a point cloud (a 3D scan) as input and outputs the names of the objects contained in the scan. This output can then be used to eliminate outliers or points that belong to the background. The approach involves collecting a large dataset of 3D scans and training a neural network on it.

Contact: Adrian Wälchli

Shape Reconstruction from a Single RGB Image or Depth Map

A photograph accurately captures the world in a moment of time and from a specific perspective. Since it is a projection of the 3D space to a 2D image plane, the depth information is lost. Is it possible to restore it, given only a single photograph? In general, the answer is no. This problem is ill-posed, meaning that many different plausible depth maps exist, and there is no way of telling which one is the correct one. However, if we cover one of our eyes, we are still able to recognize objects and estimate how far away they are. This motivates the exploration of an approach where prior knowledge can be leveraged to reduce the ill-posedness of the problem. Such a prior could be learned by a deep neural network, trained with many images and depth maps.
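The loss of depth under projection can be seen with an idealized pinhole camera, sketched below. The camera model (focal length 1, no lens distortion, camera at the origin looking along the Z axis) is an assumption made for illustration.

```python
def project(point, focal_length=1.0):
    """Pinhole projection of a 3D point (X, Y, Z), Z > 0, to image coordinates."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


if __name__ == "__main__":
    near = (1.0, 2.0, 4.0)
    far = (2.0, 4.0, 8.0)  # same viewing ray, twice as far away
    print(project(near), project(far))
```

Both points land on the same pixel, so the image alone cannot tell them apart. Any point along the same viewing ray is an equally valid explanation, which is the ill-posedness a learned prior is meant to reduce.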

CNN Based Deblurring on Mobile

Deblurring has many applications in everyday life. It is particularly useful when taking pictures with handheld devices (e.g. smartphones), where camera shake can degrade important details. It is therefore desirable to have a good deblurring algorithm implemented directly on the device. In this project, the student will implement and optimize a state-of-the-art deblurring method based on a deep neural network for deployment on mobile phones (Android). The goal is to reduce the number of network weights in order to reduce the memory footprint while preserving the quality of the deblurred images. The result will be a camera app that automatically deblurs the pictures, giving the user a choice of keeping the original or the deblurred image.
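To get a feel for how much the weight count can shrink, the sketch below compares a standard convolution layer with a depthwise separable one. Depthwise separable convolution is just one common way to cut weights and is an assumption here. The project description does not say which technique would be used, and the layer sizes are made up.

```python
def conv_params(c_in, c_out, k):
    """Weights plus biases of a standard k x k convolution layer."""
    return c_in * c_out * k * k + c_out


def separable_conv_params(c_in, c_out, k):
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = c_in * k * k + c_in
    pointwise = c_in * c_out + c_out
    return depthwise + pointwise


if __name__ == "__main__":
    standard = conv_params(64, 64, 3)             # 36928 parameters
    separable = separable_conv_params(64, 64, 3)  # 4800 parameters
    print(standard, separable, standard / separable)
    print("float32 bytes:", 4 * standard, "vs", 4 * separable)
```

For this hypothetical 64-channel layer the parameter count drops by a factor of about 7.7. Whether the deblurring quality survives such a change would have to be measured.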

Depth from Blur

If an object in front of the camera or the camera itself moves while the shutter is open, the region of motion becomes blurred because the incoming light is accumulated at different positions across the sensor. If there is camera motion, there is also parallax. Thus, a motion-blurred image contains depth information. In this project, the student will tackle the problem of recovering a depth map from a motion-blurred image. This includes the collection of a large dataset of blurred and sharp images or videos using a pair or triplet of GoPro action cameras. Two cameras will be used in stereo to estimate the depth map, and the third captures the blurred frames. This data is then used to train a convolutional neural network that will predict the depth map from the blurry image.
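Both the stereo ground truth and the depth cue in the blur follow from the same pinhole geometry, which the sketch below spells out. It assumes rectified cameras, a static scene, and a purely sideways camera translation during the exposure. The numbers and function names are illustrative only.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters of a point seen by two rectified cameras.

    disparity_px : horizontal shift of the point between the two images
    focal_px     : focal length in pixels
    baseline_m   : distance between the two camera centers
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def blur_length_px(depth_m, focal_px, translation_m):
    """Length of the blur streak left by a static point when the camera
    translates sideways by translation_m during the exposure."""
    return focal_px * translation_m / depth_m


if __name__ == "__main__":
    depth = depth_from_disparity(20.0, 1000.0, 0.1)
    print(depth, blur_length_px(depth, 1000.0, 0.01))
```

Under these assumptions the blur length is inversely proportional to depth, so nearby points smear more than distant ones. That relation is what a network could exploit to predict depth from a single blurry frame.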

Unsupervised Clustering Based on Pretext Tasks

The idea of this project is that we have two types of neural networks that work together: one network A assigns images to k clusters, and k (simple) networks of type B each perform a self-supervised task on one of those clusters. The goal of all the networks is to make the k networks of type B perform well on the task. The assumption is that clustering into semantically similar groups will help the networks of type B to perform well. This could be done on the MNIST dataset with B being linear classifiers and the task being rotation prediction.
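The rotation prediction task mentioned above needs no manual labels, since the labels come from the rotations themselves. A minimal sketch of generating such training pairs from one image (represented as a list of rows) follows. The function names are hypothetical, and the clustering network A is left out.

```python
def rotate90(image):
    """Rotate a 2D list-of-lists image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def rotation_pretext_samples(image):
    """Return four (rotated_image, label) pairs for one image.

    Label k means the image was rotated clockwise by k * 90 degrees.
    """
    samples = []
    current = [list(row) for row in image]
    for label in range(4):
        samples.append((current, label))
        current = rotate90(current)
    return samples


if __name__ == "__main__":
    for rotated, label in rotation_pretext_samples([[1, 2], [3, 4]]):
        print(label, rotated)
```

Each network of type B would then be trained to predict the label from the rotated image, using only the images assigned to its cluster.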

Adversarial Data-Augmentation

The student designs a data augmentation network that transforms training images in such a way that image realism is preserved (e.g. with a constrained spatial transformer network) and the transformed images are more difficult to classify (trained via adversarial loss against an image classifier). The model will be evaluated for different data settings (especially in the low data regime), for example on the MNIST and CIFAR datasets.
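The core idea, picking a transformation from a constrained set that makes the classifier's job harder, can be sketched without any learning. The example below replaces the learned augmentation network with an exhaustive search over small circular shifts of a 1D signal and uses a fixed linear classifier. Both are simplifying assumptions made only for illustration.

```python
import math


def logistic_loss(w, b, x, y):
    """Logistic loss of a linear classifier for label y in {+1, -1}."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return math.log(1.0 + math.exp(-y * score))


def shift(signal, offset):
    """Circularly shift a 1D signal to the right by `offset` positions."""
    n = len(signal)
    return [signal[(i - offset) % n] for i in range(n)]


def hardest_shift(w, b, x, y, max_shift=1):
    """Among shifts in [-max_shift, max_shift], pick the one with the highest loss.

    The bound on the shift plays the role of the realism constraint.
    """
    candidates = range(-max_shift, max_shift + 1)
    best = max(candidates, key=lambda s: logistic_loss(w, b, shift(x, s), y))
    return best, shift(x, best)


if __name__ == "__main__":
    w = [1.0, 0.0, -1.0, 0.0, 0.0]
    x = [1.0, 0.0, 0.0, 0.0, 0.0]
    print(hardest_shift(w, 0.0, x, y=1, max_shift=2))
```

In the project itself the search would be replaced by a network trained with an adversarial loss, and the classifier would be trained on the resulting harder examples.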

Unsupervised Learning of Lip-reading from Videos

People with sensory impairment (hearing, speech, vision) depend heavily on assistive technologies to communicate and navigate in everyday life. The mass production of media content today makes it impossible to manually translate everything into a common language for assistive technologies, e.g. captions or sign language. In this project, the student employs a neural network to learn a representation for lip-movement in videos in an unsupervised fashion, possibly with an encoder-decoder structure where the decoder reconstructs the audio signal. This requires collecting a large dataset of videos (e.g. from YouTube) of speakers or conversations where lip movement is visible. The outcome will be a neural network that learns an audio-visual representation of lip movement in videos, which can then be leveraged to generate captions for hearing impaired persons.

Learning to Generate Topographic Maps from Satellite Images