- Library databases

- Library website

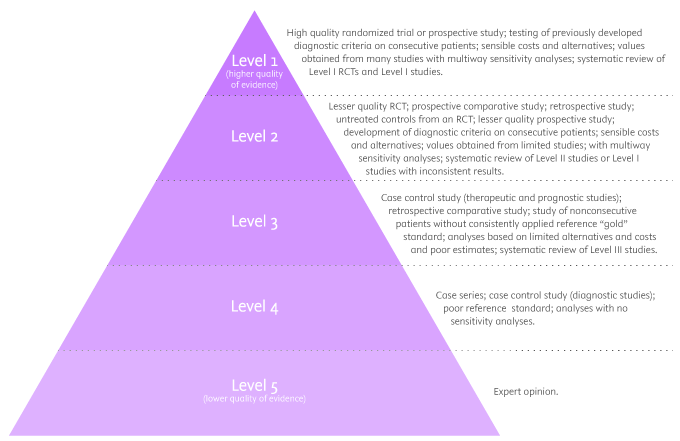

Evidence-Based Research: Levels of Evidence Pyramid

Introduction

One way to organize the different types of evidence involved in evidence-based practice research is the levels of evidence pyramid. The pyramid includes a variety of evidence types and levels.

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

- randomized controlled trials

- cohort studies

- case-controlled studies, case series, and case reports

- Background information, expert opinion

Levels of evidence pyramid

The levels of evidence pyramid provides a way to visualize both the quality of evidence and the amount of evidence available. For example, systematic reviews are at the top of the pyramid, meaning they are both the highest level of evidence and the least common. As you go down the pyramid, the amount of evidence will increase as the quality of the evidence decreases.
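As a rough illustration, the pyramid's ordering can be written down as a ranked list. This is only a sketch: it assumes the top-to-bottom order in which this guide lists the evidence types, and the names `PYRAMID` and `higher_level` are hypothetical, chosen for this example.

```python
# Evidence types from the top of the pyramid (index 0) to the base,
# in the order this guide lists them. Illustrative only.
PYRAMID = [
    "systematic reviews",
    "critically-appraised topics",
    "critically-appraised individual articles",
    "randomized controlled trials",
    "cohort studies",
    "case-controlled studies, case series, and case reports",
    "background information, expert opinion",
]


def higher_level(type_a: str, type_b: str) -> str:
    """Return whichever of the two evidence types sits higher on the pyramid."""
    # A smaller index means a higher position on the pyramid.
    return min(type_a, type_b, key=PYRAMID.index)


print(higher_level("cohort studies", "systematic reviews"))
# prints: systematic reviews
```

A position in this list says nothing about how much evidence exists at that level; per the text, the amount of available evidence generally grows toward the base.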


EBM Pyramid and EBM Page Generator, copyright 2006 Trustees of Dartmouth College and Yale University. All Rights Reserved. Produced by Jan Glover, David Izzo, Karen Odato and Lei Wang.

Filtered Resources

Filtered resources appraise the quality of studies and often make recommendations for practice. The main types of filtered resources in evidence-based practice are:

- Systematic reviews

- Critically-appraised topics

- Critically-appraised individual articles

Scroll down the page to the Systematic reviews, Critically-appraised topics, and Critically-appraised individual articles sections for links to resources where you can find each of these types of filtered information.

Systematic reviews

Authors of a systematic review ask a specific clinical question, perform a comprehensive literature review, eliminate the poorly done studies, and attempt to make practice recommendations based on the well-done studies. Systematic reviews include only experimental, or quantitative, studies, and often include only randomized controlled trials.

You can find systematic reviews in these filtered databases:

- Cochrane Database of Systematic Reviews: Cochrane systematic reviews are considered the gold standard for systematic reviews. This database contains both systematic reviews and review protocols. To find only systematic reviews, select Cochrane Reviews in the Document Type box.

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database): This database includes systematic reviews, evidence summaries, and best practice information sheets. To find only systematic reviews, click on Limits and then select Systematic Reviews in the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

You can also find systematic reviews in this unfiltered database:

To learn more about finding systematic reviews, please see our guide:

- Filtered Resources: Systematic Reviews

Critically-appraised topics

Authors of critically-appraised topics evaluate and synthesize multiple research studies. Critically-appraised topics are like short systematic reviews focused on a particular topic.

You can find critically-appraised topics in these resources:

- Annual Reviews: This collection offers comprehensive, timely collections of critical reviews written by leading scientists. To find reviews on your topic, use the search box in the upper-right corner.

- Guideline Central: This free database offers quick-reference guideline summaries. It is organized by a non-profit initiative that aims to fill the gap left by the sudden closure of AHRQ’s National Guideline Clearinghouse (NGC).

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database): To find critically-appraised topics in JBI, click on Limits and then select Evidence Summaries from the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

- National Institute for Health and Care Excellence (NICE): Evidence-based recommendations for health and care in England.

- Filtered Resources: Critically-Appraised Topics

Critically-appraised individual articles

Authors of critically-appraised individual articles evaluate and synopsize individual research studies.

You can find critically-appraised individual articles in these resources:

- EvidenceAlerts: Quality articles from over 120 clinical journals are selected by research staff and then rated for clinical relevance and interest by an international group of physicians. Note: You must create a free account to search EvidenceAlerts.

- ACP Journal Club: This journal publishes reviews of research on the care of adults and adolescents. You can either browse this journal or use the Search within this publication feature.

- Evidence-Based Nursing: This journal reviews research studies that are relevant to best nursing practice. You can either browse individual issues or use the search box in the upper-right corner.

To learn more about finding critically-appraised individual articles, please see our guide:

- Filtered Resources: Critically-Appraised Individual Articles

Unfiltered resources

You may not always be able to find information on your topic in the filtered literature. When this happens, you'll need to search the primary or unfiltered literature. Keep in mind that with unfiltered resources, you take on the role of reviewing what you find to make sure it is valid and reliable.

Note: You can also find systematic reviews and other filtered resources in these unfiltered databases.

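The Levels of Evidence Pyramid includes unfiltered study types in this order of evidence from higher to lower:

- Randomized controlled trials

- Cohort studies

- Case-controlled studies, case series, and case reports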

You can search for each of these types of evidence in the following databases:

TRIP database

Background information & expert opinion

Background information and expert opinions are not necessarily backed by research studies. They include point-of-care resources, textbooks, conference proceedings, etc.

- Family Physicians Inquiries Network: Clinical Inquiries. Provides the ideal answers to clinical questions using a structured search, critical appraisal, authoritative recommendations, clinical perspective, and rigorous peer review. Clinical Inquiries deliver best evidence for point-of-care use.

- Harrison, T. R., & Fauci, A. S. (2009). Harrison's Manual of Medicine. New York: McGraw-Hill Professional. Contains the clinical portions of Harrison's Principles of Internal Medicine.

- Lippincott manual of nursing practice (8th ed.). (2006). Philadelphia, PA: Lippincott Williams & Wilkins. Provides background information on clinical nursing practice.

- Medscape: Drugs & Diseases. An open-access, point-of-care medical reference that includes clinical information from top physicians and pharmacists in the United States and worldwide.

- Virginia Henderson Global Nursing e-Repository: An open-access repository that contains works by nurses and is sponsored by Sigma Theta Tau International, the Honor Society of Nursing. Note: This resource contains both expert opinion and evidence-based practice articles.


Walden University is a member of Adtalem Global Education, Inc. www.adtalem.com Walden University is certified to operate by SCHEV © 2024 Walden University LLC. All rights reserved.

Systematic Reviews


The evidence pyramid is often used to illustrate the development of evidence. At the base of the pyramid is animal research and laboratory studies – this is where ideas are first developed. As you progress up the pyramid the amount of information available decreases in volume, but increases in relevance to the clinical setting.

Meta Analysis – systematic review that uses quantitative methods to synthesize and summarize the results.

Systematic Review – summary of the medical literature that uses explicit methods to perform a comprehensive literature search and critical appraisal of individual studies and that uses appropriate statistical techniques to combine these valid studies.

Randomized Controlled Trial – Participants are randomly allocated into an experimental group or a control group and followed over time for the variables/outcomes of interest.

Cohort Study – Involves identification of two groups (cohorts) of patients, one which received the exposure of interest, and one which did not, and following these cohorts forward for the outcome of interest.

Case Control Study – study which involves identifying patients who have the outcome of interest (cases) and patients without the same outcome (controls), and looking back to see if they had the exposure of interest.

Case Series – report on a series of patients with an outcome of interest. No control group is involved.

- Levels of Evidence from The Centre for Evidence-Based Medicine

- The JBI Model of Evidence Based Healthcare

- How to Use the Evidence: Assessment and Application of Scientific Evidence. From the National Health and Medical Research Council (NHMRC) of Australia. Book must be downloaded; not available to read online.

When searching for evidence to answer clinical questions, aim to identify the highest level of available evidence. Evidence hierarchies can help you strategically identify which resources to use for finding evidence, as well as which search results are most likely to be "best".

Image source: Evidence-Based Practice: Study Design from Duke University Medical Center Library & Archives. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

The hierarchy of evidence (also known as the evidence-based pyramid) is depicted as a triangular representation of the levels of evidence with the strongest evidence at the top which progresses down through evidence with decreasing strength. At the top of the pyramid are research syntheses, such as Meta-Analyses and Systematic Reviews, the strongest forms of evidence. Below research syntheses are primary research studies progressing from experimental studies, such as Randomized Controlled Trials, to observational studies, such as Cohort Studies, Case-Control Studies, Cross-Sectional Studies, Case Series, and Case Reports. Non-Human Animal Studies and Laboratory Studies occupy the lowest level of evidence at the base of the pyramid.

- Finding Evidence-Based Answers to Clinical Questions – Quickly & Effectively: A tip sheet from the health sciences librarians at UC Davis Libraries to help you get started with selecting resources for finding evidence, based on type of question.


- Last Updated: Apr 8, 2024 3:33 PM

- URL: https://guides.library.ucdavis.edu/systematic-reviews

MSU Libraries


Nursing Literature and Other Types of Reviews


Levels of Evidence


Levels of evidence (sometimes called hierarchy of evidence) are assigned to studies based on the methodological quality of their design, validity, and applicability to patient care. These decisions give the grade (or strength) of recommendation. A lower position on the pyramid doesn't mean that the study itself is lower-quality; it means that the methods used may not be as clinically rigorous as those at higher levels of the pyramid. In nursing, the system for assigning levels of evidence is often taken from Melnyk & Fineout-Overholt's 2011 book, Evidence-based Practice in Nursing and Healthcare: A Guide to Best Practice. The Levels of Evidence below are adapted from Melnyk & Fineout-Overholt's (2011) model.

Melnyk & Fineout-Overholt (2011)

- Meta-Analysis: A systematic review that uses quantitative methods to summarize the results. (Level 1)

- Systematic Review: A comprehensive review that authors have systematically searched for, appraised, and summarized all of the medical literature for a specific topic (Level 1)

- Randomized Controlled Trials: RCTs include a randomized group of patients in an experimental group and a control group. These groups are followed up for the variables/outcomes of interest. Examples of RCTs are clinical trials that compare the effects of drugs, surgical techniques, medical devices, diagnostic procedures, diets or other medical treatments. (Can be Level 2 or Level 4, depending on how expansive the study is.)

- Non-Randomized Controlled Trials: A clinical trial in which the participants are not assigned by chance to different treatment groups. Participants may choose which group they want to be in, or they may be assigned to the groups by the researchers.

- Cohort Study: Identifies two groups (cohorts) of patients, one which received the exposure of interest and one which did not, and follows these cohorts forward for the outcome of interest. (Level 5)

- Case-Control Study: Involves identifying patients who have the outcome of interest (cases) and control patients without the same outcome, and looking to see if they had the exposure of interest.

- Background Information/Expert Opinion: Handbooks, encyclopedias, and textbooks often provide a good foundation or introduction and often include generalized information about a condition. While background information presents a convenient summary, often it takes about three years for this type of literature to be published. (Level 7)


- Last Updated: Mar 27, 2024 3:56 PM

- URL: https://libguides.lib.msu.edu/nursinglitreview


Levels of evidence in research


Level of evidence hierarchy

When carrying out a project, you might have noticed that, while searching for information, there seem to be different levels of credibility given to different types of scientific results. For example, using a systematic review as the basis for an argument is not the same as using an expert opinion. It’s almost common sense that the former will demonstrate more accurate results than the latter, which ultimately derives from a personal opinion.

In the medical and health care area, for example, it is very important that professionals not only have access to information but also have instruments to determine which evidence is stronger and more trustworthy, building up the confidence to diagnose and treat their patients.

5 levels of evidence

With the increasing need for physicians, as well as scientists in different fields of study, to know which kind of research offers the best clinical evidence, experts decided to rank this evidence to help them identify the best sources of information to answer their questions. The criteria for ranking evidence are based on the design, methodology, validity and applicability of the different types of studies. The outcome is called “levels of evidence” or “levels of evidence hierarchy”. By organizing a well-defined hierarchy of evidence, academic experts aimed to help scientists feel confident in using findings from high-ranked evidence in their own work or practice. For physicians, whose daily activity depends on available clinical evidence to support decision-making, this helps them know which evidence to trust the most.

So, by now you know that research can be graded according to the evidential strength determined by different study designs. But how many grades are there? Which evidence should be high-ranked and low-ranked?

There are five levels of evidence in the hierarchy of evidence, with 1 (or in some cases A) denoting strong, high-quality evidence and 5 (or E) denoting evidence whose effectiveness is not established, as you can see in the pyramidal scheme below:

Level 1: (higher quality of evidence) – High-quality randomized trial or prospective study; testing of previously developed diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from many studies with multiway sensitivity analyses; systematic review of Level I RCTs and Level I studies.

Level 2: Lesser quality RCT; prospective comparative study; retrospective study; untreated controls from an RCT; lesser quality prospective study; development of diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from limited studies; with multiway sensitivity analyses; systematic review of Level II studies or Level I studies with inconsistent results.

Level 3: Case-control study (therapeutic and prognostic studies); retrospective comparative study; study of nonconsecutive patients without consistently applied reference “gold” standard; analyses based on limited alternatives and costs and poor estimates; systematic review of Level III studies.

Level 4: Case series; case-control study (diagnostic studies); poor reference standard; analyses with no sensitivity analyses.

Level 5: (lower quality of evidence) – Expert opinion.

By looking at the pyramid, you can roughly distinguish what type of research gives you the highest quality of evidence and which gives you the lowest. Basically, level 1 and level 2 are filtered information – that means an author has gathered evidence from well-designed studies, with credible results, and has produced findings and conclusions appraised by renowned experts, who consider them valid and strong enough to serve researchers and scientists. Levels 3, 4 and 5 include evidence coming from unfiltered information. Because this evidence hasn’t been appraised by experts, it might be questionable, but not necessarily false or wrong.

Examples of levels of evidence

As you move up the pyramid, you will surely find higher-quality evidence. However, you will notice there is also less research available. So, if there are no resources for you available at the top, you may have to start moving down in order to find the answers you are looking for.

- Systematic Reviews: Exhaustive summaries of all the existent literature about a certain topic. When drafting a systematic review, authors are expected to deliver a critical assessment and evaluation of all this literature rather than a simple list. Researchers that produce systematic reviews have their own criteria to locate, assemble and evaluate a body of literature.

- Meta-Analysis: Uses quantitative methods to synthesize a combination of results from independent studies. Normally, they function as an overview of clinical trials. Read more: Systematic review vs meta-analysis.

- Critically Appraised Topic: Evaluation of several research studies.

- Critically Appraised Article: Evaluation of individual research studies.

- Randomized Controlled Trial: a clinical trial in which participants or subjects (people that agree to participate in the trial) are randomly divided into groups. Placebo (control) is given to one of the groups whereas the other is treated with medication. This kind of research is key to learning about a treatment’s effectiveness.

- Cohort studies: A longitudinal study design, in which one or more samples called cohorts (individuals sharing a defining characteristic, like a disease) are exposed to an event and monitored prospectively and evaluated in predefined time intervals. They are commonly used to correlate diseases with risk factors and health outcomes.

- Case-Control Study: Selects patients with an outcome of interest (cases) and looks for an exposure factor of interest.

- Background Information/Expert Opinion: Information you can find in encyclopedias, textbooks and handbooks. This kind of evidence just serves as a good foundation for further research – or clinical practice – for it is usually too generalized.

Of course, it is recommended to use level A and/or 1 evidence for more accurate results but that doesn’t mean that all other study designs are unhelpful or useless. It all depends on your research question. Focusing once more on the healthcare and medical field, see how different study designs fit into particular questions, that are not necessarily located at the tip of the pyramid:

- Questions concerning therapy: “Which is the most efficient treatment for my patient?” >> RCT | Cohort studies | Case-Control | Case Studies

- Questions concerning diagnosis: “Which diagnostic method should I use?” >> Prospective blind comparison

- Questions concerning prognosis: “How will the patient’s disease develop over time?” >> Cohort Studies | Case Studies

- Questions concerning etiology: “What are the causes for this disease?” >> RCT | Cohort Studies | Case Studies

- Questions concerning costs: “What is the most cost-effective but safe option for my patient?” >> Economic evaluation

- Questions concerning meaning/quality of life: “What’s the quality of life of my patient going to be like?” >> Qualitative study
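The pairing of question types with study designs in the list above can be sketched as a simple lookup table. This is an illustrative example only: the names `DESIGNS_BY_QUESTION` and `suggested_designs` are hypothetical, and the design labels are singular forms of those in the list, kept in the same order.

```python
# Question types and the study designs the list above pairs with them.
# Illustrative lookup only; the order follows the list.
DESIGNS_BY_QUESTION = {
    "therapy": ["RCT", "Cohort study", "Case-control study", "Case study"],
    "diagnosis": ["Prospective blind comparison"],
    "prognosis": ["Cohort study", "Case study"],
    "etiology": ["RCT", "Cohort study", "Case study"],
    "costs": ["Economic evaluation"],
    "meaning/quality of life": ["Qualitative study"],
}


def suggested_designs(question_type: str) -> list[str]:
    """Look up the study designs for a question type (case-insensitive)."""
    key = question_type.strip().lower()
    if key not in DESIGNS_BY_QUESTION:
        raise KeyError(f"Unknown question type: {question_type!r}")
    return DESIGNS_BY_QUESTION[key]


print(suggested_designs("Prognosis"))
```

A table like this only narrows down which study designs to look for; each study found still has to be appraised on its own merits.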


AACN Levels of Evidence


Level A — Meta-analysis of quantitative studies or metasynthesis of qualitative studies with results that consistently support a specific action, intervention, or treatment (including systematic review of randomized controlled trials).

Level B — Well-designed, controlled studies with results that consistently support a specific action, intervention, or treatment.

Level C — Qualitative studies, descriptive or correlational studies, integrative review, systematic review, or randomized controlled trials with inconsistent results.

Level D — Peer-reviewed professional and organizational standards with the support of clinical study recommendations.

Level E — Multiple case reports, theory-based evidence from expert opinions, or peer-reviewed professional organizational standards without clinical studies to support recommendations.

Level M — Manufacturer’s recommendations only.

(Excerpts from Peterson et al. Choosing the Best Evidence to Guide Clinical Practice: Application of AACN Levels of Evidence. Critical Care Nurse. 2014;34[2]:58-68.)

What is the purpose of levels of evidence (LOEs)?

“The amount and availability of research supporting evidence-based practice can be both useful and overwhelming for critical care clinicians. Therefore, clinicians must critically evaluate research before attempting to put the findings into practice. Evaluation of research generally occurs on 2 levels: rating or grading the evidence by using a formal level-of-evidence system and individually critiquing the quality of the study. Determining the level of evidence is a key component of appraising the evidence.1-3 Levels or hierarchies of evidence are used to evaluate and grade evidence. The purpose of determining the level of evidence and then critiquing the study is to ensure that the evidence is credible (eg, reliable and valid) and appropriate for inclusion into practice.3 Critique questions and checklists are available in most nursing research and evidence-based practice texts to use as a starting point in evaluation.”

How are LOEs determined?

“The most common method used to classify or determine the level of evidence is to rate the evidence according to the methodological rigor or design of the research study.3,4 The rigor of a study refers to the strict precision or exactness of the design. In general, findings from experimental research are considered stronger than findings from nonexperimental studies, and similar findings from more than 1 study are considered stronger than results of single studies. Systematic reviews of randomized controlled trials are considered the highest level of evidence, despite the inability to provide answers to all questions in clinical practice.”4,5

Who developed the AACN LOEs?

“As interest in promoting evidence-based practice has grown, many professional organizations have adopted criteria to evaluate evidence and develop evidence-based guidelines for their members.”1,5 Originally developed in 1995, AACN’s rating scale was updated in 2008 and 2014 by the Evidence-Based Practice Resources Workgroup (EBPRWG). The 2011-2013 EBPRWG continued the tradition of previous workgroups to move research to the patient bedside.

What are the AACN LOEs and how are they used?

The AACN levels of evidence are structured in an alphabetical hierarchy in which the highest form of evidence is ranked as A and includes meta-analyses and meta-syntheses of the results of controlled trials. Evidence from controlled trials is rated B. Level C, the highest level for nonexperimental studies, includes systematic reviews of qualitative, descriptive, or correlational studies. “Levels A, B, and C are all based on research (either experimental or nonexperimental designs) and are considered evidence. Levels D, E, and M are considered recommendations drawn from articles, theory, or manufacturers’ recommendations.”

“Clinicians must critically evaluate research before attempting to implement the findings into practice. The clinical relevance of any research must be evaluated as appropriate for inclusion into practice.”
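The split between research-based evidence (Levels A, B, and C) and recommendations (Levels D, E, and M) described above can be sketched as a small helper. This is a minimal illustration, not part of the AACN system itself; the function name `aacn_category` and the set names are hypothetical.

```python
# AACN level letters grouped as described in the text: A, B, and C are
# based on research; D, E, and M are recommendations.
EVIDENCE_LEVELS = {"A", "B", "C"}
RECOMMENDATION_LEVELS = {"D", "E", "M"}


def aacn_category(level: str) -> str:
    """Return 'evidence' or 'recommendation' for an AACN level letter."""
    code = level.strip().upper()
    if code in EVIDENCE_LEVELS:
        return "evidence"
    if code in RECOMMENDATION_LEVELS:
        return "recommendation"
    raise ValueError(f"Not an AACN level: {level!r}")


print(aacn_category("b"))  # prints: evidence
print(aacn_category("M"))  # prints: recommendation
```

The category alone does not settle whether a finding belongs in practice; as the excerpt stresses, each study still needs to be critiqued individually.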

1. Polit DF, Beck CT. Resource Manual for Nursing Research: Generating and Assessing Evidence for Nursing Practice. 9th ed. Philadelphia, PA: Williams & Wilkins; 2012.

2. Armola RR, Bourgault AM, Halm MA, et al; 2008-2009 Evidence-Based Practice Resource Work Group of the American Association of Critical-Care Nurses. Upgrading the American Association of Critical-Care Nurses’ evidence-leveling hierarchy. Am J Crit Care. 2009;18(5):405-409.

3. Melnyk BM, Fineout-Overholt E. Evidence-Based Practice in Nursing and Healthcare: A Guide to Best Practice. 2nd ed. Philadelphia, PA: Lippincott Williams & Wilkins; 2011.

4. Gugiu PC, Gugiu MR. A critical appraisal of standard guidelines for grading levels of evidence. Eval Health Prof. 2010;33(3):233-255. doi:10.1177/0163278710373980.

5. Evans D. Hierarchy of evidence: a framework for ranking evidence evaluating healthcare interventions. J Clin Nurs. 2003;12(1):77-84.


Levels of Evidence


Resources That Rate The Evidence

- ACP Smart Medicine

- Agency for Healthcare Research and Quality

- Clinical Evidence

- Cochrane Library

- Health Services/Technology Assessment Texts (HSTAT)

- PDQ® Cancer Information Summaries from NCI

- Trip Database

Critically Appraised Individual Articles

- Evidence-Based Complementary and Alternative Medicine

- Evidence-Based Dentistry

- Evidence-Based Nursing

- Journal of Evidence-Based Dental Practice

Grades of Recommendation

Critically-appraised individual articles and synopses include:

Filtered evidence:

- Level I: Evidence from a systematic review of all relevant randomized controlled trials.

- Level II: Evidence from a meta-analysis of all relevant randomized controlled trials.

- Level III: Evidence from evidence summaries developed from systematic reviews

- Level IV: Evidence from guidelines developed from systematic reviews

- Level V: Evidence from meta-syntheses of a group of descriptive or qualitative studies

- Level VI: Evidence from evidence summaries of individual studies

- Level VII: Evidence from one properly designed randomized controlled trial

Unfiltered evidence:

- Level VIII: Evidence from nonrandomized controlled clinical trials, nonrandomized clinical trials, cohort studies, case series, case reports, and individual qualitative studies.

- Level IX: Evidence from opinion of authorities and/or reports of expert committee

Two things to remember:

1. Studies in which randomization occurs represent a higher level of evidence than those in which subject selection is not random.

2. Controlled studies carry a higher level of evidence than those in which control groups are not used.
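The two rules above can be sketched as a comparison between study designs. This is a hedged illustration: the `Study` class and `outranks` function are hypothetical names. Because the text does not say how to weigh randomization against the use of a control group, the sketch treats one study as outranking another only when it is at least as strong on both rules and stronger on at least one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    """A study described only by the two properties named in the rules."""
    name: str
    randomized: bool
    controlled: bool


def outranks(a: Study, b: Study) -> bool:
    """True if `a` is no weaker than `b` on both rules and stronger on one."""
    # Booleans compare as integers in Python, so True > False.
    no_weaker = a.randomized >= b.randomized and a.controlled >= b.controlled
    stronger = a.randomized > b.randomized or a.controlled > b.controlled
    return no_weaker and stronger


rct = Study("randomized controlled trial", randomized=True, controlled=True)
nonrandomized = Study("nonrandomized controlled trial", randomized=False, controlled=True)
case_series = Study("case series", randomized=False, controlled=False)

print(outranks(rct, nonrandomized))          # prints: True
print(outranks(nonrandomized, case_series))  # prints: True
print(outranks(case_series, rct))            # prints: False
```

Two studies that each win on a different rule are left unranked relative to each other, since the source gives no basis for choosing between them.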

Strength of Recommendation Taxonomy (SORT)

- SORT: The American Academy of Family Physicians uses the Strength of Recommendation Taxonomy (SORT) to label key recommendations in clinical review articles. In general, only key recommendations are given a Strength-of-Recommendation grade. Grades are assigned on the basis of the quality and consistency of available evidence.


- Last Updated: Jan 25, 2024 4:15 PM

- URL: https://guides.library.stonybrook.edu/evidence-based-medicine


Except where otherwise noted, this work by SBU Libraries is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License .


- Volume 21, Issue 4

- New evidence pyramid


- M Hassan Murad

- Mouaz Alsawas

- Fares Alahdab (http://orcid.org/0000-0001-5481-696X)

- Rochester, Minnesota, USA

- Correspondence to : Dr M Hassan Murad, Evidence-based Practice Center, Mayo Clinic, Rochester, MN 55905, USA; murad.mohammad{at}mayo.edu

https://doi.org/10.1136/ebmed-2016-110401


The first and earliest principle of evidence-based medicine indicated that a hierarchy of evidence exists. Not all evidence is the same. This principle became well known in the early 1990s as practising physicians learnt basic clinical epidemiology skills and started to appraise and apply evidence to their practice. Since evidence was described as a hierarchy, a compelling rationale for a pyramid was made. Evidence-based healthcare practitioners became familiar with this pyramid when reading the literature, applying evidence or teaching students.

Various versions of the evidence pyramid have been described, but all of them focused on showing weaker study designs at the bottom (basic science and case series), followed by case–control and cohort studies in the middle, then randomised controlled trials (RCTs), and at the very top, systematic reviews and meta-analysis. This description is intuitive and likely correct in many instances. The placement of systematic reviews at the top had undergone several alterations in interpretations, but was still thought of as an item in a hierarchy. 1 Most versions of the pyramid clearly represented a hierarchy of internal validity (risk of bias). Some versions incorporated external validity (applicability) in the pyramid by either placing N-of-1 trials above RCTs (because their results are most applicable to individual patients 2 ) or by separating internal and external validity. 3

Another version (the 6S pyramid) was also developed to describe the sources of evidence that can be used by evidence-based medicine (EBM) practitioners for answering foreground questions, showing a hierarchy ranging from studies, synopses, synthesis, synopses of synthesis, summaries and systems. 4 This hierarchy may imply some sort of increasing validity and applicability although its main purpose is to emphasise that the lower sources of evidence in the hierarchy are least preferred in practice because they require more expertise and time to identify, appraise and apply.

The traditional pyramid was deemed too simplistic at times, thus the importance of leaving room for argument and counterargument for the methodological merit of different designs has been emphasised. 5 Other barriers challenged the placement of systematic reviews and meta-analyses at the top of the pyramid. For instance, heterogeneity (clinical, methodological or statistical) is an inherent limitation of meta-analyses that can be minimised or explained but never eliminated. 6 The methodological intricacies and dilemmas of systematic reviews could potentially result in uncertainty and error. 7 One evaluation of 163 meta-analyses demonstrated that the estimation of treatment outcomes differed substantially depending on the analytical strategy being used. 7 Therefore, we suggest, in this perspective, two visual modifications to the pyramid to illustrate two contemporary methodological principles ( figure 1 ). We provide the rationale and an example for each modification.

The proposed new evidence-based medicine pyramid. (A) The traditional pyramid. (B) Revising the pyramid: (1) lines separating the study designs become wavy (Grading of Recommendations Assessment, Development and Evaluation), (2) systematic reviews are ‘chopped off’ the pyramid. (C) The revised pyramid: systematic reviews are a lens through which evidence is viewed (applied).

Rationale for modification 1

In the early 2000s, the Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group developed a framework in which the certainty in evidence was based on numerous factors and not solely on study design, which challenges the pyramid concept. 8 Study design alone appears to be insufficient as a surrogate for risk of bias. Certain methodological limitations of a study, imprecision, inconsistency and indirectness are factors independent of study design and can affect the quality of evidence derived from any study design. For example, a meta-analysis of RCTs evaluating intensive glycaemic control in non-critically ill hospitalised patients showed a non-significant reduction in mortality (relative risk of 0.95 (95% CI 0.72 to 1.25) 9 ). Allocation concealment and blinding were not adequate in most trials. The quality of this evidence is rated down due to the methodological limitations of the trials and imprecision (wide CI that includes substantial benefit and harm). Hence, despite there being five RCTs, such evidence should not be rated high in any pyramid. The quality of evidence can also be rated up. For example, we are quite certain about the benefits of hip replacement in a patient with disabling hip osteoarthritis. Although not tested in RCTs, the quality of this evidence is rated up despite the study design (non-randomised observational studies). 10
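The imprecision judgement in the glycaemic control example can be illustrated with a crude first check: does the confidence interval of a ratio measure contain the null value of 1? The sketch below is only an illustration under that assumption. The function name `ci_includes_null` is hypothetical and is not part of GRADE, which also asks whether the interval spans appreciable benefit and harm.

```python
def ci_includes_null(lower: float, upper: float, null_value: float = 1.0) -> bool:
    """Return True when the confidence interval contains the null value.

    For ratio measures (relative risk, odds ratio) the null value is 1.
    """
    if lower > upper:
        raise ValueError("lower bound must not exceed upper bound")
    return lower <= null_value <= upper


# Values reported in the text: relative risk 0.95 (95% CI 0.72 to 1.25).
rr_lower, rr_upper = 0.72, 1.25
crosses_null = ci_includes_null(rr_lower, rr_upper)
```

Here `crosses_null` is true, which matches the description of the result as a non-significant reduction in mortality.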

Rationale for modification 2

Another challenge to the notion of having systematic reviews on the top of the evidence pyramid relates to the framework presented in the Journal of the American Medical Association User's Guide on systematic reviews and meta-analysis. The Guide presented a two-step approach in which the credibility of the process of a systematic review is evaluated first (comprehensive literature search, rigorous study selection process, etc). If the systematic review was deemed sufficiently credible, then a second step takes place in which we evaluate the certainty in evidence based on the GRADE approach. 11 In other words, a meta-analysis of well-conducted RCTs at low risk of bias cannot be equated with a meta-analysis of observational studies at higher risk of bias. For example, a meta-analysis of 112 surgical case series showed that in patients with thoracic aortic transection, the mortality rate was significantly lower in patients who underwent endovascular repair, followed by open repair and non-operative management (9%, 19% and 46%, respectively, p<0.01). Clearly, this meta-analysis should not be on top of the pyramid similar to a meta-analysis of RCTs. After all, the evidence still consists of non-randomised studies and is likely subject to numerous confounders.

Therefore, the second modification to the pyramid is to remove systematic reviews from the top of the pyramid and use them as a lens through which other types of studies should be seen (ie, appraised and applied). The systematic review (the process of selecting the studies) and meta-analysis (the statistical aggregation that produces a single effect size) are tools to consume and apply the evidence by stakeholders.

Implications and limitations

Changing how systematic reviews and meta-analyses are perceived by stakeholders (such as patients and clinicians) has important implications. For example, the American Heart Association considers evidence derived from meta-analyses to have a level ‘A’ (ie, warrants the most confidence). Re-evaluation of evidence using GRADE shows that level ‘A’ evidence could have been high, moderate, low or of very low quality. 12 The quality of evidence drives the strength of recommendation, which is one of the last translational steps of research, most proximal to patient care.

One of the limitations of all ‘pyramids’ and depictions of evidence hierarchy relates to the underpinning of such schemas. The construct of internal validity may have varying definitions, or be understood differently among evidence consumers. A limitation of considering systematic reviews and meta-analyses as tools to consume evidence is that doing so may undermine their role in new discovery (eg, identifying a new side effect that was not demonstrated in individual studies 13 ).

This pyramid can also be used as a teaching tool. EBM teachers can compare it to the existing pyramids to explain how certainty in the evidence (also called quality of evidence) is evaluated. It can be used to teach how evidence-based practitioners can appraise and apply systematic reviews in practice, and to demonstrate the evolution in EBM thinking and the modern understanding of certainty in evidence.

- Leibovici L

- Agoritsas T ,

- Vandvik P ,

- Neumann I , et al

- ↵ Resources for Evidence-Based Practice: The 6S Pyramid. Secondary Resources for Evidence-Based Practice: The 6S Pyramid Feb 18, 2016 4:58 PM. http://hsl.mcmaster.libguides.com/ebm

- Vandenbroucke JP

- Berlin JA ,

- Dechartres A ,

- Altman DG ,

- Trinquart L , et al

- Guyatt GH ,

- Vist GE , et al

- Coburn JA ,

- Coto-Yglesias F , et al

- Sultan S , et al

- Montori VM ,

- Ioannidis JP , et al

- Altayar O ,

- Bennett M , et al

- Nissen SE ,

Contributors MHM conceived the idea and drafted the manuscript. FA helped draft the manuscript and designed the new pyramid. MA and NA helped draft the manuscript.

Competing interests None declared.

Provenance and peer review Not commissioned; externally peer reviewed.

Linked Articles

- Editorial Pyramids are guides not rules: the evolution of the evidence pyramid Terrence Shaneyfelt BMJ Evidence-Based Medicine 2016; 21 121-122 Published Online First: 12 Jul 2016. doi: 10.1136/ebmed-2016-110498

- Perspective EBHC pyramid 5.0 for accessing preappraised evidence and guidance Brian S Alper R Brian Haynes BMJ Evidence-Based Medicine 2016; 21 123-125 Published Online First: 20 Jun 2016. doi: 10.1136/ebmed-2016-110447

Systematic Review Process: best practices

Levels of evidence.

Hierarchy of evidence pyramid

The pyramidal shape qualitatively integrates the amount of evidence generally available from each type of study design and the strength of evidence expected from indicated designs. Study designs in ascending levels of the pyramid generally exhibit increased quality of evidence and reduced risk of bias.

Understand the different levels of evidence

Meta Analysis - systematic review that uses quantitative methods to synthesize and summarize the results.

Systematic Review - summary of the medical literature that uses explicit methods to perform a comprehensive literature search and critical appraisal of individual studies and that uses appropriate statistical techniques to combine these valid studies.

Randomised Controlled Trial - Participants are randomly allocated into an experimental group or a control group and followed over time for the variables/outcomes of interest.

Cohort Study - Involves identification of two groups (cohorts) of patients, one which received the exposure of interest, and one which did not, and following these cohorts forward for the outcome of interest.

Case Control Study - study which involves identifying patients who have the outcome of interest (cases) and patients without the same outcome (controls), and looking back to see if they had the exposure of interest.

Case Series - report on a series of patients with an outcome of interest. No control group is involved. (Definitions from CEBM)

Scholarly publications

The Joanna Briggs Institute Reviewers’ Manual 2015 Methodology for JBI Scoping Reviews

Clarifying differences between review designs and methods

Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach

A scoping review of scoping reviews: advancing the approach and enhancing the consistency

Nursing-Johns Hopkins Evidence-Based Practice Model

JHEBP model for levels of evidence: JHEBP levels of evidence overview

- Levels I, II and III

Evidence-Based Practice (EBP) uses a rating system to appraise evidence (usually a research study published as a journal article). The level of evidence corresponds to the research study design. Scientific research is considered to be the strongest form of evidence and recommendations from the strongest form of evidence will most likely lead to the best practices. The strength of evidence can vary from study to study based on the methods used and the quality of reporting by the researchers. You will want to seek the highest level of evidence available on your topic (Dang et al., 2022, p. 130).

The Johns Hopkins EBP model uses 3 ratings for the level of scientific research evidence

- true experimental (level I)

- quasi-experimental (level II)

- nonexperimental (level III)

The level determination is based on the research meeting the study design requirements (Dang et al., 2022, pp. 146-147).

You will use the Research Appraisal Tool (Appendix E) along with the Evidence Level and Quality Guide (Appendix D) to analyze and appraise research studies . (Tools linked below.)

Nonresearch evidence is covered in Levels IV and V.

- Evidence Level and Quality Guide (Appendix D)

- Research Evidence Appraisal Tool (Appendix E)

Level I Experimental study

randomized controlled trial (RCT)

Systematic review of RCTs, with or without meta-analysis

Level II Quasi-experimental Study

Systematic review of a combination of RCTs and quasi-experimental, or quasi-experimental studies only, with or without meta-analysis.

Level III Non-experimental study

Systematic review of a combination of RCTs, quasi-experimental and non-experimental, or non-experimental studies only, with or without meta-analysis.

Qualitative study or systematic review, with or without meta-analysis

Level IV Opinion of respected authorities and/or nationally recognized expert committees/consensus panels based on scientific evidence.

Clinical practice guidelines

Consensus panels

Level V Based on experiential and non-research evidence.

Literature reviews

Quality improvement, program, or financial evaluation

Case reports

Opinion of nationally recognized expert(s) based on experiential evidence
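The rule for systematic reviews in Levels I to III above can be sketched as a small lookup. This is an illustrative reading of the list, not an official Johns Hopkins tool: it assumes that a review takes the level of the least rigorous design it includes, and the names `DESIGN_RANK` and `review_level` are hypothetical. A review that mixes RCTs with non-experimental studies but no quasi-experimental ones is not listed explicitly above, so treating it as Level III is also an assumption.

```python
# Rank of each primary study design, as listed above (1 is the strongest).
DESIGN_RANK = {"rct": 1, "quasi-experimental": 2, "non-experimental": 3}
ROMAN = {1: "I", 2: "II", 3: "III"}


def review_level(included_designs):
    """Return the evidence level (I, II or III) of a systematic review.

    Assumption: the level follows the least rigorous design included.
    """
    designs = set(included_designs)
    if not designs:
        raise ValueError("a review must include at least one study design")
    unknown = designs - set(DESIGN_RANK)
    if unknown:
        raise ValueError(f"unknown study design(s): {sorted(unknown)}")
    return ROMAN[max(DESIGN_RANK[d] for d in designs)]


level_rcts_only = review_level(["rct"])
level_mixed = review_level(["rct", "quasi-experimental"])
level_all = review_level(["rct", "quasi-experimental", "non-experimental"])
```

The three example calls correspond to the three systematic review descriptions given under Levels I, II and III.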

These flow charts can also help you determine the level of evidence through a series of questions.

Single Quantitative Research Study

Summary/Reviews

These charts are a part of the Research Evidence Appraisal Tool (Appendix E) document.

Dang, D., Dearholt, S., Bissett, K., Ascenzi, J., & Whalen, M. (2022). Johns Hopkins evidence-based practice for nurses and healthcare professionals: Model and guidelines. 4th ed. Sigma Theta Tau International

- Last Updated: Feb 8, 2024 1:24 PM

- URL: https://bradley.libguides.com/jhebp

Darrell W. Krueger Library

Evidence based practice toolkit.

Levels of Evidence / Evidence Hierarchy

Evidence pyramid (levels of evidence), definitions, research designs in the hierarchy, clinical questions --- research designs.

Levels of evidence (sometimes called hierarchy of evidence) are assigned to studies based on the research design, quality of the study, and applicability to patient care. Higher levels of evidence have less risk of bias .

Levels of Evidence (Melnyk & Fineout-Overholt 2023)

*Adapted from: Melnyk, & Fineout-Overholt, E. (2023). Evidence-based practice in nursing & healthcare: A guide to best practice (Fifth edition.). Wolters Kluwer.

Levels of Evidence (LoBiondo-Wood & Haber 2022)

Adapted from LoBiondo-Wood, G. & Haber, J. (2022). Nursing research: Methods and critical appraisal for evidence-based practice (10th ed.). Elsevier.

" Evidence Pyramid " is a product of Tufts University and is licensed under BY-NC-SA license 4.0

Tufts' "Evidence Pyramid" is based in part on the Oxford Centre for Evidence-Based Medicine: Levels of Evidence (2009)

- Oxford Centre for Evidence Based Medicine Glossary

Different types of clinical questions are best answered by different types of research studies. You might not always find the highest level of evidence (i.e., systematic review or meta-analysis) to answer your question. When this happens, work your way down to the next highest level of evidence.

This table suggests study designs best suited to answer each type of clinical question.

- Last Updated: Apr 2, 2024 7:02 PM

- URL: https://libguides.winona.edu/ebptoolkit

Systematic Reviews: Levels of evidence and study design

Levels of evidence.

"Levels of Evidence" tables have been developed which outline and grade the best evidence. However, the review question will determine the choice of study design.

Secondary sources provide analysis, synthesis, interpretation and evaluation of primary works. Secondary sources are not evidence, but rather provide a commentary on and discussion of evidence. e.g. systematic review

Primary sources contain the original data and analysis from research studies. No outside evaluation or interpretation is provided. An example of a primary literature source is a peer-reviewed research article. Other primary sources include preprints, theses, reports and conference proceedings.

Levels of evidence for primary sources fall into the following broad categories of study designs (listed from highest to lowest):

- Experimental : RCTs (Randomised Controlled Trials)

- Quasi-experimental studies (Non-randomised control studies, Before-and-after study, Interrupted time series)

- Observational studies (Cohort study, Case-control study, Case series)

Based on information from Centre for Reviews and Dissemination. (2009). Systematic reviews: CRD's guidance for undertaking reviews in health care. Retrieved from http://www.york.ac.uk/inst/crd/index_guidance.htm

Hierarchy of Evidence Pyramid

"Levels of Evidence" are often represented as a pyramid, with the highest level of evidence at the top:

Types of Study Design

The following definitions are adapted from the Glossary in " Systematic reviews: CRD's Guidance for Undertaking Reviews in Health Care " , Centre for Reviews and Dissemination, University of York :

- Systematic Review The application of strategies that limit bias in the assembly, critical appraisal, and synthesis of all relevant studies on a specific topic and research question.

- Meta-analysis A systematic review which uses quantitative methods to summarise the results

- Randomized controlled clinical trial (RCT) A group of patients is randomised into an experimental group and a control group. These groups are followed up for the variables/outcomes of interest.

- Cohort study Involves the identification of two groups (cohorts) of patients, one which did receive the exposure of interest, and one which did not, and following these cohorts forward for the outcome of interest.

- Case-control study Involves identifying patients who have the outcome of interest (cases) and control patients without the same outcome, and looking to see if they had the exposure of interest.

- Critically appraised topic A short summary of an article from the literature, created to answer a specific clinical question.

EBM and Study Design

- Last Updated: Oct 2, 2023 4:13 PM

- URL: https://ecu.au.libguides.com/systematic-reviews

Edith Cowan University acknowledges and respects the Noongar people, who are the traditional custodians of the land upon which its campuses stand and its programs operate. In particular ECU pays its respects to the Elders, past and present, of the Noongar people, and embrace their culture, wisdom and knowledge.

Write and Cite

- Last Updated: Apr 26, 2024 10:28 AM

- URL: https://guides.library.harvard.edu/gsd/write

[Physiotherapy in rehabilitation of patients with degenerative disk diseases from positions of evidence-based medicine: a literature review]

Affiliation.

- 1 Federal Scientific and Clinical Center of Medical Rehabilitation and Balneology of the Federal Medical and Biological Agency, Moscow, Russia.

- PMID: 38639152

- DOI: 10.17116/kurort202410102157

Publication types

- English Abstract

- Evidence-Based Medicine

- Low Back Pain*

- Lumbar Vertebrae / surgery

- Physical Therapy Modalities

- Spinal Fusion* / methods

- Treatment Outcome

- Korean J Anesthesiol

- v.71(2); 2018 Apr

Introduction to systematic review and meta-analysis

1 Department of Anesthesiology and Pain Medicine, Inje University Seoul Paik Hospital, Seoul, Korea

2 Department of Anesthesiology and Pain Medicine, Chung-Ang University College of Medicine, Seoul, Korea

Systematic reviews and meta-analyses present results by combining and analyzing data from different studies conducted on similar research topics. In recent years, systematic reviews and meta-analyses have been actively performed in various fields including anesthesiology. These research methods are powerful tools that can overcome the difficulties in performing large-scale randomized controlled trials. However, the inclusion of studies with any biases or improperly assessed quality of evidence in systematic reviews and meta-analyses could yield misleading results. Therefore, various guidelines have been suggested for conducting systematic reviews and meta-analyses to help standardize them and improve their quality. Nonetheless, accepting the conclusions of many studies without understanding the meta-analysis can be dangerous. Therefore, this article provides an easy introduction to clinicians on performing and understanding meta-analyses.

Introduction

A systematic review collects all possible studies related to a given topic and design, and reviews and analyzes their results [ 1 ]. During the systematic review process, the quality of studies is evaluated, and a statistical meta-analysis of the study results is conducted on the basis of their quality. A meta-analysis is a valid, objective, and scientific method of analyzing and combining different results. Usually, in order to obtain more reliable results, a meta-analysis is mainly conducted on randomized controlled trials (RCTs), which have a high level of evidence [ 2 ] ( Fig. 1 ). Since 1999, various papers have presented guidelines for reporting meta-analyses of RCTs. Following the Quality of Reporting of Meta-analyses (QUORUM) statement [ 3 ], and the appearance of registers such as Cochrane Library’s Methodology Register, a large number of systematic literature reviews have been registered. In 2009, the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [ 4 ] was published, and it greatly helped standardize and improve the quality of systematic reviews and meta-analyses [ 5 ].

Levels of evidence.

In anesthesiology, the importance of systematic reviews and meta-analyses has been highlighted, and they provide diagnostic and therapeutic value to various areas, including not only perioperative management but also intensive care and outpatient anesthesia [6–13]. Systematic reviews and meta-analyses include various topics, such as comparing various treatments of postoperative nausea and vomiting [ 14 , 15 ], comparing general anesthesia and regional anesthesia [ 16 – 18 ], comparing airway maintenance devices [ 8 , 19 ], comparing various methods of postoperative pain control (e.g., patient-controlled analgesia pumps, nerve block, or analgesics) [ 20 – 23 ], comparing the precision of various monitoring instruments [ 7 ], and meta-analysis of dose-response in various drugs [ 12 ].

Thus, literature reviews and meta-analyses are being conducted in diverse medical fields, and the aim of highlighting their importance is to help better extract accurate, good quality data from the flood of data being produced. However, a lack of understanding about systematic reviews and meta-analyses can lead to incorrect outcomes being derived from the review and analysis processes. If readers indiscriminately accept the results of the many meta-analyses that are published, incorrect data may be obtained. Therefore, in this review, we aim to describe the contents and methods used in systematic reviews and meta-analyses in a way that is easy to understand for future authors and readers of systematic review and meta-analysis.

Study Planning

It is easy to confuse systematic reviews and meta-analyses. A systematic review is an objective, reproducible method to find answers to a certain research question, by collecting all available studies related to that question and reviewing and analyzing their results. A meta-analysis differs from a systematic review in that it uses statistical methods on estimates from two or more different studies to form a pooled estimate [ 1 ]. Following a systematic review, if it is not possible to form a pooled estimate, it can be published as is without progressing to a meta-analysis; however, if it is possible to form a pooled estimate from the extracted data, a meta-analysis can be attempted. Systematic reviews and meta-analyses usually proceed according to the flowchart presented in Fig. 2 . We explain each of the stages below.

Flowchart illustrating a systematic review.

Formulating research questions

A systematic review attempts to gather all available empirical research by using clearly defined, systematic methods to obtain answers to a specific question. A meta-analysis is the statistical process of analyzing and combining results from several similar studies. Here, the definition of the word “similar” is not made clear, but when selecting a topic for the meta-analysis, it is essential to ensure that the different studies present data that can be combined. If the studies contain data on the same topic that can be combined, a meta-analysis can even be performed using data from only two studies. However, study selection via a systematic review is a precondition for performing a meta-analysis, and it is important to clearly define the Population, Intervention, Comparison, Outcomes (PICO) parameters that are central to evidence-based research. In addition, selection of the research topic is based on logical evidence, and it is important to select a topic that is familiar to readers but for which the evidence has not yet been clearly confirmed [ 24 ].

Protocols and registration

In systematic reviews, prior registration of a detailed research plan is very important. In order to make the research process transparent, primary/secondary outcomes and methods are set in advance, and in the event of changes to the method, other researchers and readers are informed when, how, and why. Many studies are registered with an organization like PROSPERO ( http://www.crd.york.ac.uk/PROSPERO/ ), and the registration number is recorded when reporting the study, in order to share the protocol at the time of planning.

Defining inclusion and exclusion criteria

Information is included on the study design, patient characteristics, publication status (published or unpublished), language used, and research period. If there is a discrepancy between the number of patients included in the study and the number of patients included in the analysis, this needs to be clearly explained while describing the patient characteristics, to avoid confusing the reader.

Literature search and study selection

In order to secure a proper basis for evidence-based research, it is essential to perform a broad search that includes as many studies as possible that meet the inclusion and exclusion criteria. Typically, the three bibliographic databases Medline, Embase, and Cochrane Central Register of Controlled Trials (CENTRAL) are used. In domestic studies, the Korean databases KoreaMed, KMBASE, and RISS4U may be included. Effort is required to identify not only published studies but also abstracts, ongoing studies, and studies awaiting publication. Among the studies retrieved in the search, the researchers remove duplicate studies, select studies that meet the inclusion/exclusion criteria based on the abstracts, and then make the final selection of studies based on their full text. In order to maintain transparency and objectivity throughout this process, study selection is conducted independently by at least two investigators. When their opinions differ, the disagreement is resolved through discussion or by a third reviewer. The methods for this process also need to be planned in advance. It is essential to ensure the reproducibility of the literature selection process [ 25 ].
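The independent dual screening described above can be sketched as a comparison of two reviewers' include/exclude decisions. This is a minimal illustration, not a prescribed procedure: the function name `compare_screening`, the study identifiers, and the decisions are all hypothetical.

```python
from typing import Dict, List, Tuple


def compare_screening(
    reviewer_a: Dict[str, bool], reviewer_b: Dict[str, bool]
) -> Tuple[List[str], List[str], List[str]]:
    """Split studies into agreed inclusions, agreed exclusions and conflicts.

    Each dict maps a study identifier to True (include) or False (exclude).
    Conflicts are the studies to discuss or refer to a third reviewer.
    """
    if set(reviewer_a) != set(reviewer_b):
        raise ValueError("both reviewers must screen the same set of studies")
    included = sorted(s for s in reviewer_a if reviewer_a[s] and reviewer_b[s])
    excluded = sorted(s for s in reviewer_a if not reviewer_a[s] and not reviewer_b[s])
    conflicts = sorted(s for s in reviewer_a if reviewer_a[s] != reviewer_b[s])
    return included, excluded, conflicts


# Hypothetical screening decisions for four retrieved records.
decisions_a = {"study-1": True, "study-2": False, "study-3": True, "study-4": False}
decisions_b = {"study-1": True, "study-2": True, "study-3": True, "study-4": False}
included, excluded, conflicts = compare_screening(decisions_a, decisions_b)
```

Keeping the conflict list explicit is one way to document how disagreements were handled, which supports the reproducibility of the selection process.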

Quality of evidence

However well planned the systematic review or meta-analysis is, if the quality of evidence in the studies is low, the quality of the meta-analysis decreases and incorrect results can be obtained [ 26 ]. Even when using randomized studies with a high quality of evidence, evaluating the quality of evidence precisely helps determine the strength of recommendations in the meta-analysis. One method of evaluating the quality of evidence in non-randomized studies is the Newcastle-Ottawa Scale, provided by the Ottawa Hospital Research Institute 1) . However, we are mostly focusing on meta-analyses that use randomized studies.

If the Grading of Recommendations, Assessment, Development and Evaluations (GRADE) system ( http://www.gradeworkinggroup.org/ ) is used, the quality of evidence is evaluated on the basis of the study limitations, imprecision, inconsistency, indirectness of evidence, and risk of publication bias, and this is used to determine the strength of recommendations [ 27 ]. As shown in Table 1 , the study limitations are evaluated using the “risk of bias” method proposed by Cochrane 2) . This method classifies bias in randomized studies as “low,” “high,” or “unclear” on the basis of the presence or absence of six processes (random sequence generation, allocation concealment, blinding participants or investigators, incomplete outcome data, selective reporting, and other biases) [ 28 ].

The Cochrane Collaboration’s Tool for Assessing the Risk of Bias [ 28 ]

Data extraction

Two different investigators extract data based on the objectives and form of the study; thereafter, the extracted data are reviewed. Since the size and format of each variable are different, the size and format of the outcomes are also different, and slight changes may be required when combining the data [ 29 ]. If there are differences in the size and format of the outcome variables that cause difficulties combining the data, such as the use of different evaluation instruments or different evaluation timepoints, the analysis may be limited to a systematic review. The investigators resolve differences of opinion by debate, and if they fail to reach a consensus, a third reviewer is consulted.

Data Analysis

The aim of a meta-analysis is to derive a conclusion with greater power and accuracy than could be achieved in individual studies. Therefore, before analysis, it is crucial to evaluate the direction of effect, size of effect, homogeneity of effects among studies, and strength of evidence [ 30 ]. Thereafter, the data are reviewed qualitatively and quantitatively. If it is determined that the different research outcomes cannot be combined, all the results and characteristics of the individual studies are displayed in a table or in a descriptive form; this is referred to as a qualitative review. A meta-analysis is a quantitative review, in which the clinical effectiveness is evaluated by calculating the weighted pooled estimate for the interventions in at least two separate studies.

The pooled estimate is the outcome of the meta-analysis, and is typically explained using a forest plot ( Figs. 3 and 4 ). The black squares in the forest plot are the odds ratios (ORs) and 95% confidence intervals in each study. The area of the squares represents the weight reflected in the meta-analysis. The black diamond represents the OR and 95% confidence interval calculated across all the included studies. The bold vertical line represents a lack of therapeutic effect (OR = 1); if the confidence interval includes OR = 1, it means no significant difference was found between the treatment and control groups.

Forest plot analyzed by two different models using the same data. (A) Fixed-effect model. (B) Random-effect model. The figure depicts individual trials as filled squares with the relative sample size and the solid line as the 95% confidence interval of the difference. The diamond shape indicates the pooled estimate and uncertainty for the combined effect. The vertical line indicates the treatment group shows no effect (OR = 1). Moreover, if the confidence interval includes 1, then the result shows no evidence of difference between the treatment and control groups.

Forest plot representing homogeneous data.
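
As a rough illustration of what each square and its horizontal line in a forest plot encode, the sketch below computes an odds ratio and an approximate 95% confidence interval from a single 2 × 2 table, then checks whether the interval includes OR = 1. The function names, the cell layout (a, b for the treatment group; c, d for the control group), and the example counts are assumptions made for this sketch, and the standard error uses the common log-OR approximation rather than any method specific to the figures above.

```python
import math


def odds_ratio_with_ci(a, b, c, d, z=1.96):
    """Odds ratio and approximate 95% CI from one 2x2 table.

    Assumed layout (hypothetical, for illustration only):
      a, b = events / non-events in the treatment group
      c, d = events / non-events in the control group
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive for this simple formula")
    log_or = math.log((a * d) / (b * c))
    # Standard error of the log odds ratio (large-sample approximation).
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(log_or - z * se)
    upper = math.exp(log_or + z * se)
    return math.exp(log_or), lower, upper


def ci_includes_no_effect(lower, upper):
    """True when the interval contains OR = 1 (no significant difference)."""
    return lower <= 1.0 <= upper


if __name__ == "__main__":
    # Made-up counts, not taken from any study in the text.
    or_value, lower, upper = odds_ratio_with_ci(10, 90, 20, 80)
    print(round(or_value, 3), round(lower, 3), round(upper, 3))
    print("CI includes 1:", ci_includes_no_effect(lower, upper))
```

In this made-up example the interval crosses 1, so the study on its own would be read as showing no significant difference.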

Dichotomous variables and continuous variables

In data analysis, outcome variables can be considered broadly in terms of dichotomous variables and continuous variables. When combining data from continuous variables, the mean difference (MD) and standardized mean difference (SMD) are used ( Table 2 ).

Summary of Meta-analysis Methods Available in RevMan [ 28 ]

The MD is the absolute difference in mean values between the groups, and the SMD is the mean difference between groups divided by the standard deviation. When results are presented in the same units, the MD can be used, but when results are presented in different units, the SMD should be used. When the MD is used, the combined units must be shown. A value of “0” for the MD or SMD indicates that the effects of the new treatment method and the existing treatment method are the same. A value lower than “0” means the new treatment method is less effective than the existing method, and a value greater than “0” means the new treatment is more effective than the existing method.
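
The two measures can be sketched for a single study as follows. The text only says the SMD divides by "the standard deviation"; this sketch assumes the pooled within-group standard deviation and applies no small-sample correction, so it may differ slightly from what a given software package reports. All names and numbers are hypothetical.

```python
import math


def mean_difference(mean_new, mean_existing):
    """MD: difference in group means, in the original measurement units."""
    return mean_new - mean_existing


def pooled_sd(sd_new, n_new, sd_existing, n_existing):
    """Pooled within-group standard deviation (an assumption of this sketch)."""
    numerator = (n_new - 1) * sd_new ** 2 + (n_existing - 1) * sd_existing ** 2
    return math.sqrt(numerator / (n_new + n_existing - 2))


def standardized_mean_difference(mean_new, sd_new, n_new,
                                 mean_existing, sd_existing, n_existing):
    """SMD: mean difference divided by the pooled SD, so it is unit-free."""
    sd = pooled_sd(sd_new, n_new, sd_existing, n_existing)
    return (mean_new - mean_existing) / sd


if __name__ == "__main__":
    # Made-up summary data for one study.
    print(mean_difference(12.0, 10.0))
    print(standardized_mean_difference(12.0, 4.0, 20, 10.0, 4.0, 20))
```

Because the SMD is unit-free, studies that measured the same outcome on different scales can be placed on a common footing before pooling.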

When combining data for dichotomous variables, the OR, risk ratio (RR), or risk difference (RD) can be used. The RR and RD can be used for RCTs, quasi-experimental studies, or cohort studies, and the OR can be used for case-control studies or cross-sectional studies. However, because the OR is difficult to interpret, using the RR and RD, if possible, is recommended. If the outcome variable is a dichotomous variable, it can be presented as the number needed to treat (NNT), which is the minimum number of patients who need to be treated in the intervention group, compared to the control group, for a given event to occur in at least one patient. Based on Table 3 , in an RCT, if x is the probability of the event occurring in the control group and y is the probability of the event occurring in the intervention group, then x = c/(c + d), y = a/(a + b), and the absolute risk reduction (ARR) = x − y. The NNT can be obtained as the reciprocal, 1/ARR.

Calculation of the Number Needed to Treat in the Dichotomous table
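
The NNT arithmetic described above can be sketched directly from the formulas x = c/(c + d), y = a/(a + b), ARR = x − y, and NNT = 1/ARR. The function name and the example counts are hypothetical; the reading of a and b as events and non-events in the intervention group (and c and d likewise for the control group) is inferred from those formulas.

```python
def number_needed_to_treat(a, b, c, d):
    """Return (ARR, NNT) for a dichotomous outcome.

    a, b = events / non-events in the intervention group
    c, d = events / non-events in the control group
    """
    x = c / (c + d)  # event probability in the control group
    y = a / (a + b)  # event probability in the intervention group
    arr = x - y      # absolute risk reduction
    if arr == 0:
        raise ValueError("ARR is zero, so the NNT is undefined")
    return arr, 1 / arr


if __name__ == "__main__":
    # Made-up counts: 10/100 events with the intervention, 20/100 with control.
    arr, nnt = number_needed_to_treat(10, 90, 20, 80)
    print(arr, nnt)
```

In practice the NNT is usually reported as a whole number of patients, rounded up.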

Fixed-effect models and random-effect models

In order to analyze effect size, two types of models can be used: a fixed-effect model or a random-effect model. A fixed-effect model assumes that the effect of treatment is the same, and that variation between results in different studies is due to random error. Thus, a fixed-effect model can be used when the studies are considered to have the same design and methodology, or when the variability in results within a study is small, and the variance is thought to be due to random error. Three common methods are used for weighted estimation in a fixed-effect model: 1) inverse variance-weighted estimation 3) , 2) Mantel-Haenszel estimation 4) , and 3) Peto estimation 5) .
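
A minimal sketch of the first of these methods, inverse variance-weighted estimation, is given below. It assumes each study supplies an effect estimate on an additive scale (for example a log odds ratio or a mean difference) together with its standard error; the function name and the example values are hypothetical, and the Mantel-Haenszel and Peto methods are not shown.

```python
import math


def fixed_effect_pool(effects, std_errors, z=1.96):
    """Inverse variance-weighted pooled estimate under a fixed-effect model.

    effects    : per-study estimates (e.g. log odds ratios)
    std_errors : their standard errors
    Returns (pooled estimate, its standard error, (lower, upper) 95% CI).
    """
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("need one standard error per effect estimate")
    # Each study is weighted by the reciprocal of its variance, so large,
    # precise studies dominate the pooled result.
    weights = [1 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    se_pooled = math.sqrt(1 / total)
    return pooled, se_pooled, (pooled - z * se_pooled, pooled + z * se_pooled)


if __name__ == "__main__":
    # Made-up log odds ratios and standard errors for three studies.
    pooled, se, ci = fixed_effect_pool([-0.4, -0.1, -0.6], [0.2, 0.1, 0.4])
    print(round(pooled, 3), round(se, 3), tuple(round(v, 3) for v in ci))
```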

A random-effect model assumes heterogeneity between the studies being combined, and these models are used when the studies are assumed different, even if a heterogeneity test does not show a significant result. Unlike a fixed-effect model, a random-effect model assumes that the size of the effect of treatment differs among studies. Thus, differences in variation among studies are thought to be due to not only random error but also between-study variability in results. Therefore, weight does not decrease greatly for studies with a small number of patients. Among methods for weighted estimation in a random-effect model, the DerSimonian and Laird method 6) is most commonly used for dichotomous variables, as the simplest method, while inverse variance-weighted estimation is used for continuous variables, as with fixed-effect models. These four methods are all used in Review Manager software (The Cochrane Collaboration, UK), and are described in a study by Deeks et al. [ 31 ] ( Table 2 ). However, when the number of studies included in the analysis is fewer than 10, the Hartung-Knapp-Sidik-Jonkman method 7) can better reduce the risk of type 1 error than does the DerSimonian and Laird method [ 32 ].
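
To make the weighting difference concrete, the following is a sketch of the DerSimonian and Laird estimator in its generic inverse-variance form, applied to per-study effect estimates and standard errors. This is an illustration under stated assumptions, not a reproduction of the Review Manager implementation; the function name and data are hypothetical, and the Hartung-Knapp-Sidik-Jonkman adjustment is not included.

```python
import math


def dersimonian_laird(effects, std_errors):
    """Random-effect pooled estimate using the DerSimonian-Laird tau^2.

    Returns (pooled estimate, its standard error, tau^2).
    """
    k = len(effects)
    if k < 2 or k != len(std_errors):
        raise ValueError("need at least two studies with matching inputs")
    w = [1 / se ** 2 for se in std_errors]          # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Adding tau^2 to every study's variance flattens the weights, which is
    # why small studies keep relatively more weight than in a fixed-effect model.
    w_star = [1 / (se ** 2 + tau2) for se in std_errors]
    sum_w_star = sum(w_star)
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum_w_star
    return pooled, math.sqrt(1 / sum_w_star), tau2


if __name__ == "__main__":
    # Made-up log odds ratios and standard errors.
    pooled, se, tau2 = dersimonian_laird([-0.4, -0.1, -0.9], [0.2, 0.1, 0.4])
    print(round(pooled, 3), round(se, 3), round(tau2, 3))
```

When the estimated between-study variance is zero, the result coincides with the inverse variance-weighted fixed-effect estimate.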

Fig. 3 shows the results of analyzing outcome data using a fixed-effect model (A) and a random-effect model (B). As shown in Fig. 3 , while the results from large studies are weighted more heavily in the fixed-effect model, studies are given relatively similar weights irrespective of study size in the random-effect model. Although identical data were being analyzed, as shown in Fig. 3 , the significant result in the fixed-effect model was no longer significant in the random-effect model. One representative example of the small study effect in a random-effect model is the meta-analysis by Li et al. [ 33 ]. In a large-scale study, intravenous injection of magnesium was unrelated to acute myocardial infarction, but in the random-effect model, which included numerous small studies, the small study effect resulted in an association being found between intravenous injection of magnesium and myocardial infarction. This small study effect can be controlled for by using a sensitivity analysis, which is performed to examine the contribution of each of the included studies to the final meta-analysis result. In particular, when heterogeneity is suspected in the study methods or results, by changing certain data or analytical methods, this method makes it possible to verify whether the changes affect the robustness of the results, and to examine the causes of such effects [ 34 ].

Heterogeneity

A homogeneity test examines whether the degree of heterogeneity among studies is greater than would be expected from sampling error alone. This makes it possible to test whether the effect size calculated from several studies is the same. Three types of homogeneity tests can be used: 1) forest plot, 2) Cochran’s Q test (chi-squared), and 3) Higgins’ I² statistic. In the forest plot, as shown in Fig. 4 , greater overlap between the confidence intervals indicates greater homogeneity. For the Q statistic, when the P value of the chi-squared test, calculated from the forest plot in Fig. 4 , is less than 0.1, it is considered to show statistical heterogeneity and a random-effect model can be used. Finally, I² can be used [ 35 ]:

$$I^2 = 100\% \times \frac{Q - df}{Q}$$

where Q is the chi-squared statistic and df is its degrees of freedom.

I², calculated as shown above, returns a value between 0 and 100%. A value less than 25% is considered to show strong homogeneity, a value of 50% is average, and a value greater than 75% indicates strong heterogeneity.
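
The Q statistic and I² can be sketched as follows, using the usual definition I² = 100% × (Q − df)/Q with negative values set to 0, where df is the number of studies minus one. The function name and data are hypothetical. The chi-squared P value for Q is deliberately left out, since computing it would need a statistics library beyond the standard library used here.

```python
def cochran_q_and_i2(effects, std_errors):
    """Return (Q, df, I^2 in percent) for a set of study estimates."""
    k = len(effects)
    if k < 2 or k != len(std_errors):
        raise ValueError("need at least two studies with matching inputs")
    weights = [1 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Q: weighted sum of squared deviations from the fixed-effect estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = k - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


if __name__ == "__main__":
    # Made-up log odds ratios and standard errors.
    q, df, i2 = cochran_q_and_i2([-0.4, -0.1, -0.9], [0.2, 0.1, 0.4])
    print(round(q, 2), df, round(i2, 1))
```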

Even when the data cannot be shown to be homogeneous, a fixed-effect model can be used, ignoring the heterogeneity, or all the study results can be presented individually, without combining them. However, in many cases, a random-effect model is applied, as described above, and a subgroup analysis or meta-regression analysis is performed to explain the heterogeneity. In a subgroup analysis, the data are divided into subgroups that are expected to be homogeneous, and these subgroups are analyzed. This needs to be planned in the predetermined protocol before starting the meta-analysis. A meta-regression analysis is similar to a normal regression analysis, except that the heterogeneity between studies is modeled. This process involves performing a regression analysis of the pooled estimate on study-level covariates, and so it is usually not considered when the number of studies is fewer than 10. Here, univariate and multivariate regression analyses can both be considered.

Publication bias

Publication bias is the most common type of reporting bias in meta-analyses. This refers to the distortion of meta-analysis outcomes due to the higher likelihood of publication of statistically significant studies rather than non-significant studies. In order to test the presence or absence of publication bias, first, a funnel plot can be used ( Fig. 5 ). Studies are plotted on a scatter plot with effect size on the x-axis and precision or total sample size on the y-axis. If the points form an upside-down funnel shape, with a broad base that narrows towards the top of the plot, this indicates the absence of a publication bias ( Fig. 5A ) [ 29 , 36 ]. On the other hand, if the plot shows an asymmetric shape, with no points on one side of the graph, then publication bias can be suspected ( Fig. 5B ). Second, to test publication bias statistically, Begg and Mazumdar’s rank correlation test 8) [ 37 ] or Egger’s test 9) [ 29 ] can be used. If publication bias is detected, the trim-and-fill method 10) can be used to correct the bias [ 38 ]. Fig. 6 displays results that show publication bias in Egger’s test, which has then been corrected using the trim-and-fill method using Comprehensive Meta-Analysis software (Biostat, USA).

Funnel plot showing the effect size on the x-axis and sample size on the y-axis as a scatter plot. (A) Funnel plot without publication bias. The individual plots are broader at the bottom and narrower at the top. (B) Funnel plot with publication bias. The individual plots are located asymmetrically.

Funnel plot adjusted using the trim-and-fill method. White circles: comparisons included. Black circles: imputed comparisons using the trim-and-fill method. White diamond: pooled observed log risk ratio. Black diamond: pooled imputed log risk ratio.
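
As a hedged illustration of how funnel plot asymmetry can be tested statistically, the sketch below implements the regression usually associated with Egger's test: each study's standardized effect (effect divided by its standard error) is regressed on its precision (1 divided by the standard error), and an intercept far from zero suggests asymmetry. The function name and data are hypothetical, only the intercept and its t statistic are returned (no P value), and the trim-and-fill correction is not shown.

```python
import math


def egger_regression(effects, std_errors):
    """Return (intercept, slope, t statistic of the intercept).

    Ordinary least squares of effect/SE on 1/SE. The t statistic is NaN
    when the fit is exact and the intercept has no estimated error.
    """
    n = len(effects)
    if n < 3 or n != len(std_errors):
        raise ValueError("need at least three studies with matching inputs")
    x = [1 / se for se in std_errors]                    # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standardized effect
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all studies have the same precision")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_intercept = math.sqrt(residual_ss / (n - 2) * (1 / n + mean_x ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, slope, t_stat


if __name__ == "__main__":
    # Made-up data in which the smaller studies report larger effects.
    intercept, slope, t_stat = egger_regression(
        [1.4, 1.1, 0.8, 0.75, 0.6], [0.5, 0.25, 0.2, 0.15, 0.1]
    )
    print(round(intercept, 3), round(slope, 3), round(t_stat, 3))
```

The t statistic would be compared against a t distribution with n − 2 degrees of freedom to obtain a P value.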

Result Presentation

When reporting the results of a systematic review or meta-analysis, the analytical content and methods should be described in detail. First, a flowchart is displayed with the literature search and selection process according to the inclusion/exclusion criteria. Second, a table is shown with the characteristics of the included studies. A table should also be included with information related to the quality of evidence, such as GRADE ( Table 4 ). Third, the results of data analysis are shown in a forest plot and funnel plot. Fourth, if the results use dichotomous data, the NNT values can be reported, as described above.

The GRADE Evidence Quality for Each Outcome

N: number of studies, ROB: risk of bias, PON: postoperative nausea, POV: postoperative vomiting, PONV: postoperative nausea and vomiting, CI: confidence interval, RR: risk ratio, AR: absolute risk.

When Review Manager software (The Cochrane Collaboration, UK) is used for the analysis, two types of P values are given. The first is the P value from the z-test, which tests the null hypothesis that the intervention has no effect. The second P value is from the chi-squared test, which tests the null hypothesis for a lack of heterogeneity. The statistical result for the intervention effect, which is generally considered the most important result in meta-analyses, is the z-test P value.
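
The z-test P value for the intervention effect can be sketched from a pooled estimate and its standard error, assuming the estimate is on a scale where zero means no effect (for example a log odds ratio or a mean difference). The function name and inputs are hypothetical, and this is not a description of how Review Manager computes its output internally.

```python
import math


def z_test_p_value(pooled_estimate, standard_error):
    """Return (z, two-sided P value) for H0: the intervention has no effect."""
    if standard_error <= 0:
        raise ValueError("standard error must be positive")
    z = pooled_estimate / standard_error
    # Two-sided tail probability of the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


if __name__ == "__main__":
    # Made-up pooled log odds ratio and standard error.
    z, p = z_test_p_value(-0.35, 0.15)
    print(round(z, 2), round(p, 4))
```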