
What is Secondary Research? | Definition, Types, & Examples

Published on January 20, 2023 by Tegan George. Revised on January 12, 2024.

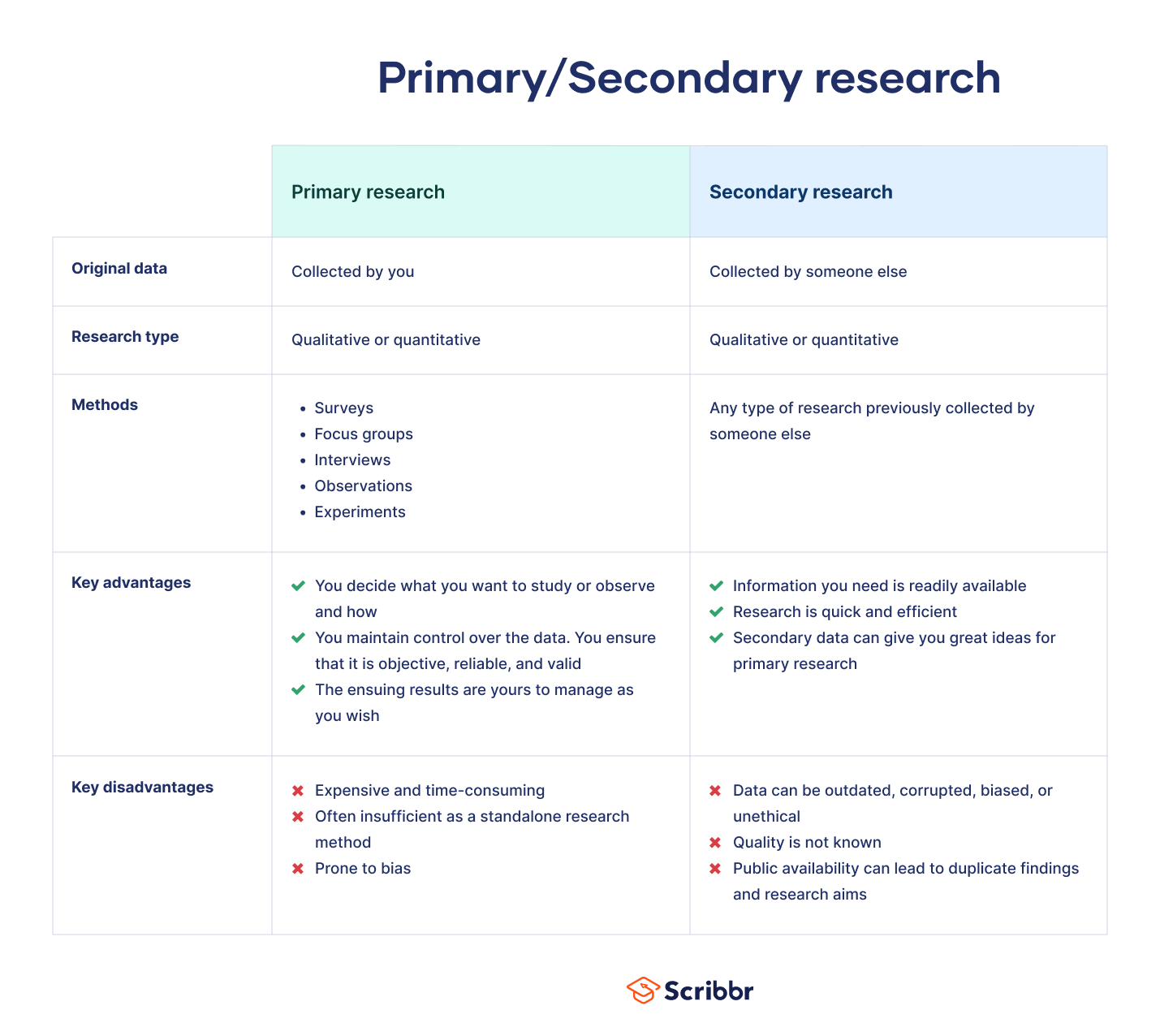

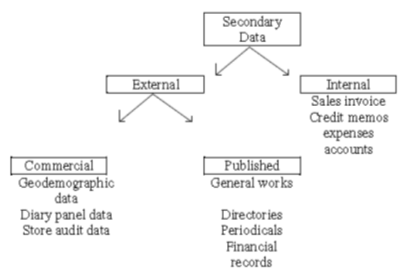

Secondary research is a research method that uses data that was collected by someone else. In other words, whenever you conduct research using data that already exists, you are conducting secondary research. On the other hand, any type of research that you undertake yourself is called primary research.

Secondary research can be qualitative or quantitative in nature. It often uses data gathered from published peer-reviewed papers, meta-analyses, or government or private sector databases and datasets.


Secondary research is a very common research method, used in lieu of collecting your own primary data. It is often used in research designs or as a way to start your research process if you plan to conduct primary research later on.

Since it is often inexpensive or free to access, secondary research is a low-stakes way to determine if further primary research is needed, as gaps in secondary research are a strong indication that primary research is necessary. For this reason, while secondary research can theoretically be exploratory or explanatory in nature, it is usually explanatory: aiming to explain the causes and consequences of a well-defined problem.


Secondary research can take many forms, but the most common types are:

- Statistical analysis

- Literature reviews

- Case studies

- Content analysis

There is ample data available online from a variety of sources, often in the form of datasets. These datasets are often open-source or downloadable at a low cost, and are ideal for conducting statistical analyses such as hypothesis testing or regression analysis.

Credible sources for existing data include:

- The government

- Government agencies

- Non-governmental organizations

- Educational institutions

- Businesses or consultancies

- Libraries or archives

- Newspapers, academic journals, or magazines
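As a rough illustration of the kind of regression analysis mentioned above, the sketch below fits a simple least-squares line to a small dataset. The variable names and all of the values are made up for illustration and do not come from any real source; in practice you would load the figures from a dataset you had downloaded.

```python
from statistics import mean


def simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    # Sum of squared deviations of x, and of cross-deviations of x and y
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical values standing in for columns of an existing dataset
years_of_schooling = [8, 10, 12, 14, 16]
income_thousands = [20, 24, 28, 32, 36]

slope, intercept = simple_linear_regression(years_of_schooling, income_thousands)
print(f"slope={slope:.2f}, intercept={intercept:.2f}")
```

With real secondary data, you would typically also check how the variables were measured and whether any observations are missing before interpreting the fitted line.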

A literature review is a survey of preexisting scholarly sources on your topic. It provides an overview of current knowledge, allowing you to identify relevant themes, debates, and gaps in the research you analyze. You can later apply these to your own work, or use them as a jumping-off point to conduct primary research of your own.

Structured much like a regular academic paper (with a clear introduction, body, and conclusion), a literature review is a great way to evaluate the current state of research and demonstrate your knowledge of the scholarly debates around your topic.

A case study is a detailed study of a specific subject. It is usually qualitative in nature and can focus on a person, group, place, event, organization, or phenomenon. A case study is a great way to utilize existing research to gain concrete, contextual, and in-depth knowledge about your real-world subject.

You can choose to focus on just one complex case, exploring a single subject in great detail, or examine multiple cases if you’d prefer to compare different aspects of your topic. Preexisting interviews, observational studies, or other sources of primary data make for great case studies.

Content analysis is a research method that studies patterns in recorded communication by utilizing existing texts. It can be either quantitative or qualitative in nature, depending on whether you choose to analyze countable or measurable patterns, or more interpretive ones. Content analysis is popular in communication studies, but it is also widely used in historical analysis, anthropology, and psychology to make more semantic qualitative inferences.
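For the quantitative side of content analysis, one very simple approach is to count how often terms of interest appear in existing texts. The sketch below assumes a hypothetical set of two short texts and two terms chosen purely for illustration; real studies usually rely on a documented coding scheme rather than raw word counts.

```python
import re
from collections import Counter


def count_terms(texts, terms):
    """Count how often each term of interest appears across existing texts."""
    counts = Counter()
    for text in texts:
        # Lowercase the text and split it into simple word tokens
        words = re.findall(r"[a-z']+", text.lower())
        for word in words:
            if word in terms:
                counts[word] += 1
    return counts


# Hypothetical example texts, invented for illustration
speeches = [
    "The economy is growing, and jobs are returning.",
    "Jobs, jobs, jobs: the economy depends on them.",
]

term_counts = count_terms(speeches, {"economy", "jobs"})
print(term_counts)
```

A more interpretive, qualitative analysis would instead read the same texts for themes and meanings that simple counts cannot capture.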

Secondary research is a broad research approach that can be pursued any way you’d like. Here are a few examples of different ways you can use secondary research to explore your research topic.

Secondary research is a very common research approach, but it has distinct advantages and disadvantages.

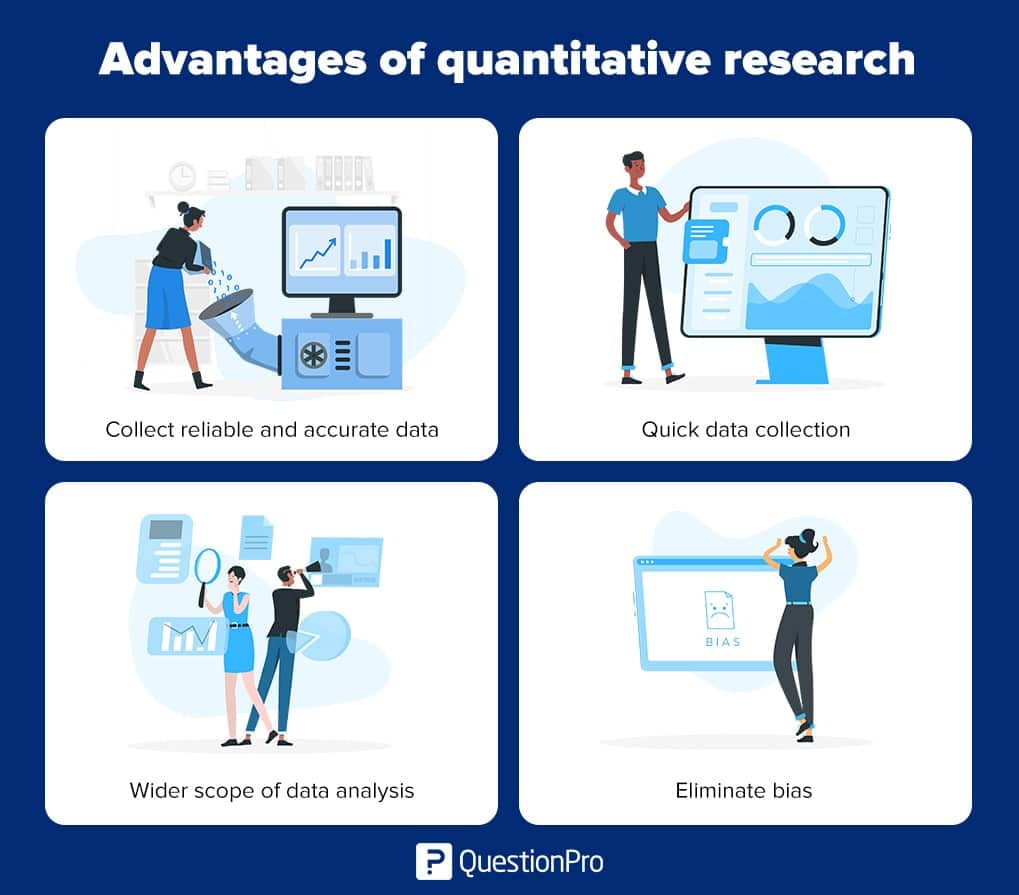

Advantages of secondary research

Advantages include:

- Secondary data is very easy to source and readily available.

- It is also often free or accessible through your educational institution’s library or network, making it much cheaper to conduct than primary research.

- As you are relying on research that already exists, conducting secondary research is much less time-consuming than primary research. Since your timeline is so much shorter, your research can be ready to publish sooner.

- Using data from others allows you to show reproducibility and replicability, bolstering prior research and situating your own work within your field.

Disadvantages of secondary research

Disadvantages include:

- Ease of access does not signify credibility. It’s important to be aware that secondary research is not always reliable, and can often be out of date. It’s critical to evaluate any data you’re thinking of using before getting started, using a method like the CRAAP test.

- Secondary research often relies on primary research already conducted. If this original research is biased in any way, those research biases could creep into the secondary results.

- Many researchers using the same secondary research to form similar conclusions can also take away from the uniqueness and reliability of your research. Many datasets become “kitchen-sink” models, where too many variables are added in an attempt to draw increasingly niche conclusions from overused data. Data cleansing may be necessary to test the quality of the research.


A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

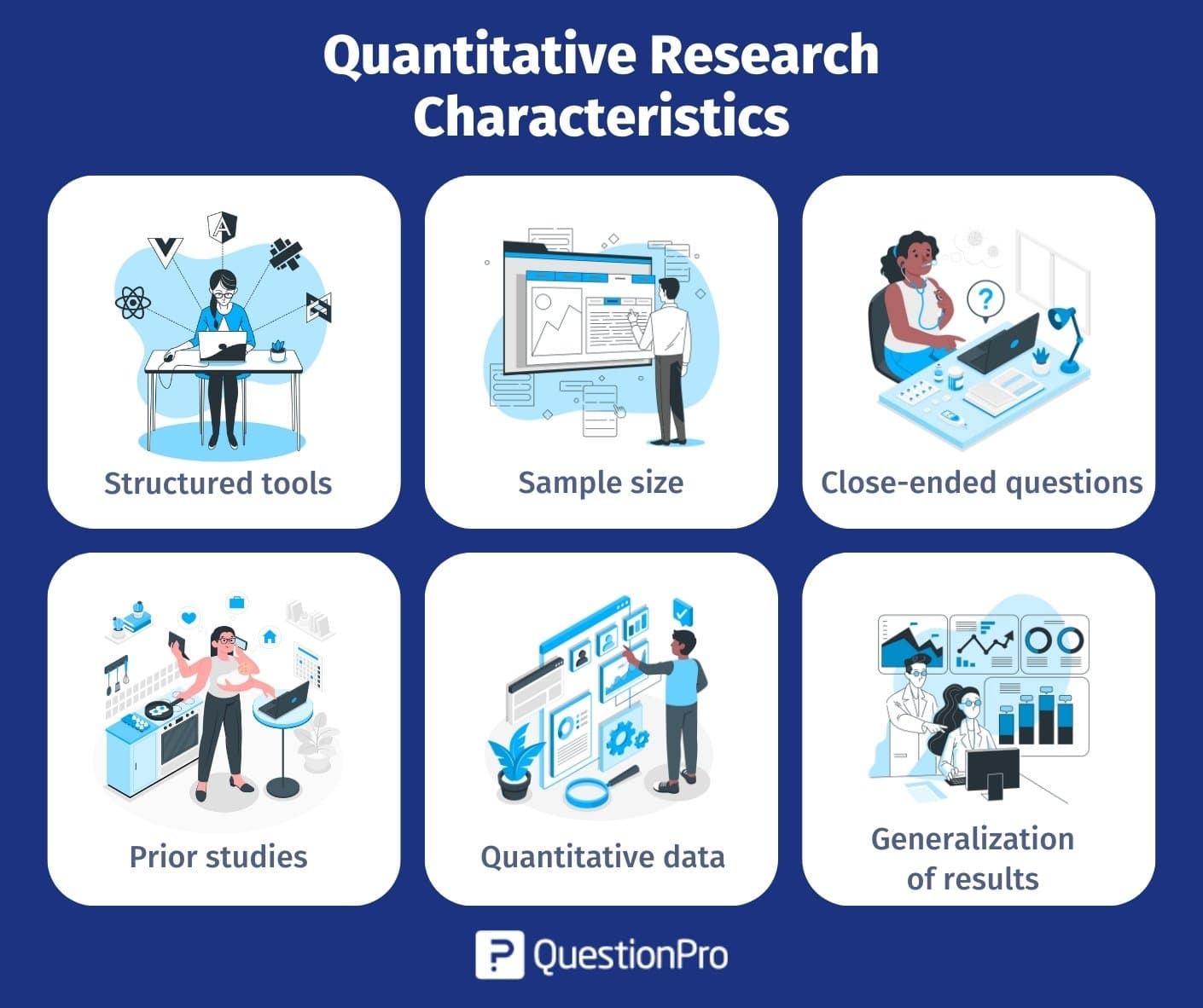

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts and meanings, use qualitative methods.

- If you want to analyze a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how it is generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

Sources in this article


George, T. (2024, January 12). What is Secondary Research? | Definition, Types, & Examples. Scribbr. Retrieved April 1, 2024, from https://www.scribbr.com/methodology/secondary-research/

Largan, C., & Morris, T. M. (2019). Qualitative Secondary Research: A Step-By-Step Guide (1st ed.). SAGE Publications Ltd.

Peloquin, D., DiMaio, M., Bierer, B., & Barnes, M. (2020). Disruptive and avoidable: GDPR challenges to secondary research uses of data. European Journal of Human Genetics, 28(6), 697–705. https://doi.org/10.1038/s41431-020-0596-x



28 Secondary Data Analysis

Department of Psychology, Michigan State University

Richard E. Lucas, Department of Psychology, Michigan State University, East Lansing, MI

- Published: 01 October 2013


Secondary data analysis refers to the analysis of existing data collected by others. Secondary analysis affords researchers the opportunity to investigate research questions using large-scale data sets that are often inclusive of under-represented groups, while saving time and resources. Despite the immense potential for secondary analysis as a tool for researchers in the social sciences, it is not widely used by psychologists and is sometimes met with sharp criticism among those who favor primary research. The goal of this chapter is to summarize the promises and pitfalls associated with secondary data analysis and to highlight the importance of archival resources for advancing psychological science. In addition to describing areas of convergence and divergence between primary and secondary data analysis, we outline basic steps for getting started and finding data sets. We also provide general guidance on issues related to measurement, handling missing data, and the use of survey weights.

The goal of research in the social sciences is to gain a better understanding of the world and how well theoretical predictions match empirical realities. Secondary data analysis contributes to these objectives through the application of “creative analytical techniques to data that have been amassed by others” (Kiecolt & Nathan, 1985, p. 10). Primary researchers design new studies to answer research questions, whereas the secondary data analyst uses existing resources. There is a deliberate coupling of research design and data analysis in primary research; however, the secondary data analyst rarely has had input into the design of the original studies in terms of the sampling strategy and measures selected for the investigation. For better or worse, the secondary data analyst simply has access to the final products of the data collection process in the form of a codebook or set of codebooks and a cleaned data set.

The analysis of existing data sets is routine in disciplines such as economics, political science, and sociology, but it is less well established in psychology (but see Brooks-Gunn & Chase-Lansdale, 1991; Brooks-Gunn, Berlin, Leventhal, & Fuligini, 2000). Moreover, biases against secondary data analysis in favor of primary research may be present in psychology (see McCall & Appelbaum, 1991). One possible explanation for this bias is that psychology has a rich and vibrant experimental tradition, and the training of many psychologists has likely emphasized this approach as the “gold standard” for addressing research questions and establishing causality (see, e.g., Cronbach, 1957). As a result, the nonexperimental methods that are typically used in secondary analyses may be viewed by some as inferior. Psychological scientists trained in the experimental tradition may not fully appreciate the unique strengths that nonexperimental techniques have to offer and may underestimate the time, effort, and skills required for conducting secondary data analyses in a competent and professional manner. Finally, biases against secondary data analysis might stem from lingering concerns over the validity of the self-report methods that are typically used in secondary data analysis. These can include concerns about the possibility that placement of items in a survey can influence responses (e.g., differences in the average levels of reported marital and life satisfaction when questions occur back to back as opposed to having the questions separated in the survey; see Schwarz, 1999; Schwarz & Strack, 1999) and concerns with biased reporting of sensitive behaviors (but see Akers, Massey, & Clarke, 1983).

Despite the initial reluctance to widely embrace secondary data analysis as a tool for psychological research, there are promising signs that the skepticism toward secondary analyses will diminish as psychology seeks to position itself as a hub science that plays a key role in interdisciplinary inquiry ( see Mroczek, Pitzer, Miller, Turiano, & Fingerman, 2011 ). Accordingly, there is a compelling argument for including secondary data analysis in the suite of methodological approaches used by psychologists ( see Trzesniewski, Donnellan, & Lucas, 2011 ).

The goal of this chapter is to summarize the promises and pitfalls associated with secondary data analysis and to highlight the importance of archival resources for advancing psychological science. We limit our discussion to analyses based on large-scale and often longitudinal national data sets such as the National Longitudinal Study of Adolescent Health (Add Health), the British Household Panel Study (BHPS), the German Socioeconomic Panel Study (GSOEP), and the National Institute of Child Health and Human Development (NICHD) Study of Early Child Care and Youth Development (SECCYD). However, much of our discussion applies to all secondary analyses. The perspective and specific recommendations found in this chapter draw on the edited volume by Trzesniewski et al. (2011 ). Following a general introduction to secondary data analysis, we will outline the necessary steps for getting started and finding data sets. Finally, we provide some general guidance on issues related to measurement, approaches to handling missing data, and survey weighting. Our treatment of these important topics is intended to draw attention to the relevant issues rather than to provide extensive coverage. Throughout, we take a practical approach to the issues and offer tips and guidance rooted in our experiences as data analysts and researchers with substantive interests in personality and life span developmental psychology.

Comparing Primary Research and Secondary Research

As noted in the opening section, it is possible that biases against secondary data analysis exist in the minds of some psychological scientists. To address these concerns, we have found it can be helpful to explicitly compare the processes of secondary analyses with primary research ( see also McCall & Appelbaum, 1991 ). An idealized and simplified list of steps is provided in Table 28.1 . As is evident from this table, both techniques start with a research question that is ideally rooted in existing theory and previous empirical results. The areas of biggest divergence between primary and secondary approaches occur after researchers have identified their questions (i.e., Steps 2 through 5 in Table 28.1 ). At this point, the primary researcher develops a set of procedures and then engages in pilot testing to refine procedures and methods, whereas the secondary analyst searches for data sets and evaluates codebooks. The primary researcher attempts to refine her or his procedures, whereas the secondary analyst determines whether a particular resource is appropriate for addressing the question at hand. In the next stages, the primary researcher collects new data, whereas the secondary data analyst constructs a working data set from a much larger data archive. At these stages, both types of researchers must grapple with the practical considerations imposed by real world constraints. There is no such thing as a perfect single study ( see Hunter & Schmidt, 2004 ), as all data sets are subject to limitations stemming from design and implementation. For example, the primary researcher may not have enough subjects to generate adequate levels of statistical power (because of a failure to take power calculations into account during the design phase, time or other resource constraints during the data collection phase, or because of problems with sample retention), whereas the secondary data analyst may have to cope with impoverished measurement of core constructs. Both sets of considerations will affect the ability of a given study to detect effects and provide unbiased estimates of effect sizes.

Table 28.1 also illustrates the fact that there are considerable areas of overlap between the two techniques. Researchers stemming from both traditions analyze data, interpret results, and write reports for dissemination to the wider scientific community. Both kinds of research require a significant investment of time and intellectual resources. Many skills required in conducting high-quality primary research are also required in conducting high-quality secondary data analysis including sound scientific judgment, attention to detail, and a firm grasp of statistical methodology.

Note: Steps modified and expanded from McCall and Appelbaum (1991 ).

We argue that both primary research and secondary data analysis have the potential to provide meaningful and scientifically valid research findings for psychology. Both approaches can generate new knowledge and are therefore reasonable ways of evaluating research questions. Blanket pronouncements that one approach is inherently superior to the other are usually difficult to justify. Many of the concerns about secondary data analysis are raised in the context of an unfair comparison—a contrast between the idealized conceptualization of primary research with the actual process of a secondary data analysis. Our point is that both approaches can be conducted in a thoughtful and rigorous manner, yet both approaches involve concessions to real-world constraints. Accordingly, we encourage all researchers and reviewers of papers to keep an open mind about the importance of both types of research.

Advantages and Disadvantages of Secondary Data Analysis

The foremost reason why psychologists should learn about secondary data analysis is that there are many existing data sets that can be used to answer interesting and important questions. Individuals who are unaware of these resources are likely to miss crucial opportunities to contribute new knowledge to the discipline and even risk reinventing the proverbial wheel by collecting new data. Regrettably, new data collection efforts may occur on a smaller scale than what is available in large national datasets. Researchers who are unaware of the potential treasure trove of variables in existing data sets risk unnecessarily duplicating considerable amounts of time and effort. At the very least, researchers may wish to familiarize themselves with publicly available data to truly address gaps in the literature when they undertake projects that involve new data collection.

The biggest advantage of secondary analyses is that the data have already been collected and are ready to be analyzed ( see Hofferth, 2005 ), thus conserving time and resources. Existing data sources are often much larger and of higher quality than could be feasibly collected by a single investigator. This advantage is especially pronounced when considering the investments of time and money necessary to collect longitudinal data. Some data sets were collected with scientific sampling plans (such as the GSOEP), which make it possible to generalize the findings to a specific population. Further, many publicly available data sets are quite large, and therefore provide adequate statistical power for conducting many analyses, including hypotheses about statistical interactions. Investigations of interactions often require a surprisingly high number of participants to achieve respectable levels of statistical power in the face of measurement error ( see Aiken & West, 1991 ). Large-scale data sets are also well suited for subgroup analyses of populations that are often under-represented in smaller research studies.

Another advantage of secondary data analysis is that it forces researchers to adopt an open and transparent approach to their craft. Because data are publicly available, other investigators may attempt to replicate findings and specify alternative models for a given research question. This reality encourages transparency and detailed record keeping on the part of the researcher, including careful reporting of analysis and a reasoned justification for all analytic decisions. Freese (2007 ) has provided a useful discussion about policies for archiving material necessary for replicating results, and his treatment of the issues provides guidance to researchers interested in maintaining good records.

Despite the many advantages of secondary data analysis, it is not without its disadvantages. The most significant challenge is simply the flipside of the primary advantage—the data have already been collected by somebody else! Analysts must take advantage of what has been collected without input into design and measurement issues. In some cases, an existing data set may not be available to address the particular research questions of a given investigator without some limitations in terms of sampling, measurement, or other design feature. For example, data sets commonly used for secondary analysis often have a great deal of breadth in terms of the range of constructs assessed (e.g., finances, attitudes, personality, life satisfaction, physical health), but these constructs are often measured with a limited number of survey items. Issues of measurement reliability and validity are usually a major concern. Therefore, a strong grounding in basic and advanced psychometrics is extremely helpful for responding to criticisms and concerns about measurement issues that arise during the peer-review process.

A second consequence of the fact that the data have been collected by somebody else is that analysts may not have access to all of the information about data collection procedures and issues. The analyst simply receives a cleaned data set to use for subsequent analyses. Perhaps not obvious to the user is the amount of actual cleaning that occurred behind the scenes. Similarly, the complicated sampling procedures used in a given study may not be readily apparent to users, and this issue can prevent the appropriate use of survey weights ( Shrout & Napier, 2011 ).

Another significant disadvantage for secondary data analysis is the large amount of time and energy initially required to review data documentation. It can take hours or even weeks to become familiar with the codebooks and to discover which research questions have already been addressed by investigators using the existing data sets. It is very easy to underestimate how long it will take to move from an initial research idea to a competent final analysis. There is a risk that, unbeknownst to one another, researchers in different locations will pursue answers to the same research questions. On the other hand, once a researcher has become familiar with a data set and developed skills to work with the resource, they are able to pursue additional research questions resulting in multiple publications from the same data set. It is our experience that the process of learning about a data set can help generate new research ideas as it becomes clearer how the resource can be used to contribute to psychological science. Thus, the initial time and energy expended to learn about a resource can be viewed as an initial investment that holds the potential to pay larger dividends over time.

Finally, a possible disadvantage concerns how secondary data analyses are viewed within particular subdisciplines of psychology and by referees during the peer-review process. Some journals and some academic departments may not value secondary data analyses as highly as primary research. Such preferences might break along Cronbach’s two disciplines or two streams of psychology—correlational versus experimental ( Cronbach, 1957 ; Tracy, Robins, & Sherman, 2009 ). The reality is that if original data collection is more highly valued in a given setting, then new investigators looking to build a strong case for getting hired or getting promoted might face obstacles if they base a career exclusively on secondary data analysis. Similarly, if experimental methods are highly valued and correlational methods are denigrated in a particular subfield, then results of secondary data analyses will face difficulties getting attention (and even getting published). The best advice is to be aware of local norms and to act accordingly.

Steps for Beginning a Secondary Data Analysis

Step 1: Find Existing Data Sets . After generating a substantive question, the first task is to find relevant data sets ( see Pienta, O’Rouke, & Franks, 2011 ). In some cases researchers will be aware of existing data sets through familiarity with the literature given that many well-cited papers have used such resources. For example, the GSOEP has now been widely used to address questions about correlates and developmental course of subjective well-being (e.g., Baird, Lucas, & Donnellan, 2010 ; Gerstorf, Ram, Estabrook, Schupp, Wagner, & Lindenberger, 2008 ; Gerstorf, Ram, Goebel, Schupp, Lindenberger, & Wagner, 2010 ; Lucas, 2005 ; 2007 ), and thus, researchers in this area know to turn to this resource if a new question arises. In other cases, however, researchers will attempt to find data sets using established archives such as the University of Michigan’s Inter-university Consortium for Political and Social Research (ICPSR; http://www.icpsr.umich.edu/icpsrweb/ICPSR/ ). In addition to ICPSR, there are a number of other major archives ( see Pienta et al., 2011 ) that house potentially relevant data sets. Here are just a few starting points:

The Henry A. Murray Research Archive ( http://www.murray.harvard.edu/ )

The Howard W. Odum Institute for Research in Social Science ( http://www.irss.unc.edu/odum/jsp/home2.jsp )

The National Opinion Research Center ( http://norc.org/homepage.htm )

The Roper Center of Public Opinion Research ( http://ropercenter.uconn.edu/ )

The United Kingdom Data Archive ( http://www.data-archive.ac.uk/ )

Individuals in charge of these archives and data depositories often catalog metadata, which is the technical term for information about the constituent data sets. Typical kinds of metadata include information about the original investigators, a description of the design and process of data collection, a list of the variables assessed, and notes about sampling weights and missing data. Searching through this information is an efficient way of gaining familiarity with data sets. In particular, the ICPSR has an impressive infrastructure for allowing researchers to search for data sets through a cataloguing of study metadata. The ICPSR is thus a useful starting point for finding the raw material for a secondary data analysis. The ICPSR also provides a new user tutorial for searching their holdings ( http://www.icpsr.umich.edu/icpsrweb/ICPSR/help/newuser.jsp ). We recommend that researchers search through their holdings to make a list of potential data sets. At that point, the next task is to obtain relevant codebooks to learn more about each resource.

Step 2: Read Codebooks . Researchers interested in using an existing data set are strongly advised to thoroughly read the accompanying codebook ( Pienta et al., 2011 ). There are several reasons why a comprehensive understanding of the codebook is a critical first step when conducting a secondary data analysis. First, the codebook will detail the procedures and methods used to acquire the data and provide a list of all of the questions and assessments collected. A thorough reading of the codebook can provide insights into important covariates that can be included in subsequent models, and a careful reading will draw the analyst’s attention to key variables that will be missing because no such information was collected. Reading through a codebook can also help to generate new research questions.

Second, high-quality codebooks often report basic descriptive information for each variable such as raw frequency distributions and information about the extent of missing values. The descriptive information in the codebook can give investigators a baseline expectation for variables under consideration, including the expected distributions of the variables and the frequencies of under-represented groups (such as ethnic minority participants). Because it is important to verify that the descriptive statistics in the published codebook match those in the file analyzed by the secondary analyst, a familiarity with the codebook is essential. In addition to codebooks, many existing resources provide copies of the actual surveys completed by participants ( Pienta et al., 2011 ). However, the use of actual pencil-and-paper surveys is becoming less common with the advent of computer assisted interview techniques and Internet surveys. It is often the case that survey methods involve skip patterns (e.g., a participant is not asked about the consequences of her drinking if she responds that she doesn’t drink alcohol) that make it more difficult to assume the perspective of the “typical” respondent in a given study ( Pienta et al., 2011 ). Nonetheless, we recommend that analysts try to develop an understanding for the experiences of the participant in a given study. This perspective can help secondary analysts develop an intuitive understanding of certain patterns of missing data and anticipate concerns about question ordering effects ( see , e.g., Schwarz, 1999 ).
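The check of file contents against codebook frequencies described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the variable, its codes, and all counts are hypothetical, and `compare_frequencies` is a helper written for this example rather than part of any archive's tooling.

```python
from collections import Counter

# Hypothetical codebook frequencies for one variable
# (1 = yes, 2 = no, -9999 = missing); the counts are made up for illustration.
codebook_freqs = {1: 3, 2: 2, -9999: 1}

# Hypothetical values for the same variable as read from the downloaded file.
file_values = [1, 2, 1, -9999, 2, 1]


def compare_frequencies(expected, values):
    """Return {code: (codebook_n, file_n)} for every code whose counts differ."""
    observed = Counter(values)
    codes = set(expected) | set(observed)
    return {
        code: (expected.get(code, 0), observed.get(code, 0))
        for code in codes
        if expected.get(code, 0) != observed.get(code, 0)
    }


mismatches = compare_frequencies(codebook_freqs, file_values)
print(mismatches or "File matches codebook")
```

Any code that appears in the returned dictionary is worth tracing back to the documentation before analysis proceeds.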

Step 3: Acquire Datasets and Construct a Working Datafile . Although there is a growing availability of Web-based resources for conducting basic analyses using selected data sets (e.g., the Survey Documentation Analysis software used by ICPSR), we are convinced that there is no substitute for the analysis of the raw data using the software packages of preference for a given investigator. This means that the analysts will need to acquire the data sets that they consider most relevant. This is typically a very straightforward process that involves acknowledging researcher responsibilities before downloading the entire data set from a website. In some cases, data are classified as restricted-use, and there are more extensive procedures for obtaining access that may involve submitting a detailed security plan and accompanying legal paperwork before becoming an authorized data user. When data involve children and other sensitive groups, Institutional Review Board approval is often required.

Each data set has different usage requirements, so it is difficult to provide blanket guidance. Researchers should be aware of the policies for using each data set and recognize their ethical responsibility for adhering to those regulations. A central issue is that the researcher must avoid deductive disclosure whereby otherwise anonymous participants are identified because of prior knowledge in conjunction with the personal characteristics coded in the dataset (e.g., gender, racial/ethnic group, geographic location, birth date). Such a practice violates the major ethical principles followed by responsible social scientists and has the potential to harm research participants.

Once the entire set of raw data is acquired, it is usually straightforward to import the files into the kinds of statistical packages used by researchers (e.g., R, SAS, SPSS, and STATA). At this point, it is likely that researchers will want to create a smaller “working” file by pulling only relevant variables from the larger master files. It is often too cumbersome to work with a computer file that may have more than a thousand columns of information. The solution is to construct a working data file that has all of the needed variables tied to a particular research project. Researchers may also need to link multiple files by matching longitudinal data sets and linking to contextual variables such as information about schools or neighborhoods for data sets with a multilevel structure (e.g., individuals nested in schools or neighborhoods).
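As a rough sketch of what constructing a working file involves, the Python example below keeps a handful of variables from a master file and links each respondent to a contextual record. All variable names, values, and the `build_working_file` helper are hypothetical; a real project would more likely rely on the merge facilities of the analyst's preferred statistical package.

```python
# Hypothetical master file: one dict per respondent. Real master files may
# have more than a thousand columns; only a few made-up ones are shown.
master = [
    {"id": 1, "school_id": "A", "esteem1": 4, "esteem2": 2, "income": 30, "height": 170},
    {"id": 2, "school_id": "B", "esteem1": 3, "esteem2": 1, "income": 45, "height": 165},
    {"id": 3, "school_id": "A", "esteem1": 5, "esteem2": 2, "income": 28, "height": 180},
]

# Hypothetical contextual file keyed by school (the multilevel link).
schools = {"A": {"school_size": 800}, "B": {"school_size": 350}}

# Variables needed for this particular project.
keep = ["id", "school_id", "esteem1", "esteem2"]


def build_working_file(rows, keep_vars, context, link_key):
    """Keep only the selected variables and attach contextual variables."""
    working = []
    for row in rows:
        record = {var: row[var] for var in keep_vars}
        # Respondents without a matching contextual record get no extra fields.
        record.update(context.get(row[link_key], {}))
        working.append(record)
    return working


working = build_working_file(master, keep, schools, "school_id")

# Track sample sizes at each step so that any loss of cases is noticed.
print(len(master), "rows in master;", len(working), "rows in working file")
print(working[0])
```

Printing the row counts before and after each step is one simple way to keep track of sample sizes throughout the process.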

Explicit guidance about managing a working data file can be found in Willms (2011 ). Here, we simply highlight some particularly useful advice: (1) keep exquisite notes about what variables were selected and why; (2) keep detailed notes regarding changes to each variable and reasons why; and (3) keep track of sample sizes throughout this entire process. The guiding philosophy is to create documentation that is clear enough for an outside user to follow the logic and procedures used by the researcher. It is far too easy to overestimate the power of memory only to be disappointed when it comes time to revisit a particular analysis. Careful documentation can save time and prevent frustration. Willms (2011 ) noted that “keeping good notes is the sine qua non of the trade” (p. 33).

Step 4: Conduct Analyses . After assembling the working data file, the researcher will likely construct major study variables by creating scale composites (e.g., the mean of the responses to the items assessing the same construct) and conduct initial analyses. As previously noted, a comparison of the distributions and sample sizes with those in the study codebook is essential at this stage. Any deviations for the variables in the working data file and the codebook should be understood and documented. It is particularly useful to keep track of missing values to make sure that they have been properly coded. It should go without saying that an observed value of -9999 will typically require recoding to a missing value in the working file. Similarly, errors in reverse scoring items can be particularly common (and troubling) so researchers are well advised to conduct thorough item-level and scale analyses and check to make sure that reverse scoring was done correctly (e.g., examine the inter-item correlation matrix when calculating internal consistency estimates to screen for negative correlations). Willms (2011 ) provides some very savvy advice for the initial stages of actual data analysis: “Be wary of surprise findings” (p. 35). He noted that “too many times I have been excited by results only to find that I have made some mistake” (p. 35). Caution, skepticism, and a good sense of the underlying data set are essential for detecting mistakes.
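The checks described in this step (recoding sentinel values, reverse scoring, screening inter-item correlations, and building a composite) might look something like the following Python sketch. The item responses are invented, the 1 to 5 response scale is an assumption, and averaging whatever items a respondent answered is only one of several defensible ways to form a composite.

```python
from math import sqrt

MISSING_CODE = -9999  # sentinel mentioned in the text; other data sets use other codes


def recode_missing(values, missing_code=MISSING_CODE):
    """Replace the sentinel code with None so it is never treated as a score."""
    return [None if v == missing_code else v for v in values]


def reverse_score(values, low, high):
    """Reverse-score an item on a low..high response scale."""
    return [None if v is None else low + high - v for v in values]


def pearson(x, y):
    """Pearson correlation using only cases observed on both items."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    mx = sum(a for a, _ in pairs) / n
    my = sum(b for _, b in pairs) / n
    sxy = sum((a - mx) * (b - my) for a, b in pairs)
    sxx = sum((a - mx) ** 2 for a, _ in pairs)
    syy = sum((b - my) ** 2 for _, b in pairs)
    return sxy / sqrt(sxx * syy)


def scale_mean(*items):
    """Composite score: mean of the items each respondent actually answered."""
    scores = []
    for responses in zip(*items):
        present = [r for r in responses if r is not None]
        scores.append(sum(present) / len(present) if present else None)
    return scores


# Hypothetical responses on an assumed 1-5 scale; item2 is negatively worded.
item1 = recode_missing([5, 4, 2, -9999, 1])
item2_raw = recode_missing([1, 2, 4, 3, 5])

# A negative inter-item correlation flags an item that still needs reversing.
print("before reverse scoring:", pearson(item1, item2_raw))

item2 = reverse_score(item2_raw, low=1, high=5)
print("after reverse scoring:", pearson(item1, item2))
print("composite:", scale_mean(item1, item2))
```

Running the correlation both before and after reverse scoring makes a sign error easy to spot.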

An important comment about the nature of secondary data analysis is again worth emphasizing: These data sets are available to others in the scholarly community. This means that others should be able to replicate your results! It is also very useful to adopt a self-critical perspective because others will be able to subject findings to their own empirical scrutiny. Contemplate alternative explanations and attempt to conduct analyses to evaluate the plausibility of these explanations. Accordingly, we recommend that researchers strive to think of theoretically relevant control variables and include them in the analytic models when appropriate. Such an approach is useful both from the perspective of scientific progress (i.e., attempting to curb confirmation biases) and in terms of surviving the peer-review process.

Special Issue: Measurement Concerns in Existing Datasets

One issue with secondary data analyses that is likely to perplex psychologists is the measurement of core constructs. The reality is that many of the measures available in large-scale data sets consist of a subset of items derived from instruments commonly used by psychologists ( see Russell & Matthews, 2011 ). For example, the 10-item Rosenberg Self-Esteem scale ( Rosenberg, 1965 ) is the most commonly used measure of global self-esteem in the literature ( Donnellan, Trzesniewski, & Robins, 2011 ). Measures of self-esteem are available in many data sets like Monitoring the Future ( see Trzesniewski & Donnellan, 2010 ) but these measures are typically shorter than the original Rosenberg scale. Similarly, the GSOEP has a single-item rating of subjective well-being in the form of happiness, whereas psychologists might be more accustomed to measuring this construct with at least five items (e.g., Diener, Emmons, Larsen, & Griffin, 1985 ). Researchers using existing data sets will have to grapple with the consequences of having relatively short assessments in terms of the impact on reliability and validity.

For purposes of this chapter, we will make use of a conventional distinction between reliability and validity. Reliability will refer to the degree of measurement error present in a given set of scores (or alternatively the degree of consistency or precision in scores), whereas validity will refer to the degree to which measures capture the construct of interest and predict other variables in ways that are consistent with theory. More detailed but accessible discussions of reliability and validity can be found in Briggs and Cheek (1986 ), Clark and Watson (1995 ), John and Soto (2007 ), Messick (1995 ), Simms (2008 ), and Simms and Watson (2007 ). Widaman, Little, Preacher, and Sawalani (2011 ) have provided a discussion of these issues in the context of the shortened assessments available in existing data sets.

Short Measures and Reliability . Classical Test Theory (e.g., Lord & Novick, 1968 ) is the measurement perspective most commonly used among psychologists. According to this measurement philosophy, any observed score is a function of the underlying attribute (the so-called “true score”) and measurement error. Measurement error is conceptualized as any deviation or inconsistency in observed scores for the same attribute across multiple assessments of that attribute. A thought experiment may help crystallize insights about reliability (e.g., Lord & Novick, 1968 ): Imagine a thousand identical clones each completing the same self-esteem instrument simultaneously. The underlying self-esteem attribute (i.e., the true scores) should be the same for each clone (by definition), whereas the observed scores may fluctuate across clones because of random measurement errors (e.g., a single clone misreading an item vs. another clone being frustrated by an extremely hot testing room). The extent of the observed fluctuations in reported scores across clones offers insight into how much measurement error is present in this instrument. If scores are tightly clustered around a single value, then measurement error is minimal; however, if scores are dramatically different across clones, then there is a clear indication of problems with reliability. The measure is imprecise because it yields inconsistent values across the same true scores.

These ideas about reliability can be applied to observed samples of scores such that the total observed variance is attributable to true score variance (i.e., true individual differences in underlying attributes) and variance stemming from random measurement errors. The assumption that measurement error is random means that it has an expected value of zero across observations. Using this framework, reliability can then be defined as the ratio of true score variance to the total observed variance. An assessment that is perfectly reliable (i.e., has no measurement error) will have a ratio of 1.0, whereas an assessment that is completely unreliable will yield a ratio of 0.0 ( see John & Soto, 2007 , for an expanded discussion). This perspective provides a formal definition of a reliability coefficient.
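The verbal definition above can be written compactly. The notation here is our own shorthand rather than the chapter's: X is the observed score, T the true score, E the random error, and the decomposition of variance additionally assumes that errors are uncorrelated with true scores, as is standard in Classical Test Theory.

```latex
\begin{align}
X &= T + E, \qquad \mathbb{E}[E] = 0, \qquad \operatorname{Cov}(T, E) = 0 \\
\sigma^2_X &= \sigma^2_T + \sigma^2_E \\
\text{reliability} &= \frac{\sigma^2_T}{\sigma^2_X}
  = 1 - \frac{\sigma^2_E}{\sigma^2_X}
\end{align}
```

With no error variance the ratio equals 1.0, and when all observed variance is error it equals 0.0, matching the two endpoints described in the text.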

Psychologists have developed several tools to estimate the reliability of their measures, but the approach that is most commonly used is coefficient α ( Cronbach, 1951 ; see Schmitt, 1996 , for an accessible review). This approach considers reliability from the perspective of internal consistency. The basic idea is that fluctuations across items assessing the same construct reflect the presence of measurement error. The formula for the standardized α is a fairly simple function of the average inter-item correlation (a measure of inter-item homogeneity) and the total number of items in a scale. The α coefficient is typically judged acceptable if it is above 0.70, but the justification for this particular cutoff is somewhat arbitrary ( see Lance, Butts, & Michels, 2006 ). Researchers are therefore advised to take a more critical perspective on this statistic. A relevant concern is that α is negatively impacted when the measure is short.

Given concerns with scale length and α, many methodologically oriented researchers recommend evaluating and reporting the average inter-item correlation because it can be interpreted independently of length and thus represents a “more straightforward indicator of internal consistency” ( Clark & Watson, 1995 , p. 316). Consider that it is common to observe an average inter-item correlation for the 10-item Rosenberg Self-Esteem ( Rosenberg, 1965 ) scale around 0.40 (this is based on typically reported α coefficients; see Donnellan et al., 2011 ). This same level of internal homogeneity (i.e., an inter-item correlation of 0.40) yields an α of around 0.67 with a 3-item scale but an α of around 0.87 with 10 items. A measure of a broader construct like Extraversion may generate an average inter-item correlation of 0.20 ( Clark & Watson, 1995 , p. 316), which would translate to an α of 0.43 for a 3-item scale and 0.71 for a 10-item scale. The point is that α coefficients will fluctuate with scale length and the breadth of the construct. Because most scales in existing resources are short, the α coefficients might fall below the 0.70 convention despite having a respectable level of inter-item correlation.
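The figures quoted above follow from the usual formula for standardized α, which depends only on the number of items k and the average inter-item correlation. The short Python sketch below reproduces them; the function name `standardized_alpha` is ours and is not taken from any particular statistics package.

```python
def standardized_alpha(k, mean_r):
    """Standardized alpha from k items with average inter-item correlation mean_r."""
    return (k * mean_r) / (1 + (k - 1) * mean_r)


# Reproduce the values discussed in the text for narrow (0.40) and
# broad (0.20) constructs measured with 3 versus 10 items.
for mean_r in (0.40, 0.20):
    for k in (3, 10):
        alpha = standardized_alpha(k, mean_r)
        print(f"mean r = {mean_r:.2f}, k = {k:2d}: alpha = {alpha:.2f}")
```

Holding the average inter-item correlation fixed and varying k shows directly how much of a low α is attributable to scale length alone.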

Given these considerations, we recommend that researchers consider the average inter-item correlation more explicitly when working with secondary data sets. It is also important to consider the breadth of the underlying construct to generate expectations for reasonable levels of item homogeneity as indexed by the average inter-item correlation. Clark and Watson (1995 ; see also Briggs & Cheek, 1986 ) recommend values of around 0.40 to 0.50 for measures of fairly narrow constructs (e.g., self-esteem) and values of around 0.15 to 0.20 for measures of broader constructs (e.g., neuroticism). It is our experience that considerations about internal consistency often need to be made explicit in manuscripts so that reviewers will not take an unnecessarily harsh perspective on α’s that fall below their expectations. Finally, we want to emphasize that internal consistency is but one kind of reliability. In some cases, it might be that test-retest reliability is more informative and diagnostic of the quality of a measure ( McCrae, Kurtz, Yamagata, & Terracciano, 2011 ). Fortunately, many secondary data sets are longitudinal so it is possible to get an estimate of longer term test-retest reliability from the existing data.

Beyond simply reporting estimates of reliability, it is worth considering why measurement reliability is such an important issue in the first place. One consequence of reliability for substantive research is that measurement imprecision tends to depress observed correlations with other variables. This notion of attenuation resulting from measurement error and a solution were discussed by Spearman as far back as 1904 ( see , e.g., pp. 88–94). Unreliable measures can affect the conclusions drawn from substantive research by imposing a downward bias on effect size estimation. This is perhaps why Widaman et al. (2011 ) advocate using latent variable structural modeling methods to combat this important consequence of measurement error. Their recommendation is well worth considering for those with experience with this technique ( see Kline, 2011 , for an introduction). Regardless of whether researchers use observed variables or latent variables for their analyses, it is important to recognize and appreciate the consequences of reliability.
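To make the attenuation effect concrete, the sketch below applies the classical relation in which an observed correlation equals the correlation between true scores multiplied by the square root of the product of the two reliabilities, together with the corresponding correction. The function names and the example numbers are illustrative assumptions, not values from the chapter.

```python
from math import sqrt


def attenuated_r(true_r, rel_x, rel_y):
    """Expected observed correlation given the true correlation and two reliabilities."""
    return true_r * sqrt(rel_x * rel_y)


def disattenuated_r(observed_r, rel_x, rel_y):
    """Classical correction for attenuation: estimate the true-score correlation."""
    return observed_r / sqrt(rel_x * rel_y)


# Hypothetical case: a true correlation of 0.50 measured with a short
# scale (reliability 0.67) and a longer scale (reliability 0.87).
observed = attenuated_r(0.50, rel_x=0.67, rel_y=0.87)
print(f"expected observed r: {observed:.3f}")
print(f"corrected back:      {disattenuated_r(observed, 0.67, 0.87):.3f}")
```

The correction is sensitive to the reliability estimates used, so corrected values are best reported alongside the uncorrected ones.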

Short Measures and Validity . Validity, for our purposes, reflects how well a measure captures the underlying conceptual attribute of interest. All discussions of validity are based, in part, on agreement in a field as to how to understand the construct in question. Validity, like reliability, is assessed as a matter of degree rather than a categorical distinction between valid or invalid measures. Cronbach and Meehl (1955 ) have provided a classic discussion of construct validity, perhaps the most overarching and fundamental form of validity considered in psychological research ( see also Smith, 2005 ). However, we restrict our discussion to content validity and criterion-related validity because these two types of validity are particularly relevant for secondary data analysis and they are more immediately addressable.

Content validity describes how well a measure captures the entire domain of the construct in question. Judgments regarding content validity are ideally made by panels of experts familiar with the focal construct. A measure is considered construct-deficient if it fails to assess important elements of the construct. For example, if thoughts of suicide are an integral aspect of the concept of depression and a given self-report measure is missing items that tap this content, then the measure would be deemed construct-deficient. A measure can also suffer from construct contamination if it includes extraneous items that are irrelevant to the focal construct. For example, if somatic symptoms like a rapid heartbeat are considered to reflect the construct of anxiety and not depression, then a depression inventory that has such an item would suffer from construct contamination. Given the reduced length of many assessments in secondary data sets, concerns over construct deficiency are likely to be especially pressing. A short assessment may not include enough items to capture the full breadth of a broad construct. This limitation is not readily addressed and should be acknowledged (see Widaman et al., 2011). In particular, researchers may need to clearly specify that their findings are based on a narrower content domain than is normally associated with the focal construct of interest.

A subtle but important point can arise when considering the content of measures with particularly narrow content. Internal consistency will increase when there is redundancy among items in the scale; however, the presence of similar items may decrease predictive power. This is known as the attenuation paradox in psychometrics (see Clark & Watson, 1995). When items are nearly identical, they contribute redundant information about a very specific aspect of the construct. However, that very specific attribute may not have predictive power. In essence, reliability can be maximized at the expense of creating a measure that is not very useful from the point of view of prediction (and likely explanation). Indeed, Clark and Watson (1995) have argued that the “goal of scale construction is to maximize validity rather than reliability” (p. 316). In short, an evaluation of content validity is also important when considering the predictive power of a given measure.

Whereas content validity is focused on the internal attributes of a measure, criterion-related validity is based on the empirical relations between measures and other variables. Using previous research and theory surrounding the focal construct, the researcher should develop an expectation regarding the magnitude and direction of observed associations (i.e., correlations) with other variables. A good supporting theory of a construct should stipulate a pattern of association, or nomological network, concerning those other variables that should be related and unrelated to the focal construct. This latter requirement is often more difficult to specify from existing theories, which tend to provide a more elaborate discussion of convergent associations rather than discriminant validity (Widaman et al., 2011). For example, consider a very truncated nomological network for Agreeableness (dispositional kindness and empathy). Measures of this construct should be positively associated with romantic relationship quality, negatively related to crime (especially violent crime), and distinct from measures of cognitive ability such as tests of general intelligence.

Evaluations of criterion-related validity can be conducted within a data set as researchers document that a measure has an expected pattern of associations with existing criterion-related variables. Investigators using secondary data sets may want to conduct additional research to document the criterion-related validity of short measures with additional convenience samples (e.g., the ubiquitous college student samples used by many psychologists; Sears, 1986). For example, there are six items in the Add Health data set that appear to measure self-esteem (e.g., “I have a lot of good qualities” and “I like myself just the way I am”; see Russell, Crockett, Shen, & Lee, 2008). Although many of the items bear a strong resemblance to the items on the Rosenberg Self-Esteem scale (Rosenberg, 1965), they are not exactly the same items. To obtain some additional data on the usefulness of this measure, we administered the Add Health items to a sample of 387 college students at our university along with the Rosenberg Self-Esteem scale and an omnibus measure of personality based on the Five-Factor model (Goldberg, 1999). The six Add Health items were strongly correlated with the Rosenberg (r = 0.79), and both self-esteem measures had a similar pattern of convergent and divergent associations with the facets of the Five-Factor model (the two profiles were very strongly associated: r > 0.95). This additional information can help bolster the case for the validity of the short Add Health self-esteem measure.

Special Issue: Missing Data in Existing Data Sets

Missing data is a fact of life in research: individuals may drop out of longitudinal studies or refuse to answer particular questions. These behaviors can affect the generalizability of findings because results may only apply to those individuals who choose to complete a study or a measure. Missing data can also diminish statistical power when common techniques like listwise deletion are used (e.g., only using cases with complete information, thereby reducing the sample size) and even lead to biased effect size estimates (e.g., McKnight & McKnight, 2011; McKnight, McKnight, Sidani, & Figueredo, 2007; Widaman, 2006). Thus, concerns about missing data are important for all aspects of research, including secondary data analysis. The development of specific techniques for appropriately handling missing data is an active area of research in quantitative methods (Schafer & Graham, 2002).

Unfortunately, the literature surrounding missing data techniques is often technical and steeped in jargon, as noted by McKnight et al. (2007). The reality is that researchers attempting to understand issues of missing data need to pay careful attention to terminology. For example, a novice researcher may not immediately grasp the classification of missing data used in the literature (see Schafer & Graham, 2002, for a clear description). Consider the confusion that may stem from learning that data are missing at random (MAR) versus missing completely at random (MCAR). The term MAR does not mean that missing values occurred only because of chance factors. That description applies instead to data that are MCAR, which are absent because of truly random factors. MAR refers to the situation in which the probability that observations are missing depends only on other available information in the data set. Data that are MAR can be essentially “ignored” when those other factors are included in a statistical model. The last type of missing data, data missing not at random (MNAR), in which the probability of missingness depends on the unobserved values themselves, is likely to characterize the variables in many real-life data sets. As it stands, methods for handling data that are MAR and MCAR are better developed and more easily implemented than methods for handling data that are MNAR. Thus, many applied researchers will assume data are MAR for purposes of statistical modeling (and the ability to sleep comfortably at night). Fortunately, such an assumption might not create major problems for many analyses and may in fact represent the “practical state of the art” (Schafer & Graham, 2002, p. 173).
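
A small simulation can make the three mechanisms easier to tell apart. The Python sketch below is purely illustrative: the two-wave data, the 0.6 coefficient, the cutoffs at zero, and all variable names are assumptions invented for the example. It compares the mean of the Time 2 scores that remain after each kind of missingness with the mean of the full data.

```python
import random
from statistics import fmean


def fit_line(xs, ys):
    """Ordinary least squares intercept and slope for y regressed on x."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical two-wave data: Time 2 scores depend partly on Time 1 scores.
rng = random.Random(1)
time1 = [rng.gauss(0, 1) for _ in range(20000)]
time2 = [0.6 * t1 + rng.gauss(0, 0.8) for t1 in time1]
full_mean = fmean(time2)

# MCAR: every Time 2 score has the same 50% chance of being missing.
mcar_mean = fmean([t2 for t2 in time2 if rng.random() >= 0.5])

# MAR: Time 2 is missing whenever the observed Time 1 score is below zero.
mar_pairs = [(t1, t2) for t1, t2 in zip(time1, time2) if t1 >= 0]
mar_mean = fmean([t2 for _, t2 in mar_pairs])

# Including Time 1 in the model makes the MAR mechanism ignorable:
# fit the regression on complete cases, then predict Time 2 for everyone.
intercept, slope = fit_line([t1 for t1, _ in mar_pairs],
                            [t2 for _, t2 in mar_pairs])
mar_adjusted_mean = fmean([intercept + slope * t1 for t1 in time1])

# MNAR: Time 2 is missing whenever the Time 2 score itself is below zero.
mnar_mean = fmean([t2 for t2 in time2 if t2 >= 0])

print(f"full={full_mean:.2f} MCAR={mcar_mean:.2f} MAR={mar_mean:.2f} "
      f"MAR adjusted={mar_adjusted_mean:.2f} MNAR={mnar_mean:.2f}")
```

In this setup the complete-case mean stays close to the full-data mean under MCAR, is biased upward under MAR until Time 1 is brought into the model, and is biased under MNAR in a way that the observed Time 1 scores cannot fully repair.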

The literature on missing data techniques is growing, so we simply recommend that researchers keep current on developments in this area. McKnight et al. (2007) and Widaman (2006) both provide accessible primers on missing data techniques. In keeping with the largely practical bent of this chapter, we suggest that researchers keep careful track of the amount of missing data present in their analyses and report such information clearly in research papers (see McKnight & McKnight, 2011). Similarly, we recommend that researchers thoroughly screen their data sets for evidence that missing values depend on other measured variables (e.g., scores at Time 1 might be associated with Time 2 dropout). In general, we suggest that researchers avoid listwise and pairwise deletion methods because there is very little evidence that these are good practices (see Jeličić, Phelps, & Lerner, 2009; Widaman, 2006). Rather, it might be easiest to use direct fitting methods such as the estimation procedures used in conventional structural equation modeling packages (e.g., Full Information Maximum Likelihood; see Allison, 2003). At the very least, it is usually instructive to compare results using listwise deletion with results obtained with direct model fitting in terms of the effect size estimates and basic conclusions regarding the statistical significance of focal coefficients.
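
The screening and reporting steps recommended here can be sketched in a few lines of Python. The example below is a minimal illustration under stated assumptions: missing Time 2 values are coded as `None`, the function names and the six toy cases are hypothetical, and a standardized mean difference is only one of several reasonable ways to check whether dropout is related to Time 1 scores.

```python
from math import sqrt
from statistics import fmean, variance


def missing_rate(values):
    """Proportion of cases with a missing value (coded as None)."""
    return sum(v is None for v in values) / len(values)


def dropout_effect_size(time1, time2):
    """Standardized mean difference in Time 1 scores between cases that
    stayed in the study and cases that are missing at Time 2."""
    stayers = [a for a, b in zip(time1, time2) if b is not None]
    dropouts = [a for a, b in zip(time1, time2) if b is None]
    pooled_var = (
        (len(stayers) - 1) * variance(stayers)
        + (len(dropouts) - 1) * variance(dropouts)
    ) / (len(stayers) + len(dropouts) - 2)
    return (fmean(stayers) - fmean(dropouts)) / sqrt(pooled_var)


# Hypothetical toy data: the three lowest Time 1 scorers dropped out.
time1 = [3, 4, 5, 6, 7, 8]
time2 = [None, None, None, 6, 7, 8]

print(f"missing at Time 2: {missing_rate(time2):.0%}")
print(f"stayers vs. dropouts on Time 1 (d): {dropout_effect_size(time1, time2):.2f}")
```

A difference near zero is consistent with dropout that is unrelated to Time 1 scores, whereas a large difference suggests that Time 1 scores should be included in any model used to handle the missing values.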

Special Issue: Sample Weighting in Existing Data Sets

One of the advantages of many existing data sets is that they were collected using probabilistic sampling methods so that researchers can obtain unbiased population estimates. Such estimates, however, are only obtained when complex survey weights are formally incorporated into the statistical modeling procedures. Such weighting schemes can affect the correlations between variables, and therefore all users of secondary data sets should become familiar with sampling design when they begin working with a new data set. A considerable amount of time and effort is dedicated toward generating complex weighting schemes that account for the precise sampling strategies used in the given study, and users of secondary data sets should give careful consideration to using these weights appropriately.
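
The effect of weights on point estimates can be seen in a brief Python sketch. The functions and numbers below are hypothetical and cover only weighted point estimates. A full design-based analysis also needs variance estimation that accounts for strata and clusters, which requires the documentation and software support that accompany each data set.

```python
from math import sqrt


def weighted_mean(values, weights):
    """Mean in which each case counts in proportion to its sampling weight."""
    return sum(w * v for v, w in zip(values, weights)) / sum(weights)


def weighted_corr(x, y, weights):
    """Pearson correlation computed from weighted means, variances,
    and covariance."""
    mx, my = weighted_mean(x, weights), weighted_mean(y, weights)
    cov = sum(w * (a - mx) * (b - my) for a, b, w in zip(x, y, weights))
    var_x = sum(w * (a - mx) ** 2 for a, w in zip(x, weights))
    var_y = sum(w * (b - my) ** 2 for b, w in zip(y, weights))
    return cov / sqrt(var_x * var_y)


# Hypothetical example: the third respondent represents twice as many
# people in the population as each of the other two.
x = [1, 2, 3]
y = [1, 3, 2]
equal = [1, 1, 1]
design = [1, 1, 2]

print(f"unweighted mean of x = {weighted_mean(x, equal):.2f}")
print(f"weighted mean of x   = {weighted_mean(x, design):.2f}")
print(f"unweighted r = {weighted_corr(x, y, equal):.2f}")
print(f"weighted r   = {weighted_corr(x, y, design):.2f}")
```

With equal weights these functions reduce to the ordinary mean and correlation, so any difference between the weighted and unweighted results reflects the sampling design rather than the formulas.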