Relation Between Humans and Technology, Essay Example

Pages: 3

Words: 925

Technological determinism is the theory that the development of a society’s social structures and cultural values is driven by its technology. The term is believed to have been coined by the American sociologist Thorstein Veblen (1857-1929). Technological momentum is a related theory that describes the relationship between society and technology over time. It was coined by Thomas P. Hughes, a historian of technology. Hughes uses two distinct models to explain how technology and society interact, and he claims that new, emerging technologies are the ones that affect society. These technologies are introduced in ways that are lasting and irreversible, and society has to change in order to survive.

Technology and the human community have grown together dramatically, because technology keeps the human community on its toes at every moment. Time is the unifying factor between social and technological determinism. Social determinism’s claim that societies have the power to control how technology develops and is used holds true while a technology is still young: society has control over it and can easily mould it to suit its needs. Once the technology has matured, however, it is society that must adjust to accommodate it. Current technology has greatly improved the education sector. According to Thomas Edison State College, “technology has improved the ability to do research and elevates our knowledge on contemporary problems and extends our ability to address those issues with scope and depth”. Educational research has become easier and more enjoyable with new technology, which makes it faster to acquire the required materials. However, technology cannot work independently; it requires knowledge of cultural values to complement it (Murphie & Potts 19).

In relation to cultural values and history, technology was present even in ancient times, when hunting tools, sculptures and jewelry were shaped and decorated by carving or painting.

In leadership, technology has proved its importance on numerous occasions, as in the case of the Second World War. Nazi Germany achieved technological innovations such as new rockets and jet planes, although these did not change the outcome of the war. The outcome would have been different if the innovations had come earlier and determined the momentum (Feenberg 2003). Technology has also improved standards of communication, accountability and accessibility. Leaders can be reached easily through the Internet, telephones and fax machines. It is therefore imperative for leaders to gain knowledge and understanding of the vast range of technological advancements and of how they help in managerial and decision-making processes.

Technology and human interaction need to be advocated in institutions and professions because they have changed thinking patterns and modes of presentation. This accommodates the needs and preferences of individuals in society, including people who are disabled or physically challenged. Technology’s influence on humanity has affected all sectors of marketing (private, public, offline and online). This intersection has disrupted old business models, industrial theories and the systems of belief underpinning ancient knowledge and concepts (Croteau & Hoynes 305). This has promoted movement along comprehension curves and results in the creation of meaning and significance from the changes emerging in technology and human interaction.

Technology, on the other hand, can also have negative effects. One example is war: during the Cold War, the major technological powers, the Soviet Union and the United States, held destructive technology. Neither could achieve a clear victory, because using it would destroy everything and leave nothing to win. In other cases, executives of a firm use these media (Croteau & Hoynes 306) to confront subordinate staff members and to sexually harass female colleagues. This affects working conditions negatively and hence hampers production.

Civilization and technology work hand in hand. To achieve a given level of civilization, the technology has to be in place first. Some historians believe that the more advanced a civilization’s technology, the more influence it will have on neighboring cultures (Murphie & Potts 21). Technology has made life easier, as with the availability of ATMs, which let people access banking services faster and more conveniently. The changes currently seen in society are due to emerging technology. In ancient times, for example, communication took the form of signals (smoke and sounds, among others). Today people communicate through email, which negatively affects the social life of the human community, since people rarely meet.

In conclusion, technology has brought both positive and negative effects to the human community. Although it has some drawbacks, we cannot do without it because it has become a necessity. In today’s world, one cannot live without a mobile phone. Yet owning one also has drawbacks, such as radiation, which can affect human health after prolonged use. This has in turn prompted the use of other technology to reduce the radiation reaching the body, so that people can use mobile phones for longer. This shows that technology and humanity co-exist.

Works Cited

Croteau, D. & Hoynes, W. Media Society: Industries, Images and Audiences. Thousand Oaks: Pine Forge Press, 2003. Print.

Davies, F. Technology and Business Ethics Theory. Business Ethics: A European Review vol. 6, no. 2, pp. 76-80, 2002. Print.

Feenberg, A. (2003). What Is Philosophy of Technology? Retrieved 26 April, 2012 from <http://www.sfu.ca/~andrewf/books/What_is_Philosophy_of_Technology.pdf>

Murphie, A. and Potts, J. Culture and Technology. London: Palgrave, 2003. Print.

Our tools shape our selves

For Bernard Stiegler, a visionary philosopher of our digital age, technics is the defining feature of human experience.

by Bryan Norton

It has become almost impossible to separate the effects of digital technologies from our everyday experiences. Reality is parsed through glowing screens, unending data feeds, biometric feedback loops, digital prostheses and expanding networks that link our virtual selves to satellite arrays in geostationary orbit. Wristwatches interpret our physical condition by counting steps and heartbeats. Phones track how we spend our time online, map the geographic location of the places we visit and record our histories in digital archives. Social media platforms forge alliances and create new political possibilities. And vast wireless networks – connecting satellites, drones and ‘smart’ weapons – determine how the wars of our era are being waged. Our experiences of the world are soaked with digital technologies.

But for the French philosopher Bernard Stiegler, one of the earliest and foremost theorists of our digital age, understanding the world requires us to move beyond the standard view of technology. Stiegler believed that technology is not just about the effects of digital tools and the ways that they impact our lives. It is not just about how devices are created and wielded by powerful organisations, nation-states or individuals. Our relationship with technology is about something deeper and more fundamental. It is about technics.

According to Stiegler, technics – the making and use of technology, in the broadest sense – is what makes us human. Our unique way of existing in the world, as distinct from other species, is defined by the experiences and knowledge our tools make possible, whether that is a state-of-the-art brain-computer interface such as Neuralink, or a prehistoric flint axe used to clear a forest. But don’t be mistaken: ‘technics’ is not simply another word for ‘technology’. As Martin Heidegger wrote in his essay ‘The Question Concerning Technology’ (1954), which used the German term Technik instead of Technologie in the original title: the ‘essence of technology is by no means anything technological.’ This aligns with the history of the word: the etymology of ‘technics’ leads us back to something like the ancient Greek term for art – technē. The essence of technology, then, is not found in a device, such as the one you are using to read this essay. It is an open-ended creative process, a relationship with our tools and the world.

This is Stiegler’s legacy. Throughout his life, he took this idea of technics, first explored while he was imprisoned for armed robbery, further than anyone else. But his ideas have often been overlooked and misunderstood, even before he died in 2020. Today, they are more necessary than ever. How else can we learn to disentangle the effects of digital technologies from our everyday experiences? How else can we begin to grasp the history of our strange reality?

Stiegler’s path to becoming the pre-eminent philosopher of our digital age was anything but straightforward. He was born in Villebon-sur-Yvette, south of Paris, in 1952, during a period of affluence and rejuvenation in France that followed the devastation of the Second World War. By the time he was 16, Stiegler participated in the revolutionary wave of 1968 (he would later become a member of the Communist Party), when a radical uprising of students and workers forced the president Charles de Gaulle to seek temporary refuge across the border in West Germany. However, after a new election was called and the barricades were dismantled, Stiegler became disenchanted with traditional Marxism, as well as the political trends circulating in France at the time. The Left in France seemed helplessly torn between the postwar existentialism of Jean-Paul Sartre and the anti-humanism of Louis Althusser. While Sartre insisted on humans’ creative capacity to shape their own destiny, Althusser argued that the pervasiveness of ideology in capitalist society had left us helplessly entrenched in systems of power beyond our control. Neither of these options satisfied Stiegler because neither could account for the rapid rise of a new historical force: electronic technology. By the 1970s and ’80s, Stiegler sensed that this new technology was redefining our relationship to ourselves, to the world, and to each other. To account for these new conditions, he believed the history of philosophy would have to be rewritten from the ground up, from the perspective of technics. Neither existentialism nor Marxism nor any other school of philosophy had come close to acknowledging the fundamental link between human existence and the evolutionary history of tools.

In the decade after 1968, Stiegler opened a jazz club in Toulouse that was shut down by the police a few years later for illegal prostitution. Desperate to make ends meet, Stiegler turned to robbing banks to pay off his debts and feed his family. In 1978, he was arrested for armed robbery and sentenced to five years in prison. A high-school dropout who was never comfortable in institutional settings, Stiegler requested his own cell when he first arrived in prison, and went on a hunger strike until it was granted. After the warden finally acquiesced, Stiegler began taking note of how his relationship to the outside world was mediated through reading and writing. This would be a crucial realisation. Through books, paper and pencils, he was able to interface with people and places beyond the prison walls.

It was during his time behind bars that Stiegler began to study philosophy more intently, devouring any books he could get his hands on. In his philosophical memoir Acting Out (2009), Stiegler describes his time in prison as one of radical self-exploration and philosophical experimentation. He read classic works of Greek philosophy, studied English and memorised modern poetry, but the book that really drew his attention was Plato’s Phaedrus. In this dialogue between Socrates and Phaedrus, Plato outlines his concept of anamnesis, a theory of learning that states the acquisition of new knowledge is just a process of remembering what we once knew in a previous life. Caught in an endless cycle of death and rebirth, we forget what we know each time we are reborn. For Stiegler, this idea of learning as recollection would become less spiritual and more material: learning and memory are tied inextricably to technics. Through the tools we use – including books, writing, archives – we can store and preserve vast amounts of knowledge.

After an initial attempt at writing fiction in prison, Stiegler enrolled in a philosophy programme designed for inmates. While still serving his sentence, he finished a degree in philosophy and corresponded with prominent intellectuals such as the philosopher and translator Gérard Granel, who was a well-connected professor at the University of Toulouse-Le Mirail (later known as the University of Toulouse-Jean Jaurès). Granel introduced Stiegler to some of the most prominent figures in philosophy at the time, including Jean-François Lyotard and Jacques Derrida. Lyotard would oversee Stiegler’s master’s thesis after his eventual release; Derrida would supervise his doctoral dissertation, completed in 1993, which was reworked and published a year later as the first volume in his Technics and Time series. With the help of these philosophers and their novel ideas, Stiegler began to reshape his earlier political commitment to Marxist materialism, seeking to account for the ways that new technologies shape the world.

By the start of the 1970s, a growing number of philosophers and political theorists began calling into question the immediacy of our lived experience. The world around us was no longer seen by these thinkers as something that was simply given, as it had been for phenomenologists such as Immanuel Kant and Edmund Husserl. The world instead presented itself as a built environment composed of things such as roads, power plants and houses, all made possible by political institutions, cultural practices and social norms. And so, reality also appeared to be a construction, not a given.

One of the French philosophers who interrogated the immediacy of reality most closely was Louis Althusser. In his essay ‘Ideology and Ideological State Apparatuses’ published in 1970, years before Stiegler was taught by him, Althusser suggests that ideology is not something that an individual believes in, but something that goes far beyond the scale of a single person, or even a community. Just as we unthinkingly turn around when we hear our name shouted from behind, ideology has a hold on us that is both automatic and unconscious – it seeps in from outside. Michel Foucault, a former student of Althusser at the École Normale Supérieure in Paris, developed a theory of power that functions in a similar way. In Discipline and Punish (1975) and elsewhere, Foucault argues that social and political power is not concentrated in individuals but is produced by ‘discourses, institutions, architectural forms, regulatory decisions, laws, administrative measures, scientific statements, philosophical, moral and philanthropic propositions’. Foucault’s insight was to show how power shapes every facet of the world, from classroom interactions between a teacher and student to negotiations of a trade agreement between representatives of two different nations. From this perspective, power is constituted in and through material practices, rather than something possessed by individual subjects.

These are the foundations on which Stiegler assembled his idea of technics. Though he appreciated the ways that Foucault and Althusser had tried to account for technology, he remained dissatisfied by the lack of attention to particular types of technology – not to mention the fact that neither thinker had offered any real alternatives to the forms of power they described. In his book Taking Care of Youth and the Generations (2008), Stiegler explains that he was able to move beyond Foucault with the help of his mentor Derrida’s concept of the pharmakon. In his essay ‘Plato’s Pharmacy’ (1972), Derrida began developing the idea as he explored how our ability to write can create and undermine (‘cure’ and ‘poison’) an individual subject’s sense of identity. For Derrida, the act of writing – itself a kind of technology – has a Janus-faced relationship to individual memory. Though it allows us to store knowledge and experience across vast periods of time, writing disincentivises us from practising our own mental capacity for recollection. The written word short-circuits the immediate connection between lived experience and internal memory. It ‘cures’ our cognitive limits, but also ‘poisons’ our cognition by limiting our abilities.

In the late 20th century, Stiegler began applying this idea to new media technologies, such as television, which led to the development of a concept he called pharmacology – an idea that suggests we don’t simply ‘use’ our digital tools. Instead, they enter and pharmacologically change us, like medicinal drugs. Today, we can take this analogy even further. The internet presents us with a massive archive of formatted, readily accessible information. Sites such as Wikipedia contain terabytes of knowledge, accumulated and passed down over millennia. At the same time, this exchange of unprecedented amounts of information enables the dissemination of an unprecedented amount of misinformation, conspiracy theories, and other harmful content. The digital is both a poison and a cure, as Derrida would say.

This kind of polyvalence led Stiegler to think more deliberately about technics rather than technology. For Stiegler, there are inherent risks in thinking in terms of the latter: the more ubiquitous that digital technologies become in our lives, the easier it is to forget that these tools are social products that have been constructed by our fellow humans. How we consume music, the paths we take to get from point A to point B, how we share ourselves with others, all of these aspects of daily life have been reshaped by new technologies and the humans that produce them. Yet we rarely stop to reflect on what this means for us. Stiegler believed this act of forgetting creates a deep crisis for all facets of human experience. By forgetting, we lose our all-important capacity to imagine alternative ways of living. The future appears limited, even predetermined, by new technology.

In the English-speaking world, Stiegler is best known for his first book Technics and Time, 1: The Fault of Epimetheus (1994). In the first sentence, he highlights the vital link between our understanding of the technologies we use and our capacity to imagine the future. ‘The object of this work is technics,’ he writes, ‘apprehended as the horizon of all possibility to come and of all possibility of a future.’ He views our relationship with tools as the determining force for all future possibilities; technics is the defining feature of human experience, one that has been overlooked by philosophers from Plato and Aristotle down to the present. While René Descartes, Husserl and other thinkers asked important questions about consciousness and lived experience (phenomenology), and the nature of truth (metaphysics) or knowledge (epistemology), they failed to account for the ways that technologies help us find – or guide us toward – answers to these questions. In the history of philosophy, ‘Technics is the unthought,’ according to Stiegler.

To further stress the importance of technics, Stiegler turns to the creation myth told by the Greek poet Hesiod in Works and Days, written around 700 BCE. During the world’s creation, Zeus asks the Titan Epimetheus to distribute individual talents to each species. Epimetheus gives wings to birds so they can fly, and fins to fish so they can swim. By the time he gets to humans, however, Epimetheus has no talents left over. Epimetheus, whose name (according to Stiegler) means the ‘forgetful one’ in Greek, turns to his brother Prometheus for help. Prometheus then steals fire from the gods, presenting it to humans in place of a biological talent. Humans, once more, are born out of an act of forgetting, just like in Plato’s theory of anamnesis. The difference with Hesiod’s story is that technics here provides a material basis for human experience. Bereft of any physiological talents, Homo sapiens must survive by using tools, beginning with fire.

The pharmacology of technics, for Stiegler, presents opportunities for positive or negative relationships with tools. ‘But where the danger lies,’ writes the poet Friedrich Hölderlin in a quote Stiegler often turned to, ‘also grows the saving power.’ While Derrida focuses on the ability of the written word to subvert the sovereignty of the individual subject, Stiegler widens this understanding of pharmacology to include a variety of media and technologies. Not just writing, but factories, server farms and even psychotropic drugs possess the pharmacological capacity to poison or cure our world and, crucially, our understanding of it. Technological development can destroy our sense of ourselves as rational, coherent subjects, leading to widespread suffering and destruction. But tools can also provide us with a new sense of what it means to be human, leading to new modes of expression and cultural practices.

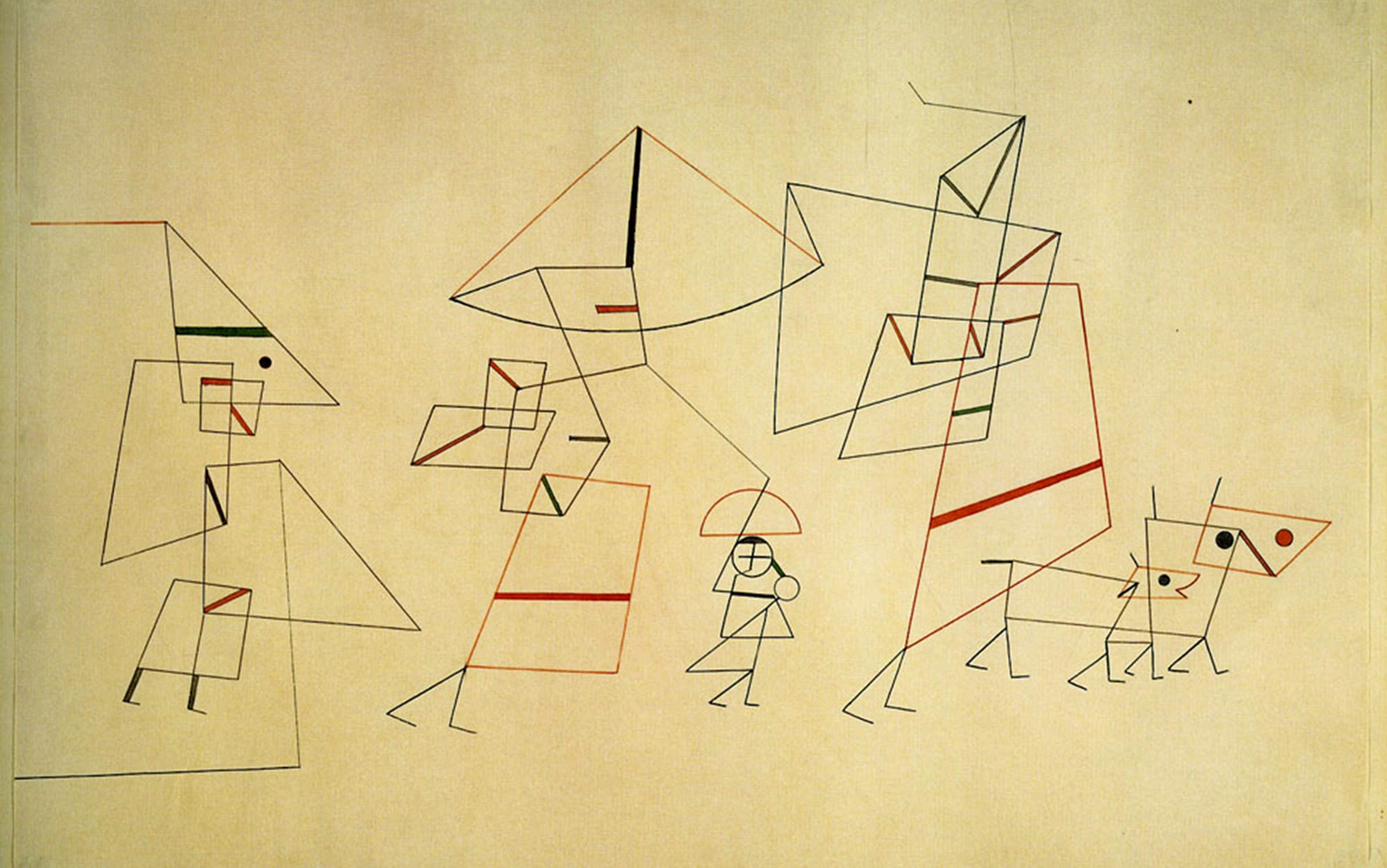

In Symbolic Misery, Volume 2: The Catastrophe of the Sensible (2015), Stiegler considers the effect that new technologies, especially those accompanying industrialisation, have had on art and music. Industry, defined by mass production and standardisation, is often regarded as antithetical to artistic freedom and expression. But Stiegler urges us to take a closer look at art history to see how artists responded to industrialisation. In response to the standardising effects of new machinery, for example, Marcel Duchamp and other members of the 20th-century avant-garde used industrial tools to invent novel forms of creative expression. In the painting Nude Descending a Staircase, No 2 (1912), Duchamp employed the new temporal perspectives made possible by photography and cinema to paint a radically different kind of portrait. Inspired by the camera’s ability to capture movement, frame by frame, Duchamp paints a nude model who appears in multiple instants at once, like a series of time-lapse photographs superimposed onto each other. The image became an immediate sensation, an icon of modernity and the resulting entanglement of art and industrial technology.

Technical innovations are never without political and social implications for Stiegler. The phonograph, for example, may have standardised classical musical performances after its invention in the late 1800s, but it also contributed to the development of jazz, a genre that was popular among musicians who were barred from accessing the elite world of classical music. Thanks to the gramophone, Black musicians such as the pianist and composer Duke Ellington were able to learn their instruments by ear, without first learning to read musical notation. The phonograph’s industrialisation of musical performance paradoxically led to the free-flowing improvisation of jazz performers.

Technics draws our attention to the world-making capabilities of our tools, while reminding us of the constructed nature of our technological reality. Stiegler’s capacious understanding of technics, encompassing everything from early agricultural tools to the television set, does not disregard new innovations, either. In 2006, Stiegler founded the Institute for Research and Innovation, an organisation at the Centre Pompidou in Paris devoted to exploring the impact digital technology has on contemporary society. Stiegler’s belief in the power of technology to shape the world around us has often led to the charge that he is a techno-determinist who believes the entire course of history is shaped by tools and machines. It’s true that Stiegler thinks technology defines who we are as humans, but this process does not always lock us into predetermined outcomes. Instead, it simultaneously provides us with a material horizon of possible experience. Stiegler’s theory of technics urges us to rethink the history of philosophy, art and politics in order that we might better understand how our world has been shaped by technology. And by acquiring this historical consciousness, he hopes that we will ultimately design better tools, using technology to improve our world in meaningful ways.

This doesn’t mean Stiegler is a techno-optimist, either, who blindly sees digital technology as a panacea for our problems. One particular concern he expresses about digital technology is its capacity to standardise the world we inhabit. Big data, for Stiegler, threatens to limit our sense of what is possible, rather than broadening our horizons and opening new opportunities for creative expression. Just as Hollywood films in the 20th century manufactured and distributed the ideology of consumer capitalism to the rest of the globe, Stiegler suggests that tech firms such as Google and Apple often disseminate values that are hidden from view. A potent example of this can be found in the first fully AI-judged beauty pageant. As discussed by the sociologist Ruha Benjamin in her book Race After Technology (2019), the developers of Beauty.AI advertised the contest as an opportunity for beauty to be judged in a way that was free of prejudice. What they found, however, was that the tool they had designed exhibited an overwhelming preference for white contestants.

In Automatic Society, Volume 1: The Future of Work (2016), Stiegler shows how big data can standardise our world by reorganising work and employment. Digital tools were first seen as a disruptive force that could break the monotonous rhythms of large industry, but the rise of flexible forms of employment in the gig economy has created a massive underclass. A new proletariat of Uber drivers and other precarious workers now labour under extremely unstable conditions. They are denied even the traditional protections of working-class employment. The digital economy doesn’t always offer desirable alternatives as former ways of working and living are destroyed.

A particularly pressing concern Stiegler took up before his untimely death in 2020 is the capacity of digital tools to surveil us. The rise of big tech firms such as Google and Amazon has meant the intrusion of surveillance tools into every aspect of our lives. Smart homes have round-the-clock video feeds, and marketing companies spend billions collecting data about everything we do online. In his last two books published in English, The Neganthropocene (2018) and The Age of Disruption: Technology and Madness in Computational Capitalism (2019), Stiegler suggests that the growth of widespread surveillance tools is at odds with the pharmacological promise of new technology. Though tracking tools can be useful by, for example, limiting the spread of harmful diseases, they are also used to deny us worlds of possible experience.

Technology, for better or worse, affects every aspect of our lives. Our very sense of who we are is shaped and reshaped by the tools we have at our disposal. The problem, for Stiegler, is that when we pay too much attention to our tools, rather than how they are developed and deployed, we fail to understand our reality. We become trapped, merely describing the technological world on its own terms and making it even harder to untangle the effects of digital technologies and our everyday experiences. By encouraging us to pay closer attention to this world-making capacity, with its potential to harm and heal, Stiegler is showing us what else is possible. There are other ways of living, of being, of evolving. It is technics, not technology, that will give the future its new face.

Economic history

The southern gap

In the American South, an oligarchy of planters enriched itself through slavery. Pervasive underdevelopment is their legacy

Keri Leigh Merritt

Family life

A patchwork family

After my marriage failed, I strove to create a new family – one made beautiful by the loving way it’s stitched together

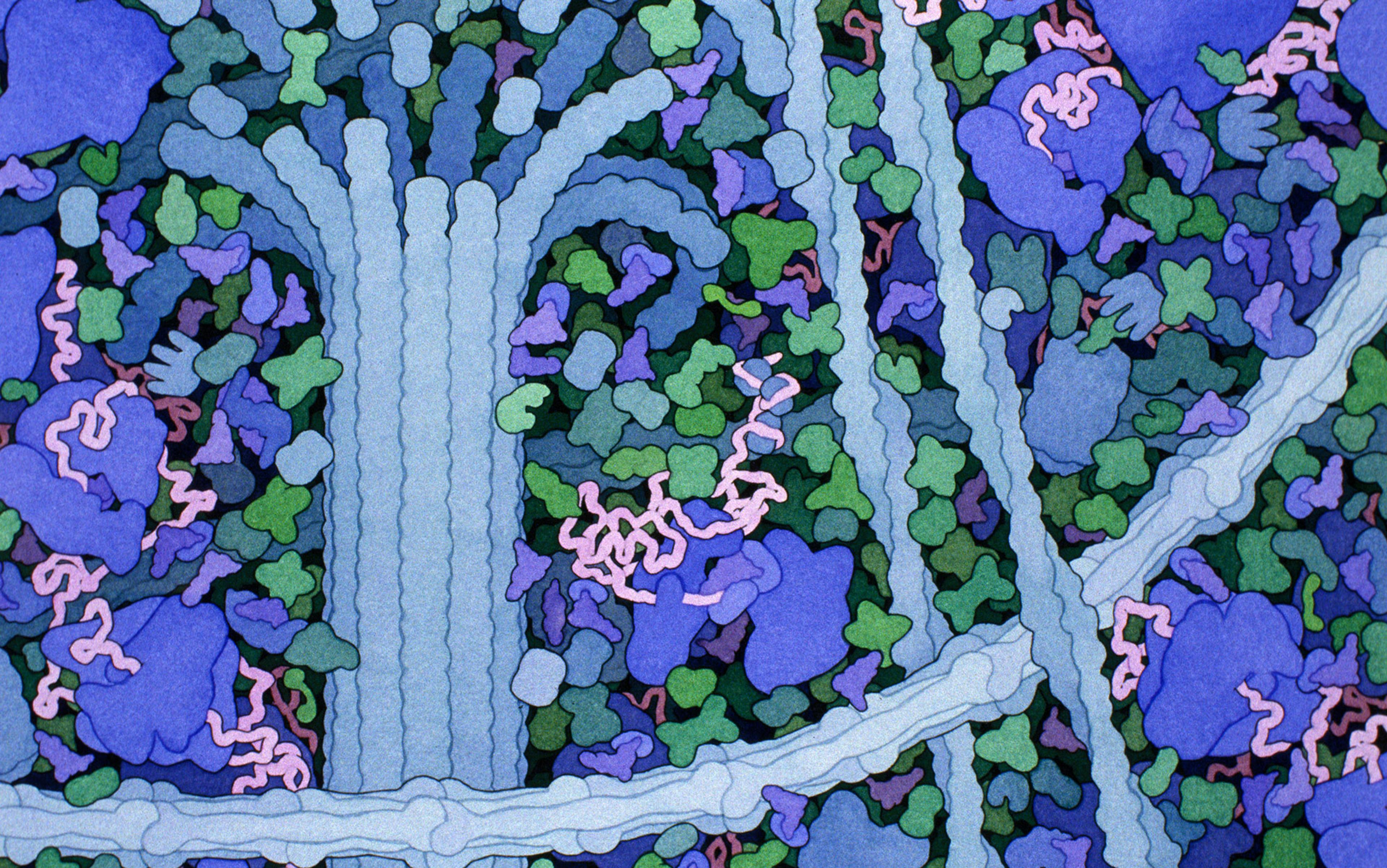

The cell is not a factory

Scientific narratives project social hierarchies onto nature. That’s why we need better metaphors to describe cellular life

Charudatta Navare

Stories and literature

Terrifying vistas of reality

H P Lovecraft, the master of cosmic horror stories, was a philosopher who believed in the total insignificance of humanity

Sam Woodward

The dangers of AI farming

AI could lead to new ways for people to abuse animals for financial gain. That’s why we need strong ethical guidelines

Virginie Simoneau-Gilbert & Jonathan Birch

Thinkers and theories

A man beyond categories

Paul Tillich was a religious socialist and a profoundly subtle theologian who placed doubt at the centre of his thought

Feb 13, 2023

200-500 Word Example Essays about Technology

Got an essay assignment about technology? Check out these examples to inspire you.

Technology is a rapidly evolving field that has completely changed the way we live, work, and interact with one another. Technology has profoundly impacted our daily lives, from how we communicate with friends and family to how we access information and complete tasks. As a result, it's no surprise that technology is a popular topic for students writing essays.

But writing a technology essay can be challenging, especially for those short on time or struggling with writer's block. This is where Jenni.ai comes in. Jenni.ai is an AI tool designed specifically for students who need help writing essays. With Jenni.ai, students can quickly and easily generate essays on various topics, including technology.

This blog post aims to provide readers with various example essays on technology, all generated by Jenni.ai. These essays will be a valuable resource for students looking for inspiration or guidance as they work on their essays. By reading through these example essays, students can better understand how technology can be approached and discussed in an essay.

Moreover, by signing up for a free trial with Jenni.ai, students can take advantage of this innovative tool and receive even more support as they work on their essays. Jenni.ai is designed to help students write essays faster and more efficiently, so they can focus on what truly matters – learning and growing as a student. Whether you're a student who is struggling with writer's block or simply looking for a convenient way to generate essays on a wide range of topics, Jenni.ai is the perfect solution.

The Impact of Technology on Society and Culture

Introduction:

Technology has become an integral part of our daily lives and has dramatically impacted how we interact, communicate, and carry out various activities. Technological advancements have brought positive and negative changes to society and culture. In this article, we will explore the impact of technology on society and culture and how it has influenced different aspects of our lives.

Positive impact on communication:

Technology has dramatically improved communication and made it easier for people to connect from anywhere in the world. Social media platforms, instant messaging, and video conferencing have brought people closer, bridging geographical distances and cultural differences. This has made it easier for people to share information, exchange ideas, and collaborate on projects.

Positive impact on education:

Technology has given students and instructors access to a wealth of knowledge and resources. Students can now study at their own pace and from any location thanks to online learning platforms, educational applications, and digital textbooks.

Negative impact on critical thinking and creativity:

Technological advancements have resulted in a reduction in critical thinking and creativity. With so much information at our fingertips, individuals have become more passive in their learning, relying on the internet for solutions rather than logic and inventiveness. As a result, independent thinking and problem-solving abilities have declined.

Positive impact on entertainment:

Technology has transformed how we access and consume entertainment. People may now access a wide range of entertainment alternatives from the comfort of their own homes thanks to streaming services, gaming platforms, and online content makers. The entertainment business has entered a new age of creativity and invention as a result of this.

Negative impact on attention span:

However, the continual bombardment of information and technological stimulation has also reduced attention spans and the capacity to focus. People are easily distracted and struggle to concentrate on a single activity for a long time. This has hampered productivity and the ability to complete tasks.

The Ethics of Artificial Intelligence And Machine Learning

The development of artificial intelligence (AI) and machine learning (ML) technologies has been one of the most significant technological developments of the past several decades. These cutting-edge technologies have the potential to alter several sectors of society, including commerce, industry, healthcare, and entertainment.

As with any new and quickly advancing technology, the ethics of AI and ML must be carefully studied. The use of these technologies raises significant concerns around privacy, accountability, and control. As AI and ML become more ubiquitous, we must assess their possible influence on society and investigate the ethical issues that must be taken into account as these technologies continue to develop.

What are Artificial Intelligence and Machine Learning?

Artificial Intelligence is the simulation of human intelligence in machines designed to think and act like humans. Machine learning is a subfield of AI that enables computers to learn from data and improve their performance over time without being explicitly programmed.

The impact of AI and ML on Society

The use of AI and ML in various industries, such as healthcare, finance, and retail, has brought many benefits. For example, AI-powered medical diagnosis systems can identify diseases faster and more accurately than human doctors. However, there are also concerns about job displacement and the potential for AI to perpetuate societal biases.

The Ethical Considerations of AI and ML

A. Bias in AI algorithms

One of the critical ethical concerns about AI and ML is the potential for algorithms to perpetuate existing biases. This can occur if the data used to train these algorithms reflects the biases of the people who created it. As a result, AI systems can reproduce these biases and discriminate against certain groups of people.

B. Responsibility for AI-generated decisions

Another ethical concern is the responsibility for decisions made by AI systems. For example, who is responsible for the damage if a self-driving car causes an accident? The manufacturer of the vehicle, the software developer, or the AI algorithm itself?

C. The potential for misuse of AI and ML

AI and ML can also be used for malicious purposes, such as cyberattacks and misinformation. The lack of regulation and oversight in developing and using these technologies makes it difficult to prevent misuse.

The developments in AI and ML have given numerous benefits to humanity, but they also present significant ethical concerns that must be addressed. We must assess the repercussions of new technologies on society, implement methods to limit the associated dangers, and guarantee that they are utilized for the greater good. As AI and ML continue to play an ever-increasing role in our daily lives, we must engage in an open and frank discussion regarding their ethics.

The Future of Work And Automation

Rapid technological breakthroughs in recent years have brought about considerable changes in our way of life and work. Concerns regarding the influence of artificial intelligence and machine learning on the future of work and employment have increased alongside the development of these technologies. This article will examine the possible advantages and disadvantages of automation and its influence on the labor market, employees, and the economy.

The Advantages of Automation

Automation in the workplace offers various benefits, including higher efficiency and productivity, fewer mistakes, and enhanced precision. Automated processes can accomplish repetitive jobs quickly and precisely, allowing employees to concentrate on more complex and creative activities. Additionally, automation may save organizations money since it reduces labor costs and minimizes the danger of workplace accidents.

The Potential Disadvantages of Automation

However, automation has significant disadvantages, including job loss and income stagnation. As robots and computers replace human labor in particular industries, there is a danger that many workers may lose their jobs, resulting in higher unemployment and greater economic inequality. Moreover, if automation is not adequately regulated and managed, it might lead to stagnant wages and a deterioration in employees' standard of living.

The Future of Work and Automation

Despite these difficulties, automation will likely influence how labor is done. As a result, firms, employees, and governments must take early measures to solve possible issues and reap the rewards of automation. This might entail funding worker retraining programs, enhancing education and skill development, and implementing regulations that support equality and justice at work.

The Need for Ethical Considerations

We must consider the ethical ramifications of automation and its effects on society as technology develops. The impact on employees and their rights, possible hazards to privacy and security, and the duty of corporations and governments to ensure that automation is utilized responsibly and ethically are all factors to be taken into account.

Conclusion:

To summarise, the future of employment and automation will most certainly be defined by a complex interaction of technological advances, economic trends, and cultural ideals. All stakeholders must work together to handle the problems and possibilities presented by automation and ensure that technology is employed to benefit society as a whole.

The Role of Technology in Education

Introduction:

Nearly every part of our lives has been transformed by technology, and education is no different. Today's students have greater access to knowledge, opportunities, and resources than ever before, and technology is becoming a more significant part of their educational experience. Technology is transforming how we think about education and creating new opportunities for learners of all ages, from online courses and virtual classrooms to instructional applications and augmented reality.

Technology's Benefits for Education

The capacity to tailor learning is one of technology's most significant benefits in education. Students may customize their education to meet their unique needs and interests since they can access online information and tools.

For instance, people can enroll in online classes on topics they are interested in, get tailored feedback on their work, and engage in virtual discussions with peers and subject matter experts worldwide. As a result, pupils are better able to acquire and develop the abilities and information necessary for success.

Challenges and Concerns

Despite the numerous advantages of technology in education, there are also obstacles and concerns to consider. One issue is the growing reliance on technology and the possibility that pupils may become overly dependent on it. This might result in a lack of critical thinking and problem-solving abilities, as students may become passive learners who only follow instructions and rely on technology to complete their assignments.

Another obstacle is the digital divide between those who have access to technology and those who do not. This division can exacerbate the achievement gap between pupils and create unequal opportunities for educational and professional growth. To reduce these consequences, all students must have access to the technology and resources necessary for success.

In conclusion, technology is rapidly becoming an integral part of the classroom experience and has the potential to alter the way we learn radically.

Technology can help students flourish and realize their full potential by giving them access to individualized instruction, tools, and opportunities. While the benefits of technology in the classroom are undeniable, it's crucial to be mindful of the risks and take precautions to guarantee that all kids have access to the tools they need to thrive.

The Influence of Technology On Personal Relationships And Communication

Technological advancements have profoundly altered how individuals connect and exchange information. It has changed the world in many ways in only a few decades. Because of the rise of the internet and various social media sites, maintaining relationships with people from all walks of life is now simpler than ever.

However, concerns about how these developments may affect interpersonal connections and dialogue are inevitable in an era of rapid technological growth. In this piece, we'll discuss how the prevalence of digital media has altered our interpersonal connections and the language we use to express ourselves.

Effect on Face-to-Face Interaction:

The disruption of face-to-face communication is a particularly stark example of how technology has impacted human connections. The quality of interpersonal connections has suffered due to people's growing preference for digital over in-person communication. Digital communication tends to strip out nonverbal cues such as facial expressions, tone of voice, and other indicators of emotional investment in the connection.

Positive Impact on Long-Distance Relationships:

Yet there are positives to be found as well. Long-distance relationships have also benefited from technological advancements. The development of technologies such as video conferencing, instant messaging, and social media has made it possible for individuals to keep in touch with distant loved ones. It has become simpler for individuals to stay in touch and feel connected despite geographical distance.

The Effects of Social Media on Personal Connections:

The widespread use of social media has had far-reaching consequences, especially on the quality of interpersonal interactions. Social media has both positive and harmful effects on relationships. On the positive side, it allows people to keep in touch and share life's milestones.

Unfortunately, social media has also made it all too easy to compare oneself to others, which may lead to feelings of jealousy and a general decline in confidence. Furthermore, social media might cause people to have inflated expectations of themselves and their relationships.

A Personal Perspective on the Intersection of Technology and Romance

Technological advancements have also altered physical touch and closeness. Virtual reality and other technologies have allowed people to feel physical contact and familiarity in a digital setting. This might be a promising breakthrough, but it has some potential downsides.

Experts are concerned that people's growing dependence on technology for intimacy may lead to less time spent communicating face-to-face and less emphasis on physical contact, both of which are important for maintaining good relationships.

In conclusion, technological advancements have significantly affected the quality of interpersonal connections and the exchange of information. Even though technology has made it simpler to maintain personal relationships, it has also made in-person interactions less warm.

Keeping tabs on how technology is changing our lives and making adjustments as necessary is essential as we move forward. Setting boundaries and prioritizing in-person conversation and physical touch in close relationships may help reduce the harm technology causes.

The Security and Privacy Implications of Increased Technology Use and Data Collection

Technology has developed rapidly over the past few decades and has made its way into every aspect of our lives. It has improved many facets of our lives, from communication to commerce. However, significant privacy and security problems have emerged due to the broad adoption of technology. In this essay, we'll look at how the widespread use of technological solutions and the subsequent explosion in collected data affect our right to privacy and security.

Data Mining and Privacy Concerns

Risk of Cyber Attacks and Data Loss

The Widespread Use of Encryption and Other Safety Mechanisms

The Privacy and Security of the Future in a Globalized Information Age

Obtaining and Using Individual Information

The acquisition and use of private information is a significant cause for privacy alarm in the digital age. Data about their customers' online habits, interests, and personal information is a valuable commodity for many internet firms. Besides tailored advertising, this information may be used for other, less desirable things like identity theft or cyberattacks.

Moreover, because of the lack of transparency around the gathering of personal information, many individuals are unaware of what data is being collected from them or how it is being used. Privacy and data security have become increasingly contentious as a result.

Data breaches and other forms of cyber-attack pose a severe risk.

The risk of cyberattacks and data breaches is another major concern. More people are using more devices, which means more opportunities for cybercriminals to steal private information like credit card numbers and other identifying data. This may cause monetary damages and harm one's reputation or identity.

Many high-profile data breaches have occurred in recent years, exposing the personal information of millions of individuals and raising serious concerns about the safety of this information. Companies and governments have responded to this problem by adopting new security methods like encryption and multi-factor authentication.

Many businesses now use encryption and other security measures to protect themselves from cybercriminals and data thieves. Encryption keeps sensitive information hidden by encoding it so that only those possessing the corresponding key can decipher it. This prevents private information like bank account numbers or social security numbers from falling into the wrong hands.
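To make the idea of key-based encryption concrete, here is a minimal sketch in Python. It is only a toy illustration, assuming a one-time-pad style XOR cipher; real systems typically rely on vetted algorithms such as AES through an audited library. The names `xor_bytes`, `message`, and `key` are hypothetical and chosen for this example.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the matching byte of key (toy cipher)."""
    if len(key) != len(data):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


# Hypothetical sensitive value, used purely for illustration.
message = b"Account 12345678"

# A random key as long as the message; it should be used only once.
key = secrets.token_bytes(len(message))

# Encrypting and decrypting are the same XOR operation with the same key.
ciphertext = xor_bytes(message, key)
recovered = xor_bytes(ciphertext, key)

# A key that differs in every byte does not recover the original message.
wrong_key = bytes(k ^ 1 for k in key)
garbled = xor_bytes(ciphertext, wrong_key)

print(recovered == message)  # the correct key restores the message
print(garbled == message)    # the wrong key does not
```

The point of the sketch is the asymmetry the paragraph describes: whoever holds the key can reverse the encoding, while anyone without it sees only scrambled bytes.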

Firewalls, virus scanners, and two-factor authentication are all additional security precautions that may be used with encryption. While these safeguards do much to stave off cyberattacks, they are not entirely impregnable, and data breaches are still possible.

The Future of Privacy and Security in a Technologically Advanced World

There's little doubt that concerns about privacy and security will persist even as technology improves. There must be strict safeguards to secure people's private information as more and more of it is transferred and kept digitally. To achieve this goal, it may be necessary to implement novel technologies and heightened levels of protection and to revise the rules and regulations governing the collection and storage of private information.

Individuals and businesses are understandably concerned about the security and privacy consequences of widespread technological use and data collection. There are numerous obstacles to overcome in a society where technology plays an increasingly important role, from the acquisition and use of personal data to the risk of cyberattacks and data breaches. Companies and governments must keep investing in security measures and working to educate people about the significance of privacy and security if personal data is to remain safe.

In conclusion, technology has profoundly impacted virtually every aspect of our lives, including society and culture, ethics, work, education, personal relationships, and security and privacy. The rise of artificial intelligence and machine learning has presented new ethical considerations, while automation is transforming the future of work.

In education, technology has revolutionized the way we learn and access information. At the same time, our dependence on technology has brought new challenges in terms of personal relationships, communication, security, and privacy.

Jenni.ai is an AI tool that can help students write essays easily and quickly. Whether you're looking for example essays on any of these topics or seeking assistance in writing your own essay, Jenni.ai offers a convenient solution. Sign up for a free trial today and experience the benefits of AI-powered writing assistance for yourself.

Promises and Pitfalls of Technology

Josephine Wolff; How Is Technology Changing the World, and How Should the World Change Technology?. Global Perspectives 1 February 2021; 2 (1): 27353. doi: https://doi.org/10.1525/gp.2021.27353

Technologies are becoming increasingly complicated and increasingly interconnected. Cars, airplanes, medical devices, financial transactions, and electricity systems all rely on more computer software than they ever have before, making them seem both harder to understand and, in some cases, harder to control. Government and corporate surveillance of individuals and information processing relies largely on digital technologies and artificial intelligence, and therefore involves less human-to-human contact than ever before and more opportunities for biases to be embedded and codified in our technological systems in ways we may not even be able to identify or recognize. Bioengineering advances are opening up new terrain for challenging philosophical, political, and economic questions regarding human-natural relations. Additionally, the management of these large and small devices and systems is increasingly done through the cloud, so that control over them is both very remote and removed from direct human or social control. The study of how to make technologies like artificial intelligence or the Internet of Things “explainable” has become its own area of research because it is so difficult to understand how they work or what is at fault when something goes wrong (Gunning and Aha 2019).

This growing complexity makes it more difficult than ever—and more imperative than ever—for scholars to probe how technological advancements are altering life around the world in both positive and negative ways and what social, political, and legal tools are needed to help shape the development and design of technology in beneficial directions. This can seem like an impossible task in light of the rapid pace of technological change and the sense that its continued advancement is inevitable, but many countries around the world are only just beginning to take significant steps toward regulating computer technologies and are still in the process of radically rethinking the rules governing global data flows and exchange of technology across borders.

These are exciting times not just for technological development but also for technology policy: our technologies may be more advanced and complicated than ever but so, too, are our understandings of how they can best be leveraged, protected, and even constrained. The structures of technological systems are determined largely by government and institutional policies, and those structures have tremendous implications for social organization and agency, ranging from open source, open systems that are highly distributed and decentralized, to those that are tightly controlled and closed, structured according to stricter and more hierarchical models. And just as our understanding of the governance of technology is developing in new and interesting ways, so, too, is our understanding of the social, cultural, environmental, and political dimensions of emerging technologies. We are realizing both the challenges and the importance of mapping out the full range of ways that technology is changing our society, what we want those changes to look like, and what tools we have to try to influence and guide those shifts.

Technology can be a source of tremendous optimism. It can help overcome some of the greatest challenges our society faces, including climate change, famine, and disease. For those who believe in the power of innovation and the promise of creative destruction to advance economic development and lead to better quality of life, technology is a vital economic driver (Schumpeter 1942). But it can also be a tool of tremendous fear and oppression, embedding biases in automated decision-making processes and information-processing algorithms, exacerbating economic and social inequalities within and between countries to a staggering degree, or creating new weapons and avenues for attack unlike any we have had to face in the past. Scholars have even contended that the emergence of the term technology in the nineteenth and twentieth centuries marked a shift from viewing individual pieces of machinery as a means to achieving political and social progress to the more dangerous, or hazardous, view that larger-scale, more complex technological systems were a semiautonomous form of progress in and of themselves (Marx 2010). More recently, technologists have sharply criticized what they view as a wave of new Luddites, people intent on slowing the development of technology and turning back the clock on innovation as a means of mitigating the societal impacts of technological change (Marlowe 1970).

At the heart of fights over new technologies and their resulting global changes are often two conflicting visions of technology: a fundamentally optimistic one that believes humans use it as a tool to achieve greater goals, and a fundamentally pessimistic one that holds that technological systems have reached a point beyond our control. Technology philosophers have argued that neither of these views is wholly accurate and that a purely optimistic or pessimistic view of technology is insufficient to capture the nuances and complexity of our relationship to technology (Oberdiek and Tiles 1995). Understanding technology and how we can make better decisions about designing, deploying, and refining it requires capturing that nuance and complexity through in-depth analysis of the impacts of different technological advancements and the ways they have played out in all their complicated and controversial messiness across the world.

These impacts are often unpredictable as technologies are adopted in new contexts and come to be used in ways that sometimes diverge significantly from the use cases envisioned by their designers. The internet, designed to help transmit information between computer networks, became a crucial vehicle for commerce, introducing unexpected avenues for crime and financial fraud. Social media platforms like Facebook and Twitter, designed to connect friends and families through sharing photographs and life updates, became focal points of election controversies and political influence. Cryptocurrencies, originally intended as a means of decentralized digital cash, have become a significant environmental hazard as more and more computing resources are devoted to mining these forms of virtual money. One of the crucial challenges in this area is therefore recognizing, documenting, and even anticipating some of these unexpected consequences and providing mechanisms to technologists for how to think through the impacts of their work, as well as possible other paths to different outcomes (Verbeek 2006). And just as technological innovations can cause unexpected harm, they can also bring about extraordinary benefits: new vaccines and medicines to address global pandemics and save thousands of lives, new sources of energy that can drastically reduce emissions and help combat climate change, new modes of education that can reach people who would otherwise have no access to schooling. Regulating technology therefore requires a careful balance of mitigating risks without overly restricting potentially beneficial innovations.

Nations around the world have taken very different approaches to governing emerging technologies and have adopted a range of different technologies themselves in pursuit of more modern governance structures and processes (Braman 2009). In Europe, the precautionary principle has guided much more anticipatory regulation aimed at addressing the risks presented by technologies even before they are fully realized. For instance, the European Union’s General Data Protection Regulation focuses on the responsibilities of data controllers and processors to provide individuals with access to their data and information about how that data is being used not just as a means of addressing existing security and privacy threats, such as data breaches, but also to protect against future developments and uses of that data for artificial intelligence and automated decision-making purposes. In Germany, Technische Überwachungsvereine, or TÜVs, perform regular tests and inspections of technological systems to assess and minimize risks over time, as the tech landscape evolves. In the United States, by contrast, there is much greater reliance on litigation and liability regimes to address safety and security failings after the fact. These different approaches reflect not just the different legal and regulatory mechanisms and philosophies of different nations but also the different ways those nations prioritize rapid development of the technology industry versus safety, security, and individual control. Typically, governance innovations move much more slowly than technological innovations, and regulations can lag years, or even decades, behind the technologies they aim to govern.

In addition to this varied set of national regulatory approaches, a variety of international and nongovernmental organizations also contribute to the process of developing standards, rules, and norms for new technologies, including the International Organization for Standardization and the International Telecommunication Union. These multilateral and NGO actors play an especially important role in trying to define appropriate boundaries for the use of new technologies by governments as instruments of control for the state.

At the same time that policymakers are under scrutiny both for their decisions about how to regulate technology and for their decisions about how and when to adopt technologies like facial recognition themselves, technology firms and designers have also come under increasing criticism. Growing recognition that the design of technologies can have far-reaching social and political implications means that there is more pressure on technologists to take into consideration the consequences of their decisions early on in the design process (Vincenti 1993; Winner 1980). The question of how technologists should incorporate these social dimensions into their design and development processes is an old one, and debate on these issues dates back to the 1970s, but it remains an urgent and often overlooked part of the puzzle because so many of the supposedly systematic mechanisms for assessing the impacts of new technologies in both the private and public sectors are primarily bureaucratic, symbolic processes rather than carrying any real weight or influence.

Technologists are often ill-equipped or unwilling to respond to the sorts of social problems that their creations have, often unwittingly, exacerbated, and instead point to governments and lawmakers to address those problems (Zuckerberg 2019). But governments often have few incentives to engage in this area: setting clear standards and rules for an ever-evolving technological landscape can be extremely challenging, enforcement of those rules can be a significant undertaking requiring considerable expertise, and the tech sector is a major source of jobs and revenue for many countries that may fear losing those benefits if they constrain companies too much. This indicates not just a need for clearer incentives and better policies for both private- and public-sector entities but also a need for new mechanisms whereby the technology development and design process can be influenced and assessed by people with a wider range of experiences and expertise. If we want technologies to be designed with an eye to their impacts, who is responsible for predicting, measuring, and mitigating those impacts throughout the design process? Involving policymakers in that process in a more meaningful way will also require training them to have the analytic and technical capacity to engage more fully with technologists and to understand the implications of their decisions.

At the same time that tech companies seem unwilling or unable to rein in their creations, many observers also fear that these companies wield too much power, in some cases all but replacing governments and international organizations in their ability to make decisions that affect millions of people worldwide and to control access to information, platforms, and audiences (Kilovaty 2020). Regulators around the world have begun considering whether some of these companies have become so powerful that they violate the tenets of antitrust laws, but it can be difficult for governments to identify exactly what those violations are, especially in the context of an industry where the largest players often provide their customers with free services. And the platforms and services developed by tech companies are often wielded most powerfully and dangerously not directly by their private-sector creators and operators but instead by states themselves for widespread misinformation campaigns that serve political purposes (Nye 2018).

Since the largest private entities in the tech sector operate in many countries, they are often better poised to implement global changes to the technological ecosystem than individual states or regulatory bodies, creating new challenges to existing governance structures and hierarchies. Just as it can be challenging to provide oversight for government use of technologies, so, too, oversight of the biggest tech companies, which have more resources, reach, and power than many nations, can prove to be a daunting task. The rise of network forms of organization and the growing gig economy have added to these challenges, making it even harder for regulators to fully address the breadth of these companies’ operations (Powell 1990). The private-public partnerships that have emerged around energy, transportation, medical, and cyber technologies further complicate this picture, blurring the line between the public and private sectors and raising important questions about the role of each in providing critical infrastructure, health care, and security. How can and should private tech companies operating in these different sectors be governed, and what types of influence do they exert over regulators? How feasible are different policy proposals aimed at technological innovation, and what potential unintended consequences might they have?

Conflict between countries has also spilled over significantly into the private sector in recent years, most notably in the case of tensions between the United States and China over which technologies developed in each country will be permitted by the other and which will be purchased by customers outside those two countries. Competition among countries to develop the best technology is not a new phenomenon, but the current conflicts have major international ramifications and will influence the infrastructure that is installed and used around the world for years to come. Untangling the different factors that feed into these tussles, as well as whom they benefit and whom they leave at a disadvantage, is crucial for understanding how governments can most effectively foster technological innovation and invention domestically, as well as the global consequences of those efforts. As much of the world is forced to choose between buying technology from the United States or from China, how should we understand the long-term impacts of those choices and the options available to people in countries without robust domestic tech industries? Does the global spread of technologies help fuel further innovation in countries with smaller tech markets, or does it reinforce the dominance of the states that are already most prominent in this sector? How can research universities maintain global collaborations and research communities in light of these national competitions, and what role does government research and development spending play in fostering innovation within its own borders and worldwide? How should intellectual property protections evolve to meet the demands of the technology industry, and how can those protections be enforced globally?

These conflicts between countries sometimes appear to challenge the feasibility of truly global technologies and networks that operate across all countries through standardized protocols and design features. Organizations like the International Organization for Standardization, the World Intellectual Property Organization, the United Nations Industrial Development Organization, and many others have tried for years to harmonize these policies and protocols across different countries but have met with limited success when it comes to resolving the issues of greatest tension and disagreement among nations. For technology to operate in a global environment, there is a need for a much greater degree of coordination among countries and the development of common standards and norms, but governments continue to struggle to agree not just on those norms themselves but even on the appropriate venue and processes for developing them. Without greater global cooperation, is it possible to maintain a global network like the internet or to promote the spread of new technologies around the world to address challenges of sustainability? What might help incentivize that cooperation moving forward, and what could new structures and processes for governance of global technologies look like? Why has the tech industry’s self-regulation culture persisted? Do the same traditional drivers for public policy, such as politics of harmonization and path dependency in policy-making, still sufficiently explain policy outcomes in this space? As new technologies and their applications spread across the globe in uneven ways, how and when do they create forces of change from unexpected places?

These are some of the questions that we hope to address in the Technology and Global Change section through articles that tackle new dimensions of the global landscape of designing, developing, deploying, and assessing new technologies to address major challenges the world faces. Understanding these processes requires synthesizing knowledge from a range of different fields, including sociology, political science, economics, and history, as well as technical fields such as engineering, climate science, and computer science. A crucial part of understanding how technology has created global change and, in turn, how global changes have influenced the development of new technologies is understanding the technologies themselves in all their richness and complexity: how they work, the limits of what they can do, what they were designed to do, and how they are actually used. Just as technologies themselves are becoming more complicated, so are their embeddings in and relationships to the larger social, political, and legal contexts in which they exist. Scholars across all disciplines are encouraged to join us in untangling those complexities.

Josephine Wolff is an associate professor of cybersecurity policy at the Fletcher School of Law and Diplomacy at Tufts University. Her book You’ll See This Message When It Is Too Late: The Legal and Economic Aftermath of Cybersecurity Breaches was published by MIT Press in 2018.


- Online ISSN 2575-7350

- Copyright © 2024 The Regents of the University of California. All Rights Reserved.



Technology, Human Relationships, and Human Interaction

by Angela N. Bullock, Alex D. Colvin

Last modified: 27 April 2017

DOI: 10.1093/obo/9780195389678-0249