Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, generate accurate citations for free.

- Knowledge Base

Methodology

- Random Assignment in Experiments | Introduction & Examples

Random Assignment in Experiments | Introduction & Examples

Published on March 8, 2021 by Pritha Bhandari . Revised on June 22, 2023.

In experimental research, random assignment is a way of placing participants from your sample into different treatment groups using randomization.

With simple random assignment, every member of the sample has a known or equal chance of being placed in a control group or an experimental group. Studies that use simple random assignment are also called completely randomized designs .

Random assignment is a key part of experimental design . It helps you ensure that all groups are comparable at the start of a study: any differences between them are due to random factors, not research biases like sampling bias or selection bias .

Table of contents

Why does random assignment matter, random sampling vs random assignment, how do you use random assignment, when is random assignment not used, other interesting articles, frequently asked questions about random assignment.

Random assignment is an important part of control in experimental research, because it helps strengthen the internal validity of an experiment and avoid biases.

In experiments, researchers manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. To do so, they often use different levels of an independent variable for different groups of participants.

This is called a between-groups or independent measures design.

You use three groups of participants that are each given a different level of the independent variable:

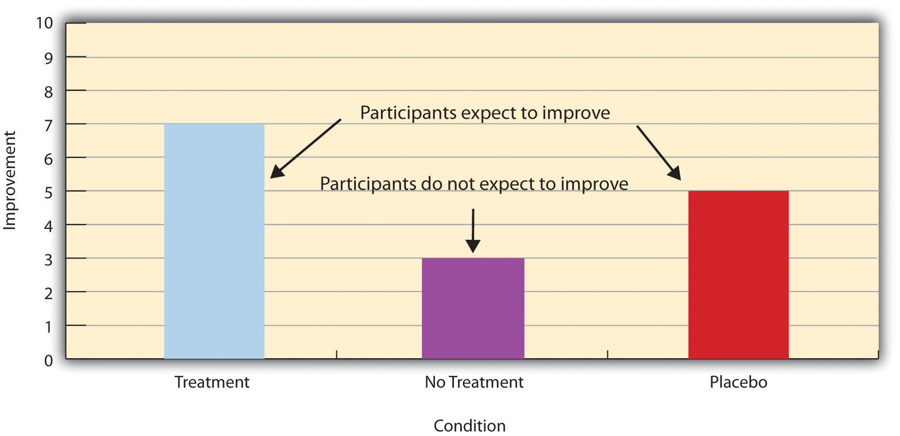

- a control group that’s given a placebo (no dosage, to control for a placebo effect ),

- an experimental group that’s given a low dosage,

- a second experimental group that’s given a high dosage.

Random assignment to helps you make sure that the treatment groups don’t differ in systematic ways at the start of the experiment, as this can seriously affect (and even invalidate) your work.

If you don’t use random assignment, you may not be able to rule out alternative explanations for your results.

- participants recruited from cafes are placed in the control group ,

- participants recruited from local community centers are placed in the low dosage experimental group,

- participants recruited from gyms are placed in the high dosage group.

With this type of assignment, it’s hard to tell whether the participant characteristics are the same across all groups at the start of the study. Gym-users may tend to engage in more healthy behaviors than people who frequent cafes or community centers, and this would introduce a healthy user bias in your study.

Although random assignment helps even out baseline differences between groups, it doesn’t always make them completely equivalent. There may still be extraneous variables that differ between groups, and there will always be some group differences that arise from chance.

Most of the time, the random variation between groups is low, and, therefore, it’s acceptable for further analysis. This is especially true when you have a large sample. In general, you should always use random assignment in experiments when it is ethically possible and makes sense for your study topic.

Here's why students love Scribbr's proofreading services

Discover proofreading & editing

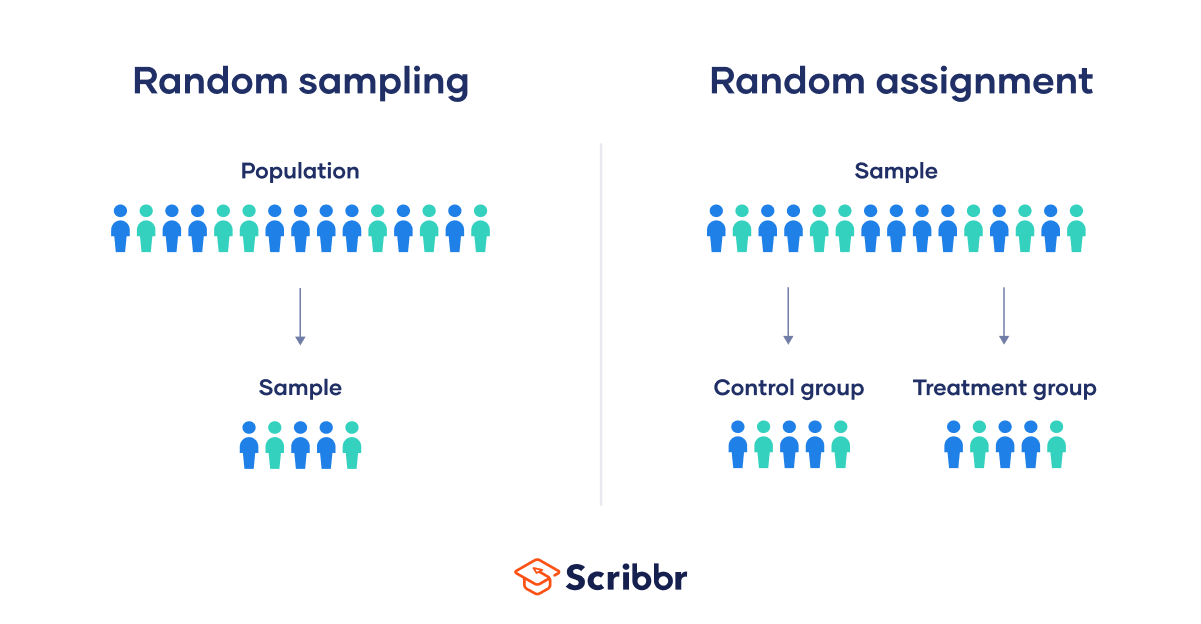

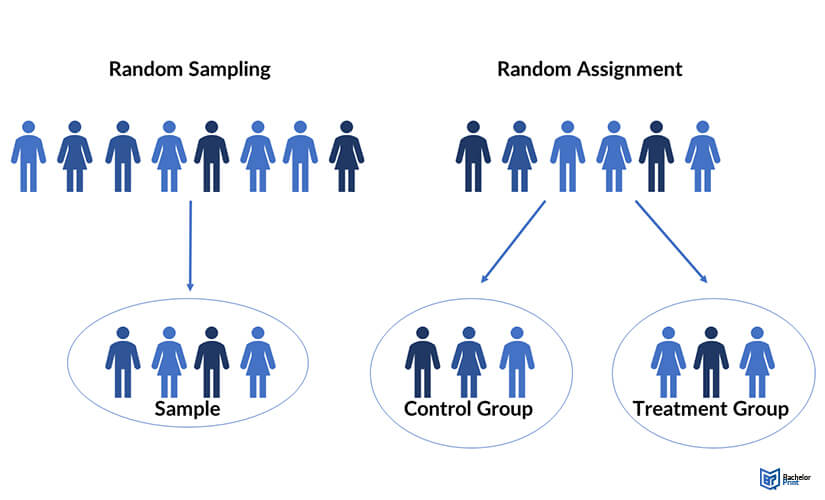

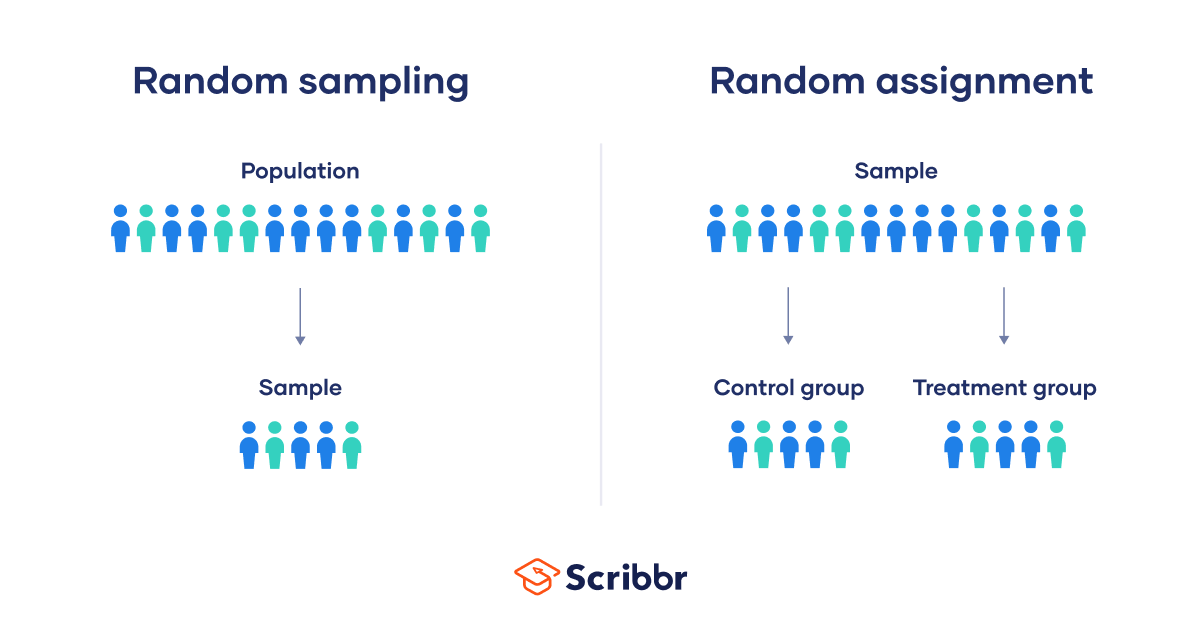

Random sampling and random assignment are both important concepts in research, but it’s important to understand the difference between them.

Random sampling (also called probability sampling or random selection) is a way of selecting members of a population to be included in your study. In contrast, random assignment is a way of sorting the sample participants into control and experimental groups.

While random sampling is used in many types of studies, random assignment is only used in between-subjects experimental designs.

Some studies use both random sampling and random assignment, while others use only one or the other.

Random sampling enhances the external validity or generalizability of your results, because it helps ensure that your sample is unbiased and representative of the whole population. This allows you to make stronger statistical inferences .

You use a simple random sample to collect data. Because you have access to the whole population (all employees), you can assign all 8000 employees a number and use a random number generator to select 300 employees. These 300 employees are your full sample.

Random assignment enhances the internal validity of the study, because it ensures that there are no systematic differences between the participants in each group. This helps you conclude that the outcomes can be attributed to the independent variable .

- a control group that receives no intervention.

- an experimental group that has a remote team-building intervention every week for a month.

You use random assignment to place participants into the control or experimental group. To do so, you take your list of participants and assign each participant a number. Again, you use a random number generator to place each participant in one of the two groups.

To use simple random assignment, you start by giving every member of the sample a unique number. Then, you can use computer programs or manual methods to randomly assign each participant to a group.

- Random number generator: Use a computer program to generate random numbers from the list for each group.

- Lottery method: Place all numbers individually in a hat or a bucket, and draw numbers at random for each group.

- Flip a coin: When you only have two groups, for each number on the list, flip a coin to decide if they’ll be in the control or the experimental group.

- Use a dice: When you have three groups, for each number on the list, roll a dice to decide which of the groups they will be in. For example, assume that rolling 1 or 2 lands them in a control group; 3 or 4 in an experimental group; and 5 or 6 in a second control or experimental group.

This type of random assignment is the most powerful method of placing participants in conditions, because each individual has an equal chance of being placed in any one of your treatment groups.

Random assignment in block designs

In more complicated experimental designs, random assignment is only used after participants are grouped into blocks based on some characteristic (e.g., test score or demographic variable). These groupings mean that you need a larger sample to achieve high statistical power .

For example, a randomized block design involves placing participants into blocks based on a shared characteristic (e.g., college students versus graduates), and then using random assignment within each block to assign participants to every treatment condition. This helps you assess whether the characteristic affects the outcomes of your treatment.

In an experimental matched design , you use blocking and then match up individual participants from each block based on specific characteristics. Within each matched pair or group, you randomly assign each participant to one of the conditions in the experiment and compare their outcomes.

Sometimes, it’s not relevant or ethical to use simple random assignment, so groups are assigned in a different way.

When comparing different groups

Sometimes, differences between participants are the main focus of a study, for example, when comparing men and women or people with and without health conditions. Participants are not randomly assigned to different groups, but instead assigned based on their characteristics.

In this type of study, the characteristic of interest (e.g., gender) is an independent variable, and the groups differ based on the different levels (e.g., men, women, etc.). All participants are tested the same way, and then their group-level outcomes are compared.

When it’s not ethically permissible

When studying unhealthy or dangerous behaviors, it’s not possible to use random assignment. For example, if you’re studying heavy drinkers and social drinkers, it’s unethical to randomly assign participants to one of the two groups and ask them to drink large amounts of alcohol for your experiment.

When you can’t assign participants to groups, you can also conduct a quasi-experimental study . In a quasi-experiment, you study the outcomes of pre-existing groups who receive treatments that you may not have any control over (e.g., heavy drinkers and social drinkers). These groups aren’t randomly assigned, but may be considered comparable when some other variables (e.g., age or socioeconomic status) are controlled for.

Prevent plagiarism. Run a free check.

If you want to know more about statistics , methodology , or research bias , make sure to check out some of our other articles with explanations and examples.

- Student’s t -distribution

- Normal distribution

- Null and Alternative Hypotheses

- Chi square tests

- Confidence interval

- Quartiles & Quantiles

- Cluster sampling

- Stratified sampling

- Data cleansing

- Reproducibility vs Replicability

- Peer review

- Prospective cohort study

Research bias

- Implicit bias

- Cognitive bias

- Placebo effect

- Hawthorne effect

- Hindsight bias

- Affect heuristic

- Social desirability bias

In experimental research, random assignment is a way of placing participants from your sample into different groups using randomization. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Random selection, or random sampling , is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalizability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

To implement random assignment , assign a unique number to every member of your study’s sample .

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a dice to randomly assign participants to groups.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the “Cite this Scribbr article” button to automatically add the citation to our free Citation Generator.

Bhandari, P. (2023, June 22). Random Assignment in Experiments | Introduction & Examples. Scribbr. Retrieved April 15, 2024, from https://www.scribbr.com/methodology/random-assignment/

Is this article helpful?

Pritha Bhandari

Other students also liked, guide to experimental design | overview, steps, & examples, confounding variables | definition, examples & controls, control groups and treatment groups | uses & examples, "i thought ai proofreading was useless but..".

I've been using Scribbr for years now and I know it's a service that won't disappoint. It does a good job spotting mistakes”

Random Assignment in Psychology: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Learn about our Editorial Process

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

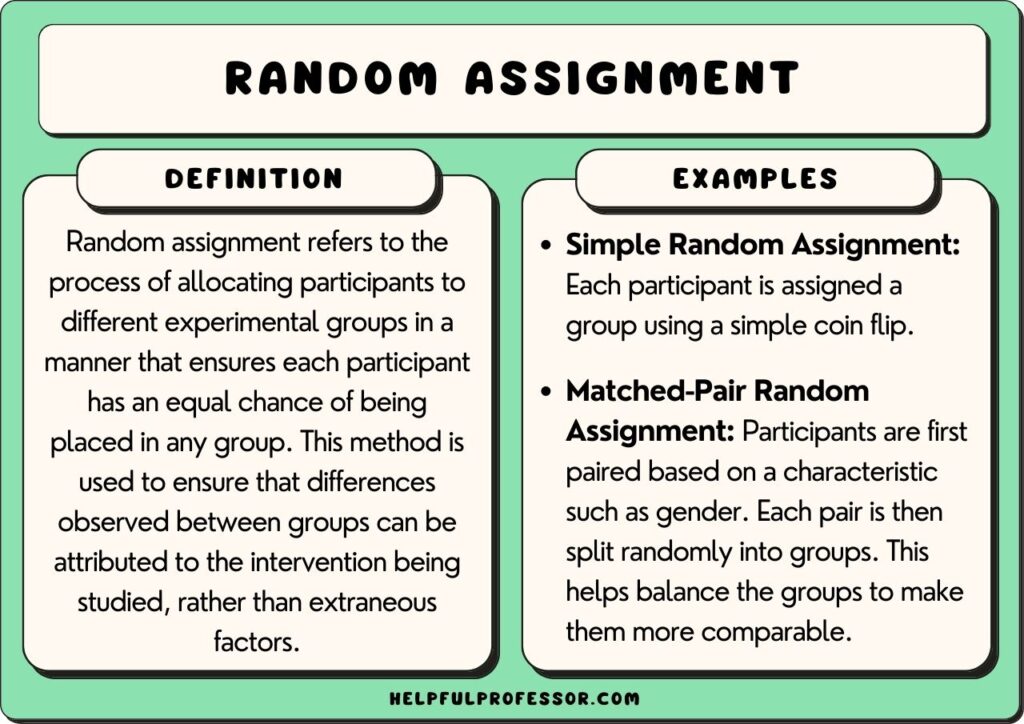

In psychology, random assignment refers to the practice of allocating participants to different experimental groups in a study in a completely unbiased way, ensuring each participant has an equal chance of being assigned to any group.

In experimental research, random assignment, or random placement, organizes participants from your sample into different groups using randomization.

Random assignment uses chance procedures to ensure that each participant has an equal opportunity of being assigned to either a control or experimental group.

The control group does not receive the treatment in question, whereas the experimental group does receive the treatment.

When using random assignment, neither the researcher nor the participant can choose the group to which the participant is assigned. This ensures that any differences between and within the groups are not systematic at the onset of the study.

In a study to test the success of a weight-loss program, investigators randomly assigned a pool of participants to one of two groups.

Group A participants participated in the weight-loss program for 10 weeks and took a class where they learned about the benefits of healthy eating and exercise.

Group B participants read a 200-page book that explains the benefits of weight loss. The investigator randomly assigned participants to one of the two groups.

The researchers found that those who participated in the program and took the class were more likely to lose weight than those in the other group that received only the book.

Importance

Random assignment ensures that each group in the experiment is identical before applying the independent variable.

In experiments , researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. Random assignment increases the likelihood that the treatment groups are the same at the onset of a study.

Thus, any changes that result from the independent variable can be assumed to be a result of the treatment of interest. This is particularly important for eliminating sources of bias and strengthening the internal validity of an experiment.

Random assignment is the best method for inferring a causal relationship between a treatment and an outcome.

Random Selection vs. Random Assignment

Random selection (also called probability sampling or random sampling) is a way of randomly selecting members of a population to be included in your study.

On the other hand, random assignment is a way of sorting the sample participants into control and treatment groups.

Random selection ensures that everyone in the population has an equal chance of being selected for the study. Once the pool of participants has been chosen, experimenters use random assignment to assign participants into groups.

Random assignment is only used in between-subjects experimental designs, while random selection can be used in a variety of study designs.

Random Assignment vs Random Sampling

Random sampling refers to selecting participants from a population so that each individual has an equal chance of being chosen. This method enhances the representativeness of the sample.

Random assignment, on the other hand, is used in experimental designs once participants are selected. It involves allocating these participants to different experimental groups or conditions randomly.

This helps ensure that any differences in results across groups are due to manipulating the independent variable, not preexisting differences among participants.

When to Use Random Assignment

Random assignment is used in experiments with a between-groups or independent measures design.

In these research designs, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables.

There is usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable at the onset of the study.

How to Use Random Assignment

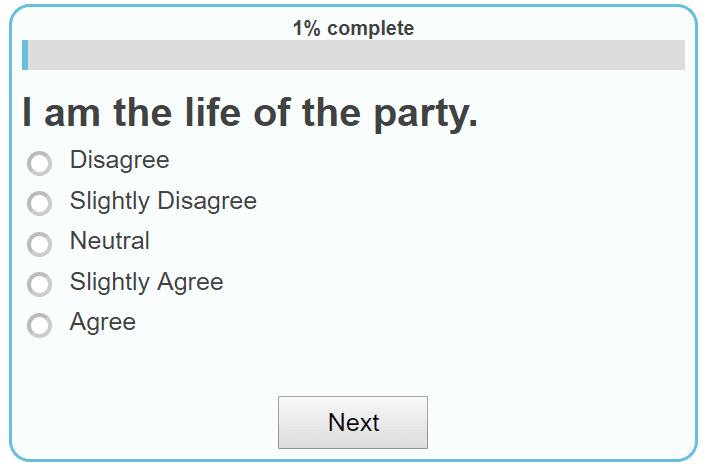

There are a variety of ways to assign participants into study groups randomly. Here are a handful of popular methods:

- Random Number Generator : Give each member of the sample a unique number; use a computer program to randomly generate a number from the list for each group.

- Lottery : Give each member of the sample a unique number. Place all numbers in a hat or bucket and draw numbers at random for each group.

- Flipping a Coin : Flip a coin for each participant to decide if they will be in the control group or experimental group (this method can only be used when you have just two groups)

- Roll a Die : For each number on the list, roll a dice to decide which of the groups they will be in. For example, assume that rolling 1, 2, or 3 places them in a control group and rolling 3, 4, 5 lands them in an experimental group.

When is Random Assignment not used?

- When it is not ethically permissible: Randomization is only ethical if the researcher has no evidence that one treatment is superior to the other or that one treatment might have harmful side effects.

- When answering non-causal questions : If the researcher is just interested in predicting the probability of an event, the causal relationship between the variables is not important and observational designs would be more suitable than random assignment.

- When studying the effect of variables that cannot be manipulated: Some risk factors cannot be manipulated and so it would not make any sense to study them in a randomized trial. For example, we cannot randomly assign participants into categories based on age, gender, or genetic factors.

Drawbacks of Random Assignment

While randomization assures an unbiased assignment of participants to groups, it does not guarantee the equality of these groups. There could still be extraneous variables that differ between groups or group differences that arise from chance. Additionally, there is still an element of luck with random assignments.

Thus, researchers can not produce perfectly equal groups for each specific study. Differences between the treatment group and control group might still exist, and the results of a randomized trial may sometimes be wrong, but this is absolutely okay.

Scientific evidence is a long and continuous process, and the groups will tend to be equal in the long run when data is aggregated in a meta-analysis.

Additionally, external validity (i.e., the extent to which the researcher can use the results of the study to generalize to the larger population) is compromised with random assignment.

Random assignment is challenging to implement outside of controlled laboratory conditions and might not represent what would happen in the real world at the population level.

Random assignment can also be more costly than simple observational studies, where an investigator is just observing events without intervening with the population.

Randomization also can be time-consuming and challenging, especially when participants refuse to receive the assigned treatment or do not adhere to recommendations.

What is the difference between random sampling and random assignment?

Random sampling refers to randomly selecting a sample of participants from a population. Random assignment refers to randomly assigning participants to treatment groups from the selected sample.

Does random assignment increase internal validity?

Yes, random assignment ensures that there are no systematic differences between the participants in each group, enhancing the study’s internal validity .

Does random assignment reduce sampling error?

Yes, with random assignment, participants have an equal chance of being assigned to either a control group or an experimental group, resulting in a sample that is, in theory, representative of the population.

Random assignment does not completely eliminate sampling error because a sample only approximates the population from which it is drawn. However, random sampling is a way to minimize sampling errors.

When is random assignment not possible?

Random assignment is not possible when the experimenters cannot control the treatment or independent variable.

For example, if you want to compare how men and women perform on a test, you cannot randomly assign subjects to these groups.

Participants are not randomly assigned to different groups in this study, but instead assigned based on their characteristics.

Does random assignment eliminate confounding variables?

Yes, random assignment eliminates the influence of any confounding variables on the treatment because it distributes them at random among the study groups. Randomization invalidates any relationship between a confounding variable and the treatment.

Why is random assignment of participants to treatment conditions in an experiment used?

Random assignment is used to ensure that all groups are comparable at the start of a study. This allows researchers to conclude that the outcomes of the study can be attributed to the intervention at hand and to rule out alternative explanations for study results.

Further Reading

- Bogomolnaia, A., & Moulin, H. (2001). A new solution to the random assignment problem . Journal of Economic theory , 100 (2), 295-328.

- Krause, M. S., & Howard, K. I. (2003). What random assignment does and does not do . Journal of Clinical Psychology , 59 (7), 751-766.

- Bipolar Disorder

- Therapy Center

- When To See a Therapist

- Types of Therapy

- Best Online Therapy

- Best Couples Therapy

- Best Family Therapy

- Managing Stress

- Sleep and Dreaming

- Understanding Emotions

- Self-Improvement

- Healthy Relationships

- Student Resources

- Personality Types

- Guided Meditations

- Verywell Mind Insights

- 2023 Verywell Mind 25

- Mental Health in the Classroom

- Editorial Process

- Meet Our Review Board

- Crisis Support

The Definition of Random Assignment According to Psychology

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

:max_bytes(150000):strip_icc():format(webp)/IMG_9791-89504ab694d54b66bbd72cb84ffb860e.jpg)

Emily is a board-certified science editor who has worked with top digital publishing brands like Voices for Biodiversity, Study.com, GoodTherapy, Vox, and Verywell.

:max_bytes(150000):strip_icc():format(webp)/Emily-Swaim-1000-0f3197de18f74329aeffb690a177160c.jpg)

Materio / Getty Images

Random assignment refers to the use of chance procedures in psychology experiments to ensure that each participant has the same opportunity to be assigned to any given group in a study to eliminate any potential bias in the experiment at the outset. Participants are randomly assigned to different groups, such as the treatment group versus the control group. In clinical research, randomized clinical trials are known as the gold standard for meaningful results.

Simple random assignment techniques might involve tactics such as flipping a coin, drawing names out of a hat, rolling dice, or assigning random numbers to a list of participants. It is important to note that random assignment differs from random selection .

While random selection refers to how participants are randomly chosen from a target population as representatives of that population, random assignment refers to how those chosen participants are then assigned to experimental groups.

Random Assignment In Research

To determine if changes in one variable will cause changes in another variable, psychologists must perform an experiment. Random assignment is a critical part of the experimental design that helps ensure the reliability of the study outcomes.

Researchers often begin by forming a testable hypothesis predicting that one variable of interest will have some predictable impact on another variable.

The variable that the experimenters will manipulate in the experiment is known as the independent variable , while the variable that they will then measure for different outcomes is known as the dependent variable. While there are different ways to look at relationships between variables, an experiment is the best way to get a clear idea if there is a cause-and-effect relationship between two or more variables.

Once researchers have formulated a hypothesis, conducted background research, and chosen an experimental design, it is time to find participants for their experiment. How exactly do researchers decide who will be part of an experiment? As mentioned previously, this is often accomplished through something known as random selection.

Random Selection

In order to generalize the results of an experiment to a larger group, it is important to choose a sample that is representative of the qualities found in that population. For example, if the total population is 60% female and 40% male, then the sample should reflect those same percentages.

Choosing a representative sample is often accomplished by randomly picking people from the population to be participants in a study. Random selection means that everyone in the group stands an equal chance of being chosen to minimize any bias. Once a pool of participants has been selected, it is time to assign them to groups.

By randomly assigning the participants into groups, the experimenters can be fairly sure that each group will have the same characteristics before the independent variable is applied.

Participants might be randomly assigned to the control group , which does not receive the treatment in question. The control group may receive a placebo or receive the standard treatment. Participants may also be randomly assigned to the experimental group , which receives the treatment of interest. In larger studies, there can be multiple treatment groups for comparison.

There are simple methods of random assignment, like rolling the die. However, there are more complex techniques that involve random number generators to remove any human error.

There can also be random assignment to groups with pre-established rules or parameters. For example, if you want to have an equal number of men and women in each of your study groups, you might separate your sample into two groups (by sex) before randomly assigning each of those groups into the treatment group and control group.

Random assignment is essential because it increases the likelihood that the groups are the same at the outset. With all characteristics being equal between groups, other than the application of the independent variable, any differences found between group outcomes can be more confidently attributed to the effect of the intervention.

Example of Random Assignment

Imagine that a researcher is interested in learning whether or not drinking caffeinated beverages prior to an exam will improve test performance. After randomly selecting a pool of participants, each person is randomly assigned to either the control group or the experimental group.

The participants in the control group consume a placebo drink prior to the exam that does not contain any caffeine. Those in the experimental group, on the other hand, consume a caffeinated beverage before taking the test.

Participants in both groups then take the test, and the researcher compares the results to determine if the caffeinated beverage had any impact on test performance.

A Word From Verywell

Random assignment plays an important role in the psychology research process. Not only does this process help eliminate possible sources of bias, but it also makes it easier to generalize the results of a tested sample of participants to a larger population.

Random assignment helps ensure that members of each group in the experiment are the same, which means that the groups are also likely more representative of what is present in the larger population of interest. Through the use of this technique, psychology researchers are able to study complex phenomena and contribute to our understanding of the human mind and behavior.

Lin Y, Zhu M, Su Z. The pursuit of balance: An overview of covariate-adaptive randomization techniques in clinical trials . Contemp Clin Trials. 2015;45(Pt A):21-25. doi:10.1016/j.cct.2015.07.011

Sullivan L. Random assignment versus random selection . In: The SAGE Glossary of the Social and Behavioral Sciences. SAGE Publications, Inc.; 2009. doi:10.4135/9781412972024.n2108

Alferes VR. Methods of Randomization in Experimental Design . SAGE Publications, Inc.; 2012. doi:10.4135/9781452270012

Nestor PG, Schutt RK. Research Methods in Psychology: Investigating Human Behavior. (2nd Ed.). SAGE Publications, Inc.; 2015.

By Kendra Cherry, MSEd Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Random Assignment in Psychology (Intro for Students)

Random assignment is a research procedure used to randomly assign participants to different experimental conditions (or ‘groups’). This introduces the element of chance, ensuring that each participant has an equal likelihood of being placed in any condition group for the study.

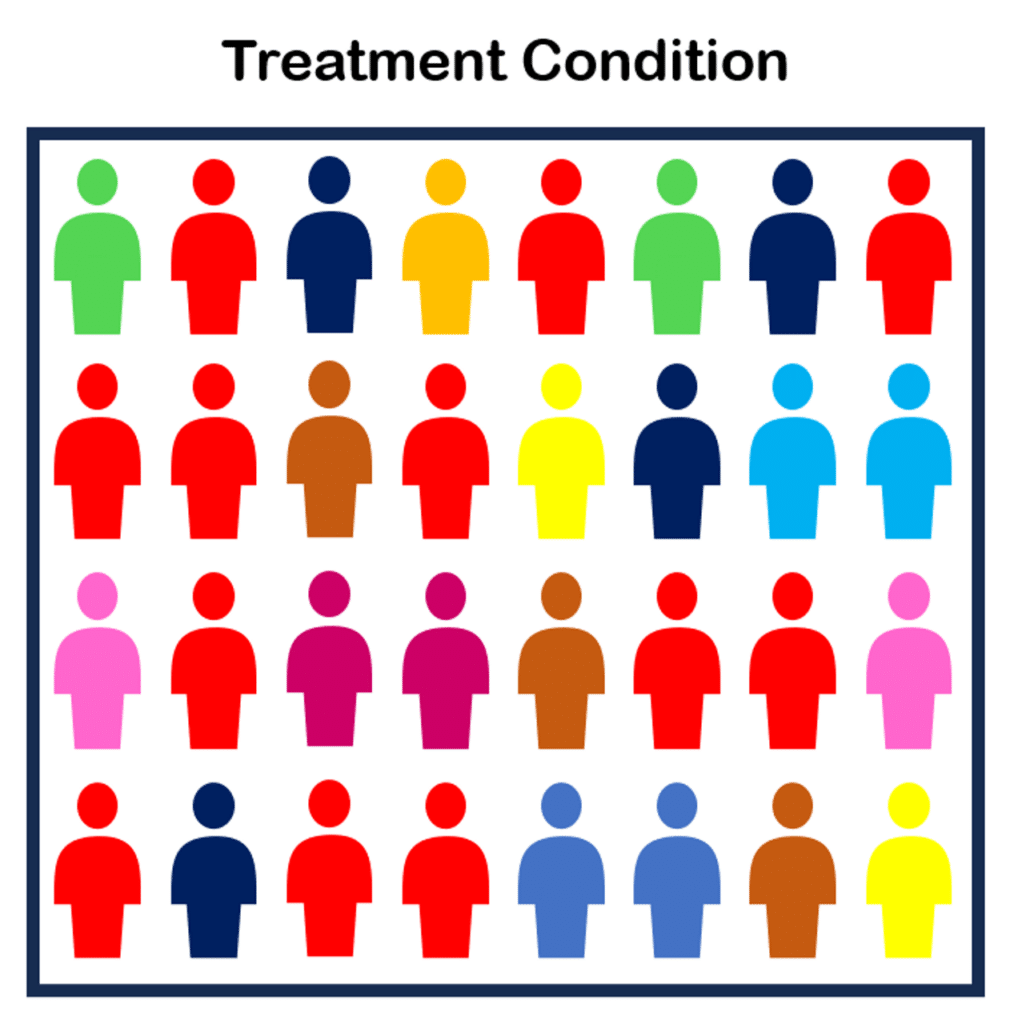

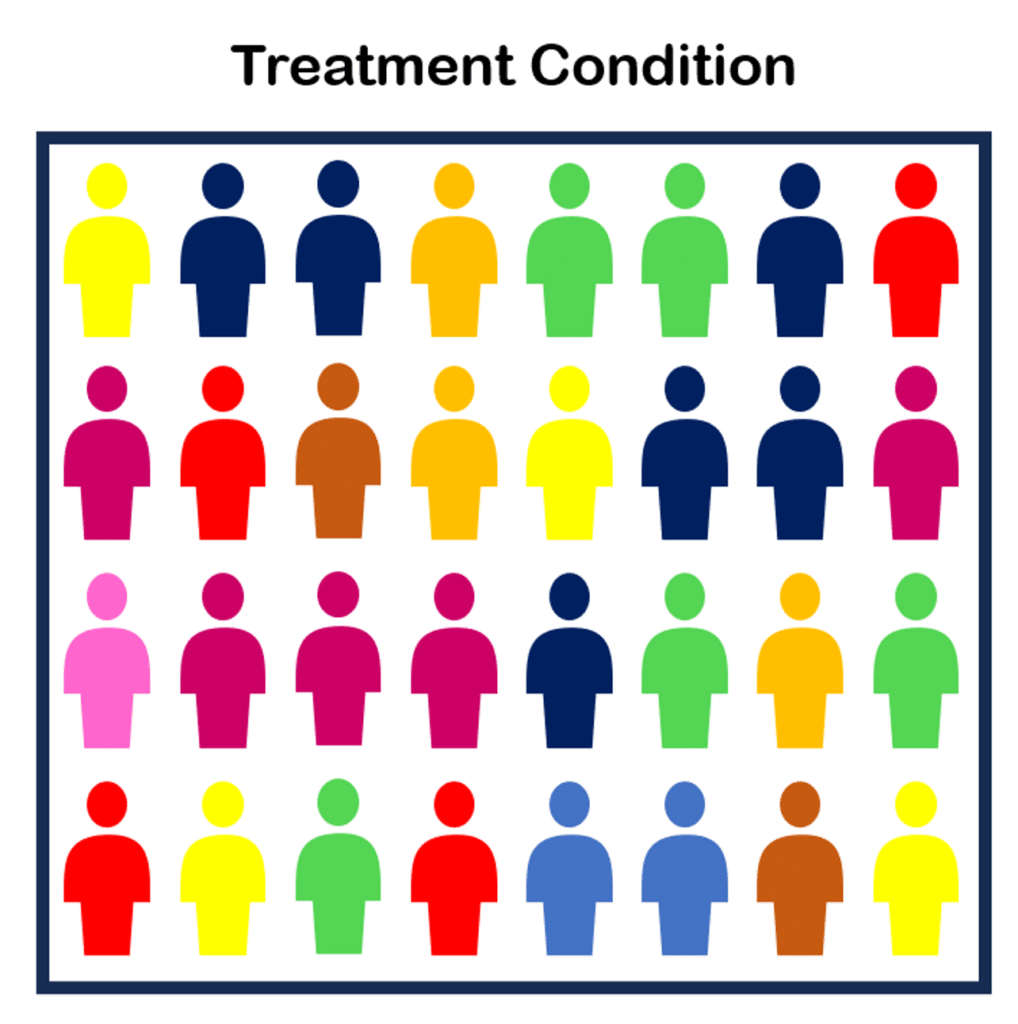

It is absolutely essential that the treatment condition and the control condition are the same in all ways except for the variable being manipulated.

Using random assignment to place participants in different conditions helps to achieve this.

It ensures that those conditions are the same in regards to all potential confounding variables and extraneous factors .

Why Researchers Use Random Assignment

Researchers use random assignment to control for confounds in research.

Confounds refer to unwanted and often unaccounted-for variables that might affect the outcome of a study. These confounding variables can skew the results, rendering the experiment unreliable.

For example, below is a study with two groups. Note how there are more ‘red’ individuals in the first group than the second:

There is likely a confounding variable in this experiment explaining why more red people ended up in the treatment condition and less in the control condition. The red people might have self-selected, for example, leading to a skew of them in one group over the other.

Ideally, we’d want a more even distribution, like below:

To achieve better balance in our two conditions, we use randomized sampling.

Fact File: Experiments 101

Random assignment is used in the type of research called the experiment.

An experiment involves manipulating the level of one variable and examining how it affects another variable. These are the independent and dependent variables :

- Independent Variable: The variable manipulated is called the independent variable (IV)

- Dependent Variable: The variable that it is expected to affect is called the dependent variable (DV).

The most basic form of the experiment involves two conditions: the treatment and the control .

- The Treatment Condition: The treatment condition involves the participants being exposed to the IV.

- The Control Condition: The control condition involves the absence of the IV. Therefore, the IV has two levels: zero and some quantity.

Researchers utilize random assignment to determine which participants go into which conditions.

Methods of Random Assignment

There are several procedures that researchers can use to randomly assign participants to different conditions.

1. Random number generator

There are several websites that offer computer-generated random numbers. Simply indicate how many conditions are in the experiment and then click. If there are 4 conditions, the program will randomly generate a number between 1 and 4 each time it is clicked.

2. Flipping a coin

If there are two conditions in an experiment, then the simplest way to implement random assignment is to flip a coin for each participant. Heads means being assigned to the treatment and tails means being assigned to the control (or vice versa).

3. Rolling a die

Rolling a single die is another way to randomly assign participants. If the experiment has three conditions, then numbers 1 and 2 mean being assigned to the control; numbers 3 and 4 mean treatment condition one; and numbers 5 and 6 mean treatment condition two.

4. Condition names in a hat

In some studies, the researcher will write the name of the treatment condition(s) or control on slips of paper and place them in a hat. If there are 4 conditions and 1 control, then there are 5 slips of paper.

The researcher closes their eyes and selects one slip for each participant. That person is then assigned to one of the conditions in the study and that slip of paper is placed back in the hat. Repeat as necessary.

There are other ways of trying to ensure that the groups of participants are equal in all ways with the exception of the IV. However, random assignment is the most often used because it is so effective at reducing confounds.

Read About More Methods and Examples of Random Assignment Here

Potential Confounding Effects

Random assignment is all about minimizing confounding effects.

Here are six types of confounds that can be controlled for using random assignment:

- Individual Differences: Participants in a study will naturally vary in terms of personality, intelligence, mood, prior knowledge, and many other characteristics. If one group happens to have more people with a particular characteristic, this could affect the results. Random assignment ensures that these individual differences are spread out equally among the experimental groups, making it less likely that they will unduly influence the outcome.

- Temporal or Time-Related Confounds: Events or situations that occur at a particular time can influence the outcome of an experiment. For example, a participant might be tested after a stressful event, while another might be tested after a relaxing weekend. Random assignment ensures that such effects are equally distributed among groups, thus controlling for their potential influence.

- Order Effects: If participants are exposed to multiple treatments or tests, the order in which they experience them can influence their responses. Randomly assigning the order of treatments for different participants helps control for this.

- Location or Environmental Confounds: The environment in which the study is conducted can influence the results. One group might be tested in a noisy room, while another might be in a quiet room. Randomly assigning participants to different locations can control for these effects.

- Instrumentation Confounds: These occur when there are variations in the calibration or functioning of measurement instruments across conditions. If one group’s responses are being measured using a slightly different tool or scale, it can introduce a confound. Random assignment can ensure that any such potential inconsistencies in instrumentation are equally distributed among groups.

- Experimenter Effects: Sometimes, the behavior or expectations of the person administering the experiment can unintentionally influence the participants’ behavior or responses. For instance, if an experimenter believes one treatment is superior, they might unconsciously communicate this belief to participants. Randomly assigning experimenters or using a double-blind procedure (where neither the participant nor the experimenter knows the treatment being given) can help control for this.

Random assignment helps balance out these and other potential confounds across groups, ensuring that any observed differences are more likely due to the manipulated independent variable rather than some extraneous factor.

Limitations of the Random Assignment Procedure

Although random assignment is extremely effective at eliminating the presence of participant-related confounds, there are several scenarios in which it cannot be used.

- Ethics: The most obvious scenario is when it would be unethical. For example, if wanting to investigate the effects of emotional abuse on children, it would be unethical to randomly assign children to either received abuse or not. Even if a researcher were to propose such a study, it would not receive approval from the Institutional Review Board (IRB) which oversees research by university faculty.

- Practicality: Other scenarios involve matters of practicality. For example, randomly assigning people to specific types of diet over a 10-year period would be interesting, but it would be highly unlikely that participants would be diligent enough to make the study valid. This is why examining these types of subjects has to be carried out through observational studies . The data is correlational, which is informative, but falls short of the scientist’s ultimate goal of identifying causality.

- Small Sample Size: The smaller the sample size being assigned to conditions, the more likely it is that the two groups will be unequal. For example, if you flip a coin many times in a row then you will notice that sometimes there will be a string of heads or tails that come up consecutively. This means that one condition may have a build-up of participants that share the same characteristics. However, if you continue flipping the coin, over the long-term, there will be a balance of heads and tails. Unfortunately, how large a sample size is necessary has been the subject of considerable debate (Bloom, 2006; Shadish et al., 2002).

“It is well known that larger sample sizes reduce the probability that random assignment will result in conditions that are unequal” (Goldberg, 2019, p. 2).

Applications of Random Assignment

The importance of random assignment has been recognized in a wide range of scientific and applied disciplines (Bloom, 2006).

Random assignment began as a tool in agricultural research by Fisher (1925, 1935). After WWII, it became extensively used in medical research to test the effectiveness of new treatments and pharmaceuticals (Marks, 1997).

Today it is widely used in industrial engineering (Box, Hunter, and Hunter, 2005), educational research (Lindquist, 1953; Ong-Dean et al., 2011)), psychology (Myers, 1972), and social policy studies (Boruch, 1998; Orr, 1999).

One of the biggest obstacles to the validity of an experiment is the confound. If the group of participants in the treatment condition are substantially different from the group in the control condition, then it is impossible to determine if the IV has an affect or if the confound has an effect.

Thankfully, random assignment is highly effective at eliminating confounds that are known and unknown. Because each participant has an equal chance of being placed in each condition, they are equally distributed.

There are several ways of implementing random assignment, including flipping a coin or using a random number generator.

Random assignment has become an essential procedure in research in a wide range of subjects such as psychology, education, and social policy.

Alferes, V. R. (2012). Methods of randomization in experimental design . Sage Publications.

Bloom, H. S. (2008). The core analytics of randomized experiments for social research. The SAGE Handbook of Social Research Methods , 115-133.

Boruch, R. F. (1998). Randomized controlled experiments for evaluation and planning. Handbook of applied social research methods , 161-191.

Box, G. E., Hunter, W. G., & Hunter, J. S. (2005). Design of experiments: Statistics for Experimenters: Design, Innovation and Discovery.

Dehue, T. (1997). Deception, efficiency, and random groups: Psychology and the gradual origination of the random group design. Isis , 88 (4), 653-673.

Fisher, R.A. (1925). Statistical methods for research workers (11th ed. rev.). Oliver and Boyd: Edinburgh.

Fisher, R. A. (1935). The Design of Experiments. Edinburgh: Oliver and Boyd.

Goldberg, M. H. (2019). How often does random assignment fail? Estimates and recommendations. Journal of Environmental Psychology , 66 , 101351.

Jamison, J. C. (2019). The entry of randomized assignment into the social sciences. Journal of Causal Inference , 7 (1), 20170025.

Lindquist, E. F. (1953). Design and analysis of experiments in psychology and education . Boston: Houghton Mifflin Company.

Marks, H. M. (1997). The progress of experiment: Science and therapeutic reform in the United States, 1900-1990 . Cambridge University Press.

Myers, J. L. (1972). Fundamentals of experimental design (2nd ed.). Allyn & Bacon.

Ong-Dean, C., Huie Hofstetter, C., & Strick, B. R. (2011). Challenges and dilemmas in implementing random assignment in educational research. American Journal of Evaluation , 32 (1), 29-49.

Orr, L. L. (1999). Social experiments: Evaluating public programs with experimental methods . Sage.

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Quasi-experiments: interrupted time-series designs. Experimental and quasi-experimental designs for generalized causal inference , 171-205.

Stigler, S. M. (1992). A historical view of statistical concepts in psychology and educational research. American Journal of Education , 101 (1), 60-70.

Dave Cornell (PhD)

Dr. Cornell has worked in education for more than 20 years. His work has involved designing teacher certification for Trinity College in London and in-service training for state governments in the United States. He has trained kindergarten teachers in 8 countries and helped businessmen and women open baby centers and kindergartens in 3 countries.

- Dave Cornell (PhD) https://helpfulprofessor.com/author/dave-cornell-phd/ 25 Positive Punishment Examples

- Dave Cornell (PhD) https://helpfulprofessor.com/author/dave-cornell-phd/ 25 Dissociation Examples (Psychology)

- Dave Cornell (PhD) https://helpfulprofessor.com/author/dave-cornell-phd/ 15 Zone of Proximal Development Examples

- Dave Cornell (PhD) https://helpfulprofessor.com/author/dave-cornell-phd/ Perception Checking: 15 Examples and Definition

Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.

- Chris Drew (PhD) #molongui-disabled-link 25 Positive Punishment Examples

- Chris Drew (PhD) #molongui-disabled-link 25 Dissociation Examples (Psychology)

- Chris Drew (PhD) #molongui-disabled-link 15 Zone of Proximal Development Examples

- Chris Drew (PhD) #molongui-disabled-link Perception Checking: 15 Examples and Definition

Leave a Comment Cancel Reply

Your email address will not be published. Required fields are marked *

Random Assignment in Psychology (Definition + 40 Examples)

Have you ever wondered how researchers discover new ways to help people learn, make decisions, or overcome challenges? A hidden hero in this adventure of discovery is a method called random assignment, a cornerstone in psychological research that helps scientists uncover the truths about the human mind and behavior.

Random Assignment is a process used in research where each participant has an equal chance of being placed in any group within the study. This technique is essential in experiments as it helps to eliminate biases, ensuring that the different groups being compared are similar in all important aspects.

By doing so, researchers can be confident that any differences observed are likely due to the variable being tested, rather than other factors.

In this article, we’ll explore the intriguing world of random assignment, diving into its history, principles, real-world examples, and the impact it has had on the field of psychology.

History of Random Assignment

Stepping back in time, we delve into the origins of random assignment, which finds its roots in the early 20th century.

The pioneering mind behind this innovative technique was Sir Ronald A. Fisher , a British statistician and biologist. Fisher introduced the concept of random assignment in the 1920s, aiming to improve the quality and reliability of experimental research .

His contributions laid the groundwork for the method's evolution and its widespread adoption in various fields, particularly in psychology.

Fisher’s groundbreaking work on random assignment was motivated by his desire to control for confounding variables – those pesky factors that could muddy the waters of research findings.

By assigning participants to different groups purely by chance, he realized that the influence of these confounding variables could be minimized, paving the way for more accurate and trustworthy results.

Early Studies Utilizing Random Assignment

Following Fisher's initial development, random assignment started to gain traction in the research community. Early studies adopting this methodology focused on a variety of topics, from agriculture (which was Fisher’s primary field of interest) to medicine and psychology.

The approach allowed researchers to draw stronger conclusions from their experiments, bolstering the development of new theories and practices.

One notable early study utilizing random assignment was conducted in the field of educational psychology. Researchers were keen to understand the impact of different teaching methods on student outcomes.

By randomly assigning students to various instructional approaches, they were able to isolate the effects of the teaching methods, leading to valuable insights and recommendations for educators.

Evolution of the Methodology

As the decades rolled on, random assignment continued to evolve and adapt to the changing landscape of research.

Advances in technology introduced new tools and techniques for implementing randomization, such as computerized random number generators, which offered greater precision and ease of use.

The application of random assignment expanded beyond the confines of the laboratory, finding its way into field studies and large-scale surveys.

Researchers across diverse disciplines embraced the methodology, recognizing its potential to enhance the validity of their findings and contribute to the advancement of knowledge.

From its humble beginnings in the early 20th century to its widespread use today, random assignment has proven to be a cornerstone of scientific inquiry.

Its development and evolution have played a pivotal role in shaping the landscape of psychological research, driving discoveries that have improved lives and deepened our understanding of the human experience.

Principles of Random Assignment

Delving into the heart of random assignment, we uncover the theories and principles that form its foundation.

The method is steeped in the basics of probability theory and statistical inference, ensuring that each participant has an equal chance of being placed in any group, thus fostering fair and unbiased results.

Basic Principles of Random Assignment

Understanding the core principles of random assignment is key to grasping its significance in research. There are three principles: equal probability of selection, reduction of bias, and ensuring representativeness.

The first principle, equal probability of selection , ensures that every participant has an identical chance of being assigned to any group in the study. This randomness is crucial as it mitigates the risk of bias and establishes a level playing field.

The second principle focuses on the reduction of bias . Random assignment acts as a safeguard, ensuring that the groups being compared are alike in all essential aspects before the experiment begins.

This similarity between groups allows researchers to attribute any differences observed in the outcomes directly to the independent variable being studied.

Lastly, ensuring representativeness is a vital principle. When participants are assigned randomly, the resulting groups are more likely to be representative of the larger population.

This characteristic is crucial for the generalizability of the study’s findings, allowing researchers to apply their insights broadly.

Theoretical Foundation

The theoretical foundation of random assignment lies in probability theory and statistical inference .

Probability theory deals with the likelihood of different outcomes, providing a mathematical framework for analyzing random phenomena. In the context of random assignment, it helps in ensuring that each participant has an equal chance of being placed in any group.

Statistical inference, on the other hand, allows researchers to draw conclusions about a population based on a sample of data drawn from that population. It is the mechanism through which the results of a study can be generalized to a broader context.

Random assignment enhances the reliability of statistical inferences by reducing biases and ensuring that the sample is representative.

Differentiating Random Assignment from Random Selection

It’s essential to distinguish between random assignment and random selection, as the two terms, while related, have distinct meanings in the realm of research.

Random assignment refers to how participants are placed into different groups in an experiment, aiming to control for confounding variables and help determine causes.

In contrast, random selection pertains to how individuals are chosen to participate in a study. This method is used to ensure that the sample of participants is representative of the larger population, which is vital for the external validity of the research.

While both methods are rooted in randomness and probability, they serve different purposes in the research process.

Understanding the theories, principles, and distinctions of random assignment illuminates its pivotal role in psychological research.

This method, anchored in probability theory and statistical inference, serves as a beacon of reliability, guiding researchers in their quest for knowledge and ensuring that their findings stand the test of validity and applicability.

Methodology of Random Assignment

Implementing random assignment in a study is a meticulous process that involves several crucial steps.

The initial step is participant selection, where individuals are chosen to partake in the study. This stage is critical to ensure that the pool of participants is diverse and representative of the population the study aims to generalize to.

Once the pool of participants has been established, the actual assignment process begins. In this step, each participant is allocated randomly to one of the groups in the study.

Researchers use various tools, such as random number generators or computerized methods, to ensure that this assignment is genuinely random and free from biases.

Monitoring and adjusting form the final step in the implementation of random assignment. Researchers need to continuously observe the groups to ensure that they remain comparable in all essential aspects throughout the study.

If any significant discrepancies arise, adjustments might be necessary to maintain the study’s integrity and validity.

Tools and Techniques Used

The evolution of technology has introduced a variety of tools and techniques to facilitate random assignment.

Random number generators, both manual and computerized, are commonly used to assign participants to different groups. These generators ensure that each individual has an equal chance of being placed in any group, upholding the principle of equal probability of selection.

In addition to random number generators, researchers often use specialized computer software designed for statistical analysis and experimental design.

These software programs offer advanced features that allow for precise and efficient random assignment, minimizing the risk of human error and enhancing the study’s reliability.

Ethical Considerations

The implementation of random assignment is not devoid of ethical considerations. Informed consent is a fundamental ethical principle that researchers must uphold.

Informed consent means that every participant should be fully informed about the nature of the study, the procedures involved, and any potential risks or benefits, ensuring that they voluntarily agree to participate.

Beyond informed consent, researchers must conduct a thorough risk and benefit analysis. The potential benefits of the study should outweigh any risks or harms to the participants.

Safeguarding the well-being of participants is paramount, and any study employing random assignment must adhere to established ethical guidelines and standards.

Conclusion of Methodology

The methodology of random assignment, while seemingly straightforward, is a multifaceted process that demands precision, fairness, and ethical integrity. From participant selection to assignment and monitoring, each step is crucial to ensure the validity of the study’s findings.

The tools and techniques employed, coupled with a steadfast commitment to ethical principles, underscore the significance of random assignment as a cornerstone of robust psychological research.

Benefits of Random Assignment in Psychological Research

The impact and importance of random assignment in psychological research cannot be overstated. It is fundamental for ensuring the study is accurate, allowing the researchers to determine if their study actually caused the results they saw, and making sure the findings can be applied to the real world.

Facilitating Causal Inferences

When participants are randomly assigned to different groups, researchers can be more confident that the observed effects are due to the independent variable being changed, and not other factors.

This ability to determine the cause is called causal inference .

This confidence allows for the drawing of causal relationships, which are foundational for theory development and application in psychology.

Ensuring Internal Validity

One of the foremost impacts of random assignment is its ability to enhance the internal validity of an experiment.

Internal validity refers to the extent to which a researcher can assert that changes in the dependent variable are solely due to manipulations of the independent variable , and not due to confounding variables.

By ensuring that each participant has an equal chance of being in any condition of the experiment, random assignment helps control for participant characteristics that could otherwise complicate the results.

Enhancing Generalizability

Beyond internal validity, random assignment also plays a crucial role in enhancing the generalizability of research findings.

When done correctly, it ensures that the sample groups are representative of the larger population, so can allow researchers to apply their findings more broadly.

This representative nature is essential for the practical application of research, impacting policy, interventions, and psychological therapies.

Limitations of Random Assignment

Potential for implementation issues.

While the principles of random assignment are robust, the method can face implementation issues.

One of the most common problems is logistical constraints. Some studies, due to their nature or the specific population being studied, find it challenging to implement random assignment effectively.

For instance, in educational settings, logistical issues such as class schedules and school policies might stop the random allocation of students to different teaching methods .

Ethical Dilemmas

Random assignment, while methodologically sound, can also present ethical dilemmas.

In some cases, withholding a potentially beneficial treatment from one of the groups of participants can raise serious ethical questions, especially in medical or clinical research where participants' well-being might be directly affected.

Researchers must navigate these ethical waters carefully, balancing the pursuit of knowledge with the well-being of participants.

Generalizability Concerns

Even when implemented correctly, random assignment does not always guarantee generalizable results.

The types of people in the participant pool, the specific context of the study, and the nature of the variables being studied can all influence the extent to which the findings can be applied to the broader population.

Researchers must be cautious in making broad generalizations from studies, even those employing strict random assignment.

Practical and Real-World Limitations

In the real world, many variables cannot be manipulated for ethical or practical reasons, limiting the applicability of random assignment.

For instance, researchers cannot randomly assign individuals to different levels of intelligence, socioeconomic status, or cultural backgrounds.

This limitation necessitates the use of other research designs, such as correlational or observational studies , when exploring relationships involving such variables.

Response to Critiques

In response to these critiques, people in favor of random assignment argue that the method, despite its limitations, remains one of the most reliable ways to establish cause and effect in experimental research.

They acknowledge the challenges and ethical considerations but emphasize the rigorous frameworks in place to address them.

The ongoing discussion around the limitations and critiques of random assignment contributes to the evolution of the method, making sure it is continuously relevant and applicable in psychological research.

While random assignment is a powerful tool in experimental research, it is not without its critiques and limitations. Implementation issues, ethical dilemmas, generalizability concerns, and real-world limitations can pose significant challenges.

However, the continued discourse and refinement around these issues underline the method's enduring significance in the pursuit of knowledge in psychology.

By being careful with how we do things and doing what's right, random assignment stays a really important part of studying how people act and think.

Real-World Applications and Examples

Random assignment has been employed in many studies across various fields of psychology, leading to significant discoveries and advancements.

Here are some real-world applications and examples illustrating the diversity and impact of this method:

- Medicine and Health Psychology: Randomized Controlled Trials (RCTs) are the gold standard in medical research. In these studies, participants are randomly assigned to either the treatment or control group to test the efficacy of new medications or interventions.

- Educational Psychology: Studies in this field have used random assignment to explore the effects of different teaching methods, classroom environments, and educational technologies on student learning and outcomes.

- Cognitive Psychology: Researchers have employed random assignment to investigate various aspects of human cognition, including memory, attention, and problem-solving, leading to a deeper understanding of how the mind works.

- Social Psychology: Random assignment has been instrumental in studying social phenomena, such as conformity, aggression, and prosocial behavior, shedding light on the intricate dynamics of human interaction.

Let's get into some specific examples. You'll need to know one term though, and that is "control group." A control group is a set of participants in a study who do not receive the treatment or intervention being tested , serving as a baseline to compare with the group that does, in order to assess the effectiveness of the treatment.

- Smoking Cessation Study: Researchers used random assignment to put participants into two groups. One group received a new anti-smoking program, while the other did not. This helped determine if the program was effective in helping people quit smoking.

- Math Tutoring Program: A study on students used random assignment to place them into two groups. One group received additional math tutoring, while the other continued with regular classes, to see if the extra help improved their grades.

- Exercise and Mental Health: Adults were randomly assigned to either an exercise group or a control group to study the impact of physical activity on mental health and mood.

- Diet and Weight Loss: A study randomly assigned participants to different diet plans to compare their effectiveness in promoting weight loss and improving health markers.

- Sleep and Learning: Researchers randomly assigned students to either a sleep extension group or a regular sleep group to study the impact of sleep on learning and memory.

- Classroom Seating Arrangement: Teachers used random assignment to place students in different seating arrangements to examine the effect on focus and academic performance.

- Music and Productivity: Employees were randomly assigned to listen to music or work in silence to investigate the effect of music on workplace productivity.

- Medication for ADHD: Children with ADHD were randomly assigned to receive either medication, behavioral therapy, or a placebo to compare treatment effectiveness.

- Mindfulness Meditation for Stress: Adults were randomly assigned to a mindfulness meditation group or a waitlist control group to study the impact on stress levels.

- Video Games and Aggression: A study randomly assigned participants to play either violent or non-violent video games and then measured their aggression levels.

- Online Learning Platforms: Students were randomly assigned to use different online learning platforms to evaluate their effectiveness in enhancing learning outcomes.

- Hand Sanitizers in Schools: Schools were randomly assigned to use hand sanitizers or not to study the impact on student illness and absenteeism.

- Caffeine and Alertness: Participants were randomly assigned to consume caffeinated or decaffeinated beverages to measure the effects on alertness and cognitive performance.

- Green Spaces and Well-being: Neighborhoods were randomly assigned to receive green space interventions to study the impact on residents’ well-being and community connections.

- Pet Therapy for Hospital Patients: Patients were randomly assigned to receive pet therapy or standard care to assess the impact on recovery and mood.

- Yoga for Chronic Pain: Individuals with chronic pain were randomly assigned to a yoga intervention group or a control group to study the effect on pain levels and quality of life.

- Flu Vaccines Effectiveness: Different groups of people were randomly assigned to receive either the flu vaccine or a placebo to determine the vaccine’s effectiveness.

- Reading Strategies for Dyslexia: Children with dyslexia were randomly assigned to different reading intervention strategies to compare their effectiveness.

- Physical Environment and Creativity: Participants were randomly assigned to different room setups to study the impact of physical environment on creative thinking.

- Laughter Therapy for Depression: Individuals with depression were randomly assigned to laughter therapy sessions or control groups to assess the impact on mood.

- Financial Incentives for Exercise: Participants were randomly assigned to receive financial incentives for exercising to study the impact on physical activity levels.

- Art Therapy for Anxiety: Individuals with anxiety were randomly assigned to art therapy sessions or a waitlist control group to measure the effect on anxiety levels.

- Natural Light in Offices: Employees were randomly assigned to workspaces with natural or artificial light to study the impact on productivity and job satisfaction.

- School Start Times and Academic Performance: Schools were randomly assigned different start times to study the effect on student academic performance and well-being.

- Horticulture Therapy for Seniors: Older adults were randomly assigned to participate in horticulture therapy or traditional activities to study the impact on cognitive function and life satisfaction.

- Hydration and Cognitive Function: Participants were randomly assigned to different hydration levels to measure the impact on cognitive function and alertness.

- Intergenerational Programs: Seniors and young people were randomly assigned to intergenerational programs to study the effects on well-being and cross-generational understanding.

- Therapeutic Horseback Riding for Autism: Children with autism were randomly assigned to therapeutic horseback riding or traditional therapy to study the impact on social communication skills.

- Active Commuting and Health: Employees were randomly assigned to active commuting (cycling, walking) or passive commuting to study the effect on physical health.

- Mindful Eating for Weight Management: Individuals were randomly assigned to mindful eating workshops or control groups to study the impact on weight management and eating habits.

- Noise Levels and Learning: Students were randomly assigned to classrooms with different noise levels to study the effect on learning and concentration.

- Bilingual Education Methods: Schools were randomly assigned different bilingual education methods to compare their effectiveness in language acquisition.

- Outdoor Play and Child Development: Children were randomly assigned to different amounts of outdoor playtime to study the impact on physical and cognitive development.

- Social Media Detox: Participants were randomly assigned to a social media detox or regular usage to study the impact on mental health and well-being.

- Therapeutic Writing for Trauma Survivors: Individuals who experienced trauma were randomly assigned to therapeutic writing sessions or control groups to study the impact on psychological well-being.

- Mentoring Programs for At-risk Youth: At-risk youth were randomly assigned to mentoring programs or control groups to assess the impact on academic achievement and behavior.

- Dance Therapy for Parkinson’s Disease: Individuals with Parkinson’s disease were randomly assigned to dance therapy or traditional exercise to study the effect on motor function and quality of life.

- Aquaponics in Schools: Schools were randomly assigned to implement aquaponics programs to study the impact on student engagement and environmental awareness.

- Virtual Reality for Phobia Treatment: Individuals with phobias were randomly assigned to virtual reality exposure therapy or traditional therapy to compare effectiveness.

- Gardening and Mental Health: Participants were randomly assigned to engage in gardening or other leisure activities to study the impact on mental health and stress reduction.

Each of these studies exemplifies how random assignment is utilized in various fields and settings, shedding light on the multitude of ways it can be applied to glean valuable insights and knowledge.

Real-world Impact of Random Assignment

Random assignment is like a key tool in the world of learning about people's minds and behaviors. It’s super important and helps in many different areas of our everyday lives. It helps make better rules, creates new ways to help people, and is used in lots of different fields.

Health and Medicine

In health and medicine, random assignment has helped doctors and scientists make lots of discoveries. It’s a big part of tests that help create new medicines and treatments.

By putting people into different groups by chance, scientists can really see if a medicine works.

This has led to new ways to help people with all sorts of health problems, like diabetes, heart disease, and mental health issues like depression and anxiety.

Schools and education have also learned a lot from random assignment. Researchers have used it to look at different ways of teaching, what kind of classrooms are best, and how technology can help learning.

This knowledge has helped make better school rules, develop what we learn in school, and find the best ways to teach students of all ages and backgrounds.

Workplace and Organizational Behavior

Random assignment helps us understand how people act at work and what makes a workplace good or bad.

Studies have looked at different kinds of workplaces, how bosses should act, and how teams should be put together. This has helped companies make better rules and create places to work that are helpful and make people happy.

Environmental and Social Changes

Random assignment is also used to see how changes in the community and environment affect people. Studies have looked at community projects, changes to the environment, and social programs to see how they help or hurt people’s well-being.

This has led to better community projects, efforts to protect the environment, and programs to help people in society.

Technology and Human Interaction

In our world where technology is always changing, studies with random assignment help us see how tech like social media, virtual reality, and online stuff affect how we act and feel.

This has helped make better and safer technology and rules about using it so that everyone can benefit.

The effects of random assignment go far and wide, way beyond just a science lab. It helps us understand lots of different things, leads to new and improved ways to do things, and really makes a difference in the world around us.

From making healthcare and schools better to creating positive changes in communities and the environment, the real-world impact of random assignment shows just how important it is in helping us learn and make the world a better place.

So, what have we learned? Random assignment is like a super tool in learning about how people think and act. It's like a detective helping us find clues and solve mysteries in many parts of our lives.

From creating new medicines to helping kids learn better in school, and from making workplaces happier to protecting the environment, it’s got a big job!

This method isn’t just something scientists use in labs; it reaches out and touches our everyday lives. It helps make positive changes and teaches us valuable lessons.

Whether we are talking about technology, health, education, or the environment, random assignment is there, working behind the scenes, making things better and safer for all of us.

In the end, the simple act of putting people into groups by chance helps us make big discoveries and improvements. It’s like throwing a small stone into a pond and watching the ripples spread out far and wide.

Thanks to random assignment, we are always learning, growing, and finding new ways to make our world a happier and healthier place for everyone!

Related posts:

- 19+ Experimental Design Examples (Methods + Types)

- Cluster Sampling vs Stratified Sampling

- 41+ White Collar Job Examples (Salary + Path)

- 47+ Blue Collar Job Examples (Salary + Path)

- McDonaldization of Society (Definition + Examples)

Reference this article:

About The Author

Free Personality Test

Free Memory Test

Free IQ Test

PracticalPie.com is a participant in the Amazon Associates Program. As an Amazon Associate we earn from qualifying purchases.

Follow Us On:

Youtube Facebook Instagram X/Twitter

Psychology Resources

Developmental

Personality

Relationships

Psychologists

Serial Killers

Psychology Tests

Personality Quiz

Memory Test

Depression test

Type A/B Personality Test

© PracticalPsychology. All rights reserved

Privacy Policy | Terms of Use

Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

6.2 Experimental Design

Learning objectives.

- Explain the difference between between-subjects and within-subjects experiments, list some of the pros and cons of each approach, and decide which approach to use to answer a particular research question.

- Define random assignment, distinguish it from random sampling, explain its purpose in experimental research, and use some simple strategies to implement it.

- Define what a control condition is, explain its purpose in research on treatment effectiveness, and describe some alternative types of control conditions.

- Define several types of carryover effect, give examples of each, and explain how counterbalancing helps to deal with them.

In this section, we look at some different ways to design an experiment. The primary distinction we will make is between approaches in which each participant experiences one level of the independent variable and approaches in which each participant experiences all levels of the independent variable. The former are called between-subjects experiments and the latter are called within-subjects experiments.

Between-Subjects Experiments

In a between-subjects experiment , each participant is tested in only one condition. For example, a researcher with a sample of 100 college students might assign half of them to write about a traumatic event and the other half write about a neutral event. Or a researcher with a sample of 60 people with severe agoraphobia (fear of open spaces) might assign 20 of them to receive each of three different treatments for that disorder. It is essential in a between-subjects experiment that the researcher assign participants to conditions so that the different groups are, on average, highly similar to each other. Those in a trauma condition and a neutral condition, for example, should include a similar proportion of men and women, and they should have similar average intelligence quotients (IQs), similar average levels of motivation, similar average numbers of health problems, and so on. This is a matter of controlling these extraneous participant variables across conditions so that they do not become confounding variables.

Random Assignment

The primary way that researchers accomplish this kind of control of extraneous variables across conditions is called random assignment , which means using a random process to decide which participants are tested in which conditions. Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and it is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other fields too.

In its strictest sense, random assignment should meet two criteria. One is that each participant has an equal chance of being assigned to each condition (e.g., a 50% chance of being assigned to each of two conditions). The second is that each participant is assigned to a condition independently of other participants. Thus one way to assign participants to two conditions would be to flip a coin for each one. If the coin lands heads, the participant is assigned to Condition A, and if it lands tails, the participant is assigned to Condition B. For three conditions, one could use a computer to generate a random integer from 1 to 3 for each participant. If the integer is 1, the participant is assigned to Condition A; if it is 2, the participant is assigned to Condition B; and if it is 3, the participant is assigned to Condition C. In practice, a full sequence of conditions—one for each participant expected to be in the experiment—is usually created ahead of time, and each new participant is assigned to the next condition in the sequence as he or she is tested. When the procedure is computerized, the computer program often handles the random assignment.