Assignment on "Evolution and History of Computer"

What is a computer?

A computer is an electronic machine that collects information, stores it, processes it according to user instructions, and then returns the result.

A computer is a programmable electronic device that performs arithmetic and logical operations automatically using a set of instructions provided by the user.

Early Computing Devices

People used sticks, stones, and bones as counting tools before computers were invented. More computing devices were produced as technology advanced and the human intellect improved over time. Let us look at a few of the early-age computing devices used by mankind.

- Abacus

The abacus is traditionally said to have been invented by the Chinese around 4,000 years ago. It is a wooden rack holding metal rods on which beads slide. The operator moves the beads according to set rules to carry out arithmetic computations.

- Napier’s Bones

John Napier devised Napier’s Bones, a manually operated calculating apparatus. The instrument used nine separate ivory strips (bones) marked with numerals to multiply and divide. It was also the first device to calculate using the decimal point system.

- Pascaline

The Pascaline was invented in 1642 by Blaise Pascal, a French mathematician and philosopher. It is thought to be the first mechanical and automated calculator. It was a wooden box with gears and wheels inside.

- Stepped Reckoner or Leibniz wheel

In 1673, a German mathematician-philosopher named Gottfried Wilhelm Leibniz improved on Pascal’s invention to create this apparatus. It was a digital mechanical calculator known as the stepped reckoner because it used fluted drums instead of gears.

- Difference Engine

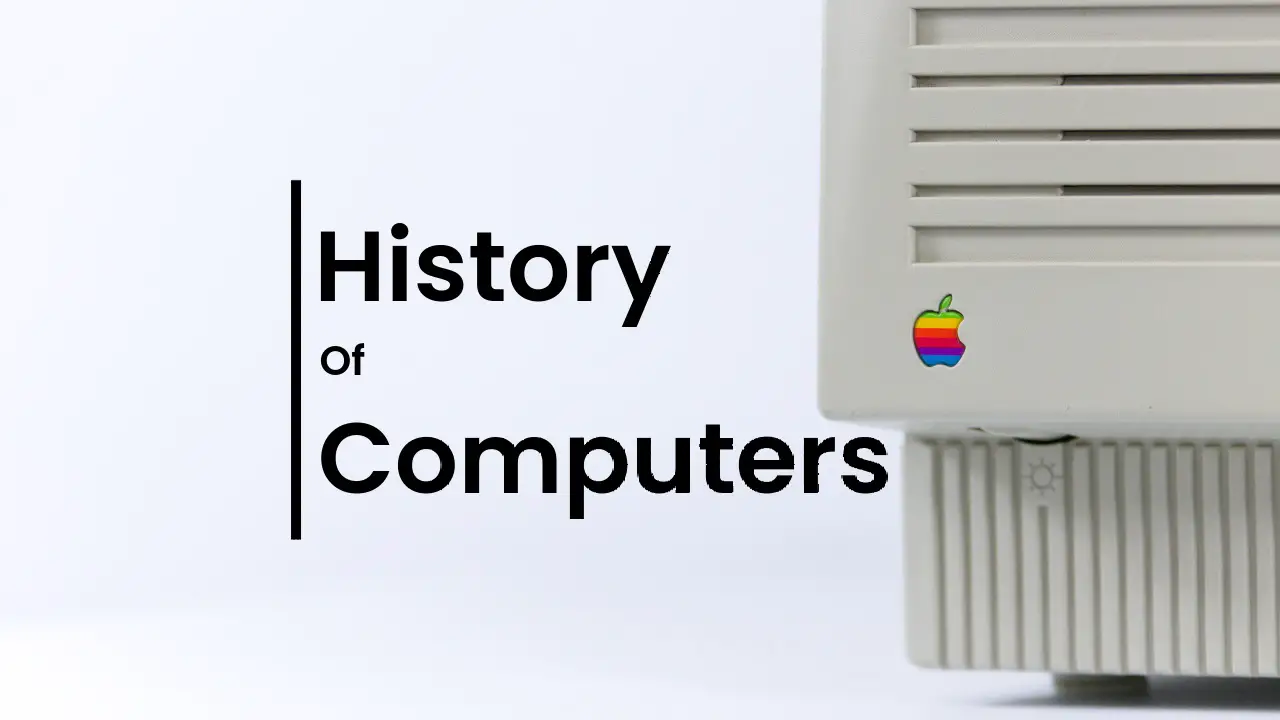

In the early 1820s, Charles Babbage designed the Difference Engine, a steam-powered mechanical calculating machine. It could carry out basic computations and was intended to produce numerical tables such as logarithmic tables.

- Analytical Engine

Charles Babbage designed another calculating machine, the Analytical Engine, in the 1830s. It was a mechanical computer that took input from punch cards. It was designed as a general-purpose machine, with a memory for storing data.

- Tabulating machine

Herman Hollerith, an American statistician, invented this machine in 1890. The Tabulating Machine was a punch card-based mechanical tabulator that could compute statistics and record or sort data. Hollerith began manufacturing these machines in his own company, which ultimately became International Business Machines (IBM) in 1924.

- Differential Analyzer

Vannevar Bush introduced the Differential Analyzer in 1930. It was a large-scale mechanical analogue computer, built to solve differential equations rather than to switch electrical impulses as later electronic machines did. It was reportedly capable of performing 25 calculations in a matter of minutes.

- Mark I

In 1937, Howard Aiken planned a machine that could carry out massive calculations involving enormous numbers. The Mark I computer was built in 1944 as a collaboration between IBM and Harvard.
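The Difference Engine described earlier in this section was meant to produce numerical tables. A well-known way to build such tables with additions alone is the method of finite differences, which Babbage's design mechanized. The Python sketch below is only an illustration of that idea, not a model of the actual machine; the function name `difference_table` and the example polynomial are assumptions chosen for this example.

```python
def difference_table(initial_differences, steps):
    """Tabulate a polynomial using only additions.

    initial_differences[0] is f(0); initial_differences[k] is the k-th
    forward difference of f at 0. For a polynomial of degree n, the n-th
    difference is constant, so n + 1 starting values are enough.
    """
    registers = list(initial_differences)
    values = []
    for _ in range(steps):
        values.append(registers[0])
        # Add each higher-order difference into the register below it,
        # working from the value register upwards.
        for k in range(len(registers) - 1):
            registers[k] += registers[k + 1]
    return values


# Hypothetical example: f(x) = x^2 + x + 41
# f(0) = 41, first difference f(1) - f(0) = 2, second difference = 2
print(difference_table([41, 2, 2], 6))  # [41, 43, 47, 53, 61, 71]
```

Every new table entry comes from additions only, which is why the approach suited a machine built from gears and wheels.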

History of Computers Generation

The word ‘computer’ has a very interesting origin. It was first used in the 16th century for a person who used to compute, i.e. do calculations. The word was used in the same sense as a noun until the 20th century. Women were hired as human computers to carry out all forms of calculations and computations.

By the last part of the 19th century, the word was also used to describe machines that did calculations. The modern-day use of the word is generally to describe programmable digital devices that run on electricity.

Early History of Computer

Humans have used devices for calculation for thousands of years. One of the earliest and most well-known was the abacus. Then in 1822, Charles Babbage, often called the father of computers, began developing what would be the first mechanical computer. In 1833 he designed the Analytical Engine, a general-purpose computer. It contained an ALU, basic flow control principles and the concept of integrated memory.

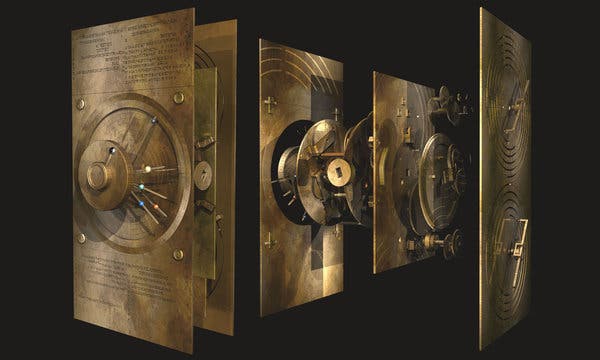

Then, more than a century later, came the first general-purpose electronic computer. It was the ENIAC, which stands for Electronic Numerical Integrator and Computer. Its inventors were John W. Mauchly and J. Presper Eckert.

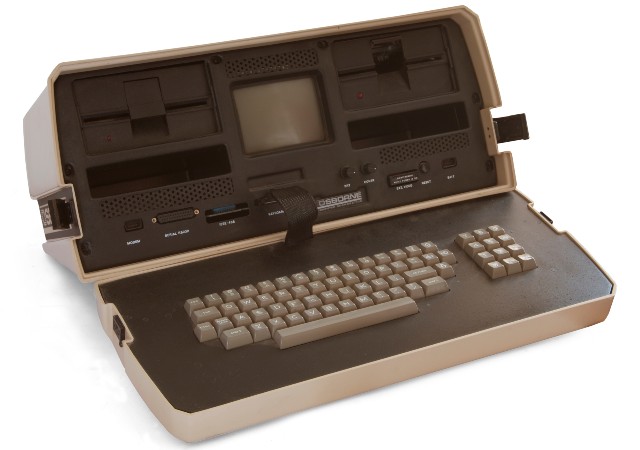

Over time the technology developed: computers got smaller and processing got faster. The first laptop arrived in 1981, introduced by Adam Osborne and EPSON.

Generations of Computers

In the history of computers, we often refer to the successive advancements of modern computers as generations of computers. We are currently in the fifth generation. Let us look at the important features of these five generations.

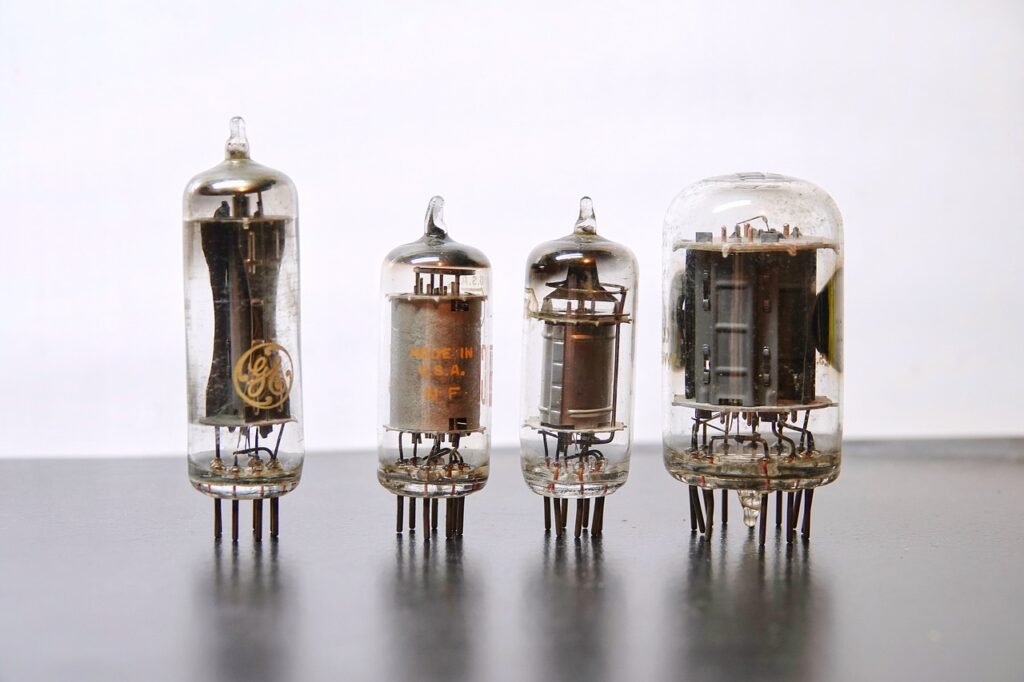

- 1st Generation: This covers the period from 1940 to 1955, when machine language was developed for use on computers. These machines used vacuum tubes for circuitry and magnetic drums for memory. They were complicated, large, and expensive, and relied mostly on batch operating systems and punch cards. Magnetic tape and paper tape served as input and output devices. Examples include ENIAC, UNIVAC-1, and EDVAC.

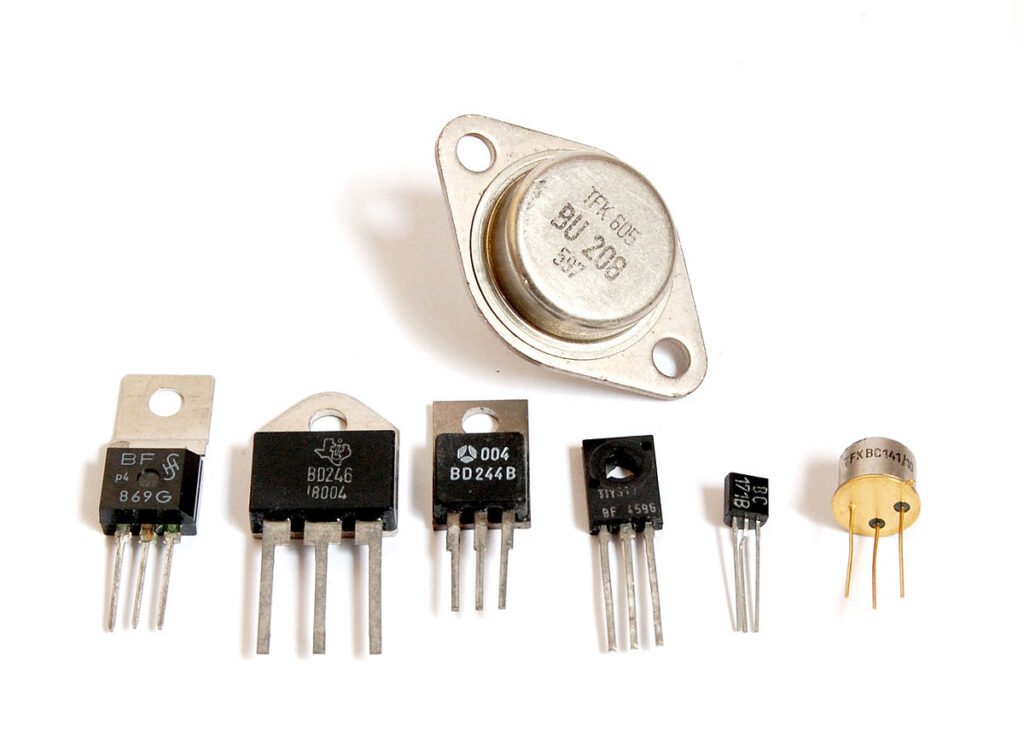

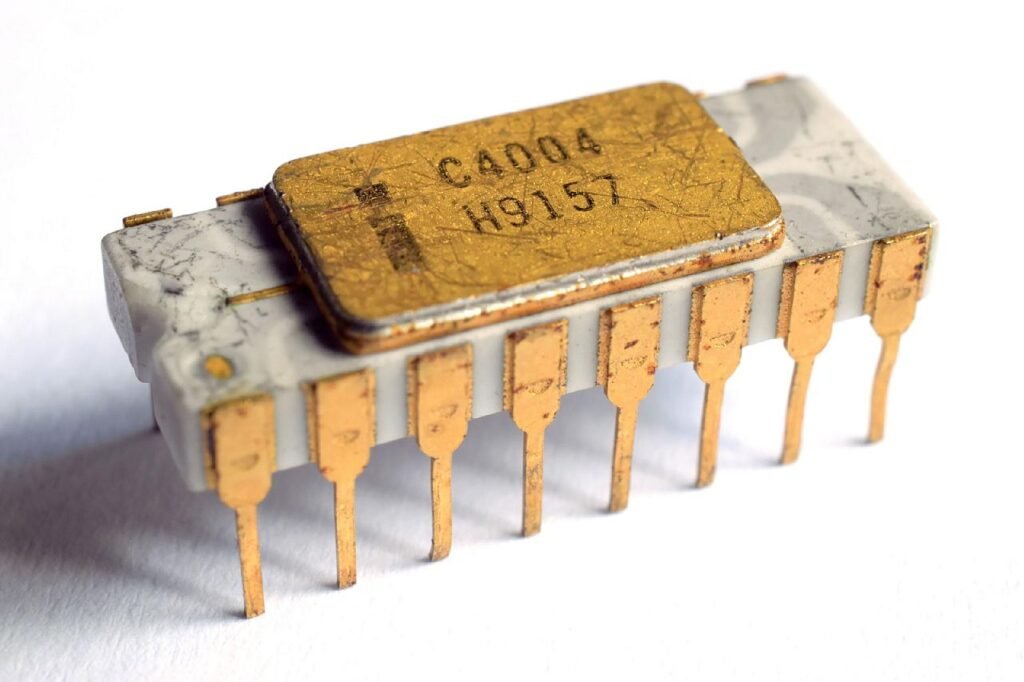

- 2nd Generation: The years 1957-1963 are referred to as the second generation of computers. Computers advanced from vacuum tubes to transistors, which made them smaller, faster and more energy-efficient. Programming advanced from binary machine code to assembly languages, and high-level languages such as COBOL and FORTRAN came into use. Examples include the IBM 1620, IBM 7094, CDC 1604, and CDC 3600.

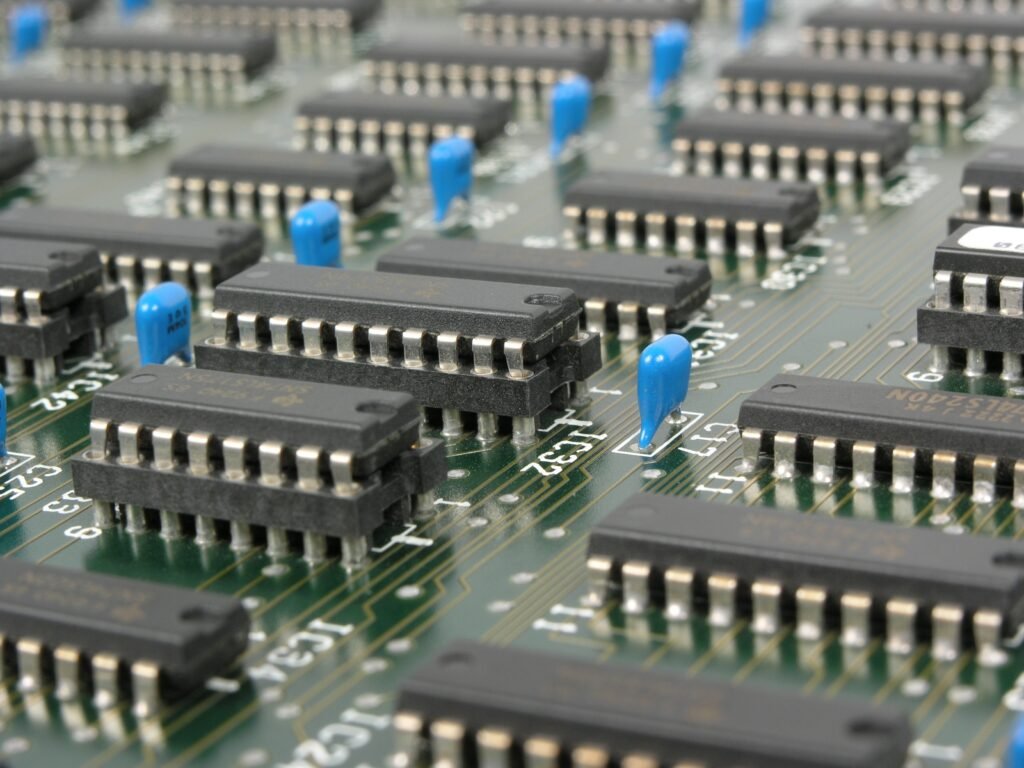

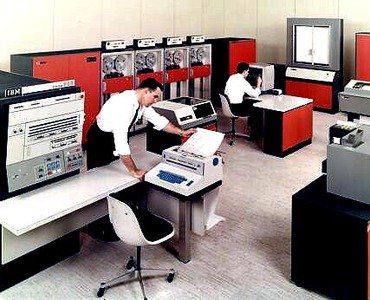

- 3rd Generation: The hallmark of this period (1964-1971) was the development of the integrated circuit. A single integrated circuit (IC) is made up of many transistors, which increases the power of a computer while simultaneously lowering its cost. These computers were quicker, smaller, more reliable, and less expensive than their predecessors. High-level programming languages such as FORTRAN II to IV, COBOL, Pascal, and PL/1 were used. Examples include the IBM-360 series, the Honeywell-6000 series, and the IBM-370/168.

- 4th Generation: The invention of the microprocessor brought along the fourth generation of computers, which dominated the years 1971-1980. The C programming language came into use in this generation; C++ and Java followed later. Examples include the STAR 1000, PDP 11, CRAY-1, CRAY-X-MP, and Apple II. This was when computers began to be produced for home use.

- 5th Generation: These computers have been in use since 1980 and continue to be used now. This is the present and the future of the computer world. The defining aspect of this generation is artificial intelligence. The use of parallel processing and superconductors is making this a reality and provides a lot of scope for the future. Fifth-generation computers use ULSI (Ultra Large Scale Integration) technology. These are the most recent and sophisticated computers. C, C++, Java, .NET, and other programming languages are used. Examples include IBM machines, Pentium-based PCs, desktops, laptops, notebooks, and ultrabooks.

Brief History of Computers

The naive understanding of computation had to be overcome before the true power of computing could be realized. The inventors who worked tirelessly to bring the computer into the world had to realize that what they were creating was more than just a number cruncher or a calculator. They had to address all of the difficulties associated with inventing such a machine, implementing the design, and actually building the thing. The history of the computer is the history of these difficulties being solved.

19th Century

1801 – Joseph Marie Jacquard, a weaver and businessman from France, devised a loom that employed punched wooden cards to automatically weave cloth designs.

1822 – Charles Babbage, a mathematician, conceived a steam-powered calculating machine capable of calculating number tables. The “Difference Engine” idea failed owing to a lack of technology at the time.

1843 – The world’s first computer program was written by Ada Lovelace, an English mathematician. Lovelace also included a step-by-step description of how to compute Bernoulli numbers using Babbage’s machine.

1890 – Herman Hollerith, an inventor, creates the punch card technique used to calculate the 1890 U.S. census. He would go on to start the corporation that would become IBM.

Early 20th Century

1930 – Differential Analyzer was the first large-scale automatic general-purpose mechanical analogue computer invented and built by Vannevar Bush.

1936 – Alan Turing had an idea for a universal machine, which he called the Turing machine, that could compute anything that could be computed.

1939 – Hewlett-Packard was founded in a garage in Palo Alto, California by Bill Hewlett and David Packard.

1941 – Konrad Zuse, a German inventor and engineer, completed his Z3 machine, the world’s first digital computer. However, the machine was destroyed during a World War II bombing strike on Berlin.

1941 – J.V. Atanasoff and graduate student Clifford Berry devise a computer capable of solving 29 equations at the same time. It is the first time a computer can store data in its primary memory.

1945 – University of Pennsylvania academics John Mauchly and J. Presper Eckert create the Electronic Numerical Integrator and Computer (ENIAC). It was Turing-complete and capable of solving “a vast class of numerical problems” by reprogramming, earning it the title of “Grandfather of computers.”

1946 – The UNIVAC I (Universal Automatic Computer) was the first general-purpose electronic digital computer designed in the United States for corporate applications.

1949 – The Electronic Delay Storage Automatic Calculator (EDSAC), developed by a team at the University of Cambridge, is the “first practical stored-program computer.”

1950 – The Standards Eastern Automatic Computer (SEAC) was built in Washington, DC, and it was the first stored-program computer completed in the United States.

Late 20th Century

1953 – Grace Hopper, a computer scientist, creates the first computer language, which becomes known as COBOL, which stands for COmmon Business-Oriented Language. It allowed a computer user to give the computer instructions in English-like words rather than numbers.

1954 – John Backus and a team of IBM programmers created the FORTRAN programming language, an acronym for FORmula TRANslation. In addition, IBM developed the 650.

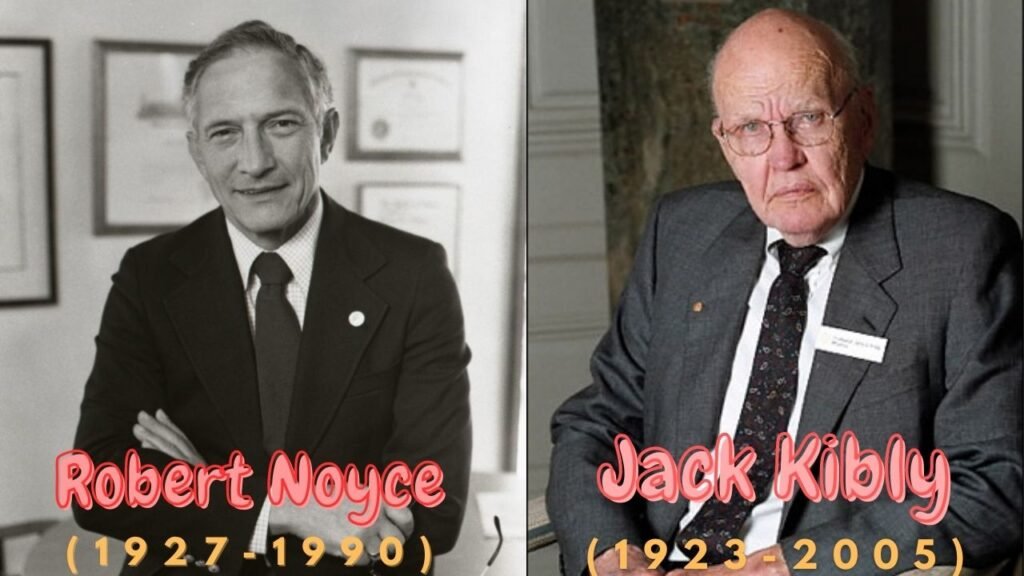

1958 – The integrated circuit, sometimes known as the computer chip, was created by Jack Kilby and Robert Noyce.

1962 – Atlas, the computer, makes its appearance. It was the fastest computer in the world at the time, and it pioneered the concept of “virtual memory.”

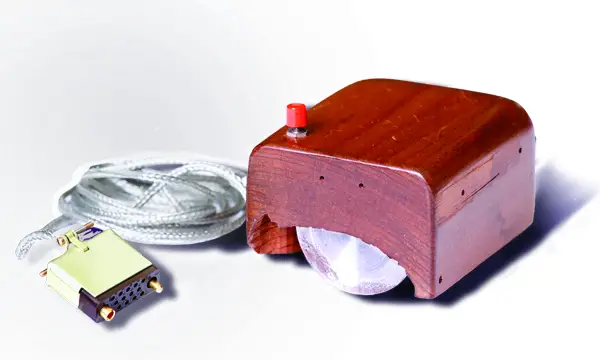

1964 – Douglas Engelbart proposes a modern computer prototype that combines a mouse and a graphical user interface (GUI).

1969 – Bell Labs developers, led by Ken Thompson and Dennis Ritchie, revealed UNIX, an operating system developed in the C programming language that addressed program compatibility difficulties.

1970 – The Intel 1103, the first Dynamic Random-Access Memory (DRAM) chip, is unveiled by Intel.

1971 – The floppy disc was invented by Alan Shugart and a team of IBM engineers. In the same year, Xerox developed the first laser printer, which not only generated billions of dollars in revenue but also heralded the beginning of a new age in computer printing.

1973 – Robert Metcalfe, a member of Xerox’s research department, created Ethernet, which is used to connect many computers and other hardware.

1974 – Personal computers were introduced into the market. Among the first were the Altair, Scelbi and Mark-8, the IBM 5100, and Radio Shack’s TRS-80.

1975 – In January, Popular Electronics magazine touted the Altair 8800 as the world’s first minicomputer kit. Paul Allen and Bill Gates offer to build software in the BASIC language for the Altair.

1976 – Apple Computers is founded by Steve Jobs and Steve Wozniak, who introduce the world to the Apple I, the first computer with a single-circuit board.

1977 – At the first West Coast Computer Faire, Jobs and Wozniak announce the Apple II. It has colour graphics and an audio cassette drive for storage.

1978 – The first computerized spreadsheet program, VisiCalc, is introduced.

1979 – WordStar, a word processing tool from MicroPro International, is released.

1981 – IBM unveils the Acorn, its first personal computer, which has an Intel CPU, two floppy drives, and a colour display. The Acorn uses the MS-DOS operating system from Microsoft.

1983 – The CD-ROM, which could carry 550 megabytes of pre-recorded data, hit the market. This year also saw the release of the Gavilan SC, the first portable computer with a flip-form design and the first to be offered as a “laptop.”

1984 – Apple launched the Macintosh with a commercial aired during Super Bowl XVIII. It was priced at $2,500.

1985 – Microsoft introduces Windows, which enables multitasking via a graphical user interface. In addition, the programming language C++ is released.

1990 – Tim Berners-Lee, an English programmer and scientist, creates HyperText Markup Language, widely known as HTML. He also coined the term “WorldWideWeb.” It includes the first browser, a server, HTML, and URLs.

1993 – The Pentium CPU improves the usage of graphics and music on personal computers.

1995 – Microsoft’s Windows 95 operating system was released. A $300 million promotional campaign was launched to get the news out. Sun Microsystems introduces Java 1.0, followed by Netscape Communications’ JavaScript.

1996 – At Stanford University, Sergey Brin and Larry Page created the Google search engine.

1998 – Apple introduces the iMac, an all-in-one Macintosh desktop computer. These PCs cost $1,300 and came with a 4GB hard drive, 32MB RAM, a CD-ROM, and a 15-inch monitor.

1999 – Wi-Fi, an abbreviation for “wireless fidelity,” is created, originally covering a range of up to 300 feet.

21st Century

2000 – The USB flash drive is first introduced. Flash drives were faster and had more storage space than other storage media options.

2001 – Apple releases Mac OS X, later renamed OS X and eventually simply macOS, as the successor to its conventional Mac Operating System.

2003 – Customers could purchase AMD’s Athlon 64, the first 64-bit CPU for consumer computers.

2004 – Facebook began as a social networking website.

2005 – Google acquires Android, a mobile phone OS based on Linux.

2006 – Apple’s MacBook Pro became available. The Pro was the company’s first dual-core, Intel-based mobile computer. Amazon Web Services, including Amazon Elastic Compute Cloud (EC2) and Amazon Simple Storage Service (S3), was also launched.

2007 – The first iPhone was released by Apple, bringing many computer operations into the palm of our hands. Amazon also released the Kindle, one of the first electronic reading systems, in 2007.

2009 – Microsoft released Windows 7.

2011 – Google introduces the Chromebook, which runs Google Chrome OS.

2014 – The University of Michigan Micro Mote (M3), the world’s smallest computer, was constructed.

2015 – Apple introduces the Apple Watch. Windows 10 was also released by Microsoft.

2016 – The world’s first reprogrammable quantum computer is built.

Types of Computers

- Analog Computers – Early analog computers were built from mechanical components such as gears and levers, with no electrical components. One advantage of analogue computation is that designing and building an analogue computer to tackle a specific problem can be quite straightforward.

- Mainframe computers – It is a computer that is generally utilized by large enterprises for mission-critical activities such as massive data processing. Mainframe computers were distinguished by massive storage capacities, quick components, and powerful computational capabilities. Because they were complicated systems, they were managed by a team of systems programmers who had sole access to the computer. These machines are now referred to as servers rather than mainframes.

- Supercomputers – The most powerful computers to date are commonly referred to as supercomputers. Supercomputers are enormous systems that are purpose-built to solve complicated scientific and industrial problems. Quantum mechanics, weather forecasting, oil and gas exploration, molecular modelling, physical simulations, aerodynamics, nuclear fusion research, and cryptanalysis are all done on supercomputers.

- Minicomputers – A minicomputer is a type of computer that has many of the same features and capabilities as a larger computer but is smaller in size. Minicomputers, which were relatively small and affordable, were often employed in a single department of an organization and were often dedicated to a specific task or shared by a small group.

- Microcomputers – A microcomputer is a small computer that is based on a microprocessor integrated circuit, often known as a chip. A microcomputer is a system that incorporates at a minimum a microprocessor, program memory, data memory, and input-output system (I/O). A microcomputer is now commonly referred to as a personal computer (PC).

- Embedded processors – These are miniature computers that control electrical and mechanical processes with basic microprocessors. Embedded processors are often simple in design, have limited processing capability and I/O capabilities, and need little power. Ordinary microprocessors and microcontrollers are the two primary types of embedded processors. Embedded processors are employed in systems that do not require the computing capability of traditional devices such as desktop computers, laptop computers, or workstations.

FAQs on History of Computers

Q: The principle of modern computers was proposed by ____

- Adam Osborne

- Alan Turing

- Charles Babbage

Ans: The correct answer is C.

Q: Who introduced the first computer for home use in 1981?

- Sun Technology

Ans: Answer is A. IBM made the first home-use personal computer.

Q: Third-generation computers used which programming language?

- Machine language

Ans: The correct option is C.

History of computers: A brief timeline

The history of computers began with primitive designs in the early 19th century and went on to change the world during the 20th century.

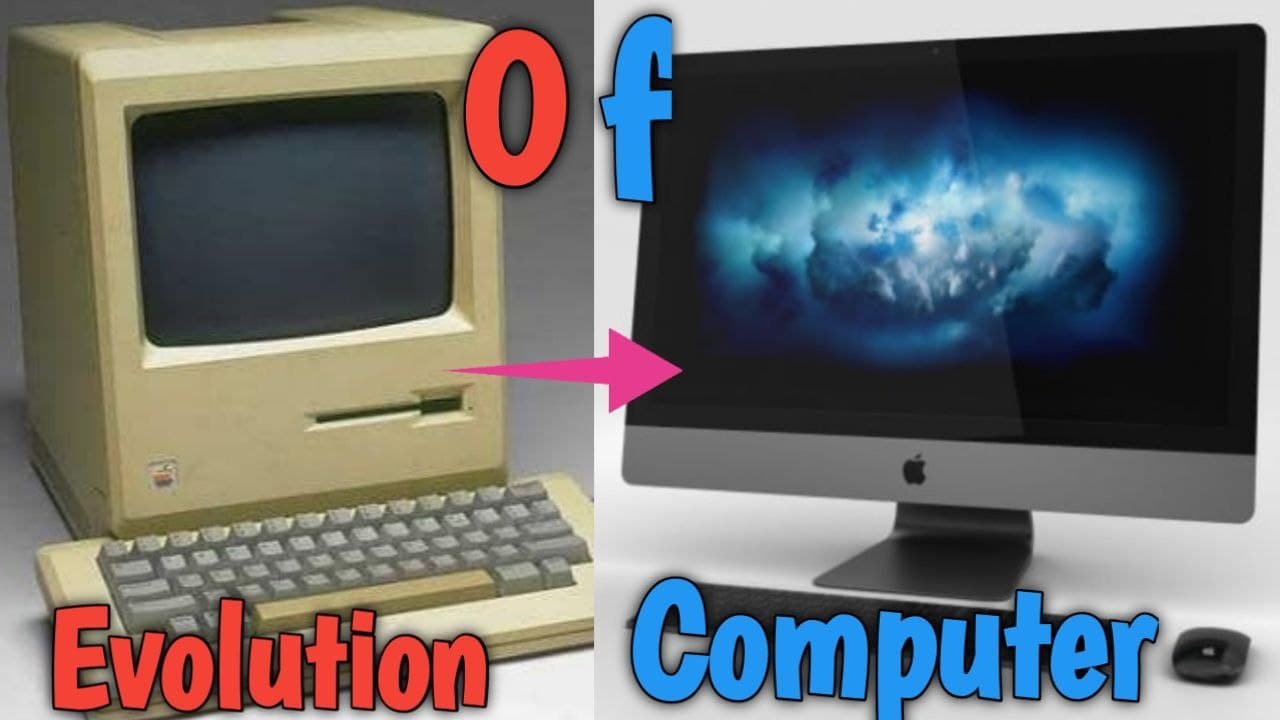

The history of computers goes back over 200 years. At first theorized by mathematicians and entrepreneurs, during the 19th century mechanical calculating machines were designed and built to solve the increasingly complex number-crunching challenges. The advancement of technology enabled ever more-complex computers by the early 20th century, and computers became larger and more powerful.

Today, computers are almost unrecognizable from designs of the 19th century, such as Charles Babbage's Analytical Engine, or even from the huge computers of the 20th century that occupied whole rooms, such as the Electronic Numerical Integrator and Computer.

Here's a brief history of computers, from their primitive number-crunching origins to the powerful modern-day machines that surf the Internet, run games and stream multimedia.

19th century

1801: Joseph Marie Jacquard, a French merchant and inventor, invents a loom that uses punched wooden cards to automatically weave fabric designs. Early computers would use similar punch cards.

1821: English mathematician Charles Babbage conceives of a steam-driven calculating machine that would be able to compute tables of numbers. Funded by the British government, the project, called the "Difference Engine," fails due to the lack of technology at the time, according to the University of Minnesota.

1843: Ada Lovelace, an English mathematician and the daughter of poet Lord Byron, writes the world's first computer program. According to Anna Siffert, a professor of theoretical mathematics at the University of Münster in Germany, Lovelace writes the first program while translating a paper on Babbage's Analytical Engine from French into English. "She also provides her own comments on the text. Her annotations, simply called 'notes,' turn out to be three times as long as the actual transcript," Siffert wrote in an article for The Max Planck Society. "Lovelace also adds a step-by-step description for computation of Bernoulli numbers with Babbage's machine, basically an algorithm, which, in effect, makes her the world's first computer programmer." Bernoulli numbers are a sequence of rational numbers often used in computation.
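To make the idea of computing Bernoulli numbers concrete, here is a minimal Python sketch. It is not a reconstruction of Lovelace's program; it uses a standard textbook recurrence, and the function name `bernoulli_numbers` is an assumption chosen for illustration.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention B_1 = -1/2)."""
    b = [Fraction(1)]
    for m in range(1, n + 1):
        # Recurrence: sum over k = 0..m of C(m + 1, k) * B_k equals 0,
        # solved here for the unknown B_m.
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(4)):
    print(index, value)
# 0 1
# 1 -1/2
# 2 1/6
# 3 0
# 4 -1/30
```

Using `Fraction` keeps every value exact, which matches the description of Bernoulli numbers as a sequence of rational numbers.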

1853: Swedish inventor Per Georg Scheutz and his son Edvard design the world's first printing calculator. The machine is significant for being the first to "compute tabular differences and print the results," according to Uta C. Merzbach's book, "Georg Scheutz and the First Printing Calculator" (Smithsonian Institution Press, 1977).

1890: Herman Hollerith designs a punch-card system to help calculate the 1890 U.S. Census. The machine saves the government several years of calculations, and the U.S. taxpayer approximately $5 million, according to Columbia University. Hollerith later establishes a company that will eventually become International Business Machines Corporation (IBM).

Early 20th century

1931: At the Massachusetts Institute of Technology (MIT), Vannevar Bush invents and builds the Differential Analyzer, the first large-scale automatic general-purpose mechanical analog computer, according to Stanford University.

1936: Alan Turing, a British scientist and mathematician, presents the principle of a universal machine, later called the Turing machine, in a paper called "On Computable Numbers…" according to Chris Bernhardt's book "Turing's Vision" (The MIT Press, 2017). Turing machines are capable of computing anything that is computable. The central concept of the modern computer is based on his ideas. Turing is later involved in the development of the Turing-Welchman Bombe, an electro-mechanical device designed to decipher Nazi codes during World War II, according to the UK's National Museum of Computing.
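As a rough illustration of what a Turing machine does, the Python sketch below simulates a one-tape machine driven by a table of rules. The simulator, the rule format, the state names and the binary-increment example are all assumptions made for this sketch; none of them come from Turing's paper.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Simulate a one-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (new_symbol, move, new_state), where move
    is -1 (left) or +1 (right). The machine stops in state "halt", when no
    rule applies, or after max_steps steps.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        if (state, symbol) not in rules:
            break
        new_symbol, move, state = rules[(state, symbol)]
        cells[head] = new_symbol
        head += move
    if not cells:
        return ""
    low, high = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(low, high + 1)).strip(blank)


# Hypothetical rule table: add 1 to a binary number written on the tape.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry continues left
    ("carry", "0"): ("1", -1, "halt"),   # absorb the carry and stop
    ("carry", "_"): ("1", -1, "halt"),   # number was all 1s: new leading digit
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # 1100
```

The whole program lives in the rule table; changing the table changes what the same simulator computes.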

1937: John Vincent Atanasoff, a professor of physics and mathematics at Iowa State University, submits a grant proposal to build the first electric-only computer, without using gears, cams, belts or shafts.

1939: David Packard and Bill Hewlett found the Hewlett Packard Company in Palo Alto, California. The pair decide the name of their new company by the toss of a coin, and Hewlett-Packard's first headquarters are in Packard's garage, according to MIT.

1941: German inventor and engineer Konrad Zuse completes his Z3 machine, the world's earliest digital computer, according to Gerard O'Regan's book "A Brief History of Computing" (Springer, 2021). The machine was destroyed during a bombing raid on Berlin during World War II. Zuse fled the German capital after the defeat of Nazi Germany and later released the world's first commercial digital computer, the Z4, in 1950, according to O'Regan.

1941: Atanasoff and his graduate student, Clifford Berry, design the first digital electronic computer in the U.S., called the Atanasoff-Berry Computer (ABC). This marks the first time a computer is able to store information on its main memory. The machine is capable of performing one operation every 15 seconds, according to the book "Birthing the Computer" (Cambridge Scholars Publishing, 2016).

1945: Two professors at the University of Pennsylvania, John Mauchly and J. Presper Eckert, design and build the Electronic Numerical Integrator and Computer (ENIAC). The machine is the first "automatic, general-purpose, electronic, decimal, digital computer," according to Edwin D. Reilly's book "Milestones in Computer Science and Information Technology" (Greenwood Press, 2003).

1946: Mauchly and Eckert leave the University of Pennsylvania and receive funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

1947: William Shockley, John Bardeen and Walter Brattain of Bell Laboratories invent the transistor. They discover how to make an electric switch with solid materials and without the need for a vacuum.

1949: A team at the University of Cambridge develops the Electronic Delay Storage Automatic Calculator (EDSAC), "the first practical stored-program computer," according to O'Regan. "EDSAC ran its first program in May 1949 when it calculated a table of squares and a list of prime numbers," O'Regan wrote. In November 1949, scientists with the Council of Scientific and Industrial Research (CSIR), now called CSIRO, build Australia's first digital computer called the Council for Scientific and Industrial Research Automatic Computer (CSIRAC). CSIRAC is the first digital computer in the world to play music, according to O'Regan.

Late 20th century

1953: Grace Hopper develops the first computer language, which eventually becomes known as COBOL, which stands for COmmon Business-Oriented Language, according to the National Museum of American History. Hopper is later dubbed the "First Lady of Software" in her posthumous Presidential Medal of Freedom citation. Thomas Johnson Watson Jr., son of IBM CEO Thomas Johnson Watson Sr., conceives the IBM 701 EDPM to help the United Nations keep tabs on Korea during the war.

1954: John Backus and his team of programmers at IBM publish a paper describing their newly created FORTRAN programming language, an acronym for FORmula TRANslation, according to MIT.

1958: Jack Kilby and Robert Noyce unveil the integrated circuit, known as the computer chip. Kilby is later awarded the Nobel Prize in Physics for his work.

1968: Douglas Engelbart reveals a prototype of the modern computer at the Fall Joint Computer Conference, San Francisco. His presentation, called "A Research Center for Augmenting Human Intellect," includes a live demonstration of his computer, including a mouse and a graphical user interface (GUI), according to the Doug Engelbart Institute. This marks the development of the computer from a specialized machine for academics to a technology that is more accessible to the general public.

1969: Ken Thompson, Dennis Ritchie and a group of other developers at Bell Labs produce UNIX, an operating system that made "large-scale networking of diverse computing systems, and the internet, practical," according to Bell Labs. The team behind UNIX continued to develop the operating system using the C programming language, which they also optimized.

1970: The newly formed Intel unveils the Intel 1103, the first Dynamic Random-Access Memory (DRAM) chip.

1971: A team of IBM engineers led by Alan Shugart invents the "floppy disk," enabling data to be shared among different computers.

1972: Ralph Baer, a German-American engineer, releases Magnavox Odyssey, the world's first home game console, in September 1972, according to the Computer Museum of America. Months later, entrepreneur Nolan Bushnell and engineer Al Alcorn with Atari release Pong, the world's first commercially successful video game.

1973: Robert Metcalfe, a member of the research staff for Xerox, develops Ethernet for connecting multiple computers and other hardware.

1975: The magazine cover of the January issue of "Popular Electronics" highlights the Altair 8800 as the "world's first minicomputer kit to rival commercial models." After seeing the magazine issue, two "computer geeks," Paul Allen and Bill Gates, offer to write software for the Altair, using the new BASIC language. On April 4, after the success of this first endeavor, the two childhood friends form their own software company, Microsoft.

1976: Steve Jobs and Steve Wozniak co-found Apple Computer on April Fool's Day. They unveil Apple I, the first computer with a single-circuit board and ROM (Read Only Memory), according to MIT.

1977: The Commodore Personal Electronic Transactor (PET) is released onto the home computer market, featuring an MOS Technology 8-bit 6502 microprocessor, which controls the screen, keyboard and cassette player. The PET is especially successful in the education market, according to O'Regan.

1977: Radio Shack begins its initial production run of 3,000 TRS-80 Model 1 computers (disparagingly known as the "Trash 80"), priced at $599, according to the National Museum of American History. Within a year, the company takes 250,000 orders for the computer, according to the book "How TRS-80 Enthusiasts Helped Spark the PC Revolution" (The Seeker Books, 2007).

1977: The first West Coast Computer Faire is held in San Francisco. Jobs and Wozniak present the Apple II computer at the Faire, which includes color graphics and features an audio cassette drive for storage.

1978: VisiCalc, the first computerized spreadsheet program, is introduced.

1979: MicroPro International, founded by software engineer Seymour Rubenstein, releases WordStar, the world's first commercially successful word processor. WordStar is programmed by Rob Barnaby, and includes 137,000 lines of code, according to Matthew G. Kirschenbaum's book " Track Changes: A Literary History of Word Processing " (Harvard University Press, 2016).

1981: "Acorn," IBM's first personal computer, is released onto the market at a price point of $1,565, according to IBM. Acorn uses the MS-DOS operating system from Microsoft. Optional features include a display, printer, two diskette drives, extra memory, a game adapter and more.

1983: The Apple Lisa, standing for "Local Integrated Software Architecture" but also the name of Steve Jobs' daughter, according to the National Museum of American History ( NMAH ), is the first personal computer to feature a GUI. The machine also includes a drop-down menu and icons. Also this year, the Gavilan SC is released and is the first portable computer with a flip-form design and the very first to be sold as a "laptop."

1984: The Apple Macintosh is announced to the world during a Super Bowl advertisement. The Macintosh is launched with a retail price of $2,500, according to the NMAH.

1985: As a response to the Apple Lisa's GUI, Microsoft releases Windows in November 1985, the Guardian reported. Meanwhile, Commodore announces the Amiga 1000.

1989: Tim Berners-Lee, a British researcher at the European Organization for Nuclear Research ( CERN ), submits his proposal for what would become the World Wide Web. His paper details his ideas for Hyper Text Markup Language (HTML), the building blocks of the Web.

1993: The Pentium microprocessor advances the use of graphics and music on PCs.

1996: Sergey Brin and Larry Page develop the Google search engine at Stanford University.

1997: Microsoft invests $150 million in Apple, which at the time is struggling financially. This investment ends an ongoing court case in which Apple accused Microsoft of copying its operating system.

1999: Wi-Fi, the abbreviated term for "wireless fidelity," is developed, initially covering a distance of up to 300 feet (91 meters), Wired reported.

21st century

2001: Mac OS X, later renamed OS X then simply macOS, is released by Apple as the successor to its standard Mac Operating System. OS X goes through 16 different versions, each with "10" as its title, and the first nine iterations are nicknamed after big cats, with the first being codenamed "Cheetah," TechRadar reported.

2003: AMD's Athlon 64, the first 64-bit processor for personal computers, is released to customers.

2004: The Mozilla Corporation launches Mozilla Firefox 1.0. The Web browser is one of the first major challenges to Internet Explorer, owned by Microsoft. During its first five years, Firefox exceeded a billion downloads by users, according to the Web Design Museum .

2005: Google buys Android, a Linux-based mobile phone operating system.

2006: The MacBook Pro from Apple hits the shelves. The Pro is the company's first Intel-based, dual-core mobile computer.

2009: Microsoft launches Windows 7 on July 22. The new operating system features the ability to pin applications to the taskbar, scatter windows away by shaking another window, easy-to-access jumplists, easier previews of tiles and more, TechRadar reported .

2010: The iPad, Apple's flagship handheld tablet, is unveiled.

2011: Google releases the Chromebook, which runs on Google Chrome OS.

2015: Apple releases the Apple Watch. Microsoft releases Windows 10.

2016: The first reprogrammable quantum computer was created. "Until now, there hasn't been any quantum-computing platform that had the capability to program new algorithms into their system. They're usually each tailored to attack a particular algorithm," said study lead author Shantanu Debnath, a quantum physicist and optical engineer at the University of Maryland, College Park.

2017: The Defense Advanced Research Projects Agency (DARPA) is developing a new "Molecular Informatics" program that uses molecules as computers. "Chemistry offers a rich set of properties that we may be able to harness for rapid, scalable information storage and processing," Anne Fischer, program manager in DARPA's Defense Sciences Office, said in a statement. "Millions of molecules exist, and each molecule has a unique three-dimensional atomic structure as well as variables such as shape, size, or even color. This richness provides a vast design space for exploring novel and multi-value ways to encode and process data beyond the 0s and 1s of current logic-based, digital architectures."

2019: A team at Google became the first to demonstrate quantum supremacy, creating a quantum computer that could feasibly outperform the most powerful classical computer, albeit for a very specific problem with no practical real-world application. They described the computer, dubbed "Sycamore," in a paper that same year in the journal Nature. Achieving quantum advantage, in which a quantum computer solves a problem with real-world applications faster than the most powerful classical computer, is still a ways off.

2022: The first exascale supercomputer, and the world's fastest, Frontier, went online at the Oak Ridge Leadership Computing Facility (OLCF) in Tennessee. Built by Hewlett Packard Enterprise (HPE) at the cost of $600 million, Frontier uses nearly 10,000 AMD EPYC 7453 64-core CPUs alongside nearly 40,000 AMD Radeon Instinct MI250X GPUs. This machine ushered in the era of exascale computing, which refers to systems that can exceed one exaFLOPS, a measure of performance equal to a quintillion (10^18) floating-point operations per second. Only one machine – Frontier – is currently capable of reaching such levels of performance. It is currently being used as a tool to aid scientific discovery.

What is the first computer in history?

Charles Babbage's Difference Engine, designed in the 1820s, is considered the first "mechanical" computer in history, according to the Science Museum in the U.K . Powered by steam with a hand crank, the machine calculated a series of values and printed the results in a table.

What are the five generations of computing?

The "five generations of computing" is a framework for assessing the entire history of computing and the key technological advancements throughout it.

The first generation, spanning the 1940s to the 1950s, covered vacuum tube-based machines. The second then progressed to incorporate transistor-based computing between the 50s and the 60s. In the 60s and 70s, the third generation gave rise to integrated circuit-based computing. We are now in between the fourth and fifth generations of computing, which are microprocessor-based and AI-based computing.

What is the most powerful computer in the world?

As of November 2023, the most powerful computer in the world is the Frontier supercomputer. The machine, which can reach a performance level of up to 1.102 exaFLOPS, ushered in the age of exascale computing in 2022 when it went online at Tennessee's Oak Ridge Leadership Computing Facility (OLCF).

There is, however, a potentially more powerful supercomputer waiting in the wings in the form of the Aurora supercomputer, which is housed at the Argonne National Laboratory (ANL) outside of Chicago. Aurora went online in November 2023. Right now, it lags far behind Frontier, with performance levels of just 585.34 petaFLOPS (roughly half the performance of Frontier), although it's still not finished. When work is completed, the supercomputer is expected to reach performance levels higher than 2 exaFLOPS.
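The "roughly half" comparison is simple unit arithmetic. A minimal Python sketch, using only the two performance figures quoted above (the helper name `to_petaflops` is hypothetical), shows where it comes from:

```python
# 1 exaFLOPS = 1,000 petaFLOPS
PETA_PER_EXA = 1_000

def to_petaflops(exaflops: float) -> float:
    """Convert exaFLOPS to petaFLOPS (hypothetical helper for illustration)."""
    return exaflops * PETA_PER_EXA

frontier_pflops = to_petaflops(1.102)   # Frontier's quoted peak, in petaFLOPS
aurora_pflops = 585.34                  # Aurora's quoted November 2023 figure

# Fraction of Frontier's performance that Aurora reached at that time
ratio = aurora_pflops / frontier_pflops
print(f"Aurora / Frontier = {ratio:.2f}")
```

Working in a single unit before dividing avoids the easy mistake of comparing a petaFLOPS figure directly against an exaFLOPS one.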

What was the first killer app?

Killer apps are widely understood to be those so essential that they are core to the technology they run on. There have been many through the years – from Word for Windows in 1989 to iTunes in 2001 to social media apps like WhatsApp in more recent years.

Several pieces of software may stake a claim to be the first killer app, but there is a broad consensus that VisiCalc, a spreadsheet program created by VisiCorp and originally released for the Apple II in 1979, holds that title. Steve Jobs even credits this app for propelling the Apple II to become the success it was, according to co-creator Dan Bricklin .

- Fortune: A Look Back At 40 Years of Apple

- The New Yorker: The First Windows

- " A Brief History of Computing " by Gerard O'Regan (Springer, 2021)

Timothy is Editor in Chief of print and digital magazines All About History and History of War . He has previously worked on sister magazine All About Space , as well as photography and creative brands including Digital Photographer and 3D Artist . He has also written for How It Works magazine, several history bookazines and has a degree in English Literature from Bath Spa University .

History of Computers

Before computers were developed, people used sticks, stones, and bones as counting tools. As technology advanced, more capable computing devices were developed, such as the abacus and Napier’s Bones. These devices were used to perform mathematical computations, but not very complex ones.

Some of the popular computing devices are described below, starting from the oldest to the latest or most advanced technology developed:

Abacus

Around 4000 years ago, the Chinese invented the abacus, which is believed to be the first computer. The history of computers begins with the birth of the abacus.

Structure: The abacus is basically a wooden rack that has metal rods with beads mounted on them.

Working of abacus: The operator moves the beads according to set rules to perform arithmetic calculations. The abacus is still used in some countries, such as China, Russia, and Japan.

Napier’s Bones

Napier’s Bones was a manually operated calculating device and as the name indicates, it was invented by John Napier. In this device, he used 9 different ivory strips (bones) marked with numbers to multiply and divide for calculation. It was also the first machine to use the decimal point system for calculation.

Pascaline

The Pascaline is also called the Arithmetic Machine or Adding Machine. The French mathematician-philosopher Blaise Pascal invented it between 1642 and 1644. It was the first mechanical and automatic calculator. Pascal built it to help his father, a tax accountant, with his calculations. It could perform addition and subtraction quickly. It was basically a wooden box with a series of gears and wheels. It worked by rotating the wheels: when one wheel completed a full revolution, it advanced the neighbouring wheel, and a series of windows above the wheels showed the totals.
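The wheel-to-wheel carry described above can be modelled in a few lines of Python. This is only an illustrative sketch, not a description of Pascal's actual gearing: each wheel is treated as a digit from 0 to 9, and the function names `add_on_wheels` and `read_windows` are hypothetical.

```python
def add_on_wheels(wheels, amount):
    """Add `amount` to a row of decimal wheels.

    `wheels` lists digits from the units wheel upward. A wheel that passes 9
    wraps to 0 and advances its neighbour. Any carry past the last wheel is
    dropped, as it would be on a machine with a fixed number of wheels.
    """
    wheels = list(wheels)              # work on a copy
    position = 0
    carry = amount
    while carry and position < len(wheels):
        total = wheels[position] + carry
        wheels[position] = total % 10  # digit shown in this wheel's window
        carry = total // 10            # full revolutions passed to the neighbour
        position += 1
    return wheels

def read_windows(wheels):
    """Read the displayed total as an integer, highest wheel first."""
    return int("".join(str(digit) for digit in reversed(wheels)))

wheels = [9, 9, 0, 0]                  # the windows show 0099
wheels = add_on_wheels(wheels, 1)      # turn the units wheel by one step
print(read_windows(wheels))
```

Adding one to 99 forces two wheels in a row to wrap around, which is the chained carry that makes a mechanical adder automatic rather than a simple counter.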

Stepped Reckoner or Leibniz wheel

In 1673, the German mathematician-philosopher Gottfried Wilhelm Leibniz developed this machine by improving on Pascal’s invention. It was a digital mechanical calculator, called the stepped reckoner because it was built from fluted drums instead of the gears used in the Pascaline.

Difference Engine

Charles Babbage, who is also known as the “Father of the Modern Computer,” designed the Difference Engine in the early 1820s. The Difference Engine was a mechanical computer capable of performing simple calculations. It was a steam-driven calculating machine designed to compute tables of numbers, such as logarithm tables.
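Tables of this kind can be produced with nothing but repeated addition, using the method of finite differences, which is the principle the Difference Engine is generally understood to mechanise. The Python sketch below illustrates the idea under that assumption; the function name `tabulate` and the sample polynomial are hypothetical choices for the example.

```python
def tabulate(initial_differences, count):
    """Tabulate a polynomial using only addition.

    `initial_differences` holds the starting value followed by its first,
    second, ... differences. For a polynomial of degree n the n-th
    difference is constant, so n + 1 starting numbers are enough.
    """
    diffs = list(initial_differences)
    table = []
    for _ in range(count):
        table.append(diffs[0])
        # Each entry absorbs the difference one level below it.
        for level in range(len(diffs) - 1):
            diffs[level] += diffs[level + 1]
    return table

# f(x) = x*x + x + 41 for x = 0, 1, 2, ...
# f(0) = 41, first difference f(1) - f(0) = 2, constant second difference = 2
print(tabulate([41, 2, 2], 6))
```

No multiplication appears anywhere in the loop, which is why a machine built only from adding wheels could, in principle, generate long tables of values.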

Analytical Engine

In the 1830s, Charles Babbage designed another calculating machine, the Analytical Engine. The Analytical Engine was a mechanical computer that used punch cards as input. It was designed to solve any mathematical problem and to store information in a permanent memory (storage).

Tabulating Machine

Herman Hollerith, an American statistician, invented this machine in 1890. The Tabulating Machine was a mechanical tabulator based on punch cards. It could tabulate statistics and record or sort data. The machine was used for the 1890 U.S. Census. Hollerith founded the Tabulating Machine Company, which later became International Business Machines (IBM) in 1924.

Differential Analyzer

The Differential Analyzer, introduced in the United States in 1930, was an analog computer invented by Vannevar Bush. It is sometimes described as the first electronic computer, although Bush's original machine was largely mechanical; later versions used vacuum tubes to switch electrical signals to perform calculations. It was capable of doing 25 calculations in a few minutes.

Mark I

In 1937, major changes began in the history of computers when Howard Aiken planned a machine that could perform calculations involving large numbers. In 1944, the Mark I computer was built as a partnership between IBM and Harvard. It was also the first programmable digital computer, marking a new era in the computer world.

Generations of Computers

First Generation Computers

The period 1940-1956 is referred to as the first generation of computers. These machines were slow, huge, and expensive. Vacuum tubes were used as the basic components of the CPU and memory. The machines mainly depended on batch operating systems and punch cards, and magnetic tape and paper tape were used as output and input devices. Examples include ENIAC, UNIVAC-1, and EDVAC.

Second Generation Computers

The period 1957-1963 is referred to as the second generation of computers. It was the time of transistor computers. Transistors were cheap, compact, and consumed less power, and transistor computers were faster than first-generation computers. Magnetic cores were used for primary memory, and magnetic discs and tapes for secondary storage. Assembly language and high-level programming languages such as COBOL and FORTRAN were used, along with batch processing and multiprogramming operating systems.

For example IBM 1620, IBM 7094, CDC 1604, CDC 3600, etc.

Third Generation Computers

In the third generation of computers, integrated circuits (ICs) were used instead of the individual transistors of the second generation. A single IC contains many transistors, which increased the power of a computer and reduced its cost. Third-generation computers were more reliable, more efficient, and smaller in size. They used remote processing, time-sharing, and multiprogramming operating systems. High-level programming languages such as FORTRAN II to IV, COBOL, Pascal, and PL/I were used.

For example IBM-360 series, Honeywell-6000 series, IBM-370/168, etc.

Fourth Generation Computers

The period 1971-1980 was mainly the time of fourth-generation computers. They used VLSI (Very Large Scale Integrated) circuits. A VLSI chip contains millions of transistors and other circuit elements, and because of these chips the computers of this generation were more compact, powerful, fast, and affordable. These computers used real-time, time-sharing, and distributed operating systems. C and C++ were used as programming languages in this generation.

For example STAR 1000, PDP 11, CRAY-1, CRAY-X-MP, etc.

Fifth Generation Computers

These computers have been in use from 1980 to the present. ULSI (Ultra Large Scale Integration) technology is used in fifth-generation computers instead of the VLSI technology of fourth-generation computers. Microprocessor chips with ten million electronic components are used in these computers. Parallel processing hardware and AI (Artificial Intelligence) software are also used. Programming languages such as C, C++, Java, and .NET are used.

For example Desktop, Laptop, NoteBook, UltraBook, etc.

Sample Questions

Let us now see some sample questions on the History of computers:

Question 1: The Arithmetic Machine or Adding Machine was invented between ___________.

a. 1642 and 1644

b. Around 4000 years ago

c. 1946 – 1956

d. None of the above

Solution:

a. 1642 and 1644 Explanation: The Pascaline is also called the Arithmetic Machine or Adding Machine. The French mathematician-philosopher Blaise Pascal invented it between 1642 and 1644.

Question 2: Who designed the Difference Engine?

a. Blaise Pascal

b. Gottfried Wilhelm Leibniz

c. Vannevar Bush

d. Charles Babbage

Solution:

d. Charles Babbage Explanation: Charles Babbage, who is also known as the “Father of the Modern Computer,” designed the Difference Engine in the early 1820s.

Question 3: In second-generation computers, _______________ were used as high-level programming languages.

a. C and C++.

b. COBOL and FORTRAN

c. C and .NET

d. None of the above.

Solution:

b. COBOL and FORTRAN Explanation: In second-generation computers, assembly language and high-level programming languages such as COBOL and FORTRAN were used, along with batch processing and multiprogramming operating systems.

Question 4: ENIAC and UNIVAC-1 are examples of which generation of computers?

a. First generation of computers.

b. Second generation of computers.

c. Third generation of computers.

d. Fourth generation of computers.

Solution:

a. First generation of computers. Explanation: ENIAC, UNIVAC-1, EDVAC, etc. are examples of the first generation of computers.

Question 5: The ______________ technology is used in fifth-generation computers.

a. ULSI (Ultra Large Scale Integration)

b. VLSI (Very Large Scale Integrated)

c. vacuum tubes

d. All of the above

Solution:

a. ULSI (Ultra Large Scale Integration) Explanation: Fifth-generation computers have been in use from 1980 to the present. ULSI (Ultra Large Scale Integration) technology is used in fifth-generation computers.

A brief history of computers

by Chris Woodford . Last updated: January 19, 2023.

Computers truly came into their own as great inventions in the last two decades of the 20th century. But their history stretches back more than 2500 years to the abacus: a simple calculator made from beads and wires, which is still used in some parts of the world today. The difference between an ancient abacus and a modern computer seems vast, but the principle (making repeated calculations more quickly than the human brain) is exactly the same.

Read on to learn more about the history of computers—or take a look at our article on how computers work .

Photo: A model of one of the world's first computers (the Difference Engine invented by Charles Babbage) at the Computer History Museum in Mountain View, California, USA. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

Cogs and Calculators

It is a measure of the brilliance of the abacus, invented in the Middle East circa 500 BC, that it remained the fastest form of calculator until the middle of the 17th century. Then, in 1642, aged only 18, French scientist and philosopher Blaise Pascal (1623–1662) invented the first practical mechanical calculator, the Pascaline, to help his tax-collector father do his sums. The machine had a series of interlocking cogs (gear wheels with teeth around their outer edges) that could add and subtract decimal numbers. Several decades later, in 1671, German mathematician and philosopher Gottfried Wilhelm Leibniz (1646–1716) came up with a similar but more advanced machine. Instead of using cogs, it had a "stepped drum" (a cylinder with teeth of increasing length around its edge), an innovation that survived in mechanical calculators for 300 years. The Leibniz machine could do much more than Pascal's: as well as adding and subtracting, it could multiply, divide, and work out square roots. Another pioneering feature was the first memory store or "register."

Apart from developing one of the world's earliest mechanical calculators, Leibniz is remembered for another important contribution to computing: he was the man who invented binary code, a way of representing any decimal number using only the two digits zero and one. Although Leibniz made no use of binary in his own calculator, it set others thinking. In 1854, a little over a century after Leibniz had died, Englishman George Boole (1815–1864) used the idea to invent a new branch of mathematics called Boolean algebra. [1] In modern computers, binary code and Boolean algebra allow computers to make simple decisions by comparing long strings of zeros and ones. But, in the 19th century, these ideas were still far ahead of their time. It would take another 50–100 years for mathematicians and computer scientists to figure out how to use them (find out more in our articles about calculators and logic gates ).

Artwork: Pascaline: Two details of Blaise Pascal's 17th-century calculator. Left: The "user interface": the part where you dial in numbers you want to calculate. Right: The internal gear mechanism. Picture courtesy of US Library of Congress .

Engines of Calculation

Neither the abacus, nor the mechanical calculators constructed by Pascal and Leibniz really qualified as computers. A calculator is a device that makes it quicker and easier for people to do sums—but it needs a human operator. A computer, on the other hand, is a machine that can operate automatically, without any human help, by following a series of stored instructions called a program (a kind of mathematical recipe). Calculators evolved into computers when people devised ways of making entirely automatic, programmable calculators.

Photo: Punched cards: Herman Hollerith perfected the way of using punched cards and paper tape to store information and feed it into a machine. Here's a drawing from his 1889 patent Art of Compiling Statistics (US Patent#395,782), showing how a strip of paper (yellow) is punched with different patterns of holes (orange) that correspond to statistics gathered about people in the US census. Picture courtesy of US Patent and Trademark Office.

The first person to attempt this was a rather obsessive, notoriously grumpy English mathematician named Charles Babbage (1791–1871). Many regard Babbage as the "father of the computer" because his machines had an input (a way of feeding in numbers), a memory (something to store these numbers while complex calculations were taking place), a processor (the number-cruncher that carried out the calculations), and an output (a printing mechanism)—the same basic components shared by all modern computers. During his lifetime, Babbage never completed a single one of the hugely ambitious machines that he tried to build. That was no surprise. Each of his programmable "engines" was designed to use tens of thousands of precision-made gears. It was like a pocket watch scaled up to the size of a steam engine , a Pascal or Leibniz machine magnified a thousand-fold in dimensions, ambition, and complexity. For a time, the British government financed Babbage—to the tune of £17,000, then an enormous sum. But when Babbage pressed the government for more money to build an even more advanced machine, they lost patience and pulled out. Babbage was more fortunate in receiving help from Augusta Ada Byron (1815–1852), Countess of Lovelace, daughter of the poet Lord Byron. An enthusiastic mathematician, she helped to refine Babbage's ideas for making his machine programmable—and this is why she is still, sometimes, referred to as the world's first computer programmer. [2] Little of Babbage's work survived after his death. But when, by chance, his notebooks were rediscovered in the 1930s, computer scientists finally appreciated the brilliance of his ideas. Unfortunately, by then, most of these ideas had already been reinvented by others.

Artwork: Charles Babbage (1791–1871). Picture from The Illustrated London News, 1871, courtesy of US Library of Congress .

Babbage had intended that his machine would take the drudgery out of repetitive calculations. Originally, he imagined it would be used by the army to compile the tables that helped their gunners to fire cannons more accurately. Toward the end of the 19th century, other inventors were more successful in their effort to construct "engines" of calculation. American statistician Herman Hollerith (1860–1929) built one of the world's first practical calculating machines, which he called a tabulator, to help compile census data. Then, as now, a census was taken each decade but, by the 1880s, the population of the United States had grown so much through immigration that a full-scale analysis of the data by hand was taking seven and a half years. The statisticians soon figured out that, if trends continued, they would run out of time to compile one census before the next one fell due. Fortunately, Hollerith's tabulator was an amazing success: it tallied the entire census in only six weeks and completed the full analysis in just two and a half years. Soon afterward, Hollerith realized his machine had other applications, so he set up the Tabulating Machine Company in 1896 to manufacture it commercially. A few years later, it changed its name to the Computing-Tabulating-Recording (C-T-R) company and then, in 1924, acquired its present name: International Business Machines (IBM).

Photo: Keeping count: Herman Hollerith's late-19th-century census machine (blue, left) could process 12 separate bits of statistical data each minute. Its compact 1940 replacement (red, right), invented by Eugene M. La Boiteaux of the Census Bureau, could work almost five times faster. Photo by Harris & Ewing courtesy of US Library of Congress .

Bush and the bomb

Photo: Dr Vannevar Bush (1890–1974). Picture by Harris & Ewing, courtesy of US Library of Congress .

The history of computing remembers colorful characters like Babbage, but others who played important—if supporting—roles are less well known. At the time when C-T-R was becoming IBM, the world's most powerful calculators were being developed by US government scientist Vannevar Bush (1890–1974). In 1925, Bush made the first of a series of unwieldy contraptions with equally cumbersome names: the New Recording Product Integraph Multiplier. Later, he built a machine called the Differential Analyzer, which used gears, belts, levers, and shafts to represent numbers and carry out calculations in a very physical way, like a gigantic mechanical slide rule. Bush's ultimate calculator was an improved machine named the Rockefeller Differential Analyzer, assembled in 1935 from 320 km (200 miles) of wire and 150 electric motors . Machines like these were known as analog calculators—analog because they stored numbers in a physical form (as so many turns on a wheel or twists of a belt) rather than as digits. Although they could carry out incredibly complex calculations, it took several days of wheel cranking and belt turning before the results finally emerged.

Impressive machines like the Differential Analyzer were only one of several outstanding contributions Bush made to 20th-century technology. Another came as the teacher of Claude Shannon (1916–2001), a brilliant mathematician who figured out how electrical circuits could be linked together to process binary code with Boolean algebra (a way of comparing binary numbers using logic) and thus make simple decisions. During World War II, President Franklin D. Roosevelt appointed Bush chairman first of the US National Defense Research Committee and then director of the Office of Scientific Research and Development (OSRD). In this capacity, he was in charge of the Manhattan Project, the secret $2-billion initiative that led to the creation of the atomic bomb. One of Bush's final wartime contributions was to sketch out, in 1945, an idea for a memory-storing and sharing device called Memex that would later inspire Tim Berners-Lee to invent the World Wide Web . [3] Few outside the world of computing remember Vannevar Bush today—but what a legacy! As a father of the digital computer, an overseer of the atom bomb, and an inspiration for the Web, Bush played a pivotal role in three of the 20th-century's most far-reaching technologies.
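Shannon's insight, that circuits can apply Boolean algebra to binary digits, can be illustrated with a half adder, a standard textbook circuit that adds two bits. The Python sketch below uses the language's bitwise operators to stand in for logic gates; it is an illustration only, not a reconstruction of any circuit from Shannon's own work, and the function names are hypothetical.

```python
def half_adder(a, b):
    """Add two bits; return (sum_bit, carry_bit)."""
    return a ^ b, a & b            # XOR gives the sum, AND gives the carry

def full_adder(a, b, carry_in):
    """Add two bits plus an incoming carry, built from two half adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2             # OR merges the two possible carries

def add_binary(x_bits, y_bits):
    """Add two equal-length bit lists, least significant bit first."""
    result, carry = [], 0
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry)
        result.append(s)
    return result + [carry]

# 6 (binary 110) + 3 (binary 011), written least significant bit first
print(add_binary([0, 1, 1], [1, 1, 0]))
```

Chaining one full adder per bit is how simple true-or-false decisions scale up to arithmetic on whole numbers.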

Photo: "A gigantic mechanical slide rule": A differential analyzer pictured in 1938. Picture courtesy of and © University of Cambridge Computer Laboratory, published with permission via Wikimedia Commons under a Creative Commons (CC BY 2.0) licence.

Turing—tested

The first modern computers.

The World War II years were a crucial period in the history of computing, when powerful gargantuan computers began to appear. Just before the outbreak of the war, in 1938, German engineer Konrad Zuse (1910–1995) constructed his Z1, the world's first programmable binary computer, in his parents' living room. [4] The following year, American physicist John Atanasoff (1903–1995) and his assistant, electrical engineer Clifford Berry (1918–1963), built a more elaborate binary machine that they named the Atanasoff Berry Computer (ABC). It was a great advance: 1000 times more accurate than Bush's Differential Analyzer. These were the first machines that used electrical switches to store numbers: when a switch was "off", it stored the number zero; flipped over to its other, "on", position, it stored the number one. Hundreds or thousands of switches could thus store a great many binary digits (although binary is much less compact in this respect than decimal, since it takes up to ten binary digits to store a three-digit decimal number). These machines were digital computers: unlike analog machines, which stored numbers using the positions of wheels and rods, they stored numbers as digits.
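To make the idea of storing numbers in on/off switches concrete, here is a minimal sketch in Python. It is an illustration only, not a model of how the Z1 or the ABC actually worked; the function names (`to_switches`, `from_switches`, `switches_needed`) are invented for this example.

```python
def to_switches(n: int) -> list[bool]:
    """Return the switch settings (True = on = 1, False = off = 0),
    most significant switch first, that store n in binary."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative whole numbers")
    if n == 0:
        return [False]  # a single "off" switch stores zero
    switches = []
    while n > 0:
        switches.append(n % 2 == 1)  # lowest binary digit: on if odd
        n //= 2                      # move on to the next binary digit
    return switches[::-1]            # reverse so the highest digit comes first


def from_switches(switches: list[bool]) -> int:
    """Read a row of switches back into an ordinary number."""
    value = 0
    for switch in switches:
        value = value * 2 + (1 if switch else 0)
    return value


def switches_needed(n: int) -> int:
    """Count how many switches (binary digits) it takes to store n."""
    return len(to_switches(n))


if __name__ == "__main__":
    for number in (5, 100, 999):
        pattern = "".join("1" if s else "0" for s in to_switches(number))
        print(number, pattern, switches_needed(number))
```

Running the script prints each number alongside its on/off pattern and the number of switches it occupies, so you can compare how many binary digits a one-, two-, or three-digit decimal number takes.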

The first large-scale digital computer of this kind appeared in 1944 at Harvard University, built by mathematician Howard Aiken (1900–1973). Sponsored by IBM, it was variously known as the Harvard Mark I or the IBM Automatic Sequence Controlled Calculator (ASCC). A giant of a machine, stretching 15 m (50 ft) in length, it was like a huge mechanical calculator built into a wall. It must have sounded impressive, because it stored and processed numbers using "clickety-clack" electromagnetic relays (electrically operated magnets that automatically switched lines in telephone exchanges), no fewer than 3304 of them. Impressive they may have been, but relays suffered from several problems: they were large (that's why the Harvard Mark I had to be so big); they needed quite hefty pulses of power to make them switch; and they were slow (it took time for a relay to flip from "off" to "on" or from 0 to 1).

Photo: An analog computer being used in military research in 1949. Picture courtesy of NASA on the Commons.

Most of the machines developed around this time were intended for military purposes. Like Babbage's never-built mechanical engines, they were designed to calculate artillery firing tables and chew through the other complex chores that were then the lot of military mathematicians. During World War II, the military co-opted thousands of the best scientific minds: recognizing that science would win the war, Vannevar Bush's Office of Scientific Research and Development employed 10,000 scientists from the United States alone. Things were very different in Germany. When Konrad Zuse offered to build his Z2 computer to help the army, they couldn't see the need—and turned him down.

On the Allied side, great minds began to make great breakthroughs. In 1943, a team of mathematicians based at Bletchley Park near London, England (including Alan Turing) built a computer called Colossus to help them crack secret German codes. Colossus was the first fully electronic computer. Instead of relays, it used a better form of switch known as a vacuum tube (also known, especially in Britain, as a valve). The vacuum tube, each one about as big as a person's thumb (earlier ones were very much bigger) and glowing red hot like a tiny electric light bulb, had been invented in 1906 by Lee de Forest (1873–1961), who named it the Audion. This breakthrough earned de Forest his nickname as "the father of radio" because the first major use of vacuum tubes was in radio receivers, where they amplified weak incoming signals so people could hear them more clearly. [5] In computers such as the ABC and Colossus, vacuum tubes found an alternative use as faster and more compact switches.

Just like the codes it was trying to crack, Colossus was top-secret and its existence wasn't confirmed until after the war ended. As far as most people were concerned, vacuum tubes were pioneered by a more visible computer that appeared in 1946: the Electronic Numerical Integrator And Computer (ENIAC). The ENIAC's inventors, two scientists from the University of Pennsylvania, John Mauchly (1907–1980) and J. Presper Eckert (1919–1995), were originally inspired by Bush's Differential Analyzer; years later Eckert recalled that ENIAC was the "descendant of Dr Bush's machine." But the machine they constructed was far more ambitious. It contained nearly 18,000 vacuum tubes (nine times more than Colossus), was around 24 m (80 ft) long, and weighed almost 30 tons. ENIAC is generally recognized as the world's first fully electronic, general-purpose, digital computer. Colossus might have qualified for this title too, but it was designed purely for one job (code-breaking); since it couldn't store a program, it couldn't easily be reprogrammed to do other things.

Photo: Sir Maurice Wilkes (left), his collaborator William Renwick, and the early EDSAC-1 electronic computer they built in Cambridge, pictured around 1947/8. Picture courtesy of and © University of Cambridge Computer Laboratory, published with permission via Wikimedia Commons under a Creative Commons (CC BY 2.0) licence.

ENIAC was just the beginning. Its two inventors formed the Eckert Mauchly Computer Corporation in the late 1940s. Working with a brilliant Hungarian mathematician, John von Neumann (1903–1957), who was based at Princeton University, they then designed a better machine called EDVAC (Electronic Discrete Variable Automatic Computer). In a key piece of work, von Neumann helped to define how the machine stored and processed its programs, laying the foundations for how all modern computers operate. [6] After EDVAC, Eckert and Mauchly developed UNIVAC 1 (UNIVersal Automatic Computer) in 1951. They were helped in this task by a young, largely unknown American mathematician and Naval reservist named Grace Murray Hopper (1906–1992), who had originally been employed by Howard Aiken on the Harvard Mark I. Like Herman Hollerith's tabulator over 50 years before, UNIVAC 1 was used for processing data from the US census. It was then manufactured for other users and became the world's first large-scale commercial computer.

Machines like Colossus, the ENIAC, and the Harvard Mark I compete for significance and recognition in the minds of computer historians. Which one was truly the first great modern computer? All of them and none: these—and several other important machines—evolved our idea of the modern electronic computer during the key period between the late 1930s and the early 1950s. Among those other machines were pioneering computers put together by English academics, notably the Manchester/Ferranti Mark I, built at Manchester University by Frederic Williams (1911–1977) and Thomas Kilburn (1921–2001), and the EDSAC (Electronic Delay Storage Automatic Calculator), built by Maurice Wilkes (1913–2010) at Cambridge University. [7]

Photo: Control panel of the UNIVAC 1, the world's first large-scale commercial computer. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

The microelectronic revolution

Vacuum tubes were a considerable advance on relay switches, but machines like the ENIAC were notoriously unreliable. The modern term for a problem that holds up a computer program is a "bug." Popular legend has it that this word entered the vocabulary of computer programmers sometime in the 1950s when moths, attracted by the glowing lights of vacuum tubes, flew inside machines like the ENIAC, caused a short circuit, and brought work to a juddering halt. But there were other problems with vacuum tubes too. They consumed enormous amounts of power: the ENIAC used about 2000 times as much electricity as a modern laptop. And they took up huge amounts of space. Military needs were driving the development of machines like the ENIAC, but the sheer size of vacuum tubes had now become a real problem. ABC had used 300 vacuum tubes, Colossus had 2000, and the ENIAC had 18,000. The ENIAC's designers had boasted that its calculating speed was "at least 500 times as great as that of any other existing computing machine." But developing computers that were an order of magnitude more powerful still would have needed hundreds of thousands or even millions of vacuum tubes—which would have been far too costly, unwieldy, and unreliable. So a new technology was urgently required.

The solution appeared in 1947 thanks to three physicists working at Bell Telephone Laboratories (Bell Labs). John Bardeen (1908–1991), Walter Brattain (1902–1987), and William Shockley (1910–1989) were then helping Bell to develop new technology for the American public telephone system, so the electrical signals that carried phone calls could be amplified more easily and carried further. Shockley, who was leading the team, believed he could use semiconductors (materials such as germanium and silicon that allow electricity to flow through them only when they've been treated in special ways) to make a better form of amplifier than the vacuum tube. When his early experiments failed, he set Bardeen and Brattain to work on the task for him. Eventually, in December 1947, they created a new form of amplifier that became known as the point-contact transistor. Bell Labs credited Bardeen and Brattain with the transistor and awarded them a patent. This enraged Shockley and prompted him to invent an even better design, the junction transistor, which has formed the basis of most transistors ever since.

Like vacuum tubes, transistors could be used as amplifiers or as switches. But they had several major advantages. They were a fraction the size of vacuum tubes (typically about as big as a pea), used no power at all unless they were in operation, and were virtually 100 percent reliable. The transistor was one of the most important breakthroughs in the history of computing and it earned its inventors the world's greatest science prize, the 1956 Nobel Prize in Physics. By that time, however, the three men had already gone their separate ways. John Bardeen had begun pioneering research into superconductivity, which would earn him a second Nobel Prize in 1972. Walter Brattain moved to another part of Bell Labs.

William Shockley decided to stick with the transistor, eventually forming his own corporation to develop it further. His decision would have extraordinary consequences for the computer industry. With a small amount of capital, Shockley set about hiring the best brains he could find in American universities, including young electrical engineer Robert Noyce (1927–1990) and research chemist Gordon Moore (1929–2023). It wasn't long before Shockley's idiosyncratic and bullying management style upset his workers. In 1957, eight of them, including Noyce and Moore, left Shockley Transistor to found a company of their own, Fairchild Semiconductor, just down the road. Thus began the growth of "Silicon Valley," the part of California centered on Palo Alto, where many of the world's leading computer and electronics companies have been based ever since. [8]

It was in Fairchild's California building that the next breakthrough occurred, although, somewhat curiously, it also happened at exactly the same time in the Dallas laboratories of Texas Instruments. In Dallas, a young engineer from Kansas named Jack Kilby (1923–2005) was considering how to improve the transistor. Although transistors were a great advance on vacuum tubes, one key problem remained. Machines that used thousands of transistors still had to be hand wired to connect all these components together. That process was laborious, costly, and error prone. Wouldn't it be better, Kilby reflected, if many transistors could be made in a single package? This prompted him to invent the "monolithic" integrated circuit (IC), a collection of transistors and other components that could be manufactured all at once, in a block, on the surface of a semiconductor. Kilby's invention was another step forward, but it also had a drawback: the components in his integrated circuit still had to be connected by hand. While Kilby was making his breakthrough in Dallas, unknown to him, Robert Noyce was perfecting almost exactly the same idea at Fairchild in California. Noyce went one better, however: he found a way to include the connections between components in an integrated circuit, thus automating the entire process.

Photo: An integrated circuit from the 1980s. This is an EPROM chip, which you could only erase with a blast of ultraviolet light (effectively a forerunner of flash memory).

Mainframes, minis, and micros

Photo: An IBM 704 mainframe pictured at NASA in 1958. Designed by Gene Amdahl, this scientific number cruncher was the successor to the 701 and helped pave the way to arguably the most important IBM computer of all time, the System/360, which Amdahl also designed. Photo courtesy of NASA.

Photo: The control panel of DEC's classic 1965 PDP-8 minicomputer. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

Integrated circuits, as much as transistors, helped to shrink computers during the 1960s. In 1943, IBM boss Thomas Watson had reputedly quipped: "I think there is a world market for about five computers." Just two decades later, the company and its competitors had installed around 25,000 large computer systems across the United States. As the 1960s wore on, integrated circuits became increasingly sophisticated and compact. Soon, engineers were speaking of large-scale integration (LSI), in which hundreds of components could be crammed onto a single chip, and then very large-scale integration (VLSI), when the same chip could contain thousands of components.