Research Excellence Framework

Securing a world-class, dynamic and responsive research base across the full academic spectrum within UK higher education

The REF is the UK’s system for assessing the quality of research in UK higher education institutions. It first took place in 2014 and was repeated in 2021. The next exercise is planned for 2029.

What is the REF’s purpose?

- To provide accountability for public investment in research and produce evidence of the benefits of this investment.

- To provide benchmarking information and establish reputational yardsticks, for use within the Higher Education sector and for public information.

- To inform the selective allocation of funding for research.

The Research Excellence Framework (REF) is the UK’s system for assessing the excellence of research in UK higher education institutions (HEIs). The REF outcomes are used to inform the allocation of around £2 billion per year of public funding for universities’ research. The REF is a process of expert review, carried out by sub-panels focused on subject-based units of assessment (UOAs), under the guidance of overarching main panels and advisory panels. Panels are made up of senior academics, international members, and research users.

Latest news


People, Culture and Environment Update March 2024

We are pleased to announce updates on the development of PCE for REF 2029, and associated community engagement opportunities. These are through: We are committed to gathering input on PCE with a broad and inclusive approach and encourage all stakeholders to make the most of these opportunities to engage on this important issue for the…

The REF 2029 Open Access Policy consultation opens

The four UK higher education funding bodies have launched a consultation on the Research Excellence Framework (REF) 2029 Open Access Policy. The consultation will gather a deeper understanding of sector perspectives on key issues and impacts in relation to our policy proposals. The purpose of the REF 2029 Open Access Policy is to outline open…

People, Culture and Environment update January 2024

The REF Steering Group is pleased to announce an update on the development of approaches to the assessment of People, Culture and Environment (PCE) in the next REF exercise. A project has now been commissioned with Technopolis and CRAC-Vitae in collaboration with a number of sector organisations, which will develop indicators to be used for…

Quick links

About the REF

OA consultation

Publications

Contact REF

Logo guidelines

- Share on twitter

- Share on facebook

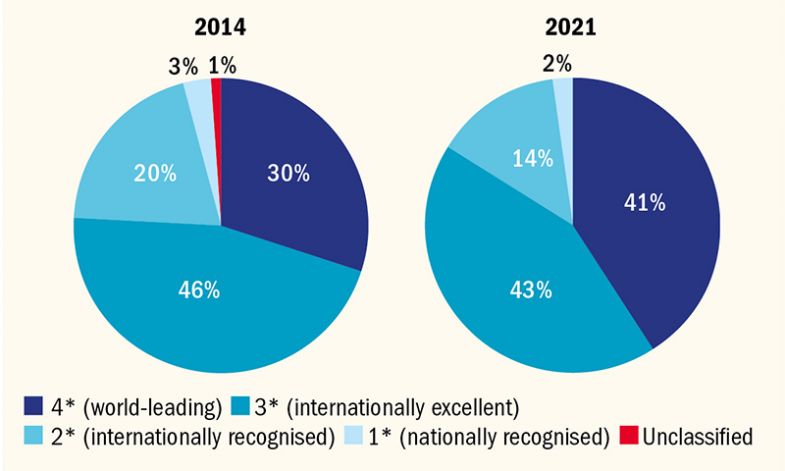

REF 2021: Quality ratings hit new high in expanded assessment

Four in five outputs judged to be either ‘world-leading’ or ‘internationally excellent’.

The quality of UK scholarship as rated by the Research Excellence Framework has hit a new high following reforms that required universities to submit all research-active staff to the 2021 exercise.

For the first time in the history of the UK’s national audit of research, all staff with a “significant responsibility” for research were entered for assessment – a rule change that resulted in 76,132 academics submitting at least one research output, up 46 per cent from 52,000 in 2014.

Overall, 41 per cent of outputs were deemed world-leading (4*) by assessment panels and 43 per cent judged internationally excellent (3*), which was described as an “exceptional achievement for UK university research” by Steven Hill, director of research at Research England, which runs the REF.

In the 2014 assessment 30 per cent of research got a 4* rating, with 46 per cent judged to be 3*.

Analysis of institutional performance by Times Higher Education now puts the grade point average at UK sector level at 3.16 for outputs, compared with 2.90 in 2014. Scores for research impact have also increased, from 3.24 to 3.35.
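A grade point average of this kind is a weighted mean of the star levels, with each level weighted by the share of a quality profile rated at that level. The sketch below illustrates the arithmetic in Python. The function name and the example profile are hypothetical and are not taken from THE's published data or code.

```python
def grade_point_average(profile):
    """Return the mean star rating for a quality profile.

    `profile` maps a star level (4, 3, 2, 1, or 0 for unclassified)
    to the percentage of the submission rated at that level.
    """
    total = sum(profile.values())
    if abs(total - 100) > 1e-9:
        raise ValueError(f"profile percentages sum to {total}, expected 100")
    # Weight each star level by its share of the profile.
    return sum(star * pct for star, pct in profile.items()) / 100


# Hypothetical profile, invented purely for illustration.
example_profile = {4: 30, 3: 50, 2: 15, 1: 5, 0: 0}
print(round(grade_point_average(example_profile), 2))
```

A profile rated entirely 4* would score 4.0 on this measure, and one rated entirely unclassified would score 0.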

At least 15 per cent of research was considered world-leading in three-quarters of the UK’s universities.

And analysis by THE suggests that institutions outside London have improved their performance the most, with several Russell Group universities from outside the “golden triangle” of Oxford, Cambridge and London making major gains.

The results of the REF will be used to distribute quality-related research funding by the UK’s four higher education funding bodies, the value of which will stand at around £2 billion from 2022-23.

The requirement to submit all research-active staff was introduced following the review of the REF conducted by Lord Stern in 2016 and was designed to reduce institutional “game-playing” over which staff members were submitted.

Outputs in REF 2014 and 2021

The uptick in quality may be driven by universities focusing instead on which of their researchers’ outputs should be submitted, allowing greater flexibility to pick “excellent” scholarship.

In the 2014 exercise, each participating researcher was expected to submit four outputs, but this time the number of outputs could range between one and five per researcher, with an average of 2.5 per full-time equivalent researcher expected. In the 2021 exercise, a single output was submitted for 44 per cent of researchers who participated.
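The 2021 rule described above can be read as two checks on a submitting unit: each researcher contributes between one and five outputs, and the unit's total works out at 2.5 outputs per full-time equivalent (FTE). The Python sketch below illustrates that reading. The function name, the researcher labels and the half-output tolerance on the total are assumptions made for illustration, since the article does not give the exact rounding rules.

```python
def allocation_problems(outputs_by_researcher, total_fte,
                        per_fte=2.5, low=1, high=5):
    """Return a list of rule violations; an empty list means none found."""
    problems = []
    # Check 1: every researcher has between `low` and `high` outputs.
    for name, count in outputs_by_researcher.items():
        if not low <= count <= high:
            problems.append(f"{name}: {count} outputs is outside {low}-{high}")
    # Check 2: the unit total matches the expected pool (assumed tolerance 0.5).
    expected = per_fte * total_fte
    total = sum(outputs_by_researcher.values())
    if abs(total - expected) > 0.5:
        problems.append(
            f"total of {total} outputs does not match "
            f"{per_fte} x {total_fte} FTE = {expected}"
        )
    return problems


# Hypothetical unit of four full-time researchers (4.0 FTE, pool of 10 outputs).
unit = {"researcher_a": 5, "researcher_b": 1, "researcher_c": 1, "researcher_d": 3}
print(allocation_problems(unit, total_fte=4.0))
```

In this example two researchers contribute a single output each while another contributes five, which is the kind of flexibility the article describes.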

University staff had welcomed the new submission rules which had removed the “emotional pressure” caused by deliberations over whether they would be “in or out” of the REF – a decision that often had consequences for future promotions, said David Sweeney, executive chair of Research England. “There is no longer that same pressure on individuals,” he reflected.

However, the rule change has been linked to universities’ decisions to move many staff on to teaching-only contracts in recent years, with the latest data showing that about 20,000 more academics are employed on such terms than five years ago.

This change represented a welcome clarification of academics’ roles rather than “game-playing” on behalf of institutions, insisted Mr Sweeney. “If these contracts represent the expectations of institutions and the responsibilities of academics, that is not game-playing, it is transparency,” he said.

David Price, vice-provost (research) at UCL and chair of the REF’s main panel B (physical sciences, engineering and mathematics), agreed that “the REF may have helped in resolving many contractual ambiguities. Game-playing has not been noticeable,” he said.

Dame Jessica Corner, pro vice-chancellor (research and knowledge exchange) at the University of Nottingham, said that “less focus on individuals with the partial separation of outputs from academics has been helpful”.

“That outputs can be returned by institutions where individuals worked if they move jobs has reduced, though not entirely eliminated, the academic transfer market,” she added.

James Wilsdon, Digital Science professor of research policy at the University of Sheffield, agreed. “The large-scale transfers of people between institutions that we saw in the lead-up to REF 2014 have definitely reduced, which is positive,” said Professor Wilsdon, who added that while “choices around inclusion and exclusion of individuals with ‘significant responsibility’ have been complex in some institutions – particularly less research-intensive universities – some have welcomed the clarity that this brought to different roles in terms of research, teaching and hybrid roles.”

“The game-playing, where it occurs, is often more subtle: it’s about the gradual sifting and reordering of what kinds of research, and what kinds of impact, are deemed ‘excellent’,” he explained.

Kieron Flanagan, professor of science and technology policy at the University of Manchester, questioned the extent to which REF game-playing had been eliminated.

“There is bound to have been some of this happening because habits are hard to break – people who run university research have come in a management system informed by REFs over the past 10 to 20 years – some game-playing is inevitable,” he said.

However, Jane Millar, emerita professor of social policy at the University of Bath, who chaired the social sciences REF panel, believed the Stern review reforms had “worked well”.

“We saw a great diversity in the submissions, from very small to very large, from well-established and new units,” said Professor Millar, who added that there had been “examples of world-leading and internationally excellent quality across the range”.

Interdisciplinary research was also “well presented”, with the Stern reforms encouraging greater links between subjects, with “sub-panels whose reach stretched through to design and engineering, physical and/or biological sciences, humanities, biomechanics, and medicine”, added Professor Millar.

With an international review body examining the future of the REF, there has been some speculation that this could be its final incarnation.

But Mr Sweeney said that the REF remained an important tool in justifying the £9 billion or so in open-ended research funding that is likely to flow to institutions from the exercise.

How Research England supports research excellence

The 2014 Research Excellence Framework (REF) was the first exercise to assess the impact of research outside of academia. Impact was defined as ‘an effect on, change or benefit to the economy, society, culture, public policy or services, health, the environment or quality of life, beyond academia’.

Impact case studies

As part of the 2014 Research Excellence Framework exercise, UK higher education institutions (HEIs) submitted 6,975 impact case studies demonstrating the impact of their research on wider society.

These case studies provide a unique and invaluable source of information on the impact of UK research. UK higher education (HE) research has wide and varied benefits for the economy, society, culture, policy, health, the environment and quality of life, both within the UK and overseas.

Universities engage with a range of public, private and charitable organisations and local communities. Analysis found that these wider impacts and benefits often stem from multidisciplinary work.

Latest report: Patterns in research outputs

Publication patterns in research underpinning impact in REF2014 describes patterns in research outputs submitted by UK higher education institutions to the 2014 Research Excellence Framework and to previous Research Assessment Exercises (RAEs).

The REF impact case study database

The impact case study database is a searchable tool that will make the impact case studies widely available and will enable analysis and automated text mining.

The licence arrangements relating to the use of this database can be accessed on the National Archive.

An initial analysis of the REF impact case studies is captured in the report, The nature, scale and beneficiaries of research impact.

The REF impact case studies were analysed by Digital Science, a division of Macmillan Science and Education, working in conjunction with its sister company Nature Publishing Group and the policy institute at King’s College London. This analysis was co-funded by the UK Funding Bodies, Research Councils UK and Wellcome Trust.

Maps of impact case studies

The maps of impact case studies indicate the local and global spread of research impact for UK higher education institutions (HEIs) by impact type and research area.

They are based on the names of locations referenced in REF2014 impact case studies and categorised in the REF impact case study database.

Last updated: 31 March 2022

UCL Research

Research Excellence Framework

The Research Excellence Framework (REF) is the system for assessing the quality of research in UK higher education institutions (HEIs).

The REF is carried out approximately every six to seven years to assess the quality of research across 157 UK universities and to share how this research benefits society both in the UK and globally. It is implemented by Research England, part of UK Research and Innovation.

Submissions for REF 2021 closed on 31 March 2021 and the results were announced on 12 May 2022. See the REF 2021 results and visit the REF Hub to read over 170 impact case studies about how UCL is transforming lives.

The previous REF was REF 2014. The next REF will be REF 2029, with results published in December 2029.

For queries, contact the UCL REF team.

Purpose of the Framework

The main objectives of the REF are:

- To provide accountability for public investment in research.

- To provide benchmarking information for use within the Higher Education sector and for public information.

- To inform the selective allocation of quality-related (QR) funding for research.

The REF assesses three distinct elements:

- quality of research outputs

- impact of research beyond academia

- the environment that supports research.

Assessment details and process

The REF is a process of expert review, with discipline-based expert panels assessing submissions made by HEIs in 34 Units of Assessment (UOAs). Additional measures, including specific advisory panels, were introduced in REF2021 to support the implementation of equality and diversity, and submission and review of interdisciplinary research, during assessment. The main panels were as follows:

- Panel A: Medicine, health and life sciences

- Panel B: Physical sciences, engineering and mathematics

- Panel C: Social sciences

- Panel D: Arts and humanities

The REF submission comprises three elements: research outputs, research impact and research environment. Sub-panels for each unit of assessment use their expertise to grade each element of the submission from 4 stars (outstanding work) through to 1 star (with an unclassified grade awarded if the work falls below the standard expected or is deemed not to meet the definition of research). The scores are weighted 60% (outputs), 25% (impact) and 15% (environment).
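The weighting described above can be illustrated with a short Python sketch that combines three sub-profiles into an overall profile using the 60/25/15 split. The function name and the example percentages are hypothetical, and any rounding applied in the official profiles is not reproduced here.

```python
# Element weights as stated in the text: outputs 60%, impact 25%, environment 15%.
WEIGHTS = {"outputs": 0.60, "impact": 0.25, "environment": 0.15}
STAR_LEVELS = ("4*", "3*", "2*", "1*", "unclassified")


def overall_profile(sub_profiles):
    """Combine per-element profiles (percentages per star level) into one."""
    overall = {}
    for level in STAR_LEVELS:
        # Weighted sum of the share each element has at this star level.
        overall[level] = sum(
            WEIGHTS[element] * sub_profiles[element].get(level, 0.0)
            for element in WEIGHTS
        )
    return overall


# Hypothetical sub-profiles, invented purely for illustration.
example = {
    "outputs": {"4*": 40, "3*": 40, "2*": 20},
    "impact": {"4*": 60, "3*": 40},
    "environment": {"4*": 20, "3*": 60, "2*": 20},
}
for level, share in overall_profile(example).items():
    print(f"{level}: {share:.1f}%")
```

Because outputs carry the largest weight, a change in the outputs sub-profile moves the overall profile more than the same change in impact or environment.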

Research Excellence Framework

The University of Birmingham’s world-leading research tackles some of the greatest challenges facing humanity and helps to improve the lives of people everywhere.

The latest Research Excellence Framework (REF 2021, published May 2022) confirms that we are one of the best universities in the UK. The REF results are an outstanding vote of confidence in the quality of our research and our contribution to society, bolstering our ambition to become a Top 50 Global University.

Our REF 2021 results

- Ranked 10th in Russell Group and 13th in UK for Grade Point Average (up from 39th in 2014)

- Ranked equal 10th in the Russell Group and equal 13th in the UK for 4* research (THE)

- Ranked 12th overall in the UK for Research Power (up from 14th in 2014)

- More than 50% of research recognised as 4*

- Largest rise in the Russell Group on percentage 4* and GPA (grade point average)

- Nine subjects ranked in the UK Top 5 for 4* (individual subject rank displayed in brackets): Physics (1), Earth Systems and Environmental Sciences (2), Computer Science and informatics (3), Philosophy (3), Theology and Religious Studies (3), Area Studies (3), Education (4), Sport and exercise sciences, leisure and tourism (5), Public Health, Health Services and Primary Care (5)

- 15 subjects ranked in the top 10 for 4*: those above plus Anthropology and Development Studies (7), Classics (8), Art and design: history, practice and theory (9), Social Work and Social Policy (9), Law (9), Engineering (10)

Discover more about the University's REF 2021 results, showing the quality profiles for each of our 28 submissions.

The University of Birmingham’s Vice-Chancellor, Professor Adam Tickell, says: ‘This was a tremendous result and reflects many years of hard work by outstanding researchers working at the forefront of their disciplines. But the most important thing is not the ranking itself but the confirmation that we are collectively engaged in world-leading work that has a genuine impact on people’s lives.

‘Our REF results highlight the strong partnerships we have, including with industry and the health, education, and cultural sectors. It is not enough to simply undertake high quality research, it is only through working in partnership with others in our region and across the world that we can deliver solutions to improve people’s lives, society and our planet.’

‘I am extremely proud that the quality and significance of our research and its wider impact were recognised by the assessment panels. Our credo over a number of years now has been to produce ‘research that matters’, whether that means breakthroughs in discovery science and philosophical enquiry that change the way people think, or innovations across the full range of our disciplines that transform the way people and institutions act. Excellent results in the REF derive from our pursuit of a larger goal: that is, for our research, in partnership with diverse people and organisations, to make the world a better place for individuals, society, and the environment.’

Professor Hugh Addlington, Pro-Vice-Chancellor Research

What is REF?

The Research Excellence Framework (REF) is a national assessment which is carried out by the UK’s four higher education funding organisations every seven years to evaluate the standard and broader impact of research in the country’s higher education institutions.

It helps to maintain quality and world-class research across the breadth of the UK’s universities and assesses the impact of this multi-disciplinary work, informing accountability and future funding and public investment.

The most recent REF results were delayed because of the COVID-19 pandemic, which is why the exercise is branded REF 2021 even though the results were published in May 2022.

A total of 157 UK higher education institutions (HEIs) made submissions in 34 subject-based Units of Assessment (UOAs). The submissions were assessed by panels of experts, including international researchers skilled in interdisciplinary collaboration, who produced an overall quality profile for each submission. Each profile shows the proportion of research activity judged by the panels to have met each of the four starred quality levels in steps of 1%.

Three key areas were scrutinised: output, impact and environment, alongside an assessment of the overall standard of the institution.

- 60 per cent of the weighting was given to outputs

- 25 per cent was given to impact, judged on its significance

- 15 per cent covered the environment, including its sustainability

The panel assessed the overall research excellence and graded outputs from the top grade of 4* down to unclassified.

4* = Quality classed as world-leading in originality, significance and rigour.

3* = Quality classed as internationally excellent in originality, significance and rigour but falling short of the highest standards of excellence.

2* = Quality classed as recognised internationally in originality, significance and rigour.

1* = Quality classed as recognised nationally in originality, significance and rigour.

Unclassified = Quality that falls below the standard of nationally recognised work, or work ineligible for the REF.

Game-changing impact

The REF results also recognise the game-changing impact that the University’s research has on global health challenges: tackling inequalities, driving innovation with industry and manufacturing, and addressing major issues such as climate change.

Our Research Spotlights showcase our pioneering breakthroughs, multidisciplinary collaborations and significant global impact.

Our REF2021 results

We are placed seventh in the UK for research power according to analysis by Times Higher Education

We submitted 1,804 researchers to our 2021 REF return

We have 135 case studies evidencing our impact on lives across the world

Results from the 2021 Research Excellence Framework (REF2021) reaffirm the University of Nottingham as among the best universities in the UK for the strength of our research. According to analysis by Times Higher Education, the university has improved its placing in each of the three key measures determined by REF – Research Power, Research Intensity and Grade Point Average (GPA).

Of the 157 institutions participating in REF2021, the university is placed 7th in the UK for Research Power, which takes into account a combination of the quality of our research, its international impact, critical mass and sustainability. It is placed 25th in the UK for Grade Point Average (GPA) – an improvement from 26th place in REF2014. Our own analysis using THE methodology places the University 24th in the UK for Research Intensity adjusted GPA, which takes into account the proportion of eligible staff returned – an improvement from 28th place in REF2014.

“Nottingham has often been recognised for the breadth of its research: to achieve quality across the range of disciplines submitted is a reflection of the talent, imagination and dedication of colleagues across the institution. However, I am especially proud of the positive impact that our research has had on people in the UK and throughout the world, which is testament to the determination of our researchers to make a difference to people’s lives.”

Vice-Chancellor Professor Shearer West

Our REF2021 performance at a glance:

- 90% of our research is classed as ‘world-leading’ (4*) or ‘internationally excellent’ (3*), according to analysis by Times Higher Education

- 100% of our research is recognised internationally, according to analysis by Times Higher Education

- 51% of our research is assessed as ‘world-leading’ (4*) for its impact, according to our own analysis

- We are placed in the top 5 for our research in Pharmacy and Health Sciences, Area Studies and Economics

- Our research in Public Health and Primary Care placed 1st in the UK for real-world impact, with Engineering placing third, and nine of our units of assessment are in the top 10 for the impact of their research

- We are placed 1st for our research environment in Pharmacy and Health Sciences, and Computer Sciences

Explore our impact case studies submitted to REF2021 and view other universities' research impact.

Visit the REF impact database

From our world to yours. We are UoN Research.

In the REF assessment period (2014-21):

- The lives of more than 100 million people have been positively changed by our research

- We saved the NHS £2 billion

- Legislation informed by our discoveries touches the lives of every UK citizen

Discover how our research changes lives and shapes the future

What is the Research Excellence Framework?

The Research Excellence Framework is a national assessment of the quality of research in UK higher education institutions and determines the allocation of around £2 billion quality-related funding a year, starting in August 2022.

The Research Excellence Framework:

- covers all research disciplines

- gives accountability for public investment in research and produces evidence of the benefits of this investment

- provides reputational benchmarking information for higher education institutions and the public

The four UK higher education funding bodies that undertake the REF are:

- Research England

- Scottish Funding Council

- The Higher Education Funding Council for Wales

- The Department for the Economy, Northern Ireland

The REF requires us to submit complex data and evidence to allow our research to be assessed. This covers three benchmarks:

- Research outputs – based on numbers of research active staff and their outputs (journal articles, performances, edited books etc.) (60%)

- Research impact – demonstrating the difference our research has made to culture, economy, health and society (25%)

- Research environment – research ecosystem and infrastructure; strategies and mechanisms that support/enable research and impact (15%)

Privacy Notice for REF2021 Evidence Collection

Personal data provided to the University of Nottingham (UoN) for the purposes of evidencing research impact or environment, as part of the REF2021 assessment, will be held only for the duration of the REF2021 assessment. Once the assessment period is complete (at the end of April 2022) any personal data is anonymised and deleted from UoN systems.

The lawful processing purpose relied on is Public Task.

Information will be shared beyond the university with UK Higher Education funding bodies as per the data collection statement.

It is a requirement that every institution making a REF submission needs to develop, document and apply a code of practice for REF2021, including:

- the fair and transparent identification of staff with significant responsibility for research where a higher education institute (HEI) is not submitting 100% of eligible staff

- determining who is an independent researcher

- the selection of research outputs, including approaches to supporting staff with circumstances

The University of Nottingham has developed our REF code of practice through a consultative process and submitted it to Research England on 7 June 2019. The university decided to return 100% of eligible Category A staff to REF2021 and therefore identification of staff with significant responsibility for research does not form part of our REF2021 code.

As per REF guidance on submissions, paragraph 117, Category A eligible staff are defined as academic staff:

- with a contract of employment of 0.2 FTE or greater, on the payroll of the submitting institution on the census date

- whose primary employment function is to undertake either ‘research only’ or ‘teaching and research’

Staff employed on minimum fractional contracts (0.20 to 0.29 FTE) on the census date should have a substantive research connection with the submitting unit in addition to meeting the bullet points 1 and 2 above to be Category A eligible staff.

Staff on ‘research only’ contracts should meet the definition of an independent researcher in addition to bullet points 1 and 2 above to be category A eligible staff.
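The eligibility conditions above can be expressed as a simple predicate. The Python sketch below is one reading of those conditions, not the official REF guidance in code. The `StaffRecord` class and its field names are hypothetical, and judgements such as "substantive research connection" or "independent researcher" are assumed to have been made elsewhere and recorded as flags.

```python
from dataclasses import dataclass


@dataclass
class StaffRecord:
    fte: float                               # contracted fraction, e.g. 0.25
    on_payroll_on_census_date: bool
    employment_function: str                 # e.g. "research only"
    substantive_research_connection: bool = False
    independent_researcher: bool = False


def is_category_a_eligible(staff):
    """Apply the Category A conditions described in the text."""
    # Bullet 1: contract of 0.2 FTE or greater, on the payroll on the census date.
    if staff.fte < 0.2 or not staff.on_payroll_on_census_date:
        return False
    # Bullet 2: primary function is 'research only' or 'teaching and research'.
    if staff.employment_function not in ("research only", "teaching and research"):
        return False
    # Minimum fractional contracts (0.20 to 0.29 FTE) need a substantive connection.
    if staff.fte < 0.3 and not staff.substantive_research_connection:
        return False
    # 'Research only' staff must also be independent researchers.
    if staff.employment_function == "research only" and not staff.independent_researcher:
        return False
    return True


# Hypothetical example: a 0.25 FTE lecturer with a substantive research connection.
print(is_category_a_eligible(
    StaffRecord(0.25, True, "teaching and research",
                substantive_research_connection=True)
))
```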

The REF explained

Get an overview of the Research Excellence Framework (REF) and why it’s so important.

About the REF

The Research Excellence Framework (REF) is the UK’s national assessment of research in higher education institutions (HEIs).

Its results help decide research funding worth about £2 billion in public money to UK HEIs.

The main purposes of the REF are to:

- provide accountability for public investment in research

- provide benchmarking information for use within the Higher Education sector and for public information

- inform the selective allocation of quality-related (QR) funding for research.

Why the REF is important

The REF is the biggest assessment of research excellence in the world.

Panels of experts (from academia and beyond) have read and assessed thousands of submissions made by HEIs in specific subject areas (known as “units of assessment”).

The REF matters because it helps to decide how money from a major UK government budget is distributed. The REF also boosts public recognition of the quality and relevance of HEI research.

Results from the REF feed into several of the major league tables, which have a significant effect on student and staff recruitment.

- 868 researchers*

- 89% world-leading or internationally excellent**

- 2,021 research outputs*

- 32nd rank in UK for Impact***

- 27th rank in UK for Research Power***

- 1,604 PhDs awarded*

- 83 impact case studies*

- 25 research areas*

* University of Sussex submission data to the Research Excellence Framework (REF 2021)

** The Research Excellence Framework (REF2021), published 12 May 2022

*** Times Higher Education (THE), published 12 May 2022

How the REF is scored

The REF generates an assessment profile for each submission, allocating percentages across these grades:

- 4* (world-leading)

- 3* (internationally excellent)

- 2* (recognised internationally)

- 1* (recognised nationally)

- unclassified.

A higher set of quality grades for each institution in the REF translates into more financial support and enhanced reputational recognition.

When the REF happens

The REF normally takes place roughly every seven years. This time, REF 2021 was delayed because of the Covid-19 pandemic.

Research England, the agency that runs the REF, published the results of REF 2021 on Thursday 12 May 2022.

We published our own summary of our REF results and commented on how we did at the time.

We used our REF results to highlight our stories of outstanding research and real-world impact. You can view case studies of the Sussex submissions to REF 2021.

We are proud of our performance in the previous REF, carried out in 2014. Over 75% of the Sussex submission was categorised as world-leading (4*) or internationally excellent (3*).

Individual highlights of the REF 2014 results included:

- Sussex History was the highest rated History submission in the UK for the quality of its research outputs

- the Sussex English submission rose from 31st to 9th across the UK since the last research assessment exercise (RAE) in 2008

- 84% of the University’s research impact in Psychology was rated 4* – the top possible grade

- Sussex Geography had the most 4*-rated research impact of any Geography submission across the UK.

Overall, the University was placed 36th of all multi-disciplinary institutions in the UK (submitting to more than three subject areas) by grade point average (GPA). Institutional and per-subject ranking data for REF 2014 and RAE 2008 sourced from Times Higher Education.
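As a rough illustration of how a grade point average can be derived from a quality profile, the sketch below assumes the commonly used convention of weighting each star level by the percentage of activity awarded that level (4 for 4*, down to 0 for unclassified) and dividing by 100. The function name and the example profile are hypothetical and are not Sussex data.

```python
def grade_point_average(profile):
    """Return the GPA for a quality profile.

    `profile` maps a star level (4, 3, 2, 1, or 0 for unclassified)
    to the percentage of the submission awarded that level.
    Assumption: GPA is the percentage-weighted mean of the star levels.
    """
    total = sum(profile.values())
    if abs(total - 100) > 1e-6:
        raise ValueError("profile percentages must sum to 100")
    return sum(stars * pct for stars, pct in profile.items()) / 100


# Hypothetical profile: 40% 4*, 45% 3*, 12% 2*, 3% 1*, 0% unclassified.
example_profile = {4: 40, 3: 45, 2: 12, 1: 3, 0: 0}
print(grade_point_average(example_profile))  # prints 3.22
```

Ranking tables built on GPA ignore the size of a submission, which is why measures such as research power are usually reported alongside it.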

View all our REF 2014 results in the Sussex archive.

You might also be interested in:

- exploring our research

- more about our research environment

- our research programmes, centres and groups.

What is REF?

The Research Excellence Framework (REF) is the UK’s system for assessing the excellence of research in UK higher education institutions.

The REF outcomes are used to inform the allocation of around £2 billion per year of public funding for universities’ research. Our impact case studies demonstrate the benefits of this investment, highlighting how our research improves society and economic prosperity. The results also affect institutional reputation and inform strategic decisions about national research priorities.

The REF was first carried out in 2014, replacing the previous Research Assessment Exercise. The latest exercise was submitted in 2021, with the results published in May 2022.

How is the REF carried out?

The REF is a process of peer review, carried out by expert panels for each of the 34 subject-based units of assessment (UOAs). These panels are made up of senior academics, international members, and professionals from the private, public and charitable sectors with experience of working with research.

For each submission, three distinct elements are assessed: the quality of outputs (e.g. journal articles, books, designs), the impact of our research beyond academia, and the environment that supports research. These are weighted and then aggregated to form an overall profile.

- Outputs 60%

- Impact 25%

- Environment 15%

The panels use their expertise to grade each item submitted from 4* (outstanding work) through to 1* (with an unclassified grade awarded if the work falls below the standard expected or is deemed not to meet the eligibility rules).


Research Quality—Lessons from the UK Research Excellence Framework (REF) 2021

David R. Thompson

1 School of Nursing and Midwifery, Queen’s University Belfast, Belfast BT7 1NN, UK

Hugh P. McKenna

2 School of Nursing, Ulster University, Newtownabbey BT37 0QB, UK

Research quality is a term often bandied around but rarely clearly defined or measured. Certainly, the nursing contribution to research and research quality has often been under-recognised, under-valued and under-funded, at least in the UK. For over 20 years, it has been argued that there should be more investment in, and acknowledgement of, nursing’s contribution to high-quality research [ 1 , 2 ].

One way of measuring the quality of research in nursing across different higher education institutions (HEIs) and its parity with other disciplines is through periodic national research assessment exercises. In 1986, the first national Research Assessment Exercise (RAE) in higher education (HE) took place in the UK under the government of Margaret Thatcher. The purpose of the exercise was to determine the allocation of funding to UK universities at a time of tight budgetary restrictions. Since then, RAEs have taken place in 1989, 1992, 1996, 2001 and 2008 in their different iterations. In response to criticisms, the scale and assessment process have changed markedly since the first RAE.

1. The Research Excellence Framework (REF)

In 2014, the first Research Excellence Framework (REF) replaced the RAE. In 2016, the Stern report [ 3 ] identified five purposes for the REF:

- To provide accountability for public investment in research and produce evidence of the benefits of this investment.

- To provide benchmarking information and reputational yardsticks for the HE sector and for public information.

- To provide a rich evidence base to inform strategic decisions about national research priorities.

- To create strong performance incentives for HEIs/researchers.

- To inform decisions on the selective allocation of non-hypothecated funding for research.

The REF2021 has just reported its assessment and its outcomes inform the research grant allocations from the four HE funding bodies, with effect from 2022–2023. This quality related (QR) research funding, totalling £2 billion per year, enables HEIs to conduct their own directed research, much of which is supported subsequently by the UKRI Research Councils and other bodies (charities, industry, EU, etc.): known as the Dual Support System. So, in essence, this is an important exercise for universities and their disciplines in terms of funding, but also reputation, image and prestige.

The REF2021, for the first time, included the submission of all staff with significant responsibility for research. A total of 157 UK HEIs participated, submitting over 76,000 academic staff. As the REF is a discipline-based expert review process, 34 expert sub-panels, working under the guidance of four main panels, reviewed the submissions and made judgements on their quality. The panels comprised 900 academics, including 38 international members, and 220 users of research.

The rigour, significance and originality of research outputs (185,594) were judged on a 5-point scale:

- 4* (quality that is world-leading in terms of originality, significance and rigour);

- 3* (quality that is internationally excellent in terms of originality, significance and rigour but which falls short of the highest standards);

- 2* (quality that is recognised internationally in terms of originality, significance and rigour);

- 1* (quality that is recognised nationally in terms of originality, significance and rigour);

- Unclassified (below the quality threshold for 1* or does not meet the definition of research used for the REF).

Impact case studies (6781) were assessed in terms of reach and significance of impacts on the economy, society and/or culture [ 4 ].

Environment was assessed in terms of vitality and sustainability (strategy, people, income and collaboration).

Results were produced as “overall quality profiles”, which show the proportions of submitted activity judged to have met each quality level from 4* to unclassified. Submissions included research outputs, examples of the impact and wider benefits of research and evidence about the research environment. The overall quality profile awarded to each submission is derived from three elements that were assessed: the quality of research outputs (contributing 60% of the profile); the social, economic and cultural impact of research (contributing 25%); and the research environment (contributing 15%). The panels reviewed the submitted environment statements and statistical data on research income and doctoral degrees. A statement about the overall institution’s environment was provided to inform and contextualise the panel’s assessment.
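The weighting described above can be sketched as a simple weighted sum of the three sub-profiles. This is a minimal illustration only: the function name and the example sub-profiles are hypothetical, and the rounding applied to published REF profiles is not reproduced here.

```python
# Element weightings for REF 2021 as stated in the text.
WEIGHTS = {"outputs": 0.60, "impact": 0.25, "environment": 0.15}
GRADES = ("4*", "3*", "2*", "1*", "unclassified")


def overall_profile(sub_profiles):
    """Combine per-element quality profiles into one overall profile.

    `sub_profiles` maps each element name to a dict giving the
    percentage of activity judged to meet each grade.
    """
    return {
        grade: sum(WEIGHTS[element] * sub_profiles[element][grade]
                   for element in WEIGHTS)
        for grade in GRADES
    }


# Hypothetical sub-profiles (percentages), not taken from any real submission.
example = {
    "outputs":     {"4*": 40, "3*": 45, "2*": 12, "1*": 3, "unclassified": 0},
    "impact":      {"4*": 50, "3*": 40, "2*": 10, "1*": 0, "unclassified": 0},
    "environment": {"4*": 25, "3*": 50, "2*": 25, "1*": 0, "unclassified": 0},
}

for grade, pct in overall_profile(example).items():
    print(f"{grade}: {pct:.2f}%")
```

Because outputs carry 60% of the weight, a change in the outputs sub-profile moves the overall profile four times as much as the same change in the environment sub-profile.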

Key findings were that the overall quality was 41 per cent world-leading (4*) and 43 per cent internationally excellent (3*) across all submitted research activities. At least 15 per cent of the research was considered world-leading (4*) in three-quarters of the UK’s HEIs. In terms of impacts, the expert panels observed the significant gains made from university investment in realising research impact. However, changes between the REF2014 and REF2021 exercises limit the extent to which meaningful comparisons can be made across results, particularly outputs.

2. How Did Nursing Fare?

Nursing research was primarily, but not exclusively, submitted to the REF2021 sub-panel (Unit of Assessment) 3 (Allied Health Professions, Dentistry, Nursing and Pharmacy). SP3 received 89 submissions from 90 universities covering a very wide range of disciplines, thus making it difficult to focus specifically on research emanating from the discipline of nursing. Additionally, some research on nursing-related issues and interventions may have been included in returns to other units of assessment and not seen by SP3 members.

Nevertheless, some overall impressions of nursing emerged:

2.1. Strengths

There was evidence of a strong level of interdisciplinary collaboration and examples of strong academic nursing leadership in large multidisciplinary research teams. The scale of research activity in nursing ranged from modest or emerging centres of nursing research through to substantial, long-established units with mature research environments. A notable feature was high quality research addressing a wide range of nursing-related issues of critical importance to recipients of nursing care.

Many of the strongest outputs focused on people’s quality of life and health outcomes and included interventions designed to support older people and those with enduring health challenges, including symptom management, self-management and managing continence, mobility problems and pain. There were also signs of a growing emphasis on evaluating new approaches to care delivery and new or extended roles which aim to enhance access to care.

There were outstanding case studies that demonstrated clear links to the underpinning research related to the outputs, including in mental health, ageing, dementia, enduring health challenges and self-management of care. There was clear evidence of impact and reach on society, policy, practice and the economy, including changes to public perceptions of health. Evidence of reach was demonstrated through improvements to healthcare practice and delivery which enhanced health outcomes and quality of life.

There was evidence of highly developed research environments in which a significant volume of world-leading or internationally excellent research was being generated within the nursing discipline. There was evidence of nurses leading large research centres and institutions where mature inter- and trans-disciplinary research was facilitated with a clear and joined-up strategy. Most stronger submissions demonstrated clear institutional strategic commitment to and investment in furthering research in the discipline. There was also evidence of methodological developments, strong collaborations with non-academic partners, explicit attention to equality, diversity and inclusion and support for early career researchers. There was good evidence of national and international esteem demonstrated in the discipline through journal editorships, grant awards panel membership, significant positions in national institutions as well as some examples of national honours and other forms of recognition.

The early research assessment exercises were instrumental in sustaining research funding in elite universities, and they achieved that goal for decades. Many of these universities had no nursing departments (e.g., Oxford, Cambridge, Imperial). However, the results of REF2021 show that this is no longer the case. In REF2021, the “golden triangle” universities have lost 2.4 percentage points and there appears to be a “levelling-up” of research with islands of excellence across the UK, often in universities with large nursing departments. For example, Northumbria rose from 52nd to 28th place in the market share table for QR funding. Similar trends were seen at Manchester Metropolitan University (56th to 38th) and Portsmouth (60th to 47th).

2.2. Challenges

It was sometimes difficult to determine how research activity in nursing was organised, supported and resourced. This included a few stronger research-led universities where there was little evidence that nursing was being prioritised equitably with other disciplines in terms of supporting growth and sustainability.

There were some outputs that were iterative scoping and systematic reviews which did not generate new knowledge and had difficulty meeting the originality and significance criteria. Furthermore, we know that nurses are undertaking excellent pedagogic research, yet little or none of this was returned to SP3, even though the panel’s descriptor showed that it would be welcome. Perhaps it was submitted to other expert panels such as SP23 (Education). Similarly, SP3 received few public engagement impact case studies, even though we know that nurses undertake excellent research in partnership with the public and patients.

It was apparent that, in general, nursing appears to have gained limited access to major funding schemes beyond the National Institute for Health and Care Research (NIHR) compared with many of the other disciplines included in UoA3. Additionally, there was a paucity of career development and personal award schemes to support post-doctoral nurses, which may be a key factor influencing the overall low numbers of early career researchers included in the submissions. Another concern was the low percentage of eligible nursing staff returned by some institutions, which raises the question of the extent to which eligible nursing staff in these institutions are being given time and support to undertake research as part of their academic role. This was often accompanied by a low number of early career researchers, down 3% from the REF2014 exercise, and none returned by some nursing departments. The combination of lower early career researcher numbers with a low return of eligible staff in some universities raises questions about research capacity and capability support.

These issues suggest strongly that there needs to be significant investment in nursing and more attention to the research priorities of major grant awarding bodies and access to a broader base of research funding opportunities. Mentorship, coaching and support will also promote the development of research leaders and strengthen the growth and spread of high-quality research activity.

A major and enduring challenge for nursing has been building and sustaining research capacity and capability. Nursing has long suffered from a lack of proper investment, including funding, and failure to influence the priorities of research funders. These are issues that need to be addressed urgently, particularly as there remain concerns about the availability of nurses in universities and health services at all levels, but particularly early career researchers, in the pipeline [ 5 , 6 ]. National strategies for nursing research have long been articulated [ 1 ], but little progress has been made in their implementation. Such strategies need to raise the profile of nursing research, ensure it is valued and that nurses in universities and the health services have protected research time and access to the same level of funding and support as their peers in other disciplines.

A number of expert committees have been established to identify how the REF structure and process can be improved prior to the next iteration in 2028. Sir Peter Gluckman is chairing a group of international experts and they report in October 2022. There are also committees examining the use of quantitative metrics and artificial intelligence (AI) in research assessment. What is clear is that peer review will remain, but informed by AI, metrics, and citations. Another emerging theme is the greater use of qualitative data and an emphasis on research culture, both of which should benefit nursing.

3. Conclusions

Research assessment exercises in one form or another seem likely to stay. Though imperfect, they are an important, perhaps the best available, means of ensuring scrutiny and accountability of public investment in research and for benchmarking disciplines and universities. However, the REF is merely the tip of the iceberg regarding the research and impact of UK nursing. Therefore, we should not let the REF tail wag the research dog. It is important to rejoice in the improved outcomes of nursing, but all contributions should be celebrated. Many nurses have expertly undertaken heavy administration and teaching loads so that the very best research can be submitted.

The REF is important because such exercises, beginning in the UK in 1986, have been emulated or adapted by other countries across the world, including Australia, New Zealand, Hong Kong, The Netherlands, Germany, Italy, Romania, Denmark, Finland, Norway, Sweden and the Czech Republic. The results inform the allocation of public funding on which the viability and reputation of research in nursing depends. One of the criticisms of the RAE was that university presidents used poor ratings as a reason to close, cut or merge departments/disciplines. Nursing can ill-afford to be at the mercy of such decision making. It is incumbent on the discipline to demonstrate forcibly the quality and impact of its research on the health and wellbeing of the people and communities it serves.

Conflicts of Interest

The authors were member and chair, respectively, of the REF2021 Unit of Assessment 3: Allied Health Professions, Dentistry, Nursing and Pharmacy. The views expressed are their personal ones.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.


- CAREER COLUMN

- 08 July 2022

Why the party is over for Britain’s Research Excellence Framework

Richard Watermeyer & Gemma Derrick

Richard Watermeyer is professor of higher education and co-director of the Centre for Higher Education Transformations at the University of Bristol, UK.


Gemma Derrick is associate professor of higher education at the University of Bristol, UK.

The past few weeks have generated a flurry of excitement for universities in the United Kingdom with the release of the latest assessment by the Research Excellence Framework, the country’s performance-based research-funding system.


doi: https://doi.org/10.1038/d41586-022-01881-y

This is an article from the Nature Careers Community, a place for Nature readers to share their professional experiences and advice. Guest posts are encouraged.

Competing Interests

The authors declare no competing interests.


Research Excellence Framework


The Research Excellence Framework (REF) is a system for assessing the quality and impact of research in UK universities.

The most recent REF results, published in May 2022, demonstrate Cranfield University’s global excellence with 88% of research rated as world leading or internationally excellent.

Results at a glance

- 88% of research rated world-leading (4*) or internationally excellent (3*)

- 7th in the UK for Engineering research power

- 7th in the UK for research impact in Agriculture, Food and Veterinary Science

- 7th in the UK for research impact in Business and Management

Work from 348 FTE researchers was submitted to REF 2021. The results complement Cranfield’s established position as a partner of choice.

As an institution, Cranfield also has the second-highest industry income per FTE of all UK universities (source: HESA 2019/20 data).

Cranfield’s global research excellence

The University’s research was submitted for REF in three Units of Assessment (UoA):

- Agriculture, Food and Veterinary Science

- Business and Management

- Engineering

Cranfield University is well-known throughout the world as a centre for dynamic and pioneering research which is actively applied to industry and societal challenges. In each area, the institution was assessed on the sub-categories of outputs, impact and research environment.

In Agriculture, Food and Veterinary Science, Cranfield saw increases in its Grade Point Average across all sub-categories of assessment and was ranked 7th in the UK for its research impact.

Engineering, which is a new Unit of Assessment in 2021, was ranked 7th in the UK for research power.

Business and Management improved its ranking for research impact, placing 7th in the UK out of 108 institutions.

About the REF

The REF is undertaken by the four UK higher education funding bodies: Research England, the Scottish Funding Council (SFC), the Higher Education Funding Council for Wales (HEFCW), and the Department for the Economy, Northern Ireland (DfE).

The funding bodies aim to secure the continuation of a world-class, dynamic and responsive research base across the full academic spectrum within UK higher education. The results of the REF are used to inform the allocation of around £2 billion of research funding per year. 157 UK universities took part in the most recent REF.

Find out more about what the REF is and how it works.


Research Excellence Framework at LSE


Navigate your way around Research Excellence Framework (REF) at LSE. Browse resources for LSE staff preparing for and managing aspects of REF.

What is REF? The purpose of REF, assessment process, important dates, REF 2021 rules, past results and impact

REF 2021 LSE results for the latest Research Excellence Framework (REF)

Have you been accepted for publication? Don't delay - deposit your research for Open Access and LSE Research Online

Download LSE's Equality Impact Assessment for REF 2021

Enhance your research Support for staff with research funding, award management, commercial outreach and consulting

Revisions to REF 2021 Taking account of the effects of COVID-19

How is REF managed at LSE? Learn about REF Strategy Committee and departmental roles

What does REF mean for me? Key questions answered for academics and researchers

Unit of Assessment departmental map Find out which LSE departments share Unit of Assessments for REF 2021

REF 2014 results View the outcomes of UK Higher Education Institutions from the last assessment

How did REF 2021 differ from 2014? Rules for the 2021 REF changed - explore the differences

Code of Practice Download LSE's procedure for selecting staff and outputs for REF 2021

REF FAQs Explore common REF related questions asked by LSE staff

REF glossary Confused by REF-related terms? Key definitions simply explained

Research for the World LSE academics helping to shape the world’s political, economic and social future

World-leading research from LSE Be inspired by research news and initiatives, search our repository of research papers and more

What is REF Impact? Find out if your research has impact - do's and don'ts of writing an impact case study

How do I write my impact case study? Present your best examples with this A to Z of writing an impact case study

LSE Impact Blog - social media and REF How was social media cited in 2014 REF impact case studies?

Research excellence framework strategy committee (REFSC) Learn how often REFSC meet, who the members are and what they discuss

Research Environment Find out how the assessment will support research and enable impact

Are you REF ready? Follow our easy steps to submit your research for REF2028

ARMA awards

Research Management Team of the Year, winners 2016

Values in Practice Awards (VIP) for Professional Services Staff

LSE Citizenship, Team winners 2016

Research Management Team of the Year, highly commended 2015


Assessing research excellence: Evaluating the Research Excellence Framework

Mehmet Pinar, Timothy J Horne, Assessing research excellence: Evaluating the Research Excellence Framework, Research Evaluation, Volume 31, Issue 2, April 2022, Pages 173–187, https://doi.org/10.1093/reseval/rvab042

Performance-based research funding systems have been extensively used around the globe to allocate funds across higher education institutes (HEIs), which led to an increased amount of literature examining their use. The UK’s Research Excellence Framework (REF) uses a peer-review process to evaluate the research environment, research outputs and non-academic impact of research produced by HEIs to produce a more accountable distribution of public funds. However, carrying out such a research evaluation is costly. Given the cost and that it is suggested that the evaluation of each component is subject to bias and has received other criticisms, this article uses correlation and principal component analysis to evaluate REF’s usefulness as a composite evaluation index. As the three elements of the evaluation—environment, impact and output—are highly and positively correlated, the effect of the removal of an element from the evaluation leads to relatively small shifts in the allocation of funds and in the rankings of HEIs. As a result, future evaluations may consider the removal of some elements of the REF or reconsider a new way of evaluating different elements to capture organizational achievement rather than individual achievements.
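To make the correlation argument concrete, the sketch below computes a Pearson correlation coefficient between two element scores across institutions. It is an illustration of the general technique only, not the authors' analysis: the function name is hypothetical and the scores are made-up values chosen purely to show the calculation.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Made-up GPA-style scores for four hypothetical institutions.
output_scores = [2.8, 3.0, 3.2, 3.4]
impact_scores = [2.7, 3.1, 3.2, 3.5]

r = pearson(output_scores, impact_scores)
print(f"correlation between outputs and impact: {r:.3f}")
```

When two elements are this strongly correlated, dropping one of them from a weighted composite changes institutional rankings relatively little, which is the intuition behind the article's argument.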

Performance-based research funding systems (PRFS) have multiplied since the United Kingdom introduced the first ‘Research Selectivity Exercise’ in 1986. Thirty years on from this first exercise, Jonkers and Zacharewicz (2016) reported that 17 of the EU28 countries had some form of PRFS, and this had increased to 18 by 2019 (Zacharewicz et al. 2019).

A widely used definition of what constitutes a PRFS is that they must meet the following criteria (Hicks 2012):

- Research must be evaluated, not the quality of teaching and degree programmes;

- The evaluation must be ex post, and must not be an ex ante evaluation of a research or project proposal;

- The output(s) of research must be evaluated;

- The distribution of funding from Government must depend upon the evaluation results;

- The system must be national.

Within these relatively narrow boundaries, there is significant variation between both what is assessed in different PRFS, and how the assessment is made. With regard to ‘what’, some focus almost exclusively on research outputs, predominantly journal articles, whereas others, notably the UK’s Research Excellence Framework (REF), assess other aspects of research such as the impact of research and the research environment. With regard to ‘how’, some PRFS use exclusively or predominantly metrics such as citations, whereas others use expert peer review, and others still a mix of both methods (Zacharewicz et al. 2019). 1

This article focuses on the UK’s REF, which originated in the very first PRFS, the Research Selectivity Exercise in 1986. This was followed by a second exercise in 1989 and a series of Research Assessment Exercises (RAEs) in the 1990s and 2000s. Each RAE represented a relatively gentle evolution from the previous one, but there was arguably more of a revolution than evolution between the last RAE in 2008 and the first REF in 2014 (REF 2014), with the introduction of the assessment of research impact into the assessment framework (see e.g., Gilroy and McNamara 2009; Shattock 2012; Marques et al. 2017 for a detailed discussion on the evolution of RAEs in the UK). Three elements of research, namely research outputs, the non-academic impact of research and the research environment, were evaluated in the REF 2014 exercise. Research outputs (e.g., journal articles, books and research-based artistic works) were evaluated in terms of their ‘originality, significance and rigour’. The assessment of the non-academic impact of research was based on the submission of impact case studies that describe the details of the ‘reach and significance’ of impacts on the economy, society and/or culture that were underpinned by excellent research. The research environment consisted of both data relating to the environment and a narrative environment statement. The environment data consisted of the number of postgraduate research degree completions and total research income generated by the submitting unit. The research environment statement provided information on the research undertaken, the staffing strategy, infrastructure and facilities, staff development activities, and research collaborations and contribution to the discipline. The quality of the research environment was assessed in terms of its ‘vitality and sustainability’ based on the environment data and narrative environment statements (see REF 2012 for further details).

There has been criticism of several aspects of the assessment of research excellence in the REF, including the cost of preparation and evaluation of the REF, the potential lack of objectivity in assessing its elements and the effect of the quasi-arbitrary or opaque value judgements on the allocation of quality-related research (QR) funding (see Section 2 for the details). Furthermore, the use of multiple criteria, which is the case for the REF (i.e., environment, impact and outputs), in assessing university performance has long been criticized (see e.g., Saisana, d’Hombres and Saltelli 2011; Pinar, Milla and Stengos 2019). These multidimensional indices are risky because some of their components have been considered redundant (McGillivray 1991; McGillivray and White 1993). For instance, McGillivray (1991), McGillivray and White (1993) and Bérenger and Verdier-Chouchane (2007) use correlation analysis to examine the redundancy of different components of well-being when the indices are constructed. The main argument of these papers is that if the index components are highly and positively correlated, then the inclusion of additional dimensions in the index does not add new information to that provided by any of the other components. Furthermore, Nardo et al. (2008) point out that building a composite index from highly correlated components leads to a double weighting of the same information and so to overweighting of the information captured by these components. Therefore, this literature argues that excluding any component from the evaluation does not lead to loss of information if the evaluation elements are highly and positively correlated. For instance, by using correlation analysis, Cahill (2005) showed that excluding any component from a composite index produces rankings and achievements similar to the composite index. To overcome these drawbacks, principal components analysis (PCA) has been used to obtain indices (see e.g., McGillivray 2005; Khatun 2009; Nguefack‐Tsague, Klasen and Zucchini 2011 for the use of PCA for well-being indices, and see Tijssen, Yegros-Yegros and Winnink 2016 and Robinson-Garcia et al. 2019 for the use of PCA for university rankings). The PCA transforms the correlated variables into a new set of uncorrelated variables using a covariance matrix, which explains most of the variation in the existing components (Nardo et al. 2008).
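The correlation and PCA steps described above can be sketched as follows. This is a minimal illustration on synthetic data, not the authors' analysis: the scores, the number of units, the noise level and all variable names are assumptions made for the example, and rescaling the first-component loadings to sum to one is only one possible way of turning PCA output into index weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data (not REF results): 100 units whose three element scores
# share a common quality factor, so they are highly and positively correlated.
n_units = 100
quality = rng.normal(size=n_units)
scores = np.column_stack([
    quality + 0.3 * rng.normal(size=n_units),  # environment
    quality + 0.3 * rng.normal(size=n_units),  # impact
    quality + 0.3 * rng.normal(size=n_units),  # output
])

# Pairwise correlations between the three elements (3 x 3 matrix).
corr = np.corrcoef(scores, rowvar=False)

# PCA via eigendecomposition of the covariance matrix.
cov = np.cov(scores, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)   # eigh returns ascending eigenvalues
order = np.argsort(eigvals)[::-1]        # reorder to descending
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Share of total variance explained by each principal component.
explained = eigvals / eigvals.sum()

# Project the centred scores onto the components; the resulting columns
# are mutually uncorrelated.
components = (scores - scores.mean(axis=0)) @ eigvecs

# Assumed weighting rule: first-component loadings rescaled to sum to one.
first = eigvecs[:, 0]
first = first if first.sum() > 0 else -first  # eigenvector sign is arbitrary
weights = first / first.sum()

print(np.round(corr, 3))
print(np.round(explained, 3))
print(np.round(weights, 3))
```

When the three elements are this strongly correlated, the first component carries most of the variance and the derived weights come out close to equal, which is the redundancy argument in numerical form.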

This article will contribute to the literature by examining the redundancy of the three components of the REF by using the correlation analysis between them to examine the relevance of each component for the evaluation. If the three elements of the REF are highly and positively correlated, then excluding one component from the analysis will not result in major changes in the overall assessment of universities and funding allocated to them. This article will examine whether this would be the case. Furthermore, we will also carry out PCA to obtain weights that would produce an index that explains most of the variation in the three elements of the REF while obtaining an overall assessment of higher education institutes (HEIs) and distributing funding across them.
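The drop-one-element check can be illustrated with a short sketch. The data are synthetic, the function names are hypothetical, and the rule used to redistribute a removed element's weight (proportional renormalisation of the remaining weights) is an assumption rather than the article's stated procedure. The baseline weights are the published REF 2014 weightings of 65% for outputs, 20% for impact and 15% for environment, which this section does not restate.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical scores for 50 institutions (not REF data): a shared quality
# factor plus element-specific noise gives highly correlated elements.
n_heis = 50
quality = rng.normal(size=n_heis)
scores = {
    "output": quality + 0.3 * rng.normal(size=n_heis),
    "impact": quality + 0.3 * rng.normal(size=n_heis),
    "environment": quality + 0.3 * rng.normal(size=n_heis),
}

# REF 2014 element weightings.
weights = {"output": 0.65, "impact": 0.20, "environment": 0.15}


def composite(scores, weights, drop=None):
    """Weighted composite score; if an element is dropped, the remaining
    weights are rescaled proportionally to sum to one (assumed rule)."""
    kept = {k: w for k, w in weights.items() if k != drop}
    total = sum(kept.values())
    return sum((w / total) * scores[k] for k, w in kept.items())


def ranks(x):
    """Rank positions (0 = lowest); ties are not expected in continuous data."""
    return np.argsort(np.argsort(x))


def spearman(a, b):
    """Spearman rank correlation computed as Pearson correlation of ranks."""
    return np.corrcoef(ranks(a), ranks(b))[0, 1]


full = composite(scores, weights)
rank_agreement = {
    name: spearman(full, composite(scores, weights, drop=name))
    for name in weights
}

for name, rho in rank_agreement.items():
    print(f"drop {name}: rank correlation with full index = {rho:.3f}")
```

A rank correlation close to one for every dropped element is what the redundancy hypothesis predicts; real REF scores need not behave like this synthetic example.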

The remainder of this article is structured as follows. In Section 2, we provide details on how the UK’s REF operates, review the literature on the REF exercise and outline the hypotheses of the article. In Section 3, we describe the data used in this article and examine the correlation between the environment, impact and output scores. In this section, we also provide the details of the QR funding formula used to allocate the funding and demonstrate the correlation between the funding distribution in the environment, impact and output pots. We also carry out PCA by using the achievement scores and funding distributed in each element in this section. Finally, in this section, we provide an alternative approach to the calculation of overall REF scores and the distribution of QR funding based on the hypotheses of the article. Section 4 considers the effect on the distribution of QR funding for English universities 2 and their rankings when each element is removed from the calculation one at a time and PCA weights are used. Finally, Section 5 presents the conclusions of our analyses and the implications for how future REF assessment exercises might be structured.

Research assessment exercises have existed in the UK since the first Research Selectivity Exercise was undertaken in 1986. A subsequent exercise was held in 1989, which was followed by RAEs in 1996, 2001 and 2008. Each HEI’s submission to the 1986 exercise comprised a research statement in one or more of 37 subject areas, together with five research outputs per area in which a submission was made (see e.g., Hinze et al. 2019). The complexity of the submissions has increased since that first exercise, and in 2014 the requirement to submit case studies and a narrative template to allow for the assessment of research impact was included for the first time, and the exercise was renamed the REF.

The REF 2014 ‘Assessment Framework and Guidance on Submissions’ (REF 2011) indicated that a submission’s research environment would be assessed according to its ‘vitality and sustainability’, using the same five-point (4* down to unclassified) scale as for the other elements of the exercise. 3

Following the 2014 REF exercise, there have been many criticisms of the REF. For instance, the introduction of impact as an element of the UK’s research assessment methodology has itself been the subject of many papers and reports in which many of the issues and challenges it has brought have been discussed (see e.g., Smith, Ward and House 2011; Penfield et al. 2014; Manville et al. 2015; Watermeyer 2016; Pinar and Unlu 2020a). Manville et al. (2015) and Watermeyer (2016) show that academics in some fields were concerned that the impact agenda would affect their research focus by forcing them to produce more ‘impactful’ research rather than carrying out their own research agenda. In addition, Manville et al. (2015) demonstrate that there have been problems with the peer reviewing of the impact case studies, where reviewer panels struggled to distinguish between 2-star and 3-star and, most importantly, between 3-star and 4-star. Furthermore, Pinar and Unlu (2020a) demonstrate that the inclusion of the impact agenda in REF 2014 increased the research income gap across HEIs. Similarly, the literature identifies some serious concerns with the assessment of the research environment (Taylor 2011; Wilsdon et al. 2015; Thorpe et al. 2018a, b). Taylor (2011) considered the use of metrics to assess the research environment, and found evidence of bias towards more research-intensive universities in the assessment of research environment in the 2008 RAE (see Pinar and Unlu 2020b for similar findings for the REF 2014). In particular, he argued that the judgement of assessors may have an implicit bias and be influenced by the ‘halo effect’, where assessors allocate relatively higher scores to departments with long-standing records of high-quality research, and showed that members of Russell Group universities benefited from a ‘halo effect’, after accounting for various important quantitative factors. Wilsdon et al. (2015) wrote a report for the Higher Education Funding Council for England (HEFCE), which ran the REF on behalf of the four countries of the UK, in which those who had reviewed the narrative research environment statements in REF 2014 as members of the panels of experts expressed concerns ‘that the narrative elements were hard to assess, with difficulties in separating quality in research environment from quality in writing about it.’ Thorpe et al. (2018a, b) examined environment statements submitted to REF 2014, and their work indicates that the scores given to the overall research environment were influenced by the language used in the narrative statements, and by whether or not the submitting university was represented amongst those experts who reviewed the statements. Finally, a similar peer-review bias has been identified in the evaluation of research outputs (see e.g., Taylor 2011). Overall, there have been criticisms about the evaluation biases in each element of the REF exercise.

Another criticism of the REF 2014 exercise has been its cost. HEFCE commissioned a review of it (Farla and Simmonds 2015), which estimated the cost of the exercise to be £246 million (Farla and Simmonds 2015, 6), of which the cost of preparing the REF submissions was £212 million. It can be estimated that roughly £19–27 million was spent preparing the research environment statements, 4 and £55 million was spent in preparation of impact case studies, and the remaining preparation cost may be associated with the output submission. Overall, the cost of preparing each element was significant. Since there is good agreement between bibliometric factors and peer review assessments (Bertocchi et al. 2015; Pidd and Broadbent 2015), it has been argued that the cost of evaluating outputs could be decreased with the use of bibliometric information (see e.g., De Boer et al. 2015; Geuna and Piolatto 2016). Furthermore, Pinar and Unlu (2020b) found that the use of ‘environment data’ alone could minimize the cost of preparation of the environment part of the assessment, as the environment data (i.e., income generated by units, number of staff and postgraduate degree completions) explain a good percentage of the variation between HEIs in REF environment scores.
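The residual attributed to output submissions follows from simple subtraction of the figures quoted above. The sketch below only restates that arithmetic; the variable names are illustrative, and treating the whole residual as output-related is the paragraph's own tentative reading, not an audited figure.

```python
# All figures in £ million, as quoted in the paragraph above.
total_cost = 246                   # estimated cost of the whole exercise
preparation_cost = 212             # cost of preparing REF submissions
impact_cost = 55                   # preparation of impact case studies
environment_cost_range = (19, 27)  # environment statements (low, high)

# Residual preparation cost, which may be associated with outputs.
# The high environment estimate gives the low residual, and vice versa.
output_cost_range = tuple(
    preparation_cost - impact_cost - env
    for env in reversed(environment_cost_range)
)

# Part of the total not attributed to preparing submissions.
non_preparation_cost = total_cost - preparation_cost

print(output_cost_range)     # (130, 138)
print(non_preparation_cost)  # 34
```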