- Statistical Analysis Plan: What is it & How to Write One


Statistics give meaning to data collected during research and make it simple to extract actionable insights from the data. As a result, it’s important to have a guide for analyzing data, which is where a statistical analysis plan (SAP) comes in.

A statistical analysis plan provides a framework for collecting data, simplifying and interpreting it, and assessing its reliability and validity.

Here’s a guide on what a statistical analysis plan is and how to write one.

What Is a Statistical Analysis Plan?

A statistical analysis plan (SAP) is a document that specifies the statistical analysis that will be performed on a given dataset. It serves as a comprehensive guide for the analysis, presenting a clear and organized approach to data analysis that ensures the reliability and validity of the results.

SAPs are most widely used in research, data science, and statistics. They are a necessary tool for clearly communicating the goals and methods of analysis, as well as documenting the decisions made during the analysis process.

SAPs typically outline the steps needed to prepare data for analysis, the methods to use, and details such as sample size, data sources, and any assumptions or limitations of the analysis.

The first step in creating a statistical analysis plan is to identify the research question or hypothesis you’re testing.

Next, choose the appropriate statistical techniques for analyzing the data and specify the analysis details, such as sample size and data sources. The plan should also include the strategy for presenting and interpreting the results.

How to Develop a Statistical Analysis Plan

Here are the steps for creating a successful statistical analysis plan (SAP):

Identify the Research Question or Hypothesis

This is the main goal of the analysis, and it will guide the rest of the SAP. Here are the steps to identifying research questions or hypotheses:

Define the Analysis’s Goal

The research question or hypothesis should be related to the analysis’s main goal or purpose. If the goal is to evaluate the effectiveness of a content strategy, the research question could be “Is the new strategy more effective than the previous or standard strategy?”

Determine the Variables of Interest

Determine which variables are important to the research question or hypothesis. In the preceding example, the variables could include the effectiveness of the content strategy and its drawbacks.

Formulate the Question or Hypothesis

After identifying the variables, use them to frame the research question in a clear and precise way. For example, “Is the new content strategy more effective than the current one in terms of user acquisition?”

Check for Clarity and Specificity

Review the research question or hypothesis for precision and clarity. If a question isn’t well-structured enough to be tested with the data and resources at hand, revise it.

Determine the Sample Size

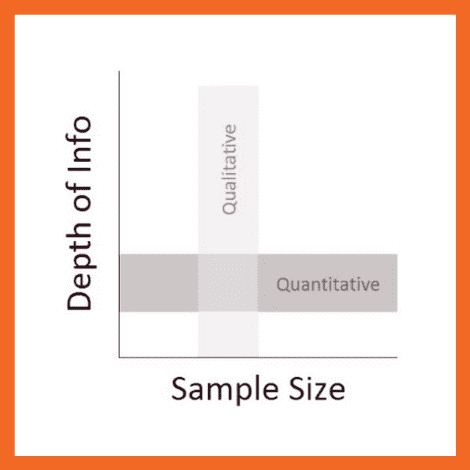

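The main factors that influence the sample size are the type of data being analyzed, the size of the effect you expect to detect, the variability of the data, and the resources available. For example, a binary outcome usually needs a larger sample than a continuous outcome to detect a comparable effect.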

Also, your sample size should be tailored to your available resources, time, and budget. You could also calculate the sample size using a sample size formula or software.
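As a rough illustration of a sample size formula, here is a minimal sketch that uses the common normal-approximation formula for comparing two group means with a two-sided test. The function name, the default significance level of 0.05, the default power of 0.80, and the standardized effect sizes are assumptions chosen for the example, not values taken from this guide.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed per group for a two-sided comparison of two means.

    effect_size is the standardized difference (difference in means / standard deviation).
    Uses the normal approximation n = 2 * ((z_alpha + z_beta) / effect_size) ** 2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_beta = NormalDist().inv_cdf(power)           # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


# Illustrative (assumed) effect sizes: a medium and a small standardized difference.
n_medium = sample_size_per_group(0.5)
n_small = sample_size_per_group(0.2)
print(n_medium, n_small)
```

Smaller expected effects require much larger samples, which is why the expected effect size should be stated in the SAP alongside the chosen significance level and power. Dedicated software typically applies a small correction based on the t distribution, so its result may be slightly larger.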

Select the Appropriate Statistical Techniques

Choose the most appropriate statistical techniques for the analysis based on the research question, data type, and sample size.

Specify the Details of the Analysis

This includes the data sources, any analysis assumptions or limitations, and any variables that need modifications.

Plan For Presenting and Interpreting the Results

Plan how the results will be interpreted and communicated to your audience. Choose how you want to present the information, such as a report or a presentation.

Identifying the Need for a Statistical Analysis Plan

Here are some real-world examples of where a statistical analysis plan is needed:

Research Studies

Health researchers need an SAP to determine the effectiveness of a new drug in treating a specific medical condition. It outlines the methods and procedures for analyzing the study’s data, including sample size, data sources, and statistical techniques to be used.

Clinical Trials

Clinical trials help to test the safety and efficacy of new medical treatments, which would necessitate gathering a large amount of data on how patients respond to treatment, side effects, and comparisons to existing treatments.

A clinical trial SAP should emphasize the statistical analysis that will be performed on the trial data, such as sample size, data sources, and statistical techniques to be used.

Data-Driven Projects

Marketing research firms use SAPs to outline the statistical analysis that will be performed on market research data. The SAP specifies the sample size, data sources, and statistical techniques that will be used to analyze data and provide insights into consumer behavior.

Government Agencies

When government agencies collect data for new policies such as new tax laws or population censuses, they require a statistical analysis plan outlining how the data will be collected, interpreted, and used. The SAP would specify the sample size, data sources, and statistical techniques that will be used to analyze the data and assess the effectiveness of the policy or program.

Nonprofit Organizations

Nonprofits could also use SAPs to analyze data collected as part of a research study or program evaluation. A non-profit, for example, could gather information about who is likely to donate to their cause and how to contact them to solicit donations.

How Do You Write a Statistical Analysis Plan?

Here are the steps to writing a simple and effective statistical analysis plan:

Introduction

A statistical analysis plan (SAP) introduction should provide an overview of the research question or hypothesis being tested, as well as the goals and objectives of the analysis. It should also give background on the topic and the setting in which the analysis is being conducted.

This section should describe how the data was collected and prepared for analysis, including sample size, data sources, and any analysis assumptions or limitations.

For example, consider a clinical trial involving 100 patients with a specific medical condition. Patients will be assigned at random to either the new treatment or the current standard treatment.

The SAP will include data on the treatment’s effectiveness in reducing symptoms, which will be collected at the start of the trial and at regular intervals throughout and after it. To avoid common survey bias, data is collected using standardized questionnaires created by researchers.

Next, the data will be cleaned and prepared for analysis by removing any missing or invalid values and ensuring that it is in the correct format. Also, any data collected outside of the specified time frame will be excluded from the analysis.
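The cleaning rules in this example can be sketched in a few lines of code. The records, the 0 to 10 score range, and the study dates below are hypothetical, invented only to show how missing values, invalid values, and out-of-window data might be excluded while keeping a record of why.

```python
from datetime import date

# Hypothetical records: (patient_id, visit_date, symptom_score)
records = [
    ("P01", date(2024, 1, 10), 7),
    ("P02", date(2024, 2, 5), None),   # missing score
    ("P03", date(2024, 3, 1), 42),     # outside the assumed valid 0-10 range
    ("P04", date(2023, 12, 20), 5),    # collected before the study window
    ("P05", date(2024, 4, 15), 3),
]

# Assumed study window, for illustration only.
STUDY_START = date(2024, 1, 1)
STUDY_END = date(2024, 6, 30)


def clean(rows, start, end, low=0, high=10):
    """Split rows into kept records and (patient_id, reason) exclusions."""
    kept, excluded = [], []
    for patient_id, visit, score in rows:
        if score is None:
            excluded.append((patient_id, "missing score"))
        elif not low <= score <= high:
            excluded.append((patient_id, "invalid score"))
        elif not start <= visit <= end:
            excluded.append((patient_id, "outside time frame"))
        else:
            kept.append((patient_id, visit, score))
    return kept, excluded


kept, excluded = clean(records, STUDY_START, STUDY_END)
print(len(kept), "kept;", excluded)
```

Recording the reason for each exclusion makes it easier to report how many observations were dropped and why, which supports the documentation the SAP calls for.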

The small sample size and brief duration of the clinical trial are two of the study’s limitations. These constraints should be considered when interpreting the results of this analysis.

Statistical Techniques

This section should describe the statistical techniques that will be used in the analysis, including any specific software or tools.

Using the preceding example, you could use software such as SPSS or R to run t-tests and regression analysis that compare the effectiveness of the two treatments.
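To make the t-test concrete, here is a minimal sketch of Welch's two-sample t statistic, one common variant of the t-test that does not assume equal variances. The symptom scores are made up for illustration, and the choice of Welch's variant is an assumption; the guide does not say which t-test to use.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    var_a, var_b = variance(sample_a), variance(sample_b)  # sample variances (n - 1)
    se_squared = var_a / n_a + var_b / n_b
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se_squared)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = se_squared ** 2 / (
        (var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1)
    )
    return t, df


# Hypothetical symptom scores (lower is better) for the two arms.
new_treatment = [4, 5, 3, 4, 4]
standard = [6, 7, 5, 6, 6]

t_stat, df = welch_t(new_treatment, standard)
print(round(t_stat, 3), round(df, 1))
```

The p-value comes from comparing the statistic against a t distribution with the computed degrees of freedom, which statistical packages such as R or SPSS do automatically.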

You can make further investigations using additional statistical techniques such as ANOVA. It enables you to investigate the effects of various variables on treatment efficacy and identify any significant inter-variable interactions.

This section describes how the results will be presented and interpreted, including any plans for visualizing the data or using statistical tests to determine their significance.

Using the clinical trial example, you can visualize the data and find patterns in the data by using graphical representations. Next, interpret the result in light of the research question or hypothesis, as well as any limitations or assumptions of the analysis.

Assess the implications of the clinical trial results and future research on the medical condition’s treatment. Then, develop a summary of the results including any recommendations or conclusions drawn from the research.

The “Conclusion” section should provide a concise summary of the main findings of the analysis as well as any recommendations or implications. It should also highlight any limitations or assumptions of the analysis and discuss the implications of the results for clinical practice and future research.

Information in the Statistical Analysis Plan

1. Administrative details: who wrote the SAP, when it was approved, and who signed it.

2. Expected number of participants, and sample size calculation.

3. A detailed explanation of the main and short-term analysis techniques used for analyzing the data. This includes:

- Study goals

- Specify the primary and secondary hypotheses, as well as the parameters you’ll use to assess how well you met the study objectives.

- A detailed description of the study’s sample size.

- A summary of the primary and secondary outcomes of each study. Typically, there should be just one primary outcome.

4. The SAP should also specify how each outcome metric will be assessed. This typically means naming the statistical tests used to examine each outcome measure and the method for accounting for missing data.

5. The SAP should also explain the procedures used to analyze and display the study results in detail. This includes:

- The level of statistical significance that will be used, and if one-tailed or two-tailed tests will be used.

- How to deal with missing data.

- Outlier management techniques.

- Protocol variations, noncompliance, and withdrawal procedures.

- Estimation methods for points and intervals.

- How to calculate composite or derived variables, including data-driven definitions and any additional details needed to reduce uncertainties.

- Baseline and covariate data

- Add randomization factors

- Methods for dealing with data from multiple sources

- How to deal with participant interactions

- Multiple comparisons and subgroup analysis methods

- Interim or sequential analyses

- Step-by-step procedure to terminate research and its implications

- Statistical software for analyzing the data

- Validate critical analysis assumptions and sensitivity analyses.

- Visual representation of the research data

- Define the safety population

6. Alternative models for data analysis if the data does not fit the chosen statistical model

Making Modifications to Statistical Analysis Plan

It is not unusual for a statistical analysis plan (SAP) to undergo adjustments during the project’s life cycle. Here’s why you may need to modify your SAP:

- Research question or hypothesis change: As the project progresses, the research question or hypothesis may evolve or change, requiring changes to the SAP.

- New data: As new data is collected or becomes available, it may be necessary to modify the SAP to include the new information.

- Unpredicted challenges: Unexpected challenges may arise during the project, requiring SAP alteration. For example, the data may not be of the expected quality, or the sample size may need to be adjusted.

- Improved data understanding: The researcher may gain a better understanding of the data as the analysis progresses and may need to modify the SAP to reflect this enhanced understanding.

Make sure to document the changes made to the SAP, as well as the reasons for them. This ensures the analysis’s reliability and accuracy.

You could also work with a statistician or research expert to ensure that the SAP changes are appropriate and do not jeopardize the results’ reliability and validity.

A statistical analysis plan (SAP) is a step-by-step plan that highlights the methods and techniques to be used in data analysis for a research project. SAPs ensure the reliability and validity of the results and provide a clear roadmap for the analysis.

You have to include the research question or hypothesis, sample size, data sources, statistical techniques, variables, and guidelines for interpreting and presenting the results to have an effective SAP.


- Moradeke Owa


educational research techniques

Research techniques and education.

Developing a Data Analysis Plan

It is extremely common for beginners, and perhaps even experienced researchers, to lose track of what they are trying to achieve when completing a research project. The open nature of research allows for a multitude of equally acceptable ways to complete a project. This can lead to an inability to make a decision and/or stay on course when doing research.

Data Analysis Plan

A data analysis plan includes many features of a research project in it with a particular emphasis on mapping out how research questions will be answered and what is necessary to answer the question. Below is a sample template of the analysis plan.

The majority of this diagram should be familiar to anyone who has done research. At the top, you state the problem; this is the overall focus of the paper. Next comes the purpose, which is the over-arching goal of a research project.

After the purpose come the research questions. The research questions are questions about the problem that are answerable. People struggle with developing clear and answerable research questions. It is critical that research questions are written in a way that they can be answered and that the questions are clearly derived from the problem. Poor questions mean poor or even no answers.

After the research questions, it is important to know what variables are available for the entire study and specifically what variables can be used to answer each research question. Lastly, you must indicate what analysis or visual you will develop in order to answer your research questions about your problem. This requires you to know how you will answer your research questions.

Below is an example of a completed analysis plan for a simple undergraduate-level research paper.

In the example above, the student wants to understand the perceptions of university students about the cafeteria food quality and their satisfaction with the university. There were four research questions: a demographic descriptive question, a descriptive question about the two main variables, a comparison question, and lastly a relationship question.

The variables available for answering the questions are listed off to the left side. Under that, the student indicates the variables needed to answer each question. For example, the demographic variables of sex, class level, and major are needed to answer the question about the demographic profile.

The last section is the analysis. For the demographic profile, the student found the percentage of the population in each subgroup of the demographic variables.
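The demographic profile described above amounts to counting each subgroup and dividing by the total. A minimal sketch follows, using hypothetical class-level responses; the data and the function name are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical survey responses for one demographic variable (class level).
class_levels = [
    "freshman", "sophomore", "freshman", "senior",
    "junior", "freshman", "senior", "sophomore",
]


def percent_by_group(values):
    """Return the percentage of respondents in each subgroup, rounded to one decimal."""
    counts = Counter(values)
    total = len(values)
    return {group: round(100 * n / total, 1) for group, n in counts.items()}


profile = percent_by_group(class_levels)
for group, pct in profile.items():
    print(f"{group}: {pct}%")
```

The same function could be applied to each demographic variable in turn (sex, class level, major) to build the full profile.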

A data analysis plan provides an excellent way to determine what needs to be done to complete a study. It also helps researchers to clearly understand what they are trying to do and provides a visual for those with whom the researcher wants to communicate about the progress of a study.



Amsterdam Public Health, Research Lifecycle

Analysis plan

To promote structured, targeted data analysis.

Requirements

An analysis plan should be created and finalized prior to the data analyses.

Documentation

The analysis plan (Guidelines per study type are provided below)

Responsibilities

- Executing researcher: To create the analysis plan prior to the data analyses, containing a description of the research question and what the various steps in the analysis are going to be. This should also be signed and dated by the PI.

- Project leaders: To inform the executing researcher about setting up the analysis plan before analyses are undertaken.

- Research assistant: N.a.

An analysis plan should be created and finalized (signed and dated by PI) prior to the data analyses. The analysis plan contains a description of the research question and what the various steps in the analysis are going to be. It also contains an exploration of literature (what is already known? What will this study add?) to make sure your research question is relevant (see Glasziou et al. Lancet 2014 on avoiding research waste). The analysis plan is intended as a starting point for the analysis. It ensures that the analysis can be undertaken in a targeted manner, and promotes research integrity.

If you perform an exploratory study, you can adjust your analysis based on the data you find. This may be useful if not much is known about the research subject, but it is considered relatively low-level evidence, and your report should clearly state that the study is exploratory. If you want to perform a hypothesis-testing study (be it interventional or using observational data), you need to pre-specify the analyses you intend to do before performing them, including the population, subgroups, stratifications and statistical tests. If deviations from the analysis plan are made during the study, these should be documented in the analysis plan and stated in the report (i.e. as post-hoc tests). If you intend to do hypothesis-free research with multiple testing, you should pre-specify your threshold for statistical significance according to the number of analyses you will perform. Lastly, if you intend to perform an RCT, the analysis plan is practically set in stone. (Also see ICH E9, Statistical Principles for Clinical Trials.)
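One simple way to set a significance threshold according to the number of analyses is the Bonferroni correction, which divides the overall significance level by the number of tests. The sketch below assumes that method and uses made-up p-values; the text above does not prescribe a particular correction, and less conservative alternatives exist.

```python
def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test significance threshold under the Bonferroni correction."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


def significant(p_values, alpha=0.05):
    """Flag which p-values fall below the Bonferroni-adjusted threshold."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p < threshold for p in p_values]


# Hypothetical p-values from four pre-specified analyses.
p_values = [0.001, 0.02, 0.04, 0.008]
flags = significant(p_values)
print(bonferroni_threshold(0.05, len(p_values)), flags)
```

Fixing the threshold in the analysis plan before looking at the data is the point; the specific correction method is a design choice to agree with a statistician.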

If needed, an exploratory analysis may be part of the analysis plan, to inform the setting up of the final analysis (see initial data analysis). For instance, you may want to know distributions of values in order to create meaningful categories, or determine whether data are normally distributed. The findings and decisions made during these preliminary exploratory analyses should be clearly documented, preferably in a version two of the analysis plan, and made reproducible by providing the data analysis syntax (in SPSS, SAS, STATA, R) (see guideline Documentation of data analysis).

The concrete research question needs to be formulated first within the analysis plan, following the literature review; this is the question intended to be answered by the analyses. Concrete research questions may be defined using the acronym PICO: Population, Intervention, Comparison, Outcomes. An example of a concrete question could be: “Does frequent bending at work lead to an elevated risk of lower back pain occurring in employees?” (Population = Employees; Intervention = Frequent bending; Comparison = Infrequent bending; Outcome = Occurrence of back pain). Concrete research questions are essential for determining the analyses required.

The analysis plan should then describe the primary and secondary outcomes, the determinants and data needed, and which statistical techniques are to be used to analyse the data. The following issues need to be considered in this process and described where applicable:

- In case of a trial: is the trial a superiority, non-inferiority or equivalence trial.

- Superiority: treatment A is better than the control.

- Non-inferiority: treatment A is not worse than treatment B.

- Equivalence: testing similarity using a tolerance range.

- In other studies: what is the study design (case-control, longitudinal cohort, etc.)?

- Which (subgroup of the) population is to be included in the analyses? Which groups will you compare?;

- What are the primary and secondary endpoints? Which data from which endpoint (T1, T2, etc.) will be used?;

- Which (dependent and independent) variables are to be used in the analyses and how are the variables to be analysed (e.g. continuous or in categories)?;

- Which variables are to be investigated as potential confounders or effect modifiers (and why), and how are these variables to be analysed? There are different ways of dealing with confounders. We distinguish the following: 1) Correct for all potential confounders, without worrying about whether a variable is a ‘real’ confounder. Confounders are usually entered in small groups (demographic factors, clinical parameters, etc.), which gives corrected model 1, corrected model 2, and so on. Watch for collinearity and overcorrection if confounders overlap too much with the primary determinants. 2) If the sample size is not large enough relative to the number of potential confounders, consider correcting only for those confounders that are relevant for the association between determinant and outcome. The relevant confounders are usually selected with a forward selection procedure: add the candidate confounders to the model one at a time, note how much each changes the effect of the variable of interest, and keep the one that changes it most. Repeat this procedure until no remaining confounder has a relevant effect (<10% change in the regression coefficient). Alternatively, select the confounders that univariately change the point estimate of the association by more than 10%. 3) Set up a Directed Acyclic Graph (DAG) to determine which confounders should be added to the model. Please see http://www.dagitty.net/ for more information.

- How to deal with missing values? (see chapter on handling missing data);

- Which analyses are to be carried out in which order (e.g. univariable analyses, multivariable analyses, analysis of confounders, analysis of interaction effects, analysis of sub-populations, etc.)?; Which sensitivity analyses will be performed?

- Do the data meet the criteria for the specific statistical technique?
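The 10% change-in-estimate rule mentioned in the confounder item above can be sketched as follows. The regression coefficients are hypothetical numbers standing in for the output of models you would fit in your statistical software; only the comparison logic is shown, and the function names are assumptions.

```python
def relative_change(reference: float, adjusted: float) -> float:
    """Absolute relative change of an adjusted coefficient versus the reference."""
    if reference == 0:
        raise ValueError("reference coefficient must be non-zero")
    return abs(adjusted - reference) / abs(reference)


def flag_confounders(crude: float, adjusted_by: dict, cutoff: float = 0.10):
    """Return the variables whose single adjustment shifts the estimate by more than the cutoff."""
    return [
        name
        for name, coefficient in adjusted_by.items()
        if relative_change(crude, coefficient) > cutoff
    ]


# Hypothetical coefficients for the determinant of interest:
# the crude model, then the model adjusted for each candidate confounder alone.
crude_coef = 0.50
adjusted = {"age": 0.42, "sex": 0.49, "smoking": 0.56}

selected = flag_confounders(crude_coef, adjusted)
print(selected)
```

For the forward selection variant, the same comparison would be repeated after each round, using the current adjusted model as the new reference, until no remaining candidate exceeds the cutoff.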

A statistician may need to be consulted regarding the choice of statistical techniques (also see this intranet page on statistical analysis plan).

It is recommended to already design the empty tables to be included in the article prior to the start of data analysis. This is often very helpful in deciding which analyses are exactly required in order to analyse the data in a targeted manner.

You may consider making your study protocol, including the (statistical) analysis plan, public, either by placing it on a publicly accessible website (Concept Paper/Design paper) or by uploading it to an appropriate studies register (for human trials: NTR / EUDRACT / ClinicalTrials.gov, for non-/preclinical trials: preclinicaltrials.eu).

Check the reporting guidelines when writing an analysis plan. These will help increase the quality of your research and guide you.

How to Write a Successful Research Grant Application, pp 283–298

Writing the Data Analysis Plan

- A. T. Panter

- First Online: 01 January 2010

You and your project statistician have one major goal for your data analysis plan: You need to convince all the reviewers reading your proposal that you would know what to do with your data once your project is funded and your data are in hand. The data analytic plan is a signal to the reviewers about your ability to score, describe, and thoughtfully synthesize a large number of variables into appropriately-selected quantitative models once the data are collected. Reviewers respond very well to plans with a clear elucidation of the data analysis steps, in an appropriate order, with an appropriate level of detail and reference to relevant literatures, and with statistical models and methods that map well onto your proposed aims. A successful data analysis plan produces reviews that either include no comments about the plan or, better yet, compliment it for being comprehensive and logical given your aims. This chapter offers practical advice about developing and writing a compelling, “bullet-proof” data analytic plan for your grant application.

- Latent Class Analysis

- Grant Application

- Grant Proposal

- Data Analysis Plan

- Latent Transition Analysis

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.



Author information

Authors and affiliations.

L. L. Thurstone Psychometric Laboratory, Department of Psychology, University of North Carolina, Chapel Hill, NC, USA

A. T. Panter


Corresponding author

Correspondence to A. T. Panter .

Editor information

Editors and affiliations.

National Institute of Mental Health, Executive Blvd. 6001, Bethesda, 20892-9641, Maryland, USA

Willo Pequegnat

Ellen Stover

Delafield Place, N.W. 1413, Washington, 20011, District of Columbia, USA

Cheryl Anne Boyce


Copyright information

© 2010 Springer Science+Business Media, LLC

About this chapter

Cite this chapter.

Panter, A.T. (2010). Writing the Data Analysis Plan. In: Pequegnat, W., Stover, E., Boyce, C. (eds) How to Write a Successful Research Grant Application. Springer, Boston, MA. https://doi.org/10.1007/978-1-4419-1454-5_22


DOI : https://doi.org/10.1007/978-1-4419-1454-5_22

Published : 20 August 2010

Publisher Name : Springer, Boston, MA

Print ISBN : 978-1-4419-1453-8

Online ISBN : 978-1-4419-1454-5

eBook Packages : Medicine Medicine (R0)


Data Analysis Plan: Ultimate Guide and Examples


Once you get survey feedback, you might think that the job is done. The next step, however, is to analyze those results. Creating a data analysis plan will help guide you through how to analyze the data and come to logical conclusions.

So, how do you create a data analysis plan? It starts with the goals you set for your survey in the first place. This guide will help you create a data analysis plan that will effectively utilize the data your respondents provided.

What can a data analysis plan do?

Think of data analysis plans as a guide to your organization and analysis, which will help you accomplish your ultimate survey goals. A good plan will make sure that you get answers to your top questions, such as “how do customers feel about this new product?” through specific survey questions. It will also separate respondents to see how opinions among various demographics may differ.

Creating a data analysis plan

Follow these steps to create your own data analysis plan.

Review your goals

When you plan a survey, you typically have specific goals in mind. That might be measuring customer sentiment, answering an academic question, or achieving another purpose.

If you’re beta testing a new product, your survey goal might be “find out how potential customers feel about the new product.” You probably came up with several topics you wanted to address, such as:

- What is the typical experience with the product?

- Which demographics are responding most positively? How well does this match with our idea of the target market?

- Are there any specific pain points that need to be corrected before the product launches?

- Are there any features that should be added before the product launches?

Use these objectives to organize your survey data.

Evaluate the results for your top questions

Your survey questions probably included at least one or two questions that directly relate to your primary goals. For example, in the beta testing example above, your top two questions might be:

- How would you rate your overall satisfaction with the product?

- Would you consider purchasing this product?

Those questions offer a general overview of how your customers feel. Whether their sentiments are generally positive, negative, or neutral, this is the main data your company needs. The next goal is to determine why the beta testers feel the way they do.

Assign questions to specific goals

Next, you’ll organize your survey questions and responses by which research question they answer. For example, you might assign questions to the “overall satisfaction” section, like:

- How would you describe your experience with the product?

- Did you encounter any problems while using the product?

- What were your favorite/least favorite features?

- How useful was the product in achieving your goals?

Under demographics, you’d include responses to questions like:

- Education level

This helps you determine which questions and answers will answer larger questions, such as “which demographics are most likely to have had a positive experience?”
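As a rough illustration of this step, the sketch below keeps the question-to-goal assignment in a plain dictionary and then groups the questions by goal. The variable and function names are hypothetical, and the goal labels simply reuse the examples above; your own survey tool may store this differently.

```python
from collections import defaultdict

# Hypothetical mapping from each survey question to the research goal it informs.
QUESTION_GOALS = {
    "How would you describe your experience with the product?": "overall satisfaction",
    "Did you encounter any problems while using the product?": "overall satisfaction",
    "What were your favorite/least favorite features?": "overall satisfaction",
    "How useful was the product in achieving your goals?": "overall satisfaction",
    "Education level": "demographics",
}


def group_questions_by_goal(question_goals):
    """Invert the mapping so that each goal lists the questions assigned to it."""
    grouped = defaultdict(list)
    for question, goal in question_goals.items():
        grouped[goal].append(question)
    return dict(grouped)


plan = group_questions_by_goal(QUESTION_GOALS)
for goal, questions in plan.items():
    print(f"{goal}: {len(questions)} question(s)")
```

Keeping the mapping in one place makes it easy to spot a goal that has no questions assigned to it before you start the analysis.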

Pay special attention to demographics

Demographics are particularly important to a data analysis plan. Of course you’ll want to know what kind of experience your product testers are having with the product—but you also want to know who your target market should be. Separating responses based on demographics can be especially illuminating.

For example, you might find that users aged 25 to 45 find the product easier to use, but people over 65 find it too difficult. If you want to target the over-65 demographic, you can use that group’s survey data to refine the product before it launches.

Other demographic segmentation can be helpful, too. You might find that your product is popular with people from the tech industry, who have an easier time with a user interface, while those from other industries, like education, struggle to use the tool effectively. If you’re targeting the tech industry, you may not need to make adjustments. But if it’s a technological tool designed primarily for educators, you’ll want to make appropriate changes.

Similarly, factors like location, education level, income bracket, and other demographics can help you compare experiences between the groups. Depending on your ultimate survey goals, you may want to compare multiple demographic types to get accurate insight into your results.
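Here is a minimal sketch of what separating responses by a demographic can look like in practice. The responses are made-up numbers for illustration only, and the 1 to 5 ease-of-use scale and the age brackets are assumptions rather than anything your survey must use.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: (age_group, ease-of-use rating on a 1-5 scale).
responses = [
    ("25-45", 5), ("25-45", 4), ("25-45", 4),
    ("46-64", 4), ("46-64", 3),
    ("65+", 2), ("65+", 1), ("65+", 2),
]


def mean_rating_by_group(rows):
    """Average the rating within each demographic group."""
    groups = defaultdict(list)
    for group, rating in rows:
        groups[group].append(rating)
    return {group: round(mean(ratings), 2) for group, ratings in groups.items()}


summary = mean_rating_by_group(responses)
for group, average in summary.items():
    print(f"{group}: average ease of use {average}")
```

The same function works for any other demographic column, such as industry or location, as long as each row pairs a group label with a numeric rating.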

Consider correlation vs. causation

When creating your data analysis plan, remember to consider the difference between correlation and causation. For instance, being over 65 might correlate with a difficult user experience, but the cause of the experience might be something else entirely. You may find that your respondents over 65 are primarily from a specific educational background, or have issues reading the text in your user interface. It’s important to consider all the different data points, and how they might have an effect on the overall results.
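One simple way to probe this is to split the comparison by a second variable and see whether the gap between groups remains. The sketch below uses invented data in which respondents who have trouble reading the interface text rate the product lower regardless of age. The field names and numbers are hypothetical, and a check like this can suggest an alternative explanation but cannot prove causation on its own.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rows: (age_group, has_trouble_reading_text, ease-of-use rating 1-5).
rows = [
    ("under 65", False, 4), ("under 65", False, 5), ("under 65", False, 4),
    ("under 65", True, 2),
    ("65+", False, 4), ("65+", False, 5),
    ("65+", True, 2), ("65+", True, 2), ("65+", True, 1), ("65+", True, 3),
]


def mean_by(rows, key):
    """Average the rating (third field) within each group defined by key(row)."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row[2])
    return {group: mean(ratings) for group, ratings in groups.items()}


# Comparing by age alone shows a clear gap between the two age groups.
by_age = mean_by(rows, key=lambda r: r[0])

# Comparing by age AND reading difficulty shows the gap mostly disappears.
by_age_and_reading = mean_by(rows, key=lambda r: (r[0], r[1]))

print(by_age)
print(by_age_and_reading)
```

In this made-up data set, the age gap is largely explained by the fact that more respondents over 65 reported trouble reading the text, which points to a design fix rather than an age-specific problem.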

Moving on to analysis

Once you’ve assigned survey questions to the overall research questions they’re designed to answer, you can move on to the actual data analysis. Depending on your survey tool, you may already have software that can perform quantitative and/or qualitative analysis. Choose the analysis types that suit your questions and goals, then use your analytic software to evaluate the data and create graphs or reports with your survey results.

At the end of the process, you should be able to answer your major research questions.

Power your data analysis with Voiceform

Once you have established your survey goals, Voiceform can power your data collection and analysis. Our feature-rich survey platform offers an easy-to-use interface, multi-channel survey tools, multimedia question types, and powerful analytics. We can help you create and work through a data analysis plan. Find out more about the product, and book a free demo today!


- Contemp Clin Trials Commun

- v.34; 2023 Aug

- PMC10300078

A template for the authoring of statistical analysis plans

Gary Stevens

a DynaStat Consulting, Inc., 119 Fairway Court, Bastrop, TX, 78602, USA

Shawn Dolley

b Open Global Health, 710 12th St. South, Suite 2523, Arlington, VA, 22202, USA

c Takeda Pharmaceuticals USA Inc., 95 Hayden Avenue, Lexington, MA, 02421, USA

Jason T. Connor

d ConfluenceStat, 3102 NW 82nd Way, Cooper City, Florida, 33024, USA

e University of Central Florida College of Medicine, 6850 Lake Nona Blvd, Orlando, FL, 32827, USA

Associated Data

All data is contained within the manuscript.

No data was used for the research described in the article.

A number of principal investigators may have limited access to biostatisticians, a lack of biostatistical training, or no requirement to complete a timely statistical analysis plan (SAP). SAPs completed early will identify design or implementation weak points, improve protocols, remove the temptation for p-hacking, and enable proper peer review by stakeholders considering funding the trial. An SAP completed at the same time as the study protocol might be the only comprehensive method for at once optimizing sample size, identifying bias, and applying rigor to study design. This ordered corpus of SAP sections with detailed definitions and a variety of examples represents an omnibus of best practice methods offered by biostatistical practitioners inside and outside of industry. The article presents a protocol template for clinical research design that can support statisticians, from beginners to advanced practitioners.

- Comprehensive template for biostatisticians: best practices, annotations, code samples, and more. Perfect for both early and mature statisticians.

- Promotes equity by bridging the gap for biostatisticians in low-resource settings, ensuring access to tools available in the Global North.

- Benefits of earlier SAP completion alongside the protocol are described.

1. Introduction

1.1. The statistical analysis plan

The Statistical Analysis Plan (SAP) is a key document that complements the study protocol in randomized controlled trials (RCTs). SAPs are a vital component of transparent, objective, rigorous, reproducible research. The SAP “describes the planned analysis of study objectives … it describes what variables and outcomes will be collected and which statistical methods will be used to analyze them [ 1 ]”. National regulatory agencies around the world require SAPs to be submitted when considering drugs, biologics, and devices for approval. The SAP is meant to supplement the protocol and provide richer detail for all prospectively planned statistical analyses. In addition, it defines the population(s) and time point(s) used for each analysis. It defines details such as multiplicity control, sensitivity analyses, methods used to handle missing data, prospectively identified subset analyses, and the specific analyses performed on each subset of interest. For those populations defined, the SAP should provide clear rules for who is included in each analysis population.

The SAP functions as a contract between the study team and the potential consumers of their research [ 2 ]. It identifies analyses described in the protocol and ensures there is sufficient detail so prospectively defined methods can be precisely executed. While there may always be post-hoc/exploratory analysis after data is collected, the SAP is used to identify all primary, secondary, and pre-specified exploratory analyses and the precise methods to be used for those. The situation where further detail is required after unblinding of the data is one to be avoided, as it may allow for the introduction of bias if the investigators, who have now seen the unblinded trial data, must be approached to provide clarity.

One key goal of a well-written SAP is reproducibility. The standard for this reproducibility can be measured by this idealistic exercise: if multiple statisticians have access to (1) the analysis dataset and (2) the SAP, they would all conduct very similar, if not the same, analyses, and ideally produce the same results. While the trial protocol may describe all primary and secondary endpoints and analyses, the SAP is the place where greater technical detail can be provided to the target audience of statisticians and statistical programmers, in order to achieve this high level of reproducibility.

As the vehicle for key findings and solid science, the SAP is a foundational document to reproducible research. Given the data and the SAP, there should be a very limited number of subjective decisions necessary at the time of the analysis. This ought to help the resulting analyses have the highest possible integrity. Any analysis not prospectively defined in the SAP should be clearly noted as post hoc. Likewise, any analysis that differed in any way from the prospectively planned analysis described within the SAP should be noted with the difference and its rationale.

1.2. Create the SAP when creating the trial protocol

The SAP cannot be completed before the protocol is completed. The SAP must be completed before the study is unblinded (in a blinded trial) or the PI or statistical team has access to the accumulating data (in an unblinded trial). For years, conventional wisdom maintained an SAP ought to be finished “after the protocol is finalized and before the blind is broken” [ 2 ]. In recent years, many have adopted a best practice that an SAP ought to be finalized before the first patient is enrolled. In some cases, however, protocols that are finished, funded and frozen without an SAP are not clear and detailed enough to carry out a true prospective analysis. One approach to avoid this is to prepare and complete the SAP in parallel with the protocol. Rather than delay, “a statistical analysis plan should be prepared at the time of protocol development [ 3 ]”.

There are significant benefits to completing the SAP while the protocol is being completed. The protocol clarity needed by the statistician for the SAP can act as a catalyst to unearth design flaws in a protocol that is ‘in development’. This identification of design flaws is secondary to the primary goal of completing an SAP. This secondary effect is a significant benefit to creating the SAP at the time of the protocol. Evans and Ting confirm that secondary uses of an SAP are valid, including describing the SAP as: “a pseudo-contract between the statisticians and other members of the project team” and “… a communication mechanism with regulatory agencies [ 2 ]”. Those who do not complete the SAP concomitantly with the protocol lose a unique opportunity to find study design flaws. Otherwise, those flaws can live in the design until found by the trial implementation team on site or during the data analysis phase. If uncovered during the data analysis phase, it may suddenly dawn on the PI team that the research question cannot be answered with the trial that was implemented. If a trial is implemented to answer a statistically well-defined research question, then “consideration of the statistical methods should underpin all aspects of an RCT, including development of the specific aims and design of the protocol [ 3 ]”.

An additional benefit of co-developing the protocol and SAP is the resulting increase in likelihood the study will end informatively. Deborah Zarin and colleagues created the term “uninformative clinical trials” in 2019 [ 4 ]. Uninformativeness is a type of research inefficiency. “An uninformative trial is one that provides results that are not of meaningful use for a patient, clinician, researcher, or policy maker [ 4 ]”. They describe one potential driver of uninformativeness as when a “study design is pre-specified in a trial protocol, but [the] trial is not conducted, analyzed or reported in manner consistent with [the] protocol [ 4 ]”. There is established evidence of SAPs being at odds with their protocol pair at study publication [ 5 , 6 ]. An obvious method to ensure against this possible disconnect is to finalize the SAP during the development of the study protocol.

1.3. Engage biostatisticians with domain experience

There are unique characteristics and statistics that tend to repeat within specific types of trials. Examples of these specific types of trials include those with particular pathologies (e.g., cancer, malnutrition), interventions (e.g., vaccines, digital health applications), or study designs (e.g., enrichment, cluster randomized, or challenge trials). RCTs touching different domains will need to apply statistical techniques specific to those domains. For example, in the case of human challenge trials, the SAP would need to include a model for estimating infection fatality risk. One team recently created a Bayesian meta-analysis model to estimate infection fatality in COVID-19 human challenge trials including young participants [ 7 ]. In cluster randomized trials, outcomes tend to be more similar among participants within the same cluster than among participants in different clusters. Handling this similarity, referred to as intracluster correlation, adds particular complexities to estimating proper sample sizes [ 8 ]. Employing biostatisticians with experience in creating statistical analysis plans for particular trial types increases the likelihood of success of those trials.
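To illustrate why intracluster correlation complicates sample size estimation, the following minimal sketch applies the standard design effect, 1 + (m − 1) × ICC, where m is the number of participants per cluster. It assumes equal cluster sizes, and the function names and input values are hypothetical; a real SAP would justify the ICC from prior data and may require a more elaborate calculation.

```python
import math


def design_effect(cluster_size, icc):
    """Standard design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must be between 0 and 1")
    return 1 + (cluster_size - 1) * icc


def inflate_sample_size(n_individual, cluster_size, icc):
    """Inflate a sample size computed under individual randomization."""
    inflated = n_individual * design_effect(cluster_size, icc)
    # Round first to guard against floating-point noise before taking the ceiling.
    return math.ceil(round(inflated, 6))


# Assumed inputs: 200 participants needed under individual randomization,
# 21 participants per cluster, and an ICC of 0.05.
n_required = inflate_sample_size(200, cluster_size=21, icc=0.05)
print(n_required)  # 400, because the design effect is 1 + 20 * 0.05 = 2
```

Even a small ICC doubles the required sample size in this example, which is why the assumed ICC and cluster size belong in the SAP alongside the sample size justification.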

1.4. An excellent SAP rewards the principal investigator (PI) and team

There are a number of secondary positive effects of having a solidly constructed and thorough SAP. These include, but are not limited to:

- 1. Regulatory Review. If the PI knows there will be a path to engage regulators for approval, or pivots to that decision later, the quality of the SAP will be paramount. Since one cannot re-write or create the SAP post hoc, the commitment to SAP excellence must happen up front.

- 2. Ethics Committee/Institutional Review Board. Although the SAP is not a core document that an Ethics Committee or Institutional Review Board might use to make key decisions about a study, it might be useful to the members of such groups. In this case, a high-quality SAP will speed approval, while raising the credibility of the PI team.

- 3. Unanticipated Review. If there is some heavy scrutiny of the RCT, either for positive or negative reasons, requests may emerge for the SAP. If that SAP is reviewed more widely and the stakes are high, the PI team and SAP authors would want a complete, high-quality document.

- 4. Future Re-Use. A high-quality SAP can serve as a useful template for future studies. Because it includes a number of endpoints or populations you may use in future studies, regular updates will keep it fresh. This will save the time otherwise spent writing each new SAP from scratch.

- 5. Funding Asset. Showing a thorough and professional SAP enables funders, donors, and sponsors to give grant funding with confidence. As they compare research teams, it is in places like the SAP where the ground truth about capabilities shines through.

- 6. Future Readiness. More funders, donors, and sponsors are realizing the value of early SAPs for informativeness. It is likely that SAPs will increasingly be requested or be requested pre-funding. Excellent SAPs and the discipline it takes to maintain their creation will make it easier when those shifts occur. Readiness for publication will be higher, as more and more high impact journals are requiring Protocols and SAPs as online appendices during publication.

To realize these benefits as well as the primary goal of ensuring transparent, objective, rigorous, reproducible research, one or more biostatisticians must be engaged to create an SAP. While some biostatisticians write and edit SAPs frequently and are ‘living’ in the world of trial design, more of them are not. Those who are new to SAPs or are irregularly writing such documents could benefit from current best practices and refreshers of fundamentals. Fortunately, a number of contemporary peer-reviewed publications have included SAP checklists. These checklists are designed to ensure SAP authors include all necessary and best practice items [ 1 , 2 , 9 ]. In addition to these SAP creation checklists, a recent checklist includes items that a biostatistician might look for when reviewing an SAP on behalf of donors, funders, or sponsors [ 10 ].

2. Materials and methods

The template that follows is organized as an example SAP, with guidance included. While all clinical trials and prospective studies are different, this document describes sections that should or may be desirable to produce a document that will guide objective analyses at the project's conclusion. Not all sections mentioned here will be necessary for all trials or studies and some may be omitted. Furthermore, there may be unique aspects of a study not contained herein that may be required for studies using novel methodology or having uncommon characteristics. The key is that the SAP must contain detailed descriptions of all study populations, endpoints, and preplanned analyses to maximize study integrity and eliminate or limit the subjectivity required in the study's analysis phase. The SAP template herein includes examples from a number of different trials and trial types. This was necessary to provide a wider breadth of examples, and for examples with richer content.

No human participants were included nor involved in this work. As no humans nor animals were involved, there was no opportunity for informed consent, and no ethical approval was sought.

Too often, an SAP contains large chunks of text copied and pasted from the protocol. In some places, e.g., inclusion/exclusion criteria, this may be appropriate. Otherwise, the SAP should be viewed as a place to add detail. Therefore, when copying from the protocol, consider whether technical details can or should be added to guide the statistical team at the time of analysis.


Descriptive text defining the aims or providing descriptive detail for each section is found in italics; it is meant to guide the SAP authors and is not meant for inclusion in an SAP. Bold underlined text is example text meant to be replaced by authors with their protocol-specific text. Other black text is meant as example description and may be kept entirely, edited as necessary, or replaced in its entirety.

4. Statistical analysis plan

5. Statistical analysis plan approval signature page

6. SAP revision history

Each time the SAP is given a new version number/the version is incremented, add here a date of the new version, the name of the primary author of the changes, a summary list of changes made, the reasons for those revisions, and any other information that seems suitable to record. Add to each row or entry the estimated number of weeks prior to the first interim analysis the revision was made.

SAP revisions may be aligned with specific protocol revisions as well. Each version of the SAP should reference the latest version of the protocol to which it is aligned.

7. SAP roles

SAP Primary Author: ____________________ (This is the name of the person directly writing most of the document.)

Senior Statistician: _______________________ (This is the name of the person who is the most organizationally senior who would actually read and sign off on and be accountable for the correct approaches being included.)

SAP Contributor ([Role]): _________________ (This is someone on the team who contributed to the SAP but did not do most of the writing nor are they the senior statistician.)

List of abbreviations

This list typically follows the terms from the protocol that are used in the SAP, plus additional terms that are specific to the SAP and statistical in nature, e.g., MMRM = Mixed Model Repeated Measures. The list below is offered as an example; if you want to use it, please remove terms not used in the protocol and replace them with your own terms.

Example wording to explain the purpose of this SAP. This should lay out the background, rationale, hypotheses, and objectives of the study and may be similar to the intro to the protocol.

The primary objective of this study is to assess the efficacy and safety of Product Name or Healthcare intervention strategy in the treatment of disease name in target population .

This document outlines the statistical methods to be implemented during the analyses of data collected within the scope of Trial Group 's Protocol Number titled “ Protocol Title ”.

This Statistical Analysis Plan (SAP) was prepared in accordance with the Protocol, Protocol Number , dated add date . (Original protocol version & date from which first SAP was created) .

This SAP was modified to be in accordance with the protocol revision(s) Protocol Number dated Date modified . (Protocol revisions which necessitated updates to SAP) .

The purpose of this Statistical Analysis Plan (SAP) is to provide a framework in which answers to the protocol objectives may be achieved in a statistically rigorous fashion, without bias or analytical deficiencies, following methods identified prior to database lock. Specifically, this plan has the following purposes:

- To prospectively outline the specific types of analyses and presentations of data that will form the basis for conclusions.

- To explain in detail how the data will be handled and analyzed, adhering to commonly accepted standards and practices of biostatistical analysis. Any deviations from these guidelines must be substantiated by sound statistical reasoning and documented in writing in the final clinical study report (CSR).

Because the SAP is easier to update than the protocol, there may be situations where the SAP is updated later and deviates from the statistical methods described in the protocol. A summary statement should be included to cover this situation, indicating that the SAP takes precedence when the protocol and SAP differ:

The analyses described in this analysis plan are consistent with the analyses described in the study protocol. The order may be changed for clarity. If there are discrepancies between the protocol and SAP, the SAP will serve as the definitive analysis plan.

Any analysis performed not prospectively defined in this document will be labeled as post hoc & exploratory.

If a substantive change occurs after the final protocol version it may be included such as:

During the course of data collection, while randomization assignment was blinded, it became evident the primary outcome was heavily skewed right. Therefore, while the protocol cites a regression model for the primary outcome with unity link, the SAP is updated to include a log transformation followed by the same regression model.
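A minimal sketch of the kind of blinded check that could motivate such a change is shown below: it compares the skewness of pooled outcome values before and after a natural log transformation. The data are invented, the `skewness` helper is hypothetical, and the log transformation assumes strictly positive outcomes; the actual rule for changing the analysis should be stated in the SAP itself.

```python
import math
from statistics import mean, pstdev


def skewness(values):
    """Population skewness: the mean of the cubed standardized deviations."""
    mu = mean(values)
    sigma = pstdev(values)
    return mean(((v - mu) / sigma) ** 3 for v in values)


# Hypothetical pooled (blinded) outcome values with a long right tail.
raw = [1, 2, 2, 3, 3, 4, 5, 8, 20, 60]

# The log transformation requires strictly positive values.
logged = [math.log(v) for v in raw]

skew_raw = skewness(raw)
skew_log = skewness(logged)
print(f"skewness before log: {skew_raw:.2f}")
print(f"skewness after log:  {skew_log:.2f}")
```

Because the check uses pooled data without reference to treatment assignment, it can be run while the randomization remains blinded.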

2. Overview & Objectives of Study Design

This is used to give a brief synopsis of the study design. Typically, this can be from the synopsis in the protocol. Should include study design, dose, phase, and patient population. Authors should confirm that each objective is aligned with one or more study endpoints.

This example is for an oncology product.

This is a multicenter, double-blind, randomized study with a phase 2 portion and a phase 3 portion. Approximately X patients will be enrolled in this study. The phase 2 portion will be open label with all patients receiving study drug at one of two doses.

In Phase 2, only patients with advanced or metastatic NSCLC after failing standard therapy will be enrolled.

In Phase 3, patients with one of the following conditions will be enrolled:

- 1) advanced or metastatic breast cancer, who have failed ≥1 but <5 prior lines of chemotherapy; advanced or metastatic NSCLC after failing drug xxx-based therapy; or

- 2) hormone refractory (androgen independent) metastatic prostate cancer.

The eligibility of all patients will be determined during a 28-day screening period.

Approximately X patients with advanced and metastatic NSCLC will be enrolled. Patients are randomly assigned, with xx patients enrolled in each arm, with the arm designation and planned intervention as follows:

- Arm 1: Arm 1 Description, Dosing strategy

- Arm 2: Arm 2 Description, Dosing strategy

The study will be temporarily closed to enrollment when Z patients have been enrolled and completed at least 1 treatment cycle in each arm in phase 2. The Sponsor will notify the study sites when this occurs.

Once the study is temporarily closed to enrollment in phase 2, a PK/PD analysis will be performed to determine the RP3D. The PK/PD analysis will be done by an independent party (may define 3rd party here) at the time 40 patients in Phase 2 have completed at least Cycle 1. This analysis will be blinded to the study team.

Remember all newly introduced abbreviations used above (e.g., RP3D and PK/PD) need to be in the list of abbreviations.

Phase 3 will not begin until RP3D has been determined based on the phase 2 PK/PD analysis as mentioned above. The dose chosen as the RP3D will constitute one arm and active control the other.

Approximately YYY patients are planned to be enrolled in Phase 3 with one of the following diagnoses: Put in conditions for enrollment; this is in the protocol as inclusion/exclusion criteria and should match.

Patients will be randomly assigned with equal probability (1:1 ratio), with the arm designation and planned intervention as follows:

- Arm 1: Describe Arm 1, e.g., the RP3D identified in Phase 2.

- Arm 2: Describe Arm 2, e.g., the standard of care/control

For multi-stage seamless trials, it is necessary to define whether data from the initial stage will be combined with data from the following stages, and if so, how.

Data from all patients receiving the RP3D DRUG A dose in Phase 2 and Phase 3 will not be pooled for assessing the primary and secondary study endpoints. Phase 2 is for dose selection. Phase 3 will serve as independent validation and comparison of the chosen dose to active control. Therefore Phase 3 data will be analyzed separately. The primary results will be calculated only from patients enrolled in Phase 3 that have concurrent controls.

Rescue Treatment or other treatments or procedures:

This is usually detailed in the protocol and those details should be presented here if appropriate.

Section 14 of this SAP has further details regarding the schedule of events.

2.1. Phase 2 objectives

Objectives should be stated here and should match the objectives in the protocol.

Primary objective:

- • To establish the Recommended Phase 3 Dose (RP3D) based on PK/PD analysis.

Primary efficacy pharmacodynamic objective:

- • To assess DSN in treatment Cycle 1 in patients treated with Dose 1 or with Dose 2. Neutrophil count will be assessed at baseline; pre-dose during Cycle 1, Days 1, 2, 5, 6, 7, 8, 9, 10, 15.

Primary Safety Pharmacodynamic objective:

- • To assess blood pressure semi-continuously with 15-min intervals, starting 15 min pre-dose and lasting 6 h after start of infusion with drug xxx or drug yyy.

Secondary objectives:

- • To characterize the pharmacokinetic profile of Dose 1 and Dose 2

- • To characterize the exposure-response relationships between measures of drug xxx exposure and pharmacodynamic endpoints of interest (e.g., duration of severe neutropenia [DSN]).

- • To characterize the exposure-safety relationships between measures of drug xxx exposure and safety events of interest.

Exploratory objectives:

- • To assess CD34+ at baseline, Days 2, 5, and 8 in Cycle 1 and Day 1 in Cycle 2

- • Quality of Life as assessed by EORTC QLQ-C30 and EQ-5D-5L

- • Disease Progression

Safety objectives:

- • Incidence, occurrence, and severity of AEs/SAEs

- • Incidence, occurrence, and severity of bone pain

- • Systemic tolerance (physical examination and safety laboratory assessments)

2.2. Phase 3 objectives

- • To assess DSN in treatment Cycle 1 in patients with advanced or metastatic breast cancer, who have failed ≥1 but <5 prior lines of chemotherapy; advanced or metastatic non-small cell lung cancer (NSCLC) after failing DRUG-based therapy. Neutrophil count will be assessed at baseline; pre-dose during Cycle 1, Days 1, 2, 5, 6, 7, 8, 9, 10, 15.

- − Incidence of Grade 4 neutropenia (ANC <0.5 × 10⁹/L) on Days 8 and 15 in Cycles 1 to 4

- − Incidence of FN (ANC <0.5 × 10⁹/L and body temperature ≥38.3 °C) in Cycles 1 to 4

- − Neutrophil nadir during Cycle 1

- − Incidence of documented infections in Cycles 1 to 4

- − Incidence and duration of hospitalizations due to FN in Cycles 1 to 4

- − Health-related Quality of Life (QoL) questionnaire evaluated with European Organization for Research and Treatment of Cancer (EORTC) QLQ-C30 and EQ-5D-5L

- − Use of pegfilgrastim or filgrastim as treatment for neutropenia

- − Incidence of antibiotic use

- − Incidence of docetaxel dose delay, dose reduction, and/or dose discontinuation
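
The threshold-based endpoints in the list above (Grade 4 neutropenia and FN) can be derived mechanically from lab and vitals records. The following is a minimal sketch rather than a validated derivation: the `Assessment` record, its field names, and the `had_event` helper are hypothetical, and it assumes that ANC and temperature are recorded together at the same assessment.

```python
from dataclasses import dataclass

GRADE4_ANC_THRESHOLD = 0.5   # x10^9/L, threshold quoted in the endpoint list
FN_TEMP_THRESHOLD_C = 38.3   # degrees C, threshold quoted in the endpoint list


@dataclass
class Assessment:
    """One hypothetical lab/vitals record (field names are illustrative)."""
    cycle: int
    day: int
    anc: float      # absolute neutrophil count, x10^9/L
    temp_c: float   # body temperature, degrees C


def is_grade4_neutropenia(a: Assessment) -> bool:
    return a.anc < GRADE4_ANC_THRESHOLD


def is_febrile_neutropenia(a: Assessment) -> bool:
    # Assumption: both criteria must hold at the same assessment.
    return a.anc < GRADE4_ANC_THRESHOLD and a.temp_c >= FN_TEMP_THRESHOLD_C


def had_event(assessments, predicate, cycles=range(1, 5), days=None) -> bool:
    """True if any assessment in the given cycles (and days, if set) meets the predicate."""
    for a in assessments:
        if a.cycle not in cycles:
            continue
        if days is not None and a.day not in days:
            continue
        if predicate(a):
            return True
    return False


records = [
    Assessment(cycle=1, day=8, anc=0.4, temp_c=37.0),
    Assessment(cycle=1, day=15, anc=1.2, temp_c=38.5),
    Assessment(cycle=2, day=8, anc=0.3, temp_c=38.3),
]
grade4_d8_d15 = had_event(records, is_grade4_neutropenia, days={8, 15})
fn_any = had_event(records, is_febrile_neutropenia)
```

Writing the thresholds as named constants keeps the derivation traceable to the endpoint definitions, which helps when the SAP and the protocol are cross-checked.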

Safety objectives.

3. Sample Size Justification

This section should contain the complete details for the justification of the sample size. This should include all details and assumptions necessary so the sample size could be independently replicated based upon provided information.

Many organizations, agencies, and funders are increasingly encouraging the use of simulation for power calculations, even for designs that may have closed-form solutions for sample size calculations. Simulation enables various sensitivity analyses, such as sensitivity to violated assumptions, to missing data, etc. If simulation was used to calculate the sample size/power, then a reference should be made to archived simulation code. If manageable, the code could be included in a later portion of the SAP or provided elsewhere, e.g., by reference to GitHub.

Note here as well that Phase 2 uses a convenience sample; state this explicitly, along with the fact that no hypotheses are being tested.

Phase 2 Sample Size Justification.

In the Phase 2 portion of this study, 30 patients with advanced or metastatic NSCLC will be enrolled. This portion is not powered to test any statistical hypotheses; the sample size is standard for this type of study and is intended to support the PK/PD analysis. No formal hypotheses are being tested in the Phase 2 portion of this study.

Phase 3 Sample Size Justification.

Approximately 150 patients are planned to be enrolled with 1 of the following diagnoses: advanced or metastatic breast cancer, NSCLC, or HRPC. A sample size of 75 patients in each treatment arm (DRUG A versus Standard of Care, with matching placebos) achieves at least 90% power to reject the null hypothesis of 0.65 day of inferiority in DSN between the treatment means, assuming standard deviations of 0.75, using a two-sided, two-sample zero-inflated Poisson model at a significance level (alpha) of 0.05. Simulation code to confirm the power for N = 150 patients is contained in a later section.

Another example that uses simulation.

Negative binomial regression is used to test whether the intervention decreases the need for medical services in the following six months. Assuming mean medical service utilization will decrease from 3 to 1.5, with SD = 1.25 × the mean for each group, 67 patients per group (134 total) offers 90% power at the two-sided α = 0.05 level.
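
A simulation of this kind might look like the sketch below. It is illustrative only and is not the code behind the figures quoted above: it draws negative binomial counts with the stated means and SD-to-mean ratio, but for speed it replaces the fitted negative binomial regression with a simpler Wald test on the log ratio of group means (delta-method standard error). The function names, seed, and number of simulated trials are assumptions.

```python
from statistics import NormalDist

import numpy as np


def nb_size_and_prob(mean, sd_ratio):
    """Map (mean, SD = sd_ratio * mean) to numpy's negative binomial (n, p)."""
    variance = (sd_ratio * mean) ** 2
    if variance <= mean:
        raise ValueError("negative binomial needs variance > mean")
    size = mean ** 2 / (variance - mean)   # dispersion parameter
    prob = size / (size + mean)            # numpy's success probability
    return size, prob


def simulate_power(mean_ctrl, mean_trt, sd_ratio, n_per_group,
                   alpha=0.05, n_sims=5000, seed=12345):
    """Share of simulated trials in which the two-sided Wald test rejects."""
    rng = np.random.default_rng(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    log_means, var_log_means = [], []
    for mean in (mean_ctrl, mean_trt):
        size, prob = nb_size_and_prob(mean, sd_ratio)
        counts = rng.negative_binomial(size, prob, size=(n_sims, n_per_group))
        m = counts.mean(axis=1)
        s2 = counts.var(axis=1, ddof=1)
        with np.errstate(divide="ignore", invalid="ignore"):
            log_means.append(np.log(m))
            # Delta method: Var(log(sample mean)) is roughly s^2 / (n * mean^2)
            var_log_means.append(s2 / (n_per_group * m ** 2))
    with np.errstate(divide="ignore", invalid="ignore"):
        z = (log_means[0] - log_means[1]) / np.sqrt(
            var_log_means[0] + var_log_means[1])
        # A NaN statistic (a group with all-zero counts) counts as no rejection.
        reject = np.abs(z) > z_crit
    return float(reject.mean())


power = simulate_power(mean_ctrl=3.0, mean_trt=1.5, sd_ratio=1.25, n_per_group=67)
print(f"Estimated power: {power:.3f}")
```

Re-running `simulate_power` with a different `sd_ratio`, a smaller effect, or a reduced `n_per_group` (to mimic dropout) gives the kind of sensitivity analysis that simulation makes easy. Because the test statistic differs from the regression model named in the SAP, the estimate should be read as a rough cross-check, not a replication.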

4. Randomization, stratification, blinding, and Replacement of Patients

Here, a description of the randomization procedures for the study is needed. If the study is open label, state so. If it is randomized, stratified, or uses any other type of grouping, that needs to be made clear. Also, address whether the randomization is fixed or dynamic, as from a central IVRS/IWRS system.

This section should also contain specific information if patients are stratified, and if so how, and details on whether block randomization was performed, along with block size.

Also, if patients withdraw after consent and confirmed eligibility but before randomization, this section may contain information on whether such patients may be replaced.

Patients will be identified by a patient number assigned at the time of informed consent.

4.1. Treatment assignment

Patients will be stratified based on their diagnosis. Strata 1 and 2 are:

Patients will be randomized using IVRS/IWRS to 1 of the following treatment groups:

Phase 2 (10 patients in each arm):

Arm 1 : Describe treatment arm.

Arm 2: Describe treatment arm.

No blocking is used in Phase 2.

Phase 3 (75 patients enrolled in each arm):

Arm 1: Describe Arm.

Arm 2: Describe Arm.

Random blocks of 4 or 6 are used within each stratum in Phase 3.
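
To make the blocking scheme concrete, here is a minimal sketch of permuted-block randomization with randomly chosen block sizes of 4 or 6 and a separate schedule per stratum. It is an illustration under assumptions, not the IVRS/IWRS algorithm: the function names, stratum labels, seed, and per-stratum schedule length are all hypothetical.

```python
import random


def permuted_block_schedule(n_patients, arms=("Arm 1", "Arm 2"),
                            block_sizes=(4, 6), seed=None):
    """Build a 1:1 allocation list from randomly sized, balanced blocks."""
    rng = random.Random(seed)
    schedule = []
    while len(schedule) < n_patients:
        size = rng.choice(block_sizes)              # block size drawn at random
        block = list(arms) * (size // len(arms))    # equal count of each arm
        rng.shuffle(block)                          # random order within the block
        schedule.extend(block)
    # The final block may be cut short, so exact balance is not guaranteed.
    return schedule[:n_patients]


def stratified_schedules(strata, n_per_stratum, seed=2024):
    """Generate an independent schedule for each stratum."""
    master = random.Random(seed)
    return {
        stratum: permuted_block_schedule(n_per_stratum,
                                         seed=master.randrange(2 ** 32))
        for stratum in strata
    }


schedules = stratified_schedules(["Stratum 1", "Stratum 2"], n_per_stratum=75)
for stratum, allocation in schedules.items():
    print(stratum, allocation.count("Arm 1"), allocation.count("Arm 2"))
```

Varying the block size makes the next assignment harder to predict than a fixed block would, while still keeping the arms close to balanced within each stratum.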

4.2. Rescue treatment/other treatments/dose escalation/other related procedures

Describe here any other treatments/procedures that may be administered to the patient that result from efficacy/lack of efficacy or safety issues.

For example, for a pain trial.

A patient will be considered censored if they require opioids for rescue treatment. The patient will continue to be followed for safety outcomes, but efficacy assessments will terminate and will be counted as missing from that point forward. Such patients will not be replaced in the trial.
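
The rescue rule in this example can be sketched as a small data-handling step. The code below assumes a hypothetical layout in which each efficacy assessment is a `(study_day, score)` pair, and it interprets "from that point forward" as the rescue day itself and all later days; both are assumptions that a real SAP would need to state explicitly.

```python
from typing import List, Optional, Tuple

EfficacyRecord = Tuple[int, Optional[float]]  # (study_day, pain score or None)


def censor_after_rescue(assessments: List[EfficacyRecord],
                        rescue_day: Optional[int]) -> List[EfficacyRecord]:
    """Set efficacy values to missing (None) on and after the rescue day."""
    if rescue_day is None:
        # No rescue medication was taken, so nothing is censored.
        return list(assessments)
    return [
        (day, score if day < rescue_day else None)
        for day, score in assessments
    ]


pain_scores = [(1, 6.0), (3, 5.0), (7, 7.5), (14, 4.0)]
censored = censor_after_rescue(pain_scores, rescue_day=7)
```

Only the efficacy values are blanked; the records themselves are kept, which matches the statement that the patient continues to be followed for safety outcomes.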

5. Definitions of Patient Populations to be analyzed

This section should clearly define all potential populations to be used in the data analyses. Each data analysis should then reference the population(s) to be used.

5.1. Analysis Sets of Phase 2

5.1.1. Intent-to-treat analysis set (ITT)

The intent-to-treat analysis set for Phase 2 comprises all Phase 2 patients who have been randomized.

The analysis of all endpoints, unless noted otherwise, will be conducted on the intent-to-treat analysis set.

5.1.2. Safety analysis set

The safety analysis set will include all patients who receive one or more doses of study drug.

5.1.3. Per Protocol Analysis Set (PP)

Patients who qualify for the ITT population who complete the study period without a major protocol deviation will be included in the PP analysis. Expected major protocol deviations include:

Also include any other circumstances which would preclude a study subject from inclusion in the per protocol population. Examples include:

- 1. Rescue therapy requiring opioids or other protocol prohibited medications

- 2. Primary outcome measures out of window

- 3. Subjects who did not meet all inclusion/exclusion criteria
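
One way to check that the Phase 2 analysis-set definitions above are unambiguous is to write them as explicit flags. The sketch below is an illustration only: the `Patient` record and its field names are hypothetical, and the list of major deviations is assumed to have been adjudicated elsewhere.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Patient:
    """Hypothetical per-patient summary used to derive analysis-set flags."""
    patient_id: str
    randomized: bool
    doses_received: int
    completed_study: bool
    major_deviations: Tuple[str, ...] = ()


def in_itt(p: Patient) -> bool:
    # ITT: all randomized patients.
    return p.randomized


def in_safety(p: Patient) -> bool:
    # Safety: one or more doses of study drug received.
    return p.doses_received >= 1


def in_per_protocol(p: Patient) -> bool:
    # PP: in ITT, completed the study period, no major protocol deviation.
    return in_itt(p) and p.completed_study and not p.major_deviations


patients = [
    Patient("001", randomized=True, doses_received=4, completed_study=True),
    Patient("002", randomized=True, doses_received=0, completed_study=False),
    Patient("003", randomized=True, doses_received=4, completed_study=True,
            major_deviations=("rescue opioid use",)),
]
flags = {
    p.patient_id: (in_itt(p), in_safety(p), in_per_protocol(p))
    for p in patients
}
```

If two reviewers would code `in_per_protocol` differently from the same SAP text, the definition likely needs more detail.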

5.1.4. Interim Analysis Set

If the design calls for an interim analysis, define which population will be used. Usually this can denote the ITT or PP analysis sets described above. Sometimes it may include a unique set.

The definition should also state how the set is capped, e.g., ‘first 30 patients enrolled who reach their 3-month endpoint’, or ‘first 30 patients to reach their 3-month endpoint.’

The interim analysis will use the intent-to-treat population but include only patients who have complete primary endpoint data at the time of the interim analysis.

A sensitivity analysis will be performed using the per protocol population, including only subjects with complete primary endpoint data, at the time of the analysis, and presented to the DMC.
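
The two capping rules quoted in this section can select different patients, which is why the SAP should say which one applies. The sketch below shows one possible reading of each rule: the first orders eligible patients by enrollment date, the second by the date the endpoint was reached. The `Enrollee` record, function names, dates, and cutoff are hypothetical.

```python
from datetime import date
from typing import NamedTuple, Optional


class Enrollee(NamedTuple):
    patient_id: str
    enrolled: date
    endpoint_reached: Optional[date]   # None if the endpoint is not yet reached


def _with_endpoint(patients, cutoff):
    return [p for p in patients
            if p.endpoint_reached is not None and p.endpoint_reached <= cutoff]


def first_n_enrolled_with_endpoint(patients, n, cutoff):
    """Reading 1: earliest-enrolled patients among those with endpoint data."""
    eligible = sorted(_with_endpoint(patients, cutoff), key=lambda p: p.enrolled)
    return [p.patient_id for p in eligible[:n]]


def first_n_to_reach_endpoint(patients, n, cutoff):
    """Reading 2: patients whose endpoint date came earliest."""
    eligible = sorted(_with_endpoint(patients, cutoff),
                      key=lambda p: p.endpoint_reached)
    return [p.patient_id for p in eligible[:n]]


cohort = [
    Enrollee("P1", date(2024, 1, 1), date(2024, 4, 20)),   # late 3-month visit
    Enrollee("P2", date(2024, 1, 5), date(2024, 4, 6)),
    Enrollee("P3", date(2024, 1, 10), date(2024, 4, 10)),
    Enrollee("P4", date(2024, 1, 12), None),
]
cutoff = date(2024, 5, 1)
by_enrollment = first_n_enrolled_with_endpoint(cohort, n=2, cutoff=cutoff)
by_endpoint = first_n_to_reach_endpoint(cohort, n=2, cutoff=cutoff)
```

In this toy cohort the first reading selects P1 and P2, while the second selects P2 and P3, because P1 enrolled first but reached the endpoint last.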

5.1.5. Pharmacokinetic Analysis Set

Describe the conditions of the patient population utilized in the PK Set.

All subjects who received at least 1 dose of any study drug and had at least 1 PK sample collected will be included in the PK analysis set. These subjects will be evaluated for PK unless significant protocol deviations affect the data analysis or if key dosing, dosing interruption, or sampling information is missing. For phase 3, PK samples may be collected with a schedule of collection based on the emerging data from Phase 2. Population pharmacokinetic modeling will be utilized to analyze the PK data, and optimal sampling approaches will be used to determine the PK time points for Phase 3.

5.1.6. Pharmacodynamic Analysis Set

Describe the conditions of the patient population utilized in any PD Set.

All patients who had blood pressure and DSN collected at any time during the study will be included in the PD analysis set. For phase 3, PD data may be collected with a collection schedule to be confirmed based on emerging data. Exploratory PK/PD and exposure-response analyses will be conducted to evaluate the effects of DRUG A on safety and efficacy endpoints. Details of these analyses will be summarized in the statistical analysis plan and may be reported outside of the main clinical study report.

5.2. Analysis Sets of Phase 3

5.2.1. Intent-to-treat analysis set

The intent-to-treat analysis set for Phase 3 comprises all Phase 3 patients who have been randomized.

5.2.2. Safety analysis set

The safety analysis set will be the same as the intent-to-treat analysis set for Phase 3.

5.2.3. Per Protocol Set

Also include any other circumstances which would preclude a study subject from inclusion in the per protocol population. Again, there should be sufficient detail in the SAP so that multiple people reviewing the data and the SAP would make the same judgement on who is and is not eligible for the PP set.

5.2.4. Modified intent-to-treat analysis set

Some studies include a modified intent-to-treat analysis which is a minor modification to the ITT analysis.

The modified intent-to-treat analysis set for Phase 3 comprises all Phase 3 patients who have been randomized in the study and have received at least one dose of study medication.

5.2.5. Interim Analysis Set

6. Endpoints

This section describes the primary, key secondary (if any), secondary, and exploratory endpoints and any safety endpoints that are tracked.

This section can be used to provide greater detail, description, or references for endpoints. For example, if the primary endpoint is a composite endpoint (e.g., MACE events), the set of conditions that define the presence or absence of the endpoint should be included. The primary endpoint section below provides an example.

Furthermore, this is the section where you would provide detail on how to calculate derived endpoints, e.g., if a set of survey questions were used to provide a composite score (e.g. HAQ-DI). The key secondary endpoint section provides an example.

Again here, authors should confirm that each objective is aligned with one or more study endpoints.

6.1. Primary endpoint

The primary endpoint is the time to first Major Adverse Cardiac Event (MACE). MACE events include the presence of any one or more of the following:

- 1) Non-fatal stroke

- 2) Non-fatal myocardial infarction