Artwork: Serial and parallel processing: Top: In serial processing, a problem is tackled one step at a time by a single processor. It doesn't matter how fast different parts of the computer are (such as the input/output or memory), the job still gets done at the speed of the central processor in the middle. Bottom: In parallel processing, problems are broken up into components, each of which is handled by a separate processor. Since the processors are working in parallel, the problem is usually tackled more quickly even if the processors work at the same speed as the one in a serial system.

Chart: Who has the most supercomputers? About three quarters of the world's 500 most powerful machines can be found in just five countries: China (32.4%), the USA (25.4%), Germany (6.8%), Japan (6.2%), and France (4.8%). For each country, the series of bars shows its total number of supercomputers in 2017, 2018, 2020, 2021, and 2022. The block on the right of each series, with a bold number in red above, shows the current figure for November 2022. Although China has the most machines by far, the aggregate performance of the US machines is significantly higher (almost half of the world's total supercomputer performance, according to TOP500's analysis). Drawn in March 2023 using the latest data from TOP500, November 2022.

Photo: Supercomputer cluster: NASA's Pleiades ICE Supercomputer is a cluster of 241,108 cores made from 158 racks of Silicon Graphics (SGI) workstations. That means it can easily be extended to make a more powerful machine: it's now about 15 times more powerful than when it was first built over a decade ago. As of March 2023, it's the world's 97th most powerful machine (compared to 2021, when it was 70th, and 2020, when it stood at number 40). Picture by Dominic Hart courtesy of NASA Ames Research Center.

Photo: A Cray-2 supercomputer (left), photographed at NASA in 1989, with its own personal Fluorinert cooling tower (right). State of the art in the mid-1980s, this particular machine could perform a half-billion calculations per second. Picture courtesy of NASA Langley Research Center and Internet Archive.

Photo: Supercomputers can help us crack the most complex scientific problems, including modeling Earth's climate. Picture courtesy of NASA on the Commons.

Photo: Summit, based at Oak Ridge National Laboratory. At the time of writing in March 2023, it's the world's fifth most powerful machine (and the second fastest in the United States) with some 2,414,592 processor cores. Picture courtesy of Oak Ridge National Laboratory, US Department of Energy, published on Flickr in 2018 under a Creative Commons Licence.

Text copyright © Chris Woodford 2012, 2023. All rights reserved. Full copyright notice and terms of use.

Fluorinert and 3M are trademarks of 3M.

Argonne National Laboratory

Science 101: supercomputing.

There are computers. And then there are super computers.

Most personal and work computers are powerful enough to perform tasks like doing homework and conducting business. You can even increase the power of certain components for an awesome gaming experience.

But it’s pretty unlikely that you’ll solve riddles about the universe or understand the inner workings of a complex virus. Those are big problems that both need and produce a lot of data. Managing all of that information requires the power of supercomputers.

Supercomputers are often used to simulate experiments that might be too costly, dangerous, or even impossible to conduct in real life. For example, researchers use simulations to understand how stars explode or how fuel is injected inside an engine.

A supercomputer consists of thousands of small computers called nodes. Each node is equipped with its own memory and a number of processors, the bits that do all the figuring out.

Newer supercomputers use a combination of central processing units (CPUs), like the kind that operate most home computers, and accelerator chips related to graphics processing units (GPUs). In gaming, GPUs help quickly create the visuals; in supercomputing, they specialize in fast calculations for data processing or artificial intelligence workloads.

Another key to a powerful supercomputing system is an incredibly fast network, the communications hub that connects all of those mini computers. So now, instead of working as separate units, they can act as one, managing millions of tasks to tackle complex problems quickly.

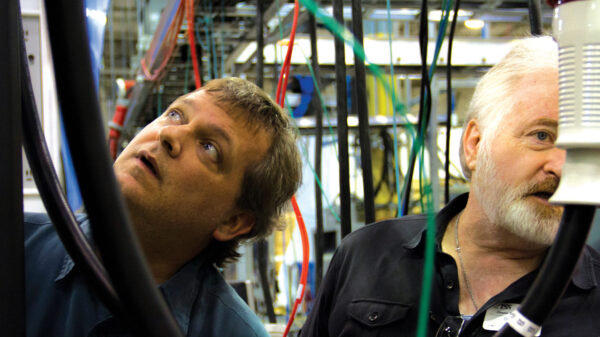

But it’s not just the technological marvels that make supercomputers special. An enormous amount of expertise and infrastructure is required to operate these massive machines and make them available to scientists, who tap into supercomputing power remotely to run their computational experiments.

System administrators ensure supercomputer hardware is functional and software is up to date. In-house computational scientists work with supercomputer users from across the world to help ensure their codes run smoothly and their simulations help advance their scientific explorations. Technical support experts are on standby to help users troubleshoot any issues they encounter with their work at a supercomputing facility.

The world’s most powerful supercomputers, like those run by the U.S. Department of Energy, require specialized facilities, known as data centers, to accommodate their space, energy, and cooling requirements. For example, Argonne National Laboratory’s next supercomputer, Aurora, will occupy the area of two NBA basketball courts, consume the same amount of energy as thousands of homes, and be cooled by a complex system that contains 44,000 gallons of chilled water.

As home to the Argonne Leadership Computing Facility, a DOE Office of Science user facility, the laboratory has a long history of building and using supercomputers to tackle some of the world’s most pressing problems. And Argonne continues to push the limits of technology and discovery. Aurora will help scientists advance our understanding of everything from Earth’s changing climate to improved solar cell materials to the structure of the human brain.

Solving big, complex problems takes a lot of smart people and big, amazing machines. Argonne’s supercomputers are up for the challenge!

What is a supercomputer?

A complicated machine helping scientists make huge discoveries

Imagine one million laptops calculating together in perfect harmony and you’re getting close to the power of a supercomputer. Researchers need all that computing muscle to answer some of the world’s biggest questions in human health, climate change, energy and even the origins of the universe! When you want to study something that’s impossible to explore in a lab — like an exploding star or a fast-forming hurricane — your computer has to be super.

What is a Supercomputer? Features, Importance, and Examples

A supercomputer processes data at lightning speeds, measured in floating-point operations per second (FLOPS).

A supercomputer is defined as an extremely powerful computing device that processes data at speeds measured in floating-point operations per second (FLOPS) to perform complex calculations and simulations, usually in the field of research, artificial intelligence, and big data computing. This article discusses the features, importance, and examples of supercomputers and their use in research and development.

Supercomputers are built to deliver the highest computing performance available at any given time. The primary distinction between supercomputers and basic computing systems is processing power.

A supercomputer can perform computations at 100 PFLOPS, whereas a standard general-purpose computer is limited to processing speeds ranging from scores of gigaflops to tens of teraflops. Supercomputers also consume huge amounts of energy and consequently produce so much heat that they must be kept in cooled environments.
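
The size of that gap is easy to check with a little arithmetic. The Python sketch below divides the 100 PFLOPS figure by the two ends of the general-purpose range; reading "scores of gigaflops" as 20 gigaflops and "tens of teraflops" as 10 teraflops is an illustrative assumption, not a measured figure.

```python
# Rough speed comparison using the figures quoted above.
# The PC endpoints are illustrative assumptions, not measurements.
PETA = 10**15
TERA = 10**12
GIGA = 10**9

supercomputer_flops = 100 * PETA  # 100 PFLOPS

pc_low_flops = 20 * GIGA    # assumed reading of "scores of gigaflops"
pc_high_flops = 10 * TERA   # assumed reading of "tens of teraflops"


def speedup(fast: float, slow: float) -> float:
    """How many times more floating-point operations per second `fast` delivers."""
    return fast / slow


if __name__ == "__main__":
    print(f"vs. slower PC: {speedup(supercomputer_flops, pc_low_flops):,.0f}x")
    print(f"vs. faster PC: {speedup(supercomputer_flops, pc_high_flops):,.0f}x")
```

Under these assumptions the supercomputer comes out somewhere between ten thousand and five million times faster, depending on which end of the PC range you pick.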

Evolution of supercomputers

In the early 1960s, IBM introduced the IBM 7030 Stretch and Sperry Rand introduced the UNIVAC LARC, the first two supercomputers intentionally built to be significantly more powerful than the fastest business machines of the time. Their development was shaped by the U.S. government, which, from the late 1950s, had consistently supported research into state-of-the-art, high-performance computer technology for defense purposes.

Although only a small number of supercomputers were initially manufactured for government use, the technology eventually entered the commercial and industrial sectors. From the mid-1960s until the late 1970s, Control Data Corporation (CDC) and Cray Research dominated the commercial supercomputer sector; the CDC 6600, designed by Seymour Cray, is regarded as the first commercially viable supercomputer. IBM went on to become a market leader from the 1990s onwards.

How do supercomputers work?

Supercomputer architectures consist of many central processing units (CPUs), organized into clusters of compute nodes and memory storage. A supercomputer may link many nodes together to solve problems via parallel processing.

The biggest and most powerful supercomputers consist of multiple concurrent processors that perform parallel processing. There are two main parallel processing approaches: symmetric multiprocessing and massively parallel processing. In other instances, supercomputers are distributed, meaning they draw power from many PCs in several locations instead of housing all their CPUs in a single place.

Supercomputer performance is measured in floating-point operations per second (FLOPS), whereas earlier systems were generally measured in instructions per second (IPS). The higher this value, the more powerful the supercomputer.

Unlike conventional computers, supercomputers have many CPUs. These CPUs are organized into compute nodes, each with a processor or group of processors (symmetric multiprocessing, or SMP) and a memory block. At scale, a supercomputer may comprise a large number of nodes, which work together on a particular problem by communicating over interconnect networks.
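
The node-and-interconnect arrangement described above is often used for data decomposition: split the data, let each node work on its own slice in local memory, then combine the partial results. The Python sketch below imitates that pattern on a single machine. Threads stand in for compute nodes and a final sum stands in for communication over the interconnect; real systems typically use a message-passing library such as MPI instead, and the function names here are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data: list[float], n_nodes: int) -> list[list[float]]:
    """Divide the data as evenly as possible, one chunk per simulated node."""
    size, extra = divmod(len(data), n_nodes)
    chunks, start = [], 0
    for i in range(n_nodes):
        # The first `extra` nodes take one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def node_work(local_chunk: list[float]) -> float:
    """Each simulated node works only on the data held in its own memory block."""
    return sum(x * x for x in local_chunk)


def parallel_sum_of_squares(data: list[float], n_nodes: int = 4) -> float:
    chunks = split_into_chunks(data, n_nodes)
    # Threads stand in for nodes; a real cluster would exchange results over an interconnect.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partial_results = list(pool.map(node_work, chunks))
    # "Reduce" step: combine the partial results into one answer.
    return sum(partial_results)


if __name__ == "__main__":
    print(parallel_sum_of_squares([1.0, 2.0, 3.0, 4.0, 5.0], n_nodes=2))
```

The split-compute-combine shape is the point here; on a real machine the combine step is where the speed of the interconnect starts to matter.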

Because current supercomputers consume so much power, the data centers that house them need cooling systems and adequate facilities to accommodate all of this equipment.

Types of supercomputers

Supercomputers may be divided into the following classes and types:

- Tightly connected clusters: These are groups of interconnected computers that collaborate on a shared problem. There are four approaches to connecting the computers in a cluster, which result in four cluster types: two-node clusters, multi-node clusters, director-based clusters, and massively parallel clusters.

- Supercomputers with vector processors: A vector processor can operate on a full array of data items at once instead of working on each item individually. This offers a form of parallelism in which all elements of the array are processed simultaneously, and such processors can themselves be arranged in arrays to process many data items at the same time.

- Special-purpose computers: These are intended for a particular function and can’t be used for anything else. They are meant to address a specific problem. These systems devote their attention and resources to resolving the given challenge. The IBM Deep Blue chess-playing supercomputer is an example of a supercomputer developed for a specific task.

- Commodity supercomputers: These consist of standard (common) personal computers linked by high-bandwidth, fast local area networks (LANs). These computers then use parallel computing, working together to complete a single task.

- Virtual supercomputers: A virtual supercomputer runs in the cloud. It offers a highly efficient computing platform by combining many virtual machines running on processors in a cloud data center.

Standard supercomputer features include the following:

1. High-speed operations, measured in FLOPS

Every second, supercomputers perform quadrillions of computations. Their performance metric is floating-point operations per second (FLOPS), which measures the number of floating-point calculations a processor can perform each second. Because the vast majority of supercomputers are used primarily for scientific research, which relies on precise floating-point arithmetic, FLOPS is the preferred metric for evaluating them. The performance of the fastest supercomputers is measured in exaFLOPS.
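
To make the unit concrete, the Python sketch below counts the floating-point operations in a naive matrix multiplication (for two n × n matrices the conventional figure is 2n³: n³ multiplications and n³ additions) and divides by the measured run time. It is only an illustration: interpreted Python reaches a tiny fraction of what the hardware can do, whereas official rankings such as the TOP500 list rely on the heavily optimized LINPACK (HPL) benchmark. The function names are hypothetical.

```python
import time


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive n x n matrix multiplication: n multiplies and n adds per output entry."""
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            s = 0.0
            for k in range(n):
                s += a[i][k] * b[k][j]
            c[i][j] = s
    return c


def matmul_flop_count(n: int) -> int:
    # n**3 multiplications plus n**3 additions.
    return 2 * n**3


def measured_flops(n: int = 100) -> float:
    """Time one multiplication and return floating-point operations per second."""
    a = [[1.0] * n for _ in range(n)]
    b = [[1.0] * n for _ in range(n)]
    start = time.perf_counter()
    matmul(a, b)
    elapsed = time.perf_counter() - start
    return matmul_flop_count(n) / elapsed


if __name__ == "__main__":
    print(f"{measured_flops():.3e} FLOPS (pure Python, far below hardware peak)")
```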

2. An extremely powerful main memory

Supercomputers are distinguished by their sizable main memory. The system comprises many nodes, each with its own memory, and together these can amount to several petabytes of RAM. Frontier, the world's fastest computer, contains roughly 9.2 petabytes of memory, and other supercomputers also have considerable RAM capacity.

3. The use of parallel processing and Linux operating systems

Parallel processing is a method in which many processors work concurrently on a specific computation. Each processor is responsible for a portion of the computation so that the problem is solved as quickly as possible. In addition, most supercomputers use modified versions of the Linux operating system. Linux-based operating systems are used because they are freely available, open-source software that can be modified and tuned for performance.

4. Problem resolution with a high degree of accuracy

Given the vast volume of data being processed at high speed, there is always a possibility that a computer may produce inaccurate results at some point. Supercomputers are designed to keep their calculations accurate and their outputs reliable, and their faster, more precise simulations let them tackle problems effectively. They can work through many iterations of a problem in a fraction of a second and can generate those iterations themselves, which makes them well suited to demanding numerical and logical problems.

Today, the world is increasingly dependent on supercomputers for the following reasons:

1. Supporting artificial intelligence (AI) research initiatives

Artificial intelligence (AI) systems often demand the efficiency and processing power of a supercomputer. Developing machine learning and AI applications consumes massive volumes of data, which supercomputers can manage.

Some supercomputers are designed with artificial intelligence in mind. Microsoft, for instance, custom-built a supercomputer for training huge AI models on its Azure cloud platform. The objective is to deliver supercomputing resources to programmers, data analysts, and business customers via Microsoft Azure’s AI services. One such tool is Microsoft’s Turing Natural Language Generation, a large natural language generation model. Perlmutter, a system at the National Energy Research Scientific Computing Center (NERSC) built with Nvidia GPUs, is another example of a supercomputer designed with AI workloads in mind.

2. Simulating mathematical problems to invest in the right direction

Because supercomputers can calculate and predict particle interactions, they have become an indispensable tool for researchers. In a way, interactions are occurring everywhere. This includes the weather, the formation of stars, and the interaction of human cells with drugs.

A supercomputer can simulate all of these interactions. Scientists may then use the data to gain valuable insights, such as whether it will snow tomorrow, whether a new scientific hypothesis holds up, or whether a prospective cancer therapy is viable. The same technology may also enable enterprises to examine radical innovations and decide which ones merit real-world verification or testing.

3. Using parallel processing to solve complex problems

Decades ago, supercomputers began using a technique known as “massively parallel processing,” in which problems were divided into sections and worked on concurrently by thousands of processors instead of the “serial” approach.

It is comparable to arriving at the register with a full shopping cart and dividing the items among several friends. Each friend goes to a separate checkout and pays for a few of the products. After everyone has paid, they reunite, reload the cart, and leave the store. The more items and friends there are, the greater the benefit of processing in parallel.
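
The analogy leaves out one detail: splitting the cart and regrouping at the exit happen only once, no matter how many friends help, so adding friends eventually stops paying off. Amdahl's law, a standard model that the original text does not mention, captures this effect. The Python sketch below assumes, purely for illustration, that 90 percent of the shopping trip can be shared out.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: overall speedup when only part of a job can be shared out.

    parallel_fraction: share of the work that can be split (0.0 to 1.0),
    e.g. scanning items at separate checkouts. The remainder (splitting the
    cart, regrouping, reloading) stays serial however many workers help.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Assumed example: 90% of the trip is parallelizable.
    for n in (1, 2, 4, 16, 1000):
        print(n, round(amdahl_speedup(0.9, n), 2))
```

With 90 percent of the work parallelizable, the speedup can never exceed 1 / (1 - 0.9) = 10, however many checkouts are open; supercomputer programmers work hard to shrink that serial part.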

4. Predicting the future with an increasing level of accuracy

Large-scale weather forecast models and the computers that run them have steadily improved over the past three decades, resulting in more precise and reliable predictions of hurricane paths. Supercomputers have contributed to these advances in forecasting when, where, and how severe storms may occur. The same ideas can also be extended to other kinds of events, including historical ones.

Is it surprising that supercomputers are being prepared and trained to anticipate wars, uprisings, and other societal disruptions in this era of big data?

Kalev Leetaru, a Yahoo Fellow-in-Residence at Georgetown University, Washington, D.C., has accumulated a library of over one hundred million articles from media sources throughout the globe, spanning thirty years, with each story translated and categorized for geographical region and tone. Leetaru processed the data using the shared-memory supercomputer Nautilus, establishing a network of 10 billion objects linked by 100 trillion semantic links.

This three-decade worldwide news repository was part of the Culturomics 2.0 project, which forecast large-scale human behavior by analyzing the tone of global news media by time period and location.

5. Identifying cyber threats at lightning speed

Identifying cybersecurity risks in raw internet data can be like searching for a needle in a haystack. For instance, the quantity of web traffic data created in 48 hours is simply too large for a single laptop, or even 100 computers, to convert into a form human analysts can comprehend. For this reason, cybersecurity analysts rely on sampling to identify potential threats.

Supercomputing may offer a better solution. In a recently published study titled “Hyperscaling Internet Graph Analysis with D4M on the MIT SuperCloud,” a supercomputer compressed 96 hours of raw internet traffic data from a 1-gigabit network link into a query-ready bundle. It constructed this bundle using 30,000 computing cores (roughly equivalent to 1,000 personal computers).
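
The D4M tool named in that study works with associative arrays, and its real interface is not reproduced here. As a rough, hypothetical sketch of the general idea, the Python code below splits raw connection records among workers, lets each build a sparse count of who talked to whom, and merges the partial tables into one summary that can be queried. The record format and function names are assumptions for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical traffic record: (source address, destination address).
Record = tuple[str, str]


def count_links(records: list[Record]) -> Counter:
    """Build a sparse 'who talked to whom' table for one slice of traffic."""
    return Counter(records)


def build_traffic_graph(records: list[Record], workers: int = 4) -> Counter:
    # Deal records round-robin to workers (threads stand in for compute cores).
    slices = [records[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_links, slices))
    # Merge the partial tables into one query-ready summary.
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total


if __name__ == "__main__":
    records = [("10.0.0.1", "10.0.0.2")] * 3 + [("10.0.0.3", "10.0.0.2")]
    graph = build_traffic_graph(records)
    print(graph.most_common(1))
```

Once merged, the summary answers questions such as "which source-destination pairs are busiest?" without rereading the raw records, which is the kind of query-ready form the study describes.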

6. Powering scientific breakthroughs across industries

Throughout its history, supercomputing has been important because it has enabled major advances in vital areas of national security and scientific discovery, and has helped resolve societally significant problems.

Currently, supercomputing is utilized to solve complex issues in stockpile management, military intelligence, meteorological prediction, seismic modeling, transportation, manufacturing, community safety and health, and practically every other field of fundamental scientific study. The significance of supercomputing in these fields is growing, and supercomputing is showing an ever-increasing influence on future advancements.

Examples of Supercomputers

Now that we have discussed the meaning of supercomputers and how the technology works, let us look at a few real-world examples. Here are the most notable examples of supercomputers you need to know:

1. AI Research SuperCluster (RSC) by Facebook parent Meta

Facebook’s parent company, Meta, said in January 2022 that it was building a supercomputer expected to be among the world’s most powerful, to increase its data processing capability. Meta said the system could process videos and images up to 20 times faster than its existing systems. Meta RSC is expected to help the company develop new AI systems that could, for instance, enable real-time speech translation for large groups of people who speak different languages.

2. Google Sycamore, a supercomputer using quantum processing

Google AI Quantum created the quantum computer Google Sycamore. The Sycamore chip is based on superconducting qubits, a type of quantum computing that uses superconducting materials and electric currents to store and manipulate information. Using the 54-qubit Sycamore chip, Google reported performing in 200 seconds a computation that it estimated would take the world’s fastest classical supercomputer 10,000 years to finish.

3. Summit, a supercomputer by IBM

Summit, or OLCF-4, is a 200 petaFLOPS-capable supercomputer designed by IBM for deployment at the Oak Ridge Leadership Computing Facility (OLCF). As of November 2019, the supercomputer’s estimated power efficiency of 14.668 gigaFLOPS/watt ranked it as the fifth most energy-efficient in the world. The Summit supercomputer allows scientists and researchers to address challenging problems in energy, intelligent systems, human health, and other fields of study. It has been used in modeling earthquakes, material science, genetics, and the forecasting of neutrino lifetimes in physics.

4. Microsoft’s cloud supercomputer for OpenAI

Microsoft has built one of the world’s top five publicly reported supercomputers, making new OpenAI technology available on Azure. It will help train massive artificial intelligence models, a critical step toward establishing a platform on which other organizations and developers can innovate. The OpenAI supercomputer is a single system with around 285,000 CPU cores, 10,000 GPUs, and 400 gigabits per second of network bandwidth for each GPU server.

5. Fugaku by Fujitsu

Fujitsu installed Fugaku at the RIKEN Center for Computational Science (R-CCS) in Kobe, Japan. With upgraded hardware, the system set a new world record of 442 petaflops. Its mission is to address the world’s most pressing problems, with a particular emphasis on climate change. One of Fugaku’s most significant challenges is accurately predicting global warming based on carbon dioxide emissions, and its effects on populations worldwide.

6. Lonestar6 by the Texas Advanced Computing Center (TACC) at the University of Texas

Lonestar6 is rated at three petaFLOPS, meaning it can perform about three quadrillion calculations per second. According to TACC, to replicate what Lonestar6 can calculate in one second, a person would have to perform one calculation every second for 100 million years. It is a hybrid system comprising air-cooled and liquid (oil) immersion-cooled components, in which more than 800 Dell EMC PowerEdge C6525 servers function as a single HPC system. Lonestar6 supports University of Texas Research Cyberinfrastructure initiatives such as COVID-19 research and drug development, hurricane modeling, wind energy, and research on dark energy.
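
TACC's comparison is easy to check. The Python sketch below assumes exactly 3 × 10¹⁵ calculations per second for the machine and one hand calculation per second for the person; the answer comes out at roughly 95 million years, in line with the 100 million years quoted above.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year, about 3.16e7 seconds


def years_by_hand(flops: float, calcs_per_second_by_hand: float = 1.0) -> float:
    """Years a person needs to match one second of machine work,
    doing `calcs_per_second_by_hand` calculations every second, nonstop."""
    return flops / calcs_per_second_by_hand / SECONDS_PER_YEAR


lonestar6_flops = 3e15  # about three petaFLOPS (assumed exact for this estimate)
print(f"{years_by_hand(lonestar6_flops):.2e} years")
```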

7. Qian Shi, Baidu’s quantum supercomputer

In 2022, Baidu, Inc. unveiled Qian Shi, its first superconducting quantum computer, which combines hardware, software, algorithms, and applications. On top of this infrastructure sit several quantum applications, including quantum algorithms used to design new materials for next-generation lithium batteries and to simulate protein folding. Qian Shi offers the general public a ten-qubit quantum computing service that is both secure and substantial.

8. Virtual supercomputing by AWS

In 2011, Amazon built a virtual supercomputer on top of its Elastic Compute Cloud (EC2), a web service that creates virtual computers on demand. This virtual supercomputer was faster than all but 41 of the world’s physical supercomputers at the time. EC2 by Amazon Web Services (AWS) can compete with supercomputers built from standard microprocessors and commodity hardware components.

Supercomputers have evolved by leaps and bounds from the costly and bulky systems of the past. For example, HPE revealed a new supercomputer at Supercomputing 2022 (SC22) that is not only powerful but also energy efficient. The rapid proliferation of data also means that supercomputers now have more information to ingest and can create better models and simulations. Eventually, organizations and individuals will be able to use hardware and cloud-based resources to build bespoke supercomputing setups.

What Is a Supercomputer and How Does It Work?

A supercomputer is a high-performance computer. It is a powerful, highly accurate machine known for processing massive data sets and complex calculations at rapid speeds.

What makes a supercomputer “super” is its ability to interlink multiple processors within one system. This allows it to split up a task and distribute it in parts, then execute the parts of the task concurrently, in a method known as parallel processing.

Supercomputer Definition

Supercomputers are high-performance systems that solve complex computations. They split tasks into multiple parts and work on them in parallel, as if many computers were acting as one collective machine.

“You have to use parallel computing to really take advantage of the power of the supercomputer,” Caitlin Joann Ross, a research and development engineer at Kitware who studied extreme-scale systems during her residency at Argonne Leadership Computing Facility, told Built In. “There are certain computations that might take weeks or months to run on your laptop, but if you can parallelize it efficiently to run on a supercomputer, it might only take a day.”

To put that speed in perspective, a person might solve an equation with pen and paper in one second. In that same timespan, today’s fastest supercomputer can execute a quintillion calculations. That’s a one followed by eighteen zeros.

Soumya Bhattacharya, system administrator at the OU Supercomputing Center for Education and Research, explained it like this: “Imagine one-quintillion people standing back-to-face and adding two numbers all at the same time, each second,” he said. “This line would be so long that it could make a million round trips from our Earth to the sun.”
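
Both figures hold up under rough arithmetic. A quintillion is 10¹⁸, a one followed by eighteen zeros. The Python sketch below assumes each person takes up about 0.3 meters of the line (an illustrative guess, not from the source) and uses the mean Earth-Sun distance of about 1.496 × 10¹¹ meters; the result is on the order of a million round trips.

```python
QUINTILLION = 10**18            # calculations per second at exascale
METERS_PER_PERSON = 0.3         # assumed depth of one person standing in line
EARTH_SUN_METERS = 1.496e11     # mean Earth-Sun distance (about 1 AU)

line_length = QUINTILLION * METERS_PER_PERSON   # total length of the line, in meters
round_trip = 2 * EARTH_SUN_METERS                # Earth to sun and back
round_trips = line_length / round_trip

print(f"{round_trips:,.0f} round trips")
```

Changing the assumed spacing scales the answer proportionally, so the "million round trips" figure implies roughly 0.3 meters per person.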

Originally developed for nuclear weapon design and code-cracking, supercomputers are now used by scientists and engineers to run simulations that help predict climate change and the weather, explore cosmological evolution, and discover new chemical compounds for pharmaceuticals.

How Do Supercomputers Work?

Unlike our everyday devices, supercomputers can perform many operations at once, in parallel, thanks to a multitude of built-in processors. This high level of performance is measured in floating-point operations per second (FLOPS), a unit that indicates how many floating-point arithmetic operations a supercomputer can complete each second.

How it works: an operation is split into smaller parts, where each piece is sent to a CPU to solve. These multi-core processors are located within a node, alongside a memory block. In collaboration, these individual units — as many as tens of thousands — communicate through inter-node channels called interconnects to enable concurrent computation. Interconnects also interact with I/O systems, which manage disk storage and networking.

How’s that different from regular old computers? Picture this: On your home computer, once you strike the ‘return’ key on a search engine query, that information is input into the computer’s system, stored, then processed to produce an output value. In other words, one task is solved at a time. This process works well for everyday applications, such as sending a text message or mapping a route via GPS. But for more data-intensive projects, like calculating a missile’s ballistic trajectory or cryptanalysis, researchers rely on more sophisticated systems that can execute many tasks at once. Hence supercomputers.

What Are Supercomputers Used For?

Supercomputing’s chief contribution to science has been its ability to simulate reality. This capability helps humans make better performance predictions and design better products in fields from manufacturing and oil to pharmaceutical and military. Jack Dongarra, a Turing Award recipient and emeritus professor at the University of Tennessee, likened that ability to having a crystal ball.

“Say I want to understand what happens when two galaxies collide,” Dongarra said. “I can’t really do that experiment. I can’t take two galaxies and collide them — so I have to build a model and run it on a computer.”

Back in the day, when testing new car models, companies would literally crash them into a wall to better understand how they withstand certain thresholds of impact, an expensive and time-consuming trial, he noted.

“Today, we don’t do that very often,” Dongarra said. “[Now] we build a computer model with all the physics [calculations] and crash it into a simulated wall to understand where the weak points are.”

This concept carries over into various use cases and fields of study enlisting the help of high-performance computing.

Weather Forecasting and Climate Research

When you feed a supercomputer numerical modeling data (gathered via satellites, buoys, radar and weather balloons), field experts become better informed about how atmospheric conditions affect us, and they become better equipped to advise the public on weather-related topics, like whether you should bring a jacket and what to do in the event of a thunderstorm.

Derecho, a petascale supercomputer, is being used to explore the effects of solar geoengineering, a method that would theoretically cool the planet by reflecting sunlight away from Earth, and how releasing aerosols influences rainfall patterns.

Genomic Sequencing

Genomic sequencing, a type of molecular modeling, is a technique scientists use to get a closer look at a virus’s DNA sequence. This helps them diagnose diseases, develop tailor-made treatments and track viral mutations. Originally, this time-intensive process took a team of researchers 13 years to complete. But with the help of supercomputers, complete DNA sequencing now takes a matter of hours. Most recently, researchers at Stanford University earned the Guinness World Records title for the fastest genomic sequencing technique, using a “mega-machine” method that runs a single patient’s genome across all 48 flow cells simultaneously.

Aviation Engineering

Supercomputing systems in aviation have been used to detect solar flares, predict turbulence and approximate aeroelasticity (how aerodynamic loads affect a plane) to build better aircraft. In fact, Frontier, the world’s fastest supercomputer to date, has been recruited by GE Aerospace to test an open fan engine architecture designed for the next generation of commercial aircraft, which could help reduce carbon-dioxide emissions by more than 20 percent.

Space Exploration

Supercomputers can take the massive amounts of data collected by a variety of sensor-laden devices (satellites, probes, robots and telescopes) and use it to simulate outer space conditions here on Earth. These machines can create artificial environments that match patches of the universe and, with advanced generative algorithms, even reproduce them.

Over at NASA, a petascale supercomputer named Aitken, the latest addition to the Ames Research Center, is used to create high-resolution simulations in preparation for the upcoming Artemis missions, which aim to establish a long-term human presence on the moon. A better understanding of how aerodynamic loads will affect the launch vehicle, mobile launcher, tower structure and flame trench reduces risk and creates safer conditions.

Nuclear Fusion Research

Two of the world’s highest-performing supercomputers, Frontier and Summit, will be running simulations to predict energy loss and optimize performance in plasma. The project, led by scientists at General Atomics, the Oak Ridge National Laboratory and the University of California, San Diego, aims to help develop next-generation technology for fusion energy reactors. Emulating the energy-generating processes of the sun, nuclear fusion is a candidate in the search for abundant, long-term energy sources free of carbon emissions and long-lived radioactive waste.

How Fast Is a Supercomputer?

Today’s highest-performing supercomputers can complete simulations that would take a personal computer 500 years to run, according to the Partnership for Advanced Computing in Europe.

Fastest Supercomputers in the World

The following supercomputers are ranked by TOP500, a project co-founded by Dongarra that ranks the fastest non-distributed computer systems based on their ability to solve a set of linear equations using a dense random matrix. It uses the LINPACK Benchmark, which estimates how fast a computer is likely to run one program or many.
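
To make the idea of a LINPACK-style measurement concrete, here is a minimal Python sketch, assuming NumPy is installed. It times one dense solve of a random system and converts the time to GFLOPS using the conventional LINPACK operation count. The function name `linpack_style_run` is hypothetical, and a single NumPy call is only a stand-in for the real, distributed HPL code that TOP500 systems run.

```python
import time

import numpy as np


def linpack_style_run(n: int = 1000, seed: int = 0) -> tuple[float, float]:
    """Solve a dense random system A x = b and return (GFLOPS, scaled residual).

    Illustration only (hypothetical helper): real HPL runs a distributed,
    blocked LU factorization tuned for each machine, not one NumPy call.
    """
    rng = np.random.default_rng(seed)
    a = rng.random((n, n))  # dense random matrix, as in LINPACK
    b = rng.random(n)

    start = time.perf_counter()
    x = np.linalg.solve(a, b)  # LU factorization with partial pivoting (LAPACK gesv)
    elapsed = time.perf_counter() - start

    # Conventional LINPACK operation count; the n**3 term dominates for large n.
    flops = (2.0 / 3.0) * n**3 + 2.0 * n**2
    gflops = flops / elapsed / 1e9

    # HPL-style scaled residual: a run only counts if the answer is accurate.
    eps = np.finfo(np.float64).eps
    residual = np.linalg.norm(a @ x - b, np.inf) / (
        eps * (np.linalg.norm(a, np.inf) * np.linalg.norm(x, np.inf) + np.linalg.norm(b, np.inf)) * n
    )
    return gflops, float(residual)


if __name__ == "__main__":
    gflops, residual = linpack_style_run()
    print(f"about {gflops:.1f} GFLOPS, scaled residual {residual:.3f}")
```

The residual check matters as much as the speed. A fast but wrong answer would not be accepted as a valid benchmark result.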

1. Frontier

Operating out of Oak Ridge National Lab in Tennessee, Frontier is the world’s first recorded supercomputer to break the exascale barrier, sustaining computational power of 1.1 exaFLOPS. In other words, it can perform more than a quintillion calculations per second. Built from 74 HPE Cray EX supercomputing cabinets, each weighing nearly 8,000 pounds, it is more powerful than the next seven supercomputers combined. According to the laboratory, it would take the entire planet’s population more than four years to solve what Frontier can solve in one second.
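
The laboratory's "more than four years" comparison can be checked with quick arithmetic. This sketch relies on our own assumptions rather than ORNL's: roughly 8 billion people, each performing one calculation per second without a break.

```python
# Back-of-the-envelope check of the "more than four years" comparison.
# Assumptions (ours, not the lab's): ~8 billion people, one calculation
# per person per second, nonstop.
FRONTIER_FLOPS = 1.1e18          # sustained 1.1 exaFLOPS
WORLD_POPULATION = 8e9           # assumed
CALCS_PER_PERSON_PER_SECOND = 1  # assumed
SECONDS_PER_YEAR = 365.25 * 24 * 3600

seconds_needed = FRONTIER_FLOPS / (WORLD_POPULATION * CALCS_PER_PERSON_PER_SECOND)
years_needed = seconds_needed / SECONDS_PER_YEAR
print(f"{years_needed:.2f} years")  # 4.36 years
```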

2. Fugaku

Fugaku debuted at 416 petaFLOPS, a performance that won it the world title for two consecutive years, and following an upgrade in 2020 it has since peaked at 442 petaFLOPS. It is built from 158,976 nodes, each powered by a Fujitsu A64FX processor. The petascale computer is named after an alternative name for Mount Fuji and is located at the Riken Center for Computational Science in Kobe, Japan.

3. Lumi

A consortium of 10 European countries banded together to build Lumi, Europe’s fastest supercomputer. This 1,600-square-foot, 165-ton machine has a sustained computing power of 375 petaFLOPS and a peak performance of 550 petaFLOPS, a capacity comparable to 1.5 million laptops. It is also one of the most energy-efficient models to date. Located at CSC’s data center in Kajaani, Finland, Lumi is kept cool by natural climate conditions. It also runs entirely on carbon-free hydroelectric energy, and its waste heat supplies 20 percent of the surrounding district’s heating.
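
The two Lumi figures above imply a couple of simple ratios: how much of its theoretical peak the machine sustains, and how fast each "laptop" in the comparison would have to be. This is a sketch under one assumption of ours, namely that the laptop comparison is made against peak performance.

```python
SUSTAINED_PFLOPS = 375  # sustained performance quoted for Lumi
PEAK_PFLOPS = 550       # quoted peak performance
LAPTOPS = 1.5e6         # "comparable to 1.5 million laptops"

efficiency = SUSTAINED_PFLOPS / PEAK_PFLOPS
# Assumption: the laptop comparison is made against peak performance.
gflops_per_laptop = PEAK_PFLOPS * 1e15 / LAPTOPS / 1e9

print(f"sustained/peak: {efficiency:.0%}")                      # 68%
print(f"implied laptop speed: {gflops_per_laptop:.0f} GFLOPS")  # 367 GFLOPS
```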

4. Leonardo

Leonardo is a petascale supercomputer hosted by the CINECA data center in Bologna, Italy. The 2,000-square-foot system is split into three modules (the booster, data-centric, and front-end and service modules), which run on Atos BullSequana XH2000 hardware with more than 13,800 Nvidia Ampere GPUs. At peak performance, processing speeds hit 250 petaFLOPS.

5. Summit

Summit was the world’s fastest computer when it debuted in 2018, and it has a top speed of 200 petaFLOPS. The United States Department of Energy sponsored the project, built by IBM, with a $325 million contract. The 9,300-square-foot machine has been used for AI, materials science and genomics research, as well as to simulate earthquakes and extreme weather conditions and to predict the lifespan of neutrinos. Like Frontier, Summit is hosted by the Oak Ridge National Laboratory in Tennessee.

Difference Between General-Purpose Computers and Supercomputers

Processing power is the main difference between supercomputers and your average, everyday MacBook Pro. This comes down to architecture: a supercomputer can contain tens of thousands of CPUs, while a general-purpose computer typically has just one.

In terms of speed, the typical performance of an everyday device ranges from about one gigaFLOPS to tens of teraFLOPS (one billion to tens of trillions of calculations per second). That pales in comparison to today’s 100-petaFLOPS machines, which can perform 100 quadrillion calculations per second.
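
The prefixes in these comparisons (giga, tera, peta, exa) each step up by a factor of 1,000. This hypothetical `format_flops` helper is a small sketch that turns a raw count of calculations per second into the matching prefix. It makes clear that 100 petaFLOPS means 10^17 calculations per second.

```python
# Hypothetical helper: express a FLOPS figure with the SI prefixes used in this article.
PREFIXES = [
    (1e21, "zetta"),
    (1e18, "exa"),
    (1e15, "peta"),
    (1e12, "tera"),
    (1e9, "giga"),
    (1e6, "mega"),
]


def format_flops(flops: float) -> str:
    """Return a string such as '100 petaFLOPS' for 1e17 calculations per second."""
    for scale, prefix in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:g} {prefix}FLOPS"
    return f"{flops:g} FLOPS"


for value in (1e9, 10e12, 100e15, 1.1e18):
    print(f"{value:.0e} calculations per second = {format_flops(value)}")
```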

The other big difference is size. A laptop slips easily into a tote bag, but supercomputers weigh tons and occupy thousands of square feet. They generate so much heat (which, in some cases, is repurposed to heat local towns) that they require a built-in cooling system to function properly.

Supercomputers and Artificial Intelligence

Supercomputers can train AI models faster while processing larger, more detailed data sets, as in recent climate science research.

AI can also lighten a supercomputer's workload, because it relies on lower-precision calculations whose results are then cross-checked for accuracy. And because AI relies heavily on algorithms that learn from examples, over time it lets the data do the programming.

Paired together, AI techniques and supercomputers have boosted the number of calculations per second achieved by today’s fastest supercomputer by a factor of six.

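This pairing has given rise to a new standard for measuring performance, the HPL-MxP benchmark. It solves the same kind of dense linear system as LINPACK, but it does most of the work in the low-precision arithmetic common in AI and then refines the answer back to full double-precision accuracy.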
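
The core trick behind a mixed-precision benchmark like HPL-MxP is iterative refinement. You solve cheaply in low precision, measure the error in high precision, and correct. This is a simplified NumPy sketch of that idea; `mixed_precision_solve` is a hypothetical name. It uses 32-bit instead of the 16-bit formats common on GPUs, and it re-solves rather than reusing one factorization, so it is not the benchmark's actual code.

```python
import numpy as np


def mixed_precision_solve(a: np.ndarray, b: np.ndarray, iterations: int = 5) -> np.ndarray:
    """Solve a x = b with float32 solves plus float64 iterative refinement.

    Simplified sketch of the idea behind HPL-MxP (hypothetical helper). A tuned
    code would factor the matrix once in low precision and reuse the factors;
    here np.linalg.solve re-factors the float32 copy on every call.
    """
    a32 = a.astype(np.float32)
    # Initial answer computed entirely in single precision.
    x = np.linalg.solve(a32, b.astype(np.float32)).astype(np.float64)
    for _ in range(iterations):
        r = b - a @ x  # residual measured in double precision
        # Cheap single-precision correction, applied in double precision.
        dx = np.linalg.solve(a32, r.astype(np.float32))
        x += dx.astype(np.float64)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 200
    a = rng.random((n, n)) + n * np.eye(n)  # diagonally dominant, hence well-conditioned
    b = rng.random(n)
    x_ref = np.linalg.solve(a, b)  # plain float64 solve for comparison
    x_low = np.linalg.solve(a.astype(np.float32), b.astype(np.float32))
    x_mixed = mixed_precision_solve(a, b)
    print("float32 only:", np.max(np.abs(x_low - x_ref)) / np.max(np.abs(x_ref)))
    print("refined:     ", np.max(np.abs(x_mixed - x_ref)) / np.max(np.abs(x_ref)))
```

Refinement only converges when the matrix is reasonably well-conditioned. That is why the demo uses a diagonally dominant matrix.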

Dongarra thinks supercomputers will shape the future of AI, though exactly how that will happen isn’t entirely foreseeable.

“To some extent, the computers that are being developed today will be used for applications that need artificial intelligence, deep learning and neuro-networking computations,” Dongarra said. “It’s going to be a tool that aids scientists in understanding and solving some of the most challenging problems we have.”

History of Supercomputers

While the term “super computing” was coined in 1929 by the now-defunct New York World newspaper to describe large IBM tabulators at Columbia University that could read 150 punched cards per minute, the world’s first supercomputer, the CDC 6600, didn’t arrive until 1964.

Although computers of this era had only one central processor, the CDC 6600 outperformed its peers, including the previous leader, IBM’s 7030 Stretch, by a factor of three, which is exactly what made it so “super.” Designed by Seymour Cray, the CDC 6600 could complete three million calculations per second. Built with 400,000 transistors, more than 100 miles of hand wiring and a Freon cooling system, it was about the size of four file cabinets and sold for about $8 million, roughly $78 million today.

Cray’s vector supercomputers dominated until the 1990s, when a new approach, known as massively parallel processing, took over. These systems ushered in the modern era of supercomputing, in which many processors work on different parts of the same problem at the same time.
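
The pattern at the heart of parallel processing is to split a problem into independent chunks, hand each chunk to a worker, then combine the partial results. This Python sketch shows that decomposition with hypothetical function names. It uses threads from `concurrent.futures` for portability. CPython threads share one interpreter lock, so the sketch illustrates the structure rather than delivering a real speedup; supercomputers run each chunk on separate processors or nodes instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split(n: int, workers: int) -> list[tuple[int, int]]:
    """Break the range [0, n) into `workers` contiguous, nearly equal chunks."""
    step, extra = divmod(n, workers)
    chunks, start = [], 0
    for w in range(workers):
        stop = start + step + (1 if w < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def partial_sum_of_squares(bounds: tuple[int, int]) -> int:
    """The work done by one worker: sum i*i over its own slice only."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Hand each chunk to a worker, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, split(n, workers)))


if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000))
```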

Beginning with Fujitsu's Numerical Wind Tunnel in 1994, the global spotlight shifted from American labs to those in Japan. This machine accelerated processing by increasing the number of processors from a standard of eight to 167. Just two years later, Hitachi pushed this into the thousands with its SR2201, which had a total of 2,048 processors.

Over the first two decades of the 21st century, it became the norm to design petascale supercomputers with tens of thousands of CPUs. Increasing the number of cores, improving interconnects, memory capacity and power efficiency, and incorporating GPUs, artificial intelligence and edge computing are some of the latest efforts defining supercomputing today.

With Frontier’s debut in May 2022, we have entered the era of exascale supercomputing: machines capable of performing a quintillion calculations per second.

Future of Supercomputing

Your current smartphone is as fast as a supercomputer was in 1994 — one that had 1,000 processors and did nuclear simulations. With such rapid acceleration, it’s natural to wonder what comes next.

Just around the corner, two exascale systems, Aurora and El Capitan, are scheduled to be installed at US laboratories in 2024, where they will be used to create neural maps and research ways to accelerate industry.

“There are limitations on what we can do today on a supercomputer,” Mike Papka, division director of the Argonne Leadership Computing Facility , told Built In. “Right now, we can do simulations of the evolution of the universe. But with Aurora, we’ll be able to do that in a more realistic manner, with more physics and more chemistry added to them. We’re starting to do things like try to understand how different drugs interact with each other and, say, some form of cancer. We’ll be able to do that on an even larger scale with Aurora.”

The deployment of Europe's first exascale supercomputer, named Jupiter, is also scheduled for 2024. It will focus on climate change and sustainable energy solutions, as well as how best to combat a pandemic.

Current supercomputing trends indicate that AI’s stronghold on science and tech innovation will continue. Rick Stevens, associate laboratory director at Argonne National Laboratory, sees AI-based inferencing techniques, so-called “surrogate machine learning models,” replacing simulations altogether, Inside HPC reports.
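
A surrogate model is a cheap stand-in trained on the outputs of an expensive simulation, so that later queries skip the simulation entirely. This minimal sketch shows that workflow. The "simulation" is a hypothetical toy function, and a NumPy polynomial fit stands in for the machine learning model; production surrogates are typically neural networks trained on real simulation output.

```python
import numpy as np


def expensive_simulation(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a costly physics code (imagine hours per call)."""
    return np.sin(3 * x) + 0.5 * x**2


# 1. Run the "simulation" at a modest number of training points.
train_x = np.linspace(-1.0, 1.0, 25)
train_y = expensive_simulation(train_x)

# 2. Fit a cheap surrogate; a degree-9 polynomial stands in for an ML model.
surrogate = np.polynomial.Polynomial.fit(train_x, train_y, deg=9)

# 3. Query the surrogate at new points instead of rerunning the simulation.
test_x = np.linspace(-1.0, 1.0, 200)
max_error = np.max(np.abs(surrogate(test_x) - expensive_simulation(test_x)))
print(f"max surrogate error: {max_error:.2e}")
```

The trade-off is accuracy for speed. A surrogate is only trustworthy inside the range of conditions it was trained on.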

The influence of machine learning and deep learning can be seen in the growing interest in building systems with GPU-heavy architectures, which specialize in massively parallel processing.

“GPU-heavy machine learning and artificial intelligence calculations are gaining popularity so much so that there are supercomputers dedicated for GPU-based computation only,” Bhattacharya said.

“Traditionally, communication and computation technologies were at the heart of the supercomputer and its advancement,” he explained. “However, as individual computers become more power hungry, datacenter designers have had to shift their focus on the adequate and sustained cooling of these machines.”

Quantum computation is also rapidly advancing, Bhattacharya said, and could team up with supercomputers to tackle unresolved societal problems sooner than we think. While each excels in its own right, quantum computers could lead the way in understanding the world at the quantum scale, such as modeling the state of an atom or a molecule.

Now that the top speeds of today’s machines have passed the exascale mark, the race to zettascale has begun. Based on the trends of the last 30 years, Bhattacharya predicts that zettascale will be reached within a decade.
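
Zettascale (10^21 FLOPS) is 1,000 times exascale (10^18 FLOPS). This small sketch works out what a 1,000-fold jump within ten years would require. The ten-year horizon is the assumption taken from the prediction above, and we assume a constant yearly growth rate.

```python
import math

EXASCALE = 1e18    # FLOPS
ZETTASCALE = 1e21  # FLOPS
YEARS = 10         # assumed horizon: "within a decade"

growth_needed = ZETTASCALE / EXASCALE          # 1,000-fold overall
annual_factor = growth_needed ** (1 / YEARS)   # constant yearly multiplier (assumed)
doubling_time = math.log(2) / math.log(annual_factor)

print(f"{growth_needed:,.0f}x overall, {annual_factor:.3f}x per year, "
      f"doubling every {doubling_time:.2f} years")
```

In other words, the record would need to roughly double every year for the prediction to hold.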

Frequently Asked Questions

What is a supercomputer?

Supercomputers are powerful, high-performing computer systems that can process lots of data and solve complex calculations at fast speeds, thanks to their ability to split tasks into multiple parts and work on them in parallel.

What is a supercomputer used for?

Supercomputers are commonly used for making predictions with advanced modeling and simulations. This can be applied to climate research, weather forecasting, genomic sequencing, space exploration, aviation engineering and more.

What is the difference between a normal computer and a supercomputer?

Normal computers handle comparatively few tasks at once, while supercomputers can execute many tasks in parallel. Additionally, supercomputers are much faster, bigger and more powerful than the everyday computers used by consumers.

What is a Supercomputer and What are its Types, Uses and Applications

What does a supercomputer do, and where are supercomputers used today? Read this blog to find out, as we introduce you to the world of supercomputers, what they do, their uses and more.

Mar 31, 2020 By Team YoungWonks *

As the world scrambles to fight the dreaded coronavirus and pools all its resources, it is perhaps not surprising that IBM Summit, the world’s fastest supercomputer (at least as of November 2019), is also at the forefront of this fight.

According to a CNBC report, IBM Summit has zeroed in on 77 potential molecules that may prove useful in treating the novel coronavirus. This supercomputer, based at the Oak Ridge National Laboratory in Tennessee, has a peak speed of 200 petaFLOPS.

What, then, is a supercomputer? And what does petaFLOPS mean? This introduction to supercomputers explains these concepts and more.

What is a Supercomputer?

A supercomputer is a computer with a high level of performance compared with a general-purpose computer. A supercomputer's performance is typically measured in floating-point operations per second (FLOPS). FLOPS is a measure of computer performance that is useful in scientific computing, which relies on floating-point calculations: calculations involving very small and very large real numbers that usually need fast processing. For this kind of work, it is a more meaningful measure than million instructions per second (MIPS).

Since 2017, there have been supercomputers that can carry out over a hundred quadrillion FLOPS, or 100 petaFLOPS (one petaFLOPS is one quadrillion FLOPS). It is also interesting to note that today, all 500 of the world’s fastest supercomputers run Linux-based operating systems.

History of Supercomputers

The US, China, European Union, Taiwan and Japan are already in the race to create faster, more powerful and technologically superior supercomputers.

The US’s first big strides in supercomputing can perhaps be traced back to 1964, when Control Data Corporation (CDC) manufactured the CDC 6600. Designed by American electrical engineer and supercomputer architect Seymour Cray, it is generally considered to be the first successful supercomputer, with a performance of up to three megaFLOPS. Cray used silicon transistors, which could run faster, in place of germanium ones. He also tackled the overheating problem by incorporating refrigeration into the design. The CDC 6600 was followed by the CDC 7600 in 1969.

In 1976, four years after he left CDC, Cray came up with the 80 MHz Cray-1, which went on to become one of the most successful supercomputers ever, with an impressive performance of 160 MFLOPS. It was followed by the Cray-2, delivered in 1985, which performed at 1.9 gigaFLOPS and was at the time the world’s second-fastest supercomputer after Moscow’s M-13.

Supercomputers today

Since 1993, the fastest supercomputers have been ranked on the TOP500 list according to their floating-point computing power. With a whopping 117 of the TOP500 supercomputers, Lenovo became the world’s largest supercomputer provider in 2018.

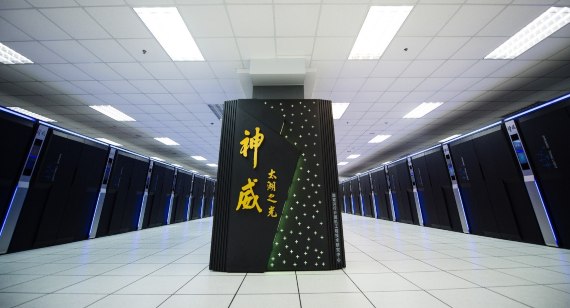

As mentioned earlier, the fastest supercomputer on the TOP500 list at the time of writing is IBM Summit. It is followed by Sierra, another American supercomputer, with a peak speed of 125 petaFLOPS. Sunway TaihuLight in Wuxi (China), Tianhe-2 in Guangzhou (China), Dell Frontera in Austin (USA), Piz Daint in Lugano (Switzerland) and AI Bridging Cloud Infrastructure (ABCI) in Tokyo (Japan) are some other examples of supercomputers today. The US also dominates the top 10 list with five supercomputers, while China has two.

Types of Supercomputers

There are two broad categories of supercomputers: general-purpose supercomputers and special-purpose supercomputers.

General-purpose supercomputers can be further divided into three subcategories: vector processing supercomputers, tightly connected cluster computers, and commodity computers. Vector processing supercomputers rely on vector or array processors. These processors are like a CPU that can apply a mathematical operation to a large number of data elements quickly, which makes them the opposite of scalar processors, which can only work on one element at a time. Common in scientific computing, vector processors formed the basis of most supercomputers in the 1980s and early ’90s but are less popular now. That said, the CPUs in today's supercomputers do include some vector processing instructions.
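
The scalar-versus-vector distinction can be sketched with the classic "a times x plus y" (SAXPY) operation. In this example, the pure-Python loop touches one element per step, the way a scalar processor would. The NumPy version expresses one operation over whole arrays, which NumPy runs in compiled loops that can use the CPU's vector (SIMD) instructions where available. The function names are illustrative.

```python
import numpy as np


def scalar_saxpy(a: float, x: list[float], y: list[float]) -> list[float]:
    """Scalar style: compute a*x[i] + y[i] one element at a time."""
    out = [0.0] * len(x)
    for i in range(len(x)):
        out[i] = a * x[i] + y[i]
    return out


def vector_saxpy(a: float, x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Vector style: one operation expressed over the whole arrays at once."""
    return a * x + y


x = np.arange(5, dtype=np.float64)
y = np.ones(5)
print(scalar_saxpy(2.0, x.tolist(), y.tolist()))
print(vector_saxpy(2.0, x, y))
```

Both functions compute the same result. The difference lies only in how much work each step hands to the hardware.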

Cluster computing refers to groups of connected computers that work together as a unit. These include director-based clusters, two-node clusters, multi-node clusters, and massively parallel clusters. A popular example is a cluster whose nodes run Linux and free software to implement the parallelism. Sun Microsystems' Grid Engine and OpenSSI are examples of software used to run such clusters; OpenSSI, for instance, offers single-system image functionality.

Director-based clusters and parallel clusters are often used for high performance, while two-node clusters are used for fault tolerance. Massively parallel clusters make for supercomputers in which a huge number of processors simultaneously work on different parts of a single larger problem, performing a set of coordinated computations in parallel. The first massively parallel computer was the 1970s ILLIAC IV, which had 64 processors and a performance of over 200 MFLOPS.

Meanwhile, commodity clusters are basically a large number of commodity computers (standard-issue PCs) that are connected by high-bandwidth low-latency local area networks.

Special-purpose supercomputers, on the other hand, are built explicitly to achieve a particular task or goal. They typically use application-specific integrated circuits (ASICs), which offer better performance on that task. Notable examples include Belle, Deep Blue and Hydra, all built to play chess, as well as Gravity Pipe (GRAPE) for astrophysics and MDGRAPE-3 for protein structure computation via molecular dynamics.

Capability over capacity

Supercomputers are typically used for capability computing rather than capacity computing. Capability computing harnesses the maximum computing power to solve a single big problem (say, a very complex weather simulation) in as short a time as possible. Capacity computing uses efficient, cost-effective computing power to solve a few somewhat large problems or many small ones. Architectures used for such routine, everyday tasks are often not considered supercomputers despite their huge capacity, because they are not being used to tackle one highly complex problem.

Heat management in supercomputers

A typical supercomputer consumes large amounts of electrical power, almost all of which is converted into heat that must be removed. As with our PCs, overheating interferes with a supercomputer's operation and shortens the lifespan of several of its components.

There have been many approaches to heat management, from pumping Fluorinert through the system to hybrid liquid-air cooling or air cooling at normal air-conditioning temperatures. Makers have also turned to low-power processors and hot-water cooling. And because copper wires can deliver power into a supercomputer at densities higher than forced air or circulating refrigerants can remove the resulting waste heat, cooling capacity remains a limiting factor.

Uses/ Applications of Supercomputers

While supercomputers (such as the Cray-1) were used mainly for weather forecasting and aerodynamic research in the 1970s, the following decade saw them used for probabilistic analysis and radiation shielding modeling. In the 1990s, supercomputers were used for brute-force code breaking, and their uses later shifted to 3D nuclear test simulations. In the 2010s, supercomputers were used for molecular dynamics simulation.

The applications of supercomputers today also include climate modelling (weather forecasting) and life science research. For instance, the IBM Blue Gene/P computer has been used to simulate a number of artificial neurons equivalent to around one percent of a human cerebral cortex, containing 1.6 billion neurons with approximately 9 trillion connections. Supercomputers are also used by governments: the Advanced Simulation and Computing Program, run by the US federal agency National Nuclear Security Administration (NNSA), relies on supercomputers to manage and simulate the United States nuclear stockpile.

The vast capabilities of supercomputers extend beyond scientific research and complex simulations; they also hold immense potential in the realm of game development. By harnessing the power of supercomputers, game developers can create more intricate, realistic, and expansive virtual worlds, pushing the boundaries of what's possible in interactive entertainment.

*Contributors: Written by Vidya Prabhu; Lead image by: Leonel Cruz

These are the world’s top 10 fastest supercomputers

From Frontier to France's Adastra, here are the top 10 fastest supercomputers in the world. Image: Unsplash/Taylor Vick

Simon Read

- The US has retaken the top spot in the race to build the world’s fastest supercomputer.

- 'Frontier' is capable of more than a billion, billion operations a second, making it the first exascale supercomputer.

- Supercomputers have been used to discover more about diseases including COVID-19 and cancer.

- Fun fact: there might be faster supercomputers out there whose operators didn’t submit their systems to be ranked.

A huge system called Frontier has put the US ahead in the race to build the world’s fastest supercomputer. Frontier and the other speediest computers on the planet promise to transform our understanding of climate change, medicine and the sciences by processing vast amounts of data more quickly than would have been thought possible even a few years ago.

Leading the field in the TOP500 rankings, Frontier is also said to be the first exascale supercomputer. This means it is capable of more than a billion, billion operations a second (known as an exaflop).

Frontier might be ahead, but it has plenty of rivals. Here are the 10 fastest supercomputers in the world today:

1. Frontier, the new number 1, is built by Hewlett Packard Enterprise (HPE) and housed at the Oak Ridge National Laboratory (ORNL) in Tennessee, USA.

2. Fugaku, which previously held the top spot, is installed at the Riken Center for Computational Science in Kobe, Japan. It is three times faster than the next supercomputer in the top 10.

3. LUMI is another HPE system and the new number 3, crunching the numbers in Finland.

4. Summit, an IBM-built supercomputer, is also at ORNL in Tennessee. Summit is used to tackle climate change, predict extreme weather and understand the genetic factors that influence opioid addiction.

5. Another US entry is Sierra , a system installed at the Lawrence Livermore National Laboratory in California, which is used for testing and maintaining the reliability of nuclear weapons.

6. China’s highest entry is the Sunway TaihuLight, a system developed by the National Research Center of Parallel Computer Engineering and Technology and installed in Wuxi, Jiangsu.

7. Perlmutter is another top 10 entry based on HPE technology.

8. Selene is a supercomputer currently running at US chipmaker NVIDIA.

9. Tianhe-2A is a system developed by China’s National University of Defence Technology and installed at the National Supercomputer Center in Guangzhou.

10. France’s Adastra is the second-fastest system in Europe and has been built using HPE and AMD technology.

Supercomputers are exceptionally high-performing computers able to process vast amounts of data very quickly and draw key insights from it. While a domestic or office computer might have just one central processing unit, supercomputers can contain thousands.

Put simply, they are bigger, more expensive and much faster than the humble personal computer. And Frontier, the fastest of the fast, has some impressive statistics.

To achieve such formidable processing speeds, a supercomputer needs to be big. Each of Frontier’s 74 cabinets is as heavy as a pick-up truck, and the $600 million machine has to be cooled by 6,000 gallons of water a minute.
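
To get a feel for why so much water is needed, the standard heat-flow relation Q = m_dot × c_p × ΔT gives the heat a coolant stream can carry away. This back-of-the-envelope sketch applies it to the 6,000 gallons per minute quoted above. The US-gallon conversion, the water density and specific heat, and especially the temperature rises are our own assumed values, not figures from ORNL.

```python
GALLONS_PER_MINUTE = 6_000       # flow rate quoted in the article
LITERS_PER_GALLON = 3.785411784  # US gallon
WATER_DENSITY_KG_PER_L = 1.0     # approximation
WATER_SPECIFIC_HEAT = 4186       # J/(kg*K), approximate value for liquid water

mass_flow = GALLONS_PER_MINUTE * LITERS_PER_GALLON * WATER_DENSITY_KG_PER_L / 60  # kg/s
watts_per_kelvin = mass_flow * WATER_SPECIFIC_HEAT  # Q = m_dot * c_p * dT, per 1 K rise

for delta_t in (5, 10):  # hypothetical temperature rise of the water, in kelvin
    print(f"dT = {delta_t} K -> about {watts_per_kelvin * delta_t / 1e6:.1f} MW removed")
```

Under these assumptions, each degree of warming in the loop carries away roughly 1.6 megawatts.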

Developing vaccines

The speed of the latest generation of supercomputers can help solve some of the toughest global problems, playing a part in developing vaccines, testing car designs and modelling climate change.

In Japan, the Fugaku system was used to research COVID-19’s spike protein. Satoshi Matsuoka, director of Riken Center for Computational Science, says the calculations involved would have taken Fugaku’s predecessor system “days, weeks, multiple weeks”. It took Fugaku three hours.

Supercomputers are being used to support healthcare in the US, too. IBM says its systems support the search for new cancer treatments by quickly analysing huge amounts of detailed data about patients.

Secret supercomputers

IBM also says its fastest systems will help scientists identify the next generation of materials which manufacturers may use to make better batteries, building materials and semiconductors.

The 10 fastest supercomputers are impressive, but it is possible there are other, even quicker systems out there. According to the New York Times, experts believe two systems in China beat Frontier in the race to be the first exascale computer. But the operators of these supercomputers have not submitted test results to the Top500 rankings, perhaps due to geopolitical tensions between the US and China.

Intelligence agencies and some companies might want to keep their supercomputers secret, as Simon McIntosh-Smith from the University of Bristol, UK, told New Scientist. “Certainly in the [US], some of the security forces have things which would put them at the top … there are definitely groups who obviously wouldn’t want this on the list.”

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.

The Agenda .chakra .wef-n7bacu{margin-top:16px;margin-bottom:16px;line-height:1.388;font-weight:400;} Weekly

A weekly update of the most important issues driving the global agenda

.chakra .wef-1dtnjt5{display:-webkit-box;display:-webkit-flex;display:-ms-flexbox;display:flex;-webkit-align-items:center;-webkit-box-align:center;-ms-flex-align:center;align-items:center;-webkit-flex-wrap:wrap;-ms-flex-wrap:wrap;flex-wrap:wrap;} More on Emerging Technologies .chakra .wef-nr1rr4{display:-webkit-inline-box;display:-webkit-inline-flex;display:-ms-inline-flexbox;display:inline-flex;white-space:normal;vertical-align:middle;text-transform:uppercase;font-size:0.75rem;border-radius:0.25rem;font-weight:700;-webkit-align-items:center;-webkit-box-align:center;-ms-flex-align:center;align-items:center;line-height:1.2;-webkit-letter-spacing:1.25px;-moz-letter-spacing:1.25px;-ms-letter-spacing:1.25px;letter-spacing:1.25px;background:none;padding:0px;color:#B3B3B3;-webkit-box-decoration-break:clone;box-decoration-break:clone;-webkit-box-decoration-break:clone;}@media screen and (min-width:37.5rem){.chakra .wef-nr1rr4{font-size:0.875rem;}}@media screen and (min-width:56.5rem){.chakra .wef-nr1rr4{font-size:1rem;}} See all

Space is booming. Here's how to embrace the $1.8 trillion opportunity

Nikolai Khlystov and Gayle Markovitz

April 8, 2024

These 6 countries are using space technology to build their digital capabilities. Here’s how

Simon Torkington

What is e-voting? Who’s using it and is it safe?

Victoria Masterson

April 4, 2024

Lootah Biofuels turns used cooking oil into biofuels. Here's how

4 lessons from Jane Goodall as the renowned primatologist turns 90

Gareth Francis

April 3, 2024

Microchips – their past, present and future

March 27, 2024

What Makes a Supercomputer so Super?

Tuesday, November 7, 2017 10:00am to 11:00am

This event is part of the Research Computing event series.

This event has concluded.

About This Event

Supercomputers play an important role in computational science and are used for a wide range of computationally intensive tasks in fields ranging from basic science to finance. What is high-performance computing (HPC)? Who uses it and why? Did you know the State of North Carolina once had its own HPC center? Blondin and Lucio will address these questions and more, including what makes a supercomputer so super, where supercomputers are found, and how to get access to them.

Contact Information

Free and open to the public. Registration is required.

Introduction to Computer Science and Programming

Instructors

- Prof. Eric Grimson

- Prof. John Guttag

Departments

- Electrical Engineering and Computer Science

Topics

- Programming Languages

Texas Connect

After 20 years, Texas Advanced Computing Center continues on path of discovery

From its humble beginnings with just a handful of staff housed in modest surroundings to becoming one of the leading academic supercomputing centers in the world, the Texas Advanced Computing Center (TACC) has not stopped evolving since opening its doors in June 2001.

That year, TACC’s first supercomputer boasted the capability to do 50 gigaflops, or 50 billion calculations per second (the average home computer today can do around 60 gigaflops). In 2021, the center’s most powerful supercomputer, Frontera, was 800,000 times as powerful as that first machine.
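As a back-of-the-envelope check, multiplying the first system's 50 gigaflops by the 800,000-fold factor gives Frontera's implied speed. This uses only the two numbers quoted in the article. It assumes both describe the same kind of measure (for example, peak rather than sustained performance), which the article does not say:

```latex
\begin{aligned}
50\ \text{GFLOP/s} \times 800{,}000 &= (5 \times 10^{10}\ \text{FLOP/s}) \times (8 \times 10^{5}) \\
&= 4 \times 10^{16}\ \text{FLOP/s} \\
&= 40\ \text{PFLOP/s}
\end{aligned}
```

That works out to roughly 40 petaflops, or about 40 quadrillion calculations per second (1 petaflop is 10^15 floating-point operations per second).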

High-performance computing (HPC) had in fact existed at UT long before TACC was established. Its precursor, the Center for High Performance Computing, dates back to the 1960s. But things have improved dramatically since then, as J. Tinsley Oden (one of UT’s most decorated scientists, engineers and mathematicians) explains. Oden is also widely considered to be the father of computational science and engineering.

“I came to the university for the first time in 1973 as a visitor,” he says. “The computing was still very primitive, even by the standards of the time. People were using desk calculators, which shocked me.” Oden was central to nurturing an HPC-friendly environment in Texas. But supercomputing was also beginning to grow exponentially nationwide.

What is supercomputing?

The term “supercomputing” refers to processing massively complex or data-laden problems using the compute resources of multiple computer systems working in parallel (i.e., a supercomputer). The term also describes systems that operate at or near the highest performance any computer can currently achieve. Supercomputing is applied in fields such as weather forecasting, energy, the life sciences and manufacturing.
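The definition above hinges on dividing one large problem among many processors that work at the same time, then combining their results. The sketch below illustrates that decompose-compute-combine pattern on a toy problem (summing squares).

It is a minimal, hypothetical example, not how any TACC system is actually programmed. The function names are invented for illustration, and Python threads stand in for separate processors. In CPython, threads do not speed up pure-Python arithmetic because of the global interpreter lock. Real HPC codes instead spread work across separate processes or nodes, typically via MPI.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data: list[float], parts: int) -> list[list[float]]:
    """Divide `data` into `parts` contiguous chunks whose sizes differ by at most one."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks each take one leftover element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk: list[float]) -> float:
    """The independent work one worker does on its share of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: list[float], workers: int = 4) -> float:
    """Scatter chunks to a pool of workers, then combine (reduce) their partial sums."""
    chunks = split(data, workers)
    # Threads stand in for separate processors here; under CPython's GIL they
    # show the structure of the computation, not a real speedup.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    values = [float(i) for i in range(1, 1001)]
    print(parallel_sum_of_squares(values))  # 333833500.0
```

Changing `workers` changes only how the data is split, not the result. On a supercomputer, the same scatter-and-reduce structure would run across thousands of cores rather than a handful of threads.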

Although UT is a top-tier university, its enthusiasm for supercomputing ebbed and flowed over the years. As a result, the HPC headquarters shifted locations across campus (including a brief stint where operations were conducted out of a stairwell in the UT Tower). “It’s remarkable how far things have come since then,” Oden says.

Calls from HPC advocates — Oden and many others — had grown so loud during the 1980s and 1990s that university leaders couldn’t ignore them any longer.

“When I became president of UT in 1998, there was already a lot of discussion around the need to prioritize advanced computing — or ‘big iron’ as we called it back then — at the university,” says former UT President Larry Faulkner.

In 1999, Faulkner named Juan M. Sanchez as vice president for research; that appointment proved central to the TACC story. Instrumental in a variety of university initiatives, Sanchez echoed the calls from HPC advocates to build a home for advanced computing at UT.

In 2001, a dedicated facility was established at UT’s J.J. Pickle Research Campus in North Austin with a small staff led by Jay Boisseau, who influenced the future direction of TACC and the wider HPC community. TACC grew rapidly in part due to Boisseau’s acceptance of hand-me-down hardware, an aggressive pursuit of external funding, and success forging strong collaborations with technology partners, including notable hometown success story Dell Technologies. The center also enjoyed access to a rich pipeline of scientific and engineering expertise at UT.

One entity in particular became a key partner: the Institute for Computational Engineering and Sciences (ICES, established in 2003). Later renamed the Oden Institute for Computational Engineering and Sciences in recognition of its founder, J. Tinsley Oden, the institute quickly came to be regarded as one of the leading computational science and engineering (CSE) institutes in the world.

Thanks to the unwavering support of Texan educational philanthropists Peter and Edith O’Donnell, Oden was able to recruit the most talented computational scientists in the field and build a team that could not only expand the mathematical agility of CSE as a discipline but also grow the number of potential real-world applications.

“ICES was such a successful enterprise, it produced great global credibility for Texas as a new center for HPC,” Faulkner says.

Computational science and advanced computing tend to move forward symbiotically, which is why Oden Institute faculty have been instrumental in planning TACC’s largest supercomputers, providing insights into the types of computing environments that researchers require to deliver impactful research outcomes.

“Computational science and engineering today is foundational to scientific and technological progress — and HPC is in turn a critical enabler for modern CSE,” says Omar Ghattas, who holds the John A. and Katherine G. Jackson Chair in Computational Geosciences with additional appointments in the Jackson School of Geosciences and the Department of Mechanical Engineering.

Ghattas has served as co-principal investigator on the Ranger and Frontera supercomputers and is a member of the team planning the next-generation system at TACC.

“Every field of science and engineering, and increasingly medicine and the social sciences, relies on advanced computing for modeling, simulation, prediction, inference, design and control,” he says. “The partnership between the Oden Institute and TACC has made it possible to anticipate future directions in CSE. Having that head start has allowed us to deploy systems and services that empower researchers to define that future.”

No(de) time for complacency

Over the years, TACC deployed several new supercomputers, each larger than the last, and gave each a moniker appropriate to the confident assertion that everything is bigger and better in Texas: Lonestar, Ranger, Stampede, Frontera.

While every system was and still is treated like a cherished member of TACC’s family, current Executive Director Dan Stanzione doesn’t flinch when asked to pick a favorite child. “We really got on the map with Ranger,” says Stanzione, who was a co-principal investigator on the Ranger project.

The successful acquisition of the Ranger supercomputer in 2007, the first “path to petascale” system deployed in the U.S., catapulted TACC to a national level of supercomputing stardom. At this point, Stanzione was the director of high performance computing at Arizona State University but was playing an increasingly important role in TACC operations.

Ranger was slated to be the largest open science system in the world at that time, and TACC was still a relatively small center compared with other institutions bidding for the same $59 million National Science Foundation (NSF) grant. TACC’s bid had its fair share of skeptics.

However, Stanzione, who officially joined TACC as deputy director soon after the successful Ranger bid, and Boisseau were a formidable pair. “TACC has a reputation for punching above its weight,” Oden says. “Jay and Dan just knew how to write a proposal that you simply could not deny.”

Stanzione continued the winning approach when he took over as executive director in 2014. In addition, he has held the title of associate vice president for research at UT since 2018. “Juan Sanchez also made the decision to appoint Stanzione as Jay Boisseau’s successor,” Faulkner says. “And while the success of TACC has, of course, come from those actually working in the field, Sanchez deserves great credit for establishing an environment where that success could thrive.”

In particular, the center stands out for the entrepreneurial culture it has cultivated over the years. Stanzione describes the approach as being akin to a startup. “We were hungry to win the Ranger contract and, against the odds, it paid off.”

The formula for success

TACC has kept its edge for 20 years by prioritizing the needs of researchers and maintaining strong partnerships.

As important as TACC systems are as a resource for the academic community, it’s the people of TACC that make the facility so special. “They’re not just leaders in designing and operating frontier systems; they also support numerous users across campus, teach HPC courses and collaborate on multiple research projects,” Ghattas says.

The center’s mission and purpose have always focused on the researcher and on enabling discovery in open science. Advances made with its systems include forecasting storm surge during hurricanes, confirming the discovery of gravitational waves and identifying one of the most promising new materials for superconductivity.

But in recent decades, the nature of computing and computational science has changed. Whereas researchers have historically used the command line to access supercomputers, today a majority of scholars use supercomputers remotely through web portals and gateways, uploading data and running analyses through an interface that would be familiar to any Amazon or Google customer. TACC’s portal team — nonexistent at launch — is now the largest group at the center, encompassing more than 30 experts and leading a nationwide institute to develop best practices and train new developers for the field.

Likewise, life scientists were a very small part of TACC’s user base in 2001. Today, they are among the largest and most advanced users of supercomputers, leveraging TACC systems to model the billion-atom coronavirus or run complex cancer data analyses.

The growth in data volumes, together with rapid advances in data science, machine learning and artificial intelligence, marked another major shift for the center, requiring new types of hardware, software and expertise that TACC integrated into its portfolio.

“The Wrangler system, which operated from 2014 to 2020, was the most powerful data analysis system for open science in the U.S.,” Stanzione says. “Two current systems, Maverick and Longhorn, were custom-built to handle machine and deep learning problems and are leading to discoveries in areas from astrophysics to drug discovery.”

With almost 200 staff members, TACC now does far more than service and maintain the big iron. It also has large, active groups in scientific visualization, code development, data management and collections, and computer science education and outreach.

The next decades

In 2019, TACC was successful in its bid to build and operate Frontera, a $120 million NSF-funded project that created not just the fastest supercomputer at any university worldwide, but one of the most powerful systems on the planet. (It was No. 13 on the November 2021 TOP500 list.)

The NSF grant further stipulated that TACC would develop a plan for a leadership-class computing facility (LCCF), which would operate for at least a decade and deploy a system 10 times as powerful as Frontera.

TACC now has an opportunity not just to build a bigger machine but to define how computational science and engineering progress in the coming decade. “We’ll help lead the HPC community, particularly in computational science and machine learning, which will both play greater roles than ever before,” Stanzione says.

“The LCCF will be the open science community’s premier resource for catalyzing a new generation of research that addresses societal grand challenges of the next decade,” Ghattas says.

The design, implementation and management of the next system, as well as a new facility, mean that TACC could be about to experience its most transformative period to date. And that change presents one final question: If TACC has made it this far since 2001, what might we be celebrating at its 40th anniversary?

“I don’t know what TACC will look like two years from now,” Stanzione says. “But in another 20 years, I hope it looks entirely different. Then at least I’ll know the center’s legacy has persisted.”