Getting Started With Property-Based Testing in Python With Hypothesis and Pytest

This tutorial will be your gentle guide to property-based testing. Property-based testing is a testing philosophy, a way of approaching testing, much like unit testing is a testing philosophy in which you write tests that verify individual components of your code.

By going through this tutorial, you will:

- learn what property-based testing is;

- understand the key benefits of using property-based testing;

- see how to create property-based tests with Hypothesis;

- attempt a small challenge to understand how to write good property-based tests; and

- explore several situations in which you can use property-based testing with zero overhead.

What is Property-Based Testing?

In the most common types of testing, you write a test by running your code and then checking if the result you got matches the reference result you expected. This is in contrast with property-based testing, where you write tests that check that the results satisfy certain properties. This shift in perspective makes property-based testing (with Hypothesis) a great tool for a variety of scenarios, like fuzzing or testing roundtripping.

In this tutorial, we will be learning about the concepts behind property-based testing, and then we will put those concepts to practice. In order to do that, we will use three tools: Python, pytest, and Hypothesis.

- Python will be the programming language in which we will write both our functions that need testing and our tests.

- pytest will be the testing framework.

- Hypothesis will be the framework that will enable property-based testing.

Both Python and pytest are simple enough that, even if you are not a Python programmer or a pytest user, you should be able to follow along and get benefits from learning about property-based testing.

Setting up your environment to follow along

If you want to follow along with this tutorial and run the snippets of code and the tests yourself (which is highly recommended), here is how you set up your environment.

Installing Python and pip

Start by making sure you have a recent version of Python installed. Head to the Python downloads page and grab the most recent version for yourself. Then, make sure your Python installation also has pip installed. pip is the package installer for Python, and you can check whether you have it on your machine by running the command python -m pip --version.

(This assumes python is the command to run Python on your machine.) If pip is not installed, follow the pip installation instructions.

Installing pytest and Hypothesis

pytest, the Python testing framework, and Hypothesis, the property-based testing framework, are easy to install once you have pip. All you have to do is run the command python -m pip install pytest hypothesis --upgrade.

This tells pip to install pytest and Hypothesis and, additionally, to update to newer versions if any of the packages are already installed.

To make sure pytest has been properly installed, you can run the command python -m pytest --version.

The command prints the version of pytest; the exact version shown on your machine depends on the one you have installed.

To ensure Hypothesis has been installed correctly, open your Python REPL by running python and then, within the REPL, type import hypothesis. If Hypothesis was properly installed, it should look like nothing happened. Immediately after, you can check which version you have installed by evaluating hypothesis.__version__, which shows the version as a string.

Your first property-based test

In this section, we will write our very first property-based test for a small function. This will show how to write basic tests with Hypothesis.

The function to test

Suppose we implemented a function gcd(n, m) that computes the greatest common divisor of two integers. (The greatest common divisor of n and m is the largest integer d that divides evenly into n and m.) What’s more, suppose that our implementation handles positive and negative integers. Here is what this implementation could look like:
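A minimal sketch of such an implementation, assuming Euclid's algorithm applied to the absolute values of the arguments (the choice of algorithm is an assumption). It deliberately does not handle zero yet, which becomes relevant once Hypothesis starts generating inputs:

```python
def gcd(n, m):
    """Compute the greatest common divisor of two integers (Euclid's algorithm)."""
    n, m = abs(n), abs(m)        # work with absolute values to accept negatives
    n, m = min(n, m), max(n, m)  # make n the smaller of the two values
    while m % n:                 # while n does not divide m evenly...
        n, m = m % n, n          # ...replace the pair by (remainder, n)
    return n
```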

If you save that into a file, say gcd.py, and then run it with python -i gcd.py, you will enter an interactive REPL with your function already defined. This allows you to play with it a bit; for example, gcd(15, 6) and gcd(-15, 6) should both give 3.

Now that the function is running and looks about right, we will test it with Hypothesis.

The property test

A property-based test isn’t wildly different from a standard (pytest) test, but there are some key differences. For example, instead of writing inputs to the function gcd, we let Hypothesis generate arbitrary inputs. Then, instead of hardcoding the expected outputs, we write assertions that ensure that the solution satisfies the properties that it should satisfy.

Thus, to write a property-based test, you need to determine the properties that your answer should satisfy.

Thankfully for us, we already know the properties that the result of gcd must satisfy:

“[…] the greatest common divisor (GCD) of two or more integers […] is the largest positive integer that divides each of the integers.”

So, from that Wikipedia quote, we know that if d is the result of gcd(n, m), then:

- d is positive;

- d divides n;

- d divides m; and

- no other number larger than d divides both n and m.

To turn these properties into a test, we start by writing the signature of a test_ function that accepts the same inputs as the function gcd, namely def test_gcd(n, m).

(The prefix test_ is not significant for Hypothesis. We are using Hypothesis with pytest and pytest looks for functions that start with test_, so that is why our function is called test_gcd.)

The arguments n and m, which are also the arguments of gcd, will be filled in by Hypothesis. For now, we will just assume that they are available.

With n and m available, the test starts by calling gcd(n, m) and saving the result. Only after getting the answer can we check it against the four properties listed above.

Taking the four properties into account, our test function could look like this:

Go ahead and put this test function next to the function gcd in the file gcd.py. Typically, tests live in a different file from the code being tested but this is such a small example that we can have everything in the same file.

Plugging in Hypothesis

We have written the test function but we still haven’t used Hypothesis to power the test. Let’s go ahead and use Hypothesis’ magic to generate a bunch of arguments n and m for our function gcd. In order to do that, we need to figure out which inputs our function gcd should be able to handle.

For our function gcd, the valid inputs are all integers, so we need to tell Hypothesis to generate integers and feed them into test_gcd. To do that, we need to import a couple of things:
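The imports in question, as they are commonly written (the alias st for the strategies submodule is a widespread convention rather than a requirement):

```python
from hypothesis import given, strategies as st
```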

given is what we will use to tell Hypothesis that a test function needs to be given data. The submodule strategies is the module that contains lots of tools that know how to generate data.

With these two imports, we can annotate our test:

You can read the decorator @given(st.integers(), st.integers()) as “the test function needs to be given one integer, and then another integer”. To run the test, you can just use pytest, with the command pytest gcd.py.

(Note: depending on your operating system and the way you have things configured, pytest may not end up in your path, and the command pytest gcd.py may not work. If that is the case for you, you can use the command python -m pytest gcd.py instead.)

As soon as you do so, Hypothesis will scream an error message at you, saying that you got a ZeroDivisionError. Let us try to understand what Hypothesis is telling us by looking at the bottom of the test output.

The output shows that the tests failed with a ZeroDivisionError, and the line that reads “Falsifying example: …” contains information about the test case that blew our test up. In our case, this was n = 0 and m = 0. So, Hypothesis is telling us that when the arguments are both zero, our function fails because it raises a ZeroDivisionError.

The problem lies in the usage of the modulo operator %, which does not accept a right argument of zero. The right argument of % is zero if n is zero, in which case the result should be m. Adding an if statement is a possible fix for this:
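A sketch of that fix, assuming the Euclid-style implementation in which n holds the smaller absolute value before the loop starts:

```python
def gcd(n, m):
    """Compute the greatest common divisor of two integers (Euclid's algorithm)."""
    n, m = abs(n), abs(m)
    n, m = min(n, m), max(n, m)
    if not n:          # n is zero, so m % n would raise ZeroDivisionError
        return m       # the answer in that case is m
    while m % n:
        n, m = m % n, n
    return n
```

Note that with this guard gcd(0, 0) returns 0 instead of raising.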

However, Hypothesis still won’t be happy. If you run your test again, with pytest gcd.py, the test fails once more.

This time, the issue is with the very first property that should be satisfied. We can know this because Hypothesis tells us which assertion failed while also telling us which arguments led to that failure: further up the output, the report points at the assertion that d is positive.

This time, the issue isn’t really our fault. The greatest common divisor is not defined when both arguments are zero, so it is ok for our function to not know how to handle this case. Thankfully, Hypothesis lets us customise the strategies used to generate arguments. In particular, we can say that we only want to generate integers between a minimum and a maximum value.

The code below changes the test so that it only runs with integers between 1 and 100 for the first argument (n) and between -500 and 500 for the second argument (m):
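A sketch of the restricted test, using the min_value and max_value arguments of st.integers. The gcd shown is an illustrative Euclid-style implementation with a guard for zero, repeated only so the snippet runs on its own:

```python
from hypothesis import given, strategies as st


def gcd(n, m):
    # illustrative Euclid-style gcd with a guard for zero
    n, m = abs(n), abs(m)
    n, m = min(n, m), max(n, m)
    if not n:
        return m
    while m % n:
        n, m = m % n, n
    return n


@given(
    st.integers(min_value=1, max_value=100),     # n is between 1 and 100
    st.integers(min_value=-500, max_value=500),  # m is between -500 and 500
)
def test_gcd(n, m):
    d = gcd(n, m)

    assert d > 0       # 1) d is positive
    assert n % d == 0  # 2) d divides n
    assert m % d == 0  # 3) d divides m

    # 4) no number larger than d divides both n and m
    for i in range(d + 1, min(abs(n), abs(m)) + 1):
        assert (n % i) or (m % i)
```

Because n is never zero here, the pair (0, 0) is never generated, and the bounded ranges also keep the loop that checks the fourth property short.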

That is it! This was your very first property-based test.

Why bother with Property-Based Testing?

To write good property-based tests you need to analyse your problem carefully to be able to write down all the properties that are relevant. This may look quite cumbersome. However, using a tool like Hypothesis has very practical benefits:

- Hypothesis can generate dozens or hundreds of tests for you, while you would typically only write a couple of them;

- tests you write by hand will typically only cover the edge cases you have already thought of, whereas Hypothesis will not have that bias; and

- thinking about your solution to figure out its properties can give you deeper insights into the problem, leading to even better solutions.

These are just some of the advantages of using property-based testing.

Using Hypothesis for free

There are some scenarios in which you can use property-based testing essentially for free (that is, without needing to spend your precious brain power), because you don’t even need to think about properties. Let’s look at a few such scenarios.

Testing Roundtripping

Hypothesis is a great tool to test roundtripping. For example, the built-in functions int and str in Python should roundtrip. That is, if x is an integer, then int(str(x)) should still be x. In other words, converting x to a string and then to an integer again should not change its value.

We can write a simple property-based test for this, leveraging the fact that Hypothesis generates dozens of tests for us. Save this in a Python file:
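A sketch of such a roundtrip test (the function name is my own choice):

```python
from hypothesis import given, strategies as st


@given(st.integers())
def test_int_str_roundtripping(x):
    # converting to a string and back should give the same integer
    assert x == int(str(x))
```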

Now, run this file with pytest. Your test should pass!

Hypothesis is also a handy tool for fuzzing. Did you notice that, in our gcd example above, the very first time we ran Hypothesis we got a ZeroDivisionError? The test failed, not because of an assert, but simply because our function crashed.

Hypothesis can be used for tests like this. You do not need to write a single property because you are just using Hypothesis to see if your function can deal with different inputs. Of course, even a buggy function can pass a fuzzing test like this, but this helps catch some types of bugs in your code.
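As a sketch, a fuzzing-style test contains no assertions at all and only calls the function. The gcd below is an illustrative implementation with a guard for zero, and the test name is hypothetical:

```python
from hypothesis import given, strategies as st


def gcd(n, m):
    # illustrative Euclid-style gcd with a guard for zero
    n, m = abs(n), abs(m)
    n, m = min(n, m), max(n, m)
    if not n:
        return m
    while m % n:
        n, m = m % n, n
    return n


@given(st.integers(), st.integers())
def test_gcd_does_not_crash(n, m):
    gcd(n, m)  # no assertions: the test only fails if gcd raises an exception
```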

Comparing against a gold standard

Sometimes, you want to test a function f that computes something that could be computed by some other function f_alternative. You know this other function is correct (that is why you call it a “gold standard”), but you cannot use it in production because it is very slow, or it consumes a lot of resources, or for some other combination of reasons.

Provided it is ok to use the function f_alternative in a testing environment, a suitable test would be something like the following:
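A small sketch of the pattern, using a made-up pair of functions: triangular plays the role of f (a closed-form formula) and triangular_slow plays the role of f_alternative (an obviously correct but slower loop). Both names, and the bounds on the generated integers, are assumptions chosen for illustration:

```python
from hypothesis import given, strategies as st


def triangular(n):
    # the function under test: closed-form sum 0 + 1 + ... + n
    return n * (n + 1) // 2


def triangular_slow(n):
    # the "gold standard": slower, but easy to trust
    return sum(range(n + 1))


@given(st.integers(min_value=0, max_value=10_000))
def test_triangular_matches_gold_standard(n):
    assert triangular(n) == triangular_slow(n)
```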

When possible, this type of test is very powerful because it directly tests if your solution is correct for a series of different arguments.

For example, if you refactored an old piece of code, perhaps to simplify its logic or to make it more performant, Hypothesis will give you confidence that your new function will work as it should.

The importance of property completeness

In this section you will learn about the importance of being thorough when listing the properties that are relevant. To illustrate the point, we will reason about property-based tests for a function called my_sort, which is your implementation of a sorting function that accepts lists of integers.

The results are sorted

When thinking about the properties that the result of my_sort satisfies, you come up with the obvious thing: the result of my_sort must be sorted.

So, you set out to assert this property is satisfied:

Now, the only thing missing is the appropriate strategy to generate lists of integers. Thankfully, Hypothesis knows a strategy to generate lists, which is called lists. All you need to do is give it a strategy that generates the elements of the list.
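Put together, a sketch of the test could look like this. The my_sort shown is only a stand-in based on the built-in sorted, so that the snippet runs; it is the part you would replace with your own implementation:

```python
from hypothesis import given, strategies as st


def my_sort(int_list):
    # stand-in so the snippet runs; replace it with your own implementation
    return sorted(int_list)


@given(st.lists(st.integers()))  # lists whose elements are integers
def test_my_sort(int_list):
    result = my_sort(int_list)
    # every element must be less than or equal to the one that follows it
    for a, b in zip(result, result[1:]):
        assert a <= b
```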

Now that the test has been written, here is a challenge. Copy this code into a file called my_sort.py. Between the import and the test, define a function my_sort that is wrong (that is, write a function that does not sort lists of integers) and yet passes the test if you run it with pytest my_sort.py. (Keep reading when you are ready for spoilers.)

Notice that the only property that we are testing is “all elements of the result are sorted”, so we can return whatever result we want, as long as it is sorted. Here is my fake implementation of my_sort:
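A sketch of such a fake implementation, matching the description that follows (it always returns an empty list):

```python
def my_sort(int_list):
    return []  # an empty list is trivially sorted, whatever the input was
```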

This passes our property test and yet is clearly wrong because we always return an empty list. So, are we missing a property? Perhaps.

The lengths are the same

We can try to add another obvious property, which is that the input and the output should have the same length. This means that our test becomes:
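A sketch of the extended test, again with a stand-in my_sort based on sorted so that the snippet runs on its own:

```python
from hypothesis import given, strategies as st


def my_sort(int_list):
    # stand-in so the snippet runs; replace it with your own implementation
    return sorted(int_list)


@given(st.lists(st.integers()))
def test_my_sort(int_list):
    result = my_sort(int_list)
    assert len(result) == len(int_list)   # same length as the input
    for a, b in zip(result, result[1:]):  # elements are in order
        assert a <= b
```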

Now that the test has been improved, here is a challenge. Write a new version of my_sort that passes this test and is still wrong. (Keep reading when you are ready for spoilers.)

Notice that we are only testing for the length of the result and whether or not its elements are sorted, but we don’t test which elements are contained in the result. Thus, this fake implementation of my_sort would work:
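One possible fake, as a sketch: it returns a sorted list of the right length whose elements have nothing to do with the input.

```python
def my_sort(int_list):
    # sorted and of the right length, but the input values are ignored
    return list(range(len(int_list)))
```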

Use the right numbers

To fix this, we can add the obvious property that the result should only contain numbers from the original list. With sets, this is easy to test:
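A sketch of the test with the new set-based assertion, using the <= operator on sets (the subset test); my_sort is again a stand-in based on sorted:

```python
from hypothesis import given, strategies as st


def my_sort(int_list):
    # stand-in so the snippet runs; replace it with your own implementation
    return sorted(int_list)


@given(st.lists(st.integers()))
def test_my_sort(int_list):
    result = my_sort(int_list)
    assert len(result) == len(int_list)   # same length as the input
    assert set(result) <= set(int_list)   # only uses numbers from the input
    for a, b in zip(result, result[1:]):  # elements are in order
        assert a <= b
```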

Now that our test has been improved, I have yet another challenge. Can you write a fake version of my_sort that passes this test? (Keep reading when you are ready for spoilers.)

Here is a fake version of my_sort that passes the test above:
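One such fake, as a sketch: it repeats a single element of the input, which keeps the result sorted, of the right length, and made only of numbers from the original list.

```python
def my_sort(int_list):
    if not int_list:
        return []
    # repeat the first element as many times as the input is long
    return [int_list[0]] * len(int_list)
```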

The issue here is that we were not precise enough with our new property. In fact, set(result) <= set(int_list) ensures that we only use numbers that were available in the original list, but it doesn’t ensure that we use all of them. What is more, we can’t fix it by simply replacing the <= with ==. Can you see why? I will give you a hint. If you just replace the <= with ==, so that the assertion becomes set(result) == set(int_list),

then you can write this passing version of my_sort that is still wrong:
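A sketch consistent with the behaviour described next: keep every distinct value once, in order, and pad with the largest value until the length matches.

```python
def my_sort(int_list):
    if not int_list:
        return []
    unique = sorted(set(int_list))      # every distinct value, in order
    missing = len(int_list) - len(unique)
    return unique + [unique[-1]] * missing  # pad with the largest value
```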

This version is wrong because it reuses the largest element of the original list without respecting the number of times each integer should be used. For example, for the input list [1, 1, 2, 2, 3, 3] the result should be unchanged, whereas this version of my_sort returns [1, 2, 3, 3, 3, 3].

The final test

A test that is correct and complete has to take into account how many times each number appears in the original list, which is something the built-in set cannot do. Instead, one could use collections.Counter from the standard library:
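A sketch of the final test. Counter maps each element to the number of times it occurs, so comparing counters checks both which numbers are used and how often; my_sort is a stand-in based on sorted:

```python
from collections import Counter

from hypothesis import given, strategies as st


def my_sort(int_list):
    # stand-in so the snippet runs; replace it with your own implementation
    return sorted(int_list)


@given(st.lists(st.integers()))
def test_my_sort(int_list):
    result = my_sort(int_list)
    assert len(result) == len(int_list)          # same length as the input
    assert Counter(result) == Counter(int_list)  # same numbers, same counts
    for a, b in zip(result, result[1:]):         # elements are in order
        assert a <= b
```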

So, at this point, your test function test_my_sort is complete and it is no longer possible to fool the test! That is, the only way the test will pass is if my_sort is a real sorting function.

Use properties and specific examples

This section showed that the properties that you test should be well thought-through and you should strive to come up with a set of properties that are as specific as possible. When in doubt, it is better to have properties that may look redundant over having too few.

Another strategy that you can follow to help mitigate the danger of having come up with an insufficient set of properties is to mix property-based testing with other forms of testing, which is perfectly reasonable.

For example, on top of having the property-based test test_my_sort, you could add the following test:
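A sketch of such an example-based test; the specific input lists are arbitrary choices, and my_sort is a stand-in based on sorted:

```python
def my_sort(int_list):
    # stand-in so the snippet runs; replace it with your own implementation
    return sorted(int_list)


def test_my_sort_specific_examples():
    assert my_sort([]) == []                                   # empty input
    assert my_sort(list(range(10))[::-1]) == list(range(10))   # reversed input
    assert my_sort([42, 73, 0, 16, 10]) == [0, 10, 16, 42, 73]
```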

This article covered two examples of functions to which we added property-based tests. We only covered the basics of using Hypothesis to run property-based tests but, more importantly, we covered the fundamental concepts that enable a developer to reason about and write complete property-based tests.

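Property-based testing isn’t a one-size-fits-all solution that means you will never have to write any other type of test, but it does have characteristics that you should take advantage of whenever possible. In particular, we saw that property-based testing with Hypothesis was beneficial in that it can generate far more test cases than you would write by hand, it is not biased towards the edge cases you have already thought of, and thinking about properties gives you deeper insight into the problem.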

This article also went over a couple of common gotchas when writing property-based tests and listed scenarios in which property-based testing can be used with no overhead.

If you are interested in learning more about Hypothesis and property-based testing, we recommend you take a look at the Hypothesis docs and, in particular, at the page “What you can generate and how”.

5 thoughts on “ Getting Started With Property-Based Testing in Python With Hypothesis and Pytest ”

Awesome intro to property based testing for Python. Thank you, Dan and Rodrigo!

Greeting! Unfortunately, I don’t understand due to translation difficulties. PyCharm writes error messages and does not run the codes. The installation was done fine, check ok. I created a virtual environment. I would like a single good, usable, complete code, an example of what to write in gcd.py and what in test_gcd.py, which the development environment runs without errors. Thanks!

Thanks for article!

“it is better to have properties that may look redundant over having too few” Isn’t it the case with: assert len(result) == len(int_list) and: assert Counter(result) == Counter(int_list) ? I mean: is it possible to satisfy the second condition without satisfying the first ?

Yes. One case could be if result = [0,1], int_list = [0,1,1], and the implementation of Counter returns unique count.

hypothesis 6.100.1

pip install hypothesis

Released: Apr 8, 2024

A library for property-based testing

License: Mozilla Public License 2.0 (MPL 2.0) (MPL-2.0)

Author: David R. MacIver and Zac Hatfield-Dodds

Tags python, testing, fuzzing, property-based-testing

Requires: Python >=3.8

Classifiers

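- Development Status :: 5 - Production/Stable

- License :: OSI Approved :: Mozilla Public License 2.0 (MPL 2.0)

- Operating System :: Microsoft :: Windows

- Programming Language :: Python :: 3

- Programming Language :: Python :: 3 :: Only

- Programming Language :: Python :: 3.8

- Programming Language :: Python :: 3.9

- Programming Language :: Python :: 3.10

- Programming Language :: Python :: 3.11

- Programming Language :: Python :: 3.12

- Programming Language :: Python :: Implementation :: CPython

- Programming Language :: Python :: Implementation :: PyPy

- Topic :: Education :: Testing

- Topic :: Software Development :: Testing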

Project description

Hypothesis is an advanced testing library for Python. It lets you write tests which are parametrized by a source of examples, and then generates simple and comprehensible examples that make your tests fail. This lets you find more bugs in your code with less work.

Hypothesis is extremely practical and advances the state of the art of unit testing by some way. It’s easy to use, stable, and powerful. If you’re not using Hypothesis to test your project then you’re missing out.

Quick Start/Installation

If you just want to get started, install it with pip install hypothesis.

Links of interest

The main Hypothesis site is at hypothesis.works, and contains a lot of good introductory and explanatory material.

Extensive documentation and examples of usage are available at readthedocs.

If you want to talk to people about using Hypothesis, we have both an IRC channel and a mailing list.

If you want to receive occasional updates about Hypothesis, including useful tips and tricks, there’s a TinyLetter mailing list to sign up for them.

If you want to contribute to Hypothesis, instructions are here.

If you want to hear from people who are already using Hypothesis, some of them have written about it.

If you want to create a downstream package of Hypothesis, please read these guidelines for packagers.

Project details

Release history release notifications | rss feed.

Apr 8, 2024

Mar 31, 2024

Mar 24, 2024

Mar 23, 2024

Mar 20, 2024

Mar 19, 2024

Mar 18, 2024

Mar 14, 2024

Mar 12, 2024

Mar 11, 2024

Mar 10, 2024

Mar 9, 2024

Mar 4, 2024

Feb 29, 2024

Feb 27, 2024

Feb 25, 2024

Feb 24, 2024

Feb 22, 2024

Feb 20, 2024

Feb 18, 2024

Feb 15, 2024

Feb 14, 2024

Feb 12, 2024

Feb 8, 2024

Feb 5, 2024

Feb 4, 2024

Feb 3, 2024

Jan 31, 2024

Jan 30, 2024

Jan 27, 2024

Jan 25, 2024

Jan 23, 2024

Jan 22, 2024

Jan 21, 2024

Jan 18, 2024

Jan 17, 2024

Jan 16, 2024

Jan 15, 2024

Jan 13, 2024

Jan 12, 2024

Jan 11, 2024

Jan 10, 2024

Jan 8, 2024

Dec 27, 2023

Dec 16, 2023

Dec 10, 2023

Dec 8, 2023

Nov 27, 2023

Nov 20, 2023

Nov 19, 2023

Nov 16, 2023

Nov 13, 2023

Nov 5, 2023

Oct 16, 2023

Oct 15, 2023

Oct 12, 2023

Oct 6, 2023

Oct 1, 2023

Sep 25, 2023

Sep 18, 2023

Sep 17, 2023

Sep 16, 2023

Sep 10, 2023

Sep 6, 2023

Sep 5, 2023

Sep 4, 2023

Sep 3, 2023

Sep 1, 2023

Aug 28, 2023

Aug 20, 2023

Aug 18, 2023

Aug 12, 2023

Aug 8, 2023

Aug 6, 2023

Aug 5, 2023

Jul 20, 2023

Jul 15, 2023

Jul 11, 2023

Jul 10, 2023

Jul 6, 2023

Jun 27, 2023

Jun 26, 2023

Jun 22, 2023

Jun 19, 2023

Jun 17, 2023

Jun 15, 2023

Jun 13, 2023

Jun 12, 2023

Jun 11, 2023

Jun 9, 2023

Jun 4, 2023

May 31, 2023

May 30, 2023

May 27, 2023

May 26, 2023

May 14, 2023

May 4, 2023

Apr 30, 2023

Apr 28, 2023

Apr 26, 2023

Apr 27, 2023

Apr 25, 2023

Apr 24, 2023

Apr 19, 2023

Apr 16, 2023

Apr 7, 2023

Apr 3, 2023

Mar 27, 2023

Mar 16, 2023

Mar 15, 2023

Feb 17, 2023

Feb 12, 2023

Feb 9, 2023

Feb 5, 2023

Feb 4, 2023

Feb 3, 2023

Feb 2, 2023

Jan 27, 2023

Jan 26, 2023

Jan 24, 2023

Jan 23, 2023

Jan 20, 2023

Jan 14, 2023

Jan 8, 2023

Jan 7, 2023

Jan 6, 2023

Dec 11, 2022

Dec 4, 2022

Dec 2, 2022

Nov 30, 2022

Nov 26, 2022

Nov 19, 2022

Nov 14, 2022

Oct 28, 2022

Oct 17, 2022

Oct 10, 2022

Oct 5, 2022

Oct 2, 2022

Sep 29, 2022

Sep 18, 2022

Sep 5, 2022

Aug 20, 2022

Aug 12, 2022

Aug 10, 2022

Aug 2, 2022

Jul 25, 2022

Jul 22, 2022

Jul 19, 2022

Jul 18, 2022

Jul 17, 2022

Jul 9, 2022

Jul 5, 2022

Jul 4, 2022

Jul 3, 2022

Jun 29, 2022

Jun 27, 2022

Jun 25, 2022

Jun 23, 2022

Jun 15, 2022

Jun 12, 2022

Jun 10, 2022

Jun 7, 2022

Jun 2, 2022

Jun 1, 2022

May 25, 2022

May 19, 2022

May 18, 2022

May 15, 2022

May 11, 2022

May 3, 2022

May 1, 2022

Apr 30, 2022

Apr 29, 2022

Apr 27, 2022

Apr 22, 2022

Apr 21, 2022

Apr 18, 2022

Apr 16, 2022

Apr 13, 2022

Apr 12, 2022

Apr 10, 2022

Apr 9, 2022

Apr 1, 2022

Mar 29, 2022

Mar 27, 2022

Mar 26, 2022

Mar 17, 2022

Mar 7, 2022

Mar 3, 2022

Mar 1, 2022

Feb 26, 2022

Feb 21, 2022

Feb 18, 2022

Feb 13, 2022

Jan 31, 2022

Jan 19, 2022

Jan 17, 2022

Jan 8, 2022

Jan 5, 2022

Dec 31, 2021

Dec 30, 2021

Dec 23, 2021

Dec 15, 2021

Dec 14, 2021

Dec 11, 2021

Dec 10, 2021

Dec 9, 2021

Dec 5, 2021

Dec 3, 2021

Dec 2, 2021

Nov 29, 2021

Nov 28, 2021

Nov 26, 2021

Nov 22, 2021

Nov 21, 2021

Nov 19, 2021

Nov 18, 2021

Nov 16, 2021

Nov 15, 2021

Nov 13, 2021

Nov 5, 2021

Nov 1, 2021

Oct 23, 2021

Oct 20, 2021

Oct 18, 2021

Oct 8, 2021

Sep 29, 2021

Sep 26, 2021

Sep 24, 2021

Sep 19, 2021

Sep 16, 2021

Sep 15, 2021

Sep 13, 2021

Sep 11, 2021

Sep 10, 2021

Sep 9, 2021

Sep 8, 2021

Sep 6, 2021

Aug 31, 2021

Aug 30, 2021

Aug 29, 2021

Aug 27, 2021

Aug 22, 2021

Aug 20, 2021

Aug 16, 2021

Aug 14, 2021

Aug 7, 2021

Jul 27, 2021

Jul 26, 2021

Jul 18, 2021

Jul 12, 2021

Jul 2, 2021

Jun 9, 2021

Jun 4, 2021

Jun 3, 2021

Jun 2, 2021

May 30, 2021

May 28, 2021

May 27, 2021

May 26, 2021

May 24, 2021

May 23, 2021

May 20, 2021

May 18, 2021

May 17, 2021

May 6, 2021

Apr 26, 2021

Apr 17, 2021

Apr 15, 2021

Apr 12, 2021

Apr 11, 2021

Apr 7, 2021

Apr 6, 2021

Apr 5, 2021

Apr 1, 2021

Mar 28, 2021

Mar 27, 2021

Mar 14, 2021

Mar 11, 2021

Mar 10, 2021

Mar 9, 2021

Mar 7, 2021

Mar 4, 2021

Mar 2, 2021

Feb 28, 2021

Feb 26, 2021

Feb 25, 2021

Feb 24, 2021

Feb 20, 2021

Feb 12, 2021

Jan 31, 2021

Jan 29, 2021

Jan 27, 2021

Jan 23, 2021

Jan 14, 2021

Jan 13, 2021

Jan 8, 2021

Jan 7, 2021

Jan 6, 2021

Jan 5, 2021

Jan 4, 2021

Jan 3, 2021

Jan 2, 2021

Jan 1, 2021

Dec 24, 2020

Dec 11, 2020

Dec 10, 2020

Dec 9, 2020

Dec 5, 2020

Nov 28, 2020

Nov 18, 2020

Nov 8, 2020

Nov 3, 2020

Oct 30, 2020

Oct 26, 2020

Oct 24, 2020

Oct 20, 2020

Oct 15, 2020

Oct 14, 2020

Oct 7, 2020

Oct 3, 2020

Oct 2, 2020

Sep 25, 2020

Sep 24, 2020

Sep 21, 2020

Sep 15, 2020

Sep 14, 2020

Sep 11, 2020

Sep 9, 2020

Sep 7, 2020

Sep 6, 2020

Sep 4, 2020

Aug 30, 2020

Aug 28, 2020

Aug 27, 2020

Aug 24, 2020

Aug 20, 2020

Aug 19, 2020

Aug 17, 2020

Aug 16, 2020

Aug 14, 2020

Aug 13, 2020

Aug 12, 2020

Aug 10, 2020

Aug 4, 2020

Aug 3, 2020

Jul 31, 2020

Jul 29, 2020

Jul 27, 2020

Jul 26, 2020

Jul 25, 2020

Jul 23, 2020

Jul 21, 2020

Jul 18, 2020

Jul 17, 2020

Jul 15, 2020

Jul 13, 2020

Jul 12, 2020

Jun 30, 2020

Jun 27, 2020

Jun 26, 2020

Jun 25, 2020

Jun 22, 2020

Jun 21, 2020

Jun 19, 2020

Jun 10, 2020

May 27, 2020

May 21, 2020

May 19, 2020

May 13, 2020

May 12, 2020

May 10, 2020

May 7, 2020

May 4, 2020

Apr 24, 2020

Apr 22, 2020

Apr 19, 2020

Apr 18, 2020

Apr 16, 2020

Apr 15, 2020

Apr 14, 2020

Apr 12, 2020

Mar 24, 2020

Mar 23, 2020

Mar 19, 2020

Mar 18, 2020

Feb 29, 2020

Feb 16, 2020

Feb 14, 2020

Feb 13, 2020

Feb 7, 2020

Feb 6, 2020

Feb 1, 2020

Jan 30, 2020

Jan 26, 2020

Jan 21, 2020

Jan 19, 2020

Jan 12, 2020

Jan 11, 2020

Jan 9, 2020

Jan 6, 2020

Jan 3, 2020

Jan 1, 2020

Dec 29, 2019

Dec 28, 2019

Dec 22, 2019

Dec 21, 2019

Dec 19, 2019

Dec 18, 2019

Dec 17, 2019

Dec 16, 2019

Dec 15, 2019

Dec 11, 2019

Dec 9, 2019

Dec 7, 2019

Dec 5, 2019

Dec 2, 2019

Dec 1, 2019

Nov 29, 2019

Nov 28, 2019

Nov 27, 2019

Nov 26, 2019

Nov 25, 2019

Nov 24, 2019

Nov 23, 2019

Nov 22, 2019

Nov 20, 2019

Nov 12, 2019

Nov 11, 2019

Nov 8, 2019

Nov 7, 2019

Nov 6, 2019

Nov 5, 2019

Nov 4, 2019

Nov 3, 2019

Nov 2, 2019

Nov 1, 2019

Oct 30, 2019

Oct 27, 2019

Oct 21, 2019

Oct 17, 2019

Oct 16, 2019

Oct 14, 2019

Oct 9, 2019

Oct 7, 2019

Oct 4, 2019

Oct 2, 2019

Oct 1, 2019

Sep 28, 2019

Sep 20, 2019

Sep 17, 2019

Sep 9, 2019

Sep 4, 2019

Aug 23, 2019

Aug 21, 2019

Aug 20, 2019

Aug 5, 2019

Jul 30, 2019

Jul 29, 2019

Jul 28, 2019

Jul 24, 2019

Jul 14, 2019

Jul 12, 2019

Jul 11, 2019

Jul 8, 2019

Jul 7, 2019

Jul 5, 2019

Jul 4, 2019

Jul 3, 2019

Jun 26, 2019

Jun 23, 2019

Jun 21, 2019

Jun 7, 2019

Jun 6, 2019

Jun 4, 2019

May 29, 2019

May 28, 2019

May 26, 2019

May 19, 2019

May 16, 2019

May 9, 2019

May 8, 2019

May 7, 2019

May 6, 2019

May 5, 2019

Apr 30, 2019

Apr 29, 2019

Apr 24, 2019

Apr 19, 2019

Apr 16, 2019

Apr 12, 2019

Apr 9, 2019

Apr 7, 2019

Apr 5, 2019

Apr 3, 2019

Mar 31, 2019

Mar 30, 2019

Mar 19, 2019

Mar 18, 2019

Mar 15, 2019

Mar 13, 2019

Mar 12, 2019

Mar 11, 2019

Mar 9, 2019

Mar 6, 2019

Mar 4, 2019

Mar 3, 2019

Mar 1, 2019

Feb 28, 2019

Feb 27, 2019

Feb 25, 2019

Feb 24, 2019

Feb 23, 2019

Feb 22, 2019

Feb 21, 2019

Feb 19, 2019

Feb 18, 2019

Feb 15, 2019

Feb 14, 2019

Feb 12, 2019

Feb 11, 2019

Feb 10, 2019

Feb 8, 2019

Feb 6, 2019

Feb 5, 2019

Feb 3, 2019

Feb 2, 2019

Jan 25, 2019

Jan 24, 2019

Jan 23, 2019

Jan 22, 2019

Jan 16, 2019

Jan 14, 2019

Jan 11, 2019

Jan 10, 2019

Jan 9, 2019

Jan 8, 2019

Jan 7, 2019

Jan 6, 2019

Jan 4, 2019

Jan 3, 2019

Jan 2, 2019

Dec 31, 2018

Dec 30, 2018

Dec 29, 2018

Dec 28, 2018

Dec 21, 2018

Dec 20, 2018

Dec 19, 2018

Dec 18, 2018

Dec 17, 2018

Dec 13, 2018

Dec 12, 2018

Dec 11, 2018

Dec 8, 2018

Oct 29, 2018

Oct 27, 2018

Oct 25, 2018

Oct 23, 2018

Oct 22, 2018

Oct 18, 2018

Oct 16, 2018

Oct 11, 2018

Oct 10, 2018

Oct 9, 2018

Oct 8, 2018

Oct 3, 2018

Oct 1, 2018

Sep 30, 2018

Sep 27, 2018

Sep 26, 2018

Sep 25, 2018

Sep 24, 2018

Sep 18, 2018

Sep 17, 2018

Sep 16, 2018

Sep 15, 2018

Sep 14, 2018

Sep 9, 2018

Sep 8, 2018

Sep 3, 2018

Sep 1, 2018

Aug 30, 2018

Aug 29, 2018

Aug 28, 2018

Aug 27, 2018

Aug 23, 2018

Aug 21, 2018

Aug 20, 2018

Aug 19, 2018

Aug 18, 2018

Aug 15, 2018

Aug 14, 2018

Aug 10, 2018

Aug 9, 2018

Aug 8, 2018

Aug 6, 2018

Aug 5, 2018

Aug 3, 2018

Aug 2, 2018

Aug 1, 2018

Jul 31, 2018

Jul 30, 2018

Jul 28, 2018

Jul 26, 2018

Jul 24, 2018

Jul 23, 2018

Jul 22, 2018

Jul 20, 2018

Jul 19, 2018

Jul 8, 2018

Jul 5, 2018

Jul 4, 2018

Jul 3, 2018

Jun 30, 2018

Jun 27, 2018

Jun 26, 2018

Jun 24, 2018

Jun 20, 2018

Jun 19, 2018

Jun 18, 2018

Jun 16, 2018

Jun 14, 2018

Jun 13, 2018

May 20, 2018

May 16, 2018

May 11, 2018

Your Data Guide

How to Perform Hypothesis Testing Using Python

Step into the intriguing world of hypothesis testing, where your natural curiosity meets the power of data to reveal truths!

This article is your key to unlocking how those everyday hunches—like guessing a group’s average income or figuring out who owns their home—can be thoroughly checked and proven with data.

I am going to take you by the hand and show you, in simple steps, how to use Python to explore a hypothesis about the average yearly income.

By the time we’re done, you’ll not only get the hang of creating and testing hypotheses but also how to use statistical tests on actual data.

Perfect for up-and-coming data scientists, anyone with a knack for analysis, or anyone who is simply keen on data. Get ready to gain the skills to make informed decisions and turn insights into real-world actions.

Join me as we dive deep into the data, one hypothesis at a time!

What is a hypothesis, and how do you test it?

A hypothesis is like a guess or prediction about something specific, such as the average income or the percentage of homeowners in a group of people.

It’s based on theories, past observations, or questions that spark our curiosity.

For instance, you might predict that the average yearly income of potential customers is over $50,000 or that 60% of them own their homes.

To see if your guess is right, you gather data from a smaller group within the larger population and check if the numbers ( like the average income, percentage of homeowners, etc. ) from this smaller group match your initial prediction.

You also set a rule for how sure you need to be before trusting your findings, often accepting a 5% chance of error (concluding there is a difference when there is none) as the standard. This threshold is the level of significance (α = 0.05), and it corresponds to a 95% confidence level.

There are two main types of hypotheses: the null hypothesis, which is your baseline saying there’s no change or difference, and the alternative hypothesis, which suggests there is a change or difference.

For example, if you start with the idea that the average yearly income of potential customers is $50,000, the alternative could be that it’s not $50,000: it could be less or more, depending on what you’re trying to find out.

To test your hypothesis, you calculate a test statistic —a number that shows how much your sample data deviates from what you predicted.

How you calculate this depends on what you’re studying and the kind of data you have. For example, to check an average, you might use a formula that considers your sample’s average, the predicted average, the variation in your sample data, and how big your sample is.

This test statistic follows a known distribution ( like the t-distribution or z-distribution ), which helps you figure out the p-value.

The p-value tells you the probability of seeing a test statistic at least as extreme as yours if the null hypothesis were true.

A small p-value means your data strongly disagrees with your initial guess.

Finally, you decide on your hypothesis by comparing the p-value to your error threshold.

If the p-value is less than or equal to the significance level, you reject the null hypothesis, meaning your data shows a difference that is unlikely to be due to chance alone.

If the p-value is larger, you fail to reject the null hypothesis: your data doesn’t provide enough evidence of a meaningful difference, and any change you see might just be due to chance.
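The decision rule described above can be sketched as a small helper. The function name `decide` and its return strings are hypothetical, chosen only for illustration.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the test decision at significance level alpha (hypothetical helper)."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    # Reject H0 only when the p-value is at or below the threshold.
    return "reject H0" if p_value <= alpha else "fail to reject H0"


print(decide(0.03))  # p-value below the 0.05 threshold
print(decide(0.20))  # p-value above the 0.05 threshold
```

Note that "fail to reject" is deliberately not worded as "accept": a large p-value only means the data did not provide enough evidence against the null hypothesis.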

We’ll go through an example that tests if the average annual income of prospective customers exceeds $50,000.

This process involves stating hypotheses , specifying a significance level , collecting and analyzing data , and drawing conclusions based on statistical tests.

Example: Testing a Hypothesis About Average Annual Income

Step 1: State the Hypotheses

Null Hypothesis (H0): The average annual income of prospective customers is $50,000.

Alternative Hypothesis (H1): The average annual income of prospective customers is more than $50,000.

Step 2: Specify the Significance Level

Significance Level: 0.05, meaning we accept a 5% chance of rejecting the null hypothesis when it is actually true (a 95% confidence level).

Step 3: Collect Sample Data

We’ll use the ProspectiveBuyer table, assuming it's a random sample from the population.

This table has 2,059 entries, representing prospective customers' annual incomes.

Step 4: Calculate the Sample Statistic

In Python, we can use libraries like pandas and NumPy to calculate the sample mean and standard deviation.

SampleMean: 56,992.43

SampleSD: 32,079.16

SampleSize: 2,059
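A minimal sketch of Step 4, assuming the table has already been loaded into a pandas DataFrame. The column name `YearlyIncome` and the six income values are made up for illustration; they are not the ProspectiveBuyer data, so the numbers will differ from those reported above.

```python
import pandas as pd

# Hypothetical stand-in for the ProspectiveBuyer table (illustrative values only).
prospective_buyer = pd.DataFrame(
    {"YearlyIncome": [40000, 60000, 90000, 30000, 70000, 50000]}
)

incomes = prospective_buyer["YearlyIncome"]
sample_mean = incomes.mean()
sample_sd = incomes.std(ddof=1)  # ddof=1 gives the sample standard deviation
sample_size = int(incomes.count())

print(f"SampleMean: {sample_mean:,.2f}")
print(f"SampleSD: {sample_sd:,.2f}")
print(f"SampleSize: {sample_size:,}")
```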

Step 5: Calculate the Test Statistic

We use the t-test formula to calculate how significantly our sample mean deviates from the hypothesized mean.

Python’s SciPy library can handle this calculation:

T-Statistic: 4.62

Step 6: Calculate the P-Value

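The p-value is already calculated in the previous step by SciPy's ttest_1samp function, which returns both the test statistic and the p-value. By default that p-value is two-sided; for a one-sided alternative like ours, pass alternative='greater'.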

P-Value = 0.0000021
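As a rough cross-check of Steps 5 and 6, the one-sample t-statistic can also be computed directly from summary statistics using t = (sample mean - hypothesized mean) / (sample SD / sqrt(n)). The sketch below assumes the three figures reported in Step 4 are the exact inputs and uses SciPy's t-distribution for the one-sided p-value. Under that assumption the formula gives a t-statistic of about 9.89 rather than the 4.62 reported above, so the figures quoted in Steps 4 and 5 may not come from the same computation. Either way the p-value is far below 0.05, and the conclusion in Step 7 is unchanged.

```python
import math

from scipy import stats

# Summary statistics as reported in Step 4 (assumed to be the exact inputs).
sample_mean = 56992.43
sample_sd = 32079.16
sample_size = 2059
hypothesized_mean = 50000.0

# Standard error of the mean, then the one-sample t-statistic.
standard_error = sample_sd / math.sqrt(sample_size)
t_stat = (sample_mean - hypothesized_mean) / standard_error

# One-sided p-value for H1: mean > 50,000 (upper tail of the t-distribution).
p_value = stats.t.sf(t_stat, df=sample_size - 1)

print(f"T-Statistic: {t_stat:.2f}")
print(f"P-Value: {p_value:.3g}")
```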

Step 7: State the Statistical Conclusion

We compare the p-value with our significance level to decide on our hypothesis:

Since the p-value is less than 0.05, we reject the null hypothesis in favor of the alternative.

Conclusion:

There’s strong evidence to suggest that the average annual income of prospective customers is indeed more than $50,000.

This example illustrates how Python can be a powerful tool for hypothesis testing, enabling us to derive insights from data through statistical analysis.

How to Choose the Right Test Statistics

Choosing the right test statistic is crucial and depends on what you’re trying to find out, the kind of data you have, and how that data is spread out.

Here are some common types of test statistics and when to use them:

T-test statistic:

This one’s great for checking out the average of a group when your data follows a normal distribution or when you’re comparing the averages of two such groups.

The t-test follows a special curve called the t-distribution . This curve looks a lot like the normal bell curve but with thicker ends, which means more chances for extreme values.

The t-distribution’s shape changes based on something called degrees of freedom , which is a fancy way of talking about your sample size and how many groups you’re comparing.

Z-test statistic:

Use this when you’re looking at the average of a normally distributed group or the difference between two group averages, and you already know the standard deviation for all in the population.

The z-test follows the standard normal distribution , which is your classic bell curve centered at zero and spreading out evenly on both sides.

Chi-square test statistic:

This is your go-to for checking if there’s a difference in variability within a normally distributed group or if two categories are related.

The chi-square statistic follows its own distribution, which leans to the right and gets its shape from the degrees of freedom —basically, how many categories or groups you’re comparing.

F-test statistic:

This one helps you compare the variability between two groups or see if the averages of more than two groups are all the same, assuming all groups are normally distributed.

The F-test follows the F-distribution , which is also right-skewed and has two types of degrees of freedom that depend on how many groups you have and the size of each group.

In simple terms, the test you pick hinges on what you’re curious about, whether your data fits the normal curve, and if you know certain specifics, like the population’s standard deviation.

Each test has its own special curve and rules based on your sample’s details and what you’re comparing.
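To make two of these choices concrete, here is a minimal sketch using SciPy: a chi-square test of independence for two categorical variables and a one-way F-test (ANOVA) for the means of three groups. All counts and measurements are invented for illustration.

```python
from scipy import stats

# Chi-square test of independence on a hypothetical 2x2 contingency table:
# rows = homeowner (yes/no), columns = purchased (yes/no).
observed = [[30, 20], [25, 45]]
chi2_stat, chi2_p, dof, expected = stats.chi2_contingency(observed)
print(f"chi-square = {chi2_stat:.3f}, dof = {dof}, p = {chi2_p:.4f}")

# One-way ANOVA (F-test) comparing the means of three hypothetical groups.
group_a = [12.1, 13.4, 11.8, 12.9, 13.0]
group_b = [14.2, 13.9, 15.1, 14.8, 14.0]
group_c = [12.5, 12.8, 13.1, 12.2, 12.9]
f_stat, f_p = stats.f_oneway(group_a, group_b, group_c)
print(f"F = {f_stat:.3f}, p = {f_p:.4f}")
```

For a 2x2 table the chi-square test has one degree of freedom; the F-test uses two, one for the number of groups and one for the observations within them.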

By Richard Warepam, Your Data Guide

Pytest With Eric

How to Use Hypothesis and Pytest for Robust Property-Based Testing in Python

There will always be cases you didn’t consider, making this an ongoing maintenance job. Unit testing solves only some of these issues.

Example-Based Testing vs Property-Based Testing

Statistical Hypothesis Testing: A Comprehensive Guide

We’ve all heard it – “ go to college to get a good job .” The assumption is that higher education leads straight to higher incomes. Elite Indian institutes like the IITs and IIMs are even judged based on the average starting salaries of their graduates. But is this direct connection between schooling and income actually true?

Intuitively, it seems believable. But how can we really prove this assumption that more school = more money? Is there hard statistical evidence either way? Turns out, there are methods to scientifically test widespread beliefs like this – what statisticians call hypothesis testing.

In this article, we’ll dig into the concept of hypothesis testing and the tools to rigorously question conventional wisdom: null and alternate hypotheses, one and two-tailed tests, paired sample tests, and more.

Statistical hypothesis testing allows researchers to make inferences about populations based on sample data. It involves setting up a null hypothesis, choosing a confidence level, calculating a p-value, and conducting tests such as two-tailed, one-tailed, or paired sample tests to draw conclusions.

What is Hypothesis Testing?

Statistical Hypothesis Testing is a method used to make inferences about a population based on sample data. Before we move ahead and understand what Hypothesis Testing is, we need to understand some basic terms.

Null Hypothesis

The Null Hypothesis is generally where we start our journey. Null Hypotheses are statements that are generally accepted or statements that you want to challenge. Since it is generally accepted that income level is positively correlated with quality of education, this will be our Null Hypothesis. It is denoted by H 0 .

H 0 : Income levels are positively correlated with quality of education.

Alternate Hypothesis

The Alternate Hypothesis is the opposite of the Null hypothesis. An alternate Hypothesis is what we want to prove as a researcher and is not generally accepted by society. An alternate hypothesis is denoted H a . The alternate hypothesis of the above is given below.

H a : Income levels are negatively correlated with the quality of education.

Confidence Level (1- α )

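Confidence levels represent the probability that the range of values contains the true parameter value. The most common confidence levels are 95% and 99%. A 95% confidence level means that, if we repeated the sampling many times, about 95% of the resulting intervals would contain the true parameter; equivalently, we accept a 5% chance (α) of rejecting a true null hypothesis. It is denoted by 1-α.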

p-value ( p )

The p-value represents the probability of obtaining test results at least as extreme as the results actually observed, under the assumption that the null hypothesis is correct. A lower p-value means our observed result would be less likely if the null hypothesis were true. If our p-value is less than α, the null hypothesis is rejected; otherwise, we fail to reject the null hypothesis.

Types of Hypothesis Tests

Since we are equipped with the basic terms, let’s go ahead and conduct some hypothesis tests.

Conducting a Two-Tailed Hypothesis Test

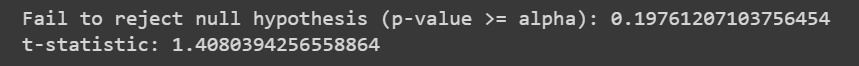

In a two-tailed hypothesis test, our analysis can go in either direction i.e. either more than or less than our observed value. For example, a medical researcher testing out the effects of a placebo wants to know whether it increases or decreases blood pressure. Let’s look at its Python implementation.
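A minimal sketch of a two-tailed test, assuming hypothetical exam scores for students who used the group study method and students who studied alone. The scores and variable names are made up for illustration, and an independent two-sample t-test from SciPy is used as one reasonable choice of test.

```python
from scipy import stats

# Hypothetical exam scores (illustrative values only).
group_study_scores = [78, 85, 69, 91, 74, 88, 80, 77]
solo_study_scores = [75, 82, 70, 86, 73, 84, 79, 76]

alpha = 0.05

# Two-tailed test: the alternative is that the means differ in either direction.
t_stat, p_value = stats.ttest_ind(group_study_scores, solo_study_scores)

print(f"t-statistic: {t_stat:.3f}, p-value: {p_value:.3f}")
if p_value < alpha:
    print("Reject the null hypothesis")
else:
    print("Fail to reject the null hypothesis")
```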

In the above code, we want to know if the group study method is an effective way to study or not. Therefore our null and alternate hypotheses are as follows.

- H 0 : The Group study method is not an effective way to study .

- H a : The group study method is an effective way to study .

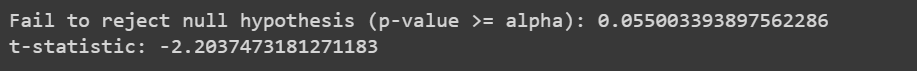

Since the p-value is greater than α , we fail to reject the null hypothesis. Therefore, we do not have sufficient evidence that the group study method is an effective way to study.

Conducting a One-Tailed Hypothesis Test

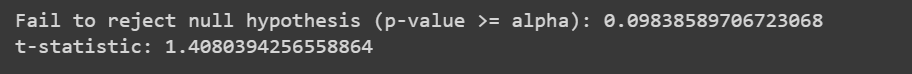

In a one-tailed hypothesis test, we have certain expectations in which way our observed value will move i.e. higher or lower. For example, our researchers want to know if a particular medicine lowers our cholesterol level. Let’s look at its Python code.
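A minimal sketch of a one-tailed test, again with made-up exam scores. It assumes SciPy 1.6 or later, where `ttest_ind` accepts the `alternative` argument; `alternative="greater"` tests whether the first group's mean is larger than the second's.

```python
from scipy import stats

# Hypothetical exam scores (illustrative values only).
group_study_scores = [78, 85, 69, 91, 74, 88, 80, 77]
solo_study_scores = [75, 82, 70, 86, 73, 84, 79, 76]

alpha = 0.05

# One-tailed test: H_a says group study gives HIGHER marks than solo study.
t_stat, p_one_tailed = stats.ttest_ind(
    group_study_scores, solo_study_scores, alternative="greater"
)

# Two-tailed p-value for comparison.
_, p_two_tailed = stats.ttest_ind(group_study_scores, solo_study_scores)

print(f"t-statistic: {t_stat:.3f}")
print(f"one-tailed p-value: {p_one_tailed:.3f}")
print(f"two-tailed p-value: {p_two_tailed:.3f}")
```

When the observed difference lies in the hypothesized direction, as it does here, the one-tailed p-value is half the two-tailed one.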

Here our null and alternate hypotheses are given below.

- H 0 : The Group study method does not increase our marks.

- H a : The group study method increases our marks.

Since the p-value is greater than α , we fail to reject the null hypothesis. Therefore, we do not have sufficient evidence that the group study method increases our marks.

Conducting a Paired Sample Test

A paired sample test compares two sets of observations taken from the same subjects, such as measurements before and after a treatment, and then provides us with a conclusion. For example, we need to know whether the reaction time of our participants increases after consuming caffeine. Let’s look at another example with Python code as well.
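A minimal sketch of a paired sample test, assuming hypothetical scores for the same eight students before and after they adopted the group study method. The values are invented for illustration; SciPy's `ttest_rel` performs the paired t-test.

```python
from scipy import stats

# Hypothetical scores for the same students, in the same order (illustrative only).
scores_before = [72, 65, 80, 58, 90, 77, 69, 84]
scores_after = [74, 64, 83, 60, 89, 79, 70, 85]

alpha = 0.05

# Paired t-test: works on the per-student differences (after - before).
t_stat, p_value = stats.ttest_rel(scores_after, scores_before)

print(f"t-statistic: {t_stat:.3f}, p-value: {p_value:.3f}")
if p_value < alpha:
    print("Reject the null hypothesis")
else:
    print("Fail to reject the null hypothesis")
```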

Similar to the above hypothesis tests, we consider the group study method here as well. Our null and alternate hypotheses are as follows.

- H 0 : The group study method does not provide us with significant differences in our scores.

- H a : The group study method gives us significant differences in our scores.

Since the p-value is greater than α , we fail to reject the null hypothesis.

Here you go! Now you are equipped to perform statistical hypothesis testing on different samples and draw out different conclusions. You need to collect data and decide on null and alternate hypotheses. Furthermore, based on the predetermined hypothesis, you need to decide on which type of test to perform. Statistical hypothesis testing is one of the most powerful tools in the world of research.

Now that you have a grasp on statistical hypothesis testing, how will you apply these concepts to your own research or data analysis projects? What hypotheses are you eager to test?

Open source smart fuzzing for Python's best testing workflow.

Property-based tests fit cleanly into any Python test suite - while being faster to write, better at finding bugs, and giving clearer design feedback than traditional example-based unit tests. That's why everyone serious about testing Python code uses Hypothesis - from companies like Google and Amazon, to open source projects like Numpy or dateutil, to high-school students and professors of astrophysics.

HypoFuzz is an advanced fuzzing backend for test suites which use Hypothesis.

Because HypoFuzz is designed to run for hours or days - unlike Hypothesis' sub-second budget for unit tests - we can use cutting-edge fuzzing techniques and coverage instrumentation to find even the rarest inputs which trigger an error. Find bugs with continuous fuzzing, so you can keep CI fast and focussed on regressions.

Instead of running a fixed number of examples for each test and then stopping, we can interleave examples for multiple tests, and dynamically prioritise those which are finding new behaviour or bugs. We're not just spending compute time instead of engineer-time; HypoFuzz does so as efficiently as possible, and we're working on quantifying the residual risk so you can make an informed decision about when to stop fuzzing.

Finally, if our web-based dashboard doesn't make it clear what failed, you can replay the minimal failing example for any bug locally just by running the same test . With a shared Hypothesis database , that's all it ever takes!

the newsletter

HypoFuzz is still young, so we'll have plenty to share as we roll out new features - and the first place you'll hear about them is our low-volume newsletter.

Get started

HypoFuzz fits seamlessly into your workflow, with a familiar CLI and a live dashboard to monitor progress. Questions about how to get set up to find bugs, or reproduce bugs you've found? The docs can help.

See the demo

There's no substitute for seeing it in action... but if pip install hypofuzz seems too hard, there's a snapshot of the dashboard for you to explore right here.

Hypothesis is a world-leading library for property-based testing, used by thousands of companies and Python projects that are serious about finding bugs.

Statistics Made Easy

How to Perform Hypothesis Testing in Python (With Examples)

A hypothesis test is a formal statistical test we use to reject or fail to reject some statistical hypothesis.

This tutorial explains how to perform the following hypothesis tests in Python:

- One sample t-test

- Two sample t-test

- Paired samples t-test

Let’s jump in!

Example 1: One Sample t-test in Python

A one sample t-test is used to test whether or not the mean of a population is equal to some value.

For example, suppose we want to know whether or not the mean weight of a certain species of turtle is equal to 310 pounds.

To test this, we go out and collect a simple random sample of turtles with the following weights:

Weights : 300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303

The following code shows how to use the ttest_1samp() function from the scipy.stats library to perform a one sample t-test:
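A minimal sketch of this test using the weights listed above; the variable names are chosen for illustration.

```python
from scipy import stats

# Turtle weights (in pounds) from the sample above.
weights = [300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303]

# One sample t-test against the hypothesized population mean of 310 pounds.
result = stats.ttest_1samp(a=weights, popmean=310)

print(f"t statistic: {result.statistic:.4f}")
print(f"two-sided p-value: {result.pvalue:.4f}")
```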

The t test statistic is -1.5848 and the corresponding two-sided p-value is 0.1389.

The two hypotheses for this particular one sample t-test are as follows:

- H 0 : µ = 310 (the mean weight for this species of turtle is 310 pounds)

- H A : µ ≠ 310 (the mean weight is not 310 pounds)

Because the p-value of our test (0.1389) is greater than alpha = 0.05, we fail to reject the null hypothesis of the test.

We do not have sufficient evidence to say that the mean weight for this particular species of turtle is different from 310 pounds.

Example 2: Two Sample t-test in Python

A two sample t-test is used to test whether or not the means of two populations are equal.

For example, suppose we want to know whether or not the mean weight between two different species of turtles is equal.

To test this, we collect a simple random sample of turtles from each species with the following weights:

Sample 1 : 300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303

Sample 2 : 335, 329, 322, 321, 324, 319, 304, 308, 305, 311, 307, 300, 305

The following code shows how to use the ttest_ind() function from the scipy.stats library to perform this two sample t-test:
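A minimal sketch of this test using the two samples listed above; the variable names are chosen for illustration, and `ttest_ind` is called with its default assumption of equal population variances.

```python
from scipy import stats

# Turtle weights (in pounds) for each species, from the samples above.
sample1 = [300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303]
sample2 = [335, 329, 322, 321, 324, 319, 304, 308, 305, 311, 307, 300, 305]

# Two sample t-test (default: equal variances assumed, two-sided alternative).
result = stats.ttest_ind(a=sample1, b=sample2)

print(f"t statistic: {result.statistic:.4f}")
print(f"two-sided p-value: {result.pvalue:.4f}")
```

If the equal-variance assumption is doubtful, passing `equal_var=False` runs Welch's t-test instead, which will give slightly different numbers.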

The t test statistic is -2.1009 and the corresponding two-sided p-value is 0.0463.

The two hypotheses for this particular two sample t-test are as follows:

- H 0 : µ 1 = µ 2 (the mean weight between the two species is equal)

- H A : µ 1 ≠ µ 2 (the mean weight between the two species is not equal)

Since the p-value of the test (0.0463) is less than .05, we reject the null hypothesis.

This means we have sufficient evidence to say that the mean weight between the two species is not equal.

Example 3: Paired Samples t-test in Python

A paired samples t-test is used to compare the means of two samples when each observation in one sample can be paired with an observation in the other sample.

For example, suppose we want to know whether or not a certain training program is able to increase the max vertical jump (in inches) of basketball players.

To test this, we may recruit a simple random sample of 12 college basketball players and measure each of their max vertical jumps. Then, we may have each player use the training program for one month and then measure their max vertical jump again at the end of the month.

The following data shows the max jump height (in inches) before and after using the training program for each player:

Before : 22, 24, 20, 19, 19, 20, 22, 25, 24, 23, 22, 21

After : 23, 25, 20, 24, 18, 22, 23, 28, 24, 25, 24, 20

The following code shows how to use the ttest_rel() function from the scipy.stats library to perform this paired samples t-test:
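A minimal sketch of this test using the before and after measurements listed above; the variable names are chosen for illustration.

```python
from scipy import stats

# Max vertical jump (in inches) for the same 12 players, in the same order.
before = [22, 24, 20, 19, 19, 20, 22, 25, 24, 23, 22, 21]
after = [23, 25, 20, 24, 18, 22, 23, 28, 24, 25, 24, 20]

# Paired samples t-test on the per-player differences (before - after).
result = stats.ttest_rel(a=before, b=after)

print(f"t statistic: {result.statistic:.4f}")
print(f"two-sided p-value: {result.pvalue:.4f}")
```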

The t test statistic is -2.5289 and the corresponding two-sided p-value is 0.0280.

The two hypotheses for this particular paired samples t-test are as follows:

- H 0 : µ 1 = µ 2 (the mean jump height before and after using the program is equal)

- H A : µ 1 ≠ µ 2 (the mean jump height before and after using the program is not equal)

Since the p-value of the test (0.0280) is less than .05, we reject the null hypothesis.

This means we have sufficient evidence to say that the mean jump height before and after using the training program is not equal.

Additional Resources

You can use the following online calculators to automatically perform various t-tests:

- One Sample t-test Calculator

- Two Sample t-test Calculator

- Paired Samples t-test Calculator

Hey there. My name is Zach Bobbitt. I have a Master of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

Welcome to Hypothesis! Hypothesis is a Python library for creating unit tests which are simpler to write and more powerful when run, finding edge cases in your code you wouldn't have thought to look for. It is stable, powerful and easy to add to any existing test suite. It works by letting you write tests that assert that something should be true for every case, not just the ones you ...

For example, everything_except(int) returns a strategy that can generate anything that from_type() can ever generate, except for instances of int, and excluding instances of types added via register_type_strategy(). This is useful when writing tests which check that invalid input is rejected in a certain way. hypothesis.strategies. frozensets (elements, *, min_size = 0, max_size = None ...

We can write a simple property-based test for this, leveraging the fact that Hypothesis generates dozens of tests for us. Save this in a Python file:

```python
from hypothesis import given, strategies as st


@given(st.integers())
def test_int_str_roundtripping(x):
    assert x == int(str(x))
```

Now, run this file with pytest.

Hypothesis is an advanced testing library for Python. It lets you write tests which are parametrized by a source of examples, and then generates simple and comprehensible examples that make your tests fail. This lets you find more bugs in your code with less work. e.g. xs=[1.7976321109618856e+308, 6.102390043022755e+303] Hypothesis is extremely ...

The Hypothesis example database. When Hypothesis finds a bug it stores enough information in its database to reproduce it. This enables you to have a classic testing workflow of find a bug, fix a bug, and be confident that this is actually doing the right thing because Hypothesis will start by retrying the examples that broke things last time.
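As a minimal sketch of how a test can be pointed at a specific example database, the snippet below uses Hypothesis's `settings` decorator with a `DirectoryBasedExampleDatabase`. The temporary directory and the test itself are illustrative assumptions; in a real project the directory would be a stable, possibly shared, location.

```python
import tempfile

from hypothesis import given, settings, strategies as st
from hypothesis.database import DirectoryBasedExampleDatabase

# Illustrative location for the database; a real project would use a fixed path.
db_dir = tempfile.mkdtemp(prefix="hypothesis-db-")
example_db = DirectoryBasedExampleDatabase(db_dir)


@settings(database=example_db)
@given(st.lists(st.integers()))
def test_reverse_twice_is_identity(xs):
    # Reversing a list twice should give back the original list.
    assert list(reversed(list(reversed(xs)))) == xs


test_reverse_twice_is_identity()
```

If a test like this ever fails, Hypothesis saves the failing example in that directory and replays it first on the next run.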

The test workflow should go as follows:

```
for example in hypothesis-given-examples:  # @given
    for combination in pytest-parametrized-combinations:  # @pytest.mark.parametrize
        db = setup_db(example, combination)  # should be a fixture with `yield` but I can't parametrize it
        do_test_1(db)  # using the setup database
```

In this article, we will introduce property-based testing for Python by using the Hypothesis. It can be used to create test cases following certain customizable strategies automatically. ... This database is located in the folder .hypothesis, under the directory we execute the test from. Deleting it clears the database.

To use Hypothesis in this example, we import given, strategies and assume. The @given decorator is placed just before each test and takes a strategy. A strategy is specified using an st.X function, such as st.lists(), st.integers(), st.text() and so on. Here's a comprehensive list of strategies. Strategies are used to generate test data and can be heavily ...
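A minimal sketch of these three pieces working together. The two properties tested here are illustrative and are not taken from the example the text refers to.

```python
from hypothesis import assume, given, strategies as st


@given(st.lists(st.integers(), min_size=1))
def test_sorted_puts_max_last(xs):
    # For any non-empty list of integers, sorting puts the maximum at the end.
    assert sorted(xs)[-1] == max(xs)


@given(st.integers(), st.integers())
def test_floor_division_identity(a, b):
    # assume() discards generated inputs that do not meet a precondition.
    assume(b != 0)
    assert (a // b) * b + a % b == a


# Hypothesis tests can be called directly as well as collected by pytest.
test_sorted_puts_max_last()
test_floor_division_identity()
```

Constraints that can be expressed in the strategy itself, like `min_size=1`, are usually preferable to `assume`, which throws away inputs after they have been generated.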
