Comparing and Contrasting in an Essay | Tips & Examples

Published on August 6, 2020 by Jack Caulfield. Revised on July 23, 2023.

Comparing and contrasting is an important skill in academic writing. It involves taking two or more subjects and analyzing the differences and similarities between them.

Table of contents

- When should I compare and contrast?

- Making effective comparisons

- Comparing and contrasting as a brainstorming tool

- Structuring your comparisons

- Other interesting articles

- Frequently asked questions about comparing and contrasting

Many assignments will invite you to make comparisons quite explicitly, as in these prompts.

- Compare the treatment of the theme of beauty in the poetry of William Wordsworth and John Keats.

- Compare and contrast in-class and distance learning. What are the advantages and disadvantages of each approach?

Some other prompts may not directly ask you to compare and contrast, but present you with a topic where comparing and contrasting could be a good approach.

One way to approach this essay might be to contrast the situation before the Great Depression with the situation during it, to highlight how large a difference it made.

Comparing and contrasting is also used in all kinds of academic contexts where it’s not explicitly prompted. For example, a literature review involves comparing and contrasting different studies on your topic, and an argumentative essay may involve weighing up the pros and cons of different arguments.

As the name suggests, comparing and contrasting is about identifying both similarities and differences. You might focus on contrasting quite different subjects or comparing subjects with a lot in common—but there must be some grounds for comparison in the first place.

For example, you might contrast French society before and after the French Revolution; you’d likely find many differences, but there would be a valid basis for comparison. However, if you contrasted pre-revolutionary France with Han-dynasty China, your reader might wonder why you chose to compare these two societies.

This is why it’s important to clarify the point of your comparisons by writing a focused thesis statement. Every element of an essay should serve your central argument in some way. Consider what you’re trying to accomplish with any comparisons you make, and be sure to make this clear to the reader.

Comparing and contrasting can be a useful tool to help organize your thoughts before you begin writing any type of academic text. You might use it to compare different theories and approaches you’ve encountered in your preliminary research, for example.

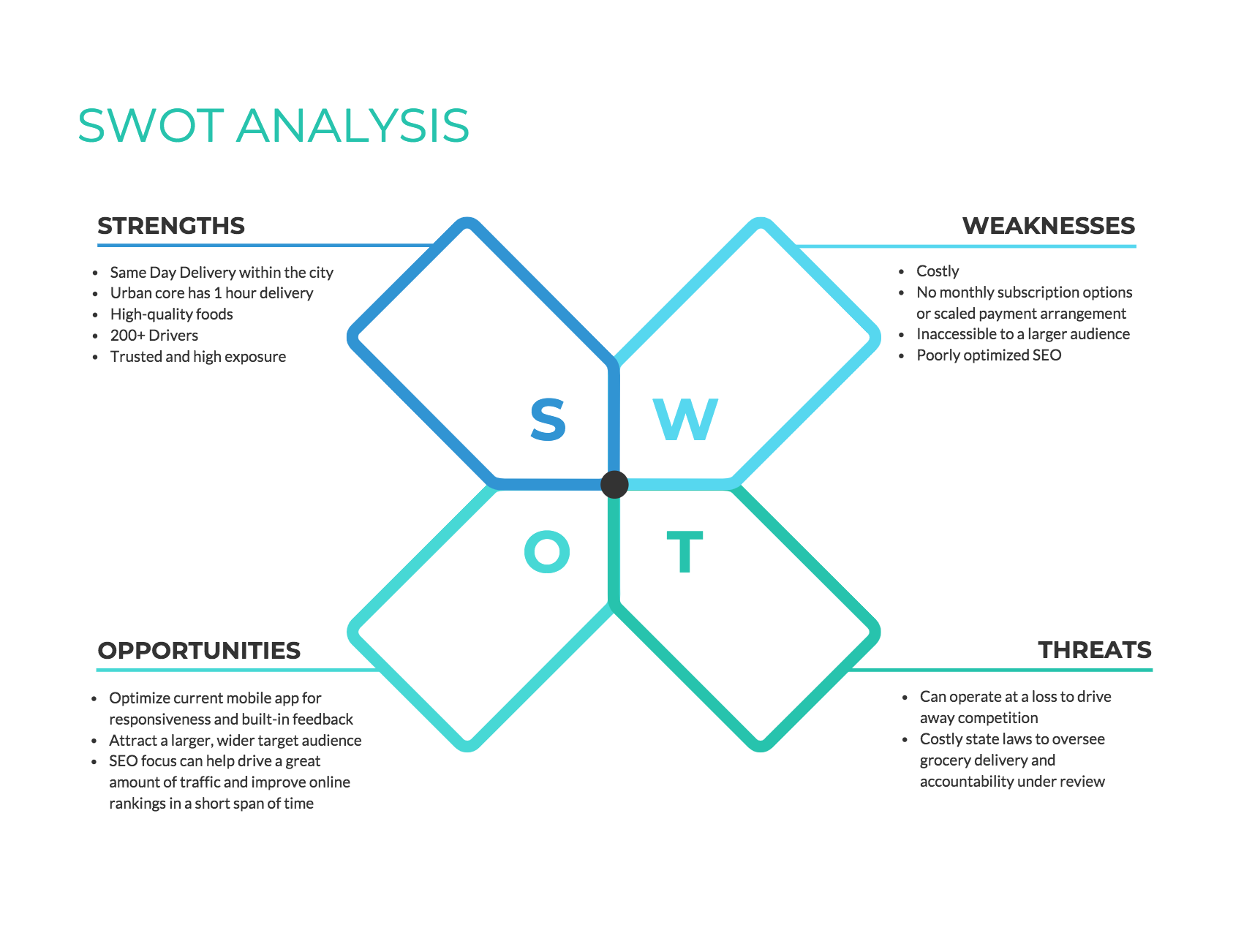

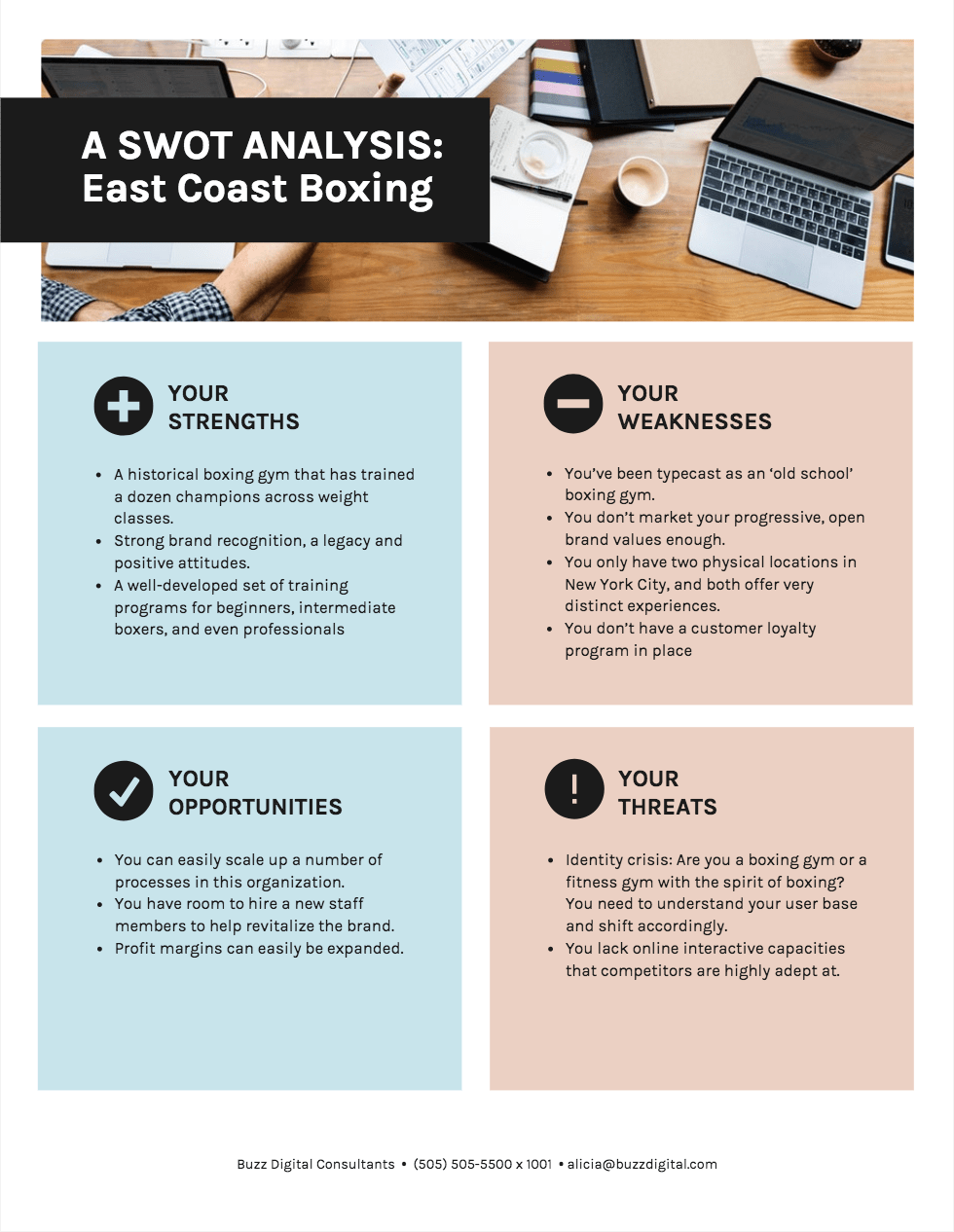

Let’s say your research involves the competing psychological approaches of behaviorism and cognitive psychology. You might make a table to summarize the key differences between them.

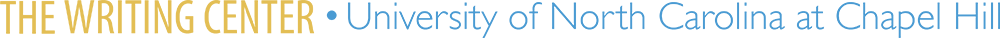

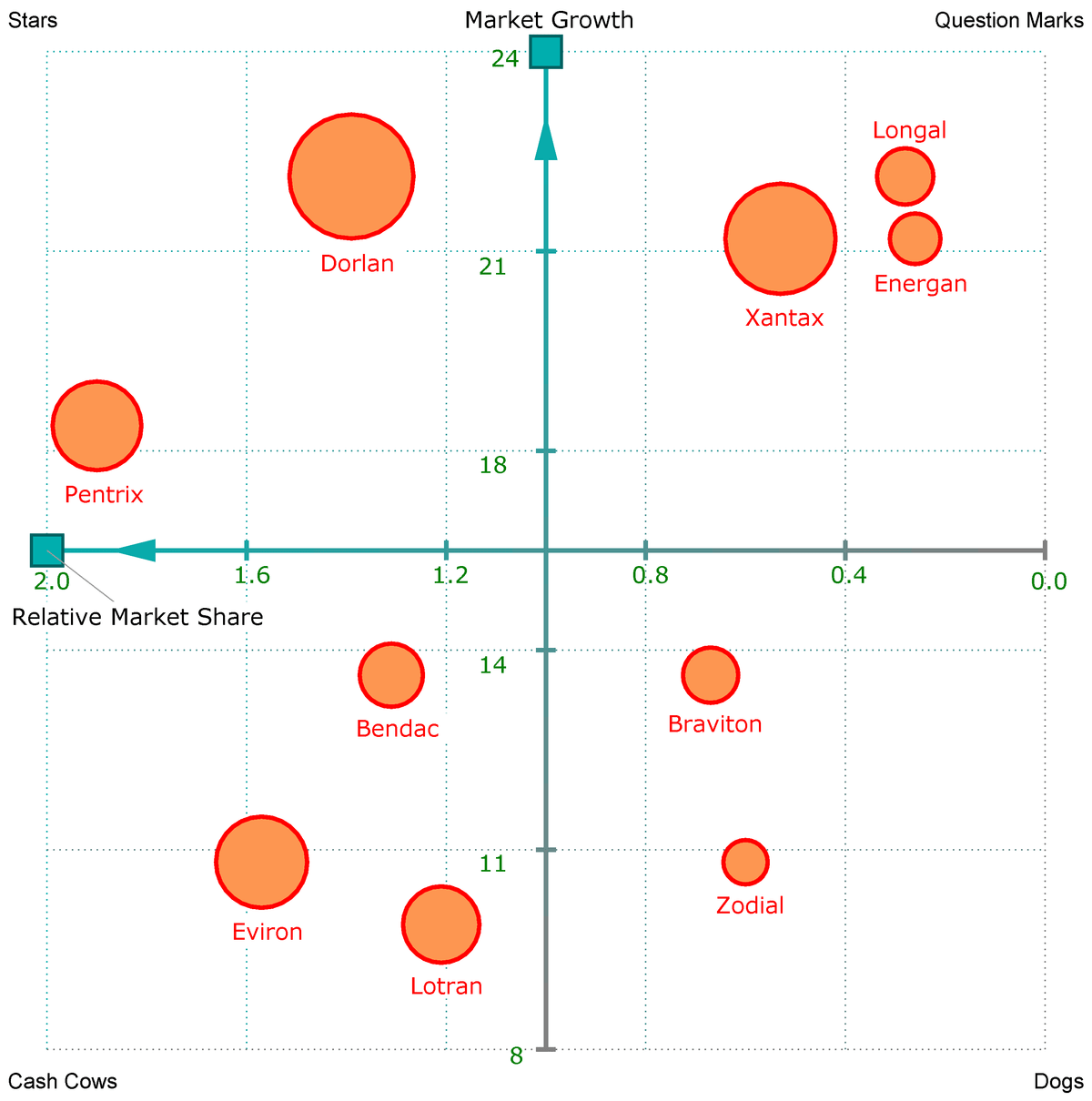

Or say you’re writing about the major global conflicts of the twentieth century. You might visualize the key similarities and differences in a Venn diagram.

These visualizations wouldn’t make it into your actual writing, so they don’t have to be very formal in terms of phrasing or presentation. The point of comparing and contrasting at this stage is to help you organize and shape your ideas to aid you in structuring your arguments.

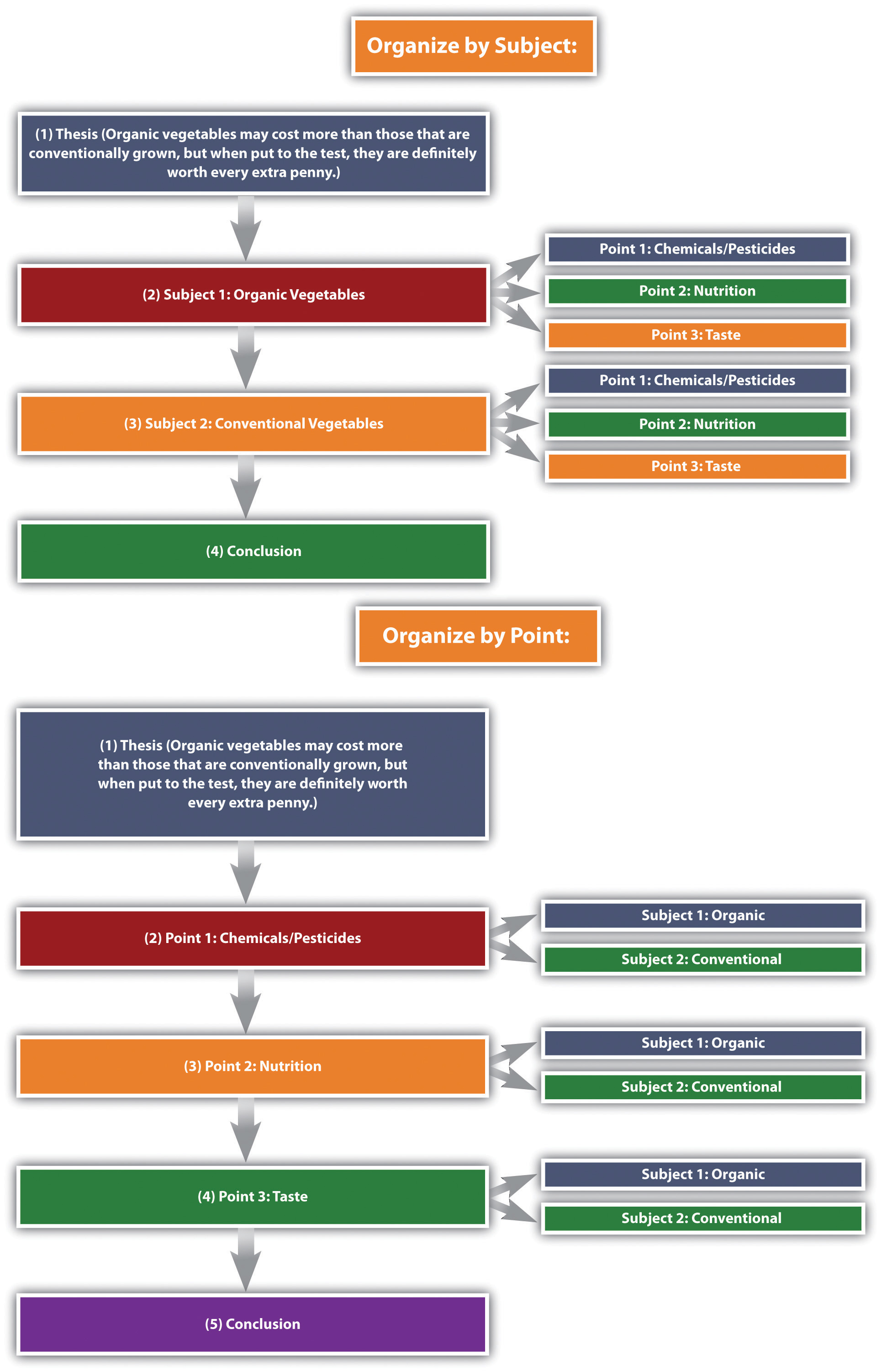

When comparing and contrasting in an essay, there are two main ways to structure your comparisons: the alternating method and the block method.

The alternating method

In the alternating method, you structure your text according to what aspect you’re comparing. You cover both your subjects side by side in terms of a specific point of comparison. Your text is structured like this:

One challenge teachers face is identifying and assisting students who are struggling without disrupting the rest of the class. In a traditional classroom environment, the teacher can easily identify when a student is struggling based on their demeanor in class or simply by regularly checking on students during exercises. They can then offer assistance quietly during the exercise or discuss it further after class. Meanwhile, in a Zoom-based class, the lack of physical presence makes it more difficult to pay attention to individual students’ responses and notice frustrations, and there is less flexibility to speak with students privately to offer assistance. In this case, therefore, the traditional classroom environment holds the advantage, although it appears likely that aiding students in a virtual classroom environment will become easier as the technology, and teachers’ familiarity with it, improves.

The block method

In the block method, you cover each of the overall subjects you’re comparing in a block. You say everything you have to say about your first subject, then discuss your second subject, making comparisons and contrasts back to the things you’ve already said about the first. Your text is structured like this:

- Point of comparison A

- Point of comparison B

The most commonly cited advantage of distance learning is the flexibility and accessibility it offers. Rather than being required to travel to a specific location every week (and to live near enough to feasibly do so), students can participate from anywhere with an internet connection. This allows not only for a wider geographical spread of students but for the possibility of studying while travelling. However, distance learning presents its own accessibility challenges; not all students have a stable internet connection and a computer or other device with which to participate in online classes, and less technologically literate students and teachers may struggle with the technical aspects of class participation. Furthermore, discomfort and distractions can hinder an individual student’s ability to engage with the class from home, creating divergent learning experiences for different students. Distance learning, then, seems to improve accessibility in some ways while representing a step backwards in others.

Note that these two methods can be combined; these two example paragraphs could both be part of the same essay, but it’s wise to use an essay outline to plan out which approach you’re taking in each paragraph.

Some essay prompts include the keywords “compare” and/or “contrast.” In these cases, an essay structured around comparing and contrasting is the appropriate response.

Comparing and contrasting is also a useful approach in all kinds of academic writing: You might compare different studies in a literature review, weigh up different arguments in an argumentative essay, or consider different theoretical approaches in a theoretical framework.

Your subjects might be very different or quite similar, but it’s important that there be meaningful grounds for comparison. You can probably describe many differences between a cat and a bicycle, but there isn’t really any connection between them to justify the comparison.

You’ll have to write a thesis statement explaining the central point you want to make in your essay, so be sure to know in advance what connects your subjects and makes them worth comparing.

Comparisons in essays are generally structured in one of two ways:

- The alternating method, where you compare your subjects side by side according to one specific aspect at a time.

- The block method, where you cover each subject separately in its entirety.

It’s also possible to combine both methods, for example by writing a full paragraph on each of your topics and then a final paragraph contrasting the two according to a specific metric.

Cite this Scribbr article

Caulfield, J. (2023, July 23). Comparing and Contrasting in an Essay | Tips & Examples. Scribbr. Retrieved March 25, 2024, from https://www.scribbr.com/academic-essay/compare-and-contrast/

OASIS: Writing Center

Writing a Paper: Comparing & Contrasting

A compare and contrast paper discusses the similarities and differences between two or more topics. The paper should contain an introduction with a thesis statement, a body where the comparisons and contrasts are discussed, and a conclusion.

Address Both Similarities and Differences

Because this is a compare and contrast paper, both the similarities and differences should be discussed. This will require analysis on your part, as some topics will appear to be quite similar, and you will have to work to find the differing elements.

Make Sure You Have a Clear Thesis Statement

Just like any other essay, a compare and contrast essay needs a thesis statement. The thesis statement should not only tell your reader what you will do, but it should also address the purpose and importance of comparing and contrasting the material.

Use Clear Transitions

Transitions are important in compare and contrast essays, where you will be moving frequently between different topics or perspectives.

- Examples of transitions and phrases for comparisons: as well, similar to, consistent with, likewise, too

- Examples of transitions and phrases for contrasts: on the other hand, however, although, differs, conversely, rather than.

For more information, check out our transitions page.

Structure Your Paper

Consider how you will present the information. You could present all of the similarities first and then present all of the differences. Or you could go point by point and show the similarity and difference of one point, then the similarity and difference for another point, and so on.

Include Analysis

It is tempting to just provide summary for this type of paper, but analysis will show the importance of the comparisons and contrasts. For instance, if you are comparing two articles on the topic of the nursing shortage, help us understand what this will achieve. Did you find consensus between the articles that will support a certain action step for people in the field? Did you find discrepancies between the two that point to the need for further investigation?

Make Analogous Comparisons

When drawing comparisons or making contrasts, be sure you are dealing with similar aspects of each item. To use an old cliché, are you comparing apples to apples?

- Example of poor comparisons: Kubista studied the effects of a later start time on high school students, but Cook used a mixed methods approach. (This example does not compare similar items. It is not a clear contrast because the sentence does not discuss the same element of the articles. It is like comparing apples to oranges.)

- Example of analogous comparisons: Cook used a mixed methods approach, whereas Kubista used only quantitative methods. (Here, methods are clearly being compared, allowing the reader to understand the distinction.)

Walden University is a member of Adtalem Global Education, Inc. www.adtalem.com Walden University is certified to operate by SCHEV © 2024 Walden University LLC. All rights reserved.

Comparing and Contrasting

What this handout is about

This handout will help you first to determine whether a particular assignment is asking for comparison/contrast and then to generate a list of similarities and differences, decide which similarities and differences to focus on, and organize your paper so that it will be clear and effective. It will also explain how you can (and why you should) develop a thesis that goes beyond “Thing A and Thing B are similar in many ways but different in others.”

Introduction

In your career as a student, you’ll encounter many different kinds of writing assignments, each with its own requirements. One of the most common is the comparison/contrast essay, in which you focus on the ways in which certain things or ideas—usually two of them—are similar to (this is the comparison) and/or different from (this is the contrast) one another. By assigning such essays, your instructors are encouraging you to make connections between texts or ideas, engage in critical thinking, and go beyond mere description or summary to generate interesting analysis: when you reflect on similarities and differences, you gain a deeper understanding of the items you are comparing, their relationship to each other, and what is most important about them.

Recognizing comparison/contrast in assignments

Some assignments use words—like compare, contrast, similarities, and differences—that make it easy for you to see that they are asking you to compare and/or contrast. Here are a few hypothetical examples:

- Compare and contrast Frye’s and Bartky’s accounts of oppression.

- Compare WWI to WWII, identifying similarities in the causes, development, and outcomes of the wars.

- Contrast Wordsworth and Coleridge; what are the major differences in their poetry?

Notice that some topics ask only for comparison, others only for contrast, and others for both.

But it’s not always so easy to tell whether an assignment is asking you to include comparison/contrast. And in some cases, comparison/contrast is only part of the essay—you begin by comparing and/or contrasting two or more things and then use what you’ve learned to construct an argument or evaluation. Consider these examples, noticing the language that is used to ask for the comparison/contrast and whether the comparison/contrast is only one part of a larger assignment:

- Choose a particular idea or theme, such as romantic love, death, or nature, and consider how it is treated in two Romantic poems.

- How do the different authors we have studied so far define and describe oppression?

- Compare Frye’s and Bartky’s accounts of oppression. What does each imply about women’s collusion in their own oppression? Which is more accurate?

- In the texts we’ve studied, soldiers who served in different wars offer differing accounts of their experiences and feelings both during and after the fighting. What commonalities are there in these accounts? What factors do you think are responsible for their differences?

You may want to check out our handout on understanding assignments for additional tips.

Using comparison/contrast for all kinds of writing projects

Sometimes you may want to use comparison/contrast techniques in your own pre-writing work to get ideas that you can later use for an argument, even if comparison/contrast isn’t an official requirement for the paper you’re writing. For example, if you wanted to argue that Frye’s account of oppression is better than both de Beauvoir’s and Bartky’s, comparing and contrasting the main arguments of those three authors might help you construct your evaluation—even though the topic may not have asked for comparison/contrast and the lists of similarities and differences you generate may not appear anywhere in the final draft of your paper.

Discovering similarities and differences

Making a Venn diagram or a chart can help you quickly and efficiently compare and contrast two or more things or ideas. To make a Venn diagram, simply draw some overlapping circles, one circle for each item you’re considering. In the central area where they overlap, list the traits the items have in common. Assign each of the areas that doesn’t overlap to one of the items; in those areas, you can list the traits that make the items different. Here’s a very simple example, using two pizza places:
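
The same idea can be sketched in code, where set intersection plays the role of the overlapping area and set difference plays the role of each non-overlapping area. This is only an illustration in Python: the function name `venn` is hypothetical, and the trait lists are assumptions loosely based on details mentioned elsewhere in this handout (fresh garlic, a lively versus a quiet atmosphere), not the contents of the original diagram.

```python
def venn(traits_a: set[str], traits_b: set[str]) -> dict[str, set[str]]:
    """Split two trait sets into the three regions of a two-circle Venn diagram."""
    return {
        "only_a": traits_a - traits_b,  # first circle, outside the overlap
        "both": traits_a & traits_b,    # central overlapping area
        "only_b": traits_b - traits_a,  # second circle, outside the overlap
    }


# Illustrative traits (assumed for this sketch).
peppers = {"fresh garlic topping", "easy to get to", "lively atmosphere"}
amante = {"fresh garlic topping", "easy to get to", "quiet atmosphere"}

regions = venn(peppers, amante)
for name, traits in regions.items():
    print(name, sorted(traits))
```

Listing the traits as sets first can make it easier to see at a glance whether the similarities or the differences dominate.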

To make a chart, figure out what criteria you want to focus on in comparing the items. Along the left side of the page, list each of the criteria. Across the top, list the names of the items. You should then have a box per item for each criterion; you can fill the boxes in and then survey what you’ve discovered.

Here’s an example, this time using three pizza places:
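
A chart like this can also be sketched as a small table in code. The Python sketch below puts criteria down the left side and item names across the top, as described above. The helper names `make_chart` and `render` are hypothetical, the choice of a third restaurant and of the criteria is an assumption, and only the two atmosphere entries come from descriptions in this handout; every other box is deliberately left empty (shown as `?`) rather than guessed.

```python
def make_chart(criteria: list[str], items: list[str]) -> dict[str, dict[str, str]]:
    """Create one empty box per item for each criterion."""
    return {criterion: {item: "" for item in items} for criterion in criteria}


def render(chart: dict[str, dict[str, str]], items: list[str]) -> str:
    """Format the chart as text: criteria down the left, items across the top."""
    cells = [cell for row in chart.values() for cell in row.values()]
    width = max(len(text) for text in [*chart, *items, *cells]) + 2
    lines = ["".join(head.ljust(width) for head in ["", *items])]
    for criterion, row in chart.items():
        # Boxes that have not been filled in yet are shown as "?".
        boxes = [row[item] or "?" for item in items]
        lines.append("".join(text.ljust(width) for text in [criterion, *boxes]))
    return "\n".join(line.rstrip() for line in lines)


# Item and criteria choices are assumptions for this sketch.
items = ["Pepper's", "Amante", "Papa John's"]
criteria = ["Atmosphere", "Ingredients", "Delivery"]

chart = make_chart(criteria, items)
chart["Atmosphere"]["Pepper's"] = "funky, lively"
chart["Atmosphere"]["Amante"] = "quiet"

print(render(chart, items))
```

The empty boxes are useful in their own right: they show which criteria still need research before the comparison is complete.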

As you generate points of comparison, consider the purpose and content of the assignment and the focus of the class. What do you think the professor wants you to learn by doing this comparison/contrast? How does it fit with what you have been studying so far and with the other assignments in the course? Are there any clues about what to focus on in the assignment itself?

Here are some general questions about different types of things you might have to compare. These are by no means complete or definitive lists; they’re just here to give you some ideas—you can generate your own questions for these and other types of comparison. You may want to begin by using the questions reporters traditionally ask: Who? What? Where? When? Why? How? If you’re talking about objects, you might also consider general properties like size, shape, color, sound, weight, taste, texture, smell, number, duration, and location.

Two historical periods or events

- When did they occur—do you know the date(s) and duration? What happened or changed during each? Why are they significant?

- What kinds of work did people do? What kinds of relationships did they have? What did they value?

- What kinds of governments were there? Who were important people involved?

- What caused events in these periods, and what consequences did they have later on?

Two ideas or theories

- What are they about?

- Did they originate at some particular time?

- Who created them? Who uses or defends them?

- What is the central focus, claim, or goal of each? What conclusions do they offer?

- How are they applied to situations/people/things/etc.?

- Which seems more plausible to you, and why? How broad is their scope?

- What kind of evidence is usually offered for them?

Two pieces of writing or art

- What are their titles? What do they describe or depict?

- What is their tone or mood? What is their form?

- Who created them? When were they created? Why do you think they were created as they were? What themes do they address?

- Do you think one is of higher quality or greater merit than the other(s)—and if so, why?

- For writing: what plot, characterization, setting, theme, tone, and type of narration are used?

Two people

- Where are they from? How old are they? What is the gender, race, class, etc. of each?

- What, if anything, are they known for? Do they have any relationship to each other?

- What are they like? What did/do they do? What do they believe? Why are they interesting?

- What stands out most about each of them?

Deciding what to focus on

By now you have probably generated a huge list of similarities and differences—congratulations! Next you must decide which of them are interesting, important, and relevant enough to be included in your paper. Ask yourself these questions:

- What’s relevant to the assignment?

- What’s relevant to the course?

- What’s interesting and informative?

- What matters to the argument you are going to make?

- What’s basic or central (and needs to be mentioned even if obvious)?

- Overall, what’s more important—the similarities or the differences?

Suppose that you are writing a paper comparing two novels. For most literature classes, the fact that they both use Caslon type (a kind of typeface, like the fonts you may use in your writing) is not going to be relevant, nor is the fact that one of them has a few illustrations and the other has none; literature classes are more likely to focus on subjects like characterization, plot, setting, the writer’s style and intentions, language, central themes, and so forth. However, if you were writing a paper for a class on typesetting or on how illustrations are used to enhance novels, the typeface and presence or absence of illustrations might be absolutely critical to include in your final paper.

Sometimes a particular point of comparison or contrast might be relevant but not terribly revealing or interesting. For example, if you are writing a paper about Wordsworth’s “Tintern Abbey” and Coleridge’s “Frost at Midnight,” pointing out that they both have nature as a central theme is relevant (comparisons of poetry often talk about themes) but not terribly interesting; your class has probably already had many discussions about the Romantic poets’ fondness for nature. Talking about the different ways nature is depicted or the different aspects of nature that are emphasized might be more interesting and show a more sophisticated understanding of the poems.

Your thesis

The thesis of your comparison/contrast paper is very important: it can help you create a focused argument and give your reader a road map so they don’t get lost in the sea of points you are about to make. As in any paper, you will want to replace vague reports of your general topic (for example, “This paper will compare and contrast two pizza places,” or “Pepper’s and Amante are similar in some ways and different in others,” or “Pepper’s and Amante are similar in many ways, but they have one major difference”) with something more detailed and specific. For example, you might say, “Pepper’s and Amante have similar prices and ingredients, but their atmospheres and willingness to deliver set them apart.”

Be careful, though: although this thesis is fairly specific and does propose a simple argument (that atmosphere and delivery make the two pizza places different), your instructor will often be looking for a bit more analysis. In this case, the obvious question is “So what? Why should anyone care that Pepper’s and Amante are different in this way?” One might also wonder why the writer chose those two particular pizza places to compare: why not Papa John’s, Domino’s, or Pizza Hut? Again, thinking about the context the class provides may help you answer such questions and make a stronger argument. Here’s a revision of the thesis mentioned earlier:

Pepper’s and Amante both offer a greater variety of ingredients than other Chapel Hill/Carrboro pizza places (and than any of the national chains), but the funky, lively atmosphere at Pepper’s makes it a better place to give visiting friends and family a taste of local culture.

You may find our handout on constructing thesis statements useful at this stage.

Organizing your paper

There are many different ways to organize a comparison/contrast essay. Here are two:

Subject-by-subject

Begin by saying everything you have to say about the first subject you are discussing, then move on and make all the points you want to make about the second subject (and after that, the third, and so on, if you’re comparing/contrasting more than two things). If the paper is short, you might be able to fit all of your points about each item into a single paragraph, but it’s more likely that you’d have several paragraphs per item. Using our pizza place comparison/contrast as an example, after the introduction, you might have a paragraph about the ingredients available at Pepper’s, a paragraph about its location, and a paragraph about its ambience. Then you’d have three similar paragraphs about Amante, followed by your conclusion.

The danger of this subject-by-subject organization is that your paper will simply be a list of points: a certain number of points (in my example, three) about one subject, then a certain number of points about another. This is usually not what college instructors are looking for in a paper—generally they want you to compare or contrast two or more things very directly, rather than just listing the traits the things have and leaving it up to the reader to reflect on how those traits are similar or different and why those similarities or differences matter. Thus, if you use the subject-by-subject form, you will probably want to have a very strong, analytical thesis and at least one body paragraph that ties all of your different points together.

A subject-by-subject structure can be a logical choice if you are writing what is sometimes called a “lens” comparison, in which you use one subject or item (which isn’t really your main topic) to better understand another item (which is). For example, you might be asked to compare a poem you’ve already covered thoroughly in class with one you are reading on your own. It might make sense to give a brief summary of your main ideas about the first poem (this would be your first subject, the “lens”), and then spend most of your paper discussing how those points are similar to or different from your ideas about the second.

Point-by-point

Rather than addressing things one subject at a time, you may wish to talk about one point of comparison at a time. There are two main ways this might play out, depending on how much you have to say about each of the things you are comparing. If you have just a little, you might, in a single paragraph, discuss how a certain point of comparison/contrast relates to all the items you are discussing. For example, I might describe, in one paragraph, what the prices are like at both Pepper’s and Amante; in the next paragraph, I might compare the ingredients available; in a third, I might contrast the atmospheres of the two restaurants.

If I had a bit more to say about the items I was comparing/contrasting, I might devote a whole paragraph to how each point relates to each item. For example, I might have a whole paragraph about the clientele at Pepper’s, followed by a whole paragraph about the clientele at Amante; then I would move on and do two more paragraphs discussing my next point of comparison/contrast—like the ingredients available at each restaurant.

There are no hard and fast rules about organizing a comparison/contrast paper, of course. Just be sure that your reader can easily tell what’s going on! Be aware, too, of the placement of your different points. If you are writing a comparison/contrast in service of an argument, keep in mind that the last point you make is the one you are leaving your reader with. For example, if I am trying to argue that Amante is better than Pepper’s, I should end with a contrast that leaves Amante sounding good, rather than with a point of comparison that I have to admit makes Pepper’s look better. If you’ve decided that the differences between the items you’re comparing/contrasting are most important, you’ll want to end with the differences—and vice versa, if the similarities seem most important to you.

Our handout on organization can help you write good topic sentences and transitions and make sure that you have a good overall structure in place for your paper.

Cue words and other tips

To help your reader keep track of where you are in the comparison/contrast, you’ll want to be sure that your transitions and topic sentences are especially strong. Your thesis should already have given the reader an idea of the points you’ll be making and the organization you’ll be using, but you can help them out with some extra cues. The following words may be helpful to you in signaling your intentions:

- like, similar to, also, unlike, similarly, in the same way, likewise, again, compared to, in contrast, in like manner, contrasted with, on the contrary, however, although, yet, even though, still, but, nevertheless, conversely, at the same time, regardless, despite, while, on the one hand … on the other hand.

For example, you might have a topic sentence like one of these:

- Compared to Pepper’s, Amante is quiet.

- Like Amante, Pepper’s offers fresh garlic as a topping.

- Despite their different locations (downtown Chapel Hill and downtown Carrboro), Pepper’s and Amante are both fairly easy to get to.

You may reproduce it for non-commercial use if you use the entire handout and attribute the source: The Writing Center, University of North Carolina at Chapel Hill

Make a Gift

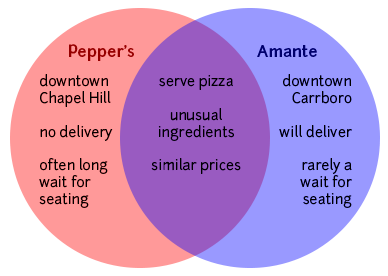

Join thousands of product people at Insight Out Conf on April 11. Register free.

Insights hub solutions

Analyze data

Uncover deep customer insights with fast, powerful features, store insights, curate and manage insights in one searchable platform, scale research, unlock the potential of customer insights at enterprise scale.

Featured reads

Tips and tricks

Make magic with your customer data in Dovetail

Four ways Dovetail helps Product Managers master continuous product discovery

Product updates

Dovetail retro: our biggest releases from the past year

Events and videos

© Dovetail Research Pty. Ltd.

What is comparative analysis? A complete guide

Last updated 18 April 2023. Reviewed by Jean Kaluza.

Comparative analysis is a valuable tool for acquiring deep insights into your organization’s processes, products, and services so you can continuously improve them.

Similarly, if you want to streamline, price appropriately, and ultimately be a market leader, you’ll likely need to draw on comparative analyses quite often.

When faced with multiple options or solutions to a given problem, a thorough comparative analysis can help you compare and contrast your options and make a clear, informed decision.

If you want to get up to speed on conducting a comparative analysis or need a refresher, here’s your guide.

What exactly is comparative analysis?

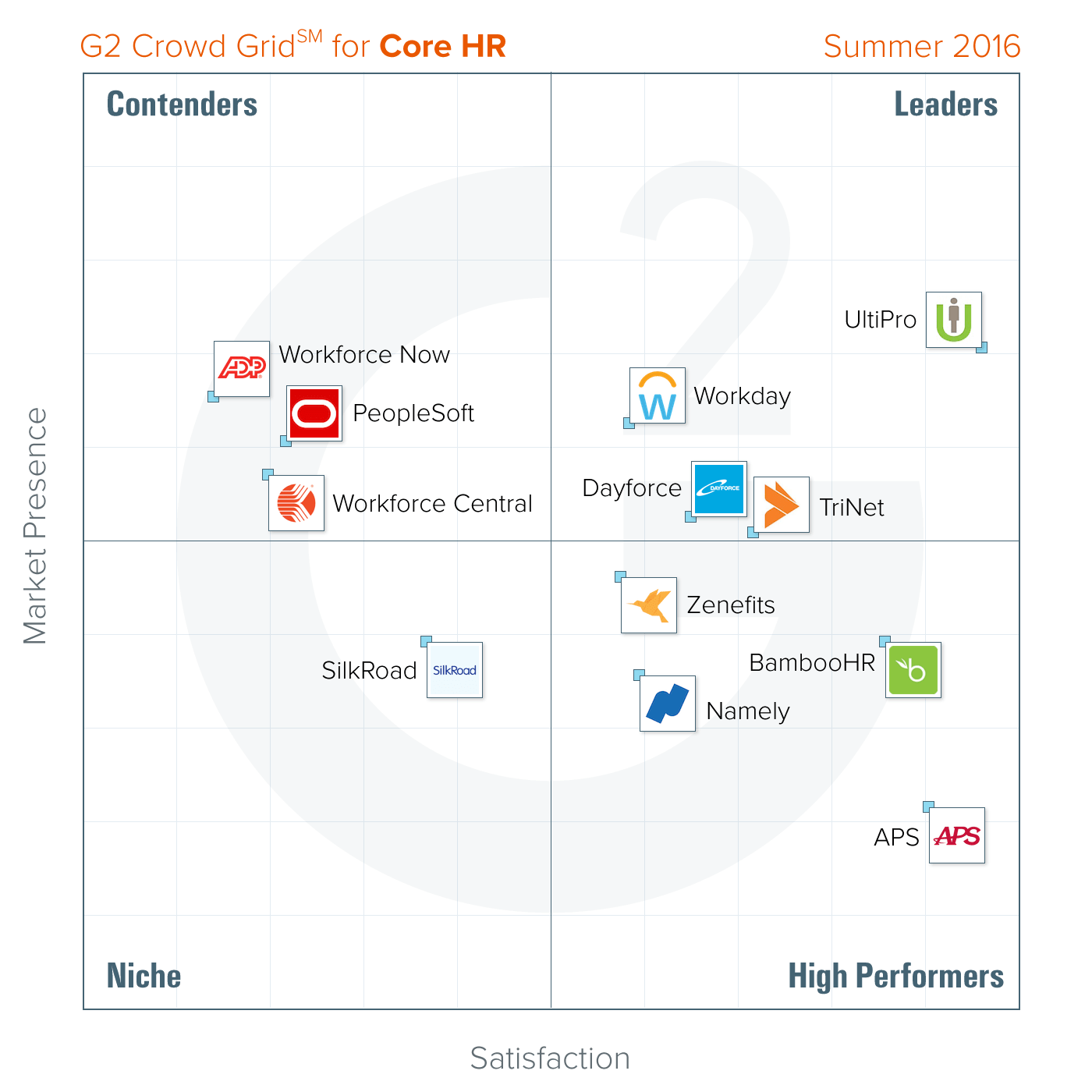

A comparative analysis systematically compares two or more things side by side to pinpoint their similarities and differences. The focus of the investigation might be conceptual (a particular problem, idea, or theory) or something more tangible, like two different data sets.

For instance, you could use comparative analysis to investigate how your product features measure up to the competition.

After a successful comparative analysis, you should be able to identify strengths and weaknesses and clearly understand which product is more effective.

You could also use comparative analysis to examine different methods of producing that product and determine which way is most efficient and profitable.

The potential applications for comparative analysis in everyday business are almost unlimited. That said, a comparative analysis is most commonly used to examine:

- Emerging trends and opportunities (new technologies, marketing)

- Competitor strategies

- Financial health

- Effects of trends on a target audience

- Why is comparative analysis so important?

Comparative analysis can help narrow your focus so your business pursues the most meaningful opportunities rather than attempting dozens of improvements simultaneously.

A comparative approach also helps frame up data to illuminate interrelationships. For example, comparative research might reveal nuanced relationships or critical contexts behind specific processes or dependencies that wouldn’t be well-understood without the research.

For instance, if your business compares the cost of producing several existing products relative to which ones have historically sold well, that should provide helpful information once you’re ready to look at developing new products or features.

- Comparative vs. competitive analysis—what’s the difference?

Comparative analysis is generally divided into three subtypes, each using quantitative or qualitative data and then extending the findings to a larger group. These include:

- Pattern analysis: identifying patterns or recurrences of trends and behavior across large data sets.

- Data filtering: analyzing large data sets to extract an underlying subset of information. It may involve rearranging, excluding, and apportioning comparative data to fit different criteria.

- Decision tree: flowcharting to visually map and assess potential outcomes, costs, and consequences.

In contrast, competitive analysis is a type of comparative analysis in which you deeply research one or more of your industry competitors. In this case, you’re using qualitative research to explore what the competition is up to across one or more dimensions.

For example:

- Service delivery: metrics like the Net Promoter Score indicate customer satisfaction levels.

- Market position: the share of the market that the competition has captured.

- Brand reputation: how well-known or recognized your competitors are within their target market.

- Tips for optimizing your comparative analysis

Conduct original research

Thorough, independent research is a significant asset when doing comparative analysis. It provides evidence to support your findings and may present a perspective or angle not considered previously.

Make analysis routine

To get the maximum benefit from comparative research, make it a regular practice, and establish a cadence you can realistically stick to. Some business areas you could plan to analyze regularly include:

- Profitability

- Competition

Experiment with controlled and uncontrolled variables

In addition to simply comparing and contrasting, explore how different variables might affect your outcomes.

For example, a controllable variable would be offering a seasonal feature like a shopping bot to assist in holiday shopping or raising or lowering the selling price of a product.

Uncontrollable variables include weather, changing regulations, the current political climate, or global pandemics.

Put equal effort into each point of comparison

Most people enter into comparative research with a particular idea or hypothesis already in mind to validate. For instance, you might try to prove that launching a new service is worthwhile. So, you may be disappointed if your analysis results don’t support your plan.

However, in any comparative analysis, try to maintain an unbiased approach by spending equal time debating the merits and drawbacks of any decision. Ultimately, this will be a more practical and sustainable long-term approach for your business than focusing only on the evidence that favors your argument or strategy.

Writing a comparative analysis in five steps

To put together a coherent, insightful analysis that goes beyond a list of pros and cons or similarities and differences, try organizing the information into these five components:

1. Frame of reference

Here is where you provide context. First, what driving idea or problem is your research anchored in? Then, for added substance, cite existing research or insights from a subject matter expert, such as a thought leader in marketing, startup growth, or investment.

2. Grounds for comparison

Why have you chosen to examine the two things you’re analyzing instead of focusing on two entirely different things? What are you hoping to accomplish?

3. Thesis

What argument or choice are you advocating for? What will be the before and after effects of going with either decision? What do you anticipate happening with and without this approach?

For example, “If we release an AI feature for our shopping cart, we will have an edge over the rest of the market before the holiday season.” The finished comparative analysis will weigh all the pros and cons of building the new, expensive AI feature, including variables like how “intelligent” it will be, what it “pushes” customers to use, and how much work it takes off the customer service team's plate.

Ultimately, you will gauge whether building an AI feature is the right plan for your e-commerce shop.

4. Organize the scheme

Typically, there are two ways to organize a comparative analysis report. First, you can discuss everything about comparison point “A” and then go into everything about point “B.” Or, you can alternate back and forth between points “A” and “B,” sometimes referred to as point-by-point analysis.

Using the AI feature as an example again, you could cover all the pros and cons of building and maintaining the AI feature, then discuss the benefits and drawbacks of going without it. Or you could compare and contrast each aspect of the AI feature, one at a time: for example, a side-by-side comparison of shopping with the AI feature and shopping without it, before proceeding to another point of differentiation.

5. Connect the dots

Tie it all together in a way that either confirms or disproves your hypothesis.

For instance, “Building the AI bot would allow our customer service team to save 12% on returns in Q3 while offering optimizations and savings in future strategies. However, it would also increase the product development budget by 43% in both Q1 and Q2. Our budget for product development won’t increase again until series 3 of funding is reached, so despite its potential, we will hold off building the bot until funding is secured and more opportunities and benefits can be proved effective.”


How to Do Comparative Analysis in Research (Examples)

Comparative analysis is a method widely used in social science. It compares two or more items with the aim of uncovering new ideas about them. It often compares and contrasts social structures and processes around the world to grasp general patterns. Comparative analysis tries to understand, study, and explain every element of the data being compared.

We often compare and contrast in daily life, so it is natural to compare and contrast cultures and human societies. We often hear statements such as ‘our culture is better than theirs’ or ‘their lifestyle is better than ours’. In social science, scholars have compared primitive, barbarian, civilized, and modern societies in order to understand the evolutionary changes that happen to a society and its people. The method is used not only to understand evolutionary processes but also to identify the differences, changes, and connections between societies.

Most social scientists are involved in comparative analysis. Macfarlane observed that in history comparisons are usually made across time, whereas in the other social sciences they are made predominantly across space. The historian takes their own society, compares it with a past society, and analyzes how far the two differ.

The comparative method of social research is a product of 19th-century sociology and social anthropology. Sociologists such as Emile Durkheim, Herbert Spencer, and Max Weber used comparative analysis in their works. For example, Max Weber compared the Protestants of Europe with Catholics, and also with other religions such as Islam, Hinduism, and Confucianism.

A systematic comparison involves several elements of the method.

1. Methods of comparison

In social science, comparisons can be made in different ways, depending on the topic and the field of study. Emile Durkheim, for example, compared societies in terms of organic solidarity and mechanical solidarity. He also described three different approaches to the comparative method:

- The first approach is to select one particular society in a fixed period. By doing so, we can identify the relationships, connections, and differences that exist within that society alone, such as its religious practices, traditions, laws, and norms.

- The second approach is to consider several societies that share common or similar characteristics but vary in some ways. We can select societies from a specific period, or societies from different periods. For example, we can take European and American societies in the 20th century (which share broadly similar characteristics) and compare and contrast them in terms of law, custom, tradition, and so on.

- The third approach is to take different societies from different times that may share some characteristics or may show revolutionary changes. For example, we can compare modern and primitive societies, which reveals revolutionary social change.

2. The unit of comparison

We cannot compare every aspect of society, and many things simply cannot be compared. The success of the comparative method depends on the unit or element selected for comparison. We can only compare things that have some attributes in common. For example, we can compare the existing family system in America with the existing family system in Europe, but we cannot meaningfully compare food habits in China with the divorce rate in America. So the next thing to remember is the unit of comparison, which must be selected with the utmost care.

3. The motive of comparison

Comparative analysis is one of several methods of study available to the social scientist. Researchers who use the comparative method must know on what grounds they are adopting it. They have to consider its strengths, limitations, and weaknesses, and they must know how to carry out the analysis.

Steps of the comparative method

1. Setting up of a unit of comparison

As mentioned earlier, the first step is to determine the unit of comparison for your study, considering all of its dimensions. This is where you set out the two things you need to compare so that you can analyze them properly. It is not an easy step; it has to be done systematically and scientifically, with proper methods and techniques. You have to define your objectives and variables, and either make assumptions, ask yourself what you need to study, or form a hypothesis for your analysis.

The best frames of reference are built from specific sources rather than your own thoughts or observations. To build one, you can select attributes of society such as marriage, law, customs, and norms; doing so makes it easier to compare and contrast the two societies you selected. You can set questions such as: Are the marriage practices of Catholics different from those of Protestants? Do men and women have an equal voice in their choice of mate? You can set as many questions as you want, because they help uncover the truth about the topic. A comparative analysis must have such attributes to study, and a social scientist who wishes to compare must develop the research questions that come to mind. A study without them is unlikely to be fruitful.

2. Grounds of comparison

The grounds of comparison should be understandable to the reader. You must explain why you selected these units for your comparison. It is natural for a reader to ask why you chose this unit rather than another, and what the reason is for choosing this particular society. If a social scientist chooses primitive Asian society and primitive Australian society for comparison, he or she must explain the grounds of comparison to readers. The comparison should be self-explanatory, without complications.

If you choose two particular societies for your comparative analysis, you must tell the reader why you chose them.

3. Report or thesis

The main element of the comparative analysis is the thesis or report. It is the most important element because it must contain your entire frame of reference: your research questions, the objectives of your topic, the characteristics of your two units of comparison, the variables in your study, and, last but not least, your findings and conclusion. The findings must be self-explanatory, so that the reader understands to what extent the units are connected and how they differ. For example, in his theory of the division of labour, Emile Durkheim distinguished mechanical solidarity from organic solidarity, associating primitive society with mechanical solidarity and modern society with organic solidarity. In the same way, you have to state your findings in the thesis.

4. Relationship and linking one to another

Your paper must link each point in the argument; without such links, the reader cannot follow the logical progression of your analysis. In a comparative analysis, you compare ‘x’ and ‘y’ (the two units or things in your comparison). To link them, you can use expressions such as likewise, similarly, and on the contrary. For example, in a comparison between primitive and modern society we can say: ‘In primitive society the division of labour is based on gender and age; on the contrary (or on the other hand), in modern society the division of labour is based on a person's skill and knowledge.’

Demerits of comparison

Comparative analysis is not always successful; it has limitations. Its widespread use can easily give the impression that it is a firmly established, smooth, and unproblematic method of investigation which, because of its apparent logical status, can produce reliable knowledge once some technical preconditions are adequately met.

Perhaps the most fundamental issue concerns the independence of the units chosen for comparison. When different kinds of entities are compared, there is often an underlying, implicit assumption that they are independent, and a silent tendency to disregard the mutual influences among the units.

Another basic issue with broad ramifications concerns the choice of the units being compared. Far from being an innocent or simple task, the choice of comparison units is a critical and tricky matter. The problem is that, in such studies, the descriptions of the cases chosen for comparison with the principal one tend to become overly simplified, superficial, and stylised, with distorted arguments and conclusions as a result.

Nevertheless, comparative analysis remains a method with considerable benefits, chiefly because it can make us recognize the limits of our own thinking and guard against the weaknesses and harmful consequences of localism and provincialism. We may still have something to learn from historians’ hesitation in using comparison and from their respect for the uniqueness of contexts and the histories of peoples. Above all, through comparison we discover the underlying and previously undiscovered connections and differences that exist in society.


Sociology Group


Comparison in Scientific Research: Uncovering statistically significant relationships

by Anthony Carpi, Ph.D., Anne E. Egger, Ph.D.


Did you know that when Europeans first saw chimpanzees, they thought the animals were hairy, adult humans with stunted growth? A study of chimpanzees paved the way for comparison to be recognized as an important research method. Later, Charles Darwin and others used this comparative research method in the development of the theory of evolution.

Comparison is used to determine and quantify relationships between two or more variables by observing different groups that either by choice or circumstance are exposed to different treatments.

Comparison includes both retrospective studies that look at events that have already occurred, and prospective studies, that examine variables from the present forward.

Comparative research is similar to experimentation in that it involves comparing a treatment group to a control, but it differs in that the treatment is observed rather than being consciously imposed due to ethical concerns, or because it is not possible, such as in a retrospective study.

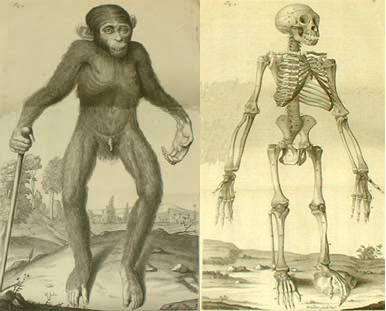

Anyone who has stared at a chimpanzee in a zoo (Figure 1) has probably wondered about the animal's similarity to humans. Chimps make facial expressions that resemble humans, use their hands in much the same way we do, are adept at using different objects as tools, and even laugh when they are tickled. It may not be surprising to learn then that when the first captured chimpanzees were brought to Europe in the 17th century, people were confused, labeling the animals "pygmies" and speculating that they were stunted versions of "full-grown" humans. A London physician named Edward Tyson obtained a "pygmie" that had died of an infection shortly after arriving in London, and began a systematic study of the animal that cataloged the differences between chimpanzees and humans, thus helping to establish comparative research as a scientific method.

Figure 1: A chimpanzee

- A brief history of comparative methods

In 1698, Tyson, a member of the Royal Society of London, began a detailed dissection of the "pygmie" he had obtained and published his findings in the 1699 work: Orang-Outang, sive Homo Sylvestris: or, the Anatomy of a Pygmie Compared with that of a Monkey, an Ape, and a Man. The title of the work further reflects the misconception that existed at the time – Tyson did not use the term Orang-Outang in its modern sense to refer to the orangutan; he used it in its literal translation from the Malay language as "man of the woods," as that is how the chimps were viewed.

Tyson took great care in his dissection. He precisely measured and compared a number of anatomical variables such as brain size of the "pygmie," ape, and human. He recorded his measurements of the "pygmie," even down to the direction in which the animal's hair grew: "The tendency of the Hair of all of the Body was downwards; but only from the Wrists to the Elbows 'twas upwards" (Russell, 1967). Aided by William Cowper, Tyson made drawings of various anatomical structures, taking great care to accurately depict the dimensions of these structures so that they could be compared to those in humans (Figure 2). His systematic comparative study of the dimensions of anatomical structures in the chimp, ape, and human led him to state:

in the Organization of abundance of its Parts, it more approached to the Structure of the same in Men: But where it differs from a Man, there it resembles plainly the Common Ape, more than any other Animal. (Russell, 1967)

Tyson's comparative studies proved exceptionally accurate and his research was used by others, including Thomas Henry Huxley in Evidence as to Man's Place in Nature (1863) and Charles Darwin in The Descent of Man (1871).

Figure 2: Edward Tyson's drawing of the external appearance of a "pygmie" (left) and the animal's skeleton (right) from The Anatomy of a Pygmie Compared with that of a Monkey, an Ape, and a Man from the second edition, London, printed for T. Osborne, 1751.

Tyson's methodical and scientific approach to anatomical dissection contributed to the development of evolutionary theory and helped establish the field of comparative anatomy. Further, Tyson's work helps to highlight the importance of comparison as a scientific research method.

- Comparison as a scientific research method

Comparative research represents one approach in the spectrum of scientific research methods and in some ways is a hybrid of other methods, drawing on aspects of both experimental science (see our Experimentation in Science module) and descriptive research (see our Description in Science module). Similar to experimentation, comparison seeks to decipher the relationship between two or more variables by documenting observed differences and similarities between two or more subjects or groups. In contrast to experimentation, the comparative researcher does not subject one of those groups to a treatment, but rather observes a group that either by choice or circumstance has been subject to a treatment. Thus comparison involves observation in a more "natural" setting, not subject to experimental confines, and in this way evokes similarities with description.

Importantly, the simple comparison of two variables or objects is not comparative research. Tyson's work would not have been considered scientific research if he had simply noted that "pygmies" looked like humans without measuring bone lengths and hair growth patterns. Instead, comparative research involves the systematic cataloging of the nature and/or behavior of two or more variables, and the quantification of the relationship between them.

Figure 3: Skeleton of the juvenile chimpanzee dissected by Edward Tyson, currently displayed at the Natural History Museum, London.

While the choice of which research method to use is a personal decision based in part on the training of the researchers conducting the study, there are a number of scenarios in which comparative research would likely be the primary choice.

- The first scenario is one in which the scientist is not trying to measure a response to change, but rather he or she may be trying to understand the similarities and differences between two subjects. For example, Tyson was not observing a change in his "pygmie" in response to an experimental treatment. Instead, his research was a comparison of the unknown "pygmie" to humans and apes in order to determine the relationship between them.

- A second scenario in which comparative studies are common is when the physical scale or timeline of a question may prevent experimentation. For example, in the field of paleoclimatology, researchers have compared cores taken from sediments deposited millions of years ago in the world's oceans to see if the sedimentary composition is similar across all oceans or differs according to geographic location. Because the sediments in these cores were deposited millions of years ago, it would be impossible to obtain these results through the experimental method. Research designed to look at past events such as sediment cores deposited millions of years ago is referred to as retrospective research.

- A third common comparative scenario is when the ethical implications of an experimental treatment preclude an experimental design. Researchers who study the toxicity of environmental pollutants or the spread of disease in humans are precluded from purposefully exposing a group of individuals to the toxin or disease for ethical reasons. In these situations, researchers would set up a comparative study by identifying individuals who have been accidentally exposed to the pollutant or disease and comparing their symptoms to those of a control group of people who were not exposed. Research designed to look at events from the present into the future, such as a study looking at the development of symptoms in individuals exposed to a pollutant, is referred to as prospective research.

Comparative science was significantly strengthened in the late 19th and early 20th century with the introduction of modern statistical methods. These were used to quantify the association between variables (see our Statistics in Science module). Today, statistical methods are critical for quantifying the nature of relationships examined in many comparative studies. The outcome of comparative research is often presented in one of the following ways: as a probability, as a statement of statistical significance, or as a declaration of risk. For example, in 2007 Kristensen and Bjerkedal showed that there is a statistically significant relationship (at the 95% confidence level) between birth order and IQ by comparing test scores of first-born children to those of their younger siblings (Kristensen & Bjerkedal, 2007). And numerous studies have contributed to the determination that the risk of developing lung cancer is 30 times greater in smokers than in nonsmokers (NCI, 1997).
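A declaration of risk of this kind is usually a relative risk: the incidence of an outcome in the exposed group divided by its incidence in the unexposed group. The short Python sketch below illustrates the arithmetic only. The function name `relative_risk` and all of the counts are hypothetical, chosen so that the ratio comes out at 30; they are not taken from the NCI report or from any study.

```python
def relative_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Incidence in the exposed group divided by incidence in the unexposed group."""
    if unexposed_cases == 0:
        raise ValueError("relative risk is undefined when the unexposed group has no cases")
    risk_exposed = exposed_cases / exposed_total
    risk_unexposed = unexposed_cases / unexposed_total
    return risk_exposed / risk_unexposed


# Hypothetical cohort (illustrative numbers only, not real data):
# 30 cases among 1,000 smokers versus 1 case among 1,000 nonsmokers.
rr = relative_risk(exposed_cases=30, exposed_total=1000,
                   unexposed_cases=1, unexposed_total=1000)
print(f"relative risk = {rr:.1f}")
```

A relative risk of 1 would mean the two groups have the same incidence; values well above 1 indicate that the outcome is more common in the exposed group.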


- Comparison in practice: The case of cigarettes

In 1919, Dr. George Dock, chairman of the Department of Medicine at Barnes Hospital in St. Louis, asked all of the third- and fourth-year medical students at the teaching hospital to observe an autopsy of a man with a disease so rare, he claimed, that most of the students would likely never see another case of it in their careers. With the medical students gathered around, the physicians conducting the autopsy observed that the patient's lungs were speckled with large dark masses of cells that had caused extensive damage to the lung tissue and had forced the airways to close and collapse. Dr. Alton Ochsner, one of the students who observed the autopsy, would write years later that "I did not see another case until 1936, seventeen years later, when in a period of six months, I saw nine patients with cancer of the lung. – All the afflicted patients were men who smoked heavily and had smoked since World War I" (Meyer, 1992).

Figure 4: Image from a stereoptic card showing a woman smoking a cigarette circa 1900

The American physician Dr. Isaac Adler was, in fact, the first scientist to propose a link between cigarette smoking and lung cancer in 1912, based on his observation that lung cancer patients often reported that they were smokers. Adler's observations, however, were anecdotal, and provided no scientific evidence toward demonstrating a relationship. The German epidemiologist Franz Müller is credited with the first case-control study of smoking and lung cancer in the 1930s. Müller sent a survey to the relatives of individuals who had died of cancer, and asked them about the smoking habits of the deceased. Based on the responses he received, Müller reported a higher incidence of lung cancer among heavy smokers compared to light smokers. However, the study had a number of problems. First, it relied on the memory of relatives of deceased individuals rather than first-hand observations, and second, no statistical association was made. Soon after this, the tobacco industry began to sponsor research with the biased goal of repudiating negative health claims against cigarettes (see our Scientific Institutions and Societies module for more information on sponsored research).

Beginning in the 1950s, several well-controlled comparative studies were initiated. In 1950, Ernest Wynder and Evarts Graham published a retrospective study comparing the smoking habits of 605 hospital patients with lung cancer to 780 hospital patients with other diseases (Wynder & Graham, 1950). Their study showed that 1.3% of lung cancer patients were nonsmokers while 14.6% of patients with other diseases were nonsmokers. In addition, 51.2% of lung cancer patients were "excessive" smokers while only 19.1% of other patients were excessive smokers. Both of these comparisons proved to be statistically significant differences. The statisticians who analyzed the data concluded:

when the nonsmokers and the total of the high smoking classes of patients with lung cancer are compared with patients who have other diseases, we can reject the null hypothesis that smoking has no effect on the induction of cancer of the lungs.
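To see roughly how a comparison like this can be tested, the following Python sketch runs a Pearson chi-square test on a 2×2 table of nonsmokers versus smokers. It is an illustration under stated assumptions, not a reconstruction of the original analysis: the counts are approximations obtained by rounding the reported percentages (1.3% of 605 and 14.6% of 780), all smokers are pooled into one category, and the function name `two_by_two_test` is hypothetical. The statisticians quoted above compared different smoking classes, so their figures would differ.

```python
import math


def two_by_two_test(a, b, c, d):
    """Odds ratio and Pearson chi-square test (1 degree of freedom, no
    continuity correction) for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    odds_ratio = (a * d) / (b * c)
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, the chi-square upper tail equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return odds_ratio, chi2, p_value


# Group sizes reported in the text.
cases_total, controls_total = 605, 780

# Approximate counts recovered from the reported percentages (an assumption).
cases_nonsmokers = round(0.013 * cases_total)        # lung cancer patients
controls_nonsmokers = round(0.146 * controls_total)  # patients with other diseases
cases_smokers = cases_total - cases_nonsmokers
controls_smokers = controls_total - controls_nonsmokers

odds_ratio, chi2, p_value = two_by_two_test(
    cases_smokers, cases_nonsmokers, controls_smokers, controls_nonsmokers
)
print(f"odds ratio = {odds_ratio:.1f}, chi-square = {chi2:.1f}, p = {p_value:.2g}")

# At the 95% confidence level the null hypothesis is rejected when p < 0.05.
print("reject null hypothesis:", p_value < 0.05)
```

The null hypothesis here is the one named in the quotation: that smoking has no effect, so the proportion of smokers should be the same in both patient groups.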

Wynder and Graham also suggested that there might be a lag of ten years or more between the period of smoking in an individual and the onset of clinical symptoms of cancer. This would present a major challenge to researchers since any study that investigated the relationship between smoking and lung cancer in a prospective fashion would have to last many years.

Richard Doll and Austin Hill published a similar comparative study in 1950 in which they showed that there was a statistically higher incidence of smoking among lung cancer patients compared to patients with other diseases (Doll & Hill, 1950). In their discussion, Doll and Hill raise an interesting point regarding comparative research methods by saying,

This is not necessarily to state that smoking causes carcinoma of the lung. The association would occur if carcinoma of the lung caused people to smoke or if both attributes were end-effects of a common cause.

They go on to assert that because the habit of smoking was seen to develop before the onset of lung cancer, the argument that lung cancer leads to smoking can be rejected. They therefore conclude, "that smoking is a factor, and an important factor, in the production of carcinoma of the lung."

Despite this substantial evidence, both the tobacco industry and unbiased scientists raised objections, claiming that the retrospective research on smoking was "limited, inconclusive, and controversial." The industry stated that the studies published did not demonstrate cause and effect, but rather a spurious association between two variables. Dr. Wilhelm Hueper of the National Cancer Institute, a scientist with a long history of research into occupational causes of cancers, argued that the emphasis on cigarettes as the only cause of lung cancer would compromise research support for other causes of lung cancer. Ronald Fisher, a renowned statistician, also was opposed to the conclusions of Doll and others, purportedly because they promoted a "puritanical" view of smoking.

The tobacco industry mounted an extensive campaign of misinformation, sponsoring and then citing research that showed that smoking did not cause "cardiac pain" as a distraction from the studies that were being published regarding cigarettes and lung cancer. The industry also highlighted studies that showed that individuals who quit smoking suffered from mild depression, and they pointed to the fact that even some doctors themselves smoked cigarettes as evidence that cigarettes were not harmful (Figure 5).

Figure 5: Cigarette advertisement circa 1946.

While the scientific research began to impact health officials and some legislators, the industry's ad campaign was effective. The US Federal Trade Commission banned tobacco companies from making health claims about their products in 1955. However, more significant regulation was averted. An editorial that appeared in the New York Times in 1963 summed up the national sentiment when it stated that the tobacco industry made a "valid point," and the public should refrain from making a decision regarding cigarettes until further reports were issued by the US Surgeon General.

In 1951, Doll and Hill enrolled 40,000 British physicians in a prospective comparative study to examine the association between smoking and the development of lung cancer. In contrast to the retrospective studies that followed patients with lung cancer back in time, the prospective study was designed to follow the group forward in time. In 1952, Drs. E. Cuyler Hammond and Daniel Horn enrolled 187,783 white males in the United States in a similar prospective study. And in 1959, the American Cancer Society (ACS) began the first of two large-scale prospective studies of the association between smoking and the development of lung cancer. The first ACS study, named Cancer Prevention Study I, enrolled more than 1 million individuals and tracked their health, smoking and other lifestyle habits, development of diseases, cause of death, and life expectancy for almost 13 years (Garfinkel, 1985).

All of the studies demonstrated that smokers are at a higher risk of developing and dying from lung cancer than nonsmokers. The ACS study further showed that smokers have elevated rates of other pulmonary diseases, coronary artery disease, stroke, and cardiovascular problems. The two ACS Cancer Prevention Studies would eventually show that 52% of deaths among smokers enrolled in the studies were attributed to cigarettes.

In the second half of the 20th century, evidence from other scientific research methods would contribute multiple lines of evidence to the conclusion that cigarette smoke is a major cause of lung cancer:

- Descriptive studies of the pathology of lungs of deceased smokers would demonstrate that smoking causes significant physiological damage to the lungs.

- Experiments that exposed mice, rats, and other laboratory animals to cigarette smoke showed that it caused cancer in these animals (see our Experimentation in Science module for more information).

- Physiological models would help demonstrate the mechanism by which cigarette smoke causes cancer.

As evidence linking cigarette smoke to lung cancer and other diseases accumulated, the public, the legal community, and regulators slowly responded. In 1957, the US Surgeon General first acknowledged an association between smoking and lung cancer when a report was issued stating, "It is clear that there is an increasing and consistent body of evidence that excessive cigarette smoking is one of the causative factors in lung cancer." In 1965, over objections by the tobacco industry and the American Medical Association, which had just accepted a $10 million grant from the tobacco companies, the US Congress passed the Federal Cigarette Labeling and Advertising Act, which required that cigarette packs carry the warning: "Caution: Cigarette Smoking May Be Hazardous to Your Health." In 1967, the US Surgeon General issued a second report stating that cigarette smoking is the principal cause of lung cancer in the United States. While the tobacco companies found legal means to protect themselves for decades following this, in 1996, Brown and Williamson Tobacco Company was ordered to pay $750,000 in a tobacco liability lawsuit; it became the first liability award paid to an individual by a tobacco company.

- Comparison across disciplines

Comparative studies are used in a host of scientific disciplines, from anthropology to archaeology, comparative biology, epidemiology, psychology, and even forensic science. DNA fingerprinting, a technique used to incriminate or exonerate a suspect using biological evidence, is based on comparative science. In DNA fingerprinting, segments of DNA are isolated from a suspect and from biological evidence such as blood, semen, or other tissue left at a crime scene. Up to 20 different segments of DNA are compared between the suspect's DNA and the DNA found at the crime scene. If all of the segments match, the investigator can calculate the statistical probability that the DNA came from the suspect as opposed to someone else. Thus DNA matches are described in terms of a "1 in 1 million" or "1 in 1 billion" chance of error.
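The "1 in 1 million" style of figure can be illustrated with a short calculation. The sketch below is only an illustration under stated assumptions: the per-segment frequencies are invented, the function name `random_match_probability` is hypothetical, and the segments are assumed to be inherited independently so that their frequencies can simply be multiplied. Real forensic calculations rely on measured population data and are more involved.

```python
from math import prod

# Hypothetical values (assumptions, not real forensic data): the fraction of
# the general population expected to share the suspect's pattern at each of
# six DNA segments.
segment_frequencies = [0.10, 0.05, 0.20, 0.08, 0.10, 0.05]


def random_match_probability(frequencies):
    """Chance that an unrelated person matches at every segment,
    assuming the segments are independent of one another."""
    return prod(frequencies)


p = random_match_probability(segment_frequencies)
print(f"Chance of a coincidental match: about 1 in {round(1 / p):,}")
```

Because the frequencies are multiplied, each additional matching segment shrinks the chance of a coincidental match, which is why comparing many segments yields such small probabilities of error.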

Comparative methods are also commonly used in studies involving humans due to the ethical limits of experimental treatment. For example, in 2007, Petter Kristensen and Tor Bjerkedal published a study in which they compared the IQ of over 250,000 male Norwegians in the military (Kristensen & Bjerkedal, 2007). The researchers found a significant relationship between birth order and IQ, where the average IQ of first-born male children was approximately three points higher than the average IQ of the second-born male in the same family. The researchers further showed that this relationship was correlated with social rather than biological factors, as second-born males who grew up in families in which the first-born child died had average IQs similar to other first-born children. One might imagine a scenario in which this type of study could be carried out experimentally, for example, purposefully removing first-born male children from certain families, but the ethics of such an experiment preclude it from ever being conducted.

- Limitations of comparative methods

One of the primary limitations of comparative methods is the control of other variables that might influence a study. For example, as pointed out by Doll and Hill in 1950, the association between smoking and cancer deaths could have meant that: a) smoking caused lung cancer, b) lung cancer caused individuals to take up smoking, or c) a third unknown variable caused lung cancer AND caused individuals to smoke (Doll & Hill, 1950). As a result, comparative researchers often go to great lengths to choose two different study groups that are similar in almost all respects except for the treatment in question. In fact, many comparative studies in humans are carried out on identical twins for this exact reason. For example, in the field of tobacco research, dozens of comparative twin studies have been used to examine everything from the health effects of cigarette smoke to the genetic basis of addiction.
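Possibility (c) can be made concrete with a small worked example. The counts below are entirely hypothetical and were chosen so that a third factor drives both the exposure and the disease; the names `counts`, `risk`, and `risk_ratio` are illustrative, not taken from any study. The sketch compares the crude risk ratio with the risk ratio inside groups that are alike with respect to the third factor.

```python
# Hypothetical counts, invented for illustration only:
# (third_factor_present, exposed) -> (number with disease, group size)
counts = {
    (True, True): (40, 80),
    (True, False): (10, 20),
    (False, True): (2, 20),
    (False, False): (8, 80),
}


def risk(groups):
    """Fraction of people with the disease across the given groups."""
    cases = sum(c for c, _ in groups)
    total = sum(n for _, n in groups)
    return cases / total


def risk_ratio(table):
    """Risk among the exposed divided by risk among the unexposed."""
    exposed = [v for (_, e), v in table.items() if e]
    unexposed = [v for (_, e), v in table.items() if not e]
    return risk(exposed) / risk(unexposed)


# Crude comparison: ignores the third factor entirely.
crude = risk_ratio(counts)

# Stratified comparison: compare only people who share the same
# third-factor status.
stratified = {
    z: risk_ratio({k: v for k, v in counts.items() if k[0] == z})
    for z in (True, False)
}

print(f"Crude risk ratio: {crude:.2f}")
for z, rr in stratified.items():
    print(f"Risk ratio when third factor is {z}: {rr:.2f}")
```

In these made-up data the exposed group appears to have more than twice the risk overall, yet within each stratum the exposed and unexposed have identical risk. Choosing study groups that are similar in all other respects, as in twin studies, serves the same purpose as the stratification here.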

- Comparison in modern practice

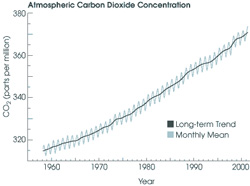

Figure 6: The "Keeling curve," a long-term record of atmospheric CO₂ concentration measured at the Mauna Loa Observatory (Keeling et al.). Although the annual oscillations represent natural, seasonal variations, the long-term increase means that concentrations are higher than they have been in 400,000 years. Graphic courtesy of NASA's Earth Observatory.

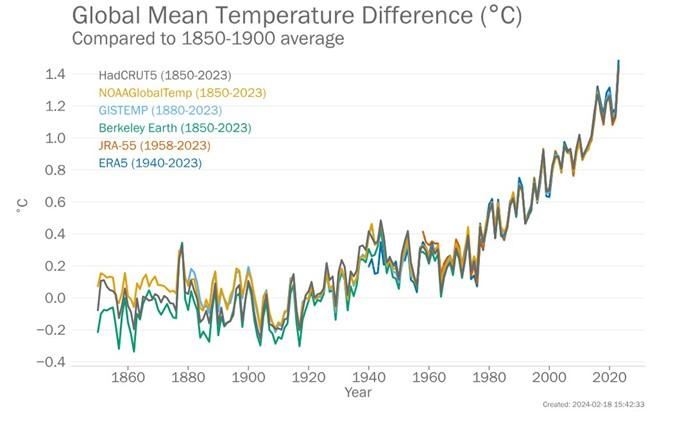

Despite the lessons learned during the debate that ensued over the possible effects of cigarette smoke, misconceptions still surround comparative science. For example, in the late 1950s, Charles Keeling, an oceanographer at the Scripps Institution of Oceanography, began to publish data he had gathered from a long-term descriptive study of atmospheric carbon dioxide (CO₂) levels at the Mauna Loa Observatory in Hawaii (Keeling, 1958). Keeling observed that atmospheric CO₂ levels were increasing at a rapid rate (Figure 6). He and other researchers began to suspect that rising CO₂ levels were associated with increasing global mean temperatures, and several comparative studies have since correlated rising CO₂ levels with rising global temperature (Keeling, 1970). Together with research from modeling studies (see our Modeling in Scientific Research module), this research has provided evidence for an association between global climate change and the burning of fossil fuels (which emits CO₂).

Yet in a move reminiscent of the fight launched by the tobacco companies, the oil and fossil fuel industry launched a major public relations campaign against climate change research. As late as 1989, scientists funded by the oil industry were producing reports that called the research on climate change "noisy junk science" (Roberts, 1989). As with the tobacco issue, challenges to early comparative studies tried to paint the method as less reliable than experimental methods. But the challenges actually strengthened the science by prompting more researchers to launch investigations, thus providing multiple lines of evidence supporting an association between atmospheric CO₂ concentrations and climate change. As a result, the culmination of multiple lines of scientific evidence prompted the Intergovernmental Panel on Climate Change organized by the United Nations to issue a report stating that "Warming of the climate system is unequivocal," and "Carbon dioxide is the most important anthropogenic greenhouse gas" (IPCC, 2007).

Comparative studies are a critical part of the spectrum of research methods currently used in science. They allow scientists to apply a treatment-control design in settings that preclude experimentation, and they can provide invaluable information about the relationships between variables. The intense scrutiny that comparison has undergone in the public arena due to cases involving cigarettes and climate change has actually strengthened the method by clarifying its role in science and emphasizing the reliability of data obtained from these studies.

How to Write a Comparison Essay

A comparison essay compares and contrasts two things. That is, it points out the similarities and differences (mostly focusing on the differences) of those two things. The two things usually belong to the same class (ex. two cities, two politicians, two sports, etc.). Relatively equal attention is given to the two subjects being compared. The essay may treat the two things objectively and impartially. Or it may be partial, favoring one thing over the other (ex. "American football is a sissy's game compared to rugby").

The important thing in any comparison essay is that the criteria for comparison should remain the same; that is, the same attributes should be compared. For example, if you are comparing an electric bulb lamp with a gas lamp, compare them both according to their physical characteristics, their history of development, and their operation.

Narrow Your Focus (in this essay, as in any essay). For example, if you compare two religions, focus on one particular aspect which you can discuss in depth and detail, e.g., sin in Buddhism vs. sin in Christianity, or salvation in two religions. Or if your topic is political, you might compare the Conservative attitude to old growth logging vs. the Green Party's attitude to old growth logging, or the Conservative attitude to the Persian Gulf War vs. the NDP attitude to the same war.

Each paragraph should deal with only one idea and deal with it thoroughly. Give adequate explanation and specific examples to support each idea. The first paragraph introduces the topic, captures the reader's attention, and provides a definite summary of the essay. It may be wise to end the first paragraph with a thesis statement that summarizes the main points of difference (or similarity). For example, "Submarines and warships differ not only in construction, but in their style of weapons and method of attack." This gives the reader a brief outline of your essay and a sense of what is to come. Each middle paragraph should begin with a topic sentence that summarizes the main idea of that paragraph (ex. "The musical styles of Van Halen and Steely Dan are as differing in texture as are broken glass and clear water"). An opening sentence like this that uses a metaphor or simile not only summarizes the paragraph but also captures the reader's attention and encourages further reading. Avoid a topic sentence that is too dull and too broad (ex. "There are many differences in the musical styles of Van Halen and Steely Dan").

VARY THE STRUCTURE

The structure of the comparison essay may vary. You may use simultaneous comparison structure in which the two things are compared together, feature by feature, point by point. For example, "The electric light bulb lasts 80 hours, while the gas lamp lasts only 20 hours . . . ." Or as in this example (comparing two American presidents):