
Predicting preoperative muscle invasion status for bladder cancer using computed tomography-based radiomics nomogram

The aim of the study is to assess the efficacy of the established computed tomography (CT)-based radiomics nomogram combined with radiomics and clinical features for predicting muscle invasion status in bladde...

Quantitative analysis of the eyelid curvature in patients with blepharoptosis

The aim of this study was to evaluate the ability of two novel eyelid curvature measurements to distinguish between normal eyes and different severities of blepharoptosis.

MRCT and CT in the diagnosis of pediatric disease imaging: assessing imaging performance and clinical effects

This study focused on analyzing the clinical value and effect of magnetic resonance imaging plus computed tomography (MRCT) and CT in the clinical diagnosis of cerebral palsy in children.

Imaging segmentation mechanism for rectal tumors using improved U-Net

In radiation therapy, segmentation of the cancerous region in magnetic resonance images (MRI) is a critical step. For rectal cancer, automatic segmentation of rectal tumors from MRI is a major challenge. There...

Kidney dynamic SPECT acquisition on a CZT swiveling-detector ring camera: an in vivo pilot study

Large-field-of-view CZT SPECT cameras with a ring geometry have been available for some years now. Thanks to their good sensitivity and high temporal resolution, general dynamic SPECT imaging may be performed more e...

Comparison of vestibular aqueduct visualization on computed tomography and magnetic resonance imaging in patients with Ménière’s disease

The vestibular aqueduct (VA) serves an essential role in homeostasis of the inner ear and pathogenesis of Ménière’s disease (MD). The bony VA can be clearly depicted by high-resolution computed tomography (HRC...

Deep learning model for pleural effusion detection via active learning and pseudo-labeling: a multisite study

The study aimed to develop and validate a deep learning-based Computer Aided Triage (CADt) algorithm for detecting pleural effusion in chest radiographs using an active learning (AL) framework. This is aimed a...

Lymph node metastasis prediction and biological pathway associations underlying DCE-MRI deep learning radiomics in invasive breast cancer

The relationship between the biological pathways related to deep learning radiomics (DLR) and lymph node metastasis (LNM) of breast cancer is still poorly understood. This study explored the value of DLR based...

Construction of a nomogram for predicting compensated cirrhosis with Wilson’s disease based on non-invasive indicators

Wilson’s disease (WD) often leads to liver fibrosis and cirrhosis, and early diagnosis of WD cirrhosis is essential. Currently, there are few non-invasive prediction models for WD cirrhosis. The purpose of thi...

Ultrasound-based deep learning radiomics model for differentiating benign, borderline, and malignant ovarian tumours: a multi-class classification exploratory study

Accurate preoperative identification of ovarian tumour subtypes is imperative for patients, as it enables physicians to tailor precise, individualized management strategies. We therefore developed an u...

The feasibility of half-dose contrast-enhanced scanning of brain tumours at 5.0 T: a preliminary study

This study investigated and compared the effects of Gd enhancement on brain tumours with a half-dose of contrast medium at 5.0 T and with a full dose at 3.0 T.

Role of radiomics in staging liver fibrosis: a meta-analysis

Fibrosis has important pathoetiological and prognostic roles in chronic liver disease. This study evaluates the role of radiomics in staging liver fibrosis.

Remote sensing image information extraction based on Compensated Fuzzy Neural Network and big data analytics

Medical imaging AI systems and big data analytics have attracted much attention from researchers in industry and academia. The application of medical imaging AI systems and big data analytics plays an important...

Amide proton transfer weighted and diffusion weighted imaging based radiomics classification algorithm for predicting 1p/19q co-deletion status in low grade gliomas

1p/19q co-deletion in low-grade gliomas (LGG, World Health Organization grade II and III) is of great significance in clinical decision making. We aim to use radiomics analysis to predict 1p/19q co-deletion in...

Radiomics-based discrimination of coronary chronic total occlusion and subtotal occlusion on coronary computed tomography angiography

Differentiating chronic total occlusion (CTO) from subtotal occlusion (SO) on coronary computed tomography angiography (CCTA) is often difficult. We developed a CCTA-based radiomics model to differen...

Deep learning-based image annotation for leukocyte segmentation and classification of blood cell morphology

The research focuses on the segmentation and classification of leukocytes, a crucial task in medical image analysis for diagnosing various diseases. The leukocyte dataset comprises four classes of images such ...

Deep transfer learning with fuzzy ensemble approach for the early detection of breast cancer

Breast cancer is a significant global health challenge, particularly affecting women, with higher mortality compared with other cancer types. Timely detection of such cancer types is crucial, and recent researc...

Correction: Evaluating the consistency in different methods for measuring left atrium diameters

The original article was published in BMC Medical Imaging 2024, 24:57.

Dynamic radiomics based on contrast-enhanced MRI for predicting microvascular invasion in hepatocellular carcinoma

To improve the prediction of microvascular invasion (MVI) in hepatocellular carcinoma (HCC) by applying dynamic radiomics to dynamic contrast-enhanced (DCE) MRI.

A survey of the impact of self-supervised pretraining for diagnostic tasks in medical X-ray, CT, MRI, and ultrasound

Self-supervised pretraining has been observed to be effective at improving feature representations for transfer learning, leveraging large amounts of unlabelled data. This review summarizes recent research int...

Renal interstitial fibrotic assessment using non-Gaussian diffusion kurtosis imaging in a rat model of hyperuricemia

To investigate the feasibility of Diffusion Kurtosis Imaging (DKI) in assessing renal interstitial fibrosis induced by hyperuricemia.

Preoperative prediction of microsatellite instability status in colorectal cancer based on a multiphasic enhanced CT radiomics nomogram model

To investigate the value of a nomogram model based on the combination of clinical-CT features and multiphasic enhanced CT radiomics for the preoperative prediction of the microsatellite instability (MSI) statu...

Dynamic contrast-enhanced MR imaging in identifying active anal fistula after surgery

Identifying residual or recurrent fistulas in the surgical region is challenging, although MR imaging offers a feasible approach. The aim was to use dynamic contrast-enhanced MR imaging (DCE-MRI) technology to distinguish ...

The use of individual-based FDG-PET volume of interest in predicting conversion from mild cognitive impairment to dementia

Based on a longitudinal cohort design, the aim of this study was to investigate whether individual-based ¹⁸F-fluorodeoxyglucose positron emission tomography (¹⁸F-FDG-PET) regional signals can predict dementia con...

The value of a neural network based on multi-scale feature fusion to ultrasound images for the differentiation in thyroid follicular neoplasms

The objective of this research was to create a deep learning network that utilizes multiscale images for the classification of follicular thyroid carcinoma (FTC) and follicular thyroid adenoma (FTA) through pr...

Correction: Evaluating renal iron overload in diabetes mellitus by blood oxygen level-dependent magnetic resonance imaging: a longitudinal experimental study

The original article was published in BMC Medical Imaging 2022, 22:200.

Classification of cognitive ability of healthy older individuals using resting-state functional connectivity magnetic resonance imaging and an extreme learning machine

Quantitative determination of the correlation between cognitive ability and functional biomarkers in the older brain is essential. To identify biomarkers associated with cognitive performance in the older, thi...

Characteristics of high frame frequency contrast-enhanced ultrasound in renal tumors

This study aims to analyze the characteristics of high frame rate contrast-enhanced ultrasound (H-CEUS) in renal lesions and to improve the differential diagnosis of renal tumors.

Comparison of ASL and DSC perfusion methods in the evaluation of response to treatment in patients with a history of treatment for malignant brain tumor

Perfusion MRI is of great benefit in the post-treatment evaluation of brain tumors. Interestingly, dynamic susceptibility contrast-enhanced (DSC) perfusion has taken its place in routine examination for this p...

Predictive value of cyst/tumor volume ratio of pituitary adenoma for tumor cell proliferation

MRI has been widely used to predict the preoperative proliferative potential of pituitary adenoma (PA). However, the relationship between the cyst/tumor volume ratio (C/T ratio) and the proliferative potential...

Malignancy diagnosis of liver lesion in contrast enhanced ultrasound using an end-to-end method based on deep learning

Contrast-enhanced ultrasound (CEUS) is considered an efficient tool for focal liver lesion characterization, given that it allows real-time scanning and provides dynamic tissue perfusion information. An accurate...

Contrast-enhanced to non-contrast-enhanced image translation to exploit a clinical data warehouse of T1-weighted brain MRI

Clinical data warehouses provide access to massive amounts of medical images, but these images are often heterogeneous. For instance, they can include images acquired both with and without the injection of a gad...

Altered trends of local brain function in classical trigeminal neuralgia patients after a single trigger pain

To investigate the altered trends of regional homogeneity (ReHo) based on time and frequency, and clarify the time-frequency characteristics of ReHo in 48 classical trigeminal neuralgia (CTN) patients after a ...

Development and validation of a multi-modal ultrasomics model to predict response to neoadjuvant chemoradiotherapy in locally advanced rectal cancer

To assess the performance of a multi-modal ultrasomics model in predicting the efficacy of neoadjuvant chemoradiotherapy (nCRT) in patients with locally advanced rectal cancer (LARC) and to compare it with the clinical model.

Combining radiomics with thyroid imaging reporting and data system to predict lateral cervical lymph node metastases in medullary thyroid cancer

Medullary Thyroid Carcinoma (MTC) is a rare type of thyroid cancer. Accurate prediction of lateral cervical lymph node metastases (LCLNM) in MTC patients can help guide surgical decisions and ensure that patie...

Unified deep learning models for enhanced lung cancer prediction with ResNet-50–101 and EfficientNet-B3 using DICOM images

Significant advancements in machine learning algorithms have the potential to aid in the early detection and prevention of cancer, a devastating disease. However, traditional research methods face obstacles, a...

Hybrid transformer convolutional neural network-based radiomics models for osteoporosis screening in routine CT

Early diagnosis of osteoporosis is crucial to prevent osteoporotic vertebral fracture and complications of spine surgery. We aimed to conduct a hybrid transformer convolutional neural network (HTCNN)-based rad...

Improving the diagnosis and treatment of congenital heart disease through the combination of three-dimensional echocardiography and image guided surgery

The paper aimed to address the accuracy limitations of traditional two-dimensional ultrasound and surgical procedures in the diagnosis and management of congenital heart disease (CHD), and to improve the diagn...

A noninvasive method for predicting clinically significant prostate cancer using magnetic resonance imaging combined with PRKY promoter methylation level: a machine learning study

The traditional diagnostic process for clinically significant prostate cancer (csPCA) relies on invasive biopsy, which can cause pain and complications. Radiomic features of magnetic resonance imaging (MRI) and methylat...

Artificial intelligence in tongue diagnosis: classification of tongue lesions and normal tongue images using deep convolutional neural network

This study aims to classify tongue lesion types using tongue images utilizing Deep Convolutional Neural Networks (DCNNs).

A rapid multi-parametric quantitative MR imaging method to assess Parkinson’s disease: a feasibility study

MULTIPLEX is a single-scan three-dimensional multi-parametric MRI technique that provides 1 mm isotropic T1-, T2*-, proton density- and susceptibility-weighted images and the corresponding quantitative maps. T...

Evaluating the consistency in different methods for measuring left atrium diameters

The morphological information of the pulmonary vein (PV) and left atrium (LA) is of immense clinical importance for effective atrial fibrillation ablation. The aim of this study is to examine the consistency i...

The Correction to this article has been published in BMC Medical Imaging 2024, 24:81.

Deep learning–based automatic segmentation of meningioma from T1-weighted contrast-enhanced MRI for preoperative meningioma differentiation using radiomic features

This study aimed to establish a dedicated deep-learning model (DLM) on routine magnetic resonance imaging (MRI) data to investigate DLM performance in automated detection and segmentation of meningiomas in com...

Development of a prediction model for facilitating the clinical application of transcranial color-coded duplex ultrasonography

Transcranial color-coded duplex ultrasonography (TCCD) is an important diagnostic tool in the investigation of cerebrovascular diseases. TCCD is often hampered by the temporal window that ultrasound cannot pen...

Transfer learning–based PET/CT three-dimensional convolutional neural network fusion of image and clinical information for prediction of EGFR mutation in lung adenocarcinoma

To introduce a three-dimensional convolutional neural network (3D CNN) leveraging transfer learning for fusing PET/CT images and clinical data to predict EGFR mutation status in lung adenocarcinoma (LADC).

Evaluation of post-dilatation on longitudinal stent deformation and postprocedural stent malapposition in the left main artery by optical coherence tomography (OCT): an in vitro study

The diameter of the ostial and proximal left main coronary artery can be greater than 5.0 mm. However, the diameters of most available coronary drug-eluting stents (DESs) are ≤ 4.0 mm. Whether high-press...

Craniofacial phenotyping with fetal MRI: a feasibility study of 3D visualisation, segmentation, surface-rendered and physical models

This study explores the potential of 3D Slice-to-Volume Registration (SVR) motion-corrected fetal MRI for craniofacial assessment, traditionally used only for fetal brain analysis. In addition, we present the ...

PulmoNet: a novel deep learning based pulmonary diseases detection model

Pulmonary diseases are various pathological conditions that affect respiratory tissues and organs, making gas exchange difficult for animals during inhalation and exhalation. It varies from gentle and self-limit...

Automated machine learning for the identification of asymptomatic COVID-19 carriers based on chest CT images

Asymptomatic COVID-19 carriers with normal chest computed tomography (CT) scans have perpetuated the ongoing pandemic of this disease. This retrospective study aimed to use automated machine learning (AutoML) ...

Influence of spectral shaping and tube voltage modulation in ultralow-dose computed tomography of the abdomen

Unenhanced abdominal CT constitutes the diagnostic standard of care in suspected urolithiasis. Aiming to identify potential for radiation dose reduction in this frequent imaging task, this experimental study c...

Annual Journal Metrics

2022 Citation Impact

- 2.7: 2-year Impact Factor

- 2.7: 5-year Impact Factor

- 0.983: SNIP (Source Normalized Impact per Paper)

- 0.535: SJR (SCImago Journal Rank)

2023 Speed

- 34 days: submission to first editorial decision for all manuscripts (median)

- 177 days: submission to acceptance (median)

2023 Usage

- 951,496 downloads

- 161 Altmetric mentions

Peer-review Terminology

The following summary describes the peer review process for this journal:

Identity transparency: Single anonymized

Reviewer interacts with: Editor

Review information published: review reports; reviewer identities (reviewer opt-in); author/reviewer communication

BMC Medical Imaging

ISSN: 1471-2342

Medical Imaging and Data Resource Center

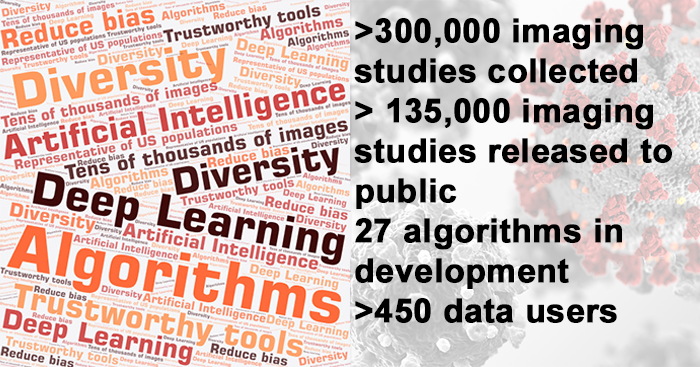

The Medical Imaging and Data Resource Center (MIDRC) is a collaboration of leading medical imaging organizations launched in August 2020 as part of NIBIB's response to the COVID-19 pandemic. MIDRC aims to develop a high-quality repository for medical images related to COVID-19 and associated clinical data, and develop and foster medical image-based artificial intelligence (AI) for use in the detection, diagnosis, prognosis, and monitoring of COVID-19.

NIBIB joined with the American College of Radiology (ACR), the Radiological Society of North America (RSNA), the American Association of Physicists in Medicine (AAPM), and the University of Chicago to create this free resource for researchers to use in the development of AI and deep learning tools and serve as a reference to enhance clinical recognition of COVID-19. Imaging data is contributed to MIDRC from multiple clinical sites throughout the country.

Access to the MIDRC data commons is now open.

MIDRC wins distinction in 2023 DataWorks! Prize

MIDRC has received a Distinguished Achievement Award in the 2023 DataWorks! Prize. The prize is a partnership between the NIH Office of Data Science Strategy and the Federation of American Societies for Experimental Biology (FASEB) to incentivize effective practices and increase community engagement around data sharing and reuse. MIDRC was recognized for its collaboration to create a curated, diverse commons for medical imaging AI research and translation. All of MIDRC’s procedures, data models, data harmonization, annotation, and data management strategies are publicly available.

MIDRC lends medical imaging expertise to ARPA-H program

MIDRC was selected in September 2023 as one of several performers for the ARPA-H Biomedical Data Fabric (BDF) Toolbox. The ARPA-H BDF is an initiative to de-risk technologies for an easily deployable, multi-modal, multi-scale, connected data ecosystem for biomedical data. MIDRC will provide domain expertise and data commons technology development in medical imaging. MIDRC is funded by NIH NIBIB, co-led by investigators from the American Association of Physicists in Medicine (AAPM), the Radiological Society of North America (RSNA), and the American College of Radiology (ACR), hosted at the University of Chicago (MIDRC Central), and exists on the Gen3 data ecosystem.

ARPA-H is enlisting multiple performers who are experts in their fields to build components of the ARPA-H BDF Toolbox. MIDRC’s expertise stems from developing the repository in which MIDRC imaging data are harmonized and vetted by clinical, artificial intelligence (AI)/machine learning (ML), and data science domain experts and are aligned with a common data model. The public open data commons was created “at scale” and is interoperable with other data commons to enable multi-modal, multi-omics research as well as rigorous statistical evaluations. MIDRC also includes a user portal for cohort building and downloading, allowing the data to be reused many times by AI/ML developers for a range of specific tasks. Importantly, MIDRC has also produced resources such as a metrology decision tree and a bias awareness tool to aid in bias mitigation, as well as multiple algorithms and software tools.

The ARPA-H BDF toolbox will help make research data easier and more reliable to use, reduce effort for data integration, and enable new capabilities and models that can be applied across disciplines and generalized across disease domains. “MIDRC’s involvement in the ARPA-H BDF Toolbox will include development and deployment of medical imaging data commons architectures and resources to support various diseases and applications for the toolbox,” said MIDRC principal investigator Maryellen L. Giger, PhD, the A.N. Pritzker Distinguished Service Professor of Radiology, Committee on Medical Physics at the University of Chicago.

For more on how you can get involved, go to ARPA-H BDF; for more on how you can submit and/or access data, go to MIDRC.

Announcements

MIDRC and NIBIB are hosting a workshop to review the history of MIDRC and its impact on medical imaging and trustworthy Artificial Intelligence (AI) development, and to look forward to the future of MIDRC and AI.

Date: April 26, 2024

Time: 9:00 AM to 12 PM EDT

Location: NIH main campus in Bethesda, MD and virtually by Zoom

Registration is now closed.

Publications

Find publications on the MIDRC website.

- International Society for Optics and Photonics (SPIE)

- American Society of Neuroradiology (ASNR)

- American Institute of Ultrasound in Medicine (AIUM)

- Academy for Radiology and Biomedical Imaging Research

- Society for Imaging Informatics in Medicine (SIIM)

- >300,000 imaging studies collected

- >135,000 imaging studies released to the public

- 27 algorithms in development

- >450 data users

Related News & Events

This interview with Maryellen Giger, PhD, delves into the creation of the MIDRC imaging repository, how its data can be used to develop and evaluate AI algorithms, ways that bias can be introduced—and potentially mitigated—in medical imaging models, and what the future may hold.

Researchers have found that AI models could accurately predict self-reported race in several types of medical images, suggesting that race information could be unknowingly incorporated into image analysis models.

After less than two years of data collection and processing, RSNA has successfully delivered over 30,000 de-identified imaging studies to the Medical Imaging and Data Resource Center (MIDRC) project, an open-access platform which publishes data to be used for research. MIDRC is funded by NIBIB. Source: RSNA News

MIDRC's June 2023 monthly seminar will focus on how MIDRC can help investigators satisfy the new NIH Data Management and Sharing Policy.

Presenting the medical imaging community with new tools and resources from MIDRC to facilitate rapid and flexible collection, AI research, and dissemination of imaging and associated data.

Virtual event, February 21, 2023: Dr. Paul Kinahan, University of Washington, speaks on curating the MIDRC collection of DICOM images for use in AI research.

A series of webinars about topics related to the Medical Imaging and Data Resource Center (MIDRC).

Welcome to the Gordon Center for Medical Imaging

The Gordon Center for Medical Imaging at Massachusetts General Hospital (MGH) and Harvard Medical School is a multidisciplinary research center dedicated to improving patient care by developing and promoting new biomedical imaging technologies used in both diagnosis and therapy.

Our main activities include research, training, and translation of innovative research into clinical applications. Investigators from the academic community, research laboratories, and industry are welcome to use the Center’s medical imaging equipment and clinical research facilities available through the PET Core.

Created in 2015 with an endowment from the Bernard and Sophia Gordon Foundation, the Gordon Center for Medical Imaging is a direct continuation of MGH’s Division of Radiological Sciences, where the first positron-imaging device was invented. As early as the 1950s, a series of important milestones was achieved at MGH, including the MGH-positron (2D) cameras, the filtered backprojection algorithm (Chesler), and multiple gated cardiac imaging (Alpert).

Today, the Gordon Center focuses on research, translation to clinical application and training of the next generation of scientists and engineers in medical imaging. These activities stress academic research sponsored by federal agencies and industrial collaborations. Predoctoral students and postdoctoral fellows gain experience, mainly aimed toward fostering their academic careers, by participating in ongoing research, educational symposia and tutorials. In parallel, promising scientists and engineers, who are interested in combining entrepreneurship, engineering and academic research in support of the public good, can benefit from the Center’s support to translate their transformative research.

Our research spans a variety of imaging modalities, from CT to PET to MRI to optical imaging. We have expertise in a variety of techniques, from reconstruction and kinetic modeling to radiotracer development.

See updates on recent publications, Gordon Lecture Series seminars on a variety of medical imaging topics from experts around the world, and other news items such as awards and promotions.

The MGH Gordon PET Core is a cGMP/FDA-registered positron emission tomography (PET) drug manufacturer that operates at cost to provide investigators with services to design and conduct research studies.

Medical Imaging

Our research in this area covers a wide range of topics, including the development of imaging devices, image reconstruction, contrast media and kinetic modeling, image processing and analysis, and patient modeling. In addition to traditional clinical imaging modalities such as CT, MRI, PET, and ultrasound, we are also interested in emerging technologies (e.g., functional and biological imaging techniques) for cancer detection, tumor target delineation, and therapeutic assessment. We are also working on four-dimensional imaging techniques so that changes in 3D patient anatomy over time can be visualized and analyzed.

4D CT Sample 1

Conventional 3D PET

The AIMI Center

Stanford has established the AIMI Center to develop, evaluate, and disseminate artificial intelligence systems to benefit patients. We conduct research that solves clinically important problems using machine learning and other AI techniques.

Director's Welcome

Back in 2017, I tweeted “radiologists who use AI will replace radiologists who don’t.” The tweet has taken on a life of its own, perhaps because it has a double meaning.

AIMI Dataset Index

AIMI has launched a community-driven resource of health AI datasets for machine learning in healthcare as part of our vision to catalyze the sharing of well-curated, de-identified clinical data sets.

AIMI Summer Research Internship & AI Bootcamp

We invite high school students to join us for a two-week virtual journey into the intersection of AI and healthcare through our summer programs. Applications are due March 31, 2024.

AIMI Datasets for Research & Commercial Use

The AIMI Center is helping to catalyze outstanding open science by publicly releasing 20+ AI-ready clinical data sets (many with code and AI models) for research and commercial use.

Upcoming Events

- AIMI Symposium 2024

- IBIIS-AIMI Seminar: Mildred Cho, PhD

- IBIIS-AIMI Seminar: Bo Wang, PhD

April 25, 2024

This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, peer-reviewed publication, trusted source.

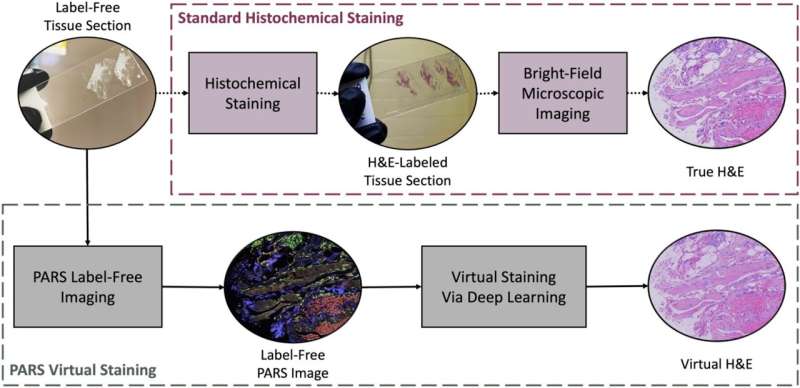

Researchers create an AI-powered digital imaging system to speed up cancer biopsy results

by University of Waterloo

University of Waterloo researchers have invented a digital medical imaging system that significantly improves the cancer detection process to deliver immediate results and enable swift, effective treatment for all types of cancer.

The Photon Absorption Remote Sensing (PARS) system, an innovative, built-from-scratch technology, is faster than traditional cancer-detection methods and aims to deliver a diagnosis in minutes, enabling prompt surgical intervention. Currently, patients can wait weeks or even months to receive biopsy results, leading to delays in treatment and increased patient anxiety. The work is published in the journals IEEE Transactions on Biomedical Engineering and Scientific Reports.

The new system is also highly accurate. Clinical studies using human breast tissue showed that pathologists were unable to distinguish between images generated by the PARS system and those produced by conventional methods. The technology boasts a 98 percent correlation with traditional diagnostic techniques.

"Our primary goal is to provide patients with timely and accurate diagnoses, reducing the need for multiple surgeries and minimizing the risk of cancer spread," said Dr. Parsin Haji Reza, lead researcher and a professor in Waterloo's Department of Systems Design Engineering.

"This invention will transform digital pathology, enabling surgeons to obtain multiple results simultaneously with just one biopsy and provide accurate diagnoses within minutes. It also ensures thorough removal of cancerous tissue before closing the incision, mitigating the need for further surgeries."

The biopsy process is a crucial step in determining the presence of cancer. The traditional histopathology method involves taking a tissue sample and preparing it for analysis—cutting it into sections, staining those sections with different dyes, mounting those sections onto slides, and analyzing those slides under a microscope to identify cancer markers.

By replacing traditional methods with high-resolution imaging powered by artificial intelligence (AI), the PARS system accelerates the diagnostic process, saving both time and resources. Lasers are applied to a tissue sample, generating a unique and detailed high-resolution data set.

The data is fed into the AI system, which translates it into a standard histopathology image for the pathologist to read. Instead of requiring multiple slide preparations, the system applies digitized image filters to a single tissue sample to provide multiple reads, all without damaging the sample, which remains available for further analysis if needed.

"In addition to reducing patients' stress, this technology will save billions of dollars for the health care system," said Reza. "Surgeon and pathologists' time costs money, each slide and special dye costs money, the hospitals' facilities cost money. We can now reduce all those costs with faster biopsy results that are just as accurate."

illumiSonics, a Waterloo-based startup, has been established to commercialize the research with plans to bring the technology to market within two years. The company offers graduate students involved in the research employment opportunities to continue to steer the system's development.

Boktor, M., et al. Multi-channel feature extraction for virtual histological staining of photon absorption remote sensing images. Scientific Reports (2024). DOI: 10.1038/s41598-024-52588-1

Mini review: A history of cardiovascular magnetic resonance imaging in clinical practice and population science

- 1 William Harvey Research Institute, Queen Mary University of London, London, United Kingdom

- 2 Barts Heart Centre, Barts Health NHS Trust, London, United Kingdom

- 3 Division of Cardiology, Department of Medicine, Johns Hopkins University, Baltimore, MD, United States

- 4 Department of Radiology, Johns Hopkins University, Baltimore, MD, United States

- 5 Department of Radiology, University of Wisconsin School of Medicine and Public Health, Madison, WI, United States

Cardiovascular magnetic resonance (CMR) imaging has become an invaluable clinical and research tool. Starting from the discovery of nuclear magnetic resonance, this article provides a brief overview of the key developments that have led to CMR as it is today, and how it became the modality of choice for large-scale population studies.

Introduction

Even the most ardent cardiac imager may be forgiven for not having read C. J. Gorter's 1936 manuscript in Physica in which he described unsuccessfully attempting to find resonance of lithium-7 nuclei in crystalline lithium fluoride ( 1 ). However, it is from here that the story of cardiovascular magnetic resonance (CMR) can trace its origins. Whilst the latest guidelines and research articles make up the bricks of our clinical and academic practice, the mortar—the tales of how these edifices were constructed—can often be even more compelling. In this review article, we embark on a whistlestop tour of the history of CMR in clinical practice and its strides into population science. We hope that state-of-the-art CMR and future directions will be better understood and appreciated when contextualised within the tapestry of innovation and discovery that has preceded it.

Early NMR research

The phenomenon of nuclear magnetic resonance (NMR) sought by Gorter was discovered independently by Felix Bloch and Edward Purcell in 1946 ( 2 , 3 ). They determined that when certain nuclei were placed in a magnetic field and subjected to radiofrequency energy, they absorbed energy and re-emitted it as the nuclei returned to their original state. For this discovery, they were awarded the 1952 Nobel Prize in Physics. From this point, the field of NMR saw a host of investigations in non-biological and biological samples. The work of Richard Ernst in 1966 brought a step change in the field when he demonstrated that the sensitivity of NMR could be markedly improved by applying the Fourier transform to the signal recorded after pulsed excitation ( 4 ). This mathematical operation, named after the French mathematician and physicist Jean-Baptiste Joseph Fourier, is now the bedrock upon which magnetic resonance output is interpreted.
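To illustrate the idea behind Fourier transform NMR, the sketch below builds a synthetic free induction decay (a decaying oscillation in the time domain) and recovers its resonance frequency from the peak of its discrete Fourier transform. It is a minimal sketch under stated assumptions: a single, noise-free resonance, hypothetical sampling parameters, and a naive O(n²) transform written with the Python standard library. Practical spectrometers and scanners use fast Fourier transform routines instead.

```python
import cmath
import math


def synthetic_fid(n: int, dt_s: float, freq_hz: float, t2_s: float) -> list[complex]:
    """Noise-free free induction decay: a complex oscillation with exponential T2 decay."""
    return [cmath.exp(2j * math.pi * freq_hz * k * dt_s) * math.exp(-k * dt_s / t2_s)
            for k in range(n)]


def dft(signal: list[complex]) -> list[complex]:
    """Naive O(n^2) discrete Fourier transform, for illustration only."""
    n = len(signal)
    return [sum(signal[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n))
            for m in range(n)]


def peak_frequency(signal: list[complex], dt_s: float) -> float:
    """Frequency (Hz) of the largest spectral magnitude."""
    spectrum = dft(signal)
    n = len(signal)
    m = max(range(n), key=lambda i: abs(spectrum[i]))
    if m > n // 2:  # upper bins represent negative frequencies
        m -= n
    return m / (n * dt_s)


# Hypothetical acquisition: 200 samples at 1 ms spacing, 50 Hz offset, T2 = 50 ms.
fid = synthetic_fid(200, 1e-3, 50.0, 0.05)
print(peak_frequency(fid, 1e-3))
```

The frequency resolution is 1/(n·dt) = 5 Hz here, so the 50 Hz resonance falls exactly on a bin and the reported peak should be 50 Hz. The time-domain signal alone gives no direct reading of frequency; the transform turns it into a spectrum.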

In 1971, Raymond Damadian measured T1 and T2 relaxation times of normal and cancerous ex vivo rat tissue, demonstrating that tumours had longer relaxation times ( 5 ). However, all NMR experiments until the early 1970s were one-dimensional: no spatial information was available to determine where within the sample the NMR signal originated. The problem of spatial localisation was solved independently by Paul Lauterbur and Peter Mansfield in 1973 ( 6 , 7 ). They discovered that adding a spatially varying magnetic field (a “gradient”) altered the resonance frequency of nuclei in proportion to their position in the magnetic field. By applying these gradients in different orientations, spatial information could be encoded in three dimensions, providing the information required to generate an image. Ernst, having heard Lauterbur describe this at a conference in North Carolina in 1974 ( 8 ), recognised that his NMR spectroscopy work was applicable and, with Anil Kumar, wrote a 1975 publication describing a practical method to rapidly reconstruct an image from NMR signals ( 9 ). Ernst received the Nobel Prize in Chemistry in 1991, and Lauterbur and Mansfield the Nobel Prize in Physiology or Medicine in 2003.
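The gradient principle can be written as a worked relation. Assuming an idealised linear gradient G along one axis x, the resonance (Larmor) frequency is f(x) = (γ/2π)(B0 + G·x), so a measured frequency maps back to a unique position. The sketch below uses the approximate value γ/2π ≈ 42.577 MHz/T for hydrogen and hypothetical field and gradient values (1.5 T, 10 mT/m); it ignores chemical shift and field inhomogeneity.

```python
# Approximate gyromagnetic ratio divided by 2*pi for hydrogen-1 nuclei, in Hz per tesla.
GAMMA_BAR_H1 = 42.577e6


def larmor_frequency(b0_t: float, gradient_t_per_m: float, x_m: float) -> float:
    """Resonance frequency (Hz) at position x_m along an idealised linear gradient."""
    return GAMMA_BAR_H1 * (b0_t + gradient_t_per_m * x_m)


def position_from_frequency(f_hz: float, b0_t: float, gradient_t_per_m: float) -> float:
    """Invert the linear frequency-position relation to localise a signal (metres)."""
    return (f_hz / GAMMA_BAR_H1 - b0_t) / gradient_t_per_m


# Hypothetical example: 1.5 T main field, 10 mT/m gradient, sample 10 cm off-centre.
B0, G = 1.5, 0.010
f = larmor_frequency(B0, G, 0.10)
offset_hz = f - larmor_frequency(B0, G, 0.0)  # about 42.6 kHz above the centre frequency
recovered_x = position_from_frequency(f, B0, G)  # about 0.10 m
```

Because the frequency offset is proportional to position, the spectrum of the received signal becomes a projection of the object along the gradient direction; repeating this with gradients in other orientations supplies the information needed to reconstruct an image.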

Towards cardiovascular NMR

With the discovery of spatial localisation, a Rubicon had been crossed. The first anatomical structure imaged in detail was a cross-section of a finger, by Mansfield and Andrew Maudsley in 1977 ( 10 ). Later the same year, Damadian and colleagues generated a cross-sectional image of the human chest ( 11 ), and by 1978 Hugh Clow and Ian Young had reported the first transverse image of the head ( 12 ).

Whilst cardiac structures were visible on these early NMR images, Robert Hawkes and colleagues in Nottingham published results of NMR imaging directed specifically at the heart in 1981 ( 13 ). In this manuscript they presciently remarked that electrocardiogram (ECG) gating might improve scan quality, and they noted the great potential of cardiovascular NMR given its lack of ionising radiation. By this time, the original term NMR imaging had been replaced by the rather less precise magnetic resonance imaging (MRI), and applications of MRI accelerated rapidly. In 1983, Herfkens et al. published cardiovascular MRI findings at 0.35 Tesla (T) in 244 individuals, a remarkably large sample even compared with modern-day CMR cohorts ( 14 ).

Limitations in early MRI scanners meant that generating the NMR signal was slow, of the order of one to several seconds. Because the heart moved during these long acquisitions, investigators recognised that the NMR signal had to be synchronised to the cardiac cycle. Higgins and colleagues tested three triggering methods: peripheral pulse signals, Doppler flow signals and ECG signals. ECG gating was demonstrably superior to the other methods and became widely adopted in the field.

Structure and function

Mansfield and his team reported the first real-time cine CMR image of a live rabbit heart in 1982, using an echo planar imaging technique they had developed ( 15 ). CMR was recognised to provide accurate estimates of cardiac function and left ventricular mass ( 16 ), as well as of regurgitant fractions in patients with mitral or aortic regurgitation ( 17 ). A major disadvantage of early CMR was long acquisition times (e.g., two minutes for each phase of the cardiac cycle). This was solved in the early 1990s by Atkinson and colleagues, who introduced segmented data acquisition. With this method, the acceleration of cine CMR acquisition was inversely proportional to the number of frames acquired per cardiac cycle. Importantly, ECG-gated segmented acquisition combined with fast gradient echo imaging allowed two-dimensional cine imaging of the heart within a single breath-hold ( 18 ).
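The trade-off behind segmented acquisition can be illustrated with a simplified timing model. It assumes that each heartbeat acquires a fixed number of k-space lines ("views per segment") for every cardiac phase, that each line takes one repetition time (TR), and it ignores trigger delays, view sharing and parallel imaging. The function name and all parameter values below are hypothetical.

```python
import math


def segmented_cine_timing(n_phase_encodes: int, views_per_segment: int,
                          tr_ms: float, rr_ms: float) -> tuple[int, float, int]:
    """Simplified timing for ECG-gated segmented cine imaging.

    Returns (heartbeats needed, temporal resolution in ms, frames per cardiac cycle).
    """
    # Each heartbeat fills `views_per_segment` k-space lines for every frame.
    heartbeats = math.ceil(n_phase_encodes / views_per_segment)
    # One frame spans all views of its segment, each taking one TR.
    temporal_resolution_ms = views_per_segment * tr_ms
    # Number of frames that fit into one R-R interval.
    frames = int(rr_ms // temporal_resolution_ms)
    return heartbeats, temporal_resolution_ms, frames


for views in (1, 8, 16):
    print(views, segmented_cine_timing(128, views, tr_ms=5.0, rr_ms=1000.0))
```

With 128 phase-encoding lines, a 5 ms TR and a 1,000 ms R-R interval, one view per segment needs 128 heartbeats, far beyond a breath-hold. Eight views per segment needs only 16 heartbeats, at 40 ms temporal resolution and 25 frames per cycle. In this model, both the number of heartbeats and the number of frames per cycle scale as 1/views_per_segment, which is the trade-off that made breath-hold cine imaging possible.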

Hawkes, who generated perhaps the first set of dedicated cardiac images using NMR, also worked on the earliest versions of the steady-state free precession (SSFP) imaging sequence in the early 1980s. SSFP temporarily faded in relevance because of its relatively inferior image quality compared with gradient echo imaging techniques. However, by the late 1990s, better magnetic field homogeneity and improved gradient hardware with rapid switching had markedly improved image quality. SSFP then provided far clearer blood pool-to-myocardium and epicardial fat-to-myocardium delineation, and it is currently the workhorse for both clinical and research cine CMR.

From the early 1980s, exogenous intravenously administered contrast agents had been explored in conjunction with MRI, particularly for imaging the brain. MRI contrast agents were found to improve the detection of multiple pathological abnormalities in the brain, including better delineation of solid tissue from adjacent oedema. Similar results were subsequently observed when imaging the heart with CMR. In 1982, an intravenously administered manganese chelate contrast agent was shown to differentiate ischaemic myocardium (“a dark spot”) from normal tissue in a canine model. Manganese had a limited clinical role because of its toxicity; however, other agents such as gadopentetate dimeglumine were investigated in the early 1980s. At present, gadolinium-based contrast agents remain the cornerstone of CMR for improving the depiction of multiple cardiovascular abnormalities, ranging from perfusion deficits and myocardial infarction to myocardial tumours and inflammation.

In 1989, de Roos and colleagues described the different enhancement patterns in patients with occlusive vs. reperfused infarction ( 19 ). Over the following decade, improvements in CMR technology and in sequences that increased the contrast between normal and infarcted myocardium, together with a proliferation of gadolinium-based contrast agents, made gadolinium-enhanced MR a cornerstone of CMR's clinical utility. In 2000, a seminal paper described the use of a 180° inversion-recovery preparatory pulse followed by gradient echo image acquisition. In this method, normal and infarcted myocardium recovered their longitudinal magnetisation at different rates because of their different T1 relaxation times. Acquiring images at the inversion time at which normal tissue had zero signal (the “null” point after the inversion pulse) substantially improved the contrast-to-noise ratio between infarcted myocardium and adjacent normal myocardium ( 20 ). Images were acquired under near steady-state conditions, typically 10–30 min after gadolinium contrast administration. This so-called “late gadolinium enhancement” (LGE) CMR method led to a critical study by Kim et al. showing that contrast-enhanced CMR could differentiate irreversible from reversible myocardial dysfunction before patients proceeded to percutaneous or surgical revascularisation ( 21 ).
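The nulling principle can be sketched numerically. Assuming complete recovery of longitudinal magnetisation before each inversion, the signal after a 180° pulse follows Mz(TI) = M0 * (1 - 2 exp(-TI/T1)), which crosses zero at TI = T1 * ln 2. The T1 values below are hypothetical post-contrast values, chosen only to show that tissue with a shorter T1 (gadolinium-retaining infarct) stays bright while normal myocardium is nulled. In practice the LGE readout does not allow full recovery, so the nulling inversion time is typically chosen from a scout acquisition rather than from this formula.

```python
import math


def longitudinal_magnetisation(ti_ms: float, t1_ms: float, m0: float = 1.0) -> float:
    """Mz at inversion time TI after a 180 degree pulse, assuming full recovery beforehand."""
    return m0 * (1.0 - 2.0 * math.exp(-ti_ms / t1_ms))


def null_inversion_time(t1_ms: float) -> float:
    """Inversion time at which Mz crosses zero: TI = T1 * ln 2."""
    return t1_ms * math.log(2.0)


# Hypothetical post-contrast T1 values (ms).
T1_NORMAL_MS = 450.0   # normal myocardium
T1_INFARCT_MS = 300.0  # infarct: more gadolinium retained, so shorter T1

ti = null_inversion_time(T1_NORMAL_MS)
# Magnitude images display |Mz|, so the nulled tissue appears dark.
normal_signal = abs(longitudinal_magnetisation(ti, T1_NORMAL_MS))
infarct_signal = abs(longitudinal_magnetisation(ti, T1_INFARCT_MS))
print(f"TI = {ti:.0f} ms, normal = {normal_signal:.3f}, infarct = {infarct_signal:.3f}")
```

With these illustrative values the nulling TI is about 312 ms, normal myocardium has essentially zero magnitude, and the simulated infarct retains about 29% of M0, which is the source of the bright-infarct contrast.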

Interpretation of LGE images was extended to other pathologies, including infiltrative diseases and tumours. Patterns of mural distribution on LGE CMR images began to be described for both ischaemic and non-ischaemic cardiomyopathies. The extent of myocardial LGE appeared to be related to the risk of adverse cardiovascular events, as well as to specific genotypes in Mendelian diseases. At present, LGE CMR is central to the characterisation of many acquired and genetic cardiac diseases.

Myocardial perfusion

Myocardial perfusion evaluation by CMR was first described in 1990, also by Atkinson and colleagues, who observed the first-pass kinetics of a rapid bolus of gadolinium-DTPA through the cardiac chambers and myocardium ( 22 ). Through the 1990s, this technique was augmented by pharmacological stress agents such as dipyridamole ( 23 ) and dobutamine ( 24 ). Today, a vasodilator approach using adenosine or regadenoson is preferred. Further technical developments in pulse sequence design and contrast agents have made stress perfusion a key component of the CMR assessment of coronary artery disease. In large prospective trials, stress CMR has been shown to be a feasible and efficient tool for diagnosing myocardial ischaemia compared with other non-invasive modalities such as SPECT ( 25 ). Current advances in stress CMR focus on quantifying myocardial blood flow to supplement the qualitative interpretation of images ( 26 ).

Quantitative CMR

The bulk of CMR interpretation relies on visual identification of varying myocardial signal intensity. However, these signals may also be quantified. Parametric mapping in CMR provides a pixel-by-pixel representation of absolute T1 or T2 relaxation times, expressed in units of time (ms). These quantitative imaging approaches produce parametric maps of the entire myocardium, allowing rapid assessment of both diffuse and focal myocardial abnormalities.

The roots of parametric mapping emerged very early in the development of NMR. In particular, T1 relaxation experiments were slow, requiring many minutes (or even hours) depending on the signal magnitude. David Look and Donald Locker solved this problem whilst seeking to automate the measurement of short T1 values, and published their method in 1970 ( 27 ). Remarkably, Look and Locker only learnt of the new life their method had taken on within medical MRI in 2014, via a serendipitous email. To their surprise, the term “Look-Locker” had already been mentioned in more than 2,300 papers and 140 patents ( 28 ).
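The Look-Locker idea can be sketched in code. Sampling the recovery repeatedly after a single inversion perturbs it, so the samples follow an apparent recovery S(t) = A - B * exp(-t/T1*), and the underlying T1 is commonly estimated with the correction T1 = T1* * (B/A - 1), the form used in MOLLI-style three-parameter fits. This is a minimal illustration under stated assumptions: noise-free, signed (polarity-restored) synthetic data, hypothetical sampling times, and a simple grid search over T1* with A and B solved by linear least squares. Real mapping software fits magnitude images with nonlinear optimisation.

```python
import math


def fit_look_locker(times_ms, signal, t1_star_grid):
    """Fit S(t) = A - B*exp(-t/T1*) and return (A, B, T1*, corrected T1).

    For each candidate T1* the model is linear in A and B, so those are
    solved exactly by least squares; the T1* with the smallest residual wins.
    """
    best = None
    n = len(times_ms)
    for t1_star in t1_star_grid:
        e = [math.exp(-t / t1_star) for t in times_ms]
        # Normal equations for S = a + c*e, where c = -B.
        se, see = sum(e), sum(x * x for x in e)
        sy = sum(signal)
        sey = sum(x * y for x, y in zip(e, signal))
        det = n * see - se * se
        a = (see * sy - se * sey) / det
        c = (n * sey - se * sy) / det
        residual = sum((y - (a + c * x)) ** 2 for x, y in zip(e, signal))
        if best is None or residual < best[0]:
            best = (residual, a, -c, t1_star)
    _, a, b, t1_star = best
    # Look-Locker correction from apparent T1* to T1.
    return a, b, t1_star, t1_star * (b / a - 1.0)


# Hypothetical, noise-free samples generated with A = 0.6, B = 1.6, T1* = 600 ms,
# which corresponds to T1 = 600 * (1.6 / 0.6 - 1) = 1000 ms.
times = [100, 180, 260, 1100, 1180, 1260, 2100, 2180]
samples = [0.6 - 1.6 * math.exp(-t / 600.0) for t in times]
a, b, t1_star, t1 = fit_look_locker(times, samples, range(300, 1201, 10))
print(round(t1_star), round(t1))
```

The apparent T1* (600 ms) is shorter than the true T1 (1000 ms) because each readout slightly disturbs the recovering magnetisation; the ratio B/A carries the information needed to undo that bias.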

The Look-Locker technique was modified by Messroghli and colleagues in 2004 to measure myocardial T1 relaxation times within a single breath-hold. Their pulse sequence, named MOLLI (MOdified Look-Locker Inversion recovery) ( 29 ), produced a map in which the value of each pixel corresponds to the T1 relaxation time at that location. Subsequent modifications of MOLLI have given rise to more exotic acronyms in the field of CMR, such as ShMOLLI, SASHA and SAPPHIRE, each representing modifications of or alternatives to the original MOLLI scheme. Because non-diseased myocardium has a predictable T1 relaxation time that becomes altered in the presence of pathology such as oedema, fibrosis and infiltrative diseases, T1 mapping is now relatively routine within clinical workflows to detect focal or diffuse disease. Extensions of the approach, such as myocardial extracellular volume quantification and T2 and T2* mapping, have also been incorporated into workflows depending on the myocardial disease being studied.
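Extracellular volume (ECV) quantification combines native and post-contrast T1 maps with the blood haematocrit. A commonly used formulation is ECV = (1 - Hct) * ΔR1(myocardium) / ΔR1(blood), where R1 = 1/T1 and ΔR1 is the post-contrast minus the native value. The sketch below applies that formula; the T1 and haematocrit values are hypothetical and chosen only for illustration.

```python
def delta_r1(t1_pre_ms: float, t1_post_ms: float) -> float:
    """Change in relaxation rate R1 = 1/T1 (per ms) caused by the contrast agent."""
    return 1.0 / t1_post_ms - 1.0 / t1_pre_ms


def extracellular_volume(t1_myo_pre_ms: float, t1_myo_post_ms: float,
                         t1_blood_pre_ms: float, t1_blood_post_ms: float,
                         haematocrit: float) -> float:
    """Myocardial extracellular volume fraction from native and post-contrast T1."""
    # Ratio of contrast-induced R1 changes in myocardium versus blood.
    partition_coefficient = (delta_r1(t1_myo_pre_ms, t1_myo_post_ms)
                             / delta_r1(t1_blood_pre_ms, t1_blood_post_ms))
    # Scale by the plasma fraction of blood, (1 - haematocrit).
    return (1.0 - haematocrit) * partition_coefficient


# Hypothetical inputs: myocardium 1000 -> 450 ms, blood 1600 -> 300 ms, Hct 0.42.
ecv = extracellular_volume(1000.0, 450.0, 1600.0, 300.0, haematocrit=0.42)
print(f"ECV = {ecv:.1%}")
```

With these illustrative inputs the estimate is roughly 26%. Expressing the result as a volume fraction, rather than a raw T1 value, partly normalises for contrast dose and timing, which is one reason ECV is reported alongside T1 maps.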

The bridge to population science

The advancements described above have catapulted CMR from the relatively esoteric to a mainstream clinical diagnostic modality. Moreover, its accurate quantification created the opportunity to evaluate early, asymptomatic tissue remodelling not evident with other non-invasive techniques. It quickly became apparent that CMR had a role to play in assessing subclinical cardiovascular disease, and that these insights could be used in risk assessment and prognostication.

By the 1980s, the cardiovascular risk factors in the general population that led to clinical cardiovascular events had been identified, most notably from work in the Framingham Study. In particular, age, gender, elevated blood pressure and cholesterol, and diabetes were termed “traditional” risk factors that identified individuals at high risk of subsequent cardiovascular disease. Notably, most early population-based studies such as Framingham evaluated populations of European ancestry, typically enrolling men. By the mid-1990s, the concept of “novel” risk factors took hold, with the premise that subclinical cardiovascular disease could be detected with new methods and potentially modified to reduce clinical disease expression. Besides serum and genetic testing, advanced imaging was identified as a way to phenotype subclinical cardiovascular disease. In the United States, this led to the National Heart, Lung and Blood Institute (NHLBI)-sponsored Multi-Ethnic Study of Atherosclerosis (MESA) population-based cohort. MESA was also designed to reflect the ethnic diversity of the United States population at that time. MESA investigators sought to maximise the statistical opportunities for risk factor association and prediction; as a result, all MESA participants underwent the same exhaustive battery of tests, often acquired over several days.

The CMR component of MESA was led by David Bluemke and João Lima ( 30 ). More than 5,000 men and women without clinical cardiovascular disease underwent CMR examination at six university sites in the United States. Given the relative shortage of MRI scanner capacity, this represented an enormous commitment of scarce hospital resources to imaging apparently healthy individuals at a time when MRI was otherwise reserved for the most complex and in-need patients.

When the CMR MESA protocol was finalised (1999), Hawkes' SSFP cine sequences were non-standard and commercially available at only half of the CMR MESA sites. To standardise methods, the initial MESA CMR protocol used the better validated fast gradient-recalled echo cine imaging. MESA permitted large-scale exploration of cardiac structure and function and demonstrated their association with cardiovascular outcomes in the general population. For example, it provided novel insights into the importance of the mass:volume ratio ( 31 ) and the relevance of the right ventricle ( 32 ), independent of the left ventricle, as biomarkers of subclinical cardiovascular disease in healthy individuals. In 2010, the NHLBI authorised additional funding to re-examine a large portion of the original MESA cohort using CMR and other phenotyping and genotyping methods. This second MESA CMR examination added gadolinium contrast to the base CMR protocol. LGE CMR improved understanding of unrecognised myocardial scar, demonstrating a strong association between scar and adverse cardiovascular events even in those without known myocardial infarction ( 33 ). In addition, gadolinium administration allowed pre- and post-contrast T1 mapping to be performed in more than 1,000 study participants.

As well as the scientific insights MESA has continued to provide since its conception a quarter of a century ago, another of its legacies is how it has allowed CMR to proliferate as the modality of choice in large-scale, prospective population studies across the world. Where MESA led, cohorts such as the Jackson Heart Study, Study of Health in Pomerania, Framingham Heart Study Offspring Cohort, Dallas Heart Study and AGES Reykjavik incorporated CMR into their population studies. The largest effort, however, was yet to come, spurred by the concept of genotype-phenotype association.

In the early 2000s, the Medical Research Council and Wellcome Trust decided to establish the UK Biobank cohort to investigate risk factors for diseases of middle and old age. Between 2006 and 2010, 500,000 individuals were recruited, and extensive questionnaire data, physical measurements and biological samples were collected. The desire to better understand the role of genetics in phenotypic and disease variation has been a major driver of the UK Biobank project and its sample size. As such, a key component has been the collection of whole-genome genetic data in every participant.

In 2009, three years after recruitment had begun, a proposal was submitted to conduct imaging assessments as an enhancement to the UK Biobank study. These were to be performed in 100,000 of the 500,000 participants, in regional, non-medical settings. This ambitious, perhaps even radical, plan was initially deferred by the funders, but the UK Biobank imaging working group, in collaboration with international experts, returned with a bolstered case and was awarded funding for this unprecedented task. The CMR component of the imaging enhancement was led by Steffen Petersen, Stefan Piechnik and Stefan Neubauer. A pilot phase of 5,000 examinations was successfully completed in 2014, with approval to proceed to 100,000; to date, over 60,000 participants have been imaged, and funding has recently been agreed to perform repeat examinations in an additional 10,000 individuals.

CMR in the UK Biobank has provided many novel insights regarding associations with traditional and non-traditional cardiovascular risk factors, allowed development of novel imaging biomarkers and seen the publication of the first genome-wide association studies of CMR phenotypes ( 34 – 38 ). However, just as a legacy of MESA is in its inspiration of using CMR in population studies, it can be argued that the UK Biobank CMR effort too has a legacy of equal note to the science it has produced.

By 2015, the UK Biobank core lab had begun manually segmenting the 5,000 pilot cases; however, it had always been clear that manual analysis of the intended 100,000 CMR examinations would not be feasible. Thus, UK Biobank provided not only the supply of ground truth but also the demand and impetus for a solution. The manual segmentations by the core lab enabled automated deep learning algorithms for CMR analysis, first developed by Bai et al. ( 39 ) and subsequently by others ( 40 – 42 ). The ability to segment all four chambers, in any view, in every frame of the cardiac cycle, without any human input and within seconds, has been a profound legacy of the UK Biobank for the field of CMR. This development also made it possible to relate detailed CMR-derived imaging phenotypes to paired UK Biobank genomics, proteomics and metabolomics data.

It is nearly 80 years since Bloch and Purcell discovered NMR and just over 50 since Lauterbur and Mansfield recognised its application to medical imaging. In Look's personal reflections on the sequence that bears his name, he concludes that “the cost of research is small, but the long-term payoff can be huge”. It is a fool's errand to predict where CMR might be 50 years hence, but as long as it continues to be supported and to attract individuals willing to innovate and push boundaries, it will continue to offer new solutions to the age-old challenge of cardiovascular disease.

Author contributions

MMS: Writing – original draft, Writing – review & editing. JACL: Writing – review & editing. DAB: Writing – review & editing. SEP: Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article.

MMS is supported by a British Heart Foundation Clinical Research Training Fellowship (FS/CRTF/22/24353). This work acknowledges the support of the National Institute for Health and Care Research Barts Biomedical Research Centre (NIHR203330); a delivery partnership of Barts Health NHS Trust, Queen Mary University of London, St George's University Hospitals NHS Foundation Trust and St George's University of London.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer PD declared a past co-authorship with the author SEP to the handling editor.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Gorter CJ. Negative result of an attempt to detect nuclear magnetic spins. Physica . (1936) 3:995–8. doi: 10.1016/S0031-8914(36)80324-3

2. Bloch F. Nuclear induction. Phys Rev . (1946) 70:460–74. doi: 10.1103/PhysRev.70.460

3. Purcell EM, Torrey HC, Pound RV. Resonance absorption by nuclear magnetic moments in a solid. Phys Rev . (1946) 69:37–8. doi: 10.1103/PhysRev.69.37

4. Ernst RR, Anderson WA. Application of fourier transform spectroscopy to magnetic resonance. Rev Sci Instrum . (1966) 37:93–102. doi: 10.1063/1.1719961

5. Damadian R. Tumor detection by nuclear magnetic resonance. Science . (1971) 171:1151–3. doi: 10.1126/science.171.3976.1151

6. Lauterbur PC. Image formation by induced local interactions: examples employing nuclear magnetic resonance. Nature . (1973) 242:190–1. doi: 10.1038/242190a0

7. Mansfield P, Grannell PK. NMR “diffraction” in solids? J Phys C Solid State Phys . (1973) 6:L422. doi: 10.1088/0022-3719/6/22/007

8. Ernst RR. NMR fourier zeugmatography. J Magn Reson . (2011) 213:510–2. doi: 10.1016/j.jmr.2011.08.006

9. Kumar A, Welti D, Ernst RR. NMR fourier zeugmatography. J Magn Reson . (1975) 18:69–83. doi: 10.1016/0022-2364(75)90224-3

10. Mansfield P, Maudsley AA. Medical imaging by NMR. Br J Radiol . (1977) 50:188–94. doi: 10.1259/0007-1285-50-591-188

11. Damadian R, Goldsmith M, Minkoff L. NMR In cancer: XVI. FONAR image of the live human body. Physiol Chem Phys . (1977) 9:97–100. 108.909957

12. Clow H, Young IR. Britain’s brains produce first NMR scans. New Sci . (1978) 80:588.

13. Hawkes RC, Holland GN, Moore WS, Roebuck EJ, Worthington BS. Nuclear magnetic resonance (NMR) tomography of the normal heart. J Comput Assist Tomogr. (1981) 5:605–12. doi: 10.1097/00004728-198110000-00001

14. Herfkens RJ, Higgins CB, Hricak H, Lipton MJ, Crooks LE, Lanzer P, et al. Nuclear magnetic resonance imaging of the cardiovascular system: normal and pathologic findings. Radiology. (1983) 147(3):749–59. doi: 10.1148/radiology.147.3.6601813

15. Stehling MK, Turner R, Mansfield P. Echo-planar imaging: magnetic resonance imaging in a fraction of a second. Science. (1991) 254:43–50. doi: 10.1126/science.1925560

16. Katz J, Milliken MC, Stray-Gundersen J, Buja LM, Parkey RW, Mitchell JH, et al. Estimation of human myocardial mass with MR imaging. Radiology. (1988) 169:495–8. doi: 10.1148/radiology.169.2.2971985

17. Klipstein RH, Firmin DN, Underwood SR, Nayler GL, Rees RS, Longmore DB. Colour display of quantitative blood flow and cardiac anatomy in a single magnetic resonance cine loop. Br J Radiol. (1987) 60:105–11. doi: 10.1259/0007-1285-60-710-105

18. Atkinson DJ, Edelman RR. Cineangiography of the heart in a single breath hold with a segmented turboFLASH sequence. Radiology. (1991) 178:357–60. doi: 10.1148/radiology.178.2.1987592

19. de Roos A, van Rossum AC, van der Wall E, Postema S, Doornbos J, Matheijssen N, et al. Reperfused and nonreperfused myocardial infarction: diagnostic potential of Gd-DTPA–enhanced MR imaging. Radiology. (1989) 172:717–20. doi: 10.1148/radiology.172.3.2772179

20. Simonetti OP, Kim RJ, Fieno DS, Hillenbrand HB, Wu E, Bundy JM, et al. An improved MR imaging technique for the visualization of myocardial infarction. Radiology. (2001) 218:215–23. doi: 10.1148/radiology.218.1.r01ja50215

21. Kim RJ, Wu E, Rafael A, Chen E-L, Parker MA, Simonetti O, et al. The use of contrast-enhanced magnetic resonance imaging to identify reversible myocardial dysfunction. N Engl J Med. (2000) 343:1445–53. doi: 10.1056/NEJM200011163432003

22. Atkinson DJ, Burstein D, Edelman RR. First-pass cardiac perfusion: evaluation with ultrafast MR imaging. Radiology. (1990) 174:757–62. doi: 10.1148/radiology.174.3.2305058

23. Pennell DJ, Underwood SR, Ell PJ, Swanton RH, Walker JM, Longmore DB. Dipyridamole magnetic resonance imaging: a comparison with thallium-201 emission tomography. Br Heart J. (1990) 64:362–9. doi: 10.1136/hrt.64.6.362

24. Pennell DJ, Underwood SR, Manzara CC, Swanton RH, Walker JM, Ell PJ, et al. Magnetic resonance imaging during dobutamine stress in coronary artery disease. Am J Cardiol. (1992) 70:34–40. doi: 10.1016/0002-9149(92)91386-i

25. Greenwood JP, Maredia N, Younger JF, Brown JM, Nixon J, Everett CC, et al. Cardiovascular magnetic resonance and single-photon emission computed tomography for diagnosis of coronary heart disease (CE-MARC): a prospective trial. Lancet Lond Engl. (2012) 379:453–60. doi: 10.1016/S0140-6736(11)61335-4

26. Hsu L-Y, Jacobs M, Benovoy M, Ta AD, Conn HM, Winkler S, et al. Diagnostic performance of fully automated pixel-wise quantitative myocardial perfusion imaging by cardiovascular magnetic resonance. JACC Cardiovasc Imaging. (2018) 11:697–707. doi: 10.1016/j.jcmg.2018.01.005

27. Look D, Locker D. Time saving in measurement of NMR and EPR relaxation times. Rev Sci Instrum. (1970) 41:250–1. doi: 10.1063/1.1684482

28. Look DC. The Look-Locker method in magnetic resonance imaging: a brief, personal history.

29. Messroghli DR, Radjenovic A, Kozerke S, Higgins DM, Sivananthan MU, Ridgway JP. Modified look-locker inversion recovery (MOLLI) for high-resolution T1 mapping of the heart. Magn Reson Med. (2004) 52:141–6. doi: 10.1002/mrm.20110

30. Bild DE, Bluemke DA, Burke GL, Detrano R, Diez Roux AV, Folsom AR, et al. Multi-ethnic study of atherosclerosis: objectives and design. Am J Epidemiol. (2002) 156:871–81. doi: 10.1093/aje/kwf113

31. Bluemke DA, Kronmal RA, Lima JAC, Liu K, Olson J, Burke GL, et al. The relationship of left ventricular mass and geometry to incident cardiovascular events: the MESA (multi-ethnic study of atherosclerosis) study. J Am Coll Cardiol. (2008) 52:2148–55. doi: 10.1016/j.jacc.2008.09.014

32. Kawut SM, Barr RG, Lima JAC, Praestgaard A, Johnson WC, Chahal H, et al. Right ventricular structure is associated with the risk of heart failure and cardiovascular death: the multi-ethnic study of atherosclerosis (MESA)–right ventricle study. Circulation. (2012) 126:1681–8. doi: 10.1161/CIRCULATIONAHA.112.095216

33. Ambale-Venkatesh B, Liu C-Y, Liu Y-C, Donekal S, Ohyama Y, Sharma RK, et al. Association of myocardial fibrosis and cardiovascular events: the multi-ethnic study of atherosclerosis. Eur Heart J Cardiovasc Imaging. (2019) 20:168–76. doi: 10.1093/ehjci/jey140

34. Petersen SE, Sanghvi MM, Aung N, Cooper JA, Paiva JM, Zemrak F, et al. The impact of cardiovascular risk factors on cardiac structure and function: insights from the UK biobank imaging enhancement study. PLoS One. (2017) 12:e0185114. doi: 10.1371/journal.pone.0185114

35. Sanghvi MM, Aung N, Cooper JA, Paiva JM, Lee AM, Zemrak F, et al. The impact of menopausal hormone therapy (MHT) on cardiac structure and function: insights from the UK biobank imaging enhancement study. PLoS One. (2018) 13:e0194015. doi: 10.1371/journal.pone.0194015

36. Aung N, Sanghvi MM, Piechnik SK, Neubauer S, Munroe PB, Petersen SE. The effect of blood lipids on the left ventricle: a mendelian randomization study. J Am Coll Cardiol. (2020) 76:2477–88. doi: 10.1016/j.jacc.2020.09.583

37. Aung N, Vargas JD, Yang C, Cabrera CP, Warren HR, Fung K, et al. Genome-wide analysis of left ventricular image-derived phenotypes identifies fourteen loci associated with cardiac morphogenesis and heart failure development. Circulation. (2019) 140:1318–30. doi: 10.1161/CIRCULATIONAHA.119.041161

38. Aung N, Vargas JD, Yang C, Fung K, Sanghvi MM, Piechnik SK, et al. Genome-wide association analysis reveals insights into the genetic architecture of right ventricular structure and function. Nat Genet. (2022) 54:783–91. doi: 10.1038/s41588-022-01083-2

39. Bai W, Sinclair M, Tarroni G, Oktay O, Rajchl M, Vaillant G, et al. Automated cardiovascular magnetic resonance image analysis with fully convolutional networks. J Cardiovasc Magn Reson Off J Soc Cardiovasc Magn Reson. (2018) 20:65. doi: 10.1186/s12968-018-0471-x

40. Attar R, Pereañez M, Gooya A, Albà X, Zhang L, de Vila MH, et al. Quantitative CMR population imaging on 20,000 subjects of the UK biobank imaging study: LV/RV quantification pipeline and its evaluation. Med Image Anal. (2019) 56:26–42. doi: 10.1016/j.media.2019.05.006

41. Davies RH, Augusto JB, Bhuva A, Xue H, Treibel TA, Ye Y, et al. Precision measurement of cardiac structure and function in cardiovascular magnetic resonance using machine learning. J Cardiovasc Magn Reson. (2022) 24:16. doi: 10.1186/s12968-022-00846-4

42. Chen C, Qin C, Qiu H, Tarroni G, Duan J, Bai W, et al. Deep learning for cardiac image segmentation: a review. Front Cardiovasc Med. (2020) 7:25. doi: 10.3389/fcvm.2020.00025

Keywords: history of medicine, magnetic resonance imaging, cardiovascular magnetic resonance (CMR) imaging, nuclear magnetic resonance, Multi-Ethnic Study of Atherosclerosis (MESA), UK Biobank

Citation: Sanghvi MM, Lima JAC, Bluemke DA and Petersen SE (2024) A history of cardiovascular magnetic resonance imaging in clinical practice and population science. Front. Cardiovasc. Med. 11:1393896. doi: 10.3389/fcvm.2024.1393896

Received: 29 February 2024; Accepted: 8 April 2024; Published: 19 April 2024.


© 2024 Sanghvi, Lima, Bluemke and Petersen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Steffen E. Petersen

This article is part of the Research Topic

Glimpse to the Past – the Evolution of the Role of Imaging in Cardio Therapeutics


Vol. 2, No. 1 (2020)

The role of artificial intelligence in medical imaging research

Xiaoli Tang

1 Yale New Haven Hospital, New Haven, USA

Without doubt, artificial intelligence (AI) is the most discussed topic in medical imaging research today, in both diagnostic and therapeutic applications. For diagnostic imaging alone, the number of publications on AI increased from about 100–150 per year in 2007–2008 to 1000–1100 per year in 2017–2018. Researchers have applied AI to automatically recognize complex patterns in imaging data and to provide quantitative assessments of radiographic characteristics. In radiation oncology, AI has been applied to the different imaging modalities used at different stages of treatment, e.g., tumor delineation and treatment assessment. Radiomics, the extraction of a large number of image features from radiographic images with a high-throughput approach, is one of the most popular topics in medical imaging research today. AI is the essential driving force for processing massive numbers of medical images and can therefore uncover disease characteristics that cannot be appreciated by the naked eye. The objectives of this paper are to review the history of AI in medical imaging research, its current role, the challenges that need to be resolved before AI can be widely adopted in the clinic, and its potential future.

A brief overview of the history

A handful of scientists from a variety of fields (mathematics, psychology, engineering, economics, and political science) began to discuss the possibility of creating an artificial brain. They gathered at a workshop held on the campus of Dartmouth College during the summer of 1956. This event, widely known as the Dartmouth Workshop, is regarded as the founding of artificial intelligence (AI) as a field. 1 The field then went through several cycles of peaks and valleys. MIT cognitive scientist Marvin Minsky and other attendees of the Dartmouth Workshop were extremely optimistic about AI's future; they believed that the problem of AI would be substantially solved within a generation. However, progress fell far short of these expectations. After several critical reports and ongoing pressure from Congress, government funding and interest dropped off, and 1974–1980 became the first AI winter. In the 1980s, spurred by international competition, including from Britain and Japan, AI revived. 1987–1993 was a second major AI winter, coinciding with the collapse of the market for the specialized computer hardware AI then relied on, which again led to the withdrawal of funding. Research picked up again after that. One well-known event came in 1997, when IBM's Deep Blue became the first computer to defeat a reigning world chess champion in a match. In 2011, IBM's question-answering system Watson won the quiz show Jeopardy!, which marked the newest wave of the AI boom. In parallel, over the past 10 years of medical imaging research, the amount of imaging data has grown exponentially. This has increased the burden on physicians, who need to read images with higher efficiency while maintaining the same or better accuracy. Fortunately, computational power has also grown exponentially over the same period. These challenges and opportunities have formed the perfect foundation for AI to blossom in medical imaging research.

Researchers have successfully applied AI in radiology to identify findings that are either detectable or undetectable by the human eye. Radiology is now moving from a subjective perceptual skill to a more objective science. 2,3 In radiation oncology, AI has been successfully applied to automatic tumor and organ segmentation 4–8 and to tumor monitoring during treatment for adaptive therapy. In 2012, P. Lambin, a researcher based in the Netherlands, proposed the concept of "radiomics" for the first time and defined it as the extraction of a large number of image features from radiographic images with a high-throughput approach. 9 With AI growing in popularity and more medical images being generated than ever before, radiomics has evolved rapidly. Radiomics is a novel approach to advancing precision medicine. These studies have demonstrated the great potential of AI in medical imaging. In fact, they have sparked an ongoing debate: will AI replace clinicians entirely? We believe it will not. In the short term, AI is constrained by a lack of high-quality, high-volume, longitudinal outcomes data, a constraint further exacerbated by the competing need for strict privacy protection. 10 Approaches such as distributed learning have been proposed to address this privacy threat. However, a 2017 paper argued that any distributed, federated, or decentralized deep learning approach is susceptible to attacks that reveal information about participants in the training set. 11 In the long term, we believe that AI will continue to fall short of human-level accuracy in medical decision-making. Fundamentally, medicine is an art, not a science. AI might be able to outperform humans on quantitative tasks; overall medical decisions, however, will still depend on human evaluation to achieve the optimal result for a given patient.
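The high-throughput feature extraction at the heart of the radiomics definition can be illustrated with a minimal sketch. It assumes a 2D grayscale image stored as a NumPy array and a binary region-of-interest (ROI) mask, and computes only a handful of first-order (histogram-based) features. The feature set, the 32-bin histogram, and the helper names `first_order_features` and `extract_batch` are illustrative assumptions, not the definition used in any cited study; dedicated radiomics toolkits such as PyRadiomics compute hundreds of shape, first-order, and texture features.

```python
import numpy as np

def first_order_features(image: np.ndarray, mask: np.ndarray, n_bins: int = 32) -> dict:
    """Hypothetical helper: a few first-order features computed inside a binary ROI mask."""
    roi = image[mask.astype(bool)].astype(float)  # intensities of ROI pixels only
    if roi.size == 0:
        raise ValueError("mask selects no pixels")
    mean = roi.mean()
    std = roi.std()  # population standard deviation
    # Skewness: third standardized moment (0 for a symmetric distribution)
    skew = float(((roi - mean) ** 3).mean() / std**3) if std > 0 else 0.0
    # Shannon entropy (in bits) of the ROI intensity histogram
    counts, _ = np.histogram(roi, bins=n_bins)
    p = counts[counts > 0] / counts.sum()
    entropy = float(-(p * np.log2(p)).sum())
    return {
        "area_px": int(roi.size),  # ROI size in pixels, a simple shape feature
        "mean": float(mean),
        "std": float(std),
        "skewness": skew,
        "entropy_bits": entropy,
    }

def extract_batch(images, masks) -> np.ndarray:
    """High-throughput step: one feature row per (image, mask) pair."""
    rows = [list(first_order_features(img, m).values()) for img, m in zip(images, masks)]
    return np.array(rows, dtype=float)

# Usage on a synthetic image with a 20x20 square ROI (400 pixels)
rng = np.random.default_rng(0)
img = rng.normal(100, 10, size=(64, 64))
mask = np.zeros((64, 64), dtype=bool)
mask[20:40, 20:40] = True
feats = first_order_features(img, mask)
```

Stacking one feature row per case yields the feature matrix that later modeling steps consume; in practice each feature's definition and the intensity discretization would need to be standardized so values are comparable across scanners and sites.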

Current role of AI in radiology

Machine learning, a subset of AI sometimes called traditional AI, has been applied to diagnostic imaging since the 1980s. 12 Users first predefine explicit parameters and image features based on expert knowledge. For instance, the shape, area, and pixel-intensity histogram of a region of interest (e.g., a tumor region) can be extracted. Typically, the available data are split so that part is used for training and the rest for testing. A machine learning algorithm is then selected and trained on these features; examples include principal component analysis (PCA), support vector machines (SVM), and convolutional neural networks (CNN). Then, given a test image, the trained algorithm is expected to recognize the features and classify the image.
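The traditional workflow described above (hand-crafted ROI features, a train/test split, a trained classifier, then prediction on unseen cases) can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not the method of any cited study: the two-feature synthetic data, the 70/30 split, and the helper names are hypothetical, and the classifier is a linear SVM trained with Pegasos-style stochastic subgradient descent rather than a library implementation.

```python
import numpy as np

def train_test_split(X, y, test_frac=0.3, seed=0):
    """Randomly hold out a fraction of the cases for testing."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    n_test = int(round(test_frac * len(y)))
    return X[idx[n_test:]], X[idx[:n_test]], y[idx[n_test:]], y[idx[:n_test]]

def add_bias(X):
    """Append a constant-1 column so the bias is learned as an ordinary weight."""
    return np.hstack([X, np.ones((X.shape[0], 1))])

def fit_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    """Linear soft-margin SVM via Pegasos-style stochastic subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w))); y must be in {-1, +1}.
    """
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)              # Pegasos step-size schedule
            violated = y[i] * (X[i] @ w) < 1   # check the margin before updating
            w *= 1.0 - eta * lam               # gradient step on the L2 penalty
            if violated:
                w += eta * y[i] * X[i]         # subgradient step on the hinge loss
    return w

def predict(X, w):
    """Classify each row by the sign of the linear decision function."""
    return np.where(X @ w >= 0, 1, -1)

# Hypothetical two-feature data (e.g. ROI area and mean intensity), two separable classes
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(-2, 1, (50, 2)), rng.normal(2, 1, (50, 2))])
y = np.array([-1] * 50 + [1] * 50)

X_tr, X_te, y_tr, y_te = train_test_split(X, y)
mu, sd = X_tr.mean(axis=0), X_tr.std(axis=0)   # statistics from the training set only
sd = np.where(sd > 0, sd, 1.0)
w = fit_linear_svm(add_bias((X_tr - mu) / sd), y_tr)
preds = predict(add_bias((X_te - mu) / sd), w)
acc = float((preds == y_te).mean())
```

Standardizing with statistics computed from the training set alone keeps information about the held-out cases from leaking into the model, so the test accuracy remains an honest estimate of performance on new images.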