13 Interesting Data Structure Projects Ideas and Topics For Beginners [2023]

![data structures case study topics 13 Interesting Data Structure Projects Ideas and Topics For Beginners [2023]](https://www.upgrad.com/__khugblog-next/image/?url=https%3A%2F%2Fd14b9ctw0m6fid.cloudfront.net%2Fugblog%2Fwp-content%2Fuploads%2F2020%2F05%2F510-Data-Structure-Projects.png&w=1920&q=75)

In the world of computer science, understanding data structures is essential, especially for beginners. These structures serve as the foundation for organizing and manipulating data effectively. To help newcomers grasp these concepts, I’ll walk you through data structure project ideas for beginners. These projects are tailored to offer hands-on learning experiences, allowing beginners to explore various data structures while honing their programming skills. By working on these projects, beginners can gain practical insights into data organization and algorithmic thinking, laying a solid foundation for their journey into computer science. Let’s delve into some exciting data structure project ideas designed specifically for beginners.

Data Structure Basics

Data structures can be classified into the following basic types:

- Linked Lists

- Hash tables

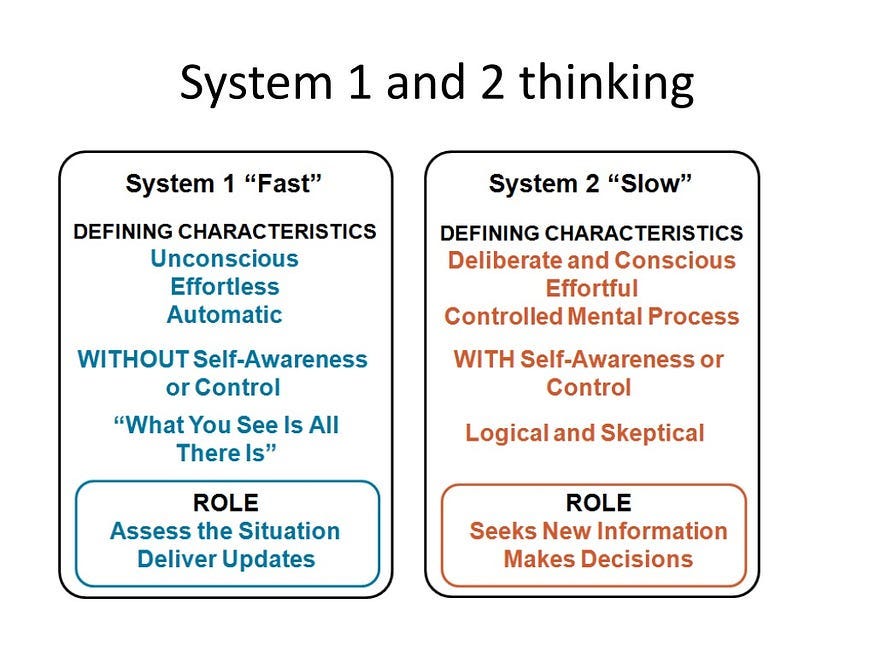

Selecting the appropriate structure for your data is an integral part of the programming and problem-solving process. Data structures organize abstract data types in concrete implementations, and they rely on various algorithms, such as sorting and searching, to do so. Learning data structures is an important part of data science courses.

With the rise of big data and analytics, learning about these fundamentals has become almost essential for data scientists. Training typically covers various data structure topics and ties them to real-life use cases. Here is a list of DSA topics to get you started!

Benefits of Data structures:

Data structures are fundamental building blocks in computer science and programming. They are important tools that help in organizing, storing, and manipulating data efficiently. They also provide a way to represent and manage information in a structured manner, which is essential for designing efficient algorithms and solving complex problems.

So, let’s explore the numerous benefits of data structures below:

1. Efficient Data Access

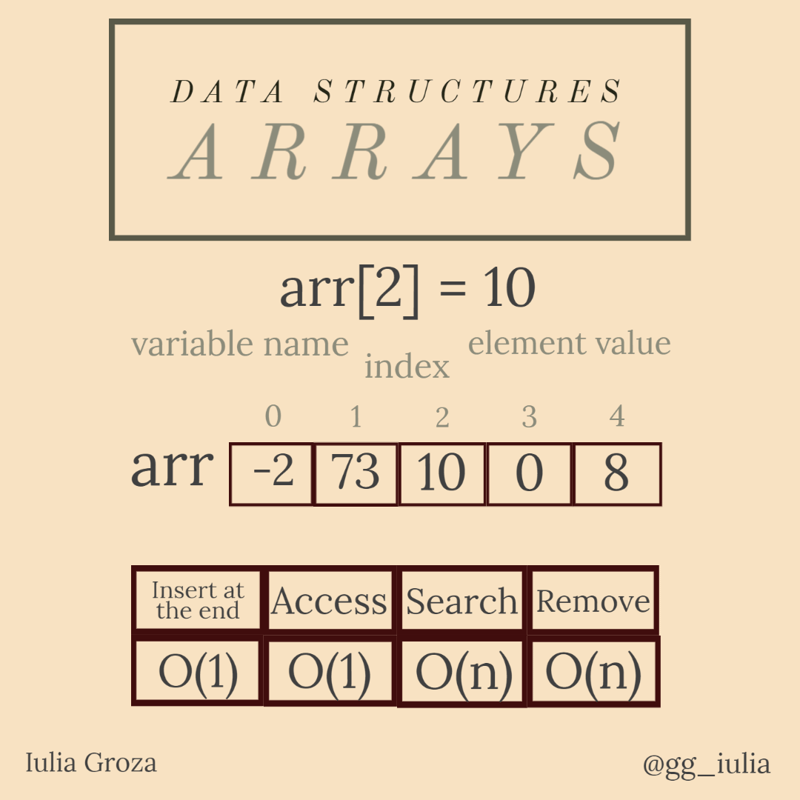

Data structures enable efficient access to data elements. Arrays, for example, provide constant-time access to elements using an index. Linked lists allow for efficient traversal and modification of data elements. Efficient data access is crucial for improving the overall performance of algorithms and applications.

2. Memory Management

Data structures help manage memory efficiently. They help in allocating and deallocating memory as required, reducing memory wastage and fragmentation. Proper memory management is important for preventing memory leaks and optimizing resource utilization.

3. Organization of Data

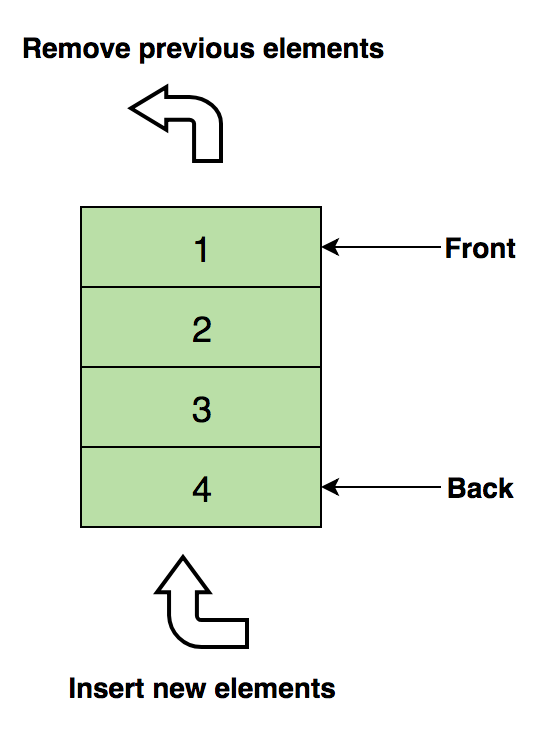

Data structures offer a structured way to organize and store data. For example, a stack organizes data in a last-in, first-out (LIFO) fashion, while a queue uses a first-in, first-out (FIFO) approach. These organizations make it easier to model and solve specific problems efficiently.
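To make the LIFO and FIFO orderings concrete, here is a minimal Python sketch. It assumes a plain list is good enough as a stack and uses `collections.deque` from the standard library as a queue; the variable names are only illustrative.

```python
from collections import deque

# Stack: last-in, first-out (LIFO), using a plain list.
stack = []
for item in ["a", "b", "c"]:
    stack.append(item)                      # push onto the top
stack_order = [stack.pop() for _ in range(3)]   # pop returns the newest item first

# Queue: first-in, first-out (FIFO), using collections.deque.
queue = deque()
for item in ["a", "b", "c"]:
    queue.append(item)                      # enqueue at the back
queue_order = [queue.popleft() for _ in range(3)]  # dequeue from the front

print(stack_order)  # ['c', 'b', 'a']
print(queue_order)  # ['a', 'b', 'c']
```

A `deque` is used for the queue because removing from the front of a Python list shifts every remaining element, while `popleft()` does not.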

4. Search and Retrieval

Efficient data search and retrieval is an important aspect of many applications, such as databases and information retrieval systems. Data structures like binary search trees and hash tables enable fast lookup and retrieval of data, reducing the time complexity of search operations.

5. Sorting

Sorting is a fundamental operation in computer science. Data structures like arrays and trees support various sorting algorithms. Efficient sorting is crucial for maintaining ordered data lists and searching for specific elements.

6. Dynamic Memory Allocation

Many programming languages and applications require dynamic memory allocation. Data structures like dynamic arrays and linked lists can grow or shrink dynamically, allowing for efficient memory management in response to changing data requirements.

7. Data Aggregation

Data structures can aggregate data elements into larger, more complex structures. For example, arrays and lists can be combined to build matrices and graphs, enabling the representation and manipulation of intricate data relationships.

8. Modularity and Reusability

Data structures promote modularity and reusability in software development. Well-designed data structures can be used as building blocks for various applications, reducing code duplication and improving maintainability.

9. Complex Problem Solving

Data structures play a crucial role in solving complex computational problems. Algorithms often rely on specific data structures tailored to the problem’s requirements. For instance, graph algorithms use data structures like adjacency matrices or adjacency lists (often built from linked lists) to represent and traverse graphs efficiently.

10. Resource Efficiency

Selecting the right data structure for a particular task can impact the efficiency of an application. The right choice helps minimize resource usage, such as time and memory, leading to faster and more responsive software.

11. Scalability

Scalability is a critical consideration in modern software development. Data structures that efficiently handle large datasets and adapt to changing workloads are essential for building scalable applications and systems.

12. Algorithm Optimization

Algorithms that use appropriate data structures can be optimized for speed and efficiency. For example, by choosing a hash table data structure, you can achieve constant-time average-case lookup operations, improving the performance of algorithms relying on data retrieval.

13. Code Readability and Maintainability

Well-defined data structures contribute to code readability and maintainability. They provide clear abstractions for data manipulation, making it easier for developers to understand, maintain, and extend code over time.

14. Cross-Disciplinary Applications

Data structures are not limited to computer science; they find applications in various fields, such as biology, engineering, and finance. Efficient data organization and manipulation are essential in scientific research and data analysis.

Other benefits:

- It can store variables of various data types.

- It allows the creation of objects that feature various types of attributes.

- It allows reusing the data layout across programs.

- It can implement other data structures like stacks, linked lists, trees, graphs, queues, etc.

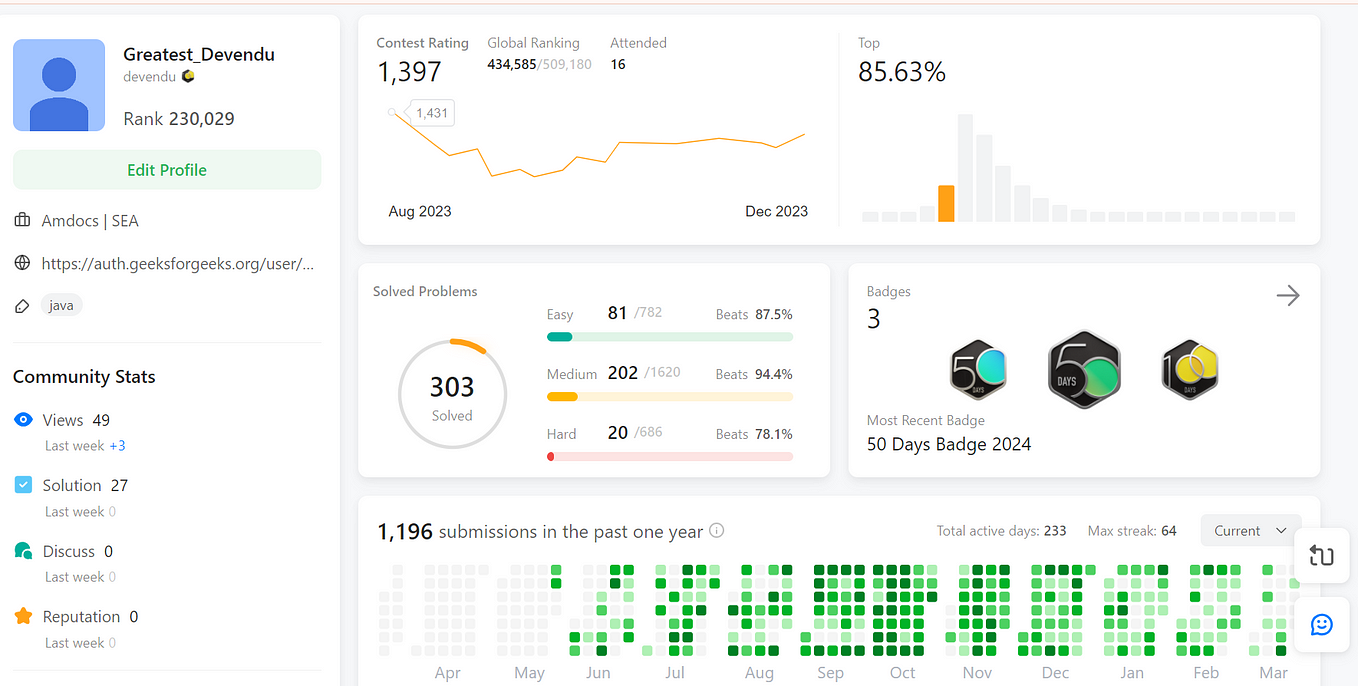

Why study data structures & algorithms?

- They help to solve complex real-time problems.

- They improve analytical and problem-solving skills.

- They help you to crack technical interviews.

- They let you manipulate data efficiently.

Studying relevant DSA topics can increase job opportunities and earning potential, and so support career advancement.

Data Structures Projects Ideas

1. Obscure binary search trees

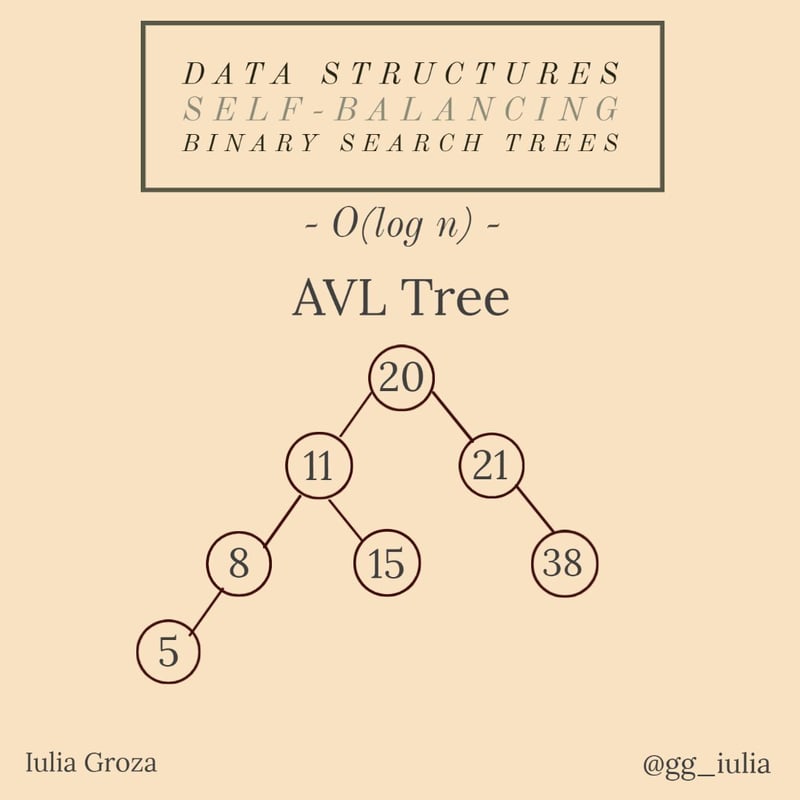

Items, such as names, numbers, etc., can be stored in memory in sorted order using binary search trees, or BSTs. Some of these data structures can automatically balance their height when arbitrary items are inserted or deleted. Therefore, they are known as self-balancing BSTs. There are different well-known implementations of this type, like B-trees, AVL trees, and red-black trees. But there are many other lesser-known variants that you can learn about. Some examples include AA trees, 2-3 trees, splay trees, scapegoat trees, and treaps.

You can base your project on these alternatives and explore how they can outperform other widely-used BSTs in different scenarios. For instance, splay trees can prove faster than red-black trees when accesses show strong temporal locality.

2. BSTs following the memoization algorithm

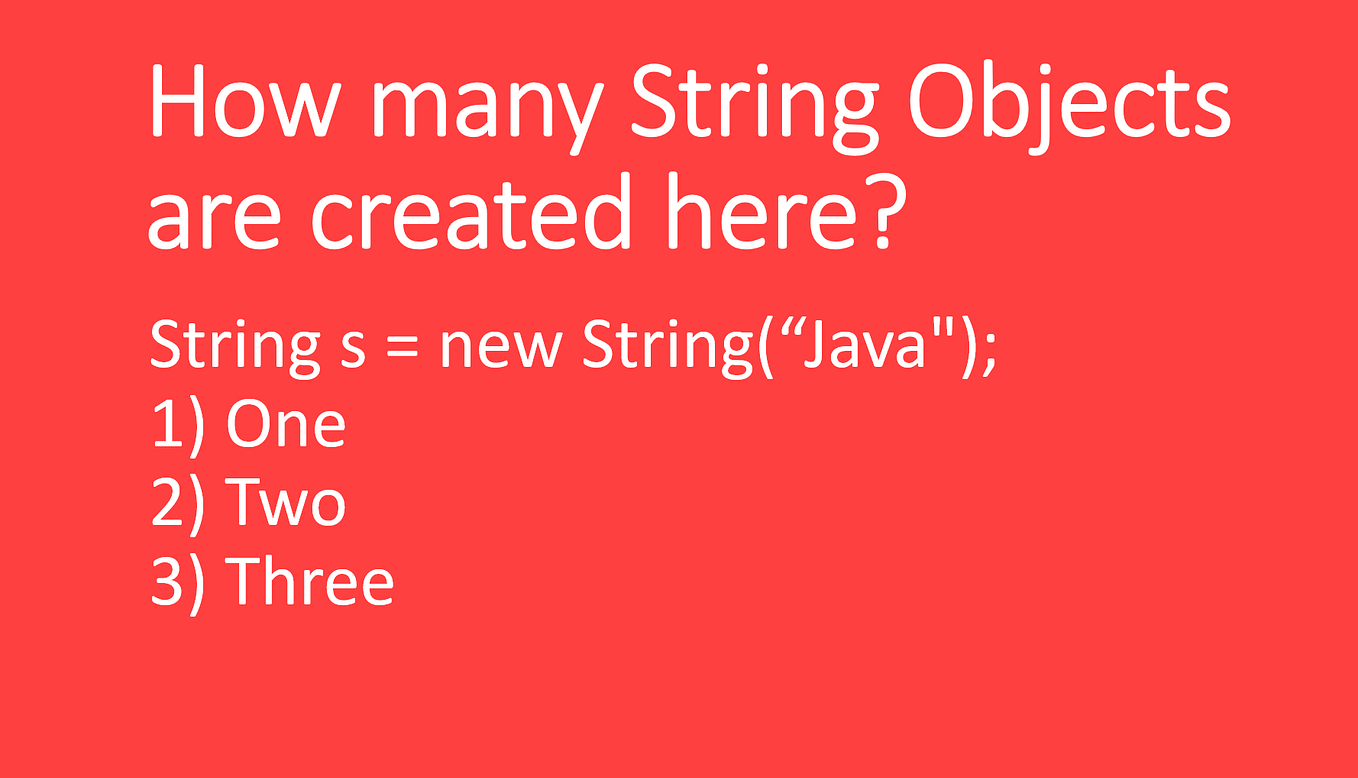

Memoization is closely related to dynamic programming. In reduction-memoizing BSTs, each node can memoize a function of its subtrees. Consider the example of a BST of persons ordered by their ages. Now, let each node store the maximum income found among the people in its subtree. With this structure, you can answer queries like, “What is the maximum income of people aged between 18.3 and 25.3?” When the tree is kept balanced, it can also handle such queries and updates in logarithmic time.

Moreover, such data structures are straightforward to implement in C. You can also attempt to bind it to Ruby with a convenient API. Go for an interface that allows you to specify a ‘lambda’ as your ordering function and another as your subtree memoizing function. All in all, you can expect reduction-memoizing BSTs to be self-balancing BSTs with a dash of additional book-keeping.

As in dynamic programming, the implementation relies on caching intermediate results: each node in a reduction-memoizing BST caches a function of its subtrees. For example, take a BST of persons keyed by their age.

In this DSA project idea, each node stores the maximum salary found in its subtree. This framework can be used to answer questions like “What is the highest income among persons aged 25 to 30?”
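The age and income example can be sketched in Python as follows. This is a simplified illustration, not a full implementation: the tree is a plain, unbalanced BST (so the logarithmic bound only holds if insertions happen to keep it shallow), and the names `Node`, `insert`, and `max_income_between` are hypothetical.

```python
class Node:
    def __init__(self, age, income):
        self.age = age
        self.income = income
        self.left = None
        self.right = None
        self.max_income = income  # memoized maximum over this node's subtree


def insert(node, age, income):
    """Insert (age, income) and refresh memoized maxima along the search path."""
    if node is None:
        return Node(age, income)
    if age < node.age:
        node.left = insert(node.left, age, income)
    else:
        node.right = insert(node.right, age, income)
    node.max_income = max(node.max_income, income)
    return node


def _max_ge(node, lo):
    """Maximum income among ages >= lo in this subtree, or None."""
    if node is None:
        return None
    if node.age < lo:
        return _max_ge(node.right, lo)
    # node and its entire right subtree qualify: use the memoized value
    best = node.income
    if node.right is not None:
        best = max(best, node.right.max_income)
    left = _max_ge(node.left, lo)
    return best if left is None else max(best, left)


def _max_le(node, hi):
    """Maximum income among ages <= hi in this subtree, or None."""
    if node is None:
        return None
    if node.age > hi:
        return _max_le(node.left, hi)
    # node and its entire left subtree qualify: use the memoized value
    best = node.income
    if node.left is not None:
        best = max(best, node.left.max_income)
    right = _max_le(node.right, hi)
    return best if right is None else max(best, right)


def max_income_between(root, lo, hi):
    """Maximum income among people with lo <= age <= hi, or None if nobody qualifies."""
    node = root
    while node is not None and not (lo <= node.age <= hi):
        node = node.left if node.age > hi else node.right
    if node is None:
        return None
    parts = [node.income, _max_ge(node.left, lo), _max_le(node.right, hi)]
    return max(p for p in parts if p is not None)


root = None
for age, income in [(30, 50), (20, 40), (40, 90), (25, 70), (18, 20), (22, 65), (35, 10)]:
    root = insert(root, age, income)
print(max_income_between(root, 18.3, 25.3))  # 70
```

Each query walks a single root-to-leaf path on either side of the split node and reads whole subtrees from the memoized `max_income` field, which is what makes the cost proportional to the tree height.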

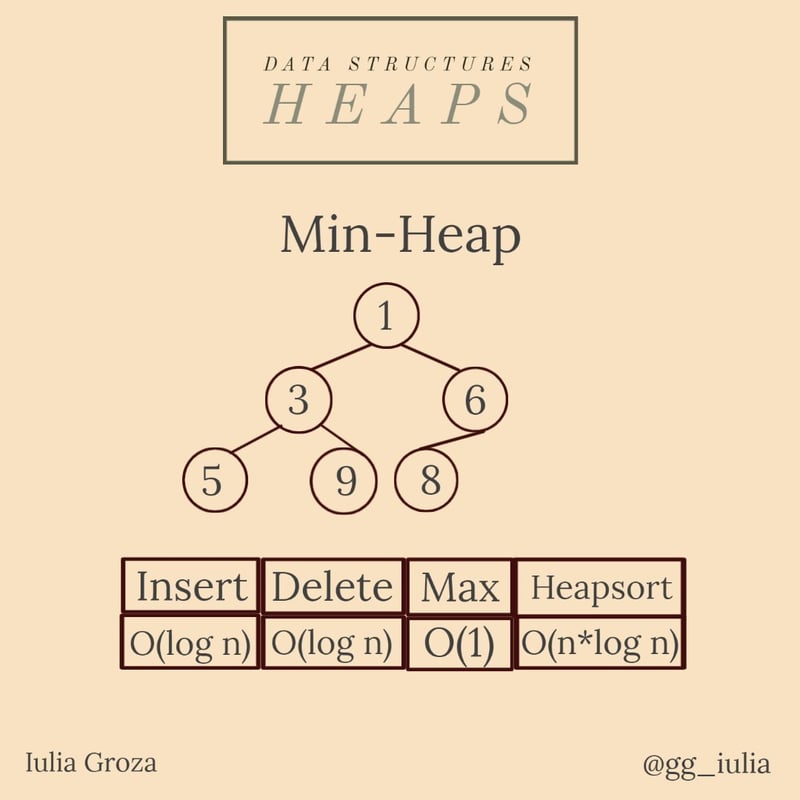

3. Heap insertion time

When looking for data structure projects, you want to encounter distinct problems being solved with creative approaches. One such unique research question concerns the average-case insertion time for binary heap data structures. According to some online sources, it is constant time, while others imply that it is log(n) time.

But Bollobás and Simon give a numerically-backed answer in their paper entitled “Repeated random insertion into a priority queue.” First, they assume a scenario where you want to insert n elements into an empty heap. There are n! possible insertion orders. Then, they average the cost over these orders to prove that the average insertion cost is bounded by a constant of 1.7645.

In this project idea, you will see a familiar question answered with a novel method. The research subject is the average-case insertion time for the binary heap data structure.

Inserting n elements into an empty heap can happen in n! different orders, which you can simulate in a suitable DSA project in C++. You can then apply the average cost approach to check that the insertion time is bounded by a fixed constant.
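A quick experiment along these lines can be sketched in Python. It inserts a random permutation into an empty binary min-heap and counts how many levels each new element moves up. Treating "levels moved up" as the cost is an assumption made for this sketch; it may not be exactly the measure used in the paper, and the function names are hypothetical.

```python
import random


def sift_up_swaps(heap, value):
    """Append value to a binary min-heap (stored in a list); return the number of swaps."""
    heap.append(value)
    i = len(heap) - 1
    swaps = 0
    while i > 0:
        parent = (i - 1) // 2
        if heap[parent] <= heap[i]:
            break                      # heap property already holds
        heap[parent], heap[i] = heap[i], heap[parent]
        i = parent
        swaps += 1
    return swaps


def average_insertion_cost(n, seed=0):
    """Insert a random permutation of 0..n-1 and return the mean swaps per insertion."""
    rng = random.Random(seed)
    values = list(range(n))
    rng.shuffle(values)
    heap = []
    total = sum(sift_up_swaps(heap, v) for v in values)
    return total / n


for n in (1_000, 10_000, 100_000):
    print(n, round(average_insertion_cost(n), 3))
```

If the average cost were logarithmic, the printed values would keep growing with n; a roughly flat sequence is what a constant bound predicts.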

4. Optimal treaps with priority-changing parameters

Treaps are a combination of BSTs and heaps. These randomized data structures involve assigning specific priorities to the nodes. You can go for a project that optimizes a set of parameters under different settings. For instance, you can set higher preferences for nodes that are accessed more frequently than others. Here, each access will set off a two-fold process:

- Choosing a random number

- Replacing the node’s priority with that number if it is found to be higher than the previous priority

As a result of this modification, the tree will lose its random shape. It is likely that the frequently-accessed nodes would now be near the tree’s root, hence delivering faster searches. So, experiment with this data structure and try to base your argument on evidence.

At the end of the project, you can either make an original discovery or even conclude that changing the priority of the node does not deliver much speed. It will be a relevant and useful exercise, nevertheless.

Constructing a treap involves building a binary search tree on the keys and also making it satisfy the heap property. If both orderings were driven by a single key, the tree would degenerate into a line. This is because in a BST, the right child should be greater than or equal to its parent and the left child should be less than its parent, whereas in a heap, every parent must be either larger than all of its children or smaller than all of them.

In a typical treap diagram, the numbers show the heap priorities (organized in max-heap order) and the letters show the BST keys. The unique property of the treap data structure, which you can exploit in DSA projects in C++, is that a given set of keys and distinct priorities has only one arrangement, irrespective of the order in which the elements were inserted.

Using a random heap priority makes the second key useful: the tree’s structure then depends entirely on the randomized priorities assigned to the nodes. In file structure mini project topics, you obtain this behaviour simply by assigning the heap priorities at random.

5. Research project on k-d trees

K-dimensional trees or k-d trees organize and represent spatial data. These data structures have several applications, particularly in multi-dimensional key searches like nearest neighbor and range searches. Here is how k-d trees operate:

- Every leaf node of the binary tree is a k-dimensional point

- Every non-leaf node defines a splitting hyperplane, perpendicular to one chosen dimension, that divides the space into two half-spaces

- The left subtree of a particular node represents the points to the left of the hyperplane. Similarly, the right subtree of that node denotes the points in the right half.

You can probe one step further and construct a self-balanced k-d tree where each leaf node would have the same distance from the root. Also, you can test it to find whether such balanced trees would prove optimal for a particular kind of application.
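As a starting point for such experiments, here is a small Python sketch of a k-d tree with a nearest-neighbor search. It uses the common variant in which every node stores a point and the splitting axis cycles with depth; splitting at the median keeps the tree balanced at build time only. The names `KDNode`, `build_kdtree`, and `nearest` are hypothetical.

```python
class KDNode:
    def __init__(self, point, axis, left=None, right=None):
        self.point = point
        self.axis = axis      # dimension used to split at this node
        self.left = left
        self.right = right


def build_kdtree(points, depth=0):
    """Build a balanced k-d tree by splitting at the median along a cycling axis."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return KDNode(points[mid], axis,
                  build_kdtree(points[:mid], depth + 1),
                  build_kdtree(points[mid + 1:], depth + 1))


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(node, target, best=None):
    """Return the stored point closest to target (None for an empty tree)."""
    if node is None:
        return best
    if best is None or sq_dist(node.point, target) < sq_dist(best, target):
        best = node.point
    diff = target[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, target, best)
    # Search the far side only if the splitting plane is closer than the best match.
    if diff ** 2 < sq_dist(best, target):
        best = nearest(far, target, best)
    return best


tree = build_kdtree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
print(nearest(tree, (9, 2)))  # (8, 1)
```

The pruning test on `diff ** 2` is where the tree saves work: whole half-spaces are skipped when they cannot contain a closer point.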

With this, we have covered five interesting ideas that you can study, investigate, and try out. Now, let us look at some more projects on data structures and algorithms.

6. Knight’s travails

In this project, we will understand two algorithms in action: BFS and DFS. BFS stands for Breadth-First Search and uses a queue to find the shortest path, whereas DFS stands for Depth-First Search and uses a stack.

For starters, you will need a data structure similar to binary trees. Now, suppose that you have a standard 8 X 8 chessboard, and you want to show the knight’s movements in a game. As you may know, a knight’s basic move in chess is two forward steps and one sidestep. Facing in any direction and given enough turns, it can move from any square on the board to any other square.

If you want to know the simplest way your knight can move from one square (or node) to another in a two-dimensional setup, you will first have to build a function like the one below.

- knight_plays([0,0], [1,2]) == [[0,0], [1,2]]

- knight_plays([0,0], [3,3]) == [[0,0], [1,2], [3,3]]

- knight_plays([3,3], [0,0]) == [[3,3], [1,2], [0,0]]

Furthermore, this project would require the following tasks:

- Creating a script for a game board and a knight

- Treating all possible moves of the knight as children in the tree structure

- Ensuring that any move does not go off the board

- Choosing a search algorithm for finding the shortest path in this case

- Applying the appropriate search algorithm to find the best possible move from the starting square to the ending square.
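The tasks above can be sketched with a breadth-first search in Python. This is one possible implementation of the `knight_plays` function, assuming squares are given as `[column, row]` pairs on a zero-indexed board; the `MOVES` table and the `size` parameter are choices made for this sketch. Since several shortest paths can exist between two squares, the exact path returned depends on the order in which moves are tried.

```python
from collections import deque

# The eight L-shaped knight moves as (dx, dy) offsets.
MOVES = [(1, 2), (2, 1), (2, -1), (1, -2), (-1, -2), (-2, -1), (-2, 1), (-1, 2)]


def knight_plays(start, goal, size=8):
    """Return one shortest knight path from start to goal as a list of [x, y] squares."""
    start, goal = tuple(start), tuple(goal)
    parent = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        square = queue.popleft()
        if square == goal:
            path = []
            while square is not None:       # walk back to the start
                path.append(list(square))
                square = parent[square]
            return path[::-1]
        x, y = square
        for dx, dy in MOVES:
            nxt = (x + dx, y + dy)
            on_board = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if on_board and nxt not in parent:
                parent[nxt] = square
                queue.append(nxt)
    return None  # not reached on a standard board; kept for other board sizes


print(knight_plays([0, 0], [1, 2]))  # [[0, 0], [1, 2]]
print(knight_plays([0, 0], [3, 3]))  # [[0, 0], [1, 2], [3, 3]]
```

BFS is the natural choice here because it explores squares in order of increasing move count, so the first time the goal is dequeued the path to it is a shortest one.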

7. Fast data structures in non-C systems languages

Programmers usually build programs quickly using high-level languages like Ruby or Python but implement data structures in C/C++. And they create a binding code to connect the elements. However, the C language is believed to be error-prone, which can also cause security issues. Herein lies an exciting project idea.

You can implement a data structure in a modern low-level language such as Rust or Go, and then bind your code to the high-level language. With this project, you can try something new and also figure out how bindings work. If your effort is successful, you can even inspire others to do a similar exercise in the future and drive better performance-orientation of data structures.

8. Search engine for data structures

The software aims to automate and speed up the choice of data structures for a given API. This project not only demonstrates novel ways of representing different data structures but also optimizes a set of functions to equip inference on them. We have compiled its summary below.

- The data structure search engine project requires knowledge about data structures and the relationships between different methods.

- It computes the time taken by each possible composite data structure for all the methods.

- Finally, it selects the best data structures for a particular case.

9. Phone directory application using doubly-linked lists

This project can demonstrate the working of contact book applications and also teach you about data structures like arrays, linked lists, stacks, and queues. Typically, phone book management encompasses searching, sorting, and deleting operations. A distinctive feature of the search queries here is that the user sees suggestions from the contact list after entering each character. You can read the source-code of freely available projects and replicate the same to develop your skills.

This project demonstrates how address book programs work. It also teaches you about queues, stacks, linked lists, and arrays. Typically, the directory supports operations such as sorting, searching, and deleting. As the user types each character, the application shows suggestions from the address book, which is the distinctive feature of its search. You can inspect the code of widely used applications and DSA projects in C++ and ultimately reproduce them. This helps you to advance your data science career.

10. Spatial indexing with quadtrees

The quadtree data structure is a special type of tree structure, which can recursively divide a flat 2-D space into four quadrants. Each hierarchical node in this tree structure has either zero or four children. It can be used for various purposes like sparse data storage, image processing, and spatial indexing.

Spatial indexing is all about the efficient execution of select geometric queries, forming an essential part of geo-spatial application design. For example, ride-sharing applications like Ola and Uber process geo-queries to track the location of cabs and provide updates to users. Facebook’s Nearby Friends feature also has similar functionality. Here, the associated meta-data is stored in the form of tables, and a spatial index is created separately with the object coordinates. The problem objective is to find the nearest point to a given one.

You can pursue quadtree data structure projects in a wide range of fields, from mapping, urban planning, and transportation planning to disaster management and mitigation. We have provided a brief outline to fuel your problem-solving and analytical skills.

Quadtrees are a technique for indexing spatial data. The root node represents the whole area, and every internal node represents a quadrant obtained by halving its parent’s area along both axes. These basics are important for understanding quadtree-related data structure topics.

Objective: Creating a data structure that enables the following operations

- Insert a location or geometric space

- Search for the coordinates of a specific location

- Count the number of locations in the data structure in a particular contiguous area

One of the leading applications of quadtrees is finding the nearest neighbor. For example, suppose you are dealing with several points in a space, and somebody asks you for the nearest point to an arbitrary query point. You can search the quadtree to answer this question. If a quadrant contains no points, or lies too far away to hold a closer point than the best one found so far, you can skip it entirely. Consequently, you save time otherwise spent on comparisons.

Quadtrees are also used in image compression, wherein every node holds the average color of its children. You get a more detailed image as you descend deeper into the tree. Quadtrees are also used to search for nodes in a 2D area. For example, you can use quadtrees to find the nearest point to the given coordinates.

Follow these steps to build a quadtree from a two-dimensional area:

- Divide the existing two-dimensional space into four boxes.

- Create a child object if a box holds one or more points within. This object stores the box’s 2D space.

- Don’t create a child for a box that doesn’t include any points.

- Repeat these steps for each of the children.

You can follow these steps while working on one of the file structure mini project topics.
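The construction steps above can be sketched in Python as follows. The sketch assumes square regions given by a lower-left corner and a side length, points that lie inside the root region, and a stopping rule (one point per box, or a maximum depth) that the steps leave open. `QuadNode`, `build_quadtree`, and `count_in_region` are hypothetical names.

```python
class QuadNode:
    def __init__(self, x, y, size, points):
        self.x, self.y, self.size = x, y, size   # lower-left corner and side length
        self.points = points                     # points that fall inside this box
        self.children = []                       # only non-empty quadrants are stored


def build_quadtree(x, y, size, points, max_depth=8):
    """Recursively split a square region; assumes every point lies inside it."""
    node = QuadNode(x, y, size, points)
    if len(points) <= 1 or max_depth == 0:
        return node                              # stopping rule assumed for this sketch
    half = size / 2
    mid_x, mid_y = x + half, y + half
    buckets = {}
    for p in points:
        key = (p[0] >= mid_x, p[1] >= mid_y)     # which of the four boxes holds p
        buckets.setdefault(key, []).append(p)
    for (is_right, is_upper), inside in buckets.items():
        child_x = mid_x if is_right else x
        child_y = mid_y if is_upper else y
        node.children.append(
            build_quadtree(child_x, child_y, half, inside, max_depth - 1))
    return node


def count_in_region(node, x0, y0, x1, y1):
    """Count stored points with x0 <= px < x1 and y0 <= py < y1."""
    if (node.x >= x1 or node.x + node.size <= x0 or
            node.y >= y1 or node.y + node.size <= y0):
        return 0                                 # box and query region do not overlap
    if not node.children:
        return sum(1 for px, py in node.points
                   if x0 <= px < x1 and y0 <= py < y1)
    return sum(count_in_region(c, x0, y0, x1, y1) for c in node.children)


pts = [(1, 1), (2, 6), (5, 5), (6, 1), (7, 7), (3, 3)]
root = build_quadtree(0, 0, 8, pts)
print(count_in_region(root, 0, 0, 4, 4))  # 2
```

Empty quadrants never get a child object, so a region query skips them without any comparisons, which matches the counting operation listed in the objective.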

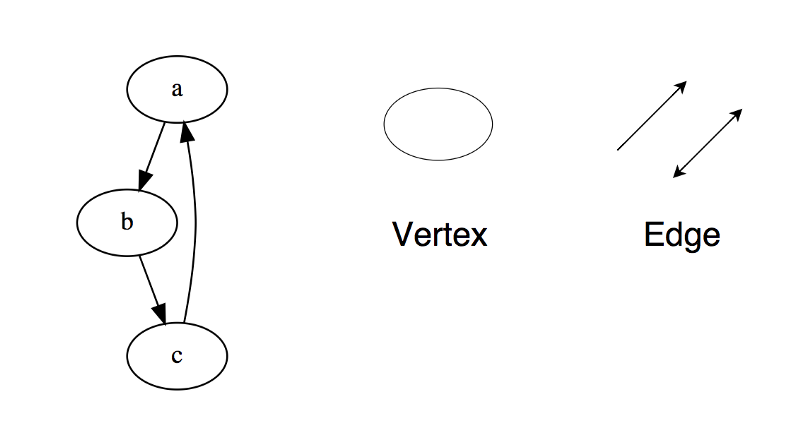

11. Graph-based projects on data structures

You can take up a project on topological sorting of a graph. For this, you will need prior knowledge of the DFS algorithm. Here is the primary difference between the two approaches:

- We print a vertex & then recursively call the algorithm for adjacent vertices in DFS.

- In topological sorting, we recursively first call the algorithm for adjacent vertices. And then, we push the content into a stack for printing.

Therefore, the topological sort algorithm takes a directed acyclic graph (DAG) and returns an array of nodes in which every node appears before the nodes it points to.

Let us consider the simple example of ordering a pancake recipe. To make pancakes, you need a specific set of ingredients, such as eggs, milk, flour or pancake mix, oil, syrup, etc. This information, along with the quantity and portions, can be easily represented in a graph.

But it is equally important to know the precise order of using these ingredients. This is where you can implement topological ordering. Other examples include making precedence charts for optimizing database queries and schedules for software projects. Here is an overview of the process for your reference:

- Call the DFS algorithm for the graph data structure to compute the finish times for the vertices

- Store the vertices in a list with a descending finish time order

- Execute the topological sort to return the ordered list
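The process above can be sketched in Python with a recursive DFS. The graph format (a dictionary mapping each step to the steps that depend on it) and the pancake steps are made up for illustration, and the sketch assumes the input really is a DAG, so it does no cycle detection.

```python
def topological_sort(graph):
    """Return the nodes of a DAG so that every node precedes the nodes it points to."""
    visited = set()
    finished = []                 # nodes in order of increasing finish time

    def dfs(node):
        visited.add(node)
        for nxt in graph.get(node, []):
            if nxt not in visited:
                dfs(nxt)
        finished.append(node)     # recorded only after all descendants are done

    for node in graph:
        if node not in visited:
            dfs(node)
    return finished[::-1]         # descending finish time


# Hypothetical recipe: each key must happen before the steps it lists.
recipe = {
    "flour": ["batter"],
    "eggs": ["batter"],
    "milk": ["batter"],
    "batter": ["cook"],
    "oil": ["cook"],
    "cook": ["add syrup"],
    "add syrup": [],
}
order = topological_sort(recipe)
print(order)
```

Appending a node only after its recursive calls return is what distinguishes this from a plain DFS printout, and reversing the list gives the descending finish-time order described in the steps.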

12. Numerical representations with random access lists

The numerical representations you have seen so far are usually binomial heaps. But the same pattern can also be implemented in other data structures. Okasaki has come up with a numerical representation technique using binary random access lists. These lists have many advantages:

- They enable insertion at and removal from the beginning

- They allow access and update at a particular index

13. Stack-based text editor

Your regular text editor has to edit and store text while it is being written. The cursor position changes constantly, so to achieve high efficiency, we require a data structure that supports fast insertion and modification. Ordinary character arrays are slow here, because inserting in the middle means shifting every character that follows.

You can experiment with other data structures like gap buffers and ropes to solve these issues. Your end objective will be to attain faster concatenation than the usual strings by occupying smaller contiguous memory space.
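As an illustration of one of these alternatives, here is a minimal gap buffer sketch in Python. It keeps the free space (the gap) at the cursor so that typing there needs no shifting. The class name, the method names, and the growth policy are assumptions made for this sketch rather than a reference design.

```python
class GapBuffer:
    def __init__(self, text="", capacity=16):
        size = max(capacity, 2 * len(text))
        self.buf = list(text) + [None] * (size - len(text))
        self.gap_start = len(text)   # cursor position; the gap is buf[gap_start:gap_end]
        self.gap_end = size

    def __len__(self):
        return len(self.buf) - (self.gap_end - self.gap_start)

    def move_cursor(self, pos):
        """Shift the gap so that it starts at text position pos."""
        pos = max(0, min(pos, len(self)))
        while self.gap_start > pos:          # move characters from before the gap to after it
            self.gap_start -= 1
            self.gap_end -= 1
            self.buf[self.gap_end] = self.buf[self.gap_start]
        while self.gap_start < pos:          # move characters from after the gap to before it
            self.buf[self.gap_start] = self.buf[self.gap_end]
            self.gap_start += 1
            self.gap_end += 1

    def insert(self, ch):
        """Insert one character at the cursor."""
        if self.gap_start == self.gap_end:   # gap is full: enlarge it
            extra = max(16, len(self.buf))
            self.buf[self.gap_end:self.gap_end] = [None] * extra
            self.gap_end += extra
        self.buf[self.gap_start] = ch
        self.gap_start += 1

    def delete(self):
        """Delete the character before the cursor (backspace)."""
        if self.gap_start > 0:
            self.gap_start -= 1

    def text(self):
        return "".join(self.buf[:self.gap_start] + self.buf[self.gap_end:])


editor = GapBuffer("helo")
editor.move_cursor(3)
editor.insert("l")
print(editor.text())  # hello
```

Insertions and deletions at the cursor are constant time apart from occasional growth; the cost is paid when the cursor moves, in proportion to the distance moved, which suits typical editing where consecutive edits are close together.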

This project handles text manipulation and offers features that improve the editing experience. The key functionalities of a text editor include deleting, inserting, and viewing text. Other features needed to compete with existing text editors are copy/cut and paste, find and replace, sentence highlighting, text formatting, etc.

How this project works depends on the data structures you choose for your operations. You will face tradeoffs when choosing among them, because you must weigh implementation difficulty against memory use and performance. You can use this project idea in different file structure mini project topics to accelerate text insertion and modification.

Data structure skills are foundational in software development, especially for managing vast data sets in today’s digital landscape. Top companies like Adobe, Amazon, and Google seek professionals proficient in data structures and algorithms for lucrative positions. During interviews, recruiters evaluate not only theoretical knowledge but also practical skills. Therefore, practicing data structure project ideas for beginners is essential to kickstart your career.

If you’re interested in delving into data science, I strongly recommend exploring IIIT-B & upGrad’s Executive PG Programme in Data Science. Tailored for working professionals, this program offers 10+ case studies & projects, practical workshops, mentorship with industry experts, 1-on-1 sessions with mentors, 400+ hours of learning, and job assistance with leading firms. It’s a comprehensive opportunity to advance your skills and excel in the field.

Rohit Sharma

Frequently Asked Questions (FAQs)

Certain types of containers are used to store data. These containers are data structures. They have different properties associated with them, which determine how the data in them is stored, organized, and manipulated. There are two types of data structures based on how they arrange the data: linear data structures, like arrays and linked lists, and non-linear data structures, like trees and graphs.

In linear data structures, the elements are arranged in a sequence, each connected to the next and previous elements, whereas in non-linear data structures, data is connected in a non-linear or hierarchical manner. Implementing a linear data structure is much easier than a non-linear one since it involves only a single level. Memory-wise, non-linear data structures can be the better choice, since they tend to use memory more efficiently.

You can see applications based on data structures everywhere around you. The Google Maps application is based on graphs, call centre systems use queues, file explorer applications are based on trees, and even the text editor that you use every day relies on the stack data structure. The list goes on. Not just applications, but many popular algorithms are also based on these data structures. One such example is decision trees. Google Search uses trees to implement the auto-complete feature in its search bar.

Data Structures Tutorial

Data structures are essential components that help organize and store data efficiently in computer memory. They provide a way to manage and manipulate data effectively, enabling faster access, insertion, and deletion operations.

Common data structures include arrays, linked lists, stacks, queues, trees, and graphs, each serving specific purposes based on the requirements of the problem. Understanding data structures is fundamental for designing efficient algorithms and optimizing software performance.

Data Structure

Table of Contents

- What is a Data Structure?

- Why are Data Structures Important?

- Classification of Data Structures

- Types of Data Structures

- Applications of Data Structures

- Learn Basics of Data Structure

- Most Popular Data Structures

- Advanced Data Structure

A data structure is a way of organizing and storing data in a computer so that it can be accessed and used efficiently. It defines the relationship between the data and the operations that can be performed on the data.

Data structures are essential for the following reasons:

- Efficient Data Management: They enable efficient storage and retrieval of data, reducing processing time and improving performance.

- Data Organization: They organize data in a logical manner, making it easier to understand and access.

- Data Abstraction: They hide the implementation details of data storage, allowing programmers to focus on the logical aspects of data manipulation.

- Reusability: Common data structures can be reused in multiple applications, saving time and effort in development.

- Algorithm Optimization: The choice of the appropriate data structure can significantly impact the efficiency of algorithms that operate on the data.

Data structures can be classified into two main categories:

- Linear Data Structures: These structures store data in sequential order, so each element has at most one predecessor and one successor and the elements can be traversed in a single pass. Examples include arrays, linked lists, stacks, and queues.

- Non-Linear Data Structures: These structures store data in a hierarchical or interconnected manner, thus allowing for more complex relationships between data elements. Examples include trees, graphs, and hash tables.

The most common data structures in each category are:

Linear Data Structures:

- Array: A collection of elements of the same type stored in contiguous memory locations.

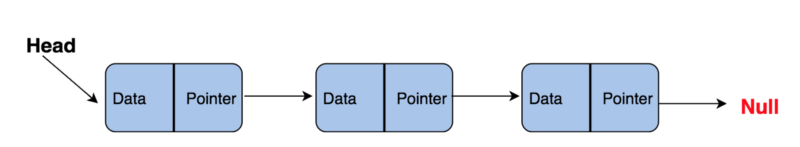

- Linked List: A collection of elements linked together by pointers, allowing for dynamic insertion and deletion.

- Queue: A First-In-First-Out (FIFO) structure where elements are added at the end and removed from the beginning.

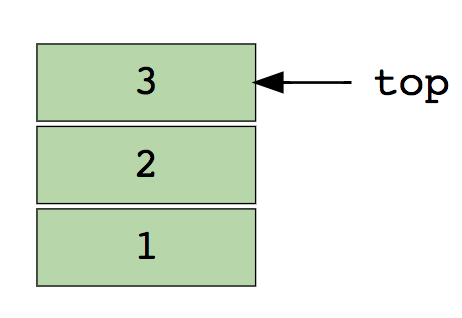

- Stack: A Last-In-First-Out (LIFO) structure where elements are added and removed from the top.

Non-Linear Data Structures:

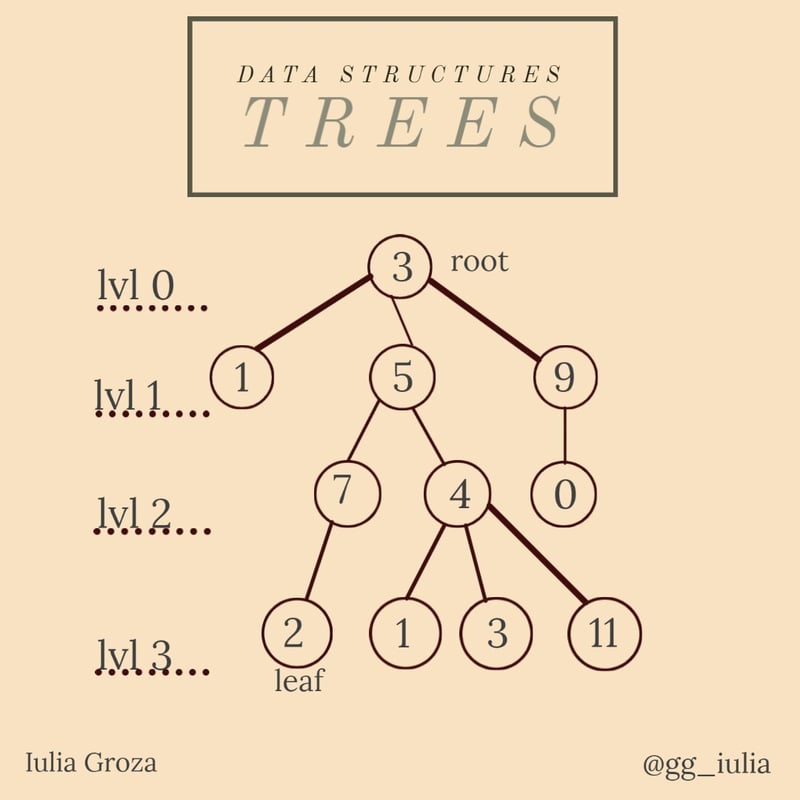

- Tree: A hierarchical structure where each node can have multiple child nodes.

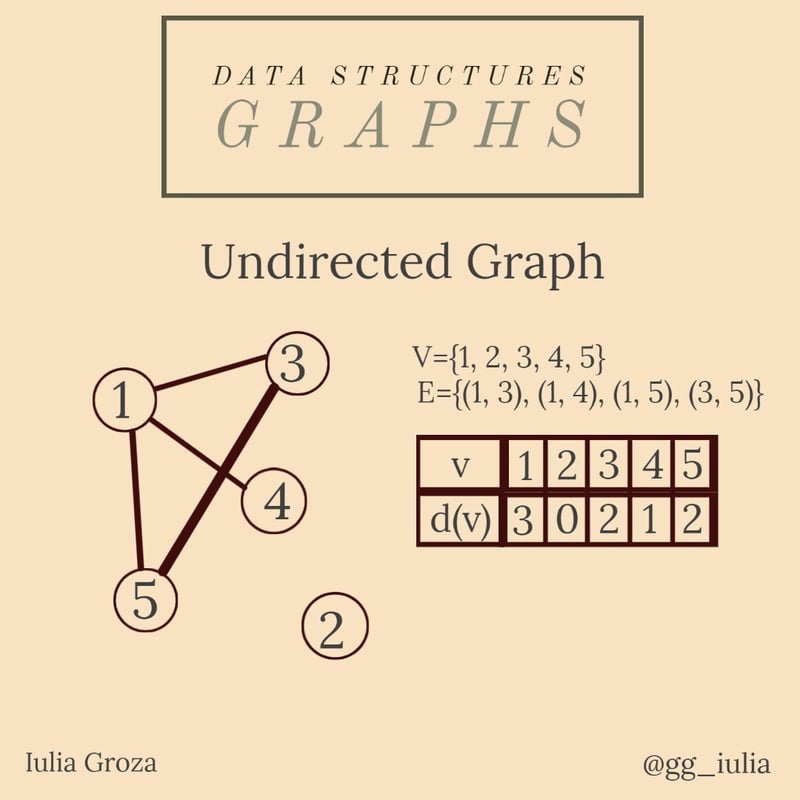

- Graph: A collection of nodes connected by edges, representing relationships between data elements.

- Hash Table: A data structure that uses a hash function to map keys to values, allowing for fast lookup and insertion.

Data structures are widely used in various applications, including:

- Database Management Systems: To store and manage large amounts of structured data.

- Operating Systems: To manage memory, processes, and files.

- Compiler Design: To represent source code and intermediate code.

- Artificial Intelligence: To represent knowledge and perform reasoning.

- Graphics and Multimedia: To store and process images, videos, and audio data.

Learn Basics of Data Structure:

- Overview of Data Structures | Set 3 (Graph, Trie, Segment Tree and Suffix Tree)

- Abstract Data Types

Most Popular Data Structures :

Below are some of the most popular data structures:

1. Array:

Array is a linear data structure that stores a collection of elements of the same data type. Elements are allocated contiguous memory, allowing for constant-time access. Each element has a unique index number.
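As a minimal sketch of index-based access, the snippet below uses Python's standard `array` module, which stores same-typed values contiguously. The variable names (`scores`, `first`) are illustrative assumptions, not part of the original article.

```python
from array import array

# A typed array of signed integers ('i'); every element has the same type.
scores = array("i", [10, 20, 30, 40])

# Reading or writing by index takes constant time.
first = scores[0]
scores[2] = 35

# Inserting in the middle shifts all later elements, so it costs O(n).
scores.insert(1, 15)
```

The contrast between the constant-time index operations and the linear-time `insert` is the usual trade-off of contiguous storage.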

Important articles on Array:

- Search, insert and delete in an unsorted array

- Search, insert and delete in a sorted array

- Write a program to reverse an array

- Leaders in an array

- Given an array A[] and a number x, check for pair in A[] with sum as x

- Majority Element

- Find the Number Occurring Odd Number of Times

- Largest Sum Contiguous Subarray

- Find the Missing Number

- Search an element in a sorted and pivoted array

- Merge an array of size n into another array of size m+n

- Median of two sorted arrays

- Program for array rotation

- Reversal algorithm for array rotation

- Block swap algorithm for array rotation

- Maximum sum such that no two elements are adjacent

- Sort elements by frequency | Set 1

- Count Inversions in an array

Related articles on Array:

- All Articles on Array

- Coding Practice on Array

- Quiz on Array

- Recent Articles on Array

2. Matrix:

A matrix is a two-dimensional array of elements, arranged in rows and columns. It is represented as a rectangular grid, with each element at the intersection of a row and column.
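One simple way to picture this, assuming a list-of-rows representation (other layouts are possible), is the sketch below. The names `matrix`, `value`, and `transposed` are chosen for illustration.

```python
# A 2 x 3 matrix stored as a list of rows.
matrix = [
    [1, 2, 3],
    [4, 5, 6],
]

rows, cols = len(matrix), len(matrix[0])

# Element at row 1, column 2 (both indices are zero-based).
value = matrix[1][2]

# Transpose: row r, column c of the original becomes row c, column r.
transposed = [[matrix[r][c] for r in range(rows)] for c in range(cols)]
```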

Important articles on Matrix:

- Search in a row wise and column wise sorted matrix

- Print a given matrix in spiral form

- A Boolean Matrix Question

- Print unique rows in a given boolean matrix

- Maximum size square sub-matrix with all 1s

- Inplace M x N size matrix transpose | Updated

- Dynamic Programming | Set 27 (Maximum sum rectangle in a 2D matrix)

- Strassen’s Matrix Multiplication

- Create a matrix with alternating rectangles of O and X

- Print all elements in sorted order from row and column wise sorted matrix

- Given an n x n square matrix, find sum of all sub-squares of size k x k

- Count number of islands where every island is row-wise and column-wise separated

- Find a common element in all rows of a given row-wise sorted matrix

Related articles on Matrix:

- All Articles on Matrix

- Coding Practice on Matrix

- Recent Articles on Matrix

3. Linked List:

A linear data structure where elements are stored in nodes linked together by pointers. Each node contains the data and a pointer to the next node in the list. Linked lists are efficient for inserting and deleting elements, but they can be slower for accessing elements than arrays.
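The sketch below illustrates why insertion and deletion are cheap while access is slower: changing the list only rewires pointers, but reaching an element means walking the chain. The class and method names (`Node`, `SinglyLinkedList`, `push_front`, `remove`, `to_list`) are assumptions made for this example.

```python
class Node:
    def __init__(self, data, next=None):
        self.data = data
        self.next = next


class SinglyLinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, data):
        """Insert at the head in O(1); no elements are shifted."""
        self.head = Node(data, self.head)

    def remove(self, key):
        """Unlink the first node holding key; return True if one was found."""
        prev, cur = None, self.head
        while cur is not None and cur.data != key:
            prev, cur = cur, cur.next
        if cur is None:
            return False
        if prev is None:
            self.head = cur.next
        else:
            prev.next = cur.next
        return True

    def to_list(self):
        """Walk the chain from head to tail; reaching item i costs O(i)."""
        out, cur = [], self.head
        while cur is not None:
            out.append(cur.data)
            cur = cur.next
        return out


items = SinglyLinkedList()
for x in (3, 2, 1):
    items.push_front(x)
items.remove(2)
```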

Types of Linked List:

a) Singly Linked List: Each node points to the next node in the list.

Important articles on Singly Linked List:

- Introduction to Linked List

- Linked List vs Array

- Linked List Insertion

- Linked List Deletion (Deleting a given key)

- Linked List Deletion (Deleting a key at given position)

- A Programmer’s approach of looking at Array vs. Linked List

- Find Length of a Linked List (Iterative and Recursive)

- How to write C functions that modify head pointer of a Linked List?

- Swap nodes in a linked list without swapping data

- Reverse a linked list

- Merge two sorted linked lists

- Merge Sort for Linked Lists

- Reverse a Linked List in groups of given size

- Detect and Remove Loop in a Linked List

- Add two numbers represented by linked lists | Set 1

- Rotate a Linked List

- Generic Linked List in C

b) Circular Linked List: The last node points back to the first node, forming a circular loop.

Important articles on Circular Linked List:

- Circular Linked List Introduction and Applications

- Circular Singly Linked List Insertion

- Circular Linked List Traversal

- Split a Circular Linked List into two halves

- Sorted insert for circular linked list

c) Doubly Linked List: Each node points to both the next and previous nodes in the list.

Important articles on Doubly Linked List:

- Doubly Linked List Introduction and Insertion

- Delete a node in a Doubly Linked List

- Reverse a Doubly Linked List

- The Great Tree-List Recursion Problem

- QuickSort on Doubly Linked List

- Merge Sort for Doubly Linked List

Related articles on Linked List:

- All Articles of Linked List

- Coding Practice on Linked List

- Recent Articles on Linked List

4. Stack:

Stack is a linear data structure in which insertions and removals happen at the same end, called the top. The resulting order is described as LIFO (Last In First Out) or, equivalently, FILO (First In Last Out): the element inserted last comes out first, and the element inserted first comes out last.
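A short sketch of LIFO behaviour, using a Python list as the stack and the classic balanced-brackets check as the task. The function name `is_balanced` is an assumption for this example.

```python
def is_balanced(expression):
    """Return True if every bracket in expression is properly closed."""
    pairs = {")": "(", "]": "[", "}": "{"}
    stack = []  # the end of the list acts as the top of the stack
    for ch in expression:
        if ch in "([{":
            stack.append(ch)  # push
        elif ch in pairs:
            # pop: the most recently opened bracket must match this closer
            if not stack or stack.pop() != pairs[ch]:
                return False
    return not stack  # leftover openers mean the expression is unbalanced
```

The most recently opened bracket is always the first one that must be closed, which is exactly the last-in, first-out order a stack provides.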

Important articles on Stack:

- Introduction to Stack

- Infix to Postfix Conversion using Stack

- Evaluation of Postfix Expression

- Reverse a String using Stack

- Implement two stacks in an array

- Check for balanced parentheses in an expression

- Next Greater Element

- Reverse a stack using recursion

- Sort a stack using recursion

- The Stock Span Problem

- Design and Implement Special Stack Data Structure

- Implement Stack using Queues

- Design a stack with operations on middle element

- How to efficiently implement k stacks in a single array?

Related articles on Stack:

- All Articles on Stack

- Coding Practice on Stack

- Recent Articles on Stack

5. Queue:

A Queue is a linear data structure that stores and manages data in a specific order. It follows the “First In, First Out” (FIFO) principle, where the first element added to the queue is the first one to be removed.
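As a minimal illustration of FIFO order, the sketch below uses `collections.deque` from the Python standard library, which supports constant-time appends and pops at both ends. The variable names are illustrative.

```python
from collections import deque

queue = deque()
queue.append("a")  # enqueue at the rear
queue.append("b")
queue.append("c")

served = queue.popleft()  # dequeue from the front
```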

Important articles on Queue:

- Queue Introduction and Array Implementation

- Linked List Implementation of Queue

- Applications of Queue Data Structure

- Priority Queue Introduction

- Deque (Introduction and Applications)

- Implementation of Deque using circular array

- Implement Queue using Stacks

- Find the first circular tour that visits all petrol pumps

- Maximum of all subarrays of size k

- An Interesting Method to Generate Binary Numbers from 1 to n

- How to efficiently implement k Queues in a single array?

Related articles on Queue:

- All Articles on Queue

- Coding Practice on Queue

- Recent Articles on Queue

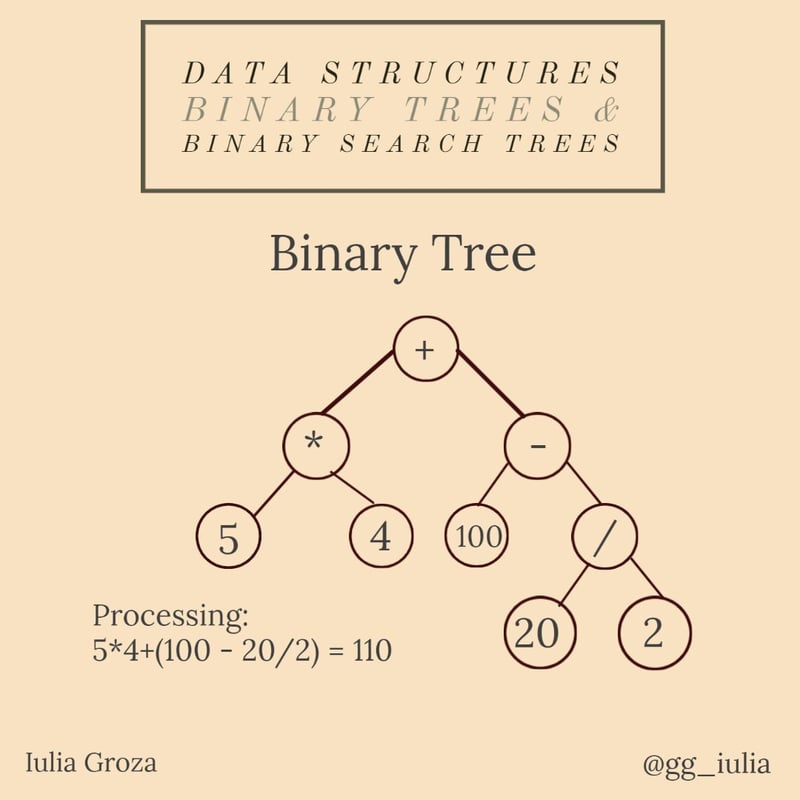

6. Binary Tree:

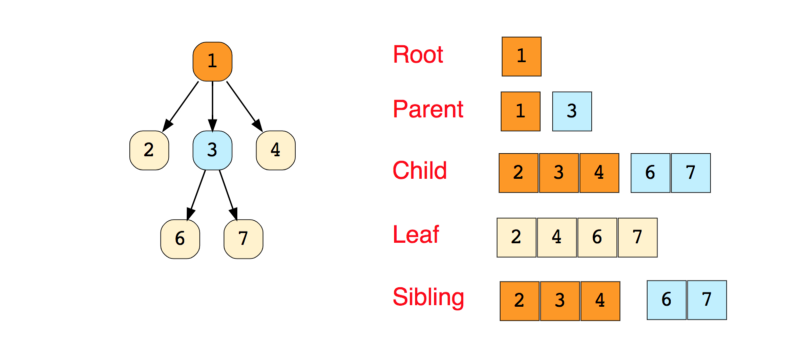

Binary Tree is a hierarchical data structure where each node has at most two child nodes, referred to as the left child and the right child. Binary trees are mostly used to represent hierarchical data, such as file systems or family trees.
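The following sketch shows one possible node representation together with two common recursive operations. The names `TreeNode`, `inorder`, and `height` are assumptions for this example.

```python
class TreeNode:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def inorder(node):
    """Visit the left subtree, then the node, then the right subtree."""
    if node is None:
        return []
    return inorder(node.left) + [node.value] + inorder(node.right)


def height(node):
    """Number of nodes on the longest path from this node down to a leaf."""
    if node is None:
        return 0
    return 1 + max(height(node.left), height(node.right))


#        1
#       / \
#      2   3
#     / \
#    4   5
root = TreeNode(1, TreeNode(2, TreeNode(4), TreeNode(5)), TreeNode(3))
```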

Important articles on Binary Tree:

- Binary Tree Introduction

- Binary Tree Properties

- Types of Binary Tree

- Handshaking Lemma and Interesting Tree Properties

- Enumeration of Binary Tree

- Applications of tree data structure

- Tree Traversals

- BFS vs DFS for Binary Tree

- Level Order Tree Traversal

- Diameter of a Binary Tree

- Inorder Tree Traversal without Recursion

- Inorder Tree Traversal without recursion and without stack!

- Threaded Binary Tree

- Maximum Depth or Height of a Tree

- If you are given two traversal sequences, can you construct the binary tree?

- Clone a Binary Tree with Random Pointers

- Construct Tree from given Inorder and Preorder traversals

- Maximum width of a binary tree

- Print nodes at k distance from root

- Print Ancestors of a given node in Binary Tree

- Check if a binary tree is subtree of another binary tree

- Connect nodes at same level

Related articles on Binary Tree:

- All articles on Binary Tree

- Coding Practice on Binary Tree

- Recent Articles on Tree

7. Binary Search Tree:

A Binary Search Tree is a binary tree that keeps its keys in sorted order. Each node has at most two children, and every key in a node's left subtree is less than the node's key while every key in its right subtree is greater. Because each comparison discards one subtree, searching, insertion, and deletion take time proportional to the height of the tree, which is O(log n) when the tree is balanced.
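A minimal sketch of insertion and search follows. It assumes distinct keys (duplicates are ignored) and does no rebalancing; the names `BSTNode`, `insert`, `contains`, and `sorted_keys` are chosen for illustration.

```python
class BSTNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    """Insert key and return the (possibly new) subtree root."""
    if root is None:
        return BSTNode(key)
    if key < root.key:
        root.left = insert(root.left, key)
    elif key > root.key:
        root.right = insert(root.right, key)
    return root  # equal keys are ignored in this sketch


def contains(root, key):
    """Follow one branch per comparison until the key is found or absent."""
    while root is not None:
        if key == root.key:
            return True
        root = root.left if key < root.key else root.right
    return False


def sorted_keys(root):
    """An inorder traversal of a BST yields its keys in ascending order."""
    if root is None:
        return []
    return sorted_keys(root.left) + [root.key] + sorted_keys(root.right)


root = None
for k in (50, 30, 70, 20, 40, 60, 80):
    root = insert(root, k)
```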

Important articles on Binary Search Tree:

- Search and Insert in BST

- Deletion from BST

- Minimum value in a Binary Search Tree

- Inorder predecessor and successor for a given key in BST

- Check if a binary tree is BST or not

- Lowest Common Ancestor in a Binary Search Tree

- Inorder Successor in Binary Search Tree

- Find k-th smallest element in BST (Order Statistics in BST)

- Merge two BSTs with limited extra space

- Two nodes of a BST are swapped, correct the BST

- Floor and Ceil from a BST

- In-place conversion of Sorted DLL to Balanced BST

- Find a pair with given sum in a Balanced BST

- Total number of possible Binary Search Trees with n keys

- Merge Two Balanced Binary Search Trees

- Binary Tree to Binary Search Tree Conversion

Related articles on Binary Search Tree:

- All Articles on Binary Search Tree

- Coding Practice on Binary Search Tree

- Recent Articles on BST

8. Heap:

A Heap is a complete binary tree that satisfies the heap property: in a max-heap, the value of every node is greater than or equal to the values of its children; in a min-heap, it is less than or equal to them. Heaps are commonly used to implement priority queues, where the largest (max-heap) or smallest (min-heap) element is always at the root of the tree.
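As a small illustration of a heap used as a priority queue, the sketch below relies on Python's standard `heapq` module, which maintains a min-heap inside a plain list. The task names and priorities are made up for the example.

```python
import heapq

# (priority, task) pairs; a lower number means higher priority.
tasks = [(3, "write report"), (1, "fix outage"), (2, "review patch")]

heapq.heapify(tasks)                        # build a min-heap in O(n)
heapq.heappush(tasks, (0, "page on-call"))  # O(log n) insertion

# Repeatedly remove the smallest pair; each pop costs O(log n).
order = [heapq.heappop(tasks)[1] for _ in range(len(tasks))]
```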

Important articles on Heap:

- Binary Heap

- Why is Binary Heap Preferred over BST for Priority Queue?

- K’th Largest Element in an array

- Sort an almost sorted array

- Binomial Heap

- Fibonacci Heap

- Tournament Tree (Winner Tree) and Binary Heap

Related articles on Heap:

- All Articles on Heap

- Coding Practice on Heap

- Recent Articles on Heap

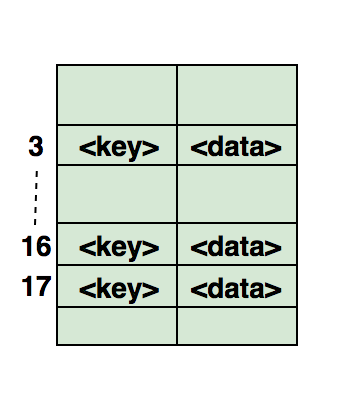

9. Hashing:

Hashing is a technique that generates a fixed-size output (hash value) from an input of variable size using mathematical formulas called hash functions. Hashing is used to determine an index or location for storing an item in a data structure, allowing for efficient retrieval and insertion.
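To make the idea of "hash value as storage index" concrete, here is a minimal hash table sketch that resolves collisions by separate chaining. It assumes Python's built-in `hash` as the hash function and never resizes; the class name `ChainedHashTable` and its methods are hypothetical.

```python
class ChainedHashTable:
    def __init__(self, buckets=8):
        # Each bucket is a list of (key, value) pairs that share an index.
        self._buckets = [[] for _ in range(buckets)]

    def _index(self, key):
        # Map the hash value onto a valid bucket position.
        return hash(key) % len(self._buckets)

    def put(self, key, value):
        bucket = self._buckets[self._index(key)]
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))

    def get(self, key, default=None):
        for k, v in self._buckets[self._index(key)]:
            if k == key:
                return v
        return default


table = ChainedHashTable(buckets=2)  # few buckets, so collisions do occur
table.put("apple", 1)
table.put("pear", 2)
table.put("plum", 5)
table.put("apple", 3)
```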

Important articles on Hashing:

- Hashing Introduction

- Separate Chaining for Collision Handling

- Open Addressing for Collision Handling

- Print a Binary Tree in Vertical Order

- Find whether an array is subset of another array

- Union and Intersection of two Linked Lists

- Find a pair with given sum

- Check if a given array contains duplicate elements within k distance from each other

- Find Itinerary from a given list of tickets

- Find number of Employees Under every Employee

Related articles on Hashing:

- All Articles on Hashing

- Coding Practice on Hashing

- Recent Articles on Hashing

10. Graph:

Graph is a collection of nodes connected by edges. Graphs are mostly used to represent networks, such as social networks or transportation networks.
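The sketch below stores a small undirected graph as an adjacency list (a dictionary of neighbour lists, one of several possible representations) and visits it breadth-first. The graph contents and the function name `bfs_order` are assumptions for illustration.

```python
from collections import deque

# Undirected graph: each edge appears in both endpoints' neighbour lists.
graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}


def bfs_order(graph, start):
    """Return nodes in the order a breadth-first traversal visits them."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for neighbour in graph[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return order
```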

Important articles on Graph:

- Graph and its representations

- Breadth First Traversal for a Graph

- Depth First Traversal for a Graph

- Applications of Depth First Search

- Applications of Breadth First Traversal

- Detect Cycle in a Directed Graph

- Detect Cycle in Graph using DSU

- Detect cycle in an Undirected Graph using DFS

- Longest Path in a Directed Acyclic Graph

- Topological Sorting

- Check whether a given graph is Bipartite or not

- Snake and Ladder Problem

- Minimize Cash Flow among a given set of friends who have borrowed money from each other

- Boggle (Find all possible words in a board of characters)

- Assign directions to edges so that the directed graph remains acyclic

Related articles on Graph:

- All Articles on Graph Data Structure

- Coding Practice on Graph

- Recent Articles on Graph

Advanced Data Structure:

Below are some advanced data structures:

1. Advanced Lists:

Advanced lists are data structures that extend the functionality of a standard list. They may support additional operations, such as finding the minimum or maximum element in the list, or rotating the list.

Important articles on Advanced Lists:

- Memory efficient doubly linked list

- XOR Linked List – A Memory Efficient Doubly Linked List | Set 1

- XOR Linked List – A Memory Efficient Doubly Linked List | Set 2

- Skip List | Set 1 (Introduction)

- Self Organizing List | Set 1 (Introduction)

- Unrolled Linked List | Set 1 (Introduction)

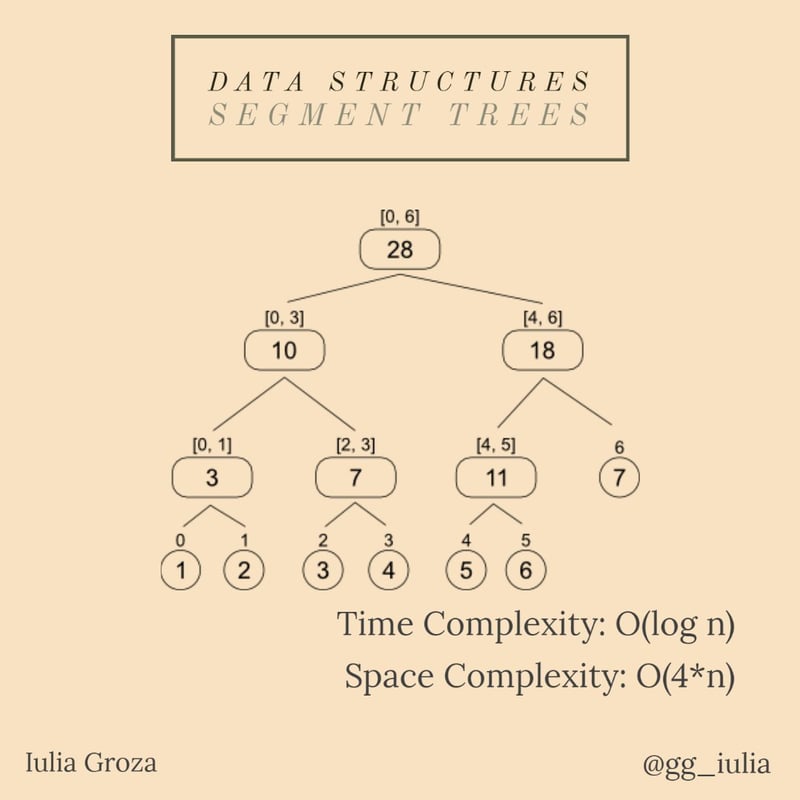

2. Segment Tree:

Segment Tree is a tree data structure that allows for efficient range queries on an array. Each node in the segment tree represents a range of elements in the array, and the value stored in the node is some aggregate value of the elements in that range.
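A minimal recursive sketch follows, assuming the aggregate is a sum and the array is non-empty. Node 1 covers the whole array and the children of node `i` (at `2i` and `2i + 1`) cover its two halves. The class name `SumSegmentTree` is an assumption for this example.

```python
class SumSegmentTree:
    def __init__(self, values):
        self.n = len(values)  # assumes at least one value
        self.tree = [0] * (4 * self.n)
        self._build(values, 1, 0, self.n - 1)

    def _build(self, values, node, lo, hi):
        if lo == hi:
            self.tree[node] = values[lo]  # leaf: a single array element
            return
        mid = (lo + hi) // 2
        self._build(values, 2 * node, lo, mid)
        self._build(values, 2 * node + 1, mid + 1, hi)
        self.tree[node] = self.tree[2 * node] + self.tree[2 * node + 1]

    def query(self, left, right, node=1, lo=0, hi=None):
        """Sum of values[left..right], both ends inclusive."""
        if hi is None:
            hi = self.n - 1
        if right < lo or hi < left:      # node range lies outside the query
            return 0
        if left <= lo and hi <= right:   # node range lies fully inside
            return self.tree[node]
        mid = (lo + hi) // 2
        return (self.query(left, right, 2 * node, lo, mid)
                + self.query(left, right, 2 * node + 1, mid + 1, hi))


seg = SumSegmentTree([2, 1, 5, 3, 4])
```

Each query touches O(log n) nodes because fully covered subtrees are answered from their stored aggregate instead of being explored.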

Important articles on Segment Tree:

- Segment Tree | Set 1 (Sum of given range)

- Segment Tree | Set 2 (Range Minimum Query)

- Lazy Propagation in Segment Tree

- Persistent Segment Tree | Set 1 (Introduction)

Related articles on Segment Tree:

- All articles on Segment Tree

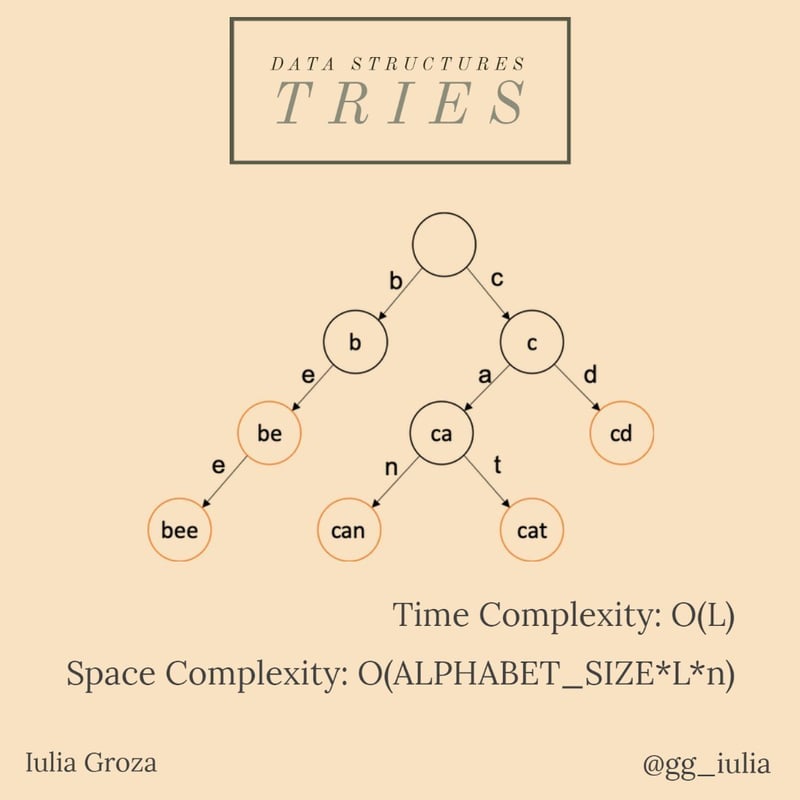

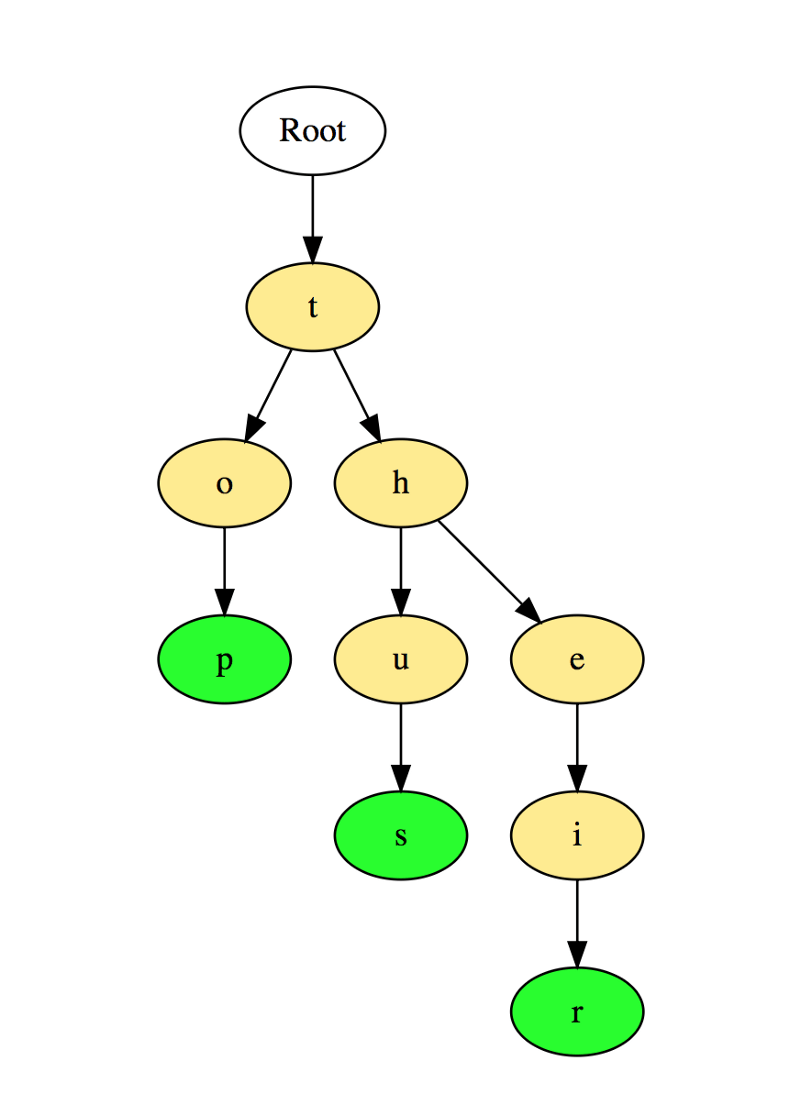

3. Trie:

Trie is a tree-like data structure that is used to store strings. Each node in the trie represents a prefix of a string, and the children of a node represent the different characters that can follow that prefix. Tries are often used for efficient string matching and searching.
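The sketch below shows one common way to build such a structure, with a dictionary of children per node and a flag marking complete words. The names `TrieNode`, `Trie`, `contains`, and `starts_with` are assumptions for this example.

```python
class TrieNode:
    def __init__(self):
        self.children = {}    # next character -> child node
        self.is_word = False  # True if the path to this node spells a word


class Trie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, word):
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True

    def _walk(self, prefix):
        """Return the node reached by prefix, or None if no such path exists."""
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return None
        return node

    def contains(self, word):
        node = self._walk(word)
        return node is not None and node.is_word

    def starts_with(self, prefix):
        return self._walk(prefix) is not None


trie = Trie()
for w in ("car", "cart", "dog"):
    trie.insert(w)
```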

Important articles on Trie:

- Trie | (Insert and Search)

- Trie | (Delete)

- Longest prefix matching – A Trie based solution in Java

- How to Implement Reverse DNS Look Up Cache?

- How to Implement Forward DNS Look Up Cache?

Related articles on Trie:

- All Articles on Trie

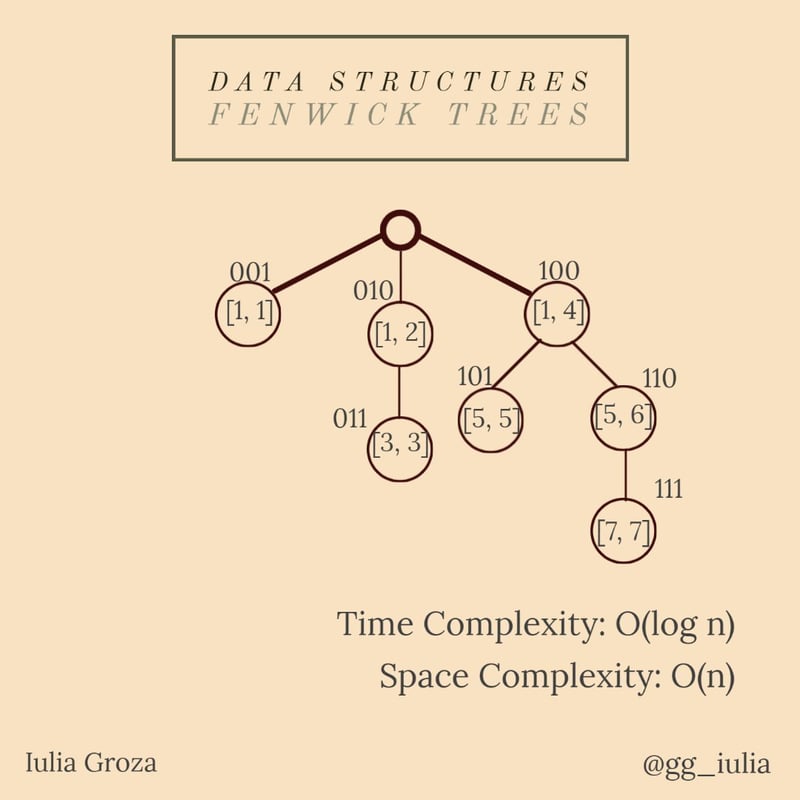

4. Binary Indexed Tree:

Binary Indexed Tree (also called a Fenwick Tree) is a data structure that supports prefix-sum queries and point updates on an array in O(log n) time each. Binary indexed trees are often used to compute prefix sums or to solve range query problems.
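A compact sketch of the prefix-sum variant is shown below. It uses the lowest set bit of the index (`i & -i`) to move between nodes; the class name `FenwickTree` and its method names are chosen for illustration.

```python
class FenwickTree:
    def __init__(self, size):
        self.size = size
        self.tree = [0] * (size + 1)  # positions are 1-based internally

    def add(self, index, delta):
        """Add delta to the element at zero-based index."""
        i = index + 1
        while i <= self.size:
            self.tree[i] += delta
            i += i & -i  # jump to the next node that covers this position

    def prefix_sum(self, count):
        """Sum of the first count elements."""
        total = 0
        i = count
        while i > 0:
            total += self.tree[i]
            i -= i & -i  # strip the lowest set bit
        return total

    def range_sum(self, left, right):
        """Sum of elements left..right, zero-based and inclusive."""
        return self.prefix_sum(right + 1) - self.prefix_sum(left)


bit = FenwickTree(5)
for position, value in enumerate([2, 1, 5, 3, 4]):
    bit.add(position, value)
```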

Important articles on Binary Indexed Tree:

- Binary Indexed Tree

- Two Dimensional Binary Indexed Tree or Fenwick Tree

- Binary Indexed Tree : Range Updates and Point Queries

- Binary Indexed Tree : Range Update and Range Queries

Related articles on Binary Indexed Tree:

- All Articles on Binary Indexed Tree

5. Suffix Array and Suffix Tree:

Suffix arrays and suffix trees are data structures that are used to efficiently search for patterns within a string. They are mostly used in bioinformatics and text processing applications.
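To show how a suffix array supports pattern search, the sketch below builds one naively by sorting suffixes (simple but slow, not the nLogn construction listed in the articles) and then binary-searches it. The function names `build_suffix_array` and `find_occurrences` are assumptions for this example.

```python
def build_suffix_array(text):
    """Naive construction: sort suffix start positions by the suffix text."""
    return sorted(range(len(text)), key=lambda i: text[i:])


def find_occurrences(text, suffix_array, pattern):
    """Return the sorted start positions where pattern occurs in text."""
    m = len(pattern)

    def prefix(rank):
        start = suffix_array[rank]
        return text[start:start + m]

    # Lower bound: first suffix whose m-character prefix is >= pattern.
    lo, hi = 0, len(suffix_array)
    while lo < hi:
        mid = (lo + hi) // 2
        if prefix(mid) < pattern:
            lo = mid + 1
        else:
            hi = mid
    first = lo

    # Upper bound: first suffix whose m-character prefix is > pattern.
    hi = len(suffix_array)
    while lo < hi:
        mid = (lo + hi) // 2
        if prefix(mid) <= pattern:
            lo = mid + 1
        else:
            hi = mid

    return sorted(suffix_array[first:lo])


text = "banana"
suffixes = build_suffix_array(text)
```

Because all suffixes that begin with the pattern sit next to each other in sorted order, two binary searches are enough to find every occurrence.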

Important articles on Suffix Array and Suffix Tree:

- Suffix Array Introduction

- Suffix Array nLogn Algorithm

- Kasai’s Algorithm for Construction of LCP array from Suffix Array

- Suffix Tree Introduction

- Ukkonen’s Suffix Tree Construction – Part 1

- Ukkonen’s Suffix Tree Construction – Part 2

- Ukkonen’s Suffix Tree Construction – Part 3

- Ukkonen’s Suffix Tree Construction – Part 4

- Ukkonen’s Suffix Tree Construction – Part 5

- Ukkonen’s Suffix Tree Construction – Part 6

- Generalized Suffix Tree

- Build Linear Time Suffix Array using Suffix Tree

- Substring Check

- Searching All Patterns

- Longest Repeated Substring

- Longest Common Substring

- Longest Palindromic Substring

Related articles on Suffix Array and Suffix Tree:

- All Articles on Suffix Tree

6. AVL Tree:

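AVL tree is a self-balancing binary search tree in which the heights of the left and right subtrees of every node differ by at most one; rotations restore this condition after insertions and deletions. AVL trees are mostly used when it is important to have efficient search and insertion operations.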

Important articles on AVL Tree:

- AVL Tree | Set 1 (Insertion)

- AVL Tree | Set 2 (Deletion)

- AVL with duplicate keys

7. Splay Tree:

Splay Tree is a self-adjusting binary search tree that moves each accessed node to the root of the tree, so frequently accessed nodes stay near the top. Splay trees are mostly used when it is important to have fast access to recently accessed data.

Important articles on Splay Tree:

- Splay Tree | Set 1 (Search)

- Splay Tree | Set 2 (Insert)

8. B Tree:

B Tree is a balanced tree data structure that is used to store data on disk. B trees are mostly used in database systems to efficiently store and retrieve large amounts of data.

Important articles on B Tree:

- B-Tree | Set 1 (Introduction)

- B-Tree | Set 2 (Insert)

- B-Tree | Set 3 (Delete)

9. Red-Black Tree:

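Red-Black Tree is a self-balancing binary search tree in which every node is colored red or black. Coloring rules, such as no red node having a red child and every path from a node to its descendant leaves containing the same number of black nodes, keep the tree approximately balanced. Red-black trees are mostly used when it is important to have efficient search and insertion operations.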

Important articles on Red-Black Tree:

- Red-Black Tree Introduction

- Red Black Tree Insertion

- Red-Black Tree Deletion

- Program for Red Black Tree Insertion

Related articles on Red-Black Tree:

- All Articles on Self-Balancing BSTs

10. K Dimensional Tree:

K Dimensional Tree is a tree data structure that is used to store data in a multidimensional space. K dimensional trees are mostly used for efficient range queries and nearest neighbor searches.

Important articles on K Dimensional Tree:

- KD Tree (Search and Insert)

- K D Tree (Find Minimum)

- K D Tree (Delete)

Other Data Structures:

- Treap (A Randomized Binary Search Tree)

- Ternary Search Tree

- Interval Tree

- Implement LRU Cache

- Sort numbers stored on different machines

- Find the k most frequent words from a file

- Given a sequence of words, print all anagrams together

- Decision Trees – Fake (Counterfeit) Coin Puzzle (12 Coin Puzzle)

- Spaghetti Stack

- Data Structure for Dictionary and Spell Checker?

- Cartesian Tree

- Cartesian Tree Sorting

- Centroid Decomposition of Tree

- Gomory-Hu Tree

- Recent Articles on Advanced Data Structures

- Commonly Asked Data Structure Interview Questions | Set 1

- A data structure for n elements and O(1) operations

- Expression Tree


Data Structures

This course is part of Data Structures and Algorithms Specialization


Instructors: Neil Rhodes +4 more


271,756 already enrolled

(5,357 reviews)

Recommended experience

Intermediate level

Basic knowledge of at least one programming language: C++, Java, Python, C, C#, Javascript, Haskell, Kotlin, Ruby, Rust, Scala.

Skills you'll gain

- Priority Queue

- Binary Search Tree

- Stack (Abstract Data Type)


There are 6 modules in this course

A good algorithm usually comes together with a set of good data structures that allow the algorithm to manipulate the data efficiently. In this online course, we consider the common data structures that are used in various computational problems. You will learn how these data structures are implemented in different programming languages and will practice implementing them in our programming assignments. This will help you to understand what is going on inside a particular built-in implementation of a data structure and what to expect from it. You will also learn typical use cases for these data structures.

A few examples of the questions that we are going to cover in this class:

1. What is a good strategy for resizing a dynamic array?

2. How are priority queues implemented in C++, Java, and Python?

3. How can a hash table be implemented so that the amortized running time of all operations is O(1) on average?

4. What are good strategies to keep a binary tree balanced?

You will also learn how services like Dropbox manage to upload some large files instantly and to save a lot of storage space!

Basic Data Structures

In this module, you will learn about the basic data structures used throughout the rest of this course. We start this module by looking in detail at the fundamental building blocks: arrays and linked lists. From there, we build up two important data structures: stacks and queues. Next, we look at trees: examples of how they’re used in Computer Science, how they’re implemented, and the various ways they can be traversed. Once you’ve completed this module, you will be able to implement any of these data structures, and you will have a solid understanding of the costs of the operations and the tradeoffs involved in using each data structure.

What's included

7 videos 7 readings 1 quiz 1 programming assignment

7 videos • Total 60 minutes

- Arrays • 7 minutes • Preview module

- Singly-Linked Lists • 9 minutes

- Doubly-Linked Lists • 4 minutes

- Stacks • 10 minutes

- Queues • 7 minutes

- Trees • 11 minutes

- Tree Traversal • 10 minutes

7 readings • Total 70 minutes

- Welcome • 10 minutes

- Slides and External References • 10 minutes

- Available Programming Languages • 10 minutes

- FAQ on Programming Assignments • 10 minutes

- Acknowledgements • 10 minutes

1 quiz • Total 30 minutes

- Basic Data Structures • 30 minutes

1 programming assignment • Total 120 minutes

- Programming Assignment 1: Basic Data Structures • 120 minutes

Dynamic Arrays and Amortized Analysis

In this module, we discuss dynamic arrays: a way of using arrays when it is not known ahead of time how many elements will be needed. We also discuss amortized analysis: a method of determining the amortized cost of an operation over a sequence of operations. Amortized analysis is often used when a straightforward worst-case analysis gives an unsatisfactory bound even though the algorithm is actually efficient over the whole sequence. It is used here to analyze dynamic arrays and will be used again at the end of this course to analyze splay trees.
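The sketch below illustrates the aggregate view of amortized cost. It assumes the common capacity-doubling strategy (the course text does not state which strategy it uses) and counts how many element copies all resizes perform in total; the class `DynamicArray` and its `copies` counter are hypothetical.

```python
class DynamicArray:
    def __init__(self):
        self.capacity = 1
        self.size = 0
        self.slots = [None] * self.capacity
        self.copies = 0  # total elements moved during all resizes

    def append(self, value):
        if self.size == self.capacity:
            self._resize(2 * self.capacity)  # assumed doubling strategy
        self.slots[self.size] = value
        self.size += 1

    def _resize(self, new_capacity):
        new_slots = [None] * new_capacity
        for i in range(self.size):
            new_slots[i] = self.slots[i]
            self.copies += 1
        self.slots = new_slots
        self.capacity = new_capacity


arr = DynamicArray()
for v in range(1000):
    arr.append(v)
```

A single append can cost O(n) when it triggers a resize, but here the copies sum to 1 + 2 + 4 + ... + 512 = 1023, fewer than two per append, so the amortized cost per append is constant.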

5 videos 1 reading 1 quiz

5 videos • Total 30 minutes

- Dynamic Arrays • 8 minutes • Preview module

- Amortized Analysis: Aggregate Method • 5 minutes

- Amortized Analysis: Banker's Method • 6 minutes

- Amortized Analysis: Physicist's Method • 7 minutes

- Amortized Analysis: Summary • 2 minutes

1 reading • Total 10 minutes

- Dynamic Arrays and Amortized Analysis • 30 minutes

Priority Queues and Disjoint Sets

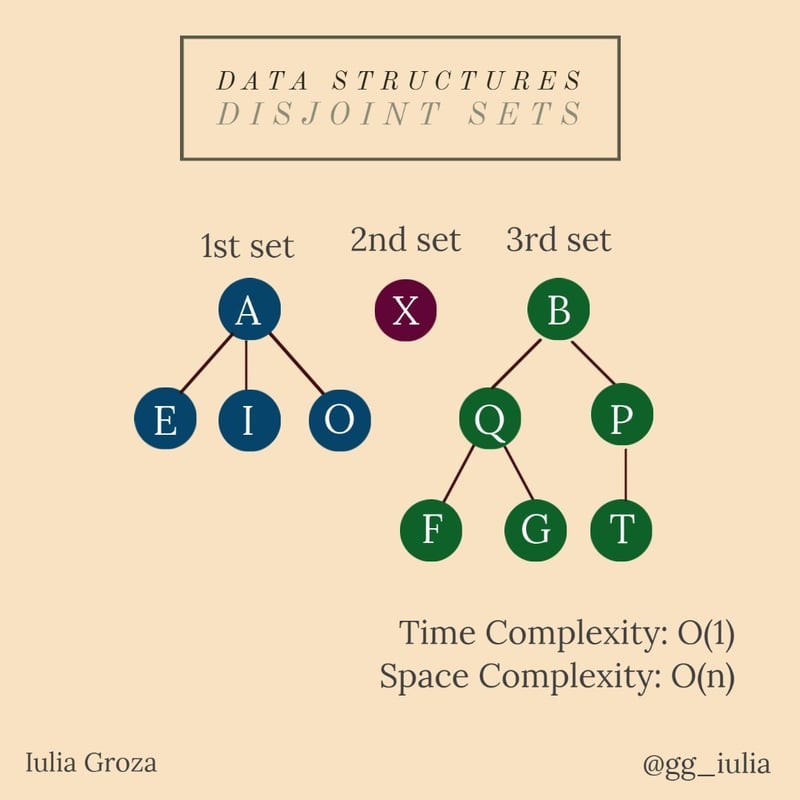

We start this module by considering priority queues, which are used to schedule jobs efficiently (in a computer operating system or in real life), to sort huge files (the most important building block for any Big Data processing algorithm), and to compute shortest paths in graphs efficiently, a topic we will cover in our next course. For this reason, priority queues have built-in implementations in many programming languages, including C++, Java, and Python. We will see that these implementations are based on the beautiful idea of storing a complete binary tree in an array, which makes it possible to implement all priority queue methods in just a few lines of code. We will then switch to the disjoint sets data structure, which is used, for example, in dynamic graph connectivity and image processing. We will see again how simple and natural ideas lead to an implementation that is both easy to code and very efficient. By completing this module, you will be able to implement both of these data structures efficiently from scratch.
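The two ideas in this module can be sketched as follows. This is an illustrative version, not the course's reference code: a min-heap stored in a list (children of index `i` sit at `2i + 1` and `2i + 2`) and a disjoint-sets structure with union by rank and path compression. The class names `MinHeap` and `DisjointSets` are assumptions.

```python
class MinHeap:
    """Complete binary tree kept in a list, smallest value at index 0."""

    def __init__(self):
        self.items = []

    def push(self, value):
        items = self.items
        items.append(value)
        i = len(items) - 1
        while i > 0 and items[(i - 1) // 2] > items[i]:  # sift up
            parent = (i - 1) // 2
            items[parent], items[i] = items[i], items[parent]
            i = parent

    def pop(self):
        """Remove and return the smallest value; assumes a non-empty heap."""
        items = self.items
        items[0], items[-1] = items[-1], items[0]
        smallest = items.pop()
        i = 0
        while True:  # sift down
            left, right, best = 2 * i + 1, 2 * i + 2, i
            if left < len(items) and items[left] < items[best]:
                best = left
            if right < len(items) and items[right] < items[best]:
                best = right
            if best == i:
                return smallest
            items[i], items[best] = items[best], items[i]
            i = best


class DisjointSets:
    def __init__(self, n):
        self.parent = list(range(n))  # every element starts as its own root
        self.rank = [0] * n

    def find(self, x):
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        while self.parent[x] != root:  # path compression
            next_x = self.parent[x]
            self.parent[x] = root
            x = next_x
        return root

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return
        if self.rank[ra] < self.rank[rb]:
            ra, rb = rb, ra
        self.parent[rb] = ra  # attach the lower-rank tree under the other
        if self.rank[ra] == self.rank[rb]:
            self.rank[ra] += 1


heap = MinHeap()
for v in (5, 3, 8, 1):
    heap.push(v)
popped = [heap.pop() for _ in range(4)]

sets = DisjointSets(5)
sets.union(0, 1)
sets.union(3, 4)
```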

15 videos 6 readings 3 quizzes 1 programming assignment 1 plugin

15 videos • Total 129 minutes

- Introduction • 6 minutes • Preview module

- Naive Implementations of Priority Queues • 5 minutes

- Binary Trees • 1 minute

- Basic Operations • 12 minutes

- Complete Binary Trees • 9 minutes

- Pseudocode • 8 minutes

- Heap Sort • 10 minutes

- Building a Heap • 10 minutes

- Final Remarks • 4 minutes

- Overview • 7 minutes

- Naive Implementations • 10 minutes

- Trees for Disjoint Sets • 7 minutes

- Union by Rank • 9 minutes

- Path Compression • 6 minutes

- Analysis (Optional) • 18 minutes

6 readings • Total 60 minutes

- Slides • 10 minutes

- Tree Height Remark • 10 minutes

3 quizzes • Total 72 minutes

- Priority Queues and Disjoint Sets • 30 minutes

- Priority Queues: Quiz • 12 minutes

- Quiz: Disjoint Sets • 30 minutes

- Programming Assignment 2: Priority Queues and Disjoint Sets • 120 minutes

1 plugin • Total 10 minutes

- Survey • 10 minutes

Hash Tables

In this module you will learn about a very powerful and widely used technique called hashing. Its applications include the implementation of programming languages, file systems, pattern search, distributed key-value storage, and many more. You will learn how to implement data structures to store and modify sets of objects and mappings from one type of object to another. You will see that naive implementations either consume a huge amount of memory or are slow, and then you will learn to implement hash tables that use linear memory and work in O(1) on average! In the end, you will learn how hash functions are used in modern distributed systems and how they are used to optimize storage in services like Dropbox, Google Drive, and Yandex Disk!

20 videos 4 readings 2 quizzes 1 programming assignment

20 videos • Total 148 minutes

- Applications of Hashing • 3 minutes • Preview module

- Analysing Service Access Logs • 7 minutes

- Direct Addressing • 7 minutes

- Hash Functions • 3 minutes

- Chaining • 7 minutes

- Chaining Implementation and Analysis • 6 minutes

- Hash Tables • 6 minutes

- Phone Book Data Structure • 9 minutes

- Universal Family • 10 minutes

- Hashing Phone Numbers • 9 minutes

- Hashing Names • 6 minutes

- Analysis of Polynomial Hashing • 8 minutes

- Find Substring in Text • 6 minutes

- Rabin-Karp's Algorithm • 8 minutes

- Recurrence for Substring Hashes • 12 minutes

- Improving Running Time • 8 minutes

- Julia's Diary • 6 minutes

- Julia's Bank • 5 minutes

- Blockchain • 5 minutes

- Merkle Tree • 7 minutes

4 readings • Total 40 minutes

2 quizzes • Total 60 minutes

- Hashing • 30 minutes

- Hash Tables and Hash Functions • 30 minutes

- Programming Assignment 3: Hash Tables • 120 minutes

Binary Search Trees

In this module we study binary search trees, which are a data structure for doing searches on dynamically changing ordered sets. You will learn about many of the difficulties in accomplishing this task and the ways in which we can overcome them. In order to do this you will need to learn the basic structure of binary search trees, how to insert and delete without destroying this structure, and how to ensure that the tree remains balanced.

7 videos 2 readings 1 quiz

7 videos • Total 54 minutes

- Introduction • 7 minutes • Preview module

- Search Trees • 5 minutes

- Basic Operations • 10 minutes

- Balance • 5 minutes

- AVL Trees • 5 minutes

- AVL Tree Implementation • 9 minutes

- Split and Merge • 9 minutes

2 readings • Total 20 minutes

1 quiz • Total 20 minutes

- Binary Search Trees • 20 minutes

Binary Search Trees 2

In this module we continue studying binary search trees. We study a few non-trivial applications. We then study a new kind of balanced search tree: splay trees. They adapt to the queries dynamically and are optimal in many ways.

4 videos 2 readings 1 quiz 1 programming assignment

4 videos • Total 36 minutes

- Applications • 10 minutes • Preview module

- Splay Trees: Introduction • 6 minutes

- Splay Trees: Implementation • 7 minutes

- (Optional) Splay Trees: Analysis • 10 minutes

- Splay Trees • 30 minutes

1 programming assignment • Total 180 minutes

- Programming Assignment 4: Binary Search Trees • 180 minutes

UC San Diego is an academic powerhouse and economic engine, recognized as one of the top 10 public universities by U.S. News and World Report. Innovation is central to who we are and what we do. Here, students learn that knowledge isn't just acquired in the classroom—life is their laboratory.


Learner reviews

5,357 reviews

Reviewed on May 15, 2020

In depth mathematical analysis and implementation of important Data Structures. This is a very good course for programmers looking to solve computational problems with first principles.

Reviewed on Nov 23, 2019

The lectures and the reading material were great. The assignments are challenging and require thought before attempting. The forums were really useful when I got stuck with the assignments

Reviewed on Sep 27, 2020

Overall, it's good. But some chapters like the binary search tree and hash table, the instructions are now very heuristic. I can only understand the content after reading the textbook.

Frequently asked questions

When will I have access to the lectures and assignments?

Access to lectures and assignments depends on your type of enrollment. If you take a course in audit mode, you will be able to see most course materials for free. To access graded assignments and to earn a Certificate, you will need to purchase the Certificate experience, during or after your audit. If you don't see the audit option:

- The course may not offer an audit option. You can try a Free Trial instead, or apply for Financial Aid.

- The course may offer 'Full Course, No Certificate' instead. This option lets you see all course materials, submit required assessments, and get a final grade. This also means that you will not be able to purchase a Certificate experience.

What will I get if I subscribe to this Specialization?

When you enroll in the course, you get access to all of the courses in the Specialization, and you earn a certificate when you complete the work. Your electronic Certificate will be added to your Accomplishments page - from there, you can print your Certificate or add it to your LinkedIn profile. If you only want to read and view the course content, you can audit the course for free.

What is the refund policy?

If you subscribed, you get a 7-day free trial during which you can cancel at no penalty. After that, we don’t give refunds, but you can cancel your subscription at any time. See our full refund policy.

Is financial aid available?

Yes. In select learning programs, you can apply for financial aid or a scholarship if you can’t afford the enrollment fee. If financial aid or a scholarship is available for your learning program selection, you’ll find a link to apply on the description page.

DEV Community

Posted on Sep 3, 2020 • Updated on Feb 21

Complete Introduction to the 30 Most Essential Data Structures & Algorithms

Data Structures & Algorithms (DSA) is often considered an intimidating topic, which is a common misconception. Forming the foundation of the most innovative concepts in tech, they are essential in the journey of both job/internship applicants and experienced programmers. Mastering DSA implies that you are able to use your computational and algorithmic thinking to solve never-before-seen problems and contribute to any tech company's value (including your own!). By understanding them, you can improve the maintainability, extensibility and efficiency of your code.

That being said, I've decided to gather in one place all the DSA threads that I posted on Twitter during my #100DaysOfCode challenge. This article aims to make DSA look less intimidating than it is believed to be. It includes the 15 most useful data structures and the 15 most important algorithms that can help you ace your interviews and improve your competitive programming skills. Each chapter includes useful links with additional information and practice problems. DS topics are accompanied by a graphic representation and key information. Every algorithm is implemented in a continuously updated GitHub repo. At the time of writing, it contains pseudocode, C++, Python and Java (still in progress) implementations of each mentioned algorithm (and more). This repository is expanding thanks to other talented and passionate developers who contribute to it by adding new algorithms and implementations in new programming languages.

I. Data Structures

- Linked Lists

- Maps & Hash Tables

- Binary Trees & Binary Search Trees

- Self-balancing Trees (AVL Trees, Red-Black Trees, Splay Trees)

- Segment Trees

- Fenwick Trees

- Disjoint Set Union

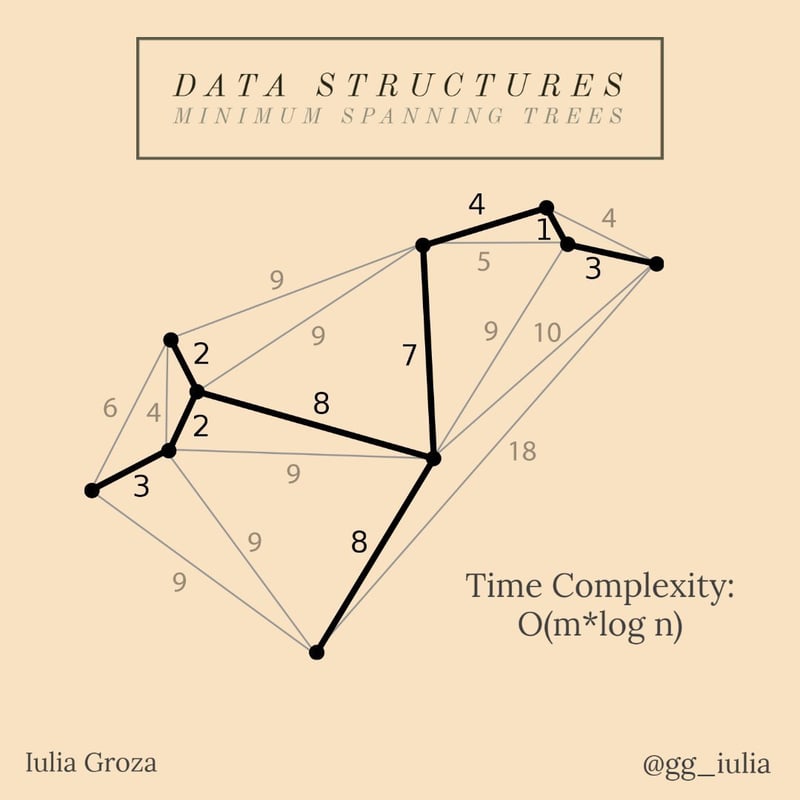

- Minimum Spanning Trees

II. Algorithms

- Divide and Conquer

- Sorting Algorithms (Bubble Sort, Counting Sort, Quick Sort, Merge Sort, Radix Sort)

- Searching Algorithms (Linear Search, Binary Search)

- Sieve of Eratosthenes

- Knuth-Morris-Pratt Algorithm

- Greedy I (Maximum number of non-overlapping intervals on an axis)

- Greedy II (Fractional Knapsack Problem)

- Dynamic Programming I (0–1 Knapsack Problem)

- Dynamic Programming II (Longest Common Subsequence)

- Dynamic Programming III (Longest Increasing Subsequence)

- Convex Hull

- Graph Traversals (Breadth-First Search, Depth-First Search)

- Floyd-Warshall / Roy-Floyd Algorithm

- Dijkstra's Algorithm & Bellman-Ford Algorithm

- Topological Sorting

1. Arrays

Arrays are the simplest and most common data structures. Their defining feature is easy access to elements by index (position).

What are they used for?

Imagine a row of theater chairs. Each chair has a position assigned to it (from left to right), so every spectator is assigned the number of the chair (s)he will be sitting on. This is an array. Expand the problem to the whole theater (rows and columns of chairs) and you will have a 2D array (matrix)!

- elements' values are placed in order and accessed by their index, from 0 to the length of the array - 1;

- an array is a contiguous block of memory;

- they are usually made of elements of the same type (it depends on the programming language);

- access by index is done in O(1), and adding an element at the end of a dynamic array is fast; search and deletion are not done in O(1) (in general they take linear time).
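
The theater analogy can be sketched in a few lines of Python. This is only an illustration: Python's `list` is a dynamic array, and the names `row` and `theater` and the spectators are made up for the example.

```python
# A row of theater chairs as a 1D array (illustrative names and values).
row = ["Ana", "Bob", "Cleo", "Dan"]

first = row[0]               # access by index: O(1)
last = row[len(row) - 1]     # the last valid index is len(row) - 1

row.append("Eve")            # adding at the end: amortized O(1)
position = row.index("Cleo")  # searching scans the elements: O(n)
row.remove("Bob")            # deleting shifts the later elements: O(n)

# The whole theater as a 2D array (matrix): 3 rows x 4 columns of empty seats.
theater = [[None] * 4 for _ in range(3)]
theater[1][2] = "Ana"        # seat in row 1, column 2
```

Building the matrix with a list comprehension matters here: `[[None] * 4] * 3` would create three references to the same row.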

Useful Links

- GeeksforGeeks: Introduction to Arrays

- LeetCode Problem Set

- Top 50 Array Coding Problems for Interviews

2. Linked Lists

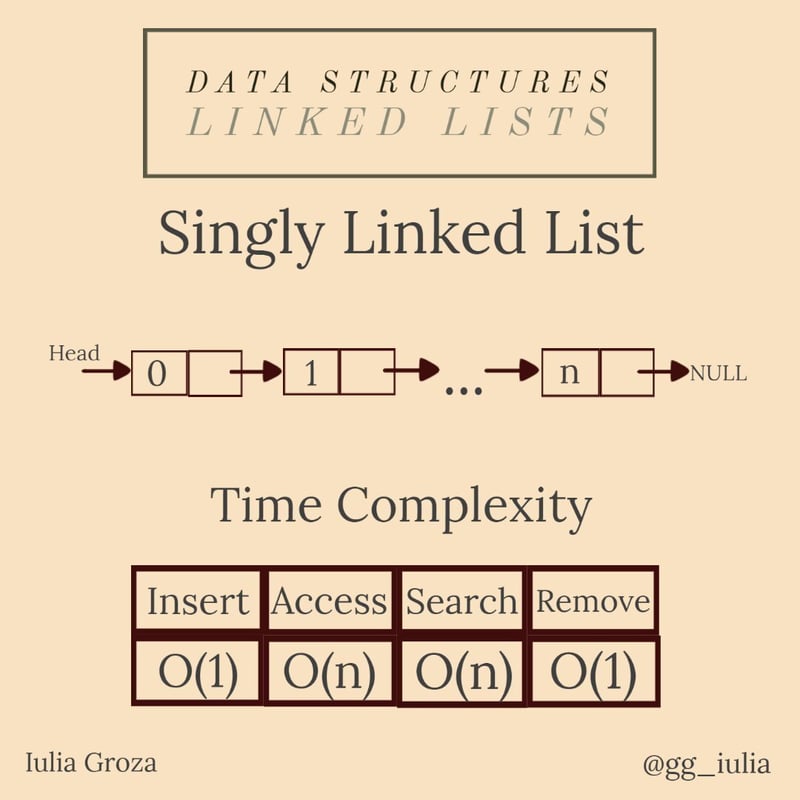

Linked lists are linear data structures, just like arrays. The main difference between linked lists and arrays is that the elements of a linked list are not stored at contiguous memory locations. A linked list is composed of nodes - entities that store the current element's value and an address reference to the next element. That way, elements are linked by pointers.

One relevant application of linked lists is the implementation of the previous and next page buttons of a browser. A doubly linked list is the perfect data structure to store the pages displayed by a user's search.

- they come in three types: singly, doubly and circular;

- elements are NOT stored in a contiguous block of memory;

- well suited to dynamic memory management (nodes are allocated one by one and linked by pointers);

- insertion and deletion are fast (O(1) once you hold a reference to the neighbouring node); accessing and searching elements are done in linear time.
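
A minimal sketch of a singly linked list in Python may make the node-and-pointer idea more concrete. The class and method names (`Node`, `LinkedList`, `push_front`, `remove_after`) are hypothetical, chosen for this example only.

```python
class Node:
    """One element of the list: a value plus a reference to the next node."""
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


class LinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        # Insertion at the head only rewires one reference: O(1).
        self.head = Node(value, self.head)

    def find(self, value):
        # Searching has to walk the nodes one by one: O(n).
        node = self.head
        while node is not None:
            if node.value == value:
                return node
            node = node.next
        return None

    def remove_after(self, node):
        # Deletion is O(1) once we hold the node before the one to remove.
        if node.next is not None:
            node.next = node.next.next

    def to_list(self):
        values = []
        node = self.head
        while node is not None:
            values.append(node.value)
            node = node.next
        return values


pages = LinkedList()
for url in ["c.com", "b.com", "a.com"]:
    pages.push_front(url)
before = pages.to_list()                 # a.com, b.com, c.com
pages.remove_after(pages.find("a.com"))  # drops b.com
after = pages.to_list()
```

A doubly linked list, as in the browser example, would add a `prev` reference to each node so the list can be walked in both directions.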

- Visualizing Linked Lists

- Top 50 Problems on Linked List Data Structure asked in SDE Interviews

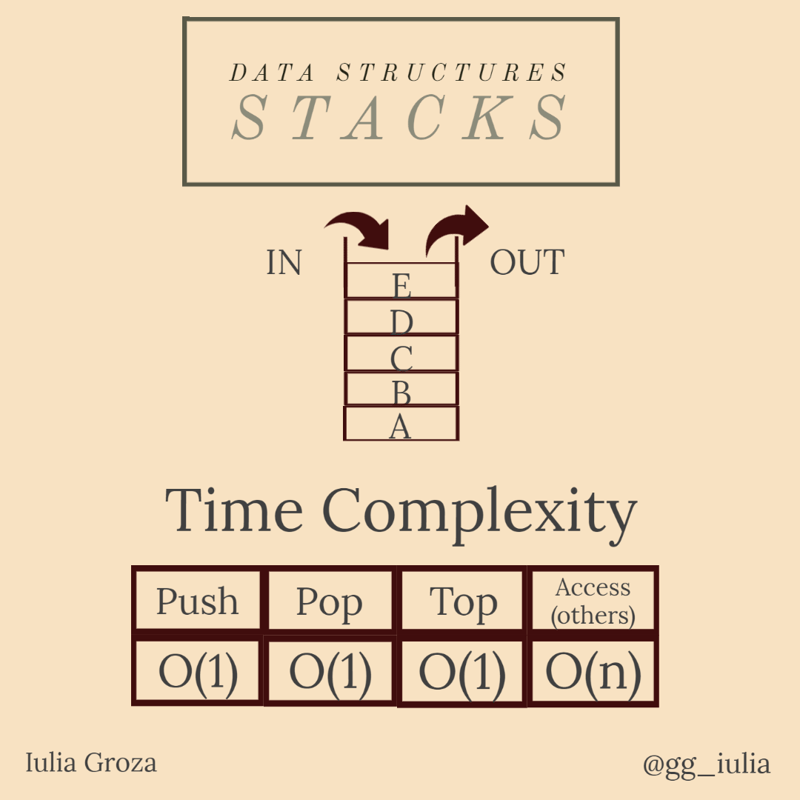

A stack is an abstract data type that formalizes the concept of restricted access collection. The restriction follows the rule LIFO (Last In, First Out). Therefore, the last element added in the stack is the first element you remove from it. Stacks can be implemented using arrays or linked lists.

The most common real-life example is a pile of plates placed one over another in a canteen. The plate at the top is the first to be removed. The plate at the very bottom is the one that remains in the stack for the longest period of time. Stacks are most useful when you need to obtain the reverse order of given elements: just push them all onto a stack and then pop them. Another interesting application is the Valid Parentheses Problem. Given a string of parentheses, you can check that they are matched using a stack.

- you can only access one element at a time: the last one added (the one at the top);

- one disadvantage is that once you pop elements from the top in order to access other elements, their values will be lost from the stack's memory;

- accessing other elements is done in linear time; pushing, popping and reading the top are done in O(1).
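
Both applications mentioned above (reversing elements and the Valid Parentheses Problem) can be sketched with a Python `list` used as a stack. The function names are illustrative, and the bracket set `()[]{}` is an assumption about which symbols should be checked.

```python
def reversed_order(items):
    """Push everything onto a stack, then pop: the elements come out reversed."""
    stack = list(items)
    result = []
    while stack:
        result.append(stack.pop())   # pop removes the top element: O(1)
    return result


def is_balanced(text):
    """Return True if every bracket in text is closed in the right order."""
    pairs = {")": "(", "]": "[", "}": "{"}
    stack = []
    for ch in text:
        if ch in pairs.values():
            stack.append(ch)         # opening bracket: push it
        elif ch in pairs:
            # closing bracket: it must match the most recent opening one
            if not stack or stack.pop() != pairs[ch]:
                return False
    return not stack                 # leftovers mean unclosed brackets
```

For instance, `is_balanced("([]{})")` is `True`, while `is_balanced("(]")` and `is_balanced("((")` are both `False`.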

- CS Academy: Stack Introduction

- CS Academy: Stack Application - Soldiers Row

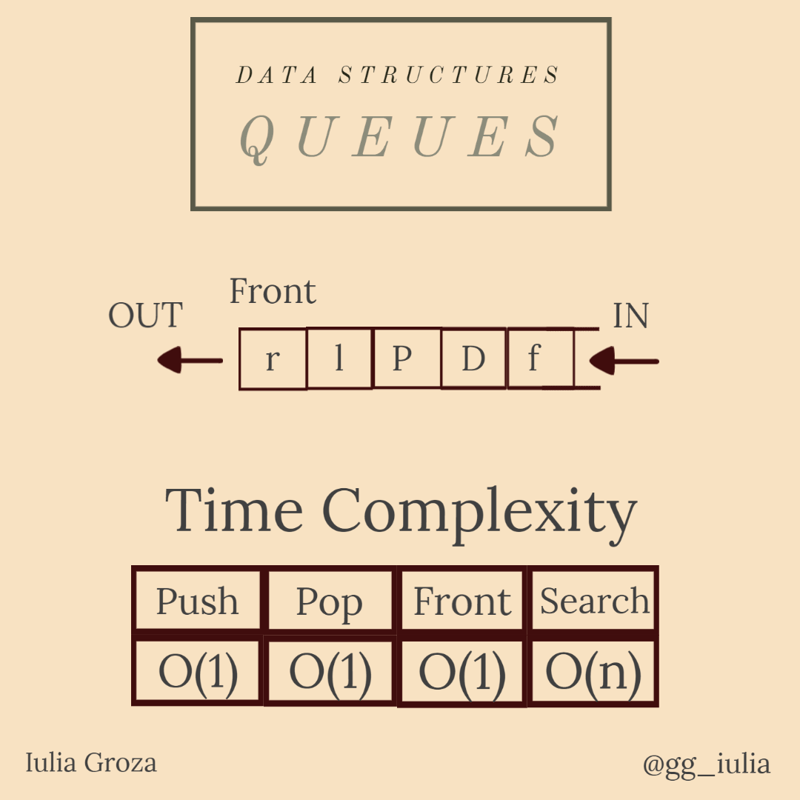

A queue is another restricted-access collection, just like the previously discussed stack. The main difference is that the queue is organised according to the FIFO (First In, First Out) model: the first element inserted in the queue is the first element to be removed. Queues can be implemented using a fixed-length array, a circular array or a linked list.

The best use of this abstract data type (ADT) is, of course, the simulation of a real-life queue. For example, in a call center application, a queue is used for saving the clients that are waiting to receive help from the consultant - these clients should get help in the order they called. One special and very important type of queue is the priority queue. Each element has a "priority" associated with it, and the element with the highest priority is the first one to be removed from the queue. Queues and priority queues are essential in many algorithms: BFS uses a plain queue, while Dijkstra's Algorithm, Prim's Algorithm and Huffman Coding rely on a priority queue (more about them below). A priority queue is usually implemented using a heap. Another special type of queue is the deque, short for double-ended queue (pun alert: it's pronounced "deck"). Elements can be inserted or removed at both ends of the queue.

- we can directly access only the "oldest" element introduced;

- searching for an element means removing all the elements accessed before it from the queue's memory;

- pushing/popping elements or getting the front of the queue is done in constant time. Searching is linear.
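
As a rough illustration, Python's standard library covers all three variants: `collections.deque` works as a queue and as a deque, and `heapq` provides a heap-based priority queue. The caller and task names below are made up, and "smaller number means higher priority" is an assumption that follows from `heapq` being a min-heap.

```python
from collections import deque
import heapq

# FIFO queue: clients are served in the order they called.
waiting = deque()
waiting.append("caller 1")
waiting.append("caller 2")
waiting.append("caller 3")
served_first = waiting.popleft()    # removes the oldest element: O(1)

# Deque: elements can be inserted or removed at both ends.
waiting.appendleft("urgent caller")
removed_from_back = waiting.pop()

# Priority queue on top of a heap: the element with the smallest
# priority number is removed first (assumed convention, heapq is a min-heap).
tasks = []
heapq.heappush(tasks, (2, "write report"))
heapq.heappush(tasks, (1, "fix outage"))
heapq.heappush(tasks, (3, "tidy desk"))
most_urgent = heapq.heappop(tasks)
```

A plain `list` could also act as a queue, but `list.pop(0)` shifts every remaining element, which is why `deque` is the usual choice.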

- Visualizing Queues

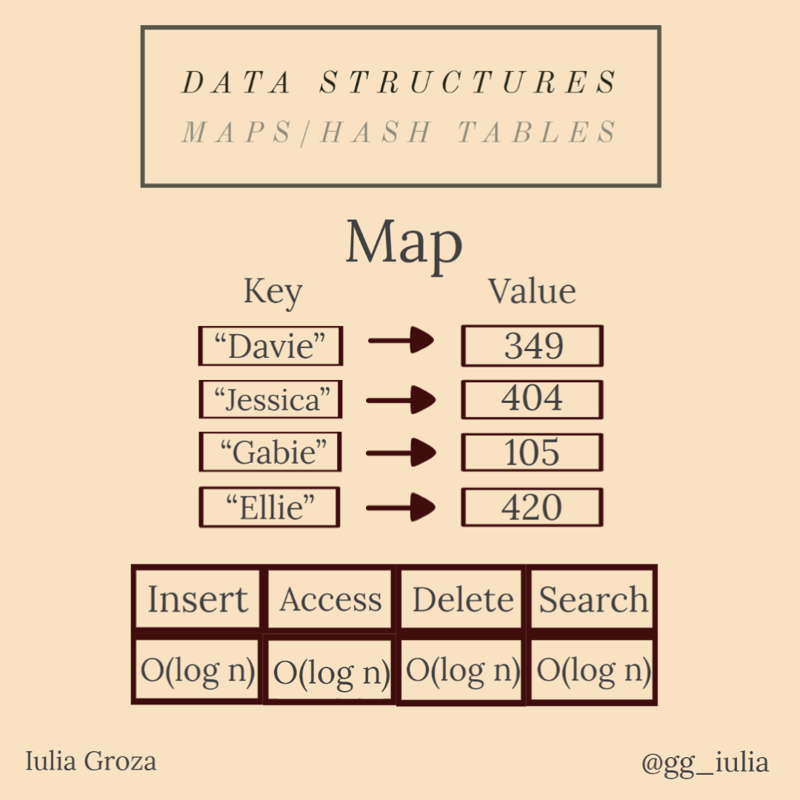

5. Maps & Hash Tables

Maps (dictionaries) are abstract data types that contain a collection of keys and a collection of values. Each key has a value associated with it. A hash table is a particular implementation of a map. It uses a hash function to compute a hash code that serves as an index into an array of buckets or slots: the key is hashed and the resulting hash indicates where the value is stored. One of the simplest hash functions (among many) is the modulo function, e.g. if the constant is 6, the hash of the key x is x%6. Ideally, a hash function will assign each key to a unique bucket, but most hash table designs employ an imperfect function, which might lead to collisions between different keys with the same hash. Such collisions must be accommodated in some way, for example by keeping all the colliding entries in the same bucket (chaining).
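
To see the modulo hash function and collisions in action, here is a toy hash table in Python. It is a sketch under stated assumptions, not how any real library does it: keys are assumed to be integers, collisions are handled by chaining (each bucket holds a list of entries), and the class name `ChainedHashTable` is hypothetical.

```python
class ChainedHashTable:
    """Toy hash table: integer keys, modulo hashing, collisions kept in lists."""

    def __init__(self, bucket_count=6):
        self.buckets = [[] for _ in range(bucket_count)]

    def _index(self, key):
        # The modulo hash function: with 6 buckets, key x goes to bucket x % 6.
        return key % len(self.buckets)

    def put(self, key, value):
        bucket = self.buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)   # keys are unique: overwrite
                return
        bucket.append((key, value))        # new key, possibly a collision

    def get(self, key, default=None):
        for existing_key, value in self.buckets[self._index(key)]:
            if existing_key == key:
                return value
        return default


table = ChainedHashTable()
table.put(7, "seven")
table.put(13, "thirteen")   # 7 % 6 == 13 % 6 == 1: both land in bucket 1
```

Lookups stay fast as long as the buckets stay short, which is why real implementations grow the bucket array as the table fills up.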

The best-known application of maps is a language dictionary. Each word of a language has its definition assigned to it. It is implemented using an ordered map (its keys are alphabetically ordered). A contacts list is also a map: each name has a phone number assigned to it. Another useful application is the normalization of values. Let's say we want to assign to each minute of a day (24 hours = 1440 minutes) an index from 0 to 1439. The hash function will be h(x) = x.hour*60+x.minute.
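
The minute-of-day function and the contacts example translate almost directly into Python. In this sketch the names `minute_index`, `visits` and `contacts` are illustrative, and the phone numbers are invented.

```python
from datetime import time


def minute_index(t):
    """h(x) = x.hour * 60 + x.minute, an index from 0 to 1439."""
    return t.hour * 60 + t.minute


# One counter per minute of the day, addressed through the hash function.
visits = [0] * 1440
visits[minute_index(time(9, 30))] += 1

# A contacts map: each name (key) has a phone number (value) assigned to it.
contacts = {"Alice": "555-0100", "Bob": "555-0199"}
contacts["Alice"] = "555-0123"      # keys are unique, so this overwrites
alphabetical = sorted(contacts)     # iterate the keys in dictionary order
```

Because every minute maps to a different index, this particular hash function never produces collisions.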

- keys are unique (no duplicates);

- collision resistance: it should be hard to find two different keys with the same hash;

- pre-image resistance: given a hash H, it should be hard to find a key x such that h(x)=H;

- second pre-image resistance: given a key and its hash, it should be hard to find another key with the same hash;

- terminology:

  - "map": Java, C++;

  - "dictionary": Python, JavaScript, .NET;

  - "associative array": PHP.