Education Technology: An Evidence-Based Review

In recent years, there has been widespread excitement around the potential for technology to transform learning. As investments in education technology continue to grow, students, parents, and teachers face a seemingly endless array of education technologies from which to choose—from digital personalized learning platforms to educational games to online courses. Amidst the excitement, it is important to step back and understand how technology can help—or in some cases hinder—how students learn. This review paper synthesizes and discusses experimental evidence on the effectiveness of technology-based approaches in education and outlines areas for future inquiry. In particular, we examine RCTs across the following categories of education technology: (1) access to technology, (2) computer-assisted learning, (3) technology-enabled behavioral interventions in education, and (4) online learning. While this review focuses on literature from developed countries, it also draws upon extensive research from developing countries. We hope this literature review will advance the knowledge base of how technology can be used to support education, outline key areas for new experimental research, and help drive improvements to the policies, programs, and structures that contribute to successful teaching and learning.

We are extremely grateful to Caitlin Anzelone, Rekha Balu, Peter Bergman, Brad Bernatek, Ben Castleman, Luke Crowley, Angela Duckworth, Jonathan Guryan, Alex Haslam, Andrew Ho, Ben Jones, Matthew Kraft, Kory Kroft, David Laibson, Susanna Loeb, Andrew Magliozzi, Ignacio Martinez, Susan Mayer, Steve Mintz, Piotr Mitros, Lindsay Page, Amanda Pallais, John Pane, Justin Reich, Jonah Rockoff, Sylvi Rzepka, Kirby Smith, and Oscar Sweeten-Lopez for providing helpful and detailed comments as we put together this review. We also thank Rachel Glennerster for detailed support throughout the project, Jessica Mardo and Sophie Shank for edits, and to the Spencer Foundation for financial support. Any errors or omissions are our own. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research.

MARC RIS BibTeΧ

Download Citation Data

Mentioned in the News

More from nber.

In addition to working papers , the NBER disseminates affiliates’ latest findings through a range of free periodicals — the NBER Reporter , the NBER Digest , the Bulletin on Retirement and Disability , the Bulletin on Health , and the Bulletin on Entrepreneurship — as well as online conference reports , video lectures , and interviews .

- Review article

- Open access

- Published: 22 January 2020

Mapping research in student engagement and educational technology in higher education: a systematic evidence map

- Melissa Bond ORCID: orcid.org/0000-0002-8267-031X 1 ,

- Katja Buntins 2 ,

- Svenja Bedenlier 1 ,

- Olaf Zawacki-Richter 1 &

- Michael Kerres 2

International Journal of Educational Technology in Higher Education volume 17 , Article number: 2 ( 2020 ) Cite this article

117k Accesses

246 Citations

58 Altmetric

Metrics details

Digital technology has become a central aspect of higher education, inherently affecting all aspects of the student experience. It has also been linked to an increase in behavioural, affective and cognitive student engagement, the facilitation of which is a central concern of educators. In order to delineate the complex nexus of technology and student engagement, this article systematically maps research from 243 studies published between 2007 and 2016. Research within the corpus was predominantly undertaken within the United States and the United Kingdom, with only limited research undertaken in the Global South, and largely focused on the fields of Arts & Humanities, Education, and Natural Sciences, Mathematics & Statistics. Studies most often used quantitative methods, followed by mixed methods, with little qualitative research methods employed. Few studies provided a definition of student engagement, and less than half were guided by a theoretical framework. The courses investigated used blended learning and text-based tools (e.g. discussion forums) most often, with undergraduate students as the primary target group. Stemming from the use of educational technology, behavioural engagement was by far the most often identified dimension, followed by affective and cognitive engagement. This mapping article provides the grounds for further exploration into discipline-specific use of technology to foster student engagement.

Introduction

Over the past decade, the conceptualisation and measurement of ‘student engagement’ has received increasing attention from researchers, practitioners, and policy makers alike. Seminal works such as Astin’s ( 1999 ) theory of involvement, Fredricks, Blumenfeld, and Paris’s ( 2004 ) conceptualisation of the three dimensions of student engagement (behavioural, emotional, cognitive), and sociocultural theories of engagement such as Kahu ( 2013 ) and Kahu and Nelson ( 2018 ), have done much to shape and refine our understanding of this complex phenomenon. However, criticism about the strength and depth of student engagement theorising remains e.g. (Boekaerts, 2016 ; Kahn, 2014 ; Zepke, 2018 ), the quality of which has had a direct impact on the rigour of subsequent research (Lawson & Lawson, 2013 ; Trowler, 2010 ), prompting calls for further synthesis (Azevedo, 2015 ; Eccles, 2016 ).

In parallel to this increased attention on student engagement, digital technology has become a central aspect of higher education, inherently affecting all aspects of the student experience (Barak, 2018 ; Henderson, Selwyn, & Aston, 2017 ; Selwyn, 2016 ). International recognition of the importance of ICT skills and digital literacy has been growing, alongside mounting recognition of its importance for active citizenship (Choi, Glassman, & Cristol, 2017 ; OECD, 2015a ; Redecker, 2017 ), and the development of interdisciplinary and collaborative skills (Barak & Levenberg, 2016 ; Oliver, & de St Jorre, Trina, 2018 ). Using technology has the potential to make teaching and learning processes more intensive (Kerres, 2013 ), improve student self-regulation and self-efficacy (Alioon & Delialioğlu, 2017 ; Bouta, Retalis, & Paraskeva, 2012 ), increase participation and involvement in courses as well as the wider university community (Junco, 2012 ; Salaber, 2014 ), and predict increased student engagement (Chen, Lambert, & Guidry, 2010 ; Rashid & Asghar, 2016 ). There is, however, no guarantee of active student engagement as a result of using technology (Kirkwood, 2009 ), with Tamim, Bernard, Borokhovski, Abrami, and Schmid’s ( 2011 ) second-order meta-analysis finding only a small to moderate impact on student achievement across 40 years. Rather, careful planning, sound pedagogy and appropriate tools are vital (Englund, Olofsson, & Price, 2017 ; Koehler & Mishra, 2005 ; Popenici, 2013 ), as “technology can amplify great teaching, but great technology cannot replace poor teaching” (OECD, 2015b ), p. 4.

Due to the nature of its complexity, educational technology research has struggled to find a common definition and terminology with which to talk about student engagement, which has resulted in inconsistency across the field. For example, whilst 77% of articles reviewed by Henrie, Halverson, and Graham ( 2015 ) operationalised engagement from a behavioural perspective, most of the articles did not have a clearly defined statement of engagement, which is no longer considered acceptable in student engagement research (Appleton, Christenson, & Furlong, 2008 ; Christenson, Reschly, & Wylie, 2012 ). Linked to this, educational technology research has, however, lacked theoretical guidance (Al-Sakkaf, Omar, & Ahmad, 2019 ; Hew, Lan, Tang, Jia, & Lo, 2019 ; Lundin, Bergviken Rensfeldt, Hillman, Lantz-Andersson, & Peterson, 2018 ). A review of 44 random articles published in 2014 in the journals Educational Technology Research & Development and Computers & Education, for example, revealed that more than half had no guiding conceptual or theoretical framework (Antonenko, 2015 ), and only 13 out of 62 studies in a systematic review of flipped learning in engineering education reported theoretical grounding (Karabulut-Ilgu, Jaramillo Cherrez, & Jahren, 2018 ). Therefore, calls have been made for a greater understanding of the role that educational technology plays in affecting student engagement, in order to strengthen teaching practice and lead to improved outcomes for students (Castañeda & Selwyn, 2018 ; Krause & Coates, 2008 ; Nelson Laird & Kuh, 2005 ).

A reflection upon prior research that has been undertaken in the field is a necessary first step to engage in meaningful discussion on how to foster student engagement in the digital age. In support of this aim, this article provides a synthesis of student engagement theory research, and systematically maps empirical higher education research between 2007 and 2016 on student engagement in educational technology. Synthesising the vast body of literature on student engagement (for previous literature and systematic reviews, see Additional file 1 ), this article develops “a tentative theory” in the hopes of “plot[ting] the conceptual landscape…[and chart] possible routes to explore it” (Antonenko, 2015 , pp. 57–67) for researchers, practitioners, learning designers, administrators and policy makers. It then discusses student engagement against the background of educational technology research, exploring prior literature and systematic reviews that have been undertaken. The systematic review search method is then outlined, followed by the presentation and discussion of findings.

Literature review

What is student engagement.

Student engagement has been linked to improved achievement, persistence and retention (Finn, 2006 ; Kuh, Cruce, Shoup, Kinzie, & Gonyea, 2008 ), with disengagement having a profound effect on student learning outcomes and cognitive development (Ma, Han, Yang, & Cheng, 2015 ), and being a predictor of student dropout in both secondary school and higher education (Finn & Zimmer, 2012 ). Student engagement is a multifaceted and complex construct (Appleton et al., 2008 ; Ben-Eliyahu, Moore, Dorph, & Schunn, 2018 ), which some have called a ‘meta-construct’ (e.g. Fredricks et al., 2004 ; Kahu, 2013 ), and likened to blind men describing an elephant (Baron & Corbin, 2012 ; Eccles, 2016 ). There is ongoing disagreement about whether there are three components e.g., (Eccles, 2016 )—affective/emotional, cognitive and behavioural—or whether there are four, with the recent suggested addition of agentic engagement (Reeve, 2012 ; Reeve & Tseng, 2011 ) and social engagement (Fredricks, Filsecker, & Lawson, 2016 ). There has also been confusion as to whether the terms ‘engagement’ and ‘motivation’ can and should be used interchangeably (Reschly & Christenson, 2012 ), especially when used by policy makers and institutions (Eccles & Wang, 2012 ). However, the prevalent understanding across the literature is that motivation is an antecedent to engagement; it is the intent and unobservable force that energises behaviour (Lim, 2004 ; Reeve, 2012 ; Reschly & Christenson, 2012 ), whereas student engagement is energy and effort in action; an observable manifestation (Appleton et al., 2008 ; Eccles & Wang, 2012 ; Kuh, 2009 ; Skinner & Pitzer, 2012 ), evidenced through a range of indicators.

Whilst it is widely accepted that no one definition exists that will satisfy all stakeholders (Solomonides, 2013 ), and no one project can be expected to possibly examine every sub-construct of student engagement (Kahu, 2013 ), it is important for each research project to begin with a clear definition of their own understanding (Boekaerts, 2016 ). Therefore, in this project, student engagement is defined as follows:

Student engagement is the energy and effort that students employ within their learning community, observable via any number of behavioural, cognitive or affective indicators across a continuum. It is shaped by a range of structural and internal influences, including the complex interplay of relationships, learning activities and the learning environment. The more students are engaged and empowered within their learning community, the more likely they are to channel that energy back into their learning, leading to a range of short and long term outcomes, that can likewise further fuel engagement.

Dimensions and indicators of student engagement

There are three widely accepted dimensions of student engagement; affective, cognitive and behavioural. Within each component there are several indicators of engagement (see Additional file 2 ), as well as disengagement (see Additional file 2 ), which is now seen as a separate and distinct construct to engagement. It should be stated, however, that whilst these have been drawn from a range of literature, this is not a finite list, and it is recognised that students might experience these indicators on a continuum at varying times (Coates, 2007 ; Payne, 2017 ), depending on their valence (positive or negative) and activation (high or low) (Pekrun & Linnenbrink-Garcia, 2012 ). There has also been disagreement in terms of which dimension the indicators align with. For example, Järvelä, Järvenoja, Malmberg, Isohätälä, and Sobocinski ( 2016 ) argue that ‘interaction’ extends beyond behavioural engagement, covering both cognitive and/or emotional dimensions, as it involves collaboration between students, and Lawson and Lawson ( 2013 ) believe that ‘effort’ and ‘persistence’ are cognitive rather than behavioural constructs, as they “represent cognitive dispositions toward activity rather than an activity unto itself” (p. 465), which is represented in the table through the indicator ‘stay on task/focus’ (see Additional file 2 ). Further consideration of these disagreements represent an area for future research, however, as they are beyond the scope of this paper.

Student engagement within educational technology research

The potential that educational technology has to improve student engagement, has long been recognised (Norris & Coutas, 2014 ), however it is not merely a case of technology plus students equals engagement. Without careful planning and sound pedagogy, technology can promote disengagement and impede rather than help learning (Howard, Ma, & Yang, 2016 ; Popenici, 2013 ). Whilst still a young area, most of the research undertaken to gain insight into this, has been focused on undergraduate students e.g., (Henrie et al., 2015 ; Webb, Clough, O’Reilly, Wilmott, & Witham, 2017 ), with Chen et al. ( 2010 ) finding a positive relationship between the use of technology and student engagement, particularly earlier in university study. Research has also been predominantly STEM and medicine focused (e.g., Li, van der Spek, Feijs, Wang, & Hu, 2017 ; Nikou & Economides, 2018 ), with at least five literature or systematic reviews published in the last 5 years focused on medicine, and nursing in particular (see Additional file 3 ). This indicates that further synthesis is needed of research in other disciplines, such as Arts & Humanities and Education, as well as further investigation into whether research continues to focus on undergraduate students.

The five most researched technologies in Henrie et al.’s ( 2015 ) review were online discussion boards, general websites, learning management systems (LMS), general campus software and videos, as opposed to Schindler, Burkholder, Morad, and Marsh’s ( 2017 ) literature review, which concentrated on social networking sites (Facebook and Twitter), digital games, wikis, web-conferencing software and blogs. Schindler et al. found that most of these technologies had a positive impact on multiple indicators of student engagement across the three dimensions of engagement, with digital games, web-conferencing software and Facebook the most effective. However, it must be noted that they only considered seven indicators of student engagement, which could be extended by considering further indicators of student engagement. Other reviews that have found at least a small positive impact on student engagement include those focused on audience response systems (Hunsu, Adesope, & Bayly, 2016 ; Kay & LeSage, 2009 ), mobile learning (Kaliisa & Picard, 2017 ), and social media (Cheston, Flickinger, & Chisolm, 2013 ). Specific indicators of engagement that increased as a result of technology include interest and enjoyment (Li et al., 2017 ), improved confidence (Smith & Lambert, 2014 ) and attitudes (Nikou & Economides, 2018 ), as well as enhanced relationships with peers and teachers e.g., (Alrasheedi, Capretz, & Raza, 2015 ; Atmacasoy & Aksu, 2018 ).

Literature and systematic reviews focused on student engagement and technology do not always include information on where studies have been conducted. Out of 27 identified reviews (see Additional file 3 ), only 14 report the countries included, and two of these were explicitly focused on a specific region or country, namely Africa and Turkey. Most of the research has been conducted in the USA, followed by the UK, Taiwan, Australia and China. Table 1 depicts the three countries from which most studies originated from in the respective reviews, and highlights a clear lack of research conducted within mainland Europe, South America and Africa. Whilst this could be due to the choice of databases in which the literature was searched for, this nevertheless highlights a substantial gap in the literature, and to that end, it will be interesting to see whether this review is able to substantiate or contradict these trends.

Research into student engagement and educational technology has predominantly used a quantitative methodology (see Additional file 3 ), with 11 literature and systematic reviews reporting that surveys, particularly self-report Likert-scale, are the most used source of measurement (e.g. Henrie et al., 2015 ). Reviews that have included research using a range of methodologies, have found a limited number of studies employing qualitative methods (e.g. Connolly, Boyle, MacArthur, Hainey, & Boyle, 2012 ; Kay & LeSage, 2009 ; Lundin et al., 2018 ). This has led to a call for further qualitative research to be undertaken, exploring student engagement and technology, as well as more rigorous research designs e.g., (Li et al., 2017 ; Nikou & Economides, 2018 ), including sampling strategies, data collection, and in experimental studies in particular (Cheston et al., 2013 ; Connolly et al., 2012 ). However, not all reviews included information on methodologies used. Crook ( 2019 ), in his recent editorial in the British Journal of Educational Technology , stated that research methodology is a “neglected topic” (p. 487) within educational technology research, and stressed its importance in order to conduct studies delving deeper into phenomena (e.g. longitudinal studies).

Therefore, this article presents an initial “evidence map” (Miake-Lye, Hempel, Shanman, & Shekelle, 2016 ), p. 19 of systematically identified literature on student engagement and educational technology within higher education, undertaken through a systematic review, in order to address the issues raised by prior research, and to identify research gaps. These issues include the disparity between field of study and study levels researched, the geographical distribution of studies, the methodologies used, and the theoretical fuzziness surrounding student engagement. This article, however, is intended to provide an initial overview of the systematic review method employed, as well as an overview of the overall corpus. Further synthesis of possible correlations between student engagement and disengagement indicators with the co-occurrence of technology tools, will be undertaken within field of study specific articles (e.g., Bedenlier, 2020b ; Bedenlier 2020a ), allowing more meaningful guidance on applying the findings in practice.

The following research questions guide this enquiry:

How do the studies in the sample ground student engagement and align with theory?

Which indicators of cognitive, behavioural and affective engagement were identified in studies where educational technology was used? Which indicators of student disengagement?

What are the learning scenarios, modes of delivery and educational technology tools employed in the studies?

Overview of the study

With the intent to systematically map empirical research on student engagement and educational technology in higher education, we conducted a systematic review. A systematic review is an explicitly and systematically conducted literature review, that answers a specific question through applying a replicable search strategy, with studies then included or excluded, based on explicit criteria (Gough, Oliver, & Thomas, 2012 ). Studies included for review are then coded and synthesised into findings that shine light on gaps, contradictions or inconsistencies in the literature, as well as providing guidance on applying findings in practice. This contribution maps the research corpus of 243 studies that were identified through a systematic search and ensuing random parameter-based sampling.

Search strategy and selection procedure

The initial inclusion criteria for the systematic review were peer-reviewed articles in the English language, empirically reporting on students and student engagement in higher education, and making use of educational technology. The search was limited to records between 1995 and 2016, chosen due to the implementation of the first Virtual Learning Environments and Learning Management Systems within higher education see (Bond, 2018 ). Articles were limited to those published in peer-reviewed journals, due to the rigorous process under which they are published, and their trustworthiness in academia (Nicholas et al., 2015 ), although concerns within the scientific community with the peer-review process are acknowledged e.g. (Smith, 2006 ).

Discussion arose on how to approach the “hard-to-detect” (O’Mara-Eves et al., 2014 , p. 51) concept of student engagement in regards to sensitivity versus precision (Brunton, Stansfield, & Thomas, 2012 ), particularly in light of engagement being Henrie et al.’s ( 2015 ) most important search term. The decision was made that the concept ‘student engagement’ would be identified from titles and abstracts at a later stage, during the screening process. In this way, it was assumed that articles would be included, which indeed are concerned with student engagement, but which use different terms to describe the concept. Given the nature of student engagement as a meta-construct e.g. (Appleton et al., 2008 ; Christenson et al., 2012 ; Kahu, 2013 ) and by limiting the search to only articles including the term engagement , important research on other elements of student engagement might be missed. Hence, we opted for recall over precision. According to Gough et al. ( 2012 ), p. 13 “electronic searching is imprecise and captures many studies that employ the same terms without sharing the same focus”, or would lead to disregarding studies that analyse the construct but use different terms to describe it.

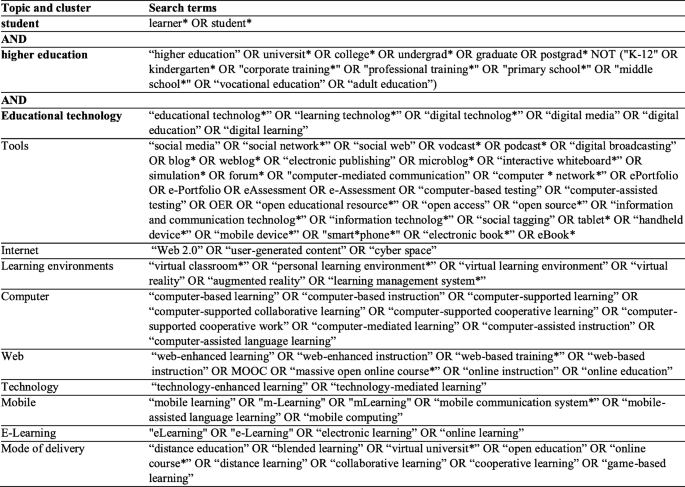

With this in mind, the search strategy to identify relevant studies was developed iteratively with support from the University Research Librarian. As outlined in O’Mara-Eves et al. ( 2014 ) as a standard approach, we used reviewer knowledge—in this case strongly supported through not only reviewer knowledge but certified expertise—and previous literature (e.g. Henrie et al., 2015 ; Kahu, 2013 ) to elicit concepts with potential importance under the topics student engagement, higher education and educational technology . The final search string (see Fig. 1 ) encompasses clusters of different educational technologies that were searched for separately in order to avoid an overly long search string. It was decided not to include any brand names, e.g. Facebook, Twitter, Moodle etc. because it was again reasoned that in scientific publication, the broader term would be used (e.g. social media). The final search string was slightly adapted, e.g. the format required for truncations or wildcards, according to the settings of each database being used Footnote 1 .

Final search terms used in the systematic review

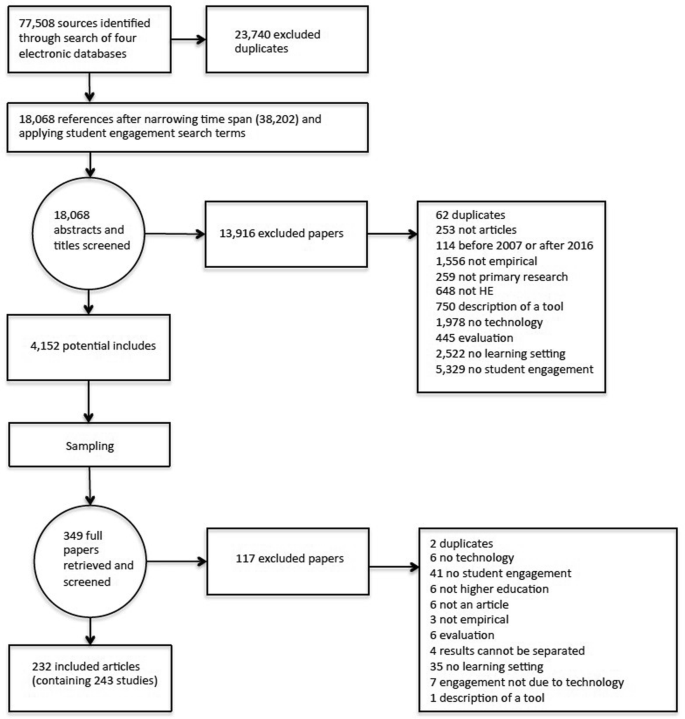

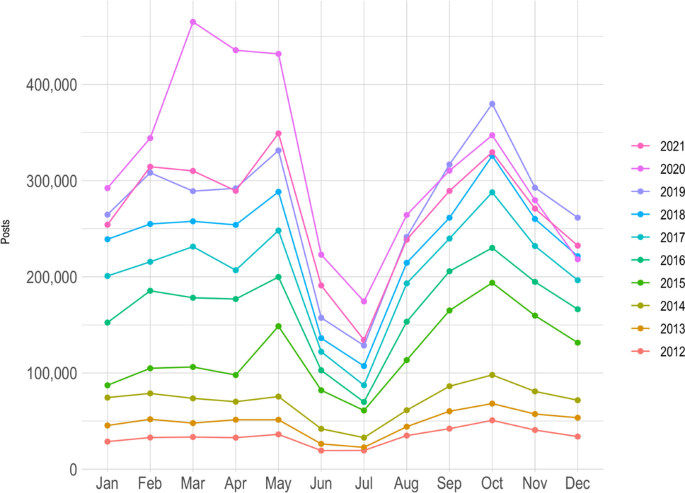

Four databases (ERIC, Web of Science, Scopus and PsycINFO) were searched in July 2017 and three researchers and a student assistant screened abstracts and titles of the retrieved references between August and November 2017, using EPPI Reviewer 4.0. An initial 77,508 references were retrieved, and with the elimination of duplicate records, 53,768 references remained (see Fig. 2 ). A first cursory screening of records revealed that older research was more concerned with technologies that are now considered outdated (e.g. overhead projectors, floppy disks). Therefore, we opted to adjust the period to include research published between 2007 and 2016, labeled as a phase of research and practice, entitled ‘online learning in the digital age’ (Bond, 2018 ). Whilst we initially opted for recall over precision, the decision was then made to search for specific facets of the student engagement construct (e.g. deep learning, interest and persistence) within EPPI-Reviewer, in order to further refine the corpus. These adaptations led to a remaining 18,068 records.

Systematic review PRISMA flow chart (slightly modified after Brunton et al., 2012 , p. 86; Moher, Liberati, Tetzlaff, & Altman, 2009 ), p. 8

Four researchers screened the first 150 titles and abstracts, in order to iteratively establish a joint understanding of the inclusion criteria. The remaining references were distributed equally amongst the screening team, which resulted in the inclusion of 4152 potentially relevant articles. Given the large number of articles for screening on full text, whilst facing restrained time as a condition in project-based and funded work, it was decided that a sample of articles would be drawn from this corpus for further analysis. With the intention to draw a sample that estimates the population parameters with a predetermined error range, we used methods of sample size estimation in the social sciences (Kupper & Hafner, 1989 ). To do so, the R Package MBESS (Kelley, Lai, Lai, & Suggests, 2018 ) was used. Accepting a 5% error range, a percentage of a half and an alpha of 5%, 349 articles were sampled, with this sample being then stratified by publishing year, as student engagement has become much more prevalent (Zepke, 2018 ) and educational technology has become more differentiated within the last decade (Bond, 2018 ). Two researchers screened the first 100 articles on full text, reaching an agreement of 88% on inclusion/exclusion. The researchers then discussed the discrepancies and came to an agreement on the remaining 12%. It was decided that further comparison screening was needed, to increase the level of reliability. After screening the sample on full text, 232 articles remained for data extraction, which contained 243 studies.

Data extraction process

In order to extract the article data, an extensive coding system was developed, including codes to extract information on the set-up and execution of the study (e.g. methodology, study sample) as well as information on the learning scenario, the mode of delivery and educational technology used. Learning scenarios included broader pedagogies, such as social collaborative learning and self-determined learning, but also specific pedagogies such as flipped learning, given the increasing number of studies and interest in these approaches (e.g., Lundin et al., 2018 ). Specific examples of student engagement and/or disengagement were coded under cognitive, affective or behavioural (dis)engagement. The facets of student (dis)engagement were identified based on the literature review undertaken (see Additional file 2 ), and applied in this detailed manner to not only capture the overarching dimensions of the concept, but rather their diverse sub-meanings. New indicators also emerged during the coding process, which had not initially been identified from the literature review, including ‘confidence’ and ‘assuming responsibility’. The 243 studies were coded with this extensive code set and any disagreements that occurred between the coders were reconciled. Footnote 2

As a plethora of over 50 individual educational technology applications and tools were identified in the 243 studies, in line with results found in other large-scale systematic reviews (e.g., Lai & Bower, 2019 ), concerns were raised over how the research team could meaningfully analyse and report the results. The decision was therefore made to employ Bower’s ( 2016 ) typology of learning technologies (see Additional file 4 ), in order to channel the tools into groups that share the same characteristics or “structure of information” (Bower, 2016 ), p. 773. Whilst it is acknowledged that some of the technology could be classified into more than one type within the typology, e.g. wikis can be used in individual composition, for collaborative tasks, or for knowledge organisation and sharing, “the type of learning that results from the use of the tool is dependent on the task and the way people engage with it rather than the technology itself” therefore “the typology is presented as descriptions of what each type of tool enables and example use cases rather than prescriptions of any particular pedagogical value system” (Bower, 2016 ), p. 774. For further elaboration on each category, please see Bower ( 2015 ).

Study characteristics

Geographical characteristics.

The systematic mapping reveals that the 243 studies were set in 33 different countries, whilst seven studies investigated settings in an international context, and three studies did not indicate their country setting. In 2% of the studies, the country was allocated based on the author country of origin, if the two authors came from the same country. The top five countries account for 158 studies (see Fig. 3 ), with 35.4% ( n = 86) studies conducted in the United States (US), 10.7% ( n = 26) in the United Kingdom (UK), 7.8% ( n = 19) in Australia, 7.4% ( n = 18) in Taiwan, and 3.7% ( n = 9) in China. Across the corpus, studies from countries employing English as the official or one of the official languages total up to 59.7% of the entire sample, followed by East Asian countries that in total account for 18.8% of the sample. With the exception of the UK, European countries are largely absent from the sample, only 7.3% of the articles originate from this region, with countries such as France, Belgium, Italy or Portugal having no studies and countries such as Germany or the Netherlands having one respectively. Thus, with eight articles, Spain is the most prolific European country outside of the UK. The geographical distribution of study settings also clearly shows an almost complete absence of studies undertaken within African contexts, with five studies from South Africa and one from Tunisia. Studies from South-East Asia, the Middle East, and South America are likewise low in number this review. Whilst the global picture evokes an imbalance, this might be partially due to our search and sampling strategy, having focused on English language journals, indexed in four primarily Western-focused databases.

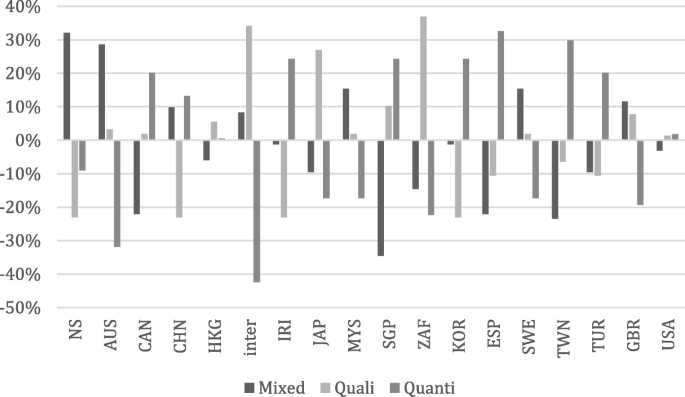

Percentage deviation from the average relative frequencies of the different data collection formats per country (≥ 3 articles). Note. NS = not stated; AUS = Australia; CAN = Canada; CHN = China; HKG = Hong Kong; inter = international; IRI = Iran; JAP = Japan; MYS = Malaysia; SGP = Singapore; ZAF = South Africa; KOR = South Korea; ESP = Spain; SWE = Sweden; TWN = Taiwan; TUR = Turkey; GBR = United Kingdom; USA = United States of America

Methodological characteristics

Within this literature corpus, 103 studies (42%) employed quantitative methods, 84 (35%) mixed methods, and 56 (23%) qualitative. Relating these numbers back to the contributing countries, different preferences for and frequencies of methods used become apparent (see Fig. 3 ). As a general tendency, mixed methods and qualitative research occurs more often in Western countries, whereas quantitative research is the preferred method in East Asian countries. For example, studies originating from Australia employ mixed methods research 28% more often than the average, whereas Singapore is far below average in mixed methods research, with 34.5% less than the other countries in the sample. In Taiwan, on the other hand, mixed methods studies are being conducted 23.5% below average and qualitative research 6.4% less often than average. However, quantitative research occurs more often than in other countries, with 29.8% above average.

Amongst the qualitative studies, qualitative content analysis ( n = 30) was the most frequently used analysis approach, followed by thematic analysis ( n = 21) and grounded theory ( n = 12). However, a lot of times ( n = 37) the exact analysis approach was not reported, could not be allocated to a specific classification ( n = 22), or no method of analysis was identifiable ( n = 11). Within studies using quantitative methods, mean comparison was used in 100 studies, frequency data was collected and analysed in 83 studies, and in 40 studies regression models were used. Furthermore, looking at the correlation between the different analysis approaches, only one significant correlation can be identified, this being between mean comparison and frequency data (−.246). Besides that, correlations are small, for example, in only 14% of the studies both mean comparisons and regressions models are employed.

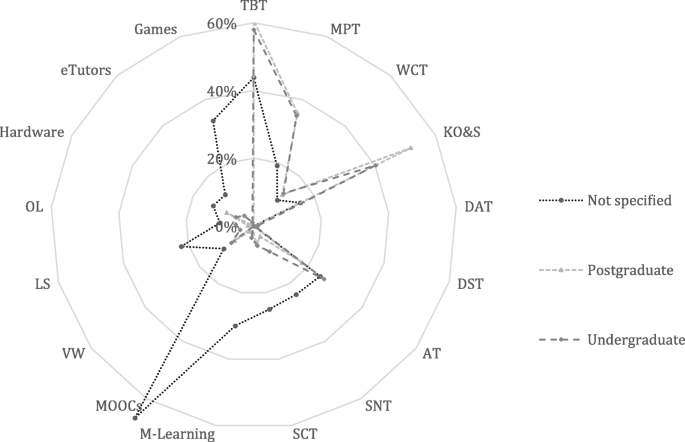

Study population characteristics

Research in the corpus focused on universities as the prime institution type ( n = 191, 79%), followed by 24 (10%) non-specified institution types, and colleges ( n = 21, 8.2%) (see Fig. 4 ). Five studies (2%) included institutions classified as ‘other’, and two studies (0.8%) included both college and university students. The most frequently studied student population was undergraduate students (60%, n = 146), as opposed to 33 studies (14%) focused on postgraduate students (see Fig. 6 ). A combination of undergraduate and postgraduate students were the subject of interest in 23 studies (9%), with 41 studies (17%) not specifying the level of study of research participants.

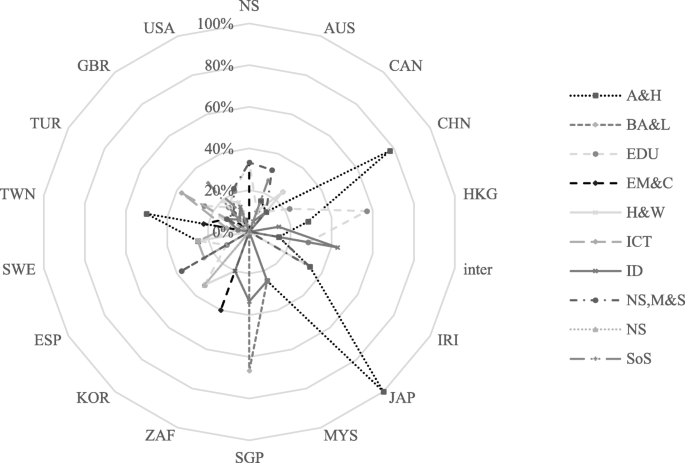

Relative frequencies of study field in dependence of countries with ≥3 articles. Note. Country abbreviations are as per Figure 4. A&H = Arts & Humanities; BA&L = Business, Administration and Law; EDU = Education; EM&C = Engineering, Manufacturing & Construction; H&W = Health & Welfare; ICT = Information & Communication Technologies; ID = interdisciplinary; NS,M&S = Natural Science, Mathematics & Statistics; NS = Not specified; SoS = Social Sciences, Journalism & Information

Based on the UNESCO (2015) ISCED classification, eight broad study fields are covered in the sample, with Arts & Humanities (42 studies), Education (42 studies), and Natural Sciences, Mathematics & Statistics (37) being the top three study fields, followed by Health & Welfare (30 studies), Social Sciences, Journalism & Information (22), Business, Administration & Law (19 studies), Information & Communication Technologies (13), Engineering, Manufacturing & Construction (11), and another 26 studies of interdisciplinary character. One study did not specify a field of study.

An expectancy value was calculated, according to which, the distribution of studies per discipline should occur per country. The actual deviation from this value then showed that several Asian countries are home to more articles in the field of Arts & Humanities than was expected: Japan with 3.3 articles more, China with 5.4 and Taiwan with 5.9. Furthermore, internationally located research also shows 2.3 more interdisciplinary studies than expected, whereas studies on Social Sciences occur more often than expected in the UK (5.7 more articles) and Australia (3.3 articles) but less often than expected across all other countries. Interestingly, the USA have 9.9 studies less in Arts & Humanities than was expected but 5.6 articles more than expected in Natural Science.

Question One: How do the studies in the sample ground student engagement and align with theory?

Defining student engagement.

It is striking that almost all of the studies ( n = 225, 93%) in this corpus lack a definition of student engagement, with only 18 (7%) articles attempting to define the concept. However, this is not too surprising, as the search strategy was set up with the assumption that researchers investigating student engagement (dimensions and indicators) would not necessarily label them as student engagement. When developing their definitions, authors in these 18 studies referenced 22 different sources, with the work of Kuh and colleagues e.g., (Hu & Kuh, 2002 ; Kuh, 2001 ; Kuh et al., 2006 ), as well as Astin ( 1984 ), the only authors referred to more than once. The most popular definition of student engagement within these studies was that of active participation and involvement in learning and university life e.g., (Bolden & Nahachewsky, 2015 ; bFukuzawa & Boyd, 2016 ), which was also found by Joksimović et al. ( 2018 ) in their review of MOOC research. Interaction, especially between peers and with faculty, was the next most prevalent definition e.g., (Andrew, Ewens, & Maslin-Prothero, 2015 ; Bigatel & Williams, 2015 ). Time and effort was given as a definition in four studies (Gleason, 2012 ; Hatzipanagos & Code, 2016 ; Price, Richardson, & Jelfs, 2007 ; Sun & Rueda, 2012 ), with expending physical and psychological energy (Ivala & Gachago, 2012 ) another definition. This variance in definitions and sources reflects the ongoing complexity of the construct (Zepke, 2018 ), and serves to reinforce the need for a clearer understanding across the field (Schindler et al., 2017 ).

Theoretical underpinnings

Reflecting findings from other systematic and literature reviews on the topic (Abdool, Nirula, Bonato, Rajji, & Silver, 2017 ; Hunsu et al., 2016 ; Kaliisa & Picard, 2017 ; Lundin et al., 2018 ), 59% ( n = 100) of studies did not employ a theoretical model in their research. Of the 41% ( n = 100) that did, 18 studies drew on social constructivism, followed by the Community of Inquiry model ( n = 8), Sociocultural Learning Theory ( n = 5), and Community of Practice models ( n = 4). These findings also reflect the state of the field in general (Al-Sakkaf et al., 2019 ; Bond, 2019b ; Hennessy, Girvan, Mavrikis, Price, & Winters, 2018 ).

Another interesting finding of this research is that whilst 144 studies (59%) provided research questions, 99 studies (41%) did not. Although it is recognised that not all studies have research questions (Bryman, 2007 ), or only develop them throughout the research process, such as with grounded theory (Glaser & Strauss, 1967 ), a surprising number of quantitative studies (36%, n = 37) did not have research questions. This is a reflection on the lack of theoretical guidance, as 30 of these 37 studies also did not draw on a theoretical or conceptual framework.

Question 2: which indicators of cognitive, behavioural and affective engagement were identified in studies where educational technology was used? Which indicators of student disengagement?

Student engagement indicators.

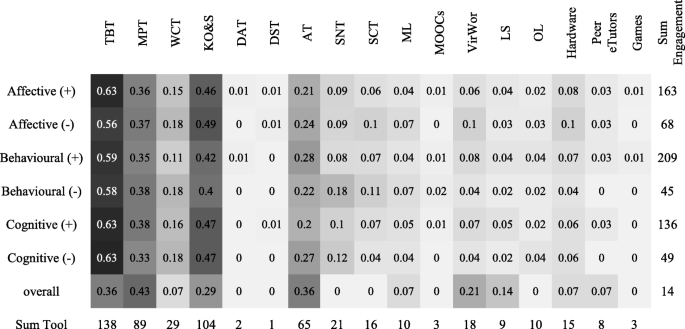

Within the corpus, the behavioural engagement dimension was documented in some form in 209 studies (86%), whereas the dimension of affective engagement was reported in 163 studies (67%) and the cognitive dimension in only 136 (56%) studies. However, the ten most often identified student engagement indicators across the studies overall (see Table 2 ) were evenly distributed over all three dimensions (see Table 3 ). The indicators participation/interaction/involvement , achievement and positive interactions with peers and teachers each appear in at least 100 studies, which is almost double the amount of the next most frequent student engagement indicator.

Across the 243 studies in the corpus, 117 (48%) showed all three dimensions of affective, cognitive and behavioural student engagement e.g., (Szabo & Schwartz, 2011 ), including six studies that used established student engagement questionnaires, such as the NSSE (e.g., Delialioglu, 2012 ), or self-developed addressing these three dimensions. Another 54 studies (22%) displayed at least two student engagement dimensions e.g., (Hatzipanagos & Code, 2016 ), including six questionnaire studies. Studies exhibiting one student engagement dimension only, was reported in 71 studies (29%) e.g., (Vural, 2013 ).

Student disengagement indicators

Indicators of student disengagement (see Table 4 ) were identified considerably less often across the corpus, which could be explained by the purpose of the studies being to primarily address/measure positive engagement, but on the other hand this could potentially be due to a form of self-selected or publication bias, due to less frequently reporting and/or publishing studies with negative results. The three disengagement indicators that were most often indicated were frustration ( n = 33, 14%) e.g., (Ikpeze, 2007 ), opposition/rejection ( n = 20, 8%) e.g., (Smidt, Bunk, McGrory, Li, & Gatenby, 2014 ) and disappointment e.g., (Granberg, 2010 ) , as well as other affective disengagement ( n = 18, 7% each).

Technology tool typology and engagement/disengagement indicators

Across the 243 studies, a plethora of over 50 individual educational technology tools were employed. The top five most frequently researched tools were LMS ( n = 89), discussion forums ( n = 80), videos ( n = 44), recorded lectures ( n = 25), and chat ( n = 24). Following a slightly modified version of Bower’s ( 2016 ) educational tools typology, 17 broad categories of tools were identified (see Additional file 4 for classification, and 3.2 for further information). The frequency with which tools from the respective groups employed in studies varied considerably (see Additional file 4 ), with the top five categories being text-based tools ( n = 138), followed by knowledge organisation & sharing tools ( n = 104), multimodal production tools ( n = 89), assessment tools ( n = 65) and website creation tools ( n = 29).

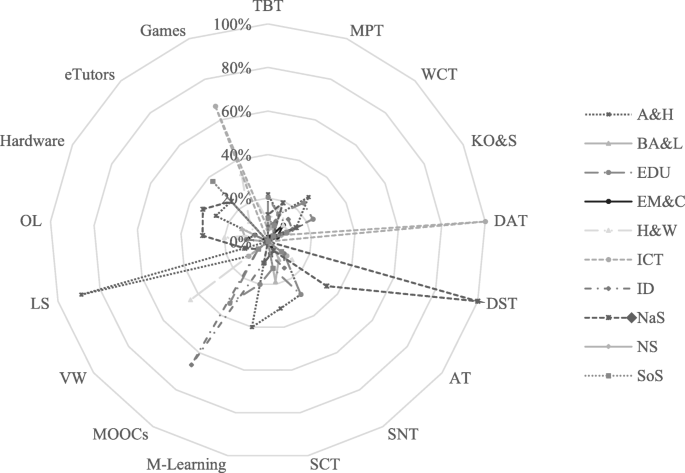

Figure 5 shows what percentage of each engagement dimension (e.g., affective engagement or cognitive disengagement) was fostered through each specific technology type. Given the results in 4.2.1 on student engagement, it was somewhat unsurprising to see the prevalence of text-based tools , knowledge organisation & sharing tools, and multimodal production tools having the highest proportion of affective, behavioural and cognitive engagement. For example, affective engagement was identified in 163 studies, with 63% of these studies using text-based tools (e.g., Bulu & Yildirim, 2008 ) , and cognitive engagement identified in 136 studies, with 47% of those using knowledge organisation & sharing tools e.g., (Shonfeld & Ronen, 2015 ). However, further analysis of studies employing discussion forums (a text-based tool ) revealed that, whilst the top affective and behavioural engagement indicators were found in almost two-thirds of studies (see Additional file 5 ), there was a substantial gap between that and the next most prevalent engagement indicator, with the exact pattern (and indicators) emerging for wikis. This represents an area for future research.

Engagement and disengagement by tool typology. Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organisation and sharing tools; DAT = data analysis tools; DST = digital storytelling tools; AT = assessment tools; SNT = social networking tools; SCT = synchronous collaboration tools; ML = mobile learning; VW = virtual worlds; LS = learning software; OL = online learning; A&H = Arts & Humanities; BA&L = Business, Administration and Law; EDU = Education; EM&C = Engineering, Manufacturing & Construction; H&W = Health & Welfare; ICT = Information & Communication Technologies; ID = interdisciplinary; NS,M&S = Natural Science, Mathematics & Statistics; NS = Not specified; SoS = Social Sciences, Journalism & Information

Interestingly, studies using website creation tools reported more disengagement than engagement indicators across all three domains (see Fig. 5 ), with studies using assessment tools and social networking tools also reporting increased instances of disengagement across two domains (affective and cognitive, and behavioural and cognitive respectively). 23 of the studies (79%) using website creation tools , used blogs, with students showing, for example, disinterest in topics chosen e.g., (Sullivan & Longnecker, 2014 ), anxiety over their lack of blogging knowledge and skills e.g., (Mansouri & Piki, 2016 ), and continued avoidance of using blogs in some cases, despite introductory training e.g., (Keiller & Inglis-Jassiem, 2015 ). In studies where assessment tools were used, students found timed assessment stressful, particularly when trying to complete complex mathematical solutions e.g., (Gupta, 2009 ), as well as quizzes given at the end of lectures, with some students preferring take-up time of content first e.g., (DePaolo & Wilkinson, 2014 ). Disengagement in studies where social networking tools were used, indicated that some students found it difficult to express themselves in short posts e.g., (Cook & Bissonnette, 2016 ), that conversations lacked authenticity e.g., (Arnold & Paulus, 2010 ), and that some did not want to mix personal and academic spaces e.g., (Ivala & Gachago, 2012 ).

Question 3: What are the learning scenarios, modes of delivery and educational technology tools employed in the studies?

Learning scenarios.

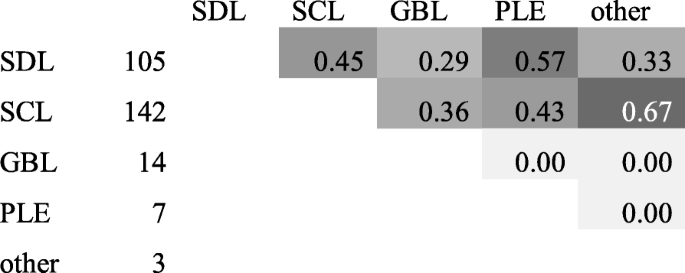

With 58.4% across the sample, social-collaborative learning (SCL) was the scenario most often employed ( n = 142), followed by 43.2% of studies investigating self-directed learning (SDL) ( n = 105) and 5.8% of studies using game-based learning (GBL) ( n = 14) (see Fig. 6 ). Studies coded as SCL included those exploring social learning (Bandura, 1971 ) and social constructivist approaches (Vygotsky, 1978 ). Personal learning environments (PLE) were found for 2.9% of studies, 1.3% studies used other scenarios ( n = 3), whereas another 13.2% did not provide specification of their learning scenarios ( n = 32). It is noteworthy that in 45% of possible cases for employing SDL scenarios, SCL was also used. Other learning scenarios were also used mostly in combination with SCL and SDL. Given the rising number of higher education studies exploring flipped learning (Lundin et al., 2018 ), studies exploring the approach were also specifically coded (3%, n = 7).

Co-occurrence of learning scenarios across the sample ( n = 243). Note. SDL = self-directed learning; SCL = social collaborative learning; GBL = game-based learning; PLE = personal learning environments; other = other learning scenario

Modes of delivery

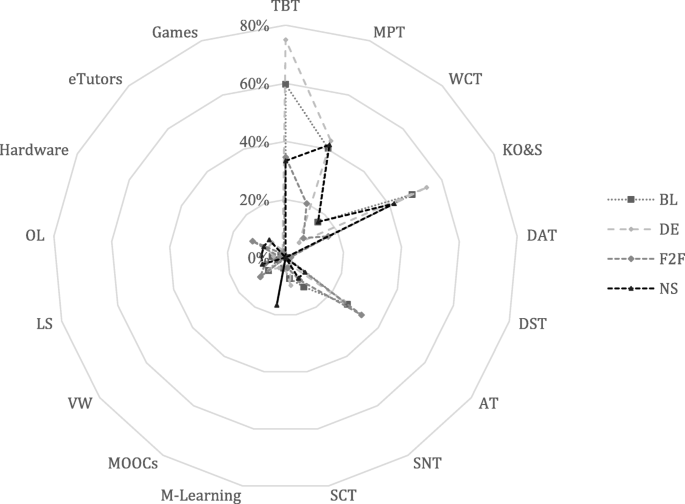

In 84% of studies ( n = 204), a single mode of delivery was used, with blended learning the most researched (109 studies), followed by distance education (72 studies), and face-to-face instruction (55 studies). Of the remaining 39 studies, 12 did not indicate their mode of delivery, whilst the other 27 studies combined or compared modes of delivery, e.g. comparing face to face courses to blended learning, such as the study on using iPads in undergraduate nursing education by Davies ( 2014 ).

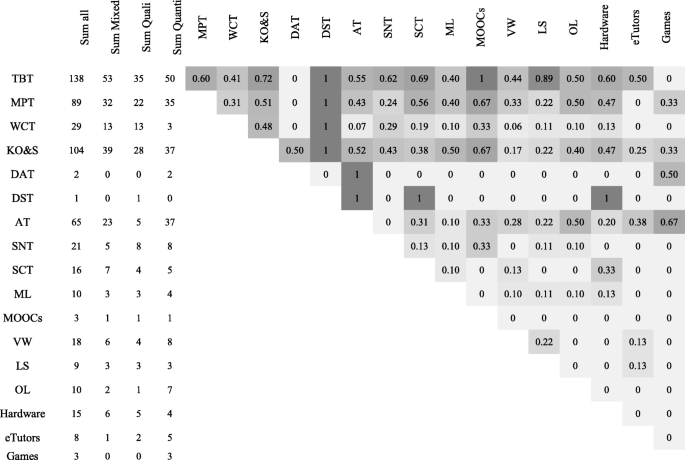

Educational technology tools investigated

Most studies in this corpus (55%) used technology asynchronously, with 12% of studies researching synchronous tools, and 18% of studies using both asynchronous and synchronous. When exploring the use of tools, the results are not surprising, with a heavy reliance on asynchronous technology. However, when looking at tool usage with studies in face-to-face contexts, the number of synchronous tools (31%) is almost as many as the number of asynchronous tools (41%), and surprisingly low within studies in distance education (7%).

Tool categories were used in combination, with text-based tools most often used in combination with other technology types (see Fig. 7 ). For example, in 60% of all possible cases using multimodal production tools, in 69% of all possible synchronous production tool cases, in 72% of all possible knowledge, organisation & sharing tool cases , and a striking 89% of all possible learning software cases and 100% of all possible MOOC cases. On the contrary, text-based tools were never used in combination with games or data analysis tools . However, studies using gaming tools were used in 67% of possible assessment tool cases as well. Assessment tools, however, constitute somewhat of a special case when studies using website creation tools are concerned, with only 7% of possible cases having employed assessment tools .

Co-occurrence of tools across the sample ( n = 243). Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organisation and sharing tools; DAT = data analysis tools; DST = digital storytelling tools; AT = assessment tools; SNT = social networking tools; SCT = synchronous collaboration tools; ML = mobile learning; VW = virtual worlds; LS = learning software; OL = online learning

In order to gain further understanding into how educational technology was used, we examined how often a combination of two variables should occur in the sample and how often it actually occurs, with deviations described as either ‘more than’ or ‘less than’ the expected value. This provides further insight into potential gaps in the literature, which can inform future research. For example, an analysis of educational technology tool usage amongst study populations (see Fig. 8 ) reveals that 5.0 more studies than expected looked at knowledge organisation & sharing for graduate students, but 5.0 studies less than expected investigated assessment tools for this group. By contrast, 5 studies more than expected researched assessment tools for unspecified study levels, and 4.3 studies less than expected employed knowledge organisation & sharing for undergraduate students.

Relative frequency of educational technology tools used according to study level Note. Abbreviations are explained in Fig. 7

Educational technology tools were also used differently from the expected pattern within various fields of study (see Fig. 9 ), most obviously for the cases of the top five tools. However, also for virtual worlds, found in 5.8 studies more in Health & Welfare than expected, and learning software, used in 6.4 studies more in Arts & Humanities than expected. In all other disciplines, learning software was used less often than assumed. Text-based tools were used more often than expected in fields of study that are already text-intensive, including Arts & Humanities, Education, Business, Administration & Law as well as Social Sciences - but less often than thought in fields such as Engineering, Health & Welfare, and Natural Sciences, Mathematics & Statistics. Multimodal production tools were used more often only in Health & Welfare, ICT and Natural Sciences, and less often than assumed across all other disciplines. Assessment tools deviated most clearly, with 11.9 studies more in Natural Sciences, Mathematics & Statistics than assumed, but with 5.2 studies less in both Education and Arts & Humanities.

Relative frequency of educational technology tools used according to field of study. Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organisation and sharing tools; DAT = data analysis tools; DST = digital storytelling tools; AT = assessment tools; SNT = social networking tools; SCT = synchronous collaboration tools; ML = mobile learning; VW = virtual worlds; LS = learning software; OL = online learning

In regards to mode of delivery and educational technology tools used, it is interesting to see that from the five top tools, except for assessment tools , all tools were used in face-to-face instruction less often than expected (see Fig. 10 ); from 1.6 studies less for website creation tools to 14.5 studies less for knowledge organisation & sharing tools . Assessment tools , however, were used in 3.3 studies more than expected - but less often than assumed (although moderately) in blended learning and distance education formats. Text-based tools, multimodal production tools and knowledge organisation & sharing tools were employed more often than expected in blended and distance learning, especially obvious in 13.1 studies more on t ext-based tools and 8.2 studies on knowledge organisation & sharing tools in distance education. Contrary to what one would perhaps expect, social networking tools were used in 4.2 studies less than expected for this mode of delivery.

Relative frequency of educational technology tools used according mode of delivery. Note. Tool abbreviations as per Figure 10. BL = Blended learning; DE = Distance education; F2F = Face-to-face; NS = Not stated

The findings of this study confirm those of previous research, with the most prolific countries being the US, UK, Australia, Taiwan and China. This is rather representative of the field, with an analysis of instructional design and technology research from 2007 to 2017 listing the most productive countries as the US, Taiwan, UK, Australia and Turkey (Bodily, Leary, & West, 2019 ). Likewise, an analysis of 40 years of research in Computers & Education (CAE) found that the US, UK and Taiwan accounted for 49.9% of all publications (Bond, 2018 ). By contrast, a lack of African research was apparent in this review, which is also evident in educational technology research in top tier peer-reviewed journals, with only 4% of articles published in the British Journal of Educational Technology ( BJET ) in the past decade (Bond, 2019b ) and 2% of articles in the Australasian Journal of Educational Technology (AJET) (Bond, 2018 ) hailing from Africa. Similar results were also found in previous literature and systematic reviews (see Table 1 ), which again raises questions of literature search and inclusion strategies, which will be further discussed in the limitations section.

Whilst other reviews of educational technology and student engagement have found studies to be largely STEM focused (Boyle et al., 2016 ; Li et al., 2017 ; Lundin et al., 2018 ; Nikou & Economides, 2018 ), this corpus features a more balanced scope of research, with the fields of Arts & Humanities (42 studies, 17.3%) and Education (42 studies, 17.3%) constituting roughly one third of all studies in the corpus - and Natural Sciences, Mathematics & Statistics, nevertheless, assuming rank 3 with 38 studies (15.6%). Beyond these three fields, further research is needed within underrepresented fields of study, in order to gain more comprehensive insights into the usage of educational technology tools (Kay & LeSage, 2009 ; Nikou & Economides, 2018 ).

Results of the systematic map further confirm the focus that prior educational technology research has placed on undergraduate students as the target group and participants in technology-enhanced learning settings e.g. (Cheston et al., 2013 ; Henrie et al., 2015 ). With the overwhelming number of 146 studies researching undergraduate students—compared to 33 studies on graduate students and 23 studies investigating both study levels—this also indicates that further investigation into the graduate student experience is needed. Furthermore, the fact that 41 studies do not report on the study level of their participants is an interesting albeit problematic fact, as implications might not easily be drawn for application to one’s own specific teaching context if the target group under investigation is not clearly denominated. A more precise reporting of participants’ details, as well as specification of the study context (country, institution, study level to name a few) is needed to transfer and apply study results to practice—being then able to take into account why some interventions succeed and others do not.

In line with other studies e.g. (Henrie et al., 2015 ), this review has also demonstrated that student engagement remains an under-theorised concept, that is often only considered fragmentally in research. Whilst studies in this review have often focused on isolated aspects of student engagement, their results are nevertheless interesting and valuable. However, it is important to relate these individual facets to the larger framework of student engagement, by considering how these aspects are connected and linked to each other. This is especially helpful to integrate research findings into practice, given that student engagement and disengagement are rarely one-dimensional; it is not enough to focus only on one aspect of engagement, but also to look at aspects that are adjacent to it (Pekrun & Linnenbrink-Garcia, 2012 ). It is also vital, therefore, that researchers develop and refine an understanding of student engagement, and make this explicit in their research (Appleton et al., 2008 ; Christenson et al., 2012 ).

Reflective of current conversations in the field of educational technology (Bond, 2019b ; Castañeda & Selwyn, 2018 ; Hew et al., 2019 ), as well as other reviews (Abdool et al., 2017 ; Hunsu et al., 2016 ; Kaliisa & Picard, 2017 ; Lundin et al., 2018 ), a substantial number of studies in this corpus did not have any theoretical underpinnings. Kaliisa and Picard ( 2017 ) argue that, without theory, research can result in disorganised accounts and issues with interpreting data, with research effectively “sit[ting] in a void if it’s not theoretically connected” (Kara, 2017 ), p. 56. Therefore, framing research in educational technology with a stronger theoretical basis, can assist with locating the “field’s disciplinary alignment” (Crook, 2019 ), p. 486 and further drive conversations forward.

The application of methods in this corpus was interesting in two ways. First, it is noticeable that quantitative studies are prevalent across the 243 articles in the sample. The number of studies employing qualitative research methods in the sample was comparatively low (56 studies as opposed to 84 mixed method studies and 103 quantitative studies). This is also reflected in the educational technology field at large, with a review of articles published in BJET and Educational Technology Research & Development (ETR&D) from 2002 to 2014 revealing that 40% of articles used quantitative methods, 26% qualitative and 13% mixed (Baydas, Kucuk, Yilmaz, Aydemir, & Goktas, 2015 ), and likewise a review of educational technology research from Turkey 1990–2011 revealed that 53% of articles used quantitative methods, 22% qualitative and 10% mixed methods (Kucuk, Aydemir, Yildirim, Arpacik, & Goktas, 2013 ). Quantitative studies primarily show that an intervention has worked or not when applied to e.g. a group of students in a certain setting as done in the study on using mobile apps on student performance in engineering education by Jou, Lin, and Tsai ( 2016 ), however, not all student engagement indicators can actually be measured in this way. The lower numbers of affective and cognitive engagement found in the studies in the corpus, reflect a wider call to the field to increase research on these two domains (Henrie et al., 2015 ; Joksimović et al., 2018 ; O’Flaherty & Phillips, 2015 ; Schindler et al., 2017 ). Whilst it is arguably more difficult to measure these two than behavioural engagement, the use of more rigorous and accurate surveys could be one possibility, as they can “capture unobservable aspects” (Henrie et al., 2015 ), p. 45 such as student feelings and information about the cognitive strategies they employ (Finn & Zimmer, 2012 ). However, they are often lengthy and onerous, or subject to the limitations of self-selection.

Whereas low numbers of qualitative studies researching student engagement and educational technology were previously identified in other student engagement and technology reviews (Connolly et al., 2012 ; Kay & LeSage, 2009 ; Lundin et al., 2018 ), it is studies like that by Lopera Medina ( 2014 ) in this sample, which reveal how people perceive this educational experience and the actual how of the process. Therefore, more qualitative and ethnographic measures should also be employed, such as student observations with thick descriptions, which can help shed light on the complexity of teaching and learning environments (Fredricks et al., 2004 ; Heflin, Shewmaker, & Nguyen, 2017 ). Conducting observations can be costly, however, both in time and money, so this is suggested in combination with computerised learning analytic data, which can provide measurable, objective and timely insight into how certain manifestations of engagement change over time (Henrie et al., 2015 ; Ma et al., 2015 ).

Whereas other results of this review have confirmed previous results in the field, the technology tools that were used in the studies and considered in their relation to student engagement in this corpus deviate. Whilst Henrie et al. ( 2015 ) found that the most frequently researched tools were discussion forums, general websites, LMS, general campus software and videos, the studies here focused predominantly on LMS, discussion forums, videos, recorded lectures and chat. Furthermore, whilst Schindler et al. ( 2017 ) found that digital games, web-conferencing software and Facebook were the most effective tools at enhancing student engagement, this review found that it was rather text-based tools , knowledge organisation & sharing , and multimodal production tools .

Limitations

During the execution of this systematic review, we tried to adhere to the method as rigorously as possible. However, several challenges were also encountered - some of which are addressed and discussed in another publication (Bedenlier, 2020b ) - resulting in limitations to this study. Four large, general educational research databases were searched, which are international in scope. However, by applying the criterion of articles published in English, research published on this topic in languages other than English was not included in this review. The same applies to research documented in, for example, grey literature, book chapters or monographs, or even articles from journals that are not indexed in the four databases searched. Another limitation is that only research published within the period 2007–2016 was investigated. Whilst we are cognisant of this being a restriction, we also think that the technological advances and the implications to be drawn from this time-frame relate more meaningfully to the current situation, than would have been the case for technologies used in the 1990s see (Bond, 2019b ). The sampling strategy also most likely accounts for the low number of studies from certain countries, e.g. in South America and Africa.

Studies included in this review represent various academic fields, and they also vary in the rigour with which they were conducted. Harden and Gough ( 2012 ) stress that the appraisal of quality and relevance of studies “ensure[s] that only the most appropriate, trustworthy and relevant studies are used to develop the conclusions of the review” (p. 154), we have included the criterion of being a peer reviewed contribution as a formal inclusion criterion from the beginning. In doing so, we reason that studies met a baseline of quality as applicable to published research in a specific field - otherwise they would not have been accepted for publication by the respective community. Finally, whilst the studies were diligently read and coded, and disagreements also discussed and reconciled, the human flaw of having overlooked or misinterpreted information provided in the individual articles cannot fully be excluded.

Finally, the results presented here provide an initial window into the overall body of research identified during the search, and further research is being undertaken to provide deeper insight into discipline specific use of technology and resulting student engagement using subsets of this sample (Bedenlier, 2020a ; Bond, M., Bedenlier, S., Buntins, K., Kerres, M., & Zawacki-Richter, O.: Facilitating student engagement through educational technology: A systematic review in the field of education, forthcoming).

Recommendations for future work and implications for practice

Whilst the evidence map presented in this article has confirmed previous research on the nexus of educational technology and student engagement, it has also elucidated a number of areas that further research is invited to address. Although these findings are similar to that of previous reviews, in order to more fully and comprehensively understand student engagement as a multi-faceted construct, it is not enough to focus only on indicators of engagement that can easily be measured, but rather the more complex endeavour of uncovering and investigating those indicators that reside below the surface. This also includes the careful alignment of theory and methodological design, in order to both adequately analyse the phenomenon under investigation, as well as contributing to the soundly executed body of research within the field of educational technology. Further research is invited in particular into how educational technology affects cognitive and affective engagement, whilst considering how this fits within the broader sociocultural framework of engagement (Bond, 2019a ). Further research is also invited into how educational technology affects student engagement within fields of study beyond Arts & Humanities, Education and Natural Sciences, Mathematics & Statistics, as well as within graduate level courses. The use of more qualitative research methods is particularly encouraged.

The findings of this review suggest that research gaps exist with particular combinations of tools, study levels and modes of delivery. With respect to study level, the use of assessment tools with graduate students, as well as knowledge organisation & sharing tools with undergraduate students, are topics researched far less than expected. The use of text-based tools in Engineering, Health & Welfare and Natural Sciences, Mathematics & Statistics, as well as the use of multimodal production tools outside of these disciplines, are also areas for future research, as is the use of assessment tools in the fields of Education and Arts & Humanities in particular.

With 109 studies in this systematic review using a blended learning design, this is a confirmation of the argument that online distance education and traditional face-to-face education are becoming increasingly more integrated with one another. Whilst this indicates that a lot of educators have made the move from face-to-face teaching to technology-enhanced learning, this also makes a case for the need for further professional development, in order to apply these tools effectively within their own teaching contexts, with this review indicating that further research is needed in particlar into the use of social networking tools in online/distance education. The question also needs to be asked, not only why the number of published studies are low within certain countries and regions, but also to enquire into the nature of why that is the case. This entails questioning the conditions under which research is being conducted, potentially criticising publication policies of major, Western-based journals, but also ultimately to reflect on one’s search strategy and research assumptions as a Western educator-researcher.

Based on the findings of this review, educators within higher education institutions are encouraged to use text-based tools , knowledge, organisation and sharing tools , and multimodal production tools in particular and, whilst any technology can lead to disengagement if not employed effectively, to be mindful that website creation tools (blogs and ePortfolios), social networking tools and assessment tools have been found to be more disengaging than engaging in this review. Therefore, educators are encouraged to ensure that students receive sufficient and ongoing training for any new technology used, including those that might appear straightforward, e.g. blogs, and that they may require extra writing support. Ensure that discussion/blog topics are interesting, that they allow student agency, and they are authentic to students, including the use of social media. Social networking tools that augment student professional learning networks are particularly useful. Educators should also be aware, however, that some students do not want to mix their academic and personal lives, and so the decision to use certain social platforms could be decided together with students.

Availability of data and materials

All data will be made publicly available, as part of the funding requirements, via https://www.researchgate.net/project/Facilitating-student-engagement-with-digital-media-in-higher-education-ActiveLeaRn .

The detailed search strategy, including the modified search strings according to the individual databases, can be retrieved from https://www.researchgate.net/project/Facilitating-student-engagement-with-digital-media-in-higher-education-ActiveLeaRn

The full code set can be retrieved from the review protocol at https://www.researchgate.net/project/Facilitating-student-engagement-with-digital-media-in-higher-education-ActiveLeaRn .

Abdool, P. S., Nirula, L., Bonato, S., Rajji, T. K., & Silver, I. L. (2017). Simulation in undergraduate psychiatry: Exploring the depth of learner engagement. Academic Psychiatry : the Journal of the American Association of Directors of Psychiatric Residency Training and the Association for Academic Psychiatry , 41 (2), 251–261. https://doi.org/10.1007/s40596-016-0633-9 .

Article Google Scholar

Alioon, Y., & Delialioğlu, Ö. (2017). The effect of authentic m-learning activities on student engagement and motivation. British Journal of Educational Technology , 32 , 121. https://doi.org/10.1111/bjet.12559 .

Alrasheedi, M., Capretz, L. F., & Raza, A. (2015). A systematic review of the critical factors for success of mobile learning in higher education (university students’ perspective). Journal of Educational Computing Research , 52 (2), 257–276. https://doi.org/10.1177/0735633115571928 .

Al-Sakkaf, A., Omar, M., & Ahmad, M. (2019). A systematic literature review of student engagement in software visualization: A theoretical perspective. Computer Science Education , 29 (2–3), 283–309. https://doi.org/10.1080/08993408.2018.1564611 .

Andrew, L., Ewens, B., & Maslin-Prothero, S. (2015). Enhancing the online learning experience using virtual interactive classrooms. Australian Journal of Advanced Nursing , 32 (4), 22–31.

Google Scholar

Antonenko, P. D. (2015). The instrumental value of conceptual frameworks in educational technology research. Educational Technology Research and Development , 63 (1), 53–71. https://doi.org/10.1007/s11423-014-9363-4 .

Appleton, J. J., Christenson, S. L., & Furlong, M. J. (2008). Student engagement with school: Critical conceptual and methodological issues of the construct. Psychology in the Schools , 45 (5), 369–386. https://doi.org/10.1002/pits.20303 .

Arnold, N., & Paulus, T. (2010). Using a social networking site for experiential learning: Appropriating, lurking, modeling and community building. Internet and Higher Education , 13 (4), 188–196. https://doi.org/10.1016/j.iheduc.2010.04.002 .

Astin, A. W. (1984). Student involvement: A developmental theory for higher education. Journal of College Student Development , 25 (4), 297–308.

Astin, A. W. (1999). Student involvement: A developmental theory for higher education. Journal of College Student Development , 40 (5), 518–529. https://www.researchgate.net/publication/220017441 (Original work published July 1984).

Atmacasoy, A., & Aksu, M. (2018). Blended learning at pre-service teacher education in Turkey: A systematic review. Education and Information Technologies , 23 (6), 2399–2422. https://doi.org/10.1007/s10639-018-9723-5 .

Azevedo, R. (2015). Defining and measuring engagement and learning in science: Conceptual, theoretical, methodological, and analytical issues. Educational Psychologist , 50 (1), 84–94. https://doi.org/10.1080/00461520.2015.1004069 .

Bandura, A. (1971). Social learning theory . New York: General Learning Press.

Barak, M. (2018). Are digital natives open to change? Examining flexible thinking and resistance to change. Computers & Education , 121 , 115–123. https://doi.org/10.1016/j.compedu.2018.01.016 .

Barak, M., & Levenberg, A. (2016). Flexible thinking in learning: An individual differences measure for learning in technology-enhanced environments. Computers & Education , 99 , 39–52. https://doi.org/10.1016/j.compedu.2016.04.003 .

Baron, P., & Corbin, L. (2012). Student engagement: Rhetoric and reality. Higher Education Research and Development , 31 (6), 759–772. https://doi.org/10.1080/07294360.2012.655711 .

Baydas, O., Kucuk, S., Yilmaz, R. M., Aydemir, M., & Goktas, Y. (2015). Educational technology research trends from 2002 to 2014. Scientometrics , 105 (1), 709–725. https://doi.org/10.1007/s11192-015-1693-4 .

Bedenlier, S., Bond, M., Buntins, K., Zawacki-Richter, O., & Kerres, M. (2020a). Facilitating student engagement through educational technology in higher education: A systematic review in the field of arts & humanities. Australasian Journal of Educational Technology , 36 (4), 27–47. https://doi.org/10.14742/ajet.5477 .

Bedenlier, S., Bond, M., Buntins, K., Zawacki-Richter, O., & Kerres, M. (2020b). Learning by Doing? Reflections on Conducting a Systematic Review in the Field of Educational Technology. In O. Zawacki-Richter, M. Kerres, S. Bedenlier, M. Bond, & K. Buntins (Eds.), Systematic Reviews in Educational Research (Vol. 45 , pp. 111–127). Wiesbaden: Springer Fachmedien Wiesbaden. https://doi.org/10.1007/978-3-658-27602-7_7 .

Ben-Eliyahu, A., Moore, D., Dorph, R., & Schunn, C. D. (2018). Investigating the multidimensionality of engagement: Affective, behavioral, and cognitive engagement across science activities and contexts. Contemporary Educational Psychology , 53 , 87–105. https://doi.org/10.1016/j.cedpsych.2018.01.002 .

Betihavas, V., Bridgman, H., Kornhaber, R., & Cross, M. (2016). The evidence for ‘flipping out’: A systematic review of the flipped classroom in nursing education. Nurse Education Today , 38 , 15–21. https://doi.org/10.1016/j.nedt.2015.12.010 .

Bigatel, P., & Williams, V. (2015). Measuring student engagement in an online program. Online Journal of Distance Learning Administration , 18 (2), 9.

Bodily, R., Leary, H., & West, R. E. (2019). Research trends in instructional design and technology journals. British Journal of Educational Technology , 50 (1), 64–79. https://doi.org/10.1111/bjet.12712 .

Boekaerts, M. (2016). Engagement as an inherent aspect of the learning process. Learning and Instruction , 43 , 76–83. https://doi.org/10.1016/j.learninstruc.2016.02.001 .

Bolden, B., & Nahachewsky, J. (2015). Podcast creation as transformative music engagement. Music Education Research , 17 (1), 17–33. https://doi.org/10.1080/14613808.2014.969219 .

Bond, M. (2018). Helping doctoral students crack the publication code: An evaluation and content analysis of the Australasian Journal of Educational Technology. Australasian Journal of Educational Technology , 34 (5), 168–183. https://doi.org/10.14742/ajet.4363 .

Bond, M., & Bedenlier, S. (2019a). Facilitating Student Engagement Through Educational Technology: Towards a Conceptual Framework. Journal of Interactive Media in Education , 2019 (1), 1-14. https://doi.org/10.5334/jime.528 .

Bond, M., Zawacki-Richter, O., & Nichols, M. (2019b). Revisiting five decades of educational technology research: A content and authorship analysis of the British Journal of Educational Technology. British Journal of Educational Technology , 50 (1), 12–63. https://doi.org/10.1111/bjet.12730 .

Bouta, H., Retalis, S., & Paraskeva, F. (2012). Utilising a collaborative macro-script to enhance student engagement: A mixed method study in a 3D virtual environment. Computers & Education , 58 (1), 501–517. https://doi.org/10.1016/j.compedu.2011.08.031 .

Bower, M. (2015). A typology of web 2.0 learning technologies . EDUCAUSE Digital Library Retrieved 20 June 2019, from http://www.educause.edu/library/resources/typology-web-20-learning-technologies .

Bower, M. (2016). Deriving a typology of web 2.0 learning technologies. British Journal of Educational Technology , 47 (4), 763–777. https://doi.org/10.1111/bjet.12344 .

Boyle, E. A., Connolly, T. M., Hainey, T., & Boyle, J. M. (2012). Engagement in digital entertainment games: A systematic review. Computers in Human Behavior , 28 (3), 771–780. https://doi.org/10.1016/j.chb.2011.11.020 .

Boyle, E. A., Hainey, T., Connolly, T. M., Gray, G., Earp, J., Ott, M., … Pereira, J. (2016). An update to the systematic literature review of empirical evidence of the impacts and outcomes of computer games and serious games. Computers & Education , 94 , 178–192. https://doi.org/10.1016/j.compedu.2015.11.003 .

Broadbent, J., & Poon, W. L. (2015). Self-regulated learning strategies & academic achievement in online higher education learning environments: A systematic review. The Internet and Higher Education , 27 , 1–13. https://doi.org/10.1016/j.iheduc.2015.04.007 .

Brunton, G., Stansfield, C., & Thomas, J. (2012). Finding relevant studies. In D. Gough, S. Oliver, & J. Thomas (Eds.), An introduction to systematic reviews , (pp. 107–134). Los Angeles: Sage.

Bryman, A. (2007). The research question in social research: What is its role? International Journal of Social Research Methodology , 10 (1), 5–20. https://doi.org/10.1080/13645570600655282 .

Bulu, S. T., & Yildirim, Z. (2008). Communication behaviors and trust in collaborative online teams. Educational Technology & Society , 11 (1), 132–147.

Bundick, M., Quaglia, R., Corso, M., & Haywood, D. (2014). Promoting student engagement in the classroom. Teachers College Record , 116 (4) Retrieved from http://www.tcrecord.org/content.asp?contentid=17402 .

Castañeda, L., & Selwyn, N. (2018). More than tools? Making sense of the ongoing digitizations of higher education. International Journal of Educational Technology in Higher Education , 15 (1), 211. https://doi.org/10.1186/s41239-018-0109-y .

Chen, P.-S. D., Lambert, A. D., & Guidry, K. R. (2010). Engaging online learners: The impact of web-based learning technology on college student engagement. Computers & Education , 54 (4), 1222–1232. https://doi.org/10.1016/j.compedu.2009.11.008 .