
Chapter 26: Rigour

Darshini Ayton

Learning outcomes

Upon completion of this chapter, you should be able to:

- Understand the concepts of rigour and trustworthiness in qualitative research.

- Describe strategies for dependability, credibility, confirmability and transferability in qualitative research.

- Define reflexivity and describe types of reflexivity.

What is rigour?

In qualitative research, rigour, or trustworthiness, refers to how researchers demonstrate the quality of their research. 1, 2 Rigour is an umbrella term for several strategies and approaches that recognise how multiple realities, for example those of the researcher during data collection and analysis and those of the participant, influence qualitative research. The research process is shaped by multiple elements, including research skills, the social and research environment and the community setting. 2

Research is considered rigorous or trustworthy when members of the research community are confident in the study’s methods, the data and its interpretation. 3 As mentioned in Chapters 1 and 2, quantitative and qualitative research are founded on different research paradigms and, hence, quality in research cannot be addressed in the same way for both types of research studies. Table 26.1 provides a comparative overview of how quantitative and qualitative research approach quality.

Table 26.1: Comparison of quantitative and qualitative approaches to ensuring quality in research

Below is an overview of the main approaches to rigour in qualitative research. For each of the approaches, examples of how rigour was demonstrated are provided from the author’s PhD thesis.

Approaches to dependability

Dependability requires the researcher to provide an account of changes to the research process and setting. 3 The main approach to dependability is an audit trail.

- Audit trail – the researcher records or takes notes on the conduct of the research and the process of reaching conclusions from the data. The audit trail includes information on the data collection and data analysis, including decision-making and interpretations of the data that influence the study’s results. 8, 9

The interview questions for this study evolved as the study progressed, and accordingly, the process was iterative. I spent 12 months collecting data, and as my understanding and responsiveness to my participants and to the culture and ethos of the various churches developed, so did my line of questioning. For example, in the early interviews for phase 2, I included questions regarding the qualifications a church leader might look for in hiring someone to undertake health promotion activities. This question was dropped after the first couple of interviews, as it was clear that church leaders did not necessarily view their activities as health promoting and therefore did not perceive the relevance of this question. By ‘being church’, they were health promoting, and therefore activities that were health promoting were not easily separated from other activities that were part of the core mission of the church. 10 (pp93–4)
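An audit trail of the kind described above can be kept as a simple dated log of decisions and their rationale. The sketch below is only an illustration in Python, not the method used in the thesis: the `AuditEntry` fields, the helper `to_json_lines`, the dates and the second entry are all hypothetical.

```python
from dataclasses import dataclass, asdict
from datetime import date
import json


@dataclass
class AuditEntry:
    """One dated record in a hypothetical audit trail."""
    when: date       # date the decision was made (placeholder values below)
    stage: str       # e.g. "data collection" or "data analysis"
    decision: str    # what was changed or decided
    rationale: str   # why the decision was made


def to_json_lines(entries):
    """Serialise entries in date order, one JSON object per line."""
    ordered = sorted(entries, key=lambda e: e.when)
    return "\n".join(
        json.dumps({**asdict(e), "when": e.when.isoformat()}) for e in ordered
    )


# Illustrative entries only; dates and the analysis entry are invented.
trail = [
    AuditEntry(
        when=date(2010, 9, 1),
        stage="data analysis",
        decision="Merged two overlapping codes",
        rationale="Both codes captured the same idea in the transcripts",
    ),
    AuditEntry(
        when=date(2010, 3, 1),
        stage="data collection",
        decision="Dropped the interview question on staff qualifications",
        rationale="Church leaders did not see the question as relevant",
    ),
]

print(to_json_lines(trail))
```

Writing one record per decision, with the reason alongside it, lets a reader follow how the questions and interpretations changed over the course of the study.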

Approaches to credibility

Credibility requires the researcher to demonstrate the truth or confidence in the findings. The main approaches to credibility include triangulation, prolonged engagement, persistent observation, negative case analysis and member checking. 3

- Triangulation – the assembly of data and interpretations from multiple methods (methods triangulation), researchers (researcher triangulation), theories (theory triangulation) and data sources (data triangulation, for example across different participant groups). 9 Refer to Chapter 28 for a detailed discussion of this process.

- Prolonged engagement – the requirement for researchers to spend sufficient time with participants and/or within the research context to familiarise themselves with the research setting, to build trust and rapport with participants and to recognise and correct any misinformation. 9

Prolonged engagement with churches was also achieved through the case study phase as the ten case study churches were involved in more than one phase of data collection. These ten churches were the case studies in which significant time was spent conducting interviews and focus groups, and attending activities and programs. Subsequently, there were many instances where I interacted with the same people on more than one occasion, thereby facilitating the development of interactive and deeper relationships with participants. 10 (pp94–5)

- Persistent observation – the identification of characteristics and elements that are most relevant to the problem or issue under study, and upon which the research will focus in detail. 9

In the following chapters, I present my analysis of the world of churches in which I was immersed as I conducted fieldwork. I describe the processes of church practice and action, and explore how this can be conceptualised into health promotion action. 10 (p97)

- Negative case analysis – the process of finding and discussing data that contradict the study’s main findings. Negative case analysis demonstrates that both shared and divergent perspectives have been examined in detail, which enhances the quality of the interpretation of the data.

Although I did not use negative case selection, the Catholic churches in this study acted as examples of the ‘low engagement’ 10 (p97)

- Member checking – the presentation of data analysis, interpretations and conclusions of the research to members of the participant groups. This enables participants or people with shared identity with the participants to provide their perspectives on the research. 9

Throughout my candidature – during data collection and analysis, and in the construction of my results chapters – I engaged with a number of Christians, both paid church staff members and volunteers, to test my thoughts and concepts. These people were not participants in the study, but they were embedded in the cultural and social context of churches in Victoria. They were able to challenge and also affirm my thinking and so contributed to a process of member checking. 10 (p96)

Approaches to confirmability

Confirmability is demonstrated by grounding the results in the data from participants. 3 This can be achieved through the use of quotes, specifying the number of participants and data sources and providing details of the data collection.

- Quotes from participants are used to demonstrate that the themes are generated from the data. The results section of the thesis chapters commences with a story based on the field notes or recordings, with extensive quotes from participants presented throughout. 10

- The number of participants in the study provides the context for where the data is ‘sourced’ from for the results and interpretation. Table 26.2 is reproduced with permission from the author’s thesis and details the data sources for the project. This also contributes to establishing how triangulation across data sources and methods was achieved.

- Details of data collection – Table 26.2 provides detailed information about the processes of data collection, including dates and locations, but the duration of each research encounter was not specified.

Table 26.2: Data sources for the author’s PhD research project

Approaches to transferability

To enable the transferability of qualitative research, researchers need to provide information about the context and the setting. A key approach for transferability is thick description. 6

- Thick description – detailed explanations and descriptions of the research are provided, including the research questions, the research setting, contextual factors and changes to the research setting. 9

I chose to include the Catholic Church because it is the largest Christian group in Australia and is an example of a traditional church. The Protestant group were represented through the Uniting, Anglican, Baptist and Church of Christ denominations. The Uniting Church denomination is unique to Australia and was formed in 1977 through the merging of the Methodist, Presbyterian and Congregationalist denominations. The Church of Christ denomination was chosen to represent a contemporary less hierarchical denomination in comparison to the other Protestant denominations. The last group, the Salvation Army, was chosen because of its high profile in social justice and social welfare, therefore offering different perspectives on the role and activities of the church in health promotion. 10 (pp82–3)

What is reflexivity?

Reflexivity is the process in which researchers engage to explore and explain how their subjectivity (or bias) has influenced the research. 12 Researchers engage in reflexive practices to ensure and demonstrate rigour, quality and, ultimately, trustworthiness in their research. 13 The researcher is the instrument of data collection and data analysis, and hence awareness of what has influenced their approach to and conduct of the research – and the ability to articulate these influences – is vital in the creation of knowledge. One important element is researcher positionality (see Chapter 27), which acknowledges the characteristics, interests, beliefs and personal experiences of the researcher and how these influence the research process. Table 26.3 outlines different types of reflexivity, with examples from the author’s thesis.

Table 26.3: Types of reflexivity

The quality of qualitative research is measured through the rigour or trustworthiness of the research, demonstrated through a range of strategies in the processes of data collection, analysis, reporting and reflexivity.

References

1. Chowdhury IA. Issue of quality in qualitative research: an overview. Innovative Issues and Approaches in Social Sciences. 2015;8(1):142-162. doi:10.12959/issn.1855-0541.IIASS-2015-no1-art09

2. Cypress BS. Rigor or reliability and validity in qualitative research: perspectives, strategies, reconceptualization, and recommendations. Dimens Crit Care Nurs. 2017;36(4):253-263. doi:10.1097/DCC.0000000000000253

3. Connelly LM. Trustworthiness in qualitative research. Medsurg Nurs. 2016;25(6):435-6.

4. Golafshani N. Understanding reliability and validity in qualitative research. Qual Rep. 2003;8(4):597-607. Accessed September 18, 2023. https://nsuworks.nova.edu/tqr/vol8/iss4/6/

5. Yilmaz K. Comparison of quantitative and qualitative research traditions: epistemological, theoretical, and methodological differences. Eur J Educ. 2013;48(2):311-325. doi:10.1111/ejed.12014

6. Shenton AK. Strategies for ensuring trustworthiness in qualitative research projects. Education for Information. 2004;22:63-75. Accessed September 18, 2023. https://content.iospress.com/articles/education-for-information/efi00778

7. Varpio L, O’Brien B, Rees CE, Monrouxe L, Ajjawi R, Paradis E. The applicability of generalisability and bias to health professions education’s research. Med Educ. 2021;55(2):167-173. doi:10.1111/medu.14348

8. Carcary M. The Research Audit Trail: Methodological guidance for application in practice. Electronic Journal of Business Research Methods. 2020;18(2):166-177. doi:10.34190/JBRM.18.2.008

9. Korstjens I, Moser A. Series: Practical guidance to qualitative research. Part 4: Trustworthiness and publishing. Eur J Gen Pract. 2018;24(1):120-124. doi:10.1080/13814788.2017.1375092

10. Ayton D. ‘From places of despair to spaces of hope’ – the local church and health promotion in Victoria. PhD. Monash University; 2013. https://figshare.com/articles/thesis/_From_places_of_despair_to_spaces_of_hope_-_the_local_church_and_health_promotion_in_Victoria/4628308/1

11. Hanson A. Negative case analysis. In: Matthes J, ed. The International Encyclopedia of Communication Research Methods. John Wiley & Sons, Inc.; 2017. doi:10.1002/9781118901731.iecrm0165

12. Olmos-Vega FM. A practical guide to reflexivity in qualitative research: AMEE Guide No. 149. Med Teach. 2023;45(3):241-251. doi:10.1080/0142159X.2022.2057287

13. Dodgson JE. Reflexivity in qualitative research. J Hum Lact. 2019;35(2):220-222. doi:10.1177/08903344198309

Qualitative Research – a practical guide for health and social care researchers and practitioners Copyright © 2023 by Darshini Ayton is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License , except where otherwise noted.


A Guide To Qualitative Rigor In Research

Advances in technology have made quantitative data more accessible than ever before; but in the human-centric discipline of nursing, qualitative research still brings vital learnings to the health care industry. It is sometimes difficult to derive viable insights from qualitative research; but in the article below, the authors identify four criteria for developing acceptable qualitative studies.

Qualitative rigor in research explained

Qualitative rigor. It’s one of those terms you either understand or you don’t. And it seems that many of us fall into the latter of those two categories. From novices to experienced qualitative researchers, qualitative rigor is a concept that can be challenging. However, it also happens to be one of the most critical aspects of qualitative research, so it’s important that we all start getting to grips with what it means.

Rigor, in qualitative terms, is a way to establish trust or confidence in the findings of a research study. It allows the researcher to establish consistency in the methods used over time. It also provides an accurate representation of the population studied. As a nurse, you want to build your practice on the best evidence you can and to do so you need to have confidence in those research findings.

This article will look in more detail at the unique components of qualitative research in relation to qualitative rigor. These are: truth-value (credibility); applicability (transferability); consistency (dependability); and neutrality (confirmability).

Credibility

Credibility allows others to recognize the experiences contained within the study through the interpretation of participants’ experiences. In order to establish credibility, a researcher must review the individual transcripts, looking for similarities within and across all participants.

A study is considered credible when it presents an interpretation of an experience in such a way that people sharing that experience immediately recognize it. Examples of strategies used to establish credibility include:

- Reflexivity

- Member checking (aka informant feedback)

- Peer examination

- Peer debriefing

- Prolonged time spent with participants

- Using the participants’ words in the final report

Transferability

The ability to transfer research findings or methods from one group to another is called transferability in qualitative research; it is the counterpart of external validity in quantitative research. One way of establishing transferability is to provide a dense description of the population studied by describing the demographics and geographic boundaries of the study.

Ways in which transferability can be applied by researchers include:

- Using the same data collection methods with different demographic groups or geographical locations

- Giving a range of experiences on which the reader can build interventions and understanding to decide whether the research is applicable to practice

Dependability

Related to reliability in quantitative terms, dependability occurs when another researcher can follow the decision trail used by the researcher. This trail is achieved by:

- Describing the specific purpose of the study

- Discussing how and why the participants were selected for the study

- Describing how the data was collected and how long collection lasted

- Explaining how the data was reduced or transformed for analysis

- Discussing the interpretation and presentation of the findings

- Explaining the techniques used to determine the credibility of the data

Strategies used to establish dependability include:

- Having peers participate in the analysis process

- Providing a detailed description of the research methods

- Conducting a step-by-step repetition of the study to identify similarities in results or to enhance findings

Confirmability

Confirmability occurs once credibility, transferability and dependability have been established. Qualitative research must be reflective, maintaining a sense of awareness and openness to the study and results. The researcher needs a self-critical attitude, taking into account how his or her preconceptions affect the research.

Techniques researchers use to achieve confirmability include:

- Taking notes regarding personal feelings, biases and insights immediately after an interview

- Following, rather than leading, the direction of interviews by asking for clarifications when needed

Reflective research produces new insights, which lead the reader to trust the credibility of the findings and applicability of the study.


Adapted from: Thomas, E. and Magilvy, J. K. (2011), Qualitative Rigor or Research Validity in Qualitative Research. Journal for Specialists in Pediatric Nursing, 16: 151–155. [WWW document]. URL http://onlinelibrary.wiley.com/doi/10.1111/j.1744-6155.2011.00283.x [accessed 2 July 2014]


The Ultimate Guide to Qualitative Research - Part 3: Presenting Qualitative Data


Transparency and rigor in research

Qualitative researchers face particular challenges in convincing their target audience of the value and credibility of their analysis. Numbers and quantifiable concepts in quantitative studies are easier to understand than their counterparts in qualitative methods. Think about how easy it is to make conclusions about the value of items at a store based on their prices, then imagine trying to compare those items based on their design, function, and effectiveness.

The goal of qualitative data analysis is to allow a qualitative researcher and their audience to make determinations about the value and impact of the research. Still, before the audience can reach these determinations, the process of conducting research that produces the qualitative analysis must first be perceived as credible. It is the responsibility of the researcher to persuade their audience that their data collection process and subsequent analysis are rigorous.

Qualitative rigor refers to the meticulousness, consistency, and transparency of the research. It is the application of systematic, disciplined, and stringent methods to ensure the credibility, dependability, confirmability, and transferability of research findings. In qualitative inquiry, these attributes ensure the research accurately reflects the phenomenon it is intended to represent, that its findings can be used by others, and that its processes and results are open to scrutiny and validation.

Credibility

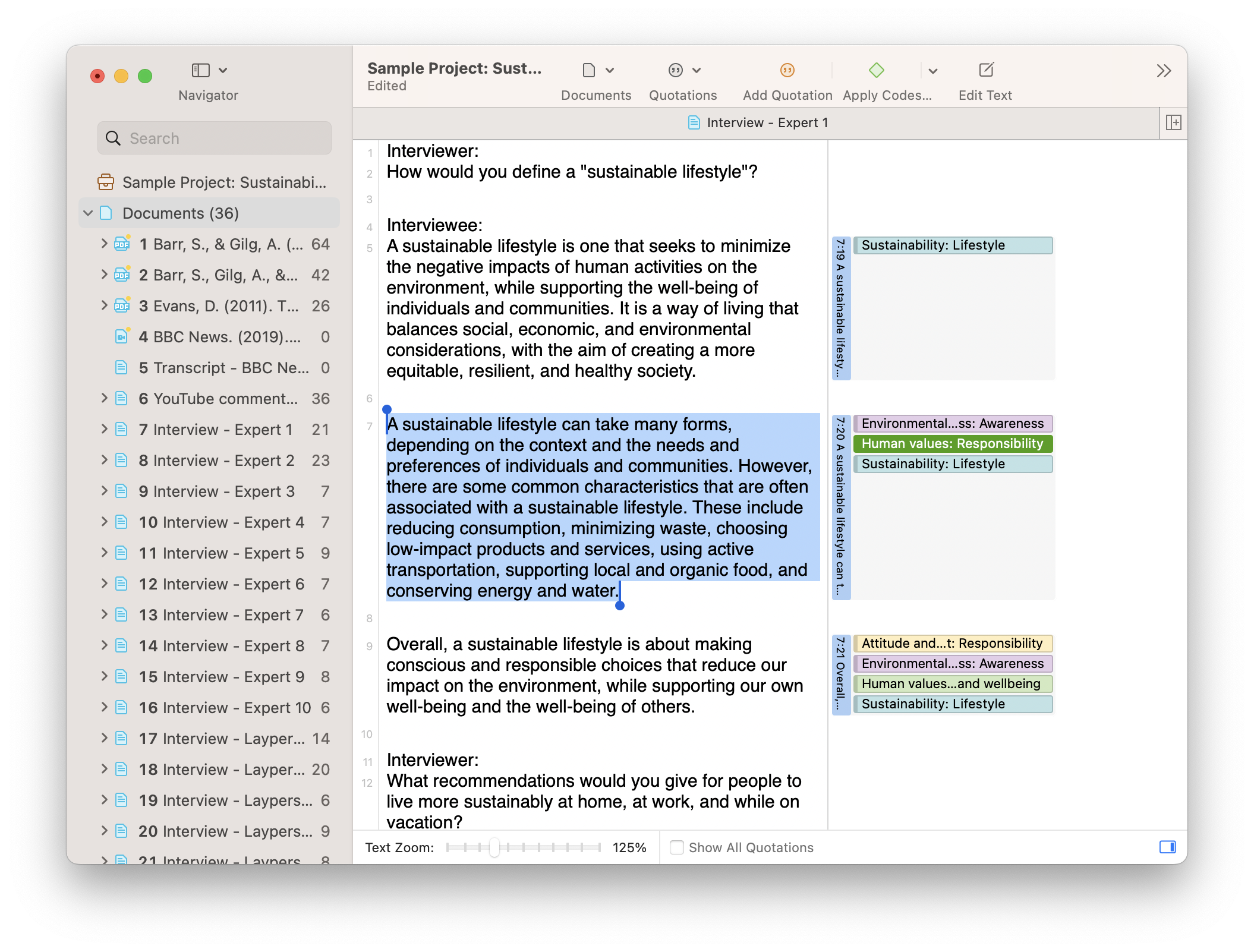

Credibility refers to the extent to which the results accurately represent the participants' experiences. To achieve credibility, qualitative researchers, especially those conducting research on human research participants, employ a number of strategies to bolster the credibility of the data and the subsequent analysis. Prolonged engagement and persistent observation, for example, involve spending significant time in the field to gain a deep understanding of the research context and to continuously observe the phenomenon under study. Peer debriefing involves discussing the research and findings with knowledgeable peers to assess their validity. Member checking involves sharing the findings with the research participants to confirm that they accurately reflect their experiences. These and other methods ensure an abundantly rich data set from which the researcher describes in vivid detail the phenomenon under study, and which other scholars can audit to challenge the strength of the findings if necessary.

Dependability

Dependability refers to the consistency of the research process such that it is logical and clearly documented. It addresses the potential for others to build on the research through subsequent studies. To achieve dependability, researchers should provide a 'decision trail' detailing all decisions made during the course of the study. This allows others to understand how conclusions were reached and to replicate the study if necessary. Ultimately, the documentation of a researcher's process while collecting and analyzing data provides a clear record not only for other scholars to consider but also for those conducting the study and refining their methods for future research.

Confirmability

Confirmability requires the research findings to be directly linked to the data. While it is important to acknowledge researcher positionality (e.g., through reflexive memos) in social science research, researchers still have a responsibility to make assertions and identify insights rooted in the data for the resulting knowledge to be considered confirmable. By transparently communicating how the data was analyzed and conclusions were reached, researchers can allow their audience to perform a sort of audit of the study. This practice helps remind researchers of the importance of ensuring that there are sufficient connections between the raw data collected from the field and the findings that are presented as consequential developments of theory.

Transferability

Transferability refers to the applicability of the research findings in other contexts or with other participants. While dependability is more relevant to the application of research within its own situated context, transferability is determined by how findings generated in one set of circumstances (e.g., a geographic location or a culture) apply to another set of circumstances. This is a significant challenge because, given the focus on context in qualitative research, researchers usually can't claim that their findings are universally applicable. Instead, they provide a rich, detailed description of the context and participants, enabling others to determine whether the findings may apply to their own context. Providing that level of detail depends on transparency in research, which is discussed later in this section.

Reflexivity

The concept of reflexivity also contributes to rigor in qualitative research. Reflexivity involves the researcher critically reflecting on the research and their own role in it, including how their biases, values, experiences, and presence may influence the research. Any discussion of reflexivity necessitates a recognition that knowledge about the social world is never objective, but always from a particular perspective. Reflexivity begins with an acknowledgment that those who conduct qualitative research do so while perceiving the social world through an analytical lens that is unique and distinct from that of others. As subjectivity is an inevitable circumstance in any research involving humans as sources or instruments of data collection, the researcher is responsible for providing a thick description of the environment in which they are collecting data as well as a detailed description of their own place in the research. Subjectivity can be considered an asset, whereby researchers acknowledge and indicate how their positionality informed the analysis in ways that were insightful and productive.

Triangulation

Triangulation is another key aspect of rigor, referring to the use of multiple data sources, researchers, or methods to cross-check and validate findings. This can increase the depth and breadth of the research, improve its quality, and decrease the likelihood of researcher bias influencing the findings. Particularly given the complexity and dynamic nature of the social world, one method or one analytical approach will seldom be sufficient in holistically understanding the phenomenon or concept under study. Instead, a researcher benefits from examining the world through multiple methods and multiple analytical approaches, not to garner perfectly consistent results but to gather as much rich detail as possible to strengthen the analysis and subsequent findings.
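As a rough illustration of cross-checking across data sources, the sketch below tallies which kinds of source support each theme and lists themes that rest on a single kind of source. It is a hypothetical Python example: the theme names, source labels and function names are invented for illustration and do not come from any particular study or software package.

```python
from collections import defaultdict

# Hypothetical coded excerpts as (theme, data source type) pairs.
coded_excerpts = [
    ("belonging", "interview"),
    ("belonging", "focus group"),
    ("belonging", "observation"),
    ("practical support", "interview"),
    ("practical support", "interview"),
]


def sources_by_theme(excerpts):
    """Map each theme to the set of source types in which it appears."""
    themes = defaultdict(set)
    for theme, source in excerpts:
        themes[theme].add(source)
    return dict(themes)


def single_source_themes(excerpts):
    """Return, in alphabetical order, themes seen in only one source type."""
    by_theme = sources_by_theme(excerpts)
    return sorted(t for t, sources in by_theme.items() if len(sources) < 2)


for theme, sources in sources_by_theme(coded_excerpts).items():
    print(theme, "->", sorted(sources))
print("Single-source themes:", single_source_themes(coded_excerpts))
```

A tally like this is only a prompt for the researcher to look again at thinly supported themes; it does not by itself show that findings are consistent or valid.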

In qualitative research, rigor is not about seeking a single truth or reality, but rather about being thorough, transparent, and critical in the research to ensure the integrity and value of the study. Rigor can be seen as a commitment to best practices in research, with researchers consistently questioning their methods and findings, checking for alternative interpretations, and remaining open to critique and revision. This commitment to rigor helps ensure that qualitative research provides valid, reliable, and meaningful contributions to our understanding of the complex social world.

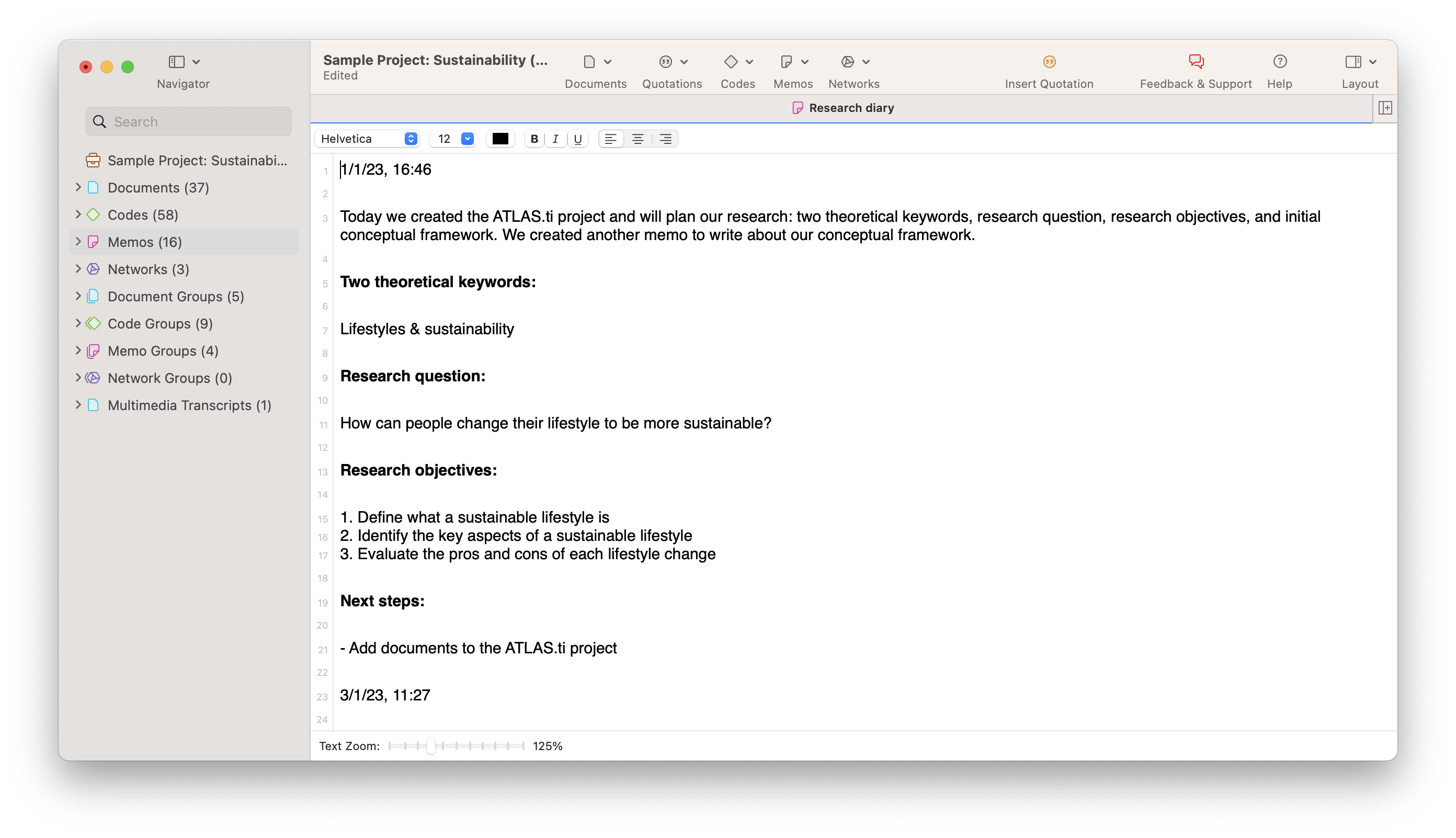


When you read a story in a newspaper or watch a news report on television, do you ever get the feeling that you may not be receiving all the information or context necessary to understand the overarching messages being conveyed? Perhaps a salesperson is trying to convince you to buy something from them by explaining all the benefits of a product but doesn't tell you how they know these benefits are real. When you're choosing a movie to watch, you might look at a critic's review or a score in an online movie database without knowing how that review or score was determined.

In all of these situations, it is easier to trust the information presented to you if there is a rigorous analysis process behind that information and if that process is explicitly detailed. The same is true for qualitative research results, making transparency a key element in qualitative research methodologies. Transparency is a fundamental aspect of rigor in qualitative research. It involves the clear, detailed, and explicit documentation of all stages of the research process. This allows other researchers to understand, evaluate, transfer, and build upon the study. The key aspects of transparency in qualitative research include methodological transparency, analytical transparency, and reflexive transparency.

Methodological transparency involves providing a comprehensive description of the research methods and procedures used in the study. This includes detailing the research design, sampling strategy, data collection methods, and ethical considerations. For example, researchers should thoroughly describe how participants were selected, how and where data were collected (e.g., interviews, focus groups, observations), and how ethical issues such as consent, confidentiality, and potential harm were addressed. They should also clearly articulate the theoretical and conceptual frameworks that guided the study. Methodological transparency allows other researchers to understand how the study was conducted and assess its trustworthiness.

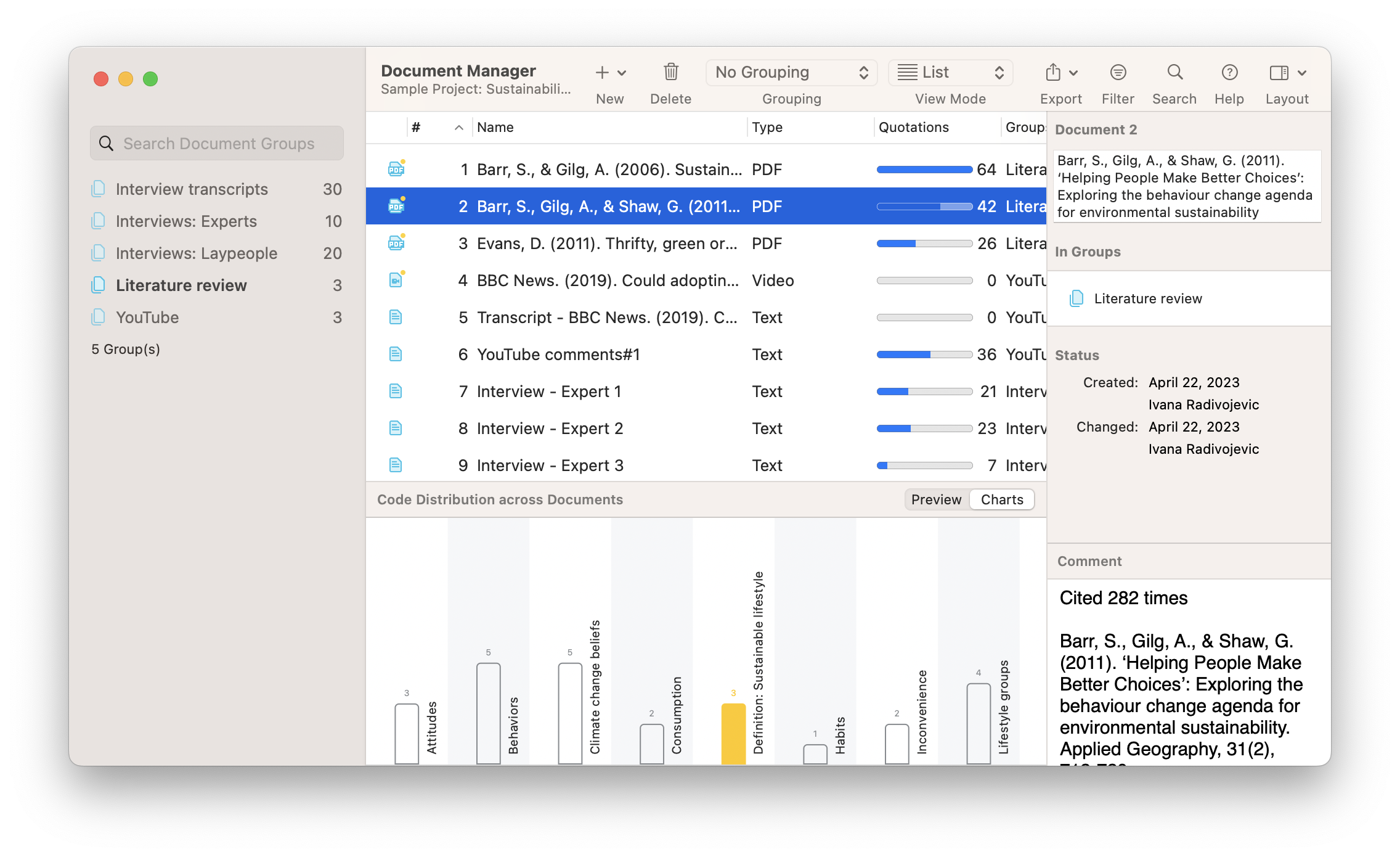

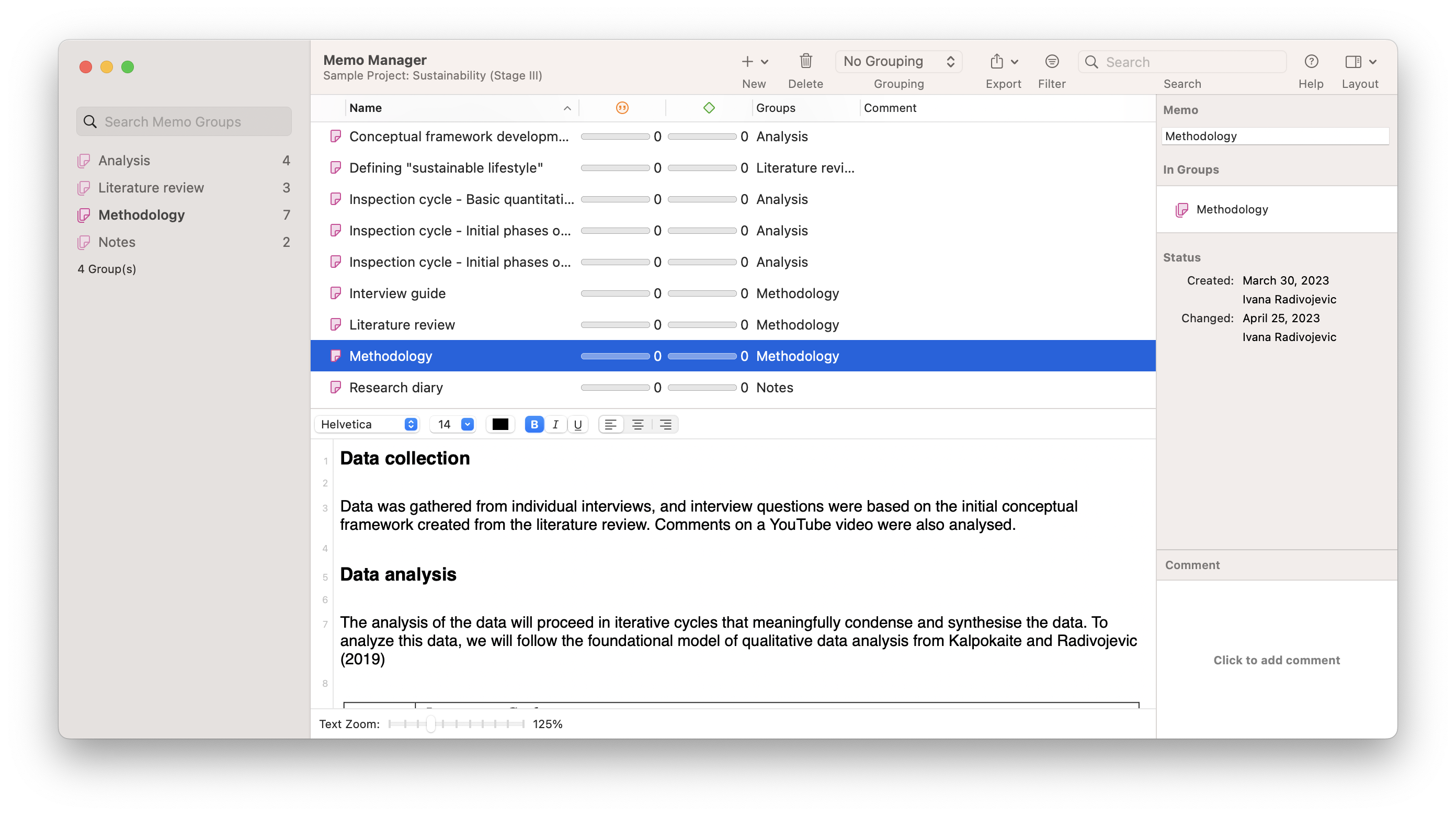

Analytical transparency refers to the clear and detailed documentation of the data analysis process. This involves explaining how the raw data were transformed into findings, including the coding process, theme/category development, and interpretation of results. Researchers should describe the specific analytical strategies they used, such as thematic analysis, grounded theory, or discourse analysis. They should provide evidence to support their findings, such as direct quotes from participants. They may also describe any software they used to assist with analyzing data. Analytical transparency allows other researchers to understand how the findings were derived and assess their credibility and confirmability.

Reflexive transparency involves the researcher reflecting on and disclosing their own role in the research, including their potential biases, assumptions, and influences. This includes recognizing and discussing how the researcher's background, beliefs, and interactions with participants may have shaped the data collection and analysis. Reflexive transparency may be achieved through the use of a reflexivity journal, where the researcher regularly records their thoughts, feelings, and reactions during research. This aspect of transparency ensures that the researcher is open about their subjectivity and allows others to assess the potential impact of the researcher's positionality on the findings.

Transparency in qualitative research is essential for maintaining rigor, trustworthiness, and ethical integrity. By being transparent, researchers allow their work to be scrutinized, critiqued, and improved upon, contributing to the ongoing development and refinement of knowledge in their field.

Rigorous, trustworthy research is research that applies the appropriate research tools to meet the stated objectives of the investigation. For example, to determine if an exploratory investigation was rigorous, the investigator would need to answer a series of methodological questions: Do the data collection tools produce appropriate information for the level of precision required in the analysis? Do the tools maximize the chance of identifying the full range of what there is to know about the phenomenon? To what degree are the collection techniques likely to generate the appropriate level of detail needed for addressing the research question(s)? To what degree do the tools maximize the chance of producing data with discernible patterns?

Once the data are collected, to what degree are the analytic techniques likely to ensure the discovery of the full range of relevant and salient themes and topics? To what degree do the analytic strategies maximize the potential for finding relationships among themes and topics? What checks are in place to ensure that the patterns and models discovered are relevant to the research question? Finally, what standards of evidence are required to assure readers that results are supported by the data?

The clear challenge is to identify what questions are most important for establishing research rigor (trustworthiness) and to provide examples of how such questions could be answered for those using qualitative data. Rigorous research must be both transparent and explicit; in other words, researchers need to be able to describe to their colleagues and their audiences what they did (or plan to do) in clear, simple language. Much of the confusion that surrounds qualitative data collection and analysis techniques comes from practitioners who shroud their behaviors in mystery and jargon. For example, clearly describing how themes are identified, how codebooks are built and applied, and how models are induced helps to bring more rigor to qualitative research.

Researchers must also become more familiar with the broad range of methodological techniques available, such as content analysis, grounded theory, and discourse analysis. Cross-fertilization across methodological traditions can also be extremely valuable for generating meaningful understanding, rather than attacking all problems with the same type of methodological tool.

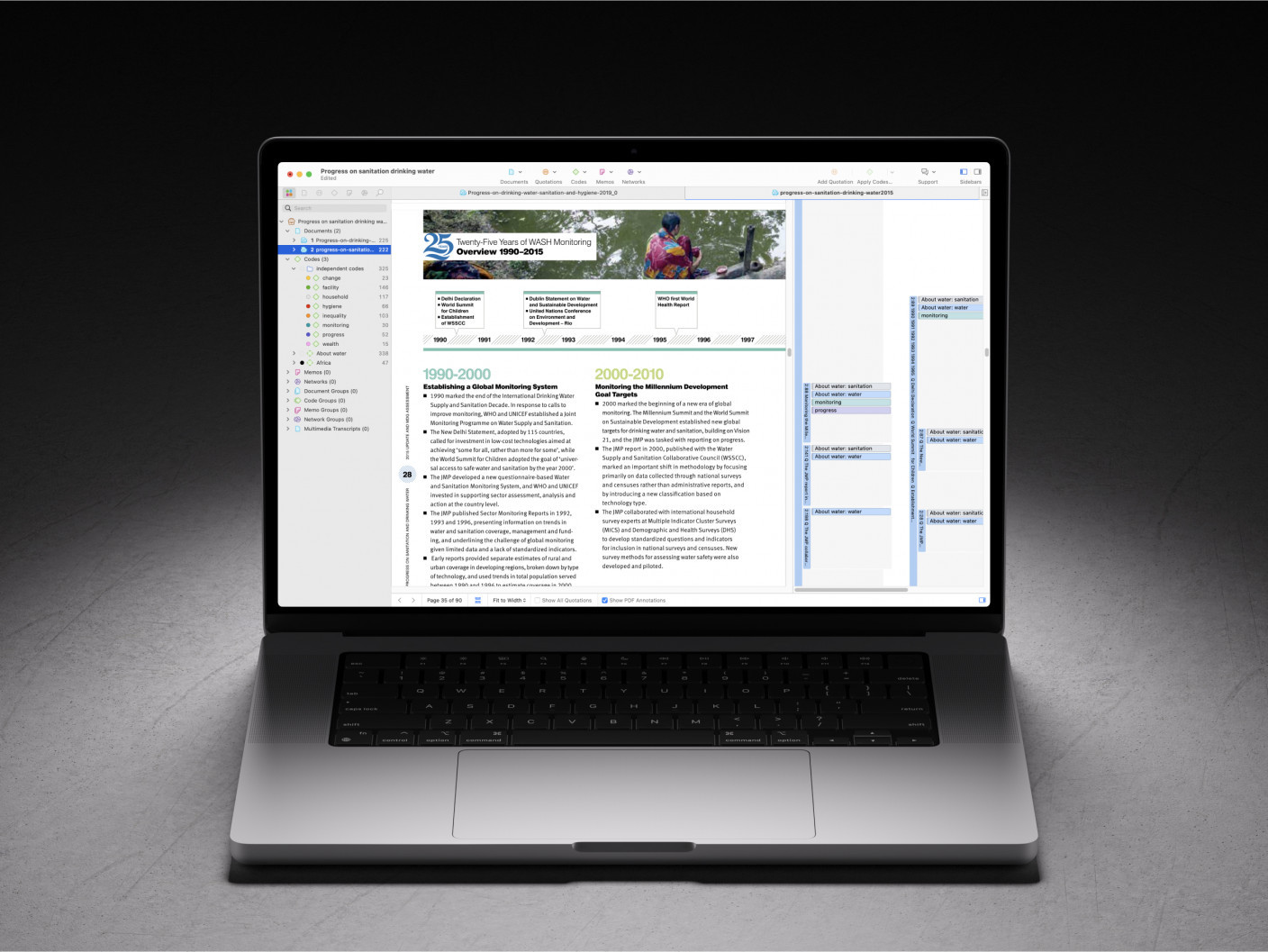

The introduction of methodologically neutral and highly flexible qualitative analysis software like ATLAS.ti can be extremely helpful. It is well suited both to supporting interdisciplinary cross-pollination and to building trust in the presented results. By allowing researchers to combine the source material and their findings in a structured, interactive platform while producing both quantifiable reports and intuitive visual renderings of their results, ATLAS.ti adds new levels of trustworthiness to qualitative research. Moreover, it permits researchers to apply multiple approaches to their research, to collaborate across philosophical boundaries, and thus to significantly enhance the level of rigor in qualitative research. Dedicated research software like ATLAS.ti helps the researcher to catalog, explore, and competently analyze the data generated in a given research project.

Ultimately, transparency and rigor are indispensable elements of any robust research study. Achieving transparency requires a systematic, deliberate, and thoughtful approach. It revolves around clarity in the formulation of research objectives, comprehensiveness in methods, and conscientious reporting of the results. Here are several key strategies for achieving transparency and rigor in research:

Clear research objectives and methods

Transparency begins with the clear and explicit statement of research objectives and questions. Researchers should explain why they are conducting the study, what they hope to learn, and how they plan to achieve their objectives. This involves identifying and articulating the study's theoretical or conceptual framework and delineating the key research questions. Ensuring clarity at this stage sets the groundwork for transparency throughout the rest of the study.

Transparent research includes a comprehensive and detailed account of the research design and methodology. Researchers should describe all stages of their research process, including the selection and recruitment of participants, the data collection methods, the setting of the research, and the timeline. Each step should be explained in enough detail that another researcher could replicate the study. Furthermore, any modifications to the research design or methodology over the course of the study should be clearly documented and justified.

Thorough data documentation and analysis

In the data collection phase, researchers should provide thorough documentation, including original data records such as transcripts, field notes, or images. The specifics of how data was gathered, who was involved, and when and where it took place should be meticulously recorded.

During the data analysis phase, researchers should clearly describe the steps taken to analyze the data, including coding processes, theme identification, and how conclusions were drawn. Researchers should provide evidence to support their findings and interpretations, such as verbatim quotes or detailed examples from the data. They should also describe any analytic software or tools used, including how they were used and why they were chosen.

Reflexivity and acknowledgment of bias

Transparent research involves a process of reflexivity, where researchers critically reflect on their own role in the research process. This includes considering how their own beliefs, values, experiences, and relationships with participants may have influenced the data collection and analysis. Researchers should maintain reflexivity journals to document these reflections, which can then be incorporated into the final research report. Researchers should also explicitly acknowledge potential biases and conflicts of interest that could influence the research. This includes personal, financial, or institutional interests that could affect the conduct or reporting of the research.

Transparent reporting and publishing

Transparency also involves the open sharing of research materials and data, where ethical and legal guidelines permit. This may include providing access to interview guides, survey instruments, data analysis scripts, raw data, and other research materials. Open sharing allows others to scrutinize, transfer, or extend the research, thereby enhancing its transparency and trustworthiness.

Finally, the reporting and publishing phase should adhere to the principles of transparency. Researchers should follow the relevant reporting guidelines for their field. Such guidelines provide a framework for reporting research in a comprehensive, systematic, and transparent manner.

Furthermore, researchers should choose to publish in open-access journals or other accessible formats whenever possible, to ensure the research is publicly accessible. They should also be open to critique and engage in post-publication discussion and debate about their findings.

By adhering to these strategies, researchers can ensure the transparency of their research, enhancing its credibility, trustworthiness, and contribution to their field. Transparency is more than just a good research practice—it's a fundamental ethical obligation to the research community, participants, and wider society.

Criteria for Good Qualitative Research: A Comprehensive Review

- Regular Article

- Open access

- Published: 18 September 2021

- Volume 31, pages 679–689 (2022)

- Drishti Yadav ORCID: orcid.org/0000-0002-2974-0323 1

This review aims to synthesize a published set of evaluative criteria for good qualitative research. The aim is to shed light on existing standards for assessing the rigor of qualitative research encompassing a range of epistemological and ontological standpoints. Using a systematic search strategy, published journal articles that deliberate criteria for rigorous research were identified. Then, references of relevant articles were surveyed to find noteworthy, distinct, and well-defined pointers to good qualitative research. This review presents an investigative assessment of the pivotal features in qualitative research that can permit readers to pass judgment on its quality and to deem it good research when objectively and adequately utilized. Overall, this review underlines the crux of qualitative research and accentuates the necessity to evaluate such research by the very tenets of its being. It also offers some prospects and recommendations to improve the quality of qualitative research. Based on the findings of this review, it is concluded that quality criteria are the product of socio-institutional procedures and existing paradigmatic conducts. Owing to the paradigmatic diversity of qualitative research, a single and specific set of quality criteria is neither feasible nor anticipated. Since qualitative research is not a cohesive discipline, researchers need to educate and familiarize themselves with applicable norms and decisive factors to evaluate qualitative research from within its theoretical and methodological framework of origin.

Introduction

“… It is important to regularly dialogue about what makes for good qualitative research” (Tracy, 2010, p. 837)

What counts as good qualitative research is highly debatable. Qualitative research encompasses numerous methods that rest on diverse philosophical perspectives. Bryman et al. (2008, p. 262) suggest that “It is widely assumed that whereas quality criteria for quantitative research are well‐known and widely agreed, this is not the case for qualitative research.” Hence, the question of how to evaluate the quality of qualitative research has been continuously debated. These debates on the assessment of qualitative research have taken place in many areas of science and technology. Examples include various areas of psychology: general psychology (Madill et al., 2000); counseling psychology (Morrow, 2005); and clinical psychology (Barker & Pistrang, 2005), and other disciplines of the social sciences: social policy (Bryman et al., 2008); health research (Sparkes, 2001); business and management research (Johnson et al., 2006); information systems (Klein & Myers, 1999); and environmental studies (Reid & Gough, 2000). In the literature, these debates are driven by the view that the blanket application of criteria for good qualitative research developed around the positivist paradigm is improper. Such debates are based on the wide range of philosophical backgrounds within which qualitative research is conducted (e.g., Sandberg, 2000; Schwandt, 1996). This methodological diversity has led to the formulation of different sets of criteria applicable to qualitative research.

Among qualitative researchers, the dilemma of deciding which measures to use to assess the quality of research is not new, especially when the virtuous triad of objectivity, reliability, and validity (Spencer et al., 2004) is not adequate. Occasionally, the criteria of quantitative research are used to evaluate qualitative research (Cohen & Crabtree, 2008; Lather, 2004). Indeed, Howe (2004) claims that the prevailing paradigm in educational research is scientifically based experimental research. Assumptions about the preeminence of quantitative research can undermine the worth and usefulness of qualitative research by neglecting the importance of matching the research paradigm, the epistemological stance of the researcher, and the choice of methodology to the purpose of the study. Researchers have been cautioned about this in “paradigmatic controversies, contradictions, and emerging confluences” (Lincoln & Guba, 2000).

In general, qualitative research tends to come from a very different paradigmatic stance and intrinsically demands distinctive and out-of-the-ordinary criteria for evaluating good research and varieties of research contributions that can be made. This review attempts to present a series of evaluative criteria for qualitative researchers, arguing that their choice of criteria needs to be compatible with the unique nature of the research in question (its methodology, aims, and assumptions). This review aims to assist researchers in identifying some of the indispensable features or markers of high-quality qualitative research. In a nutshell, the purpose of this systematic literature review is to analyze the existing knowledge on high-quality qualitative research and to verify the existence of research studies dealing with the critical assessment of qualitative research based on the concept of diverse paradigmatic stances. Contrary to the existing reviews, this review also suggests some critical directions to follow to improve the quality of qualitative research in different epistemological and ontological perspectives. This review is also intended to provide guidelines for the acceleration of future developments and dialogues among qualitative researchers in the context of assessing the qualitative research.

The rest of this review article is structured as follows. The “Methods” section describes the method followed for performing this review. The section “Criteria for Evaluating Qualitative Studies” provides a comprehensive description of the criteria for evaluating qualitative studies. This is followed by a summary of the strategies to improve the quality of qualitative research in “Improving Quality: Strategies”. The section “How to Assess the Quality of the Research Findings?” provides details on how to assess the quality of the research findings. After that, some of the quality checklists (as tools to evaluate quality) are discussed in “Quality Checklists: Tools for Assessing the Quality”. Finally, the review ends with concluding remarks in “Conclusions, Future Directions, and Outlook”, which also presents some prospects for enhancing the quality and usefulness of qualitative research in the social and techno-scientific research community.

Methods

For this review, a comprehensive literature search was performed across several databases using generic search terms such as Qualitative Research, Criteria, etc. The following databases were chosen for the literature search based on the high number of results: IEEE Xplore, ScienceDirect, PubMed, Google Scholar, and Web of Science. The following keywords (and their combinations using the Boolean connectives OR/AND) were adopted for the literature search: qualitative research, criteria, quality, assessment, and validity. The synonyms for these keywords were collected and arranged in a logical structure (see Table 1). All publications in journals and conference proceedings published after 1950 and up to 2021 were considered for the search. Other articles extracted from the references of the papers identified in the electronic search were also included. A large number of publications on qualitative research were retrieved during the initial screening. Hence, to restrict the results to work focused mainly on criteria for good qualitative research, an inclusion criterion was added to the search string.
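To make the idea of combining keywords with Boolean connectives concrete, here is a minimal Python sketch. It is only an illustration: the review does not report its exact search string, so the split into a topic group and a focus group, and the helper names `or_group` and `build_query`, are assumptions. Only the five keywords themselves come from the text.

```python
# Keywords named in the review. How they were grouped is NOT stated in the
# source; the two groups below are an assumption made for illustration.
TOPIC_TERMS = ["qualitative research"]
FOCUS_TERMS = ["criteria", "quality", "assessment", "validity"]


def or_group(terms):
    """Join terms with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups):
    """AND together several OR-groups into one search string."""
    return " AND ".join(or_group(g) for g in groups)


query = build_query(TOPIC_TERMS, FOCUS_TERMS)
print(query)
```

Synonyms for each keyword could be appended to the relevant list, which widens that OR-group without changing the overall AND structure. Individual databases differ in their query syntax, so a string built this way would likely need adapting per database.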

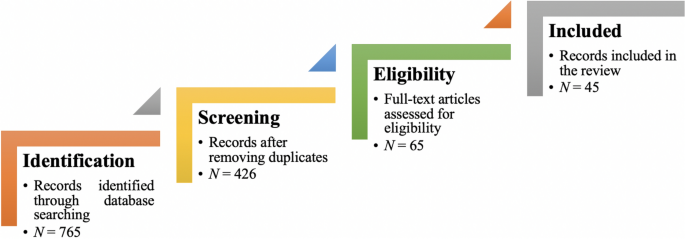

From the selected databases, the search retrieved a total of 765 publications. Duplicate records were then removed. After that, the remaining 426 publications were screened for relevance, based on title and abstract, using the inclusion and exclusion criteria (see Table 2). Publications focusing on evaluation criteria for good qualitative research were included, whereas works that delivered theoretical concepts on qualitative research were excluded. Based on the screening and eligibility assessment, 45 research articles were identified that offered explicit criteria for evaluating the quality of qualitative research and were found to be relevant to this review.
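The record counts reported above imply how many publications were dropped at each stage. The short Python sketch below works through that arithmetic. It assumes that 426 is the count left after duplicate removal and that all 45 included articles came from the screened set; the text also mentions articles added from reference lists, which are not separately counted here. The variable and function names are illustrative.

```python
# Counts reported in the text.
retrieved = 765   # records returned by the database search
screened = 426    # records remaining after duplicate removal
included = 45     # articles judged relevant to the review

# Derived counts (assumption: no records entered or left the flow
# other than through deduplication and screening).
duplicates_removed = retrieved - screened
excluded_after_screening = screened - included


def flow_is_consistent(retrieved, duplicates, excluded, included):
    """Check that the stage counts add back up to the retrieved total."""
    return duplicates + excluded + included == retrieved


print(f"Duplicates removed: {duplicates_removed}")
print(f"Excluded after screening: {excluded_after_screening}")
print(flow_is_consistent(retrieved, duplicates_removed,
                         excluded_after_screening, included))
```

A check of this kind is a simple way to confirm that the numbers in a flow diagram are internally consistent before reporting them.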

Figure 1 illustrates the complete review process in the form of a PRISMA flow diagram. PRISMA, i.e., “preferred reporting items for systematic reviews and meta-analyses”, is employed in systematic reviews to improve the quality of reporting.

Figure 1: PRISMA flow diagram illustrating the search and inclusion process. N represents the number of records

Criteria for Evaluating Qualitative Studies

Fundamental Criteria: General Research Quality

Various researchers have put forward criteria for evaluating qualitative research, which are summarized in Table 3. In addition, the criteria outlined in Table 4 present the various approaches to evaluating and assessing the quality of qualitative work. The entries in Table 4 are based on Tracy’s “Eight big‐tent criteria for excellent qualitative research” (Tracy, 2010). Tracy argues that high-quality qualitative work should formulate criteria focusing on the worthiness, relevance, timeliness, significance, morality, and practicality of the research topic, and the ethical stance of the research itself. Researchers have also suggested a series of questions as guiding principles to assess the quality of a qualitative study (Mays & Pope, 2020). Nassaji (2020) argues that good qualitative research should be robust, well informed, and thoroughly documented.

Qualitative Research: Interpretive Paradigms

All qualitative researchers follow highly abstract principles that bring together beliefs about ontology, epistemology, and methodology. These beliefs govern how the researcher perceives and acts. The net that encompasses the researcher’s epistemological, ontological, and methodological premises is referred to as a paradigm, or an interpretive structure: a “basic set of beliefs that guides action” (Guba, 1990). Four major interpretive paradigms structure qualitative research: positivist and postpositivist, constructivist interpretive, critical (Marxist, emancipatory), and feminist poststructural. The complexity of these four abstract paradigms increases at the level of concrete, specific interpretive communities. Table 5 presents these paradigms and their assumptions, including their criteria for evaluating research and the typical form that an interpretive or theoretical statement assumes in each paradigm. Moreover, quantitative conceptualizations of reliability and validity have been argued to be incompatible with the evaluation of qualitative research (Horsburgh, 2003). In addition, a series of questions has been put forward in the literature to assist a reviewer (who is proficient in qualitative methods) in the meticulous assessment and endorsement of qualitative research (Morse, 2003). Hammersley (2007) also suggests that guiding principles for qualitative research are advantageous, but that methodological pluralism should not simply be accepted for all qualitative approaches. Seale (1999) also points out the significance of methodological awareness in research studies.

Table 5 shows that criteria for assessing the quality of qualitative research are the product of socio-institutional practices and existing paradigmatic standpoints. Owing to the paradigmatic diversity of qualitative research, a single set of quality criteria is neither possible nor desirable. Hence, researchers must be reflexive about the criteria they use in the various roles they play within their research community.

Improving Quality: Strategies

Another critical question is “How can qualitative researchers ensure that the abovementioned quality criteria are met?” Lincoln and Guba (1986) delineated several strategies to strengthen each criterion of trustworthiness. Other researchers (Merriam & Tisdell, 2016; Shenton, 2004) have also presented such strategies. A brief description of these strategies is given in Table 6.

It is worth mentioning that generalizability is also an integral part of qualitative research (Hays & McKibben, 2021). In general, the guiding principle pertaining to generalizability speaks about inducing and comprehending knowledge to synthesize interpretive components of an underlying context. Table 7 summarizes the main metasynthesis steps required to ascertain generalizability in qualitative research.

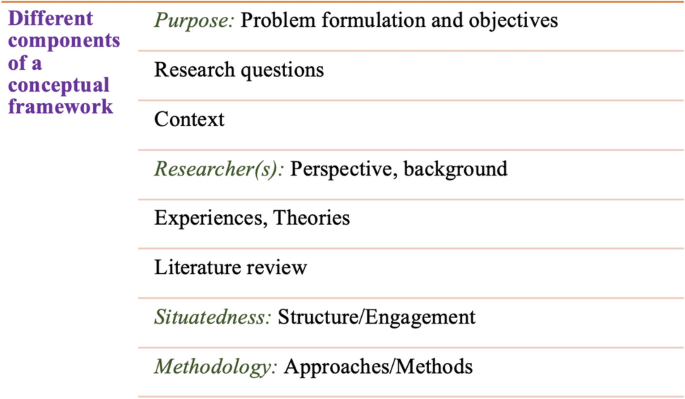

Figure 2 shows the crucial components of a conceptual framework and their contribution to decisions regarding research design, implementation, and applications of results to future thinking, study, and practice (Johnson et al., 2020). The synergy and interrelationship of these components signify their role in different stances of a qualitative research study.

Figure 2: Essential elements of a conceptual framework

In a nutshell, to assess the rationale of a study, its conceptual framework, and its research question(s), quality criteria must take account of the following: a lucid context for the problem statement in the introduction; well-articulated research problems and questions; a precise conceptual framework; a distinct research purpose; and a clear presentation and investigation of the paradigms. Meeting these criteria would improve the quality of qualitative research.

How to Assess the Quality of the Research Findings?

The inclusion of quotes or similar research data enhances confirmability in the write-up of the findings. Expressions such as “80% of all respondents agreed that” or “only one of the interviewees mentioned that” may also be used to quantify qualitative findings (Stenfors et al., 2020). On the other hand, a persuasive argument for why this may not strengthen the research has also been made (Monrouxe & Rees, 2020). Further, the Discussion and Conclusion sections of an article also serve as robust markers of high-quality qualitative research, as elucidated in Table 8.
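As a rough illustration of how an expression such as “80% of all respondents agreed that” might be derived from coded data, the Python sketch below counts how many interviews carry a given code. The participant IDs, the theme labels, and the function names are all hypothetical and invented for this example; they do not come from the review. As the caution attributed to Monrouxe and Rees (2020) suggests, such counts may not always strengthen a qualitative account, so this is a sketch of the mechanics rather than a recommendation.

```python
# Hypothetical coded data: participant ID -> set of themes coded in
# that participant's interview. Invented purely for illustration.
coded_interviews = {
    "P1": {"workload", "support"},
    "P2": {"workload"},
    "P3": {"workload", "training"},
    "P4": {"support", "workload"},
    "P5": {"training"},
}


def theme_prevalence(coded, theme):
    """Return (number with theme, total participants, percentage)."""
    n_total = len(coded)
    if n_total == 0:
        raise ValueError("no coded interviews supplied")
    n_with = sum(theme in codes for codes in coded.values())
    return n_with, n_total, 100 * n_with / n_total


def describe(coded, theme):
    """Phrase the prevalence of a theme as a short reporting sentence."""
    n_with, n_total, pct = theme_prevalence(coded, theme)
    return f"{n_with} of {n_total} interviewees ({pct:.0f}%) mentioned '{theme}'"


print(describe(coded_interviews, "workload"))
```

Reporting the raw count alongside the percentage keeps the small sample size visible to the reader.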

Quality Checklists: Tools for Assessing the Quality

Numerous checklists are available to speed up the assessment of the quality of qualitative research. However, if used uncritically and without regard to the research context, these checklists may be counterproductive. I suggest that such lists and guiding principles can assist in pinpointing the markers of high-quality qualitative research. However, considering the enormous variations in authors’ theoretical and philosophical contexts, I would emphasize that heavy reliance on such checklists may say little about whether the findings can be applied in your setting. A combination of such checklists might be appropriate for novice researchers. Some of these checklists are listed below:

- The most commonly used framework is the Consolidated Criteria for Reporting Qualitative Research (COREQ) (Tong et al., 2007). Some journals recommend that authors follow this framework during article submission.

- Standards for Reporting Qualitative Research (SRQR) is another checklist that has been created particularly for medical education (O’Brien et al., 2014).

- Also, Tracy (2010) and the Critical Appraisal Skills Programme (CASP, 2021) offer criteria for qualitative research relevant across methods and approaches.

- Further, researchers have also outlined different criteria as hallmarks of high-quality qualitative research. For instance, the “Road Trip Checklist” (Epp & Otnes, 2021) provides a quick reference to specific questions to address different elements of high-quality qualitative research.

Conclusions, Future Directions, and Outlook

This work presents a broad review of the criteria for good qualitative research. In addition, it presents an exploratory analysis of the essential elements in qualitative research that can enable readers of qualitative work to judge it as good research when objectively and adequately utilized. Some of the essential markers that indicate high-quality qualitative research have been highlighted. I scope them narrowly to achieving rigor in qualitative research and note that they do not completely cover the broader considerations necessary for high-quality research. This review points out that a universal, one-size-fits-all guideline for evaluating the quality of qualitative research does not exist; in other words, there is no set of common guidelines shared among qualitative researchers. At the same time, this review reinforces that each qualitative approach should be treated uniquely on account of its own distinctive features for different epistemological and disciplinary positions. Because the worth of qualitative research is sensitive to the specific context and the type of paradigmatic stance, researchers should themselves analyze which approaches can and must be tailored to suit the distinct characteristics of the phenomenon under investigation. Although this article does not claim to put forward a magic bullet or to provide a one-stop solution for dealing with dilemmas about how, why, or whether to evaluate the “goodness” of qualitative research, it offers a platform to assist researchers in improving their qualitative studies. This work provides an assembly of concerns to reflect on, a series of questions to ask, and multiple sets of criteria to look at when attempting to determine the quality of qualitative research. Overall, this review underlines the crux of qualitative research and accentuates the need to evaluate such research by the very tenets of its being.

Bringing together the vital arguments and delineating the requirements that good qualitative research should satisfy, this review strives to equip researchers as well as reviewers to make well-informed judgments about the worth and significance of the qualitative research under scrutiny. In a nutshell, a comprehensive portrayal of the research process (from the context of research to the research objectives, research questions and design, theoretical foundations, and from approaches to collecting data to analyzing the results and deriving inferences) often enhances the quality of qualitative research.

Prospects: A Road Ahead for Qualitative Research

Undeniably, qualitative research is a vibrant and evolving discipline in which different epistemological and disciplinary positions have their own characteristics and importance. Not surprisingly, owing to the emerging and varied features of qualitative research, no consensus has been reached to date. Researchers have raised various concerns and proposed several recommendations for editors and reviewers on conducting reviews of critical qualitative research (Levitt et al., 2021; McGinley et al., 2021). The following are some prospects and recommendations put forward towards the maturation of qualitative research and its quality evaluation:

- In general, most manuscript and grant reviewers are not qualitative experts. Hence, they are likely to prefer a broad set of criteria. However, researchers and reviewers need to keep in mind that it is inappropriate to apply the same approaches and standards to all qualitative research. Therefore, future work needs to focus on educating researchers and reviewers about the criteria to evaluate qualitative research from within the suitable theoretical and methodological context.

- There is an urgent need to revise and strengthen the critical assessment of some well-known and widely accepted tools (including checklists such as COREQ and SRQR) to interrogate their applicability to different aspects (along with their epistemological ramifications).

- Efforts should be made towards creating more space for creativity, experimentation, and a dialogue between the diverse traditions of qualitative research. This would potentially help to avoid the enforcement of one's own set of quality criteria on the work carried out by others.

- Moreover, journal reviewers need to be aware of various methodological practices and philosophical debates.

- It is pivotal to highlight the views and considerations of qualitative researchers and bring them into a more open and transparent dialogue about assessing qualitative research in techno-scientific, academic, sociocultural, and political arenas.

- Frequent debates on the use of evaluative criteria are required to resolve some as yet unresolved issues (including the applicability of a single set of criteria across multiple disciplines). Such debates would not only benefit qualitative researchers themselves but, above all, help to enhance the well-being and vitality of the entire discipline.

To conclude, I speculate that the criteria, and my perspective, may transfer to other methods, approaches, and contexts. I hope that they spark dialogue and debate about criteria for excellent qualitative research, and about the underpinnings of the discipline more broadly, and therefore help improve the quality of qualitative studies. Further, I anticipate that this review will help researchers to reflect on the quality of their own research and to substantiate their research design, and will help reviewers to review qualitative research for journals. On a final note, I highlight the need to formulate a framework (encompassing the prerequisites of a qualitative study) through the concerted efforts of qualitative researchers from different disciplines with different theoretic-paradigmatic origins. I believe that tailoring such a framework (of guiding principles) would pave the way for qualitative researchers to consolidate the status of qualitative research in the wide-ranging open science debate. Dialogue on this issue across different approaches is crucial for the future prospects of socio-techno-educational research.

References

Amin, M. E. K., Nørgaard, L. S., Cavaco, A. M., Witry, M. J., Hillman, L., Cernasev, A., & Desselle, S. P. (2020). Establishing trustworthiness and authenticity in qualitative pharmacy research. Research in Social and Administrative Pharmacy, 16 (10), 1472–1482.

Barker, C., & Pistrang, N. (2005). Quality criteria under methodological pluralism: Implications for conducting and evaluating research. American Journal of Community Psychology, 35 (3–4), 201–212.

Bryman, A., Becker, S., & Sempik, J. (2008). Quality criteria for quantitative, qualitative and mixed methods research: A view from social policy. International Journal of Social Research Methodology, 11 (4), 261–276.

Caelli, K., Ray, L., & Mill, J. (2003). ‘Clear as mud’: Toward greater clarity in generic qualitative research. International Journal of Qualitative Methods, 2 (2), 1–13.

CASP (2021). CASP checklists. Retrieved May 2021 from https://casp-uk.net/casp-tools-checklists/

Cohen, D. J., & Crabtree, B. F. (2008). Evaluative criteria for qualitative research in health care: Controversies and recommendations. The Annals of Family Medicine, 6 (4), 331–339.

Denzin, N. K., & Lincoln, Y. S. (2005). Introduction: The discipline and practice of qualitative research. In N. K. Denzin & Y. S. Lincoln (Eds.), The sage handbook of qualitative research (pp. 1–32). Sage Publications Ltd.

Elliott, R., Fischer, C. T., & Rennie, D. L. (1999). Evolving guidelines for publication of qualitative research studies in psychology and related fields. British Journal of Clinical Psychology, 38 (3), 215–229.

Epp, A. M., & Otnes, C. C. (2021). High-quality qualitative research: Getting into gear. Journal of Service Research. https://doi.org/10.1177/1094670520961445

Guba, E. G. (1990). The paradigm dialog. In Alternative Paradigms Conference, Mar 1989, Indiana U, School of Education, San Francisco, CA, US. Sage Publications, Inc.

Hammersley, M. (2007). The issue of quality in qualitative research. International Journal of Research and Method in Education, 30 (3), 287–305.

Haven, T. L., Errington, T. M., Gleditsch, K. S., van Grootel, L., Jacobs, A. M., Kern, F. G., & Mokkink, L. B. (2020). Preregistering qualitative research: A Delphi study. International Journal of Qualitative Methods, 19, 1609406920976417.

Hays, D. G., & McKibben, W. B. (2021). Promoting rigorous research: Generalizability and qualitative research. Journal of Counseling and Development, 99 (2), 178–188.

Horsburgh, D. (2003). Evaluation of qualitative research. Journal of Clinical Nursing, 12 (2), 307–312.

Howe, K. R. (2004). A critique of experimentalism. Qualitative Inquiry, 10 (1), 42–46.

Johnson, J. L., Adkins, D., & Chauvin, S. (2020). A review of the quality indicators of rigor in qualitative research. American Journal of Pharmaceutical Education, 84 (1), 7120.

Johnson, P., Buehring, A., Cassell, C., & Symon, G. (2006). Evaluating qualitative management research: Towards a contingent criteriology. International Journal of Management Reviews, 8 (3), 131–156.

Klein, H. K., & Myers, M. D. (1999). A set of principles for conducting and evaluating interpretive field studies in information systems. MIS Quarterly, 23 (1), 67–93.

Lather, P. (2004). This is your father’s paradigm: Government intrusion and the case of qualitative research in education. Qualitative Inquiry, 10 (1), 15–34.

Levitt, H. M., Morrill, Z., Collins, K. M., & Rizo, J. L. (2021). The methodological integrity of critical qualitative research: Principles to support design and research review. Journal of Counseling Psychology, 68 (3), 357.

Lincoln, Y. S., & Guba, E. G. (1986). But is it rigorous? Trustworthiness and authenticity in naturalistic evaluation. New Directions for Program Evaluation, 1986 (30), 73–84.

Lincoln, Y. S., & Guba, E. G. (2000). Paradigmatic controversies, contradictions and emerging confluences. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (2nd ed., pp. 163–188). Sage Publications.

Madill, A., Jordan, A., & Shirley, C. (2000). Objectivity and reliability in qualitative analysis: Realist, contextualist and radical constructionist epistemologies. British Journal of Psychology, 91 (1), 1–20.

Mays, N., & Pope, C. (2020). Quality in qualitative research. Qualitative Research in Health Care. https://doi.org/10.1002/9781119410867.ch15

McGinley, S., Wei, W., Zhang, L., & Zheng, Y. (2021). The state of qualitative research in hospitality: A 5-year review 2014 to 2019. Cornell Hospitality Quarterly, 62 (1), 8–20.

Merriam, S., & Tisdell, E. (2016). Qualitative research: A guide to design and implementation. San Francisco, US.

Meyer, M., & Dykes, J. (2019). Criteria for rigor in visualization design study. IEEE Transactions on Visualization and Computer Graphics, 26 (1), 87–97.

Monrouxe, L. V., & Rees, C. E. (2020). When I say… quantification in qualitative research. Medical Education, 54 (3), 186–187.

Morrow, S. L. (2005). Quality and trustworthiness in qualitative research in counseling psychology. Journal of Counseling Psychology, 52 (2), 250.

Morse, J. M. (2003). A review committee’s guide for evaluating qualitative proposals. Qualitative Health Research, 13 (6), 833–851.

Nassaji, H. (2020). Good qualitative research. Language Teaching Research, 24 (4), 427–431.

O’Brien, B. C., Harris, I. B., Beckman, T. J., Reed, D. A., & Cook, D. A. (2014). Standards for reporting qualitative research: A synthesis of recommendations. Academic Medicine, 89 (9), 1245–1251.

O’Connor, C., & Joffe, H. (2020). Intercoder reliability in qualitative research: Debates and practical guidelines. International Journal of Qualitative Methods, 19 , 1609406919899220.

Reid, A., & Gough, S. (2000). Guidelines for reporting and evaluating qualitative research: What are the alternatives? Environmental Education Research, 6 (1), 59–91.

Rocco, T. S. (2010). Criteria for evaluating qualitative studies. Human Resource Development International. https://doi.org/10.1080/13678868.2010.501959

Sandberg, J. (2000). Understanding human competence at work: An interpretative approach. Academy of Management Journal, 43 (1), 9–25.

Schwandt, T. A. (1996). Farewell to criteriology. Qualitative Inquiry, 2 (1), 58–72.

Seale, C. (1999). Quality in qualitative research. Qualitative Inquiry, 5 (4), 465–478.

Shenton, A. K. (2004). Strategies for ensuring trustworthiness in qualitative research projects. Education for Information, 22 (2), 63–75.

Sparkes, A. C. (2001). Myth 94: Qualitative health researchers will agree about validity. Qualitative Health Research, 11 (4), 538–552.

Spencer, L., Ritchie, J., Lewis, J., & Dillon, L. (2004). Quality in qualitative evaluation: A framework for assessing research evidence.

Stenfors, T., Kajamaa, A., & Bennett, D. (2020). How to assess the quality of qualitative research. The Clinical Teacher, 17 (6), 596–599.

Taylor, E. W., Beck, J., & Ainsworth, E. (2001). Publishing qualitative adult education research: A peer review perspective. Studies in the Education of Adults, 33 (2), 163–179.

Tong, A., Sainsbury, P., & Craig, J. (2007). Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. International Journal for Quality in Health Care, 19 (6), 349–357.

Tracy, S. J. (2010). Qualitative quality: Eight “big-tent” criteria for excellent qualitative research. Qualitative Inquiry, 16 (10), 837–851.

Open access funding provided by TU Wien (TUW).

Author information

Authors and affiliations.

Faculty of Informatics, Technische Universität Wien, 1040, Vienna, Austria

Drishti Yadav

Corresponding author

Correspondence to Drishti Yadav.

Ethics declarations

Conflict of interest.

The author declares no conflict of interest.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Yadav, D. Criteria for Good Qualitative Research: A Comprehensive Review. Asia-Pacific Edu Res 31, 679–689 (2022). https://doi.org/10.1007/s40299-021-00619-0

Accepted: 28 August 2021

Published: 18 September 2021

Issue Date: December 2022

DOI: https://doi.org/10.1007/s40299-021-00619-0

- Qualitative research

- Evaluative criteria

20.1 Introduction to qualitative rigor

We hear a lot about fake news these days. Fake news has to do with the quality of journalism that we are consuming. It raises questions like: Does it contain misinformation? Is it skewed or biased in its portrayal of stories? Does it leave out certain facts while inflating others? If we take this news at face value, our opinions and actions may be intentionally manipulated by poor-quality information. So, how do we avoid or challenge this? The oversimplified answer is, we find ways to check for quality. While this isn’t a chapter dedicated to fake news, it does offer an important comparison for the focus of this chapter, rigor in qualitative research. Rigor is concerned with the quality of research that we are designing and consuming. While I devote a considerable amount of time in my clinical class talking about the importance of adopting a non-judgmental stance in practice, that is not the case here; I want you to be judgmental, critical thinkers about research! As a social worker who will hopefully be producing research (we need you!) and definitely consuming research, you need to be able to differentiate good science from rubbish science. Rigor will help you to do this.

This chapter will introduce you to the concept of rigor and, specifically, what it looks like in qualitative research. We will begin by considering how rigor relates to issues of ethics and how thoughtfully involving community partners in our research can add dimensions to planning for rigor. Next, we will look at rigor in how we capture and manage qualitative data, essentially helping to ensure that we have quality raw data to work with for our study. Then, we will devote time to discussing how researchers, as human instruments, need to maintain accountability throughout the research process. Finally, we will examine tools that encourage this accountability and how they can be integrated into your research design. Our hope is that by the end of this chapter, you will begin to be able to identify some of the hallmarks of quality in qualitative research, and if you are designing a qualitative research proposal, that you will consider how to build these into your design.

19.1 Introduction to qualitative rigor

Learning objectives

Learners will be able to…

- Identify the role of rigor in qualitative research and important concepts related to qualitative rigor

- Discuss why rigor is an important consideration when conducting, critiquing and consuming qualitative research

- Differentiate between quality in quantitative and qualitative research studies

In Chapter 11 we talked about quality in quantitative studies, and we built that discussion around concepts like reliability and validity. With qualitative studies, we generally think about quality in terms of the concept of rigor. The difference between quality in quantitative research and qualitative research extends beyond the type of data (numbers vs. words/sounds/images). If you sneak a peek all the way back to Chapter 7, you will see that we discussed the idea of different paradigms or fundamental frameworks for how we can think about the world. These frameworks value different kinds of knowledge, arrive at knowledge in different ways, and evaluate the quality of knowledge with different criteria. These differences are essential in differentiating qualitative and quantitative work.

Quantitative research generally falls under a positivist paradigm, seeking to uncover knowledge that holds true across larger groups of people. To accomplish this, we need to have tools like reliability and validity to help produce internally consistent and externally generalizable findings (i.e., was our study design dependable, and do our findings hold true across our population?).

In contrast, qualitative research is generally considered to fall into an alternative paradigm (other than positivist), such as the interpretive paradigm which is focused on the subjective experiences of individuals and their unique perspectives. To accomplish this, we are often asking participants to expand on their ideas and interpretations. A positivist tradition requires the information collected to be very focused and discretely defined (i.e. closed questions with prescribed categories). With qualitative studies, we need to look across unique experiences reflected in the data and determine how these experiences develop a richer understanding of the phenomenon we are studying, often across numerous perspectives.

Rigor is a concept that reflects the quality of the process used in capturing, managing, and analyzing our data as we develop this rich understanding. Rigor helps to establish standards through which qualitative research is critiqued and judged, both by the scientific community and by the practitioner community.

For the scientific community, people who review qualitative research studies submitted for publication in scientific journals or for presentations at conferences will specifically look for indications of rigor, such as the tools we will discuss in this chapter. This confirms for them that the researcher(s) put safeguards in place to ensure that the research took place systematically. It also means that consumers can be relatively confident that the findings are not fabricated and can be directly connected back to the primary data that were gathered or the secondary data that were analyzed.

As a note here, as we are critiquing the research of others or developing our own studies, we also need to recognize the limitations of rigor. No research design is flawless and every researcher faces limitations and constraints. We aren’t looking for a researcher to adopt every tool we discuss below in their design. In fact, one of my mentors speaks explicitly about “misplaced rigor”, that is, using techniques to support rigor that don’t really fit what you are trying to accomplish with your research design. Suffice it to say that we can go overboard in the area of rigor and it might not serve our study’s best interest. As a consumer or evaluator of research, you want to look for steps being taken to reflect quality and transparency throughout the research process, but they should fit within the overall framework of the study and what it is trying to accomplish.

From the perspective of a practitioner, we also need to be acutely concerned with the quality of research. Social work has made a commitment, outlined in our Code of Ethics (NASW, 2017), to competent practice in service to our clients based on “empirically based knowledge” (subsection 4.01). When I think about my own care providers, I want them to be using “good” research: research that we can be confident was conducted in a credible way and whose findings are honestly and clearly represented. Don’t our clients deserve the same from us?

As providers, we will be looking to qualitative research studies to provide us with information that helps us better understand our clients, their experiences, and the problems they encounter. As such, we need to look for research that accurately represents:

- Who is participating in the study

- The circumstances under which the study is being conducted

- What the research is attempting to determine

Further, we want to ensure that:

- Findings are presented accurately and reflect what was shared by participants (raw data)

- A reasonably good explanation of how the researcher got from the raw data to their findings is presented

- The researcher adequately considered and accounted for their potential influence on the research process