IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2019

Direct Speech-to-Image Translation

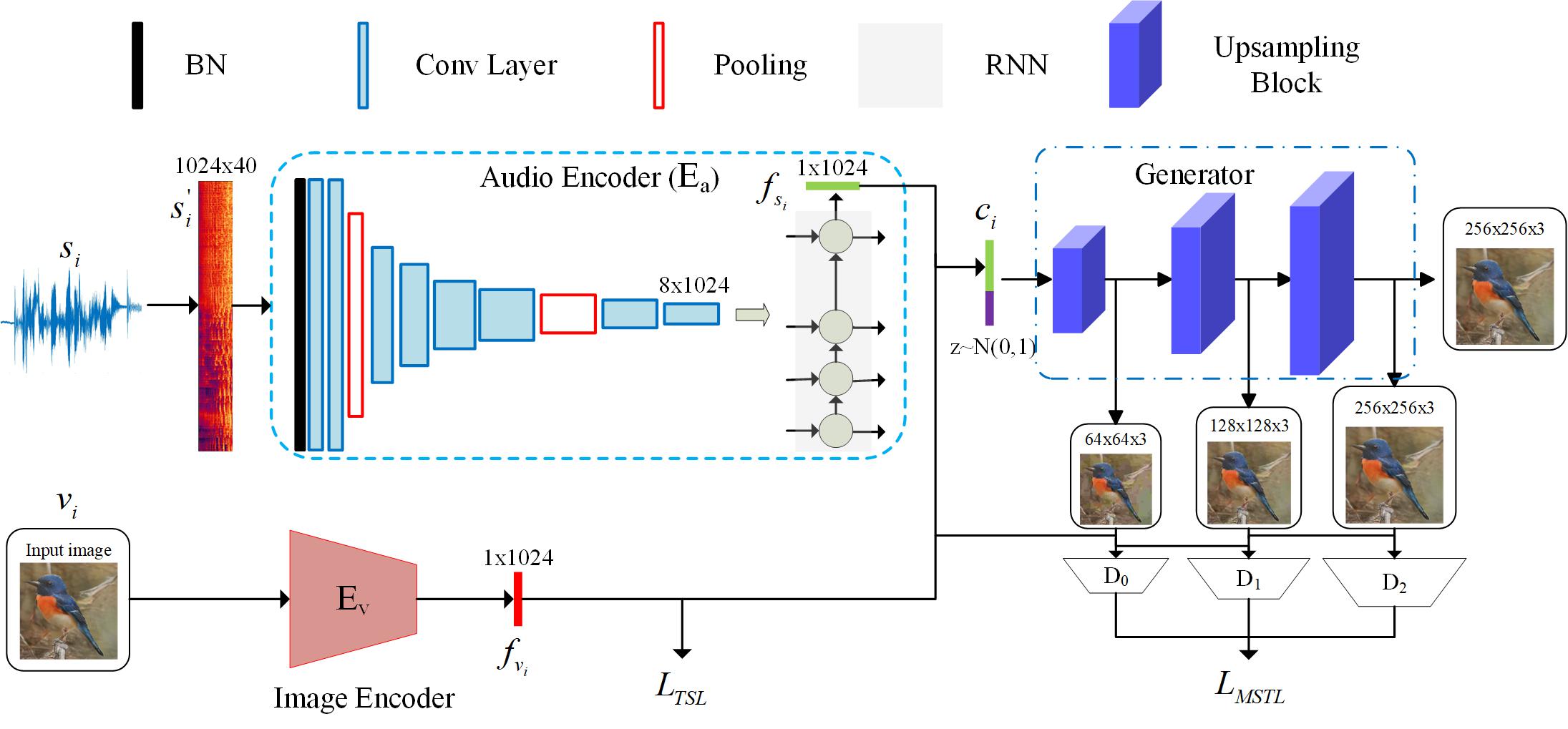

Speech-to-image translation without text is an interesting and useful topic due to its potential applications in human-computer interaction, art creation, computer-aided design, etc., not to mention that many languages have no written form. However, as far as we know, how to translate speech signals into images directly, and how well they can be translated, has not been well studied. In this paper, we attempt to translate speech signals into image signals without a transcription stage by leveraging advances in teacher-student learning and generative adversarial models. Specifically, a speech encoder is designed to represent the input speech signals as an embedding feature, and it is trained using teacher-student learning to obtain better generalization ability on new classes. Subsequently, a stacked generative adversarial network is used to synthesize high-quality images conditioned on the embedding feature encoded by the speech encoder. Experimental results on both synthesized and real data show that our proposed method is effective at translating raw speech signals into images without an intermediate text representation. An ablation study gives more insight into our method.

Results on synthesized data

Results on real data

Feature interpolation

Supplementary material

Data and Code

We use three datasets in our paper; the data can be downloaded from the following table.

The code can be found on my GitHub. If you have any questions about the code or the paper, feel free to email me: [email protected]

Acknowledgment

We consider the task of reconstructing an image of a person’s face from a short input audio segment of speech. We show several results of our method on the VoxCeleb dataset. Our model takes only an audio waveform as input (the true faces are shown just for reference). Note that our goal is not to reconstruct an accurate image of the person, but rather to recover characteristic physical features that are correlated with the input speech. *The three authors contributed equally to this work.

How much can we infer about a person's looks from the way they speak? In this paper, we study the task of reconstructing a facial image of a person from a short audio recording of that person speaking. We design and train a deep neural network to perform this task using millions of natural videos of people speaking from the Internet/YouTube. During training, our model learns audiovisual, voice-face correlations that allow it to produce images that capture various physical attributes of the speakers such as age, gender and ethnicity. This is done in a self-supervised manner, by utilizing the natural co-occurrence of faces and speech in Internet videos, without the need to model attributes explicitly. Our reconstructions, obtained directly from audio, reveal the correlations between faces and voices. We evaluate and numerically quantify how, and in what manner, our Speech2Face reconstructions from audio resemble the true face images of the speakers.

Supplementary Material

Ethical Considerations

Further Reading

Google Research Blog


7 Apr 2020 · Jiguo Li, Xinfeng Zhang, Chuanmin Jia, Jizheng Xu, Li Zhang, Yue Wang, Siwei Ma, Wen Gao

Direct speech-to-image translation without text is an interesting and useful topic due to its potential applications in human-computer interaction, art creation, computer-aided design, etc., not to mention that many languages have no written form. However, as far as we know, how to translate speech signals into images directly, and how well they can be translated, has not been well studied. In this paper, we attempt to translate speech signals into image signals without a transcription stage. Specifically, a speech encoder is designed to represent the input speech signals as an embedding feature, and it is trained with a pretrained image encoder using teacher-student learning to obtain better generalization ability on new classes. Subsequently, a stacked generative adversarial network is used to synthesize high-quality images conditioned on the embedding feature. Experimental results on both synthesized and real data show that our proposed method is effective at translating raw speech signals into images without an intermediate text representation. An ablation study gives more insight into our method.
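The teacher-student idea can be illustrated with a minimal NumPy sketch. It assumes that a frozen, pretrained image encoder (the teacher) already produces an embedding for each image, and that the speech encoder (the student) is trained to reproduce that embedding from the paired utterance. The mean-squared-error objective and the name `distillation_loss` are assumptions made for illustration, not necessarily the exact loss used in the paper.

```python
import numpy as np


def distillation_loss(student_emb: np.ndarray, teacher_emb: np.ndarray) -> float:
    """Mean squared error between speech (student) and image (teacher) embeddings.

    Both arrays have shape (batch, dim). The teacher embeddings come from a frozen,
    pretrained image encoder, so during training only the student would be updated.
    """
    if student_emb.shape != teacher_emb.shape:
        raise ValueError("student and teacher embeddings must have the same shape")
    return float(np.mean((student_emb - teacher_emb) ** 2))


# Toy batch: 2 utterances paired with 2 images, using 4-dimensional embeddings.
teacher = np.array([[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]])
student = np.array([[0.5, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]])
print(distillation_loss(student, teacher))  # 0.03125
```

Because the student is pulled toward an embedding space shaped by images, a generator conditioned on that space can, in principle, be reused for speech inputs.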



Related Research

- End-to-end translation of human neural activity to speech with a dual-dual generative adversarial network

- Probability density distillation with generative adversarial networks for high-quality parallel waveform generation

- Image-to-image translation based on convolutional neural network approach for speech declipping

- wav2pix: Speech-conditioned face generation using generative adversarial networks

- S2IGAN: Speech-to-image generation via adversarial learning

- AudioViewer: Learning to visualize sound

- Fusion-S2iGan: An efficient and effective single-stage framework for speech-to-image generation


Speech To Image Translation Framework for Teacher-Student Learning


speech-to-image

Here are 4 public repositories matching this topic:

smallflyingpig / speech-to-image-translation-without-text

Code for paper "Direct Speech-to-Image Translation"

- Updated Jun 8, 2020

suriya-1403 / speech2image-hack36

A mobile application for hearing-impaired children, who can easily understand and learn with this easƎEdu app. The app translates speech into images.

- Updated Sep 20, 2021

mehnoorsiddiqui / whatsapp-dalle-gpt-bot

A conversational, voice-enabled, multilingual WhatsApp bot that can generate both text and image responses using APIMatic auto-generated SDKs for WhatsApp and OpenAI APIs.

- Updated Apr 23, 2023

ICSLabOrganization / sota-fusion

Application to reach the state of the art in deep learning

- Updated Apr 17, 2023


Speech-to-Image Creation Using Jina

Use Jina, Whisper and Stable Diffusion to build a cloud-native application for generating images using your speech.

We’re all used to our digital assistants. They can schedule alarms, read the weather and reel off a few corny jokes. But how can we take that further? Can we use our voice to interact with the world (and other machines) in newer and more interesting ways?

Right now, most digital assistants operate in a mostly single-modal capacity. Your voice goes in, their voice comes out. Maybe they perform a few other actions along the way, but you get the idea.

This single-modality way of working is kind of like the Iron Man Mark I armor. Sure, it’s the best at what it does, but we want to do more. Especially since we have all these cool new toys today.

Let’s do the metaphorical equivalent of strapping some lasers and rocket boots onto boring old Alexa. We’ll build a speech recognition system of our own with the ability to generate beautiful AI-powered imagery. And we can apply the lessons from that to build even more complex applications further down the line.

Instead of a single-modal assistant like Alexa or Siri, we’re moving into the bright world of multi-modality, where we can use text to generate images, audio to generate video, or basically any modality (i.e. type of media) to create (or search) any other kind of modality.

You don’t need to be a Stark-level genius to make this happen. Hell, you barely need to be a Hulk level intellect. We’ll do it all in under 90 lines of code.

And what’s more, we’ll do all that with a cloud-native microservice architecture and deploy it to Kubernetes.

Preliminary research

AI has been exploding over the past few years, and we’re rapidly moving from primitive single-modality models (e.g. Transformers for text, Big Image Transfer for images) to multi-modal models that can handle different kinds of data at once (e.g. CLIP, which can handle text and images at once).

But hell, even that’s yesterday’s news. Just this year we’ve seen an explosion in tools that can generate images from text prompts (again with that multi-modality), like DiscoArt, DALL-E 2 and Stable Diffusion. That’s not to mention some of the other models out there, which can generate video from text prompts or generate 3D meshes from images.

Let’s create some images with Stable Diffusion (since we’re using that to build our example):
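A minimal sketch of text-to-image generation with Stable Diffusion, assuming the Hugging Face `diffusers` library (the post does not say which implementation it uses). The checkpoint name `CompVis/stable-diffusion-v1-4`, the CUDA device and the helper names are assumptions. The pipeline is passed into `generate_images` so the helper can be exercised without downloading a model.

```python
def load_stable_diffusion(model_id: str = "CompVis/stable-diffusion-v1-4", device: str = "cuda"):
    """Load a Stable Diffusion pipeline (assumed checkpoint; needs diffusers, torch and a GPU)."""
    # Imported lazily so the rest of this module works without the heavy dependencies.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    return pipe.to(device)


def generate_images(pipe, prompt: str, num_images: int = 2):
    """Return a list of PIL images generated from a single text prompt."""
    result = pipe(prompt, num_images_per_prompt=num_images)
    return result.images
```

On a GPU machine, `generate_images(load_stable_diffusion(), "an astronaut riding a horse")` would return two images that can be saved with `image.save(...)`.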

But it’s not just multi-modal text-to-image generation that’s hot right now. Just a few weeks ago, OpenAI released Whisper, an automatic speech-recognition system robust enough to handle accents, background noise, and technical language.

This post will integrate Whisper and Stable Diffusion, so a user can speak a phrase, Whisper will convert it to text, and Stable Diffusion will use that text to create an image.

Existing works

This isn’t really a new concept. Many people have written papers on it or created examples before:

- S2IGAN: Speech-to-Image Generation via Adversarial Learning

- Direct Speech-to-Image Translation

- Using AI to Generate Art - A Voice-Enabled Art Generation Tool

- Built with AssemblyAI - Real-time Speech-to-Image Generation

The difference is that our example will use cutting-edge models, be fully scalable, leverage a microservices architecture, and be simple to deploy to Kubernetes. What’s more, it’ll do all of that in fewer lines of code than the above examples.

The problem

With all these new AI models for multi-modal generation, your imagination is the limit when it comes to thinking of what you can build. But just thinking something isn’t the same as building it. And therein lies some of the key problems:

Dependency Hell

Building fully-integrated monolithic systems is relatively straightforward, but tying together too many cutting-edge deep learning models can lead to dependency conflicts. These models were built to showcase cool tech, not to play nice with others.

That’s like if Iron Man’s rocket boots were incompatible with his laser cannons. He’d be flying around shooting down Chitauri, then plummet out of the sky like a rock as his boots crashed.

That’s right everyone. This is no longer about just building a cool demo. We are saving. Iron Man’s. Life. [2]

Choosing a data format

If we’re dealing with multiple modalities, choosing a data format to interoperate between these different models is painful. If we’re just dealing with a single modality like text, we can use plain old strings. If we’re dealing with images we could use image tensors. In our example, we’re dealing with audio and images. How much pain will that be?

Tying it all together

But the biggest challenge of all is mixing different models to create a fully-fledged application. Sure, you can wrap one model in a web API, containerize it, and deploy it on the cloud. But as soon as you need to chain together two models to create a slightly-more-complex application it gets messy, especially if you want to build a true microservices-based application that can, for instance, replicate some part of your pipeline to avoid downtime. How do you communicate between the different models? And that’s not to mention deploying on a cloud-native platform like Kubernetes or having observability and monitoring for your pipeline.

The solution

Jina AI is building a comprehensive MLOps platform around multimodal AI to help developers and businesses solve challenges like speech-to-image. To integrate Whisper and Stable Diffusion in a clean, scalable, deployable way, we’ll:

- Use Jina to wrap the deep learning models into Executors.

- Combine these Executors into a complex Jina Flow (a cloud-native AI application with replication, sharding, etc).

- Use DocArray to stream our data (with different modalities) through the Flow, where it will be processed by each Executor in turn.

- Deploy all of that to Kubernetes / JCloud with full observability.

These pipelines and building blocks are not just concepts: A Flow is a cloud-native application, while each Executor is a microservice.

Jina translates the conceptual composition of building blocks at the programming language level (by module/class separation) into a cloud-native composition, with each Executor as its own microservice. These microservices can be seamlessly replicated and sharded. The Flow and the Executors are powered by state-of-the-art networking tools and rely on duplex streaming.

This solves the problems we outlined above:

- Dependency Hell - each model will be wrapped into its own microservice, so dependencies don’t interfere with each other.

- Choosing a data format - DocArray handles whatever we throw at it, be it audio, text, image or anything else.

- Tying it all together - A Jina Flow orchestrates the microservices and provides an API for users to interact with them. With Jina’s cloud-native functionality we can easily deploy to Kubernetes or JCloud and get monitoring and observability.

Building Executors

Every kind of multi-modal search or creation task requires several steps, which differ depending on what you’re trying to achieve. In our case, the steps are:

- Take a user’s voice as input in the UI.

- Transcribe that speech into text using Whisper.

- Take that text output and feed it into Stable Diffusion to generate an image.

- Display that image to the user in the UI.

In this example we’re focusing only on the backend, so we’ll look at just steps 2 and 3. For each of these we’ll wrap a model into an Executor:

- WhisperExecutor - transcribes a user’s voice input into text.

- StableDiffusionExecutor - generates images based on the text string generated by WhisperExecutor.

In Jina an Executor is a microservice that performs a single task. All Executors use Documents as their native data format. There’s more on that in the “streaming data” section.

We program each of these Executors at the programming language level (with module/class separation). Each can be seamlessly sharded, replicated, and deployed on Kubernetes. They’re even fully observable by default.

Executor code typically looks like the snippet below (taken from WhisperExecutor). Each function that a user can call has its own @requests decorator, specifying a network endpoint. Since we don’t specify a particular endpoint in this snippet, transcribe() gets called when accessing any endpoint:
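The dispatch rule described above, where a handler declared without an endpoint answers every endpoint, can be illustrated with a framework-free toy. This is not Jina's implementation or API: `toy_requests`, `ToyExecutor` and `ToyWhisperExecutor` are hypothetical names that only mirror the behaviour the text describes, and the "transcription" is a placeholder string.

```python
from typing import Callable, Dict, List, Optional

_ANY = "__any__"  # registry key for handlers declared without an endpoint


def toy_requests(func: Optional[Callable] = None, *, on: Optional[str] = None):
    """Toy decorator: record which endpoint a method serves (every endpoint if `on` is None)."""

    def wrap(f: Callable) -> Callable:
        f._endpoint = on or _ANY
        return f

    return wrap(func) if func is not None else wrap


class ToyExecutor:
    """Collects decorated methods and dispatches a request path to the matching one."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable] = {}
        for name in dir(type(self)):
            endpoint = getattr(getattr(type(self), name), "_endpoint", None)
            if endpoint is not None:
                self._handlers[endpoint] = getattr(self, name)  # bound method

    def handle(self, endpoint: str, docs: List[dict]) -> List[dict]:
        # An exact endpoint match wins; otherwise fall back to the catch-all handler.
        handler = self._handlers.get(endpoint) or self._handlers.get(_ANY)
        if handler is None:
            raise KeyError(f"no handler for endpoint {endpoint!r}")
        return handler(docs)


class ToyWhisperExecutor(ToyExecutor):
    @toy_requests  # no endpoint given, so this handler answers every endpoint
    def transcribe(self, docs: List[dict]) -> List[dict]:
        for doc in docs:
            # Placeholder for a real speech-recognition call on doc["tensor"].
            doc["text"] = f"<transcript of {len(doc['tensor'])} samples>"
        return docs
```

Calling `ToyWhisperExecutor().handle("/anything", [{"tensor": [0.0, 0.1]}])` routes to `transcribe` because no more specific handler is registered.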

Building a Flow

A Flow orchestrates Executors into a processing pipeline to build a multi-modal/cross-modal application. Documents “flow” through the pipeline and are processed by Executors.

You can think of a Flow as an interface to configure and launch your microservice architecture, while the heavy lifting is done by the services (i.e. Executors) themselves. In particular, each Flow also launches a Gateway service, which can expose all other services through an API that you define. Its role is to link the Executors together. The Gateway ensures each request passes through the different Executors based on the Flow’s topology (i.e. it goes to Executor A before Executor B).

The Flow in this example has a simple topology, with the WhisperExecutor and StableDiffusionExecutor from above piped together. The code below defines the Flow then opens a port for users to connect and stream data back and forth:

A Flow can be visualized with flow.plot():

Streaming data

Everything that comes into and goes out of our Flow is a Document, which is a class from the DocArray package. DocArray provides a common API for multiple modalities (in our case, audio, text, image), letting us mix concepts and create multi-modal Documents that can be sent over the wire.

That means that no matter what data modality we’re using, a Document can store it. And since all Executors use Documents (and DocumentArrays) as their data format, consistency is ensured.

In this speech-to-image example, the input Document is an audio sample of the user’s voice, captured by the UI. This is stored in a Document as a tensor.

If we regard the input Document as doc :

- Initially the doc is created by the UI and the user’s voice input is stored in doc.tensor .

- The doc is sent over the wire via a gRPC call from the UI to the WhisperExecutor.

- Then the WhisperExecutor takes that tensor and transcribes it to doc.text .

- The doc moves onto the StableDiffusion Executor.

- The StableDiffusion Executor reads in doc.text and generates two images which get stored (as Documents) in doc.matches .

- The UI receives the doc back from the Flow.

- Finally, the UI takes that output Document and renders each of the matches.
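The lifecycle above can be mimicked in plain Python to make each hand-off explicit. `ToyDocument` is a hypothetical stand-in for DocArray's `Document`, carrying only the `tensor`, `text` and `matches` fields used in this walkthrough (plus a `uri` for the generated images). The two executor functions fake the Whisper and Stable Diffusion calls, and `run_flow` plays the Gateway's role of routing the doc through the executors in topology order.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ToyDocument:
    """Hypothetical stand-in for a DocArray Document with the fields used here."""
    tensor: Optional[List[float]] = None  # raw audio captured by the UI
    text: str = ""                        # transcription written by the ASR step
    uri: str = ""                         # location of a generated image (used by matches)
    matches: List["ToyDocument"] = field(default_factory=list)


def fake_whisper(doc: ToyDocument) -> ToyDocument:
    # A real executor would run Whisper on doc.tensor; here we just fill doc.text.
    doc.text = "a red fox in the snow"
    return doc


def fake_stable_diffusion(doc: ToyDocument, num_images: int = 2) -> ToyDocument:
    # A real executor would generate images from doc.text; we store placeholders as matches.
    doc.matches = [ToyDocument(uri=f"image-{i}.png", text=doc.text) for i in range(num_images)]
    return doc


def run_flow(doc: ToyDocument, executors: List[Callable[[ToyDocument], ToyDocument]]) -> ToyDocument:
    """Pass the document through each executor in order, as the Gateway does."""
    for executor in executors:
        doc = executor(doc)
    return doc


result = run_flow(ToyDocument(tensor=[0.0, 0.02, -0.01]), [fake_whisper, fake_stable_diffusion])
for match in result.matches:  # the UI would render each match
    print(match.uri, "<-", match.text)
```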

Connecting to a Flow

After opening the Flow, users can connect with Jina Client or a third-party client. In this example, the Flow exposes gRPC endpoints but could be easily changed (with one line of code) to implement RESTful, WebSockets or GraphQL endpoints instead.

Deploying a Flow

As a cloud-native framework, Jina shines brightest when coupled with Kubernetes. The documentation explains how to deploy a Flow on Kubernetes, but let’s get some insight into what’s happening under the hood.

As mentioned earlier, an Executor is a containerized microservice. Both Executors in our application are deployed independently on Kubernetes as Deployments. This means Kubernetes handles their lifecycle, scheduling on the correct machine, etc. In addition, the Gateway is deployed as its own Deployment to route requests through the Executors. This translation to Kubernetes concepts is done on the fly by Jina. You simply need to define your Flow in Python.

Alternatively a Flow can be deployed on JCloud, which handles all of the above, as well as providing an out-of-the-box monitoring dashboard. To do so, the Python Flow needs to be converted to a YAML Flow (with a few JCloud-specific parameters), and then deployed:

Let’s take the app for a spin and give it some test queries:

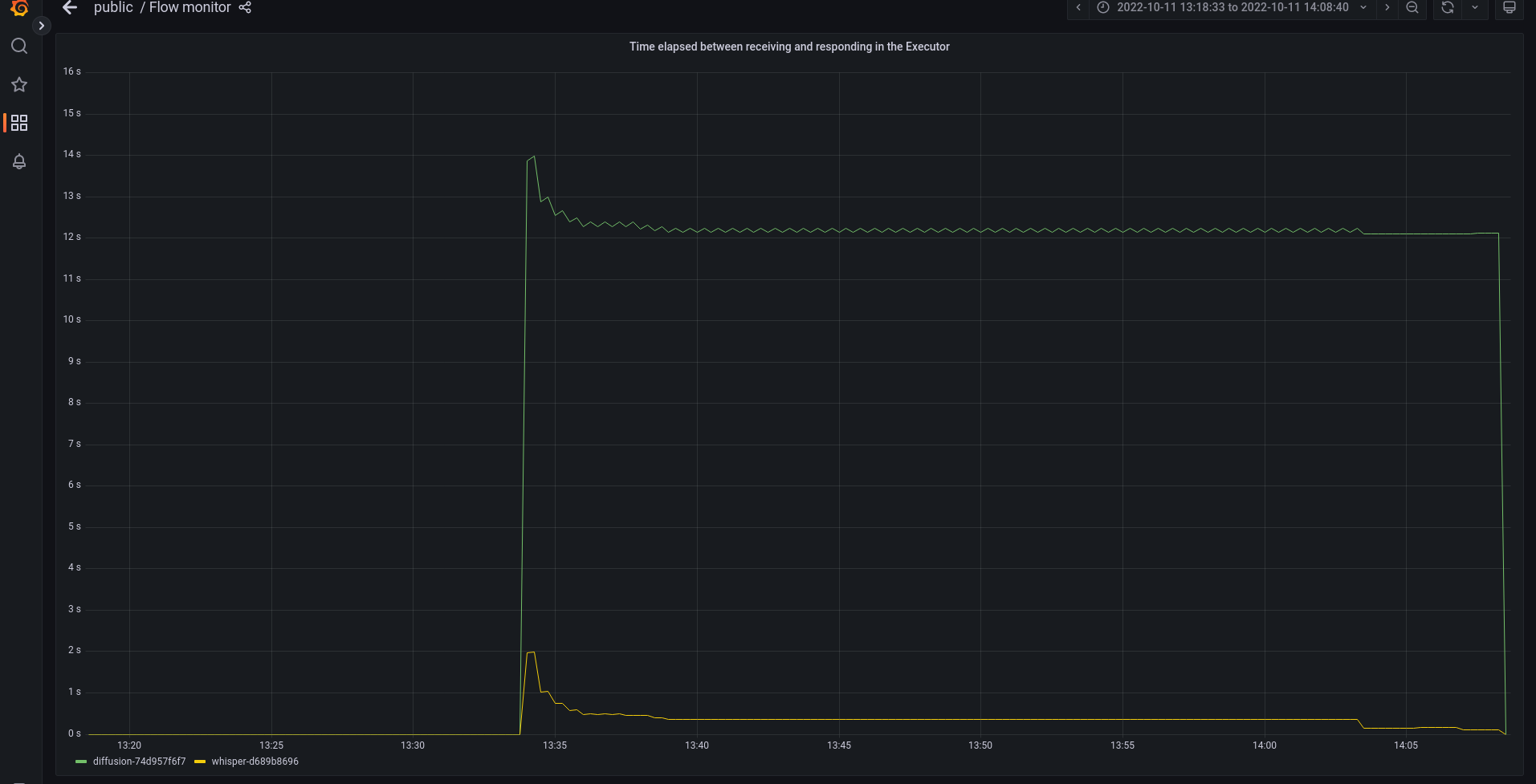

With monitoring enabled (which is the default in JCloud), everything that happens inside the Flow can be monitored on a Grafana dashboard:

One advantage of monitoring is that it can help you optimize your application to make it more resilient in a cost-effective way by detecting bottlenecks. The monitoring shows that the StableDiffusion Executor is a performance bottleneck:

This means that latency would skyrocket under a heavy workload. To get around this, we can replicate the StableDiffusion Executor to split image generation between different machines, thereby increasing efficiency:

or in the YAML file (for JCloud):
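Whichever form the setting takes, the intuition behind replication can be shown with a toy dispatcher: requests for the slow step are spread across N identical replicas, so each replica handles roughly 1/N of the load. Round-robin is an assumption made for this sketch; the load-balancing policy Jina actually applies between replicas may differ, and `RoundRobinDispatcher` and `make_replica` are hypothetical names.

```python
import itertools
from collections import Counter
from typing import Callable, List


class RoundRobinDispatcher:
    """Send each incoming request to the next replica in a fixed cycle."""

    def __init__(self, replicas: List[Callable[[str], str]]) -> None:
        if not replicas:
            raise ValueError("need at least one replica")
        self._replicas = replicas
        self._cycle = itertools.cycle(range(len(replicas)))
        self.load = Counter()  # number of requests served by each replica index

    def __call__(self, prompt: str) -> str:
        idx = next(self._cycle)
        self.load[idx] += 1
        return self._replicas[idx](prompt)


def make_replica(name: str) -> Callable[[str], str]:
    # Stand-in for one StableDiffusion Executor replica running on its own machine.
    return lambda prompt: f"{name} rendered {prompt!r}"


dispatch = RoundRobinDispatcher([make_replica("replica-0"), make_replica("replica-1")])
outputs = [dispatch(f"prompt {i}") for i in range(6)]
print(dict(dispatch.load))  # each replica handled half of the six requests
```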

In this post we created a cloud-native audio-to-image generation tool using state-of-the-art AI models and the Jina AI MLOps platform.

With DocArray we used one data format to handle everything, allowing us to easily stream data back and forth. And with Jina we created a complex serving pipeline natively employing microservices, which we could then easily deploy to Kubernetes.

Since Jina provides a modular architecture, it’s straightforward to apply a similar solution to very different use cases, such as:

- Building a multi-modal PDF neural search engine, where a user can search for matching PDFs using strings or images.

- Building a multi-modal fashion neural search engine, where users can find products based on a string or image.

- Generating 3D models for movie scene design using a string or image as input.

- Generating complex blog posts using GPT-3.

All of these can be done in a manner that is cloud-native, scalable, and simple to deploy with Kubernetes.


Speech-to-speech translation


Speech-to-speech translation (STST or S2ST) is a relatively new spoken language processing task. It involves translating speech from one language into speech in a different language:

STST can be viewed as an extension of the traditional machine translation (MT) task: instead of translating text from one language into another, we translate speech from one language into another. STST has applications in the field of multilingual communication, enabling speakers of different languages to communicate with one another through the medium of speech.

Suppose you want to communicate with another individual across a language barrier. Rather than writing the information that you want to convey and then translating it to text in the target language, you can speak it directly and have an STST system convert your spoken speech into the target language. The recipient can then respond by speaking back at the STST system, and you can listen to their response. This is a more natural way of communicating compared to text-based machine translation.

In this chapter, we’ll explore a cascaded approach to STST, piecing together the knowledge you’ve acquired in Units 5 and 6 of the course. We’ll use a speech translation (ST) system to transcribe the source speech into text in the target language, then text-to-speech (TTS) to generate speech in the target language from the translated text:

We could also have used a three-stage approach, where first we use an automatic speech recognition (ASR) system to transcribe the source speech into text in the same language, then machine translation to translate the transcribed text into the target language, and finally text-to-speech to generate speech in the target language. However, adding more components to the pipeline lends itself to error propagation, where the errors introduced in one system are compounded as they flow through the remaining systems, and it also increases latency, since inference has to be conducted for more models.

While this cascaded approach to STST is pretty straightforward, it results in very effective STST systems. The three-stage cascaded system of ASR + MT + TTS was previously used to power many commercial STST products, including Google Translate. It’s also a very data- and compute-efficient way of developing an STST system, since existing speech recognition and text-to-speech systems can be coupled together to yield a new STST model without any additional training.

In the remainder of this Unit, we’ll focus on creating an STST system that translates speech from any language X to speech in English. The methods covered can be extended to STST systems that translate from any language X to any language Y, but we leave this as an extension to the reader and provide pointers where applicable. We further divide up the task of STST into its two constituent components: ST and TTS. We’ll finish by piecing them together to build a Gradio demo to showcase our system.

Speech translation

We’ll use the Whisper model for our speech translation system, since it’s capable of translating from over 96 languages to English. Specifically, we’ll load the Whisper Base checkpoint, which clocks in at 74M parameters. It’s by no means the most performant Whisper model, with the largest Whisper checkpoint being over 20x larger, but since we’re concatenating two auto-regressive systems together (ST + TTS), we want to ensure each model can generate relatively quickly so that we get reasonable inference speed:

Great! To test our STST system, we’ll load an audio sample in a non-English language. Let’s load the first example of the Italian ( it ) split of the VoxPopuli dataset:

To listen to this sample, we can either play it using the dataset viewer on the Hub: facebook/voxpopuli/viewer

Or play it back in the notebook using IPython's audio display:

Now let’s define a function that takes this audio input and returns the translated text. You’ll remember that we have to pass the generation keyword argument for the "task", setting it to "translate" to ensure that Whisper performs speech translation and not speech recognition:

Whisper can also be ‘tricked’ into translating from speech in any language X to any language Y. Simply set the task to "transcribe" and the "language" to your target language in the generation keyword arguments, e.g. for Spanish, one would set:

```python
generate_kwargs={"task": "transcribe", "language": "es"}
```
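The loading and translation steps described above can be sketched with the 🤗 Transformers `pipeline` API: Whisper Base on a GPU when one is available, and a translate function that sets the generation task. Passing the pipeline into `translate` is a design choice for this sketch so it can be tested without a model download, and the optional `target_language` argument applies the "transcribe + language" trick from the previous paragraph; treat the helper and argument names as illustrative.

```python
def load_whisper(model_id: str = "openai/whisper-base"):
    """Build an ASR pipeline for Whisper Base, placed on the GPU when one is available."""
    # Heavy imports are deferred so this module can be imported without them installed.
    import torch
    from transformers import pipeline

    device = "cuda:0" if torch.cuda.is_available() else "cpu"
    return pipeline("automatic-speech-recognition", model=model_id, device=device)


def translate(audio, asr_pipe, target_language=None, max_new_tokens: int = 256) -> str:
    """Translate speech to English text, or to `target_language` via the transcribe trick."""
    if target_language is None:
        generate_kwargs = {"task": "translate"}  # speech translation into English
    else:
        generate_kwargs = {"task": "transcribe", "language": target_language}
    outputs = asr_pipe(audio, max_new_tokens=max_new_tokens, generate_kwargs=generate_kwargs)
    return outputs["text"]
```

With a loaded pipeline, `translate(sample["audio"].copy(), load_whisper())` would return the English translation of a VoxPopuli sample.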

Great! Let’s quickly check that we get a sensible result from the model:

Alright! If we compare this to the source text:

We see that the translation more or less lines up (you can double check this using Google Translate), barring a few extra words at the start of the transcription where the speaker was finishing off their previous sentence.

With that, we’ve completed the first half of our cascaded STST pipeline, putting into practice the skills we gained in Unit 5 when we learnt how to use the Whisper model for speech recognition and translation. If you want a refresher on any of the steps we covered, have a read through the section on Pre-trained models for ASR from Unit 5.

Text-to-speech

The second half of our cascaded STST system involves mapping from English text to English speech. For this, we’ll use the pre-trained SpeechT5 TTS model for English TTS. 🤗 Transformers currently doesn’t have a TTS pipeline, so we’ll have to use the model directly ourselves. This is no biggie, you’re all experts on using the model for inference following Unit 6!

First, let’s load the SpeechT5 processor, model and vocoder from the pre-trained checkpoint:

As with the Whisper model, we’ll place the SpeechT5 model and vocoder on our GPU accelerator device if we have one:

Great! Let’s load up the speaker embeddings:

We can now write a function that takes a text prompt as input, and generates the corresponding speech. We’ll first pre-process the text input using the SpeechT5 processor, tokenizing the text to get our input ids. We’ll then pass the input ids and speaker embeddings to the SpeechT5 model, placing each on the accelerator device if available. Finally, we’ll return the generated speech, bringing it back to the CPU so that we can play it back in our ipynb notebook:
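A sketch of the loading and synthesis steps just described. The checkpoints `microsoft/speecht5_tts` and `microsoft/speecht5_hifigan`, the x-vector dataset `Matthijs/cmu-arctic-xvectors` and the row index 7306 are assumptions for illustration. The objects are passed into `synthesise` explicitly so the function can be checked without downloading anything.

```python
def load_tts(device: str = "cpu"):
    """Load the SpeechT5 processor, model, vocoder and one speaker embedding (assumed checkpoints)."""
    # Heavy imports are deferred so this module can be imported without them installed.
    import torch
    from datasets import load_dataset
    from transformers import SpeechT5ForTextToSpeech, SpeechT5HifiGan, SpeechT5Processor

    processor = SpeechT5Processor.from_pretrained("microsoft/speecht5_tts")
    model = SpeechT5ForTextToSpeech.from_pretrained("microsoft/speecht5_tts").to(device)
    vocoder = SpeechT5HifiGan.from_pretrained("microsoft/speecht5_hifigan").to(device)
    # Speaker x-vector; the dataset name and row index are assumptions for illustration.
    xvectors = load_dataset("Matthijs/cmu-arctic-xvectors", split="validation")
    speaker_embeddings = torch.tensor(xvectors[7306]["xvector"]).unsqueeze(0)
    return processor, model, vocoder, speaker_embeddings


def synthesise(text, processor, model, vocoder, speaker_embeddings, device: str = "cpu"):
    """Turn English text into a waveform tensor, returned on the CPU for playback."""
    inputs = processor(text=text, return_tensors="pt")  # tokenise the text into input ids
    speech = model.generate_speech(
        inputs["input_ids"].to(device), speaker_embeddings.to(device), vocoder=vocoder
    )
    return speech.cpu()
```

For example, `synthesise("Hello world", *load_tts())` would return a 1-D waveform tensor ready for playback.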

Let’s check it works with a dummy text input:

Sounds good! Now for the exciting part - piecing it all together.

Creating a STST demo

Before we create a Gradio demo to showcase our STST system, let’s first do a quick sanity check to make sure we can concatenate the two models, putting an audio sample in and getting an audio sample out. We’ll do this by chaining the two functions we defined in the previous two sub-sections: we input the source audio and retrieve the translated text, then synthesise the translated text to get the translated speech. Finally, we’ll convert the synthesised speech to an int16 array, which is the output audio format expected by Gradio. To do this, we first scale the floating-point audio array by the dynamic range of the target dtype (int16), and then cast it from floating point to the target dtype (int16):
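A minimal sketch of that chaining and conversion step. The translate and synthesise functions are passed in as callables, the 16 kHz output rate is an assumption matching SpeechT5's output, and the `np.clip` call is an extra safeguard added here (not part of the described recipe) so that samples outside [-1, 1] saturate instead of wrapping around.

```python
import numpy as np

TARGET_DTYPE = np.int16
MAX_RANGE = np.iinfo(TARGET_DTYPE).max  # 32767


def to_int16(speech) -> np.ndarray:
    """Scale float audio in [-1, 1] to int16, clipping anything outside that range."""
    audio = np.clip(np.asarray(speech, dtype=np.float64), -1.0, 1.0)
    return (audio * MAX_RANGE).astype(TARGET_DTYPE)


def speech_to_speech_translation(audio, translate_fn, synthesise_fn, sampling_rate: int = 16000):
    """Cascade: source speech -> translated text -> synthesised speech as (rate, int16 array)."""
    translated_text = translate_fn(audio)
    synthesised_speech = synthesise_fn(translated_text)
    return sampling_rate, to_int16(synthesised_speech)
```

The `(sampling_rate, array)` tuple is the shape a Gradio audio output accepts for NumPy audio.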

Let’s check this concatenated function gives the expected result:

Perfect! Now we’ll wrap this up into a nice Gradio demo so that we can record our source speech using a microphone input or file input and play back the system’s prediction:

This will launch a Gradio demo similar to the one running on the Hugging Face Space:

You can duplicate this demo and adapt it to use a different Whisper checkpoint, a different TTS checkpoint, or relax the constraint of outputting English speech and follow the tips provided for translating into a language of your choice!

Going forwards

While the cascaded system is a compute and data efficient way of building a STST system, it suffers from the issues of error propagation and additive latency described above. Recent works have explored a direct approach to STST, one that does not predict an intermediate text output and instead maps directly from source speech to target speech. These systems are also capable of retaining the speaking characteristics of the source speaker in the target speech (such a prosody, pitch and intonation). If you’re interested in finding out more about these systems, check-out the resources listed in the section on supplemental reading .


TMT: Tri-Modal Translation between Speech, Image, and Text by Processing Different Modalities as Different Languages

Jointly processing multi-modal information is becoming an essential capability. However, the limited amount of paired multi-modal data and the large computational requirements of multi-modal learning hinder progress. We propose a novel Tri-Modal Translation (TMT) model that translates between arbitrary modalities spanning speech, image, and text. We introduce a novel viewpoint in which we interpret different modalities as different languages and treat multi-modal translation as a well-established machine translation problem. To this end, we tokenize speech and image data into discrete tokens, which provide a unified interface across modalities and significantly decrease the computational cost. In the proposed TMT, a multi-modal encoder-decoder conducts the core translation, whereas modality-specific processing is conducted only within the tokenization and detokenization stages. We evaluate the proposed TMT on all six modality translation tasks. TMT consistently outperforms its single-task counterparts, demonstrating that unifying tasks is beneficial not only for practicality but also for performance.

1 Introduction

In the pursuit of artificial general intelligence, seamlessly processing and representing multi-modal information is a crucial prerequisite. Building on extensive research into diverse multi-modal problems, systems capable of processing audio-visual Chen et al. (2021); Hong et al. (2023), audio-text Yang et al. (2023); Wu et al. (2024), and text-visual Ramesh et al. (2021); Li et al. (2023b) modalities are actively emerging. Furthermore, developing a unified model that processes all three modalities (i.e., audio, visual, and text) is drawing attention given its potential for advancing general intelligence Wu et al. (2023); Han et al. (2023).

However, building such a unified model remains challenging for several reasons. The distinct nature of different modalities intrinsically requires multi-modal systems to play two roles: processing modality specifics and translating between modalities. Consequently, the computational load required to train multi-modal models usually exceeds that of uni-modal models, because the model must do both simultaneously.¹ Furthermore, the need for paired multi-modal data is another obstacle, because such data (e.g., speech-image-text triplets) is far less abundant than uni-modal data.

¹ We believe this computational burden is one of the most significant bottlenecks for large multi-modal models, compared to Large Language Models (LLMs), whose size can be scaled and which can be trained efficiently on text-only data.

In this paper, we propose a novel Tri-Modal Translation (TMT) framework that freely translates between different modalities (i.e., speech, image, and text) using a single model. Specifically, we present a novel viewpoint on multi-modal modeling by interpreting different modalities as different languages. We split the Multi-Modal Translation (MMT) problem into modality-specific processing and an integrated translation, where the latter is treated as Neural Machine Translation (NMT). To this end, we discretize all modalities into tokens in advance² by employing pre-trained modality-specific tokenizers. This lets us leverage the expertise of each field while removing modality specifics from the core translation modeling.

² Image and speech are represented as sequences of integers.

The proposed TMT framework and viewpoint offer several benefits. First, the proposed system is “purely multi-modal”: it interfaces all modalities uniformly, unlike methods that map different modalities into the text space of an LLM Wu et al. (2023). We believe such purely multi-modal systems are the future direction for building multi-modal LLMs. Second, discretization significantly relaxes the computational burden of modeling speech and images, reducing the required bits by more than 99% Chang et al. (2023b); Kim et al. (2024a). Finally, we can employ virtually unlimited training data through Back Translation (BT) Sennrich et al. (2016), a well-known data augmentation strategy in NMT.³ Therefore, it becomes possible to use even uni-modal data Deng et al. (2009); Kahn et al. (2020); Fan et al. (2019) in building TMT. With these advantages, we can efficiently scale up multi-modal training, combining computational efficiency with large-scale data.

³ BT typically translates target monolingual data into a source language to construct source-to-target parallel data.

The contributions of this paper can be summarized as follows: 1) To the best of our knowledge, this work is the first to explore translation among three modalities (image, speech, and text) by discretizing all of them. 2) We provide a novel interpretation of modalities as languages. By regarding MMT as NMT, we show that target uni-modal corpora can also be employed through back translation. 3) The proposed TMT encompasses direct speech-to-image synthesis and image-to-speech captioning, neither of which has been well addressed in the previous literature. 4) Through extensive experiments on six MMT tasks, we show that the proposed TMT can be trained efficiently, like text-only systems. The project page is available at github.com/ms-dot-k/TMT.

2 Related Work

NMT aims to transform a sentence in a source language into a sentence in a target language Stahlberg (2020). With advanced network architectures Kenton and Toutanova (2019); Lewis et al. (2020) and training methodologies, including denoising pre-training Ho et al. (2020) and BT Sennrich et al. (2016), the field has developed rapidly. In this work, we interpret discretized speech and images as different languages so that TMT can be addressed in the NMT format. Under this view, NMT techniques, such as back translation and NMT network architectures, are incorporated into TMT.

MMT. Developing models capable of performing multiple MMT tasks is a popular research topic investigated by diverse groups of researchers Kim et al. (2023); Ao et al. (2022); Girdhar et al. (2023). MUGEN Hayes et al. (2022) explored the discretization of video, audio, and text obtained from synthetic game data, and successfully trained multiple MMT models, each capable of a single MMT task. VoxtLM Maiti et al. (2024) proposed a decoder-only architecture to train a model that performs four MMT tasks (2 × 2) spanning speech and text modalities. They discretized speech using pre-trained self-supervised features and treated the discretized speech tokens in the same manner as text tokens within a combined vocabulary. SEED Ge et al. (2023a, b) proposed an image tokenizer using contrastive learning between image and text. With the learned image tokens, they finetuned an LLM spanning text and image modalities, covering two MMT tasks: image captioning and text-to-image synthesis. Unlike VoxtLM and SEED, TMT is not restricted to bi-modal translation and can translate among three modalities.

There also exist a few concurrent works that aim to handle three modalities. NExT-GPT Wu et al. (2023) attempts to adapt different modalities into the text space of a pre-trained LLM by using several modality-specific encoders and adaptors, so its overall performance depends on that of the LLM. Distinct from NExT-GPT, our TMT is “born multi-modal”: it learns from multi-modal data instead of projecting the representations of different modalities into those of a text LLM. Gemini Team et al. (2023) is a recently proposed multi-modal LLM. Unlike Gemini, the proposed TMT can directly synthesize speech. Most significantly, unlike existing work, TMT treats different modalities as different languages by interpreting discretized image and speech data as equivalent to text tokens. Hence, modality specifics are separated, and all six MMT tasks are jointly modeled through a unified interface with fully shared parameters.

Fig. 1 illustrates the overall scheme of the proposed TMT framework. Let $x_i$ be an image, $x_s$ be speech describing the image, and $x_t$ be the transcription of the speech. The main objective is to build a single model that freely translates between the different modalities (i.e., $x_t$, $x_s$, and $x_i$). To this end, we first tokenize image, speech, and text data into discrete tokens (Fig. 1a and §3.1). The vocabulary of TMT is the union of the three modality-specific vocabularies. We then treat discretized tokens from different modalities as different languages and train the TMT model as we would an NMT model (§3.2). The proposed TMT can translate among the three modalities, covering all six MMT tasks (§3.3).
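One simple way to realise a union vocabulary is to give each modality a disjoint block of integer ids. The sketch below assumes that offset scheme and uses illustrative vocabulary sizes (30,522 for a BERT-style text vocabulary, plus assumed sizes of 8,192 image codes and 1,000 speech units); neither the scheme nor the sizes are taken from the paper.

```python
from typing import Dict, List, Tuple

# Hypothetical per-modality vocabulary sizes, for illustration only.
VOCAB_SIZES: Dict[str, int] = {"text": 30522, "image": 8192, "speech": 1000}
ORDER = ["text", "image", "speech"]


def build_offsets(sizes: Dict[str, int], order: List[str]) -> Dict[str, int]:
    """Assign each modality a disjoint block of ids in the shared vocabulary."""
    offsets, start = {}, 0
    for name in order:
        offsets[name] = start
        start += sizes[name]
    return offsets


OFFSETS = build_offsets(VOCAB_SIZES, ORDER)
TOTAL_VOCAB = sum(VOCAB_SIZES.values())


def to_shared(tokens: List[int], modality: str) -> List[int]:
    """Map modality-local token ids into the unified vocabulary."""
    size = VOCAB_SIZES[modality]
    assert all(0 <= t < size for t in tokens), "token id out of range"
    return [t + OFFSETS[modality] for t in tokens]


def from_shared(token: int) -> Tuple[str, int]:
    """Recover (modality, local id) from a unified id."""
    for name in ORDER:
        if OFFSETS[name] <= token < OFFSETS[name] + VOCAB_SIZES[name]:
            return name, token - OFFSETS[name]
    raise ValueError(f"id {token} outside the unified vocabulary")


print(to_shared([0, 5], "speech"))  # [38714, 38719]
```

Because the blocks do not overlap, every unified id decodes back to exactly one modality, which is what lets a single embedding table and output layer serve all three modalities.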

3.1 Individual tokenization of each modality

Discretized representations have demonstrated promising results in various research fields. In image processing, Vector Quantization (VQ) Van Den Oord et al. (2017); Esser et al. (2021); Ge et al. (2023a, b) has successfully expressed images using discrete tokens. In speech processing, VQ Défossez et al. (2022); Wang et al. (2023) and discrete tokens Lakhotia et al. (2021) derived from Self-Supervised Learning (SSL) models Baevski et al. (2020); Hsu et al. (2021a); Chen et al. (2022) have shown remarkable performance across different tasks (e.g., speech translation Lee et al. (2022b); Popuri et al. (2022); Choi et al. (2023) and synthesis Polyak et al. (2021); Hayashi and Watanabe (2020)).

Motivated by this recent success, we tokenize the image, speech, and text modalities. Specifically, as shown in Fig. 1a, the raw image is tokenized into image tokens $u_i \in \mathbb{N}^{L_i}$ using the SEED-2 tokenizer Ge et al. (2023b), which compresses each image into 32 tokens (i.e., $L_i = 32$). Speech is likewise tokenized into discrete values $u_s \in \mathbb{N}^{L_s}$ by clustering the features of HuBERT Hsu et al. (2021a), an SSL speech model whose output frame rate is 50 Hz. Following the practice of Lakhotia et al. (2021), we merge consecutive duplicate tokens so that the rate of the speech token sequence $u_s$ falls below 50 Hz. For the text input, we employ the BERT tokenizer Schuster and Nakajima (2012); Kenton and Toutanova (2019) to derive text tokens $u_t \in \mathbb{N}^{L_t}$.
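The speech tokenization step can be sketched as nearest-centroid assignment followed by merging consecutive repeats. This assumes the centroids come from k-means fitted on HuBERT features; the 2-D toy frames and three centroids below are hypothetical stand-ins for real 768-dimensional HuBERT base features and the actual cluster count, which is not stated here.

```python
from itertools import groupby

import numpy as np


def quantize(features: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    """Assign each frame (T, D) to its nearest centroid (K, D) by Euclidean distance."""
    # Squared distances between every frame and every centroid: shape (T, K).
    dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)


def merge_duplicates(units):
    """Collapse runs of identical consecutive units, e.g. 5 5 5 2 2 -> 5 2."""
    return [unit for unit, _ in groupby(units)]


# Toy "k-means" centroids and 6 frames of "HuBERT features" (hypothetical values).
centroids = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
frames = np.array([[0.1, 0.0], [0.0, 0.2], [0.9, 1.1],
                   [5.2, 4.9], [4.8, 5.0], [0.0, 0.1]])

units = quantize(frames, centroids).tolist()
print(units)                    # [0, 0, 1, 2, 2, 0]
print(merge_duplicates(units))  # [0, 1, 2, 0]
```

Only consecutive repeats are merged, so a unit that reappears later (the final `0`) is kept. This is why the merged sequence is shorter than the 50 Hz frame sequence but still preserves the order of the spoken content.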

Advantages of tokenizing tri-modalities. Tokenizing all three modalities brings two main advantages. First, the MMT problem now has a unified interface: modality specifics are handled mostly within the tokenization and detokenization stages.

The other key advantage is efficiency Chang et al. (2023b); Park et al. (2023). Processing raw images and audio is burdensome because it requires large-scale data storage and computational resources Kim et al. (2024b). Discretizing speech and images reduces the required bits to less than 0.2% and 0.04% of the raw data, respectively, as shown in Table 1 and appendix F. Therefore, by tokenizing all modalities before training, we can significantly improve efficiency in both storage and computing memory.
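As a rough back-of-the-envelope check of these ratios, the arithmetic below compares raw bit counts with token bit counts at $\log_2(\text{vocabulary size})$ bits per token. The raw formats (16 kHz, 16-bit mono PCM; 224 × 224 RGB at 8 bits per channel) and the vocabulary sizes (1,000 speech units, 8,192 image codes) are assumptions for illustration. The paper's exact settings are in Table 1 and appendix F.

```python
import math

# Speech: raw 16 kHz, 16-bit mono PCM vs. at most 50 tokens/s (before merging).
raw_speech_bits_per_s = 16_000 * 16                   # 256,000 bits/s
speech_vocab = 1000                                    # assumed number of clusters
token_speech_bits_per_s = 50 * math.log2(speech_vocab)
speech_ratio = token_speech_bits_per_s / raw_speech_bits_per_s

# Image: 224x224 RGB at 8 bits/channel vs. 32 tokens from an assumed 8192-entry codebook.
raw_image_bits = 224 * 224 * 3 * 8                     # 1,204,224 bits
image_vocab = 8192
token_image_bits = 32 * math.log2(image_vocab)         # 32 * 13 = 416 bits
image_ratio = token_image_bits / raw_image_bits

print(f"speech: {speech_ratio:.3%}")  # speech: 0.195%
print(f"image:  {image_ratio:.4%}")   # image:  0.0345%
```

Under these assumptions, both ratios fall under the stated bounds. Merging duplicate speech tokens lowers the speech figure further, since fewer than 50 tokens per second remain.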

3.2 Integrated modeling of tri-modalities

As shown in Fig. 1b, we adopt as the backbone a multi-modal encoder-decoder architecture Vaswani et al. (2017); Lewis et al. (2020); Liu et al. (2020), widely used in the NMT literature. Therefore, all parameters are shared across the six MMT tasks. A standard auto-regressive training scheme is employed. To inform both the encoder and the decoder about the input and output modalities, we add a modal-type embedding to the embedded features after the token embedding layer, similar to language embeddings Conneau and Lample (2019). The losses for each MMT task are then summed and backpropagated. Formally, the objective function of TMT training can be expressed as:

$$\mathcal{L} = -\sum_{k \in \mathcal{M}} \; \sum_{m \in \mathcal{M} \setminus \{k\}} \; \sum_{l=1}^{L_m} \log p\left(u_m^{l} \mid u_m^{<l}, u_k\right),$$
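The modal-type embedding can be sketched as a second lookup table, indexed by modality, whose vector is added to every token embedding in the sequence, analogous to XLM's language embeddings. The sizes, random initialisation, and modality indices below are hypothetical, and positional embeddings are omitted for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

VOCAB_SIZE = 39714   # hypothetical size of the unified vocabulary
D_MODEL = 16         # toy hidden size for illustration
MODALITIES = {"image": 0, "speech": 1, "text": 2}

# In a real model these tables are learned; here they are random placeholders.
token_embedding = rng.normal(size=(VOCAB_SIZE, D_MODEL))
modal_type_embedding = rng.normal(size=(len(MODALITIES), D_MODEL))


def embed(token_ids, modality: str) -> np.ndarray:
    """Token embedding plus a vector marking the sequence's modality."""
    tok = token_embedding[np.asarray(token_ids)]       # (L, D)
    mod = modal_type_embedding[MODALITIES[modality]]   # (D,)
    return tok + mod                                   # broadcast over L


x = embed([5, 17, 42], "speech")
print(x.shape)  # (3, 16)
```

The same token table serves all modalities; only the added modality vector tells the encoder (for the source) or decoder (for the target) which "language" it is reading or should produce.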

where $k$ and $m$ refer to a pair of distinct modalities, $\mathcal{M} = \{i, s, t\}$ is the set of the three modalities, and $u^{<l}$ represents the predictions before the $l$-th frame.

3.3 MMT tasks covered by TMT

TMT incorporates all six MMT tasks ($_{3}\mathbf{P}_{2} = 6$) spanning different combinations of input and output modalities. Related works for each MMT task can be found in appendix E.
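The count $_{3}\mathbf{P}_{2} = 3!/(3-2)! = 6$ corresponds to the ordered (source, target) pairs of distinct modalities, which can be enumerated directly:

```python
from itertools import permutations
from math import perm

MODALITIES = ["image", "speech", "text"]

# Ordered (source, target) pairs with source != target.
tasks = list(permutations(MODALITIES, 2))
assert len(tasks) == perm(3, 2) == 6

for src, tgt in tasks:
    print(f"{src} -> {tgt}")
```

Order matters because image-to-speech captioning and speech-to-image synthesis are different tasks, which is why this is a permutation rather than a combination (which would give only 3).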

Image Captioning Xu et al. (2015) is a task of describing input image content using natural language. A comprehensive understanding of the input image and the naturalness of the output text are important.

Image-to-Speech Captioning Hsu et al. (2021b) is a task that directly synthesizes a spoken description of the input image. This task is used for the image-to-speech translation evaluation of TMT. It is regarded as a challenging task due to insufficient supervision and the complex nature of speech.

Text-driven Image Synthesis Ramesh et al. (2021, 2022); Saharia et al. (2022); Chang et al. (2023a) aims to synthesize an image that matches a given text description. Both the naturalness of the generated image and its correctness with respect to the description are important.

Speech-driven Image Synthesis generates an image from a spoken description Li et al. (2020); Wang et al. (2020). The task is equivalent to solving automatic speech recognition (ASR) and text-driven image synthesis at once, without an intermediate text representation. As with the relationship between image captioning and image-to-speech captioning, further challenges arise, mainly due to the absence of the text modality. This task remains crucial for languages that do not have writing systems Lee et al. (2022b), where text-based systems cannot be applied. Note that this paper is the first to synthesize high-resolution images of 768 × 768 from speech.

ASR is a well-established task that generates text transcriptions from input speech Amodei et al. (2016); Prabhavalkar et al. (2023). We use this task for the speech-to-text translation of TMT.

Text-to-Speech Synthesis (TTS) generates spoken content corresponding to the given text transcription Wang et al. (2017); Tan (2023).

3.4 Back Translation (BT)

We investigate whether applying BT to the MMT problem, based on our interpretation, can help improve performance even when using uni-modal databases. The TMT model is first pre-trained on approximately 10M paired tri-modal samples (detailed in §4.1) to produce an intermediate model. Then, with the intermediate model, we apply BT to a uni-modal image dataset, ImageNet Deng et al. (2009), to produce pseudo source modalities of speech and text. This results in 2.6M text-image and speech-image pairs. We also apply BT to a speech-text bi-modal dataset, CommonVoice Ardila et al. (2020), and produce an additional 2M image-speech and image-text pairs. By merging the pseudo data with the original tri-modal data pairs, we further train the intermediate TMT model and derive the final TMT model.
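The back-translation step can be sketched as follows: the real target-side data is kept, and the intermediate model generates the missing source modalities. `translate` stands in for the intermediate TMT model. Its signature and the dummy that simply reverses tokens are hypothetical and serve only to make the data flow runnable.

```python
from typing import Callable, List, Tuple

Tokens = List[int]
# Hypothetical signature: translate(tokens, source_modality, target_modality) -> tokens
Translate = Callable[[Tokens, str, str], Tokens]


def back_translate(
    monolingual: List[Tokens],
    target_modality: str,
    pseudo_sources: List[str],
    translate: Translate,
) -> List[Tuple[str, Tokens, str, Tokens]]:
    """Create (source_mod, pseudo_source, target_mod, real_target) training pairs.

    The real data stays on the target side; only the source side is synthetic.
    """
    pairs = []
    for target in monolingual:
        for src_mod in pseudo_sources:
            # Translate "backwards": from the real target into a pseudo source.
            pseudo_src = translate(target, target_modality, src_mod)
            pairs.append((src_mod, pseudo_src, target_modality, target))
    return pairs


def dummy_translate(tokens: Tokens, src: str, tgt: str) -> Tokens:
    # Stand-in for the intermediate TMT model: just reverses the tokens.
    return list(reversed(tokens))


image_only = [[3, 1, 4], [1, 5, 9]]  # toy "image token" sequences (e.g. from ImageNet)
pairs = back_translate(image_only, "image", ["speech", "text"], dummy_translate)
print(len(pairs))  # 4
print(pairs[0])    # ('speech', [4, 1, 3], 'image', [3, 1, 4])
```

Keeping the real data on the target side means the decoder is always trained to produce genuine images (or speech/text), while any errors of the intermediate model only affect the inputs.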

4 Experiments

4.1 Datasets

The training data comprise Conceptual Captions 3M (CC3M), Conceptual Captions 12M (CC12M) Sharma et al. (2018); Changpinyo et al. (2021), COCO Lin et al. (2014), SpokenCOCO Hsu et al. (2021b), Flickr8k Hodosh et al. (2013), and Flickr8kAudio Harwath and Glass (2015). For COCO and Flickr8k, we use the original corpora for image-text pairs and further employ SpokenCOCO and Flickr8kAudio, their spoken counterparts, to form audio-text-image tri-modal pairs. For CC3M and CC12M, VITS Kim et al. (2021a), a TTS model trained on VCTK Yamagishi et al. (2019), is employed to synthesize speech with randomly selected speakers to compile audio-text-image pairs. Evaluation is performed on the test splits of COCO and Flickr8k after finetuning, using the popular Karpathy splits Karpathy and Fei-Fei (2015).

4.2 Metrics

We leverage diverse metrics to evaluate six MMT tasks spanning three output modalities.

Captioning tasks. For the image captioning and image-to-speech captioning tasks, we employ BLEU Papineni et al. (2002), METEOR Denkowski and Lavie (2014), ROUGE Lin (2004), CIDEr Vedantam et al. (2015), and SPICE Anderson et al. (2016). BLEU, METEOR, ROUGE, and CIDEr measure n-gram overlap between predictions and references, while SPICE compares semantic propositional content. For all metrics, a higher value indicates better performance.
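For intuition about the n-gram overlap these metrics rely on, here is a sketch of BLEU's clipped (modified) n-gram precision. It is only one component of BLEU (there is no brevity penalty and no geometric averaging over n) and is not a replacement for the standard evaluation toolkits used to report the numbers.

```python
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: List[str], references: List[List[str]], n: int) -> float:
    """BLEU-style modified n-gram precision: each candidate n-gram is credited at
    most as many times as it appears in any single reference."""
    cand = ngrams(candidate, n)
    if not cand:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    overlap = sum(min(count, max_ref[gram]) for gram, count in cand.items())
    return overlap / sum(cand.values())


cand = "a dog runs across the grass".split()
refs = ["a dog is running on the grass".split(), "the dog runs on grass".split()]
print(clipped_precision(cand, refs, 1))  # 5/6: only "across" is unmatched
print(clipped_precision(cand, refs, 2))  # 0.6: 3 of 5 bigrams matched
```

Clipping prevents a degenerate caption such as "the the the" from scoring a perfect unigram precision just because "the" appears in a reference.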

Image synthesis tasks. For the text- and speech-driven image synthesis tasks, we adopt the CLIP score Radford et al. (2021) (i.e., the cosine similarity) to assess how well the generated image matches the input text or speech, following Ge et al. (2023a); Koh et al. (2023).
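The following is a minimal sketch of the cosine-similarity computation. It assumes the image and text embeddings have already been produced by CLIP's encoders (not shown). Scaling by 100 is an assumed reporting convention. The exact protocol follows Ge et al. (2023a) and Koh et al. (2023).

```python
import numpy as np


def clip_score(image_emb: np.ndarray, text_emb: np.ndarray, scale: float = 100.0) -> float:
    """Cosine similarity between two embeddings, scaled (assumed x100) for reporting."""
    image_emb = image_emb / np.linalg.norm(image_emb)
    text_emb = text_emb / np.linalg.norm(text_emb)
    return float(scale * np.dot(image_emb, text_emb))


# Toy 2-D vectors standing in for real CLIP embeddings.
print(clip_score(np.array([1.0, 0.0]), np.array([1.0, 1.0])))  # ≈ 70.71
```

Normalising both vectors first makes the score depend only on their direction, so it measures semantic alignment rather than embedding magnitude.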

ASR and TTS. We employ the widely used Word Error Rate (WER) for ASR and TTS. For TTS, we transcribe the generated speech using a pre-trained ASR model Baevski et al. (2020), following Lakhotia et al. (2021); Kim et al. (2023). Additionally, we employ Mean Opinion Score (MOS) and neural MOS to assess the quality of the generated results.
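WER is the word-level edit distance between hypothesis and reference divided by the number of reference words. A standard dynamic-programming sketch follows. For TTS, `hypothesis` would be the ASR transcript of the generated speech. Text normalisation (casing, punctuation), which affects reported WER, is not handled here.

```python
from typing import List


def wer(reference: List[str], hypothesis: List[str]) -> float:
    """Word Error Rate = (substitutions + deletions + insertions) / len(reference),
    computed with a word-level Levenshtein distance."""
    r, h = len(reference), len(hypothesis)
    # dist[i][j] = edit distance between reference[:i] and hypothesis[:j]
    dist = [[0] * (h + 1) for _ in range(r + 1)]
    for i in range(r + 1):
        dist[i][0] = i  # i deletions
    for j in range(h + 1):
        dist[0][j] = j  # j insertions
    for i in range(1, r + 1):
        for j in range(1, h + 1):
            sub = 0 if reference[i - 1] == hypothesis[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,        # deletion
                dist[i][j - 1] + 1,        # insertion
                dist[i - 1][j - 1] + sub,  # substitution or match
            )
    return dist[r][h] / max(r, 1)  # max() avoids division by zero for empty refs


ref = "a man riding a horse on the beach".split()
hyp = "a man riding horse on a beach".split()
print(wer(ref, hyp))  # 0.25: one deletion ("a") and one substitution ("the" -> "a")
```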

4.3 Implementation Details

The input image is resized to 224 × 224, while the output image size is 768 × 768. The input audio is resampled to 16 kHz and tokenized using a pre-trained HuBERT base model Hsu et al. (2021a). The backbone that models the multi-modal information is an encoder-decoder Transformer Vaswani et al. (2017) with 12 layers in each of the encoder and the decoder. For pre-training TMT before applying BT, we train the model for 350k steps. We then further train the model on the additional data obtained by BT for 250k steps, with the same configuration but a peak learning rate of $5 \times 10^{-5}$. For decoding, we use beam search with a beam width of 5. Full implementation details, including hyper-parameters, can be found in appendix B.
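Beam search keeps the `beam_width` highest-scoring partial sequences at each decoding step instead of committing to the single best token. The sketch below is a generic version without length normalisation. `next_log_probs` is a hypothetical interface to the decoder, and the toy model is contrived so that beam search (width 2) finds a better sequence than greedy decoding (width 1). Any decoding settings beyond the beam width of 5 are described in appendix B, not here.

```python
import math
from typing import Callable, Dict, Tuple

# Hypothetical decoder interface: prefix -> {next_token: log_prob}
NextLogProbs = Callable[[Tuple[int, ...]], Dict[int, float]]


def beam_search(next_log_probs: NextLogProbs, bos: int, eos: int,
                beam_width: int = 5, max_len: int = 20) -> Tuple[Tuple[int, ...], float]:
    """Return the highest-scoring sequence (by summed log-prob) found by beam search."""
    beams = [((bos,), 0.0)]  # (prefix, cumulative log-prob)
    finished = []
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            for tok, lp in next_log_probs(prefix).items():
                candidates.append((prefix + (tok,), score + lp))
        if not candidates:
            break
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:beam_width]:
            if prefix[-1] == eos:
                finished.append((prefix, score))  # hypothesis is complete
            else:
                beams.append((prefix, score))
        if not beams:
            break
    # Unfinished beams are also considered if max_len is reached.
    return max(finished + beams, key=lambda c: c[1])


def toy_model(prefix: Tuple[int, ...]) -> Dict[int, float]:
    # Greedy picks token 1 first (p=0.6), but the path through 2 is better overall.
    table = {
        (0,): {1: math.log(0.6), 2: math.log(0.4)},
        (0, 1): {3: math.log(0.5), 4: math.log(0.5)},
        (0, 2): {3: math.log(0.9), 4: math.log(0.1)},
    }
    return table.get(prefix, {9: 0.0})  # any other prefix -> EOS (token 9)


best, score = beam_search(toy_model, bos=0, eos=9, beam_width=2)
print(best)  # (0, 2, 3, 9)
```

With `beam_width=1` the same call reduces to greedy decoding and returns `(0, 1, 3, 9)` (probability 0.30 instead of 0.36), which illustrates why a wider beam can help.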

4.4 Results

First, we present selected samples for each task of TMT in Fig. 2. We then compare single-task MMT models with the proposed TMT model. Next, we compare TMT with other works in the literature on each MMT task. Finally, we conduct a human subjective evaluation on two speech generation tasks: image-to-speech captioning and TTS. Further ablations on the discrete token vocabulary size and the image tokenizer are presented in appendix C.

4.4.1 Effectiveness of Unifying Tasks

We start with the question: “Can training a single model capable of multiple MMT tasks yield synergy in performance, on top of efficiency, compared to training multiple single-task MMT models?” We train six single-task MMT models and one TMT model using the training sets of COCO and Flickr8k. For fairness, all models are trained for 300k iterations.

Table 2 compares captioning performance, including image-to-text and image-to-speech translation. The results show that unifying tasks yields consistent performance gains. We attribute this to the unified multi-modal decoder, which can leverage complementary multi-modal information for language modeling. Table 3 shows the comparison results for the remaining four tasks. As with captioning, the unified TMT model consistently outperforms its single-task counterparts, except for text-to-image translation. However, the performance difference between the single-task MMT model and TMT in text-to-image translation is marginal, while the gain obtained by unifying tasks is substantial for speech-to-image translation. We conclude that unifying tasks is beneficial not only for practicality, since a single model is built, but also for performance.

4.4.2 Comparison with the literature

We compare TMT’s performance on each MMT task with other works in the literature. The TMT model in this section is trained on 10M samples.

Image Captioning Tasks. Table 4 shows the image-to-text translation results of TMT and other competing systems. We also report the performance of raw-image-based systems for reference. TMT achieves performance comparable to state-of-the-art discrete-image-based models. Notably, on COCO, TMT is competitive with the LLM-based method Ge et al. (2023a) while using far fewer parameters: Ge et al. (2023a) is built on the OPT 2.7B model Zhang et al. (2022), whereas TMT is a 270M-parameter multi-modal model. This shows the effectiveness of TMT compared with finetuning an LLM. Moreover, TMT can perform six MMT tasks, while previous methods can perform at most two.

Table 5 shows direct image-to-speech captioning performance. TMT outperforms the recently proposed state-of-the-art method Kim et al. (2024a) on both the COCO and Flickr8k databases and even achieves performance comparable to raw-image-based methods. Because the decoder of TMT is trained on multiple tasks, including text generation, simultaneously, it models language better than Kim et al. (2024a), which relies on transfer learning from text.

Image Synthesis Tasks. The upper part of Table 6 shows the performance of text- and speech-driven image synthesis. Following the literature, we evaluate text-to-image synthesis on Flickr30k instead of Flickr8k. TMT achieves the best CLIP similarity on both databases in text-to-image translation, indicating that it generates the images that best describe the given text. Note that TMT has far fewer parameters (i.e., 270M) than Koh et al. (2023) and Ge et al. (2023a), which are based on the LLMs OPT 6.7B and OPT 2.7B, respectively. TMT nonetheless outperforms these competitors while covering more MMT tasks. Also, the speech-to-image translation performance of TMT on COCO is 68.88, close to its text-to-image performance. We therefore confirm that, even when using speech directly as input, TMT correctly generates the images described in the speech. We emphasize that this work is the first to explore high-resolution image synthesis at 768 × 768 from speech. Additional samples beyond those in Fig. 2 are provided in appendix A.

ASR and TTS Tasks. The lower part of Table 6 shows ASR and TTS performance. For reference, we compare ASR performance with a pre-trained Wav2Vec2.0 (W2V2) Baevski et al. (2020) model. The results show that TMT correctly transcribes the input speech, achieving a lower WER than W2V2. For TTS, since the proposed model is a discrete-token-based speech synthesis model, we compare its performance with speech resynthesized (ReSyn) from the ground-truth speech tokens, following Lakhotia et al. (2021); Kim et al. (2023). The results demonstrate that TMT can generate correct speech from the input text without reproducing the background noise that inherently exists in the ground-truth speech tokens.

4.4.3 Mean Opinion Score Comparisons

To assess the quality of the generated speech in both the image-to-speech captioning and text-to-speech tasks, we conduct a human subjective study and a neural MOS test using MOSNet Lo et al. (2018) and SpeechLMScore Maiti et al. (2023). Specifically, we enlisted 21 participants to evaluate the naturalness of the generated speech for both tasks; for image-to-speech captioning, we also assessed descriptiveness, i.e., how accurately the speech describes the input image. Following previous work Hsu et al. (2021b); Kim et al. (2024a), 20 samples are used for the image-to-speech captioning task. For TTS, 20 and 100 samples are used for the human study and neural MOS, respectively. The results are shown in Table 7. TMT achieves the best human and neural MOS scores in image-to-speech captioning. Moreover, it achieves performance comparable to VITS on the TTS task. The MOS evaluation confirms the effectiveness of TMT in generating intelligible and natural speech.

4.4.4 Effectiveness of Back Translation

Table 8 shows the results of training TMT with and without BT. With an additional 4M back-translated samples, we observe improvements that are not large but are consistent across TMT's tasks. This suggests potential for further improvement, since the amount of additional data BT can produce is, in principle, limitless. Appendix D shows examples of back-translated data for both uni-modal and bi-modal data.

5 Conclusion

We introduced TMT, a novel tri-modal translation model between the speech, image, and text modalities. We interpreted different modalities as new languages and approached the MMT task as an NMT task after tokenizing all modalities. Our experiments demonstrated that the three modalities can be successfully translated using a multi-modal encoder-decoder architecture that incorporates six MMT tasks in a single model. TMT outperformed its single-task MMT counterparts. Remarkably, TMT achieves performance comparable to LLM-based methods with more than 2.7B parameters, whereas TMT has only 270M parameters.

- Amodei et al. (2016) Dario Amodei, Sundaram Ananthanarayanan, Rishita Anubhai, Jingliang Bai, Eric Battenberg, Carl Case, Jared Casper, Bryan Catanzaro, Qiang Cheng, Guoliang Chen, et al. 2016. Deep Speech 2: End-to-end speech recognition in English and Mandarin. In International Conference on Machine Learning, pages 173–182. PMLR.

- Anderson et al. (2016) Peter Anderson, Basura Fernando, Mark Johnson, and Stephen Gould. 2016. SPICE: Semantic propositional image caption evaluation. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part V, pages 382–398. Springer.

- Ao et al. (2022) Junyi Ao, Rui Wang, Long Zhou, Chengyi Wang, Shuo Ren, Yu Wu, Shujie Liu, Tom Ko, Qing Li, Yu Zhang, et al. 2022. SpeechT5: Unified-modal encoder-decoder pre-training for spoken language processing. In Proc. ACL, pages 5723–5738.

- Ardila et al. (2020) Rosana Ardila, Megan Branson, Kelly Davis, Michael Kohler, Josh Meyer, Michael Henretty, Reuben Morais, Lindsay Saunders, Francis Tyers, and Gregor Weber. 2020. Common Voice: A massively-multilingual speech corpus. In Proceedings of the Twelfth Language Resources and Evaluation Conference, pages 4218–4222.

- Baevski et al. (2020) Alexei Baevski, Yuhao Zhou, Abdelrahman Mohamed, and Michael Auli. 2020. wav2vec 2.0: A framework for self-supervised learning of speech representations. Advances in Neural Information Processing Systems, 33:12449–12460.

- Chang et al. (2023a) Huiwen Chang, Han Zhang, Jarred Barber, AJ Maschinot, Jose Lezama, Lu Jiang, Ming-Hsuan Yang, Kevin Murphy, William T Freeman, Michael Rubinstein, et al. 2023a. Muse: Text-to-image generation via masked generative transformers. arXiv preprint arXiv:2301.00704.

- Chang et al. (2023b) Xuankai Chang, Brian Yan, Yuya Fujita, Takashi Maekaku, and Shinji Watanabe. 2023b. Exploration of efficient end-to-end ASR using discretized input from self-supervised learning. In Proc. Interspeech.

- Changpinyo et al. (2021) Soravit Changpinyo, Piyush Sharma, Nan Ding, and Radu Soricut. 2021. Conceptual 12M: Pushing web-scale image-text pre-training to recognize long-tail visual concepts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3558–3568.

- Chen et al. (2021) Honglie Chen, Weidi Xie, Triantafyllos Afouras, Arsha Nagrani, Andrea Vedaldi, and Andrew Zisserman. 2021. Localizing visual sounds the hard way. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16867–16876.

- Chen et al. (2022) Sanyuan Chen, Chengyi Wang, Zhengyang Chen, Yu Wu, Shujie Liu, Zhuo Chen, Jinyu Li, Naoyuki Kanda, Takuya Yoshioka, Xiong Xiao, et al. 2022. WavLM: Large-scale self-supervised pre-training for full stack speech processing. IEEE Journal of Selected Topics in Signal Processing, 16(6):1505–1518.

- Choi et al. (2023) Jeongsoo Choi, Se Jin Park, Minsu Kim, and Yong Man Ro. 2023. AV2AV: Direct audio-visual speech to audio-visual speech translation with unified audio-visual speech representation. arXiv preprint arXiv:2312.02512.

- Conneau and Lample (2019) Alexis Conneau and Guillaume Lample. 2019. Cross-lingual language model pretraining. Advances in Neural Information Processing Systems, 32.

- Défossez et al. (2022) Alexandre Défossez, Jade Copet, Gabriel Synnaeve, and Yossi Adi. 2022. High fidelity neural audio compression. arXiv preprint arXiv:2210.13438.

- Deng et al. (2009) Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. 2009. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 248–255. IEEE.

- Denkowski and Lavie (2014) Michael Denkowski and Alon Lavie. 2014. Meteor Universal: Language specific translation evaluation for any target language. In Proceedings of the Ninth Workshop on Statistical Machine Translation, pages 376–380.

- Effendi et al. (2021) Johanes Effendi, Sakriani Sakti, and Satoshi Nakamura. 2021. End-to-end image-to-speech generation for untranscribed unknown languages. IEEE Access, 9:55144–55154.

- Esser et al. (2021) Patrick Esser, Robin Rombach, and Bjorn Ommer. 2021. Taming transformers for high-resolution image synthesis. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition , pages 12873–12883.

- Fan et al. (2019) Angela Fan, Yacine Jernite, Ethan Perez, David Grangier, Jason Weston, and Michael Auli. 2019. Eli5: Long form question answering. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics , pages 3558–3567.

- Ge et al. (2023a) Yuying Ge, Yixiao Ge, Ziyun Zeng, Xintao Wang, and Ying Shan. 2023a. Planting a SEED of vision in large language model. arXiv preprint arXiv:2307.08041.

- Ge et al. (2023b) Yuying Ge, Sijie Zhao, Ziyun Zeng, Yixiao Ge, Chen Li, Xintao Wang, and Ying Shan. 2023b. Making LLaMA SEE and draw with SEED tokenizer. arXiv preprint arXiv:2310.01218.

- Girdhar et al. (2023) Rohit Girdhar, Alaaeldin El-Nouby, Zhuang Liu, Mannat Singh, Kalyan Vasudev Alwala, Armand Joulin, and Ishan Misra. 2023. ImageBind: One embedding space to bind them all. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 15180–15190.

- Han et al. (2023) Jiaming Han, Renrui Zhang, Wenqi Shao, Peng Gao, Peng Xu, Han Xiao, Kaipeng Zhang, Chris Liu, Song Wen, Ziyu Guo, et al. 2023. ImageBind-LLM: Multi-modality instruction tuning. arXiv preprint arXiv:2309.03905.

- Harwath and Glass (2015) David Harwath and James Glass. 2015. Deep multimodal semantic embeddings for speech and images. In 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), pages 237–244. IEEE.

- Hayashi and Watanabe (2020) Tomoki Hayashi and Shinji Watanabe. 2020. DiscreTalk: Text-to-speech as a machine translation problem. arXiv preprint arXiv:2005.05525.

- Hayes et al. (2022) Thomas Hayes, Songyang Zhang, Xi Yin, Guan Pang, Sasha Sheng, Harry Yang, Songwei Ge, Qiyuan Hu, and Devi Parikh. 2022. MUGEN: A playground for video-audio-text multimodal understanding and generation. In European Conference on Computer Vision, pages 431–449.

- Ho et al. (2020) Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33:6840–6851.

- Hodosh et al. (2013) Micah Hodosh, Peter Young, and Julia Hockenmaier. 2013. Framing image description as a ranking task: Data, models and evaluation metrics. Journal of Artificial Intelligence Research, 47:853–899.

- Hong et al. (2023) Joanna Hong, Minsu Kim, Jeongsoo Choi, and Yong Man Ro. 2023. Watch or listen: Robust audio-visual speech recognition with visual corruption modeling and reliability scoring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18783–18794.

- Hsu et al. (2021a) Wei-Ning Hsu, Benjamin Bolte, Yao-Hung Hubert Tsai, Kushal Lakhotia, Ruslan Salakhutdinov, and Abdelrahman Mohamed. 2021a. HuBERT: Self-supervised speech representation learning by masked prediction of hidden units. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 29:3451–3460.

- Hsu et al. (2021b) Wei-Ning Hsu, David Harwath, Tyler Miller, Christopher Song, and James Glass. 2021b. Text-free image-to-speech synthesis using learned segmental units. In Proc. ACL, pages 5284–5300.

- Ito and Johnson (2017) Keith Ito and Linda Johnson. 2017. The LJ Speech dataset. https://keithito.com/LJ-Speech-Dataset/.

- Jia et al. (2021) Chao Jia, Yinfei Yang, Ye Xia, Yi-Ting Chen, Zarana Parekh, Hieu Pham, Quoc Le, Yun-Hsuan Sung, Zhen Li, and Tom Duerig. 2021. Scaling up visual and vision-language representation learning with noisy text supervision. In International Conference on Machine Learning, pages 4904–4916. PMLR.

- Kahn et al. (2020) Jacob Kahn, Morgane Rivière, Weiyi Zheng, Evgeny Kharitonov, Qiantong Xu, Pierre-Emmanuel Mazaré, Julien Karadayi, Vitaliy Liptchinsky, Ronan Collobert, Christian Fuegen, et al. 2020. Libri-Light: A benchmark for ASR with limited or no supervision. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 7669–7673. IEEE.

- Karpathy and Fei-Fei (2015) Andrej Karpathy and Li Fei-Fei. 2015. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3128–3137.

- Kenton and Toutanova (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of NAACL-HLT, pages 4171–4186.

- Kim et al. (2021a) Jaehyeon Kim, Jungil Kong, and Juhee Son. 2021a. Conditional variational autoencoder with adversarial learning for end-to-end text-to-speech. In International Conference on Machine Learning, pages 5530–5540. PMLR.

- Kim et al. (2023) Minsu Kim, Jeongsoo Choi, Dahun Kim, and Yong Man Ro. 2023. Many-to-many spoken language translation via unified speech and text representation learning with unit-to-unit translation. arXiv preprint arXiv:2308.01831.

- Kim et al. (2024a) Minsu Kim, Jeongsoo Choi, Soumi Maiti, Jeong Hun Yeo, Shinji Watanabe, and Yong Man Ro. 2024a. Towards practical and efficient image-to-speech captioning with vision-language pre-training and multi-modal tokens. In ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP).

- Kim et al. (2024b) Minsu Kim, Jeong Hun Yeo, Jeongsoo Choi, Se Jin Park, and Yong Man Ro. 2024b. Multilingual visual speech recognition with a single model by learning with discrete visual speech units. arXiv preprint arXiv:2401.09802.

- Kim et al. (2021b) Wonjae Kim, Bokyung Son, and Ildoo Kim. 2021b. ViLT: Vision-and-language transformer without convolution or region supervision. In International Conference on Machine Learning, pages 5583–5594. PMLR.

- Kingma and Ba (2014) Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Koh et al. (2023) Jing Yu Koh, Daniel Fried, and Ruslan Salakhutdinov. 2023. Generating images with multimodal language models. arXiv preprint arXiv:2305.17216.

- Kong et al. (2020) Jungil Kong, Jaehyeon Kim, and Jaekyoung Bae. 2020. HiFi-GAN: Generative adversarial networks for efficient and high fidelity speech synthesis. Advances in Neural Information Processing Systems, 33:17022–17033.

- Lakhotia et al. (2021) Kushal Lakhotia, Eugene Kharitonov, Wei-Ning Hsu, Yossi Adi, Adam Polyak, Benjamin Bolte, Tu-Anh Nguyen, Jade Copet, Alexei Baevski, Abdelrahman Mohamed, et al. 2021. On generative spoken language modeling from raw audio. Transactions of the Association for Computational Linguistics, 9:1336–1354.

- Lee et al. (2022a) Ann Lee, Peng-Jen Chen, Changhan Wang, Jiatao Gu, Sravya Popuri, Xutai Ma, Adam Polyak, Yossi Adi, Qing He, Yun Tang, et al. 2022a. Direct speech-to-speech translation with discrete units. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 3327–3339.

- Lee et al. (2022b) Ann Lee, Hongyu Gong, Paul-Ambroise Duquenne, Holger Schwenk, Peng-Jen Chen, Changhan Wang, Sravya Popuri, Yossi Adi, Juan Pino, Jiatao Gu, et al. 2022b. Textless speech-to-speech translation on real data. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 860–872.

- Lewis et al. (2020) Mike Lewis, Yinhan Liu, Naman Goyal, Marjan Ghazvininejad, Abdelrahman Mohamed, Omer Levy, Veselin Stoyanov, and Luke Zettlemoyer. 2020. BART: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 7871–7880.

- Li et al. (2020) Jiguo Li, Xinfeng Zhang, Chuanmin Jia, Jizheng Xu, Li Zhang, Yue Wang, Siwei Ma, and Wen Gao. 2020. Direct speech-to-image translation. IEEE Journal of Selected Topics in Signal Processing, 14(3):517–529.

- Li et al. (2023a) Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. 2023a. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv preprint arXiv:2301.12597.

- Li et al. (2022) Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi. 2022. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In International Conference on Machine Learning, pages 12888–12900. PMLR.

- Li et al. (2021) Junnan Li, Ramprasaath Selvaraju, Akhilesh Gotmare, Shafiq Joty, Caiming Xiong, and Steven Chu Hong Hoi. 2021. Align before fuse: Vision and language representation learning with momentum distillation. Advances in Neural Information Processing Systems, 34:9694–9705.

- Li et al. (2023b) Yuheng Li, Haotian Liu, Qingyang Wu, Fangzhou Mu, Jianwei Yang, Jianfeng Gao, Chunyuan Li, and Yong Jae Lee. 2023b. GLIGEN: Open-set grounded text-to-image generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 22511–22521.

- Lin (2004) Chin-Yew Lin. 2004. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out, pages 74–81.

- Lin et al. (2014) Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. 2014. Microsoft COCO: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13, pages 740–755. Springer.

- Liu et al. (2020) Yinhan Liu, Jiatao Gu, Naman Goyal, Xian Li, Sergey Edunov, Marjan Ghazvininejad, Mike Lewis, and Luke Zettlemoyer. 2020. Multilingual denoising pre-training for neural machine translation. Transactions of the Association for Computational Linguistics, 8:726–742.

- Lo et al. (2018) Chen-Chou Lo, Szu-Wei Fu, Wen-Chin Huang, Xin Wang, Junichi Yamagishi, Yu Tsao, and Hsin-Min Wang. 2018. MOSNet: Deep learning based objective assessment for voice conversion. Challenge (VCC), page 11.

- Maiti et al. (2024) Soumi Maiti, Yifan Peng, Shukjae Choi, Jee-weon Jung, Xuankai Chang, and Shinji Watanabe. 2024. VoxtLM: Unified decoder-only models for consolidating speech recognition/synthesis and speech/text continuation tasks. In ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP).

- Maiti et al. (2023) Soumi Maiti, Yifan Peng, Takaaki Saeki, and Shinji Watanabe. 2023. SpeechLMScore: Evaluating speech generation using speech language model. In ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 1–5. IEEE.

- Papineni et al. (2002) Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, pages 311–318.

- Park et al. (2023) Song Park, Sanghyuk Chun, Byeongho Heo, Wonjae Kim, and Sangdoo Yun. 2023. SeiT: Storage-efficient vision training with tokens using 1% of pixel storage. In Proceedings of the IEEE/CVF International Conference on Computer Vision.

- Polyak et al. (2021) Adam Polyak, Yossi Adi, Jade Copet, Eugene Kharitonov, Kushal Lakhotia, Wei-Ning Hsu, Abdelrahman Mohamed, and Emmanuel Dupoux. 2021. Speech resynthesis from discrete disentangled self-supervised representations. arXiv preprint arXiv:2104.00355.

- Popuri et al. (2022) Sravya Popuri, Peng-Jen Chen, Changhan Wang, Juan Pino, Yossi Adi, Jiatao Gu, Wei-Ning Hsu, and Ann Lee. 2022. Enhanced direct speech-to-speech translation using self-supervised pre-training and data augmentation. arXiv preprint arXiv:2204.02967.

- Prabhavalkar et al. (2023) Rohit Prabhavalkar, Takaaki Hori, Tara N Sainath, Ralf Schlüter, and Shinji Watanabe. 2023. End-to-end speech recognition: A survey. arXiv preprint arXiv:2303.03329.

- Radford et al. (2021) Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning, pages 8748–8763. PMLR.

- Ramesh et al. (2022) Aditya Ramesh, Prafulla Dhariwal, Alex Nichol, Casey Chu, and Mark Chen. 2022. Hierarchical text-conditional image generation with CLIP latents. arXiv preprint arXiv:2204.06125.

- Ramesh et al. (2021) Aditya Ramesh, Mikhail Pavlov, Gabriel Goh, Scott Gray, Chelsea Voss, Alec Radford, Mark Chen, and Ilya Sutskever. 2021. Zero-shot text-to-image generation. In International Conference on Machine Learning, pages 8821–8831. PMLR.

- Rombach et al. (2022) Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10684–10695.

- Saharia et al. (2022) Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, et al. 2022. Photorealistic text-to-image diffusion models with deep language understanding. Advances in Neural Information Processing Systems, 35:36479–36494.

- Schuster and Nakajima (2012) Mike Schuster and Kaisuke Nakajima. 2012. Japanese and Korean voice search. In 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 5149–5152. IEEE.

- Sennrich et al. (2016) Rico Sennrich, Barry Haddow, and Alexandra Birch. 2016. Improving neural machine translation models with monolingual data. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 86–96.

- Sharma et al. (2018) Piyush Sharma, Nan Ding, Sebastian Goodman, and Radu Soricut. 2018. Conceptual captions: A cleaned, hypernymed, image alt-text dataset for automatic image captioning. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2556–2565.

- Stahlberg (2020) Felix Stahlberg. 2020. Neural machine translation: A review. Journal of Artificial Intelligence Research, 69:343–418.

- Tan (2023) Xu Tan. 2023. Neural Text-to-Speech Synthesis. Springer Nature.

- Team et al. (2023) Gemini Team, Rohan Anil, Sebastian Borgeaud, Yonghui Wu, Jean-Baptiste Alayrac, Jiahui Yu, Radu Soricut, Johan Schalkwyk, Andrew M Dai, Anja Hauth, et al. 2023. Gemini: A family of highly capable multimodal models. arXiv preprint arXiv:2312.11805.