
Sampling Methods | Types, Techniques & Examples

Published on September 19, 2019 by Shona McCombes. Revised on June 22, 2023.

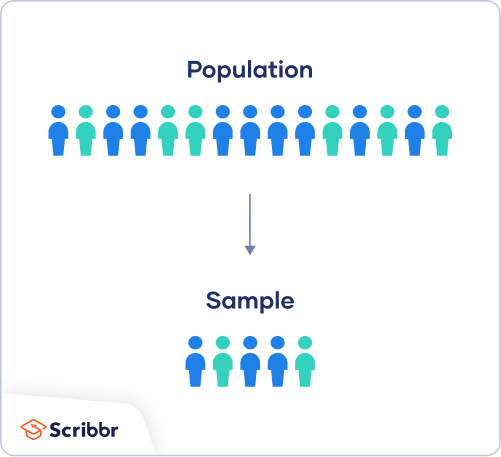

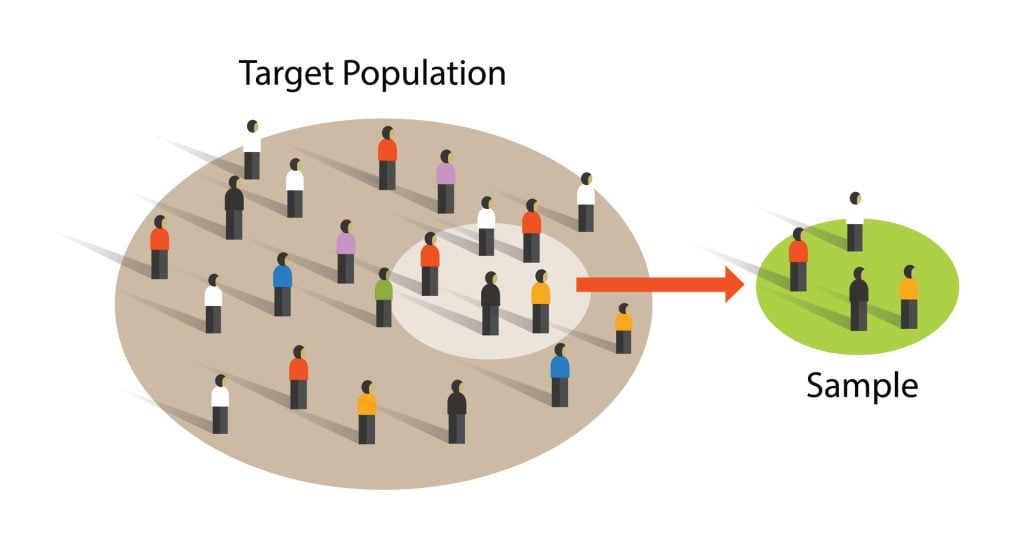

When you conduct research about a group of people, it’s rarely possible to collect data from every person in that group. Instead, you select a sample. The sample is the group of individuals who will actually participate in the research.

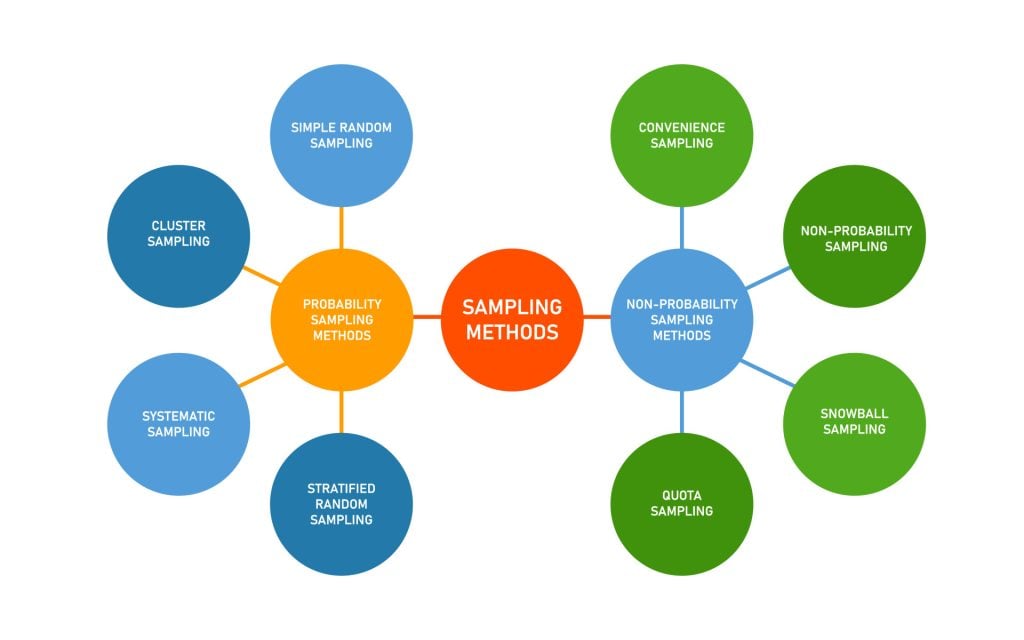

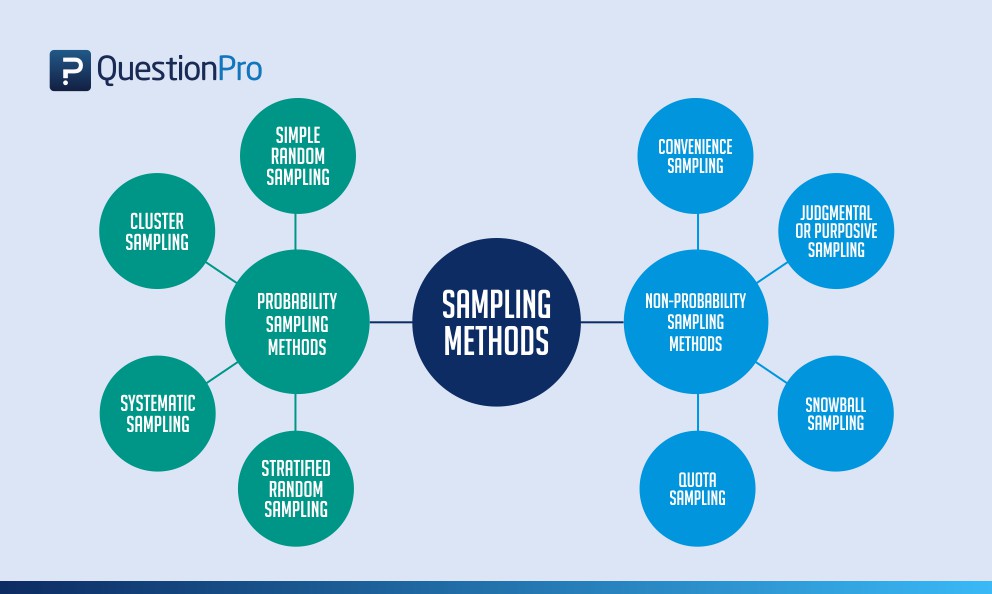

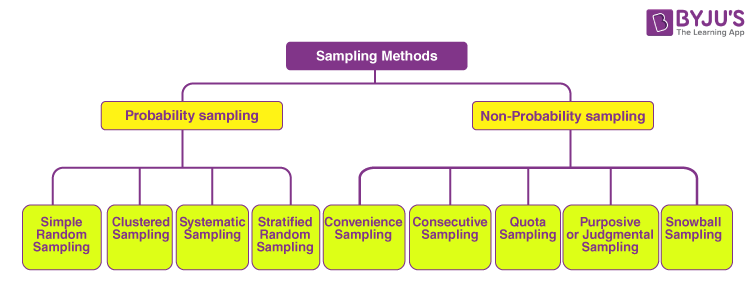

To draw valid conclusions from your results, you have to carefully decide how you will select a sample that is representative of the group as a whole. The approach you use to make that selection is called a sampling method. There are two primary types of sampling methods that you can use in your research:

- Probability sampling involves random selection, allowing you to make strong statistical inferences about the whole group.

- Non-probability sampling involves non-random selection based on convenience or other criteria, allowing you to easily collect data.

You should clearly explain how you selected your sample in the methodology section of your paper or thesis, as well as how you approached minimizing research bias in your work.

Table of contents

Population vs. sample, probability sampling methods, non-probability sampling methods, other interesting articles, frequently asked questions about sampling.

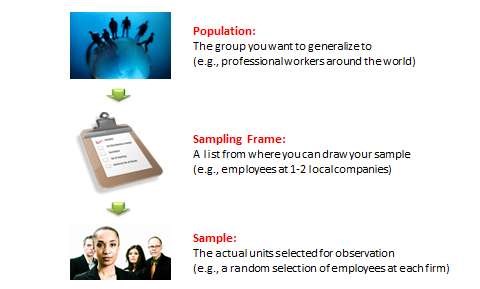

Population vs. sample

First, you need to understand the difference between a population and a sample, and identify the target population of your research.

- The population is the entire group that you want to draw conclusions about.

- The sample is the specific group of individuals that you will collect data from.

The population can be defined in terms of geographical location, age, income, or many other characteristics.

It is important to carefully define your target population according to the purpose and practicalities of your project.

If the population is very large, demographically mixed, and geographically dispersed, it might be difficult to gain access to a representative sample. An unrepresentative sample undermines the validity of your results and can lead to several research biases, particularly sampling bias.

Sampling frame

The sampling frame is the actual list of individuals that the sample will be drawn from. Ideally, it should include the entire target population (and nobody who is not part of that population).

Sample size

The number of individuals you should include in your sample depends on various factors, including the size and variability of the population and your research design. There are different sample size calculators and formulas depending on what you want to achieve with statistical analysis.
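As one illustration of such a formula, the sketch below uses Cochran's formula for estimating a proportion, with a finite population correction. It assumes a simple random sample. The function name `required_sample_size`, the default 5% margin of error, and the example population of 1,000 are hypothetical choices for illustration, not something the article prescribes.

```python
import math


def required_sample_size(population_size, margin_of_error=0.05, z=1.96, p=0.5):
    """Cochran's formula for a proportion, with finite population correction.

    z=1.96 corresponds to a 95% confidence level; p=0.5 is the most
    conservative assumption about variability in the population.
    """
    # Sample size for an effectively infinite population.
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    # Shrink it to account for the finite population.
    n = n0 / (1 + (n0 - 1) / population_size)
    return math.ceil(n)


# Hypothetical population of 1,000 people, 5% margin of error.
print(required_sample_size(1000))
```

Other research designs (comparing group means, regression, qualitative work) call for different calculations, so treat this only as an example of how population size and desired precision feed into the number.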


Probability sampling methods

Probability sampling means that every member of the population has a known, non-zero chance of being selected. It is mainly used in quantitative research. If you want to produce results that are representative of the whole population, probability sampling techniques are the most valid choice.

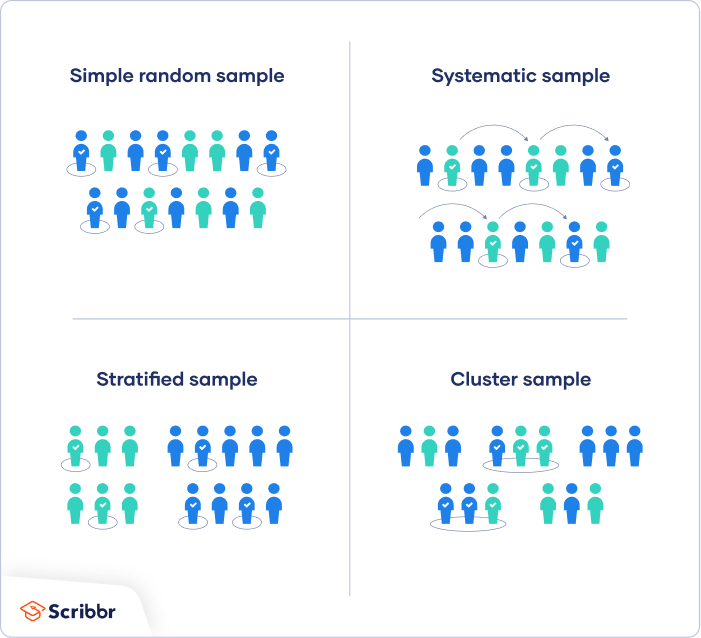

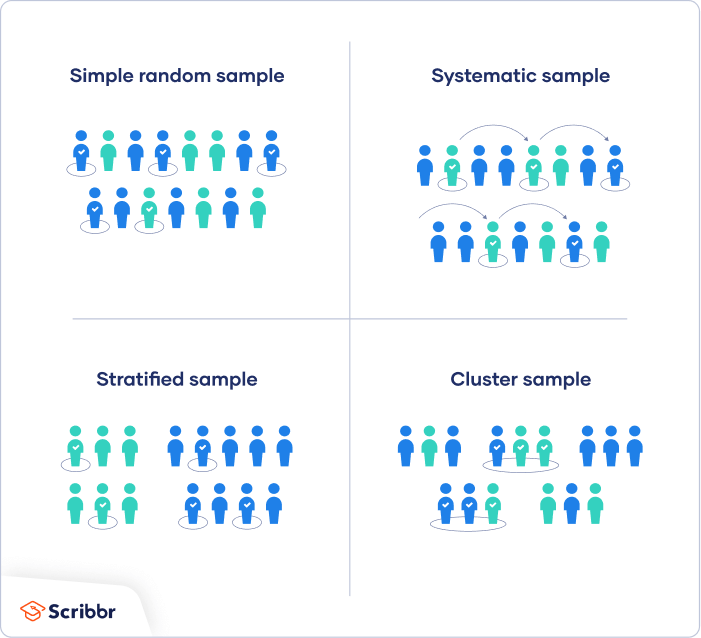

There are four main types of probability sample.

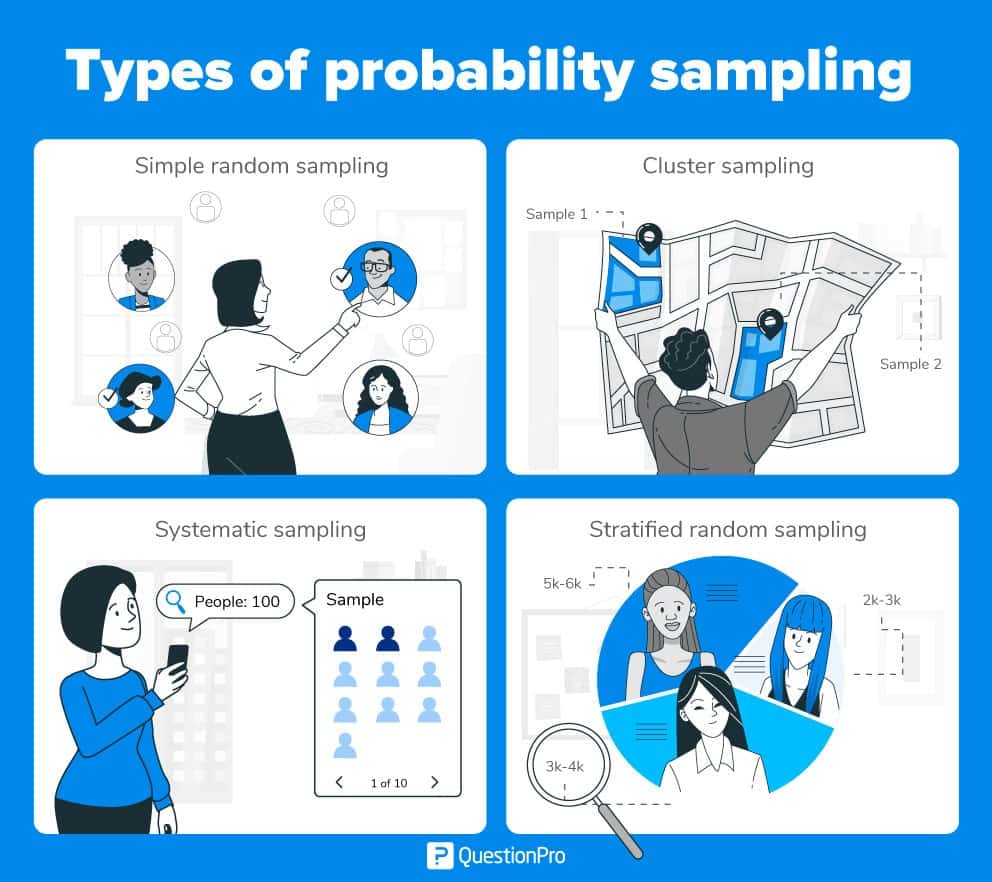

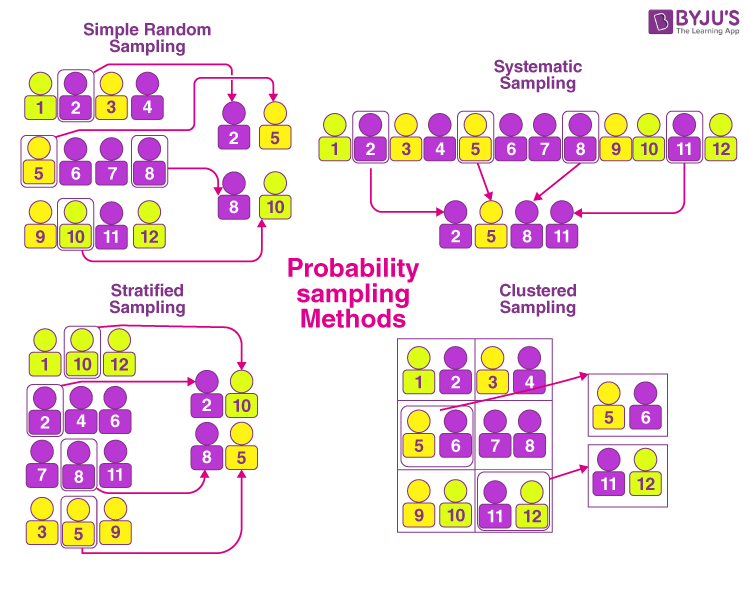

1. Simple random sampling

In a simple random sample, every member of the population has an equal chance of being selected. Your sampling frame should include the whole population.

To conduct this type of sampling, you can use tools like random number generators or other techniques that are based entirely on chance.

2. Systematic sampling

Systematic sampling is similar to simple random sampling, but it is usually slightly easier to conduct. Every member of the population is listed with a number, but instead of randomly generating numbers, individuals are chosen at regular intervals.

If you use this technique, it is important to make sure that there is no hidden pattern in the list that might skew the sample. For example, if the HR database groups employees by team, and team members are listed in order of seniority, there is a risk that your interval might skip over people in junior roles, resulting in a sample that is skewed towards senior employees.

3. Stratified sampling

Stratified sampling involves dividing the population into subpopulations that may differ in important ways. It allows you to draw more precise conclusions by ensuring that every subgroup is properly represented in the sample.

To use this sampling method, you divide the population into subgroups (called strata) based on the relevant characteristic (e.g., gender identity, age range, income bracket, job role).

Based on the overall proportions of the population, you calculate how many people should be sampled from each subgroup. Then you use random or systematic sampling to select a sample from each subgroup.

4. Cluster sampling

Cluster sampling also involves dividing the population into subgroups, but each subgroup should have similar characteristics to the whole population. Instead of sampling individuals from each subgroup, you randomly select entire subgroups.

If it is practically possible, you might include every individual from each sampled cluster. If the clusters themselves are large, you can also sample individuals from within each cluster using one of the techniques above. This is called multistage sampling.

This method is good for dealing with large and dispersed populations, but there is more risk of error in the sample, as there could be substantial differences between clusters. It’s difficult to guarantee that the sampled clusters are really representative of the whole population.

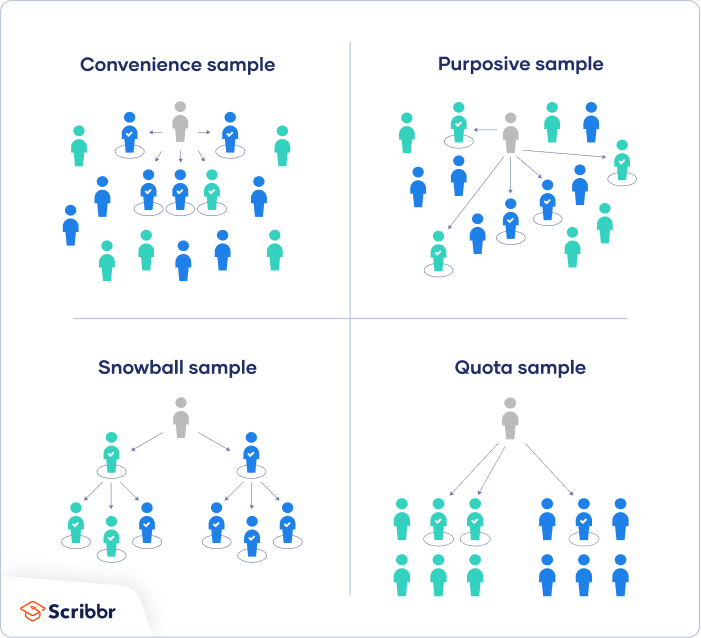

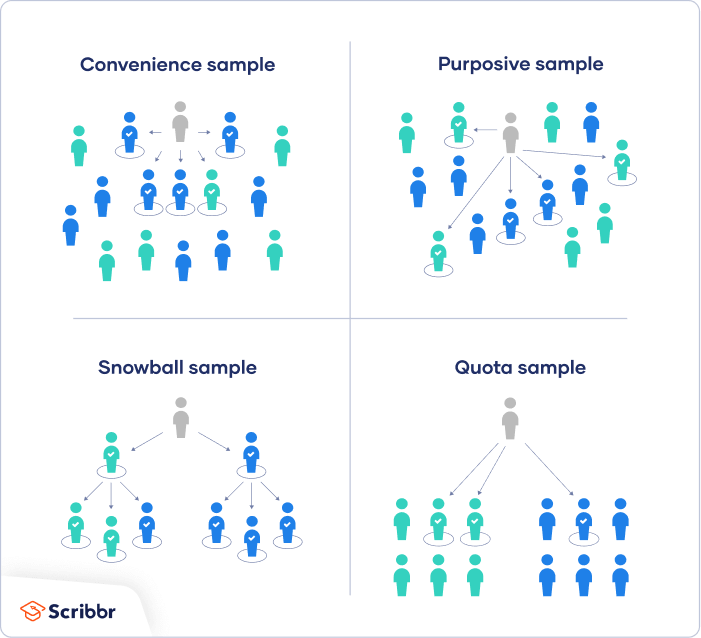

Non-probability sampling methods

In a non-probability sample, individuals are selected based on non-random criteria, and not every individual has a chance of being included.

This type of sample is easier and cheaper to access, but it has a higher risk of sampling bias. That means the inferences you can make about the population are weaker than with probability samples, and your conclusions may be more limited. If you use a non-probability sample, you should still aim to make it as representative of the population as possible.

Non-probability sampling techniques are often used in exploratory and qualitative research. In these types of research, the aim is not to test a hypothesis about a broad population, but to develop an initial understanding of a small or under-researched population.

1. Convenience sampling

A convenience sample simply includes the individuals who happen to be most accessible to the researcher.

This is an easy and inexpensive way to gather initial data, but there is no way to tell if the sample is representative of the population, so it can’t produce generalizable results. Convenience samples are at risk for both sampling bias and selection bias.

2. Voluntary response sampling

Similar to a convenience sample, a voluntary response sample is mainly based on ease of access. Instead of the researcher choosing participants and directly contacting them, people volunteer themselves (e.g. by responding to a public online survey).

Voluntary response samples are always at least somewhat biased, as some people will inherently be more likely to volunteer than others, leading to self-selection bias.

3. Purposive sampling

This type of sampling, also known as judgement sampling, involves the researcher using their expertise to select a sample that is most useful to the purposes of the research.

It is often used in qualitative research, where the researcher wants to gain detailed knowledge about a specific phenomenon rather than make statistical inferences, or where the population is very small and specific. An effective purposive sample must have clear criteria and rationale for inclusion. Always make sure to describe your inclusion and exclusion criteria and beware of observer bias affecting your arguments.

4. Snowball sampling

If the population is hard to access, snowball sampling can be used to recruit participants via other participants. The number of people you have access to “snowballs” as you get in contact with more people. The downside is again representativeness: because you rely on participants to recruit others, you have no way of knowing how representative your sample is. This can lead to sampling bias.

5. Quota sampling

Quota sampling relies on the non-random selection of a predetermined number or proportion of units. This is called a quota.

You first divide the population into mutually exclusive subgroups (called strata) and then recruit sample units until you reach your quota. These units share specific characteristics, determined by you prior to forming your strata. The aim of quota sampling is to control what or who makes up your sample.
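The recruit-until-the-quota-is-full logic can be sketched as follows. This is a minimal illustration only: the function `quota_sample`, the age-group strata, and the list of passers-by are all hypothetical, and the selection is deliberately non-random (people are taken in the order they happen to arrive).

```python
def quota_sample(candidates, quotas, stratum_of):
    """Accept candidates in arrival order until every stratum's quota is full."""
    counts = {stratum: 0 for stratum in quotas}
    sample = []
    for person in candidates:
        stratum = stratum_of(person)
        # Skip people outside the defined strata or whose stratum is already full.
        if stratum in counts and counts[stratum] < quotas[stratum]:
            sample.append(person)
            counts[stratum] += 1
        if counts == quotas:
            break  # every quota has been reached
    return sample


# Hypothetical stream of passers-by as (name, age_group) pairs.
passers_by = [
    ("Ana", "under 30"), ("Ben", "under 30"), ("Cleo", "30 and over"),
    ("Dev", "under 30"), ("Eli", "30 and over"), ("Fay", "30 and over"),
]
sample = quota_sample(passers_by, {"under 30": 2, "30 and over": 2}, lambda p: p[1])
print(sample)
```

Because whoever arrives first fills the quota, the sample controls the composition of the strata but not who ends up inside each one, which is why quota sampling remains a non-probability method.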


Frequently asked questions about sampling

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research. For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

In statistics, sampling allows you to test a hypothesis about the characteristics of a population.

Samples are used to make inferences about populations. Samples are easier to collect data from because they are practical, cost-effective, convenient, and manageable.

Probability sampling means that every member of the target population has a known chance of being included in the sample.

Probability sampling methods include simple random sampling, systematic sampling, stratified sampling, and cluster sampling.

In non-probability sampling, the sample is selected based on non-random criteria, and not every member of the population has a chance of being included.

Common non-probability sampling methods include convenience sampling, voluntary response sampling, purposive sampling, snowball sampling, and quota sampling.

In multistage sampling, or multistage cluster sampling, you draw a sample from a population using smaller and smaller groups at each stage.

This method is often used to collect data from a large, geographically spread group of people in national surveys, for example. You take advantage of hierarchical groupings (e.g., from state to city to neighborhood) to create a sample that’s less expensive and time-consuming to collect data from.

Sampling bias occurs when some members of a population are systematically more likely to be selected in a sample than others.

Cite this Scribbr article


McCombes, S. (2023, June 22). Sampling Methods | Types, Techniques & Examples. Scribbr. Retrieved April 2, 2024, from https://www.scribbr.com/methodology/sampling-methods/


Sampling Methods | Types, Techniques, & Examples

Published on 3 May 2022 by Shona McCombes. Revised on 10 October 2022.

When you conduct research about a group of people, it’s rarely possible to collect data from every person in that group. Instead, you select a sample. The sample is the group of individuals who will actually participate in the research.

To draw valid conclusions from your results, you have to carefully decide how you will select a sample that is representative of the group as a whole. There are two types of sampling methods:

- Probability sampling involves random selection, allowing you to make strong statistical inferences about the whole group. It minimises the risk of selection bias.

- Non-probability sampling involves non-random selection based on convenience or other criteria, allowing you to easily collect data.

You should clearly explain how you selected your sample in the methodology section of your paper or thesis.

Table of contents

Population vs sample, probability sampling methods, non-probability sampling methods, frequently asked questions about sampling.

Population vs sample

First, you need to understand the difference between a population and a sample, and identify the target population of your research.

- The population is the entire group that you want to draw conclusions about.

- The sample is the specific group of individuals that you will collect data from.

The population can be defined in terms of geographical location, age, income, and many other characteristics.

It is important to carefully define your target population according to the purpose and practicalities of your project.

If the population is very large, demographically mixed, and geographically dispersed, it might be difficult to gain access to a representative sample.

Sampling frame

The sampling frame is the actual list of individuals that the sample will be drawn from. Ideally, it should include the entire target population (and nobody who is not part of that population).

You are doing research on working conditions at Company X. Your population is all 1,000 employees of the company. Your sampling frame is the company’s HR database, which lists the names and contact details of every employee.

Sample size

The number of individuals you should include in your sample depends on various factors, including the size and variability of the population and your research design. There are different sample size calculators and formulas depending on what you want to achieve with statistical analysis.


Probability sampling methods

Probability sampling means that every member of the population has a known, non-zero chance of being selected. It is mainly used in quantitative research. If you want to produce results that are representative of the whole population, probability sampling techniques are the most valid choice.

There are four main types of probability sample.

1. Simple random sampling

In a simple random sample, every member of the population has an equal chance of being selected. Your sampling frame should include the whole population.

To conduct this type of sampling, you can use tools like random number generators or other techniques that are based entirely on chance.

You want to select a simple random sample of 100 employees of Company X. You assign a number to every employee in the company database from 1 to 1000, and use a random number generator to select 100 numbers.
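The Company X example can be sketched in a few lines of Python. This is only an illustration: the numbered list stands in for the HR database, and the fixed seed is an arbitrary choice made so the draw can be repeated.

```python
import random

# Employees numbered 1 to 1000, as in the example above.
employee_ids = list(range(1, 1001))

# A seeded generator makes the draw reproducible; the seed value is arbitrary.
rng = random.Random(42)

# sample() draws without replacement, so nobody is picked twice and
# every employee has the same chance of being included.
sample = rng.sample(employee_ids, k=100)

print(sorted(sample)[:10])
```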

2. Systematic sampling

Systematic sampling is similar to simple random sampling, but it is usually slightly easier to conduct. Every member of the population is listed with a number, but instead of randomly generating numbers, individuals are chosen at regular intervals.

All employees of the company are listed in alphabetical order. From the first 10 numbers, you randomly select a starting point: number 6. From number 6 onwards, every 10th person on the list is selected (6, 16, 26, 36, and so on), and you end up with a sample of 100 people.

If you use this technique, it is important to make sure that there is no hidden pattern in the list that might skew the sample. For example, if the HR database groups employees by team, and team members are listed in order of seniority, there is a risk that your interval might skip over people in junior roles, resulting in a sample that is skewed towards senior employees.
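A minimal sketch of the interval-based selection described above, assuming the alphabetical list is represented by the numbers 1 to 1000. The helper name `systematic_sample` is hypothetical.

```python
import random


def systematic_sample(items, interval, rng=random):
    """Pick a random start within the first interval, then every interval-th item."""
    start = rng.randrange(interval)  # 0-based index of the first pick
    return items[start::interval]


employee_ids = list(range(1, 1001))  # alphabetical list, numbered 1 to 1000
sample = systematic_sample(employee_ids, interval=10)
print(len(sample), sample[:4])

# With the start fixed at number 6 (index 5), this reproduces the example.
assert employee_ids[5::10][:4] == [6, 16, 26, 36]
```

Note that the code cannot detect a hidden pattern in the list. If the ordering repeats with a period related to the interval, the same kind of person is picked (or skipped) every time, so the list order still needs to be checked by hand.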

3. Stratified sampling

Stratified sampling involves dividing the population into subpopulations that may differ in important ways. It allows you to draw more precise conclusions by ensuring that every subgroup is properly represented in the sample.

To use this sampling method, you divide the population into subgroups (called strata) based on the relevant characteristic (e.g., gender, age range, income bracket, job role).

Based on the overall proportions of the population, you calculate how many people should be sampled from each subgroup. Then you use random or systematic sampling to select a sample from each subgroup.

The company has 800 female employees and 200 male employees. You want to ensure that the sample reflects the gender balance of the company, so you sort the population into two strata based on gender. Then you use random sampling on each group, selecting 80 women and 20 men, which gives you a representative sample of 100 people.
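The two steps in this example, proportional allocation followed by random sampling within each stratum, might look like this in code. The ID lists are hypothetical stand-ins for the HR database, and `stratified_sample` is an illustrative helper rather than a standard library function.

```python
import random


def stratified_sample(strata, total_sample_size, rng=random):
    """Draw a simple random sample from each stratum, proportional to its size."""
    population_size = sum(len(members) for members in strata.values())
    sample = {}
    for name, members in strata.items():
        # Proportional allocation; round() is adequate here because the
        # example's proportions divide evenly.
        k = round(total_sample_size * len(members) / population_size)
        sample[name] = rng.sample(members, k)
    return sample


# Hypothetical ID lists standing in for the HR database.
strata = {
    "women": [f"W{i}" for i in range(800)],
    "men": [f"M{i}" for i in range(200)],
}
sample = stratified_sample(strata, total_sample_size=100)
print({name: len(chosen) for name, chosen in sample.items()})
```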

4. Cluster sampling

Cluster sampling also involves dividing the population into subgroups, but each subgroup should have similar characteristics to the whole population. Instead of sampling individuals from each subgroup, you randomly select entire subgroups.

If it is practically possible, you might include every individual from each sampled cluster. If the clusters themselves are large, you can also sample individuals from within each cluster using one of the techniques above. This is called multistage sampling.

This method is good for dealing with large and dispersed populations, but there is more risk of error in the sample, as there could be substantial differences between clusters. It’s difficult to guarantee that the sampled clusters are really representative of the whole population.

The company has offices in 10 cities across the country (all with roughly the same number of employees in similar roles). You don’t have the capacity to travel to every office to collect your data, so you use random sampling to select 3 offices – these are your clusters.
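The office example can be sketched as below, including the multistage variant mentioned earlier. The office rosters, the figure of 100 employees per office, the within-office sample of 20, and the seed are all assumptions made for illustration.

```python
import random

# Hypothetical office rosters: 10 cities with 100 employees each.
offices = {
    f"City {c}": [f"City {c} / employee {e}" for e in range(1, 101)]
    for c in range(1, 11)
}

rng = random.Random(7)  # arbitrary seed so the draw is repeatable

# Stage 1: randomly select 3 whole offices (the clusters).
chosen_offices = rng.sample(sorted(offices), k=3)

# Single-stage cluster sample: everyone in the chosen offices.
cluster_sample = [person for office in chosen_offices for person in offices[office]]

# Multistage variant: a simple random sample of 20 people within each chosen office.
multistage_sample = [
    person for office in chosen_offices for person in rng.sample(offices[office], k=20)
]

print(chosen_offices, len(cluster_sample), len(multistage_sample))
```

Only three of the ten offices contribute any data, which is where the extra risk of error comes from: if those three differ from the rest, the sample inherits that difference.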

Non-probability sampling methods

In a non-probability sample, individuals are selected based on non-random criteria, and not every individual has a chance of being included.

This type of sample is easier and cheaper to access, but it has a higher risk of sampling bias. That means the inferences you can make about the population are weaker than with probability samples, and your conclusions may be more limited. If you use a non-probability sample, you should still aim to make it as representative of the population as possible.

Non-probability sampling techniques are often used in exploratory and qualitative research. In these types of research, the aim is not to test a hypothesis about a broad population, but to develop an initial understanding of a small or under-researched population.

1. Convenience sampling

A convenience sample simply includes the individuals who happen to be most accessible to the researcher.

This is an easy and inexpensive way to gather initial data, but there is no way to tell if the sample is representative of the population, so it can’t produce generalisable results.

You are researching opinions about student support services in your university, so after each of your classes, you ask your fellow students to complete a survey on the topic. This is a convenient way to gather data, but as you only surveyed students taking the same classes as you at the same level, the sample is not representative of all the students at your university.

2. Voluntary response sampling

Similar to a convenience sample, a voluntary response sample is mainly based on ease of access. Instead of the researcher choosing participants and directly contacting them, people volunteer themselves (e.g., by responding to a public online survey).

Voluntary response samples are always at least somewhat biased, as some people will inherently be more likely to volunteer than others.

You send out the survey to all students at your university and many students decide to complete it. This can certainly give you some insight into the topic, but the people who responded are more likely to be those who have strong opinions about the student support services, so you can’t be sure that their opinions are representative of all students.

3. Purposive sampling

Purposive sampling, also known as judgement sampling, involves the researcher using their expertise to select a sample that is most useful to the purposes of the research.

It is often used in qualitative research, where the researcher wants to gain detailed knowledge about a specific phenomenon rather than make statistical inferences, or where the population is very small and specific. An effective purposive sample must have clear criteria and rationale for inclusion.

You want to know more about the opinions and experiences of students with a disability at your university, so you purposely select a number of students with different support needs in order to gather a varied range of data on their experiences with student services.

4. Snowball sampling

If the population is hard to access, snowball sampling can be used to recruit participants via other participants. The number of people you have access to ‘snowballs’ as you get in contact with more people.

You are researching experiences of homelessness in your city. Since there is no list of all homeless people in the city, probability sampling isn’t possible. You meet one person who agrees to participate in the research, and she puts you in contact with other homeless people she knows in the area.
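One way to picture referral-based recruitment is as a breadth-first walk through a network of contacts. The sketch below is a loose model under that assumption; the referral network, participant labels, and the `snowball_sample` helper are all hypothetical.

```python
from collections import deque


def snowball_sample(referrals, seeds, max_size):
    """Breadth-first recruitment: each participant refers the people they know."""
    recruited = []
    seen = set(seeds)
    queue = deque(seeds)
    while queue and len(recruited) < max_size:
        person = queue.popleft()
        recruited.append(person)
        for contact in referrals.get(person, []):
            if contact not in seen:
                seen.add(contact)
                queue.append(contact)
    return recruited


# Hypothetical referral network: who can put you in contact with whom.
referrals = {
    "P1": ["P2", "P3"],
    "P2": ["P4"],
    "P3": ["P4", "P5"],
    "P6": ["P7"],  # not connected to P1, so never reached
}
print(snowball_sample(referrals, seeds=["P1"], max_size=10))
```

Anyone outside the first participant's network never enters the sample, which illustrates why representativeness is hard to judge with this method.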

Frequently asked questions about sampling

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Samples are used to make inferences about populations. Samples are easier to collect data from because they are practical, cost-effective, convenient, and manageable.

Probability sampling means that every member of the target population has a known chance of being included in the sample.

Probability sampling methods include simple random sampling, systematic sampling, stratified sampling, and cluster sampling.

In non-probability sampling, the sample is selected based on non-random criteria, and not every member of the population has a chance of being included.

Common non-probability sampling methods include convenience sampling, voluntary response sampling, purposive sampling, snowball sampling, and quota sampling.

Sampling bias occurs when some members of a population are systematically more likely to be selected in a sample than others.

Cite this Scribbr article


McCombes, S. (2022, October 10). Sampling Methods | Types, Techniques, & Examples. Scribbr. Retrieved 2 April 2024, from https://www.scribbr.co.uk/research-methods/sampling/


Sampling Methods In Research: Types, Techniques, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Sampling methods in psychology refer to strategies used to select a subset of individuals (a sample) from a larger population, to study and draw inferences about the entire population. Common methods include random sampling, stratified sampling, cluster sampling, and convenience sampling. Proper sampling ensures representative, generalizable, and valid research results.

- Sampling: the process of selecting a representative group from the population under study.

- Target population: the total group of individuals from which the sample might be drawn.

- Sample: a subset of individuals selected from a larger population for study or investigation. Those included in the sample are termed “participants.”

- Generalizability: the ability to apply research findings from a sample to the broader target population, contingent on the sample being representative of that population.

For instance, if the advert for volunteers is published in the New York Times, this limits how much the study’s findings can be generalized to the whole population, because NYT readers may not represent the entire population in certain respects (e.g., politically, socio-economically).

The Purpose of Sampling

In psychological research, we are interested in learning about large groups of people who have something in common. We call the group that we are interested in studying our “target population.”

In some types of research, the target population might be as broad as all humans. Still, in other types of research, the target population might be a smaller group, such as teenagers, preschool children, or people who misuse drugs.

Studying every person in a target population is more or less impossible. Hence, psychologists select a sample or sub-group of the population that is likely to be representative of the target population we are interested in.

This is important because we want to generalize from the sample to the target population. The more representative the sample, the more confident the researcher can be that the results can be generalized to the target population.

One of the problems that can occur when selecting a sample from a target population is sampling bias. Sampling bias refers to situations where the sample does not reflect the characteristics of the target population.

Many psychology studies have a biased sample because they have used an opportunity sample that comprises university students as their participants (e.g., Asch).

OK, so you’ve thought up this brilliant psychological study and designed it perfectly. But who will you try it out on, and how will you select your participants?

There are various sampling methods. The one chosen will depend on a number of factors (such as time, money, etc.).

Random Sampling

Random sampling is a type of probability sampling where everyone in the entire target population has an equal chance of being selected.

This is similar to the national lottery. If the “population” is everyone who bought a lottery ticket, then everyone has an equal chance of winning the lottery (assuming they all have one ticket each).

Random samples require naming or numbering the target population and then using some raffle method to choose those to make up the sample. Random samples are the best method of selecting your sample from the population of interest.

- The advantage is that your sample should represent the target population and minimize sampling bias.

- The disadvantage is that it is very difficult to achieve, as it demands considerable time, effort, and money.

Stratified Sampling

During stratified sampling, the researcher identifies the different types of people that make up the target population and works out the proportions needed for the sample to be representative.

A list is made of each variable (e.g., IQ, gender, etc.) that might have an effect on the research. For example, if we are interested in the money spent on books by undergraduates, then the main subject studied may be an important variable.

For example, students studying English Literature may spend more money on books than engineering students, so if our sample contains a disproportionately large percentage of English students or engineering students, our results will not be accurate.

We have to determine the relative percentage of each group at a university, e.g., Engineering 10%, Social Sciences 15%, English 20%, Sciences 25%, Languages 10%, Law 5%, and Medicine 15%. The sample must then contain all these groups in the same proportion as the target population (university students).

- The disadvantage of stratified sampling is that gathering such a sample would be extremely time-consuming and difficult to do. This method is rarely used in Psychology.

- However, the advantage is that the sample should be highly representative of the target population, and therefore we can generalize from the results obtained.
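Working out how many participants each subject group should contribute can be sketched as follows. The percentages come from the example above; the total sample size of 150 and the largest-remainder rounding rule are assumptions added here so that the group counts always add up to the intended total.

```python
def allocate(proportions, sample_size):
    """Largest-remainder allocation so the stratum counts sum to sample_size."""
    exact = {name: share * sample_size / 100 for name, share in proportions.items()}
    counts = {name: int(value) for name, value in exact.items()}
    shortfall = sample_size - sum(counts.values())
    # Hand leftover places to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - counts[name], reverse=True)
    for name in by_remainder[:shortfall]:
        counts[name] += 1
    return counts


# Percentages from the example above; the sample size of 150 is an assumption.
subjects = {
    "Engineering": 10, "Social Sciences": 15, "English": 20, "Sciences": 25,
    "Languages": 10, "Law": 5, "Medicine": 15,
}
print(allocate(subjects, sample_size=150))
```

Participants would then be selected at random within each subject group until its count is reached.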

Opportunity Sampling

Opportunity sampling is a method in which participants are chosen based on their ease of availability and proximity to the researcher, rather than using random or systematic criteria. It’s a type of convenience sampling.

An opportunity sample is obtained by asking members of the population of interest if they would participate in your research. An example would be selecting a sample of students from those coming out of the library.

- This is a quick and easy way of choosing participants (advantage)

- It may not provide a representative sample and could be biased (disadvantage).

Systematic Sampling

Systematic sampling is a method where every nth individual is selected from a list or sequence to form a sample, ensuring even and regular intervals between chosen subjects.

Participants are systematically selected (i.e., orderly/logical) from the target population, like every nth participant on a list of names.

To take a systematic sample, you list all the population members and then decide upon the sample size you would like. By dividing the number of people in the population by the number of people you want in your sample, you get a number we will call n.

If you take every nth name, you will get a systematic sample of the correct size. If, for example, you wanted to sample 150 children from a school of 1,500, you would take every 10th name.

- The advantage of this method is that it should provide a representative sample.

Sample size

The sample size is a critical factor in determining the reliability and validity of a study’s findings. While increasing the sample size can enhance the generalizability of results, it’s also essential to balance practical considerations, such as resource constraints and diminishing returns from ever-larger samples.

Reliability and Validity

Reliability refers to the consistency and reproducibility of research findings across different occasions, researchers, or instruments. A small sample size may lead to inconsistent results due to increased susceptibility to random error or the influence of outliers. In contrast, a larger sample minimizes these errors, promoting more reliable results.

Validity pertains to the accuracy and truthfulness of research findings. For a study to be valid, it should accurately measure what it intends to do. A small, unrepresentative sample can compromise external validity, meaning the results don’t generalize well to the larger population. A larger sample captures more variability, ensuring that specific subgroups or anomalies don’t overly influence results.

Practical Considerations

Resource Constraints : Larger samples demand more time, money, and resources. Data collection becomes more extensive, data analysis more complex, and logistics more challenging.

Diminishing Returns : While increasing the sample size generally leads to improved accuracy and precision, there’s a point where adding more participants yields only marginal benefits. For instance, going from 50 to 500 participants might significantly boost a study’s robustness, but jumping from 10,000 to 10,500 might not offer a comparable advantage, especially considering the added costs.
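One way to see the diminishing returns is to look at how the margin of error shrinks with sample size. The sketch below assumes a simple random sample, a survey proportion, a 95% confidence level (z = 1.96) and the worst-case proportion of 0.5; none of these assumptions come from the text above, and `margin_of_error` is a name chosen for the example.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error for a proportion from a simple random sample."""
    return z * math.sqrt(p * (1 - p) / n)


for n in (50, 500, 10_000, 10_500):
    print(f"n = {n:>6}: +/- {margin_of_error(n) * 100:.2f} percentage points")
```

Because the margin of error falls with the square root of the sample size, moving from 50 to 500 participants cuts it by several percentage points, while moving from 10,000 to 10,500 changes it by only a few hundredths of a point.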

Sampling Methods & Strategies 101

Everything you need to know (including examples)

By: Derek Jansen (MBA) | Expert Reviewed By: Kerryn Warren (PhD) | January 2023

If you’re new to research, sooner or later you’re bound to wander into the intimidating world of sampling methods and strategies. If you find yourself on this page, chances are you’re feeling a little overwhelmed or confused. Fear not – in this post we’ll unpack sampling in straightforward language, along with loads of examples.

Overview: Sampling Methods & Strategies

- What is sampling in a research context?

- The two overarching approaches

- Probability-based methods: simple random sampling, stratified random sampling, cluster sampling and systematic sampling

- Non-probability-based methods: purposive sampling, convenience sampling and snowball sampling

- How to choose the right sampling method

What (exactly) is sampling?

At the simplest level, sampling (within a research context) is the process of selecting a subset of participants from a larger group . For example, if your research involved assessing US consumers’ perceptions about a particular brand of laundry detergent, you wouldn’t be able to collect data from every single person that uses laundry detergent (good luck with that!) – but you could potentially collect data from a smaller subset of this group.

In technical terms, the larger group is referred to as the population , and the subset (the group you’ll actually engage with in your research) is called the sample . Put another way, you can look at the population as a full cake and the sample as a single slice of that cake. In an ideal world, you’d want your sample to be perfectly representative of the population, as that would allow you to generalise your findings to the entire population. In other words, you’d want to cut a perfect cross-sectional slice of cake, such that the slice reflects every layer of the cake in perfect proportion.

Achieving a truly representative sample is, unfortunately, a little trickier than slicing a cake, as there are many practical challenges and obstacles to achieving this in a real-world setting. Thankfully though, you don’t always need to have a perfectly representative sample – it all depends on the specific research aims of each study – so don’t stress yourself out about that just yet!

With the concept of sampling broadly defined, let’s look at the different approaches to sampling to get a better understanding of what it all looks like in practice.

The two overarching sampling approaches

At the highest level, there are two approaches to sampling: probability sampling and non-probability sampling. Within each of these, there are a variety of sampling methods, which we’ll explore a little later.

Probability sampling involves selecting participants (or any unit of interest) on a statistically random basis, which is why it’s also called “random sampling”. In other words, the selection of each individual participant is based on a pre-determined process (not the discretion of the researcher). As a result, this approach achieves a random sample.

Probability-based sampling methods are most commonly used in quantitative research, especially when it’s important to achieve a representative sample that allows the researcher to generalise their findings.

Non-probability sampling, on the other hand, refers to sampling methods in which the selection of participants is not statistically random. In other words, the selection of individual participants is based on the discretion and judgment of the researcher, rather than on a pre-determined process.

Non-probability sampling methods are commonly used in qualitative research, where the richness and depth of the data are more important than the generalisability of the findings.

If that all sounds a little too conceptual and fluffy, don’t worry. Let’s take a look at some actual sampling methods to make it more tangible.


Probability-based sampling methods

First, we’ll look at four common probability-based (random) sampling methods:

- Simple random sampling

- Stratified random sampling

- Cluster sampling

- Systematic sampling

Importantly, this is not a comprehensive list of all the probability sampling methods – these are just four of the most common ones. So, if you’re interested in adopting a probability-based sampling approach, be sure to explore all the options.

Simple random sampling involves selecting participants in a completely random fashion, where each participant has an equal chance of being selected. Basically, this sampling method is the equivalent of pulling names out of a hat, except that you can do it digitally. For example, if you had a list of 500 people, you could use a random number generator to draw a list of 50 numbers (each number representing a participant) and then use that set of participants as your sample.
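To make the "names out of a hat" idea concrete, here is a minimal sketch using Python's standard `random` module. The participant IDs (1 to 500) and the fixed seed are assumptions made purely so the example is reproducible.

```python
import random

# Hypothetical list of 500 participant IDs.
participant_ids = list(range(1, 501))

# A seeded generator makes the draw repeatable; drop the seed for a fresh draw.
rng = random.Random(42)

# Draw 50 IDs without replacement, each ID being equally likely to be picked.
sample_ids = rng.sample(participant_ids, k=50)
print(sorted(sample_ids))
```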

Thanks to its simplicity, simple random sampling is easy to implement, and as a consequence, is typically quite cheap and efficient. Given that the selection process is completely random, the results can be generalised fairly reliably. However, because selection ignores subgroup membership, large subgroups can dominate the sample, which can leave minority subgroups with little representation in the results – if any at all. To address this, one needs to take a slightly different approach, which we’ll look at next.

Stratified random sampling is similar to simple random sampling, but it kicks things up a notch. As the name suggests, stratified sampling involves selecting participants randomly, but from within certain pre-defined subgroups (i.e., strata) that share a common trait. For example, you might divide the population into strata based on gender, ethnicity, age range or level of education, and then select randomly from each group.

The benefit of this sampling method is that it gives you more control over the impact of large subgroups (strata) within the population. For example, if a population comprises 80% males and 20% females, you may want to “balance” this skew out by selecting a random sample from an equal number of males and females. This would, of course, reduce the representativeness of the sample, but it would allow you to identify differences between subgroups. So, depending on your research aims, the stratified approach could work well.
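As a rough sketch of the "balanced" approach described above, the snippet below splits a hypothetical population (80% male, 20% female) into strata and draws the same number of people at random from each. The `stratified_sample` helper, the record layout and the figure of 50 per stratum are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def stratified_sample(people, key, per_stratum, seed=None):
    """Group people into strata with `key`, then draw randomly within each stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in people:
        strata[key(person)].append(person)
    return {name: rng.sample(members, per_stratum) for name, members in strata.items()}


# Hypothetical population of 1,000 people: 800 males and 200 females.
population = [{"id": i, "gender": "male" if i < 800 else "female"} for i in range(1000)]

sample = stratified_sample(population, key=lambda p: p["gender"], per_stratum=50, seed=7)
print({name: len(members) for name, members in sample.items()})
```

Equal allocation like this over-represents the smaller stratum on purpose, which is what makes subgroup comparisons possible.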

Next on the list is cluster sampling. As the name suggests, this sampling method involves sampling from naturally occurring, mutually exclusive clusters within a population – for example, area codes within a city or cities within a country. Once the clusters are defined, a set of clusters are randomly selected and then a set of participants are randomly selected from each cluster.

Now, you’re probably wondering, “how is cluster sampling different from stratified random sampling?”. Well, let’s look at the previous example where each cluster reflects an area code in a given city.

With cluster sampling, you would collect data from clusters of participants in a handful of area codes (let’s say 5 neighbourhoods). Conversely, with stratified random sampling, you would need to collect data from all over the city (i.e., many more neighbourhoods). You’d still achieve the same sample size either way (let’s say 200 people, for example), but with stratified sampling, you’d need to do a lot more running around, as participants would be scattered across a vast geographic area. As a result, cluster sampling is often the more practical and economical option.
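The two-step process can be sketched as follows, assuming a hypothetical city of 20 area codes with 100 residents each. The `cluster_sample` helper and all of the names and numbers in the data are illustrative assumptions; only the "5 area codes, 200 people" shape comes from the example above.

```python
import random


def cluster_sample(clusters, n_clusters, per_cluster, seed=None):
    """Randomly pick clusters, then randomly pick participants within each one."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {name: rng.sample(clusters[name], per_cluster) for name in chosen}


# Hypothetical city: 20 area codes, each with 100 residents.
city = {
    f"area_{c:02d}": [f"area_{c:02d}_resident_{r:03d}" for r in range(100)]
    for c in range(20)
}

# 5 area codes x 40 residents = 200 participants.
sample = cluster_sample(city, n_clusters=5, per_cluster=40, seed=3)
print({name: len(members) for name, members in sample.items()})
```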

If that all sounds a little mind-bending, you can use the following general rule of thumb. If a population is relatively homogeneous, cluster sampling will often be adequate. Conversely, if a population is quite heterogeneous (i.e., diverse), stratified sampling will generally be more appropriate.

The last probability sampling method we’ll look at is systematic sampling. This method simply involves selecting participants at a set interval, starting from a random point.

For example, if you have a list of students that reflects the population of a university, you could systematically sample that population by selecting participants at an interval of 8. In other words, you would randomly select a starting point – let’s say student number 40 – followed by student 48, 56, 64, etc.

What’s important with systematic sampling is that the population list you select from needs to be randomly ordered. If there are underlying patterns in the list (for example, if the list is ordered by gender, IQ, age, etc.), this will result in a non-random sample, which would defeat the purpose of adopting this sampling method. Of course, you could safeguard against this by “shuffling” your population list using a random number generator or similar tool.
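To illustrate why list order matters, here is a small sketch with a deliberately extreme, hypothetical roster in which female and male students alternate. With an interval of 8, every selected student lands on the same gender, whereas shuffling the roster first breaks the pattern. The roster, the `every_kth` helper and the seed are assumptions made for the example.

```python
import random


def every_kth(items, interval, start):
    """Select items at a fixed interval, beginning at position `start`."""
    return items[start::interval]


# Hypothetical roster of 400 students in a strictly alternating F, M, F, M order.
roster = ["F", "M"] * 200

rng = random.Random(0)
start = rng.randrange(8)  # random starting point within the first interval

# Sampling the patterned list: the interval lines up with the pattern.
ordered_sample = every_kth(roster, 8, start)

# Safeguard: shuffle a copy of the list before sampling.
shuffled = roster[:]
rng.shuffle(shuffled)
shuffled_sample = every_kth(shuffled, 8, start)

print("patterned list:", set(ordered_sample))
print("shuffled list: ", set(shuffled_sample))
```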

Non-probability-based sampling methods

Right, now that we’ve looked at a few probability-based sampling methods, let’s look at three non-probability methods:

- Purposive sampling

- Convenience sampling

- Snowball sampling

Again, this is not an exhaustive list of all possible sampling methods, so be sure to explore further if you’re interested in adopting a non-probability sampling approach.

First up, we’ve got purposive sampling – also known as judgment, selective or subjective sampling. Again, the name provides some clues, as this method involves the researcher selecting participants using his or her own judgement, based on the purpose of the study (i.e., the research aims).

For example, suppose your research aims were to understand the perceptions of hyper-loyal customers of a particular retail store. In that case, you could use your judgement to engage with frequent shoppers, as well as rare or occasional shoppers, to understand what judgements drive the two behavioural extremes.

Purposive sampling is often used in studies where the aim is to gather information from a small population (especially rare or hard-to-find populations), as it allows the researcher to target specific individuals who have unique knowledge or experience. Naturally, this sampling method is quite prone to researcher bias and judgement error, and it’s unlikely to produce generalisable results, so it’s best suited to studies where the aim is to go deep rather than broad.

Next up, we have convenience sampling. As the name suggests, with this method, participants are selected based on their availability or accessibility. In other words, the sample is selected based on how convenient it is for the researcher to access it, as opposed to using a defined and objective process.

Naturally, convenience sampling provides a quick and easy way to gather data, as the sample is selected based on the individuals who are readily available or willing to participate. This makes it an attractive option if you’re particularly tight on resources and/or time. However, as you’d expect, this sampling method is unlikely to produce a representative sample and will of course be vulnerable to researcher bias, so it’s important to approach it with caution.

Last but not least, we have the snowball sampling method. This method relies on referrals from initial participants to recruit additional participants. In other words, the initial subjects form the first (small) snowball and each additional subject recruited through referral is added to the snowball, making it larger as it rolls along.

Snowball sampling is often used in research contexts where it’s difficult to identify and access a particular population. For example, people with a rare medical condition or members of an exclusive group. It can also be useful in cases where the research topic is sensitive or taboo and people are unlikely to open up unless they’re referred by someone they trust.

Simply put, snowball sampling is ideal for research that involves reaching hard-to-access populations. But, keep in mind that, once again, it’s a sampling method that’s highly prone to researcher bias and is unlikely to produce a representative sample. So, make sure that it aligns with your research aims and questions before adopting this method.
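The referral process can be pictured as a walk through a network of contacts. The sketch below is one simplified way to model it, assuming every referral agrees to take part; the `snowball_sample` helper, the participant names and the referral map are all hypothetical.

```python
from collections import deque


def snowball_sample(seeds, referrals, target_size):
    """Recruit the seed participants, then follow their referrals in waves."""
    recruited = []
    seen = set(seeds)
    queue = deque(seeds)
    while queue and len(recruited) < target_size:
        person = queue.popleft()
        recruited.append(person)
        # Each recruited participant refers contacts who are not yet known.
        for contact in referrals.get(person, []):
            if contact not in seen:
                seen.add(contact)
                queue.append(contact)
    return recruited


# Hypothetical referral map: who each participant is able to refer.
referrals = {
    "Ana": ["Ben", "Caro"],
    "Ben": ["Dev"],
    "Caro": ["Dev", "Eli"],
    "Dev": [],
    "Eli": ["Ana"],
}

sample = snowball_sample(["Ana"], referrals, target_size=4)
print(sample)  # ['Ana', 'Ben', 'Caro', 'Dev']
```

Notice that everyone in the sample is connected to the first participant, which is one reason the resulting sample is unlikely to be representative.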

How to choose a sampling method

Now that we’ve looked at a few popular sampling methods (both probability and non-probability based), the obvious question is, “how do I choose the right sampling method for my study?”. When selecting a sampling method for your research project, you’ll need to consider two important factors: your research aims and your resources.

As with all research design and methodology choices, your sampling approach needs to be guided by and aligned with your research aims, objectives and research questions – in other words, your golden thread. Specifically, you need to consider whether your research aims are primarily concerned with producing generalisable findings (in which case, you’ll likely opt for a probability-based sampling method) or with achieving rich, deep insights (in which case, a non-probability-based approach could be more practical). Typically, quantitative studies lean toward the former, while qualitative studies aim for the latter, so be sure to consider your broader methodology as well.

The second factor you need to consider is your resources and, more generally, the practical constraints at play. If, for example, you have easy, free access to a large sample at your workplace or university and a healthy budget to help you attract participants, that will open up multiple options in terms of sampling methods. Conversely, if you’re cash-strapped, short on time and don’t have unfettered access to your population of interest, you may be restricted to convenience or referral-based methods.

In short, be ready for trade-offs – you won’t always be able to utilise the “perfect” sampling method for your study, and that’s okay. Much like all the other methodological choices you’ll make as part of your study, you’ll often need to compromise and accept practical trade-offs when it comes to sampling. Don’t let this get you down though – as long as your sampling choice is well explained and justified, and the limitations of your approach are clearly articulated, you’ll be on the right track.

Let’s recap…

In this post, we’ve covered the basics of sampling within the context of a typical research project.

- Sampling refers to the process of defining a subgroup (sample) from the larger group of interest (population).

- The two overarching approaches to sampling are probability sampling (random) and non-probability sampling.

- Common probability-based sampling methods include simple random sampling, stratified random sampling, cluster sampling and systematic sampling.

- Common non-probability-based sampling methods include purposive sampling, convenience sampling and snowball sampling.

- When choosing a sampling method, you need to consider your research aims, objectives and questions, as well as your resources and other practical constraints.

If you’d like to see an example of a sampling strategy in action, be sure to check out our research methodology chapter sample.



What are sampling methods and how do you choose the best one?

Posted on 18th November 2020 by Mohamed Khalifa

This tutorial will introduce sampling methods and potential sampling errors to avoid when conducting medical research.

Introduction to sampling methods


It is important to understand why we sample the population; for example, studies are built to investigate the relationships between risk factors and disease. In other words, we want to find out if this is a true association, while still aiming for the minimum risk for errors such as chance, bias or confounding.

However, it would not be feasible to experiment on the whole population, so we need to take a good sample and reduce the risk of errors by using a proper sampling technique.

What is a sampling frame?

A sampling frame is a record of the target population containing all participants of interest. In other words, it is a list from which we can extract a sample.

What makes a good sample?

A good sample should be a representative subset of the population we are interested in studying, with each participant having an equal chance of being randomly selected into the study.

We could choose a sampling method based on whether we want to account for sampling bias; a random sampling method is often preferred over a non-random method for this reason. Random sampling examples include: simple, systematic, stratified, and cluster sampling. Non-random sampling methods are liable to bias, and common examples include: convenience, purposive, snowballing, and quota sampling. For the purposes of this blog we will be focusing on random sampling methods.

Simple random sampling

Example: We want to conduct an experimental trial in a small population such as: employees in a company, or students in a college. We include everyone in a list and use a random number generator to select the participants.

Advantages: Generalisable results possible, random sampling, the sampling frame is the whole population, every participant has an equal probability of being selected

Disadvantages: Less precise than stratified method, less representative than the systematic method

Systematic sampling

Example: Every nth patient entering the out-patient clinic is selected and included in our sample

Advantages: More feasible than simple or stratified methods, sampling frame is not always required

Disadvantages: Generalisability may decrease if baseline characteristics repeat across every nth participant

Stratified sampling

Example: We have a big population (a city) and we want to ensure representativeness of all groups with a pre-determined characteristic such as: age groups, ethnic origin, and gender

Advantages: Inclusive of strata (subgroups), reliable and generalisable results

Disadvantages: Does not work well with multiple variables

Cluster sampling

Example: 10 schools have the same number of students across the county. We can randomly select 3 out of 10 schools as our clusters

Advantages: Readily doable with most budgets, does not require a sampling frame

Disadvantages: Results may not be reliable nor generalisable

How can you identify sampling errors?

Non-random selection increases the probability of sampling (selection) bias if the sample does not represent the population we want to study. We could avoid this by random sampling and ensuring representativeness of our sample with regards to sample size.

An inadequate sample size decreases the confidence in our results, as we may think there is no significant difference when actually there is. This type II error can result from having a small sample size, or from participants dropping out of the sample.
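A small simulation can make this concrete. The sketch below is a toy setup, not a substitute for a proper power calculation: it assumes two groups with normally distributed outcomes, a known standard deviation of 1, a true difference of 0.5 between the groups, and a two-sided z-test at the 5% level. The `miss_rate` function and all of its parameters are assumptions made for the example.

```python
import math
import random


def miss_rate(n_per_group, true_diff=0.5, trials=2000, seed=0):
    """Share of simulated studies that fail to detect a real difference (type II error)."""
    rng = random.Random(seed)
    misses = 0
    for _ in range(trials):
        group_a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        group_b = [rng.gauss(true_diff, 1.0) for _ in range(n_per_group)]
        diff = sum(group_b) / n_per_group - sum(group_a) / n_per_group
        # Standard error of the difference when both groups have SD = 1.
        z = diff / math.sqrt(2.0 / n_per_group)
        if abs(z) < 1.96:  # not significant at the 5% level, so the effect is missed
            misses += 1
    return misses / trials


for n in (10, 100):
    print(f"{n} participants per group: missed the real difference in {miss_rate(n):.0%} of studies")
```

In this setup the difference between the groups is real by construction, so every non-significant result is a type II error, and the miss rate should fall sharply as the group size grows.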

In medical research of disease, if we select people with certain diseases while strictly excluding participants with other co-morbidities, we run the risk of diagnostic purity bias where important sub-groups of the population are not represented.

Furthermore, measurement bias may occur during recollection of risk factors by participants (recall bias), or during assessment of outcome, where people who live longer are associated with treatment success when in fact people who died were not included in the sample or data analysis (survivorship bias).

By following the steps below we could choose the best sampling method for our study in an orderly fashion.

Research objectives

Firstly, a refined research question and goal would help us define our population of interest. If our calculated sample size is small then it would be easier to get a random sample. If, however, the sample size is large, then we should check if our budget and resources can handle a random sampling method.

Sampling frame availability

Secondly, we need to check whether a sampling frame is available (simple random sampling) and, if not, whether we could make a list of our own (stratified sampling). If neither option is possible, we could still use other random sampling methods, for instance, systematic or cluster sampling.

Study design

Moreover, we could consider the prevalence of the topic (exposure or outcome) in the population, and what would be the suitable study design. In addition, we should check whether our target population varies widely in its baseline characteristics. For example, a population with large ethnic subgroups could best be studied using a stratified sampling method.

Random sampling

Finally, the best sampling method is always the one that could best answer our research question while also allowing for others to make use of our results (generalisability of results). When we cannot afford a random sampling method, we can always choose from the non-random sampling methods.

To sum up, we now understand that choosing between random or non-random sampling methods is multifactorial. We might often be tempted to choose a convenience sample from the start, but that would not only decrease the precision of our results, it would also make us miss out on producing research that is more robust and reliable.



An overview of sampling methods

Last updated 27 February 2023. Reviewed by Cathy Heath.

When researching perceptions or attributes of a product, service, or people, you have two options:

- Survey every person in your chosen group (the target market, or population), collate your responses, and reach your conclusions.

- Select a smaller group from within your target market and use their answers to represent everyone. This option is sampling.

Sampling saves you time and money. When you use the sampling method, the list of everyone in the population being studied, from which you draw your sample, is called the sampling frame.

The sample you choose should represent your target market, or the sampling frame, well enough to do one of the following:

- Generalize your findings across the sampling frame and use them as though you had surveyed everyone

- Use the findings to decide on your next step, which might involve more in-depth sampling


How was sampling developed?

Valery Glivenko and Francesco Cantelli, two mathematicians studying probability theory in the early 1900s, helped lay the theoretical groundwork for sampling. Their 1933 theorem shows that, as a random sample grows, the distribution of values observed in the sample converges to the distribution in the whole population. In other words, a properly chosen sample of people will reflect the larger group’s status, opinions, decisions, and decision-making steps.

Their work supports the idea that you don't need to survey the entire target market, thereby saving the rest of us a lot of time and money.

Why is sampling important?

We’ve already touched on the fact that sampling saves you time and money. When you get reliable results quickly, you can act on them sooner. And the money you save can pay for something else.

It’s often easier to survey a sample than a whole population. Sample inferences can be more reliable than those you get from a very large group because you can choose your samples carefully and scientifically.

Sampling is also useful because it is often impossible to survey the entire population. You probably have no choice but to collect only a sample in the first place.

Because you’re working with fewer people, you can collect richer data, which makes your research more accurate. You can:

- Ask more questions

- Go into more detail

- Seek opinions instead of just collecting facts

- Observe user behaviors

- Double-check your findings if you need to

In short, sampling works! Let's take a look at the most common sampling methods.

Types of sampling methods

There are two main sampling methods: probability sampling and non-probability sampling. These can be further refined, which we'll cover shortly. You can then decide which approach best suits your research project.

Probability sampling method

Probability sampling is used in quantitative research, so it provides data on the survey topic in terms of numbers. Quantitative research deals with quantities, which is where the name comes from. Subjects are asked questions like:

- How many boxes of candy do you buy at one time?

- How often do you shop for candy?

- How much would you pay for a box of candy?

This method is also called random sampling because participants are chosen at random. In the simplest case, everyone in the target market has an equal chance of being chosen for the survey. It is designed to reduce sampling error for the most important variables. You should, therefore, get results that fairly reflect the larger population.

Non-probability sampling method

In this method, not everyone has an equal chance of being part of the sample. It's usually easier (and cheaper) to select people for the sample group. You choose people who are more likely to be involved in or know more about the topic you’re researching.

Non-probability sampling is used for qualitative research. Qualitative data is generated by questions like:

- Where do you usually shop for candy (supermarket, gas station, etc.)?

- Which candy brand do you usually buy?

- Why do you like that brand?

Probability sampling methods

Here are five ways of doing probability sampling:

- Simple random sampling (basic probability sampling)

- Systematic sampling

- Stratified sampling

- Cluster sampling

- Multi-stage sampling

Simple random sampling

There are three basic steps to simple random sampling:

- Choose your sampling frame.

- Decide on your sample size. Make sure it is large enough to give you reliable data.

- Randomly choose your sample participants.

You could put all their names in a hat, shake the hat to mix the names, and pull out however many names you want in your sample (without looking!)

You could be more scientific by giving each participant a number and then using a random number generator program to choose the numbers.

Systematic sampling

Instead of choosing names or numbers, you decide beforehand on a selection method. For example, collect all the names in your sampling frame and start at, for example, the fifth person on the list, then choose every fourth name or every tenth name. Alternatively, you could choose everyone whose last name begins with randomly-selected initials, such as A, G, or W.

Choose your system of selecting names, and away you go.

Stratified sampling

This is a more sophisticated way to choose your sample. You break the sampling frame down into important subgroups or strata. Then, decide how many you want in your sample, and choose an equal number (or a proportionate number) from each subgroup.

For example, you want to survey how many people in a geographic area buy candy, so you compile a list of everyone in that area. You then break that list down into, for example, males and females, then into pre-teens, teenagers, young adults, senior citizens, etc. who are male or female.

So, if there are 1,000 young male adults and 2,000 young female adults in the whole sampling frame, you may want to choose 100 males and 200 females to keep the proportions balanced. You then choose the individual survey participants through the systematic sampling method.
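The proportional split in this example is simple arithmetic, sketched below. The `proportional_allocation` helper and the total sample size of 300 (100 + 200) are assumptions made for illustration.

```python
def proportional_allocation(stratum_sizes, sample_size):
    """Split a total sample across strata in proportion to each stratum's size."""
    total = sum(stratum_sizes.values())
    # Rounding can leave the parts a unit off the total when shares are uneven.
    return {
        name: round(sample_size * size / total)
        for name, size in stratum_sizes.items()
    }


strata = {"young male adults": 1000, "young female adults": 2000}
allocation = proportional_allocation(strata, sample_size=300)
print(allocation)  # {'young male adults': 100, 'young female adults': 200}
```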

Clustered sampling

This method is used when you want to subdivide a sampling frame into smaller groups or clusters that are geographically or organizationally related.

Let’s say you’re doing quantitative research into candy sales. You could choose your sample participants from urban, suburban, or rural populations. This would give you three geographic clusters from which to select your participants.

Multi-stage sampling

This is a more refined way of doing cluster sampling. Let’s say you have your urban cluster, which is your primary sampling unit. You can subdivide this into a secondary sampling unit, say, participants who typically buy their candy in supermarkets. You could then further subdivide this group into your ultimate sampling unit. Finally, you select the actual survey participants from this unit.

Uses of probability sampling

Probability sampling has three main advantages:

It helps minimize the likelihood of sampling bias. How you choose your sample determines the quality of your results. Probability sampling gives you an unbiased, randomly selected sample of your target market.

It allows you to create representative samples and subgroups within a sample out of a large or diverse target market.

It lets you use sophisticated statistical methods to select samples that are as close to perfect as possible.

- Non-probability sampling methods

To recap, with non-probability sampling, you choose people for your sample in a non-random way, so not everyone in your sampling frame has an equal chance of being chosen. Your research findings, therefore, may not be as representative overall as those from probability sampling, but you may not want them to be.

Sampling bias is less of a concern if all potential survey participants share similar traits. For example, you may want to specifically focus on young male adults who spend more than others on candy. In addition, it is usually a cheaper and quicker method because you don't have to work out a complex selection system that represents the entire population in that community.

Researchers should still weigh the strengths and limitations of each method carefully before selecting a sampling technique.

Non-probability sampling is best for exploratory research, such as at the beginning of a research project.

There are five main types of non-probability sampling methods:

Convenience sampling

Purposive sampling

Voluntary response sampling

Snowball sampling

Quota sampling

The strategy of convenience sampling is to choose your sample quickly and efficiently, using the least effort, usually to save money.

Let's say you want to survey the opinions of 100 millennials about a particular topic. You could send out a questionnaire over the social media platforms millennials use. Ask respondents to confirm their birth year at the top of their response sheet and, when you have your 100 responses, begin your analysis. Or you could visit restaurants and bars where millennials spend their evenings and sign people up.

A drawback of convenience sampling is that it may not yield results that apply to a broader population.

Purposive sampling relies on your judgment to choose the sample most likely to deliver useful results. You must know enough about the survey goals and the sampling frame to choose the most appropriate respondents.

Your knowledge and experience save you time because you know your ideal sample candidates, so you should get high-quality results.

Voluntary response sampling is similar to convenience sampling, but it is based on potential sample members volunteering rather than you looking for people.

You make it known you want to do a survey on a particular topic for a particular reason and wait until enough people volunteer. Then you give them the questionnaire or arrange interviews to ask your questions directly.

Snowball sampling involves asking selected participants to refer others who may qualify for the survey. This method is best used when there is no sampling frame available. It is also useful when the researcher doesn’t know much about the target population.

Let's say you want to research a niche topic that involves people who may be difficult to locate. For our candy example, this could be young males who buy a lot of candy, go rock climbing during the day, and watch adventure movies at night. You ask each participant to name others they know who do the same things, so you can contact them. As you make contact with more people, your sample 'snowballs' until you have all the names you need.
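The referral process above can be sketched as a walk through a "who named whom" graph. This is an illustration only: the function name, the participant names, and the `referrals` dictionary are made up, and in practice referrals arrive over time rather than being known up front.

```python
from collections import deque

def snowball_sample(seeds, referrals, target_size):
    """Grow a sample by following referrals from the initial participants."""
    unique_seeds = list(dict.fromkeys(seeds))  # drop duplicate seeds, keep order
    seen = set(unique_seeds)
    queue = deque(unique_seeds)
    sample = []
    while queue and len(sample) < target_size:
        person = queue.popleft()
        sample.append(person)
        # Ask this participant to name others; contact each new name once.
        for referred in referrals.get(person, []):
            if referred not in seen:
                seen.add(referred)
                queue.append(referred)
    return sample

# Hypothetical referral data: each participant names people they know.
referrals = {
    "ana": ["ben", "cy"],
    "ben": ["cy", "dee"],
    "cy": [],
    "dee": ["eli"],
}

print(snowball_sample(["ana"], referrals, target_size=4))
# ['ana', 'ben', 'cy', 'dee']
```

The sample can only reach people connected to the starting participants, which is one reason snowball samples may not represent the wider population.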

Quota sampling involves collecting a specific number of units (quotas) from each of your predetermined subpopulations. It is a way of ensuring that the makeup of your sample reflects the sampling frame.
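Filling quotas can be sketched as accepting respondents in the order they arrive until each subgroup has reached its target. The function name, the respondent tuples, and the quota numbers are assumptions chosen for the example.

```python
def quota_sample(respondents, quotas, group_of):
    """Accept respondents in arrival order until every quota is filled."""
    remaining = dict(quotas)
    sample = []
    for person in respondents:
        group = group_of(person)
        # Accept only while this person's subgroup still has open places.
        if remaining.get(group, 0) > 0:
            sample.append(person)
            remaining[group] -= 1
        if not any(remaining.values()):
            break  # every quota is filled
    return sample, remaining

# Hypothetical respondents as (id, age group) pairs, in arrival order.
respondents = [
    ("r1", "teen"), ("r2", "adult"), ("r3", "teen"),
    ("r4", "teen"), ("r5", "senior"), ("r6", "adult"),
]
quotas = {"teen": 2, "adult": 1, "senior": 1}

sample, remaining = quota_sample(respondents, quotas, group_of=lambda r: r[1])
print([r[0] for r in sample])  # ['r1', 'r2', 'r3', 'r5']
print(remaining)               # {'teen': 0, 'adult': 0, 'senior': 0}
```

Note that selection inside each quota is not random here: whoever arrives first is taken, which is what makes this a non-probability method.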

- Uses of non-probability sampling

You can use non-probability sampling when you:

Want to do a quick test to see if a more detailed and sophisticated survey may be worthwhile

Want to explore an idea to see if it 'has legs'

Launch a pilot study

Do some initial qualitative research

Have little time or money available (half a loaf is better than no bread at all)

Want to see if the initial results will help you justify a longer, more detailed, and more expensive research project

- The main types of sampling bias, and how to avoid them

Sampling bias can fog or limit your research results. This will have an impact when you generalize your results across the whole target market. The two main causes of sampling bias are faulty research design and poor data collection or recording. They can affect probability and non-probability sampling.

Faulty research

If a surveyor chooses participants inappropriately, the results will not reflect the population as a whole.

A famous example is the 1948 US presidential race. A telephone survey was conducted to see which candidate had more support. The problem with the research design was that, in 1948, most people with telephones were wealthy, and their opinions were very different from those of voters as a whole. The survey implied that Dewey would win, but it was Truman who became president.
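The effect of surveying only people a flawed design can reach is easy to see with a toy calculation. The numbers below are invented purely for illustration and are not 1948 polling data; "A" and "B" are placeholder candidates.

```python
def support_share(voters, candidate):
    """Fraction of the given voters who back the candidate."""
    return sum(v["choice"] == candidate for v in voters) / len(voters)

# Hypothetical electorate of 1,000 voters (made-up figures).
population = (
    [{"has_phone": True, "choice": "A"}] * 180
    + [{"has_phone": True, "choice": "B"}] * 120
    + [{"has_phone": False, "choice": "A"}] * 280
    + [{"has_phone": False, "choice": "B"}] * 420
)

# A telephone survey can only reach voters who own a phone.
phone_frame = [v for v in population if v["has_phone"]]

print(support_share(population, "A"))   # 0.46
print(support_share(phone_frame, "A"))  # 0.6
```

In this made-up electorate candidate A trails overall but leads comfortably among phone owners, so even a perfectly random draw from the phone list would point to the wrong winner.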

Poor data collection or recording

This problem speaks for itself. The survey may be well structured, the sample groups appropriate, the questions clear and easy to understand, and the cluster sizes appropriate. But if surveyors check the wrong boxes when they get an answer or if the entire subgroup results are lost, the survey results will be biased.

How do you minimize bias in sampling?

To get results you can rely on, you must:

Know enough about your target market

Choose one or more sample surveys to cover the whole target market properly

Choose enough people in each sample so your results mirror your target market

Have content validity. This means the content of your questions must be direct and efficiently worded. If it isn’t, the viability of your survey could be questioned. That would also be a waste of time and money, so make the wording of your questions your top focus.

If using probability sampling, make sure your sampling frame includes everyone it should and that your random sampling selection process includes the right proportion of the subgroups

If using non-probability sampling, focus on fairness, equality, and completeness in identifying your samples and subgroups. Then balance those criteria against simple convenience or other relevant factors.

What are the five types of sampling bias?

Self-selection bias. If you mass-mail questionnaires to everyone in the sample, you’re more likely to get results from people with extrovert or activist personalities and not from introverts or pragmatists. So if your convenience sampling focuses on getting your quota responses quickly, it may be skewed.

Non-response bias. Unhappy customers, stressed-out employees, or other sub-groups may not want to cooperate or they may pull out early.

Undercoverage bias. If your survey is done, say, via email or social media platforms, it will miss people without internet access, such as those living in rural areas, the elderly, or lower-income groups.

Survivorship bias. Unsuccessful people are less likely to take part. Another example may be a researcher excluding results that don’t support the overall goal. If the CEO wants to tell the shareholders about a successful product or project at the AGM, some less positive survey results may go “missing” (to take an extreme example). The result is that your data will paint an overly optimistic picture of the truth.

Pre-screening bias. If the researcher whose experience and knowledge are being used to pre-select respondents in judgmental sampling focuses more on convenience than on judgment, the results may be compromised.

How do you minimize sampling bias?

Focus on the bullet points in the next section and:

Make survey questionnaires as direct, easy, short, and available as possible, so participants are more likely to complete them accurately and send them back

Follow up with the people who have been selected but have not returned their responses

Ignore any pressure that may produce bias

- How do you decide on the type of sampling to use?

Use the ideas you've gleaned from this article to give yourself a platform, then choose the best method to meet your goals while staying within your time and cost limits.

If it isn't obvious which method you should choose, use this strategy:

Clarify your research goals

Clarify how accurate your research results must be to reach your goals

Evaluate your goals against time and budget

List the two or three most obvious sampling methods that will work for you

Confirm the availability of your resources (researchers, computer time, etc.)

Compare each of the possible methods with your goals, accuracy, precision, resource, time, and cost constraints

Make your decision

- The takeaway

Effective market research is the basis of successful marketing, advertising, and future productivity. By selecting the most appropriate sampling methods, you will collect the most useful market data and make the most effective decisions.

Sampling methods review

What are sampling methods?

Bad ways to sample

- Convenience sampling

- Voluntary response sampling

Good ways to sample

- Simple random sampling

- Stratified random sampling

- Cluster random sampling

- Systematic random sampling

Sampling Methods: Guide To All Types with Examples

Sampling is an essential part of any research project. The right sampling method can make or break the validity of your research, and it’s essential to choose the right method for your specific question. In this article, we’ll take a closer look at some of the most popular sampling methods and provide real-world examples of how they can be used to gather accurate and reliable data.

From simple random sampling to complex stratified sampling, we’ll explore each method’s pros, cons, and best practices. So, whether you’re a seasoned researcher or just starting your journey, this article is a must-read for anyone looking to master sampling methods. Let’s get started!

What is sampling?

Sampling is a technique of selecting individual members or a subset of the population to make statistical inferences from them and estimate the characteristics of the whole population. Different sampling methods are widely used by researchers in market research so that they do not need to research the entire population to collect actionable insights.

Sampling also saves time and money, and so it forms the basis of any research design. Sampling techniques can be applied in research survey software to derive the best results.