Design Science Research in Doctoral Projects: An Analysis of Australian Theses

Journal of the Association for Information Systems

Related Papers

ICT Education

Hanlie Smuts

Design science research (DSR) is well-known in different domains, including information systems (IS), for the construction of artefacts. One of the most challenging aspects of IS postgraduate studies (with DSR) is determining the structure of the study and its report, which should reflect all the components necessary to build a convincing argument in support of such a study’s claims or assertions. Analysing several postgraduate IS-DSR reports as examples, this paper presents a mapping between recommendable structures for research reports and the DSR process model of Vaishnavi and Kuechler, which several of our current postgraduate students have found helpful.

Management Information Systems Quarterly

Shirley Gregor

Informing Science: The International Journal of an Emerging Transdiscipline

Manuel Meireles

Aim/Purpose: To discuss the Design Science Research approach by comparing some of its canons with observed practices in projects in which it is applied, in order to understand and structure it better. Background: Recent criticisms of the application of the Design Science Research (DSR) approach have pointed out the need to make it more approachable and less confusing to overcome deficiencies such as the unrealistic evaluation. Methodology: We identified and analyzed 92 articles that presented artifacts developed from DSR projects and another 60 articles with preceding or subsequent actions associated with these 92 projects. We applied the content analysis technique to these 152 articles, enabling the preparation of network diagrams and an analysis of the longitudinal evolution of these projects in terms of activities performed and the types of artifacts involved. Contribution: The content analysis of these 152 articles enabled the preparation of network diagrams and an analysis of t...

Tuure Tuunanen, Kenneth Peffers, Johanna Bragge

Rahul Thakurta

Design science is an increasingly popular research paradigm in the information systems discipline. Despite a recognition of the design science research paradigm, questions are being raised about the nature of its existence and its contributions. Central to this argument is the understanding of the relationship between “theoretical research” and “design research” and the necessary implications for design. In this research, we contribute to this discourse by carrying out a structured literature review in order to appreciate the current state of the art in design science research. The results identify an incongruence between the methodological guidelines informing the design and how the design is carried out in practice. On the basis of our observations on the design process, the theoretical foundations of design, and the design outcomes, we outline some research directions that we believe will contribute to methodically well-executed design science contributions in the f...

Proceedings of the 4th …

Olga Levina, Udo Bub

Discussions about the body of knowledge of information systems, including the research domain, relevant perspectives and methods have been going on for a long time. Many researchers vote for a combination of research perspectives and their respective research methodologies; rigour and relevance as requirements in design science are generally accepted. What has been lacking is a formalisation of a detailed research process for design science that takes into account all requirements. We have developed such a research process, building on top of existing processes and findings from design research. The process combines qualitative and quantitative research and references well-known research methods. Publication possibilities and self-contained work packages are recommended. Case studies using the process are presented and discussed.

Diane Strode, Susan Chard

Design science is a research paradigm where the development and evaluation of an artefact is a key contribution. Design science is used in many domains and this paper draws on those domains to formulate a generic structure for design science research suitable for small-scale postgraduate information technology research projects. The paper includes guidelines for writing proposals and a generic research report structure. The paper presents ethical issues to consider in design science research and contributes guidelines for assessment.

Design Science Research Cases

Jan vom Brocke

Design Science Research (DSR) is a problem-solving paradigm that seeks to enhance human knowledge via the creation of innovative artifacts. Simply stated, DSR seeks to enhance technology and science knowledge bases via the creation of innovative artifacts that solve problems and improve the environment in which they are instantiated. The results of DSR include both the newly designed artifacts and design knowledge (DK) that provides a fuller understanding via design theories of why the artifacts enhance (or, disrupt) the relevant application contexts. The goal of this introduction chapter is to provide a brief survey of DSR concepts for better understanding of the following chapters that present DSR case studies.

International Journal of Doctoral Studies

Seyum Getenet

Aim/Purpose: We show a new dimension to the process of using a design-based research approach in doctoral dissertations. Background: Design-based research is a long-term and concentrated approach to educational inquiry. Doctoral students are often advised not to adopt this approach for their dissertations. In this paper, we document two doctoral dissertations that used a design-based research approach in two different contexts. Methodology: The study draws on a qualitative analysis of the methodological approaches of two doctoral dissertations through the lenses of the Herrington, McKenney, Reeves and Oliver principles of design-based research. Contribution: The findings of this study add a new dimension to using a design-based research approach in doctoral dissertations in shorter-term and less intensive contexts. Findings: The results of this study indicate that design-based research is not only an effective methodological approach in doct...

Communications in Computer and Information Science

Alta Van der Merwe

Publications

Conversational Agents (CAs) have become a new paradigm for human-computer interaction. Despite the potential benefits, there are ethical challenges to the widespread use of these agents that may inhibit their use for individual and social goals. However, despite a multitude of behavioral and design-oriented studies on CAs, a distinct ethical perspective is largely missing from the current literature. In this paper, we present the first steps of our design science research project on principles for a value-sensitive design of CAs. Based on theoretical insights from 87 papers and eleven user interviews, we propose preliminary requirements and design principles for a value-sensitive design of CAs. Moreover, we evaluate the preliminary principles with an expert-based evaluation. The evaluation confirms that an ethical approach to designing CAs might be promising for certain scenarios.

This essay derives a schema for specifying design principles for information technology-based artifacts in sociotechnical systems. Design principles are used to specify design knowledge in an accessible form, but there is wide variation and lack of precision across views on their formulation. This variation is a sign of important issues that should be addressed, including a lack of attention to human actors and levels of complexity as well as differing views on causality, on the nature of the mechanisms used to achieve goals, and on the need for justificatory knowledge. The new schema includes the well-recognized elements of design principles, including goals in a specific context and the mechanisms to achieve the goal. In addition, the schema allows: (i) consideration of the varying roles of the human actors involved and the utility of design principles; (ii) attending to the complexity of IT-based artifacts through decomposition; (iii) distinction of the types of causation (i.e., deterministic versus probabilistic); (iv) a variety of mechanisms in achieving aims; and (v) the optional definition of justificatory knowledge underlying the design principles. We illustrate the utility of the proposed schema by applying it to examples of published research.

Kuhn's subject is the process by which scientific knowledge is attained. Progress in science, according to his thesis, does not occur through continuous change but through revolutionary processes. The concept of scientific revolution describes the process in which existing explanatory models, on which and with which the scientific world had worked until then, are superseded and replaced by others: a paradigm shift takes place.

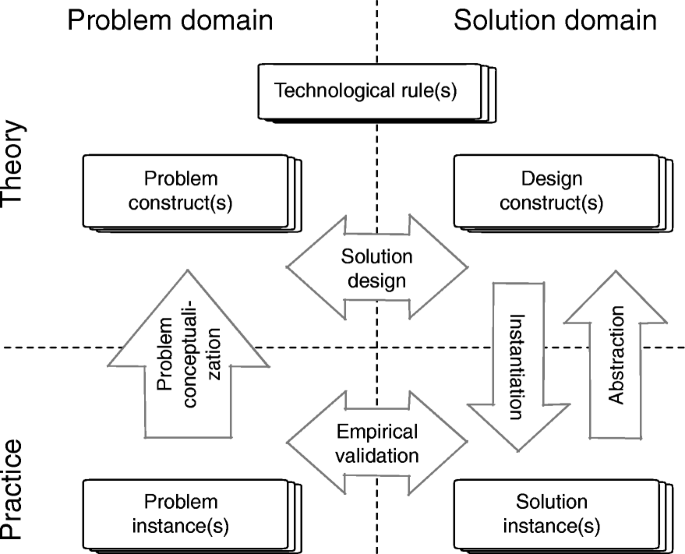

Sir Isaac Newton famously said, "If I have seen further it is by standing on the shoulders of giants." Research is a collaborative, evolutionary endeavor, and it is no different with design science research (DSR), which builds upon existing design knowledge and creates new design knowledge to pass on to future projects. However, despite the vast, growing body of DSR contributions, scant evidence of the accumulation and evolution of design knowledge is found in an organized DSR body of knowledge. Most contributions rather stand on their own feet than on the shoulders of giants, and this is limiting how far we can see; or in other words, the extent of the broader impacts we can make through DSR. In this editorial, we aim at providing guidance on how to position design knowledge contributions in wider problem and solution spaces. We propose (1) a model conceptualizing design knowledge as a resilient relationship between problem and solution spaces, (2) a model that demonstrates how individual DSR projects consume and produce design knowledge, (3) a map to position a design knowledge contribution in problem and solution spaces, and (4) principles on how to use this map in a DSR project. We show how fellow researchers, readers, editors, and reviewers, as well as the IS community as a whole, can make use of these proposals, while also illustrating future research opportunities.

We develop an empirically grounded understanding of how design knowledge accumulates over time. Drawing from theory on knowledge creation, we conceptualize accumulation along the goals and scope of knowledge in DSR as a distinct knowledge creation problem. Through two empirical studies, we theorize knowledge accumulation in DSR by unpacking (1) three knowledge creation mechanisms, and (2) explaining how their interplay forms different patterns of knowledge creation over time. We contribute a theoretical framework conducive to integrating knowledge produced through different methods by introducing the knowledge creation processes as a distinct unit of analysis in DSR and by pointing out that extant procedural models may need revision to account for the continuous knowledge creation occurring in potentially multiyear DSR projects.

The rising complexity of automotive software makes it increasingly difficult to develop the software with high quality in short time. Especially the late detection of early errors, such as requirement inconsistencies and ambiguities, often causes costly iterations. We address this problem with a new requirements specification and analysis technique based on executable scenarios and automated testing. The technique is based on the Scenario Modeling Language for Kotlin (SMLK), a Kotlin based framework that supports the modeling/programming of behavior as loosely coupled scenarios, which is close to how humans conceive and communicate behavioral requirements. Combined with JUnit, we propose the Test-Driven Scenario Specification (TDSS) process, which introduces agile practices into the early phases of development, significantly reducing the risk of requirement inconsistencies and ambiguities, and, thus, reducing development costs. We overview TDSS with the help of an example from the e-mobility domain, report on lessons learned, and outline open challenges.

More and more people use virtual assistants in their everyday life (e.g., on their mobile phones, in their homes, or in their cars). So-called vehicle assistance systems have evolved over the years and now perform various proactive tasks. However, we still lack concrete guidelines with all the specifics that one needs to consider to build virtual assistants that provide a convincing user experience (especially in vehicles). This research provides guidelines for designing virtual in-vehicle assistants. The developed guidelines offer a clear and structured overview of what designers have to consider while designing virtual in-vehicle assistants for a convincing user experience. Following design science research principles, we designed the guidelines based on the existing literature on the requirements of assistant systems and on the results from interviewing experts. In order to demonstrate the applicability of the guidelines, we developed a virtual reality prototype that considered the design guidelines. In a user experience test with 19 participants, we found that the prototype was easy to use, allowed good interaction, and increased the users’ overall comfort.

Digitalization triggers a shift in the compositions of skills and knowledge needed for students in their future work life. Hence, higher order thinking skills are becoming more important to solve future challenges. One subclass of these skills, which contributes significantly to communication, collaboration and problem-solving, is the skill of how to argue in a structured, reflective and well-formed way. However, educational organizations face difficulties in providing the boundary conditions necessary to develop this skill, due to increasing student numbers paired with financial constraints. In this short paper, we present the first steps of our design science research project on how to design an adaptive IT-tool that helps students develop their argumentation skill through formative feedback in large-scale lectures. Based on scientific learning theory and user interviews, we propose preliminary requirements and design principles for an adaptive argumentation learning tool. Furthermore, we present a first instantiation of those principles.

Innovation in the medical technology (med tech) industry has a major impact on well-being in society. Open innovation has the potential to accelerate the development of new or improved healthcare solutions. Building on work system theory (WST), this paper explores how a multi-sided open innovation platform can systematically be established in a German med tech industry cluster in situations where firms had no prior experience with this approach. We aim to uncover problems that may arise and identify opportunities for overcoming them. We performed an action research study in which we implemented and evaluated a multi-sided web-based open innovation platform in four real-world innovation challenges. Analyzing the four different challenges fostered a deeper understanding of the conceptual and organizational aspects of establishing the multi-sided open innovation platform as part of a larger work system. Reflecting on the findings, we developed five design principles that shall support the establishment of multi-sided open innovation platforms in other contexts. Thus, this paper contributes to both theory and practice.

One of the most critical tasks for startups is to validate their business model. Therefore, entrepreneurs try to collect information such as feedback from other actors to assess the validity of their assumptions and make decisions. However, previous work on decisional guidance for business model validation provides no solution for the highly uncertain and complex context of early-stage startups. The purpose of this paper is, thus, to develop design principles for a Hybrid Intelligence decision support system (HI-DSS) that combines the complementary capabilities of human and machine intelligence. We follow a design science research approach to design a prototype artifact and a set of design principles. Our study provides prescriptive knowledge for HI-DSS and contributes to previous work on decision support for business models, the applications of complementary strengths of humans and machines for making decisions, and support systems for extremely uncertain decision-making problems.

Posing research questions represents a fundamental step to guide and direct how researchers develop knowledge in research. In design science research (DSR), researchers need to pose research questions to define the scope and the modes of inquiry, characterize the artifacts, and communicate the contributions. Despite the importance of research questions, research provides few guidelines on how to construct suitable DSR research questions. We fill this gap by exploring ways of constructing DSR research questions and analyzing the research questions in a sample of 104 DSR publications. We found that about two-thirds of the analyzed DSR publications actually used research questions to link their problem statements to research approaches and that most questions focused on solving problems. Based on our analysis, we derive a typology of DSR question formulation to provide guidelines and patterns that help researchers formulate research questions when conducting their DSR projects.

Design science research (DSR) aims to deliver innovative solutions for real-world problems. DSR produces Information Systems (IS) artifacts and design knowledge describing means-end relationships between problem and solution spaces. A key success factor of any DSR research endeavor is an appropriate understanding and description of the underlying problem space. However, existing DSR literature lacks a solid conceptualization of the problem space in DSR. This paper addresses this gap and suggests a conceptualization of the problem space in DSR that builds on the four key concepts of stakeholders, needs, goals, and requirements. We showcase the application of our conceptualization in two published DSR projects. Our work contributes methodologically to the field of DSR as it helps DSR scholars to explore and describe the problem space in terms of a set of key concepts and their relationships.

To remain competitive, businesses need to develop innovative and profitable products, processes and services. The development of innovation relies on novel ideas, which can be generated during creative workshops. In this context the Design Thinking approach, a problem-solving methodology based on collaboration, user-centricity and creativity, may be used. However, guidance and moderation of this process require a vast amount of skills and knowledge. As technologies like artificial intelligence have the potential of making machines our collaboration partner in the future, creating virtual assistants adapting human behaviors is promising. To reduce cognitive dissonance and stress on both the moderators and participants, we investigate the potential of a virtual assistant to support moderation in a Design Thinking process to improve innovative output as well as perceived satisfaction. We therefore developed design guidelines for virtual assistants supporting creative workshops based on qualitative expert interviews and related literature following the Design Science Research Methodology.

With the rising interest in Design Science Research (DSR), it is crucial to engage in the ongoing debate on what constitutes an acceptable contribution for publishing DSR - the design artifact, the design theory, or both. In this editorial, we provide some constructive guidance across different positioning statements with actionable recommendations for DSR authors and reviewers. We expect this editorial to serve as a foundational step towards clarifying misconceptions about DSR contributions and to pave the way for the acceptance of more DSR papers to top IS journals.

This paper reports on the results of a design science research (DSR) study that develops design principles for information systems (IS) that support organisational sensemaking in environmental sustainability transformations. We identify initial design principles based on salient affordances required in organisational sensemaking and revise them through three rounds of developing, demonstrating and evaluating a prototypical implementation. Through our analysis, we learn how IS can support essential sensemaking practices in environmental sustainability transformations, including experiencing disruptive ambiguity through the provision of environmental data, noticing and bracketing, engaging in an open and inclusive communication and presuming potential alternative environmentally responsible actions. We make two key contributions: First, we provide a set of theory-inspired design principles for IS that support sensemaking in sustainability transformations, and revise them empirically using a DSR method. Second, we show how the concept of affordances can be used in DSR to investigate how IS can support organisational practices. While our findings are based on the investigation of the substantive context of environmental sustainability transformation, we suggest that they might be applicable in a broader set of contexts of organisational sensemaking and thus for a broader class of sensemaking support systems.

Design Science Research (DSR) is now an accepted research paradigm in the Information Systems (IS) field, aiming at developing purposeful IT artifacts and knowledge about the design of IT artifacts. A rich body of knowledge on approaches, methods, and frameworks supports researchers in conducting DSR projects. While methodological guidance is abundant, there is little support and guidance for documenting and effectively managing DSR processes. In this article, we present a set of design principles for tool support for DSR processes along with a prototypical implementation (MyDesignProcess.com). We argue that tool support for DSR should enable researchers and teams of researchers to structure, document, maintain, and present DSR, including the resulting design knowledge and artifacts. Such tool support can increase traceability, collaboration, and quality in DSR. We illustrate the use of our prototypical implementation by applying it to published cases, and we suggest guidelines for using tools to effectively manage design-oriented research.

In order to generate valuable innovations, it is important to come up with potentially beneficial ideas. A well-known method for collective idea generation is Brainstorming, and with Electronic Brainstorming, individuals can brainstorm virtually. However, effective Brainstorming facilitation always needs a moderator. In our research, we designed and implemented a virtual moderator that can automatically facilitate a Brainstorming session. We used various artificial intelligence functions, such as natural language processing, machine learning, and reasoning, and created a comprehensive Intelligent Moderator (IMO) for virtual Brainstorming.

This paper reports on the results of a study to investigate how scholars engage with and use the action design research (ADR) approach. ADR has been acknowledged as an important variant of the Design Science Research approach, and has been adopted by a number of scholars, as the methodological basis for doctoral dissertations as well as multidisciplinary research projects. With this use, the research community is learning about how to apply ADR’s central tenets in different contexts. In this paper, we draw on primary data from researchers who have recently engaged in or finished an ADR project to identify recurring problems and opportunities related to working in different ADR stages, balancing demands from practice and research, and addressing problem instance vs. class of problems. Our work contributes a greater understanding of how ADR projects are carried out in practice, how researchers use ADR, and pointers to possibilities for extending ADR.

In the IS discipline, the formulation of design principles is an important vehicle to convey design knowledge that contributes beyond instantiations applicable in a limited context of use. However, their formulation still varies in terms of orientation, clarity, and precision. In this paper, we focus on the design of artifacts that are oriented towards human use, and we identify and analyze three orientations in the formulation of such design principles in IS journals—action oriented, materiality oriented, and both action and materiality oriented. We propose an effective and actionable formulation of design principles that is both clear and precise.

This paper distinguishes and contrasts two design science research strategies in information systems. In the first strategy, a researcher constructs or builds an IT meta-artefact as a general solution concept to address a class of problem. In the second strategy, a researcher attempts to solve a client’s specific problem by building a concrete IT artefact in that specific context and distils from that experience prescriptive knowledge to be packaged into a general solution concept to address a class of problem. The two strategies are contrasted along 16 dimensions representing the context, outcomes, process and resource requirements.

Design research promotes understanding of advanced, cutting-edge information systems through the construction and evaluation of these systems and their components. Since this method of research can produce rigorous, meaningful results in the absence of a strong theory base, it excels in investigating new and even speculative technologies, offering the potential to advance accepted practice.

“Mixed methods” usually refers to the combination of qualitative and quantitative research methods in a single study design. The term comes from the Anglo-American methodological debate in the social and educational sciences and has gained great prominence since the end of the 1990s, specifically since the publication of the monograph “Mixed Methodology” by Abbas Tashakkori and Charles Teddlie (1998). Starting from the American educational sciences, a distinct mixed methods movement has formed: there now exist a whole series of textbooks (e.g., Creswell/Plano Clark 2007; Morse/Niehaus 2009; Kuckartz/Cresswell 2014), a comprehensive handbook published in its second edition (Tashakkori/Teddlie 2010), since 2007 a journal named “Journal of Mixed Methods Research” (JMMR), and an international professional association under the name “Mixed Methods International Research Association” (MMIRA).

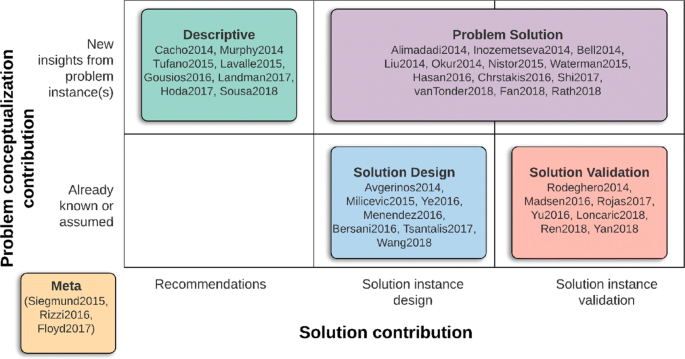

Design science research (DSR) has staked its rightful ground as an important and legitimate Information Systems (IS) research paradigm. We contend that DSR has yet to attain its full potential impact on the development and use of information systems due to gaps in the understanding and application of DSR concepts and methods. This essay aims to help researchers (1) appreciate the levels of artifact abstractions that may be DSR contributions, (2) identify appropriate ways of consuming and producing knowledge when they are preparing journal articles or other scholarly works, (3) understand and position the knowledge contributions of their research projects, and (4) structure a DSR article so that it emphasizes significant contributions to the knowledge base. Our focal contribution is the DSR knowledge contribution framework with two dimensions based on the existing state of knowledge in both the problem and solution domains for the research opportunity under study. In addition, we propose a DSR communication schema with similarities to more conventional publication patterns, but which substitutes the description of the DSR artifact in place of a traditional results section. We evaluate the DSR contribution framework and the DSR communication schema via examinations of DSR exemplar publications.

Design research (DR) is an emergent research approach within information systems. There exist demands to clarify the meta-scientific foundations for this approach. Different responses to these demands are made. There exist attempts to position DR within interpretivism and critical realism. Some scholars have suggested pragmatism as an appropriate paradigm base for design research. This paper has taken pragmatism as a candidate paradigm and it has investigated and elaborated the epistemological foundations for DR. Different epistemic types of DR are identified using a pragmatist perspective. Design research is also related to four aspects/types of pragmatism: Local functional pragmatism (as the design of a useful artefact), general functional pragmatism (as creating design theories and methods aimed for general practice), referential pragmatism (focusing artefact affordances and actions) and methodological pragmatism (knowledge development through making).

One point of convergence in the many recent discussions on design science research in information systems (DSRIS) has been the desirability of a directive design theory (ISDT) as one of the outputs from a DSRIS project. However, the literature on theory development in DSRIS is very sparse. In this paper, we develop a framework to support theory development in DSRIS and explore its potential from multiple perspectives. The framework positions ISDT in a hierarchy of theories in IS design that includes a type of theory for describing how and why the design functions: Design-relevant explanatory/predictive theory (DREPT). DREPT formally captures the translation of general theory constructs from outside IS to the design realm. We introduce the framework from a knowledge representation perspective and then provide typological and epistemological perspectives. We begin by motivating the desirability of both directive-prescriptive theory (ISDT) and explanatory-predictive theory (DREPT) for IS design science research and practice. Since ISDT and DREPT are both, by definition, mid-range theories, we examine the notion of mid-range theory in other fields and then in the specific context of DSRIS. We position both types of theory in Gregor’s (2006) taxonomy of IS theory in our typological view of the framework. We then discuss design theory semantics from an epistemological view of the framework, relating it to an idealized design science research cycle. To demonstrate the potential of the framework for DSRIS, we use it to derive ISDT and DREPT from two published examples of DSRIS.

This paper explores which theorizing strategies can be employed in DSR to make a theoretical contribution by examining two illustrative case examples. First, we find that abduction, deduction, and induction all play a role in DSR. Second, we suggest that design theorists can choose among a range of theorizing strategies (i.e., inductive theorizing, deductive theorizing, and hybrid approaches) that differ in their degree to which they make use of abduction, deduction, and induction as well as their iterative sequencing over time in repeated theorizing cycles. Third, we reveal from the discussion of two prominent IS design theories that empirical and conceptual methods for theorizing play an important role in both the build and evaluate phases of the DSR cycle. Finally, we recommend theorists in future DSR projects that pursue the goal to develop design theory to think explicitly about their theorizing approach and select and use research methods accordingly.

Design Science Research for Information Systems (ISDSR) has received considerable attention recently. With the growing interest in ISDSR, calls continue to establish the rigor of artifact construction. In analogy to other scientific disciplines, the scientific foundation of artifact construction has been designated as IS Design Theory (ISDT) (Gregor 2006, p. 611). Although the ISDSR community has been discussing ISDTs since the early 1990s, no consensus on the definition or the componential structure of ISDTs has been reached yet. In this short article, we give an overview of the ongoing discussion on ISDTs. First, we introduce fundamental concepts of ISDT. Second, we give an overview on seminal contributions to the field of ISDTs in chronological order. Finally, we cluster the presented ISDT contributions into ISDT schools.

Prior research has identified the similarity of Action Research (AR) and Design Science Research (DSR). This paper analyses AR and DSR from several perspectives, including paradigmatic assumptions of ontology, epistemology, methodology, and ethics, their research interests, and activities. We identify that often AR does not share the paradigmatic assumptions and the research interests of DSR, that some activities in DSR are always mutually exclusive from AR, and that there may be no, little, or significant (but not total) overlaps between AR and DSR. Thus we judge that AR and DSR are decisively dissimilar. We further identify several key problems with combining AR and DSR based on the ethical requirement of researchers to identify and manage risks to research stakeholders. Management of such risks is done by careful disclosure, identifying research limitations or by choosing alternative methods than AR for accomplishing DSR.

The common understanding of design science research in information systems (DSRIS) continues to evolve. Only in the broadest terms has there been consensus: that DSRIS involves, in some way, learning through the act of building. However, what is to be built – the definition of the DSRIS artifact – and how it is to be built – the methodology of DSRIS – has drawn increasing discussion in recent years. The relationship of DSRIS to theory continues to make up a significant part of the discussion: how theory should inform DSRIS and whether or not DSRIS can or should be instrumental in developing and refining theory. In this paper, we present the exegesis of a DSRIS research project in which creating a (prescriptive) design theory through the process of developing and testing an information systems artifact is inextricably bound to the testing and refinement of its kernel theory.

Design work and design knowledge in Information Systems (IS) is important for both research and practice. Yet there has been comparatively little critical attention paid to the problem of specifying design theory so that it can be communicated, justified, and developed cumulatively. In this essay we focus on the structural components or anatomy of design theories in IS as a special class of theory. In doing so, we aim to extend the work of Walls, Widemeyer and El Sawy (1992) on the specification of information systems design theories (ISDT), drawing on other streams of thought on design research and theory to provide a basis for a more systematic and useable formulation of these theories. We identify eight separate components of design theories: (1) purpose and scope, (2) constructs, (3) principles of form and function, (4) artifact mutability, (5) testable propositions, (6) justificatory knowledge (kernel theories), (7) principles of implementation, and (8) an expository instantiation. This specification includes components missing in the Walls et al. adaptation of Dubin (1978) and Simon (1969) and also addresses explicitly problems associated with the role of instantiations and the specification of design theories for methodologies and interventions as well as for products and applications. The essay is significant as the unambiguous establishment of design knowledge as theory gives a sounder base for arguments for the rigor and legitimacy of IS as an applied discipline and for its continuing progress. A craft can proceed with the copying of one example of a design artifact by one artisan after another. A discipline cannot.

As a commentary to Juhani Iivari’s insightful essay, I briefly analyze design science research as an embodiment of three closely related cycles of activities. The Relevance Cycle inputs requirements from the contextual environment into the research and introduces the research artifacts into environmental field testing. The Rigor Cycle provides grounding theories and methods along with domain experience and expertise from the foundations knowledge base into the research and adds the new knowledge generated by the research to the growing knowledge base. The central Design Cycle supports a tighter loop of research activity for the construction and evaluation of design artifacts and processes. The recognition of these three cycles in a research project clearly positions and differentiates design science from other research paradigms. The commentary concludes with a claim to the pragmatic nature of design science.

The paper motivates, presents, demonstrates in use, and evaluates a methodology for conducting design science (DS) research in information systems (IS). DS is of importance in a discipline oriented to the creation of successful artifacts. Several researchers have pioneered DS research in IS, yet over the past 15 years, little DS research has been done within the discipline. The lack of a methodology to serve as a commonly accepted framework for DS research and of a template for its presentation may have contributed to its slow adoption. The design science research methodology (DSRM) presented here incorporates principles, practices, and procedures required to carry out such research and meets three objectives: it is consistent with prior literature, it provides a nominal process model for doing DS research, and it provides a mental model for presenting and evaluating DS research in IS. The DS process includes six steps: problem identification and motivation, definition of the objectives for a solution, design and development, demonstration, evaluation, and communication. We demonstrate and evaluate the methodology by presenting four case studies in terms of the DSRM, including cases that present the design of a database to support health assessment methods, a software reuse measure, an Internet video telephony application, and an IS planning method. The designed methodology effectively satisfies the three objectives and has the potential to help aid the acceptance of DS research in the IS discipline.

The aim of this research essay is to examine the structural nature of theory in Information Systems. Despite the importance of theory, questions relating to its form and structure are neglected in comparison with questions relating to epistemology. The essay addresses issues of causality, explanation, prediction, and generalization that underlie an understanding of theory. A taxonomy is proposed that classifies information systems theories with respect to the manner in which four central goals are addressed: analysis, explanation, prediction, and prescription. Five interrelated types of theory are distinguished: (1) theory for analyzing, (2) theory for explaining, (3) theory for predicting, (4) theory for explaining and predicting, and (5) theory for design and action. Examples illustrate the nature of each theory type. The applicability of the taxonomy is demonstrated by classifying a sample of journal articles. The paper contributes by showing that multiple views of theory exist and by exposing the assumptions underlying different viewpoints. In addition, it is suggested that the type of theory under development can influence the choice of an epistemological approach. Support is given for the legitimacy and value of each theory type. The building of integrated bodies of theory that encompass all theory types is advocated.

Within the information systems community there is growing interest in design theories. These theories are aimed to give knowledge support to design activities. Design theories are considered as theorized practical knowledge. This paper is an inquiry into the epistemology of design theories. It is an inquiry in how to justify such knowledge; the need to ground and how to ground a design theory. A distinction is made between empirical, theoretical and internal grounding. The empirical grounding has to do with the effectiveness of the application of knowledge. External theoretical grounding relates design theory to other theories. One part of this is the grounding of the design knowledge in general explanatory theories. Internal grounding means an investigation of internal warrants (e.g. as values and categories) and internal cohesion of the knowledge. Together, these different grounding processes form a coherent approach for the multi-grounding of design theory (MGDT). As illustrations some examples of design theories in IS are discussed. These are design theories concerning business interaction which are based on language action theories.

Two paradigms characterize much of the research in the Information Systems discipline: behavioral science and design science. The behavioral-science paradigm seeks to develop and verify theories that explain or predict human or organizational behavior. The design-science paradigm seeks to extend the boundaries of human and organizational capabilities by creating new and innovative artifacts. Both paradigms are foundational to the IS discipline, positioned as it is at the confluence of people, organizations, and technology. Our objective is to describe the performance of design-science research in Information Systems via a concise conceptual framework and clear guidelines for understanding, executing, and evaluating the research. In the design-science paradigm, knowledge and understanding of a problem domain and its solution are achieved in the building and application of the designed artifact. Three recent exemplars in the research literature are used to demonstrate the application of these guidelines. We conclude with an analysis of the challenges of performing high-quality design-science research in the context of the broader IS community.

Research in IT must address the design tasks faced by practitioners. Real problems must be properly conceptualized and represented, appropriate techniques for their solution must be constructed, and solutions must be implemented and evaluated using appropriate criteria. If significant progress is to be made, IT research must also develop an understanding of how and why IT systems work or do not work. Such an understanding must tie together natural laws governing IT systems with natural laws governing the environments in which they operate. This paper presents a two dimensional framework for research in information technology. The first dimension is based on broad types of design and natural science research activities: build, evaluate, theorize, and justify. The second dimension is based on broad types of outputs produced by design research: representational constructs, models, methods, and instantiations. We argue that both design science and natural science activities are needed to insure that IT research is both relevant and effective.

This paper defines an information system design theory (ISDT) to be a prescriptive theory which integrates normative and descriptive theories into design paths intended to produce more effective information systems. The nature of ISDTs is articulated using Dubin’s concept of theory building and Simon’s idea of a science of the artificial. An example of an ISDT is presented in the context of Executive Information Systems (EIS). Despite the increasing awareness of the potential of EIS for enhancing executive strategic decision-making effectiveness, there exists little theoretical work which directly guides EIS design. We contend that the underlying theoretical basis of EIS can be addressed through a design theory of vigilant information systems. Vigilance denotes the ability of an information system to help an executive remain alertly watchful for weak signals and discontinuities in the organizational environment relevant to emerging strategic threats and opportunities. Research on managerial information scanning and emerging issue tracking as well as theories of open loop control are synthesized to generate vigilant information system design theory propositions. Transformation of the propositions into testable empirical hypotheses is discussed.

In various research projects we have worked with the method of the open, guideline-based expert interview, and found that methodologically we had to operate on largely uncultivated terrain. This applies almost entirely to problems of analysis. The sparse literature on expert interviews deals mainly with questions of field access and interview conduct. The question of how "methodically controlled understanding of others" ("methodisch kontrolliertes Fremdverstehen"; cf. Schütze et al. 1973) can be accomplished in the context of expert interviews remains entirely open. The aim of this article is to address some questions concerning the methodology of the expert interview. The empirical material we refer to stems from research projects that we have carried out or are currently working on. The analysis procedure we will present (see Chap. 4) was developed from our own research practice, which in turn drew on the literature on qualitative and interpretive social research.

This book is about options for inquiry: options among the paradigms—basic belief systems—that have emerged as successors to conventional positivism. Three options are explored in this book: postpositivism, on the shoulders of whose proponents the mantles of succession and of hegemony appear to have fallen, and two brash and sometimes contentious contenders, critical theory and constructivism. Although all three alternatives reject positivism, they make very different diagnoses of its problems and, therefore, offer very different remedies.

The authors critically review systems development in information systems (IS) research. Several classification schemes of research are described and systems development is identified as a developmental, engineering, and formulative type of research. A framework of research is proposed to explain the dual nature of systems development as a research methodology and a research domain in IS research. Progress in several disciplinary areas is reviewed to provide a basis to argue that systems development is a valid research methodology. A systems development research process is presented from a methodological perspective. Software engineering, the basic method of applying the systems development research methodology, is then discussed. A framework to classify IS research domains and the various research methodologies used in studying systems development is presented. It is suggested that systems development and empirical research methodologies are complementary to each other. It is further proposed that an integrated multidimensional and multimethodological approach will generate fruitful research results in IS research.

Disentangling Hype from Practicality: On Realistically Achieving Quantum Advantage


Operating on fundamentally different principles than conventional computers, quantum computers promise to solve a variety of important problems that seemed forever intractable on classical computers. Leveraging the quantum foundations of nature, the time to solve certain problems on quantum computers grows more slowly with the size of the problem than on classical computers—this is called quantum speedup. Going beyond quantum supremacy, 2 which was the demonstration of a quantum computer outperforming a classical one for an artificial problem, an important question is finding meaningful applications (of academic or commercial interest) that can realistically be solved faster on a quantum computer than on a classical one. We call this a practical quantum advantage, or quantum practicality for short.

Key Insights

- Most of today’s quantum algorithms may not achieve practical speedups. Material science and chemistry have a huge potential and we hope more practical algorithms will be invented based on our guidelines.

- Due to limitations of input and output bandwidth, quantum computers will be practical for “big compute” problems on small data, not big data problems.

- Quadratic speedups delivered by algorithms such as Grover’s search are insufficient for practical quantum advantage without significant improvements across the entire software/hardware stack.

There is a maze of hard problems that have been suggested to profit from quantum acceleration: from cryptanalysis, chemistry and materials science, to optimization, big data, machine learning, database search, drug design and protein folding, fluid dynamics and weather prediction. But which of these applications realistically offer a potential quantum advantage in practice? For this, we cannot only rely on asymptotic speedups but must consider the constants involved. Being optimistic in our outlook for quantum computers, we identify clear guidelines for quantum practicality and use them to classify which of the many proposed applications for quantum computing show promise and which ones would require significant algorithmic improvements to become practical and relevant.

To establish reliable guidelines, or lower bounds for the required speedup of a quantum computer, we err on the side of being optimistic for quantum and overly pessimistic for classical computing. Despite our overly optimistic assumptions, our analysis shows a wide range of often-cited applications is unlikely to result in a practical quantum advantage without significant algorithmic improvements. We compare the performance of only a single classical chip fabricated like the one used in the NVIDIA A100 GPU that fits around 54 billion transistors 15 with an optimistic assumption for a hypothetical quantum computer that may be available in the next decades with 10,000 error-corrected logical qubits, 10 μs gate time for logical operations, the ability to simultaneously perform gate operations on all qubits, and all-to-all connectivity for fault-tolerant two-qubit gates.

I/O bandwidth. We first consider the fundamental I/O bottleneck that limits quantum computers in their interaction with the classical world, which determines bounds for data input and output bandwidths. Scalable implementations of quantum random access memory (QRAM 8,9) demand a fault-tolerant error-corrected implementation and the bandwidth is then fundamentally limited by the number of quantum gate operations or measurements that can be performed per unit time. We assume only a single gate operation per input bit. For our optimistic future quantum computer, the resulting rate is 10,000 times smaller than for an existing classical chip (see Table 1). We immediately see that any problem limited by accessing classical data, such as search problems in databases, will be solved faster by classical computers. Similarly, a potentially exponential quantum speedup in linear algebra problems 12 vanishes when the matrix must be loaded from classical data, or when the full solution vector should be read out. Generally, quantum computers will be practical for “big compute” problems on small data, not big data problems.
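The bandwidth argument above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming the stated machine (10,000 logical qubits, 10 μs gate time, one gate per input bit, all qubits operating in parallel). The function name is hypothetical, and the classical figure is only the value implied by the stated factor of 10,000, not a number quoted from Table 1.

```python
# Sketch of the I/O bound under the assumptions stated in the text.
LOGICAL_QUBITS = 10_000      # error-corrected logical qubits (from the text)
GATE_TIME_S = 10e-6          # 10 microsecond logical gate time (from the text)
CLASSICAL_ADVANTAGE = 10_000 # ratio stated in the text

def quantum_io_bits_per_second(qubits: int = LOGICAL_QUBITS,
                               gate_time_s: float = GATE_TIME_S) -> float:
    """One gate per input bit, with every qubit usable in each gate step."""
    return qubits / gate_time_s

quantum_rate = quantum_io_bits_per_second()
# Implied classical rate: an inference from the stated ratio, not a Table 1 value.
implied_classical_rate = quantum_rate * CLASSICAL_ADVANTAGE

print(f"quantum I/O:            {quantum_rate:.2e} bit/s")
print(f"implied classical I/O:  {implied_classical_rate:.2e} bit/s")
```

Under these assumptions the quantum machine ingests on the order of one gigabit per second, which is why data-bound problems favor the classical chip.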

Crossover scale. With quantum speedup, asymptotically fewer operations will be needed on a quantum computer than on a classical computer. Due to the high operational complexity and slower gate operations, however, each operation on a quantum computer will be slower than a corresponding classical one. As sketched in the accompanying figure, classical computers will always be faster for small problems and quantum advantage is realized beyond a problem-dependent crossover scale where the gain due to quantum speedup overcomes the constant slowdown of the quantum computer. To have real practical impact, the crossover time must be short, not more than weeks. Constants matter in determining the utility for applications, as with any runtime estimate in computing.

Compute performance. To model performance, we employ the well-known work-depth model from classical parallel computing to determine upper bounds of classical silicon-based computations and an extension for quantum computations. In this model, the work is the total number of operations and applies to both classical and quantum executions. In Table 1 , we provide concrete examples using three types of operations: logical operations, 16-bit floating point, and 32-bit integer or fixed-point arithmetic operations for numerical modeling. For the quantum costs, we consider only the most expensive parts in our estimates, again benefiting quantum computers; for arithmetic, we count just the dominant cost of multiplications, assuming additions are free. Furthermore, for floating point multiplication, we consider only the cost of the multiplication of the mantissa (10 bits in fp16). We ignore all further overheads incurred by the quantum algorithm due to reversible computations, as well as the significant cost of mapping to a specific hardware architecture with limited qubit connectivity.

Crossover times for classical and quantum computation. To estimate lower bounds for the crossover times, we consider that while both classical and quantum computers must evaluate the same functions (usually called oracles) that describe a problem, quantum computers require fewer evaluations thereof due to quantum speedup. At the root of many quantum acceleration proposals lies a quadratic quantum speedup, including the well-known Grover algorithm. 10,11 For such an algorithm, a problem that needs $X$ function calls on a quantum computer requires quadratically more, namely on the order of $X^2$ calls, on a classical computer. To overcome the large constant performance difference between a quantum computer and a classical computer, which Table 1 shows to be more than a factor of $10^{10}$, a large number of function calls $X \gg 10^{10}$ is needed for the quantum speedup to deliver a practical advantage. In Table 2, we estimate upper bounds for the complexity of the function that will lead to a crossover time of $10^6$ seconds, or approximately two weeks.
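To make the quadratic case concrete, here is a minimal sketch of one possible crossover model. It assumes that the quantum computer needs X oracle calls and the classical computer X² calls, that each call costs the same number of operations on both machines, and that the fp16 rates are the ones derived later in the article (0.55 Pop/s for the classical ASIC, 10.5 kop/s for the quantum chip). The function name and the exact form of the model are assumptions for illustration; the authors' Table 2 may rest on different constants.

```python
# Assumed rates, taken from the article's own fp16 estimates.
CLASSICAL_FP16_OPS_PER_S = 0.55e15   # special-purpose ASIC
QUANTUM_FP16_OPS_PER_S = 10.5e3      # hypothetical quantum chip

def quadratic_crossover(ops_per_call: float,
                        classical_rate: float = CLASSICAL_FP16_OPS_PER_S,
                        quantum_rate: float = QUANTUM_FP16_OPS_PER_S):
    """Return (calls, seconds) at which both machines take equally long.

    Classical time: X**2 * ops_per_call / classical_rate
    Quantum time:   X    * ops_per_call / quantum_rate
    Setting them equal gives X = classical_rate / quantum_rate.
    """
    calls = classical_rate / quantum_rate
    seconds = calls * ops_per_call / quantum_rate
    return calls, seconds

calls, seconds = quadratic_crossover(ops_per_call=1)
print(f"crossover at X = {calls:.2e} calls, after {seconds / 86_400:.0f} days")
```

With a single fp16 operation per oracle call, this simple model puts the crossover at roughly 5 × 10^10 calls and a couple of months of runtime, consistent with the required X exceeding 10^10.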

We see that with quadratic speedup even a single floating point or integer operation leads to crossover times of several months. Furthermore, at most 68 binary logical operations can be afforded to stay within our desired crossover time of two weeks, which is too low for any non-trivial application. Keeping in mind that these estimates are pessimistic for classical computation (a single one of today’s classical chips) and overly optimistic for quantum computing (only considering the multiplication of the mantissa and assuming all-to-all qubit connectivity), we come to the clear conclusion that quadratic speedups are insufficient for practical quantum advantage. The numbers look better for cubic or quartic speedups where thousands or millions of operations may be feasible, and we conclude, similarly to Babbush et al., 3 that at least cubic or quartic speedups are required for a practical quantum advantage.
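The dependence on the degree of the speedup can be sketched the same way. The following example assumes a polynomial speedup of degree k (the classical computer needs X^k oracle calls where the quantum computer needs X), the article's fp16 rates, and a time budget of 10^6 seconds. The helper name and the model are illustrative assumptions rather than the authors' exact procedure.

```python
# Assumed fp16 rates from the article and the 10^6 second budget.
CLASSICAL_RATE = 0.55e15   # op/s, special-purpose ASIC
QUANTUM_RATE = 10.5e3      # op/s, hypothetical quantum chip
BUDGET_S = 1e6             # desired crossover time

def max_ops_per_oracle(degree: int,
                       classical_rate: float = CLASSICAL_RATE,
                       quantum_rate: float = QUANTUM_RATE,
                       budget_s: float = BUDGET_S) -> float:
    """Largest oracle cost (in operations) that still crosses over in time.

    Equal runtimes: X**degree * m / classical_rate == X * m / quantum_rate,
    so X = (classical_rate / quantum_rate) ** (1 / (degree - 1)).
    The quantum runtime X * m / quantum_rate must not exceed the budget.
    """
    if degree < 2:
        raise ValueError("a speedup needs degree >= 2")
    crossover_calls = (classical_rate / quantum_rate) ** (1.0 / (degree - 1))
    return budget_s * quantum_rate / crossover_calls

for k in (2, 3, 4):
    print(f"degree {k}: at most {max_ops_per_oracle(k):.3g} fp16 ops per call")
```

In this model a quadratic speedup allows less than one operation per call, while cubic and quartic speedups allow tens of thousands and millions respectively, in line with the orders of magnitude the text describes.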

As a result of our overly optimistic assumptions in favor of quantum computing, these conclusions will remain valid even with significant advances in quantum technology of multiple orders of magnitude.

Practical and impractical applications. We can now use these considerations to discuss several classes of applications where our fundamental bounds draw a line for quantum practicality. The most likely problems to allow for a practical quantum advantage are those with exponential quantum speedup. This includes the simulation of quantum systems for problems in chemistry, materials science, and quantum physics, as well as cryptanalysis using Shor’s algorithm. 16 The solution of linear systems of equations for highly structured problems 12 also has an exponential speedup, but the I/O limitations discussed above will limit the practicality and undo this advantage if the matrix has to be loaded from memory instead of being computed based on limited data or knowledge of the full solution is required (as opposed to just some limited information obtained by sampling the solution).

Equally important, we identify likely dead ends in the maze of applications. A large range of problem areas with quadratic quantum speedups, such as many current machine learning training approaches, accelerating drug design and protein folding with Grover’s algorithm, speeding up Monte Carlo simulations through quantum walks, as well as more traditional scientific computing simulations including the solution of many non-linear systems of equations, such as fluid dynamics in the turbulent regime, weather, and climate simulations will not achieve quantum advantage with current quantum algorithms in the foreseeable future. We also conclude that the identified I/O limits constrain the performance of quantum computing for big data problems, unstructured linear systems, and database search based on Grover’s algorithm such that a speedup is unlikely in those cases. Furthermore, Aaronson et al. 1 show the achievable quantum speedup of unstructured black-box algorithms is limited to $O(N^4)$. This implies that any algorithm achieving higher speedup must exploit structure in the problem it solves.

These considerations help with separating hype from practicality in the search for quantum applications and can guide algorithmic developments. Specifically, our analysis shows it is necessary for the community to focus on super-quadratic speedups, ideally exponential speedups, and one needs to carefully consider I/O bottlenecks when deriving algorithms to exploit quantum computation best. Therefore, the most promising candidates for quantum practicality are small-data problems with exponential speedup. Specific examples where this is the case are quantum problems in chemistry and materials science, 5 which we identify as the most promising application. We recommend using precise requirements models 4 to get more reliable and realistic (less optimistic) estimates in cases where our rough guidelines indicate a potential practical quantum advantage.

Here, we provide more details for how we obtained the numbers mentioned earlier. We compare our quantum computer with a single microprocessor chip like the one used in the NVIDIA A100 GPU. 15 The A100 chip is around 850 mm² in size and manufactured in TSMC’s 7nm N7 silicon process. The A100 shows that such a chip fits around 54.2 billion transistors and can operate at a cycle time of around 0.7 ns.

Determining peak operation throughputs. In Table 1 , we provide concrete examples using three types of operations: logical operations, 16-bit floating point, and 32-bit integer arithmetic operations for numerical modeling. Other datatypes could be modeled using our methodology as well.

Classical NVIDIA A100. According to its datasheet, NVIDIA’s A100 GPU, a SIMT-style von Neumann load-store architecture, delivers 312 tera-operations per second (Top/s) with half-precision floating point (fp16) through tensor cores and 78 Top/s through the normal processing pipeline. NVIDIA assumes a 50/50 mix of addition and multiplication operations and thus we divide the combined 390 Top/s by two, yielding 195 Top/s fp16 performance. The datasheet states 19.5 Top/s for 32-bit integer operations, again assuming a 50/50 mix of addition and multiplication, leading to an effective 9.75 Top/s. The binary tensor core performance is listed as 4,992 Top/s with a limited set of instructions.
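The effective GPU rates follow from simple arithmetic on the datasheet figures quoted above. A minimal sketch, with hypothetical variable and function names:

```python
# Datasheet figures as quoted in the text, in tera-operations per second.
FP16_TENSOR_TOPS = 312.0
FP16_PIPELINE_TOPS = 78.0
INT32_TOPS = 19.5
BINARY_TENSOR_TOPS = 4_992.0

def effective_rate(peak_tops: float) -> float:
    """Halve the peak rate to account for the 50/50 add/multiply mix."""
    return peak_tops / 2.0

fp16_effective = effective_rate(FP16_TENSOR_TOPS + FP16_PIPELINE_TOPS)
int32_effective = effective_rate(INT32_TOPS)

print(f"fp16:  {fp16_effective} Top/s")
print(f"int32: {int32_effective} Top/s")
print(f"bin:   {BINARY_TENSOR_TOPS} Top/s (quoted as listed, not halved)")
```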

Classical special-purpose ASIC. Our main analysis assumes that we build a special-purpose ASIC using a similar technology. If we were to fill the equivalent chip-space of an A100 with a specialized circuit, we would use existing execution units, for which the size is typically measured in gate equivalents (GE). A 16-bit floating point unit (FPU) with addition and multiplication functions requires approximately 7 kGE, a 32-bit integer unit requires 18 kGE, 14 and we assume 50 GE for a simple binary operation. All units include operand buffer registers and support a set of programmable instructions. We note that simple addition or multiplication circuits would be significantly cheaper. If we assume a transistor-to-gate ratio of 10 (ref. 13) and that 50% of the total chip area is used for control logic of a dataflow ASIC with the required buffering, we can fit $54.2\,\mathrm{B} / (7\,\mathrm{k} \cdot 10 \cdot 2) = 387\,\mathrm{k}$ fp16 units. Similarly, we can fit $54.2\,\mathrm{B} / (18\,\mathrm{k} \cdot 10 \cdot 2) = 151\,\mathrm{k}$ int32 units, or $54.2\,\mathrm{B} / (50 \cdot 10 \cdot 2) = 54.2\,\mathrm{M}$ bin2 units on our hypothetical chip. Assuming a cycle time of 0.7 ns, this leads to a total operation rate of 0.55 fp16, 0.22 int32, and 77.4 bin Pop/s for an application-specific ASIC with the A100’s technology and budget. The ASIC thus leads to a raw speedup between approximately 2x and 15x over a programmable circuit. Thus, on classical silicon, the performance ranges approximately between $10^{13}$ and $10^{16}$ op/s for binary, int32, and fp16 types.
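The ASIC estimate can be reproduced directly from the quantities given in the paragraph above. This is a minimal sketch using only those numbers; the constant and function names are hypothetical.

```python
# Quantities stated in the text.
TRANSISTORS = 54.2e9          # transistor budget of the A100-class chip
TRANSISTORS_PER_GATE = 10     # assumed transistor-to-gate ratio
AREA_OVERHEAD = 2             # 50% of the chip goes to control logic
CYCLE_TIME_S = 0.7e-9         # 0.7 ns cycle time
GATE_EQUIVALENTS = {"fp16": 7_000, "int32": 18_000, "bin": 50}

def asic_units(gate_equivalents: float) -> float:
    """Number of execution units that fit on the chip."""
    return TRANSISTORS / (gate_equivalents * TRANSISTORS_PER_GATE * AREA_OVERHEAD)

def asic_rate(gate_equivalents: float) -> float:
    """Operations per second with one operation per unit per cycle."""
    return asic_units(gate_equivalents) / CYCLE_TIME_S

for name, ge in GATE_EQUIVALENTS.items():
    print(f"{name:5s}: {asic_units(ge):.3g} units, {asic_rate(ge) / 1e15:.3g} Pop/s")
```

The sketch assumes every unit completes one operation per cycle, which is the idealized peak the text works with.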


Hypothetical future quantum computer. To determine the costs of $N$-bit multiplication on a quantum computer, we choose the controlled adder from Gidney 6 and implement the multiplication using $N$ single-bit controlled adders, each requiring $2N$ CCZ magic states. These states are produced in so-called “magic state factories” that are implemented on the physical chip. While the resulting multiplier is entirely sequential, we found that this construction allows for more units to be placed on one chip than for a low-depth adder and/or for a tree-like reduction of partial products since the number of CCZ states is lower (and thus fewer magic state factories are required), and the number of work-qubits is lower. The resulting multiplier has a CCZ-depth and count of $2N^2$ using $5N - 1$ qubits ($2N$ input, $2N - 1$ output, $N$ ancilla for the addition).
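The resource counts of this multiplier are easy to tabulate. The sketch below simply encodes the counts stated above (N controlled adders with 2N CCZ states each; 2N input, 2N - 1 output, and N ancilla qubits). The function name is hypothetical.

```python
def multiplier_resources(n_bits: int) -> tuple[int, int]:
    """Return (ccz_count, qubits) for the sequential N-bit multiplier.

    CCZ count: N controlled adders, each consuming 2N CCZ magic states.
    Qubits:    2N input + (2N - 1) output + N ancilla = 5N - 1.
    """
    if n_bits < 1:
        raise ValueError("n_bits must be positive")
    ccz_count = n_bits * (2 * n_bits)
    qubits = 2 * n_bits + (2 * n_bits - 1) + n_bits
    return ccz_count, qubits

for n in (10, 32):
    ccz, qubits = multiplier_resources(n)
    print(f"N={n}: {ccz} CCZ states, {qubits} qubits")
```

Because the multiplier is sequential, the CCZ count is also its CCZ depth.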

To compute the space overhead due to CCZ factories, we first use the analysis of Gidney and Fowler 7 to compute the number of physical qubits per factory when aiming for circuits (programs) using $\approx 10^8$ CCZ magic states with physical gate errors of $10^{-3}$. We approximate the overhead in terms of logical qubits by dividing the physical space overhead by $2d^2$, where we choose the error-correcting code distance $d = 31$ to be the same as the distance used for the second level of distillation. 7 Thus we divide Gidney and Fowler’s 147,904 physical qubits per factory (for details consult the ancillary spreadsheet (field B40) of Gidney and Fowler) by $2d^2 = 2 \cdot 31^2$ and get an equivalent space of 77 logical qubits per factory.
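The conversion from physical to logical qubits per factory is a one-line calculation. A minimal sketch, using the 147,904 physical qubits and the divisor 2 · 31² given in the text; the names are hypothetical.

```python
PHYSICAL_QUBITS_PER_FACTORY = 147_904   # from Gidney and Fowler, as quoted
CODE_DISTANCE = 31                      # distance entering the stated divisor

def logical_qubits_per_factory(physical_qubits: int = PHYSICAL_QUBITS_PER_FACTORY,
                               distance: int = CODE_DISTANCE) -> float:
    """Approximate one logical qubit as 2 * d**2 physical qubits."""
    return physical_qubits / (2 * distance ** 2)

equivalent = logical_qubits_per_factory()
print(f"{equivalent:.2f} logical qubits per factory, about {round(equivalent)}")
```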

For the multiplier of the 10-bit mantissa of an fp16 floating point number, we need $2 \cdot 10^2 = 200$ CCZ states and $5 \cdot 10 = 50$ qubits. Since each factory takes 5.5 cycles [7] and we can pipeline the production of CCZ states, we assume 5.5 factories per multiplication unit such that multipliers do not wait for magic state production on average. Thus, each multiplier requires 200 cycles and $5N + 5.5 \cdot 77 = 50 + 5.5 \cdot 77 = 473.5$ qubits. With a total of 10,000 logical qubits, we can implement 21 10-bit multipliers on our hypothetical quantum chip. With a 10 μs cycle time and the 200-cycle latency, we get a final rate of less than $10^5$ cycle/s / (200 cycle/op) · 21 = 10.5 kop/s. For int32 ($N = 32$), the calculation is equivalent. For binary, we assume two input qubits and one output qubit for the (binary) adder (Toffoli gate), which does not need ancillas. The results are summarized in Table 1.
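The fp16 estimate above can be retraced step by step. The sketch below is illustrative only: the function names are hypothetical, the code distance of 31 is read off the $2 \cdot 31^2$ divisor in the text, and the qubit count follows the text's rounding of $5N - 1$ up to $5N$.

```python
# Sketch of the quantum multiplier estimate; names are illustrative assumptions.
LOGICAL_QUBITS = 10_000            # logical qubits on the hypothetical chip
CYCLE_TIME_S = 10e-6               # 10 microsecond logical cycle time
FACTORY_PHYSICAL_QUBITS = 147_904  # per CCZ factory, from Gidney and Fowler
CODE_DISTANCE = 31                 # distance implied by the 2 * 31^2 divisor
FACTORIES_PER_MULTIPLIER = 5.5     # one factory takes 5.5 cycles per CCZ state


def factory_logical_qubits():
    """Logical-qubit footprint of one factory: physical qubits / (2 d^2), rounded."""
    return round(FACTORY_PHYSICAL_QUBITS / (2 * CODE_DISTANCE ** 2))


def multiplier_qubits(n_bits):
    """Logical qubits for one multiplier plus its share of magic state factories."""
    return 5 * n_bits + FACTORIES_PER_MULTIPLIER * factory_logical_qubits()


def multiplier_rate(n_bits):
    """Multiplications per second across all multipliers that fit on the chip."""
    ccz_depth = 2 * n_bits ** 2                          # cycles per multiplication
    units = int(LOGICAL_QUBITS // multiplier_qubits(n_bits))
    return units / (ccz_depth * CYCLE_TIME_S)


if __name__ == "__main__":
    print(factory_logical_qubits())   # logical qubits per factory
    print(multiplier_qubits(10))      # qubits per 10-bit multiplier
    print(multiplier_rate(10))        # fp16 mantissa multiplications per second
```

Calling `multiplier_rate(32)` applies the same model to int32, under the assumption that the factory provisioning per multiplier stays at 5.5.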

A note on parallelism. We assumed massively parallel execution of the oracle on both the classical and quantum computer (that is, oracles with a depth of one). If the oracle does not admit such parallelization, for example, if depth = work in the worst-case scenario, then the comparison becomes more favorable towards the quantum computer. One could model this scenario by allowing the classical computer to only perform one operation per cycle. With a 2GHz clock frequency, this would mean a slowdown of about 100,000 times for fp16 on the GPU. In this extremely unrealistic algorithmic worst case, the oracle would still have to consist of only several thousands of fp16 operations with a quadratic speedup. However, we note that in practice, most oracles have low depth and parallelization across a single chip is achievable, which is what we assumed.

Determining maximum operation counts per oracle call. In Table 2, we list the maximum number of operations of a certain type that can be run to achieve a quantum speedup within a runtime of $10^6$ seconds (about 11.6 days, a little under two weeks). The maximum number of classical operations that can be performed with a single classical chip in $10^6$ seconds would be: 0.55 fp16, 0.22 int32, and 77.4 bin Zop. Similarly, assuming the rates from Table 1, for a quantum chip: 7, 4, 2, and 350 Gop, respectively.

We now assume that all calculations are used in oracle calls on the quantum computer and we ignore all further costs on the quantum machine. We start by modeling algorithms that provide a polynomial $X^k$ speedup, for small constants $k$. For example, for Grover’s algorithm [11], $k = 2$. It is clear that quantum computers are asymptotically faster (in the number of oracle queries) for any $k > 1$. However, we are interested in finding the oracle complexity (that is, the number of operations required to evaluate it) for which a quantum computer is faster than a classical computer within the time window of $10^6$ seconds.

Let the number of operations required to evaluate a single oracle call be $M$ and let the number of required invocations be $N$. It takes a classical computer time $T_c = N^k \cdot M \cdot t_c$, whereas a quantum computer solves the same problem in time $T_q = N \cdot M \cdot t_q$, where $t_c$ and $t_q$ denote the time to evaluate an operation on a classical and on a quantum computer, respectively. By demanding that the quantum computer should solve the problem faster than the classical computer and within $10^6$ seconds, we find

$$N > \left(\frac{t_q}{t_c}\right)^{1/(k-1)} \quad\text{and}\quad N \cdot M \cdot t_q \le 10^6\,\mathrm{s},$$

which allows us to compute the maximal number of basic operations per oracle evaluation such that the quantum computer still achieves a practical speedup:

$$M \le \frac{10^6\,\mathrm{s}}{t_q \left(t_q / t_c\right)^{1/(k-1)}}.$$
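As a rough numerical illustration of this bound, the sketch below assumes the quantum computer needs $N$ oracle calls where the classical one needs $N^k$, so that break-even occurs at $N = (t_q/t_c)^{1/(k-1)}$ and the time budget then caps $M$. The function name and the choice of inputs (the fp16 ASIC rate of 0.55 Pop/s and the quantum rate of 10.5 kop/s quoted earlier) are illustrative assumptions, not values taken from Table 2.

```python
# Sketch of the break-even bound on oracle size; names are illustrative assumptions.
TIME_BUDGET_S = 1e6  # runtime window considered in the text


def max_ops_per_oracle(rate_classical, rate_quantum, k):
    """Largest oracle size M (in basic operations) for which the quantum
    computer is still faster than the classical one within TIME_BUDGET_S,
    assuming T_c = N^k * M * t_c and T_q = N * M * t_q."""
    if k <= 1:
        raise ValueError("a polynomial speedup requires k > 1")
    t_c = 1.0 / rate_classical             # seconds per classical operation
    t_q = 1.0 / rate_quantum               # seconds per quantum operation
    n_min = (t_q / t_c) ** (1.0 / (k - 1)) # break-even number of oracle calls
    return TIME_BUDGET_S / (t_q * n_min)


if __name__ == "__main__":
    fp16_asic = 0.55e15    # op/s, ASIC estimate from the text
    fp16_quantum = 10.5e3  # op/s, quantum estimate from the text
    for k in (2, 3, 4):
        print(k, max_ops_per_oracle(fp16_asic, fp16_quantum, k))
```

Under these inputs a quadratic speedup leaves room for less than one fp16 operation per oracle call, while higher-degree speedups admit much larger oracles, which matches the qualitative conclusion of the surrounding discussion.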

Acknowledgments. We thank L. Benini for helpful discussions about ASIC and processor design and related overheads and W. van Dam and anonymous reviewers for comments that improved an earlier draft.

Copyright held by authors. Publication rights licensed to ACM. Request permission to publish from [email protected]

The Digital Library is published by the Association for Computing Machinery. Copyright © 2023 ACM, Inc.

May 2023 Issue

Published: May 1, 2023

Vol. 66 No. 5

Pages: 82-87

MIT News | Massachusetts Institute of Technology

Tests show high-temperature superconducting magnets are ready for fusion

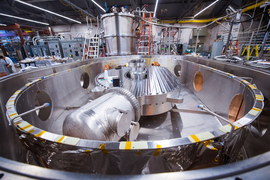

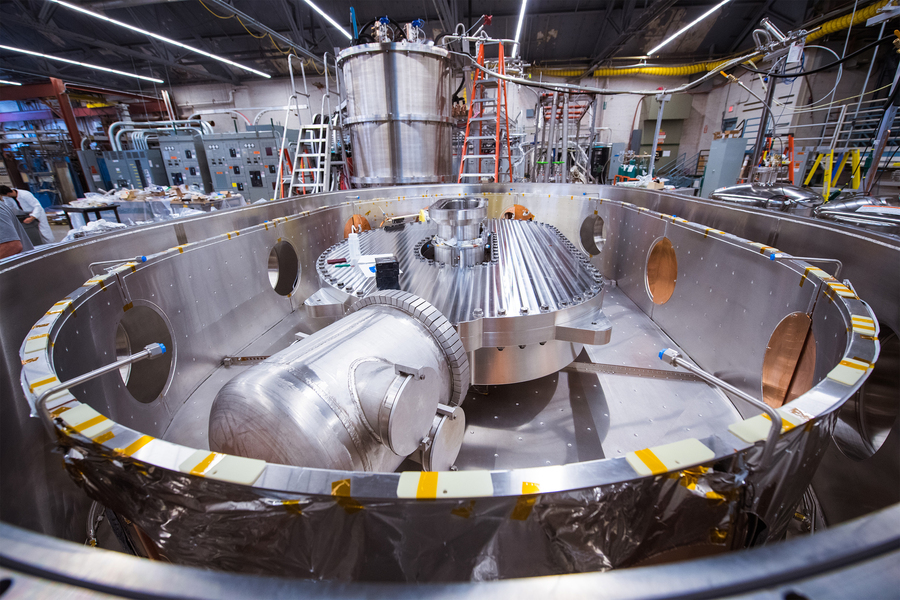

In the predawn hours of Sept. 5, 2021, engineers achieved a major milestone in the labs of MIT’s Plasma Science and Fusion Center (PSFC), when a new type of magnet, made from high-temperature superconducting material, achieved a world-record magnetic field strength of 20 tesla for a large-scale magnet. That’s the intensity needed to build a fusion power plant that is expected to produce a net output of power and potentially usher in an era of virtually limitless power production.

The test was immediately declared a success, having met all the criteria established for the design of the new fusion device, dubbed SPARC, for which the magnets are the key enabling technology. Champagne corks popped as the weary team of experimenters, who had labored long and hard to make the achievement possible, celebrated their accomplishment.

But that was far from the end of the process. Over the ensuing months, the team tore apart and inspected the components of the magnet, pored over and analyzed the data from hundreds of instruments that recorded details of the tests, and performed two additional test runs on the same magnet, ultimately pushing it to its breaking point in order to learn the details of any possible failure modes.

All of this work has now culminated in a detailed report by researchers at PSFC and MIT spinout company Commonwealth Fusion Systems (CFS), published in a collection of six peer-reviewed papers in a special edition of the March issue of IEEE Transactions on Applied Superconductivity . Together, the papers describe the design and fabrication of the magnet and the diagnostic equipment needed to evaluate its performance, as well as the lessons learned from the process. Overall, the team found, the predictions and computer modeling were spot-on, verifying that the magnet’s unique design elements could serve as the foundation for a fusion power plant.

Enabling practical fusion power

The successful test of the magnet, says Hitachi America Professor of Engineering Dennis Whyte, who recently stepped down as director of the PSFC, was “the most important thing, in my opinion, in the last 30 years of fusion research.”

Before the Sept. 5 demonstration, the best-available superconducting magnets were powerful enough to potentially achieve fusion energy — but only at sizes and costs that could never be practical or economically viable. Then, when the tests showed the practicality of such a strong magnet at a greatly reduced size, “overnight, it basically changed the cost per watt of a fusion reactor by a factor of almost 40 in one day,” Whyte says.

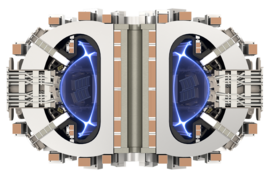

“Now fusion has a chance,” Whyte adds. Tokamaks, the most widely used design for experimental fusion devices, “have a chance, in my opinion, of being economical because you’ve got a quantum change in your ability, with the known confinement physics rules, about being able to greatly reduce the size and the cost of objects that would make fusion possible.”

The comprehensive data and analysis from the PSFC’s magnet test, as detailed in the six new papers, has demonstrated that plans for a new generation of fusion devices — the one designed by MIT and CFS, as well as similar designs by other commercial fusion companies — are built on a solid foundation in science.

The superconducting breakthrough

Fusion, the process of combining light atoms to form heavier ones, powers the sun and stars, but harnessing that process on Earth has proved to be a daunting challenge, with decades of hard work and many billions of dollars spent on experimental devices. The long-sought, but never yet achieved, goal is to build a fusion power plant that produces more energy than it consumes. Such a power plant could produce electricity without emitting greenhouse gases during operation, while generating very little radioactive waste. Fusion’s fuel, a form of hydrogen that can be derived from seawater, is virtually limitless.

But making it work requires compressing the fuel at extraordinarily high temperatures and pressures, and since no known material could withstand such temperatures, the fuel must be held in place by extremely powerful magnetic fields. Producing such strong fields requires superconducting magnets, but all previous fusion magnets have been made with a superconducting material that requires frigid temperatures of about 4 degrees above absolute zero (4 kelvins, or about -269 degrees Celsius). In the last few years, a newer material nicknamed REBCO, for rare-earth barium copper oxide, was added to fusion magnets, and allows them to operate at 20 kelvins, a temperature that, despite being only 16 kelvins warmer, brings significant advantages in terms of material properties and practical engineering.

Taking advantage of this new higher-temperature superconducting material was not just a matter of substituting it in existing magnet designs. Instead, “it was a rework from the ground up of almost all the principles that you use to build superconducting magnets,” Whyte says. The new REBCO material is “extraordinarily different than the previous generation of superconductors. You’re not just going to adapt and replace, you’re actually going to innovate from the ground up.” The new papers in Transactions on Applied Superconductivity describe the details of that redesign process, now that patent protection is in place.

A key innovation: no insulation

One of the dramatic innovations, which had many others in the field skeptical of its chances of success, was the elimination of insulation around the thin, flat ribbons of superconducting tape that formed the magnet. Like virtually all electrical wires, conventional superconducting magnets are fully protected by insulating material to prevent short-circuits between the wires. But in the new magnet, the tape was left completely bare; the engineers relied on REBCO’s much greater conductivity to keep the current flowing through the material.

“When we started this project, in let’s say 2018, the technology of using high-temperature superconductors to build large-scale high-field magnets was in its infancy,” says Zach Hartwig, the Robert N. Noyce Career Development Professor in the Department of Nuclear Science and Engineering. Hartwig has a co-appointment at the PSFC and is the head of its engineering group, which led the magnet development project. “The state of the art was small benchtop experiments, not really representative of what it takes to build a full-size thing. Our magnet development project started at benchtop scale and ended up at full scale in a short amount of time,” he adds, noting that the team built a 20,000-pound magnet that produced a steady, even magnetic field of just over 20 tesla — far beyond any such field ever produced at large scale.

“The standard way to build these magnets is you would wind the conductor and you have insulation between the windings, and you need insulation to deal with the high voltages that are generated during off-normal events such as a shutdown.” Eliminating the layers of insulation, he says, “has the advantage of being a low-voltage system. It greatly simplifies the fabrication processes and schedule.” It also leaves more room for other elements, such as more cooling or more structure for strength.

The magnet assembly is a slightly smaller-scale version of the ones that will form the donut-shaped chamber of the SPARC fusion device now being built by CFS in Devens, Massachusetts. It consists of 16 plates, called pancakes, each bearing a spiral winding of the superconducting tape on one side and cooling channels for helium gas on the other.

But the no-insulation design was considered risky, and a lot was riding on the test program. “This was the first magnet at any sufficient scale that really probed what is involved in designing and building and testing a magnet with this so-called no-insulation no-twist technology,” Hartwig says. “It was very much a surprise to the community when we announced that it was a no-insulation coil.”

Pushing to the limit … and beyond