
April 27, 2022

Millions of research papers are published in a year. How do scientists keep up?

by Eva Botkin-Kowacki, Northeastern University

If you want to be a scientist, you're going to have to do a lot of reading.

Science is an endeavor focused on building and sharing knowledge. Researchers publish papers detailing their discoveries, breakthroughs, and innovations in order to share those revelations with colleagues. And millions of scientific papers are published each year.

Keeping up with the latest developments in their field is a challenge for researchers at all points of their careers, but it especially affects early-career scientists, as they also have to read the many papers that represent the foundation of their field.

"It's impossible to read everything. Absolutely impossible," says Ajay Satpute, director of the Affective and Brain Science Lab and an assistant professor of psychology at Northeastern. "And if you don't know everything that has happened in the field, there's a real chance of reinventing the wheel over and over and over again." The challenge, he says, is to figure out how to train the next generation of scientists economically, balancing the need to read all the seminal papers with training them as researchers in their own right.

That task is only getting more difficult, says Alessia Iancarelli, a Ph.D. student studying affective and social psychology in Satpute's lab. "The volume of published literature just keeps increasing," she says. "How are scientists able to develop their scholarship in a field given this huge amount of literature?" They have to pick and choose what to read.

But common approaches to that prioritization, Iancarelli says, can incorporate biases and leave out crucial corners of the field. So Iancarelli, Satpute and colleagues developed a machine learning approach to find a better, less biased way to make a reading list. Their approach, described in results published last week in the journal PLOS One, also helps reduce gender bias.

"There really is a problem about how we develop scholarship," Satpute says. Right now, scientists will often use a search tool like Google Scholar on a topic and start from there, he says. "Or, if you're lucky, you'll get a wonderful instructor and have a great syllabus. But that's going to be basically the field through that person's eyes. And so I think that this really fills a niche that might help create balance and cross-disciplinary scholarship without necessarily having access to a wonderful instructor, because not everyone gets that."

The problem with something like Google Scholar, Iancarelli explains, is that it will give you the most popular papers in a field, measured by how many other papers have cited them. If there are subsets of that field that aren't as popular but are still relevant, the important papers on those topics might get missed with such a search.

Take, for example, the topic of aggression (which is the subject the researchers focused on to develop their algorithm). Media and video games are a particularly hot topic in aggression research, Iancarelli says, and therefore there are a lot more papers on that subset of the field than on other topics, such as the role of testosterone and social aggression.

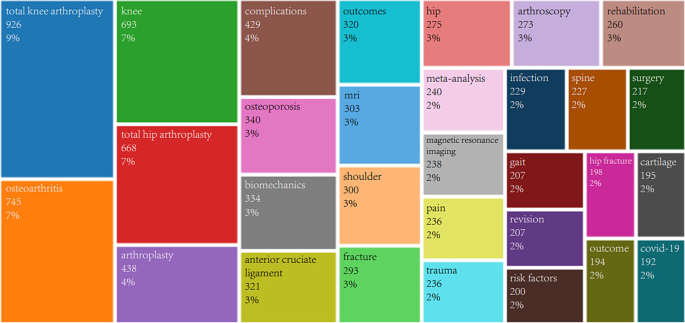

So Iancarelli decided to group papers on the topic of aggression into communities. Using citation network analysis, she identified 15 research communities on aggression. Rather than looking at the raw number of times a paper has been cited in other research papers, the algorithm identifies communities of papers that tend to cite each other or the same core set of papers. The largest communities it revealed were media and video games, stress, traits and aggression, rumination and displaced aggression, the role of testosterone, and social aggression. But there were also some surprises, such as a smaller community of research papers focused on aggression and horses.
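The contrast between ranking by raw citation count and ranking within communities can be sketched in a few lines of Python. This is only an illustration, not the team's released code: the paper names and citation data are invented, and connected components over "cites each other or shares a reference" links are used as a crude stand-in for a real community detection algorithm.

```python
from itertools import combinations

# Hypothetical toy data: each paper maps to the set of works it cites.
CITES = {
    "games_A": {"core_media_1", "core_media_2"},
    "games_B": {"core_media_1", "core_media_2", "games_A"},
    "games_C": {"core_media_1", "games_A", "games_B"},
    "testo_A": {"core_hormone_1"},
    "testo_B": {"core_hormone_1", "testo_A"},
    "horse_A": {"core_equine_1"},
    "horse_B": {"core_equine_1", "horse_A"},
}


def citation_counts(cites):
    """Count how often each work is cited within the toy corpus."""
    counts = {}
    for refs in cites.values():
        for ref in refs:
            counts[ref] = counts.get(ref, 0) + 1
    return counts


def global_top(cites, n):
    """The n most-cited papers overall (ties broken alphabetically)."""
    counts = citation_counts(cites)
    return sorted(cites, key=lambda p: (-counts.get(p, 0), p))[:n]


def communities(cites):
    """Group papers linked by a direct citation or a shared reference.

    Assumption: connected components stand in for community detection.
    """
    papers = sorted(cites)
    neighbours = {p: set() for p in papers}
    for a, b in combinations(papers, 2):
        if a in cites[b] or b in cites[a] or cites[a] & cites[b]:
            neighbours[a].add(b)
            neighbours[b].add(a)
    seen, groups = set(), []
    for start in papers:
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node not in group:
                group.add(node)
                stack.extend(neighbours[node] - group)
        seen |= group
        groups.append(sorted(group))
    return groups


def top_per_community(cites):
    """The most-cited paper inside each community."""
    counts = citation_counts(cites)
    return [max(group, key=lambda p: counts.get(p, 0))
            for group in communities(cites)]


print(global_top(CITES, 2))      # both picks come from the largest topic
print(top_per_community(CITES))  # one pick from each community
```

In this toy corpus the two globally most-cited papers both come from the video game cluster, while the per-community ranking surfaces one paper each from the video game, horse, and testosterone clusters.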

"If you use community detection, then you get this really rich, granular look at the aggression field," Satpute says. "You have sort of a bird's-eye-view of the entire field rather than [it appearing that] the field of aggression is basically media, video games, and violence."

In addition to diversifying the topics featured by using this community approach, the researchers also found that the percentage of articles with women first authors dubbed influential by the algorithm doubled in comparison to when they focused only on total citation counts. (Iancarelli adds there might be some biases baked into that result, as the team couldn't ask the authors directly about their gender identity and instead had to rely on assumptions based on the author's name, picture, and any pronouns used to refer to them.)

The team has released the code behind this algorithm so that others can use it and replicate their citation network analysis approach in other fields of research.

For Iancarelli, there's another motivation: "I would love to use this work to create a syllabus and teach my own course on human aggression. I would really love to base the syllabus on the most relevant papers from each different community to give a true general view of the human aggression field."

Journal information: PLoS ONE

Provided by Northeastern University




Open Access

Peer-reviewed

Research Article

More journal articles and fewer books: Publication practices in the social sciences in the 2010’s

Roles Conceptualization, Data curation, Investigation, Methodology, Writing – original draft, Writing – review & editing

Affiliation Academic Analytics Research Center (AARC), Columbus, Ohio, United States of America

Roles Conceptualization, Data curation, Formal analysis, Methodology, Project administration, Visualization, Writing – review & editing


William E. Savage, Anthony J. Olejniczak

Published: February 3, 2022

https://doi.org/10.1371/journal.pone.0263410


The number of scholarly journal articles published each year is growing, but little is known about the relationship between journal article growth and other forms of scholarly dissemination (e.g., books and monographs). Journal articles are the de facto currency of evaluation and prestige in STEM fields, but social scientists routinely publish books as well as articles, representing a unique opportunity to study increased article publications in disciplines with other dissemination options. We studied the publishing activity of social science faculty members in 12 disciplines at 290 Ph.D. granting institutions in the United States between 2011 and 2019, asking: 1) have publication practices changed such that more or fewer books and articles are written now than in the recent past?; 2) has the percentage of scholars actively participating in a particular publishing type changed over time?; and 3) do different age cohorts evince different publication strategies? In all disciplines, journal articles per person increased between 3% and 64% between 2011 and 2019, while books per person decreased by at least 31% and as much as 54%. All age cohorts show increased article authorship over the study period, and early career scholars author more articles per person than the other cohorts in eight disciplines. The article-dominated literatures of the social sciences are becoming increasingly similar to those of STEM disciplines.

Citation: Savage WE, Olejniczak AJ (2022) More journal articles and fewer books: Publication practices in the social sciences in the 2010’s. PLoS ONE 17(2): e0263410. https://doi.org/10.1371/journal.pone.0263410

Editor: Joshua L. Rosenbloom, Iowa State University, UNITED STATES

Received: August 27, 2021; Accepted: January 18, 2022; Published: February 3, 2022

Copyright: © 2022 Savage, Olejniczak. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All anonymized data, scripts, and statistical results files are available from the OSF database (https://osf.io/2x4uf/, DOI 10.17605/OSF.IO/2X4UF).

Funding: WES and AJO received data and computing resources from Academic Analytics, LLC (http://www.academicanalytics.com). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: AJO and WES are paid employees of Academic Analytics, LLC. None of the authors has an equity interest in Academic Analytics, LLC. The results presented reflect the authors’ opinions, and do not necessarily reflect the opinions or positions of Academic Analytics, LLC. Academic Analytics, LLC management had no oversight or involvement in the project and were not involved in preparation or review of the manuscript. All work was done as part of the respective authors’ research, with no additional or external funding. This does not alter our adherence to PLOS ONE policies on sharing data and materials.

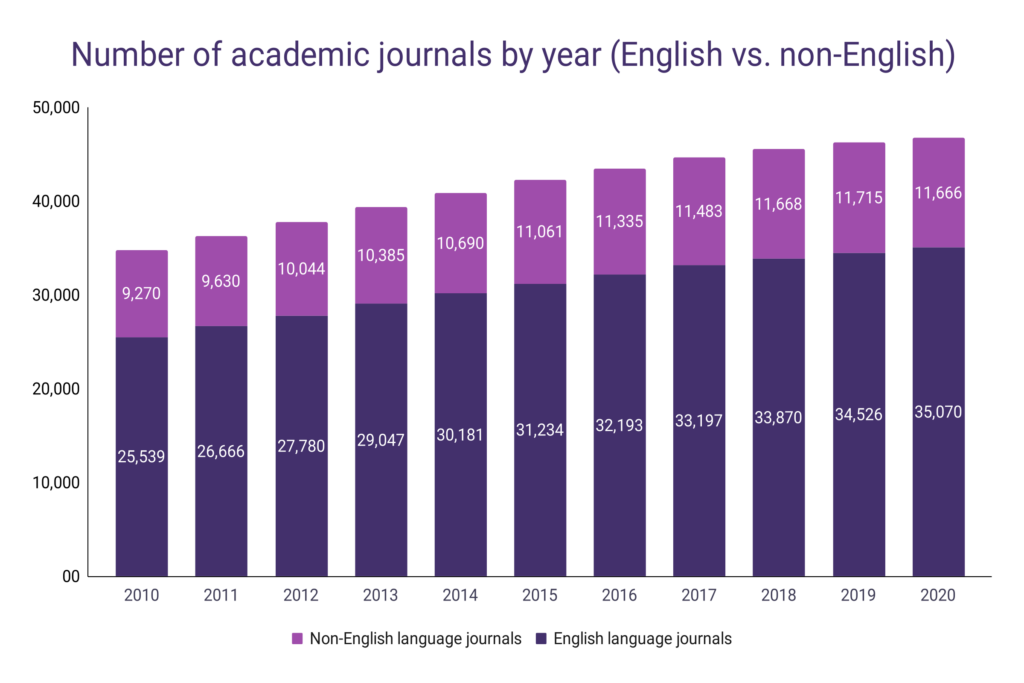

Introduction

The number of scientific and scholarly journal articles published each year has been increasing for some time. Kyvik [ 1 ] estimated there was a 30% increase in scientific and scholarly publishing between 1980 and 2000. In a later study, Kyvik and Aksnes [ 2 ] noted that Web of Science records increased from 500,000 indexed articles in 1981 to 1.5 million indexed articles in 2013. In 2018 Johnson et al. [ 3 ] estimated that the number of scholarly journals grew 5–6% per year over the past decade, and that there were 33,100 peer-reviewed English language journals distributing approximately 3 million articles each year. Much less attention has been given to the scholarly production of books, and the potential relationship between increased journal article publishing and book publishing practices. The social sciences in particular are comprised of several disciplines in which scholars regularly publish both journal articles and books, representing a unique opportunity to explore the growth of journal article publications in a sample of disciplines where journal articles are not the sole preferred mode of knowledge dissemination.

In this study, we examine how disciplinary publishing practices in the social sciences have changed over a recent nine-year period at Ph.D. granting universities in the United States. We begin by reviewing two of several proposed factors that may underlie the increase in journal article publication in the social sciences. We then quantify changes in total and per capita journal article and book publication output of each discipline, as well as the rate of participation in both modes of dissemination (i.e., the percentage of scholars who have participated in journal article and book authorship over time). Both the rate of publication and the rate of participation are compared across academic age groups (early career, mid-career, and senior scholars). Specifically, we address the following questions using a large database of scholarly activity spanning several social sciences disciplines and including tens of thousands of individual scholars:

- Has the publication strategy of social science disciplines changed such that more or fewer books and journal articles are being written now than in the recent past (in total and in the context of faculty population changes in these disciplines over time)?

- Has the percentage of scholars actively participating in a particular publishing type changed over time (e.g., are fewer scholars authoring books, or are fewer books being published per scholar, or both)?

- Do different faculty age groups show different publication strategies?

Changes in the social science research environment and performance-based measures

Within the context of overall growth in scientific and scholarly article publication rates, changes in social science journal article publishing have been studied by several researchers. Warren [ 4 ] observed increased publication rates among newly hired social science scholars, finding that publishing expectations are now twice as great as they were in the early 1990’s for graduates seeking an assistant professor position or assistant professors seeking promotion to associate professor. In a prior study, Bauldry [ 5 ] examined 403 new hires in sociology at 98 research universities and found assistant professors hired in either 2011 or 2012 had a median number of publications two to three times greater than new assistant professors hired in 2007. Increasing rates of co-authorship of social science journal articles have also been studied. In a study of 56 subject categories in the Web of Science: Social Science Citation Index, Henriksen [ 6 ] observed that larger increases in the share of co-authorships occur in disciplines using quantitative research methods, large data sets, and team research, as well as those with greater research specialization.

Among the suggestions that Kyvik and Aksnes [ 2 ] offered as contributing factors to the growth of scholarly publishing was the improvement of research environments and external funding. However, they focused primarily on the impact of external funding, simply noting that research environments had benefitted from the introduction of personal computers, databases, and the internet. In 2009, Lazer et al. [ 7 ] described the emergent field of computational social science “that leverages the capacity to collect and analyze data with an unprecedented breadth and depth and scale.” King [ 8 ] describes the dramatic methodological changes in computational social sciences as “from isolated scholars toiling away on their own to larger scale, collaborative, interdisciplinary, lab-style research teams; and from a purely academic pursuit to having a major impact on the world.” Since those early days, data repositories such as the Inter-university Consortium for Political and Social Research (ICPSR) and the Harvard-MIT Data Center now make large datasets and technical services available to researchers. On-campus resources for social science researchers at American universities have become widespread. For example, every member institution of the Association of American Universities (AAU) now has at least one center, institute, or program exploring computational social science research. The availability of large research grants further demonstrates the growing importance of quantitative social sciences. The U.S. National Science Foundation (NSF) Directorate of Social, Behavioral and Economic Science, for example, funds projects through a program called Human Networks and Data Science [ 9 ]. The emergence and expansion of quantitative social sciences has had a clear impact on the social science research environment. Assembly and analysis of massive databases, data verification, statistical modeling, and visualization require levels of expertise beyond a single researcher. 
Jones’ [ 10 ] recent analysis of all published articles in economics from 1950–2018 estimates that single-author articles ceased to be the majority of economics papers in 2005, and that co-authored papers now constitute 74% of all articles in the discipline.

Another potential factor in the growth of publishing offered by Kyvik and Aksnes [ 2 ] is the emergence and spread of performance-based research funding systems in which published output has become an important parameter in evaluation of individual scholars, their departments, and their universities. Hermanowicz [ 11 ] observed that both the university and the individual faculty member have become entrepreneurs for whom “research and publication have become the main currency in which prestige is traded.” In short, research evaluation and the conferral of prestige share the same currency: scholarly publication. The UK’s Research Excellence Framework (REF), for example, determines a large proportion of national funding for institutional research in the United Kingdom [ 12 ]. Fry et al. [ 13 ] conducted interviews in December, 2008 aimed at understanding how research assessment may influence scholarly and scientific publication in the UK. They reported a near-universal view among respondents that the publication of peer-reviewed journal articles was a fundamental disciplinary and institutional expectation, and that there was increasing institutional pressure to publish more frequently. Across disciplines, institutional emphasis was placed on peer-reviewed journal articles as the preferred output which would most contribute to their institution’s REF submissions. Additionally, emphasis was placed on collaborative research, suggesting that collaborative team projects were best-suited to REF submissions. In their review of the evolution of UK economics under the REF, Lee et al. [ 14 ] note that over the course of four research assessment exercises, 1992, 1996, 2001, and 2008, the proportion of all journal submissions appearing in Diamond’s [ 15 ] 27 core prestigious economics journals increased from 31% to 44%. Further, the percentage of journal titles in all economics departmental submissions increased from 53% in 1992 to 91% in 2008 [ 14 ].

Another analysis of REF submissions for the 1996, 2001, and 2008 REF cycles found that the volume of articles grew from 62% of submitted publications in 1996 to 75% in 2008 [ 16 ]. The increase in articles came at the expense of other publishing types: engineering submissions included fewer conference proceedings and social sciences submissions included fewer books. Evidence from the REF demonstrates that performance-based evaluation can catalyze more collaborative research and more frequent journal publication to the exclusion of other publication types. The United States does not have a national assessment framework tied to research funding, but widely consulted research evaluation data sources in the US also favor journal articles over books; the most recent National Research Council report on US doctoral programs, for example, did not include books in its tally of social science publications [ 17 ]. Influential public university rankings also omit books (e.g., QS World University Rankings) or minimize books’ weight relative to journal articles (e.g., US News and World Report) [ 18 , 19 ].

Materials and methods

Data source.

We mined the Academic Analytics, LLC (AcA) commercial database for the names, departmental affiliation(s), and year of terminal degree of tenured and tenure-track scholars (Assistant Professor, Associate Professor, and Professor titles) over 9 years (2011–2019) in the following 12 social and behavioral science fields at Ph.D. granting universities in the United States:

- Anthropology

- Criminal Justice and Criminology

- Educational Psychology

- International Affairs and Development

- Political Science

- Public Administration

- Public Policy

- Social Work/Social Welfare

The AcA database compiles information on faculty members associated with academic departments at 380 Ph.D.-granting universities in the United States. AcA faculty rosters are updated at least annually by manual collection from publicly available resources, supplemented by verification and submission of faculty lists from some institutions. Each academic department is manually assigned to one or more of 170 subject classifications based on the National Center for Education Statistics (NCES) Classification of Instructional Programs (CIP) code classifications [ 20 ]. A complete list of the departments included in this study and their subject classifications is publicly available (https://osf.io/2x4uf/). AcA matches scholarly publications to their authors using a semi-automated matching process. All journal articles indexed in CrossRef (https://www.crossref.org/) are ingested into AcA’s data warehouse and matched to their author(s). Our study includes only the peer-reviewed journal articles; other article types that are also assigned DOIs but do not necessarily represent original research are excluded (e.g., book reviews, obituaries). Harzing [ 21 ] found that CrossRef has “similar or better coverage” of publications than Web of Science and Scopus, but is less comprehensive than Google Scholar and Microsoft Academic. A CrossRef API query performed in January 2022 reveals 6,200,221 works of all types are indexed in CrossRef with a publication date in 2019. Of these works, AcA identified 367,883 unique peer-reviewed journal articles (co-)authored by faculty members at the Ph.D. granting universities in their database (i.e., in 2019, about 5.9% of the works indexed in CrossRef represent peer-reviewed journal articles by authors at the institutions covered by AcA). Works indexed by CrossRef that were not matched to scholars in the AcA database are either non-journal article types (e.g., conference proceedings, book chapters, working papers), or they were authored by scholars outside the United States or at non-Ph.D. granting US universities.

AcA also matches academic book publications from Baker & Taylor ( https://www.baker-taylor.com/ ) to their respective author(s), editor(s), and translator(s). Baker & Taylor is among the most widely used book vendors among public libraries; scholarly books from 5,774 publishers catalogued by Baker & Taylor are matched to faculty members in the Academic Analytics database (the list of academic publishers is available at https://osf.io/2x4uf/ ). For both publication types, a 5-year window of authored publications was extracted (e.g., for the 2011 database, publications authored between 2007–2011 were extracted). All faculty members in departments assigned to one of the 12 social sciences disciplines were included in the sample, including those with zero articles or books in the previous 5 years.

The earliest iteration of the AcA database we extracted (2011) contains 27,447 unique faculty members affiliated with 1,476 social science departments at 267 universities that offered a social science Ph.D. degree in 2011. The most recent database (2019) contains 28,928 unique faculty members affiliated with 1,561 departments at 290 universities that offered a social science Ph.D. degree in 2019. Anonymized raw data, including faculty and department lists for each of the nine years studied, journal titles, book presses, and the crosswalk of university departments to scholarly disciplines are publicly available ( https://osf.io/2x4uf/ ).

Data analysis

All post-extract data handling, computations and statistical tests were performed in R v1.4.0 [ 22 ]. The total publication output of each discipline in each database year was tabulated as the unique number of articles and books published by scholars whose academic departments are classified within that discipline category. Each publication is counted only once per discipline, even if more than one scholar in that discipline shared authorship of that work. For each discipline and each year, we calculated the number of articles per faculty, the number of books per faculty, and the number of books per article. Changes in the number of departments in each discipline over the 9-year period may reflect the creation of new departments at the universities studied, but it may also reflect an increased scope of data collection in the AcA database. We attempted to control for the creation (or dissolution) of departments (and the possibility of increased faculty roster collection efforts) by calculating the same totals and ratios as above for the subset of departments that appear in the AcA database in all nine database years. Likewise, to explore whether changes in article and book publication are related to changing demographics within disciplines or due to changes in the publication practices of individual scholars, we calculated the same totals and ratios as above for the subset of faculty members who appear in all nine database years. The median number of authors on each article was also tabulated for each discipline in each year, to explore the growth of team authorship.
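The counting rule described above (each work counted once per discipline, however many of that discipline's scholars share authorship) can be illustrated with a short sketch. The authors performed their analysis in R; the Python below is not their script, and the roster, author identifiers, and publication identifiers are all hypothetical.

```python
from collections import defaultdict

# Hypothetical roster: author id -> discipline. "a4" has no publications
# but still counts toward the faculty denominator.
ROSTER = {
    "a1": "Anthropology", "a2": "Anthropology",
    "a3": "Anthropology", "a4": "Anthropology",
    "p1": "Political Science", "p2": "Political Science",
}

# Hypothetical authorship records: (publication id, kind, author id).
AUTHORSHIPS = [
    ("art1", "article", "a1"), ("art1", "article", "a2"),  # same discipline: counted once
    ("art2", "article", "a1"),
    ("art3", "article", "a3"), ("art3", "article", "p1"),  # two disciplines: once in each
    ("book1", "book", "a2"),
    ("art4", "article", "p1"),
    ("book2", "book", "p2"),
]


def discipline_summary(roster, authorships):
    """Per-discipline unique publication counts and the three ratios."""
    faculty = defaultdict(set)
    for author, discipline in roster.items():
        faculty[discipline].add(author)

    # Sets de-duplicate works co-authored within a discipline.
    works = defaultdict(lambda: {"article": set(), "book": set()})
    for pub_id, kind, author in authorships:
        works[roster[author]][kind].add(pub_id)

    summary = {}
    for discipline, members in faculty.items():
        n_articles = len(works[discipline]["article"])
        n_books = len(works[discipline]["book"])
        summary[discipline] = {
            "articles_per_faculty": n_articles / len(members),
            "books_per_faculty": n_books / len(members),
            "books_per_article": n_books / n_articles if n_articles else None,
        }
    return summary


for discipline, ratios in discipline_summary(ROSTER, AUTHORSHIPS).items():
    print(discipline, ratios)
```

Restricting `ROSTER` to departments or faculty members present in every database year before calling the function would give the analogue of the two robustness subsets described in the text.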

In each database year, the proportion of each discipline’s population actively engaged in a particular publication type was calculated as the percent of scholars who published at least one book in the previous five years, and the percent who published at least one journal article in the previous five years. Significant differences in the proportion of scholars who have published at least one book (or journal article) between the 2011 and 2019 years were tested using the Chi-squared test.
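A comparison of two participation rates of this kind can be sketched as a Pearson chi-squared test on a 2×2 table. The text does not say whether a continuity correction was applied, so the version below (without one) is an assumption, and the counts in the example are invented. For one degree of freedom the p-value has the closed form erfc(√(χ²/2)), which keeps the sketch within the standard library.

```python
import math


def chi_squared_2x2(yes_a, total_a, yes_b, total_b):
    """Pearson chi-squared test for two proportions.

    One degree of freedom, no continuity correction (an assumption).
    Returns (statistic, p_value). Both outcomes must occur at least
    once overall, otherwise an expected count would be zero.
    """
    observed = [
        [yes_a, total_a - yes_a],
        [yes_b, total_b - yes_b],
    ]
    row_totals = [total_a, total_b]
    col_totals = [observed[0][0] + observed[1][0],
                  observed[0][1] + observed[1][1]]
    grand_total = total_a + total_b

    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed[i][j] - expected) ** 2 / expected

    # Survival function of the chi-squared distribution with 1 df.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: 400 of 1,000 scholars published a book in the
# earlier window, 300 of 1,000 in the later window.
stat, p = chi_squared_2x2(400, 1000, 300, 1000)
print(f"chi-squared = {stat:.3f}, p = {p:.2g}")
```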

The AcA database includes the year of terminal degree for each faculty member (typically the Ph.D., but sometimes MBA, MFA, etc.), from which we defined three academic age cohorts following [ 23 ]: early career researchers (ECR) earned their terminal degree 0–10 years before the year in which the database was compiled; mid-career researchers (MCR) earned their degree between 11 and 30 years before the database compilation year; and senior career researchers (SCR) earned their degree 31 or more years before the database compilation year. For each discipline, year, and age cohort we calculated the number of articles per faculty, the number of books per faculty, and the number of books per article. The proportion of each age cohort participating in both publication types was also calculated, and differences in the percent of the population actively engaged in each publication type were tested using the Chi-squared test. When comparisons are made with disciplines among age cohorts, the unique number of books or articles authored by that age cohort was used.
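The cohort boundaries defined above translate directly into a small helper. The function name and the error handling for degrees dated after the database year are illustrative choices, not part of the study.

```python
def career_cohort(degree_year, database_year):
    """Assign an academic age cohort from years since terminal degree.

    ECR: 0-10 years, MCR: 11-30 years, SCR: 31 or more years.
    """
    years_since_degree = database_year - degree_year
    if years_since_degree < 0:
        # Illustrative choice: reject degrees dated after the database year.
        raise ValueError("terminal degree is dated after the database year")
    if years_since_degree <= 10:
        return "ECR"
    if years_since_degree <= 30:
        return "MCR"
    return "SCR"


# The same scholar can change cohort between database years.
print(career_cohort(2001, 2011))  # 10 years since degree -> ECR
print(career_cohort(2001, 2019))  # 18 years since degree -> MCR
```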

Population, academic department count, and publication count

The number of faculty members and academic departments in each discipline in the 2011 and 2019 database years, as well as the percent change between 2011 and 2019, appears in Table 1. Data for all years is available at https://osf.io/2x4uf/. In total, scholars in the 2011 dataset published 158,104 unique journal articles in 8,706 journals between 2007 and 2011. Over the same five-year period, the 2011 scholars published 17,101 unique books. Scholars in the 2019 dataset published 215,540 unique journal articles in 11,480 journals between 2015 and 2019. Over the same five-year period, the 2019 scholars published 13,102 unique books. The counts of unique journal articles and books by authors in each discipline are presented in Table 2. While the overall number of social science scholars increased 5.4% between 2011 and 2019, the number of journal articles they produced increased at a much faster rate: 36.3%. The increase in articles published is associated with a 31.9% increase in the number of unique journal titles in which these works appear. Conversely, the overall number of books published decreased by 23.4% over the nine-year period. The declining ratio of books per journal article in each discipline over the study period is shown in Fig 1.
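The percent changes quoted above follow from the reported totals, as the short check below shows. The counts are taken from the text (faculty totals from the data source description); the function and variable names are illustrative.

```python
def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


# (2011 database value, 2019 database value), as reported in the text.
COUNTS = {
    "faculty members": (27_447, 28_928),
    "journal articles": (158_104, 215_540),
    "journal titles": (8_706, 11_480),
    "books": (17_101, 13_102),
}

for label, (count_2011, count_2019) in COUNTS.items():
    print(f"{label}: {percent_change(count_2011, count_2019):+.1f}%")
# faculty members: +5.4%
# journal articles: +36.3%
# journal titles: +31.9%
# books: -23.4%
```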

Both books and journal articles represent five-year sums, such that the 2011 data point represents the total books published between 2007 and 2011 divided by the total articles published between 2007 and 2011, the 2012 data point represents the total books published between 2008 and 2012 divided by the total articles published between 2008 and 2012, and so on.

https://doi.org/10.1371/journal.pone.0263410.g001
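The five-year windowing described in the Fig 1 caption can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the function name, the dictionary inputs, and the toy counts are all hypothetical.

```python
def books_per_article_by_window(books_by_year, articles_by_year, window=5):
    """Return {end_year: books / articles}, each summed over a trailing window.

    A data point is produced only when every year in the window is present
    in both inputs, so the first point is the fifth year of available data.
    """
    ratios = {}
    for end_year in sorted(books_by_year):
        years = range(end_year - window + 1, end_year + 1)
        if not all(y in books_by_year and y in articles_by_year for y in years):
            continue
        total_books = sum(books_by_year[y] for y in years)
        total_articles = sum(articles_by_year[y] for y in years)
        ratios[end_year] = total_books / total_articles
    return ratios


# Toy counts (hypothetical, not from the study).
toy_books = {year: 10 for year in range(2007, 2013)}
toy_articles = {year: 100 for year in range(2007, 2013)}
print(books_per_article_by_window(toy_books, toy_articles))
```

With these toy inputs the result has entries for 2011 (covering 2007 to 2011) and 2012 (covering 2008 to 2012), mirroring how the caption defines each data point.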

https://doi.org/10.1371/journal.pone.0263410.t001

https://doi.org/10.1371/journal.pone.0263410.t002

Growth in the number of social science faculty members over the study period (5.4%) is not uniform across disciplines. Table 1 reveals that one discipline (Educational Psychology) evinced a slight decline in the number of faculty members and the number of academic departments (-1.2%), while large population increases were observed in International Affairs and Development (39.1%), Public Administration (22.3%), and Public Policy (10.1%). The number of academic departments classified as International Affairs and Development and Public Administration also increased substantially between 2011 and 2019 ( Table 1 ). A different pattern is observed among Criminal Justice and Criminology departments. The number of departments in this discipline increased by more than 10% over the study period, while the number of faculty members in this field increased by 5.4%, on par with the overall social sciences population increase.

The results presented in Table 2 are not substantively different from those obtained from a subset of the data limited to only those departments appearing in all nine years of the dataset. The limited subset of departments differed from the complete set by about 1%, on average, in terms of articles per person, books per person, and books per article. A table showing results for the limited subset of departments is available at https://osf.io/2x4uf/ . Similarity between the full sample of departments and the limited subset suggests that growth in the number of departments (or expanded AcA data collection efforts) does not account for the trend towards increased article publication and decreased book publication we observed in each discipline.

Changing publishing practices

In every discipline, the 5-year total of journal article publications increased more rapidly than the population of faculty members, and in all but one discipline the 5-year total of book publications decreased between 2011 and 2019 (in Public Administration, book publishing increased by 7.1%). Book publishing in International Affairs and Development also increased after 2011 but began to decrease again after 2014, and by 2019 the number of books published was identical to the 2011 number.

Table 2 shows the number of journal articles and books in 2011 and 2019 for each discipline, as well as the following ratios: articles per faculty, books per faculty, and books per article. In seven of the 12 disciplines we studied, journal articles per person increased by more than 30% from 2011 to 2019, including a more than 60% increase in both Educational Psychology and Social Work/Social Welfare. The increase in articles per person in Economics is notably lower than the other disciplines, with only a 3% increase over the study period. The next lowest increase is in International Affairs and Development (11%); unlike other fields, the year-by-year population growth in International Affairs and Development largely mirrors growth in the discipline’s journal article output over time.

A decline in books per person over the study period characterizes all twelve disciplines ( Table 2 ). Economics shows the greatest decline in books per person (-46%), while the lowest declines are in Public Administration and Educational Psychology (-12% and -14%, respectively). In all disciplines, the ratio of books per article declined by at least 31% from 2011 to 2019. In Sociology, for example, there was one book published for every 6.3 journal articles in the 2011 dataset; in the 2019 dataset, there was one book published for every 11.1 articles. The largest decline in books per article appears in Geography and Social Work/Social Welfare (-54% in both disciplines). We visualized the ratio “books per journal article” in each discipline throughout the study period ( Fig 1 ); book publications constitute a steadily decreasing portion of the total publication output in each social science discipline over this timeframe.

Table 3 shows 2011 and 2019 articles per person and books per person by age cohort. In eight of twelve disciplines, the largest increase in articles per person from 2011 to 2019 is observed among the youngest age cohort (ECR). ECRs in Economics showed the smallest increase in journal articles per person among the ECR cohorts. Book publications per person declined among SCRs in all disciplines, and books per person also declined among MCRs in all but one discipline (Educational Psychology). Books per person among ECRs decreased slightly in all but one discipline, Geography.

https://doi.org/10.1371/journal.pone.0263410.t003

Analysis of a subset of data containing only individual faculty members who appear in all nine years of the study is available at https://osf.io/2x4uf/ . Data show that the number of journal articles authored by faculty who were present throughout the study period did increase in most disciplines, but this increase was much less than that observed for the overall study sample (e.g., faculty present throughout the study timeframe in Anthropology authored 15.4% more articles in 2019 than in 2011, but when the entire sample of faculty members is included, the increase was more than twice as great, 34.1%). This result indicates that while all faculty members are contributing to the increase in journal article production, those who joined the faculty at the research institutions in our study after 2011 (i.e., new hires) contributed disproportionately to the overall increase in journal article authorships over the study period.

Percent of faculty actively engaged in publishing books and articles

We quantified the rate of participation in each publishing type in each of the nine years studied. Results for 2011 and 2019 appear in Table 4 along with significance values from Chi-squared tests for differences in proportions of faculty who participate in a particular publishing type; results for each of the nine years are available at https://osf.io/2x4uf/ . The rate of participation was defined as the number of scholars in each discipline within a data year who authored at least one publication of that type over the previous 5-year period, divided by the total number of scholars in that discipline in that year, expressed as a percent. In every discipline, the rate of participation in journal article publishing increased, while the rate of book publishing participation decreased. The changes in participation rate between 2011 and 2019 observed in Table 4 are generally less than the changes in books and articles per person ( Table 2 ). For example, although Geography saw a 54% decline in the number of books published per person, the number of faculty who have published at least one book decreased by only 5.9%. Likewise, in Psychology the number of journal articles per person increased 34% over the study period, but the percent of the population engaged in the production of journal articles increased only 2.9%.
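A rough sketch of the participation rate and of a Chi-squared comparison between two data years follows. It uses only the Python standard library and assumes a plain Pearson statistic on a 2x2 table with no continuity correction; the source does not say which variant was used, and the function names and counts below are hypothetical.

```python
import math


def participation_rate(active: int, total: int) -> float:
    """Percent of scholars with at least one publication of a given type."""
    return 100.0 * active / total


def chi_squared_two_proportions(active_a, total_a, active_b, total_b):
    """Pearson chi-squared test on a 2x2 table (assumption: no correction).

    Rows are the two data years; columns are active vs. not active.
    Returns (statistic, p_value) for 1 degree of freedom.
    """
    observed = [
        [active_a, total_a - active_a],
        [active_b, total_b - active_b],
    ]
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(X > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical counts: 300 of 1,000 scholars published a book in one data
# year, 250 of 1,000 in a later one.
print(participation_rate(300, 1000), participation_rate(250, 1000))
print(chi_squared_two_proportions(300, 1000, 250, 1000))
```

Statistical packages may apply a continuity correction to 2x2 tables by default, so values from this sketch can differ slightly from theirs.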

https://doi.org/10.1371/journal.pone.0263410.t004

Table 5 shows the percent of scholars in 2011 and 2019 who published at least one book or journal article by age cohort. In all but one discipline (Economics) the youngest cohort (ECR) had the greatest rate of participation in journal article publication in both 2011 and 2019. Likewise, in every discipline except Educational Psychology, the oldest cohort (SCR) had the greatest participation in book publication. These findings are broadly consistent with our previous analysis of publishing behavior among age cohorts [ 23 : Fig 6], where senior scholars were observed to publish more books than their younger colleagues. Table 5 also shows that rates of participation in journal article publication increased in all age cohorts in all disciplines, with three exceptions: SCRs in International Affairs and Development, Public Administration, and Public Policy all showed non-significant decreases in journal article publication participation. In six disciplines SCRs showed the greatest increase in journal article publication participation, in four disciplines the greatest increase was among ECRs, and in the remaining two disciplines MCRs showed the greatest increase in journal article publication participation.

https://doi.org/10.1371/journal.pone.0263410.t005

Thirty-five of the 36 comparisons of book publication participation by age cohort revealed a decrease in participation rate between 2011 and 2019 (among MCRs in Educational Psychology the increase was not statistically significant; Table 5 ). In six disciplines MCRs showed the greatest decrease in book publication participation, in four disciplines the greatest decrease was among ECRs, and in the remaining two disciplines SCRs showed the greatest decrease in book publication participation. ECRs universally show the lowest rate of book publication participation. This is most extreme in Economics where only 3.7% of ECRs published at least one book in the 5-year period leading to 2011, and only 2.0% of Economics ECRs published a book in the 5-year period leading to 2019.

Individual scholars are members of various communities: academic departments, colleges, universities, and disciplines, among others. Placing the individual author in this complex social context, Nygaard [ 24 ] used an academic literacies framework to analyze research and writing. In this model, research, writing, and publishing are social practices embedded within a community, and communities create expectations for individual behavior. The researcher must decide the genre of the artifact to be produced, whether to involve other researchers in a collaborative effort, the quality of the work, the appropriate audience, and the process of how the scholarship is done. The community (department, university, discipline, etc.) establishes the parameters for those individual decisions. One of the most consequential decisions early career faculty face is deciding the venue for publishing their research. Clemens et al. [ 25 ] observe that access to book publishers is usually through cumulative advantage which accrues to senior faculty who have established a record of successful publications. Journal article publication, on the other hand, is more egalitarian, relying more on the author’s tenacity to submit their work multiple times until accepted. Thus, as Harley, et al. [ 26 ], Tenopir, et al. [ 27 ], and Wakeling, et al. [ 28 ] suggest, early career faculty members often recognize that the most advantageous strategy is to first establish their research reputations through the publication of journal articles in prestigious journals. With this background to the decisions the publishing researcher makes and the choices available, we suggest that the growing pressure to publish more—and more frequently—amidst the backdrop of community, reputation, and career stage requirements has altered the publication practices of social scientists.

Journal articles are the de facto “currency” of research in many physical, mathematical, biological, biomedical, and engineering fields [e.g., 29 ], and our data show that the social sciences are becoming more like those STEM disciplines in terms of publication practices. King [ 8 ] prefaced his comments on how computational research is restructuring the social sciences by noting “The social sciences are in the midst of an historic change, with large parts moving from the humanities to the sciences in terms of research style, infrastructural needs, data availability, empirical methods, substantive understanding, and the ability to make swift and dramatic progress.” Thus, the research methodologies of large parts of the social sciences are contributing to more collaborative research and an increased emphasis on journal article publication. Our analyses suggest that the emphasis on journal article publication may come at the expense of book publication and may be driven by increasing article publishing expectations on the youngest age cohort. While increased rates of journal article publication are not limited to the ECR cohort, in all but one discipline, the youngest cohort (ECR) had the greatest rate of participation in journal article publication in both 2011 and 2019. Our finding that the increase in articles per faculty member among those who appear in all nine years of the study is less than the overall increase in articles per author may be partially explained by increasing pressure to write more papers among ECRs, perhaps as a corollary to the increasingly competitive job market for professorships.

The influence of performance-based research assessment systems on faculty publishing and research decisions is also likely related to the increase in journal article production and the de-emphasis on book publication. Hicks [ 30 ] notes “….it is the form of social science scholarly publication that is evolving in response to the imposition of national research evaluation… Research evaluation and publishing in the social sciences and humanities are co-evolving.” Our data indicate that this co-evolution in the social sciences likely results in greater emphasis on large research programs conducted by teams and increased frequency of journal article publication. In every discipline we examined, the rate of participation in journal article publishing increased, while the rate of book publishing participation decreased. In general, books take more time to produce than journal articles and their impact on the community is difficult to ascertain in the short term due in part to a dearth of comprehensive book citation databases. We posit that the increased need for rapidly produced research artifacts, the growth of quantitatively focused modes of inquiry in social science disciplines, and the increasingly greater number of journal articles produced by ECRs are likely to continue favoring journal article publication in the social sciences over book publications.

There are several potential ramifications of the decrease in book publications for social sciences as a whole and individual social science disciplines. The U.S. market for scholarly monographs has been shrinking for several years [ 31 ]. Book publishers used to see successful print runs and sales of 2,000 copies of new books. Now, annual sales of 200 copies of a new book are considered successful by some publishers [ 32 ]. Some book publishers have responded to this decline in revenues by increasing book prices as much as three- or four-fold [ 32 ]. The declines in book publications may provide some relief for acquisition librarians stretching their already depleted funds.

Declining book publications may have detrimental effects for social sciences disciplines most closely related to the humanities. Long-form scholarly publishing provides the place and space to explore a topic in detail, analyzing subjects with greater contextualization than shorter-form journal articles typically allow. Crossik [ 33 ] observes that “Writing a monograph allows the author to weave a complex and reflective narrative, tying together a body of research in a way that is not possible with journal articles or other shorter outputs.” Hill [ 34 ] further suggests that “ways of knowing” and “forms of telling” are entwined; “reducing one may diminish the richness of the other.”

Future directions and study limitations

Collaborative research and publication have become commonplace in most disciplines in the social sciences, and further studies of scholarly collaboration are likely to provide more context and depth to understanding the behaviors involved in this phenomenon. Our study aimed to quantify the disciplinary literature as a whole, rather than the number of authorships attributable to individual scholars. If an article was co-authored by more than one scholar within a discipline, the article was counted only once in the article total for that discipline. Articles per person (as reported, e.g., in Table 2 ) was calculated as the number of unique articles authored by scholars in that discipline, divided by the number of unique scholars in that discipline. In this way, our study design is not suited to directly address whether increased co-authorship has a causal relationship with increased journal article authorships overall. We did, however, calculate the median number of authors for each article in each calendar year between 2007 and 2019 (a table with these data appears as supplemental information at https://osf.io/2x4uf/ ). The median number of authors increased by 1.0 in all 12 disciplines we studied (e.g., from 2.0 to 3.0 authors per article in Anthropology, and from 3.0 to 4.0 authors per article in Geography). Increasing numbers of authors per article (see also [ 6 , 10 ]), in light of our result that the number of unique articles per person is also increasing, suggests a fruitful avenue for future research to explore the relationship between “teaming” and disciplinary article production.
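The counting rule described above, where a co-authored article is counted once per discipline, can be sketched as follows. The function name, identifiers, and records are hypothetical illustrations and do not reflect the structure of the AcA database.

```python
def articles_per_person(scholars, authorships):
    """Unique articles by a discipline's scholars, divided by unique scholars.

    scholars: iterable of scholar ids in the discipline, including those
        with no articles.
    authorships: iterable of (scholar_id, article_id) pairs.
    """
    scholar_set = set(scholars)
    unique_articles = {
        article_id
        for scholar_id, article_id in authorships
        if scholar_id in scholar_set
    }
    return len(unique_articles) / len(scholar_set)


# Hypothetical discipline with three scholars. "art1" is co-authored by two
# of them, so there are three authorships but only two unique articles.
toy_scholars = ["a", "b", "c"]
toy_authorships = [("a", "art1"), ("b", "art1"), ("a", "art2")]
print(articles_per_person(toy_scholars, toy_authorships))
```

Counting authorships instead would give 3 / 3 = 1.0 for the same toy data, which is why the distinction matters when co-authorship within a discipline is common.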

Our study sample was limited to research universities that offer the Ph.D. degree in the United States. Faculty employed by many non-Ph.D. granting universities also routinely publish research results, and are likely also influenced by community practices, prestige, external evaluation, and the increasing use of quantitative research methods. Future research may seek to expand the sample of universities to include those institutions. Further, we did not consider non-traditional forms of scientific and scholarly communication such as blog authorship, zine authorship, newsletters, op-ed pieces, listserv posts, performances, musical compositions, choreography, etc., nor did we consider conference proceedings and book chapters. It is possible that the decline we observed in books published (and the increase in journal articles published) does not completely capture the fullness of the shift in social science research dissemination strategies. Bibliometric data aggregation would benefit from the inclusion of more of the diversity of dissemination strategies now available to scholars.

Acknowledgments

We thank the following individuals for thoughtful comments and suggestions: R. Berdahl, P. Lange, M. Matier, T. Stapleton, G. Walker, R. Wheeler, and C. Whitacre.

- 3. Johnson R, Watkinson A, Mabe M. The STM Report: an overview of scientific and scholarly publishing—fifth Edition. STM: International Association of Scientific, Technical and Medical Publishers; 2018 Oct. Available: https://www.stm-assoc.org/2018_10_04_STM_Report_2018.pdf

- 9. National Science Foundation. Human Networks and Data Science (HNDS): PROGRAM SOLICITATION NSF 21–514. 2021. Available: https://www.nsf.gov/publications/pub_summ.jsp?WT.z_pims_id=505702&ods_key=nsf21514

- 17. Ostriker JP, Holland PW, Kuh CV, Voytuk JA, editors. A Guide to the Methodology of the National Research Council Assessment of Doctorate Programs. Washington, D.C.: National Academies Press; 2009. p. 12676. https://doi.org/10.17226/12676

- 18. Morse R, Castonguay A. How U.S. News Calculated the Best Global Universities Rankings. 25 Oct 2021. Available: https://www.usnews.com/education/best-global-universities/articles/methodology#:~:text=How%20U.S.%20News%20Calculated%20the%20Best%20Global%20Universities,%20%202.5%25%20%209%20more%20rows%20

- 19. Understanding the Methodology—QS World University Rankings. In: QS Top Universities [Internet]. Jun 2021 [cited 25 Oct 2021]. Available: https://www.topuniversities.com/university-rankings-articles/world-university-rankings/understanding-methodology-qs-world-university-rankings

- 20. NCES. Introduction to the Classification of Instructional Programs: 2010 Edition (CIP-2010). 2013. Available: https://doi.org/10.4135/9781412957403.n289

- 22. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2020. Available: https://www.R-project.org/

- 26. Harley D, Acord SK, Earl-Novell S, Lawrence S, King CJ. Assessing the future landscape of scholarly communication: an exploration of faculty values and needs in seven disciplines. Berkeley: Univ Of California Press; 2010.

- 33. Crossik G. Monographs and Open Access: a report to HEFCE. HEFCE; 2015 Jan. Available: https://dera.ioe.ac.uk/21921/1/2014_monographs.pdf

Academics Write Papers Arguing Over How Many People Read (And Cite) Their Papers

Studies about reading studies go back more than two decades

Rose Eveleth

Contributor

There are a lot of scientific papers out there. One estimate puts the count at 1.8 million articles published each year, in about 28,000 journals. Who actually reads those papers? According to one 2007 study, not many people: half of academic papers are read only by their authors and journal editors, the study's authors write.

But not all academics accept that they have an audience of three. There's a heated dispute around academic readership and citation—enough that there have been studies about reading studies going back for more than two decades.

In the 2007 study, the authors introduce their topic by noting that “as many as 50% of papers are never read by anyone other than their authors, referees and journal editors.” They also claim that 90 percent of papers published are never cited. Some academics are unsurprised by these numbers. “I distinctly remember focusing not so much on the hyper-specific nature of these research topics, but how it must feel as an academic to spend so much time on a topic so far on the periphery of human interest,” writes Aaron Gordon at Pacific Standard. “Academia’s incentive structure is such that it’s better to publish something than nothing,” he explains, even if that something is only read by you and your reviewers.

But not everybody agrees these numbers are fair. The claim that half of papers are never cited comes first from a paper from 1990. “Statistics compiled by the Philadelphia-based Institute for Scientific Information (ISI) indicate that 55% of the papers published between 1981 and 1985 in journals indexed by the institute received no citations at all in the 5 years after they were published,” David P. Hamilton wrote in Science.

In 2008, a team found that the problem is likely getting worse. “As more journal issues came online, the articles referenced tended to be more recent, fewer journals and articles were cited, and more of those citations were to fewer journals and articles.” But some researchers took issue with that study, arguing that using different methods you could get quite different results. “Our own extensive investigations on this phenomenon… show that Evans’ suggestions that researchers tend to concentrate on more recent and more cited papers does not hold at the aggregate level in the biomedical sciences, the natural sciences and engineering, or the social sciences,” the authors write. This group of researchers found that plenty of old papers, for instance, were racking up readers over time.

It seems like this should be an easy question to answer: all you have to do is count the number of citations each paper has. But it’s harder than you might think. There are entire papers themselves dedicated to figuring out how to do this efficiently and accurately. The point of the 2007 paper wasn’t to assert that 50 percent of studies are unread. It was actually about citation analysis and the ways that the internet is letting academics see more accurately who is reading and citing their papers. “Since the turn of the century, dozens of databases such as Scopus and Google Scholar have appeared, which allow the citation patterns of academic papers to be studied with unprecedented speed and ease,” the paper's authors wrote.

Hopefully, someone will figure out how to answer this question definitively, so academics can start arguing about something else.

Rose Eveleth was a writer for Smart News and a producer/designer/science writer/animator based in Brooklyn. Her work has appeared in the New York Times, Scientific American, Story Collider, TED-Ed and OnEarth.

PublishingState.com

How Many Journal Articles Have Been Published?

Table of contents

- Introduction

- Academic journal publishing models

- The number of journal articles published

- Trends in journal publications

- High-impact papers

- High-impact journals

- Impacts on metrics and evaluation

How many journal articles have been published so far?

It’s a very intriguing question, isn’t it?

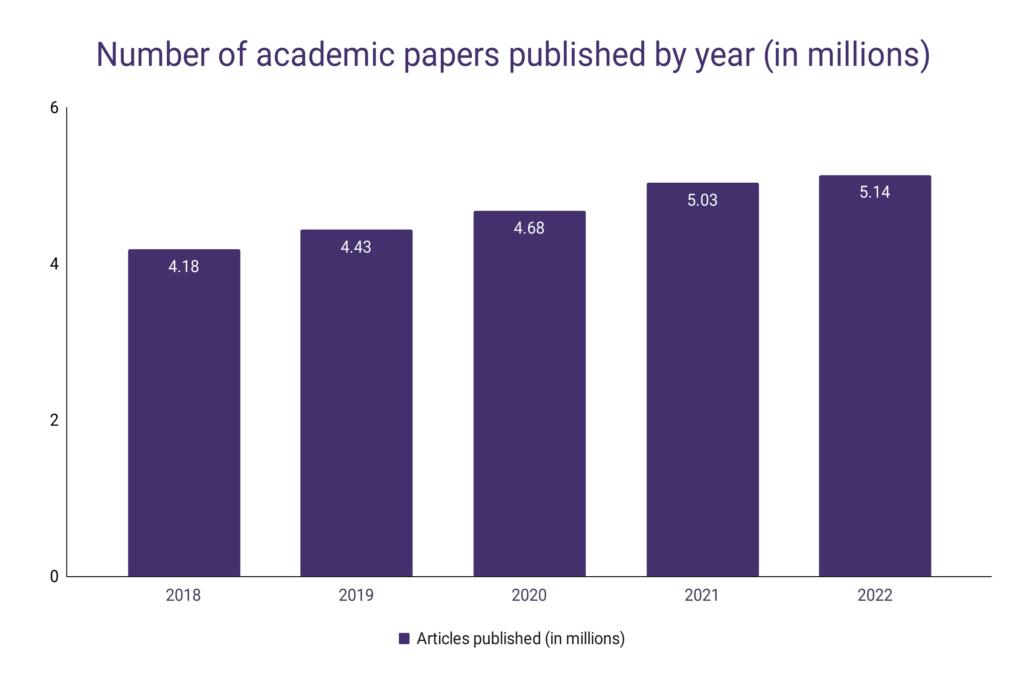

The world of academic publishing is vast and ever-expanding. The global academic landscape is teeming with many scientific and academic papers. Every year, more than 5 million research documents are published, encompassing the following materials:

- Academic books

- Journal articles

- Conference papers

- White papers and technical papers

- Theses and dissertations

- Academic reports

A study found that since 1996, more than 64 million academic papers have been published. These numbers continue to rise every year.

The staggering numbers highlight the explosive growth of scientific research and academic publishing over the past few decades. With new journals launching yearly and existing journals publishing more papers than ever, the total output of scholarly literature seems to increase exponentially.

But who reads all these papers? It’s estimated that, on average, slightly more than ten people read a research paper. What about journal papers?

While the published body of scientific knowledge is growing, the question remains whether it is being absorbed and utilized to its full potential.

With millions of research and academic materials published annually across thousands of academic niches, even experts in a field can struggle to keep up with the latest developments. This massive volume of published research raises important questions about the evolving world of journal publishing.

How can scholars and professionals stay current in their areas of expertise? What incentives drive the publication of so many articles? And how can we ensure that meaningful discoveries are findable amidst the sea of published papers? As publishing metrics and numbers continue to rise, it’s worth examining whether that growth is matched by growth in knowledge and impact.

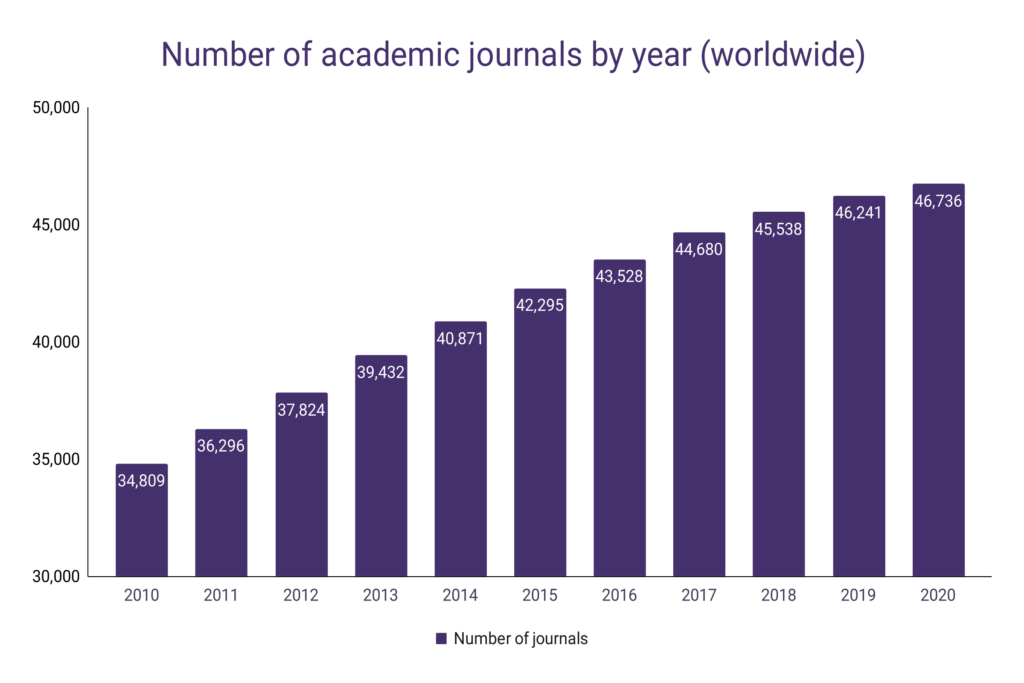

How Many Academic Journals Are There Today?

There are more than 30,000 academic journals globally, with the number projected to increase to 33,080-34,050 journals by 2025. The journals cover a wide range of fields, including science, engineering, humanities, social sciences, medicine, law, management, information technology, mathematics, business, accounting, education, and psychology.

The United Kingdom and the United States lead the world with a combined total of over 10,000 academic journals published annually, accounting for over 20% of global scholarly journal publishing.

Hundreds of new ranked journals seem to crop up yearly to accommodate the ballooning mass of publishable research.

The world of academic publishing has a variety of models that journals use to publish and disseminate their content. The two most common models are the subscription model and the open access model.

The subscription model is the traditional model of academic publishing. In this model, readers (or, more often, their institutions like universities or libraries) pay a subscription fee to access the journal’s content. The subscription can be for a single journal, a bundle of journals from the same publisher, or even a package of journals across multiple publishers.

The advantage of the subscription model is that it provides a steady stream of revenue for the publisher. However, the downside is that it restricts access to those who can afford the subscription fees, which can be quite high.

In contrast to the subscription model, open access journals allow anyone with internet access to read the articles for free. The authors (or their institutions or funders) cover the publishing costs and pay article processing charges (APCs) once their paper is accepted for publication.

The benefit of the open access model is that it promotes wider dissemination and accessibility of research findings. However, it can place a financial burden on researchers, particularly those from low-income countries or less-funded research areas. There are also hybrid models where a journal operates mainly under the subscription model but offers authors the option to make their articles open access for a fee. This model combines elements of both the subscription and open access models. Moreover, there’s a growing movement towards “diamond” or “platinum” open access, where neither the reader nor the author pays. These journals are usually funded by an organization, institution, or consortium that values the free dissemination of research. It’s important to note that regardless of the publishing model, all these journals strive to ensure rigorous peer-review processes to maintain the quality and integrity of the academic literature they publish.

Each year, over 2 million new research articles are published in more than 30,000 peer-reviewed journals across all fields of study.

With more than 2 million journal articles, the number of academic papers published yearly is staggering. With new journals constantly emerging, this immense literature volume can be hard to wrap your head around.

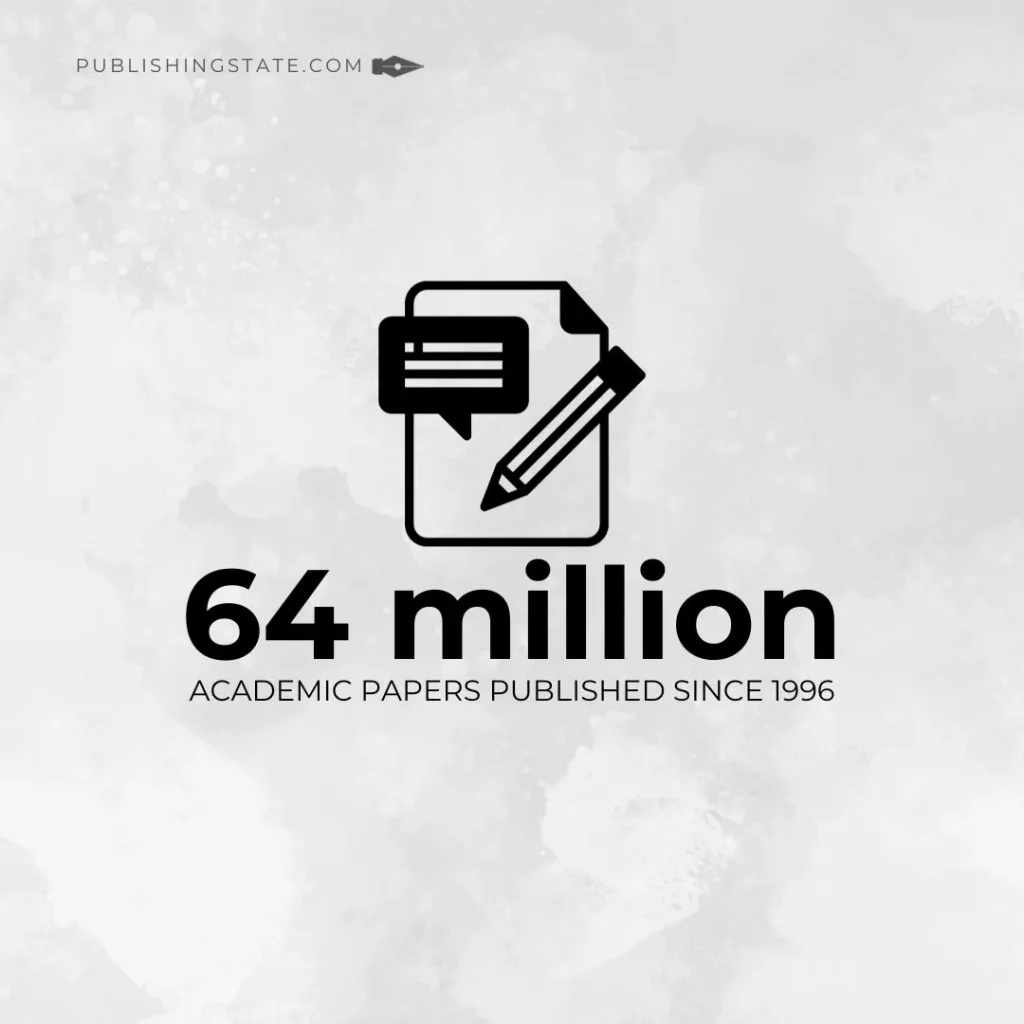

The rate of publication output has also seen an upward trajectory in recent years. Publication output grew at a compound annual rate of 5% over the four years from 2017 to 2020, a slight uptick from the 4% growth rate observed over the longer 11-year period from 2010 to 2020.
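For readers unfamiliar with the term, a compound annual growth rate can be computed as in the minimal sketch below. The function name and the sample values are hypothetical; the number of compounding periods is passed explicitly because the text does not say how the underlying figures were counted.

```python
def compound_annual_growth_rate(start_value, end_value, periods):
    """Constant yearly growth rate that turns start_value into end_value.

    periods is the number of year-to-year steps between the two values.
    """
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Hypothetical output counts (in millions of papers) that grow by 5% in
# each of three year-to-year steps.
start = 2.0
end = start * 1.05 ** 3
print(round(compound_annual_growth_rate(start, end, 3), 4))
```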

Moreover, established journals publish way more papers yearly than they used to. Overall, there’s been a sharp increase in the number of articles published per journal. Many publish thousands of papers annually – a major change from old publishing patterns.

The world of academic literature is expanding rapidly. Making sense of millions of journal articles is no small feat. But staying informed about the research happening in your field is crucial, even in the face of information overload.

The number of academic journals being published each year continues to rise sharply. Hundreds of newly ranked journals enter publication annually. This proliferation of journals provides more opportunities for researchers to disseminate their work but also makes it challenging to keep up with the latest publications.

In addition to more journals, existing publications are increasing the number of papers they publish each year.

According to data from the Scimago Journal Rank and Microsoft Academic Graph (MAG) datasets, the number of published papers per journal increased sharply, from a mean of 74.2 papers in 1999 to a mean of 99.6 papers in 2016. While a more up-to-date study is needed, the growth pattern is likely to continue for at least another decade.

Of all active academic journals globally, more than 100 journals publish over 1,000 papers annually, and the number continues to increase. Reputable journals that once published hundreds of articles annually are now releasing thousands.

Journals with massive resources, such as PLOS One, publish over 20,000 articles annually. This indicates a shift in publishing norms, with journals taking on higher volumes of articles and researchers producing work at faster rates.

Some experts argue that this trend of rising output diminishes the value of individual publications, overwhelms readers, and encourages academic publishers to make more money. Academic publishing has been labeled “greedy” for this practice.

The rise in both the number of new journals and the number of papers per journal has greatly expanded the scientific literature. While more research is being conducted and shared than ever, the overabundance of publications raises questions about the efficacy of academic metrics and best practices for disseminating quality research.

Changes in Metrics Over Time

The world of academic publishing has undergone significant transformations in recent decades. Two notable trends are the doubling of papers published in top-tier journals and the exponential growth in total papers published annually.

Over the past two decades, the number of papers published and indexed in the top database quartiles (Q1 and Q2) has also increased. This dramatic increase reflects the rise in submissions to top journals as more researchers enter the field.

What makes a quality, high-impact scholarly paper?

A quality, high-impact scholarly paper possesses several key characteristics. These include:

- Originality and novelty. High-quality research should contribute something new to the field of study. It could be a new theory, method, data set, or empirical findings that have not been previously published.
- Rigorous methodology. The research methodology should be sound and appropriate for the research question. This includes the design of the study, the data collection process, and the analysis of the results. The methodology should be detailed so that other researchers can replicate the study.
- Significance and relevance. The research should be important and relevant to the field. It should address a significant problem or gap in the existing literature.
- Clear and concise writing. The paper should be well-written and easy to understand. It should clearly state the research question, explain the methodology, present the results, and discuss the implications of the findings.
- Valid and reliable results. The study’s findings should be valid (i.e., they accurately represent what they are supposed to measure) and reliable (i.e., they would be the same if the study were conducted again under the same conditions).
- Peer-reviewed. High-quality papers typically undergo a rigorous peer-review process, where other experts in the field review the paper for its validity, significance, and originality.
- Citation potential. High-impact papers are those that other researchers frequently cite. The number of citations a paper receives is often used to indicate its impact on the field.
- Ethical considerations. Any ethical issues related to the research should be addressed. This includes obtaining informed consent from participants, ensuring confidentiality and anonymity, and avoiding conflicts of interest.
- Transparency and openness. High-quality papers make their data, code, and other research materials available. This allows other researchers to verify the results and build upon the research.
- Contribution to knowledge. The paper should significantly contribute to the existing body of knowledge. It should advance our understanding of a particular topic or issue.

It’s important to note that while these are general characteristics of high-quality, high-impact papers, the specific criteria can vary depending on the field of study.

However, the growth in papers indexed in top-quartile journals also suggests that these journals have become less selective in what they publish, possibly due to commercial pressures. Some experts argue this inflation has diluted the value of appearing in elite journals.

These trends require rethinking how we evaluate academic success. Metrics like publication count and journal impact factor are becoming less meaningful amid the journal publishing boom and the continued rise in journal articles. Citation-based measures are also problematic when citing references is increasingly performative.

More holistic evaluation criteria emphasize research quality and contribution over quantity. This may involve peer assessments, evidence of real-world impact, and valuing datasets/code as research outputs. Reform is critical to ensure scholarship retains meaning and purpose.

We have learned that there are more than 30,000 academic journals globally, estimated to grow to 33,080-34,050 journals by 2025, publishing in various fields and scopes.

More than 60 million academic papers have been published in the past decade.

How many journal articles have been published out of this 60 million?

As of this writing, about 2 million articles are published yearly, a truly massive number. Is that good or bad? Well, that depends.

The continued growth in journal numbers and articles published significantly impacts metrics and evaluation in academia. The increase in papers published in top-tier journals suggests that they have become less selective in their publication. This could be due to commercial pressures as more researchers enter the field and submit their work to these prestigious journals. As a result, the value of appearing in elite journals may have been diluted.

Furthermore, the exponential growth in total papers published across thousands of journals has led to an overabundance of publications. This makes it difficult for scholars to keep up with the literature in their field. It has also enabled the rise of predatory journals that publish anything for a fee, undermining quality control in academic publishing.

These trends in academic publishing require reevaluating how academic success is evaluated. Traditional metrics like publication count and journal impact factor are becoming less meaningful in the face of the publishing boom. Citation-based measures are also problematic, as citing references may be more performative than indicative of quality or impact. Instead, more holistic evaluation criteria that emphasize research quality and contribution over quantity are needed. This could involve peer assessments, evidence of real-world impact, and valuing datasets and code as research outputs. Such reforms are critical to ensure that scholarship retains its meaning and purpose in the age of information overload.


SCIENCE & ENGINEERING INDICATORS

Publications Output: U.S. Trends and International Comparisons

- Report PDF (807 KB)

- Report - All Formats .ZIP (3.9 MB)

- Supplemental Materials - All Formats .ZIP (35.6 MB)

- MORE DOWNLOADS OPTIONS

- Share on X/Twitter

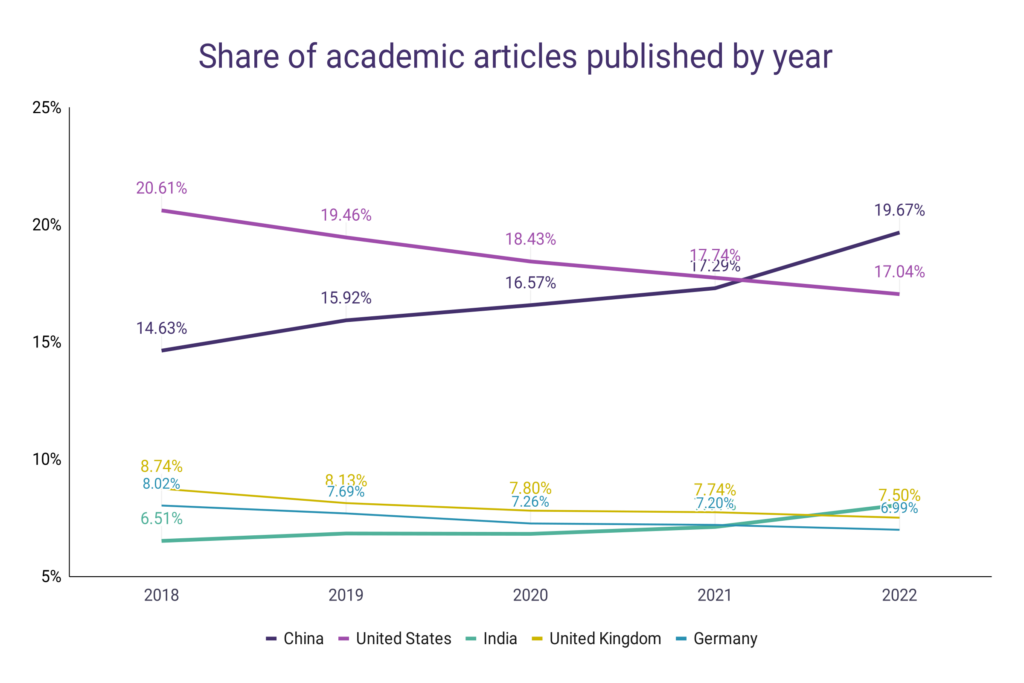

- Share on Facebook

- Share on LinkedIn

- Send as Email

Publication Output by Country, Region, or Economy and Scientific Field

Publication output reached 2.9 million articles in 2020 with over 90% of the total from countries with high-income and upper middle-income economies (Figure PBS-1). Since 1996, output has consistently grown for countries with high-income economies, such as the United States, Germany, and the United Kingdom (UK), expanding from a large base number of publications (Table SPBS-2). Countries with upper-middle-income economies, such as China, Iran, Russia, and Brazil, have had a more rapid pace of growth since 1996, expanding from a relatively smaller base number of publications. Overall, the publication compound annual growth rates of countries with upper middle-income and high-income economies have been 10% and 3%, respectively, for the 25-year period covering 1996–2020 (Figure PBS-1).

Note on publication output: Publication output only includes those indexed in the Scopus database. The publication output discussion uses fractional counting, which credits coauthored publications according to the collaborating institutions or countries based on the proportion of their participating authors. Country assignments refer to the institutional address of authors, with partial credit given for each international coauthorship. As part of our data analysis, we employ filters on the raw Scopus S&E publication data to remove publications with questionable quality, which appear in what are sometimes called predatory journals (NSB Indicators 2018: Bibliometric Data Filters sidebar).

Note on income groups: This report uses the World Bank (2021) country income classifications accessed in March 2021. The World Bank updates the classifications each year on 1 July. The World Bank income classifications are assigned using the gross national income per capita as measured in current U.S. dollars. This report uses the rankings. More information is available at https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups.


S&E articles, by income group: 1996–2020

Article counts refer to publications from a selection of conference proceedings and peer-reviewed journals in S&E fields from Scopus. Articles are classified by their year of publication and are assigned to a region, country, or economy on the basis of the institutional address(es) of the author(s) listed in the article. Articles are credited on a fractional count basis (i.e., for articles produced by authors from different countries, each country receives fractional credit on the basis of the proportion of its participating authors). Data are not directly comparable to Science and Engineering Indicators 2020; see the Technical Appendix for information on data filters. Low-income economies are not included in this figure because of their low publication output. Data by country and income groups are available in Table SPBS-2.
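To make the fractional count basis described in the note concrete, here is a minimal Python sketch that contrasts it with whole counting. The function names and the sample author lists are hypothetical and chosen only for illustration. The real pipeline works from institutional addresses in Scopus and applies quality filters, none of which is modeled here.

```python
from collections import Counter, defaultdict


def fractional_counts(articles):
    """Credit each article to countries in proportion to their share of its authors."""
    totals = defaultdict(float)
    for author_countries in articles:
        n_authors = len(author_countries)
        for country, n_from_country in Counter(author_countries).items():
            totals[country] += n_from_country / n_authors
    return dict(totals)


def whole_counts(articles):
    """Credit one full article to every country that appears among its authors."""
    totals = defaultdict(int)
    for author_countries in articles:
        for country in set(author_countries):
            totals[country] += 1
    return dict(totals)


# Hypothetical sample: each inner list holds the country of each author of one article.
sample_articles = [
    ["US", "US", "CN", "DE"],  # fractional credit: US 0.5, CN 0.25, DE 0.25
    ["CN", "CN"],              # fractional credit: CN 1.0
    ["FR", "US"],              # fractional credit: FR 0.5, US 0.5
]

fractional = fractional_counts(sample_articles)
whole = whole_counts(sample_articles)

print(fractional)
print(whole)
```

Under fractional counting the credits always sum to the number of articles (3 here), so country totals can be added without double counting. Under whole counting the same three articles yield six credits, which is why the two methods can give different totals and growth rates for countries with many international coauthorships.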

National Center for Science and Engineering Statistics; Science-Metrix; Elsevier, Scopus abstract and citation database, accessed May 2021; World Bank Country and Lending Groups, accessed March 2021.


More recently, the compound annual growth in publication output for the world was 4% from 2010 to 2020 (Table PBS-1). Growth rates vary widely by country. Among the 15 largest publication producers, countries with compound annual growth rates above the world average were Russia (10%), Iran (9%), India (9%), China (8%), and Brazil (5%); those with the lowest growth rates were Japan (-1%), France (-0.3%), the United States (1%), the UK (1%), and Germany (1%). The countries with low growth rates are those that built their scientific capacity decades ago and continue to maintain their scientific research. The worldwide growth of publication output, from 1.9 million in 2010 to 2.9 million in 2020, was led by four geographically large countries. China (36%), India (9%), Russia (6%), and the United States (5%) together accounted for about half the increase in publications over this time period.

Note on growth rates: It is possible that the growth rates could be influenced by fractional counting. For example, the compound annual growth rate for France using whole counting is 1%. Publication output using whole counting is available in Table SPBS-17.
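As a quick check on the worldwide figures above, the compound annual growth rate can be recomputed from the two endpoint values. The sketch below is illustrative only: the `cagr` helper is a hypothetical name, the inputs are the rounded 1.9 million and 2.9 million totals quoted in the text, and it assumes 10 annual growth intervals between 2010 and 2020.

```python
def cagr(start_value, end_value, years):
    """Return the compound annual growth rate as a fraction (0.05 means 5% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded worldwide totals quoted in the text.
articles_2010 = 1.9e6
articles_2020 = 2.9e6

# Assumption: 2010 to 2020 spans 10 annual growth intervals.
world_cagr = cagr(articles_2010, articles_2020, 2020 - 2010)

print(f"World CAGR, 2010-2020: {world_cagr:.1%}")
```

The result is a little over 4% per year, in line with the 4% reported for the world. Small differences from the published value are expected because the inputs here are rounded.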

S&E articles in all fields for 15 largest producing regions, countries, or economies: 2010 and 2020

na = not applicable.