Dictate your documents in Word

Dictation lets you use speech-to-text to author content in Microsoft 365 with a microphone and reliable internet connection. It's a quick and easy way to get your thoughts out, create drafts or outlines, and capture notes.

Start speaking to see text appear on the screen.

How to use dictation

Tip: You can also start dictation with the keyboard shortcut: ⌥ (Option) + F1.

Learn more about using dictation in Word on the web and mobile

Dictate your documents in Word for the web

Dictate your documents in Word Mobile

What can I say?

In addition to dictating your content, you can speak commands to add punctuation, navigate around the page, and enter special characters.

You can see the commands in any supported language by going to Available languages. These are the commands for English.

Punctuation

Navigation and selection, creating lists, adding comments, dictation commands, mathematics, emoji/faces, available languages.

Select from the list below to see commands available in each of the supported languages.

Arabic (Bahrain)

Arabic (Egypt)

Arabic (Saudi Arabia)

Croatian (Croatia)

Gujarati (India)

Hebrew (Israel)

Hungarian (Hungary)

Irish (Ireland)

Marathi (India)

Polish (Poland)

Romanian (Romania)

Russian (Russia)

Slovenian (Slovenia)

Tamil (India)

Telugu (India)

Thai (Thailand)

Vietnamese (Vietnam)

More Information

Spoken languages supported.

By default, Dictation is set to your document language in Microsoft 365.

We are actively working to improve these languages and add more locales and languages.

Supported Languages

Chinese (China)

English (Australia)

English (Canada)

English (India)

English (United Kingdom)

English (United States)

French (Canada)

French (France)

German (Germany)

Italian (Italy)

Portuguese (Brazil)

Spanish (Spain)

Spanish (Mexico)

Preview languages *

Chinese (Traditional, Hong Kong)

Chinese (Taiwan)

Dutch (Netherlands)

English (New Zealand)

Norwegian (Bokmål)

Portuguese (Portugal)

Swedish (Sweden)

Turkish (Turkey)

* Preview Languages may have lower accuracy or limited punctuation support.

Dictation settings

Click on the gear icon to see the available settings.

Spoken Language: View and change languages in the drop-down

Microphone: View and change your microphone

Auto Punctuation: Toggle the checkmark on or off, if it's available for the language chosen

Profanity filter: Mask potentially sensitive phrases with ***

Tips for using Dictation

Saying “delete” by itself removes the last word or punctuation before the cursor.

Saying “delete that” removes the last spoken utterance.

You can bold, italicize, underline, or strike through a word or phrase. For example, dictate “review by tomorrow at 5PM”, then say “bold tomorrow”, which leaves the word “tomorrow” in bold.

Try phrases like “bold last word” or “underline last sentence.”

Saying “add comment look at this tomorrow” will insert a new comment with the text “Look at this tomorrow” inside it.

Saying “add comment” by itself will create a blank comment box where you can type a comment.

To resume dictation, please use the keyboard shortcut ALT + ` or press the Mic icon in the floating dictation menu.

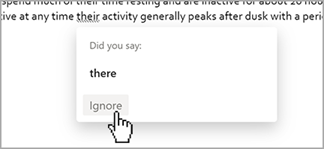

Markings may appear under words with alternates we may have misheard.

If the marked word is already correct, you can select Ignore.

This service does not store your audio data or transcribed text.

Your speech utterances will be sent to Microsoft and used only to provide you with text results.

For more information about experiences that analyze your content, see Connected Experiences in Microsoft 365.

Troubleshooting

Can't find the dictate button

If you can't see the button to start dictation:

Make sure you're signed in with an active Microsoft 365 subscription

Dictate is not available in Office 2016 or 2019 for Windows without Microsoft 365

Make sure you have Windows 10 or above

Dictate button is grayed out

If you see the dictate button is grayed out:

Make sure the document is not in a Read-Only state.

Microphone doesn't have access

If you see "We don’t have access to your microphone":

Make sure no other application or web page is using the microphone and try again

Refresh, click on Dictate, and give permission for the browser to access the microphone

Microphone isn't working

If you see "There is a problem with your microphone" or "We can’t detect your microphone":

Make sure the microphone is plugged in

Test the microphone to make sure it's working

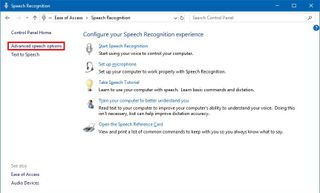

Check the microphone settings in Control Panel

Also see How to set up and test microphones in Windows

On a Surface running Windows 10: Adjust microphone settings

Dictation can't hear you

If you see "Dictation can't hear you" or if nothing appears on the screen as you dictate:

Make sure your microphone is not muted

Adjust the input level of your microphone

Move to a quieter location

If using a built-in mic, consider trying again with a headset or external mic

Accuracy issues or missed words

If you see a lot of incorrect words being output or missed words:

Make sure you're on a fast and reliable internet connection

Avoid or eliminate background noise that may interfere with your voice

Try speaking more deliberately

Check to see if the microphone you are using needs to be upgraded

Need more help?

Want more options.

Explore subscription benefits, browse training courses, learn how to secure your device, and more.

Microsoft 365 subscription benefits

Microsoft 365 training

Microsoft security

Accessibility center

Communities help you ask and answer questions, give feedback, and hear from experts with rich knowledge.

Ask the Microsoft Community

Microsoft Tech Community

Windows Insiders

Microsoft 365 Insiders

Was this information helpful?

Thank you for your feedback.

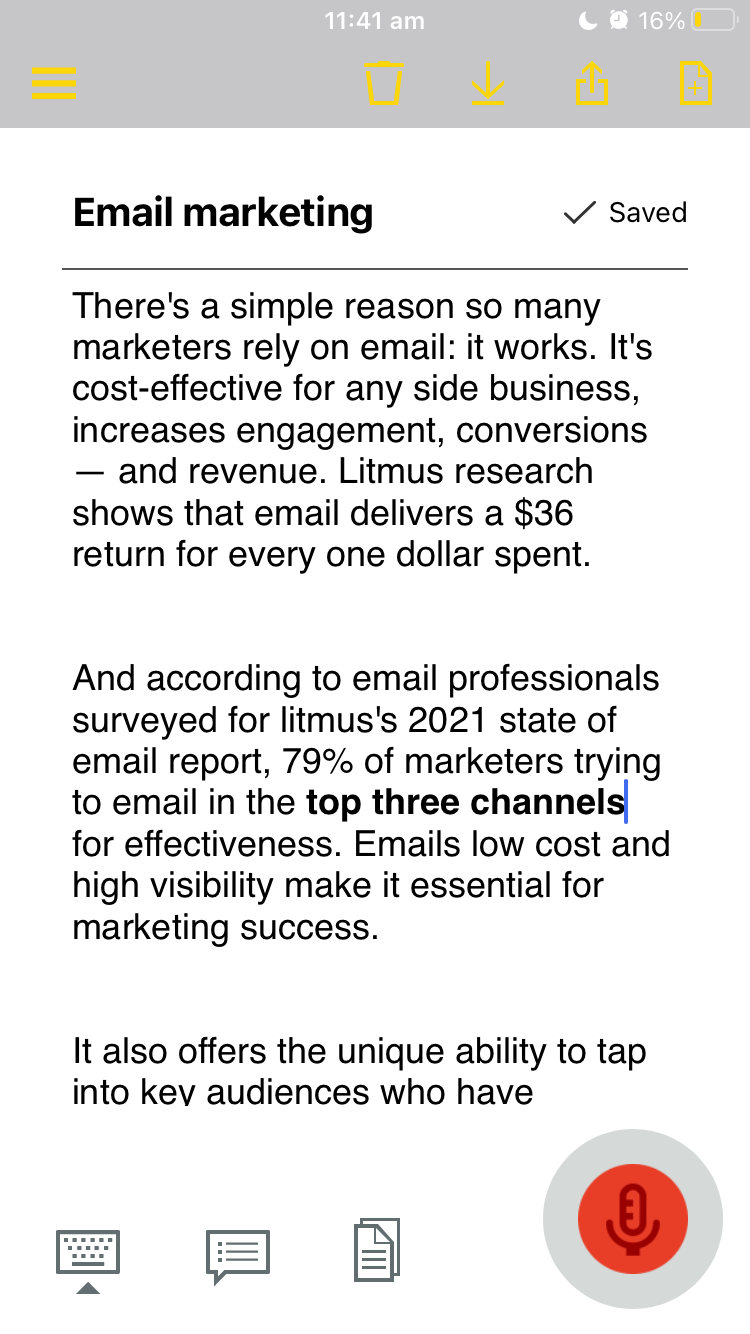

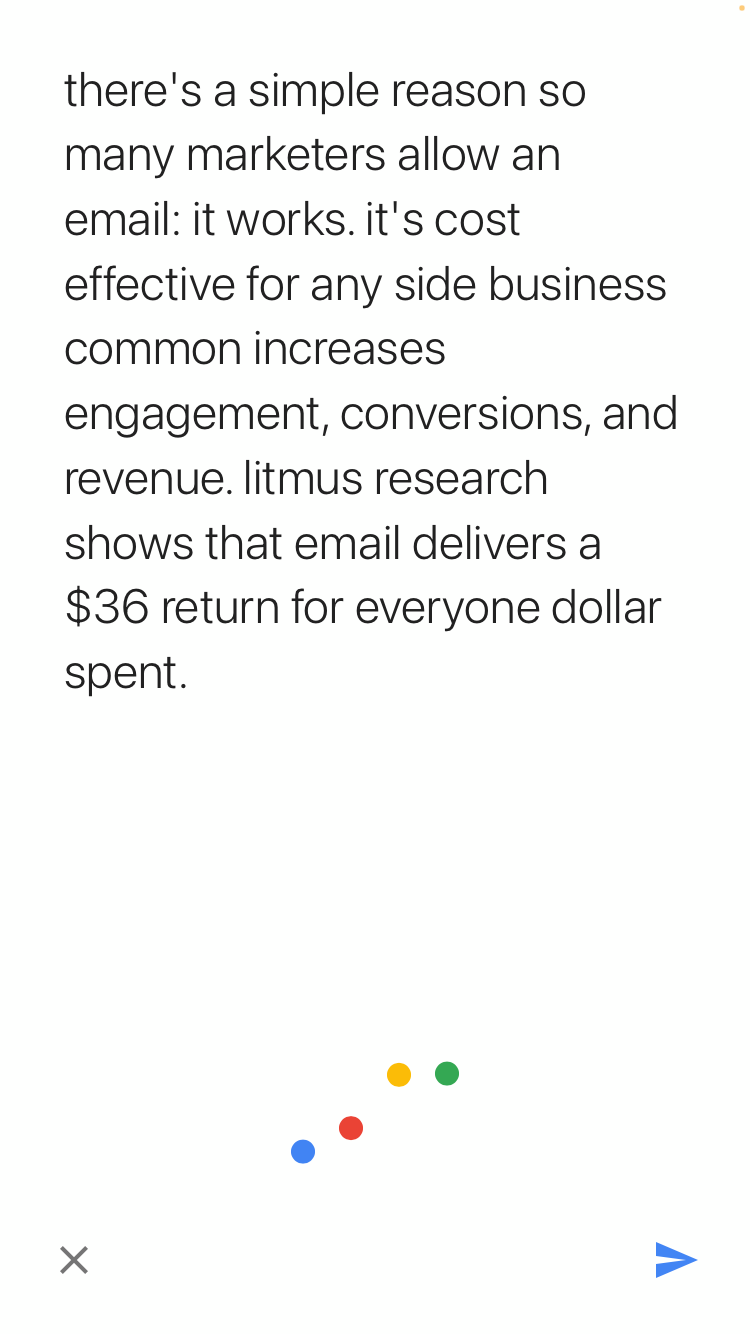

The best dictation software in 2024

These speech-to-text apps will save you time without sacrificing accuracy.

The early days of dictation software were like your friend that mishears lyrics: lots of enthusiasm but little accuracy. Now, AI is out of Pandora's box, both in the news and in the apps we use, and dictation apps are getting better and better because of it. It's still not 100% perfect, but you'll definitely feel more in control when using your voice to type.

I took to the internet to find the best speech-to-text software out there right now, and after monologuing at length in front of dozens of dictation apps, these are my picks for the best.

The best dictation software

Apple Dictation for free dictation software on Apple devices

Windows 11 Speech Recognition for free dictation software on Windows

Dragon by Nuance for a customizable dictation app

Google Docs voice typing for dictating in Google Docs

Gboard for a free mobile dictation app

Otter for collaboration

What is dictation software?

When searching for dictation software online, you'll come across a wide range of options. The ones I'm focusing on here are apps or services that you can quickly open, start talking, and see the results on your screen in (near) real-time. This is great for taking quick notes, writing emails without typing, or talking out an entire novel while you walk in your favorite park—because why not.

Beyond these productivity uses, people with disabilities or with carpal tunnel syndrome can use this software to type more easily. It makes technology more accessible to everyone.

If this isn't what you're looking for, here's what else is out there:

AI assistants, such as Apple's Siri, Amazon's Alexa, and Microsoft's Cortana, can help you interact with each of these ecosystems to send texts, buy products, or schedule events on your calendar.

AI meeting assistants will join your meetings and transcribe everything, generating meeting notes to share with your team.

AI transcription platforms can process your video and audio files into neat text.

Transcription services that use a combination of dictation software, AI, and human proofreaders can achieve above 99% accuracy.

There are also advanced platforms for enterprise, like Amazon Transcribe and Microsoft Azure's speech-to-text services.

What makes a great dictation app?

How we evaluate and test apps.

Our best apps roundups are written by humans who've spent much of their careers using, testing, and writing about software. Unless explicitly stated, we spend dozens of hours researching and testing apps, using each app as it's intended to be used and evaluating it against the criteria we set for the category. We're never paid for placement in our articles from any app or for links to any site—we value the trust readers put in us to offer authentic evaluations of the categories and apps we review. For more details on our process, read the full rundown of how we select apps to feature on the Zapier blog.

Dictation software comes in different shapes and sizes. Some are integrated in products you already use. Others are separate apps that offer a range of extra features. While each can vary in look and feel, here's what I looked for to find the best:

High accuracy. Staying true to what you're saying is the most important feature here. The lowest score on this list is at 92% accuracy.

Ease of use. This isn't a high hurdle, as most options are basic enough that anyone can figure them out in seconds.

Availability of voice commands. These let you add "instructions" while you're dictating, such as adding punctuation, starting a new paragraph, or more complex commands like capitalizing all the words in a sentence.

Availability of the languages supported. Most of the picks here support a decent (or impressive) number of languages.

Versatility. I paid attention to how well the software could adapt to different circumstances, apps, and systems.

I tested these apps by reading a 200-word script containing numbers, compound words, and a few tricky terms. I read the script three times for each app: the accuracy scores are an average of all attempts. Finally, I used the voice commands to delete and format text and to control the app's features where available.
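The article doesn't say exactly how each accuracy score was calculated, only that it averages three readings. One common way to score a transcript is word-level accuracy: one minus the word error rate, where errors are counted as the edit distance between the script and the transcript. Here is a minimal sketch under that assumption; the function names are hypothetical and this is not necessarily the scoring used for the figures in this roundup.

```python
def word_errors(reference: str, hypothesis: str) -> int:
    """Word-level edit distance (substitutions, insertions, deletions)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # prev[j] is the distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # word missing from transcript
                            curr[j - 1] + 1,      # extra word in transcript
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def accuracy(reference: str, hypothesis: str) -> float:
    """Assumed metric: 1 - (word errors / words in the script), floored at 0."""
    total = len(reference.split())
    if total == 0:
        raise ValueError("reference script is empty")
    return max(0.0, 1 - word_errors(reference, hypothesis) / total)


def average_accuracy(reference: str, attempts: list[str]) -> float:
    """Mean accuracy over several readings of the same script."""
    return sum(accuracy(reference, a) for a in attempts) / len(attempts)
```

With a 200-word script, each misheard, missing, or extra word costs half a percentage point on a single reading, so a 92% score corresponds to roughly 16 word errors per reading under this metric.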

I used my laptop's or smartphone's microphone to test these apps in a quiet room without background noise. For occasional dictation, an equivalent microphone on your own computer or smartphone should do the job well. If you're doing a lot of dictation every day, it's probably worth investing in an external microphone, like the Jabra Evolve.

What about AI?

Before the ChatGPT boom, AI wasn't as hot a keyword, but it already existed. The apps on this list use a combination of technologies that may include AI, machine learning and natural language processing (NLP) in particular. While they could rebrand themselves to keep up with the hype, they may use pipelines or models that aren't as bleeding-edge when compared to what's going on in Hugging Face or under OpenAI Whisper's hood, for example.

Also, since this isn't a hot AI software category, these apps may prefer to focus on their core offering and product quality instead, not ride the trendy wave by slapping "AI-powered" on every web page.

Tips for using voice recognition software

Though dictation software is pretty good at recognizing different voices, it's not perfect. Here are some tips to make it work as best as possible.

Speak naturally (with caveats). Dictation apps learn your voice and speech patterns over time. And if you're going to spend any time with them, you want to be comfortable. Speak naturally. If you're not getting 90% accuracy initially, try enunciating more.

Punctuate. When you dictate, you have to say each period, comma, question mark, and so forth. The software isn't always smart enough to figure it out on its own.

Learn a few commands. Take the time to learn a few simple commands, such as "new line" to enter a line break. There are different commands for composing, editing, and operating your device. Commands may differ from app to app, so learn the ones that apply to the tool you choose.

Know your limits. Especially on mobile devices, some tools have a time limit for how long they can listen—sometimes for as little as 10 seconds. Glance at the screen from time to time to make sure you haven't blown past the mark.

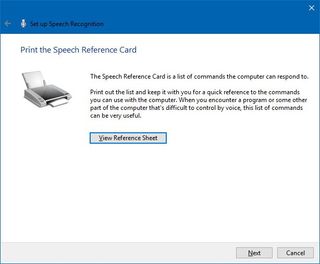

Practice. It takes time to adjust to voice recognition software, but it gets easier the more you practice. Some of the more sophisticated apps invite you to train by reading passages or doing other short drills. Don't shy away from tutorials, help menus, and on-screen cheat sheets.

The best dictation software at a glance

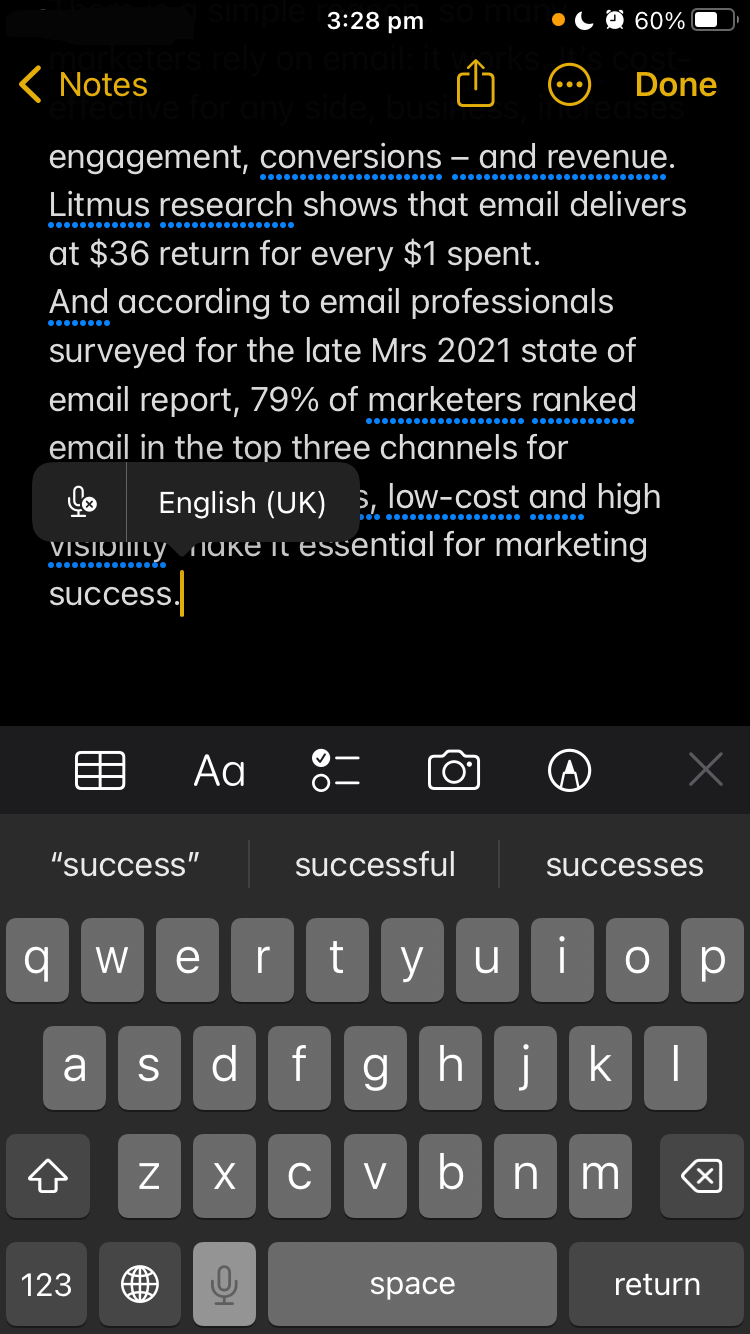

Best free dictation software for Apple devices

Apple Dictation (iOS, iPadOS, macOS)

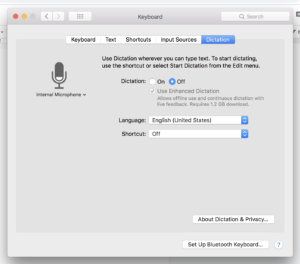

Look no further than your Mac, iPhone, or iPad for one of the best dictation tools. Apple's built-in dictation feature, powered by Siri (I wouldn't be surprised if the two merged one day), ships as part of Apple's desktop and mobile operating systems. On iOS devices, you use it by pressing the microphone icon on the stock keyboard. On your desktop, you turn it on by going to System Preferences > Keyboard > Dictation, and then use a keyboard shortcut to activate it in your app.

If you want the ability to navigate your Mac with your voice and use dictation, try Voice Control. By default, Voice Control requires the internet to work and has a time limit of about 30 seconds for each smattering of speech. To remove those limits for a Mac, enable Enhanced Dictation, and follow the directions here for your OS (you can also enable it for iPhones and iPads). Enhanced Dictation adds a local file to your device so that you can dictate offline.

You can format and edit your text using simple commands, such as "new paragraph" or "select previous word." Tip: you can view available commands in a small window, like a little cheat sheet, while learning the ropes. Apple also offers a number of advanced commands for things like math, currency, and formatting.

Apple Dictation price: Included with macOS, iOS, iPadOS, and Apple Watch.

Apple Dictation accuracy: 96%. I tested this on an iPhone SE 3rd Gen using the dictation feature on the keyboard.

Recommendation: For the occasional dictation, I'd recommend the standard Dictation feature available with all Apple systems. But if you need more custom voice features (e.g., medical terms), opt for Voice Control with Enhanced Dictation. You can create and import both custom vocabulary and custom commands and work while offline.

Apple Dictation supported languages: 59 languages and dialects.

While Apple Dictation is available natively on the Apple Watch, if you're serious about recording plenty of voice notes and memos, check out the Just Press Record app. It runs on the same engine and keeps all your recordings synced and organized across your Apple devices.

Best free dictation software for Windows

Windows 11 Speech Recognition (Windows)

Windows 11 Speech Recognition (also known as Voice Typing) is a strong dictation tool, both for writing documents and controlling your Windows PC. Since it's part of your system, you can use it in any app you have installed.

To start, first, check that online speech recognition is on by going to Settings > Time and Language > Speech . To begin dictating, open an app, and on your keyboard, press the Windows logo key + H. A microphone icon and gray box will appear at the top of your screen. Make sure your cursor is in the space where you want to dictate.

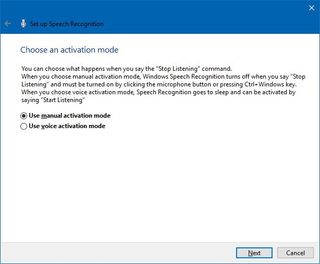

When it's ready for your dictation, it will say Listening. You have about 10 seconds to start talking before the microphone turns off. If that happens, just click it again and wait for Listening to pop up. To stop the dictation, click the microphone icon again or say "stop talking."

As I dictated into a Word document, the gray box reminded me to "hang on, we need a moment to catch up." If you're speaking too fast, you'll also notice your transcribed words aren't keeping up. This never posed an issue with accuracy, but it's a nice reminder to keep it slow and steady.

To activate the computer control features, you'll have to go to Settings > Accessibility > Speech instead. While there, tick on Windows Speech Recognition. This unlocks a range of new voice commands that can fully replace a mouse and keyboard. Your voice becomes the main way of interacting with your system.

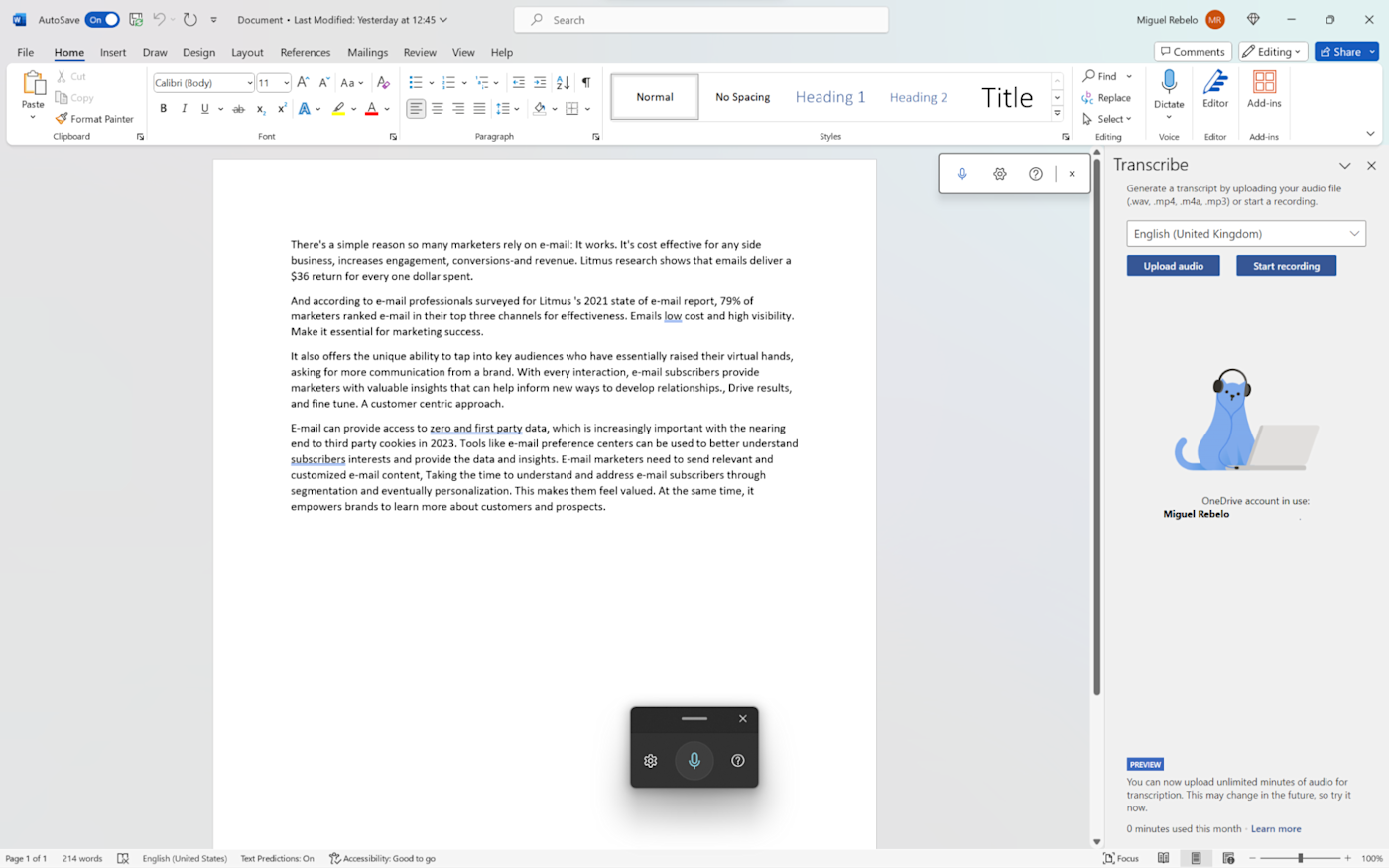

While you can use this tool anywhere inside your computer, if you're a Microsoft 365 subscriber, you'll be able to use the dictation features there too. The best app to use it on is, of course, Microsoft Word: it even offers file transcription, so you can upload a WAV or MP3 file and turn it into text. The engine is the same, provided by Microsoft Speech Services.

Windows 11 Speech Recognition price: Included with Windows 11. Also available as part of the Microsoft 365 subscription.

Windows 11 Speech Recognition accuracy: 95%. I tested it in Windows 11 while using Microsoft Word.

Windows 11 Speech Recognition supported languages: 11 languages and dialects.

Best customizable dictation software

Dragon by Nuance (Android, iOS, macOS, Windows)

In 1990, Dragon Dictate emerged as the first dictation software. Over three decades later, we have Dragon by Nuance, a leader in the industry and a distant cousin of that first iteration. With a variety of software packages and mobile apps for different use cases (e.g., legal, medical, law enforcement), Dragon can handle specialized industry vocabulary, and it comes with excellent features, such as the ability to transcribe text from an audio file you upload.

For this test, I used Dragon Anywhere, Nuance's mobile app, as it's the only version—among otherwise expensive packages—available with a free trial. It includes lots of features not found in the others, like Words, which lets you add words that would be difficult to recognize and spell out. For example, in the script, the word "Litmus'" (with the possessive) gave every app trouble. To avoid this, I added it to Words, trained it a few times with my voice, and was then able to transcribe it accurately.

It also provides shortcuts. If you want to shorten your entire address to one word, go to Auto-Text, give it a name ("address"), and type in your address: 1000 Eichhorn St., Davenport, IA 52722, and hit Save. The next time you dictate and say "address," you'll get the entire thing. Press the comment bubble icon to see text commands while you're dictating, or say "What can I say?" and the command menu pops up.
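To make the idea of a spoken shortcut concrete, here is a minimal sketch of how a name-to-text expansion could work on a finished transcript. It is an illustration only: the function `expand_auto_text` and the whole-word matching rule are assumptions, not Dragon's actual implementation.

```python
import re


def expand_auto_text(transcript: str, shortcuts: dict[str, str]) -> str:
    """Replace each spoken shortcut name with its saved text (hypothetical helper)."""
    if not shortcuts:
        return transcript
    # Try longer names first so a multi-word shortcut wins over a shorter one.
    names = sorted(shortcuts, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(name) for name in names) + r")\b",
        re.IGNORECASE,
    )
    lookup = {name.lower(): text for name, text in shortcuts.items()}
    # A function replacement avoids backslash handling in the saved text.
    return pattern.sub(lambda match: lookup[match.group(1).lower()], transcript)


shortcuts = {"address": "1000 Eichhorn St., Davenport, IA 52722"}
print(expand_auto_text("Please ship it to address by Friday", shortcuts))
```

Matching on whole words only means that saying "addresses" would not trigger the shortcut, which is one plausible design choice; a real product may also use context to decide when a word is meant literally.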

Once you complete a dictation, you can email, share (e.g., Google Drive, Dropbox), open in Word, or save to Evernote. You can perform these actions manually or by voice command (e.g., "save to Evernote.") Once you name it, it automatically saves in Documents for later review or sharing.

Accuracy is good and improves with use, showing that you can definitely train your dragon. It's a great choice if you're serious about dictation and plan to use it every day, but may be a bit too much if you're just using it occasionally.

Dragon by Nuance price: $15/month for Dragon Anywhere (iOS and Android); from $200 to $500 for desktop packages

Dragon by Nuance accuracy: 97%. Tested it in the Dragon Anywhere iOS app.

Dragon by Nuance supported languages: 6 languages and dialects in Dragon Anywhere and 8 languages and dialects in Dragon Desktop.

Best free mobile dictation software

Gboard (Android, iOS)

Gboard, also known as Google Keyboard, is a free keyboard native to Android phones. It's also available for iOS: go to the App Store, download the Gboard app, and then activate the keyboard in the settings. In addition to typing, it lets you search the web, translate text, or run a quick Google Maps search.

Back to the topic: it has an excellent dictation feature. To start, press the microphone icon on the top-right of the keyboard. An overlay appears on the screen, filling itself with the words you're saying. It's very quick and accurate, which will feel great for fast-talkers but probably intimidating for the more thoughtful among us. If you stop talking for a few seconds, the overlay disappears, and Gboard pastes what it heard into the app you're using. When this happens, tap the microphone icon again to continue talking.

Wherever you can open a keyboard while using your phone, you can have Gboard supporting you there. You can write emails or notes or use any other app with an input field.

The writer who handled the previous update of this list had been using Gboard for seven years, so it had plenty of training data to adapt to his particular enunciation, landing the accuracy at an amazing 98%. I haven't used it much before, so the best I had was 92% overall. It's still a great score. More than that, it's proof of how dictation apps improve the more you use them.

Gboard price: Free

Gboard accuracy: 92%. With training, it can go up to 98%. I tested it using the iOS app while writing a new email.

Gboard supported languages: 916 languages and dialects.

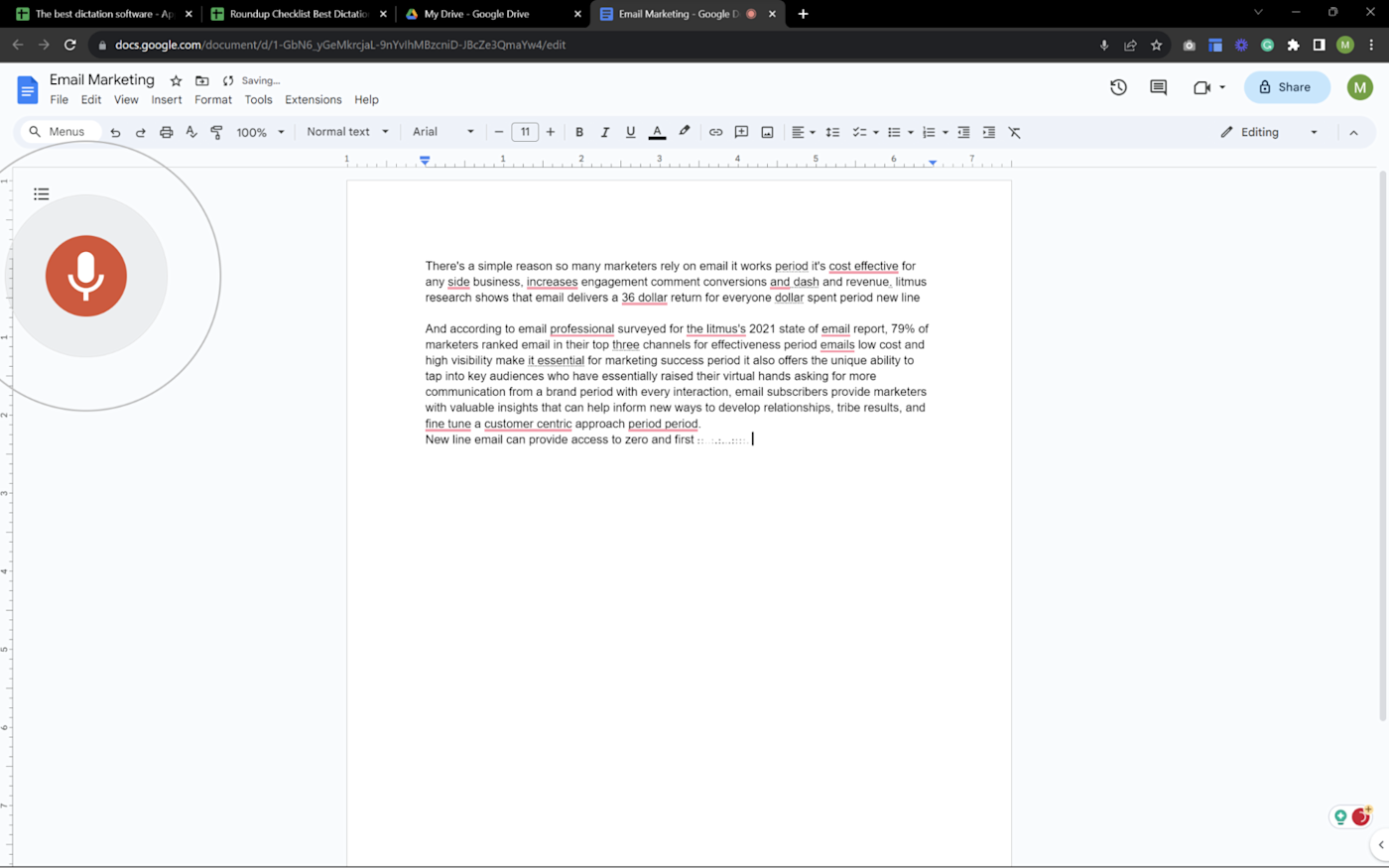

Best dictation software for typing in Google Docs

Google Docs voice typing (Web on Chrome)

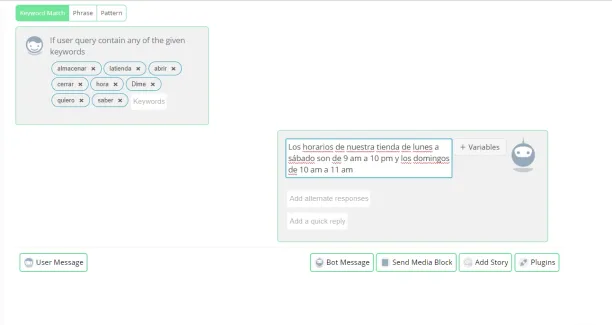

Just like Microsoft offers dictation in their Office products, Google does the same for their Workspace suite. The best place to use the voice typing feature is in Google Docs, but you can also dictate speaker notes in Google Slides as a way to prepare for your presentation.

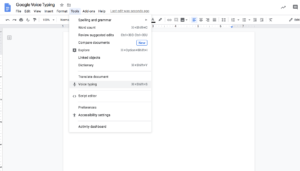

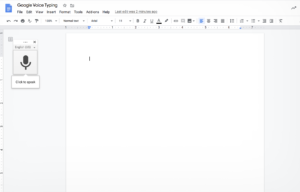

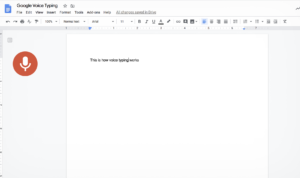

To get started, make sure you're using Chrome and have a Google Docs file open. Go to Tools > Voice typing, and press the microphone icon to start. As you talk, the text will jitter into existence in the document.

You can change the language in the dropdown on top of the microphone icon. If you need help, hover over that icon, and click the ? on the bottom-right. That will show everything from turning on the mic, the voice commands for dictation, and moving around the document.

It's unclear whether Google's voice typing here is connected to the same engine in Gboard. I wasn't able to confirm whether the training data for the mobile keyboard and this tool are connected in any way. Still, the engines feel very similar and turned out the same accuracy at 92%. If you start using it more often, it may adapt to your particular enunciation and be more accurate in the long run.

Google Docs voice typing price: Free

Google Docs voice typing accuracy: 92%. Tested in a new Google Docs file in Chrome.

Google Docs voice typing supported languages: 118 languages and dialects; voice commands only available in English.

Google Docs integrates with Zapier, which means you can automatically do things like save form entries to Google Docs, create new documents whenever something happens in your other apps, or create project management tasks for each new document.

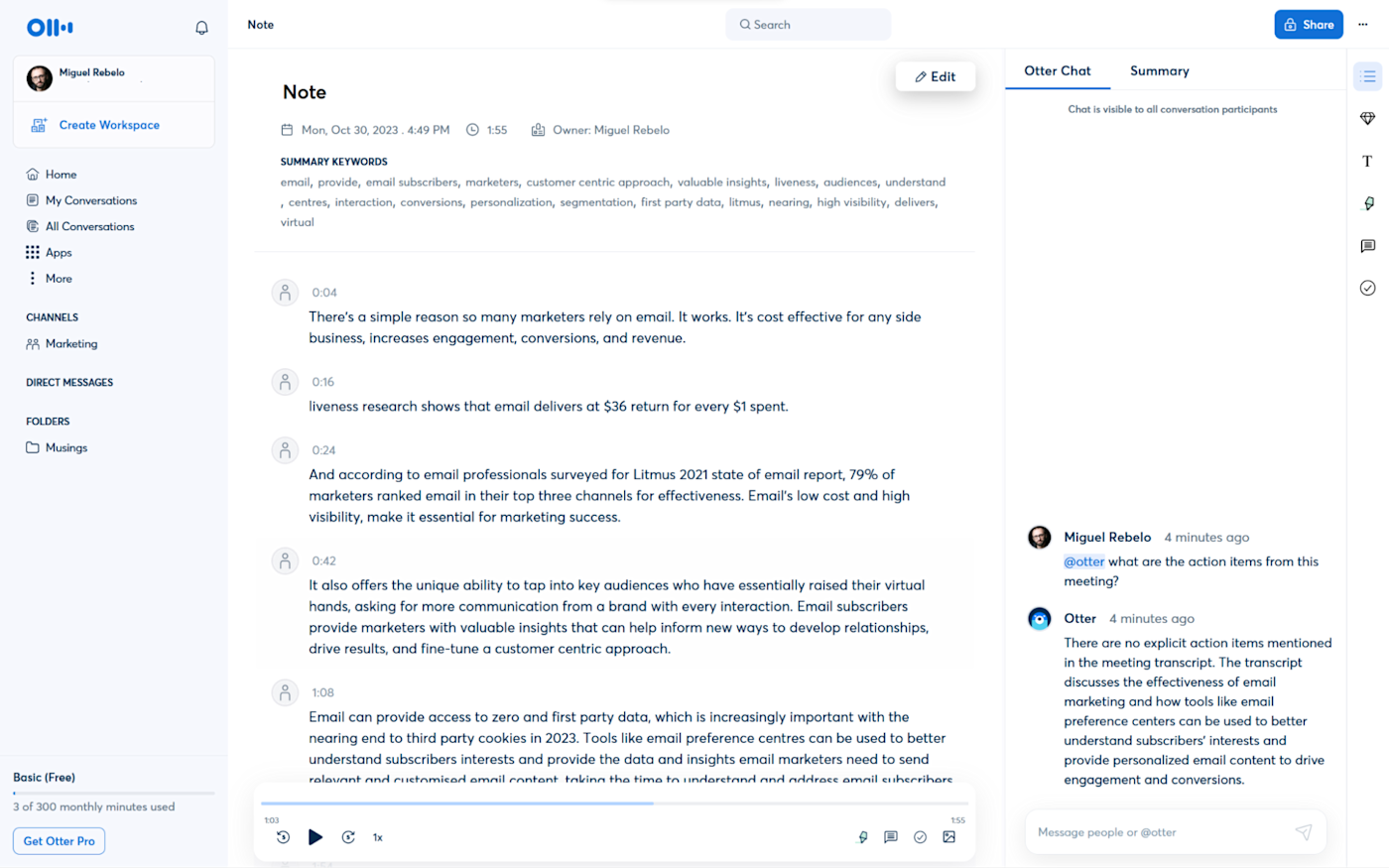

Best dictation software for collaboration

Otter (Web, Android, iOS)

Most of the time, you're dictating for yourself: your notes, emails, or documents. But there may be situations in which sharing and collaboration are more important. For those moments, Otter is the better option.

It's not as robust in terms of dictation as others on the list, but it compensates with its versatility. It's a meeting assistant, first and foremost, ready to hop on your meetings and transcribe everything it hears. This is great to keep track of what's happening there, making the text available for sharing by generating a link or in the corresponding team workspace.

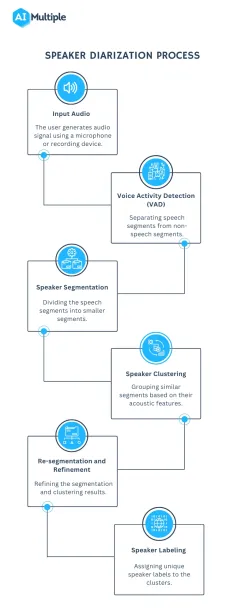

The reason why it's the best for collaboration is that others can highlight parts of the transcript and leave their comments. It also separates multiple speakers, in case you're recording a conversation, so that's an extra headache-saver if you use dictation software for interviewing people.

When you open the app and click the Record button on the top-right, you can use it as a traditional dictation app. It doesn't support voice commands, but it has decent intuition as to where the commas and periods should go based on the intonation and rhythm of your voice. Once you're done talking, Otter will start processing what you said, extract keywords, and generate action items and notes from the content of the transcription.

If you're going for long recording stretches where you talk about multiple topics, there's an AI chat option, where you can ask Otter questions about the transcript. This is great to summarize the entire talk, extract insights, and get a different angle on everything you said.

Not all meeting assistants offer dictation, so Otter sits here on this fence between software categories, a jack-of-two-trades, quite good at both. If you want something more specialized for meetings, be sure to check out the best AI meeting assistants. But if you want a pure dictation app with plenty of voice commands and great control over the final result, the other options above will serve you better.

Otter price: Free plan available for 300 minutes/month. Pro plan starts at $16.99, adding more collaboration features and monthly minutes.

Otter accuracy: 93%. I tested it in the web app on my computer.

Otter supported languages: Only American and British English for now.

Is voice dictation for you?

Dictation software isn't for everyone. It will likely take practice learning to "write" out loud because it will feel unnatural. But once you get comfortable with it, you'll be able to write from anywhere on any device without the need for a keyboard.

And by using any of the apps I listed here, you can feel confident that most of what you dictate will be accurately captured on the screen.

Related reading:

The best transcription services

Catch typos by making your computer read to you

Why everyone should try the accessibility features on their computer

What is Otter.ai?

The best voice recording apps for iPhone

This article was originally published in April 2016 and has also had contributions from Emily Esposito, Jill Duffy, and Chris Hawkins. The most recent update was in November 2023.


Miguel Rebelo

Miguel Rebelo is a freelance writer based in London, UK. He loves technology, video games, and huge forests. Track him down at mirebelo.com.


Best speech-to-text app of 2024

Free, paid and online voice recognition apps and services


The best speech-to-text apps make it simple and easy to convert speech into text, for both desktop and mobile devices.


Speech-to-text used to be regarded as very niche, specifically serving either people with accessibility needs or those who needed dictation. However, it is moving more and more into the mainstream: office work can now routinely be completed more simply and easily with voice-recognition software rather than by typing, and speaking aloud for text to be recorded is now quite common.

While the best speech to text software used to be specifically only for desktops, the development of mobile devices and the explosion of easily accessible apps means that transcription can now also be carried out on a smartphone or tablet.

This has made the best voice to text applications increasingly valuable to users in a range of different environments, from education to business. This is not least because the technology has matured to the level where mistakes in transcriptions are relatively rare, with some services rightly boasting a 99.9% success rate from clear audio.

Even still, this applies mainly to ordinary situations and circumstances, and precludes the use of technical terminology such as required in legal or medical professions. Despite this, digital transcription can still service needs such as basic note-taking which can still be easily done using a phone app, simplifying the dictation process.

However, different speech-to-text programs have different levels of ability and complexity, with some using advanced machine learning to constantly correct errors flagged up by users so that they are not repeated. Others are downloadable software which is only as good as its latest update.

Here then are the best in speech-to-text recognition programs, which should be more than capable for most situations and circumstances.

We've also featured the best voice recognition software.


The best paid for speech to text apps of 2024 in full:


1. Dragon Anywhere


Dragon Anywhere is the Nuance mobile product for Android and iOS devices. This is no ‘lite’ app, however, but rather offers fully-formed dictation capabilities powered via the cloud.

So essentially you get the same excellent speech recognition as seen on the desktop software – the only meaningful difference we noticed was a very slight delay in our spoken words appearing on the screen (doubtless due to processing in the cloud). However, note that the app was still responsive enough overall.

It also boasts support for boilerplate chunks of text which can be set up and inserted into a document with a simple command, and these, along with custom vocabularies, are synced across the mobile app and desktop Dragon software. Furthermore, you can share documents across devices via Evernote or cloud services (such as Dropbox).

This isn’t as flexible as the desktop application, however, as dictation is limited to within Dragon Anywhere – you can’t dictate directly in another app (although you can copy over text from the Dragon Anywhere dictation pad to a third-party app). The other caveats are the need for an internet connection for the app to work (due to its cloud-powered nature), and the fact that it’s a subscription offering with no one-off purchase option, which might not be to everyone’s tastes.

Even bearing in mind these limitations, though, it’s a definite boon to have fully-fledged, powerful voice recognition of the same sterling quality as the desktop software, nestling on your phone or tablet for when you’re away from the office.

Nuance Communications offers a 7-day free trial to give the app a try before you commit to a subscription.

Read our full Dragon Anywhere review.

2. Dragon Professional

Should you be looking for a business-grade dictation application, your best bet is Dragon Professional. Aimed at pro users, the software provides you with the tools to dictate and edit documents, create spreadsheets, and browse the web using your voice.

According to Nuance, the solution is capable of taking dictation at an equivalent typing speed of 160 words per minute, with a 99% accuracy rate – and that’s out-of-the-box, before any training is done (whereby the app adapts to your voice and words you commonly use).
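To put the quoted figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the 99% figure is a per-word accuracy rate, which the claim above does not spell out, and the function name is purely illustrative.

```python
def expected_errors_per_minute(words_per_minute: float, accuracy: float) -> float:
    """Estimate misrecognized words per minute.

    Assumes `accuracy` is a per-word rate (an assumption, not a vendor definition).
    """
    return round(words_per_minute * (1.0 - accuracy), 2)


# Figures quoted above: 160 words per minute at 99% accuracy.
print(expected_errors_per_minute(160, 0.99))
```

Under that assumption, dictating at full speed would still leave one or two words per minute to correct by hand.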

As well as creating documents using your voice, you can also import custom word lists. There’s also an additional mobile app that lets you transcribe audio files and send them back to your computer.

This is a powerful, flexible, and hugely useful tool that is especially good for individuals, such as professionals and freelancers, allowing for typing and document management to be done much more flexibly and easily.

Overall, the interface is easy to use, and if you get stuck at all, you can access a series of help tutorials. And while the software can seem expensive, it's just a one-time fee and compares very favorably with paid-for subscription transcription services.

Also note that Nuance is currently offering 12 months' access to Dragon Anywhere at no extra cost with any purchase of Dragon Home or Dragon Professional Individual.

Read our full Dragon Professional review .

3. Otter

Otter is a cloud-based speech to text program aimed especially at mobile use, such as on a laptop or smartphone. The app provides real-time transcription, allowing you to search, edit, play, and organize as required.

Otter is marketed as an app specifically for meetings, interviews, and lectures, to make it easier to take rich notes. However, it is also built to work with collaboration between teams, and different speakers are assigned different speaker IDs to make it easier to understand transcriptions.

There are three different payment plans. The basic one is free to use and, aside from the features mentioned above, also includes keyword summaries and a word cloud to make it easier to find specific topic mentions. You can also organize and share transcripts and import audio and video for transcription, and the plan provides 600 minutes of free service.

The Premium plan also includes advanced and bulk export options, the ability to sync audio from Dropbox, and additional playback speeds, including the ability to skip silent pauses. It also allows for up to 6,000 minutes of speech to text.

The Teams plan also adds two-factor authentication, user management and centralized billing, as well as user statistics, voiceprints, and live captioning.

Read our full Otter review .

4. Verbit

Verbit aims to offer a smarter speech to text service, using AI for transcription and captioning. The service is specifically targeted at enterprise and educational establishments.

Verbit uses a mix of speech models, using neural networks and algorithms to reduce background noise, focus on terms as well as differentiate between speakers regardless of accent, as well as incorporate contextual events such as news and company information into recordings.

Although Verbit does offer a live version for transcription and captioning, aiming for a high degree of accuracy, other plans offer human editors to ensure transcriptions are fully accurate, and advertise a four-hour turnaround time.

Altogether, while Verbit does offer a direct speech to text service, it’s possibly better thought of as a transcription service, but the focus on enterprise and education, as well as team use, means it earns a place here as an option to consider.

Read our full Verbit review .

5. Speechmatics

Speechmatics offers a machine learning solution to converting speech to text, with its automatic speech recognition solution available to use on existing audio and video files as well as for live use.

Unlike some automated transcription software, which can struggle with accents or charge more for them, Speechmatics advertises itself as being able to support all major English accents, regardless of nationality. That way it aims to cope with not just different American and British English accents, but also South African and Jamaican accents.

Speechmatics offers a wider number of speech to text transcription uses than many other providers. Examples include taking call center phone recordings and converting them into searchable text or Word documents. The software also works with video and other media for captioning as well as using keyword triggers for management.

Overall, Speechmatics aims to offer a more flexible and comprehensive speech to text service than a lot of other providers, and the use of automation should keep it price-competitive.

Read our full Speechmatics review .

6. Braina Pro

Braina Pro is speech recognition software which is built not just for dictation, but also as an all-round digital assistant to help you achieve various tasks on your PC. It supports dictation to third-party software in not just English but almost 90 different languages, with impressive voice recognition chops.

Beyond that, it’s a virtual assistant that can be instructed to set alarms, search your PC for a file, or search the internet, play an MP3 file, read an ebook aloud, plus you can implement various custom commands.

The Windows program also has a companion Android app which can remotely control your PC, and use the local Wi-Fi network to deliver commands to your computer, so you can spark up a music playlist, for example, wherever you happen to be in the house. Nifty.

There’s a free version of Braina which comes with limited functionality, but includes all the basic PC commands, along with a 7-day trial of the speech recognition which allows you to test out its powers for yourself before you commit to a subscription. Yes, this is another subscription-only product with no option to purchase for a one-off fee. Also note that you need to be online and have Google’s Chrome browser installed for speech recognition functionality to work.

Read our full Braina Pro review .

7. Amazon Transcribe

Amazon Transcribe is a big cloud-based automatic speech recognition platform developed specifically to convert audio to text for apps. It especially aims to provide a more accurate and comprehensive service than traditional providers, such as being able to cope with low-fi and noisy recordings of the kind you might get in a contact center.

Amazon Transcribe uses a deep learning process that automatically adds punctuation and formatting, and it can either process a secure livestream or transcribe speech to text with batch processing.

As well as offering time stamping for individual words for easy search, it can also identify different speakers and different channels, and annotate documents accordingly.

There are also some nice features for editing and managing transcribed texts, such as vocabulary filtering and replacement words which can be used to keep product names consistent and therefore any following transcription easier to analyze.

Overall, Amazon Transcribe is one of the most powerful platforms out there, though it’s aimed more for the business and enterprise user rather than the individual.

8. Microsoft Azure Speech to Text

Microsoft's Azure cloud service offers advanced speech recognition as part of the platform's speech services to deliver the Microsoft Azure Speech to Text functionality.

This feature allows you to simply and easily create text from a variety of audio sources. There are also customization options available to work better with different speech patterns, registers, and even background sounds. You can also modify settings to handle different specialist vocabularies, such as product names, technical information, and place names.

Microsoft's Azure Speech to Text feature is powered by deep neural network models and allows for real-time audio transcription that can be set up to handle multiple speakers.

As part of the Azure cloud service, you can run Azure Speech to Text in the cloud, on premises, or in edge computing. In terms of pricing, you can run the feature in a free container with a single concurrent request for up to 5 hours of free audio per month.

Read our full Microsoft Azure Speech to Text review .

9. IBM Watson Speech to Text

IBM's Watson Speech to Text is the third cloud-native solution on this list, with the feature being powered by AI and machine learning as part of IBM's cloud services.

While there is the option to transcribe speech to text in real-time, there is also the option to batch convert audio files and process them through a range of language, audio frequency, and other output options.

You can also tag transcriptions with speaker labels, smart formatting, and timestamps, as well as apply global editing for technical words or phrases, acronyms, and for number use.

As with other cloud services, Watson Speech to Text allows for easy deployment both in the cloud and on-premises behind your own firewall to ensure security is maintained.

Read our full Watson Speech to Text review .

1. Google Gboard

If you already have an Android mobile device and Google Keyboard isn't already installed, download it from the Google Play store and you'll have an instant speech-to-text app. Although it's primarily designed as a keyboard for physical input, it also has a speech input option which is directly available. And because all the power of Google's hardware is behind it, it's a powerful and responsive tool.

If that's not enough then there are additional features. Aside from physical input ones such as swiping, you can also trigger images in your text using voice commands. Additionally, it can also work with Google Translate, and is advertised as providing support for over 60 languages.

Even though Google Keyboard isn't a dedicated transcription tool, as there are no shortcut commands or text editing directly integrated, it does everything you need from a basic transcription tool. And as it's a keyboard, it should be able to work with any software you can run on your Android smartphone, so you can edit, save, and export text using that. Even better, it's free and there are no adverts to get in the way of you using it.

2. Just Press Record

If you want a dedicated dictation app, it’s worth checking out Just Press Record. It’s a mobile audio recorder that comes with features such as one tap recording, transcription and iCloud syncing across devices. The great thing is that it’s aimed at pretty much anyone and is extremely easy to use.

When it comes to recording notes, all you have to do is press one button, and you get unlimited recording time. However, the really great thing about this app is that it also offers a powerful transcription service.

Through it, you can quickly and easily turn speech into searchable text. Once you’ve transcribed a file, you can then edit it from within the app. There’s support for more than 30 languages as well, making it the perfect app if you’re working abroad or with an international team. Another nice feature is punctuation command recognition, ensuring that your transcriptions are free from typos.

This app is underpinned by cloud technology, meaning you can access notes from any device (which is online). You’re able to share audio and text files to other iOS apps too, and when it comes to organizing them, you can view recordings in a comprehensive file.

3. Speechnotes

Speechnotes is yet another easy to use dictation app. A useful touch here is that you don’t need to create an account or anything like that; you just open up the app and press on the microphone icon, and you’re off.

The app is powered by Google voice recognition tech. When you’re recording a note, you can easily dictate punctuation marks through voice commands, or by using the built-in punctuation keyboard.

To make things even easier, you can quickly add names, signatures, greetings and other frequently used text by using a set of custom keys on the built-in keyboard. There’s automatic capitalization as well, and every change made to a note is saved to the cloud.

When it comes to customizing notes, you can access a plethora of fonts and text sizes. The app is free to download from the Google Play Store, but you can make in-app purchases to access premium features (there's also a browser version for Chrome).

Read our full Speechnotes review .

4. Transcribe

Marketed as a personal assistant for turning videos and voice memos into text files, Transcribe is a popular dictation app that’s powered by AI. It lets you make high quality transcriptions by just hitting a button.

The app can transcribe any video or voice memo automatically, while supporting over 80 languages from across the world. While you can easily create notes with Transcribe, you can also import files from services such as Dropbox.

Once you’ve transcribed a file, you can export the raw text to a word processor to edit. The app is free to download, but you’ll have to make an in-app purchase if you want to make the most of these features in the long-term. There is a trial available, but it’s basically just 15 minutes of free transcription time. Transcribe is only available on iOS, though.

5. Windows Speech Recognition

If you don’t want to pay for speech recognition software, and you’re running Microsoft’s latest desktop OS, then you might be pleased to hear that speech-to-text is built into Windows.

Windows Speech Recognition, as it’s imaginatively named – and note that this is something different to Cortana, which offers basic commands and assistant capabilities – lets you not only execute commands via voice control, but also offers the ability to dictate into documents.

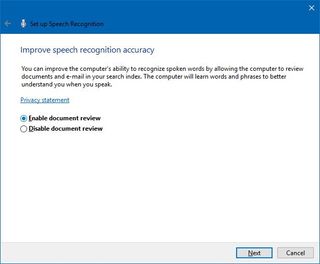

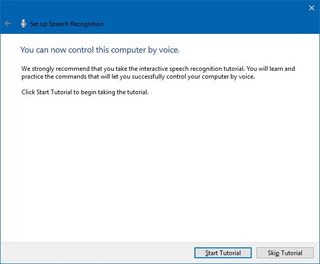

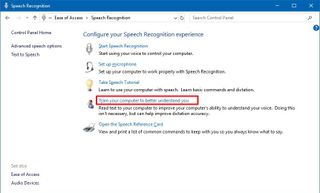

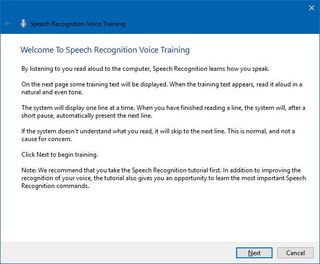

The sort of accuracy you get isn’t comparable with that offered by the likes of Dragon, but then again, you’re paying nothing to use it. It’s also possible to improve the accuracy by training the system by reading text, and giving it access to your documents to better learn your vocabulary. It’s definitely worth indulging in some training, particularly if you intend to use the voice recognition feature a fair bit.

The company has been busy boasting about its advances in terms of voice recognition powered by deep neural networks, especially since Windows 10 and now Windows 11, and Microsoft is certainly priming us to expect impressive things in the future. The likely end goal is for Cortana to do everything eventually, from voice commands to taking dictation.

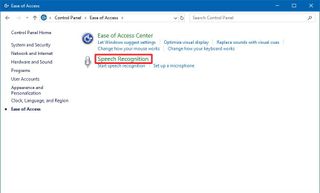

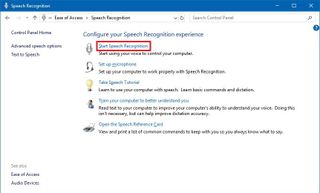

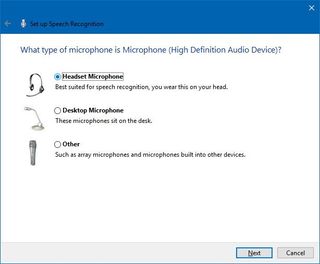

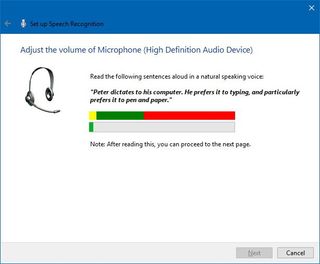

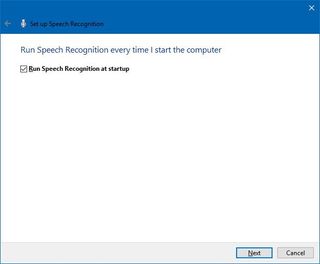

Turn on Windows Speech Recognition by heading to the Control Panel (search for it, or right click the Start button and select it), then click on Ease of Access, and you will see the option to ‘start speech recognition’ (you’ll also spot the option to set up a microphone here, if you haven’t already done that).

Aside from what has already been covered above, there are an increasing number of apps available across all mobile devices for working with speech to text, not least because Google's speech recognition technology is available for use.

iTranslate Translator is a speech-to-text app for iOS with a difference, in that it focuses on translating voice languages. Not only does it aim to translate different languages you hear into text for your own language, it also works to translate images such as photos you might take of signs in a foreign country and get a translation for them. In that way, iTranslate is a very different app, that takes the idea of speech-to-text in a novel direction, and by all accounts, does it well.

ListNote Speech-to-Text Notes is another speech-to-text app that uses Google's speech recognition software, but this time does a more comprehensive job of integrating it with a note-taking program than many other apps. The text notes you record are searchable, and you can import/export with other text applications. Additionally, there is a password protection option, which encrypts notes after the first 20 characters so that the beginning of each note is still searchable by you. There's also an organizer feature for your notes, using category or assigned color. The app is free on Android, but includes ads.

Voice Notes is a simple app that aims to convert speech to text for making notes. This is refreshing, as it mixes Google's speech recognition technology with a simple note-taking app, so there are more features to play with here. You can categorize notes, set reminders, and import/export text accordingly.

SpeechTexter is another speech-to-text app that aims to do more than just record your voice to a text file. This app is built specifically to work with social media, so that rather than typing messages, emails, Tweets, and similar, you can record your voice directly to the social media sites and send. There are also a number of language packs you can download for offline working if you want to use more than just English, which is handy.

Also consider reading these related software and app guides:

- Best text-to-speech software

- Best transcription services

- Best Bluetooth headsets

Speech-to-text app FAQs

Which speech-to-text app is best for you?

When deciding which speech-to-text app to use, first consider what your actual needs are, as free and budget options may only provide basic features, so if you need to use advanced tools you may find a paid-for platform is better suited to you. Additionally, higher-end software can usually cater for every need, so do ensure you have a good idea of which features you think you may require from your speech-to-text app.

How we tested the best speech-to-text apps

To test for the best speech-to-text apps we first set up an account with the relevant platform, then we tested the service to see how the software could be used for different purposes and in different situations. The aim was to push each speech-to-text platform to see how useful its basic tools were and also how easy it was to get to grips with any more advanced tools.

Read more on how we test, rate, and review products on TechRadar .


Brian has over 30 years of publishing experience as a writer and editor across a range of computing, technology, and marketing titles. He has been interviewed multiple times for the BBC and been a speaker at international conferences. His specialty on TechRadar is Software as a Service (SaaS) applications, covering everything from office suites to IT service tools. He is also a science fiction and fantasy author, published as Brian G Turner.


The best speech-to-text software for 2022

If you’re looking to take your productivity up a notch (or if you’re just a really slow typist), the best speech-to-text software is a sure way to do it. The idea is pretty simple: You speak, and the software detects your words and converts them into text format. The applications are nearly endless, from dictating thoughts and jotting down notes to creating long-form documents without having to type a word yourself. Yet despite this, not many businesses and professionals are taking full advantage of what speech-to-text software can give them.

- Dragon Anywhere

- Amazon Transcribe

- Google Docs Voice Typing

The good news is that the best speech-to-text software doesn’t have to cost an arm and a leg — or anything at all, depending on your needs. There’s a handful of noteworthy services out there, though, and selecting the right one is important. That’s where we come in. Below, we’ve rounded up the best speech-to-text software platforms out there, with our picks covering a wide spectrum of platforms, features, and price points.

Dragon Anywhere

- Price: $15 per month or $150 per year

- Free Trial: Yes

- Platforms: iOS, Android

- Voice editing and formatting

- Cloud-based storage and file sharing

- AI learning adapts to your speech

If you’re already somewhat familiar with the best speech-to-text software then there’s a good chance you’ve heard of Dragon. Dragon Anywhere is a dedicated mobile speech-to-text app that delivers a high degree of accuracy thanks to its industry-leading speech recognition software that can adapt to your own speech patterns. In other words, Dragon Anywhere can actually learn how you speak, right down to your sentence cadence and word pronunciation. In the off-chance that it does make a mistake, you can edit and format using just your voice. Dragon Anywhere also allows for continuous dictation with no word limits or length cut-offs, and your text documents are stored in the cloud for easy access and sharing with colleagues when you need to.


Dragon Anywhere is by far the best speech-to-text software for mobile users, given that it’s designed entirely for use on iOS and Android devices, making it the ideal choice for translators, lawyers, accountants and other professionals who need to turn spoken dialog into written notes. It’s a bit like having a virtual stenographer. Plus, it’s useful for anybody else who wants to be able to “jot” things down hands-free. Its cloud-based sharing makes Dragon Anywhere great for group work, too.

Dragon Anywhere is a paid service with monthly and yearly subscription plans. You can pay on a monthly basis for $15, although if you like the service, then the $150 annual subscription is a better value (basically getting you two months free each year). If you want to give it a try first, there is a free one-week Dragon Anywhere trial available as well. There are Dragon software suites available for business users on Windows, and Dragon Anywhere syncs with them seamlessly. You also get a Dragon Anywhere subscription at no additional cost — a $150 value — with the Dragon Home and Dragon Professional desktop versions, which might be a better value depending on your needs.
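The "two months free" figure can be checked with a short Python sketch. The prices are the ones quoted above; the function name is only illustrative.

```python
def annual_savings(monthly_price: float, yearly_price: float) -> tuple:
    """Return (dollars saved per year, equivalent free months) for the yearly plan."""
    paid_monthly = monthly_price * 12      # cost of twelve monthly payments
    saved = paid_monthly - yearly_price    # difference versus the yearly plan
    return saved, saved / monthly_price


saved, free_months = annual_savings(15, 150)
print(f"Yearly plan saves ${saved:.0f}, about {free_months:.0f} months free")
```

Twelve monthly payments come to $180, so the yearly plan saves $30, which is two months at the monthly rate.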

Amazon Transcribe

- Price: Starts at $0.024 per minute

- Free Trial: Yes, Free Tier provides 60 audio minutes monthly for the first 12 months

- Platforms: Most devices with a microphone

- HIPAA-eligible and compatible with electronic health record systems

- Integrates with AWS cloud services

- Call Analytics extracts data and insights from customer interactions

If you need a more enterprise-grade solution, then Amazon Transcribe is one of the best speech-to-text software services for businesses large and small. It’s designed to integrate seamlessly with Amazon Web Services, so if your website and/or company already uses any of these, then setup should be a breeze. You can create text documents, transcribe conversations and videos, translate speech, and more. What really sets Amazon Transcribe apart from other speech-to-text apps (aside from its AWS integration) is its bevy of great features tailored for professional environments.

For instance, its Call Analytics feature can automatically extract useful insights from customer interactions, allowing you to tune and tailor your customer service. It’s also HIPAA-eligible and compatible with electronic health record systems for easy uploading and management of medical transcriptions and other patient data. Amazon Transcribe is purpose-built for businesses, especially larger enterprises (not to mention organizations such as hospitals), which should come as no surprise given its integration with Amazon Web Services.

Compared to other dictating software, Amazon Transcribe’s pricing structure is somewhat unique in that its monthly subscription fee is based on how many audio minutes you use, with plans starting at $0.024 per minute and scaling down in price per minute for the higher tiers. If you’re looking for the best speech-to-text software for professional business applications, Amazon Transcribe is hard to beat.
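To get a feel for usage-based pricing, here is a rough estimate in Python. It uses only the starting rate and Free Tier allowance quoted above and assumes a flat rate. The cheaper higher-volume tiers are not modelled because their thresholds are not given here, and the names in the sketch are hypothetical.

```python
RATE_PER_MINUTE = 0.024  # starting rate quoted above, in dollars
FREE_MINUTES = 60        # monthly Free Tier allowance quoted above


def estimate_monthly_cost(audio_minutes: float, free_tier: bool = False) -> float:
    """Flat-rate estimate; ignores volume discounts (an assumption of this sketch)."""
    allowance = FREE_MINUTES if free_tier else 0
    billable = max(0.0, audio_minutes - allowance)
    return round(billable * RATE_PER_MINUTE, 2)


print(estimate_monthly_cost(1000))                  # 1,000 minutes, no Free Tier
print(estimate_monthly_cost(1000, free_tier=True))  # same usage within the Free Tier period
```

Since the per-minute price falls at higher tiers, this should be read as an upper bound for heavy users rather than an exact bill.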

Braina

- Price: $79 for yearly subscription, $200 for lifetime

- Free Trial: Yes, basic free plan available

- Platforms: Windows; companion app available for iOS and Android

- Understands more than 100 languages

- Acts as a virtual assistant for your PC

- Remote PC control through Android or iOS mobile devices

If Dragon and Amazon Transcribe are overkill for your needs, Braina is one of the best speech-to-text software suites for individual users. We named it the best multipurpose program in our roundup of the best dictation software, as Braina can be considered more of a virtual assistant for your PC rather than a simple speech-to-text app. Think of it as being much like Siri or Alexa, but more focused on productivity (and much more powerful and versatile in this regard) while also being capable of excellent speech-to-text functions thanks to its impressive speech recognition A.I. that understands more than 100 languages.

If you feel like you could use a hand around the office but don’t want to actually hire a personal assistant, Braina might be worth a go. It’s one of the best speech-to-text software choices for small businesses, home offices, and individual users thanks to its excellent speech recognition capabilities and other features. Perform internet searches, dictate documents, translate different languages, record calls and meetings, set alarms and calendar reminders, sort through your files — you name it. Braina’s companion app even lets you do everything remotely via your iOS or Android phone or tablet when you’re away from your computer.

One major drawback of Braina is that the core software only works on Windows, the aforementioned iOS and Android companion app notwithstanding. On the plus side, multiple people can use Braina without having separate accounts or subscriptions, which is a nice change of pace from most subscription-based software suites. There is a basic free plan available as well. If you want to unlock the full set of features, though, such as non-English language compatibility, then Braina will set you back $79 yearly or $200 for a lifetime key.
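Whether the lifetime key is worth it depends on how long you expect to keep using the software. A minimal Python sketch of the break-even point, using the two prices quoted above (the function name is illustrative):

```python
import math


def breakeven_years(yearly_price: float, lifetime_price: float) -> int:
    """Number of yearly payments at which the subscription total first
    reaches or exceeds the one-time lifetime price."""
    return math.ceil(lifetime_price / yearly_price)


print(breakeven_years(79, 200))
```

Two years of the subscription cost $158, while three cost $237, so the lifetime key comes out ahead from the third year onward.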

Google Docs Voice Typing

- Price: Free

- Platforms: Windows, Mac, and Linux (browser-based)

- If you have a Google account, you already have it

- Automatically converts text into document format

- Cloud-based

You might already have access to one of the best speech-to-text software apps without even knowing it, as Google Docs has one built right in. Google’s browser-based word processor (part of the broader Google Drive suite of cloud-based office software) includes a Voice Typing feature, and if you have a Google account and a working mic, then you’re already set up to use it. You don’t have to pay a cent for it, either, and for free software, it’s pretty good, although it naturally lacks many of the advanced features and dictation functions of the best speech-to-text software we outlined above.

Google Docs Voice Typing is very simple: You speak into your microphone, and Google Docs dumps the text into a document. It costs nothing to use, so if you’re on the fence about whether you need speech recognition at all, then Google Docs Voice Typing is a free way to try it out before you shell out any cash for any of the best speech-to-text software suites that you have to pay for. Voice Typing is great for those who just need basic dictating software without the bells and whistles offered by paid services, as well.

Since Google Docs is browser-based, you shouldn’t have to worry about platform compatibility. It’s naturally best for use on a computer rather than a mobile device; that said, you can really use it on any device with a microphone and access to Google Docs. Everything you do with Google Docs Voice Typing is automatically stored on the cloud, too, just like any other document you’d create or edit using Google Docs. The Google Drive cloud also makes it easy to share your transcriptions with friends and colleagues if you want.


Table of Contents

- Why Use Speech Recognition Software?

- Dictation vs. Transcription

- Why Use Dictation?

- Why Use Transcription?

- Do You Need Special Recording Equipment?

- The Best Transcription Services

- The 5 Best Dictation Software Options

The Best Dictation Software for Writers (to Use in 2023)

A lot of Authors give up on their books before they even start writing.

I see it all the time. Authors sit down to write and end up staring at a blank page. They might get a few words down, but they hate what they’ve written, harshly judge themselves, and quit.

Or they get intimidated by the prospect of writing more and give up. They may come back, but if so, it’s with less and less enthusiasm, until they eventually just stop.

In order to break the pattern, you have to get out of your own head. And the best way to do that is to talk it out.

I’m serious. Who ever said that you have to write your book? Why not speak it?

Authors don’t need to be professional writers. You’re publishing a book because you have knowledge to share with the world.

If you’re more comfortable speaking than writing, there’s no shame in dictating your book.

Sure, at some point, you’ll have to put the words on a page and make them readable.

But for your first draft, you can stop focusing on being a perfect writer and instead focus on getting your ideas out in the world.

In this post, I’ll cover why dictation software is such a great tool, the difference between dictation and transcription, and the best options in each category.

Why Use Speech Recognition Software?

When Authors experience writer’s block, it’s not usually because they have bad ideas or because they’re unorganized. The number 1 cause of writer’s block is fear.

So, how do you get rid of that fear?

The easiest solution is to stop staring at the screen and talk instead.

Many Authors can talk clearly and comfortably about their ideas when they aren’t put on the spot. Just think of how easy it is to sit down with colleagues over coffee or how excited you get explaining your work to a friend.

There’s a lot less pressure in those situations. It’s much easier than thinking, “I’m writing something that thousands of people are going to read and judge.”

When that thought is in your head, of course you’re going to freeze.

Your best bet is to ignore all those thoughts and really focus on your reader. Imagine you’re speaking to a specific person, maybe your ideal client or a close friend. What do they want to know? What can you help them with? What tone do you use when you talk to them?

When you keep your attention on the reader you’re trying to serve, it helps quiet your fear and anxiety. And when you speak, rather than write, it can help you keep a relaxed, confident, and personable tone.

Readers relate to Authors’ authentic voices far more than to overly crafted, hyper-intellectual writing styles.

Speaking will also help you finish your first draft faster because it helps you resist the desire to edit as you go.

We always tell Scribe Authors that their first draft should be a “vomit draft.”

You should spew words onto a page without worrying whether they’re good, how they can be better, or whether you’ve said the right thing.

Your vomit draft can be—and possibly will be—absolute garbage.

But that’s okay. As the Author of 4 New York Times bestsellers, I can tell you: first drafts are often garbage. In the end, they still go on to become highly successful books.

It’s a lot easier to edit words that are already on the page than to agonize over every single thing you’re writing.

That’s why speech recognition software is the perfect workaround. When you talk, you don’t have time to agonize. Your ideas can flow without your brain working overtime on grammar, clarity, and all those other things we expect from the written word.

Of course, your spoken words won’t be the same as a book. You’ll have to edit out all the “uh”s and the places you went on tangents. You might even have to overhaul the organization of the sections.

But remember, the goal of a first draft is never perfection. The goal is to have a text you can work with.

What’s the Difference between Dictation & Transcription?

If you know you want to talk out your first draft, you have 2 options:

- Use dictation software

- Use a transcription service

1. Dictation Software

With dictation software, you speak, and the software transcribes your words in real time.

For example, when you give Siri a voice command on your iPhone, the words pop up across the top of the screen. That’s how dictation software works.

I should point out, though, that we aren’t really talking about Apple’s Siri, Amazon’s Alexa, or Microsoft’s Cortana here. Those are AI virtual assistants that use voice recognition software, but they aren’t true dictation apps. In other words, they’re good at transcribing a shopping list, but they won’t help you write a book.

Some dictation software comes as a standalone app you use exclusively for converting speech to text. Other dictation software comes embedded in a word processor, like Apple’s built-in dictation in Pages or Google Docs’ built-in voice tool.

If you’re a fast speaker, most live dictation software won’t be able to keep up with you. You have to speak slowly and clearly for it to work.

For many people, trying to use dictation software slows them down, which can interrupt their train of thought.

2. Transcription Services

In contrast, transcription services convert your words to text after the fact. You record yourself talking and send the completed audio files to the service for transcription.

Some transcription services use human transcription, which is exactly what it sounds like: a human listens to your audio and transcribes the content. This kind of transcription is typically slower and more expensive, but it’s also more accurate.

Other transcription services rely on computer transcription. Using artificial intelligence and advanced voice recognition technology, these services can turn around a full transcript in a matter of minutes. You’ll find some mistakes, but unless you have a strong accent or there’s a lot of background noise in the recording, they’re fairly accurate.

Why Use Dictation?

Dictation is the way to go if you want to sit in front of your computer and type, but maybe just type a little faster. It’s especially useful for people who want to switch between talking and typing.

It’s probably not your best option if you want to speak your entire first draft. Voice recognition software still requires you to speak slowly and clearly. You might lose your train of thought if you’re constantly stopping to let the software catch up.

With dictation software, you may also be tempted to stop and read what the software is typing. That’s an easy way to get sucked into editing, which you should never do when you’re writing your first draft.

I recommend using dictation as a way to shake up your writing process, not to replace typing entirely.

Why Use Transcription?

If you want to get your vomit draft out by speaking at your own natural pace, we recommend making actual recordings and sending them to a transcription service.

Transcription is also preferable if you’re being interviewed or if you have a co-author because it can recognize multiple voices. It’s also a lot more flexible in terms of location. People can interview you over Zoom or in any other conferencing system, and as long as you can record the conversation, it will work.

Transcription is also relatively cheap and works for you while you do other things. You can record your content at your own pace and choose when you want a computer (or person) to transcribe it. You could record your whole book before you send the audio files for transcription, or you could do a chapter at a time.

Transcription may not work well for you if you are a visual person who needs to see text in order to stay on track. Without a clear outline in front of you, sometimes the temptation to verbally wander or jump around can be too great, and you’ll waste a lot of time sorting through the transcripts later.

Do You Need Any Special Recording Equipment?

No. Most people don’t need anything special.

Whether you’re using transcription or dictation, don’t waste your money on fancy audio equipment. The microphone that comes with your computer or smartphone is more than adequate.

Some people find headsets useful because they can move around while they’re speaking. But you don’t want to multitask too much. If you’re trying to dictate your book while you’re cooking, you’ll be distracted, and the ambient noise could mess up the recording.

The Best Transcription Services

Scribe recommends 2 transcription services:

Temi

Temi works well for automated transcription (i.e., transcribed by a computer, not a human).

They charge $0.25 per audio minute, and the turnaround takes only a few minutes.

Their transcripts are easy to read with clear timestamps and labels for different speakers. They also provide an online editing tool that allows you to easily clean up your transcripts. For example, you can easily search for all the “um”s and remove them with the touch of a button.
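If you export a transcript and want to do the same kind of cleanup outside an online editor, a few lines of Python can approximate it. This is only a rough sketch, not how Temi's editor works: the function name `strip_fillers` and the filler list are made up for illustration, and you would likely need to tune the list to your own speech habits.

```python
import re

# Hypothetical filler list; extend it to match your own speech habits.
FILLERS = ("um", "uh", "er")


def strip_fillers(text: str, fillers=FILLERS) -> str:
    """Remove standalone filler words (plus a trailing comma) from a transcript."""
    # \b keeps the match to whole words, so "umbrella" and "summer" are untouched.
    pattern = r"\b(?:" + "|".join(map(re.escape, fillers)) + r")\b,?\s*"
    cleaned = re.sub(pattern, "", text, flags=re.IGNORECASE)
    # Collapse any runs of whitespace left behind by the removal.
    return re.sub(r"\s{2,}", " ", cleaned).strip()


if __name__ == "__main__":
    print(strip_fillers("I think uh we should start with the umbrella story"))
```

A pass like this only handles the obvious fillers. Tangents and repeated phrases still need a human read-through.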

You can also listen to your audio alongside the transcript, and you can adjust the playback speed. This is very useful if you’re a fast talker.

If you prefer to work on the go, Temi also offers a mobile app.

Rev

Rev offers many of the same features as Temi for automated transcripts. They call this option “Rough Draft” transcription, and it also costs $0.25 per audio minute. The average turnaround time for a transcript is 5 minutes.

What sets Rev apart is that they also offer human transcription. This service costs $1.25 per minute, and Rev guarantees 99% accuracy. The average turnaround time is 12 hours.

Human transcription is a great option if your audio file has a lot of background noise. It’s also great if you have a strong accent that automatic transcription software has trouble recognizing.
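To see how the two price points compare for a whole book, here is a quick back-of-the-envelope calculation. It is a sketch that assumes the per-minute rates quoted above still apply (check current pricing before budgeting); the names `RATES` and `transcription_cost` are invented for this example.

```python
from decimal import Decimal

# Per-minute rates quoted in this post, in US dollars. Treat them as assumptions.
RATES = {
    "automated": Decimal("0.25"),  # Temi, or Rev's "Rough Draft" option
    "human": Decimal("1.25"),      # Rev's human transcription
}


def transcription_cost(audio_minutes: int, service: str) -> Decimal:
    """Return the cost in dollars of transcribing `audio_minutes` of audio."""
    return RATES[service] * audio_minutes


if __name__ == "__main__":
    minutes = 10 * 60  # for example, 10 hours of recorded material
    for service in RATES:
        print(f"{service}: ${transcription_cost(minutes, service)}")
```

For 10 hours of audio, that works out to $150 for automated transcription versus $750 for human transcription.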

The 5 Best Dictation Software Options

1. Google Docs Voice Typing

This is currently the best voice typing software, by far. It’s driven by Google’s AI software, which applies Google’s deep learning algorithms to accurately recognize speech. It also supports 125 different languages.

One of the best aspects of Voice Typing is that you don’t need to use a specific operating system or install any extra software to use it. You just need the Chrome web browser and a Google account.

It’s also easy to use. Just log into your account and open a Google Doc. Go to “Tools” and select “Voice Typing.”

A microphone icon will pop up on your screen.

Click it, and it will turn red. That’s when you can start dictating.

Click the microphone again to stop the dictation.

Voice Typing is highly accurate, with the typical caveats that you have to speak clearly and at a relatively slow pace.

It’s free, and because it’s embedded in the Docs software, it’s easy to integrate into your pre-existing workflow. The only potential downside is that you need a high-quality internet connection for Voice Typing, so you won’t be able to use it offline.

2. Apple Dictation

Apple Dictation is voice dictation software that’s built into Apple’s macOS and iOS. It comes preloaded with every Mac, and it works great with Apple software.

If you’re on an iPhone or iPad, you can access Apple dictation by pressing the microphone icon on the keyboard. Many people use this feature to dictate texts, but it also works in Pages for iPhone. It can be a useful option for taking notes or dictating content while you’re away from your desktop.

If you’re on a laptop or desktop, you can enable dictation by going to System Preferences > Keyboard.

Apple Dictation typically requires an internet connection, but you can select a feature in Settings called “Enhanced Dictation” that allows you to continuously dictate text when you’re offline.

Apple Dictation is great because it’s free, it works well with Apple software across multiple devices, and it generates fairly accurate text.

It’s not quite as high-powered as some “professional” grade dictation programs, but it would work well for most Authors who already own Apple products.

3. Windows Speech Recognition

The current Windows operating system comes with a built-in voice dictation system. You can train the system to recognize your voice, which means that the more you use it, the more accurate it becomes.

Unfortunately, that training can take a long time, so you’ll have to live with some inaccuracies until the system is calibrated.

On Windows 10, you can access dictation by pressing the Windows logo key + H. You can turn the microphone off by pressing Windows logo key + H again or by resuming typing.

Windows Speech Recognition is a good option if you don’t own a Mac or don’t use Google Docs, but overall, I’d still recommend one of the other options.

4. Otter.ai

Otter allows you to “live transcribe” or create real-time streaming transcripts with synced audio, text, and images. You can record conversations on your phone or web browser, or you can import audio files from other services. You can also integrate Otter with Zoom.

Otter is powered by Ambient Voice Intelligence, which means it’s always learning. You can train Otter to recognize specific voices or learn certain terminology. It’s fast, accurate, and user-friendly.

Otter is based on a subscription plan with basic, premium, and team options. I’ll only mention the basic and premium plans since most Authors won’t need the team features.

The free basic plan allows 600 minutes of transcription per month, which should be plenty—but the maximum length of each file is only 40 minutes. You also can’t import audio and video, and you can only export your transcripts as txt files, not pdf or docx files.
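To get a feel for what those limits mean in practice, the sketch below estimates how many recording sessions and how many months a given amount of spoken material would take on the free plan. It assumes the 600-minute monthly allowance and 40-minute file cap described above (both may have changed since), and `plan_sessions` is a hypothetical helper, not part of any Otter API.

```python
import math

MONTHLY_LIMIT_MIN = 600  # free basic plan allowance, per this post
MAX_FILE_MIN = 40        # maximum length of a single file, per this post


def plan_sessions(total_minutes: int) -> dict:
    """Estimate the sessions and months needed to record `total_minutes`."""
    # Each recording can run at most MAX_FILE_MIN minutes.
    sessions = math.ceil(total_minutes / MAX_FILE_MIN)
    # Each month allows at most MONTHLY_LIMIT_MIN minutes in total.
    months = math.ceil(total_minutes / MONTHLY_LIMIT_MIN)
    return {"sessions": sessions, "months": months}


if __name__ == "__main__":
    # For example, 15 hours of spoken first-draft material.
    print(plan_sessions(15 * 60))
```

Under these assumptions, 15 hours of material would take 23 sessions spread over 2 months.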