Speech Recognition in Unity3D – The Ultimate Guide

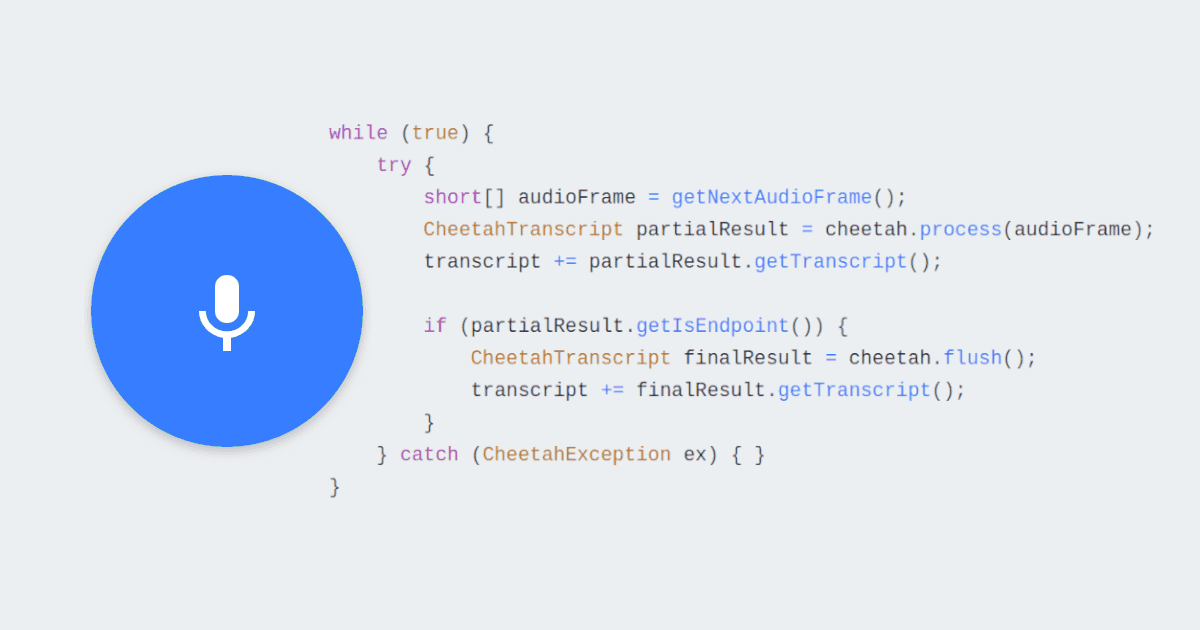

There are three main strategies in converting user speech input to text:

- Voice Commands

- Free Dictation

- Grammar Mode

These strategies exist in any voice detection engine (Google, Microsoft, Amazon, Apple, Nuance, Intel, or others), so the concepts described here give you a good reference point for working with any of them. In today’s article, we’ll explore the differences between the methods, understand their use-cases, and see a quick implementation of the main ones.

Prerequisites

To write and execute code, you need to install the following software:

- Visual Studio 2019 Community

Unity3D is using a Microsoft API that works on any Windows 10 device (Desktop, UWP, HoloLens, XBOX). Similar APIs also exist for Android and iOS.

Source code

The source code of the project is available in our LightBuzz GitHub account. Feel free to download, fork, and even extend it!

1) Voice commands

We are first going to examine the simplest form of speech recognition: plain voice commands.

Description

Voice commands are predictable single words or expressions, such as:

- “Forward”

- “Left”

- “Fire”

- “Answer call”

The detection engine listens to the user and compares the result with the possible interpretations. If one of them is close to the spoken phrase within a certain confidence threshold, it’s marked as a proposed answer.

Since that is an “all or nothing” approach, the engine will either recognize the phrase or nothing at all.

This method fails when you have several ways to say one thing. For example, the words “hello”, “hi”, “hey there” are all forms of greeting. Using this approach, you have to define all of them explicitly.

This method is useful for short, expected phrases, such as in-game controls.

Our original article includes detailed examples of using simple voice commands. You may also check out the Voice Commands Scene on the sample project.

Below, you can see the simplest C# code example for recognizing a few words:
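A minimal sketch of such a script, assuming Unity's KeywordRecognizer from the UnityEngine.Windows.Speech namespace. The class name VoiceCommands, the keyword list, and the Medium confidence threshold are illustrative choices rather than values taken from the sample project:

```csharp
using UnityEngine;
using UnityEngine.Windows.Speech;

public class VoiceCommands : MonoBehaviour
{
    // Phrases the engine should listen for (illustrative).
    private readonly string[] keywords = { "forward", "left", "fire", "answer call" };

    private KeywordRecognizer recognizer;

    private void Start()
    {
        // Matches scored below Medium confidence are ignored.
        recognizer = new KeywordRecognizer(keywords, ConfidenceLevel.Medium);
        recognizer.OnPhraseRecognized += OnPhraseRecognized;
        recognizer.Start();
    }

    private void OnPhraseRecognized(PhraseRecognizedEventArgs args)
    {
        // args.text is one of the registered keywords, never free-form text.
        Debug.Log($"Heard \"{args.text}\" ({args.confidence})");
    }

    private void OnDestroy()
    {
        if (recognizer == null) return;
        if (recognizer.IsRunning) recognizer.Stop();
        recognizer.OnPhraseRecognized -= OnPhraseRecognized;
        recognizer.Dispose();
    }
}
```

Attach the script to any GameObject in a Windows build target; every synonym ("hello", "hi", "hey there") would need its own entry in the keyword array.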

2) Free Dictation

To solve the challenges of simple voice commands, we shall use the dictation mode.

While the user speaks in this mode, the engine listens for every possible word. While listening, it tries to find the best possible match of what the user meant to say.

This is the mode your mobile device activates when you dictate a new email by voice. The engine manages to write the text less than a second after you finish saying a word.

Technically, this is really impressive, especially considering that it compares your voice across multi-lingual dictionaries, while also checking grammar rules.

Use this mode for free-form text. If your application has no idea what to expect, the Dictation mode is your best bet.

You can see an example of the Dictation mode in the sample project Dictation Mode Scene . Here is the simplest way to use the Dictation mode:
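A minimal sketch, assuming Unity's DictationRecognizer from UnityEngine.Windows.Speech. The class name DictationEngine and the log messages are illustrative and may differ from the sample project:

```csharp
using UnityEngine;
using UnityEngine.Windows.Speech;

public class DictationEngine : MonoBehaviour
{
    private DictationRecognizer recognizer;

    private void Start()
    {
        recognizer = new DictationRecognizer();

        // Fast, rough guesses while the user is still speaking.
        recognizer.DictationHypothesis += text => Debug.Log($"Hypothesis: {text}");

        // Best single sentence, delivered after the user pauses.
        recognizer.DictationResult += (text, confidence) =>
            Debug.Log($"Result: {text} ({confidence})");

        // The engine stopped; the cause tells whether a restart is worth trying.
        recognizer.DictationComplete += cause => Debug.Log($"Complete: {cause}");

        // Anything else that went wrong.
        recognizer.DictationError += (error, hresult) =>
            Debug.LogError($"Error: {error} (HRESULT {hresult})");

        recognizer.Start();
    }

    private void OnDestroy()
    {
        // Release the native resources held by the recognizer.
        recognizer?.Dispose();
    }
}
```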

As you can see, we first create a new dictation engine and register for the possible events.

- It starts with DictationHypothesis events, which are thrown really fast as the user speaks. However, hypothesized phrases may contain lots of errors.

- DictationResult is an event thrown after the user stops speaking for 1–2 seconds. It’s only then that the engine provides a single sentence with the highest probability.

- DictationComplete is thrown on several occasions when the engine shuts down. Some occasions are irreversible technical issues, while others just require a restart of the engine to get back to work.

- DictationError is thrown for other unpredictable errors.

Here are two general rules-of-thumb:

- For the highest quality, use DictationResult .

- For the fastest response, use DictationHypothesis .

Having both quality and speed is impossible with this technique.

Is it even possible to combine high-quality recognition with high speed?

Well, there is a reason we are not yet using voice commands as Iron Man does: in real-world applications, users frequently complain about typing errors, which probably occur in less than 10% of cases… Dictation makes many more mistakes than that.

To increase accuracy and keep the speed fast at the same time, we need the best of both worlds — the freedom of the Dictation and the response time of the Voice Commands.

The solution is Grammar Mode . This mode requires us to write a dictionary. A dictionary is an XML file that defines various rules for the things that the user will potentially say. This way, we can ignore languages we don’t need, and phrases the user will probably not use.

The grammar file also tells the engine which words it can expect to receive next, shrinking the number of candidates from ANYTHING to X. This significantly increases performance and quality.

For example, using a Grammar, we could greet with either of these phrases:

- “Hello, how are you?”

- “Hi there”

- “Hey, what’s up?”

- “How’s it going?”

All of those could be listed in a rule that says:

If the user starts saying something that sounds like “Hello”, the engine only has to differentiate it from, e.g., “Ciao”, rather than also from, e.g., “Yellow” or “Halo”.

We are going to see how to create our own Grammar file in a future article.

For your reference, this is the official specification for structuring a Grammar file .

In this tutorial, we described two methods of recognizing voice in Unity3D: Voice Commands and Dictation. Voice Commands are the easiest way to recognize pre-defined words. Dictation is a way to recognize free-form phrases. In a future article, we are going to see how to develop our own Grammar and feed it to Unity3D.

Until then, why don’t you start writing your code by speaking to your PC?

You made it to this point? Awesome! Here is the source code for your convenience.

Shachar Oz is a product manager and UX specialist with extensive experience with emergent technologies, like AR, VR and computer vision. He designed Human Machine Interfaces for the last 10 years for video games, apps, robots and cars, using interfaces like face tracking, hand gestures and voice recognition. Website

11 comments.

Hello, I have a question, while in unity everything works perfectly, but when I build the project for PC, and open the application, it doesn’t work. Please help.

hi Omar, well, i have built it will Unity 2019.1 as well as with 2019.3 and it works perfectly.

i apologize if it doesn’t. please try to make a build from the github source code, and feel free to send us some error messages that occur.

Hello, I’m trying Dictation Recognizer and I want to change the language to Spanish but I still don’t quite get it. Can you help me with this?

hi Alexis, perhaps check if the code here could help you: https://docs.microsoft.com/en-us/windows/apps/design/input/specify-the-speech-recognizer-language

You need an object – protected PhraseRecognizer recognizer;

in the example nr 1. Take care and thanks for this article!

Thank you Carl. Happy you liked it.

does this support android builds

Hi there. Sadly not. Android and ios have different speech api. this api supports microsoft devices.

Any working example for the grammar case?

Well, you can find this example from Microsoft. It should work anyway on PC. A combination between Grammar and machine learning is how most of these mechanisms work today.

https://learn.microsoft.com/en-us/dotnet/api/system.speech.recognition.grammar?view=netframework-4.8.1#examples

AI Speech Recognition in Unity

Introduction

This tutorial guides you through the process of implementing state-of-the-art Speech Recognition in your Unity game using the Hugging Face Unity API. This feature can be used for giving commands, speaking to an NPC, improving accessibility, or any other functionality where converting spoken words to text may be useful.

To try Speech Recognition in Unity for yourself, check out the live demo in itch.io .

Prerequisites

This tutorial assumes basic knowledge of Unity. It also requires you to have installed the Hugging Face Unity API . For instructions on setting up the API, check out our earlier blog post .

1. Set up the Scene

In this tutorial, we'll set up a very simple scene where the player can start and stop a recording, and the result will be converted to text.

Begin by creating a Unity project, then creating a Canvas with three UI elements:

- Start Button : This will start the recording.

- Stop Button : This will stop the recording.

- Text (TextMeshPro) : This is where the result of the speech recognition will be displayed.

2. Set up the Script

Create a script called SpeechRecognitionTest and attach it to an empty GameObject.

In the script, define references to your UI components:

Assign them in the inspector.

Then, use the Start() method to set up listeners for the start and stop buttons:

At this point, your script should look something like this:
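A sketch of the script at this stage, assuming the three UI elements described in step 1 and TextMeshPro for the text field. The field names startButton, stopButton, and text are illustrative, and the two recording methods are empty placeholders for now:

```csharp
using TMPro;
using UnityEngine;
using UnityEngine.UI;

public class SpeechRecognitionTest : MonoBehaviour
{
    // Assigned in the inspector.
    [SerializeField] private Button startButton;
    [SerializeField] private Button stopButton;
    [SerializeField] private TextMeshProUGUI text;

    private void Start()
    {
        // Wire each button to its handler.
        startButton.onClick.AddListener(StartRecording);
        stopButton.onClick.AddListener(StopRecording);
    }

    private void StartRecording()
    {
        // Filled in when microphone recording is added.
    }

    private void StopRecording()
    {
        // Filled in when microphone recording is added.
    }
}
```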

3. Record Microphone Input

Now let's record Microphone input and encode it in WAV format. Start by defining the member variables:

Then, in StartRecording(), use the Microphone.Start() method to start recording:

This will record up to 10 seconds of audio at 44100 Hz.

In case the recording reaches its maximum length of 10 seconds, we'll want to stop the recording automatically. To do so, write the following in the Update() method:

Then, in StopRecording() , truncate the recording and encode it in WAV format:

Finally, we'll need to implement the EncodeAsWAV() method, to prepare the audio data for the Hugging Face API:

The full script should now look something like this:
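A sketch of the script at this stage, assuming the default microphone (a null device name), 16-bit PCM output, and the same illustrative field names as before. The WAV header layout is the standard 44-byte RIFF header; the clamping of samples is a precaution added here, not something the tutorial states:

```csharp
using System.IO;
using TMPro;
using UnityEngine;
using UnityEngine.UI;

public class SpeechRecognitionTest : MonoBehaviour
{
    [SerializeField] private Button startButton;
    [SerializeField] private Button stopButton;
    [SerializeField] private TextMeshProUGUI text;

    private AudioClip clip;   // buffer the microphone records into
    private byte[] bytes;     // WAV-encoded recording
    private bool recording;

    private void Start()
    {
        startButton.onClick.AddListener(StartRecording);
        stopButton.onClick.AddListener(StopRecording);
    }

    private void Update()
    {
        // Stop automatically once the 10-second clip is full.
        if (recording && Microphone.GetPosition(null) >= clip.samples)
        {
            StopRecording();
        }
    }

    private void StartRecording()
    {
        // Default device, no looping, 10 seconds, 44100 Hz.
        clip = Microphone.Start(null, false, 10, 44100);
        recording = true;
    }

    private void StopRecording()
    {
        int position = Microphone.GetPosition(null);
        Microphone.End(null);
        // Keep only the samples that were actually recorded.
        float[] samples = new float[position * clip.channels];
        clip.GetData(samples, 0);
        bytes = EncodeAsWAV(samples, clip.frequency, clip.channels);
        recording = false;
    }

    private static byte[] EncodeAsWAV(float[] samples, int frequency, int channels)
    {
        using (var stream = new MemoryStream(44 + samples.Length * 2))
        using (var writer = new BinaryWriter(stream))
        {
            writer.Write("RIFF".ToCharArray());
            writer.Write(36 + samples.Length * 2);       // remaining file size
            writer.Write("WAVE".ToCharArray());
            writer.Write("fmt ".ToCharArray());
            writer.Write(16);                            // fmt chunk size
            writer.Write((ushort)1);                     // PCM
            writer.Write((ushort)channels);
            writer.Write(frequency);
            writer.Write(frequency * channels * 2);      // byte rate
            writer.Write((ushort)(channels * 2));        // block align
            writer.Write((ushort)16);                    // bits per sample
            writer.Write("data".ToCharArray());
            writer.Write(samples.Length * 2);            // data chunk size
            foreach (float sample in samples)
            {
                // Convert [-1, 1] floats to signed 16-bit integers.
                writer.Write((short)(Mathf.Clamp(sample, -1f, 1f) * short.MaxValue));
            }
            writer.Flush();
            return stream.ToArray();
        }
    }
}
```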

To test whether this code is working correctly, you can add the following line to the end of the StopRecording() method:

Now, if you click the Start button, speak into the microphone, and click Stop , a test.wav file should be saved in your Unity Assets folder with your recorded audio.

4. Speech Recognition

Next, we'll want to use the Hugging Face Unity API to run speech recognition on our encoded audio. To do so, we'll create a SendRecording() method:
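A sketch of that method, shown in a small stand-alone class so it compiles on its own. It assumes the Hugging Face Unity API exposes HuggingFaceAPI.AutomaticSpeechRecognition in the HuggingFace.API namespace, taking the audio bytes plus success and error callbacks; check the installed package if the signature differs. The class name and the bytes field are illustrative:

```csharp
using HuggingFace.API;
using TMPro;
using UnityEngine;

public class SpeechRecognitionSender : MonoBehaviour
{
    [SerializeField] private TextMeshProUGUI text;

    // WAV-encoded audio produced when the recording is stopped.
    private byte[] bytes;

    private void SendRecording()
    {
        HuggingFaceAPI.AutomaticSpeechRecognition(bytes, response =>
        {
            // Success: show the transcription.
            text.color = Color.white;
            text.text = response;
        }, error =>
        {
            // Failure: show the error message.
            text.color = Color.red;
            text.text = error;
        });
    }
}
```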

This will send the encoded audio to the API, displaying the response in white if successful, otherwise the error message in red.

Don't forget to call SendRecording() at the end of the StopRecording() method:

5. Final Touches

Finally, let's improve the UX of this demo a bit using button interactability and status messages.

The Start and Stop buttons should only be interactable when appropriate, i.e. when a recording is ready to be started/stopped.

Then, set the response text to a simple status message while recording or waiting for the API.

The finished script should look something like this:

Congratulations, you can now use state-of-the-art Speech Recognition in Unity!

If you have any questions or would like to get more involved in using Hugging Face for Games, join the Hugging Face Discord !

More articles from our Blog

Total noob’s intro to Hugging Face Transformers

By 2legit2overfit March 22, 2024

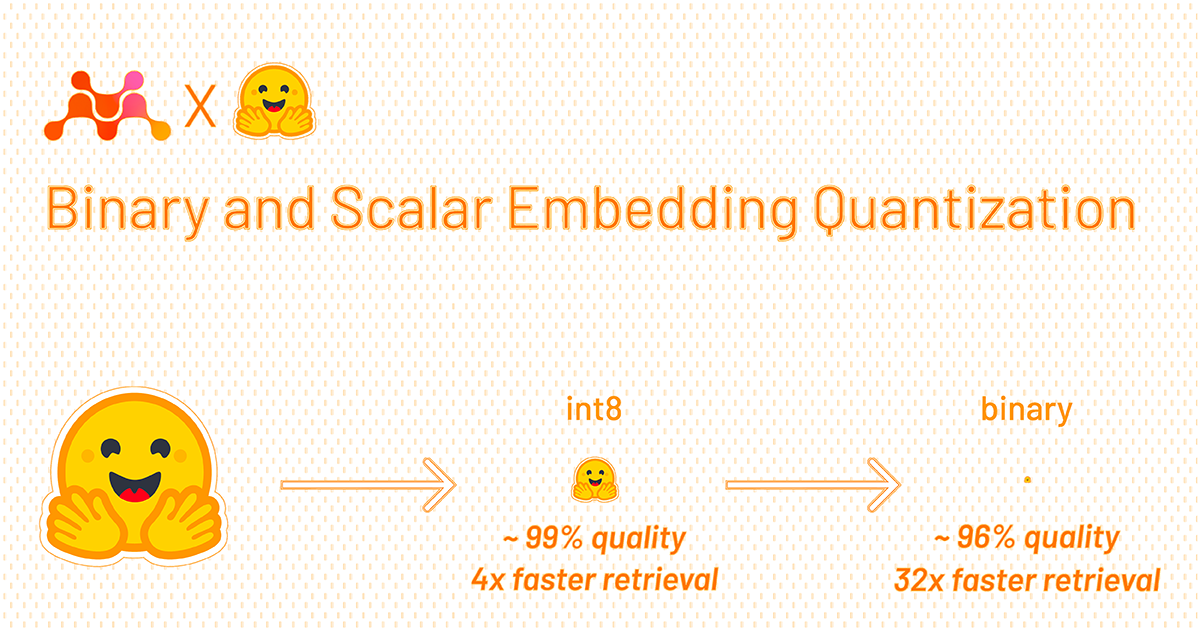

Binary and Scalar Embedding Quantization for Significantly Faster & Cheaper Retrieval

By aamirshakir March 22, 2024 guest

Unity Speech Recognition

This article serves as a comprehensive guide for adding on-device Speech Recognition to a Unity project.

When used casually, Speech Recognition usually refers solely to Speech-to-Text . However, Speech-to-Text represents only a single facet of Speech Recognition technologies. It also refers to features such as Wake Word Detection , Voice Command Recognition , and Voice Activity Detection ( VAD ). In the context of Unity projects, Speech Recognition can be used to implement a Voice Interface .

Fortunately Picovoice offers a few tools to help implement Voice Interfaces . If all that is needed is to recognize when specific phrases or words are said, use Porcupine Wake Word . If Voice Commands need to be understood and intent extracted with details (i.e. slot values), Rhino Speech-to-Intent is more suitable. Keep reading to see how to quickly start with both of them.

Picovoice Unity SDKs have cross-platform support for Linux , macOS , Windows , Android and iOS !

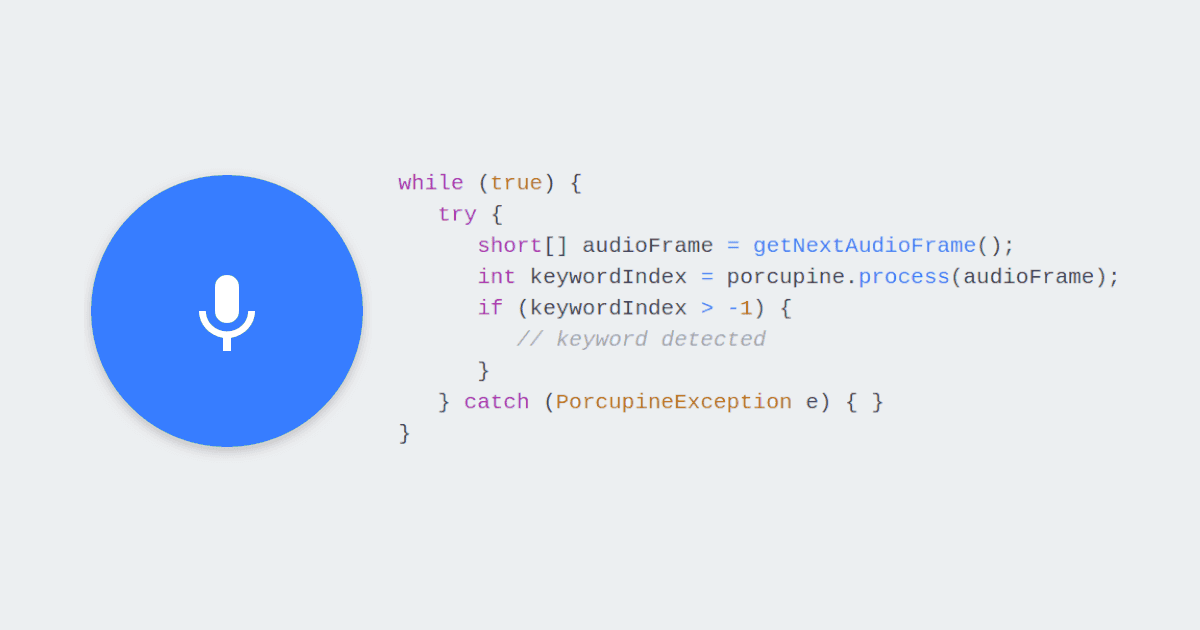

Porcupine Wake Word

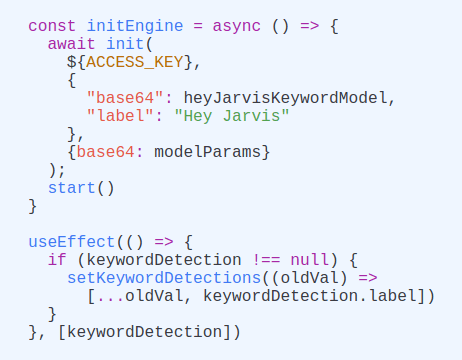

To integrate the Porcupine Wake Word SDK into your Unity project, download and import the latest Porcupine Unity package .

Sign up for a free Picovoice Console account and obtain your AccessKey . The AccessKey is only required for authentication and authorization.

Create a custom wake word model using Picovoice Console.

Download the .ppn model file and copy it into your project's StreamingAssets folder.

Write a callback that takes action when a keyword is detected:

- Initialize the Porcupine Wake Word engine with the callback and the .ppn file name (or path relative to the StreamingAssets folder):

- Start detecting:
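The steps above can be sketched as follows. This assumes the Porcupine Unity package exposes PorcupineManager.FromKeywordPaths, Start, and Delete in the Pv.Unity namespace; the AccessKey placeholder and the file name my_wake_word.ppn are hypothetical and must be replaced with your own values:

```csharp
using System.Collections.Generic;
using Pv.Unity;
using UnityEngine;

public class WakeWordListener : MonoBehaviour
{
    // Placeholder: paste the AccessKey from Picovoice Console.
    private const string AccessKey = "${ACCESS_KEY}";

    private PorcupineManager porcupineManager;

    private void Start()
    {
        try
        {
            // Paths are resolved relative to the StreamingAssets folder.
            var keywordPaths = new List<string> { "my_wake_word.ppn" };
            porcupineManager = PorcupineManager.FromKeywordPaths(
                AccessKey, keywordPaths, OnWakeWordDetected);
            porcupineManager.Start();
        }
        catch (PorcupineException e)
        {
            Debug.LogError($"Porcupine failed to initialize: {e.Message}");
        }
    }

    // keywordIndex is the position of the detected model in keywordPaths.
    private void OnWakeWordDetected(int keywordIndex)
    {
        Debug.Log($"Wake word {keywordIndex} detected");
    }

    private void OnDestroy()
    {
        // Release the microphone and native resources.
        porcupineManager?.Delete();
    }
}
```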

For further details, visit the Porcupine Wake Word product page or refer to Porcupine's Unity SDK quick start guide .

Rhino Speech-to-Intent

To integrate the Rhino Speech-to-Intent SDK into your Unity project, download and import the latest Rhino Unity package .

Create a custom context model using Picovoice Console.

Download the .rhn model file and copy it into your project's StreamingAssets folder.

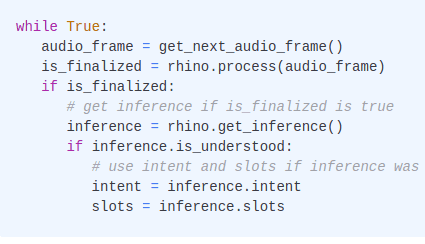

Write a callback that takes action when a user's intent is inferred:

- Initialize the Rhino Speech-to-Intent engine with the callback and the .rhn file name (or path relative to the StreamingAssets folder):

- Start inferring:
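The steps above can be sketched as follows. This assumes the Rhino Unity package exposes RhinoManager.Create, Process, and Delete in the Pv.Unity namespace, and an Inference object with IsUnderstood, Intent, and Slots; the AccessKey placeholder and the file name my_context.rhn are hypothetical:

```csharp
using System.Collections.Generic;
using Pv.Unity;
using UnityEngine;

public class IntentListener : MonoBehaviour
{
    // Placeholder: paste the AccessKey from Picovoice Console.
    private const string AccessKey = "${ACCESS_KEY}";

    private RhinoManager rhinoManager;

    private void Start()
    {
        try
        {
            // The context path is resolved relative to StreamingAssets.
            rhinoManager = RhinoManager.Create(
                AccessKey, "my_context.rhn", OnInferenceResult);
            // Listen until one inference has been made.
            rhinoManager.Process();
        }
        catch (RhinoException e)
        {
            Debug.LogError($"Rhino failed to initialize: {e.Message}");
        }
    }

    private void OnInferenceResult(Inference inference)
    {
        if (!inference.IsUnderstood)
        {
            Debug.Log("Command not understood");
            return;
        }

        Debug.Log($"Intent: {inference.Intent}");
        foreach (KeyValuePair<string, string> slot in inference.Slots)
        {
            Debug.Log($"  {slot.Key} = {slot.Value}");
        }
    }

    private void OnDestroy()
    {
        rhinoManager?.Delete();
    }
}
```

In this sketch Process() is called once, so only a single command is handled; calling it again after each result would keep the listener active.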

For further details, visit the Rhino Speech-to-Intent product page or refer to Rhino's Unity SDK quick start guide.

Subscribe to our newsletter

More from Picovoice

Learn how to perform Speech Recognition in iOS, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Detection.

Learn how to perform Speech Recognition in the web using React, including Voice Commands and Wake Word Detection.

Learn how to perform Speech Recognition in Android, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Detect...

Learn how to perform Speech Recognition in Python, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Detecti...

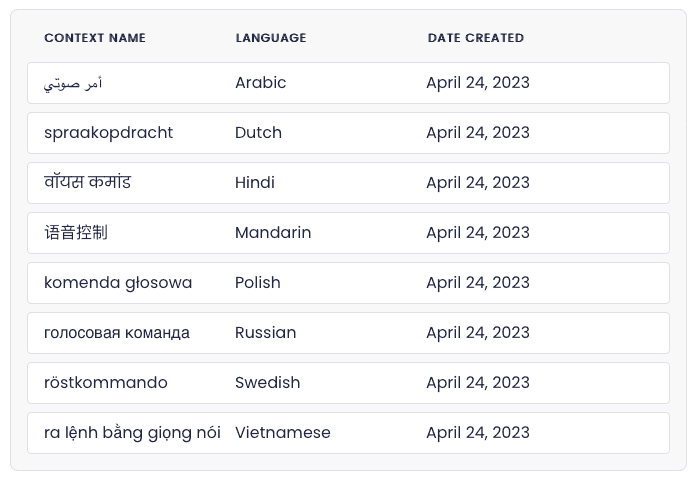

New releases of Porcupine Wake Word and Rhino Speech-to-Intent engines add support for Arabic, Dutch, Farsi, Hindi, Mandarin, Polish, Russia...

Learn how to create offline voice assistants like Alexa or Siri that run fully on-device using an STM32 microcontroller.

Perform keyword spotting on Arm Cortex-M microcontrollers using Picovoice Porcupine Wake Word. Run NLU on MCUs using Picovoice Rhino Speech-...

Learn how to transcribe speech to text on an Android device. Picovoice Leopard and Cheetah Speech-to-Text SDKs run on mobile, desktop, and e...

This browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

Voice input in Unity

Before starting, consider using the Unity plug-in for the Cognitive Speech Services SDK. The plugin has better Speech Accuracy results and easy access to speech-to-text decode, as well as advanced speech features like dialog, intent based interaction, translation, text-to-speech synthesis, and natural language speech recognition. To get started, check out the sample and documentation .

Unity exposes three ways to add Voice input to your Unity application, the first two of which are types of PhraseRecognizer:

- The KeywordRecognizer supplies your app with an array of string commands to listen for

- The GrammarRecognizer gives your app an SRGS file defining a specific grammar to listen for

- The DictationRecognizer lets your app listen for any word and provide the user with a note or other display of their speech

Dictation and phrase recognition can't be handled at the same time. If a GrammarRecognizer or KeywordRecognizer is active, a DictationRecognizer can't be active and vice versa.

Enabling the capability for Voice

The Microphone capability must be declared for an app to use Voice input.

- In the Unity Editor, navigate to Edit > Project Settings > Player

- Select the Windows Store tab

- In the Publishing Settings > Capabilities section, check the Microphone capability

- Grant the app permission to access the microphone. You'll be asked to do this on device startup, but if you accidentally clicked "no" you can change the permissions in the device settings

Phrase Recognition

To enable your app to listen for specific phrases spoken by the user then take some action, you need to:

- Specify which phrases to listen for using a KeywordRecognizer or GrammarRecognizer

- Handle the OnPhraseRecognized event and take action corresponding to the phrase recognized

KeywordRecognizer

Namespace: UnityEngine.Windows.Speech Types: KeywordRecognizer , PhraseRecognizedEventArgs , SpeechError , SpeechSystemStatus

We'll need a few using statements to save some keystrokes:

Then let's add a few fields to your class to store the recognizer and keyword->action dictionary:

Now add a keyword to the dictionary, for example in a Start() method. We're adding the "activate" keyword in this example:

Create the keyword recognizer and tell it what we want to recognize:

Now register for the OnPhraseRecognized event

An example handler is:

Finally, start recognizing!
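Put together, the steps above might look like the following sketch. The class name KeywordExample and the action bound to "activate" are illustrative; the recognizer, event, and argument types come from UnityEngine.Windows.Speech:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using UnityEngine;
using UnityEngine.Windows.Speech;

public class KeywordExample : MonoBehaviour
{
    private KeywordRecognizer keywordRecognizer;

    // Maps each spoken keyword to the action it should trigger.
    private readonly Dictionary<string, Action> keywords =
        new Dictionary<string, Action>();

    private void Start()
    {
        keywords.Add("activate", () => Debug.Log("Activate command received"));

        // Tell the recognizer which phrases to listen for.
        keywordRecognizer = new KeywordRecognizer(keywords.Keys.ToArray());
        keywordRecognizer.OnPhraseRecognized += KeywordRecognizer_OnPhraseRecognized;
        keywordRecognizer.Start();
    }

    private void KeywordRecognizer_OnPhraseRecognized(PhraseRecognizedEventArgs args)
    {
        // args.text holds the recognized keyword; run its action if registered.
        if (keywords.TryGetValue(args.text, out Action keywordAction))
        {
            keywordAction.Invoke();
        }
    }

    private void OnDestroy()
    {
        if (keywordRecognizer == null) return;
        keywordRecognizer.OnPhraseRecognized -= KeywordRecognizer_OnPhraseRecognized;
        keywordRecognizer.Dispose();
    }
}
```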

GrammarRecognizer

Namespace: UnityEngine.Windows.Speech Types : GrammarRecognizer , PhraseRecognizedEventArgs , SpeechError , SpeechSystemStatus

The GrammarRecognizer is used if you're specifying your recognition grammar using SRGS. This can be useful if your app has more than just a few keywords, if you want to recognize more complex phrases, or if you want to easily turn on and off sets of commands. See: Create Grammars Using SRGS XML for file format information.

Once you have your SRGS grammar, and it is in your project in a StreamingAssets folder :

Create a GrammarRecognizer and pass it the path to your SRGS file:

You'll get a callback containing information specified in your SRGS grammar, which you can handle appropriately. Most of the important information will be provided in the semanticMeanings array.
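A minimal sketch of loading a grammar and reading the semantic meanings, assuming a grammar file at the hypothetical location StreamingAssets/SRGS/myGrammar.xml. The class name is illustrative as well:

```csharp
using System.IO;
using UnityEngine;
using UnityEngine.Windows.Speech;

public class GrammarExample : MonoBehaviour
{
    private GrammarRecognizer grammarRecognizer;

    private void Start()
    {
        // Hypothetical file name; point this at your own SRGS grammar.
        string grammarPath = Path.Combine(
            Application.streamingAssetsPath, "SRGS", "myGrammar.xml");

        grammarRecognizer = new GrammarRecognizer(grammarPath);
        grammarRecognizer.OnPhraseRecognized += Grammar_OnPhraseRecognized;
        grammarRecognizer.Start();
    }

    private void Grammar_OnPhraseRecognized(PhraseRecognizedEventArgs args)
    {
        Debug.Log($"Recognized: {args.text}");

        // Semantic meanings are the key/value tags defined in the grammar.
        SemanticMeaning[] meanings = args.semanticMeanings;
        if (meanings == null) return;

        foreach (SemanticMeaning meaning in meanings)
        {
            Debug.Log($"  {meaning.key}: {string.Join(", ", meaning.values)}");
        }
    }

    private void OnDestroy()
    {
        if (grammarRecognizer == null) return;
        grammarRecognizer.OnPhraseRecognized -= Grammar_OnPhraseRecognized;
        grammarRecognizer.Dispose();
    }
}
```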

Dictation

Namespace: UnityEngine.Windows.Speech Types: DictationRecognizer, SpeechError, SpeechSystemStatus

Use the DictationRecognizer to convert the user's speech to text. The DictationRecognizer exposes dictation functionality and supports registering and listening for hypothesis and phrase completed events, so you can give feedback to your user both while they speak and afterwards. Start() and Stop() methods respectively enable and disable dictation recognition. Once done with the recognizer, it should be disposed using Dispose() to release the resources it uses. It will release these resources automatically during garbage collection at an extra performance cost if they aren't released before that.

There are only a few steps needed to get started with dictation:

- Create a new DictationRecognizer

- Handle Dictation events

- Start the DictationRecognizer

Enabling the capability for dictation

The Internet Client and Microphone capabilities must be declared for an app to use dictation:

- In the Unity Editor, go to Edit > Project Settings > Player

- Select the Windows Store tab

- In the Publishing Settings > Capabilities section, check the InternetClient capability

- Optionally, if you didn't already enable the microphone, check the Microphone capability

DictationRecognizer

Create a DictationRecognizer like so:

There are four dictation events that can be subscribed to and handled to implement dictation behavior.

- DictationResult

- DictationHypothesis

- DictationComplete

- DictationError

DictationResult

This event is fired after the user pauses, typically at the end of a sentence. The full recognized string is returned here.

First, subscribe to the DictationResult event:

Then handle the DictationResult callback:

DictationHypothesis

This event is fired continuously while the user is talking. As the recognizer listens, it provides text of what it's heard so far.

First, subscribe to the DictationHypothesis event:

Then handle the DictationHypothesis callback:

DictationComplete

This event is fired when the recognizer stops, whether from Stop() being called, a timeout occurring, or some other error.

First, subscribe to the DictationComplete event:

Then handle the DictationComplete callback:

DictationError

This event is fired when an error occurs.

First, subscribe to the DictationError event:

Then handle the DictationError callback:

Once you've subscribed and handled the dictation events that you care about, start the dictation recognizer to begin receiving events.

If you no longer want to keep the DictationRecognizer around, you need to unsubscribe from the events and Dispose the DictationRecognizer.

- Start() and Stop() methods respectively enable and disable dictation recognition.

- Once done with the recognizer, it must be disposed using Dispose() to release the resources it uses. It will release these resources automatically during garbage collection at an extra performance cost if they aren't released before that.

- If the recognizer starts and doesn't hear any audio for the first five seconds, it will time out.

- If the recognizer has given a result, but then hears silence for 20 seconds, it will time out.
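The whole dictation lifecycle described above (create, subscribe, start, then unsubscribe and dispose) can be sketched as one component. The class name, the handler names, and the StringBuilder used to accumulate text are illustrative choices:

```csharp
using System.Text;
using UnityEngine;
using UnityEngine.Windows.Speech;

public class DictationExample : MonoBehaviour
{
    private DictationRecognizer dictationRecognizer;

    // Accumulates every finished phrase.
    private readonly StringBuilder transcript = new StringBuilder();

    private void OnEnable()
    {
        dictationRecognizer = new DictationRecognizer();
        dictationRecognizer.DictationResult += OnDictationResult;
        dictationRecognizer.DictationHypothesis += OnDictationHypothesis;
        dictationRecognizer.DictationComplete += OnDictationComplete;
        dictationRecognizer.DictationError += OnDictationError;
        dictationRecognizer.Start();
    }

    private void OnDictationResult(string text, ConfidenceLevel confidence)
    {
        transcript.AppendLine(text);
        Debug.Log($"Result ({confidence}): {text}");
    }

    private void OnDictationHypothesis(string text)
    {
        Debug.Log($"Hearing: {text}");
    }

    private void OnDictationComplete(DictationCompletionCause cause)
    {
        // Anything other than Complete means a timeout or a failure.
        if (cause != DictationCompletionCause.Complete)
        {
            Debug.LogWarning($"Dictation stopped: {cause}");
        }
    }

    private void OnDictationError(string error, int hresult)
    {
        Debug.LogError($"Dictation error: {error} (HRESULT {hresult})");
    }

    private void OnDisable()
    {
        if (dictationRecognizer == null) return;

        // Unsubscribe first, then release the native resources.
        dictationRecognizer.DictationResult -= OnDictationResult;
        dictationRecognizer.DictationHypothesis -= OnDictationHypothesis;
        dictationRecognizer.DictationComplete -= OnDictationComplete;
        dictationRecognizer.DictationError -= OnDictationError;
        dictationRecognizer.Dispose();
        dictationRecognizer = null;
    }
}
```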

Using both Phrase Recognition and Dictation

If you want to use both phrase recognition and dictation in your app, you'll need to fully shut one down before you can start the other. If you have multiple KeywordRecognizers running, you can shut them all down at once with:

You can call Restart() to restore all recognizers to their previous state after the DictationRecognizer has stopped:

You could also just start a KeywordRecognizer, which will restart the PhraseRecognitionSystem as well.
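A sketch of switching between the two modes, assuming PhraseRecognitionSystem.Shutdown() and PhraseRecognitionSystem.Restart() from UnityEngine.Windows.Speech. The class and method names (SpeechModeSwitcher, BeginDictation, EndDictation) are hypothetical, and restarting from the DictationComplete handler is one possible design rather than a requirement:

```csharp
using UnityEngine;
using UnityEngine.Windows.Speech;

public class SpeechModeSwitcher : MonoBehaviour
{
    private DictationRecognizer dictationRecognizer;

    // Pause all keyword/grammar recognizers, then start dictation.
    public void BeginDictation()
    {
        if (PhraseRecognitionSystem.Status == SpeechSystemStatus.Running)
        {
            PhraseRecognitionSystem.Shutdown();
        }

        if (dictationRecognizer == null)
        {
            dictationRecognizer = new DictationRecognizer();
            dictationRecognizer.DictationResult +=
                (text, confidence) => Debug.Log($"Dictated: {text}");
            dictationRecognizer.DictationComplete += OnDictationComplete;
        }

        dictationRecognizer.Start();
    }

    // Ask dictation to stop; phrase recognition resumes once it has.
    public void EndDictation()
    {
        if (dictationRecognizer != null &&
            dictationRecognizer.Status == SpeechSystemStatus.Running)
        {
            dictationRecognizer.Stop();
        }
    }

    private void OnDictationComplete(DictationCompletionCause cause)
    {
        // Dictation has stopped, so the phrase recognizers can come back.
        PhraseRecognitionSystem.Restart();
    }

    private void OnDestroy()
    {
        if (dictationRecognizer == null) return;
        dictationRecognizer.DictationComplete -= OnDictationComplete;
        dictationRecognizer.Dispose();
    }
}
```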

Voice input in Mixed Reality Toolkit

You can find MRTK examples for voice input in the following demo scenes:

Next Development Checkpoint

If you're following the Unity development checkpoint journey we've laid out, your next task is exploring the Mixed Reality platform capabilities and APIs:

Shared experiences

You can always go back to the Unity development checkpoints at any time.

DEV Community

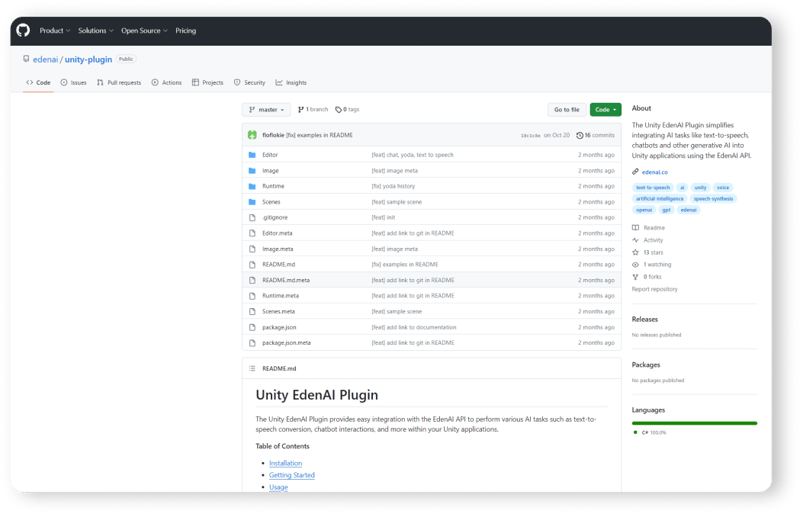

Posted on Jan 31 • Originally published at edenai.co

How to use Text-to-Speech in Unity

Enhance your Unity game by integrating artificial intelligence capabilities. This Unity AI tutorial will walk you through the process of using the Eden AI Unity Plugin, covering key steps from installation to implementing various AI models.

What is Unity?

Established in 2004, Unity is a gaming company offering a powerful game development engine that empowers developers to create immersive games across various platforms, including mobile devices, consoles, and PCs.

If you're aiming to elevate your gameplay, Unity allows you to integrate artificial intelligence (AI), enabling intelligent behaviors, decision-making, and advanced functionalities in your games or applications.

Unity offers multiple paths for AI integration. Notably, the Unity Eden AI Plugin effortlessly syncs with the Eden AI API, enabling easy integration of AI tasks like text-to-speech conversion within your Unity applications.

Benefits of integrating Text to Speech into video game development

Integrating Text-to-Speech (TTS) into video game development offers a range of benefits, enhancing both the gaming experience and the overall development process:

1. Immersive Player Interaction

TTS enables characters in the game to speak, providing a more immersive and realistic interaction between players and non-player characters (NPCs).

2. Accessibility for Diverse Audiences

TTS can be utilized to cater to a diverse global audience by translating in-game text into spoken words, making the gaming experience more accessible for players with varying linguistic backgrounds.

3. Customizable Player Experience

Developers can use TTS to create personalized and adaptive gaming experiences, allowing characters to respond dynamically to player actions and choices.

4. Innovative Gameplay Mechanics

Game developers can introduce innovative gameplay mechanics by incorporating voice commands, allowing players to control in-game actions using spoken words, leading to a more interactive gaming experience.

5. Adaptive NPC Behavior

NPCs with TTS capabilities can exhibit more sophisticated and human-like behaviors, responding intelligently to player actions and creating a more challenging and exciting gaming environment.

6. Multi-Modal Gaming Experiences

TTS opens the door to multi-modal gaming experiences, combining visual elements with spoken dialogues, which can be especially beneficial for players who prefer or require alternative communication methods.

Integrating TTS into video games enhances the overall gameplay, contributing to a more inclusive, dynamic, and enjoyable gaming experience for players.

Use cases of Video Game Text-to-Speech Integration

Text-to-Speech (TTS) integration in video games introduces various use cases, enhancing player engagement, accessibility, and overall gaming experiences. Here are several applications of TTS in the context of video games:

Quest Guidance

TTS can guide players through quests by providing spoken instructions, hints, or clues, offering an additional layer of assistance in navigating game objectives.

Interactive Conversations

Enable players to engage in interactive conversations with NPCs through TTS, allowing for more realistic and dynamic exchanges within the game world.

Accessibility for Visually Impaired Players

TTS aids visually impaired players by converting in-game text into spoken words, providing crucial information about game elements, menus, and story developments.

Character AI Interaction

TTS can enhance interactions with AI-driven characters by allowing them to vocally respond to player queries, creating a more realistic and immersive gaming environment.

Interactive Learning Games

In educational or serious games, TTS can assist in delivering instructional content, quizzes, or interactive learning experiences, making the gameplay educational and engaging.

Procedural Content Generation

TTS can contribute to procedural content generation by dynamically narrating events, backstory, or lore within the game, adding depth and context to the gaming world.

Integrating TTS into video games offers a versatile set of applications that go beyond traditional text presentation, providing new dimensions of interactivity, accessibility, and storytelling.

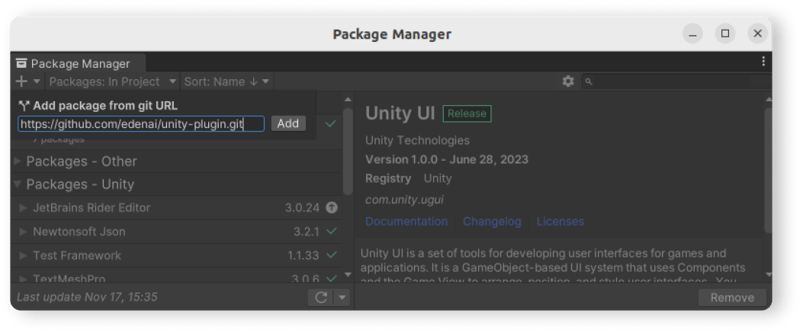

How to integrate TTS into your video game with Unity

Step 1. Install the Eden AI Unity Plugin

Ensure that you have a Unity project open and ready for integration. If you haven't installed the Eden AI plugin, follow these steps:

- Open your Unity Package Manager

- Add package from GitHub

Step 2. Obtain your Eden AI API Key

To get started with the Eden AI API, you need to sign up for an account on the Eden AI platform.

Once registered, you will get an API key which you will need to use the Eden AI Unity Plugin. You can set it in your script or add a file auth.json to your user folder (path: ~/.edenai (Linux/Mac) or %USERPROFILE%/.edenai/ (Windows)) as follows:

Alternatively, you can pass the API key as a parameter when creating an instance of the EdenAIApi class. If the API key is not provided, it will attempt to read it from the auth.json file in your user folder.

Step 3. Integrate Text-to-Speech on Unity

Bring vitality to your non-player characters (NPCs) by empowering them to vocalize through the implementation of text-to-speech functionality.

Leveraging the Eden AI plugin, you can seamlessly integrate a variety of services, including Google Cloud, OpenAI, AWS, IBM Watson, LovoAI, Microsoft Azure, and ElevenLabs text-to-speech providers, into your Unity project (refer to the complete list here).

This capability allows you to tailor the voice model, language, and audio format to align with the desired atmosphere of your game.

Open your script file where you want to implement the text-to-speech functionality.

Import the required namespaces at the beginning of your script:

- Create an instance of the Eden AI API class:

- Implement the SendTextToSpeechRequest function with the necessary parameters:
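The steps above can be sketched as follows. EdenAIApi, SendTextToSpeechRequest, and TextToSpeechResponse are named in this article; the EdenAI namespace, the TextToSpeechOption enum, the argument order, and the sample values ("amazon", "mp3", "en") are assumptions to verify against the plugin's own documentation:

```csharp
using EdenAI;
using UnityEngine;

public class NpcVoice : MonoBehaviour
{
    private async void Start()
    {
        // With no key passed in, the plugin is expected to read auth.json.
        EdenAIApi edenAI = new EdenAIApi();

        // Assumed parameters: provider, text, audio format, voice option, language.
        string provider = "amazon";
        string text = "Hello, traveler!";
        string audioFormat = "mp3";
        TextToSpeechOption option = TextToSpeechOption.FEMALE;
        string language = "en";

        TextToSpeechResponse response = await edenAI.SendTextToSpeechRequest(
            provider, text, audioFormat, option, language);

        if (response != null)
        {
            Debug.Log("Text-to-speech response received");
        }
    }
}
```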

Step 4: Handle the Text-to-Speech Response

The SendTextToSpeechRequest function returns a TextToSpeechResponse object.

Access the response attributes as needed. For example:

Step 5: Customize Parameters (Optional)

The SendTextToSpeechRequest function allows you to customize various parameters:

- Rate: Adjust speaking rate.

- Pitch: Modify speaking pitch.

- Volume: Control audio volume.

- VoiceModel: Specify a specific voice model.

- Include these optional parameters based on your preferences.

Step 6: Test and Debug

Run your Unity project and test the text-to-speech functionality. Monitor the console for any potential errors or exceptions, and make adjustments as necessary.

Now, your Unity project is equipped with text-to-speech functionality using the Eden AI plugin. Customize the parameters to suit your game's atmosphere, and enhance the immersive experience for your players.

TTS integration enhances immersion and opens doors for diverse gameplay experiences. Feel free to experiment with optional parameters for further fine-tuning. Explore additional AI functionalities offered by Eden AI to elevate your game development here.

About Eden AI

Eden AI is the future of AI usage in companies: our app allows you to call multiple AI APIs.

- Centralized and fully monitored billing

- Unified API: quick switch between AI models and providers

- Standardized response format: the JSON output format is the same for all suppliers.

- The best Artificial Intelligence APIs in the market are available

- Data protection: Eden AI will not store or use any data.

Scripting API

DictationRecognizer

class in UnityEngine.Windows.Speech

Implemented in: UnityEngine.CoreModule


Description

DictationRecognizer listens to speech input and attempts to determine what phrase was uttered.

Users can register and listen for hypothesis and phrase completed events. The Start() and Stop() methods respectively enable and disable dictation recognition. Once done with the recognizer, it must be disposed using the Dispose() method to release the resources it uses. If they are not released before then, these resources are released automatically during garbage collection, at an additional performance cost.

Dictation recognizer is currently functional only on Windows 10, and requires that dictation is permitted in the user's Speech privacy policy (Settings->Privacy->Speech, inking & typing). If dictation is not enabled, DictationRecognizer will fail on Start. Developers can handle this failure in an app-specific way by providing a DictationError delegate and testing for SPERR_SPEECH_PRIVACY_POLICY_NOT_ACCEPTED (0x80045509).
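The lifecycle described above (register events, Start, Stop, Dispose, and the privacy-policy check) can be sketched as a small component. This is a minimal sketch rather than an official sample: the class name `DictationExample` and the log messages are assumptions made for illustration, and the code only runs inside Unity on a Windows 10 device.

```csharp
using UnityEngine;
using UnityEngine.Windows.Speech;

// Hypothetical example component; attach it to any GameObject in the scene.
public class DictationExample : MonoBehaviour
{
    // HRESULT named in the description above. The literal does not fit in a
    // signed int, so it is converted with an unchecked cast.
    private const int SpeechPrivacyPolicyNotAccepted = unchecked((int)0x80045509);

    private DictationRecognizer recognizer;

    private void Start()
    {
        recognizer = new DictationRecognizer();

        // Hypothesis events fire while the user is still speaking.
        recognizer.DictationHypothesis += text => Debug.Log("Hypothesis: " + text);

        // Result events fire once a phrase has been recognized.
        recognizer.DictationResult += (text, confidence) =>
            Debug.Log("Result (" + confidence + "): " + text);

        recognizer.DictationComplete += cause => Debug.Log("Dictation completed: " + cause);
        recognizer.DictationError += OnDictationError;

        recognizer.Start();
    }

    private void OnDictationError(string error, int hresult)
    {
        if (hresult == SpeechPrivacyPolicyNotAccepted)
        {
            Debug.LogWarning("Dictation is disabled in the Speech privacy settings.");
        }
        else
        {
            Debug.LogError("Dictation error: " + error);
        }
    }

    private void OnDestroy()
    {
        if (recognizer == null) return;

        // Stop a running session, then release native resources explicitly
        // instead of waiting for garbage collection.
        if (recognizer.Status == SpeechSystemStatus.Running)
        {
            recognizer.Stop();
        }
        recognizer.Dispose();
    }
}
```

Disposing in `OnDestroy` ties the recognizer's lifetime to the component, which avoids the garbage-collection cost mentioned above.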


Introducing the Unity Text-to-Speech Plugin from ReadSpeaker

Having trouble adding synthetic speech to your next video game release? Try the Unity text-to-speech plugin from ReadSpeaker AI.


As a game developer, how will you use text to speech (TTS)?

We’ve only begun to discover what this tool can do in the hands of creators. What we do know is that TTS can solve tough development problems, that it’s a cornerstone of accessibility, and that it’s a key component of dynamic AI-enhanced characters: NPCs that carry on original conversations with players.

There have traditionally been a few technical roadblocks between TTS and the game studio: Devs find it cumbersome to create and import TTS sound files through an external TTS engine. Some TTS speech labors under perceptible latency, making it unsuitable for in-game audio. And an unintegrated TTS engine creates a whole new layer of project management, threatening already drum-tight production schedules.

What devs need is a latency-free TTS tool they can use independently, without leaving the game engine—and that’s exactly what you get with ReadSpeaker AI’s Unity text-to-speech plugin.

Want to include dynamic, runtime synthetic speech in your next project? Contact ReadSpeaker AI to try the Unity text-to-speech plugin.

ReadSpeaker AI’s Unity Text-to-Speech Plugin

ReadSpeaker AI offers a market-ready TTS plugin for Unity and Unreal Engine, and will work with studios to provide APIs for other game engines. For now, though, we’ll confine our discussion to Unity, which claims nearly 65% of the game development engine market. ReadSpeaker AI’s TTS plugin is an easy-to-install tool that allows devs to create and manipulate synthetic speech directly in Unity: no file management, no swapping between interfaces, and a deep library of rich, lifelike TTS voices. ReadSpeaker AI uses deep neural networks (DNN) to create AI-powered TTS voices of the highest quality, complete with industry-leading pronunciation thanks to custom pronunciation dictionaries and linguist support.

With this neural TTS at their fingertips, developers can improve the game development process—and the player’s experience—limited only by their creativity. So far, we’ve identified four powerful uses for a TTS game engine plugin. These include:

- User interface (UI) narration for accessibility. User interface narration is an accessibility feature that remediates barriers for players with vision impairments and other disabilities; TTS makes it easy to implement. Even before ReadSpeaker AI released the Unity plugin, The Last of Us Part 2 (released in 2020) used ReadSpeaker TTS for its UI narration feature. A triple-A studio like Naughty Dog can take the time to generate TTS files outside the game engine; those files were ultimately shipped on the game disc. That solution might not work ideally for digital games or independent studios, but a TTS game engine plugin will.

- Prototyping dialogue at early stages of development. Don’t wait until you’ve got a voice actor in the studio to find out your script doesn’t flow perfectly. The Unity TTS plugin allows developers to draft scenes within the engine, tweaking lines and pacing to get the plan perfect before the recording studio’s clock starts running.

- Instant audio narration for in-game text chat. Unity speech synthesis from ReadSpeaker AI renders audio instantly at runtime, through a speech engine embedded in the game files, so it’s ideal for narrating chat messages instantly. This is another powerful accessibility tool—one that’s now required for online multiplayer games in the U.S., according to the 21st Century Communications and Video Accessibility Act (CVAA). But it’s also great for players who simply prefer to listen rather than read in the heat of action.

- Lifelike speech for AI NPCs and procedurally generated text. Natural language processing allows software to understand human speech and create original, relevant responses. Only TTS can make these conversational voicebots—which is essentially what AI NPCs are—speak out loud. Besides, AI NPCs are just one use of procedurally generated speech in video games. What are the others? You decide. Game designers are artists, and dynamic, runtime TTS from ReadSpeaker AI is a whole new palette.

Text to Speech vs. Human Voice Actors for Video Game Characters

Note that our list of use cases for TTS in game development doesn’t include replacing voice talent for in-game character voices, other than AI NPCs that generate dialogue in real time. Voice actors remain the gold standard for character speech, and that’s not likely to change any time soon. In fact, every great neural TTS voice starts with a great voice actor; they provide the training data that allows the DNN technology to produce lifelike speech, with contracts that ensure fair, ethical treatment for all parties. So while there’s certainly a place for TTS in character voices, it is not a replacement for human talent. Instead, think of TTS as a tool for development, accessibility, and the growing role of AI in gaming.

ReadSpeaker AI brings more than 20 years of experience in TTS, with a focus on performance. That expertise helped us develop an embedded TTS engine that renders audio on the player’s machine, eliminating latency. We also offer more than 90 top-quality voices in over 30 languages, plus SSML support so you can control expression precisely. These capabilities set ReadSpeaker AI apart from the crowd. Curious? Keep reading for a real-world example.

ReadSpeaker AI Speech Synthesis in Action

Soft Leaf Studios used ReadSpeaker AI’s Unity text-to-speech plugin for scene prototyping and for UI and story narration in its highly accessible game Stories of Blossom, which was in development at publication time.

“Without a TTS plugin like this, we would be left guessing what audio samples we would need to generate, and how they would play back,” Conor Bradley, Stories of Blossom lead developer, told ReadSpeaker AI. “The plugin allows us to experiment without the need to lock our decisions, which is a very powerful tool to have the privilege to use.”

This example raises the question every game developer will soon be asking themselves, a variation on the question we started with: What could a Unity text-to-speech plugin do for your next release? Reach out to start the conversation.


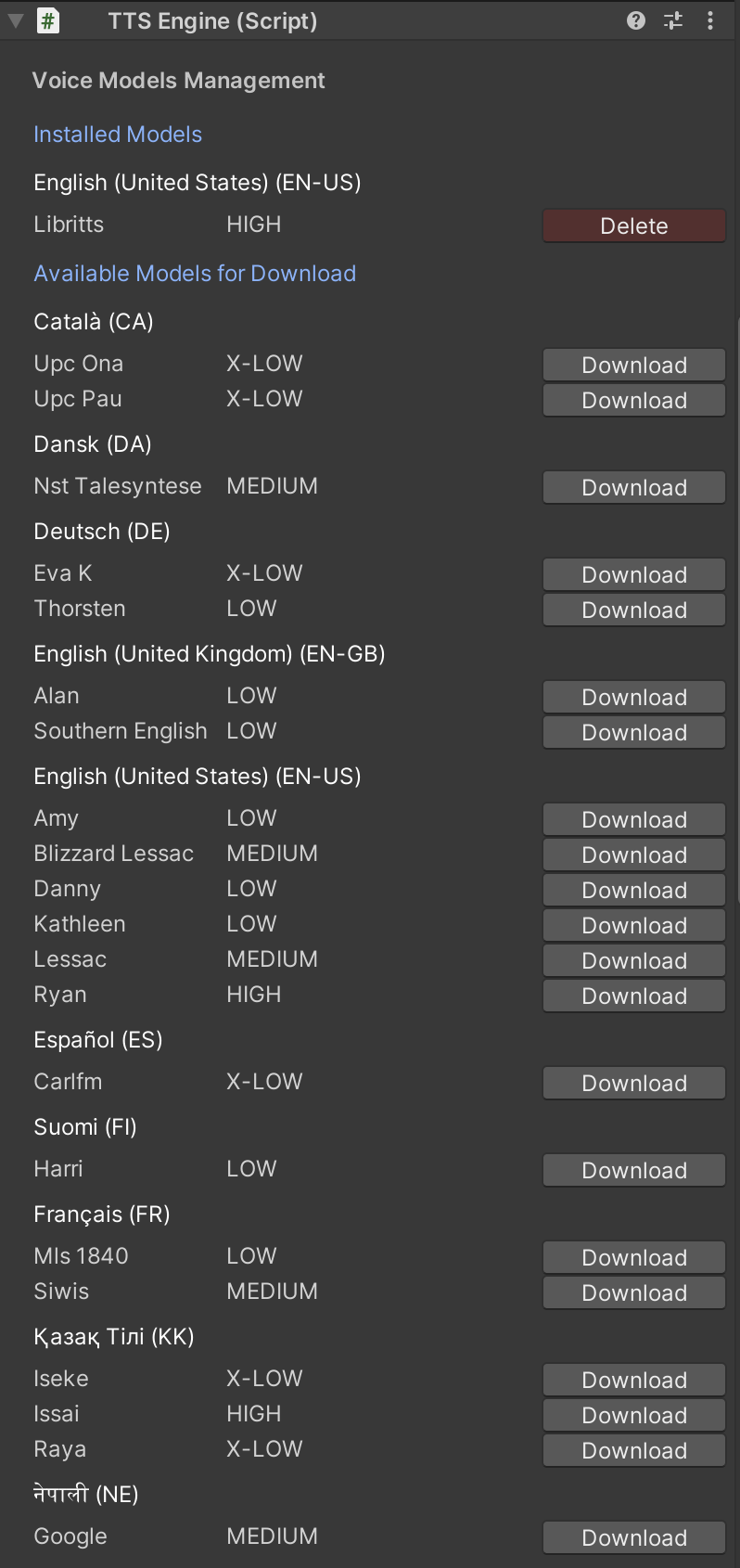

Overtone - Realistic AI Offline Text to Speech (TTS)

Overtone is an offline Text-to-Speech asset for Unity. Enrich your game with 15+ languages, 900+ English voices, rapid performance, and cross-platform support.

Getting Started

Welcome to the Overtone documentation! In this section, we’ll walk you through the initial steps to start using the tools. We will explain the various features of Overtone, how to set it up, and provide guidance on using the different models for text to speech.

Overtone provides a versatile text-to-speech solution, supporting over 15 languages to cater to a diverse user base. It is important to note that the quality of each model varies, which in turn affects the voice output. Overtone offers four quality variations: X-LOW, LOW, MEDIUM, and HIGH, allowing users to choose the one that best fits their needs.

The plugin includes a default English-only model, called LibriTTS, which boasts a selection of more than 900 distinct voices, readily available for use. As lower quality models are faster to process, they are particularly well-suited for mobile devices, where speed and efficiency are crucial.

How to download models

The TTSVoice component provides a convenient interface for downloading the models with just a click. Alternatively, you can open the window from Window > Overtone > Download Manager.

The plugin contains a demo that demonstrates the functionality: Text to speech. You can input text, select a downloaded voice in the TTSVoice component, and listen to it.

The TTSEngine class loads the model and sets it up in memory. It should be added to every scene in which Overtone will be used. It exposes one method, Speak, which receives a string and a TTSVoice and returns an AudioClip.

Example programmatic usage:

This script loads a voice model and frees it when necessary. It also allows the user to select the speaker id to use in the voice model.

Script Reference for TTSVoice.cs

TTSPlayer.cs is a script that combines a TTSVoice and a TTSEngine to synthesize speech from text.

Script Reference for TTSPlayer.cs

SSMLPreprocessor

SSMLPreprocessor.cs is a static class that offers limited SSML (Speech Synthesis Markup Language) support for Overtone. Currently, this class supports preprocessing for the <break> tag.

Speech Synthesis Markup Language (SSML) is an XML-based markup language that provides a standard way to control various aspects of synthesized speech output, including pronunciation, volume, pitch, and speed.

While we plan to add partial SSML support in future updates, for now, the SSMLPreprocessor class only recognizes the <break> tag.

The <break> tag allows you to add a pause in the synthesized speech output.
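To make the idea of preprocessing a `<break>` tag concrete, here is a minimal sketch in plain C#. It is not Overtone's SSMLPreprocessor: the `BreakSplitter` class, its `Split` method, and the decision to return text segments paired with a pause length are all assumptions made for illustration. It only handles self-closing tags of the form `<break time="500ms"/>` or `<break time="1.5s"/>`.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text.RegularExpressions;

// Hypothetical helper, not part of Overtone.
public static class BreakSplitter
{
    // Matches <break time="500ms"/> or <break time="1.5s"/>.
    private static readonly Regex BreakTag = new Regex(
        "<break\\s+time\\s*=\\s*\"(\\d+(?:\\.\\d+)?)(ms|s)\"\\s*/>",
        RegexOptions.IgnoreCase);

    // Splits the input at every break tag. Each segment carries the pause
    // (in milliseconds) that should follow it; the last segment has no pause.
    public static List<(string Text, int PauseMs)> Split(string input)
    {
        var segments = new List<(string Text, int PauseMs)>();
        int cursor = 0;

        foreach (Match match in BreakTag.Matches(input))
        {
            string text = input.Substring(cursor, match.Index - cursor).Trim();
            double value = double.Parse(match.Groups[1].Value, CultureInfo.InvariantCulture);
            bool isSeconds = match.Groups[2].Value.Equals("s", StringComparison.OrdinalIgnoreCase);
            int pauseMs = (int)Math.Round(isSeconds ? value * 1000 : value);

            segments.Add((text, pauseMs));
            cursor = match.Index + match.Length;
        }

        // Remaining text after the last break tag, if any.
        string tail = input.Substring(cursor).Trim();
        if (tail.Length > 0)
        {
            segments.Add((tail, 0));
        }
        return segments;
    }
}
```

Each segment could then be synthesized separately, with silence of the given length inserted between the resulting clips.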

Supported Platforms

Overtone supports the following platforms:

If interested in any other platforms, please reach out.

Supported Languages

Troubleshooting

For any questions, issues or feature requests don’t hesitate to email us at [email protected] or join the discord. We are happy to help and aim to have very fast response times :)

We are a small company focused on building tools for game developers. Send us an email to [email protected] if interested in working with us. For any other inquiries, feel free to contact us at [email protected] or contact us on the discord


RT-Voice - Run-time text-to-speech solution

Discussion in ' Assets and Asset Store ' started by Stefan-Laubenberger , Jul 10, 2015 .


Stefan-Laubenberger

RT-Voice

Have you ever wanted to make a game for people with visual impairment or who have difficulties reading? Do you have lazy players who don't like to read too much? Or do you even want to test your game's voice dialogues without having to pay a voice actor yet? With RT-Voice this is very easily done – it's a major time saver! RT-Voice uses the computer's (already implemented) TTS (text-to-speech) voices to turn the written lines into speech and dialogue at run-time! Therefore, all text in your game/app can be spoken out loud to the player. And all of this without any intermediate steps: The transformation is instantaneous and simultaneous (if needed)!

Features:

Convert text to voice

- Instant conversion from text to speech - generated during runtime!
- Side effect: the continuous audio generation saves a lot of memory
- No need for voice actors during the testing phase of your game
- Several voices at once are possible (e.g. for scenes in a public place, where many people are talking at the same time)
- Fine tuning for your voices with speed, pitch and volume
- Support for SSML and EmotionML
- Current word, visemes and phonemes on Windows and iOS - including marker functions
- Generated audio can be stored in files reusable within Unity
- 1-infinite synchronized speakers for a single AudioSource
- Simple sequence and dialogue system
- No performance drops
- Components to speak UI-elements, like Text and InputField
- Enables access to more than 1'000 different voices

Documentation & control

- Test all voices within the editor
- Powerful API for maximum control
- Detailed demo scenes
- Comprehensive documentation and support
- Full source code (including libraries)

Compatibility

- Supports all build platforms
- Native providers for Windows, macOS, Android, iOS and XBox
- Compatible with: AWS Polly, Azure (Bing Speech), MaryTTS, eSpeak and eSpeak-NG, Klattersynth, WebGL Speech Synthesis, Google Cloud Text-To-Speech, Mimic
- Works with Windows, Mac and Linux editors
- Compatible with Unity 2019.4 – 2023
- Supports AR and VR
- C# delegates and Unity events
- Works with Online Check
- PlayMaker actions

Integrations: Adventure Creator, Amplitude, Cinema Director, Dialogue System, Google Cloud Speech, Klattersynth, LipSync Pro, Localized Dialogs, Naninovel, NPC Chat, Online Check, PlayMaker, Quest System Pro, SALSA, SLATE, Volumetric Audio, WebGL Speech Synthesis

AssetStore: RT-Voice PRO, All Tools Bundle, Our other assets

Demos: WebGL, Windows, Mac, Linux, Android

Feel free to download and test it. Any constructive comments are very welcome!

Cheers
Stefan

Sounds promising! Tagged and looking forward to it.

Interesting idea. Might be useful, will be fun. Just wondering, what happens if user has disabled text to speech system?

In this case, no voices are found and "RT-Voice" can't speak the text. You can catch this case and tell your users to enable TTS on their system. No further complications will occur - you simply don't hear the text. I hope this helps!
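Catching the "no voices" case could look roughly like the sketch below. It is an illustration, not code from the asset's documentation: the class name `VoiceAvailabilityCheck` and the messages are made up, and it assumes that `Speaker.Voices()` can be enumerated and `Speaker.Speak()` called as in the examples posted in this thread, with the "RTVoice"-prefab already in the scene.

```csharp
using UnityEngine;
using Crosstales.RTVoice;

// Hypothetical helper component, not part of RT-Voice.
public class VoiceAvailabilityCheck : MonoBehaviour
{
    void Start()
    {
        // Count the voices reported by the OS; only enumeration is assumed here.
        int voiceCount = 0;
        foreach (Voice voice in Speaker.Voices())
        {
            voiceCount++;
        }

        if (voiceCount == 0)
        {
            // No TTS voices found: tell the user instead of failing silently.
            Debug.LogWarning("No TTS voices found. Please enable text-to-speech on your system.");
        }
        else
        {
            Speaker.Speak("Text-to-speech is available.");
        }
    }
}
```

In a real game the warning would more likely be shown in the UI than written to the console.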

Already have.

DigitalAdam

Looks good! I noticed in your screen shot above that you have additional voices to choose from. Is that because the user installed additional voices on their PC? If so, where would I find those? I'm looking for something more natural. Any plans to also integrate this with Windows 10 and Cortana? Also on your website your "Features" and "More Features" links don't load the modal popup.

Hi sicga! Thank you for buying our asset! If you have any suggestions/questions, just contact us. Cheers Stefan

Hi Adamz, thank you for your interest in our asset! RT-Voice can currently only use the installed voices on a system. Unfortunately, there are only a few voices available under Microsoft Windows 8.1: 2-3 female and 1 male voice (English). There are (female) voices for all major languages available, but it depends on the regional settings in Windows: https://en.wikipedia.org/wiki/Microsoft_text-to-speech_voices I can't do much about it, sorry. On a Mac, there are like 50 voices for various languages installed. They are much better imho and some are really fun.

About Windows10/Cortana: probably ;-) No, seriously, atm we still use Windows 8.1 and we will wait some time until the biggest bugs are fixed... But I will take a look in my VM and think about it (without promising anything!).

About the website: thank you for the info, but I already knew it. We had a bit too much work so this wasn't fixed until now. :-(

Cheers
Stefan

Is there a way to have it "speak" without using an input field? I just want to use a Text UI element. Do I need to change something in the SpeakWrapper.cs script?

Hi Adamz! I wrote you an email - I hope it helps. Here is the solution for you and other users: After adding the "RTVoice"-prefab to your scene, create an empty gameobject and add the following script to this new object.

Code (CSharp):

    using UnityEngine;
    using System.Collections;
    using Crosstales.RTVoice;

    public class RTVGame : MonoBehaviour
    {
        void Start()
        {
            // Schedule the three calls relative to scene start.
            Invoke("SayHello", 2f);
            Invoke("Talk", 6f);
            Invoke("Silence", 9f);
        }

        public void SayHello()
        {
            Speaker.Speak("Hello dear user! How are you?");
        }

        public void Talk()
        {
            Speaker.Speak("Lorem ipsum dolor sit amet, consetetur sadipscing elitr, sed diam nonumy eirmod tempor invidunt ut labore et dolore magna aliquyam erat, sed diam voluptua.");
        }

        public void Silence()
        {
            Speaker.Silence();
        }
    }

Then hit "run". The script does this:

- After 2 seconds, it speaks "Hello dear user! How are you?" with the OS-default voice (if your OS isn't "English" it would probably sound a bit strange).
- After 6 seconds, a Lorem ipsum sentence is played.
- After 9 seconds, RT-Voice should be quiet.

Hopefully, this explains the basic usage - you can use your own way of letting "RT-Voice" speak (e.g. by commenting out the "Start"-method and then calling "SayHello()" from a button click or use the text of an input field). If you want a list of all available voices, use "Speaker.Voices()" -> for more API-calls and examples, please see the documentation (starts at page 6).

Cheers
Stefan


This isn't an error - just a warning. The reason for this: The method "Speaker.Speak()" has two parameters - the first is the text and the second is the OS "voice", which is optional but results in your described warning. Here is an updated example:

Code (CSharp):

    using UnityEngine;
    using System.Collections;
    using Crosstales.RTVoice;

    public class RTVGame : MonoBehaviour
    {
        void Start()
        {
            Invoke("SayHello", 2f);
            Invoke("Talk", 6f);
            Invoke("Silence", 9f);

            // Print all available voices to the console.
            foreach (Voice voice in Speaker.Voices())
            {
                Debug.Log(voice);
            }
        }

        public void SayHello()
        {
            // Use the first English voice instead of the OS default.
            Speaker.Speak("Hello dear user! How are you?", Speaker.VoicesForCulture("en")[0]);
        }

        public void Talk()
        {
            Speaker.Speak("Lorem ipsum dolor sit amet, consetetur sadipscing elitr, sed diam nonumy eirmod tempor invidunt ut labore et dolore magna aliquyam erat, sed diam voluptua.");
        }

        public void Silence()
        {
            Speaker.Silence();
        }
    }

Now, it uses (if available) the first English voice to speak the text in the "SayHello()"-method. It also prints all available voices to your console and you can choose a voice and use it like that:

Code (CSharp):

    Speaker.Speak("hello world", Speaker.VoicesForCulture("en")[0]);

Version 1.1.0 is submitted to the store and adds much more value: The generated audio from the TTS-system is now available inside Unity! This means, you can use it with awesome tools like SALSA ;-) We also added a promo video: Have a nice weekend! Cheers Stefan

Here is a very simple demo showing RT-Voice and SALSA working together. Please follow these steps:

- create a new Unity 5 project
- import the SALSA-package
- import the RT-Voice-package - make sure you're using the latest version (1.1.0 or higher) from the store
- import the demo-package
- open Assets/_Scenes/RTVoiceSalsa
- hit run and have fun

I hope this helps you jump-start using our products ;-) If you have any further suggestions or questions, just contact us.

Edit: Deleted the links since this demo is part of RT-Voice since 2.3.0

Crazy-Minnow-Studio

Thanks Stefan, this is an awesome update of RT-Voice! And will pair nicely with SALSA! As a small caveat for customers using this with the current release of SALSA v1.3.2, it is necessary to collapse the SALSA custom inspector for proper operation with RT-Voice. This requirement is removed in v1.3.3 which is currently pending review by the UAS team and we expect it to be approved in the next couple of days.

We've just finished version 1.2.0 and will submit it tomorrow. There are many new features added, but the most useful should be the support for Unity's "SendMessage". Therefore Localized Dialogs & Cutscenes (LDC) will fully support "RT-Voice" with their upcoming release . Happy time!

Yeah, we support now also Dialogue System for Unity ! Have a nice weekend!

We submitted the new version 1.2.0 to the store. It adds also support for THE Dialogue Engine - please take a look at their post . This means, RT-Voice is now working with SALSA and all the major dialog systems ! Now, I'm off for LD33 - happy jamming!

The Dialogue System for Unity version 1.5.5 is now available on the Asset Store with support for Stefan's excellent RT-Voice. It's very easy to use: Just drop an RT Voice Actor component on your speakers, and they'll automatically speak Dialogue System conversation lines and barks. You can tweak the component to specify gender and age range, and it will use the best match available on the player's system. It also includes an additional example that ties in SALSA with RT-Voice and the Dialogue System for fully lipsynced interactive text-to-speech.

Hi Tony I'm so happy that your awesome Dialogue System for Unity supports now RT-Voice! Thank you very much for your effort! Cheers Stefan

unitydevist

Hi Stefan, I wrote a similar implementation myself but I would like to switch to yours after seeing your integration with Dialogue System and SALSA is already complete. I would like to see you add additional choices for voice APIs to support Linux and Web builds via MaryTTS (which could allow for consistent voices across all operating systems) and Google speech APIs that read WWW requested audio in as resources for purely web-based builds. I was planning on doing these myself but I would much prefer to see them integrated for all RT-Voice users. Google's voices are very nice also for use on desktops with batch pre-generated audio files if you support that. Here's someone who started with RT-Voice and then implemented MaryTTS with SALSA already: Just a head: In a scene: Here's a free implementation of Google Voice APIs: http://www.phaaxgames.com/unity-scripts/ Another thing my version does is generate pre-recorded files while running the platform that has the desired voice so those pre-recorded files are available to play as clips instead of being generated at real-time on alternate platforms. Do you have this feature already? Thanks for making this so I can stop maintaining my own custom voice system and utilize yours! Alex


We recommend this awesome run-time text-to-speech system. It's easy to use, a great price, and it works great with SALSA lip-sync.

^ I second that! The Dialogue System for Unity has RT-Voice and SALSA support, and the example scene using all three was super easy to set up. It took hardly any effort to get characters audibly conversing with full lipsync. Even if you're planning to eventually use prerecorded voice actors, RT-Voice is a great way to prototype your conversations beforehand.

Is there a possibility to use it with Adventure Creator? I'm using Salsa with Adventure Creator and it would be great if RT-Voice could support this workflow.


Thank you TonyLi, I already own your Dialogue System but I'm actually happy with the way Adventure Creator is handling all the Dialogs and Texts and I don't want to make the developing process more complex without the proper need. I don't know which developer should do the first step to integrate RT-Voice into Adventure Creator. Let's see what Stefan is thinking about a patch and then I can also write a request in the AC Forum.


finally... I've been waiting for something like this for ages... now for those of us challenged at coding, is there any chance in making some actions (like speak, pause, stop, pitch...) for playmaker??


Hi, I'm a user of RT-Voice PRO version 1.4.1. Since I have upgraded Windows10 (first release to the latest) and Unity5.3.0 (5.1 to 5.3), RT-voice doesn't work. It gives an error "No OS voices found". I have checked OS voices by control panel and it works fine, it has 3 voices (2 English, 1 Japanese). Would you help me to solve the problem?


Hi Stefan, Thank you for your quick reply! I'm sooooo sorry, I found that it's just my easy mistake (build setup was switched to Webplayer for some reason). ^^;; Now, it's working with no problem. Thanks a lot!


Hi Stefan, I bought RTVoice and imported v1.4.1. I opened up the demo scene "Dialog" (and all the others) but I always get the same error messages:

    ERROR - Could not find the TTS-wrapper: 'F:/unity/arisinggames/sos/Assets/crosstales/RTVoice/Exe/RTVoiceTTSWrapper.exe'
    No voices for culture 'en' found! Speaking with the default voice!
    The given 'voice' or 'voice.Name' is null! Using the OS 'default' voice.

Unity version: 5.2.0f3
OS: Windows 10

Any suggestions? Thanks, Boris


Thanks Stefan. The cause for the error was that I moved the folder "RTVoice" to another one (.../Assets/vendor/RTVoice). Is there a way to make it work under this folder? Thanks

    private string applicationName() {
        if (Application.platform == RuntimePlatform.WindowsEditor) {
            // In the editor: point to the wrapper inside the relocated RTVoice folder.
            return Application.dataPath + @"/vendor/RTVoice/Exe/RTVoiceTTSWrapper.exe";
        } else {
            // In a build: the wrapper is expected directly inside the data folder.
            return Application.dataPath + @"/RTVoiceTTSWrapper.exe";
        }
    }

- Upgrade to the PRO version and change the path as mentioned above.

- Send me an email with your invoice and I can send you a DLL 2.0 beta-version in the next few days.

Good morning Stefan, thanks for the great support and the quick replies. As I have the PRO version I could change it and it works like a charm.

Hi Stefan, We just bought the RT-Voice plugin and are very excited by the possibilities. We are working on a machinima short film (unfortunately without any coders) that is basically one big cutscene. We are looking for a simple way to fire different voice texts on a timeline (like uSequencer or Cinema Director), without using Playmaker if possible. It works well with the 'send message' demo: we disable the _scene behaviour at start and enable it at a certain time. Unfortunately, it is impossible to change the text because it is hard-coded in the script. Could you help us? We are sure that it would be interesting for RT-Voice to support those assets. Thanks, Eric

Hi Stefan, I have RT-Voice PRO. I use the "Speaker.Speak" method in my scripts: Speaker.Speak(speechText,audioSource,selectedVoice); It works nicely, but there is a latency of 1 to 2 seconds, especially on Windows desktop. I also tested your sample scene and the demos you posted earlier in this thread, and they show the same problem. I have tried these samples on Windows 8 (a rather weak computer) and Windows 10 (a powerful computer), and the problem occurs on both. How can I solve this? It is really important and urgent because this project is being developed for a private company and has a deadline. The project is a kind of dialog manager: our (robotic) software and the user talk to each other, but with this latency the fluency of the conversation is lost.

- Speak(): This method calls the TTS system and lets it generate a WAV file. This file is then passed to Unity, which reads it as an "AudioClip" (that's probably what causes the delay).

- SpeakNative(): This method calls the TTS system directly and lets it speak. Therefore, there should be no (or only a marginal) delay.

- Generate all AudioClips in advance by calling "Speaker.Speak(speechText,audioSource,selectedVoice, false);" and play the AudioSource when it's needed.

- Use "Speaker.SpeakNative(speechText,audioSource,selectedVoice);"
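The first option (generate everything in advance, play it later) is a general caching pattern and not specific to RT-Voice. Here is a minimal, language-agnostic sketch of the idea, written in Python rather than Unity C#. The names `ClipCache` and `fake_synthesize` are hypothetical and are not part of the RT-Voice API; the synthesizer is a stand-in that only returns bytes.

```python
from typing import Callable, Dict, Iterable, List


class ClipCache:
    """Stores synthesized audio per line of text so playback never waits on TTS."""

    def __init__(self, synthesize: Callable[[str], bytes]) -> None:
        self._synthesize = synthesize      # slow call, e.g. a TTS engine writing a WAV
        self._clips: Dict[str, bytes] = {}

    def preload(self, lines: Iterable[str]) -> None:
        # Pay the synthesis cost up front, e.g. while a scene is loading.
        for text in lines:
            if text not in self._clips:
                self._clips[text] = self._synthesize(text)

    def get(self, text: str) -> bytes:
        # Cached lines return immediately; unknown lines fall back to synthesis.
        if text not in self._clips:
            self._clips[text] = self._synthesize(text)
        return self._clips[text]


calls: List[str] = []


def fake_synthesize(text: str) -> bytes:
    # Stand-in for a real TTS call (assumption): records the request, returns dummy bytes.
    calls.append(text)
    return text.encode("utf-8")


cache = ClipCache(fake_synthesize)
cache.preload(["Hello.", "How can I help?"])
clip = cache.get("Hello.")  # served from the cache, no second synthesis call
```

This only helps when the dialog lines are known ahead of time. For text that is produced at runtime, the second option (speaking natively) is probably the better fit.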

Hello, Are there any plans to have this work on mobile (iOS, Android)? Thanks, jrDev

Stefan Laubenberger said: Hi jrDev, We already did some extended research on the mobile topic, but it's really a pain... Something changes in every Android and iOS version, and supporting all the various devices and versions would be the opposite of fun. There is also an (even more) limited set of voices (iOS has afaik only "Siri"). So, we won't support mobile devices in the near future. :-( But we will deliver the best experience on Windows/Mac and are constantly improving "RTVoice" for this use-case. Cheers Stefan

P.S.: There are already many assets for mobile and TTS in the store. But I don't know them, so I can't give any advice...

Hey Stefan, Does RTVoice have Playmaker actions? Thanks, jrDev

We've got a new trailer video for RTVoice: Have fun! Cheers Stefan

The White House 1600 Pennsylvania Ave NW Washington, DC 20500

A Proclamation on Transgender Day of Visibility, 2024

On Transgender Day of Visibility, we honor the extraordinary courage and contributions of transgender Americans and reaffirm our Nation’s commitment to forming a more perfect Union — where all people are created equal and treated equally throughout their lives.

I am proud that my Administration has stood for justice from the start, working to ensure that the LGBTQI+ community can live openly, in safety, with dignity and respect. I am proud to have appointed transgender leaders to my Administration and to have ended the ban on transgender Americans serving openly in our military. I am proud to have signed historic Executive Orders that strengthen civil rights protections in housing, employment, health care, education, the justice system, and more. I am proud to have signed the Respect for Marriage Act into law, ensuring that every American can marry the person they love.

Transgender Americans are part of the fabric of our Nation. Whether serving their communities or in the military, raising families or running businesses, they help America thrive. They deserve, and are entitled to, the same rights and freedoms as every other American, including the most fundamental freedom to be their true selves. But extremists are proposing hundreds of hateful laws that target and terrify transgender kids and their families — silencing teachers; banning books; and even threatening parents, doctors, and nurses with prison for helping parents get care for their children. These bills attack our most basic American values: the freedom to be yourself, the freedom to make your own health care decisions, and even the right to raise your own child. It is no surprise that the bullying and discrimination that transgender Americans face is worsening our Nation’s mental health crisis, leading half of transgender youth to consider suicide in the past year. At the same time, an epidemic of violence against transgender women and girls, especially women and girls of color, continues to take too many lives. Let me be clear: All of these attacks are un-American and must end. No one should have to be brave just to be themselves.

At the same time, my Administration is working to stop the bullying and harassment of transgender children and their families. The Department of Justice has taken action to push back against extreme and un-American State laws targeting transgender youth and their families and the Department of Justice is partnering with law enforcement and community groups to combat hate and violence. My Administration is also providing dedicated emergency mental health support through our nationwide suicide and crisis lifeline — any LGBTQI+ young person in need can call “988” and press “3” to speak with a counselor trained to support them. We are making public services more accessible for transgender Americans, including with more inclusive passports and easier access to Social Security benefits. There is much more to do. I continue to call on the Congress to pass the Equality Act, to codify civil rights protections for all LGBTQI+ Americans.

Today, we send a message to all transgender Americans: You are loved. You are heard. You are understood. You belong. You are America, and my entire Administration and I have your back.

NOW, THEREFORE, I, JOSEPH R. BIDEN JR., President of the United States of America, by virtue of the authority vested in me by the Constitution and the laws of the United States, do hereby proclaim March 31, 2024, as Transgender Day of Visibility. I call upon all Americans to join us in lifting up the lives and voices of transgender people throughout our Nation and to work toward eliminating violence and discrimination based on gender identity.

IN WITNESS WHEREOF, I have hereunto set my hand this twenty-ninth day of March, in the year of our Lord two thousand twenty-four, and of the Independence of the United States of America the two hundred and forty-eighth.

JOSEPH R. BIDEN JR.

Zero-Shot Speech Editing and Text-to-Speech in the Wild

Repository: Jasonppy/voicecraft

VoiceCraft is a token infilling neural codec language model, that achieves state-of-the-art performance on both speech editing and zero-shot text-to-speech (TTS) on in-the-wild data including audiobooks, internet videos, and podcasts.

To clone or edit an unseen voice, VoiceCraft needs only a few seconds of reference.

⭐ 03/28/2024: Model weights are up on HuggingFace🤗 here!

- Codebase upload

- Environment setup

- Inference demo for speech editing and TTS

- Training guidance

- RealEdit dataset and training manifest

- Model weights (both 330M and 830M, the former seems to be just as good)

- Write colab notebooks for better hands-on experience

- HuggingFace Spaces demo

- Better guidance on training/finetuning

How to run TTS inference

There are two ways:

- With Docker: see quickstart.

- Without Docker: see environment setup.

Once you are inside the Docker image or have installed all dependencies, check out inference_tts.ipynb.

If you want to do model development such as training or finetuning, I recommend following environment setup and training.

⭐ The best way to try out TTS inference with VoiceCraft is to use Docker. Thanks to @ubergarm and @jayc88 for making this happen.

Tested on Linux and Windows; it should work on any host with Docker installed.

If you encounter version issues when running things, check out environment.yml for the exact matching versions.

Inference Examples

Check out inference_speech_editing.ipynb and inference_tts.ipynb.

To train a VoiceCraft model, you need to prepare the following parts:

- utterances and their transcripts

- the utterances encoded into codes, using e.g. Encodec

- the transcripts converted into phoneme sequences, plus a phoneme set (we named it vocab.txt)

- manifest (i.e. metadata)
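To make the third item more concrete, here is a minimal sketch of how a phoneme set could be derived from phonemized transcripts. It is not the repository's script: the function names, the hand-written phoneme sequences, and the "index symbol" line layout are all assumptions for illustration, and a real pipeline would get its sequences from a phonemizer.

```python
from typing import Iterable, List, Sequence


def build_phoneme_vocab(phoneme_sequences: Iterable[Sequence[str]]) -> List[str]:
    """Collect every distinct phoneme symbol and return them in sorted order."""
    symbols = set()
    for sequence in phoneme_sequences:
        symbols.update(sequence)
    return sorted(symbols)


def format_vocab(vocab: Sequence[str]) -> str:
    # One "index symbol" pair per line (assumed layout, not confirmed by the source).
    return "\n".join(f"{index} {symbol}" for index, symbol in enumerate(vocab))


# Hand-written ARPAbet-style sequences standing in for real phonemizer output.
sequences = [
    ["HH", "AH", "L", "OW"],
    ["W", "ER", "L", "D"],
]

vocab = build_phoneme_vocab(sequences)
vocab_text = format_vocab(vocab)  # this string could then be written to vocab.txt
```

Sorting the symbols keeps the index assignment stable across runs, which matters because the model's phoneme embeddings are tied to those indices.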

Steps 1, 2, and 3 are handled in ./data/phonemize_encodec_encode_hf.py, where

- Gigaspeech is downloaded through HuggingFace. Note that you need to sign an agreement in order to download the dataset (it needs your auth token)

- phoneme sequence and encodec codes are also extracted using the script.

An example run: