12 Best Artificial Intelligence Topics for Research in 2024

Explore the "12 Best Artificial Intelligence Topics for Research in 2024." Dive into the top AI research areas, including Natural Language Processing, Computer Vision, Reinforcement Learning, Explainable AI (XAI), AI in Healthcare, Autonomous Vehicles, and AI Ethics and Bias. Stay ahead of the curve and make informed choices for your AI research endeavours.


Table of Contents

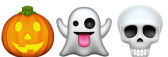

1) Top Artificial Intelligence Topics for Research

a) Natural Language Processing

b) Computer vision

c) Reinforcement Learning

d) Explainable AI (XAI)

e) Generative Adversarial Networks (GANs)

f) Robotics and AI

g) AI in healthcare

h) AI for social good

i) Autonomous vehicles

j) AI ethics and bias

k) Future of AI

l) AI and education

2) Conclusion

Top Artificial Intelligence Topics for Research

This section of the blog will expand on some of the best Artificial Intelligence Topics for research.

Natural Language Processing

Natural Language Processing (NLP) is centred around empowering machines to comprehend, interpret, and even generate human language. Within this domain, three distinctive research avenues beckon:

1) Sentiment analysis: This entails the study of methodologies to decipher and discern emotions encapsulated within textual content. Understanding sentiments is pivotal in applications ranging from brand perception analysis to social media insights.

2) Language generation: Generating coherent and contextually apt text is an ongoing pursuit. Investigating mechanisms that allow machines to produce human-like narratives and responses holds immense potential across sectors.

3) Question answering systems: Constructing systems that can grasp the nuances of natural language questions and provide accurate, coherent responses is a cornerstone of NLP research. This facet has implications for knowledge dissemination, customer support, and more.
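As a rough illustration of the sentiment analysis item above, the following minimal sketch scores text by counting words from two small hand-made word lists. The word lists and the function names `sentiment_score` and `sentiment_label` are assumptions made purely for illustration; research systems typically learn such signals from data rather than relying on a fixed lexicon.

```python
# Toy lexicon-based sentiment scorer.
# The word lists below are invented for illustration only.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "poor", "hate", "terrible", "sad"}


def sentiment_score(text: str) -> int:
    """Return (# positive words) - (# negative words) found in the text."""
    words = [w.strip(".,!?;:").lower() for w in text.split()]
    positives = sum(w in POSITIVE for w in words)
    negatives = sum(w in NEGATIVE for w in words)
    return positives - negatives


def sentiment_label(text: str) -> str:
    """Map the numeric score to a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for sample in ("I love this great product!",
                   "Terrible service, very bad.",
                   "The parcel arrived on Tuesday."):
        print(sample, "->", sentiment_label(sample))
```

A fixed word list cannot handle negation or sarcasm, which is one reason sentiment analysis remains an open research area.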

Computer Vision

Computer Vision, a discipline that bestows machines with the ability to interpret visual data, is replete with intriguing avenues for research:

1) Object detection and tracking: The development of algorithms capable of identifying and tracking objects within images and videos finds relevance in surveillance, automotive safety, and beyond.

2) Image captioning: Bridging the gap between visual and textual comprehension, this research area focuses on generating descriptive captions for images, catering to visually impaired individuals and enhancing multimedia indexing.

3) Facial recognition: Advancements in facial recognition technology hold implications for security, personalisation, and accessibility, necessitating ongoing research into accuracy and ethical considerations.

Reinforcement Learning

Reinforcement Learning revolves around training agents to make sequential decisions in order to maximise rewards. Within this realm, three prominent Artificial Intelligence Topics emerge:

1) Autonomous agents: Crafting AI agents that exhibit decision-making prowess in dynamic environments paves the way for applications like autonomous robotics and adaptive systems.

2) Deep Q-Networks (DQN): Deep Q-Networks, a class of reinforcement learning algorithms, remain under active research for refining value-based decision-making in complex scenarios.

3) Policy gradient methods: These methods, aiming to optimise policies directly, play a crucial role in fine-tuning decision-making processes across domains like gaming, finance, and robotics.
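To make the idea of value-based decision-making more concrete, here is a minimal sketch of tabular Q-learning, the simpler precursor that Deep Q-Networks extend with neural networks. The five-cell corridor environment, the reward of 1 at the goal, the purely random exploration, and all parameter values are assumptions chosen for illustration.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal (assumed toy environment)
ACTIONS = (-1, +1)    # action index 0 = step left, index 1 = step right


def step(state, action_index):
    """Apply an action; moving off either end leaves the agent at the boundary cell."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn a Q-table from uniformly random exploration (Q-learning is off-policy)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.randrange(2)
            nxt, reward, done = step(state, action)
            # Q-learning target: immediate reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


q_table = train()
# Greedy action for each non-goal state (1 means "step right").
greedy_policy = [max(range(2), key=lambda a: q_table[s][a])
                 for s in range(N_STATES - 1)]

if __name__ == "__main__":
    print(greedy_policy)
```

In this toy setting the learned greedy policy steps right in every cell, which is the shortest route to the goal.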

Explainable AI (XAI)

The pursuit of Explainable AI seeks to demystify the decision-making processes of AI systems. This area comprises Artificial Intelligence Topics such as:

1) Model interpretability: Unravelling the inner workings of complex models to elucidate the factors influencing their outputs, thus fostering transparency and accountability.

2) Visualising neural networks: Transforming abstract neural network structures into visual representations aids in comprehending their functionality and behaviour.

3) Rule-based systems: Augmenting AI decision-making with interpretable, rule-based systems holds promise in domains requiring logical explanations for actions taken.

Generative Adversarial Networks (GANs)

The captivating world of Generative Adversarial Networks (GANs) unfolds through the interplay of generator and discriminator networks, birthing remarkable research avenues:

1) Image generation: Crafting realistic images from random noise showcases the creative potential of GANs, with applications spanning art, design, and data augmentation.

2) Style transfer: Enabling the transfer of artistic styles between images, merging creativity and technology to yield visually captivating results.

3) Anomaly detection: GANs find utility in identifying anomalies within datasets, supporting fraud detection, quality control, and other anomaly-sensitive applications.

Robotics and AI

The synergy between Robotics and AI is a fertile ground for exploration, with Artificial Intelligence Topics such as:

1) Human-robot collaboration: Research in this arena strives to establish harmonious collaboration between humans and robots, augmenting industry productivity and efficiency.

2) Robot learning: By enabling robots to learn and adapt from their experiences, Researchers foster robots' autonomy and the ability to handle diverse tasks.

3) Ethical considerations: Delving into the ethical implications surrounding AI-powered robots helps establish responsible guidelines for their deployment.

AI in healthcare

AI presents a transformative potential within healthcare, spurring research into:

1) Medical diagnosis: AI aids in accurately diagnosing medical conditions, revolutionising early detection and patient care.

2) Drug discovery: Leveraging AI for drug discovery expedites the identification of potential candidates, accelerating the development of new treatments.

3) Personalised treatment: Tailoring medical interventions to individual patient profiles enhances treatment outcomes and patient well-being.

AI for social good

Harnessing the prowess of AI for Social Good entails addressing pressing global challenges:

1) Environmental monitoring: AI-powered solutions facilitate real-time monitoring of ecological changes, supporting conservation and sustainable practices.

2) Disaster response: Research in this area bolsters disaster response efforts by employing AI to analyse data and optimise resource allocation.

3) Poverty alleviation: Researchers contribute to humanitarian efforts and socioeconomic equality by devising AI solutions to tackle poverty.


Autonomous vehicles

Autonomous Vehicles represent a realm brimming with potential and complexities, necessitating research in Artificial Intelligence Topics such as:

1) Sensor fusion: Integrating data from diverse sensors enhances perception accuracy, which is essential for safe autonomous navigation.

2) Path planning: Developing advanced algorithms for path planning ensures optimal routes while adhering to safety protocols.

3) Safety and ethics: Ethical considerations, such as programming vehicles to make difficult decisions in potential accident scenarios, require meticulous research and deliberation.
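One simple way to picture the sensor fusion item above is inverse-variance weighting, where each sensor's reading is weighted by how precise that sensor is. The sketch below is a simplified illustration under the assumption of independent, unbiased sensors with known variances; the function name `fuse` and the example numbers are hypothetical, and production systems generally use richer methods such as Kalman filters.

```python
def fuse(measurements):
    """Combine independent estimates of the same quantity.

    measurements: list of (value, variance) pairs, one per sensor.
    Returns the fused (value, variance) using inverse-variance weights.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value
                      for w, (value, _) in zip(weights, measurements)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical distance readings in metres: a precise sensor and a noisier one.
    precise_sensor = (10.0, 1.0)
    noisy_sensor = (20.0, 4.0)
    print(fuse([precise_sensor, noisy_sensor]))
```

The fused variance is smaller than that of either sensor alone, which is the sense in which combining sensors can improve perception accuracy.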

AI ethics and bias

Ethical underpinnings in AI drive research efforts in these directions:

1) Fairness in AI: Ensuring AI systems remain impartial and unbiased across diverse demographic groups.

2) Bias detection and mitigation: Identifying and rectifying biases present within AI models helps promote more equitable outcomes.

3) Ethical decision-making: Developing frameworks that imbue AI with ethical decision-making capabilities aligns technology with societal values.
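As a small illustration of bias detection, the sketch below computes one commonly used fairness measure, the demographic parity difference: the gap between groups in the rate of positive predictions. The function names and the example data are assumptions for illustration, and this is only one of several fairness criteria, which can conflict with one another.

```python
def positive_rate(predictions):
    """Fraction of predictions that are positive (1)."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return (largest gap in positive rate between groups, per-group rates)."""
    by_group = {}
    for prediction, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(prediction)
    rates = {group: positive_rate(preds) for group, preds in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


if __name__ == "__main__":
    # Hypothetical model outputs (1 = approved) for two demographic groups.
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    gap, rates = demographic_parity_difference(preds, groups)
    print(gap, rates)
```

A gap of zero would mean both groups receive positive predictions at the same rate; larger gaps flag a disparity worth investigating.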

Future of AI

The vanguard of AI beckons Researchers to explore these horizons:

1) Artificial General Intelligence (AGI): Speculating on the potential emergence of AI systems capable of emulating human-like intelligence opens dialogues on the implications and challenges.

2) AI and creativity: Probing the interface between AI and creative domains, such as art and music, unveils the coalescence of human ingenuity and technological prowess.

3) Ethical and regulatory challenges: Researching the ethical dilemmas and regulatory frameworks underpinning AI's evolution fortifies responsible innovation.

AI and education

The intersection of AI and Education opens doors to innovative learning paradigms:

1) Personalised learning: Developing AI systems that adapt educational content to individual learning styles and paces.

2) Intelligent tutoring systems: Creating AI-driven tutoring systems that provide targeted support to students.

3) Educational data mining: Applying AI to analyse educational data for insights into learning patterns and trends.


Conclusion

The domain of AI is ever-expanding, rich with intriguing topics about Artificial Intelligence that beckon Researchers to explore, question, and innovate. Through the pursuit of these twelve diverse Artificial Intelligence Topics, we pave the way for not only technological advancement but also a deeper understanding of the societal impact of AI. By delving into these realms, Researchers stand poised to shape the trajectory of AI, ensuring it remains a force for progress, empowerment, and positive transformation in our world.


8 Best Topics for Research and Thesis in Artificial Intelligence

Imagine a future in which intelligence is not restricted to humans! A future where machines can think as well as humans and work with them to create an even more exciting universe. While this future is still far away, Artificial Intelligence has made considerable progress in recent years. A lot of research is being conducted in almost all fields of AI, such as Quantum Computing, Healthcare, Autonomous Vehicles, Internet of Things, Robotics, etc. So much so that there has been an increase of 90% in the number of annually published research papers on Artificial Intelligence since 1996. Keeping this in mind, if you want to research and write a thesis based on Artificial Intelligence, there are many sub-topics that you can focus on. Some of these topics, along with a brief introduction, are provided in this article. We have also mentioned some published research papers related to each of these topics so that you can better understand the research process.

So without further ado, let’s see the different Topics for Research and Thesis in Artificial Intelligence!

1. Machine Learning

Machine Learning involves the use of Artificial Intelligence to enable machines to learn a task from experience without programming them specifically for that task. (In short, machines learn automatically without human hand-holding!) This process starts with feeding them good-quality data and then training the machines by building various machine learning models using the data and different algorithms. The choice of algorithm depends on what type of data we have and what kind of task we are trying to automate. Generally speaking, however, Machine Learning Algorithms are divided into 3 types, i.e. Supervised Machine Learning Algorithms, Unsupervised Machine Learning Algorithms, and Reinforcement Machine Learning Algorithms.

2. Deep Learning

Deep Learning is a subset of Machine Learning that learns by imitating the inner working of the human brain in order to process data and implement decisions based on that data. Basically, Deep Learning uses artificial neural networks to implement machine learning. These neural networks are connected in a web-like structure like the networks in the human brain (Basically a simplified version of our brain!). This web-like structure of artificial neural networks means that they are able to process data in a nonlinear approach which is a significant advantage over traditional algorithms that can only process data in a linear approach. An example of a deep neural network is RankBrain which is one of the factors in the Google Search algorithm.

3. Reinforcement Learning

Reinforcement Learning is a part of Artificial Intelligence in which the machine learns something in a way that is similar to how humans learn. As an example, assume that the machine is a student. Here the hypothetical student learns from its own mistakes over time (like we had to!!). So the Reinforcement Machine Learning Algorithms learn optimal actions through trial and error. This means that the algorithm decides the next action by learning behaviors that are based on its current state and that will maximize the reward in the future. And like humans, this works for machines as well! For example, Google’s AlphaGo computer program was able to beat the world champion in the game of Go (that’s a human!) in 2017 using Reinforcement Learning.

4. Robotics

Robotics is a field that deals with creating machines, some of them humanoid, that can behave like humans and perform some actions like human beings. Now, robots can act like humans in certain situations, but can they think like humans as well? This is where artificial intelligence comes in! AI allows robots to act intelligently in certain situations. These robots may be able to solve problems in a limited sphere or even learn in controlled environments. An example of this is Kismet, which is a social interaction robot developed at MIT's Artificial Intelligence Lab. It recognizes human body language and voice and interacts with humans accordingly. Another example is Robonaut, which was developed by NASA to work alongside astronauts in space.

5. Natural Language Processing

It’s obvious that humans can converse with each other using speech but now machines can too! This is known as Natural Language Processing where machines analyze and understand language and speech as it is spoken (Now if you talk to a machine it may just talk back!). There are many subparts of NLP that deal with language such as speech recognition, natural language generation, natural language translation, etc. NLP is currently extremely popular for customer support applications, particularly the chatbot. These chatbots use ML and NLP to interact with the users in textual form and solve their queries. So you get the human touch in your customer support interactions without ever directly interacting with a human.

Some Research Papers published in the field of Natural Language Processing are provided here. You can study them to get more ideas about research and thesis on this topic.

6. Computer Vision

The internet is full of images! This is the selfie age, where taking an image and sharing it has never been easier. In fact, millions of images are uploaded and viewed every day on the internet. To make the most use of this huge amount of images online, it’s important that computers can see and understand images. And while humans can do this easily without a thought, it’s not so easy for computers! This is where Computer Vision comes in. Computer Vision uses Artificial Intelligence to extract information from images. This information can be object detection in the image, identification of image content to group various images together, etc. An application of computer vision is navigation for autonomous vehicles by analyzing images of surroundings such as AutoNav used in the Spirit and Opportunity rovers which landed on Mars.

7. Recommender Systems

When you are using Netflix, do you get a recommendation of movies and series based on your past choices or genres you like? This is done by Recommender Systems that provide you some guidance on what to choose next among the vast choices available online. A Recommender System can be based on Content-based Recommendation or Collaborative Filtering. Content-based Recommendation is done by analyzing the content of all the items. For example, you can be recommended books you might like based on Natural Language Processing done on the books. Collaborative Filtering, on the other hand, is done by comparing your past reading behavior with that of other readers and then recommending books that readers with similar tastes liked.
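Collaborative filtering is commonly implemented by comparing one user's ratings with those of other users. The sketch below shows a minimal user-based variant with cosine similarity; the users, books, ratings, and function names are all made up for illustration, and real recommender systems work with far larger and sparser data.

```python
from math import sqrt

# Hypothetical ratings on a 1-5 scale: user -> {book: rating}.
ratings = {
    "ana":  {"Dune": 5, "Neuromancer": 4, "Emma": 1},
    "ben":  {"Dune": 4, "Neuromancer": 5, "Foundation": 5},
    "cara": {"Emma": 5, "Persuasion": 4, "Dune": 1},
}


def cosine(u, v):
    """Cosine similarity between two users' rating dictionaries."""
    shared = set(u) & set(v)
    dot = sum(u[item] * v[item] for item in shared)
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def recommend(user, all_ratings):
    """Rank items the user has not rated by similarity-weighted ratings of others."""
    scores = {}
    for other, their_ratings in all_ratings.items():
        if other == user:
            continue
        similarity = cosine(all_ratings[user], their_ratings)
        for item, rating in their_ratings.items():
            if item not in all_ratings[user]:
                scores[item] = scores.get(item, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    print(recommend("ana", ratings))
```

Here "ana" rates books much as "ben" does, so the book that "ben" liked and "ana" has not yet rated is ranked first.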

8. Internet of Things

Artificial Intelligence deals with the creation of systems that can learn to emulate human tasks using their prior experience and without any manual intervention. Internet of Things, on the other hand, is a network of various devices that are connected over the internet and they can collect and exchange data with each other. Now, all these IoT devices generate a lot of data that needs to be collected and mined for actionable results. This is where Artificial Intelligence comes into the picture. Internet of Things is used to collect and handle the huge amount of data that is required by the Artificial Intelligence algorithms. In turn, these algorithms convert the data into useful actionable results that can be implemented by the IoT devices.


Artificial Intelligence


FIU dissertations

Non-FIU dissertations

Many universities provide full-text access to their dissertations via a digital repository. If you know the title of a particular dissertation or thesis, try doing a Google search.

Aims to be the best possible resource for finding open access graduate theses and dissertations published around the world with metadata from over 800 colleges, universities, and research institutions. Currently, indexes over 1 million theses and dissertations.

This is a discovery service for open access research theses awarded by European universities.

A union catalog of Canadian theses and dissertations, in both electronic and analog formats, is available through the search interface on this portal.

There are currently more than 90 countries and over 1200 institutions represented. CRL has catalog records for over 800,000 foreign doctoral dissertations.

An international collaborative resource, the NDLTD Union Catalog contains more than one million records of electronic theses and dissertations. Use BASE, the VTLS Visualizer or any of the geographically specific search engines noted lower on their webpage.

Indexes doctoral dissertations and master's theses in all areas of academic research, with international coverage.

ProQuest Dissertations & Theses global


- Last Updated: Apr 4, 2024 8:33 AM

- URL: https://library.fiu.edu/artificial-intelligence


Completed Theses

State space search solves navigation tasks and many other real world problems. Heuristic search, especially greedy best-first search, is one of the most successful algorithms for state space search. We improve the state of the art in heuristic search in three directions.

In Part I, we present methods to train neural networks as powerful heuristics for a given state space. We present a universal approach to generate training data using random walks from a (partial) state. We demonstrate that our heuristics trained for a specific task are often better than heuristics trained for a whole domain. We show that the performance of all trained heuristics is highly complementary. There is no clear pattern indicating which trained heuristic to prefer for a specific task. In general, model-based planners still outperform planners with trained heuristics. But our approaches exceed the model-based algorithms in the Storage domain. To our knowledge, a learning-based planner has exceeded the state-of-the-art model-based planners only once before, in the Spanner domain.

A priori, it is unknown whether a heuristic, or in the more general case a planner, performs well on a task. Hence, we trained online portfolios to select the best planner for a task. Today, all online portfolios are based on handcrafted features. In Part II, we present new online portfolios based on neural networks, which receive the complete task as input, and not just a few handcrafted features. Additionally, our portfolios can reconsider their choices. Both extensions greatly improve the state-of-the-art of online portfolios. Finally, we show that explainable machine learning techniques, as the alternative to neural networks, are also good online portfolios. Additionally, we present methods to improve our trust in their predictions.

Even if we select the best search algorithm, we cannot solve some tasks in reasonable time. We can speed up the search if we know how it behaves in the future. In Part III, we inspect the behavior of greedy best-first search with a fixed heuristic on simple tasks of a domain to learn its behavior for any task of the same domain. Once greedy best-first search expanded a progress state, it expands only states with lower heuristic values. We learn to identify progress states and present two methods to exploit this knowledge. Building upon this, we extract the bench transition system of a task and generalize it in such a way that we can apply it to any task of the same domain. We can use this generalized bench transition system to split a task into a sequence of simpler searches.

In all three research directions, we contribute new approaches and insights to the state of the art, and we indicate interesting topics for future work.

Greedy best-first search (GBFS) is a sibling of A* in the family of best-first state-space search algorithms. While A* is guaranteed to find optimal solutions of search problems, GBFS does not provide any guarantees but typically finds satisficing solutions more quickly than A*. A classical result of optimal best-first search shows that A* with admissible and consistent heuristic expands every state whose f-value is below the optimal solution cost and no state whose f-value is above the optimal solution cost. Theoretical results of this kind are useful for the analysis of heuristics in different search domains and for the improvement of algorithms. For satisficing algorithms a similarly clear understanding is currently lacking. We examine the search behavior of GBFS in order to make progress towards such an understanding.
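The contrast between GBFS and A* described above can be illustrated with a minimal sketch in which both algorithms share one best-first loop and differ only in how they prioritise states: GBFS orders by the heuristic value h alone, while A* orders by f = g + h. The tiny graph, the heuristic values, the first-in-first-out tie-breaking, and all function names are assumptions chosen for illustration and are not taken from the thesis.

```python
import heapq
from itertools import count

# Hypothetical state space: state -> list of (successor, action cost).
GRAPH = {
    "S": [("A", 1), ("B", 1)],
    "A": [("G", 10)],
    "B": [("C", 1)],
    "C": [("G", 1)],
    "G": [],
}
# Hypothetical heuristic, admissible and consistent for the graph above.
H = {"S": 2, "A": 1, "B": 2, "C": 1, "G": 0}


def best_first_search(start, goal, priority):
    """Generic best-first search; `priority(g, state)` orders the open list."""
    tie = count()  # first-in-first-out tie-breaking among equal priorities
    open_list = [(priority(0, start), next(tie), 0, start, [start])]
    best_g = {start: 0}
    closed = set()
    while open_list:
        _, _, g, state, path = heapq.heappop(open_list)
        if state in closed:
            continue  # stale entry for an already expanded state
        closed.add(state)
        if state == goal:
            return path, g
        for successor, cost in GRAPH[state]:
            new_g = g + cost
            if successor not in closed and new_g < best_g.get(successor, float("inf")):
                best_g[successor] = new_g
                heapq.heappush(open_list, (priority(new_g, successor), next(tie),
                                           new_g, successor, path + [successor]))
    return None, float("inf")


def gbfs(start, goal):
    return best_first_search(start, goal, lambda g, s: H[s])


def astar(start, goal):
    return best_first_search(start, goal, lambda g, s: g + H[s])


if __name__ == "__main__":
    print("GBFS:", gbfs("S", "G"))
    print("A*:  ", astar("S", "G"))
```

On this example GBFS expands fewer states but returns a path of cost 11, whereas A* returns the optimal path of cost 3, matching the trade-off between satisficing and optimal search.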

We introduce the concept of high-water mark benches, which separate the search space into areas that are searched by GBFS in sequence. High-water mark benches allow us to exactly determine the set of states that GBFS expands under at least one tie-breaking strategy. We show that benches contain craters. Once GBFS enters a crater, it has to expand every state in the crater before being able to escape.

Benches and craters allow us to characterize the best-case and worst-case behavior of GBFS in given search instances. We show that computing the best-case or worst-case behavior of GBFS is NP-complete in general but can be computed in polynomial time for undirected state spaces.

We present algorithms for extracting the set of states that GBFS potentially expands and for computing the best-case and worst-case behavior. We use the algorithms to analyze GBFS on benchmark tasks from planning competitions under a state-of-the-art heuristic. Experimental results reveal interesting characteristics of the heuristic on the given tasks and demonstrate the importance of tie-breaking in GBFS.

Classical planning tackles the problem of finding a sequence of actions that leads from an initial state to a goal. Over the last decades, planning systems have become significantly better at answering the question whether such a sequence exists by applying a variety of techniques which have become more and more complex. As a result, it has become nearly impossible to formally analyze whether a planning system is actually correct in its answers, and we need to rely on experimental evidence.

One way to increase trust is the concept of certifying algorithms, which provide a witness which justifies their answer and can be verified independently. When a planning system finds a solution to a problem, the solution itself is a witness, and we can verify it by simply applying it. But what if the planning system claims the task is unsolvable? So far there was no principled way of verifying this claim.

This thesis contributes two approaches to create witnesses for unsolvable planning tasks. Inductive certificates are based on the idea of invariants. They argue that the initial state is part of a set of states that we cannot leave and that contains no goal state. In our second approach, we define a proof system that proves in an incremental fashion that certain states cannot be part of a solution until it has proven that either the initial state or all goal states are such states.
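The idea behind inductive certificates can be sketched as a three-part check on a candidate set of states: it contains the initial state, it contains no goal state, and no action leads out of it. The explicit enumeration of states, the toy transition table, and the function name below are assumptions for illustration; how the thesis actually represents and verifies such sets is not described in this abstract.

```python
# Hypothetical transition table: state -> list of successor states.
TRANSITIONS = {
    "a": ["b"],
    "b": ["a"],
    "c": ["goal"],
    "goal": [],
}


def is_inductive_certificate(candidate, initial, goals, transitions):
    """Check that `candidate` witnesses unsolvability of the task."""
    candidate = set(candidate)
    if initial not in candidate:
        return False                 # must contain the initial state
    if candidate & set(goals):
        return False                 # must contain no goal state
    # Must be closed under all actions: every successor stays inside the set.
    return all(successor in candidate
               for state in candidate
               for successor in transitions[state])


if __name__ == "__main__":
    print(is_inductive_certificate({"a", "b"}, "a", {"goal"}, TRANSITIONS))
    print(is_inductive_certificate({"a"}, "a", {"goal"}, TRANSITIONS))
```

The first call accepts the set {a, b}, since the search can never leave it and it holds no goal; the second call rejects {a} because the action from a to b leaves the set.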

Both approaches are complete in the sense that a witness exists for every unsolvable planning task, and can be verified efficiently (with respect to the size of the witness) by an independent verifier if certain criteria are met. To show their applicability to state-of-the-art planning techniques, we provide an extensive overview of how these approaches can cover several search algorithms, heuristics and other techniques. Finally, we show with an experimental study that generating and verifying these explanations is not only theoretically possible but also practically feasible, thus making a first step towards fully certifying planning systems.

Heuristic search with an admissible heuristic is one of the most prominent approaches to solving classical planning tasks optimally. In the first part of this thesis, we introduce a new family of admissible heuristics for classical planning, based on Cartesian abstractions, which we derive by counterexample-guided abstraction refinement. Since one abstraction usually is not informative enough for challenging planning tasks, we present several ways of creating diverse abstractions. To combine them admissibly, we introduce a new cost partitioning algorithm, which we call saturated cost partitioning. It considers the heuristics sequentially and uses the minimum amount of costs that preserves all heuristic estimates for the current heuristic before passing the remaining costs to subsequent heuristics until all heuristics have been served this way.

In the second part, we show that saturated cost partitioning is strongly influenced by the order in which it considers the heuristics. To find good orders, we present a greedy algorithm for creating an initial order and a hill-climbing search for optimizing a given order. Both algorithms make the resulting heuristics significantly more accurate. However, we obtain the strongest heuristics by maximizing over saturated cost partitioning heuristics computed for multiple orders, especially if we actively search for diverse orders.

The third part provides a theoretical and experimental comparison of saturated cost partitioning and other cost partitioning algorithms. Theoretically, we show that saturated cost partitioning dominates greedy zero-one cost partitioning. The difference between the two algorithms is that saturated cost partitioning opportunistically reuses unconsumed costs for subsequent heuristics. By applying this idea to uniform cost partitioning we obtain an opportunistic variant that dominates the original. We also prove that the maximum over suitable greedy zero-one cost partitioning heuristics dominates the canonical heuristic and show several non-dominance results for cost partitioning algorithms. The experimental analysis shows that saturated cost partitioning is the cost partitioning algorithm of choice in all evaluated settings and it even outperforms the previous state of the art in optimal classical planning.

Classical planning is the problem of finding a sequence of deterministic actions in a state space that lead from an initial state to a state satisfying some goal condition. The dominant approach to optimally solve planning tasks is heuristic search, in particular A* search combined with an admissible heuristic. While there exist many different admissible heuristics, we focus on abstraction heuristics in this thesis, and in particular, on the well-established merge-and-shrink heuristics.

Our main theoretical contribution is to provide a comprehensive description of the merge-and-shrink framework in terms of transformations of transition systems. Unlike previous accounts, our description is fully compositional, i.e. can be understood by understanding each transformation in isolation. In particular, in addition to the name-giving merge and shrink transformations, we also describe pruning and label reduction as such transformations. The latter is based on generalized label reduction, a new theory that removes all of the restrictions of the previous definition of label reduction. We study the four types of transformations in terms of desirable formal properties and explain how these properties transfer to heuristics being admissible and consistent or even perfect. We also describe an optimized implementation of the merge-and-shrink framework that substantially improves the efficiency compared to previous implementations.

Furthermore, we investigate the expressive power of merge-and-shrink abstractions by analyzing factored mappings, the data structure they use for representing functions. In particular, we show that there exist certain families of functions that can be compactly represented by so-called non-linear factored mappings but not by linear ones.

On the practical side, we contribute several non-linear merge strategies to the merge-and-shrink toolbox. In particular, we adapt a merge strategy from model checking to planning, provide a framework to enhance existing merge strategies based on symmetries, devise a simple score-based merge strategy that minimizes the maximum size of transition systems of the merge-and-shrink computation, and describe another framework to enhance merge strategies based on an analysis of causal dependencies of the planning task.

In a large experimental study, we show the evolution of the performance of merge-and-shrink heuristics on planning benchmarks. Starting with the state of the art before the contributions of this thesis, we subsequently evaluate all of our techniques and show that state-of-the-art non-linear merge-and-shrink heuristics improve significantly over the previous state of the art.

Admissible heuristics are the main ingredient when solving classical planning tasks optimally with heuristic search. Higher admissible heuristic values are more accurate, so combining them in a way that dominates their maximum and remains admissible is an important problem.

The thesis makes three contributions in this area. Extensions to cost partitioning (a well-known heuristic combination framework) make it possible to produce higher estimates from the same set of heuristics. The new heuristic family called operator-counting heuristics unifies many existing heuristics and offers a new way to combine them. Another new family of heuristics called potential heuristics allows us to cast the problem of finding a good heuristic as an optimization problem.

Both operator-counting and potential heuristics are closely related to cost partitioning. They offer a new perspective on cost-partitioned heuristics and have already sparked research beyond their use as classical planning heuristics.

Master's theses

Classical planning tasks are typically formulated in PDDL. Some of them can be described more concisely using derived variables. Contrary to basic variables, their values cannot be changed by operators and are instead determined by axioms which specify conditions under which they take a certain value. Planning systems often support axioms in their search component, but their heuristics’ support is limited or nonexistent. This leads to decreased search performance with tasks that use axioms. We compile axioms away using our implementation of a known algorithm in the Fast Downward planner. Our results show that the compilation has a negative impact on search performance with its only benefit being the ability to use heuristics that have no axiom support. As a compromise between performance and expressivity, we identify axioms of a simple form and devise a compilation for them. We compile away all axioms in several of the tested domains without a decline in search performance.

The International Planning Competitions (IPCs) serve as a testing suite for planning systems. These domains are well-motivated as they are derived from, or possess characteristics analogous to real-life applications. In this thesis, we study the computational complexity of the plan existence and bounded plan existence decision problems of the following grid-based IPC domains: VisitAll, TERMES, Tidybot, Floortile, and Nurikabe. In all of these domains, there are one or more agents moving through a rectangular grid (potentially with obstacles) performing actions along the way. In many cases, we engineer instances that can be solved only if the movement of the agent or agents follows a Hamiltonian path or cycle in a grid graph. This gives rise to many NP-hardness reductions from Hamiltonian path/cycle problems on grid graphs. In the case of VisitAll and Floortile, we give necessary and sufficient conditions for deciding the plan existence problem in polynomial time. We also show that Tidybot has the game Push-1F as a special case, and its plan existence problem is thus PSPACE-complete. The hardness proofs in this thesis highlight hard instances of these domains. Moreover, by assigning a complexity class to each domain, researchers and practitioners can better assess the strengths and limitations of new and existing algorithms in these domains.

Planning tasks can be used to describe many real-world problems of interest. Solving those tasks optimally is thus an avenue of great interest. One established and successful approach for optimal planning is the merge-and-shrink framework, which decomposes the task into a factored transition system. The factors initially represent the behaviour of one state variable and are repeatedly combined and abstracted. The solutions of these abstract states are then used as a heuristic to guide search in the original planning task. Existing merge-and-shrink transformations keep the factored transition system orthogonal, meaning that the variables of the planning task are represented in no more than one factor at any point. In this thesis we introduce the clone transformation, which duplicates a factor of the factored transition system, making it non-orthogonal. We test two classes of clone strategies, which we introduce and implement in the Fast Downward planning system, and conclude that, while theoretically promising, our clone strategies are practically inefficient, as their performance was worse than state-of-the-art methods for merge-and-shrink.

This thesis aims to present a novel approach for improving the performance of classical planning algorithms by integrating cost partitioning with merge-and-shrink techniques. Cost partitioning is a well-known technique for admissibly adding multiple heuristic values. Merge-and-shrink, on the other hand, is a technique to generate well-informed abstractions. The "merge" part of the technique is based on creating an abstract representation of the original problem by replacing two transition systems with their synchronised product. In contrast, the "shrink" part refers to reducing the size of the factor. By combining these two approaches, we aim to leverage the strengths of both methods to achieve better scalability and efficiency in solving classical planning problems. Considering a range of benchmark domains and the Fast Downward planning system, the experimental results show that the proposed method achieves the goal of fusing merge-and-shrink with cost partitioning towards better outcomes in classical planning.

Planning is the process of finding a path in a planning task from the initial state to a goal state. Multiple algorithms have been implemented to solve such planning tasks, one of them being the Property-Directed Reachability algorithm. Property-Directed Reachability utilizes a series of propositional formulas called layers to represent a super-set of states with a goal distance of at most the layer index. The algorithm iteratively improves the layers such that they represent a minimum number of states. This happens by strengthening the layer formulas and therefore excluding states with a goal distance higher than the layer index. The goal of this thesis is to implement a pre-processing step to seed the layers with a formula that already excludes as many states as possible, to potentially improve the run-time performance. We use the pattern database heuristic and its associated pattern generators to make use of the planning task structure for the seeding algorithm. We found that seeding does not consistently improve the performance of the Property-Directed Reachability algorithm. Although we observed a significant reduction in planning time for some tasks, it significantly increased for others.

Certifying algorithms is a concept developed to increase trust by demanding affirmation of the computed result in the form of a certificate. By inspecting the certificate, it is possible to determine the correctness of the produced output. Modern planning systems have been certifying for a long time in the case of solvable instances, where a generated plan acts as a certificate.

Only recently there have been the first steps towards certifying unsolvability judgments in the form of inductive certificates which represent certain sets of states. Inductive certificates are expressed with the help of propositional formulas in a specific formalism.

In this thesis, we investigate the use of propositional formulas in conjunctive normal form (CNF) as a formalism for inductive certificates. First, we look into an approach that allows us to construct formulas representing inductive certificates in CNF. To show the general applicability of this approach, we extend it to the family of delete relaxation heuristics. Furthermore, we present how a planning system is able to generate an inductive validation formula, a single formula that can be used to validate whether the set found by the planner is indeed an inductive certificate. Finally, we show with an experimental evaluation that the CNF formalism can be feasible in practice for the generation and validation of inductive validation formulas.

In generalized planning the aim is to solve whole classes of planning tasks instead of single tasks one at a time. Generalized representations provide information or knowledge about such classes to help solving them. This work compares the expressiveness of three generalized representations, generalized potential heuristics, policy sketches and action schema networks, in terms of compilability. We use a notion of equivalence that requires two generalized representations to decompose the tasks of a class into the same subtasks. We present compilations between pairs of equivalent generalized representations and proofs where a compilation is impossible.

A Digital Microfluidic Biochip (DMFB) is a digitally controllable lab-on-a-chip. Droplets of fluids are moved, merged and mixed on a grid. Routing these droplets efficiently has been tackled by various different approaches. We try to use temporal planning to do droplet routing, inspired by the use of it in quantum circuit compilation. We test a model for droplet routing in both classical and temporal planning and compare both versions. We show that our classical planning model is an efficient method to find droplet routes on DMFBs. Then we extend our model and include spawning, disposing, merging, splitting and mixing of droplets. The results of these extensions show that we are able to find plans for simple experiments. When scaling the problem size to real life experiments our model fails to find plans.

Cost partitioning is a technique used to calculate heuristics in classical optimal planning. It involves solving a linear program. This linear program can be decomposed into a master and pricing problems. In this thesis we combine Fourier-Motzkin elimination and the double description method in different ways to precompute the generating rays of the pricing problems. We further empirically evaluate these approaches and propose a new method that replaces the Fourier-Motzkin elimination. Our new method improves the performance of our approaches with respect to runtime and peak memory usage.

The increasing amount of data available today has contributed to new scheduling approaches. Aviation is one of the most affected domains, as aircraft engines involve millions of maintenance events carried out by staff worldwide. In this thesis we present a constraint programming-based algorithm to solve the aircraft maintenance scheduling problem. We want to find the best time to do the maintenance by determining which employee will perform the work and when. Here we report how the scheduling process in aviation can be automated.

Stochastic state-space tasks are mainly addressed with methods from the research field of artificial intelligence. PROST2014 is state of the art when determining good actions in an MDP environment. In this thesis, we aimed to provide a heuristic by using neural networks to outperform the dominating planning system PROST2014. For this purpose, we introduced two variants of neural networks that allow us to estimate the respective Q-value for a pair of state and action. Since we envisaged supervised learning as the learning method, the generation of training data was one of the main tasks, in addition to the architecture and the components of the neural networks. To determine the most suitable network parameters, we performed a sequential parameter search, from which we expected a local optimum of the model settings. In the end, the PROST2014 planning system could not be surpassed in the total rating evaluation. Nevertheless, in individual domains, we could establish increased final scores on the side of the neural networks. The result shows the potential of this approach and points to possible adaptations in future work that pursues this approach further.

In classical planning, there are tasks that are hard and tasks that are easy. We can measure the complexity of a task with the correlation complexity, the improvability width, and the novelty width. In this work, we compare these measures.

We investigate what causes a correlation complexity of at least 2. To do so we translate the state space into a vector space which allows us to make use of linear algebra and convex cones.

Additionally, we introduce the Basel measure, a new measure that is based on potential heuristics and therefore similar to the correlation complexity but also comparable to the novelty width. We show that the Basel measure is a lower bound for the correlation complexity and that the novelty width +1 is an upper bound for the Basel measure.

Furthermore, we compute the Basel measure for some tasks of the International Planning Competitions and show that the translation of a task can increase the Basel measure by removing seemingly irrelevant state variables.

Unsolvability is an important result in classical planning and has seen increased interest in recent years. This thesis explores unsolvability detection by automatically generating parity arguments, a well-known way of proving unsolvability. The argument requires an invariant measure, whose parity remains constant across all reachable states, while all goal states are of the opposite parity. We express parity arguments using potential functions in the field $\mathbb{F}_2$. We develop a set of constraints that describes potential functions with the necessary separating property, and show that the constraints can be represented efficiently for up to two-dimensional features. Enhanced with mutex information, an algorithm is formed that tests whether a parity function exists for a given planning task. The existence of such a function proves the task unsolvable. To determine its practical use, we empirically evaluate our approach on a benchmark of unsolvable problems and compare its performance to a state-of-the-art unsolvability planner. We lastly analyze the arguments found by our algorithm to confirm their validity, and understand their expressive power.

We implemented the invariant synthesis algorithm proposed by Rintanen and experimentally compared it against Helmert’s mutex group synthesis algorithm as implemented in Fast Downward.

The context for the comparison is the translation of propositional STRIPS tasks to FDR tasks, which requires the identification of mutex groups.

Because of its dominating lead in translation speed, combined with few and marginal advantages in performance during search, Helmert’s algorithm is clearly better for most uses. Meanwhile, Rintanen’s algorithm is capable of finding invariants other than mutexes, which Helmert’s algorithm by design cannot do.

The International Planning Competition (IPC) is a competition of state-of-the-art planning systems. The evaluation of these planning systems is done by measuring them with different problems. It focuses on the challenges of AI planning by analyzing classical, probabilistic and temporal planning and by presenting new problems for future research. Some of the probabilistic domains introduced in IPC 2018 are Academic Advising, Chromatic Dice, Cooperative Recon, Manufacturer, Push Your Luck, Red-finned Blue-eyes, etc.

This thesis aims to solve (near)-optimally two probabilistic IPC 2018 domains, Academic Advising and Chromatic Dice. We use different techniques to solve these two domains. In Academic Advising, we use a relevance analysis to remove irrelevant actions and state variables from the planning task. We then convert the problem from probabilistic to classical planning, which helped us solve it efficiently. In Chromatic Dice, we implement backtracking search to solve the smaller instances optimally. More complex instances are partitioned into several smaller planning tasks, and a near-optimal policy is derived as a combination of the optimal solutions to the small instances.

The motivation for finding (near)-optimal policies is related to the IPC score, which measures the quality of the planners. By providing the optimal upper bound of the domains, we contribute to the stabilization of the IPC score evaluation metric for these domains.

Most well-known and traditional online planners for probabilistic planning are in some way based on Monte-Carlo Tree Search. SOGBOFA, symbolic online gradient-based optimization for factored action MDPs, offers a new perspective on this: it constructs a function graph encoding the expected reward for a given input state using independence assumptions for states and actions. On this function, they use gradient ascent to perform a symbolic search optimizing the actions for the current state. This unique approach to probabilistic planning has shown very strong results and even more potential. In this thesis, we attempt to integrate the new ideas SOGBOFA presents into the traditionally successful Trial-based Heuristic Tree Search framework. Specifically, we design and evaluate two heuristics based on the aforementioned graph and its Q value estimations, but also the search using gradient ascent. We implement and evaluate these heuristics in the Prost planner, along with a version of the current standalone planner.

In this thesis, we consider cyclical dependencies between landmarks for cost-optimal planning. Landmarks denote properties that must hold at least once in all plans. However, if the orderings between them induce cyclical dependencies, one of the landmarks in each cycle must be achieved an additional time. We propose the generalized cycle-covering heuristic which considers this in addition to the cost for achieving all landmarks once.

Our research is motivated by recent applications of cycle-covering in the Freecell and logistics domain where it yields near-optimal results. We carry it over to domain-independent planning using a linear programming approach. The relaxed version of a minimum hitting set problem for the landmarks is enhanced by constraints concerned with cyclical dependencies between them. In theory, this approach surpasses a heuristic that only considers landmarks.

We apply the cycle-covering heuristic in practice, where its theoretical dominance is confirmed: many planning tasks contain cyclical dependencies, and considering them affects the heuristic estimates favorably. However, the number of tasks solved using the improved heuristic is virtually unaffected. We still believe that considering this feature of landmarks offers great potential for future work.

Potential heuristics are a class of heuristics used in classical planning to guide a search algorithm towards a goal state. Most of the existing research on potential heuristics is focused on finding heuristics that are admissible, such that they can be used by an algorithm such as A* to arrive at an optimal solution. In this thesis, we focus on the computation of potential heuristics for satisficing planning, where plan optimality is not required and the objective is to find any solution. Specifically, our focus is on the computation of potential heuristics that are descending and dead-end avoiding (DDA), since these properties guarantee favorable search behavior when used with greedy search algorithms such as hill climbing. We formally prove that the computation of DDA heuristics is a PSPACE-complete problem and propose several approximation algorithms. Our evaluation shows that the resulting heuristics are competitive with established approaches such as Pattern Databases in terms of heuristic quality but suffer from several performance bottlenecks.

Most automated planners use heuristic search to solve the tasks. Usually, the planners get as input a lifted representation of the task in PDDL, a compact formalism describing the task using a fragment of first-order logic. The planners then transform this task description into a grounded representation where the task is described in propositional logic. This new grounded format can be exponentially larger than the lifted one, but many planners use this grounded representation because it is easier to implement and reason about.

However, sometimes this transformation between lifted and grounded representations is not tractable. When this is the case, there is not much that planners based on heuristic search can do. Since this transformation is a required preprocess, when this fails, the whole planner fails.

To solve the grounding problem, we introduce new methods to deal with tasks that cannot be grounded. Our work aims to find good ways to perform heuristic search while using a lifted representation of planning problems. We use the point-of-view of planning as a database progression problem and borrow solutions from the areas of relational algebra and database theory.

Our theoretical and empirical results are motivating: several instances that were never solved by any planner in the literature are now solved by our new lifted planner. For example, our planner can solve the challenging Organic Synthesis domain using a breadth-first search, while state-of-the-art planners cannot solve more than 60% of the instances. Furthermore, our results offer a new perspective and a deep theoretical study of lifted representations for planning tasks.

The generation of independently verifiable proofs for the unsolvability of planning tasks using different heuristics, including linear Merge-and-Shrink heuristics, is possible with a proof system framework. Proof generation in the case of non-linear Merge-and-Shrink heuristics, however, is currently not supported. This is due to the lack of a suitable state set representation formalism that allows a compact representation of the states mapped to a certain value in the corresponding Merge-and-Shrink representation (MSR). In this thesis, we overcome this shortcoming using Sentential Decision Diagrams (SDDs) as set representations. We describe an algorithm that constructs the desired SDD from the MSR, and show that efficient proof verification is possible with SDDs as representation formalism. Additionally, we use a proof-of-concept implementation to analyze the overhead incurred by the proof generation functionality and the runtime of the proof verification.

The operator-counting framework is a framework in classical planning for heuristics that are based on linear programming. The operator-counting framework covers several kinds of state-of-the-art linear programming heuristics, among them the post-hoc optimization heuristic. In this thesis we will use post-hoc optimization constraints and evaluate them under altered cost functions instead of the original cost function of the planning task. We show that such cost-altered post-hoc optimization constraints are also covered by the operator-counting framework and that it is possible to achieve improved heuristic estimates with them, compared with post-hoc optimization constraints under the original cost function. In our experiments we have not been able to achieve improved problem coverage, as we were not able to find a method for generating favorable cost functions that work well in all domains.

Heuristic forward search is the state-of-the-art approach to solve classical planning problems. On the other hand, bidirectional heuristic search has a lot of potential but was never able to deliver on those expectations in practice. Only recently the near-optimal bidirectional search algorithm (NBS) was introduced by Chen et al. and, as the name suggests, NBS expands nearly the optimal number of states to solve any search problem. This is a novel achievement and makes the NBS algorithm a very promising and efficient algorithm in search. With this premise in mind, we raise the question of how applicable NBS is to planning. In this thesis, we investigate this very question by implementing NBS in the state-of-the-art planner Fast Downward and analyse its performance on the benchmark of the latest international planning competition. We additionally implement fractional meet-in-the-middle and computeWVC to analyse NBS’ performance more thoroughly with regard to the structure of the problem task.

The conducted experiments show that NBS can successfully be applied to planning as it was able to consistently outperform A*. Especially good results were achieved on the domains: blocks, driverlog, floortile-opt11-strips, get-opt14-strips, logistics00, and termes-opt18-strips. Analysing these results, we deduce that the efficiency of forward and backward search depends heavily upon the underlying implicit structure of the transition system which is induced by the problem task. This suggests that bidirectional search is inherently more suited for certain problems. Furthermore, we find that this aptitude for a certain search direction correlates with the domain, thereby providing a powerful analytic tool to a priori derive the effectiveness of certain search approaches.

In conclusion, even without intricate improvements the NBS algorithm is able to compete with A*. It therefore has further potential for future research. Additionally, the underlying transition system of a problem instance is shown to be an important factor which influences the efficiency of certain search approaches. This knowledge could be valuable for devising portfolio planners.

Multiple Sequence Alignment (MSA) is the problem of aligning multiple biological sequences in the evolutionarily most plausible way. It can be viewed as a shortest path problem through an n-dimensional lattice. Because of its large branching factor of 2^n − 1, it has found broad attention in the artificial intelligence community. Finding a globally optimal solution for more than a few sequences requires sophisticated heuristics and bounding techniques in order to solve the problem in acceptable time and within memory limitations. In this thesis, we show how existing heuristics fall into the category of combining certain pattern databases. We combine arbitrary pattern collections that can be used as heuristic estimates and apply cost partitioning techniques from classical planning for MSA. We implement two of those heuristics for MSA and compare their estimates to the existing heuristics.
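To see where the branching factor of 2^n − 1 comes from, the following Python sketch enumerates the successors of a lattice node. It assumes the usual encoding in which a node is a tuple of positions, one per sequence, and each move advances a non-empty subset of the sequences by one symbol while the others receive a gap; the function name and the encoding are illustrative and not taken from the thesis.

```python
from itertools import product

def successors(position, lengths):
    """Yield lattice neighbours reached by advancing a non-empty subset of sequences."""
    n = len(position)
    for step in product((0, 1), repeat=n):
        if not any(step):
            continue  # the all-zero step would align nothing
        nxt = tuple(p + s for p, s in zip(position, step))
        # Stay inside the lattice: no sequence may advance past its end.
        if all(c <= length for c, length in zip(nxt, lengths)):
            yield nxt

# Three sequences of length 5: an interior node has 2**3 - 1 successors.
interior = list(successors((0, 0, 0), (5, 5, 5)))
```

Nodes on the boundary of the lattice have fewer successors, because sequences that are already fully consumed can no longer advance.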

Increasing Cost Tree Search is a promising approach to multi-agent pathfinding problems, but like all approaches it has to deal with a huge number of possible joint paths, growing exponentially with the number of agents. We explore the possibility of reducing this by introducing a value abstraction to the Multi-valued Decision Diagrams used to represent sets of joint paths. To that end we introduce a heat map to heuristically judge how collision-prone agent positions are and present how to use and possibly refine abstract positions in order to still find valid paths.

Estimating cheapest plan costs with the help of network flows is an established technique. Plans and network flows are already very similar; however, network flows can differ from plans in the presence of cycles. If a transition system contains cycles, flows might be composed of multiple disconnected parts. This discrepancy can make the cheapest plan estimation worse. One idea to get rid of the cycles works by introducing time steps. For every time step the states of a transition system are copied. Transitions are changed so that they connect states only with states of the next time step, which ensures that there are no cycles. It turned out that by applying this idea to multiple transition systems, network flows of the individual transition systems can be synchronized via the time steps to get a new kind of heuristic, which is also discussed in this thesis.
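The time-step construction can be sketched in a few lines of Python. This is only an illustration of the copying idea described above, under the assumption that a transition system is given as a list of states and a list of (source, label, target) triples; the function name and the fixed `horizon` parameter are hypothetical, and details of the thesis such as how the horizon is chosen are not modelled.

```python
def time_expand(states, transitions, horizon):
    """Copy every state once per time step and redirect transitions to the next layer."""
    layered_states = [(s, t) for t in range(horizon + 1) for s in states]
    layered_transitions = [
        ((src, t), label, (dst, t + 1))
        for t in range(horizon)
        for src, label, dst in transitions
    ]
    return layered_states, layered_transitions

# A two-state cycle a -> b -> a becomes acyclic after expansion.
states = ["a", "b"]
transitions = [("a", "go", "b"), ("b", "back", "a")]
layered_states, layered_transitions = time_expand(states, transitions, horizon=2)
```

Every transition in the result leads from layer t to layer t + 1, so no path can return to a node it has already visited.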

Probabilistic planning is a research field that became popular in the early 1990s. It aims at finding an optimal policy which maximizes the outcome of applying actions to states in an environment that features unpredictable events. Such environments can consist of a large number of states and actions, which makes finding an optimal policy intractable using classical methods. Using a heuristic function for a guided search allows for tackling such problems. Designing a domain-independent heuristic function requires complex algorithms which may be expensive when it comes to time and memory consumption.

In this thesis, we apply supervised learning techniques to learn two domain-independent heuristic functions. We use three types of gradient descent methods: stochastic, batch and mini-batch gradient descent and their improved versions using momentum, learning rate decay and early stopping. Furthermore, we apply the concept of feature combination in order to better learn the heuristic functions. The learned functions are provided to Prost, a domain-independent probabilistic planner, and benchmarked against the winning algorithms of the International Probabilistic Planning Competition held in 2014. The experiments show that learning an offline heuristic improves the overall score of the search for some of the domains used in the aforementioned competition.

The merge-and-shrink heuristic is a state-of-the-art admissible heuristic that is often used for optimal planning. Recent studies showed that the merge strategy is an important factor for the performance of the merge-and-shrink algorithm. There are many different merge strategies and improvements for merge strategies described in the literature. One of these merge strategies is MIASM by Fan et al. MIASM tries to merge transition systems that produce unnecessary states in their product which can be pruned. Another merge strategy is the symmetry-based merge-and-shrink framework by Sievers et al. This strategy tries to merge transition systems that cause factored symmetries in their product. It can be combined with other merge strategies, and it often improves their performance. However, the current combination of MIASM with factored symmetries performs worse than MIASM. We implement a different combination of MIASM that uses factored symmetries during the subset search of MIASM. Our experimental evaluation shows that our new combination of MIASM with factored symmetries solves more tasks than the existing MIASM and the previously implemented combination of MIASM with factored symmetries. We also evaluate different combinations of existing merge strategies that were not evaluated before and find combinations that perform better than their basic versions.

Tree Cache is a pathfinding algorithm that selects one vertex as a root and constructs a tree with cheapest paths to all other vertices. A path is found by traversing up the tree from both the start and goal vertices to the root and concatenating the two parts. This is fast, but as all paths constructed this way pass through the root vertex they can be highly suboptimal.

To improve this algorithm, we consider two simple approaches. The first is to construct multiple trees, and save the distance to each root in each vertex. To find a path, the algorithm first selects the root with the lowest total distance. The second approach is to remove redundant vertices, i.e. vertices that are between the root and the lowest common ancestor (LCA) of the start and goal vertices. The performance and space requirements of the resulting algorithm are then compared to the conceptually similar hub labels and differential heuristics.
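The basic Tree Cache lookup and the removal of redundant vertices above the LCA can be sketched as follows. This is a minimal illustration under assumptions: the graph encoding (a dictionary of neighbour costs), the use of Dijkstra's algorithm to build the tree, and all function names are chosen for the example and are not taken from the thesis. The multi-root variant is not shown.

```python
import heapq


def build_tree(graph, root):
    """Run Dijkstra from root and return the parent pointers of the
    cheapest-path tree (the root's parent is None)."""
    dist = {root: 0}
    parent = {root: None}
    queue = [(0, root)]
    while queue:
        d, u = heapq.heappop(queue)
        if d > dist[u]:
            continue  # outdated queue entry
        for v, cost in graph[u].items():
            nd = d + cost
            if v not in dist or nd < dist[v]:
                dist[v] = nd
                parent[v] = u
                heapq.heappush(queue, (nd, v))
    return parent


def path_to_root(parent, v):
    """Return the list [v, ..., root] by following parent pointers."""
    path = []
    while v is not None:
        path.append(v)
        v = parent[v]
    return path


def tree_path(parent, start, goal, trim_at_lca=True):
    up = path_to_root(parent, start)   # start ... root
    down = path_to_root(parent, goal)  # goal ... root
    if trim_at_lca:
        # Drop the shared vertices above the lowest common ancestor.
        while len(up) > 1 and len(down) > 1 and up[-2] == down[-2]:
            up.pop()
            down.pop()
    # Both lists end in the same vertex; append the goal branch reversed.
    return up + down[-2::-1]


# Hypothetical undirected graph: R - A, A - B, A - C, all with cost 1.
graph = {
    "R": {"A": 1},
    "A": {"R": 1, "B": 1, "C": 1},
    "B": {"A": 1},
    "C": {"A": 1},
}
parent = build_tree(graph, "R")
via_root = tree_path(parent, "B", "C", trim_at_lca=False)
trimmed = tree_path(parent, "B", "C")
```

In this example the untrimmed path from B to C detours through the root R, while the trimmed path turns around at the lowest common ancestor A.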

Greedy Best-First Search (GBFS) is a prominent search algorithm for finding solutions to planning tasks. GBFS chooses nodes for further expansion based on a distance-to-goal estimator, the heuristic. This makes GBFS highly dependent on the quality of the heuristic. Heuristics often face the problem of producing Uninformed Heuristic Regions (UHRs). GBFS additionally suffers from the possibility of simultaneously expanding nodes in multiple UHRs. In this thesis we change the search approach in UHRs. Since the heuristic is unable to guide the search there, we try to expand novel states to escape the UHRs. The novelty measures how “new” a state is in the search. The result is a combination of heuristic and novelty guided search, which is indeed able to escape UHRs more quickly and solve more problems in reasonable time.
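To give an idea of what a novelty test can look like, the sketch below treats a state as novel if it contains at least one fact that no previously checked state contained. This particular definition, the encoding of states as sets of (variable, value) pairs and the name `make_novelty_checker` are assumptions for illustration; the abstract does not state which novelty measure the thesis uses.

```python
def make_novelty_checker():
    """Return a function that reports whether a state contains a fact that
    has not appeared in any state checked before."""
    seen_facts = set()

    def is_novel(state):
        new_facts = set(state) - seen_facts
        seen_facts.update(new_facts)
        return bool(new_facts)

    return is_novel


is_novel = make_novelty_checker()
first = is_novel({("x", 0), ("y", 0)})    # every fact is new
repeat = is_novel({("x", 0), ("y", 0)})   # nothing new
partial = is_novel({("x", 1), ("y", 0)})  # ("x", 1) is new
```

A search could use such a test inside a UHR to prefer states that are reported as novel, since the heuristic values there do not distinguish between candidates.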

In classical AI planning, the state explosion problem is a recurring subject: although the problem descriptions are compact, often a huge number of states needs to be considered. One way to tackle this problem is to use static pruning methods which reduce the number of variables and operators in the problem description before planning.

In this work, we discuss the properties and limitations of three existing static pruning techniques with a focus on satisficing planning. We analyse these pruning techniques and their combinations, and identify synergy effects between them and the domains and problem structures in which they occur. We implement the three methods into an existing propositional planner, and evaluate the performance of different configurations and combinations in a set of experiments on IPC benchmarks. We observe that static pruning techniques can increase the number of solved problems, and that the synergy effects of the combinations also occur on IPC benchmarks, although they do not lead to a major performance increase.

The goal of classical domain-independent planning is to find a sequence of actions that leads from a given initial state to a goal state that satisfies some goal criteria. Most planning systems use heuristic search algorithms to find such a sequence of actions. A critical part of heuristic search is the heuristic function. In order to find a sequence of actions from an initial state to a goal state efficiently, this heuristic function has to guide the search towards the goal. It is difficult to create such an efficient heuristic function. Arfaee et al. show that it is possible to improve a given heuristic function by applying machine learning techniques on a single domain in the context of heuristic search. To achieve this improvement of the heuristic function, they propose a bootstrap learning approach which successively improves the heuristic function.

In this thesis we introduce a technique to learn heuristic functions that can be used in classical domain-independent planning, based on the bootstrap learning approach introduced by Arfaee et al. In order to evaluate the performance of the learned heuristic functions, we have implemented a learning algorithm for the Fast Downward planning system. The experiments have shown that a learned heuristic function generally decreases the number of explored states compared to blind search. The total time to solve a single problem increases, because the heuristic function has to be learned before it can be applied.

The ability to solve benchmark problems is essential for estimating the performance of an algorithm in satisficing planning. Published results cannot be compared directly, as they originate from different implementations and different machines. We implemented some of the most promising algorithms for greedy best-first search published in recent years and evaluated them on the same set of benchmarks. All algorithms are based on randomised search, localised search or a combination of both. Our evaluation demonstrates the potential of those algorithms.

Heuristic search with admissible heuristics is the leading approach to cost-optimal, domain-independent planning. Pattern database heuristics - a type of abstraction heuristics - are state-of-the-art admissible heuristics. Two recent pattern database heuristics are the iPDB heuristic by Haslum et al. and the PhO heuristic by Pommerening et al.

The iPDB procedure performs a hill climbing search in the space of pattern collections and evaluates selected patterns using the canonical heuristic. We apply different techniques to the iPDB procedure, improving its hill climbing algorithm as well as the quality of the resulting heuristic. The second recent heuristic - the PhO heuristic - obtains strong heuristic values through linear programming. We present different techniques to influence and improve on the PhO heuristic.

We evaluate the modified iPDB and PhO heuristics on the IPC benchmark suite and show that these abstraction heuristics can compete with other state-of-the-art heuristics in cost-optimal, domain-independent planning.

Greedy best-first search (GBFS) is a prominent search algorithm for satisficing planning - finding good enough solutions to a planning task in reasonable time. GBFS selects the next node to consider by choosing the node that a heuristic function estimates to be most promising. However, this behaviour makes GBFS depend heavily on the quality of the heuristic estimator. Inaccurate heuristics can lead GBFS into regions far away from a goal. Additionally, if the heuristic ranks several nodes the same, GBFS has no information on which node it should follow. Diverse best-first search (DBFS) is a new algorithm by Imai and Kishimoto [2011] which has a local search component to emphasise exploitation. To enable exploration, DBFS uses probabilities to select the next node.

In two problem domains, we analyse GBFS' search behaviour and present theoretical results. We evaluate these results empirically and compare DBFS and GBFS on constructed as well as on provided problem instances.
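For reference, the node selection of GBFS can be sketched in a few lines. This is a generic textbook-style sketch, not the implementation analysed in the thesis; the toy state space, the insertion-order tie-breaking and the function names are assumptions for the example. DBFS is not shown.

```python
import heapq
from itertools import count


def gbfs(start, is_goal, successors, h):
    """Greedy best-first search: always expand the open node with the lowest
    heuristic value. Ties are broken by insertion order (an assumption)."""
    tie = count()
    open_list = [(h(start), next(tie), start)]
    parent = {start: None}
    while open_list:
        _, _, state = heapq.heappop(open_list)
        if is_goal(state):
            # Reconstruct the path by following parent pointers.
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                heapq.heappush(open_list, (h(nxt), next(tie), nxt))
    return None  # no goal reachable


# Toy task: reach 10 from 0 using the operations "+1" and "*2".
GOAL = 10
path = gbfs(
    start=0,
    is_goal=lambda n: n == GOAL,
    successors=lambda n: [m for m in (n + 1, n * 2) if m <= GOAL],
    h=lambda n: GOAL - n,
)
```

Because only the heuristic value decides which node is expanded next, a misleading heuristic or many nodes with equal values leaves the search without guidance, which is the behaviour discussed above.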

State-of-the-art planning systems use a variety of control knowledge in order to enhance the performance of heuristic search. Unfortunately most forms of control knowledge use a specific formalism which makes them hard to combine. There have been several approaches which describe control knowledge in Linear Temporal Logic (LTL). We build upon this work and propose a general framework for encoding control knowledge in LTL formulas. The framework includes a criterion that any LTL formula used in it must fulfill in order to preserve optimal plans when used for pruning the search space; this way the validity of new LTL formulas describing control knowledge can be checked. The framework is implemented on top of the Fast Downward planning system and is tested with a pruning technique called Unnecessary Action Application, which detects if a previously applied action achieved no useful progress.

Landmarks are known to be usable for powerful heuristics in informed search. In this thesis, we explain and evaluate a novel algorithm to find ordered landmarks of delete-free tasks by intersecting solutions in the relaxation. The proposed algorithm efficiently finds landmarks and natural orders of delete-free tasks, such as delete relaxations or Pi-m compilations.

Planning as heuristic search is the prevalent technique for solving planning problems in any kind of domain. Heuristics estimate distances to goal states in order to guide a search through large state spaces. However, this guidance is sometimes limited, since many states still lie on plateaus of equally prioritized states in the search space topology. Additional techniques that ignore or prefer some actions when solving a problem successfully support the search in such situations. Nevertheless, some action pruning techniques lead to incomplete searches.

We propose an under-approximation refinement framework for adding actions to under-approximations of planning tasks during a search in order to find a plan. For this framework, we develop a refinement strategy. Starting a search on an initial under-approximation of a planning task, the strategy adds actions determined at states close to a goal, whenever the search does not progress towards a goal, until a plan is found. Key elements of this strategy consider helpful actions and relaxed plans for refinements. We have implemented the under-approximation refinement framework into the greedy best first search algorithm. Our results show considerable speedups for many classical planning problems. Moreover, we are able to plan with fewer actions than standard greedy best first search.

The main approach for classical planning is heuristic search. Many cost heuristics are based on the delete relaxation. The optimal heuristic of a delete-free planning problem is called h+. This thesis explores two new ways to compute h+. Both approaches use factored planning, which decomposes the original planning problem to work on each subproblem separately. The algorithm reuses the subsolutions and combines them into a global solution.

The two algorithms are used to compute a cost heuristic for an A* search. As both approaches compute the optimal heuristic for delete-free planning tasks, the algorithms can also be used to find a solution for relaxed planning tasks.

Multi-Agent-Path-Finding (MAPF) is a common problem in robotics and memory management. Pebbles in Motion is an implementation of a polynomial-time problem solver for MAPF, based on a work by Daniel Kornhauser from 1984. Recently, many research papers on MAPF have been published in the Artificial Intelligence research community, but the work by Kornhauser hardly seems to be taken into account. We assumed that this might be related to the fact that said paper is rather mathematical and hardly describes algorithms intuitively. This work aims at filling this gap by providing an easily understandable description of the implementation steps for programmers and a new detailed description for researchers in Computer Science.

Bachelor's theses

Fast Downward is a classical planner using heuristic search. The planner uses many advanced planning techniques that are not easy to teach, since they usually rely on complex data structures. To introduce planning techniques to the user, an interactive application is created. This application uses an illustrative example to showcase planning techniques: Blocksworld.

Blocksworld is an easily understandable planning problem which allows a simple representation of a state space. It is implemented in the Unreal Engine and provides an interface to the Fast Downward planner. Users can explore a state space themselves or have Fast Downward generate plans for them. The concept of heuristics as well as the state space are explained and made accessible to the user. The user experiences how the planner explores a state space and which techniques the planner uses.

This thesis is about implementing Jussi Rintanen’s algorithm for schematic invariants. The algorithm is implemented in the planning tool Fast Downward and refers to Rintanen’s paper Schematic Invariants by Reduction to Ground Invariants. The thesis describes all necessary definitions to understand the algorithm and draws a comparison between the original task and a reduced task in terms of runtime and number of grounded actions.