LITERATURE REVIEW SOFTWARE FOR BETTER RESEARCH

“This tool really helped me to create good bibtex references for my research papers”

Ali Mohammed-Djafari

Director of Research at LSS-CNRS, France

“Any researcher could use it! The paper recommendations are great for anyone and everyone”

Swansea University, Wales

“As a student just venturing into the world of lit reviews, this is a tool that is outstanding and helping me find deeper results for my work.”

Franklin Jeffers

South Oregon University, USA

“One of the 3 most promising tools that (1) do not solely rely on keywords, (2) does nice visualizations, (3) is easy to use”

Singapore Management University

“Incredibly useful tool to get to know more literature, and to gain insight in existing research”

KU Leuven, Belgium

“Seeing my literature list as a network enhances my thinking process!”

Katholieke Universiteit Leuven, Belgium

“I can’t live without you anymore! I also recommend you to my students.”

Professor at The Chinese University of Hong Kong

“This has helped me so much in researching the literature. Currently, I am beginning to investigate new fields and this has helped me hugely”

Aran Warren

Canterbury University, NZ

“It's nice to get a quick overview of related literature. Really easy to use, and it helps getting on top of the often complicated structures of referencing”

Christoph Ludwig

Technische Universität Dresden, Germany

“Litmaps is extremely helpful with my research. It helps me organize each one of my projects and see how they relate to each other, as well as to keep up to date on publications done in my field”

Daniel Fuller

Clarkson University, USA

“Litmaps is a game changer for finding novel literature... it has been invaluable for my productivity.... I also got my PhD student to use it and they also found it invaluable, finding several gaps they missed”

Varun Venkatesh

Austin Health, Australia

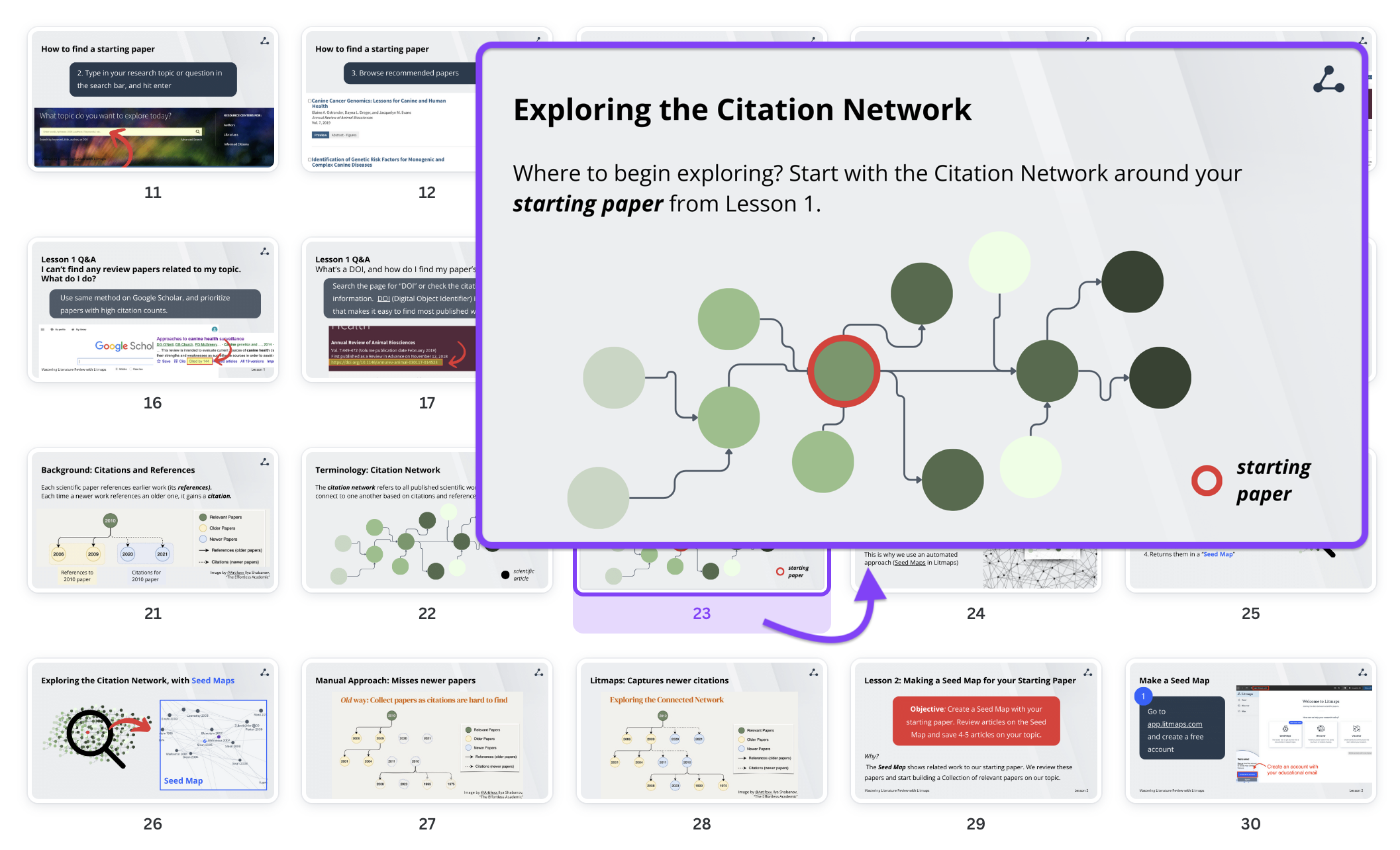

10 Best Literature Review Tools for Researchers

This post may contain affiliate links that allow us to earn a commission at no expense to you.

Boost your research game with these Best Literature Review Tools for Researchers! Uncover hidden gems, organize your findings, and ace your next research paper!

Conducting literature reviews poses challenges for researchers due to the overwhelming volume of information available and the lack of efficient methods to manage and analyze it.

Researchers struggle to identify key sources, extract relevant information, and maintain accuracy while manually conducting literature reviews. This leads to inefficiency, errors, and difficulty in identifying gaps or trends in existing literature.

Advancements in technology have resulted in a variety of literature review tools. These tools streamline the process, offering features like automated searching, filtering, citation management, and research data extraction. They save time, improve accuracy, and provide valuable insights for researchers.

In this article, we present a curated list of the 10 best literature review tools, empowering researchers to make informed choices and revolutionize their systematic literature review process.

Top 10 Literature Review Tools for Researchers: In A Nutshell (2023)

#1. Semantic Scholar – A free, AI-powered research tool for scientific literature

Semantic Scholar is a cutting-edge literature review tool that researchers rely on for its comprehensive access to academic publications. With its advanced AI algorithms and extensive database, it simplifies the discovery of relevant research papers.

By employing semantic analysis, users can explore scholarly articles based on context and meaning, making it a go-to resource for scholars across disciplines.

Additionally, Semantic Scholar offers personalized recommendations and alerts, ensuring researchers stay updated with the latest developments. However, users should be cautious of potential limitations.

Not all scholarly content may be indexed, and occasional false positives or inaccurate associations can occur. Furthermore, the tool primarily focuses on computer science and related fields, potentially limiting coverage in other disciplines.

Researchers should be mindful of these considerations and supplement Semantic Scholar with other reputable resources for a comprehensive literature review. Despite these caveats, Semantic Scholar remains a valuable tool for streamlining research and staying informed.

#2. Elicit – Research assistant using language models like GPT-3

Elicit is a game-changing literature review tool that has gained popularity among researchers worldwide. With its user-friendly interface and extensive database of scholarly articles, it streamlines the research process, saving time and effort.

The tool employs advanced algorithms to provide personalized recommendations, ensuring researchers discover the most relevant studies for their field. Elicit also promotes collaboration by enabling users to create shared folders and annotate articles.

However, users should be cautious when using Elicit. It is important to verify the credibility and accuracy of the sources found through the tool, as the database encompasses a wide range of publications.

Additionally, occasional glitches in the search function have been reported, leading to incomplete or inaccurate results. While Elicit offers tremendous benefits, researchers should remain vigilant and cross-reference information to ensure a comprehensive literature review.

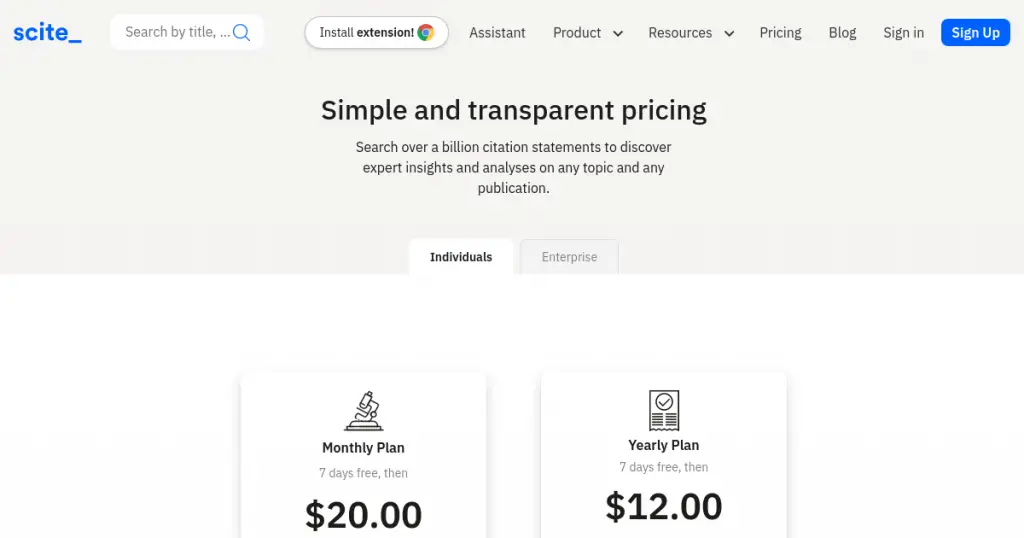

#3. Scite.Ai – Your personal research assistant

Scite.Ai is a popular literature review tool that revolutionizes the research process for scholars. With its innovative citation analysis feature, researchers can evaluate the credibility and impact of scientific articles, making informed decisions about their inclusion in their own work.

By assessing the context in which citations are used, Scite.Ai ensures that the sources selected are reliable and of high quality, enabling researchers to establish a strong foundation for their research.

However, while Scite.Ai offers numerous advantages, there are a few aspects to be cautious about. As with any data-driven tool, occasional errors or inaccuracies may arise, necessitating researchers to cross-reference and verify results with other reputable sources.

Moreover, Scite.Ai’s coverage may be limited in certain subject areas and languages, with a possibility of missing relevant studies, especially in niche fields or non-English publications.

Therefore, researchers should supplement the use of Scite.Ai with additional resources to ensure comprehensive literature coverage and avoid any potential gaps in their research.

Scite.Ai offers the following paid plans:

- Monthly Plan: $20

- Yearly Plan: $12

#4. DistillerSR – Literature Review Software

DistillerSR is a powerful literature review tool trusted by researchers for its user-friendly interface and robust features. With its advanced search capabilities, researchers can quickly find relevant studies from multiple databases, saving time and effort.

The tool offers comprehensive screening and data extraction functionalities, streamlining the review process and improving the reliability of findings. Real-time collaboration features also facilitate seamless teamwork among researchers.

While DistillerSR offers numerous advantages, there are a few considerations. Users should invest time in understanding the tool’s features and functionalities to maximize its potential. Additionally, the pricing structure may be a factor for individual researchers or small teams with limited budgets.

Despite occasional technical glitches reported by some users, the developers actively address these issues through updates and improvements, ensuring a better user experience.

Overall, DistillerSR empowers researchers to navigate the vast sea of information, enhancing the quality and efficiency of literature reviews while fostering collaboration among research teams.

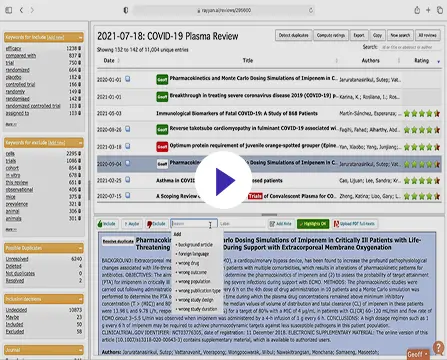

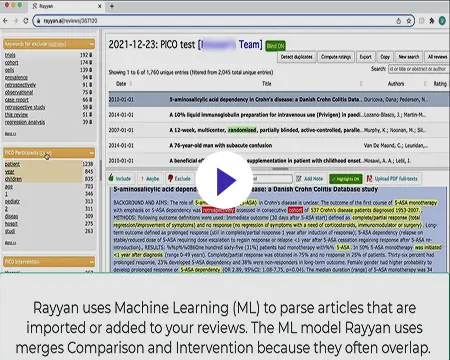

#5. Rayyan – AI Powered Tool for Systematic Literature Reviews

Rayyan is a powerful literature review tool that simplifies the research process for scholars and academics. With its user-friendly interface and efficient management features, Rayyan is highly regarded by researchers worldwide.

It allows users to import and organize large volumes of scholarly articles, making it easier to identify relevant studies for their research projects. The tool also facilitates seamless collaboration among team members, enhancing productivity and streamlining the research workflow.

However, it’s important to be aware of a few aspects. The free version of Rayyan has limitations, and upgrading to a premium subscription may be necessary for additional functionalities.

Users should also be mindful of occasional technical glitches and compatibility issues, promptly reporting any problems. Despite these considerations, Rayyan remains a valuable asset for researchers, providing an effective solution for literature review tasks.

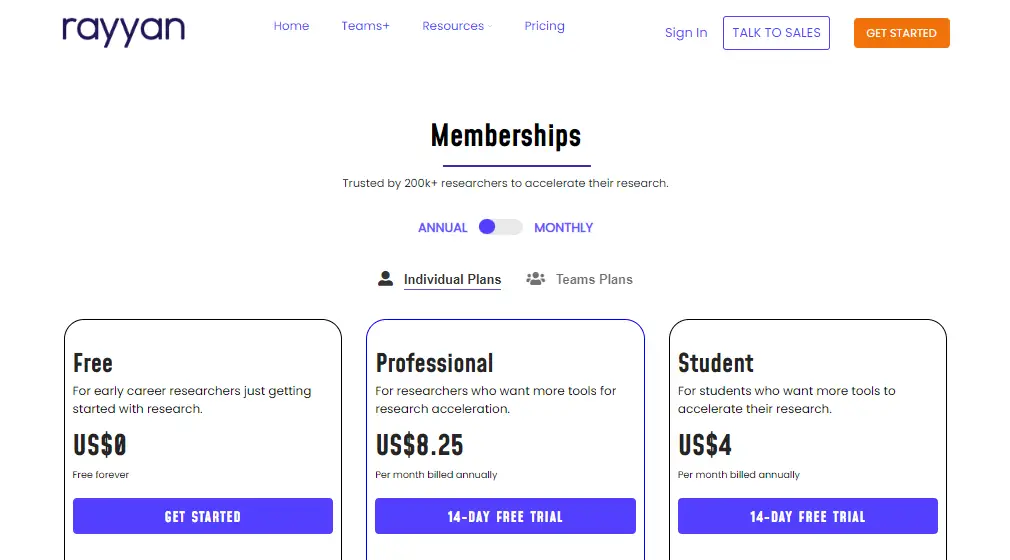

Rayyan offers both free and paid plans:

- Professional: $8.25/month

- Student: $4/month

- Pro Team: $8.25/month

- Team+: $24.99/month

#6. Consensus – Use AI to find you answers in scientific research

Consensus is a cutting-edge literature review tool that has become a go-to choice for researchers worldwide. Its intuitive interface and powerful capabilities make it a preferred tool for navigating and analyzing scholarly articles.

With Consensus, researchers can save significant time by efficiently organizing and accessing relevant research material. Researchers choose Consensus for several reasons.

Its advanced search algorithms and filters help researchers sift through vast amounts of information, ensuring they focus on the most relevant articles. By streamlining the literature review process, Consensus allows researchers to extract valuable insights and accelerate their research progress.

However, there are a few factors to watch out for when using Consensus. As with any automated tool, researchers should exercise caution and independently verify the accuracy and relevance of the generated results. Complex or niche topics may present challenges, resulting in limited search results. Researchers should also supplement Consensus with manual searches to ensure comprehensive coverage of the literature.

Overall, Consensus is a valuable resource for researchers seeking to optimize their literature review process. By leveraging its features alongside critical thinking and manual searches, researchers can enhance the efficiency and effectiveness of their work, advancing their research endeavors to new heights.

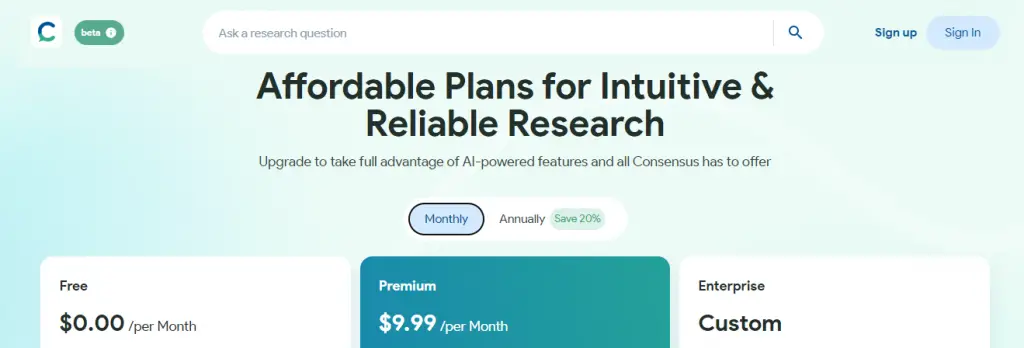

Consensus offers both free and paid plans:

- Premium: $9.99/month

- Enterprise: Custom

#7. RAx – AI-powered reading assistant

RAx is a revolutionary literature review tool that has transformed the research process for scholars worldwide. With its user-friendly interface and advanced features, it offers a vast database of academic publications across various disciplines, providing access to relevant and up-to-date literature.

Using advanced algorithms and machine learning, RAx delivers personalized recommendations, saving researchers time and effort in their literature search.

However, researchers should be cautious of potential biases in the recommendation system and supplement their search with manual verification to ensure a comprehensive review.

Additionally, occasional inaccuracies in metadata have been reported, making it essential for users to cross-reference information with reliable sources. Despite these considerations, RAx remains an invaluable tool for enhancing the efficiency and quality of literature reviews.

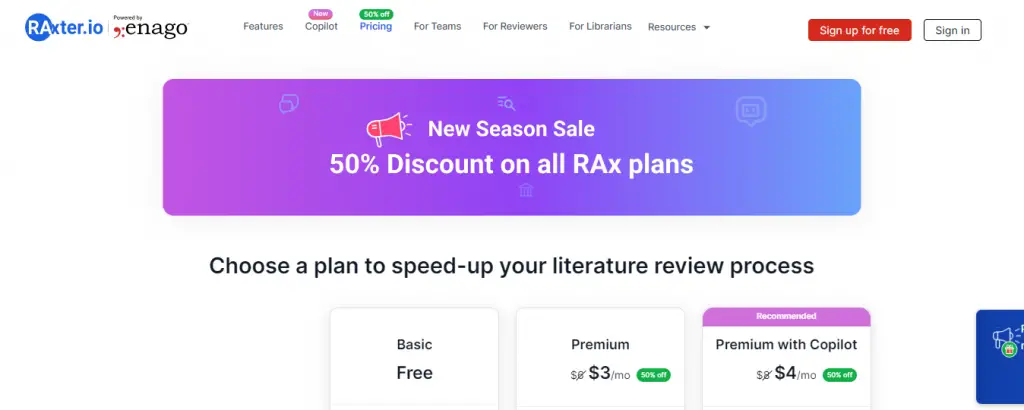

RAx offers both free and paid plans, with a 50% discount on paid plans as of July 2023:

- Premium: $3/month (regular price $6/month)

- Premium with Copilot: $4/month (regular price $8/month)

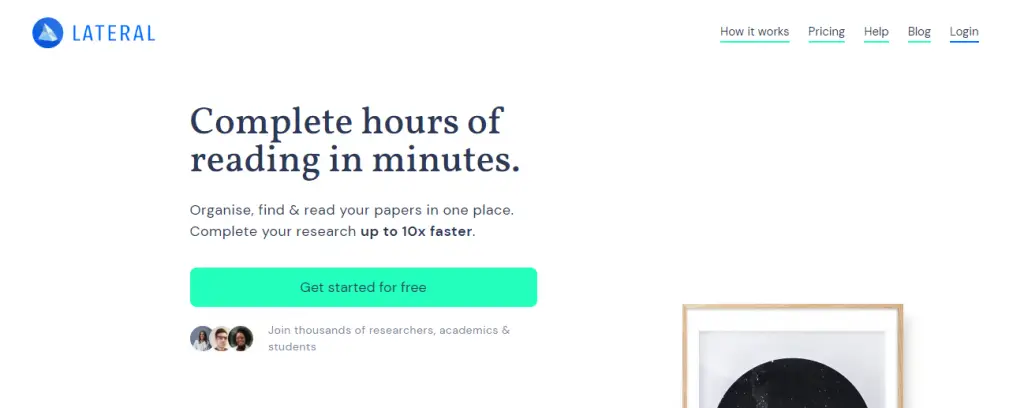

#8. Lateral – Advance your research with AI

“Lateral” is a revolutionary literature review tool trusted by researchers worldwide. With its user-friendly interface and powerful search capabilities, it simplifies the process of gathering and analyzing scholarly articles.

By leveraging advanced algorithms and machine learning, Lateral saves researchers precious time by retrieving relevant articles and uncovering new connections between them, fostering interdisciplinary exploration.

While Lateral provides numerous benefits, users should exercise caution. It is advisable to cross-reference its findings with other sources to ensure a comprehensive review.

Additionally, researchers must be mindful of potential biases introduced by the tool’s algorithms and should critically evaluate and interpret the results.

Despite these considerations, Lateral remains an indispensable resource, empowering researchers to delve deeper into their fields of study and make valuable contributions to the academic community.

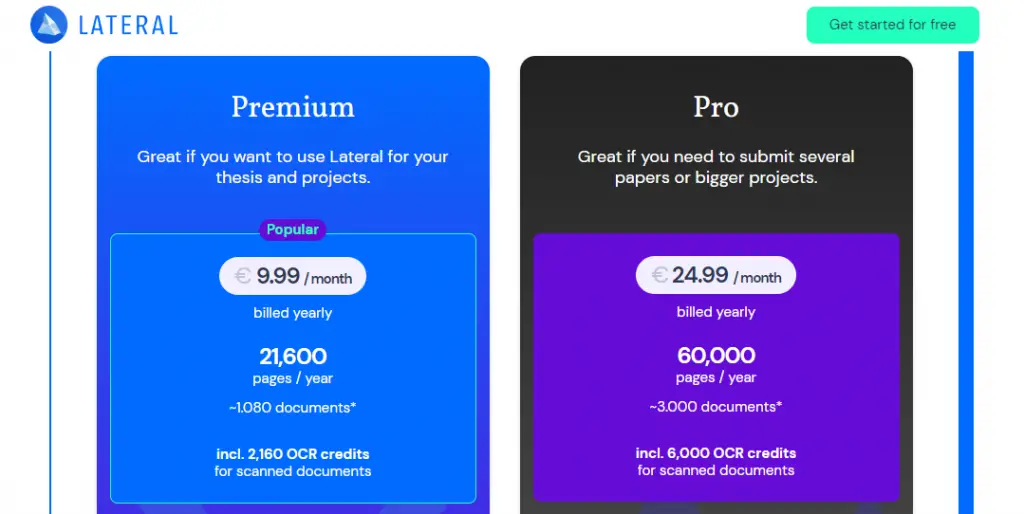

Lateral offers both free and paid plans:

- Premium: $10.98

- Pro: $27.46

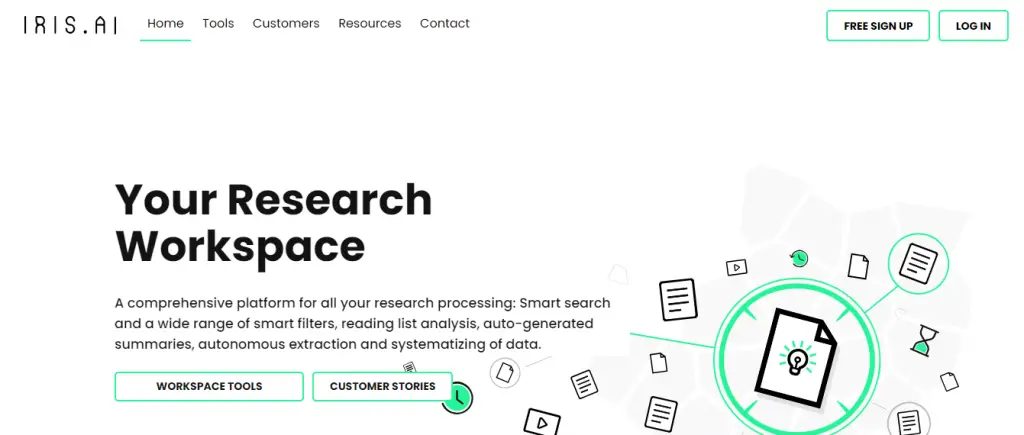

#9. Iris AI – Introducing the researcher workspace

Iris AI is an innovative literature review tool that has transformed the research process for academics and scholars. With its advanced artificial intelligence capabilities, Iris AI offers a seamless and efficient way to navigate through a vast array of academic papers and publications.

Researchers are drawn to this tool because it saves valuable time by automating the tedious task of literature review and provides comprehensive coverage across multiple disciplines.

Its intelligent recommendation system suggests related articles, enabling researchers to discover hidden connections and broaden their knowledge base. However, caution should be exercised while using Iris AI.

While the tool excels at surfacing relevant papers, researchers should independently evaluate the quality and validity of the sources to ensure the reliability of their work.

It’s important to note that Iris AI may occasionally miss niche or lesser-known publications, necessitating a supplementary search using traditional methods.

Additionally, being an algorithm-based tool, there is a possibility of false positives or missed relevant articles due to the inherent limitations of automated text analysis. Nevertheless, Iris AI remains an invaluable asset for researchers, enhancing the quality and efficiency of their research endeavors.

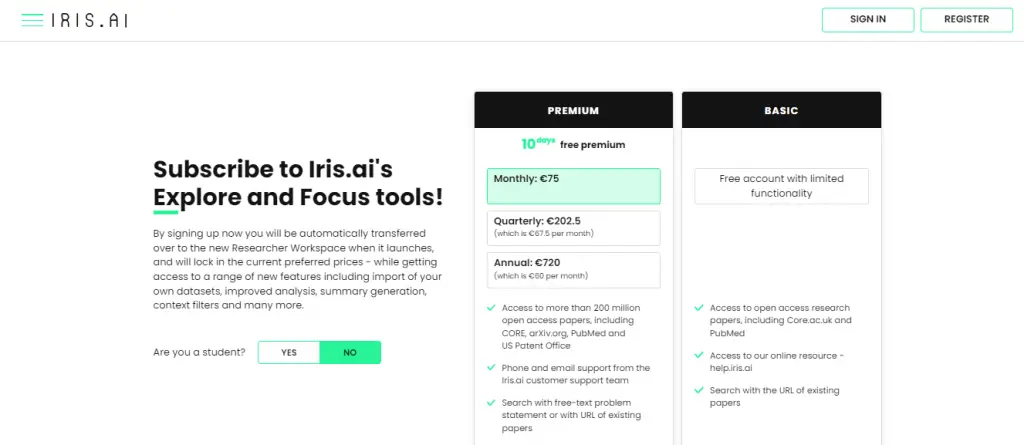

Iris AI offers different pricing plans to cater to various user needs:

- Basic: Free

- Premium: Monthly ($82.41), Quarterly ($222.49), and Annual ($791.07)

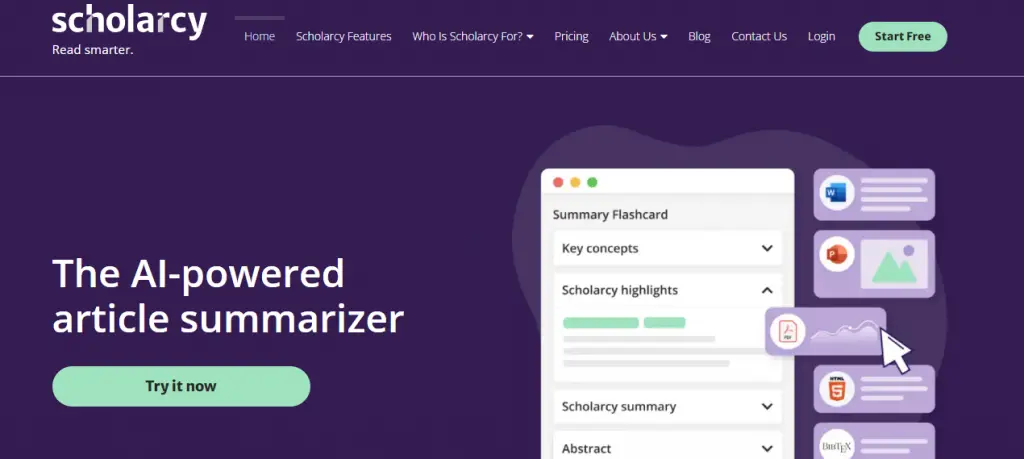

#10. Scholarcy – Summarize your literature through AI

Scholarcy is a powerful literature review tool that helps researchers streamline their work. By employing advanced algorithms and natural language processing, it efficiently analyzes and summarizes academic papers, saving researchers valuable time.

Scholarcy’s ability to extract key information and generate concise summaries makes it an attractive option for scholars looking to quickly grasp the main concepts and findings of multiple papers.

However, it is important to exercise caution when relying solely on Scholarcy. While it provides a useful starting point, engaging with the original research papers is crucial to ensure a comprehensive understanding.

Scholarcy’s automated summarization may not capture the nuanced interpretations or contextual information presented in the full text.

Researchers should also be aware that certain types of documents, particularly those with heavy mathematical or technical content, may pose challenges for the tool.

Despite these considerations, Scholarcy remains a valuable resource for researchers seeking to enhance their literature review process and improve overall efficiency.

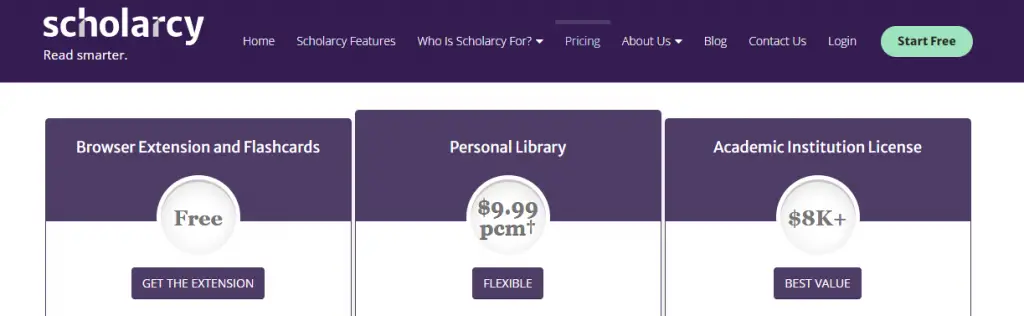

Scholarcy offers the following pricing plans:

- Browser Extension and Flashcards: Free

- Personal Library: $9.99

- Academic Institution License: $8K+

Final Thoughts

In conclusion, conducting a comprehensive literature review is a crucial aspect of any research project, and the availability of reliable and efficient tools can greatly facilitate this process for researchers. This article has explored the top 10 literature review tools that have gained popularity among researchers.

Moreover, the rise of AI-powered tools like Iris AI and Scite.Ai promises to revolutionize the literature review process by automating various tasks and enhancing research efficiency.

Ultimately, the choice of literature review tool depends on individual preferences and research needs, but the tools presented in this article serve as valuable resources to enhance the quality and productivity of research endeavors.

Researchers are encouraged to explore and utilize these tools to stay at the forefront of knowledge in their respective fields and contribute to the advancement of science and academia.

Q1. What are literature review tools for researchers?

Literature review tools for researchers are software or online platforms designed to assist researchers in efficiently conducting literature reviews. These tools help researchers find, organize, analyze, and synthesize relevant academic papers and other sources of information.

Q2. What criteria should researchers consider when choosing literature review tools?

When choosing literature review tools, researchers should consider factors such as the tool’s search capabilities, database coverage, user interface, collaboration features, citation management, annotation and highlighting options, integration with reference management software, and data extraction capabilities.

It’s also essential to consider the tool’s accessibility, cost, and technical support.

Q3. Are there any literature review tools specifically designed for systematic reviews or meta-analyses?

Yes, there are literature review tools that cater specifically to systematic reviews and meta-analyses, which involve a rigorous and structured approach to reviewing existing literature. These tools often provide features tailored to the specific needs of these methodologies, such as:

Screening and eligibility assessment: Systematic review tools typically offer functionalities for screening and assessing the eligibility of studies based on predefined inclusion and exclusion criteria. This streamlines the process of selecting relevant studies for analysis.

Data extraction and quality assessment: These tools often include templates and forms to facilitate data extraction from selected studies. Additionally, they may provide features for assessing the quality and risk of bias in individual studies.

Meta-analysis support: Some literature review tools include statistical analysis features that assist in conducting meta-analyses. These features can help calculate effect sizes, perform statistical tests, and generate forest plots or other visual representations of the meta-analytic results.

Reporting assistance: Many tools provide templates or frameworks for generating systematic review reports, ensuring compliance with established guidelines such as PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses).
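To make the meta-analysis support described above more concrete, here is a minimal sketch of the kind of calculation such a feature might perform: pooling effect sizes with inverse-variance (fixed-effect) weights. It is not taken from any of the tools listed here; the function name `fixed_effect_pool` and the three sample studies are hypothetical, and real tools typically also offer random-effects models and heterogeneity statistics.

```python
import math


def fixed_effect_pool(effects, variances):
    """Pool study effect sizes using inverse-variance (fixed-effect) weights.

    Returns the pooled effect, its standard error, and an approximate
    95% confidence interval (normal approximation, z = 1.96).
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("need at least one study and one variance per effect")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # Each study is weighted by the inverse of its variance,
    # so more precise studies contribute more to the pooled estimate.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)

    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    ci = (pooled - 1.96 * standard_error, pooled + 1.96 * standard_error)
    return pooled, standard_error, ci


# Hypothetical effect sizes and variances for three studies.
effects = [0.2, 0.5, 0.8]
variances = [0.04, 0.01, 0.04]

pooled, standard_error, ci = fixed_effect_pool(effects, variances)
print(f"pooled effect = {pooled:.3f}, SE = {standard_error:.3f}")
print(f"95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

The pooled estimate and its interval are the values a forest plot would draw as the summary row beneath the individual studies.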

Q4. Can literature review tools help with organizing and annotating collected references?

Yes, literature review tools often come equipped with features to help researchers organize and annotate collected references. Some common functionalities include:

Reference management: These tools enable researchers to import references from various sources, such as databases or PDF files, and store them in a central library. They typically allow you to create folders or tags to organize references based on themes or categories.

Annotation capabilities: Many tools provide options for adding annotations, comments, or tags to individual references or specific sections of research articles. This helps researchers keep track of important information, highlight key findings, or note potential connections between different sources.

Full-text search: Literature review tools often offer full-text search functionality, allowing you to search within the content of imported articles or documents. This can be particularly useful when you need to locate specific information or keywords across multiple references.

Integration with citation managers: Some literature review tools integrate with popular citation managers like Zotero, Mendeley, or EndNote, allowing seamless transfer of references and annotations between platforms.

By leveraging these features, researchers can streamline the organization and annotation of their collected references, making it easier to retrieve relevant information during the literature review process.
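As a rough illustration of the features listed above (a central library, tags, annotations, and full-text search), the following sketch models them with a small in-memory structure. The `Reference` and `Library` classes and the sample entries are hypothetical and do not reflect how any particular tool stores its data.

```python
from dataclasses import dataclass, field


@dataclass
class Reference:
    key: str                       # short citation key, e.g. "doe2020"
    title: str
    text: str = ""                 # extracted full text, if available
    tags: set = field(default_factory=set)
    notes: list = field(default_factory=list)


class Library:
    """A tiny central library of references, keyed by citation key."""

    def __init__(self):
        self._refs = {}

    def add(self, ref):
        self._refs[ref.key] = ref

    def tag(self, key, *tags):
        self._refs[key].tags.update(tags)

    def annotate(self, key, note):
        self._refs[key].notes.append(note)

    def by_tag(self, tag):
        """Return the keys of all references carrying the given tag."""
        return sorted(r.key for r in self._refs.values() if tag in r.tags)

    def search(self, term):
        """Case-insensitive substring search over titles and full text."""
        needle = term.lower()
        return sorted(
            r.key
            for r in self._refs.values()
            if needle in r.title.lower() or needle in r.text.lower()
        )


# Hypothetical entries, for illustration only.
library = Library()
library.add(Reference("doe2020", "Screening methods for systematic reviews",
                      text="We compare manual and automated screening."))
library.add(Reference("roe2021", "Citation networks in practice",
                      text="Network views can reveal gaps in a reading list."))

library.tag("doe2020", "methods", "screening")
library.tag("roe2021", "methods")
library.annotate("roe2021", "Follow up on the gap analysis in section 3.")

print(library.by_tag("methods"))    # keys tagged "methods"
print(library.search("screening"))  # keys whose title or text mention the term
```

A real tool would persist the library and index the text for faster search, but the organizing ideas are the same.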

We maintain and update science journals and scientific metrics. Scientific metrics data are aggregated from publicly available sources. Please note that we do NOT publish research papers on this platform. We do NOT accept any manuscript.

2012-2024 © scijournal.org

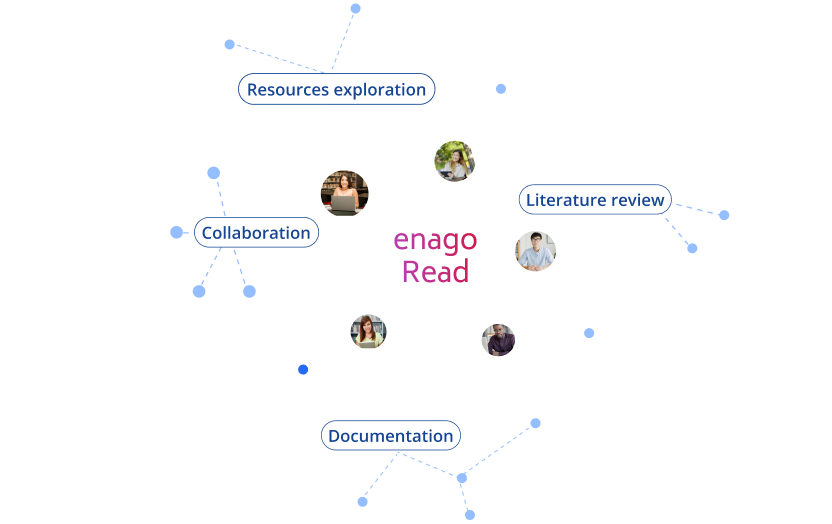

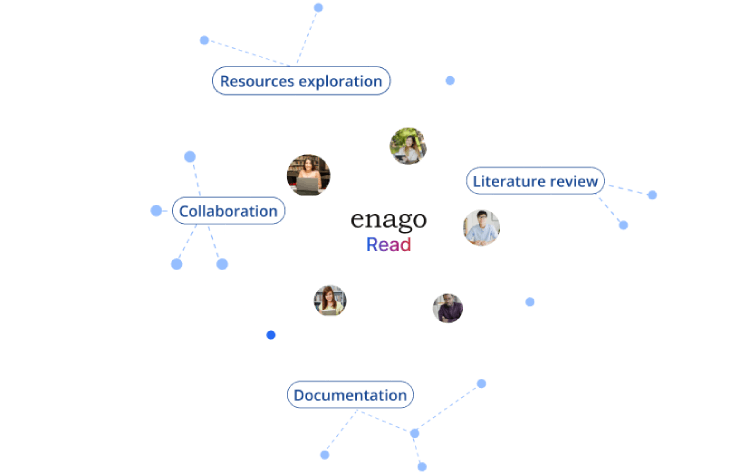

Your all in one AI-powered Reading Assistant

A Reading Space to Ideate, Create Knowledge, & Collaborate on Your Research

- Smartly organize your research

- Receive recommendations that cannot be ignored

- Collaborate with your team to read, discuss, and share knowledge

From Surface-Level Exploration to Critical Reading - All in One Place!

Fine-tune your literature search.

Our AI-powered reading assistant saves time spent on the exploration of relevant resources and allows you to focus more on reading.

Select phrases or specific sections and explore more research papers related to the core aspects of your selections. Pin the useful ones for future references.

Our platform brings you the latest research news, online courses, and articles from magazines/blogs related to your research interests and project work.

Speed up your literature review

Quickly generate a summary of key sections of any paper with our summarizer.

Make informed decisions about which papers are relevant, and where to invest your time in further reading.

Get key insights from the paper, quickly comprehend the paper’s unique approach, and recall the key points.

Bring order to your research projects

Organize your reading lists into different projects and maintain the context of your research.

Quickly sort items into collections and tag or filter them according to keywords and color codes.

Experience the power of sharing by finding all the shared literature in one place.

Decode papers effortlessly for faster comprehension

Highlight what is important so that you can retrieve it faster next time

Find Wikipedia explanations for any selected word or phrase

Save time in finding similar ideas across your projects

Collaborate to read with your team, professors, or students

Share and discuss literature and drafts with your study group, colleagues, experts, and advisors. Recommend valuable resources and help each other for better understanding.

Work in shared projects efficiently and improve visibility within your study group or lab members.

Keep track of your team's progress by being constantly connected and engaging in active knowledge transfer by requesting full access to relevant papers and drafts.

Find Papers From Across the World's Largest Repositories

Privacy and security of your research data are integral to our mission.

Everything you add or create on Enago Read is private by default. It is visible only if and when you share it with other users.

You can put a Creative Commons license on original drafts to protect your IP. For shared files, Enago Read always maintains a copy in case of deletion by collaborators or revoked access.

We use state-of-the-art security protocols and algorithms including MD5 Encryption, SSL, and HTTPS to secure your data.

7 open source tools to make literature reviews easy

Opensource.com

A good literature review is critical for academic research in any field, whether it is for a research article, a critical review for coursework, or a dissertation. In a recent article, I presented detailed steps for doing a literature review using open source software.

The following is a brief summary of seven free and open source software tools described in that article that will make your next literature review much easier.

1. GNU Linux

Most literature reviews are accomplished by graduate students working in research labs in universities. For absurd reasons, graduate students often have the worst computers on campus. They are often old, slow, and clunky Windows machines that have been discarded and recycled from the undergraduate computer labs. Installing a flavor of GNU Linux will breathe new life into these outdated PCs. There are more than 100 distributions, all of which can be downloaded and installed for free on computers. Most popular Linux distributions come with a "try-before-you-buy" feature. For example, with Ubuntu you can make a bootable USB stick that allows you to test-run the Ubuntu desktop experience without interfering in any way with your PC configuration. If you like the experience, you can use the stick to install Ubuntu on your machine permanently.

2. Firefox

Linux distributions generally come with a free web browser, and the most popular is Firefox. Two Firefox plugins that are particularly useful for literature reviews are Unpaywall and Zotero. Keep reading to learn why.

3. Unpaywall

Often one of the hardest parts of a literature review is gaining access to the papers you want to read for your review. The unintended consequence of copyright restrictions and paywalls is that they have narrowed access to the peer-reviewed literature to the point that even Harvard University is challenged to pay for it. Fortunately, there are a lot of open access articles: about a third of the literature is free (and the percentage is growing). Unpaywall is a Firefox plugin that enables researchers to click a green tab on the side of the browser and skip the paywall on millions of peer-reviewed journal articles. This makes finding accessible copies of articles much faster than searching each database individually. Unpaywall is fast, free, and legal, as it accesses many of the open access sites that I covered in my paper on using open source in lit reviews.

4. Zotero

Formatting references is the most tedious of academic tasks. Zotero can save you from ever doing it again. It operates as an Android app, desktop program, and a Firefox plugin (which I recommend). It is a free, easy-to-use tool to help you collect, organize, cite, and share research. It replaces the functionality of proprietary packages such as RefWorks, Endnote, and Papers for zero cost. Zotero can auto-add bibliographic information directly from websites. In addition, it can scrape bibliographic data from PDF files. Notes can be easily added on each reference. Finally, and most importantly, it can import and export the bibliography databases in all publishers' various formats. With this feature, you can export bibliographic information to paste into a document editor for a paper or thesis, or even to a wiki for dynamic collaborative literature reviews (see tool #7 for more on the value of wikis in lit reviews).

5. LibreOffice

Your thesis or academic article can be written conventionally with the free office suite LibreOffice, which operates similarly to Microsoft's Office products but respects your freedom. Zotero has a word processor plugin to integrate directly with LibreOffice. LibreOffice is more than adequate for the vast majority of academic paper writing.

6. LaTeX

If LibreOffice is not enough for your layout needs, you can take your paper writing one step further with LaTeX, a high-quality typesetting system specifically designed for producing technical and scientific documentation. LaTeX is particularly useful if your writing has a lot of equations in it. Also, Zotero libraries can be directly exported to BibTeX files for use with LaTeX.
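To give a sense of what a BibTeX file contains, here is a minimal sketch that formats one bibliographic record as a BibTeX entry. It is only an illustration of the file format, not Zotero's exporter: the helper name `to_bibtex` and the sample record are hypothetical, and the sketch does not escape special LaTeX characters the way a real exporter would need to.

```python
def to_bibtex(entry_type, key, fields):
    """Format a single record as a BibTeX entry string.

    entry_type: e.g. "article" or "book"
    key:        the citation key used with \\cite{...} in LaTeX
    fields:     mapping of BibTeX field names to string values
    """
    lines = [f"@{entry_type}{{{key},"]
    for name, value in fields.items():
        # Braces around the value keep capitalization and spacing intact.
        lines.append(f"  {name} = {{{value}}},")
    lines.append("}")
    return "\n".join(lines)


# Hypothetical record, for illustration only.
entry = to_bibtex(
    "article",
    "doe2020",
    {
        "author": "Doe, Jane",
        "title": "An Example Article",
        "journal": "Journal of Examples",
        "year": "2020",
    },
)
print(entry)
```

Saved to a `.bib` file, an entry like this can be cited in a LaTeX document by its key.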

7. MediaWiki

If you want to leverage the open source way to get help with your literature review, you can facilitate a dynamic collaborative literature review. A wiki is a website that allows anyone to add, delete, or revise content directly using a web browser. MediaWiki is free software that enables you to set up your own wikis.

Researchers can (in decreasing order of complexity): 1) set up their own research group wiki with MediaWiki, 2) utilize wikis already established at their universities (e.g., Aalto University), or 3) use wikis dedicated to areas that they research. For example, several university research groups that focus on sustainability (including mine) use Appropedia, which is set up for collaborative solutions on sustainability, appropriate technology, poverty reduction, and permaculture.

Using a wiki makes it easy for anyone in the group to keep track of the status of and update literature reviews (both current and older or from other researchers). It also enables multiple members of the group to easily collaborate on a literature review asynchronously. Most importantly, it enables people outside the research group to help make a literature review more complete, accurate, and up-to-date.

Wrapping up

Free and open source software can cover the entire lit review toolchain, meaning there's no need for anyone to use proprietary solutions. Do you use other libre tools for making literature reviews or other academic work easier? Please let us know your favorites in the comments.

What Is Semantic Scholar?

Semantic Scholar is a free, AI-powered research tool for scientific literature, based at the Allen Institute for AI.

RAxter is now Enago Read! Enjoy the same licensing and pricing with enhanced capabilities. No action required for existing customers.

Our platform brings you the latest research related to your research interests and project work.

Decode papers effortlessly for faster comprehension

Highlight what is important so that you can retrieve it faster next time.

Select any text in the paper and ask Copilot to explain it to help you get a deeper understanding.

Ask questions and follow-ups from AI-powered Copilot.

Collaborate to read with your team, professors, or students

Share and discuss literature and drafts with your study group, colleagues, experts, and advisors. Recommend valuable resources and help each other for better understanding.

Work in shared projects efficiently and improve visibility within your study group or lab members.

Keep track of your team's progress by being constantly connected and engaging in active knowledge transfer by requesting full access to relevant papers and drafts.

Find papers from across the world's largest repositories


Privacy and security of your research data are integral to our mission.

Everything you add or create on Enago Read is private by default. It is visible if and when you share it with other users.

You can put Creative Commons license on original drafts to protect your IP. For shared files, Enago Read always maintains a copy in case of deletion by collaborators or revoked access.

We use state-of-the-art security protocols and algorithms including MD5 Encryption, SSL, and HTTPS to secure your data.

Park University

Tools for Academic Writing: Literature Review

- URL: https://library.park.edu/writing


What is a literature review?

A literature review is a discussion of previously published information on a particular topic, providing summary and connections to help readers understand the research that has been completed on a subject and why it is important. Unlike a research paper, a literature review does not develop a new argument, instead focusing on what has been argued or proven in past papers. However, a literature review should not just be an annotated bibliography that lists the sources found; the literature review should be organized thematically as a cohesive paper.

Why write a literature review?

Literature reviews provide you with a handy guide to a particular topic. If you have limited time to conduct research, literature reviews can give you an overview or act as a stepping stone. For professionals, they are useful reports that keep them up to date with what is current in the field. For scholars, the depth and breadth of the literature review emphasizes the credibility of the writer in his or her field. Literature reviews also provide a solid background for a research paper’s investigation. Comprehensive knowledge of the literature of the field is essential to most research papers.

Who writes literature reviews?

Literature reviews are sometimes written in the humanities, but more often in the sciences and social sciences. In scientific reports and longer papers, they constitute one section of the work. Literature reviews can also be written as stand-alone papers.

How Should I Organize My Literature Review?

Here are some ways to organize a literature review from Purdue OWL:

- Chronological: The simplest approach is to trace the development of the topic over time, which helps familiarize the audience with the topic (for instance if you are introducing something that is not commonly known in your field). If you choose this strategy, be careful to avoid simply listing and summarizing sources in order.

- Thematic: If you have found some recurring central themes that you will continue working with throughout your piece, you can organize your literature review into subsections that address different aspects of the topic. For example, if you are reviewing literature about women and religion, key themes can include the role of women in churches and the religious attitude towards women.

- Methodological: If you draw your sources from different disciplines or fields that use a variety of research methods, you can compare the results and conclusions that emerge from different approaches. For example, you might compare qualitative with quantitative research or empirical with theoretical scholarship, or divide the research by sociological, historical, or cultural sources.

- Theoretical: In many humanities articles, the literature review is the foundation for the theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts. You can argue for the relevance of a specific theoretical approach or combine various theoretical concepts to create a framework for your research.

Outline Your Literature Review's Structure

How to Write a Literature Review

Literature Reviews: An Overview for Graduate Students

Writing the Literature Review

Find a focus: Just like a term paper, a literature review is organized around ideas, not just sources. Use the research question you developed in planning your review and the issues or themes that connect your sources together to create a thesis statement. Yes, literature reviews have thesis statements! But your literature review thesis statement will present a perspective on the material, rather than arguing for a position or opinion. For example:

The current trend in treatment for congestive heart failure combines surgery and medicine.

More and more cultural studies scholars are accepting popular media as a subject worthy of academic consideration.

Consider organization: Once you have your thesis statement, you will need to think about the best way to organize the information in your review. Like most academic papers, literature reviews should have an introduction, body, and conclusion.

Use evidence and be selective: When making your points in your literature review, you should refer to several sources as evidence, just like in any academic paper. Your interpretation of the available information must be backed up with evidence to show that your ideas are valid. You also need to be selective about the information you choose to include in your review. Select only the most important points in each source, making sure everything you mention relates to the review's focus.

Summarize and synthesize: Remember to summarize and synthesize your sources in each paragraph as well as throughout the review. You should not be doing in-depth analysis in your review, so keep your use of quotes to a minimum. A literature review is not just a summary of current sources; you should keep your own voice and say something new about the collection of sources you have put together.

Revise, revise, revise: When you have finished writing the literature review, you still have one final step! Spending a lot of time revising is important to make sure you have presented your information in the best way possible. Check your review to see if it follows the assignment instructions and/or your outline. Rewrite or rework your language to be more concise, and double-check that you have documented your sources and formatted your review appropriately.

The Literature Review Model

Machi, Lawrence A, and Brenda T McEvoy. The Literature Review: Six Steps to Success. 2nd ed. Thousand Oaks, Calif.: Corwin Press, 2012.

What the Literature Review IS and ISN'T:


Literature Review Sample Paper

- Literature Review Sample 1

- Literature Review Sample 2

- Literature Review Sample 3

Literature Review Tips

- Taking Notes For The Literature Review

- The Art of Scan Reading

- UNC-Chapel Hill Writing Guide for Literature Reviews

- Literature Review Guidelines from Purdue OWL

Organizing Your Review

As you read and evaluate your literature, there are several different ways to organize your research. Courtesy of Dr. Gary Burkholder in the School of Psychology, these sample matrices are one option to help organize your articles. These documents allow you to compile details about your sources, such as the foundational theories, methodologies, and conclusions; begin to note similarities among the authors; and retrieve citation information for easy insertion within a document.

- Literature Review Matrix 1

- Literature Review Matrix 2

- Spreadsheet Style
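A literature matrix of the kind described above can also be kept as a plain CSV file that opens in any spreadsheet program. The following is a minimal sketch, not one of the linked sample matrices: the column names, the helper functions `build_matrix` and `group_by`, and the three entries are all hypothetical placeholders chosen for illustration.

```python
import csv
import io

# Column names follow the details mentioned above; the exact layout is an assumption.
FIELDS = ["citation", "theory", "methodology", "conclusions"]


def build_matrix(sources):
    """Return the matrix as CSV text, one row per source."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for source in sources:
        # Missing details are left blank so gaps in your notes stay visible.
        writer.writerow({field: source.get(field, "") for field in FIELDS})
    return buffer.getvalue()


def group_by(sources, field):
    """Group citations by a shared field value, e.g. methodology."""
    groups = {}
    for source in sources:
        groups.setdefault(source.get(field, ""), []).append(source["citation"])
    return groups


# Placeholder entries, not real publications.
sources = [
    {"citation": "Author A (2020)", "theory": "Theory X",
     "methodology": "qualitative", "conclusions": "Finding 1"},
    {"citation": "Author B (2021)", "theory": "Theory X",
     "methodology": "quantitative", "conclusions": "Finding 2"},
    {"citation": "Author C (2022)", "methodology": "qualitative"},
]

matrix_csv = build_matrix(sources)
by_method = group_by(sources, "methodology")
print(matrix_csv)
print(by_method)
```

Grouping by a column such as methodology is one way to start noting similarities among authors before drafting a thematic or methodological section.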

How to Create a Literature Matrix using Excel

Synthesis for Literature Reviews

Developing a Research Question


- Last Updated: Jan 23, 2024 12:57 PM

THE PAPER REVIEW GENERATOR

This tool is designed to speed up writing reviews for research papers for computer science. It provides a list of items that can be used to automatically generate a review draft. This website should not replace a human. Generated text should be edited by the reviewer to add more details.

How to use? Click on the check-boxes below and the review will be auto-generated according to your selection.
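The checkbox-to-text idea can be sketched in a few lines. This is only an illustration of the general approach, not the tool's actual code: the checklist keys, the sentences in `TEMPLATES`, and the function `generate_review` are hypothetical.

```python
# Hypothetical checklist items and sentences; the real tool's wording is not reproduced here.
TEMPLATES = {
    "related_work_missing": "The related work section omits several relevant studies.",
    "problem_unclear": "The problem definition should be stated more precisely.",
    "experiments_limited": "The experiments should include more datasets or baselines.",
    "code_unavailable": "Releasing code and data would improve reproducibility.",
}


def generate_review(selected):
    """Build a numbered draft from the ticked items, keeping the template order."""
    unknown = set(selected) - set(TEMPLATES)
    if unknown:
        raise KeyError(f"Unknown checklist items: {sorted(unknown)}")
    lines = [text for key, text in TEMPLATES.items() if key in selected]
    if not lines:
        return "No issues selected."
    return "\n".join(f"{i}. {line}" for i, line in enumerate(lines, start=1))


draft = generate_review({"experiments_limited", "problem_unclear"})
print(draft)
```

As the tool's own description says, a draft produced this way is a starting point that the reviewer still needs to edit and extend.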

Introduction

Related work, problem definition, experiments, reproducibility, the generated review.

About this tool

This website is designed by Philippe Fournier-Viger by modifying the Autoreject project of Andreas Zeller (https://autoreject.org/) and replacing the textual content so as to turn what was a joke into a serious tool. By using this website, you agree to use it ethically and responsibly. If you have any suggestions to improve this tool or want to report bugs, you can contact me. License of webpage: CC Attribution 3.0 Unported license (https://creativecommons.org/licenses/by/3.0/). License of source code to display content: MIT license (https://mit-license.org/).

I have also made some useful online text processing tools.


COLLABORATE ON YOUR REVIEWS WITH ANYONE, ANYWHERE, ANYTIME

Save precious time and maximize your productivity with a Rayyan membership. Receive training, priority support, and access features to complete your systematic reviews efficiently.

Rayyan Teams+ makes your job easier. It includes VIP Support, AI-powered in-app help, and powerful tools to create, share and organize systematic reviews, review teams, searches, and full-texts.

RESEARCHERS

Rayyan makes collaborative systematic reviews faster, easier, and more convenient. Training, VIP support, and access to new features maximize your productivity. Get started now!

Over 1 billion reference articles reviewed by research teams, and counting...

Intelligent, scalable and intuitive.

Rayyan understands language, learns from your decisions and helps you work quickly through even your largest systematic literature reviews.

WATCH A TUTORIAL NOW

Solutions for Organizations and Businesses

Rayyan Enterprise and Rayyan Teams+ make it faster, easier and more convenient for you to manage your research process across your organization.

- Accelerate your research across your team or organization and save valuable researcher time.

- Build and preserve institutional assets, including literature searches, systematic reviews, and full-text articles.

- Onboard team members quickly with access to group trainings for beginners and experts.

- Receive priority support to stay productive when questions arise.


RAYYAN SYSTEMATIC LITERATURE REVIEW OVERVIEW

LEARN ABOUT RAYYAN’S PICO HIGHLIGHTS AND FILTERS

Join now to learn why Rayyan is already trusted by more than 500,000 researchers

Individual plans, teams plans.

For early career researchers just getting started with research.

Free forever

- 3 Active Reviews

- Invite Unlimited Reviewers

- Import Directly from Mendeley

- Industry Leading De-Duplication

- 5-Star Relevance Ranking

- Advanced Filtration Facets

- Mobile App Access

- 100 Decisions on Mobile App

- Standard Support

- Revoke Reviewer

- Online Training

- PICO Highlights & Filters

- PRISMA (Beta)

- Auto-Resolver

- Multiple Teams & Management Roles

- Monitor & Manage Users, Searches, Reviews, Full Texts

- Onboarding and Regular Training

Professional

For researchers who want more tools for research acceleration.

Per month billed annually

- Unlimited Active Reviews

- Unlimited Decisions on Mobile App

- Priority Support

- Auto-Resolver

For students who want more tools to accelerate their research.

Per month billed annually

Billed monthly

For a team that wants professional licenses for all members.

Per-user, per month, billed annually

- Single Team

- High Priority Support

For teams that want support and advanced tools for members.

- Multiple Teams

- Management Roles

For organizations who want access to all of their members.

Annual Subscription

Contact Sales

- Organizational Ownership

- For an organization or a company

- Access to all the premium features such as PICO Filters, Auto-Resolver, PRISMA and Mobile App

- Store and Reuse Searches and Full Texts

- A management console to view, organize and manage users, teams, review projects, searches and full texts

- Highest tier of support – Support via email, chat and AI-powered in-app help

- GDPR Compliant

- Single Sign-On

- API Integration

- Training for Experts

- Training Sessions Students Each Semester

- More options for secure access control

ANNUAL ONLY

Per-user, billed monthly


Great usability and functionality. Rayyan has saved me countless hours. I even received timely feedback from staff when I did not understand the capabilities of the system, and was pleasantly surprised with the time they dedicated to my problem. Thanks again!

This is a great piece of software. It has made the independent viewing process so much quicker. The whole thing is very intuitive.

Rayyan makes ordering articles and extracting data very easy. A great tool for undertaking literature and systematic reviews!

Excellent interface to do title and abstract screening. Also helps to keep track of the reasons for exclusion from the review. That too in a blinded manner.

Rayyan is a fantastic tool to save time and improve systematic reviews!!! It has changed my life as a researcher!!! thanks

Easy to use, friendly, has everything you need for cooperative work on the systematic review.

Rayyan makes life easy in every way when conducting a systematic review and it is easy to use.

AI Literature Review Generator

Automated literature review creation tool.

- Academic Research: Create a literature review for your thesis, dissertation, or research paper.

- Professional Research: Conduct a literature review for a project, report, or proposal at work.

- Content Creation: Write a literature review for a blog post, article, or book.

- Personal Research: Conduct a literature review to deepen your understanding of a topic of interest.

Literature Review Generator by AcademicHelp

Features of Our Literature Review Generator

Advanced power of AI

Simplified information gathering

Enhanced quality

Free literature review generator.


The best AI tools for research papers and academic research (Literature review, grants, PDFs and more)

As our collective understanding and application of artificial intelligence (AI) continues to evolve, so too does the realm of academic research. Some people are scared by it while others are openly embracing the change.

Make no mistake, AI is here to stay!

Instead of tirelessly scrolling through hundreds of PDFs, a powerful AI tool comes to your rescue, summarizing key information in your research papers. Instead of manually combing through citations and conducting literature reviews, an AI research assistant proficiently handles these tasks.

These aren’t futuristic dreams, but today’s reality. Welcome to the transformative world of AI-powered research tools!

The influence of AI in scientific and academic research is an exciting development, opening the doors to more efficient, comprehensive, and rigorous exploration.

This blog post will dive deeper into these tools, providing a detailed review of how AI is revolutionizing academic research. We’ll look at the tools that can make your literature review process less tedious, your search for relevant papers more precise, and your overall research process more efficient and fruitful.

I know that I wish these were around during my time in academia. It can be quite confronting when trying to work out what ones you should and shouldn’t use. A new one seems to be coming out every day!

Here is everything you need to know about AI for academic research, including the tools I have personally trialed on my YouTube channel.

Best ChatGPT interface – Chat with PDFs/websites and more

I get more out of ChatGPT with HeyGPT. It can do things that ChatGPT cannot, which makes it really valuable for researchers.

- Use your own OpenAI API key (here).

- No login required.

- Access ChatGPT anytime, including peak periods.

- Faster response time.

- Unlock advanced functionalities with HeyGPT Ultra for a one-time lifetime subscription.

AI literature search and mapping – best AI tools for a literature review – elicit and more

Harnessing AI tools for literature reviews and mapping brings a new level of efficiency and precision to academic research. No longer do you have to spend hours looking in obscure research databases to find what you need!

AI-powered tools like Semantic Scholar and elicit.org use sophisticated search engines to quickly identify relevant papers.

They can mine key information from countless PDFs, drastically reducing research time. You can even search with semantic questions, rather than having to deal with key words etc.

With AI as your research assistant, you can navigate the vast sea of scientific research with ease, uncovering citations and focusing on academic writing. It’s a revolutionary way to take on literature reviews.

- Elicit – https://elicit.org

- Supersymmetry.ai: https://www.supersymmetry.ai

- Semantic Scholar: https://www.semanticscholar.org

- Connected Papers – https://www.connectedpapers.com/

- Research rabbit – https://www.researchrabbit.ai/

- Laser AI – https://laser.ai/

- Litmaps – https://www.litmaps.com

- Inciteful – https://inciteful.xyz/

- Scite – https://scite.ai/

- System – https://www.system.com

If you like AI tools you may want to check out this article:

- How to get ChatGPT to write an essay [The prompts you need]

AI-powered research tools and AI for academic research

AI research tools, like Consensus, offer immense benefits in scientific research. Here are the general AI-powered tools for academic research.

These AI-powered tools can efficiently summarize PDFs, extract key information, perform AI-powered searches, and much more. Some are even working towards letting you add your own database of files to ask questions of.

Tools like scite even analyze citations in depth, while AI models like ChatGPT elicit new perspectives.

The result? The research process, previously a grueling endeavor, becomes significantly streamlined, offering you time for deeper exploration and understanding. Say goodbye to traditional struggles, and hello to your new AI research assistant!

- Bit AI – https://bit.ai/

- Consensus – https://consensus.app/

- Exper AI – https://www.experai.com/

- Hey Science (in development) – https://www.heyscience.ai/

- Iris AI – https://iris.ai/

- PapersGPT (currently in development) – https://jessezhang.org/llmdemo

- Research Buddy – https://researchbuddy.app/

- Mirror Think – https://mirrorthink.ai

AI for reading peer-reviewed papers easily

Using AI tools like Explain paper and Humata can significantly enhance your engagement with peer-reviewed papers. I always used to skip over the details of the papers because I had reached saturation point with the information coming in.

These AI-powered research tools provide succinct summaries, saving you from sifting through extensive PDFs – no more boring nights trying to figure out which papers are the most important ones for you to read!

They not only facilitate efficient literature reviews by presenting key information, but also find overlooked insights.

With AI, deciphering complex citations and accelerating research has never been easier.

- Open Read – https://www.openread.academy

- Chat PDF – https://www.chatpdf.com

- Explain Paper – https://www.explainpaper.com

- Humata – https://www.humata.ai/

- Lateral AI – https://www.lateral.io/

- Paper Brain – https://www.paperbrain.study/

- Scholarcy – https://www.scholarcy.com/

- SciSpace Copilot – https://typeset.io/

- Unriddle – https://www.unriddle.ai/

- Sharly.ai – https://www.sharly.ai/

AI for scientific writing and research papers

In the ever-evolving realm of academic research, AI tools are increasingly taking center stage.

Enter Paper Wizard, Jenni AI, and Wisio – these groundbreaking platforms are set to revolutionize the way we approach scientific writing.

Together, these AI tools are pioneering a new era of efficient, streamlined scientific writing.

- Paper Wizard – https://paperwizard.ai/

- Jenni AI – https://jenni.ai/ (20% off with code ANDY20)

- Wisio – https://www.wisio.app

AI academic editing tools

In the realm of scientific writing and editing, artificial intelligence (AI) tools are making a world of difference, offering precision and efficiency like never before. Consider tools such as Paper Pal, Writefull, and Trinka.

Together, these tools usher in a new era of scientific writing, where AI is your dedicated partner in the quest for impeccable composition.

- Paper Pal – https://paperpal.com/

- Writefull – https://www.writefull.com/

- Trinka – https://www.trinka.ai/

AI tools for grant writing

In the challenging realm of science grant writing, two innovative AI tools are making waves: Granted AI and Grantable.

These platforms are game-changers, leveraging the power of artificial intelligence to streamline and enhance the grant application process.

Granted AI, an intelligent tool, uses AI algorithms to simplify the process of finding, applying, and managing grants. Meanwhile, Grantable offers a platform that automates and organizes grant application processes, making it easier than ever to secure funding.

Together, these tools are transforming the way we approach grant writing, using the power of AI to turn a complex, often arduous task into a more manageable, efficient, and successful endeavor.

- Granted AI – https://grantedai.com/

- Grantable – https://grantable.co/

Free AI research tools

Many tools are emerging online that help researchers streamline their research processes. There’s no need for convenience to come at a massive cost and break the bank.

The best free ones at time of writing are:

- Elicit – https://elicit.org

- Connected Papers – https://www.connectedpapers.com/

- Litmaps – https://www.litmaps.com ( 10% off Pro subscription using the code “STAPLETON” )

- Consensus – https://consensus.app/

Wrapping up

The integration of artificial intelligence in the world of academic research is nothing short of revolutionary.

With the array of AI tools we’ve explored today – from research and mapping, literature review, peer-reviewed papers reading, scientific writing, to academic editing and grant writing – the landscape of research is significantly transformed.

The advantages that AI-powered research tools bring to the table – efficiency, precision, time saving, and a more streamlined process – cannot be overstated.

These AI research tools aren’t just about convenience; they are transforming the way we conduct and comprehend research.

They liberate researchers from the clutches of tedium and overwhelm, allowing for more space for deep exploration, innovative thinking, and in-depth comprehension.

Whether you’re an experienced academic researcher or a student just starting out, these tools provide indispensable aid in your research journey.

And with a suite of free AI tools also available, there is no reason to not explore and embrace this AI revolution in academic research.

We are on the precipice of a new era of academic research, one where AI and human ingenuity work in tandem for richer, more profound scientific exploration. The future of research is here, and it is smart, efficient, and AI-powered.

Before we get too excited however, let us remember that AI tools are meant to be our assistants, not our masters. As we engage with these advanced technologies, let’s not lose sight of the human intellect, intuition, and imagination that form the heart of all meaningful research. Happy researching!

Thank you to Ivan Aguilar – Ph.D. Student at SFU (Simon Fraser University), for starting this list for me!

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of Universities. Although having secured funding for his own research, he left academia to help others with his YouTube channel all about the inner workings of academia and how to make it work for you.

Thank you for visiting Academia Insider.

We are here to help you navigate Academia as painlessly as possible. We are supported by our readers and by visiting you are helping us earn a small amount through ads and affiliate revenue - Thank you!

2024 © Academia Insider


- 16 April 2024

Structure peer review to make it more robust

- Mario Malički

Mario Malički is associate director of the Stanford Program on Research Rigor and Reproducibility (SPORR) and co-editor-in-chief of the Research Integrity and Peer Review journal.


In February, I received two peer-review reports for a manuscript I’d submitted to a journal. One report contained 3 comments, the other 11. Apart from one point, all the feedback was different. It focused on expanding the discussion and some methodological details — there were no remarks about the study’s objectives, analyses or limitations.

My co-authors and I duly replied, working under two assumptions that are common in scholarly publishing: first, that anything the reviewers didn’t comment on they had found acceptable for publication; second, that they had the expertise to assess all aspects of our manuscript. But, as history has shown, those assumptions are not always accurate (see Lancet 396, 1056; 2020). And through the cracks, inaccurate, sloppy and falsified research can slip.

As co-editor-in-chief of the journal Research Integrity and Peer Review (an open-access journal published by BMC, which is part of Springer Nature), I’m invested in ensuring that the scholarly peer-review system is as trustworthy as possible. And I think that to be robust, peer review needs to be more structured. By that, I mean that journals should provide reviewers with a transparent set of questions to answer that focus on methodological, analytical and interpretative aspects of a paper.

For example, editors might ask peer reviewers to consider whether the methods are described in sufficient detail to allow another researcher to reproduce the work, whether extra statistical analyses are needed, and whether the authors’ interpretation of the results is supported by the data and the study methods. Should a reviewer find anything unsatisfactory, they should provide constructive criticism to the authors. And if reviewers lack the expertise to assess any part of the manuscript, they should be asked to declare this.


Other aspects of a study, such as novelty, potential impact, language and formatting, should be handled by editors, journal staff or even machines, reducing the workload for reviewers.

The list of questions reviewers will be asked should be published on the journal’s website, allowing authors to prepare their manuscripts with this process in mind. And, as others have argued before, review reports should be published in full. This would allow readers to judge for themselves how a paper was assessed, and would enable researchers to study peer-review practices.

To see how this works in practice, since 2022 I’ve been working with the publisher Elsevier on a pilot study of structured peer review in 23 of its journals, covering the health, life, physical and social sciences. The preliminary results indicate that, when guided by the same questions, reviewers made the same initial recommendation about whether to accept, revise or reject a paper 41% of the time, compared with 31% before these journals implemented structured peer review. Moreover, reviewers’ comments were in agreement about specific parts of a manuscript up to 72% of the time (M. Malički and B. Mehmani, preprint at bioRxiv https://doi.org/mrdv; 2024). In my opinion, reaching such agreement is important for science, which proceeds mainly through consensus.
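For readers who want to see what a figure such as "the same initial recommendation 41% of the time" measures, the simplest reading is a raw agreement rate: the share of manuscripts on which two reviewers chose the same recommendation. The sketch below assumes that reading; the function `agreement_rate` and the five recommendation pairs are made up for illustration and are not taken from the pilot study, whose exact method may differ.

```python
def agreement_rate(pairs):
    """Share of manuscripts where both reviewers gave the same recommendation."""
    if not pairs:
        raise ValueError("need at least one pair of recommendations")
    matches = sum(1 for first, second in pairs if first == second)
    return matches / len(pairs)


# Made-up recommendations for five manuscripts, for illustration only.
pairs = [
    ("revise", "revise"),
    ("reject", "revise"),
    ("accept", "accept"),
    ("revise", "reject"),
    ("reject", "accept"),
]

rate = agreement_rate(pairs)
print(f"{rate:.0%}")
```

Raw agreement does not correct for matches that would occur by chance, which is one reason such percentages are best compared before and after a change, as the article does.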


I invite editors and publishers to follow in our footsteps and experiment with structured peer reviews. Anyone can trial our template questions (see go.nature.com/4ab2ppc), or tailor them to suit specific fields or study types. For instance, mathematics journals might also ask whether referees agree with the logic or completeness of a proof. Some journals might ask reviewers if they have checked the raw data or the study code. Publications that employ editors who are less embedded in the research they handle than are academics might need to include questions about a paper’s novelty or impact.

Scientists can also use these questions, either as a checklist when writing papers or when they are reviewing for journals that don’t apply structured peer review.

Some journals, including Proceedings of the National Academy of Sciences, the PLOS family of journals, F1000 journals and some Springer Nature journals, already have their own sets of structured questions for peer reviewers. But, in general, these journals do not disclose the questions they ask, and do not make their questions consistent. This means that core peer-review checks are still not standardized, and reviewers are tasked with different questions when working for different journals.

Some might argue that, because different journals have different thresholds for publication, they should adhere to different standards of quality control. I disagree. Not every study is groundbreaking, but scientists should view quality control of the scientific literature in the same way as quality control in other sectors: as a way to ensure that a product is safe for use by the public. People should be able to see what types of check were done, and when, before an aeroplane was approved as safe for flying. We should apply the same rigour to scientific research.

Ultimately, I hope for a future in which all journals use the same core set of questions for specific study types and make all of their review reports public. I fear that a lack of standard practice in this area is delaying the progress of science.

Nature 628, 476 (2024)

doi: https://doi.org/10.1038/d41586-024-01101-9


Competing Interests

M.M. is co-editor-in-chief of the Research Integrity and Peer Review journal that publishes signed peer review reports alongside published articles. He is also the chair of the European Association of Science Editors Peer Review Committee.

Do hours worth of reading in minutes

Popular papers to read

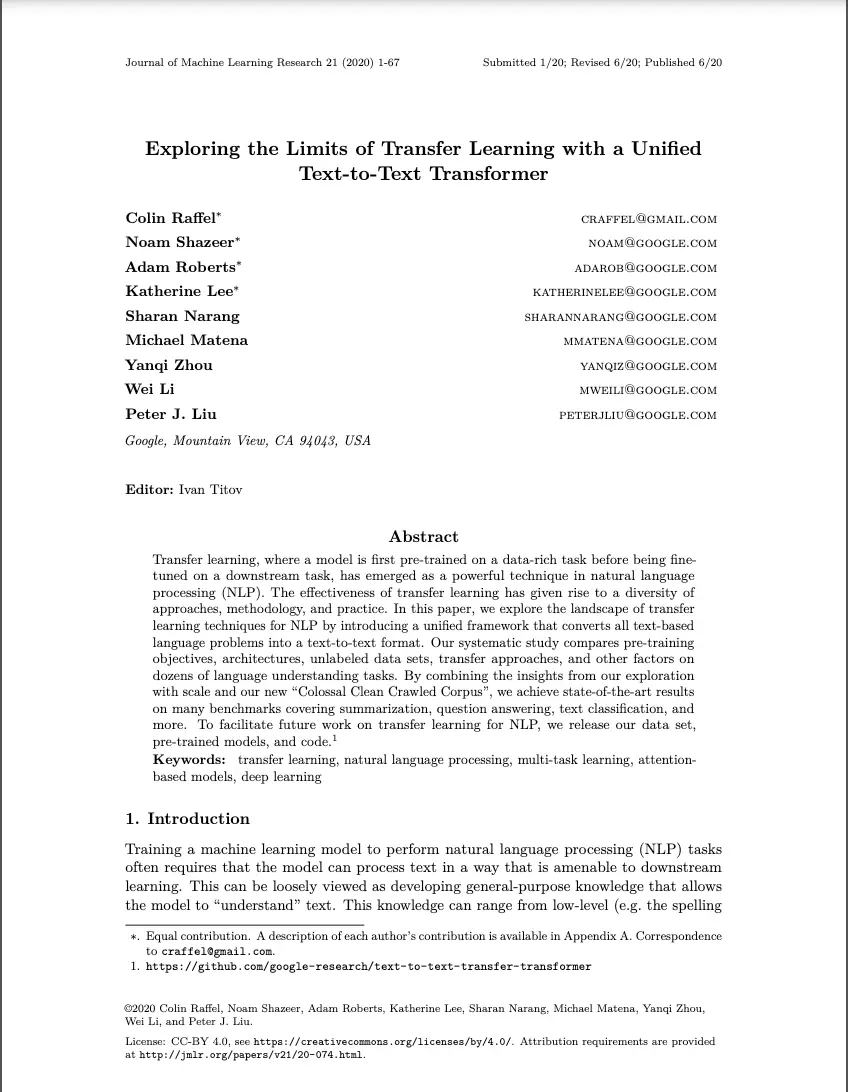

Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer

Attention is All you Need

mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

Deformable DETR: Deformable Transformers for End-to-End Object Detection

How Good is Your Tokenizer? On the Monolingual Performance of Multilingual Language Models

Machine Learning

Support-Vector Networks

Distributed Optimization and Statistical Learning Via the Alternating Direction Method of Multipliers

Learning Deep Architectures for AI

Adaptive Subgradient Methods for Online Learning and Stochastic Optimization

An Introduction to Support Vector Machines

Model-agnostic meta-learning for fast adaptation of deep networks

Semi-supervised learning using Gaussian fields and harmonic functions

Manifold Regularization: A Geometric Framework for Learning from Labeled and Unlabeled Examples

Support vector machine learning for interdependent and structured output spaces

A Framework for Learning Predictive Structures from Multiple Tasks and Unlabeled Data

Natural Language

Exploiting Cloze-Questions for Few-Shot Text Classification and Natural Language Inference

Learning Transferable Visual Models From Natural Language Supervision

Unified Pre-training for Program Understanding and Generation

Semantic memory: A review of methods, models, and current challenges

A Survey on Recent Approaches for Natural Language Processing in Low-Resource Scenarios.

Foundations of Statistical Natural Language Processing

A framework for representing knowledge

Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition

Semantic similarity in a taxonomy: an information-based measure and its application to problems of ambiguity in natural language

Cheap and Fast -- But is it Good? Evaluating Non-Expert Annotations for Natural Language Tasks

🔬 Researchers worldwide are simplifying papers.

Millions of researchers are already using SciSpace on research papers. Join them and start using your AI research assistant wherever you're reading online.

Mushtaq Bilal, PhD

Researcher @ Syddansk Universitet

SciSpace is an incredible (AI-powered) tool to help you understand research papers better. It can explain and elaborate most academic texts in simple words.

Olesia Nikulina

PhD Candidate

Academic research gets easier day by day. All thanks to AI tools like @scispace Copilot, Copilot can instantly answer your questions and simply explain scientific concepts as you read

Richard Gao

Co-founder evoke-app.com

This is perfect for a layman to scientific information like me. Especially with so much misinformation floating around nowadays, this is great for understanding studies or research others may have misrepresented on purpose or by accident.

Product Hunt

I absolutely adore this product. It's been years since I was in a lab but, I plugged in a study I did way back when and this gets everything right. Equations, hypotheses, and methodologies will be game changers for graduate studies (the current education system severely limits comprehension while encouraging interconnectivity between streams). But, early learners would be able to discover so many papers through this as well. In short, love it

Livia Burbulea

I'm gonna recommend SciSpace to all of my friends and family that are still studying. And I'll definitely love to give it a try for myself, cause you know, education should never stop when you finish your studies. 😀

Sara Botticelli

Product Hunt User.

Wonderful idea! I know this will be used and appreciated by a lot of students, researchers, and lovers of knowledge. Great job, team @saikiranchandha and @shanukumr!

Divyansh Verma

SVNIT'25 Chemical Engineering