
Altered brain glucose metabolism as a mechanism for delirium.

Delirium is a common condition with a significant impact on patient outcome. This episode discusses potential intrinsic brain mechanisms that may underlie delirium. The team explores the evidence that delirium is not merely a systemic process that extends into the brain but may involve pathophysiological alterations of brain function.

Are there differences in the immune response among individuals of Black ethnicity with multiple sclerosis?

This episode discusses racial differences in the antibody response to natalizumab treatment in individuals of Black ethnicity with multiple sclerosis. It also considers potential mechanisms that may underlie these differences and their implications for treatment.

Exploring a genetic basis for disordered speech and language: FOXP2 mutations and striatal neurons

This article explores a potential genetic basis for disordered speech: a mutation in the transcription factor FOXP2. Its discovery in members of the KE family with speech disturbances was a landmark example of the genetic control of vocal communication in humans.

The BRAIN podcast

The BRAIN podcast is the official podcast of Brain and Brain Communications highlighting the rich and diverse neuroscience published in the journals.


Video Abstracts

Brain endothelium class I molecules regulate neuropathology in experimental cerebral malaria

Spike propagation mapping reveals effective connectivity and predicts surgical outcome in epilepsy


Haemorrhage of human foetal cortex associated with SARS-CoV-2 infection

Diabetes and hypertension are related to amyloid-beta burden in the population-based Rotterdam Study

Neurogenetics Collection

Explore recently published articles on the topic of neurogenetics from Brain. The articles are open access or freely available for a limited time.


China Collection

Brain celebrates the Year of the Tiger with a collection of articles from our Chinese colleagues. The articles are open access or freely available for a limited time.

Neuroinflammation Collection

Explore nine recently published articles on neuroinflammation. The articles are open access or freely available for a limited time.


COVID Collection

Discover Brain's COVID collection, bringing together a range of articles on the neuroscience, neurology, and neuropsychiatry of SARS-CoV-2 infection.

Brain on the OUPblog

Of language, brain health, and global inequities

Speech and language assessments have emerged as crucial tools in combatting one of the greatest public health challenges of our century, the growth of neurodegenerative disorders. In this blog post, Adolfo M. García explores how a lack of linguistic diversity in assessment methods threatens their potential for more equitable testing worldwide.


It’s time to use software-as-medicine to help an injured brain

Multiple mild Traumatic Brain Injuries (“mTBIs”) can put military service members at an elevated risk of cognitive impairment. Service members and veterans were enrolled in a trial with a new type of brain training program, based on the science of brain plasticity and the discovery that intensive, adaptive, computerized training—targeting sensory speed and accuracy—can rewire the brain to improve cognitive function. The trial found that the training program significantly improved overall cognitive function.


How air pollution may lead to Alzheimer’s disease

Air pollution harms billions of people worldwide. Over the past few decades, it has become widely recognized that outdoor air pollution is detrimental to respiratory and cardiovascular health, but scientists have recently come to acknowledge the damage it may cause to the brain as well.

When narcolepsy makes you more creative

Patients with narcolepsy are often lucid dreamers, and experience direct transitions from wakefulness into REM sleep. Lacaux et al. report that these patients perform better than healthy controls on creativity tests, supporting a role for REM sleep in creativity.



Introducing Brain Communications

Brain Communications is the open access sister journal to Brain, publishing high-quality preclinical and clinical studies related to diseases of the nervous system or maintaining brain health.


- Online ISSN 1460-2156

- Print ISSN 0006-8950

- Copyright © 2024 Guarantors of Brain


Oxford University Press is a department of the University of Oxford. It furthers the University's objective of excellence in research, scholarship, and education by publishing worldwide.

- Copyright © 2024 Oxford University Press


Open Access

Peer-reviewed

Research Article

Relating Structure and Function in the Human Brain: Relative Contributions of Anatomy, Stationary Dynamics, and Non-stationarities


Affiliation Laboratoire d'Imagerie Fonctionnelle, UMR678, Inserm/UPMC Univ Paris 06, Paris, France

- Arnaud Messé,

- David Rudrauf,

- Habib Benali,

- Guillaume Marrelec

- Published: March 20, 2014

- https://doi.org/10.1371/journal.pcbi.1003530


Investigating the relationship between brain structure and function is a central endeavor for neuroscience research. Yet, the mechanisms shaping this relationship largely remain to be elucidated and are highly debated. In particular, the existence and relative contributions of anatomical constraints and dynamical physiological mechanisms of different types remain to be established. We addressed this issue by systematically comparing functional connectivity (FC) from resting-state functional magnetic resonance imaging data with simulations from increasingly complex computational models, and by manipulating anatomical connectivity obtained from fiber tractography based on diffusion-weighted imaging. We hypothesized that FC reflects the interplay of at least three types of components: (i) a backbone of anatomical connectivity, (ii) a stationary dynamical regime directly driven by the underlying anatomy, and (iii) other stationary and non-stationary dynamics not directly related to the anatomy. We showed that anatomical connectivity alone accounts for up to 15% of FC variance; that there is a stationary regime accounting for up to an additional 20% of variance, and that this regime can be associated with a stationary FC; that a simple stationary model of FC explains FC better than more complex models; and that there is a large remaining variance (around 65%), which must contain the non-stationarities of FC evidenced in the literature. We also showed that homotopic connections across cerebral hemispheres, which are typically improperly estimated, play a strong role in shaping all aspects of FC, notably indirect connections and the topographic organization of brain networks.

Author Summary

By analogy with the road network, the human brain is defined both by its anatomy (the ‘roads’), that is, the way neurons are shaped, clustered together and connected to each other, and by its dynamics (the ‘traffic’): electrical and chemical signals of various types, shapes and strengths constantly propagate through the brain to support its sensorimotor and cognitive functions and its capacity to learn and adapt to disease, and to give rise to consciousness. While anatomy and dynamics are organically intertwined (anatomy contributes to shaping dynamics), the nature and strength of this relation remain largely mysterious. Various hypotheses have been proposed and tested using modern neuroimaging techniques combined with mathematical models of brain activity. In this study, we demonstrate the existence (and quantify the contribution) of a dynamical regime in the brain, termed ‘stationary’, that appears to be largely induced and shaped by the underlying anatomy. We also reveal the critical importance of specific anatomical connections, notably connections between hemispheres, in shaping the global anatomo-functional structure of this dynamical regime.

Citation: Messé A, Rudrauf D, Benali H, Marrelec G (2014) Relating Structure and Function in the Human Brain: Relative Contributions of Anatomy, Stationary Dynamics, and Non-stationarities. PLoS Comput Biol 10(3): e1003530. https://doi.org/10.1371/journal.pcbi.1003530

Editor: Claus C. Hilgetag, Hamburg University, Germany

Received: August 14, 2013; Accepted: February 8, 2014; Published: March 20, 2014

Copyright: © 2014 Messé et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: This work is supported by the Inserm and the University Pierre et Marie Curie (Paris, France). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Coherent behavior and cognition involve synergies between interacting neuronal populations [1]–[3]. Even at rest, in the absence of direct environmental stimulation, these interactions drive the synchronization of spontaneous activity across brain systems, shedding light on the large-scale anatomo-functional organization of the brain [4]. The study of such patterns of synchronization has developed considerably thanks to recent methodological advances in brain imaging data acquisition and analysis. These advances have enabled investigators to estimate interactions in the brain by measuring functional connectivity (FC) from resting-state functional MRI (rs-fMRI). Analyses of FC at rest have supported the hypothesis that the brain is spatially organized into large-scale intrinsic networks [5]–[7], e.g., the so-called resting-state networks [8], [9], such as the default mode network, which have been linked to central integrative cognitive functions [10]–[13]. The study of large-scale intrinsic networks from rs-fMRI has become a central and active area of neuroscience research. However, the mechanisms and factors driving FC, as well as their relative contributions to empirical data, are still highly debated [14] and remain to be elucidated.

Theoretical rationale and empirical findings support the hypothesis that FC is driven and shaped by structural connectivity (SC) between brain systems, i.e., by the actual bundles of white matter fibers connecting neurons [15]. As a first approximation, SC can be inferred from fiber tractography based on diffusion-weighted imaging (DWI) [16]–[19]. A recent study [20], which focused on a small subset of robustly estimated structural connections, demonstrated the existence of a statistical, yet complex, correspondence between FC and specific features of SC (e.g., low vs. high fiber density, short vs. long fibers, intra- vs. interhemispheric connections). However, a large part of FC cannot be explained by SC alone [21]. It appears that FC results from at least two main contributing factors: (i) the underlying anatomical structure of connectivity, and (ii) the dynamics of neuronal populations emerging from their physiology [3]. A key issue is to better understand the relative contributions of these two components to FC. In addition, recent studies using windowed analyses have suggested that FC estimated over an entire acquisition session (referred to as ‘stationary FC’ in the literature) breaks down into a variety of reliable correlation patterns (also referred to as ‘dynamic FC’ or ‘non-stationarities’) when estimated over short time windows (30 s) [14], [22]. The authors argued that FC estimated over short time windows (windowed FC, for short) mostly reflects recurrent transitory patterns that are aggregated when FC is estimated over a whole session. They further suggested that whole-session FC may only be an epiphenomenon without clear physiological underpinning, rather than the reflection of an actual process with stationary FC [14]. This perspective remains to be reconciled with the fact that whole-session FC has been found to be highly reproducible, functionally meaningful, and a useful biomarker in many pathological contexts [23], [24].

Note that, in the recent fMRI data analysis literature, stationarity implicitly refers to a stationary FC (i.e., the invariance of FC over time), to be contrasted with the more general notion of (strong) stationarity, where a model or process is stationary if its parameters remain constant over time [25], [26]. Since SC is temporally stable at the scale of a whole resting-state fMRI session (typically 10 min), we could expect SC to drive a stationary process (in the strong sense). Because SC is furthermore expected to drive FC, we can hypothesize that this stationary process contributes to generating a stationary FC.

In order to bring together the structural and dynamical components underlying FC, some studies have used computational models that incorporate SC together with biophysical models of neuronal activity to generate coherent brain dynamics [27]–[32]. This approach has yielded promising results for understanding the relationship between structure and function [17], [33], [34]. Here, we used a testbed of well-established generative models simulating neuronal dynamics, combined with empirical measures, to investigate the relative contributions of anatomical connections, stationary dynamics, and non-stationarities to the emergence of empirical functional connectivity. In particular, we considered the following hypotheses: (H1) part of FC directly reflects SC; (H2) adding models of physiological mechanisms to SC increases predictive power, and increasingly so as the models become more complex; (H3) part of the variance of FC that is unexplained by models is due to issues in the estimation of SC, e.g., problems with measuring homotopic connections; (H4) there is an actual stationary process reflected in whole-session FC that is not merely an artifact but substantially reflects the driving of the dynamics by SC.

In order to test these hypotheses and estimate the relative contributions of anatomy, stationary dynamics and non-stationarities to FC, we relied on the following approach. After a T1-weighted MRI-based parcellation of the brain (N = 160 regions), SC was estimated as the proportion of white matter fibers connecting pairs of regions, based on probabilistic tractography of DWI data [35]. FC was measured on rs-fMRI data using the Pearson correlation between the time courses of brain regions. We quantified the correlation between SC alone and FC as a reference, and also fed SC to generative neurodynamical models of increasing complexity: a spatial autoregressive (SAR) model [36], analytic models with or without conduction delays [28]–[31], [37], and biologically constrained models [29], [32]. Importantly, all these models were used in their stationary regime in the strong sense, since their parameters were not changed during the simulations. Of these models, only the SAR model is explicitly associated with a stationary FC; the other, more complex models generate dynamics that are compatible with a non-stationary FC. We computed FC from data simulated by these models and compared the results to empirical FC. For each model, performance was quantified using predictive power [29], for each subject as well as for the ‘average subject’ (obtained by averaging SC and empirical FC across subjects). Values for the model parameters were based on the literature, except for the structural coupling strength, which was optimized to maximize each model's performance.
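The two quantities at the core of this approach, FC as a Pearson correlation matrix and predictive power, together with a SAR-type model whose coupling strength is tuned, can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: predictive power is taken here as the Pearson correlation between the upper-triangular entries of simulated and empirical FC, and the SAR model is assumed to take the common form x = k·SC·x + e with unit-variance Gaussian noise e, so that its covariance is (I − k·SC)⁻¹(I − k·SC)⁻ᵀ. The function names and the grid search over k are hypothetical choices made for illustration.

```python
import numpy as np


def functional_connectivity(ts):
    """Pearson correlation between regional time courses (ts: T x N array)."""
    return np.corrcoef(ts, rowvar=False)


def predictive_power(fc_sim, fc_emp):
    """Correlation between the upper-triangular entries (i < j) of two FC matrices."""
    iu = np.triu_indices_from(fc_emp, k=1)
    return np.corrcoef(fc_sim[iu], fc_emp[iu])[0, 1]


def sar_fc(sc, k):
    """FC implied by an assumed SAR model x = k * SC @ x + e, with e ~ N(0, I).

    The covariance is (I - k SC)^-1 (I - k SC)^-T, rescaled to a correlation matrix.
    """
    n = sc.shape[0]
    a = np.linalg.inv(np.eye(n) - k * sc)
    cov = a @ a.T
    d = np.sqrt(np.diag(cov))
    return cov / np.outer(d, d)


def fit_coupling(sc, fc_emp, ks):
    """Grid search over the coupling strength k, keeping the best predictive power."""
    scores = [predictive_power(sar_fc(sc, k), fc_emp) for k in ks]
    best = int(np.argmax(scores))
    return ks[best], scores[best]


# Toy example: a random symmetric "SC" rescaled to unit spectral radius,
# so that I - k SC stays invertible for 0 < k < 1.
rng = np.random.default_rng(0)
n = 20
sc = rng.random((n, n))
sc = (sc + sc.T) / 2
np.fill_diagonal(sc, 0.0)
sc /= np.max(np.abs(np.linalg.eigvalsh(sc)))

fc_emp = sar_fc(sc, 0.5)  # synthetic "empirical" FC generated with k = 0.5
ks = np.linspace(0.05, 0.95, 19)
k_best, pp = fit_coupling(sc, fc_emp, ks)
print(f"best k = {k_best:.2f}, predictive power = {pp:.3f}")
```

On this synthetic target the grid search recovers the generating coupling (k = 0.5) with a predictive power of essentially 1; with real data, `fc_emp` would be the empirical FC and the optimum would be far from perfect.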

Predictive power of models

In agreement with H1, SC explained a significant amount of the variance of whole-session FC for all subjects, as did all generative models (permutation test, p < 0.05, corrected) (Figure 1, panel A). Generative models predicted FC better than SC alone (paired permutation test, p < 0.05, corrected). Predictive power obtained with the average subject ranged from 0.32 for SC alone to 0.43 for the SAR model (Table 1). For a given model, predictive power was reproducible across subjects. Contrary to our hypothesis H2, generative models had similar performance, and complexity did not predict performance. The results remained unchanged when no global signal regression was applied (Figure S1). Also, results for SC alone and the SAR model were similar at finer spatial scales (N = 461 and N = 825 regions, Figure S2) and consistent with a replication dataset (Figure S3). Most importantly, a large part of the variance in the empirical data (at least 82%, i.e., 1 − R²) remained unexplained by this first round of simulations.
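The significance of a predictive power can be assessed with a permutation test. The exact null model used in the study is described in the Methods and is not reproduced here; the sketch below assumes one common choice, relabelling the regions of the predictor matrix (permuting rows and columns together), and uses hypothetical function names. Correction for multiple comparisons across subjects and models, as reported above, is not included.

```python
import numpy as np


def upper(m):
    """Upper-triangular (i < j) entries of a square matrix."""
    return m[np.triu_indices_from(m, k=1)]


def permutation_test(pred, fc_emp, n_perm=1000, seed=0):
    """One-sided permutation test of the correlation between a predictor
    matrix (SC or simulated FC) and empirical FC.

    Null distribution: the regions of the predictor are randomly relabelled
    (rows and columns permuted together), which keeps its matrix structure.
    """
    rng = np.random.default_rng(seed)
    observed = np.corrcoef(upper(pred), upper(fc_emp))[0, 1]
    n = pred.shape[0]
    null = np.empty(n_perm)
    for i in range(n_perm):
        p = rng.permutation(n)
        null[i] = np.corrcoef(upper(pred[np.ix_(p, p)]), upper(fc_emp))[0, 1]
    # Add-one correction: the p-value can never be exactly zero.
    p_value = (1 + np.sum(null >= observed)) / (1 + n_perm)
    return observed, p_value


# Toy example: "empirical" FC = predictor + symmetric noise.
rng = np.random.default_rng(1)
n = 30
a = rng.random((n, n))
pred = (a + a.T) / 2
noise = 0.1 * rng.standard_normal((n, n))
fc_emp = pred + (noise + noise.T) / 2
r, p = permutation_test(pred, fc_emp, n_perm=500)
print(f"r = {r:.2f}, p = {p:.4f}")
```

A paired version (comparing two models on the same subject) would permute model labels rather than region labels; that variant is not shown.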


(A) Predictive power for all connections and when restricted to intra-/interhemispheric and direct/indirect connections. For each type of connection and each model, we show the individual predictive powers (bar chart of means and associated standard deviations), as well as the predictive power for the average subject computed using the original SC (diamonds) or after adding homotopic connections (circles). Of note, SC alone has no predictive power (zero) for the subset of indirect connections, by definition. (B) Patterns of SC, empirical FC, and FC simulated from the SAR model for the average subject, with the associated scatter plot of simulated versus empirical FC (the solid line represents a perfect match). SARh stands for the SAR model with added homotopic connections. Matrices were rearranged to highlight network structure. Homologous regions were arranged symmetrically with respect to the center of the matrix; for instance, the first and last regions are homologous. (C) Left: similarity of functional brain networks across subjects (bar chart of means and associated standard deviations), for the average subject (diamonds), and when adding homotopic connections (circles). Also shown: network maps for the average subject and empirical FC, as well as for FC simulated using either the SAR model with the original SC or the SARh model.

https://doi.org/10.1371/journal.pcbi.1003530.g001

https://doi.org/10.1371/journal.pcbi.1003530.t001

Role of homotopic connections

We reasoned (see hypothesis H3) that part of the unexplained variance could reflect issues with the estimation of SC from DWI, which can be expected because of limitations in current fiber tracking algorithms and the problem of crossing fibers [38]. We know, for instance, that many fibers passing through the corpus callosum are poorly estimated in diffusion imaging, in particular those connecting more lateral parts of the cerebral cortex [39]. Yet, the corpus callosum is the main interhemispheric commissure of the mammalian brain (see [40]). It systematically connects homologous sectors of the cerebral cortex across the two hemispheres in a topographically organized manner, with an antero-posterior gradient, through a system of myelinated homotopic fibers, or ‘homotopic connections’. The hypothesis that SC estimation problems contribute to the unexplained variance in FC was supported by the observation that, in our results, intrahemispheric connections yielded on average a much higher predictive power (e.g., 0.59 for the SAR model) than interhemispheric connections (0.16 for the SAR model).

To further test the role of white matter connections in driving FC, we artificially set all homotopic connections to a constant SC value (0.5) for the average subject and reran all simulations. As a result, the predictive power strongly increased for all models (Figure 1, panels A and B), ranging from 0.39 for SC alone to 0.61 for the SAR model (Table 1). The unexplained variance (1 − R²) was thus reduced to 63%. Moreover, predictive power for intra- and interhemispheric connections became equivalent (0.60 and 0.62, respectively). Interestingly, adding homotopic connections also led to a substantial increase in predictive power for indirect connections, that is, pairs of regions for which SC is zero (from 0.07 to 0.45). The increase in predictive power obtained by adding interhemispheric anatomical connections was highly specific to homotopic connections. When we applied the SAR model to the SC matrix with added homotopic connections and randomly permuted (10 000 permutations) the 80 corresponding interhemispheric connections (so that each region in one hemisphere remained connected to one and only one region in the other hemisphere), the predictive power strongly decreased, even compared to results with the original SC (Figure 2, panel A). We further assessed the specificity of this result by systematically manipulating SC: in three different simulations, we randomly removed, added, and permuted structural connections (10 000 times). In all cases, the predictive power decreased as a function of the proportion of connections manipulated (Figure 2, panel B). Moreover, the changes induced by these manipulations remained small (<0.05), far below the changes induced by adding homotopic connections. All in all, these results suggest that homotopic connections play a key role in shaping the network dynamics, in a complex and non-trivial manner.
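The homotopic manipulation and its shuffled control can be sketched as follows. The sketch assumes, hypothetically, that regions are ordered so that region i and region N − 1 − i are homologous (the arrangement used for display in Figure 1); a real atlas would need an explicit homolog lookup. With N = 160 this yields the 80 interhemispheric pairs mentioned above. Function names are illustrative, and the shuffled control here simply adds the constant value to a random one-to-one pairing rather than reproducing the study's exact permutation procedure.

```python
import numpy as np


def add_homotopic(sc, value=0.5):
    """Return a copy of SC with every homotopic pair (i, n-1-i) set to `value`.

    Assumes (hypothetically) that regions are ordered so that region i and
    region n-1-i are homologous, with the two hemispheres in the two halves.
    """
    sc = sc.copy()
    n = sc.shape[0]
    for i in range(n // 2):
        j = n - 1 - i
        sc[i, j] = sc[j, i] = value
    return sc


def add_shuffled_interhemispheric(sc, value=0.5, rng=None):
    """Control: link each left region to one random right region (one-to-one),
    instead of to its homolog."""
    rng = np.random.default_rng() if rng is None else rng
    sc = sc.copy()
    n = sc.shape[0]
    left = np.arange(n // 2)
    right = n - 1 - rng.permutation(left)  # a random one-to-one pairing
    sc[left, right] = value
    sc[right, left] = value
    return sc


sc = np.zeros((160, 160))
sc_h = add_homotopic(sc)
sc_shuffled = add_shuffled_interhemispheric(sc, rng=np.random.default_rng(0))
print(int((sc_h > 0).sum() // 2), int((sc_shuffled > 0).sum() // 2))  # prints: 80 80
```

Each of the three matrices (original, homotopic, shuffled) would then be passed to the generative model, and the resulting predictive powers compared.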

(A) Predictive power of the SAR model with original SC (green), when adding homotopic connections (‘SARh’, red), or with shuffled homotopic connections (black). (B) Predictive power of the SAR model with original SC (red) and when SC values were randomly permuted, removed or added (from left to right). For each graph, predictive power was quantified as a function of the percentage of connections manipulated.

https://doi.org/10.1371/journal.pcbi.1003530.g002

Predicting functional brain networks

Beyond predicting the overall pattern of FC, we also assessed whether models could predict the empirical organization of FC into a set of intrinsic networks. Connectivity matrices were clustered into groups of non-overlapping brain regions showing high within-group correlation and low between-group correlation, and the resulting partitions into functional brain networks were compared between empirical and simulated FC using the adjusted Rand index (see Methods). Again, the SAR model tended to perform best among all computational models (Figure 1, panel C).
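The adjusted Rand index compares two partitions of the same regions, correcting the raw agreement for chance. The clustering step itself is described in the Methods and is not reproduced; the sketch below assumes the network labels are already available and implements the standard ARI formula from the pair-count contingency table (scikit-learn's `adjusted_rand_score` computes the same quantity). The function name is hypothetical.

```python
from math import comb

import numpy as np


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand index between two partitions of the same regions.

    1 means identical partitions (up to relabelling); values near 0 mean
    agreement no better than chance.
    """
    _, ia = np.unique(np.asarray(labels_a), return_inverse=True)
    _, ib = np.unique(np.asarray(labels_b), return_inverse=True)
    # Contingency table: regions assigned to network i in A and network j in B.
    table = np.zeros((ia.max() + 1, ib.max() + 1), dtype=np.int64)
    np.add.at(table, (ia, ib), 1)
    sum_ij = sum(comb(int(x), 2) for x in table.ravel())
    sum_a = sum(comb(int(x), 2) for x in table.sum(axis=1))
    sum_b = sum(comb(int(x), 2) for x in table.sum(axis=0))
    expected = sum_a * sum_b / comb(len(ia), 2)
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:  # degenerate case, e.g. both partitions trivial
        return 1.0
    return (sum_ij - expected) / (max_index - expected)


print(adjusted_rand_index([0, 0, 1, 1], [0, 0, 1, 2]))  # ≈ 0.571
```

Because the index is invariant to relabelling, network colors or numbering need not match between empirical and simulated partitions.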

Without added homotopic connections in the SC matrix, the simulated networks differed markedly from the empirical networks. In particular, most networks were found to be lateralized. After adding homotopic connections, the resemblance between simulated and empirical networks greatly improved. Networks were more often bilateral and overall consistent with the topography of empirical functional networks, including somatosensory, motor, visual, and associative networks. High FC between the amygdala and ventrolateral sectors of the prefrontal cortex was also correctly predicted by the simulations. There were nevertheless some notable differences. First, the clustering of empirical FC yielded a long-range fronto-parieto-temporal association network (Figure 1, panel C, cyan) that was not observed as such in simulated FC. Second, a parieto-temporal cluster (Figure 1, panel C, red), which was associated with thalamo-striatal networks, was predicted by simulations but was not present in the empirical data. Third, a cluster encompassing the entire cingulate gyrus and precuneus (Figure 1, panel C, green) was predicted by simulations but was broken down into several clusters in the empirical data.

Stationary FC, non-stationary FC, and non-stationarities

The results above show that SC plays a causal role in FC, but one may still ask which aspects of the underlying dynamics are most directly related to this influence. One hypothesis is that SC, in combination with stable physiological processes (e.g., overall gain in synaptic transmission), drives a stationary regime of the dynamics. This hypothesis is supported by the finding that all models tested in this study, which were used in a stationary regime (in the strong sense), explained significantly more variance than SC alone. Furthermore, the fact that the SAR model predicted FC significantly better than all other models (paired permutation test, p < 0.05, corrected) is evidence that this stationary regime is associated with stationary FC.

But, clearly, many variations in the dynamical patterns of brain activity, be they related to spontaneous cognition, physiological regulation, or context-dependent changes, cannot be expected to be associated with a purely stationary FC. Modeling how brain dynamics deal with endogenous and environmental contexts should require more complex models, either stationary or non-stationary, that are able to generate non-stationary (i.e., time-varying) patterns of FC. Given that at best 37% of the variance could be explained by the model of a purely stationary FC (the SAR model), one may ask why the models of higher complexity used in our simulation testbed did not perform better in predicting FC. One possible explanation is that the SAR model was favored in the simulations because we estimated FC over about 10 minutes of actual brain dynamics. In such a configuration, the non-stationarities of FC may cancel out, so that the estimation effectively retains the stationary part of FC. We thus asked whether the more complex models would perform better when non-stationary FC had the potential to be more strongly reflected in the data. We approached this question by computing predictive power on windowed FC as a function of the length of the time window [22], for all possible time windows over which FC could be estimated and for all models. We also investigated the effect of simulation duration (see Methods). We found that the relative performance of the more complex models was still lower than that of the SAR model (Figures 3 and S4). The average predictive power was lower for shorter time windows and increased towards a limit for longer time windows. The SAR model behaved like an ‘upper bound’ for predictive power: the performance of all other models, irrespective of the size of the time window, lay between that of SC alone and that of the SAR model.
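Predictive power on windowed FC can be sketched as follows. The exact pairing of simulated and empirical windows used in the study is described in the Methods and is not reproduced; this sketch assumes one plausible scheme, comparing a fixed model FC matrix against FC estimated in each non-overlapping window of empirical data and averaging the resulting correlations. Windows are counted in samples, so a 30 s window corresponds to 30/TR samples for a given repetition time. Function names are hypothetical, and the toy data are synthetic.

```python
import numpy as np


def windowed_fc(ts, window, step):
    """FC matrices estimated over sliding windows of `window` samples (ts: T x N)."""
    return [np.corrcoef(ts[s:s + window], rowvar=False)
            for s in range(0, len(ts) - window + 1, step)]


def windowed_predictive_power(fc_model, ts_emp, window, step=None):
    """Mean predictive power of a model FC matrix against windowed empirical FC."""
    step = window if step is None else step
    iu = np.triu_indices_from(fc_model, k=1)
    scores = [np.corrcoef(fc_model[iu], fc_w[iu])[0, 1]
              for fc_w in windowed_fc(ts_emp, window, step)]
    return float(np.mean(scores))


# Toy data: a stationary Gaussian process with a fixed correlation matrix.
rng = np.random.default_rng(2)
n_regions, n_samples = 10, 2000
a = rng.standard_normal((n_regions, 3))
cov = a @ a.T + np.eye(n_regions)
d = np.sqrt(np.diag(cov))
fc_true = cov / np.outer(d, d)
ts = rng.multivariate_normal(np.zeros(n_regions), cov, size=n_samples)

for window in (20, 100, 500):
    print(window, round(windowed_predictive_power(fc_true, ts, window), 3))
```

Even with the true FC as the "model", the average predictive power drops for short windows because each windowed estimate is noisier, which mirrors the increase towards a limit described above.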

Predictive power as a function of time-window length across subjects (left) and of duration of simulated runs on the average subject (right). For the color code, see Figure 1.

https://doi.org/10.1371/journal.pcbi.1003530.g003

A straightforward explanation is that the non-stationary patterns of FC generated by the simulation models did not match the non-stationary patterns of empirical FC as they unfolded in the participants' brains during acquisition. Context-dependent and transient dynamics are likely to be missed by models that cannot be contextually constrained in the absence of further information. It is thus difficult to infer how much of the 63% of variance remaining unexplained in whole-session FC actually reflects physiologically meaningful non-stationary FC and, more broadly, non-stationary dynamics.

In the present study, we investigated the respective contributions of anatomical connections, stationary dynamics, and non-stationarities to the emergence of empirical functional connectivity. We compared the performance of computational models in modeling FC and manipulated SC in order to analyze the impact of SC on FC, with and without the filter of combined physiological models of the dynamics.

The importance of white matter fiber pathways in shaping functional brain networks is well established (for reviews, see [15], [17], [21], [23]). Previous modeling studies have supported the importance of the underlying anatomical connections, i.e., SC, in shaping functional relationships among brain systems [16], [41], [42]. In agreement with our hypothesis H1, we showed that functional connectivity could at least in part be explained by structural connectivity alone. After adding homotopic connections to the SC matrix, we found a slight increase in explained variance when predicting whole-session FC from SC alone (+4% of explained variance). In partial agreement with H2, adding models of physiological interactions above and beyond SC alone increased the explained variance in whole-session FC, by 8% for the best-performing model (the SAR model) when no homotopic connections were added, and by 22% when homotopic connections were added. This latter fact, which strongly supports H3, suggests a complex interplay between anatomy as reflected by SC and physiological mechanisms in generating FC. The impact of SC manipulations on predicted FC pertained not only to direct but also to indirect connections. For indirect connections, whole-session FC was much better predicted after adding homotopic connections to SC than before (predictive power of 0.45 versus 0.07). The limited predictive power of SC for FC over indirect connections has puzzled the field [43]. For this reason, many studies only assess the performance of models on direct connections.

Here, we showed that a major factor in driving FC for indirect anatomical connections (+20% in explained variance) is the interplay between a subset of anatomical connections, i.e., homotopic connections (which are typically underestimated by DWI), and the physiological parameters that generate the dynamics underlying FC, themselves conditioned by the possible interactions defined by SC.
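Throughout, explained variance is the square of the predictive power (R² = r²). Recomputing the gains in explained variance from the rounded predictive powers reported in the Results (average subject) gives a quick consistency check of the figures quoted in this section:

```latex
\begin{align*}
\text{SAR vs.\ SC alone, original SC:} \quad & 0.43^2 - 0.32^2 = 0.1849 - 0.1024 \approx 0.08 \\
\text{SAR vs.\ SC alone, with homotopic connections:} \quad & 0.61^2 - 0.39^2 = 0.3721 - 0.1521 = 0.22 \\
\text{Indirect connections, after vs.\ before adding homotopic connections:} \quad & 0.45^2 - 0.07^2 = 0.2025 - 0.0049 \approx 0.20 \\
\text{Unexplained variance, SAR with homotopic connections:} \quad & 1 - 0.61^2 = 1 - 0.3721 \approx 0.63
\end{align*}
```

Small discrepancies with the percentages in the text can arise because the predictive powers are rounded to two decimals.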

Contrary to our expectation (see hypothesis H2), all models tended to perform similarly, irrespective of model complexity. The best-performing model in most cases was the SAR model, a model of stationary FC driven by SC, with 63% of the variance remaining unexplained. It is likely that, above and beyond problems with the estimation of SC from DWI and other incompressible sources of irrelevant noise, much of the unexplained variance in FC relates to non-stationary patterns in FC and, more generally, to non-stationarities in the strong sense. Such non-stationarities are difficult to model in the experimentally unconstrained resting state and in the absence of further information regarding the specific parameters shaping FC. Irrespective of their complexity, computational models can only generate prototypical brain activity, not the subject-dependent activity that took place in the participants' brains during scanning. Such uncertainty hinders the modeling of brain dynamics, and finding ways to address this problem will be a challenge [26], [44]. Even though one objective for neuroscience is to propose generative models capable of producing detailed neuronal dynamics, generative models cannot be informed by this unknown context and, as a consequence, cannot generate context-dependent activity in a manner that would be predictive of empirical data in the absence of additional measures and experimental controls. Nevertheless, and perhaps for that very reason, the study of non-stationarity in FC should become of central interest for the field, as such non-stationarities could explain much of FC (up to 63% according to our simulation results), and thus reflect critical mechanisms for neurocognitive processing.

In the absence of adequate modeling principles, the precise contribution of non-stationarities to the unexplained variance in FC cannot be determined, since other confounding sources of unexplained variance are expected. As we showed, even naive manipulations aimed at estimating the impact of known errors in the DWI-based reconstruction of homotopic connections indicated that such errors could account for 20% of the unexplained variance in predicting empirical FC. How DWI and fiber tracking should be used to estimate structural connectivity optimally is still a matter of intense debate [45]–[48]. Part of the unexplained variance in predicting FC will likely be reduced as better estimates of SC become available.
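The exact manipulation of homotopic connections is not specified in this section, so the following is only one plausible, hypothetical version of it. The sketch assumes the mirror ordering used for the matrices in the supplementary figures, in which region i and region n − 1 − i are homologous (the first and last regions form a pair). It then adds a symmetric edge between each homologous pair. The default weight (the largest existing SC weight) and the name `add_homotopic` are illustrative assumptions, not the values or code used in the study.

```python
import numpy as np

def add_homotopic(sc, weight=None):
    """Return a copy of sc with an edge between each pair of homologous regions.

    Assumes region i and region n - 1 - i are homologous (mirror ordering).
    The edge weight is a hypothetical choice: by default, the largest existing
    SC weight. Existing homotopic weights larger than `weight` are kept.
    """
    n = sc.shape[0]
    if n % 2:
        raise ValueError("expected an even number of regions (two hemispheres)")
    out = np.array(sc, dtype=float)          # copy; the input is left unchanged
    w = out.max() if weight is None else float(weight)
    left = np.arange(n // 2)
    right = n - 1 - left                     # mirror-ordered homologue of each left region
    out[left, right] = np.maximum(out[left, right], w)
    out[right, left] = out[left, right]      # keep the matrix symmetric
    return out

# Usage with a toy 4-region SC (illustrative values): pairs (0, 3) and (1, 2)
# are homologous, and neither pair has a reconstructed connection.
sc = np.array([[0.0, 0.8, 0.1, 0.0],
               [0.8, 0.0, 0.0, 0.2],
               [0.1, 0.0, 0.0, 0.6],
               [0.0, 0.2, 0.6, 0.0]])
sc_h = add_homotopic(sc)
```

Because only the missing homologous pairs are touched, any change in predictive power between `sc` and `sc_h` can be attributed to those connections alone.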

The model showing the best results, the SAR model, explicitly modeled a stationary process with a stationary FC. In line with our hypothesis H4, empirical FC is therefore likely to incorporate stationary components driven by SC. Further knowledge about this stationary process might be gained by analyzing FC computed over much longer periods than is common practice (e.g., hours rather than minutes). This stationary process is itself likely to be only locally stationary. Slow physiological cycles, from nycthemeral to hormonal cycles, as well as development, learning and aging, can be expected to modify the parameters controlling it.

In the present study, we did not take into account the statistical fluctuations induced by the finite length of the time series. Because of this finiteness, even a model that is stationary in the strong sense can generate sample moments that fluctuate over time. For instance, for a multivariate normal model with covariance matrix Σ, the sample sum of squares computed from time series of length N is not equal to NΣ. Instead, it is Wishart distributed with N − 1 degrees of freedom and scale matrix Σ. This phenomenon artificially increases the part of variance that cannot be accounted for by stationary models and therefore works against them. Conversely, a non-stationary model is very unlikely to generate sample moments that are constant over time, so statistical fluctuations cannot at the same time artificially increase the part of variance accounted for by stationary models. As a consequence, by not considering these statistical fluctuations, we underestimated the part of variance that can be accounted for by models that are stationary in the strong sense. In other words, our estimate of the part of variance accounted for by a stationary model is a lower bound on the true value. We can therefore be confident that taking statistical fluctuations into account will only strengthen H4.
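The finite-sample argument can be checked numerically. The sketch below uses illustrative assumptions that are not taken from the study: two regions, a true correlation of 0.5, and N = 40 samples. It draws many independent realizations of a strictly stationary Gaussian process and confirms that the centered sum-of-squares matrix averages to (N − 1)Σ, as expected for a Wishart variable with N − 1 degrees of freedom. It also shows that the sample correlation nonetheless varies from one realization to the next. The function name `scatter_matrices` is hypothetical.

```python
import numpy as np

def scatter_matrices(sigma, n_samples, n_draws, seed=0):
    """Simulate n_draws independent series of length n_samples from a stationary
    zero-mean Gaussian N(0, sigma) and return their centered sum-of-squares
    (scatter) matrices, shape (n_draws, p, p). Each matrix is one draw from a
    Wishart distribution with scale sigma and n_samples - 1 degrees of freedom."""
    rng = np.random.default_rng(seed)
    p = sigma.shape[0]
    x = rng.multivariate_normal(np.zeros(p), sigma, size=(n_draws, n_samples))
    xc = x - x.mean(axis=1, keepdims=True)      # remove each series' sample mean
    return np.einsum("dti,dtj->dij", xc, xc)    # sum of outer products over time

# Illustrative values only: two regions with true correlation 0.5.
sigma = np.array([[1.0, 0.5],
                  [0.5, 1.0]])
N = 40
S = scatter_matrices(sigma, N, n_draws=5000)

mean_scaled = S.mean(axis=0) / (N - 1)             # approaches sigma
r = S[:, 0, 1] / np.sqrt(S[:, 0, 0] * S[:, 1, 1])  # sample correlation per draw
print(np.round(mean_scaled, 2))
print(r.min(), r.max())   # wide spread although the process is strictly stationary
```

Shortening N widens the spread of `r`. This is the effect that, per the argument above, penalizes stationary models when FC is compared over finite windows.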

Our goal here was to investigate how well current generative models of brain activity predict the relationship between structure and function. Some of these models were complex enough that the simulations included here were only possible thanks to a computer cluster. The behavior of all these models depends on the values of their parameters, which we set in agreement with the literature. To what extent this choice affects how well the models predict FC is unclear. A full investigation of this issue is, however, beyond the scope of this study, since optimizing the parameters of all models through extensive exploration of the parameter space is unrealistic at this stage. Nevertheless, to get a sense of the sensitivity of our results to parameter values within the available computational power, we explored the behavior of the Fitzhugh-Nagumo, Wilson-Cowan and Kuramoto models over a subset of the parameter space (see Figures S5 and S6). Parameter values had little influence on predictive power, which in all cases remained below that of the SAR, the simplest model tested.

We formulated H2 to test for a relationship between complexity and realism in the models we used. One would expect a tight connection between the two, since the more complex generative models in our study were designed to take biophysical mechanisms into account, with physiologically relevant parameters whose values were often chosen based on prior experimental results. Realism usually comes at the cost of complexity. As a consequence, it is often (implicitly) assumed that, among the models we selected, the more complex a model is, the more realistic it is and the better it will fit the data. We stated H2, based on this rationale drawn from the literature, in order to put this assumption to the test. The results show that, for the models we used with their sets of parameters, an increase in complexity was not associated with an increase in performance. This suggests that, for these models, complexity and realism are not as tightly connected as expected.

Given that the SAR model is the only model that does not include a hemodynamic modeling step (Balloon-Windkessel), it cannot be ruled out that the superiority of the SAR reflects issues with this step. To check that this is not the case, we computed predictive power for all models without the hemodynamic model. Predictive power was largely insensitive to the presence of the hemodynamic model (see Figure S7). In particular, the SAR model remained, overall, an upper bound in terms of predictive power.

Finally, we note that we relied on a definition of SC restricted to the white matter compartment. Although this is standard in the field, local intrinsic SC also exists within the gray matter. However, current models generally make prior assumptions about such intrinsic SC. Moreover, intrinsic SC currently remains impossible to measure reliably for the entire brain.

Despite the complexity of the problems and the limitations of current modeling approaches, computational modeling of large-scale brain dynamics remains an essential scientific endeavor. It is key to better understanding generative mechanisms and to making progress in brain physiology, physiopathology and, more generally, theoretical neuroscience. It is also central to the search for accurate and meaningful biomarkers in aging and disease [49]. Moreover, computational modeling of FC opens the possibility of making inferences about specific biophysical parameters, including the underlying anatomical connectivity itself. Despite their limited predictive power, simpler models can be useful in this context. The SAR model, introduced in [36], may be well suited to model essential stationary aspects of the generative mechanisms of FC. One advantage of such a simple and analytically tractable model is its very low computational burden. Beyond that, it could serve as the basis for straightforward estimation of model parameters that can be used to compare clinical populations, and these parameters could constitute important biomarkers of disease.

Ethics statement

All participants gave written informed consent and the protocol was approved by the local Ethics Committee of the Pitié-Salpêtrière Hospital (number: 44-08; Paris, France).

Twenty-one right-handed healthy volunteers were recruited from the local community (11 males, mean age 22 ± 2.4 years). Data were acquired using a 3 T Siemens Trio TIM MRI scanner (CENIR, Paris, France). For acquisition and preprocessing details, see Text S1. For each subject, preprocessing yielded three matrices: one of SC, one of average fiber lengths, and one of empirical FC. These matrices were also averaged across subjects (‘average subject’).

Simulations

We used eight generative models with various levels of complexity:

- the SAR model, a purely spatial model with no dynamics that expresses BOLD fluctuations within one region as a linear combination of the fluctuations in other regions;
- the Wilson-Cowan system, a model of the activity of excitatory and inhibitory neuronal populations;
- two rate models (with or without conduction delays), simplified versions of the Wilson-Cowan system obtained by considering only the excitatory population;
- the Kuramoto model, which simulates neuronal activity using oscillators;
- the Fitzhugh-Nagumo model, which aims at reproducing complex behaviors such as those observed in conductance-based models;
- the neural-mass model, also conductance-based and with strong biophysical constraints;
- and finally, the spiking-neuron model, the most constrained model in the current study, which models neuronal populations as attractors.

For more details, see Text S2.
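The sketch below shows why a model with no dynamics can still produce FC between regions that are not directly connected. It uses the textbook simultaneous autoregressive form y = kWy + e, with e ~ N(0, σ²I), whose covariance is σ²(I − kW)⁻¹(I − kW)⁻ᵀ. This is an assumed formulation: the exact expression used in the study is Equation (2) of Text S2, which may differ, for example in how the SC matrix W is normalized. The 4-region chain, the unit-spectral-radius scaling, k = 0.5 and the name `sar_fc` are illustrative choices only.

```python
import numpy as np

def sar_fc(w, k, sigma2=1.0):
    """FC implied by an SAR model y = k*W*y + e, with e ~ N(0, sigma2*I).

    Covariance: sigma2 * (I - kW)^-1 (I - kW)^-T, then rescaled to correlations.
    """
    n = w.shape[0]
    a_inv = np.linalg.inv(np.eye(n) - k * w)
    cov = sigma2 * a_inv @ a_inv.T
    d = np.sqrt(np.diag(cov))
    return cov / np.outer(d, d)

# Toy SC for 4 regions connected in a chain 0-1-2-3 (illustrative values only).
sc = np.array([[0, 1, 0, 0],
               [1, 0, 1, 0],
               [0, 1, 0, 1],
               [0, 0, 1, 0]], dtype=float)
# Assumed normalization: scale SC to unit spectral radius, so that |k| < 1
# keeps I - kW invertible.
w = sc / np.max(np.abs(np.linalg.eigvalsh(sc)))
fc = sar_fc(w, k=0.5)
print(np.round(fc, 2))
```

Regions 0 and 2 share no SC edge, yet `fc[0, 2]` is positive because correlation propagates through region 1. In this sketch, that indirect path is how a purely spatial model predicts FC for indirect connections.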

All models took an SC matrix as input, and all but the SAR were treated as models of neuronal (rather than BOLD) activity. Simulated fMRI BOLD signals were obtained from simulated neuronal activity by means of the Balloon-Windkessel hemodynamic model [50], [51]. The global mean signal was then regressed out of each region's time series. Finally, simulated FC was computed as the Pearson correlation between simulated time series. For the SAR model, we computed simulated FC directly from the analytical expression of the covariance (see Equation (2) in Text S2). All models had a parameter representing the coupling strength between regions. To limit the computational burden, this parameter was optimized separately for each model on the average subject (Text S2). After optimization, we generated three 8-min runs of BOLD activity and averaged the corresponding FCs to obtain the simulated FC for each dynamical model and each subject. For the average subject, simulated FC was obtained by feeding the average SC matrix to the different models.
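The post-processing applied to every dynamical model (global signal regression, then Pearson correlation, then averaging over three runs) can be sketched as follows. The Balloon-Windkessel step is omitted, so the input stands for already-simulated BOLD time series. Regressing each region on an intercept plus the global mean is an assumed, common implementation rather than the study's code. The function names, the 240-sample run length (8 min at an assumed TR of 2 s) and the surrogate random data are hypothetical.

```python
import numpy as np

def regress_global_signal(ts):
    """Regress the global mean signal out of each region's time series.

    ts has shape (T, n_regions). Every column is regressed on [1, g], where g is
    the across-region mean at each time point, and the residuals are returned.
    """
    g = ts.mean(axis=1)
    design = np.column_stack([np.ones_like(g), g])
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    return ts - design @ beta

def functional_connectivity(ts):
    """Pearson correlation matrix between regions (columns of ts)."""
    return np.corrcoef(ts, rowvar=False)

def simulated_fc(runs):
    """Average FC over several simulated runs, each of shape (T, n_regions)."""
    return np.mean([functional_connectivity(regress_global_signal(r)) for r in runs],
                   axis=0)

# Usage with surrogate data standing in for simulated BOLD signals:
# 3 runs of 240 samples (8 min at an assumed TR of 2 s) over 6 regions.
rng = np.random.default_rng(0)
runs = [rng.standard_normal((240, 6)) for _ in range(3)]
fc = simulated_fc(runs)
```

After this regression, the residuals average to zero across regions at every time point. This is one reason why global signal regression tends to introduce negative correlations into FC.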

Performance

Modeling performance was assessed using predictive power and the similarity of spatial patterns. Predictive power was quantified for each subject and for the average subject as the Pearson correlation between simulated and empirical FC [29]. To assess the similarity of functional brain networks, SC, empirical FC and simulated FC were each decomposed into 10 networks using agglomerative hierarchical clustering with the generalized Ward criterion [52]. The networks obtained from SC and simulated FC were compared to those obtained from empirical FC using the adjusted Rand index [53], [54]. The Rand index quantifies the similarity between two partitions of the brain into networks as the proportion of pairs of regions on which the two partitions agree, that is, pairs whose two regions are either in the same network in both partitions or in different networks in both partitions. The adjustment corrects for the level of similarity expected by chance alone.
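Both performance measures are straightforward to compute. The sketch below assumes that predictive power is the Pearson correlation over the upper-triangular (off-diagonal) entries, so each region pair is counted once. It implements the adjusted Rand index with the standard contingency-table formula of Hubert and Arabie. The hierarchical clustering step that produces the network labels (generalized Ward criterion [52]) is not reproduced here, and the function names are hypothetical.

```python
import numpy as np
from math import comb

def predictive_power(fc_sim, fc_emp):
    """Pearson correlation between simulated and empirical FC, computed over
    the upper-triangular entries (each region pair counted once)."""
    iu = np.triu_indices_from(fc_emp, k=1)
    return np.corrcoef(fc_sim[iu], fc_emp[iu])[0, 1]

def _pairs(counts):
    """Number of unordered pairs that can be formed within each count, summed."""
    return sum(comb(int(c), 2) for c in counts)

def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand index between two partitions of the same regions."""
    _, a = np.unique(labels_a, return_inverse=True)
    _, b = np.unique(labels_b, return_inverse=True)
    # Contingency table: table[i, j] = number of regions in network i of
    # partition A and in network j of partition B.
    table = np.zeros((a.max() + 1, b.max() + 1), dtype=int)
    np.add.at(table, (a, b), 1)
    same_both = _pairs(table.ravel())     # pairs grouped together in both partitions
    same_a = _pairs(table.sum(axis=1))    # pairs grouped together in A
    same_b = _pairs(table.sum(axis=0))    # pairs grouped together in B
    total = comb(len(a), 2)
    expected = same_a * same_b / total    # agreement expected by chance
    max_index = 0.5 * (same_a + same_b)
    if max_index == expected:             # degenerate partitions
        return 1.0
    return (same_both - expected) / (max_index - expected)

print(adjusted_rand_index([0, 0, 1, 1], [0, 0, 1, 2]))  # 4/7, about 0.571
```

The index equals 1 for identical partitions regardless of how networks are numbered, and is close to 0 for unrelated partitions. This chance correction is why it is preferred to the raw Rand index here.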

Analysis of dynamics

Empirical and simulated windowed FC were computed on individual subjects using sliding time-windows (increment of 20 s) of varying length (from 20 to 420 s in steps of 20 s). Predictive power was computed as the correlation between every pair of time-windows of equal length, one taken from the simulated and one from the empirical windowed FC. This approach was only applied to the dynamical models. For SC alone and the SAR model, simulated FC remains, by definition, constant through time and, as a consequence, windowed FC was set equal to whole-session FC. The influence of simulated run duration on predictive power was also investigated. For each model, three one-hour runs were simulated on the average subject, and predictive power was computed as a function of simulated run duration. For the same reason as above, SC alone and the SAR model did not depend on simulation duration.
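A minimal sketch of the windowed analysis follows. The repetition time (TR) is not given in this section, so the usage assumes TR = 2 s purely for illustration. The window lengths and the 20-s increment follow the text. Predictive power between windows again uses the upper-triangular FC entries, which is an assumption. The function names and the surrogate random data are hypothetical.

```python
import numpy as np

def windowed_fc(ts, tr, window_s, step_s=20.0):
    """Sliding-window FC for ts of shape (T, n) sampled every tr seconds.
    Returns an array of shape (n_windows, n, n)."""
    win = int(round(window_s / tr))
    step = int(round(step_s / tr))
    starts = range(0, ts.shape[0] - win + 1, step)
    return np.array([np.corrcoef(ts[s:s + win], rowvar=False) for s in starts])

def cross_window_power(fc_sim_w, fc_emp_w):
    """Predictive power for every (simulated, empirical) pair of windows of equal
    length: Pearson correlation of the upper-triangular FC entries.
    Returns an array of shape (n_sim_windows, n_emp_windows)."""
    iu = np.triu_indices(fc_emp_w.shape[1], k=1)
    sim = fc_sim_w[:, iu[0], iu[1]]
    emp = fc_emp_w[:, iu[0], iu[1]]
    # z-score each window's entries (population std); the mean product is then r.
    sim_z = (sim - sim.mean(axis=1, keepdims=True)) / sim.std(axis=1, keepdims=True)
    emp_z = (emp - emp.mean(axis=1, keepdims=True)) / emp.std(axis=1, keepdims=True)
    return sim_z @ emp_z.T / sim.shape[1]

# Usage with surrogate data; TR = 2 s is an assumption, not the study's value.
rng = np.random.default_rng(0)
tr = 2.0
emp_ts = rng.standard_normal((240, 8))   # 8 min of "empirical" data, 8 regions
sim_ts = rng.standard_normal((240, 8))   # one simulated run
mean_power = {}
for window_s in range(20, 421, 20):      # 20 s to 420 s in steps of 20 s
    p = cross_window_power(windowed_fc(sim_ts, tr, window_s),
                           windowed_fc(emp_ts, tr, window_s))
    mean_power[window_s] = p.mean()
```

A window spanning the whole session reduces to whole-session FC. This mirrors the treatment of SC alone and the SAR model described above.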

Supporting Information

Figure S1. Performance of computational models when no global signal regression was performed. (A) Predictive power for all connections and when restricted to intra/interhemispheric, direct/indirect connections. For each type of connection and each model, we represented the individual predictive powers (bar chart representing means and associated standard deviations), as well as the predictive power for the average subject computed using the original SC (diamonds), or after adding homotopic connections (circles). Of note, SC alone has no predictive power (zero) for the subset of indirect connections, by definition. (B) Patterns of SC, empirical FC and FC simulated from the SAR model for the average subject and associated scatter plot of simulated versus empirical FC (solid line represents perfect match). SARh stands for the SAR model with added homotopic connections. Matrices were rearranged such that network structure is highlighted. Homologous regions were arranged symmetrically with respect to the center of the matrix; for instance, the first and last regions are homologous. (C) Similarity of functional brain networks across subjects (bar chart with means and associated standard deviations), for the average subject (diamonds), and when adding homotopic connections (circles) (left). Network maps for the average subject and empirical FC, as well as for FC simulated using either the SAR model with original SC or the SARh.

https://doi.org/10.1371/journal.pcbi.1003530.s001

Figure S2. Performance of SC alone and the SAR model at finer spatial scales. Predictive power for all connections and when restricted to intra/interhemispheric, direct/indirect connections. For each type of connection and each model, we represented the individual predictive powers (bar chart representing mean and associated standard deviation), as well as the predictive power for the average subject computed using the original SC (diamonds), or after adding homotopic connections (circles).

https://doi.org/10.1371/journal.pcbi.1003530.s002

Figure S3. Performance of computational models on the replication dataset. The replication dataset was from the study of Hagmann and colleagues [55]. Brain networks were defined at low anatomical granularity (N = 66 regions), and connectivity measures were averaged over five healthy volunteers. (A) Predictive power for all connections and when restricted to intra/interhemispheric, direct/indirect connections. For each type of connection and each model, we represented the individual predictive powers (bar chart representing means and associated standard deviations), as well as the predictive power for the average subject computed using the original SC (diamonds), or after adding homotopic connections (circles). Of note, SC alone has no predictive power (zero) for the subset of indirect connections, by definition. (B) Patterns of SC, empirical FC and FC simulated from the SAR model for the average subject and associated scatter plot of simulated versus empirical FC (solid line represents perfect match). SARh stands for the SAR model with added homotopic connections. Matrices were rearranged such that network structure is highlighted. Homologous regions were arranged symmetrically with respect to the center of the matrix; for instance, the first and last regions are homologous. (C) Similarity of functional brain networks across subjects (bar chart with means and associated standard deviations), for the average subject (diamonds), and when adding homotopic connections (circles) (left). Network maps for the average subject and empirical FC, as well as for FC simulated using either the SAR model with original SC or the SARh.

https://doi.org/10.1371/journal.pcbi.1003530.s003

Figure S4. Effect of time on performance. Predictive power of computational models as a function of the time-window length for each subject (graphs) and model (color).

https://doi.org/10.1371/journal.pcbi.1003530.s004

Figure S5. Exploration of the parameter space for the Fitzhugh-Nagumo model. (Left) Phase diagrams (i.e., x-y plane) for an uncoupled model (k = 0) over various values of the parameters α and β. The model operates mostly in an oscillatory regime for the range of parameter values investigated. (Right) Predictive power as a function of α and β. The black dot represents the parameter set used in our simulations, while the black square corresponds to the values from [28]. The values used in our simulations gave rise to higher predictive power than the parameter values from [28]. In any case, for the range of parameters considered, the predictive power always remained lower than that obtained with the SAR model.

https://doi.org/10.1371/journal.pcbi.1003530.s005

Figure S6. Effect of velocity on predictive power. Predictive power as a function of the coupling strength and conduction velocity in generative models. Black dots represent values used for subsequent simulations. These simulations show that predictive power is only weakly influenced by velocity. In any case, for the range of parameters considered, the predictive power also always remained lower than that obtained with the SAR model.

https://doi.org/10.1371/journal.pcbi.1003530.s006

Figure S7. Effect of the hemodynamic model. Predictive power for all connections and when restricted to intra/interhemispheric, direct/indirect connections. For each type of connection and each model, we represented the predictive power for the average subject computed using the BOLD signal (diamonds, solid line) or using the neuronal activity (circles, dashed line). Of note, the predictions differ slightly from those in Figure 1 because most models include a stochastic component that varies from run to run.

https://doi.org/10.1371/journal.pcbi.1003530.s007

Text S1. Data and preprocessing.

https://doi.org/10.1371/journal.pcbi.1003530.s008

Text S2. Computational models.

https://doi.org/10.1371/journal.pcbi.1003530.s009

Acknowledgments

The authors are thankful to Olaf Sporns (Department of Psychological and Brain Sciences, Indiana University, Bloomington, USA) and Christopher J. Honey (Department of Psychology, Princeton University, Princeton, USA) for providing the neural-mass model; to Gustavo Deco, Étienne Hugues and Joanna Cabral (Computational Neuroscience Group, Department of Technology, Universitat Pompeu Fabra, Barcelona, Spain) for providing the Kuramoto and rate models as well as the spiking model; and to Olaf Sporns and Patric Hagmann (Department of Radiology, University Hospital Center and University of Lausanne, Lausanne, Switzerland) for sharing their data for replication. We would also like to thank them for fruitful discussions. The authors are grateful to Stéphane Lehéricy and his team (Center for Neuroimaging Research, Paris, France) for providing them with the data, and especially to Romain Valabrègue for his help in handling coarse-grained distributed parallelization of computational tasks.

Author Contributions

Conceived and designed the experiments: AM DR HB GM. Performed the experiments: AM DR GM. Analyzed the data: AM. Wrote the paper: AM DR HB GM.


- 40. Schmahmann JD, Pandya DN (2006) Fiber pathways of the brain. Oxford University Press.

- 52. Batagelj V (1988) Generalized Ward and related clustering problems. In: Bock HH, editor. Classification and Related Methods of Data Analysis. North-Holland. pp. 67–74.

Experimental Brain Research

Founded in 1966, Experimental Brain Research publishes original contributions on many aspects of experimental research of the central and peripheral nervous system. The scope of the journal encompasses neurophysiological mechanisms underlying sensory, motor, and cognitive functions in health and disease. This includes developmental, cellular, molecular, computational and translational neuroscience approaches. The journal does not normally consider manuscripts with a singular specific focus on pharmacology or the action of specific natural substances on the nervous system.

Experimental Brain Research is pleased to offer Registered Reports for authors submitting to the journal. Please see the full instructions here: https://www.springer.com/journal/221/updates/19883440

Article Types: Original Paper, Review, Mini-Review, Registered Reports, Case Study, Letter to the Editor. Before submitting a manuscript, please consult "Submission Guidelines" at www.springer.com/221.

- Winston Byblow,

- Bill J. Yates

Latest issue

Volume 242, Issue 3

Latest articles

Unveiling the invisible: receivers use object weight cues for grip force planning in handover actions.

- L. Kopnarski

- C. Voelcker-Rehage

Sleep deprivation induces late deleterious effects in a pharmacological model of Parkinsonism

- L. B. Lopes-Silva

- D. M. G. Cunha

- R. H. Silva

A computational model of motion sickness dynamics during passive self-motion in the dark

- Aaron R. Allred

- Torin K. Clark

Inhibition of fatty acid amide hydrolase reverses aberrant prefrontal gamma oscillations in the sub-chronic PCP model for schizophrenia

- Alexandre Seillier

Effects of the perceived temporal distance of events on mental time travel and on its underlying brain circuits

- Claudia Casadio

- Ivan Patané

- Francesca Benuzzi

Journal updates

Join Experimental Brain Research as an Associate Editor (Neuropsychology)

Experimental Brain Research is recruiting a new Associate Editor to serve on the editorial team. We welcome applications from researchers with expertise in neuropsychology, cognitive neuroscience and neurorehabilitation.

Important Update

It is with profound sadness that we announce that Dr. Francesca Frassinetti, an Associate Editor for Experimental Brain Research and Professor at Università di Bologna, died suddenly on February 6 in an automobile accident.


Thanks to our reviewers

A special note of thanks to our reviewers in the first half of 2020.

Journal information

- Biological Abstracts

- CAB Abstracts

- Chemical Abstracts Service (CAS)

- Current Contents/Life Sciences

- Google Scholar

- Japanese Science and Technology Agency (JST)

- Norwegian Register for Scientific Journals and Series

- OCLC WorldCat Discovery Service

- Pathway Studio

- Science Citation Index Expanded (SCIE)

- TD Net Discovery Service

- UGC-CARE List (India)


© Springer-Verlag GmbH Germany, part of Springer Nature

- Find a journal

- Publish with us

- Track your research

- MyU : For Students, Faculty, and Staff

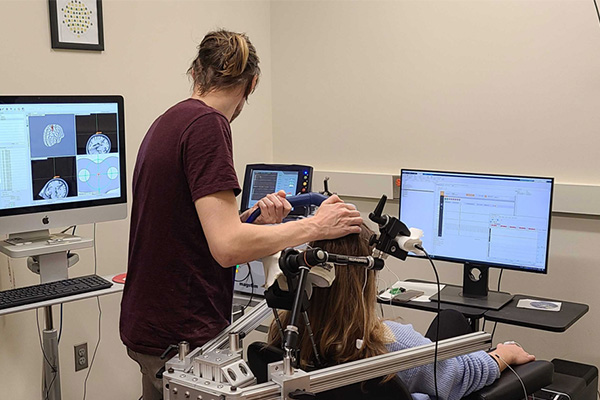

New study reveals breakthrough in understanding brain stimulation therapies

For the first time, researchers show how the brain can precisely adapt to external stimulation.

MINNEAPOLIS/ST. PAUL (03/14/2024) — For the first time, researchers at the University of Minnesota Twin Cities showed that non-invasive brain stimulation can change a specific brain mechanism that is directly related to human behavior. This is a major step forward for discovering new therapies to treat brain disorders such as schizophrenia, depression, Alzheimer’s disease, and Parkinson’s disease.

The study was recently published in Nature Communications, a peer-reviewed, open access scientific journal.

Researchers used what is called “transcranial alternating current stimulation” to modulate brain activity. This technique is also known as neuromodulation. By applying a small electrical current to the brain, the timing of when brain cells are active is shifted. This modulation of neural timing is related to neuroplasticity, which is a change in the connections between brain cells that is needed for human behavior, learning, and cognition.

“Previous research showed that brain activity was time-locked to stimulation. What we found in this new study is that this relationship slowly changed and the brain adapted over time as we added in external stimulation,” said Alexander Opitz, University of Minnesota biomedical engineering associate professor. “This showed brain activity shifting in a way we didn’t expect.”

This result is called “neural phase precession.” This is when the brain activity gradually changes over time in relation to a repeating pattern, like an external event or in this case non-invasive stimulation. In this research, all three investigated methods (computational models, humans, and animals) showed that the external stimulation could shift brain activity over time.

“The timing of this repeating pattern has a direct impact on brain processes, for example, how we navigate space, learn, and remember,” Opitz said.

The discovery of this new technique shows how the brain adapts to external stimulation. This technique can increase or decrease brain activity, but is most powerful when it targets specific brain functions that affect behaviors. This way, long-term memory as well as learning can be improved. The long-term goal is to use this technique in the treatment of psychiatric and neurological disorders.

Opitz hopes that this discovery will help bring improved knowledge and technology to clinical applications, which could lead to more personalized therapies for schizophrenia, depression, Alzheimer’s disease, and Parkinson’s disease.

In addition to Opitz, the research team included co-first authors Miles Wischnewski and Harry Tran. Other team members from the University of Minnesota Biomedical Engineering Department include Zhihe Zhao, Zachary Haigh, Nipun Perera, Ivan Alekseichuk, Sina Shirinpour and Jonna Rotteveel. This study was in collaboration with Dr. Jan Zimmermann, associate professor in the University of Minnesota Medical School.

This work was supported primarily by the National Institutes of Health (NIH), along with the Brain & Behavior Research Foundation and the University of Minnesota’s MnDRIVE (Minnesota’s Discovery, Research, and InnoVation Economy) initiative. Computational resources were provided by the Minnesota Supercomputing Institute (MSI).

To read the entire research paper, titled “Induced neural phase precession through exogenous electric fields”, visit the Nature Communications website.

Rhonda Zurn, College of Science and Engineering

University Public Relations


News Release

Monday, March 18, 2024

NIH studies find severe symptoms of “Havana Syndrome,” but no evidence of MRI-detectable brain injury or biological abnormalities

Compared to healthy volunteers, affected U.S. government personnel did not exhibit differences that would explain symptoms.

Using advanced imaging techniques and in-depth clinical assessments, a research team at the National Institutes of Health (NIH) found no significant evidence of MRI-detectable brain injury, nor differences in most clinical measures compared to controls, among a group of federal employees who experienced anomalous health incidents (AHIs). These incidents, including hearing noise and experiencing head pressure followed by headache, dizziness, cognitive dysfunction and other symptoms, have been described in the news media as “Havana Syndrome” since U.S. government personnel stationed in Havana first reported the incidents. Scientists at the NIH Clinical Center conducted the research over the course of nearly five years and published their findings in two papers in JAMA today.

“Our goal was to conduct thorough, objective and reproducible evaluations to see if we could identify structural brain or biological differences in people who reported AHIs,” said Leighton Chan, M.D., chief, rehabilitation medicine and acting chief scientific officer, NIH Clinical Center, and lead author on one of the papers. “While we did not identify significant differences in participants with AHIs, it’s important to acknowledge that these symptoms are very real, cause significant disruption in the lives of those affected and can be quite prolonged, disabling and difficult to treat.”

Researchers designed multiple methods to evaluate more than 80 U.S. government employees and their adult family members, mostly stationed abroad, who had reported an AHI, and compared them to matched healthy controls. The control groups included healthy volunteers who had similar work assignments but did not report AHIs. In this study, participants underwent a battery of clinical, auditory, balance, visual, neuropsychological and blood biomarker tests. In addition, they received different types of MRI scans aimed at investigating the volume, structure and function of the brain.

In this study, researchers obtained multiple measurements and used several methods and models to analyze the data. This was done to ensure the findings were highly reproducible, meaning similar results were found regardless of how many times participants were evaluated or their data statistically analyzed. Scientists also used deep phenotyping, which is an analysis of observable traits or biochemical characteristics of an individual, to assess any correlations between clinically reported symptoms and neuroimaging findings.

For the imaging portion of the study, participants underwent MRI scans an average of 80 days following symptom onset, although some participants had an MRI as soon as 14 days after reporting an AHI. Using thorough and robust methodology, which resulted in highly reproducible MRI metrics, the researchers were unable to identify a consistent set of imaging abnormalities that might differentiate participants with AHIs from controls.

“A lack of evidence for an MRI-detectable difference between individuals with AHIs and controls does not exclude that an adverse event impacting the brain occurred at the time of the AHI,” said Carlo Pierpaoli, M.D., Ph.D., senior investigator and chief of the Laboratory on Quantitative Medical Imaging at the National Institute of Biomedical Imaging and Bioengineering, part of NIH, and lead author on the neuroimaging paper. “It is possible that individuals with an AHI may be experiencing the results of an event that led to their symptoms, but the injury did not produce the long-term neuroimaging changes that are typically observed after severe trauma or stroke. We hope these results will alleviate concerns about AHI being associated with severe neurodegenerative changes in the brain.”

Similarly, there were no significant differences between individuals reporting AHIs and matched controls with respect to most clinical, research and biomarker measures, except for certain self-reported measures. Compared to controls, participants with AHIs self-reported significantly increased symptoms of fatigue, post-traumatic stress and depression. Forty-one percent of participants in the AHI group, from nearly every geographic area, met the criteria for functional neurological disorders (FNDs), a group of common neurological movement disorders caused by an abnormality in how the brain functions, or had significant somatic symptoms. FNDs can be associated with depression, anxiety and high stress. Most of the AHI group with FND met specific criteria to enable the diagnosis of persistent postural-perceptual dizziness, also known as PPPD. Symptoms of PPPD include dizziness, non-spinning vertigo and fluctuating unsteadiness provoked by environmental or social stimuli that cannot be explained by another neurologic disorder.

“The post-traumatic stress and mood symptoms reported are not surprising given the ongoing concerns of many of the participants,” said Louis French, Psy.D., neuropsychologist and deputy director of the National Intrepid Center of Excellence at Walter Reed National Military Medical Center and a co-investigator on the study. “Often these individuals have had significant disruption to their lives and continue to have concerns about their health and their future. This level of stress can have significant negative impacts on the recovery process.”

The researchers note that if the symptoms were caused by some external phenomenon, that phenomenon did not produce persistent or detectable pathophysiologic changes. Additionally, it is possible that the physiologic markers of an external phenomenon are no longer detectable or cannot be identified with current methodologies and the available sample size.

About the NIH Clinical Center: The NIH Clinical Center is the clinical research hospital for the National Institutes of Health. Through clinical research, clinician-investigators translate laboratory discoveries into better treatments, therapies and interventions to improve the nation's health. More information: https://clinicalcenter.nih.gov .

About the National Institute of Biomedical Imaging and Bioengineering (NIBIB): NIBIB’s mission is to improve health by leading the development and accelerating the application of biomedical technologies. The Institute is committed to integrating the physical and engineering sciences with the life sciences to advance basic research and medical care. NIBIB supports emerging technology research and development within its internal laboratories and through grants, collaborations, and training. More information is available at the NIBIB website: https://www.nibib.nih.gov .

About the National Institutes of Health (NIH): NIH, the nation's medical research agency, includes 27 Institutes and Centers and is a component of the U.S. Department of Health and Human Services. NIH is the primary federal agency conducting and supporting basic, clinical, and translational medical research, and is investigating the causes, treatments, and cures for both common and rare diseases. For more information about NIH and its programs, visit www.nih.gov .

NIH…Turning Discovery Into Health ®

Pierpaoli C, Nayak A, Hafiz R, et al. Neuroimaging Findings in United States Government Personnel and their Family Members Involved in Anomalous Health Incidents. JAMA. Published online March 18, 2024. doi: 10.1001/jama.2024.2424

Chan L, Hallett M, Zalewski C, et al. Clinical, Biomarker, and Research Tests Among United States Government Personnel and their Family Members Involved in Anomalous Health Incidents. JAMA. Published online March 18, 2024. doi: 10.1001/jama.2024.2413


Sensors (Basel)

Brain-Computer Interface: Advancement and Challenges

M. F. Mridha

1 Department of Computer Science and Engineering, Bangladesh University of Business and Technology, Dhaka 1216, Bangladesh

Sujoy Chandra Das

Muhammad Mohsin Kabir, Aklima Akter Lima, Md. Rashedul Islam

2 Department of Computer Science and Engineering, University of Asia Pacific, Dhaka 1216, Bangladesh

Yutaka Watanobe

3 Department of Computer Science and Engineering, University of Aizu, Aizu-Wakamatsu 965-8580, Japan


Brain-Computer Interface (BCI) is an advanced, multidisciplinary, and active research domain that draws on neuroscience, signal processing, biomedical sensors, hardware, and related fields. Over the past decades, a great deal of groundbreaking research has been conducted in this domain. However, no review has yet covered the BCI domain comprehensively. Hence, this study presents a comprehensive overview of the BCI domain. It covers several applications of BCI and highlights the significance of the domain. Then, each element of BCI systems, including techniques, datasets, feature extraction methods, evaluation metrics, existing BCI algorithms, and classifiers, is explained concisely. In addition, a brief overview of the technologies and hardware used in BCI, mostly sensors, is included. Finally, the paper investigates several unsolved challenges of BCI and discusses possible solutions.

1. Introduction

The quest for direct communication between a person and a computer has always been an attractive topic for scientists and researchers. A Brain-Computer Interface (BCI) system directly connects the human brain and the outside environment. The BCI is a real-time brain-machine interface that interacts with external parameters. A BCI system uses the user’s brain activity signals as the medium of communication between the person and the computer, translating them into the required output. It enables users to operate external devices through brain activity alone, without relying on peripheral nerves or muscles.

BCI has long fascinated researchers. Recently, it has become an attractive area of scientific inquiry and a possible means of establishing a direct connection between the brain and technology. Many research and development projects have implemented this concept, and it has become one of the fastest-expanding fields of scientific inquiry. Many scientists have tried and applied various methods of communication between humans and computers in different BCI forms. The field has progressed from a simple concept in the early days of digital technology to the highly complex signal recognition, recording, and analysis techniques of today. In 1929, Hans Berger [ 1 ] became the first person to record a human electroencephalogram (EEG) [ 2 ], which reflects the electrical activity of the brain as measured from the scalp. He tried it on a boy with a brain tumor; since then, EEG signals have been used clinically to identify brain disorders. In 1973, Vidal [ 3 ] made the first effort to communicate between a human and a computer using EEG, coining the phrase “Brain-Computer Interface”. He listed all of the components required to construct a functional BCI. His setup used an experiment room separated from the control and computer rooms. The experiment room required three screens, and the subject’s EEG was sent to a desk-sized amplifier in the control area, which also held two more screens and a printer.

The concept of combining brains and technology has constantly stimulated people’s interest, and it has become a reality because of recent advances in neurology and engineering, which have opened the pathway to repairing and possibly enhancing human physical and mental capacities. Medical applications are considered the sector in which BCI is flourishing most. Cochlear implants [ 4 ] for the deaf and deep brain stimulation for Parkinson’s disease are examples of medical uses that are becoming more prevalent. Beyond these medical applications, security, lie detection, alertness monitoring, telepresence, gaming, education, art, and human enhancement are just a few uses for brain–computer interfaces (BCIs), also known as brain–machine interfaces or BMIs [ 5 ]. Each BCI application follows different approaches and methods, and each method has its own benefits and drawbacks. The degree to which performance can be improved while minute-to-minute and day-to-day variability is reduced is crucial for the future of BCI technology. Such advances rely on the capacity to systematically evaluate and compare different BCI techniques, so that the most promising approaches can be identified.

Although BCI technologies and their applications across different sectors can seem complex, most BCI applications follow a standard structure consisting of signal acquisition, pre-processing, feature extraction, classification, and device control. Signal acquisition connects the brain to the computer and gathers information from the brain signals. Pre-processing, feature extraction, and classification together make the acquired signal more usable. Finally, device control serves the primary motivation: using the signals to drive an application, a prosthetic, or another device.
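The five-stage structure of signal acquisition, pre-processing, feature extraction, classification, and device control can be illustrated with a toy pipeline. The following is a minimal, hypothetical Python sketch, not a method taken from this review. The synthetic signal, the 128 Hz sampling rate, the 10 Hz and 20 Hz candidate rhythms, and the `MOVE_LEFT`/`MOVE_RIGHT` commands are all illustrative assumptions. Pre-processing here is only DC-offset removal, and classification simply picks the candidate frequency with the largest single-bin DFT power; practical BCIs use richer filtering, features, and trained classifiers.

```python
import math
from typing import Dict, List, Tuple

FS = 128  # sampling rate in Hz (assumed for illustration)

# Hypothetical mapping from a dominant rhythm (Hz) to a device command.
COMMANDS = {10.0: "MOVE_LEFT", 20.0: "MOVE_RIGHT"}


def acquire(freq_hz: float, seconds: float = 1.0, fs: int = FS) -> List[float]:
    """Signal acquisition stand-in: a synthetic one-channel trace.

    Contains a DC offset, a rhythm at freq_hz, and a small 50 Hz
    component standing in for mains interference.
    """
    n = int(seconds * fs)
    return [
        5.0
        + math.sin(2 * math.pi * freq_hz * t / fs)
        + 0.1 * math.sin(2 * math.pi * 50.0 * t / fs)
        for t in range(n)
    ]


def preprocess(x: List[float]) -> List[float]:
    """Pre-processing: remove the DC offset by subtracting the mean."""
    mean = sum(x) / len(x)
    return [v - mean for v in x]


def band_power(x: List[float], freq_hz: float, fs: int = FS) -> float:
    """Power of a single DFT bin at freq_hz (squared magnitude divided by N)."""
    re = sum(v * math.cos(2 * math.pi * freq_hz * t / fs) for t, v in enumerate(x))
    im = -sum(v * math.sin(2 * math.pi * freq_hz * t / fs) for t, v in enumerate(x))
    return (re * re + im * im) / len(x)


def extract_features(
    x: List[float], freqs: Tuple[float, ...] = (10.0, 20.0)
) -> Dict[float, float]:
    """Feature extraction: power at each candidate frequency."""
    return {f: band_power(x, f) for f in freqs}


def classify(features: Dict[float, float]) -> float:
    """Classification: choose the frequency with the largest power."""
    return max(features, key=lambda f: features[f])


def control(label: float) -> str:
    """Device control: translate the class label into a command."""
    return COMMANDS[label]


def run_pipeline(freq_hz: float) -> str:
    """Chain the five stages for one synthetic trial."""
    raw = acquire(freq_hz)
    clean = preprocess(raw)
    features = extract_features(clean)
    label = classify(features)
    return control(label)


if __name__ == "__main__":
    print(run_pipeline(10.0))  # MOVE_LEFT
    print(run_pipeline(20.0))  # MOVE_RIGHT
```

Because each stage is a separate function, any one of them (for example, the classifier) could be replaced without touching the others, which mirrors the modular structure described above.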

The many methods and procedures that can be combined in BCI systems demand extensive research. A few studies on specific aspects of BCI have also been conducted. Given the wealth of excellent BCI research, a comprehensive survey is now necessary. Therefore, an extensive survey analysis was carried out, focusing on the nine review papers featured in this study. Most of these surveys, however, do not address contemporary trends and applications, or the purposes and limitations of BCI methods. An overview and comparison of the existing literature reviews on BCI are shown in Table 1 .