- Teesside University Student & Library Services


Critical Appraisal for Health Students


Critical appraisal of a quantitative paper (RCT)

This guide, aimed at health students, provides basic-level support for appraising quantitative research papers. It is designed for students who have already attended lectures on critical appraisal. One framework for appraising quantitative research (based on reliability and internal and external validity) is provided, and there is an opportunity to practise the technique on a sample article.

Please note that this framework is for appraising one particular type of quantitative research, the Randomised Controlled Trial (RCT), which is defined as:

a trial in which participants are randomly assigned to one of two or more groups: the experimental group or groups receive the intervention or interventions being tested; the comparison group (control group) receive usual care or no treatment or a placebo. The groups are then followed up to see if there are any differences between the results. This helps in assessing the effectiveness of the intervention. (CASP, 2020)
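The random assignment in this definition can be illustrated with a short Python sketch of simple randomisation into groups of equal (or near-equal) size. This is a teaching sketch under stated assumptions, not a trial tool. The function name `randomise`, the group labels and the participant IDs are hypothetical. Real RCTs usually rely on a pre-generated, concealed allocation sequence (often blocked or stratified) that is held independently of the people recruiting participants.

```python
import random


def randomise(participants, groups=("intervention", "control"), seed=None):
    """Randomly allocate participants to groups of equal (or near-equal) size.

    The list is shuffled, then participants are dealt to the groups in turn,
    so group sizes differ by at most one.
    """
    rng = random.Random(seed)      # a seeded generator makes the allocation reproducible
    shuffled = list(participants)  # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    return {person: groups[i % len(groups)] for i, person in enumerate(shuffled)}


# Hypothetical participant IDs P01..P10
ids = [f"P{n:02d}" for n in range(1, 11)]
allocation = randomise(ids, seed=2016)
for pid in ids:
    print(pid, allocation[pid])
```

Dealing the shuffled list out in turn keeps the groups the same size, which pure coin-toss allocation does not guarantee.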

Support materials

- Framework for reading quantitative papers (RCTs)

- Critical appraisal of a quantitative paper PowerPoint

To practise following this framework for critically appraising a quantitative article, please look at the following article:

Marrero, D.G. et al (2016) 'Comparison of commercial and self-initiated weight loss programs in people with prediabetes: a randomized control trial', AJPH Research, 106(5), pp. 949-956.

Critical Appraisal of a quantitative paper (RCT): practical example


How to use this practical example

Using the framework, you can have a go at appraising a quantitative paper - we are going to look at the following article:

Marrero, D.G. et al (2016) 'Comparison of commercial and self-initiated weight loss programs in people with prediabetes: a randomized control trial', AJPH Research, 106(5), pp. 949-956.

Step 1. Take a quick look at the article.

Step 2. Read the internal validity questions below and look for the answers in the article.

Step 3. In the online version of this guide, click on each question and our answers will appear.

Step 4. Repeat with the other aspects: external validity and reliability.

Questioning the internal validity:

- Randomisation: How were participants allocated to each group? Did a randomisation process take place?

- Comparability of groups: How similar were the groups (e.g. age, sex, ethnicity)? Is this made clear?

- Blinding (none, single, double or triple): Who was not aware of which group a patient was in (e.g. nobody; only the patient; the patient and clinician; or the patient, clinician and researcher)? Was it feasible for more blinding to have taken place?

- Equal treatment of groups: Were both groups treated in the same way?

- Attrition: What percentage of participants dropped out? Did this adversely affect one group? Has this been evaluated?

- Overall internal validity: Does the research measure what it is supposed to be measuring?

Questioning the external validity:

- Attrition: Was everyone accounted for at the end of the study? Was any attempt made to contact drop-outs?

- Sampling approach: How was the sample selected? Was it based on probability or non-probability sampling? What was the approach (e.g. simple random, convenience)? Was this an appropriate approach?

- Sample size (power calculation): How many participants were there? Was a sample size calculation performed? Did the study recruit enough participants to meet it?

- Exclusion/inclusion criteria: Were the criteria set out clearly? Were they based on recognised diagnostic criteria?

- Overall external validity: Can the results be applied to the wider population?

Questioning the reliability (measurement tool):

- Internal consistency reliability (Cronbach’s alpha): Has a Cronbach’s alpha score of 0.7 or above been reported?

- Test-retest reliability correlation: Was the test repeated more than once? Were the same results obtained? Has a correlation coefficient been reported, and is it above 0.7?

- Validity of measurement tool: Is it an established tool? If not, what has been done to check that it is reliable (e.g. pilot study, expert panel, literature review)?

- Criterion validity (test against other tools): Has a criterion validity comparison been carried out? Was the score above 0.7?

- Overall reliability: How consistent are the measurements?

Overall validity and reliability:

- Overall, how valid and reliable is the paper?
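Several of the reliability questions use a conventional 0.7 cut-off. Cronbach’s alpha is one such statistic. To show what it summarises, here is a minimal Python sketch of the standard formula: alpha = k/(k-1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The function name and the ratings are hypothetical. When appraising a paper, you would check the value the authors report rather than recompute it.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a questionnaire.

    `responses` is a list of respondents; each respondent is a list of k item
    scores. Implements alpha = k/(k-1) * (1 - sum(item variances) / variance(totals)).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(r) for r in responses])  # variance of total scores
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 3-item scale answered by 5 respondents (1-5 ratings)
ratings = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 3]]
alpha = cronbach_alpha(ratings)
print(f"alpha = {alpha:.2f}", "meets 0.7" if alpha >= 0.7 else "below 0.7")
```

With these made-up ratings the sketch gives alpha ≈ 0.91. Sample variances are used for both the items and the totals. The same divisor appears on both sides of the ratio, so population variances would give the same result.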


- Last Updated: Aug 25, 2023 2:48 PM

- URL: https://libguides.tees.ac.uk/critical_appraisal

Handbook of Research Methods in Health Social Sciences, pp. 27–49

Quantitative Research

- Leigh A. Wilson

- Reference work entry

- First Online: 13 January 2019


Quantitative research methods are concerned with the planning, design, and implementation of strategies to collect and analyze data. Descartes, the seventeenth-century philosopher, suggested that how the results are achieved is often more important than the results themselves, as the journey taken along the research path is a journey of discovery. High-quality quantitative research is characterized by the attention given to the methods and the reliability of the tools used to collect the data. The ability to critique research in a systematic way is an essential component of a health professional’s role in delivering high-quality, evidence-based healthcare. This chapter provides a simple overview of how new researchers and health practitioners can understand and use quantitative methods. It offers practical, realistic guidance in a learner-friendly way, following a logical sequence through hypothesis development, study design, data collection and handling, and finally data analysis and interpretation.

- Quantitative

- Epidemiology

- Data analysis

- Methodology

- Interpretation


Babbie ER. The practice of social research. 14th ed. Belmont: Wadsworth Cengage; 2016.


Descartes (1637). Cited in: Halverson W. A concise introduction to philosophy. 3rd ed. New York: Random House; 1976.

Doll R, Hill AB. The mortality of doctors in relation to their smoking habits. BMJ. 1954;328(7455):1529–33. https://doi.org/10.1136/bmj.328.7455.1529.


Liamputtong P. Research methods in health: foundations for evidence-based practice. 3rd ed. Melbourne: Oxford University Press; 2017.

McNabb DE. Research methods in public administration and nonprofit management: quantitative and qualitative approaches. 2nd ed. New York: Armonk; 2007.

Merriam-Webster. Dictionary. http://www.merriam-webster.com . Accessed 20th December 2017.

Olesen Larsen P, von Ins M. The rate of growth in scientific publication and the decline in coverage provided by Science Citation Index. Scientometrics. 2010;84(3):575–603.

Pannucci CJ, Wilkins EG. Identifying and avoiding bias in research. Plast Reconstr Surg. 2010;126(2):619–25. https://doi.org/10.1097/PRS.0b013e3181de24bc.

Petrie A, Sabin C. Medical statistics at a glance. 2nd ed. London: Blackwell Publishing; 2005.

Portney LG, Watkins MP. Foundations of clinical research: applications to practice. 3rd ed. New Jersey: Pearson Publishing; 2009.

Sheehan J. Aspects of research methodology. Nurse Educ Today. 1986;6:193–203.

Wilson LA, Black DA. Health, science research and research methods. Sydney: McGraw Hill; 2013.


Author information

Authors and affiliations

Leigh A. Wilson: School of Science and Health, Western Sydney University, Penrith, NSW, Australia; Faculty of Health Science, Discipline of Behavioural and Social Sciences in Health, University of Sydney, Lidcombe, NSW, Australia


Corresponding author: Leigh A. Wilson.

Editor information

Editors and affiliations.

Pranee Liamputtong


Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this entry

Cite this entry.

Wilson, L.A. (2019). Quantitative Research. In: Liamputtong, P. (eds) Handbook of Research Methods in Health Social Sciences. Springer, Singapore. https://doi.org/10.1007/978-981-10-5251-4_54


DOI : https://doi.org/10.1007/978-981-10-5251-4_54

Published : 13 January 2019

Publisher Name : Springer, Singapore

Print ISBN : 978-981-10-5250-7

Online ISBN : 978-981-10-5251-4

eBook Packages : Social Sciences Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences


Critical Appraisal Tools

JBI’s critical appraisal tools assist in assessing the trustworthiness, relevance and results of published papers.

These tools have been revised. Recently published articles detail the revision.

"Assessing the risk of bias of quantitative analytical studies: introducing the vision for critical appraisal within JBI systematic reviews"

"Revising the JBI quantitative critical appraisal tools to improve their applicability: an overview of methods and the development process"


Analytical Cross Sectional Studies

Checklist for analytical cross sectional studies.

Case Control Studies

Checklist for case control studies.

Case Reports

Checklist for case reports.

Case Series

Checklist for case series.

Munn Z, Barker TH, Moola S, Tufanaru C, Stern C, McArthur A, Stephenson M, Aromataris E. Methodological quality of case series studies: an introduction to the JBI critical appraisal tool. JBI Evidence Synthesis. 2020;18(10):2127-2133


Cohort Studies

Checklist for cohort studies.

Diagnostic Test Accuracy Studies

Checklist for diagnostic test accuracy studies.

Campbell JM, Klugar M, Ding S, Carmody DP, Hakonsen SJ, Jadotte YT, White S, Munn Z. Chapter 9: Diagnostic test accuracy systematic reviews. In: Aromataris E, Munn Z (Editors). JBI Manual for Evidence Synthesis. JBI, 2020


Economic Evaluations

Checklist for economic evaluations.

Prevalence Studies

Checklist for prevalence studies.

Munn Z, Moola S, Lisy K, Riitano D, Tufanaru C. Chapter 5: Systematic reviews of prevalence and incidence. In: Aromataris E, Munn Z (Editors). JBI Manual for Evidence Synthesis. JBI, 2020


Qualitative Research

Checklist for qualitative research.

Lockwood C, Munn Z, Porritt K. Qualitative research synthesis: methodological guidance for systematic reviewers utilizing meta-aggregation. Int J Evid Based Healthc. 2015;13(3):179–187

Chapter 2: Systematic reviews of qualitative evidence


Quasi-Experimental Studies

Checklist for quasi-experimental studies.

Barker TH, Habibi N, Aromataris E, Stone JC, Leonardi-Bee J, Sears K, et al. The revised JBI critical appraisal tool for the assessment of risk of bias for quasi-experimental studies. JBI Evid Synth. 2024;22(3):378-88.


Randomized Controlled Trials

Checklist for randomized controlled trials.

Barker TH, Stone JC, Sears K, Klugar M, Tufanaru C, Leonardi-Bee J, Aromataris E, Munn Z. The revised JBI critical appraisal tool for the assessment of risk of bias for randomized controlled trials. JBI Evidence Synthesis. 2023;21(3):494-506


Randomized controlled trials checklist (archive).

Systematic Reviews

Checklist for systematic reviews.

Aromataris E, Fernandez R, Godfrey C, Holly C, Khalil H, Tungpunkom P. Summarizing systematic reviews: methodological development, conduct and reporting of an Umbrella review approach. Int J Evid Based Healthc. 2015;13(3):132-40.

Chapter 10: Umbrella Reviews

Textual Evidence: Expert Opinion

Checklist for textual evidence: expert opinion.

McArthur A, Klugarova J, Yan H, Florescu S. Chapter 4: Systematic reviews of text and opinion. In: Aromataris E, Munn Z (Editors). JBI Manual for Evidence Synthesis. JBI, 2020


Textual Evidence: Narrative

Checklist for textual evidence: narrative.

Textual Evidence: Policy

Checklist for textual evidence: policy.


What Is Quantitative Research? | Definition, Uses & Methods

Published on June 12, 2020 by Pritha Bhandari . Revised on June 22, 2023.

Quantitative research is the process of collecting and analyzing numerical data. It can be used to find patterns and averages, make predictions, test causal relationships, and generalize results to wider populations.

Quantitative research is the opposite of qualitative research, which involves collecting and analyzing non-numerical data (e.g., text, video, or audio).

Quantitative research is widely used in the natural and social sciences: biology, chemistry, psychology, economics, sociology, marketing, etc.

Examples of quantitative research questions include:

- What is the demographic makeup of Singapore in 2020?

- How has the average temperature changed globally over the last century?

- Does environmental pollution affect the prevalence of honey bees?

- Does working from home increase productivity for people with long commutes?


You can use quantitative research methods for descriptive, correlational or experimental research.

- In descriptive research, you simply seek an overall summary of your study variables.

- In correlational research, you investigate relationships between your study variables.

- In experimental research, you systematically examine whether there is a cause-and-effect relationship between variables.

Correlational and experimental research can both be used to formally test hypotheses, or predictions, using statistics. The results may be generalized to broader populations based on the sampling method used.

To collect quantitative data, you will often need to use operational definitions that translate abstract concepts (e.g., mood) into observable and quantifiable measures (e.g., self-ratings of feelings and energy levels).

Note that quantitative research is at risk for certain research biases, including information bias, omitted variable bias, sampling bias, and selection bias. Be aware of potential biases as you collect and analyze your data so that you can minimize their impact on your work.


Once data is collected, you may need to process it before it can be analyzed. For example, survey and test data may need to be transformed from words to numbers. Then, you can use statistical analysis to answer your research questions.
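Here is a small example of turning words into numbers: a Python sketch that codes Likert-style survey answers on a 1-5 scale. The category labels and numeric codes are assumptions. So is the choice to treat unrecognised answers as missing (`None`). A real study would fix its coding scheme in advance and document it.

```python
# Hypothetical 5-point Likert coding scheme (an assumption for illustration)
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_responses(responses, codes=LIKERT_CODES):
    """Convert text answers to numbers; unrecognised answers become None (missing)."""
    # Normalise case and stray whitespace before looking the answer up
    return [codes.get(answer.strip().lower()) for answer in responses]


raw = ["Agree", "strongly agree ", "Neutral", "n/a", "Disagree"]
coded = code_responses(raw)
print(coded)
```

Keeping missing answers as `None`, rather than silently coding them as a number, leaves the decision on how to handle missing data to the analysis stage.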

Descriptive statistics will give you a summary of your data and include measures of averages and variability. You can also use graphs, scatter plots and frequency tables to visualize your data and check for any trends or outliers.

Using inferential statistics, you can make predictions or generalizations based on your data. You can test your hypothesis or use your sample data to estimate the population parameter.
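As an example of estimating a population parameter, the sketch below computes an approximate 95% confidence interval for a mean using only Python's standard library. It assumes the normal (z) approximation, which is reasonable for large samples. For small samples, a t-based interval from a statistics package would normally be used instead. The function name and the five scores are hypothetical, chosen only to keep the example short.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for a population mean.

    Uses the normal (z) approximation, which is reasonable for large samples;
    small samples would normally use the t distribution instead.
    """
    m = mean(sample)
    standard_error = stdev(sample) / sqrt(len(sample))  # sample SD / sqrt(n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)      # about 1.96 for 95%
    return m - z * standard_error, m + z * standard_error


# Hypothetical sample of scores
scores = [10, 12, 14, 16, 18]
low, high = mean_confidence_interval(scores)
print(f"95% CI for the mean: {low:.2f} to {high:.2f}")
```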

For example, suppose you have collected procrastination ratings from two groups of participants. First, you use descriptive statistics to get a summary of the data: you find the mean (average) and the mode (most frequent rating) of procrastination in each group, and plot the data to see if there are any outliers.
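The two-group summary described above might look like this in Python. The ratings are made up. The outlier rule is one common convention that the text does not specify: Tukey's fences, which flag values more than 1.5 times the interquartile range beyond the quartiles. A plot would normally accompany these numbers.

```python
from statistics import mean, mode, quantiles


def describe(ratings):
    """Mean, mode and Tukey-fence outliers (beyond 1.5 x IQR) for one group."""
    q1, _, q3 = quantiles(ratings, n=4)  # quartiles, default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "mean": mean(ratings),
        "mode": mode(ratings),  # most frequent rating
        "outliers": [r for r in ratings if r < low or r > high],
    }


# Hypothetical procrastination ratings (1-7) for two groups
group_a = [3, 4, 4, 5, 4, 3, 4, 5, 2, 4]
group_b = [5, 6, 5, 7, 6, 5, 6, 1, 5, 5]
for name, group in (("A", group_a), ("B", group_b)):
    print(name, describe(group))
```

Here group B's single rating of 1 is flagged as an outlier, which is the kind of value you would then check for a data entry error.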

You can also assess the reliability and validity of your data collection methods to indicate how consistently and accurately your methods actually measured what you wanted them to.

Quantitative research is often used to standardize data collection and generalize findings. Strengths of this approach include:

- Replication

Repeating the study is possible because of standardized data collection protocols and tangible definitions of abstract concepts.

- Direct comparisons of results

The study can be reproduced in other cultural settings, times or with different groups of participants. Results can be compared statistically.

- Large samples

Data from large samples can be processed and analyzed using reliable and consistent procedures through quantitative data analysis.

- Hypothesis testing

Using formalized and established hypothesis testing procedures means that you have to carefully consider and report your research variables, predictions, data collection and testing methods before coming to a conclusion.

Despite the benefits of quantitative research, it is sometimes inadequate in explaining complex research topics. Its limitations include:

- Superficiality

Using precise and restrictive operational definitions may inadequately represent complex concepts. For example, the concept of mood may be represented with just a number in quantitative research, but explained with elaboration in qualitative research.

- Narrow focus

Predetermined variables and measurement procedures can mean that you ignore other relevant observations.

- Structural bias

Despite standardized procedures, structural biases can still affect quantitative research. Missing data, imprecise measurements or inappropriate sampling methods are biases that can lead to the wrong conclusions.

- Lack of context

Quantitative research often uses unnatural settings like laboratories or fails to consider historical and cultural contexts that may affect data collection and results.


Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organizations.

Operationalization means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioral avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalize the variables that you want to measure.

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).

If you are doing experimental research, you also have to consider the internal and external validity of your experiment.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.
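To make the idea of a result arising by chance concrete, here is a minimal sketch of one hypothesis test: an exact permutation test for a difference between two group means. It is a swapped-in illustration, not the only or most common approach; t-tests are more widely reported. Because it enumerates every possible regrouping of the data, it is only practical for small groups. The function name and the data are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_test(a, b):
    """Exact two-sided permutation test for a difference in group means.

    Returns the proportion of all ways of splitting the pooled data into
    groups of the original sizes whose mean difference is at least as large
    as the one observed, i.e. how often chance regrouping does as well.
    """
    pooled = list(a) + list(b)
    observed = abs(mean(a) - mean(b))
    extreme = total = 0
    for chosen in combinations(range(len(pooled)), len(a)):
        chosen_set = set(chosen)
        group_1 = [pooled[i] for i in chosen]
        group_2 = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        # small tolerance so float rounding does not hide ties with the observed value
        if abs(mean(group_1) - mean(group_2)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical scores for two small groups
p = permutation_test([1, 2, 3], [4, 5, 6])
print(f"p = {p:.2f}")
```

There are 20 ways to split these six values into two groups of three. Only the observed split and its mirror image produce a mean difference as large as 3, so p = 2/20 = 0.10.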

Cite this Scribbr article


Bhandari, P. (2023, June 22). What Is Quantitative Research? | Definition, Uses & Methods. Scribbr. Retrieved April 8, 2024, from https://www.scribbr.com/methodology/quantitative-research/


Quantitative Research – Methods, Types and Analysis


Quantitative Research

Quantitative research is a type of research that collects and analyzes numerical data to test hypotheses and answer research questions. This research typically involves a large sample size and uses statistical analysis to make inferences about a population based on the data collected. It often involves the use of surveys, experiments, or other structured data collection methods to gather quantitative data.

Quantitative Research Methods

Quantitative Research Methods are as follows:

Descriptive Research Design

Descriptive research design is used to describe the characteristics of a population or phenomenon being studied. This research method is used to answer the questions of what, where, when, and how. Descriptive research designs use a variety of methods such as observation, case studies, and surveys to collect data. The data is then analyzed using statistical tools to identify patterns and relationships.

Correlational Research Design

Correlational research design is used to investigate the relationship between two or more variables. Researchers use correlational research to determine whether a relationship exists between variables and to what extent they are related. This research method involves collecting data from a sample and analyzing it using statistical tools such as correlation coefficients.
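The most familiar correlation coefficient is Pearson's r. It runs from -1 (perfect negative linear relationship) through 0 (none) to +1 (perfect positive). The minimal Python sketch below computes it from its definition. The function name and the study-hours data are hypothetical. The same coefficient is one of the statistics reported for test-retest reliability. A strong correlation on its own does not show cause and effect.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("r is undefined when a variable does not vary")
    return cov / sqrt(var_x * var_y)  # ranges from -1 to +1


# Hypothetical data: weekly study hours and exam marks for six students
hours = [2, 4, 5, 7, 8, 10]
marks = [52, 58, 61, 66, 70, 79]
print(f"r = {pearson_r(hours, marks):.2f}")
```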

Quasi-experimental Research Design

Quasi-experimental research design is used to investigate cause-and-effect relationships between variables. This research method is similar to experimental research design, but it lacks full control over the independent variable. Researchers use quasi-experimental research designs when it is not feasible or ethical to manipulate the independent variable.

Experimental Research Design

Experimental research design is used to investigate cause-and-effect relationships between variables. This research method involves manipulating the independent variable and observing the effects on the dependent variable. Researchers use experimental research designs to test hypotheses and establish cause-and-effect relationships.

Survey Research

Survey research involves collecting data from a sample of individuals using a standardized questionnaire. This research method is used to gather information on attitudes, beliefs, and behaviors of individuals. Researchers use survey research to collect data quickly and efficiently from a large sample. Survey research can be conducted in various ways, such as online, by phone, by mail, or through in-person interviews.

Quantitative Research Analysis Methods

Here are some commonly used quantitative research analysis methods:

Statistical Analysis

Statistical analysis is the most common quantitative research analysis method. It involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis can be used to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

Regression Analysis

Regression analysis is a statistical technique used to analyze the relationship between one dependent variable and one or more independent variables. Researchers use regression analysis to identify and quantify the impact of independent variables on the dependent variable.
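For the one-predictor case, the least-squares line can be computed directly from means and sums of squares. The sketch below is a minimal Python illustration of simple linear regression, using hypothetical exercise and heart-rate data. The function name is an assumption. Models with several independent variables (multiple regression) need matrix methods and are usually fitted with a statistics package.

```python
def simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x (one predictor)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("x must vary")
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx                # change in y per one-unit change in x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours of exercise per week (x) and resting heart rate (y)
exercise = [0, 1, 2, 3, 4, 5]
heart_rate = [78, 76, 73, 71, 70, 67]
slope, intercept = simple_linear_regression(exercise, heart_rate)
print(f"heart_rate = {intercept:.1f} + ({slope:.2f} x exercise)")
```

The slope is the quantity usually reported as the "impact" of the independent variable: here, the estimated change in resting heart rate per extra hour of weekly exercise in this made-up data.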

Factor Analysis

Factor analysis is a statistical technique used to identify underlying factors that explain the correlations among a set of variables. Researchers use factor analysis to reduce a large number of variables to a smaller set of factors that capture the most important information.

Structural Equation Modeling

Structural equation modeling is a statistical technique used to test complex relationships between variables. It involves specifying a model that includes both observed and unobserved variables, and then using statistical methods to test the fit of the model to the data.

Time Series Analysis

Time series analysis is a statistical technique used to analyze data that is collected over time. It involves identifying patterns and trends in the data, as well as any seasonal or cyclical variations.
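A moving average is one simple way to see a trend through seasonal variation. The sketch below assumes hypothetical monthly data over two years. A 12-month window averages across each full seasonal cycle, so the remaining movement mostly reflects the underlying trend. Formal time series methods (for example, seasonal decomposition or ARIMA models) go well beyond this.

```python
def moving_average(series, window):
    """Simple moving average: the mean of each consecutive run of `window` values."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [sum(series[i:i + window]) / window for i in range(len(series) - window + 1)]


# Hypothetical monthly clinic attendances over two years
visits = [120, 95, 110, 130, 150, 160, 155, 140, 125, 115, 100, 118,
          128, 102, 117, 139, 158, 171, 163, 149, 133, 121, 108, 126]
trend = moving_average(visits, 12)  # a 12-month window averages out the seasonal cycle
print([round(v, 1) for v in trend])
```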

Multilevel Modeling

Multilevel modeling is a statistical technique used to analyze data that is nested within multiple levels. For example, researchers might use multilevel modeling to analyze data that is collected from individuals who are nested within groups, such as students nested within schools.

Applications of Quantitative Research

Quantitative research has many applications across a wide range of fields. Here are some common examples:

- Market Research: Quantitative research is used extensively in market research to understand consumer behavior, preferences, and trends. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform marketing strategies, product development, and pricing decisions.

- Health Research: Quantitative research is used in health research to study the effectiveness of medical treatments, identify risk factors for diseases, and track health outcomes over time. Researchers use statistical methods to analyze data from clinical trials, surveys, and other sources to inform medical practice and policy.

- Social Science Research: Quantitative research is used in social science research to study human behavior, attitudes, and social structures. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform social policies, educational programs, and community interventions.

- Education Research: Quantitative research is used in education research to study the effectiveness of teaching methods, assess student learning outcomes, and identify factors that influence student success. Researchers use experimental and quasi-experimental designs, as well as surveys and other quantitative methods, to collect and analyze data.

- Environmental Research: Quantitative research is used in environmental research to study the impact of human activities on the environment, assess the effectiveness of conservation strategies, and identify ways to reduce environmental risks. Researchers use statistical methods to analyze data from field studies, experiments, and other sources.

Characteristics of Quantitative Research

Here are some key characteristics of quantitative research:

- Numerical data: Quantitative research involves collecting numerical data through standardized methods such as surveys, experiments, and observational studies. This data is analyzed using statistical methods to identify patterns and relationships.

- Large sample size: Quantitative research often involves collecting data from a large sample of individuals or groups in order to increase the reliability and generalizability of the findings.

- Objective approach: Quantitative research aims to be objective and impartial in its approach, focusing on the collection and analysis of data rather than personal beliefs, opinions, or experiences.

- Control over variables: Quantitative research often involves manipulating variables to test hypotheses and establish cause-and-effect relationships. Researchers aim to control for extraneous variables that may impact the results.

- Replicable: Quantitative research aims to be replicable, meaning that other researchers should be able to conduct similar studies and obtain similar results using the same methods.

- Statistical analysis: Quantitative research involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis allows researchers to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

- Generalizability: Quantitative research aims to produce findings that can be generalized to larger populations beyond the specific sample studied. This is achieved through the use of random sampling methods and statistical inference.
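Simple random sampling, the most basic probability method, gives every member of the sampling frame the same chance of being selected. The Python sketch below assumes a hypothetical list of 500 patients; the function name is also hypothetical. Random sampling decides who takes part in a study and mainly supports external validity. It is distinct from random allocation in an experiment, which decides which group each participant joins.

```python
import random


def simple_random_sample(sampling_frame, n, seed=None):
    """Draw n members without replacement; each member has the same chance of selection."""
    if n > len(sampling_frame):
        raise ValueError("sample size cannot exceed the sampling frame")
    return random.Random(seed).sample(list(sampling_frame), n)


# Hypothetical sampling frame of 500 registered patients
frame = [f"patient_{i:03d}" for i in range(500)]
sample = simple_random_sample(frame, 50, seed=1)
print(sample[:5])
```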

Examples of Quantitative Research

Here are some examples of quantitative research in different fields:

- Market Research: A company conducts a survey of 1000 consumers to determine their brand awareness and preferences. The data is analyzed using statistical methods to identify trends and patterns that can inform marketing strategies.

- Health Research: A researcher conducts a randomized controlled trial to test the effectiveness of a new drug for treating a particular medical condition. The study involves collecting data from a large sample of patients and analyzing the results using statistical methods.

- Social Science Research: A sociologist conducts a survey of 500 people to study attitudes toward immigration in a particular country. The data is analyzed using statistical methods to identify factors that influence these attitudes.

- Education Research: A researcher conducts an experiment to compare the effectiveness of two different teaching methods for improving student learning outcomes. The study involves randomly assigning students to different groups and collecting data on their performance on standardized tests.

- Environmental Research: A team of researchers conducts a study to investigate the impact of climate change on the distribution and abundance of a particular species of plant or animal. The study involves collecting data on environmental factors and population sizes over time and analyzing the results using statistical methods.

- Psychology: A researcher conducts a survey of 500 college students to investigate the relationship between social media use and mental health. The data is analyzed using statistical methods to identify correlations, which may point to potential causal relationships for further study.

- Political Science: A team of researchers conducts a study to investigate voter behavior during an election. They use survey methods to collect data on voting patterns, demographics, and political attitudes, and analyze the results using statistical methods.

How to Conduct Quantitative Research

Here is a general overview of how to conduct quantitative research:

- Develop a research question: The first step in conducting quantitative research is to develop a clear and specific research question. This question should be based on a gap in existing knowledge, and should be answerable using quantitative methods.

- Develop a research design: Once you have a research question, you will need to develop a research design. This involves deciding on the appropriate methods to collect data, such as surveys, experiments, or observational studies. You will also need to determine the appropriate sample size, data collection instruments, and data analysis techniques.

- Collect data: The next step is to collect data. This may involve administering surveys or questionnaires, conducting experiments, or gathering data from existing sources. It is important to use standardized methods to ensure that the data is reliable and valid.

- Analyze data: Once the data has been collected, it is time to analyze it. This involves using statistical methods to identify patterns, trends, and relationships between variables. Common statistical techniques include correlation analysis, regression analysis, and hypothesis testing.

- Interpret results: After analyzing the data, you will need to interpret the results. This involves identifying the key findings, determining their significance, and drawing conclusions based on the data.

- Communicate findings: Finally, you will need to communicate your findings. This may involve writing a research report, presenting at a conference, or publishing in a peer-reviewed journal. It is important to clearly communicate the research question, methods, results, and conclusions to ensure that others can understand and replicate your research.

When to use Quantitative Research

Here are some situations when quantitative research can be appropriate:

- To test a hypothesis: Quantitative research is often used to test a hypothesis or a theory. It involves collecting numerical data and using statistical analysis to determine if the data supports or refutes the hypothesis.

- To generalize findings: If you want to generalize the findings of your study to a larger population, quantitative research can be useful. This is because it allows you to collect numerical data from a representative sample of the population and use statistical analysis to make inferences about the population as a whole.

- To measure relationships between variables: If you want to measure the relationship between two or more variables, such as the relationship between age and income, or between education level and job satisfaction, quantitative research can be useful. It allows you to collect numerical data on both variables and use statistical analysis to determine the strength and direction of the relationship.

- To identify patterns or trends: Quantitative research can be useful for identifying patterns or trends in data. For example, you can use quantitative research to identify trends in consumer behavior or to identify patterns in stock market data.

- To quantify attitudes or opinions: If you want to measure attitudes or opinions on a particular topic, quantitative research can be useful. It allows you to collect numerical data using surveys or questionnaires and analyze the data using statistical methods to determine the prevalence of certain attitudes or opinions.

Purpose of Quantitative Research

The purpose of quantitative research is to systematically investigate and measure the relationships between variables or phenomena using numerical data and statistical analysis. The main objectives of quantitative research include:

- Description: To provide a detailed and accurate description of a particular phenomenon or population.

- Explanation: To explain the reasons for the occurrence of a particular phenomenon, such as identifying the factors that influence a behavior or attitude.

- Prediction: To predict future trends or behaviors based on past patterns and relationships between variables.

- Control: To identify the best strategies for controlling or influencing a particular outcome or behavior.

Quantitative research is used in many different fields, including social sciences, business, engineering, and health sciences. It can be used to investigate a wide range of phenomena, from human behavior and attitudes to physical and biological processes. The purpose of quantitative research is to provide reliable and valid data that can be used to inform decision-making and improve understanding of the world around us.

Advantages of Quantitative Research

There are several advantages of quantitative research, including:

- Objectivity: Quantitative research is based on objective data and statistical analysis, which reduces the potential for bias or subjectivity in the research process.

- Reproducibility: Because quantitative research involves standardized methods and measurements, it is more likely to be reproducible and reliable.

- Generalizability: Quantitative research allows for generalizations to be made about a population based on a representative sample, which can inform decision-making and policy development.

- Precision: Quantitative research allows for precise measurement and analysis of data, which can provide a more accurate understanding of phenomena and relationships between variables.

- Efficiency: Quantitative research can be conducted relatively quickly and efficiently, especially when compared to qualitative research, which may involve lengthy data collection and analysis.

- Large sample sizes: Quantitative research can accommodate large sample sizes, which can increase the representativeness and generalizability of the results.

Limitations of Quantitative Research

There are several limitations of quantitative research, including:

- Limited understanding of context: Quantitative research typically focuses on numerical data and statistical analysis, which may not provide a comprehensive understanding of the context or underlying factors that influence a phenomenon.

- Simplification of complex phenomena: Quantitative research often involves simplifying complex phenomena into measurable variables, which may not capture the full complexity of the phenomenon being studied.

- Potential for researcher bias: Although quantitative research aims to be objective, there is still the potential for researcher bias in areas such as sampling, data collection, and data analysis.

- Limited ability to explore new ideas: Quantitative research is often based on pre-determined research questions and hypotheses, which may limit the ability to explore new ideas or unexpected findings.

- Limited ability to capture subjective experiences: Quantitative research is typically focused on objective data and may not capture the subjective experiences of individuals or groups being studied.

- Ethical concerns: Quantitative research may raise ethical concerns, such as invasion of privacy or the potential for harm to participants.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer



Indian J Crit Care Med, v.24(Suppl 4); 2020 Sep

Critical Analysis of a Randomized Controlled Trial

Balkrishna D Nimavat

1 Critical Care Unit, Sir HN Reliance Hospital, Ahmedabad, Gujarat, India

Kapil G Zirpe

2,3 Department of Neuro Trauma Unit, Grant Medical Foundation, Pune, Maharashtra, India

Sushma K Gurav

In the era of evidence-based medicine, healthcare professionals are bombarded with trials and articles. Among these, the randomized controlled trial is considered the epitome in terms of level of evidence. It is crucial to learn how to weigh the findings of a randomized controlled trial and to avoid misinterpreting trial results in clinical practice. There are various methods and steps for critically appraising a randomized controlled trial, but they are often overly complex to apply. A simpler, more pragmatic approach to analyzing randomized controlled trials is needed. In this article, we summarize a few practical points under 5 headings, the "5 'Rs' of critical analysis of randomized control trial": Right Question, Right Population, Right Study Design, Right Data, and Right Interpretation. The analysis of a randomized controlled trial should not rest only on its statistical findings or results. It should also systematically review the trial's core question, the selection of a relevant population, the robustness of its study design, and the right interpretation of its outcomes.

How to cite this article: Nimavat BD, Zirpe KG, Gurav SK. Critical Analysis of a Randomized Controlled Trial. Indian J Crit Care Med 2020;24(Suppl 4):S215–S222.

Introduction

"Statistics are like bikinis. What they reveal is suggestive, but what they conceal is vital." [Aaron Levenstein]

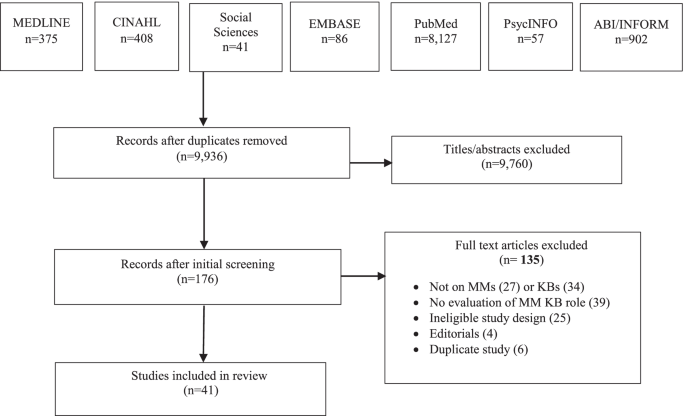

Keeping knowledge up to date is pivotal in the world of evidence-based medicine. It can also be crucial from a medicolegal perspective and for improving current best practice. Against this background, many new articles and trials appear in journals every day. Among all study designs, the randomized controlled trial (RCT) is considered supreme in terms of strength of evidence. An appropriately planned and rigorously conducted RCT is the best design for detecting an intervention-related difference in outcome, but a poorly conducted, biased RCT will mislead the reader. Reading RCTs to optimize clinical practice is ideal. Even so, it is critical to understand their strong and weak points before being dogmatic about their results or conclusions. There are many methods for appraising RCTs. In this article, we have tried to simplify the key points under 5 headings, using the mnemonic 5 'Rs', to make them easier to understand (Flowchart 1).

Flowchart 1: Presentation of "critical analysis of RCT"

Steps for Critical Analysis of Randomized Control Trials

Right Question

Formulate the right question/address the right question.

As Claude Lévi-Strauss said, “The scientist is not a person who gives the right answers, he is one who asks the right questions.”

It is crucial to look for the right question: one that is innovative, practice-changing, and knowledge-amplifying, and above all biologically plausible.

Does Randomized Control Trial Address New/Relevant Question? Does Answer to this Question Lead to More Information that will Help to Improve Current Clinical Practice or Knowledge?

Questions arising from any topic are mostly of two types: background questions and foreground questions. RCTs are experimental designs that usually target foreground questions. These are more specific and aim to establish the relationship between an intervention/drug and its effect/outcome. A foreground research question has four components: Population, Intervention, Control, and Outcome (the PICO format). Whether the study question and design are ethical and feasible for the relevant population can be judged with the FINER criteria (Feasible, Interesting, Novel, Ethical, Relevant). 1

Outcomes are the variables monitored during a study to observe whether the intervention has an impact on the target population. They are also labeled events or end points. The most common clinical end points are mortality, morbidity, and quality of life. It is essential to choose the right end point, based on background knowledge and its relevance to the formulated question (Fig. 1). 2–4

Fig. 1: Types of end point and their pros and cons

So no single end point is perfect; end points should be assessed in the context of the clinical question, power, and randomization.

Does the Cause-and-Effect Relationship Have Biological Plausibility?

Biological plausibility is one of the essential components in establishing that an association reflects causation. A mere association, or a significant p-value, without biological plausibility is of little value. Statistically significant data that lack biological plausibility therefore make little sense and should be interpreted with caution. Conversely, data from a rigorously conducted study that fail to reach statistical significance but have strong biological plausibility should be re-evaluated and discussed before being rejected. 5

Many criteria and methods are available to determine whether an association is causal; one of them is the Bradford Hill criteria. It is also important to understand that knowledge of biological plausibility is dynamic and evolves with time. There may be true causation that the biological knowledge of the time is unable to explain (Table 1).

Table 1: Factors that help to formulate a sound question 1, 6

Right Population

Define the target population/does the sample truly represent the population?

RCTs are usually conducted on a group of people (a sample) rather than the whole population. It is important that the selected sample truly represents the baseline characteristics of the rest of the population. The inferential leap, or generalization, from sample to population is not simple and is rarely foolproof.

External validity in an RCT is the extent to which the study results can be generalized to the real-world population. Internal validity indicates how rigorously the trial was conducted and how robust its data are. If an RCT has poor internal validity, its results cannot be relied on firmly, because poor-quality data and bias in that sample are more likely. Limited external validity means that the trial sample is not truly representative of the rest of the population. Put simply, if internal validity is questionable, applying the results on a larger scale is irrelevant. If a trial has limited external validity (for example, because of extensive exclusion criteria), its conclusions should be applied to the rest of the population with caution, as they are less reliable there. External validity can be improved by widening the inclusion criteria and narrowing the exclusion criteria. Internal validity can be strengthened by controlling more variables (reducing confounding), randomization, blinding, improved measurement techniques, and adding a control/placebo group. 7

Size of Target Population/Is Sample Size Adequate?

Another important step is choosing a sample size adequate to detect a clinically relevant difference as statistically significant. Sample size should be estimated before the trial starts and should not be changed while the study is ongoing, to prevent statistical error. Sample size is affected by multiple factors: the acceptable level of significance (alpha error), the power of the study, the expected effect size, the event rate in the population (prevalence rate), the alternative hypothesis, and the standard deviation in the population. There are formulas to calculate sample size, but it is more important to understand how each factor relates to sample size. 8

When the effect size of a phenomenon or association is large, even a small sample may suffice. Traditionally we learned that a large sample size is good, but that is not always true: with a very large sample, even a clinically nonsignificant difference can appear statistically significant. For diseases with a low prevalence (rare events), an RCT may not be feasible, and an observational study may serve the purpose instead.

The tool used for sample size estimation is the power of the study. Power (1 − β) is the probability that the study detects a true effect of the specified size, that is, avoids a type II error. The sample size is chosen so that the study reaches the desired power. Power depends on factors such as the precision and variance of measurements within the sample, the effect size, the accepted type I error level, and the type of statistical test performed. 9 Sample size also depends on the expected attrition rate/dropout rate/losses to follow-up and on the funding capacity of the trial.
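To make these relationships concrete, here is a minimal sketch of the standard normal-approximation formula for comparing two means with equal group sizes: n per group = 2 (z_{1-α/2} + z_{1-β})² σ² / Δ². It adds an optional inflation for expected dropout. The function name and example values (a 5-unit difference, SD of 10) are hypothetical. Real trials often use dedicated software that also handles binary outcomes and other designs.

```python
import math
from statistics import NormalDist

def n_per_group(delta, sd, alpha=0.05, power=0.80, dropout=0.0):
    """Approximate participants needed per arm to detect a true mean difference
    `delta` between two equal-sized groups with common standard deviation `sd`,
    using a two-sided test and the normal approximation."""
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for two-sided type I error
    z_beta = z.inv_cdf(power)             # power = 1 - beta (type II error)
    n = math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)
    # Inflate for expected attrition / losses to follow-up
    return math.ceil(n / (1 - dropout))

print(n_per_group(delta=5, sd=10))               # 80% power
print(n_per_group(delta=5, sd=10, power=0.90))   # higher power needs more patients
print(n_per_group(delta=2.5, sd=10))             # halving the effect roughly quadruples n
print(n_per_group(delta=5, sd=10, dropout=0.2))  # allow for 20% dropout
```

The squared term shows why effect size matters so much. Halving the expected difference (or doubling the SD) roughly quadruples the required sample.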

Right Study Design

Experimental designs are considered better than observational designs, as they give better control over variables and allow cause–effect hypotheses to be established. Experimental designs are further divided into pre-experimental, quasi-experimental, and true experimental designs. Quasi-experimental and true experimental designs are distinguished by the absence or presence of randomization of groups. The randomized controlled trial is a true experimental design. It delivers higher-quality evidence than other designs because of its high internal validity and its use of randomization. However, RCTs have their own limitations: complex study design, high cost, ethical issues (as an intervention or medicine is being tested), long duration, and difficulty in studying rare diseases or conditions.

Strengthen Study Design/Are Measures Taken to Reduce Bias (Selection or Confounding Bias)?

Interventional studies/RCTs are designed to observe the efficacy and safety of a new treatment for a clinical condition, so it is particularly important that the outcome does not happen by chance. Strategies such as selection of a control, randomization, blinding, and allocation concealment help to reduce confounding factors and bias.

A control arm is used for comparison, to derive a more reliable estimate of the effect of the intervention. Controls are of four types: (1) historical, (2) placebo, (3) active control (where standard treatment is used), and (4) dose–response control (where the control receives a different dose/gradient of the intervention compared with the interventional arm).

Randomization helps to reduce selection bias and confounding bias. It can be done with a computer-generated sequence or a random number table. Randomization techniques are of different types, such as simple, block, stratified, and cluster randomization. Simple randomization can produce imbalanced groups when the sample is small. Block randomization keeps the number of participants in each arm balanced throughout recruitment, and is a better method when the sample size is large and the follow-up period is lengthy. The block size should not be disclosed to the investigator. If possible, it should also vary randomly over time to avoid predictability. Stratified randomization is used when specific variables are known to influence the outcome. In cluster randomization, groups of people rather than individuals are randomized.

Blinding is a method to reduce observation bias. A study can be open-label (unblinded) or blinded, and blinding can be of participants, of observers/investigators, and of data analysts. Allocation concealment protects the randomization sequence and thus reduces selection bias. The two differ in timing: allocation concealment applies during recruitment (until a participant is assigned), whereas blinding applies after recruitment. 10
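The block randomization described above, with randomly varying block sizes, can be sketched as follows. This is a hypothetical helper, not a validated allocation system. The arm labels, block sizes of 4 and 6, and the seed are all assumptions. In a real trial the list would be generated and held by someone independent of recruitment, to preserve allocation concealment. Stratified randomization could be approximated by generating a separate list for each stratum.

```python
import random

def block_randomization(n_participants, arms=("A", "B"), block_sizes=(4, 6), seed=None):
    """Permuted-block allocation list. Every block contains each arm equally
    often and is shuffled; the block size itself is drawn at random from
    `block_sizes` so that upcoming assignments are harder to predict."""
    if any(size % len(arms) for size in block_sizes):
        raise ValueError("each block size must be a multiple of the number of arms")
    rng = random.Random(seed)
    allocation = []
    while len(allocation) < n_participants:
        size = rng.choice(block_sizes)
        block = list(arms) * (size // len(arms))
        rng.shuffle(block)
        allocation.extend(block)
    # The last block may be cut short, leaving at most a small imbalance
    return allocation[:n_participants]

sequence = block_randomization(20, seed=42)
print(sequence)
print("A:", sequence.count("A"), "B:", sequence.count("B"))
```

Because each complete block is balanced, the difference between arms at any point in recruitment can never exceed half the largest block size (3 here).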

Right Data/Is Appropriate Tool/Method Used to Analyze Data?

"We must be careful not to confuse data with the abstractions we use to analyze them." [William James]

The study methodology should state the type of research, how the data were collected and analyzed, the tools/methods used, and the rationale for using those tools. After the data are collected, the next step is to decide which statistical test to use. Choosing the right test depends on a few parameters: (1) the purpose/objective of the study question (whether it is to compare data or to establish a correlation between them); (2) the number of samples (one, two, or multiple); (3) the type of data (categorical or numerical); (4) the type and number of variables (univariate, bivariate, or multivariate); and (5) the relationship between groups (paired/dependent vs unpaired/independent). Different combinations of these factors call for different tests, and Table 2 shows the combinations and the corresponding tests.

Table 2: Factors/questions that help to select a statistical tool to analyze data 11, 12
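Table 2 itself is not reproduced here. As a rough illustration of how these factors combine, the sketch below maps a few simple designs to commonly used tests. It is a hypothetical helper covering only comparisons of two or more groups and simple correlations. One-sample tests, multivariable models, and the article's own table are not covered, and a statistician should confirm the choice for any real study.

```python
def suggest_test(purpose, data_type, groups=2, paired=False, normal=True):
    """Hypothetical helper mapping a simple design to a commonly used test.

    purpose:   "compare" (differences between groups) or "correlate"
    data_type: "numerical" or "categorical" outcome
    groups:    number of groups/samples being compared (2 or more)
    paired:    True for dependent (e.g. before/after) measurements
    normal:    whether numerical data are roughly normally distributed
    """
    if purpose == "correlate":
        # Assumes two numerical (or, for Spearman, ordinal) variables
        return "Pearson correlation" if normal else "Spearman rank correlation"
    if data_type == "categorical":
        if paired:
            # Paired binary outcomes
            return "McNemar test" if groups == 2 else "Cochran's Q test"
        return "Chi-square test (Fisher's exact test if expected counts are small)"
    # Numerical outcome, comparing groups
    if groups == 2:
        if paired:
            return "Paired t-test" if normal else "Wilcoxon signed-rank test"
        return "Unpaired t-test" if normal else "Mann-Whitney U test"
    if paired:
        return "Repeated-measures ANOVA" if normal else "Friedman test"
    return "One-way ANOVA" if normal else "Kruskal-Wallis test"

print(suggest_test("compare", "numerical", groups=2, paired=False, normal=False))
print(suggest_test("compare", "categorical", groups=2, paired=True))
```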

RCTs often report subgroup analyses or post hoc analyses, and it is very important for the reader to understand their limitations. Subgroup analyses are usually a secondary objective. In the era of personalized medicine and targeted therapies, however, it is well recognized that the treatment effect of a new drug/intervention might not be the same across the study population. Subgroup analyses are therefore important for interpreting the results of clinical trials. 13 Subgroup analysis is helpful (1) to evaluate the safety profile in a particular subgroup, (2) to assess the consistency of the effect across subgroups, and (3) to detect an effect in a subgroup of an otherwise nonsignificant trial. 14 It is criticized in two ways: (1) a high chance of false-positive findings because of multiple testing and (2) a chance of false-negative findings because of inadequate power (small sample size). It is exceedingly difficult to reach a conclusion from a subgroup analysis and apply it in practice. Still, clinicians may consider a subgroup analysis valid in a few scenarios:

- the prior probability of a subgroup effect is high (at least 20% and preferably >50%);
- a small number of subgroups (≤2) are tested;
- the subgroups have the same baseline characteristics;
- the subgroup hypothesis was specified beforehand.

To reduce the false-positive rate of subgroup findings, the clinician can use a Bayesian approach. 15 Post hoc analysis, a type of subgroup analysis, is defined as 'the act of examining data for findings or responses that were not specified a priori but analyzed after the study has been completed'. Where possible, prespecified subgroup analyses should be preferred over post hoc analyses, as they are more credible. 13
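The false-positive problem with multiple subgroups can be quantified with a short calculation. Assume k independent tests, each at α = 0.05, and no true effect in any subgroup. The chance of at least one spurious "significant" result is then 1 − (1 − α)^k, which already reaches about 40% with 10 subgroups. Real subgroup tests are usually correlated, so this is an illustrative approximation rather than an exact figure for any trial.

```python
def prob_any_false_positive(k, alpha=0.05):
    """Probability that at least one of k independent tests is 'significant'
    at level alpha when the null hypothesis is true for all of them."""
    return 1 - (1 - alpha) ** k

for k in (1, 2, 5, 10, 20):
    print(f"{k:>2} subgroups: {prob_any_false_positive(k):.1%} chance of a false positive")
```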

Right Interpretation (Giving Meaning to Data)

"Everything we hear is an opinion, not a fact. Everything we see is a perspective, not the truth." [Marcus Aurelius]

Is the Difference in This RCT's Result Due to Chance/Statistically Significant?

Is the p-value significant? The purpose of data collection and analysis is to show whether there is a difference between two groups. This difference can be due to chance or can be a true difference. Statistics offers many tools to rule out a difference due to chance, and the p-value is one of them. It is a widely used yet highly misunderstood and misinterpreted index. In Fisher's system, the p-value was used as a rough numerical guide to the strength of evidence against the null hypothesis, and the threshold of 0.05 was chosen arbitrarily. Put simply, a p-value <0.05 suggests that the experiment is worth repeating, and the word "significance" merely indicates "worthy of attention". So once a p-value becomes significant, one should conduct further, more rigorous studies rather than treat it as the end of the story. 16

Misperceptions about the p-value: The most common misperceptions are that (1) a large p-value means there is no difference and (2) a smaller p-value is always more significant.

(a) "Absence of evidence is not evidence of absence." If the p-value is above the prespecified threshold alpha (mostly 0.05), we normally conclude that H0 is not rejected. But this does not mean that H0 is true. The better interpretation is that there is insufficient evidence to reject H0. Similarly, "not H0" could mean that something is wrong with H0, not necessarily that Ha is right. 17

(b) The p-value is affected by several factors:

- (i) the effect size (the appropriate index for measuring the effect and its magnitude);
- (ii) the sample size (the larger the sample, the more likely a difference is to be detected);
- (iii) the distribution of the data (the larger the standard deviation, the higher the p-value). 18

It is very important to understand that a smaller p-value does not always mean a more important finding. A large sample can produce a small p-value even when the effect size is small.
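Points (a) and (b) can be illustrated with a simplified two-sample z-test. The sketch assumes a known, common standard deviation and equal group sizes, and the numbers are hypothetical. It holds the true difference fixed at 1 unit with an SD of 10 and changes only the sample size. The smallest study gives a large p-value even though a real difference exists. The largest gives a very small p-value for what may be a clinically trivial effect. Doubling the SD raises the p-value.

```python
import math
from statistics import NormalDist

def two_sided_p(mean_diff, sd, n_per_group):
    """Two-sided p-value for a difference in means between two equal-sized
    groups, using a z-test with a known common standard deviation."""
    standard_error = sd * math.sqrt(2 / n_per_group)
    z = abs(mean_diff) / standard_error
    return 2 * (1 - NormalDist().cdf(z))

# Same true difference (1 unit, SD 10); only the sample size changes
for n in (50, 500, 5000):
    print(f"n = {n:>4} per group: p = {two_sided_p(1, 10, n):.2g}")

# Same difference and sample size; a larger SD gives a larger p-value
print(two_sided_p(1, 10, 500), two_sided_p(1, 20, 500))
```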

Has multiple testing been done? Another problem with the p-value arises with multiple testing, when only a few of many tests (or only the last one) yield a p-value <0.05.

"If you torture the data enough, nature will always confess." [Ronald Coase] One success out of one attempt and one success out of multiple attempts have different meanings in terms of statistics and probability. The underlying mechanism of multiple testing has been described as the "file drawer problem". Multiple testing is more about "intention" and the future likelihood of replicating the observed finding than about truth. 17

Is the false discovery rate controlled?/Solutions to the multiple-testing problem: Several tools can weed out bad data that look good. A simple, though not perfect, solution is the Bonferroni adjustment. For 5 (independent) tests, it uses α = 0.05/5 = 0.01 as the new threshold, or equivalently multiplies the observed p-values by 5. The problem with this adjustment is that it lowers not only the chance of false-positive findings but also the chance of true discoveries. The false discovery rate (FDR) approach is another method: it controls the expected proportion of false discoveries among the tests declared significant. A p-value adjusted using an optimized FDR approach is known as a q-value. Other methods for dealing with this problem include the O'Brien-Fleming approach for interim analyses and empirical Bayes methods. 17, 18
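Here is a minimal sketch of the two adjustments discussed above, applied to five hypothetical p-values. The Bonferroni function multiplies each p-value by the number of tests, capped at 1. For the FDR, the sketch uses the Benjamini-Hochberg step-up procedure, one common FDR method. It is not the optimized q-value estimator mentioned in the text, although its adjusted p-values are read in a similar way. With these numbers, Bonferroni keeps 2 of the 5 results below 0.05, whereas Benjamini-Hochberg keeps all 5, which shows how much more conservative Bonferroni is.

```python
def bonferroni(pvalues):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(pvalues)
    return [min(1.0, p * m) for p in pvalues]

def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values (control the false discovery rate)."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, so adjusted values never increase
    # as the raw p-values get smaller
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted

raw = [0.001, 0.008, 0.039, 0.041, 0.042]   # hypothetical p-values from 5 tests
print("Bonferroni:", [round(p, 3) for p in bonferroni(raw)])
print("BH (FDR):  ", [round(p, 3) for p in benjamini_hochberg(raw)])
```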

Is an alternative approach to the p-value used?/Bayes' method: A limitation of the p-value is that it does not consider prior probability or the alternative hypothesis. The evidence from a given study needs to be combined with that from prior work to reach a conclusion, and Bayes' theorem serves this purpose. The Bayes factor is the likelihood ratio of the null hypothesis to the alternative hypothesis. Put simply, a p-value should be compared with the strongest (minimum) Bayes factor to see the true evidence against the null hypothesis 16 (Table 3).

Table 3: Properties of and differences between the Bayes factor and the p-value 16, 19
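As an illustration of reading a p-value in Bayesian terms, one well-known bound is Goodman's minimum Bayes factor, exp(−z²/2) for a normally distributed test statistic. It gives the strongest evidence against the null hypothesis that a given p-value could represent. Combined with a prior probability through Bayes' theorem in odds form, it shows that p = 0.05 corresponds to a minimum Bayes factor of about 0.15. So even with a 50:50 prior, the null hypothesis keeps a posterior probability of at least about 13%. The function names are hypothetical and Table 3 is not reproduced here.

```python
import math
from statistics import NormalDist

def minimum_bayes_factor(p_two_sided):
    """Smallest possible Bayes factor (null vs alternative) for a two-sided
    p-value from a normally distributed test statistic: exp(-z**2 / 2)."""
    z = NormalDist().inv_cdf(1 - p_two_sided / 2)
    return math.exp(-z * z / 2)

def posterior_prob_null(prior_prob_null, bayes_factor):
    """Bayes' theorem in odds form: posterior odds = prior odds x Bayes factor."""
    prior_odds = prior_prob_null / (1 - prior_prob_null)
    posterior_odds = prior_odds * bayes_factor
    return posterior_odds / (1 + posterior_odds)

for p in (0.05, 0.01, 0.001):
    bf = minimum_bayes_factor(p)
    print(f"p = {p}: minimum Bayes factor {bf:.3f}, "
          f"P(null | data) >= {posterior_prob_null(0.5, bf):.1%} with a 50% prior")
```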

Is the p-value backed up by a confidence interval? A confidence interval (CI) is the range of values, calculated from sample observations, that is likely to contain the true population value with a stated degree of confidence. The CI helps to overcome the gaps of the p-value by giving more information about significance. It indicates the size of the effect rather than only the outcome of a hypothesis test. Its width indicates the precision/reliability of the estimate. It also shows the direction and strength of the effect, and thus clinical rather than merely statistical relevance. The p-value is affected by type I error while the CI is not. 20, 21 The width of the CI depends on the sample size and the standard deviation of the study group. A large sample (more confidence) gives a narrow CI, while wide dispersion means less certainty and a wider CI. The CI is also affected by the confidence level, which is selected by the user and does not depend on sample characteristics. The most commonly selected level is 95%, but levels such as 90% or 99% can be used. 20, 21 The CI is also useful in equivalence, superiority, and non-inferiority studies, where it, rather than the p-value, is used as the tool for intergroup comparison. 21
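How CI width depends on sample size, standard deviation, and confidence level can be seen with a normal-approximation interval for a mean. This is a hypothetical helper with made-up numbers. For small samples a t-based interval would normally be used instead.

```python
import math
from statistics import NormalDist

def mean_ci(sample_mean, sd, n, level=0.95):
    """Normal-approximation confidence interval for a population mean."""
    z = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% interval
    half_width = z * sd / math.sqrt(n)
    return sample_mean - half_width, sample_mean + half_width

print(mean_ci(50, 10, 25))               # small sample: wide interval
print(mean_ci(50, 10, 400))              # larger sample: narrower interval
print(mean_ci(50, 20, 400))              # more dispersion: wider again
print(mean_ci(50, 10, 400, level=0.99))  # higher confidence level: wider
```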

Are the data robust? The fragility index (FI) measures the robustness of the results of a clinical trial: the higher the FI, the more statistically robust the result. The FI is the minimum number of patients whose status would have to change from a non-event (not experiencing the primary end point) to an event (experiencing the primary end point) for the study to lose statistical significance. For instance, an FI of 1 means that the trial result would become nonsignificant if just one patient had experienced the primary end point instead of not experiencing it. In other words, the FI measures how many events the statistical significance of a trial result depends on. A smaller FI indicates a more fragile, less statistically robust result. Like other statistical tools, the FI has limitations:

- (1) it is only appropriate for RCTs;
- (2) it is appropriate only for dichotomous outcomes;
- (3) it is not appropriate for time-to-event outcomes;
- (4) no specific FI value defines an RCT outcome as robust, and there is no FI cutoff considered acceptable;
- (5) its use for secondary outcome measures may be limited;
- (6) it is unreliable/difficult to interpret when the number of subjects who drop out for unknown reasons is large;
- (7) it is strongly related to the p-value.

In view of these flaws, the FI should not be used as an isolated tool to measure the strength of an effect. Trials with lower scores are more fragile, usually in association with fewer events, a smaller sample size, and consequently lower study power. Trials with higher scores are less fragile, usually in association with more events, a larger sample size, and consequently higher study power. 22–24
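The FI definition above translates directly into a short algorithm. This minimal sketch assumes the commonly described procedure: a two-sided Fisher's exact test at α = 0.05, switching non-events to events one patient at a time in the arm with fewer events. The function names and example counts are hypothetical. Fisher's test is written out with `math.comb` so the block needs no third-party library.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table
    [[a, b], [c, d]] (rows = arms, columns = event / no event)."""
    row1, row2, col1 = a + b, c + d, a + c
    total = row1 + row2

    def prob(x):
        # Hypergeometric probability of x events in arm 1, margins fixed
        return comb(row1, x) * comb(row2, col1 - x) / comb(total, col1)

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum every table no more probable than the observed one
    # (the small tolerance guards against floating-point ties)
    return sum(
        p for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-7)
    )

def fragility_index(events_1, total_1, events_2, total_2, alpha=0.05):
    """Number of patients in the arm with fewer events whose outcome must
    switch from non-event to event before p >= alpha. Returns None if the
    result is not statistically significant to begin with."""
    # Work on the arm with fewer events (swap arms if necessary)
    if events_1 > events_2:
        events_1, total_1, events_2, total_2 = events_2, total_2, events_1, total_1

    def p_value(e1):
        return fisher_exact_two_sided(e1, total_1 - e1, events_2, total_2 - events_2)

    if p_value(events_1) >= alpha:
        return None  # not significant, so the FI is undefined
    switches = 0
    while events_1 + switches < total_1:
        switches += 1
        if p_value(events_1 + switches) >= alpha:
            return switches
    return None  # significance never lost within this arm

# Hypothetical trial: 10/100 events with treatment vs 30/100 with control
print(fragility_index(10, 100, 30, 100))
```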

Is This Statistically Significant Difference Clinically Significant?

Another common misinterpretation is that 'statistically significant is equivalent to clinically significant'. A statistically significant result means that the observed difference is unlikely to be explained by chance alone. Whether that difference is clinically significant depends on many factors: the size of the effect (the minimum important difference), any harms (risk–benefit), cost-effectiveness/feasibility, and conflicts of interest/funding. 25

"The primary product of a research inquiry is one or more measures of effect size, not p-values." [Jacob Cohen]

The p-value indicates whether an effect exists but not how large it is. It is therefore particularly important to report both the effect size and the p-value in a study. The two are not alternatives to each other but complements. Unlike significance tests, the effect size is independent of sample size. 26 Different effect size indices can be calculated depending on the type of comparison under study (Table 4).

Table 4: Common effect size indices 26–28
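Table 4 is not reproduced here. One of the most common indices for comparing two means is Cohen's d: the difference in means divided by the pooled standard deviation. The minimal sketch below uses made-up data. Like the interpretation discussed next, it assumes roughly normal data with similar standard deviations in both groups. By Cohen's often-quoted rough benchmarks, values of about 0.2, 0.5, and 0.8 correspond to small, medium, and large effects.

```python
import math
from statistics import mean, stdev

def cohens_d(group_a, group_b):
    """Standardized mean difference: (mean_a - mean_b) / pooled SD."""
    n_a, n_b = len(group_a), len(group_b)
    s_a, s_b = stdev(group_a), stdev(group_b)   # sample standard deviations
    pooled_sd = math.sqrt(((n_a - 1) * s_a**2 + (n_b - 1) * s_b**2) / (n_a + n_b - 2))
    return (mean(group_a) - mean(group_b)) / pooled_sd

experimental = [5, 6, 7, 8, 9]   # hypothetical outcome scores
control = [3, 4, 5, 6, 7]
print(round(cohens_d(experimental, control), 2))
```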

The interpretation of effect size depends on the assumptions that the values in both groups ("control" and "experimental") are normally distributed and have the same standard deviation. Relative risk and odds ratio should be interpreted in the context of absolute risk and the confidence interval. An effect size reported with a confidence interval delivers the same information as a test of statistical significance, but it puts the emphasis on the magnitude of the effect rather than on the sample size.
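The sketch below shows why relative risk needs the context of absolute risk. Using a hypothetical helper and made-up numbers, it computes the relative risk, odds ratio, absolute risk reduction (ARR), and number needed to treat (NNT = 1/ARR, conventionally rounded up) from event counts. Both example trials halve the risk (RR = 0.5). In the low-risk trial, however, about 500 patients must be treated to prevent one event, compared with 5 in the high-risk trial. The odds ratio stays close to the relative risk only when events are rare (about 0.499 vs 0.375 here).

```python
import math

def binary_effects(events_tx, n_tx, events_ctl, n_ctl):
    """Common effect measures for a binary (event / no event) outcome
    in a two-arm trial: treatment arm vs control arm."""
    risk_tx, risk_ctl = events_tx / n_tx, events_ctl / n_ctl
    odds_tx = events_tx / (n_tx - events_tx)
    odds_ctl = events_ctl / (n_ctl - events_ctl)
    arr = risk_ctl - risk_tx  # absolute risk reduction (negative means harm)
    return {
        "relative_risk": risk_tx / risk_ctl,
        "odds_ratio": odds_tx / odds_ctl,
        "absolute_risk_reduction": arr,
        # Number needed to treat; infinite when there is no absolute difference
        "nnt": 1 / arr if arr != 0 else math.inf,
    }

low_risk = binary_effects(2, 1000, 4, 1000)    # 0.2% vs 0.4% event rate
high_risk = binary_effects(20, 100, 40, 100)   # 20% vs 40% event rate
for name, result in (("low risk", low_risk), ("high risk", high_risk)):
    print(name, {k: round(v, 3) for k, v in result.items()})
```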

Minimum important difference (MID): The most important, and most difficult, point in clinical significance is deciding what difference is clinically important. There are 3 ways to determine the MID: anchor-based, distribution-based, and expert panel approaches. 25

Is the Randomized Control Trial Result Applicable/Practice Changing?

When a new intervention or therapy is launched, its acceptance and success depend not only on clinical efficacy but also on its associated costs. Randomized trials focus on clinical end points such as organ failure, need for respiratory or renal support, mortality, and morbidity, while contemporary trials also include economic outcomes. A therapy with a better clinical outcome and a lower cost is considered a dominant strategy, and in such cases no deeper analysis is needed. The problem arises when a novel therapy shows a better clinical outcome but at a higher cost. Then the key question is whether the improvement in outcome is worth the higher cost. Cost-effectiveness analysis helps to balance cost against efficacy/outcome and to compare available alternative therapies. 29
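A common summary for the harder case (better outcome at higher cost) is the incremental cost-effectiveness ratio (ICER): the extra cost divided by the extra effect of the new therapy. The minimal sketch below assumes a single comparator and uses hypothetical costs and effects, for example quality-adjusted life-years. It returns a label instead of a ratio when one option is better on both dimensions, matching the "dominant strategy" case above. Whether a given ICER is "worth it" depends on a willingness-to-pay threshold, which the sketch deliberately does not assume.

```python
def icer(cost_new, effect_new, cost_old, effect_old):
    """Incremental cost-effectiveness ratio (extra cost per extra unit of
    effect) of a new therapy versus a comparator. Returns a label instead
    of a ratio when one option is better on both cost and effect."""
    d_cost = cost_new - cost_old
    d_effect = effect_new - effect_old
    if d_effect >= 0 and d_cost <= 0:
        return "dominant"   # at least as effective and no more costly
    if d_effect <= 0 and d_cost >= 0:
        return "dominated"  # no more effective and at least as costly
    return d_cost / d_effect

# Hypothetical per-patient costs and effects (e.g. quality-adjusted life-years)
print(icer(12_000, 0.9, 8_000, 0.7))   # more effective but more costly
print(icer(7_000, 0.9, 8_000, 0.7))    # more effective and cheaper
```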

Is there any conflict of interest, financial or non-financial? A conflict of interest (COI) arises when an individual or institution has competing interests in a topic or activity. When a conflict of interest exists, the validity of the RCT should be questioned, regardless of how the investigator actually behaved. Conflicts of interest can occur at different levels, such as the investigator, the ethics committee (EC), or the regulator. They can also involve sponsors such as pharmaceutical companies or contract research organizations, or occur at multiple levels at once. Nowadays most trials are blinded, so it is exceedingly difficult for an investigator to manipulate the data and thus the result. However, data can still be altered, unintentionally or knowingly, by the data management team during analysis. This level is important to check, because most investigators would not even know if results had been altered by a data analyst. Put simply, conflicts of interest can be divided into financial and non-financial types. Another classification distinguishes positive from negative conflicts of interest. The usual concern is with positive conflicts of interest, but negative conflicts of interest also deserve attention. A negative COI occurs when an investigator or sponsor willfully rejects, or treats unfairly, a potentially useful therapy or intervention out of rivalry or for personal benefit. 30

It is also important to recognize that a conflict of interest is not always a bad thing; sometimes it arises from the nature of the question or core problem rather than from an individual or sponsor. 30, 31 The most common and best approach to handling conflicts of interest is public reporting of all relevant conflicts.

Is bias present in the randomized controlled trial? Bias is defined as a systematic error in the results of individual studies or their synthesis. The Cochrane Risk of Bias Tool for randomized trials describes six levels/domains at which bias can occur: generation of the allocation sequence, concealment of the allocation sequence, blinding of participants (single blinding) and doctors (double blinding), blinding of the data analyst (triple blinding), attrition bias, and publication bias. It is worth noting that financial conflict of interest is not one of these domains, but it can be the motive behind them. 31

Is the randomized controlled trial peer reviewed? Another important consideration for the publication and reliability of an article is whether it has been peer reviewed. Peer review is the assessment of an article by qualified people before publication. It improves the quality of an article through the reviewers' suggestions, and it also filters out unacceptable, poor-quality articles. Most reputable journals have their own peer-review policies. Peer review is not free of bias: its quality can depend on the reviewers selected and on their preferences regarding the article. Like peer review, post-publication review is especially important and should not be ignored, because the published article is scrutinized by hundreds of experts. 32, 33

Conclusion

In a nutshell, critical analysis of an RCT is about weighing the strong and weak points of the trial by analyzing its main domains: the right question, the right population, the right study design, the right data, and the right interpretation. It is also important to note that these demarcations are greatly simplified and are interconnected in many ways.

Source of support: Nil

Conflict of interest: None

References


Quantifying possible bias in clinical and epidemiological studies with quantitative bias analysis: common approaches and limitations


- Jeremy P Brown, doctoral researcher 1

- Jacob N Hunnicutt, director 2

- M Sanni Ali, assistant professor 1

- Krishnan Bhaskaran, professor 1

- Ashley Cole, director 3

- Sinead M Langan, professor 1

- Dorothea Nitsch, professor 1

- Christopher T Rentsch, associate professor 1

- Nicholas W Galwey, statistics leader 4

- Kevin Wing, assistant professor 1

- Ian J Douglas, professor 1

- 1 Department of Non-Communicable Disease Epidemiology, London School of Hygiene and Tropical Medicine, London, UK

- 2 Epidemiology, Value Evidence and Outcomes, R&D Global Medical, GSK, Collegeville, PA, USA

- 3 Real World Analytics, Value Evidence and Outcomes, R&D Global Medical, GSK, Collegeville, PA, USA

- 4 R&D, GSK Medicines Research Centre, GSK, Stevenage, UK

- Correspondence to: J P Brown jeremy.brown{at}lshtm.ac.uk (or @jeremy_pbrown on X)

- Accepted 12 February 2024

Bias in epidemiological studies can adversely affect the validity of study findings. Sensitivity analyses, known as quantitative bias analyses, are available to quantify potential residual bias arising from measurement error, confounding, and selection into the study. Effective application of these methods benefits from the input of multiple parties including clinicians, epidemiologists, and statisticians. This article provides an overview of a few common methods to facilitate both the use of these methods and critical interpretation of applications in the published literature. Examples are given to describe and illustrate methods of quantitative bias analysis. This article also outlines considerations to be made when choosing between methods and discusses the limitations of quantitative bias analysis.

Bias in epidemiological studies is a major concern. Biased studies have the potential to mislead, and as a result to negatively affect clinical practice and public health. The potential for residual systematic error due to measurement bias, confounding, or selection bias is often acknowledged in publications but is seldom quantified. 1 Therefore, for many studies it is difficult to judge the extent to which residual bias could affect study findings, and how confident we should be about their conclusions. Increasingly large datasets with millions of patients are available for research, such as insurance claims data and electronic health records. With increasing dataset size, random error decreases but bias remains, potentially leading to incorrect conclusions.
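The point that larger datasets shrink random error but not bias can be illustrated with a small simulation. The set-up is hypothetical and not taken from the article: a binary confounder U (prevalence 50%) raises the probability of exposure from 0.2 to 0.8 and the risk of the outcome from 0.1 to 0.3, while the exposure itself has no effect. Working through these probabilities, the crude risk ratio should approach about 1.86 (0.26/0.14) however large the cohort, even though the true value is 1; only the confidence interval narrows. The function name is illustrative.

```python
import math
import random


def simulate_crude_rr(n, seed=2024):
    """Simulate a cohort in which exposure has NO effect on the outcome,
    but a binary confounder U raises the chance of both.

    Returns the crude (unadjusted) risk ratio and the width of its 95% CI on the ratio scale.
    """
    rng = random.Random(seed)
    a = n1 = c = n0 = 0  # exposed cases, exposed total, unexposed cases, unexposed total
    for _ in range(n):
        u = rng.random() < 0.5
        exposed = rng.random() < (0.8 if u else 0.2)
        outcome = rng.random() < (0.3 if u else 0.1)  # depends on U only, not on exposure
        if exposed:
            n1 += 1
            a += outcome
        else:
            n0 += 1
            c += outcome
    rr = (a / n1) / (c / n0)
    se_log_rr = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n0)
    lower = math.exp(math.log(rr) - 1.96 * se_log_rr)
    upper = math.exp(math.log(rr) + 1.96 * se_log_rr)
    return rr, upper - lower


for n in (2_000, 20_000, 200_000):
    rr, width = simulate_crude_rr(n)
    print(f"n = {n:7d}  crude RR = {rr:.2f}  95% CI width = {width:.3f}")
```

As n grows the interval tightens around the wrong answer, so a very precise estimate from a large database can still be badly biased.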

Sensitivity analyses to quantify potential residual bias are available. 2 3 4 5 6 7 However, use of these methods is limited. Effective use typically requires input from multiple parties (including clinicians, epidemiologists, and statisticians) to bring together clinical and domain area knowledge, epidemiological expertise, and a statistical understanding of the methods. Improved awareness of these methods and their pitfalls will enable more frequent and effective implementation, as well as critical interpretation of their application in the medical literature.

In this article, we aim to provide an accessible introduction, description, and demonstration of three common approaches of quantitative bias analysis, and to describe their potential limitations. We briefly review bias in epidemiological studies due to measurement error, confounding, and selection. We then introduce quantitative bias analyses, methods to quantify the potential impact of residual bias (ie, bias that has not been accounted for through study design or statistical analysis). Finally, we discuss limitations and pitfalls in the application and interpretation of these methods.

Summary points

Quantitative bias analysis methods allow investigators to quantify potential residual bias and to objectively assess the sensitivity of study findings to this potential bias

Bias formulas, bounding methods, and probabilistic bias analysis can be used to assess sensitivity of results to potential residual bias; each of these approaches has strengths and limitations

Quantitative bias analysis relies on assumptions about bias parameters (eg, the strength of association between unmeasured confounder and outcome), which can be informed by substudies, secondary studies, the literature, or expert opinion

When applying, interpreting, and reporting quantitative bias analysis, it is important to transparently report assumptions, to consider multiple biases if relevant, and to account for random error
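As an illustration of a bounding method, the sketch below computes the E-value of VanderWeele and Ding (2017), one widely used bounding approach chosen here for illustration; the article itself may describe other bounds. The E-value is the minimum strength of association, on the risk ratio scale, that an unmeasured confounder would need with both the exposure and the outcome, conditional on measured covariates, to fully explain away an observed risk ratio. The function names and example estimates are hypothetical.

```python
import math


def e_value(rr):
    """E-value for a risk ratio point estimate (VanderWeele and Ding, 2017)."""
    if rr <= 0:
        raise ValueError("risk ratio must be positive")
    if rr < 1:
        rr = 1 / rr  # protective estimates are inverted first
    return rr + math.sqrt(rr * (rr - 1))


def e_value_for_ci(lower, upper):
    """E-value for the confidence limit closest to the null; 1 if the interval includes 1."""
    if lower <= 1 <= upper:
        return 1.0
    return e_value(lower if lower > 1 else upper)


# Hypothetical estimate: RR 2.0 with 95% CI 1.5 to 2.5
print(round(e_value(2.0), 2), round(e_value_for_ci(1.5, 2.5), 2))
```

A large E-value means only a strong unmeasured confounder could explain the result away; whether such a confounder is plausible is the clinical and domain judgement that the summary points say should inform the analysis.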

Types of bias

All clinical studies, both interventional and non-interventional, are potentially vulnerable to bias. Bias is ideally prevented or minimised through careful study design and the choice of appropriate statistical methods. In non-interventional studies, three major biases that can affect findings are measurement bias (also known as information bias) due to measurement error (referred to as misclassification for categorical variables), confounding, and selection bias.

Misclassification occurs when one or more categorical variables (such as the exposure, outcome, or covariates) are mismeasured or misreported. 8 Continuous variables might also be mismeasured leading to measurement error. As one example, misclassification occurs in some studies of alcohol consumption owing to misreporting by study participants of their alcohol intake. 9 10 As another example, studies using electronic health records or insurance claims data could have outcome misclassification if the outcome is not always reported to, or recorded by, the individual’s healthcare professional. 11 Measurement error is said to be differential when the probability of error depends on another variable (eg, differential participant recall of exposure status depending on the outcome). Errors in measurement of multiple variables could be dependent (ie, associated with each other), particularly when data are collected from one source (eg, electronic health records). Measurement error can lead to biased study findings in both descriptive and aetiological (ie, cause-effect) non-interventional studies. 12
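A simple bias analysis for exposure misclassification can be sketched for a case-control 2×2 table. It assumes non-differential misclassification with known sensitivity (Se) and specificity (Sp), which are the bias parameters here; differential error, as described above, would need separate values for cases and controls. The observed number exposed satisfies observed = Se × true + (1 − Sp) × (total − true), which can be solved for the true count. Function names and counts are hypothetical.

```python
def correct_exposure_misclassification(exp_cases, n_cases, exp_controls, n_controls, se, sp):
    """Back-calculate expected true exposed counts, assuming NON-differential
    exposure misclassification with known sensitivity (se) and specificity (sp)."""
    denom = se + sp - 1
    if denom <= 0:
        raise ValueError("se + sp must exceed 1")
    true_cases = (exp_cases - (1 - sp) * n_cases) / denom
    true_controls = (exp_controls - (1 - sp) * n_controls) / denom
    if not (0 < true_cases < n_cases and 0 < true_controls < n_controls):
        raise ValueError("bias parameters are incompatible with the observed data")
    return true_cases, true_controls


def odds_ratio(exp_cases, n_cases, exp_controls, n_controls):
    """Exposure odds ratio comparing cases with controls."""
    return (exp_cases / (n_cases - exp_cases)) / (exp_controls / (n_controls - exp_controls))


# Hypothetical study: 190/500 cases and 120/500 controls recorded as exposed
obs_or = odds_ratio(190, 500, 120, 500)
true_cases, true_controls = correct_exposure_misclassification(190, 500, 120, 500, se=0.8, sp=0.9)
print(round(obs_or, 2), round(odds_ratio(true_cases, 500, true_controls, 500), 2))
```

With these assumed bias parameters the observed odds ratio of about 1.94 corresponds to a corrected value of about 2.67, showing how non-differential misclassification of a binary exposure typically pulls estimates towards the null.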

Confounding arises in aetiological studies when the association between exposure and outcome is not solely due to the causal effect of the exposure, but rather is partly or wholly due to one or more other causes of the outcome associated with the exposure. For example, researchers have found that greater adherence to statins is associated with a reduction in motor vehicle accidents and an increase in the use of screening services. 13 However, this association is almost certainly not due to a causal effect of statins on these outcomes, but more probably because attitudes to precaution and risk that are associated with these outcomes are also associated with adherence to statins.
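A standard bias formula for a single unmeasured binary confounder U makes this concrete. It assumes that U has the same risk ratio with the outcome (RR_UD) in exposed and unexposed people, that U is the only residual confounder, and that its prevalence among the exposed (p1) and unexposed (p0) can be specified; these are the bias parameters. The observed risk ratio is then the true one multiplied by the bias factor [p1(RR_UD − 1) + 1] / [p0(RR_UD − 1) + 1]. The function names and numbers are hypothetical.

```python
def confounding_bias_factor(rr_ud, p_exposed, p_unexposed):
    """Bias factor for an unmeasured binary confounder U.

    rr_ud: risk ratio between U and the outcome (assumed equal in exposed and unexposed)
    p_exposed, p_unexposed: prevalence of U among the exposed and the unexposed
    """
    return (p_exposed * (rr_ud - 1) + 1) / (p_unexposed * (rr_ud - 1) + 1)


def adjust_rr_for_confounder(rr_observed, rr_ud, p_exposed, p_unexposed):
    """Risk ratio after removing the bias attributed to U under the assumptions above."""
    return rr_observed / confounding_bias_factor(rr_ud, p_exposed, p_unexposed)


# Hypothetical scenario: U triples outcome risk and is present in 80% of exposed vs 20% of unexposed
factor = confounding_bias_factor(3.0, 0.8, 0.2)
print(round(factor, 2), round(adjust_rr_for_confounder(1.86, 3.0, 0.8, 0.2), 2))
```

Here an observed risk ratio of 1.86 is fully explained by the assumed confounder, mirroring the statins example, in which the association is attributed to attitudes to precaution rather than to the drug.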

Selection bias occurs when non-random selection of people or person time into the study results in systematic differences between results obtained in the study population and results that would have been obtained in the population of interest. 14 15 This bias can be due to selection at study entry or due to differential loss to follow-up. For example, in a cohort study where the patients selected are those admitted to hospital in respiratory distress, covid-19 and chronic obstructive pulmonary disease might be negatively associated, even if there was no association in the overall population, because an admitted patient who does not have one condition is more likely to have the other, which explains their admission. 16 Selection bias can affect both descriptive and aetiological non-interventional studies.
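The hospital admission example can be reproduced with exact arithmetic. The sketch assumes two conditions A and B that are independent in the whole population, with admission probabilities that depend only on which conditions a person has; all probabilities are hypothetical and not taken from reference 16. When selection depends only on A and B, the odds ratio among those admitted equals the population odds ratio multiplied by s11 × s00 / (s10 × s01), where s_ab is the admission probability for A = a and B = b, so the prevalences cancel out.

```python
def selected_odds_ratio(p_a, p_b, admit):
    """Odds ratio between conditions A and B among admitted patients,
    assuming A and B are independent in the whole population.

    admit[(a, b)] is the probability of admission given A = a and B = b (each 0 or 1).
    """
    cells = {}
    for a in (0, 1):
        for b in (0, 1):
            p_cell = (p_a if a else 1 - p_a) * (p_b if b else 1 - p_b)
            cells[(a, b)] = p_cell * admit[(a, b)]  # joint probability of this cell AND admission
    return (cells[(1, 1)] * cells[(0, 0)]) / (cells[(1, 0)] * cells[(0, 1)])


# Hypothetical admission probabilities: either condition alone often leads to admission
admit = {(0, 0): 0.01, (1, 0): 0.3, (0, 1): 0.3, (1, 1): 0.5}
print(round(selected_odds_ratio(0.05, 0.10, admit), 3))  # well below 1, despite no population association
```

If every group had the same admission probability, the odds ratio among admitted patients would stay at 1; the spurious negative association comes entirely from selection depending on both conditions.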

Handling bias in practice

All three biases should ideally be minimised through study design and analysis. For example, misclassification can be reduced by the use of a more accurate measure, confounding through measurement of all relevant potential confounders and their subsequent adjustment, and selection bias through appropriate sampling from the population of interest and accounting for loss to follow-up. Other biases should also be considered, for example, immortal time bias through the appropriate choice of time zero, and sparse data bias through collection of a sample of sufficient size or by the use of penalised estimation. 17 18

Even with the best available study design and most appropriate statistical analysis, we typically cannot guarantee that residual bias will be absent. For instance, it is often not possible to perfectly measure all required variables, or it might be either impossible or impractical to collect or obtain data on every possible potential confounder. In particular, studies conducted using data collected for non-research purposes, such as insurance claims and electronic health records, are often limited to the variables previously recorded. Randomly sampling from the population of interest might also not be practically feasible, especially if individuals are not willing to participate.