What Is Statistical Validity? Understanding Trends in Validating Research Data

Researchers are often presented with enormous volumes of data in complex formats. To understand and analyze such data and draw conclusions from it, the data must be validated statistically. In research, decision modeling and inference depend on the statistical validity of the underlying data, so researchers and statisticians need to develop frameworks for evaluating and validating it. This article explores recent trends in the statistical validity of research data.

What Is Statistical Validity?

Statistical validity is the extent to which the conclusions of a research study can be considered accurate and reliable on the basis of a statistical test. To achieve statistical validity, researchers must have sufficient data and must choose an appropriate statistical approach to analyze it. Statistical validity also refers to whether the statistics derived from a study agree with the scientific laws that govern the subject under study. Thus, a conclusion drawn from an experimental data set is considered scientifically valid when it is consistent with the mathematical and statistical laws underlying the study.

Why Is It Important to Determine Statistical Validity of Research Data?

It is important to determine the statistical validity of research data because:

- It allows the analyst to know whether the results of the conducted experiments can be accepted with confidence or not.

- It increases the probability of research reproducibility.

- It helps the researcher understand whether a method of analysis is suitable for its intended use and can yield conclusive results.

- It allows the researcher to ensure the validity of research based on its criteria of method selection.

- It allows the researcher to optimize the number of assays needed to satisfy the validation criteria of a study.

What Are the Different Types of Statistical Validities?

The types of validity relevant to research are broadly classified into six categories:

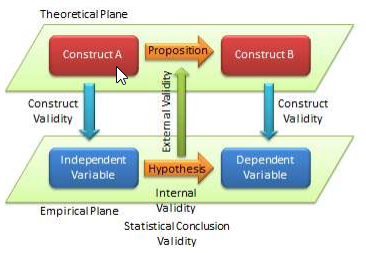

1. Construct Validity:

- It ensures that the actual experimentation and data collection conform to the theory being studied.

- A public-opinion questionnaire is a typical example: it has construct validity when it gives a clear picture of what people actually think about an issue.

- Construct validity is further divided into two types:

  A. Convergent Validity: It ensures that if the theory predicts that one measure is correlated with another, the statistics confirm this.

  B. Divergent or Discriminant Validity: It ensures that if the theory predicts that one variable does not correlate with others, the statistics confirm this.
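As a rough illustration of the convergent and discriminant checks described above, the sketch below computes Pearson correlations between a new measure, an established measure of the same construct, and a theoretically unrelated variable. The variable names and all scores are hypothetical values made up for illustration; they do not come from the article, and Pearson's r is only one of several statistics that could be used for this check.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical scores for six respondents (illustrative values only).
new_scale = [12, 18, 9, 22, 15, 20]          # new measure of the construct
established_scale = [14, 19, 10, 24, 15, 21]  # existing measure of the same construct
shoe_size = [42, 39, 40, 41, 38, 40]          # variable the theory says is unrelated

# Convergent validity: theory predicts a strong correlation.
convergent_r = pearson_r(new_scale, established_scale)
# Discriminant validity: theory predicts little or no correlation.
discriminant_r = pearson_r(new_scale, shoe_size)

print(f"convergent r = {convergent_r:.2f}, discriminant r = {discriminant_r:.2f}")
```

With these made-up numbers the first correlation is high and the second is close to zero, which is the pattern both checks look for. In practice the thresholds for "high" and "low" depend on the field and the instrument.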

2. Content Validity:

This validity ensures that the test or questionnaire that is prepared completely covers all aspects of the variable being studied.

3. Face Validity:

This type of validity estimates whether the given experiment actually mimics the claims that are being verified.

4. Conclusion Validity:

This validity ensures that the conclusions drawn from the data sets obtained in the experiment are correct and justified, without any violations.

5. Internal Validity:

It is a measure of the relationship between cause and effect being studied in the experiment.

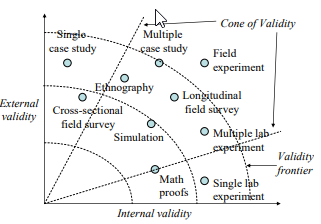

6. External Validity:

This validity is a measure of how well the results of a particular experiment can be applied to more general populations. It tells the analyst whether the results can be generalized to all other populations or only to populations with particular characteristics.

Understanding Trends in Determining Statistical Validity

1. Specificity and Selectivity

Statistical validity is relevant to specificity: a quantitative indication of the extent to which a method can distinguish between the subject of interest and interfering substances on the basis of signals produced under actual experimental conditions. Random interferences should be determined using representative blank samples.

2. Accuracy

Accuracy is the closeness of agreement between the true value of the subject being analyzed and the mean result obtained by applying the experimental procedure to a large sample.
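A minimal sketch of how accuracy in this sense might be quantified: the mean of replicate results is compared with a known reference value and expressed as bias and percent recovery. The function name, the replicate values, and the reference value are hypothetical; the article does not prescribe a specific accuracy statistic.

```python
from statistics import mean


def bias_and_recovery(measurements, true_value):
    """Return (bias, percent recovery) of the mean result versus the true value."""
    average = mean(measurements)
    bias = average - true_value                 # signed distance from the true value
    recovery_pct = 100.0 * average / true_value  # mean result as a percentage of truth
    return bias, recovery_pct


# Hypothetical replicate results for a reference sample whose true value is 50.0.
replicates = [49.2, 50.4, 49.8, 50.1, 49.5]
reference_value = 50.0

bias, recovery = bias_and_recovery(replicates, reference_value)
print(f"bias = {bias:.2f}, recovery = {recovery:.1f}%")
```

A bias near zero (recovery near 100%) indicates good agreement between the mean result and the true value. It says nothing about how scattered the individual results are, which is the subject of precision.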

3. Precision

Results should be compared on the basis of their precision, in terms of both repeatability and reproducibility. Repeatability is also termed intra-assay precision.
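The sketch below shows one simple way to put numbers on these two kinds of precision, using the coefficient of variation (CV). It treats the spread of replicates within a single run as repeatability and the spread of results obtained in different laboratories as a rough stand-in for reproducibility. All values are hypothetical, and formal studies usually separate these components with an analysis of variance rather than comparing two CVs.

```python
from statistics import mean, stdev


def cv_percent(values):
    """Coefficient of variation: sample standard deviation as a percentage of the mean."""
    return 100.0 * stdev(values) / mean(values)


# Hypothetical results for the same sample (illustrative values only).
same_run = [10.1, 10.0, 9.9, 10.2, 9.8]          # one analyst, one run
different_labs = [10.1, 9.6, 10.5, 9.4, 10.4]    # one result from each of five labs

repeatability_cv = cv_percent(same_run)
reproducibility_cv = cv_percent(different_labs)

print(f"repeatability CV   = {repeatability_cv:.2f}%")
print(f"reproducibility CV = {reproducibility_cv:.2f}%")
```

Between-laboratory variation is usually larger than within-run variation, as it is in this made-up data set.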

4. Detection Limit

The detection limit can be determined by several approaches: visual inspection, signal-to-noise ratio, or the standard deviation of the response and the slope. When presenting results, researchers must report both the detection limit and the method used to determine it.
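For the third approach, a common convention (used in the ICH Q2 guideline on analytical validation, which this article does not cite) estimates the detection limit as 3.3 times the standard deviation of the response divided by the slope of the calibration line. The sketch below assumes that convention and takes the residual standard deviation of a straight-line fit as the standard deviation of the response. The calibration data and function names are hypothetical.

```python
import math


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def residual_sd(x, y, slope, intercept):
    """Standard deviation of the residuals about the fitted line (n - 2 degrees of freedom)."""
    ss = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    return math.sqrt(ss / (len(x) - 2))


def detection_limit(sigma, slope, factor=3.3):
    """Detection limit as factor * sigma / slope; factor=3.3 is an assumed convention."""
    return factor * sigma / slope


# Hypothetical calibration data: concentration versus instrument response.
concentration = [1.0, 2.0, 3.0, 4.0, 5.0]
response = [2.1, 3.9, 6.2, 7.8, 10.0]

slope, intercept = fit_line(concentration, response)
sigma = residual_sd(concentration, response, slope, intercept)
lod = detection_limit(sigma, slope)

print(f"slope = {slope:.2f}, sigma = {sigma:.3f}, detection limit = {lod:.2f}")
```

Whichever approach is used, reporting the method alongside the number matters because the three approaches can give different limits for the same data.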

5. Robustness

Robustness is a measure of how well the performance of a research method holds up when the method is not implemented in exactly the same way each time. Exactly the same results can be reproduced only by following a set procedure; to keep performance from being severely affected, the procedure must be carried out carefully and consistently. Aspects of the procedure that can affect results should be identified, and their influence on the method’s performance should be evaluated using robustness tests.

What Are the Challenges in Determining Statistical Validity of Research Data?

- Methods are generally developed by the R&D department, whilst the quality assurance and quality control departments conduct data validation. The transfer of methods and data from one department to another is important and must be done scrupulously to ensure proper validation.

- If methods are not built to be robust, the results may be affected, eventually making quality testing inefficient and the validation process lengthy and complicated.

- Inadequate knowledge of design and execution of the studies will hamper the statistical validity of research data.

Statistical validity helps ensure that the developed methods qualify and are capable of their intended use.


Conclusion Validity

Of the four types of validity (see also internal validity, construct validity and external validity), conclusion validity is undoubtedly the least considered and most misunderstood. That’s probably due to the fact that it was originally labeled ‘statistical’ conclusion validity and you know how even the mere mention of the word statistics will scare off most of the human race!

In many ways, conclusion validity is the most important of the four validity types because it is relevant whenever we are trying to decide if there is a relationship in our observations (and that’s one of the most basic aspects of any analysis). Perhaps we should start with an attempt at a definition:

Conclusion validity is the degree to which conclusions we reach about relationships in our data are reasonable.

For instance, if we’re doing a study that looks at the relationship between socioeconomic status (SES) and attitudes about capital punishment, we eventually want to reach some conclusion. Based on our data, we may conclude that there is a positive relationship, that persons with higher SES tend to have a more positive view of capital punishment while those with lower SES tend to be more opposed. Conclusion validity is the degree to which the conclusion we reach is credible or believable.

Although conclusion validity was originally thought to be a statistical inference issue, it has become more apparent that it is also relevant in qualitative research. For example, in an observational field study of homeless adolescents the researcher might, on the basis of field notes, see a pattern that suggests that teenagers on the street who use drugs are more likely to be involved in more complex social networks and to interact with a more varied group of people. Although this conclusion or inference may be based entirely on impressionistic data, we can ask whether it has conclusion validity, that is, whether it is a reasonable conclusion about a relationship in our observations.

Whenever you investigate a relationship, you essentially have two possible conclusions — either there is a relationship in your data or there isn’t. In either case, however, you could be wrong in your conclusion. You might conclude that there is a relationship when in fact there is not, or you might infer that there isn’t a relationship when in fact there is (but you didn’t detect it!). So, we have to consider all of these possibilities when we talk about conclusion validity.

It’s important to realize that conclusion validity is an issue whenever you conclude there is a relationship, even when the relationship is between some program (or treatment) and some outcome. In other words, conclusion validity also pertains to causal relationships. How do we distinguish it from internal validity, which is also involved with causal relationships? Conclusion validity is only concerned with whether there is a relationship. For instance, in a program evaluation, we might conclude that there is a positive relationship between our educational program and achievement test scores: students in the program get higher scores and students not in the program get lower ones. Conclusion validity is essentially whether that relationship is a reasonable one or not, given the data.

But it is possible that we will conclude that, while there is a relationship between the program and outcome, the program didn’t cause the outcome. Perhaps some other factor, and not our program, was responsible for the outcome in this study. For instance, the observed differences in the outcome could be due to the fact that the program group was smarter than the comparison group to begin with. Our observed posttest differences between these groups could be due to this initial difference and not be the result of our program. This issue (the possibility that some factor other than our program caused the outcome) is what internal validity is all about. So, it is possible that in a study we can conclude that our program and outcome are related (conclusion validity) and also conclude that the outcome was caused by some factor other than the program (i.e., we don’t have internal validity).

We’ll begin this discussion by considering the major threats to conclusion validity, the different reasons you might be wrong in concluding that there is or isn’t a relationship. You’ll see that there are several key reasons why reaching conclusions about relationships is so difficult. One major problem is that it is often hard to see a relationship because our measures or observations have low reliability: they are too weak relative to all of the ‘noise’ in the environment. Another issue is that the relationship we are looking for may be a weak one and seeing it is a bit like looking for a needle in the haystack. Sometimes the problem is that we just didn’t collect enough information to see the relationship even if it is there. All of these problems are related to the idea of statistical power and so we’ll spend some time trying to understand what ‘power’ is in this context. Finally, we need to recognize that we have some control over our ability to detect relationships, and we’ll conclude with some suggestions for improving conclusion validity.
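To make the two kinds of wrong conclusion and the idea of power concrete, here is a small simulation sketch. It repeatedly draws a program group and a comparison group from normal distributions, tests for a difference, and counts how often a relationship is declared. The test used (a two-sample z-style statistic with a 1.96 cutoff, which is only an approximation for small samples), the effect sizes, and the sample sizes are all assumptions chosen for illustration, not part of the original text.

```python
import math
import random


def sample_mean_var(values):
    """Return the sample mean and unbiased sample variance."""
    n = len(values)
    m = sum(values) / n
    v = sum((x - m) ** 2 for x in values) / (n - 1)
    return m, v


def detects_difference(group_a, group_b, critical_z=1.96):
    """Declare a relationship when the standardized mean difference exceeds the cutoff."""
    mean_a, var_a = sample_mean_var(group_a)
    mean_b, var_b = sample_mean_var(group_b)
    standard_error = math.sqrt(var_a / len(group_a) + var_b / len(group_b))
    return abs(mean_a - mean_b) / standard_error > critical_z


def estimate_detection_rate(effect_size, n_per_group, n_sims=2000, seed=0):
    """Fraction of simulated studies in which a relationship is declared."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        comparison = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        program = [rng.gauss(effect_size, 1.0) for _ in range(n_per_group)]
        if detects_difference(program, comparison):
            hits += 1
    return hits / n_sims


# A real but modest effect: too little data often misses it (low power).
power_small_n = estimate_detection_rate(effect_size=0.5, n_per_group=10)
power_large_n = estimate_detection_rate(effect_size=0.5, n_per_group=100)
# No real effect: any "detection" is a false positive.
false_positive_rate = estimate_detection_rate(effect_size=0.0, n_per_group=50)

print(f"power with n=10:  {power_small_n:.2f}")
print(f"power with n=100: {power_large_n:.2f}")
print(f"false positives with no effect: {false_positive_rate:.2f}")
```

The same real effect is detected far more often with the larger sample, which is the sense in which collecting too little information threatens conclusion validity. Reducing measurement noise has a similar effect to increasing the sample size, because both shrink the standard error relative to the effect.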


Credibility, Validity, and Assumptions in Program Evaluation Methodology, pp. 105–118

Validity in Analysis, Interpretation, and Conclusions

- Apollo M. Nkwake

- First Online: 14 December 2023

This phase of the evaluation process involves use of appropriate methods and tools for cleaning, processing, and analysis; interpreting the results to determine what they mean; applying appropriate approaches for comparing, verifying, and triangulating results; and lastly, documenting appropriate conclusions and recommendations. Therefore, critical validity questions include:

Are conclusions and inferences accurately derived from evaluation data and measures that generate this data?

To what extent can findings be applied to situations other than the one in which evaluation is conducted?

The main forms of validity affected at this stage include statistical conclusion validity, internal validity, and external validity. This chapter discusses the meaning, preconditions, and assumptions of these validity types.


Descriptive validity concerns the adequacy of the presentation of key features of an evaluation in a research report. The quality of documentation affects the usefulness of an evaluation. Farrington (2003) argues that a well-written evaluation report needs to document nothing less than the following:

Design of the study, for example, how were participants allocated to different comparison groups and conditions?

Characteristics of study participants and settings (e.g., age and gender of individuals, sociodemographic features of areas).

Sample sizes and attrition rates.

Hypotheses to be tested and theories from which they are derived.

The operational definition and detailed description of the intervention’s theory of change (including its intensity and duration).

Implementation details and program delivery personnel.

Description of what treatment the control or other comparison groups received.

The operational definition and measurement of the outcome before and after the intervention.

The reliability and validity of outcome measures.

The follow-up period after the intervention (where applicable).

Effect size, confidence intervals, statistical significance, and statistical methods used.

How independent and extraneous variables were controlled so that it was possible to disentangle the impact of the intervention or how threats to internal validity were ruled out.

Who knows what about the intervention?

Conflict of interest issues: who funded the intervention, and how independent were the researchers? (Farrington, 2003).

References

Calloway, M., & Belyea, M. J. (1988). Ensuring validity using coworker samples: A situationally driven approach. Evaluation Review, 12(2), 186–195.

Campbell, D. T. (1986). Relabeling internal and external validity for applied social scientists. In W. M. K. Trochim (Ed.), Advances in quasi-experimental design and analysis. New Directions for Program Evaluation, 31 (Fall), 67–78.

Chen, H. T., & Garbe, P. (2011). Assessing program outcomes from the bottom-up approach: An innovative perspective to outcome evaluation. In H. T. Chen, S. I. Donaldson, & M. M. Mark (Eds.), Advancing validity in outcome evaluation: Theory and practice. New Directions for Evaluation, 130 (Summer), 93–106.

Cook, T. D., Campbell, D. T., & Peracchio, L. (1990). Quasi experimentation. In M. D. Dunnette & L. M. Hough (Eds.), Handbook of industrial and organizational psychology (pp. 491–576).

Cronbach, L. H., Glesser, G. C., Nanda, H., & Rajaratnam, N. (1972). The dependability of behavioral measurements: Theory of generalizability for scores and profiles. Wiley.

Dikmen, S., Reitan, R. M., & Temkin, N. R. (1983). Neuropsychological recovery in head injury. Archives of Neurology, 40, 333–338.

Farrington, D. F. (2003). Methodological quality standards for evaluation research. Annals of the American Academy of Political and Social Science, 587, 49–68.

Field, A. (2014). Discovering statistics using IBM SPSS. London: Sage.

Glasgow, R. E., Klesges, L. M., Dzewaltowski, D. A., Bull, S. S., & Estabrooks, P. (2004). The future of health behavior change research: What is needed to improve translation of research into health promotion practice? Annals of Behavioral Medicine, 27, 3–12.

Glasgow, R. E., Green, L. W., & Ammerman, A. (2007). A focus on external validity. Evaluation & the Health Professions, 30(2), 115–117.

Green, L. W., & Glasgow, R. E. (2006). Evaluating the relevance, generalization, and applicability of research issues in external validation and translation methodology. Evaluation & the Health Professions, 29(1), 126–153.

Hahn, G. J., & Meeker, W. Q. (1993). Assumptions for statistical inference. The American Statistician, 47(1), 1–11.

House, E. R. (1980). The logic of evaluative argument, monograph #7. Center for the Study of Evaluation, UCLA.

House, E. R. (2008). Blowback: Consequences of evaluation for evaluation. American Journal of Evaluation, 29, 416–426.

Julnes, G. (2011). Reframing validity in research and evaluation: A multidimensional, systematic model of valid inference. In H. T. Chen, S. I. Donaldson, & M. M. Mark (Eds.), Advancing validity in outcome evaluation: Theory and practice. New Directions for Evaluation, 130, 55–67.

Klass, G. M. (1984). Drawing inferences from policy experiments: Issues of external validity and conflict of interest. Evaluation Review, 8(1), 3–24.

Mark, M. M. (2011). New (and old) directions for validity concerning generalizability. In H. T. Chen, S. I. Donaldson, & M. M. Mark (Eds.), Advancing validity in outcome evaluation: Theory and practice. New Directions for Evaluation, 130, 31–42.

Peck, L. R., Kim, Y., & Lucio, J. (2012). An empirical examination of validity in evaluation. American Journal of Evaluation, 0(0), 1–16.

Reichardt, C. S. (2011). Criticisms of and an alternative to the Shadish, Cook, and Campbell validity typology. In H. T. Chen, S. I. Donaldson, & M. M. Mark (Eds.), Advancing validity in outcome evaluation: Theory and practice. New Directions for Evaluation, 130, 43–53.

Shadish, W. R., Cook, T. D., & Leviton, L. C. (1991). Foundations of program evaluation: Theories of practice. Sage.

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002a). Experimental and quasi-experimental design for generalized causal inference. Houghton Mifflin.

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002b). Experimental and quasi-experimental designs for generalized causal inference. Houghton Mifflin.

Stone, R. (1993). The assumptions on which causal inferences rest. Journal of the Royal Statistical Society. Series B (Methodological), 55(2), 455–466.

Tebes, J. K., Snow, D. L., & Arthur, M. W. (1992). Panel attrition and external validity in the short-term follow-up study of adolescent substance use. Evaluation Review, 16(2), 151–170.

Tunis, S. R., Stryer, D. B., & Clancy, C. M. (2003). Practical clinical trials. Increasing the value of clinical research for decision making in clinical and health policy. Journal of the American Medical Association, 290, 1624–1632.

Yeaton, W. H., & Sechrest, L. (1986). Use and misuse of no-difference findings in eliminating threats to validity. Evaluation Review, 10(6), 836–852.

Author information

Authors and affiliations.

The Questions Team, Frederick, MD, USA

Apollo M. Nkwake

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter.

Nkwake, A.M. (2023). Validity in Analysis, Interpretation, and Conclusions. In: Credibility, Validity, and Assumptions in Program Evaluation Methodology. Springer, Cham. https://doi.org/10.1007/978-3-031-45614-5_6

DOI: https://doi.org/10.1007/978-3-031-45614-5_6

Published: 14 December 2023

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-45613-8

Online ISBN: 978-3-031-45614-5

eBook Packages: Behavioral Science and Psychology, Behavioral Science and Psychology (R0)


- Developmental Biology

- Ecology and Conservation

- Evolutionary Biology

- Genetics and Genomics

- Microbiology

- Molecular and Cell Biology

- Natural History

- Plant Sciences and Forestry

- Research Methods in Life Sciences

- Structural Biology

- Systems Biology

- Zoology and Animal Sciences

- Browse content in Chemistry

- Analytical Chemistry

- Computational Chemistry

- Crystallography

- Environmental Chemistry

- Industrial Chemistry

- Inorganic Chemistry

- Materials Chemistry

- Medicinal Chemistry

- Mineralogy and Gems

- Organic Chemistry

- Physical Chemistry

- Polymer Chemistry

- Study and Communication Skills in Chemistry

- Theoretical Chemistry

- Browse content in Computer Science

- Artificial Intelligence

- Computer Architecture and Logic Design

- Game Studies

- Human-Computer Interaction

- Mathematical Theory of Computation

- Programming Languages

- Software Engineering

- Systems Analysis and Design

- Virtual Reality

- Browse content in Computing

- Business Applications

- Computer Security

- Computer Games

- Computer Networking and Communications

- Digital Lifestyle

- Graphical and Digital Media Applications

- Operating Systems

- Browse content in Earth Sciences and Geography

- Atmospheric Sciences

- Environmental Geography

- Geology and the Lithosphere

- Maps and Map-making

- Meteorology and Climatology

- Oceanography and Hydrology

- Palaeontology

- Physical Geography and Topography

- Regional Geography

- Soil Science

- Urban Geography

- Browse content in Engineering and Technology

- Agriculture and Farming

- Biological Engineering

- Civil Engineering, Surveying, and Building

- Electronics and Communications Engineering

- Energy Technology

- Engineering (General)

- Environmental Science, Engineering, and Technology

- History of Engineering and Technology

- Mechanical Engineering and Materials

- Technology of Industrial Chemistry

- Transport Technology and Trades

- Browse content in Environmental Science

- Applied Ecology (Environmental Science)

- Conservation of the Environment (Environmental Science)

- Environmental Sustainability

- Environmentalist Thought and Ideology (Environmental Science)

- Management of Land and Natural Resources (Environmental Science)

- Natural Disasters (Environmental Science)

- Nuclear Issues (Environmental Science)

- Pollution and Threats to the Environment (Environmental Science)

- Social Impact of Environmental Issues (Environmental Science)

- History of Science and Technology

- Browse content in Materials Science

- Ceramics and Glasses

- Composite Materials

- Metals, Alloying, and Corrosion

- Nanotechnology

- Browse content in Mathematics

- Applied Mathematics

- Biomathematics and Statistics

- History of Mathematics

- Mathematical Education

- Mathematical Finance

- Mathematical Analysis

- Numerical and Computational Mathematics

- Probability and Statistics

- Pure Mathematics

- Browse content in Neuroscience

- Cognition and Behavioural Neuroscience

- Development of the Nervous System

- Disorders of the Nervous System

- History of Neuroscience

- Invertebrate Neurobiology

- Molecular and Cellular Systems

- Neuroendocrinology and Autonomic Nervous System

- Neuroscientific Techniques

- Sensory and Motor Systems

- Browse content in Physics

- Astronomy and Astrophysics

- Atomic, Molecular, and Optical Physics

- Biological and Medical Physics

- Classical Mechanics

- Computational Physics

- Condensed Matter Physics

- Electromagnetism, Optics, and Acoustics

- History of Physics

- Mathematical and Statistical Physics

- Measurement Science

- Nuclear Physics

- Particles and Fields

- Plasma Physics

- Quantum Physics

- Relativity and Gravitation

- Semiconductor and Mesoscopic Physics

- Browse content in Psychology

- Affective Sciences

- Clinical Psychology

- Cognitive Psychology

- Cognitive Neuroscience

- Criminal and Forensic Psychology

- Developmental Psychology

- Educational Psychology

- Evolutionary Psychology

- Health Psychology

- History and Systems in Psychology

- Music Psychology

- Neuropsychology

- Organizational Psychology

- Psychological Assessment and Testing

- Psychology of Human-Technology Interaction

- Psychology Professional Development and Training

- Research Methods in Psychology

- Social Psychology

- Browse content in Social Sciences

- Browse content in Anthropology

- Anthropology of Religion

- Human Evolution

- Medical Anthropology

- Physical Anthropology

- Regional Anthropology

- Social and Cultural Anthropology

- Theory and Practice of Anthropology

- Browse content in Business and Management

- Business Ethics

- Business Strategy

- Business History

- Business and Technology

- Business and Government

- Business and the Environment

- Comparative Management

- Corporate Governance

- Corporate Social Responsibility

- Entrepreneurship

- Health Management

- Human Resource Management

- Industrial and Employment Relations

- Industry Studies

- Information and Communication Technologies

- International Business

- Knowledge Management

- Management and Management Techniques

- Operations Management

- Organizational Theory and Behaviour

- Pensions and Pension Management

- Public and Nonprofit Management

- Strategic Management

- Supply Chain Management

- Browse content in Criminology and Criminal Justice

- Criminal Justice

- Criminology

- Forms of Crime

- International and Comparative Criminology

- Youth Violence and Juvenile Justice

- Development Studies

- Browse content in Economics

- Agricultural, Environmental, and Natural Resource Economics

- Asian Economics

- Behavioural Finance

- Behavioural Economics and Neuroeconomics

- Econometrics and Mathematical Economics

- Economic History

- Economic Systems

- Economic Methodology

- Economic Development and Growth

- Financial Markets

- Financial Institutions and Services

- General Economics and Teaching

- Health, Education, and Welfare

- History of Economic Thought

- International Economics

- Labour and Demographic Economics

- Law and Economics

- Macroeconomics and Monetary Economics

- Microeconomics

- Public Economics

- Urban, Rural, and Regional Economics

- Welfare Economics

- Browse content in Education

- Adult Education and Continuous Learning

- Care and Counselling of Students

- Early Childhood and Elementary Education

- Educational Equipment and Technology

- Educational Strategies and Policy

- Higher and Further Education

- Organization and Management of Education

- Philosophy and Theory of Education

- Schools Studies

- Secondary Education

- Teaching of a Specific Subject

- Teaching of Specific Groups and Special Educational Needs

- Teaching Skills and Techniques

- Browse content in Environment

- Applied Ecology (Social Science)

- Climate Change

- Conservation of the Environment (Social Science)

- Environmentalist Thought and Ideology (Social Science)

- Natural Disasters (Environment)

- Social Impact of Environmental Issues (Social Science)

- Browse content in Human Geography

- Cultural Geography

- Economic Geography

- Political Geography

- Browse content in Interdisciplinary Studies

- Communication Studies

- Museums, Libraries, and Information Sciences

- Browse content in Politics

- African Politics

- Asian Politics

- Chinese Politics

- Comparative Politics

- Conflict Politics

- Elections and Electoral Studies

- Environmental Politics

- European Union

- Foreign Policy

- Gender and Politics

- Human Rights and Politics

- Indian Politics

- International Relations

- International Organization (Politics)

- International Political Economy

- Irish Politics

- Latin American Politics

- Middle Eastern Politics

- Political Behaviour

- Political Economy

- Political Institutions

- Political Methodology

- Political Communication

- Political Philosophy

- Political Sociology

- Political Theory

- Politics and Law

- Public Policy

- Public Administration

- Quantitative Political Methodology

- Regional Political Studies

- Russian Politics

- Security Studies

- State and Local Government

- UK Politics

- US Politics

- Browse content in Regional and Area Studies

- African Studies

- Asian Studies

- East Asian Studies

- Japanese Studies

- Latin American Studies

- Middle Eastern Studies

- Native American Studies

- Scottish Studies

- Browse content in Research and Information

- Research Methods

- Browse content in Social Work

- Addictions and Substance Misuse

- Adoption and Fostering

- Care of the Elderly

- Child and Adolescent Social Work

- Couple and Family Social Work

- Developmental and Physical Disabilities Social Work

- Direct Practice and Clinical Social Work

- Emergency Services

- Human Behaviour and the Social Environment

- International and Global Issues in Social Work

- Mental and Behavioural Health

- Social Justice and Human Rights

- Social Policy and Advocacy

- Social Work and Crime and Justice

- Social Work Macro Practice

- Social Work Practice Settings

- Social Work Research and Evidence-based Practice

- Welfare and Benefit Systems

- Browse content in Sociology

- Childhood Studies

- Community Development

- Comparative and Historical Sociology

- Economic Sociology

- Gender and Sexuality

- Gerontology and Ageing

- Health, Illness, and Medicine

- Marriage and the Family

- Migration Studies

- Occupations, Professions, and Work

- Organizations

- Population and Demography

- Race and Ethnicity

- Social Theory

- Social Movements and Social Change

- Social Research and Statistics

- Social Stratification, Inequality, and Mobility

- Sociology of Religion

- Sociology of Education

- Sport and Leisure

- Urban and Rural Studies

- Browse content in Warfare and Defence

- Defence Strategy, Planning, and Research

- Land Forces and Warfare

- Military Administration

- Military Life and Institutions

- Naval Forces and Warfare

- Other Warfare and Defence Issues

- Peace Studies and Conflict Resolution

- Weapons and Equipment

- < Previous chapter

- Next chapter >

6 Statistical Conclusion Validity

- Published: May 2017

Chapter 6 addresses the sub-category of internal validity defined by Shadish et al. as statistical conclusion validity, or “validity of inferences about the correlation (covariance) between treatment and outcome.” The common threats to statistical conclusion validity can arise, or become plausible, through either model misspecification or hypothesis testing. The risk of a serious model misspecification is inversely proportional to the length of the time series, for example, and so is the risk of misstating the Type I and Type II error rates. Threats to statistical conclusion validity arise from the classical and modern hybrid significance testing structures; the serious threats that weigh heavily in p-value tests are shown to be undefined in Bayesian tests. While the particularly vexing threats raised by modern null hypothesis testing are resolved through the elimination of the modern null hypothesis test, threats to statistical conclusion validity would inevitably persist and new threats would arise.

Statistical conclusion validity: some common threats and simple remedies

Affiliation

- Facultad de Psicología, Departamento de Metodología, Universidad Complutense Madrid, Spain.

- PMID: 22952465

- PMCID: PMC3429930

- DOI: 10.3389/fpsyg.2012.00325

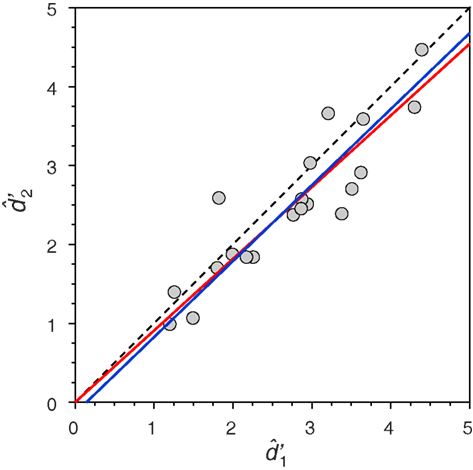

The ultimate goal of research is to produce dependable knowledge or to provide the evidence that may guide practical decisions. Statistical conclusion validity (SCV) holds when the conclusions of a research study are founded on an adequate analysis of the data, generally meaning that adequate statistical methods are used whose small-sample behavior is accurate, besides being logically capable of providing an answer to the research question. Compared to the three other traditional aspects of research validity (external validity, internal validity, and construct validity), interest in SCV has recently grown on evidence that inadequate data analyses are sometimes carried out which yield conclusions that a proper analysis of the data would not have supported. This paper discusses evidence of three common threats to SCV that arise from widespread recommendations or practices in data analysis, namely, the use of repeated testing and optional stopping without control of Type-I error rates, the recommendation to check the assumptions of statistical tests, and the use of regression whenever a bivariate relation or the equivalence between two variables is studied. For each of these threats, examples are presented and alternative practices that safeguard SCV are discussed. Educational and editorial changes that may improve the SCV of published research are also discussed.

Keywords: data analysis; preliminary tests; regression; stopping rules; validity of research.
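The first threat named in the abstract, repeated testing with optional stopping, can be illustrated with a small simulation. The sketch below is not taken from the paper: the sample sizes, the number of looks, the known-variance z-test, and the function names are all assumptions chosen for illustration. It generates data under a true null hypothesis and compares the false-positive rate of a single test at n = 50 with that of testing after every 10 observations and stopping at the first significant result.

```python
import random
from statistics import NormalDist


def z_test_p(sample, sigma=1.0):
    """Two-sided p-value for H0: mean = 0, assuming a known sigma."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / n ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(looks, n_sims=2000, alpha=0.05, seed=0):
    """Share of null-true experiments declared significant at any look."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        data = []
        for n in looks:
            # Collect observations until the sample reaches the next look size.
            data.extend(rng.gauss(0, 1) for _ in range(n - len(data)))
            if z_test_p(data) < alpha:
                hits += 1
                break  # optional stopping: stop at the first "significant" look
    return hits / n_sims


fixed = false_positive_rate(looks=[50])
peeking = false_positive_rate(looks=[10, 20, 30, 40, 50])
print(f"single test at n=50:              {fixed:.3f}")
print(f"test after every 10 observations: {peeking:.3f}")
```

Under these assumptions the single test should stay close to the nominal 5% level, while the peeking procedure rejects a true null noticeably more often, which is the inflation of the Type-I error rate that the abstract warns about.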

Validity – Types, Examples and Guide

Definition:

Validity refers to the extent to which a concept, measure, or study accurately represents the meaning or reality it is intended to capture. It is a fundamental concept in research and assessment that concerns the soundness and appropriateness of the conclusions, inferences, or interpretations drawn from the data or evidence collected.

Research Validity

Research validity refers to the degree to which a study accurately measures or reflects what it claims to measure. In other words, research validity concerns whether the conclusions drawn from a study are based on accurate, reliable and relevant data.

Validity is a concept used in logic and research methodology to assess the strength of an argument or the quality of a research study. It refers to the extent to which a conclusion or result is supported by evidence and reasoning.

How to Ensure Validity in Research

Ensuring validity in research involves several steps and considerations throughout the research process. Here are some key strategies to help maintain research validity:

Clearly Define Research Objectives and Questions

Start by clearly defining your research objectives and formulating specific research questions. This helps focus your study and ensures that you are addressing relevant and meaningful research topics.

Use Appropriate Research Design

Select a research design that aligns with your research objectives and questions. Different types of studies, such as experimental, observational, qualitative, or quantitative, have specific strengths and limitations. Choose the design that best suits your research goals.

Use Reliable and Valid Measurement Instruments

If you are measuring variables or constructs, ensure that the measurement instruments you use are reliable and valid. This involves using established and well-tested tools or developing your own instruments through rigorous validation processes.

Ensure a Representative Sample

When selecting participants or subjects for your study, aim for a sample that is representative of the population you want to generalize to. Consider factors such as age, gender, socioeconomic status, and other relevant demographics to ensure your findings can be generalized appropriately.
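One common way to keep a sample aligned with the population on a known demographic is proportionate stratified sampling. The following is a minimal sketch under stated assumptions: the population records, the `age_group` field, the 10% sampling fraction, and the `stratified_sample` helper are hypothetical and serve only to illustrate the idea.

```python
import random
from collections import defaultdict


def stratified_sample(population, key, fraction, seed=0):
    """Draw the same fraction from every stratum defined by `key`."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[key(unit)].append(unit)
    sample = []
    for members in strata.values():
        # Take at least one unit per stratum so no group disappears.
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical population: 60% aged 18-34, 40% aged 35+.
population = [
    {"id": i, "age_group": "18-34" if i < 600 else "35+"} for i in range(1000)
]
sample = stratified_sample(population, key=lambda p: p["age_group"], fraction=0.1)
young = sum(1 for p in sample if p["age_group"] == "18-34")
print(len(sample), young)
```

Because each stratum is sampled at the same rate, the sample reproduces the 60/40 split of the hypothetical population by construction rather than by chance.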

Address Potential Confounding Factors

Identify potential confounding variables or biases that could impact your results. Implement strategies such as randomization, matching, or statistical control to minimize the influence of confounding factors and increase internal validity.
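Randomization, the first strategy mentioned above, can be sketched in a few lines. This is an illustrative example only: the participant identifiers and the `randomize` helper are hypothetical, and real studies often use blocked or stratified schemes instead of a simple shuffle.

```python
import random


def randomize(participants, seed=0):
    """Randomly split participants into treatment and control groups."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


participants = [f"P{i:02d}" for i in range(1, 21)]  # hypothetical IDs
treatment, control = randomize(participants)
print("treatment:", sorted(treatment))
print("control:  ", sorted(control))
```

Because assignment depends only on the random shuffle, any confounding variable, measured or not, is expected to be balanced across the two groups on average.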

Minimize Measurement and Response Biases

Be aware of measurement biases and response biases that can occur during data collection. Use standardized protocols, clear instructions, and trained data collectors to minimize these biases. Employ techniques like blinding or double-blinding in experimental studies to reduce bias.

Conduct Appropriate Statistical Analyses

Ensure that the statistical analyses you employ are appropriate for your research design and data type. Select statistical tests that are relevant to your research questions and use robust analytical techniques to draw accurate conclusions from your data.
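As one example of an analysis that makes few distributional assumptions, a permutation test compares two groups by reshuffling the group labels. The sketch below is illustrative: the scores are invented, the `permutation_test` helper is hypothetical, and a permutation test is only one of several techniques that could fit a two-group design.

```python
import random
from statistics import mean


def permutation_test(a, b, n_perm=5000, seed=0):
    """Two-sided permutation p-value for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    return (extreme + 1) / (n_perm + 1)


# Invented scores for illustration only.
treatment = [12.1, 13.4, 11.8, 14.2, 13.9, 12.7]
control = [10.2, 11.1, 9.8, 11.5, 10.9, 10.4]
p = permutation_test(treatment, control)
print(f"permutation p-value: {p:.4f}")
```

The p-value is the share of random relabelings that produce a mean difference at least as large as the observed one, so it does not rely on normality of the scores.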

Consider External Validity

While it may not always be possible to achieve high external validity, be mindful of the generalizability of your findings. Clearly describe your sample and study context to help readers understand the scope and limitations of your research.

Peer Review and Replication

Submit your research for peer review by experts in your field. Peer review helps identify potential flaws, biases, or methodological issues that can impact validity. Additionally, encourage replication studies by other researchers to validate your findings and enhance the overall reliability of the research.

Transparent Reporting

Clearly and transparently report your research methods, procedures, data collection, and analysis techniques. Provide sufficient details for others to evaluate the validity of your study and replicate your work if needed.

Types of Validity

There are several types of validity that researchers consider when designing and evaluating studies. Here are some common types of validity:

Internal Validity

Internal validity relates to the degree to which a study accurately identifies causal relationships between variables. It addresses whether the observed effects can be attributed to the manipulated independent variable rather than confounding factors. Threats to internal validity include selection bias, history effects, maturation of participants, and instrumentation issues.

External Validity

External validity concerns the generalizability of research findings to the broader population or real-world settings. It assesses the extent to which the results can be applied to other individuals, contexts, or timeframes. Factors that can limit external validity include sample characteristics, research settings, and the specific conditions under which the study was conducted.

Construct Validity

Construct validity examines whether a study adequately measures the intended theoretical constructs or concepts. It focuses on the alignment between the operational definitions used in the study and the underlying theoretical constructs. Construct validity can be threatened by issues such as poor measurement tools, inadequate operational definitions, or a lack of clarity in the conceptual framework.

Content Validity

Content validity refers to the degree to which a measurement instrument or test adequately covers the entire range of the construct being measured. It assesses whether the items or questions included in the measurement tool represent the full scope of the construct. Content validity is often evaluated through expert judgment, reviewing the relevance and representativeness of the items.

Criterion Validity

Criterion validity determines the extent to which a measure or test is related to an external criterion or standard. It assesses whether the results obtained from a measurement instrument align with other established measures or outcomes. Criterion validity can be divided into two subtypes: concurrent validity, which examines the relationship between the measure and the criterion at the same time, and predictive validity, which investigates the measure’s ability to predict future outcomes.
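Criterion validity is often summarized by the correlation between the measure and the criterion. The sketch below computes a Pearson correlation from scratch; the test scores, the later performance ratings, and the `pearson_r` helper are hypothetical values and names used only for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical data: selection-test scores and later performance ratings.
test_scores = [52, 61, 70, 74, 80, 88]
job_ratings = [2.9, 3.1, 3.6, 3.5, 4.2, 4.4]
r = pearson_r(test_scores, job_ratings)
print(f"validity coefficient r = {r:.2f}")
```

When the criterion is measured later than the test, as in this made-up example, the coefficient speaks to predictive validity; when both are measured at the same time, it speaks to concurrent validity.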

Face Validity

Face validity refers to the degree to which a measurement or test appears, on the surface, to measure what it intends to measure. It is a subjective assessment based on whether the items seem relevant and appropriate to the construct being measured. Face validity is often used as an initial evaluation before conducting more rigorous validity assessments.

Importance of Validity

Validity is crucial in research for several reasons:

- Accurate Measurement: Validity ensures that the measurements or observations in a study accurately represent the intended constructs or variables. Without validity, researchers cannot be confident that their results truly reflect the phenomena they are studying. Validity allows researchers to draw accurate conclusions and make meaningful inferences based on their findings.

- Credibility and Trustworthiness: Validity enhances the credibility and trustworthiness of research. When a study demonstrates high validity, it indicates that the researchers have taken appropriate measures to ensure the accuracy and integrity of their work. This strengthens the confidence of other researchers, peers, and the wider scientific community in the study’s results and conclusions.

- Generalizability: Validity helps determine the extent to which research findings can be generalized beyond the specific sample and context of the study. By addressing external validity, researchers can assess whether their results can be applied to other populations, settings, or situations. This information is valuable for making informed decisions, implementing interventions, or developing policies based on research findings.

- Sound Decision-Making: Validity supports informed decision-making in various fields, such as medicine, psychology, education, and social sciences. When validity is established, policymakers, practitioners, and professionals can rely on research findings to guide their actions and interventions. Validity ensures that decisions are based on accurate and trustworthy information, which can lead to better outcomes and more effective practices.

- Avoiding Errors and Bias: Validity helps researchers identify and mitigate potential errors and biases in their studies. By addressing internal validity, researchers can minimize confounding factors and alternative explanations, ensuring that the observed effects are genuinely attributable to the manipulated variables. Validity assessments also highlight measurement errors or shortcomings, enabling researchers to improve their measurement tools and procedures.

- Progress of Scientific Knowledge: Validity is essential for the advancement of scientific knowledge. Valid research contributes to the accumulation of reliable and valid evidence, which forms the foundation for building theories, developing models, and refining existing knowledge. Validity allows researchers to build upon previous findings, replicate studies, and establish a cumulative body of knowledge in various disciplines. Without validity, the scientific community would struggle to make meaningful progress and establish a solid understanding of the phenomena under investigation.

- Ethical Considerations: Validity is closely linked to ethical considerations in research. Conducting valid research ensures that participants’ time, effort, and data are not wasted on flawed or invalid studies. It upholds the principle of respect for participants’ autonomy and promotes responsible research practices. Validity is also important when making claims or drawing conclusions that may have real-world implications, as misleading or invalid findings can have adverse effects on individuals, organizations, or society as a whole.

Examples of Validity

Here are some examples of validity in different contexts:

- Example 1: All men are mortal. John is a man. Therefore, John is mortal. This argument is logically valid because the conclusion follows logically from the premises.

- Example 2: If it is raining, then the ground is wet. The ground is wet. Therefore, it is raining. This argument is not logically valid because there could be other reasons for the ground being wet, such as watering the plants.

- Example 1: In a study examining the relationship between caffeine consumption and alertness, the researchers use established measures of both variables, ensuring that they are accurately capturing the concepts they intend to measure. This demonstrates construct validity.

- Example 2: A researcher develops a new questionnaire to measure anxiety levels. They administer the questionnaire to a group of participants and find that it correlates highly with other established anxiety measures. This indicates good construct validity for the new questionnaire.

- Example 1: A study on the effects of a particular teaching method is conducted in a controlled laboratory setting. The findings of the study may lack external validity because the conditions in the lab may not accurately reflect real-world classroom settings.

- Example 2: A research study on the effects of a new medication includes participants from diverse backgrounds and age groups, increasing the external validity of the findings to a broader population.

- Example 1: In an experiment, a researcher manipulates the independent variable (e.g., a new drug) and controls for other variables to ensure that any observed effects on the dependent variable (e.g., symptom reduction) are indeed due to the manipulation. This establishes internal validity.

- Example 2: A researcher conducts a study examining the relationship between exercise and mood by administering questionnaires to participants. However, the study lacks internal validity because it does not control for other potential factors that could influence mood, such as diet or stress levels.

- Example 1: A teacher develops a new test to assess students’ knowledge of a particular subject. The items on the test appear to be relevant to the topic at hand and align with what one would expect to find on such a test. This suggests face validity, as the test appears to measure what it intends to measure.

- Example 2: A company develops a new customer satisfaction survey. The questions included in the survey seem to address key aspects of the customer experience and capture the relevant information. This indicates face validity, as the survey seems appropriate for assessing customer satisfaction.

Content Validity

- Example 1: A team of experts reviews a comprehensive curriculum for a high school biology course. They evaluate the curriculum to ensure that it covers all the essential topics and concepts necessary for students to gain a thorough understanding of biology. This demonstrates content validity, as the curriculum is representative of the domain it intends to cover.

- Example 2: A researcher develops a questionnaire to assess career satisfaction. The questions in the questionnaire encompass various dimensions of job satisfaction, such as salary, work-life balance, and career growth. This indicates content validity, as the questionnaire adequately represents the different aspects of career satisfaction.

Criterion Validity

- Example 1: A company wants to evaluate the effectiveness of a new employee selection test. They administer the test to a group of job applicants and later assess the job performance of those who were hired. If there is a strong correlation between the test scores and subsequent job performance, it suggests criterion validity, indicating that the test is predictive of job success.

- Example 2: A researcher wants to determine if a new medical diagnostic tool accurately identifies a specific disease. They compare the results of the diagnostic tool with the gold standard diagnostic method and find a high level of agreement. This demonstrates criterion validity, indicating that the new tool diagnoses the disease about as accurately as the established method.
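The two criterion validity examples can be made concrete with a small calculation. The sketch below is only an illustration: all scores, ratings, and test results are invented, and the helper names `pearson_r` and `sensitivity_specificity` are hypothetical. It computes a Pearson correlation between selection test scores and later job performance, and the sensitivity and specificity of a new diagnostic tool against a gold standard.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def sensitivity_specificity(new_tool, gold_standard):
    """Sensitivity and specificity of a binary tool against a reference method."""
    pairs = list(zip(new_tool, gold_standard))
    tp = sum(1 for t, g in pairs if t and g)          # true positives
    tn = sum(1 for t, g in pairs if not t and not g)  # true negatives
    fp = sum(1 for t, g in pairs if t and not g)      # false positives
    fn = sum(1 for t, g in pairs if not t and g)      # false negatives
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical data for Example 1: test scores and later performance ratings.
test_scores = [55, 62, 70, 74, 81, 88, 93]
performance = [2.9, 3.1, 3.4, 3.3, 4.0, 4.2, 4.6]
r = pearson_r(test_scores, performance)

# Hypothetical data for Example 2: 1 = disease detected, 0 = not detected.
tool = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]
gold = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(tool, gold)

print(f"Predictive validity (Pearson r): {r:.2f}")
print(f"Sensitivity: {sens:.2f}, specificity: {spec:.2f}")
```

A high correlation or high agreement on a small invented sample like this one says little on its own; in a real study these figures would be reported together with the sample size and a measure of uncertainty.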

Where to Write About Validity in a Thesis

In a thesis, discussions related to validity are typically included in the methodology, results, and discussion sections. Here are some specific places where you can address validity within your thesis:

Research Design and Methodology

In the methodology section, provide a clear and detailed description of the measures, instruments, or data collection methods used in your study. Discuss the steps taken to establish or assess the validity of these measures. Explain the rationale behind the selection of specific validity types relevant to your study, such as content validity, criterion validity, or construct validity. Discuss any modifications or adaptations made to existing measures and their potential impact on validity.

Measurement Procedures

In the methodology section, elaborate on the procedures implemented to ensure the validity of measurements. Describe how potential biases or confounding factors were addressed, controlled, or accounted for to enhance internal validity. Provide details on how you ensured that the measurement process accurately captures the intended constructs or variables of interest.

Data Collection

In the methodology section, discuss the steps taken to collect data and ensure data validity. Explain any measures implemented to minimize errors or biases during data collection, such as training of data collectors, standardized protocols, or quality control procedures. Address any potential limitations or threats to validity related to the data collection process.

Data Analysis and Results

In the results section, present the analysis and findings related to validity. Report any statistical tests, correlations, or other measures used to assess validity. Provide interpretations and explanations of the results obtained. Discuss the implications of the validity findings for the overall reliability and credibility of your study.
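When a validity correlation is reported in the results section, it is usually more informative alongside a confidence interval. The following is a minimal sketch, assuming the common Fisher z-transformation approach; the function name `correlation_ci` and the values r = 0.62 and n = 85 are hypothetical and chosen only for illustration.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def correlation_ci(r, n, confidence=0.95):
    """Approximate confidence interval for a Pearson correlation.

    Uses the Fisher z-transformation, which assumes roughly bivariate
    normal data and a sample size greater than 3.
    """
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = atanh(r)                 # transform r to the z scale
    se = 1 / sqrt(n - 3)         # standard error on the z scale
    crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return tanh(z - crit * se), tanh(z + crit * se)


# Hypothetical result: a new questionnaire correlates r = 0.62 with an
# established measure in a sample of 85 participants.
low, high = correlation_ci(0.62, 85)
print(f"r = 0.62, 95% CI [{low:.2f}, {high:.2f}], n = 85")
```

A wide interval signals that the validity evidence is weaker than the point estimate alone suggests, which is worth stating explicitly in the interpretation.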

Limitations and Future Directions

In the discussion or conclusion section, reflect on the limitations of your study, including limitations related to validity. Acknowledge any threats to validity or weaknesses in it that you encountered during your research. Discuss how these limitations may have influenced the interpretation of your findings and suggest avenues for future research that could address these validity concerns.

Applications of Validity

Validity is applicable in various areas and contexts where research and measurement play a role. Here are some common applications of validity:

Psychological and Behavioral Research

Validity is crucial in psychology and behavioral research to ensure that measurement instruments accurately capture constructs such as personality traits, intelligence, attitudes, emotions, or psychological disorders. Validity assessments help researchers determine if their measures are truly measuring the intended psychological constructs and if the results can be generalized to broader populations or real-world settings.

Educational Assessment

Validity is essential in educational assessment to determine if tests, exams, or assessments accurately measure students’ knowledge, skills, or abilities. It ensures that the assessment aligns with the educational objectives and provides trustworthy information about student performance. Validity assessments also help determine whether the assessment is fair and accurate for all students, regardless of their demographic characteristics, language proficiency, or cultural background.

Program Evaluation

Validity plays a crucial role in program evaluation, where researchers assess the effectiveness and impact of interventions, policies, or programs. By establishing validity, evaluators can determine if the observed outcomes are genuinely attributable to the program being evaluated rather than extraneous factors. Validity assessments also help ensure that the evaluation findings are applicable to different populations, contexts, or timeframes.

Medical and Health Research

Validity is essential in medical and health research to ensure the accuracy and reliability of diagnostic tools, measurement instruments, and clinical assessments. Validity assessments help determine if a measurement accurately identifies the presence or absence of a medical condition, measures the effectiveness of a treatment, or predicts patient outcomes. Validity is crucial for establishing evidence-based medicine and informing medical decision-making.

Social Science Research

Validity is relevant in various social science disciplines, including sociology, anthropology, economics, and political science. Researchers use validity to ensure that their measures and methods accurately capture social phenomena, such as social attitudes, behaviors, social structures, or economic indicators. Validity assessments support the reliability and credibility of social science research findings.

Market Research and Surveys

Validity is important in market research and survey studies to ensure that the survey questions effectively measure consumer preferences, buying behaviors, or attitudes towards products or services. Validity assessments help researchers determine if the survey instrument is accurately capturing the desired information and if the results can be generalized to the target population.

Limitations of Validity

Each type of validity has its own limitations and threats:

- Construct Validity: Limitations of construct validity include the potential for measurement error, inadequate operational definitions of constructs, or the failure to capture all aspects of a complex construct.

- Internal Validity: Limitations of internal validity may arise from confounding variables, selection bias, or the presence of extraneous factors that could influence the study outcomes, making it difficult to attribute causality accurately.

- External Validity: Limitations of external validity can occur when the study sample does not represent the broader population, when the research setting differs significantly from real-world conditions, or when the study lacks ecological validity, i.e., the findings do not reflect real-world complexities.

- Measurement Validity: Limitations of measurement validity can arise from measurement error, inadequately designed or flawed measurement scales, or limitations inherent in self-report measures, such as social desirability bias or recall bias.

- Statistical Conclusion Validity: Limitations in statistical conclusion validity can occur due to sampling errors, inadequate sample sizes, or improper statistical analysis techniques, leading to incorrect conclusions or generalizations.

- Temporal Validity: Limitations of temporal validity arise when the study results become outdated due to changes in the studied phenomena, interventions, or contextual factors.

- Researcher Bias: Researcher bias can affect the validity of a study. Biases can emerge through the researcher’s subjective interpretation, influence of personal beliefs, or preconceived notions, leading to unintentional distortion of findings or failure to consider alternative explanations.

- Ethical Validity: Limitations can arise if the study design or methods involve ethical concerns, such as the use of deceptive practices, inadequate informed consent, or potential harm to participants.
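The point about inadequate sample sizes under statistical conclusion validity can be illustrated with a rough sample size calculation. This is a sketch under stated assumptions, not a full power analysis: it uses the standard normal approximation for comparing two independent group means, the function name `required_n_per_group` is hypothetical, and the effect size is a standardized mean difference (Cohen's d) that the researcher has to supply.

```python
from math import ceil
from statistics import NormalDist


def required_n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sided,
    two-group comparison of means (normal approximation)."""
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must lie between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Assumed effect sizes, using the conventional small/medium/large labels.
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {required_n_per_group(d)} participants per group")
```

Because this relies on the normal approximation, an exact calculation based on the t distribution gives slightly larger numbers. The main takeaway is that small effects require far larger samples, and a study that falls well short of them risks drawing incorrect conclusions.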

Also see Reliability Vs Validity

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Statistical Validity

Statistical validity refers to whether a statistical study is able to draw conclusions that are in agreement with statistical and scientific laws. In other words, a conclusion drawn from a data set after experimentation is considered valid if it follows from the data through sound scientific reasoning and the correct application of mathematical and statistical laws.
