How to Write a Systematic Review of the Literature

Affiliations.

- 1 Texas Tech University, Lubbock, TX, USA.

- 2 University of Florida, Gainesville, FL, USA.

- PMID: 29283007

- DOI: 10.1177/1937586717747384

This article provides a step-by-step approach to conducting and reporting systematic literature reviews (SLRs) in the domain of healthcare design and discusses some of the key quality issues associated with SLRs. An SLR, as the name implies, is a systematic way of collecting, critically evaluating, integrating, and presenting findings from across multiple research studies on a research question or topic of interest. An SLR provides a way to assess the quality level and magnitude of existing evidence on a question or topic of interest, and it offers a broader and more accurate level of understanding than a traditional literature review. A systematic review adheres to standardized methodologies/guidelines in systematic searching, filtering, reviewing, critiquing, interpreting, synthesizing, and reporting of findings from multiple publications on a topic/domain of interest. The Cochrane Collaboration is the best-known and most widely respected global organization producing SLRs within the healthcare field, and a standard to follow for any researcher seeking to write a transparent and methodologically sound SLR. Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), like the Cochrane Collaboration, was created by an international network of health-based collaborators and provides a framework for SLRs to ensure methodological rigor and quality. The PRISMA statement is an evidence-based guide consisting of a checklist and flowchart intended to be used as tools by authors seeking to write SLRs and meta-analyses.

Keywords: evidence based design; healthcare design; systematic literature review.

- Evidence-Based Medicine* / organization & administration

- Research Design*

- Systematic Reviews as Topic*


Systematic Reviews


What Makes a Systematic Review Different from Other Types of Reviews?


Reproduced from Grant, M. J. and Booth, A. (2009), A typology of reviews: an analysis of 14 review types and associated methodologies. Health Information & Libraries Journal, 26: 91–108. doi:10.1111/j.1471-1842.2009.00848.x


- Last Updated: Apr 17, 2024 2:02 PM

- URL: https://guides.library.ucla.edu/systematicreviews


Systematic Literature Review or Literature Review?


As a researcher, you may be required to conduct a literature review. But what kind of review do you need to complete? Is it a systematic literature review or a standard literature review? In this article, we’ll outline the purpose of a systematic literature review, the difference between a literature review and a systematic review, and other important aspects of systematic literature reviews.

What is a Systematic Literature Review?

The purpose of a systematic literature review is simple: to provide a high-level overview of the evidence on a particular research question. That question is itself highly focused, and the review of the literature is matched to it; an example is a focused question about medical or clinical outcomes.

The components of a systematic literature review are quite different from those of the standard literature reviews that most of us are used to (more on this below). Because the research question is so specific, a systematic literature review typically involves more than one primary author. There’s more work involved in a systematic literature review, so it makes sense to divide the work among two or three (or even more) researchers.

Your systematic literature review will follow clear, defined protocols that are decided on before any review begins. This involves extensive planning and a deliberately designed search strategy tailored to the specific research question. Every aspect of a systematic literature review, including the research protocol, the databases used, and the date of each search, must be transparent so that other researchers can be assured that the review is comprehensive and focused.

Systematic literature reviews originated in medical science, but they are now used for evidence-based research questions in other fields as well. In addition to focus and transparency, other aspects of a quality systematic literature review include:

- Clear and concise review and summary

- Comprehensive coverage of the topic

- Accessibility and equality of the research reviewed

Systematic Review vs Literature Review

The difference between a literature review and a systematic review comes back to the initial research question. Whereas the systematic review is very specific and focused, the standard literature review is much more general. The components of a literature review, for example, are similar to those of any other research paper: an introduction, a description of the methods used, a discussion and conclusion, and a reference list or bibliography.

A systematic review, however, includes entirely different components that reflect the specificity of its research question, and the requirement for transparency and inclusion. For instance, the systematic review will include:

- Eligibility criteria for included research

- A description of the systematic research search strategy

- An assessment of the validity of reviewed research

- Interpretations of the results of research included in the review

As you can see, unlike a general overview or summary of a topic, a systematic literature review requires much more detail and work to compile than a standard literature review. Indeed, it can take years to conduct and write a systematic literature review. But the information that practitioners and other researchers can glean from a systematic literature review is, by its very nature, exceptionally valuable.

This is not to diminish the value of the standard literature review; the importance of literature reviews in research writing is discussed in a separate article. The two types of review simply answer different questions and therefore have different purposes and roles in the world of research and evidence-based writing.

Systematic Literature Review vs Meta-Analysis

It would be understandable to think that a systematic literature review is similar to a meta-analysis. But whereas a systematic review can include several research studies to answer a specific question, a meta-analysis statistically combines the quantitative results of different studies, which also helps to reveal inconsistencies or discrepancies between them. For more about this topic, see the article Systematic Review vs Meta-Analysis.



Systematic Reviews & Literature Reviews

Evidence synthesis: part 1.

This blog post is the first in a series exploring evidence synthesis. We’re going to start by looking at two types of evidence synthesis: literature reviews and systematic reviews. To help me with this topic, I looked at a number of research guides from other institutions, e.g., Cornell University Libraries.

The Key Differences Between a Literature Review and a Systematic Review

Overall, while both literature reviews and systematic reviews involve reviewing existing research literature, systematic reviews adhere to more rigorous and transparent methods to minimize bias and provide robust evidence to inform decision-making in education and other fields. If you are interested in learning about other types of evidence synthesis, this decision tree created by Cornell Libraries (Robinson, n.d.) is a nice visual introduction.

Along with exploring evidence synthesis I am also interested in generative A.I. I want to be transparent about how I used A.I. to create the table above. I fed this prompt into ChatGPT:

“List the differences between a literature review and a systemic review for a graduate student of education”

I wanted to see what it would produce. I reformatted the list into a table so that it would be easier to compare and contrast these two reviews, much like the one created by Cornell University Libraries (Kibbee, 2024). I think ChatGPT did a pretty good job. I did have to do quite a bit of editing and make sure that what was created matched what I already knew. There are things ChatGPT left out, for example time frames and how many people are needed for a systematic review, but we can revisit those in a later post.

Kibbee, M. (2024, April 10). Libguides: A guide to evidence synthesis: Cornell University Library Evidence Synthesis Service. Cornell University Library. https://guides.library.cornell.edu/evidence-synthesis/intro


Literature Review vs Systematic Review


Definitions

It’s common to confuse systematic and literature reviews because both are used to provide a summary of the existing literature or research on a specific topic. Despite this commonality, the two types of review differ significantly. The following table provides a detailed explanation of the differences between systematic and literature reviews.

Kysh, Lynn (2013): Difference between a systematic review and a literature review. [figshare]. Available at: http://dx.doi.org/10.6084/m9.figshare.766364


- Last Updated: Dec 15, 2023 10:19 AM

- URL: https://libguides.sjsu.edu/LitRevVSSysRev

Understanding the influence of different proxy perspectives in explaining the difference between self-rated and proxy-rated quality of life in people living with dementia: a systematic literature review and meta-analysis

- Open access

- Published: 24 April 2024


- Lidia Engel¹ (ORCID: orcid.org/0000-0002-7959-3149)

- Valeriia Sokolova¹

- Ekaterina Bogatyreva²

- Anna Leuenberger²

Proxy assessment can be elicited via the proxy-patient perspective (i.e., asking proxies to assess the patient’s quality of life (QoL) as they think the patient would respond) or proxy-proxy perspective (i.e., asking proxies to provide their own perspective on the patient’s QoL). This review aimed to identify the role of the proxy perspective in explaining the differences between self-rated and proxy-rated QoL in people living with dementia.

A systematic literature review was conducted by sourcing articles from a previously published review, supplemented by an update of that review in four bibliographic databases. Peer-reviewed studies that reported both self-reported and proxy-reported mean QoL estimates using the same standardized QoL instrument, published in English, and focused on the QoL of people with dementia were included. A meta-analysis was conducted to synthesize the mean differences between self- and proxy-report across different proxy perspectives.

The review included 96 articles, from which 635 observations were extracted. Most observations used the proxy-proxy perspective (79%), compared with 10% using the proxy-patient perspective; the remaining 11% came from studies that did not state the perspective. The QOL-AD was the most commonly used measure, followed by the EQ-5D and DEMQOL. The standardized mean difference (SMD) between the self- and proxy-report was lower for the proxy-patient perspective (SMD: 0.250; 95% CI 0.116; 0.384) compared to the proxy-proxy perspective (SMD: 0.532; 95% CI 0.456; 0.609).

Different proxy perspectives affect the ratings of QoL, whereby adopting a proxy-proxy QoL perspective has a higher inter-rater gap in comparison with the proxy-patient perspective.


Quality of life (QoL) has become an important outcome for research and practice, but obtaining reliable and valid estimates remains a challenge in people living with dementia [ 1 ]. According to the Diagnostic and Statistical Manual of Mental Disorders (DSM-5) criteria [ 2 ], dementia, termed Major Neurocognitive Disorder (MND), involves a significant decline in at least one cognitive domain (executive function, complex attention, language, learning, memory, perceptual-motor, or social cognition), where the decline represents a change from a patient's prior level of cognitive ability, is persistent and progressive over time, is not associated exclusively with an episode of delirium, and reduces a person’s ability to perform everyday activities. Since dementia is one of the most pressing challenges for healthcare systems today [ 3 ], it is critical to study its impact on QoL. The World Health Organization defines the concept of QoL as “individuals' perceptions of their position in life in the context of the culture and value systems in which they live and in relation to their goals, expectations, standards, and concerns” [ 4 ]. It is a broad-ranging concept that incorporates, in a complex way, a person’s physical health, psychological state, level of independence, social relationships, personal beliefs, and relationship to salient features of the environment.

Although there is evidence that people with mild to moderate dementia can reliably rate their own QoL [ 5 ], as the disease progresses, there is typically a decline in memory, attention, judgment, insight, and communication that may compromise self-reporting of QoL [ 6 ]. Additionally, behavioral symptoms, such as agitation, and affective symptoms, such as depression, may present another challenge in obtaining self-reported QoL ratings due to emotional shifts and unwillingness to complete the assessment [ 7 ]. Although QoL is subjective and should ideally be assessed from an individual’s own perspective [ 8 ], the decline in cognitive function emphasizes the need for proxy-reporting by family members, health professionals, or care staff who are asked to report on behalf of the person with dementia. However, proxy-reports are not substitutable for self-reports from people with dementia, as they offer supplementary insights, reflecting the perceptions and viewpoints of people surrounding the person with dementia [ 9 ].

Previous research has consistently highlighted disagreement between self-rated and proxy-rated QoL in people living with dementia, with proxies generally providing lower ratings (indicating poorer QoL) than the person’s own ratings [ 8 , 10 , 11 , 12 ]. Greater cognitive impairment, associated with more severe dementia, has been linked to larger differences between self-ratings and proxy-ratings obtained from family caregivers, as it becomes increasingly difficult for severely cognitively impaired individuals to respond to questions that require contemplation, introspection, and sustained attention [ 13 , 14 ]. Moreover, non-cognitive factors, such as awareness of disease and depressive symptoms, play an important role when comparing QoL ratings between individuals with dementia and their proxies [ 15 ]. Qualitative evidence has also shown that people with dementia tend to compare themselves with their peers, whereas carers make comparisons with how the person used to be in the past [ 9 ]. The disagreement between self-reported QoL and carer proxy-rated QoL could be modulated by personal, cognitive, or relational factors, for example, the type of relationship or the frequency of contact maintained, the person’s cognitive status, the carer’s own feelings about dementia, the carer’s mood, and the perceived burden of caregiving [ 14 , 16 ]. Disagreement may also arise from the person with dementia’s difficulty communicating symptoms and from proxies’ inability to recognize certain symptoms, such as pain [ 17 ], or be affected by the amount of time proxies spend with the person with dementia [ 18 ]. These factors may also prevent proxies from accurately rating certain domains of QoL, with previous evidence showing higher levels of agreement for observable domains, such as mobility, than for less observable domains like emotional wellbeing [ 8 ].

Finally, agreement also depends on the type of proxy (i.e., informal/family carers or professional staff) and the nature of their relationship; for instance, proxy QoL scores provided by formal carers tend to be higher (reflecting better QoL) than the scores supplied by family members [ 19 , 20 ]. Staff members might associate residents’ QoL with the quality of care delivered or the stage of their cognitive impairment, whereas relatives often compare with the person’s QoL when they were younger, lived in their own home, and did not have dementia [ 20 ].

What has not been fully examined to date is the role of the different proxy perspectives employed in QoL questionnaires in explaining disagreement between self-rated and proxy-rated scores in people with dementia. Pickard et al. (2005) proposed a conceptual framework for proxy assessment that distinguishes between the proxy-patient perspective (i.e., asking proxies to assess the patient’s QoL as they think the patient would respond) and the proxy-proxy perspective (i.e., asking proxies to provide their own perspective on the patient’s QoL) [ 21 ]. In this framework, the intra-proxy gap describes the difference between the proxy-patient and proxy-proxy perspectives, whereas the inter-rater gap is the difference between self-report and proxy-report [ 21 ].

Existing generic and dementia-specific QoL instruments specify the perspective explicitly in their instructions or imply it indirectly in their wording. For example, the instructions of the Dementia Quality of Life Measure (DEMQOL) ask proxies to give the answer they think their relative would give (i.e., proxy-patient perspective) [ 22 ], whereas the family version of the Quality of Life in Alzheimer’s Disease (QOL-AD) instructs proxies to rate their relative’s current situation as they (the proxy) see it (i.e., proxy-proxy perspective) [ 7 ]. Some instruments, like the EQ-5D measures, have two proxy versions, one for each perspective [ 23 , 24 ]. The Adult Social Care Outcome Toolkit (ASCOT) proxy version, on the other hand, asks proxies to complete the questions from both perspectives: their own opinion and how they think the person would answer [ 25 ].

QoL scores generated using different perspectives are expected to differ, with qualitative evidence showing that carers rate the person with dementia’s QoL lower (worse) when instructed to comment from their own perspective than from the perspective of the person with dementia [ 26 ]. However, to our knowledge, no previous review has fully synthesized existing evidence in this area. Therefore, we aimed to undertake a systematic literature review to examine the role of different proxy-assessment perspectives in explaining differences between self-rated and proxy-rated QoL in people living with dementia. The review was conducted under the hypothesis that the difference in QoL estimates would be larger when adopting the proxy-proxy perspective than when adopting the proxy-patient perspective.

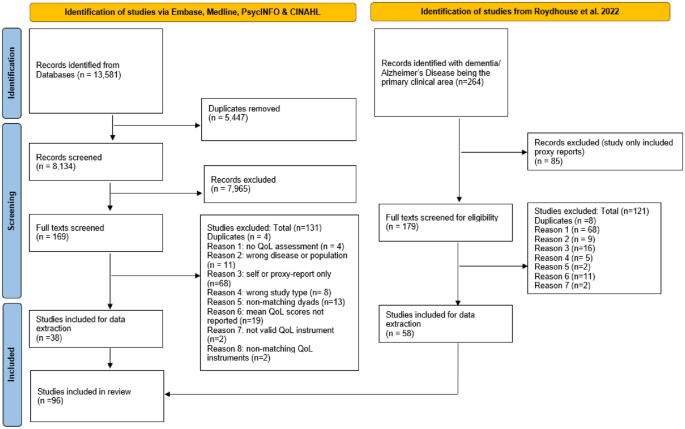

The review was registered with the International Prospective Register of Systematic Reviews (CRD42022333542) and followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (see Appendix 1 ) [ 27 ].

Search strategy

This review used two approaches to obtain literature. First, primary articles from an existing review by Roydhouse et al. were retrieved [ 28 ]. That review included studies published from inception to February 2018 that compared self- and proxy-reports; studies that focused explicitly on Alzheimer’s disease or dementia were retrieved for the current review, and two reviewers conducted a full-text review to assess whether each study met the eligibility criteria listed below. Second, an update of the Roydhouse et al. review was undertaken to capture more recent studies. The search strategy by Roydhouse et al. was amended to cover studies published after January 1, 2018, and was limited to studies within the context of dementia. The original search was undertaken over a three-week period (17/11/2021–9/12/2021) and then updated on July 3, 2023. Peer-reviewed literature was sourced from the MEDLINE, CINAHL, and PsycINFO databases via EBSCOhost, as well as EMBASE. Four main search term categories were used: (1) proxy terms (e.g., care*-report*), (2) QoL/outcome terms (e.g., ‘quality of life’), (3) disease terms (e.g., ‘dementia’), and (4) pediatric terms (e.g., ‘pediatric*’) for exclusion. Keywords were limited to titles and abstracts, and MeSH terms were included for all databases. The full search strategy can be found in Appendix 2 . The first three search term categories were combined with AND, and the NOT operator was used to exclude pediatric terms. A limiter was applied in all database searches to include only studies with human participants and articles published in English.
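The Boolean logic can be made concrete with a minimal Python sketch that assembles a query from the four term categories. Synonyms within a category are joined with OR, the first three categories are combined with AND, and the pediatric category is excluded with NOT. Each category holds only the single example term given in the text; the review's full term lists are in Appendix 2. The sketch omits platform-specific field tags for restricting searches to titles and abstracts, because that syntax differs between EBSCOhost and EMBASE. The constant and function names are hypothetical.

```python
# Illustrative example terms only; the complete term lists are in Appendix 2.
PROXY_TERMS = ["care*-report*"]
QOL_TERMS = ['"quality of life"']
DISEASE_TERMS = ["dementia"]
EXCLUDE_TERMS = ["pediatric*"]


def or_block(terms):
    """Join synonyms within one category with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(include_blocks, exclude_terms):
    """AND the inclusion categories together, then NOT out the exclusion category."""
    query = " AND ".join(or_block(block) for block in include_blocks)
    if exclude_terms:
        query += " NOT " + or_block(exclude_terms)
    return query


query = build_query([PROXY_TERMS, QOL_TERMS, DISEASE_TERMS], EXCLUDE_TERMS)
print(query)  # (care*-report*) AND ("quality of life") AND (dementia) NOT (pediatric*)
```

Adding a synonym to any list widens that category with OR without changing how the categories combine.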

Selection criteria

Studies from all geographical locations were included in the review if they (1) were published in English in a peer-reviewed journal (conference abstracts, dissertations, and gray literature were excluded); (2) were primary studies (reviews were excluded); (3) clearly defined the disease of participants, which was limited to Alzheimer’s disease or dementia; (4) reported separate QoL scores for people with dementia (studies that included mixed populations had to report a separate QoL score for people with dementia to be considered); (5) used a standardized, existing QoL instrument for assessment; and (6) provided mean self-reported and proxy-reported QoL scores for the same sample of dyads using the same QoL instrument (studies that reported means for non-matched samples were excluded).
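The six selection criteria amount to a conjunction of checks. The minimal sketch below assumes a hypothetical `ScreenedStudy` record that a reviewer fills in after reading each full text. It returns the numbered criteria a study fails, so an exclusion reason can be logged with each decision. The field names are illustrative and are not taken from the review's extraction template.

```python
from dataclasses import dataclass


@dataclass
class ScreenedStudy:
    """Hypothetical summary of one full text; field names are illustrative."""
    english: bool
    peer_reviewed_journal: bool    # conference abstracts, dissertations, gray literature -> False
    primary_study: bool            # reviews -> False
    dementia_population: bool      # disease clearly defined as Alzheimer's disease or dementia
    separate_dementia_qol: bool    # pure dementia sample, or mixed sample with a separate dementia score
    standardized_instrument: bool  # an existing, standardized QoL instrument
    matched_dyad_means: bool       # self- and proxy-report means, same dyads, same instrument


def failed_criteria(study: ScreenedStudy) -> list[int]:
    """Return the numbers (1-6) of the selection criteria the study fails."""
    checks = {
        1: study.english and study.peer_reviewed_journal,
        2: study.primary_study,
        3: study.dementia_population,
        4: study.separate_dementia_qol,
        5: study.standardized_instrument,
        6: study.matched_dyad_means,
    }
    return [number for number, passed in checks.items() if not passed]


def is_eligible(study: ScreenedStudy) -> bool:
    """A study is included only if it fails none of the criteria."""
    return not failed_criteria(study)


review_article = ScreenedStudy(True, True, False, True, True, True, True)
print(failed_criteria(review_article))  # [2]
```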

Four reviewers (LE, VS, KB, AL) were split into two pairs, who independently screened the 179 full texts from the Roydhouse et al. (2022) study that included people with Alzheimer’s disease or dementia. If a discrepancy in inclusion decisions occurred, articles were discussed among all reviewers until consensus was reached. Studies identified from the database search were imported into EndNote [ 29 ]; duplicates were removed in EndNote, and the remaining records were uploaded to Rayyan [ 30 ]. Each abstract was reviewed by two independent reviewers (any two of the four). Disagreements regarding study inclusion were discussed among all reviewers until consensus was reached. Full-text screening of each eligible article was also completed by two independent reviewers (any two of the four), again with a discussion among all reviewers in case of disagreement.
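The dual independent screening step can be sketched as a comparison of two reviewers' decisions. In the review itself, screening was done in Rayyan; the function below and its record IDs are hypothetical. Records that both reviewers include move forward, and any record with conflicting decisions is flagged for discussion among all reviewers.

```python
def reconcile(decisions_a: dict[str, bool], decisions_b: dict[str, bool]):
    """Compare two reviewers' include (True) / exclude (False) decisions by record ID.

    Returns (records both reviewers included, records needing a consensus discussion).
    Only records screened by both reviewers are compared.
    """
    shared = decisions_a.keys() & decisions_b.keys()
    agreed_include = sorted(rid for rid in shared if decisions_a[rid] and decisions_b[rid])
    to_discuss = sorted(rid for rid in shared if decisions_a[rid] != decisions_b[rid])
    return agreed_include, to_discuss


reviewer_1 = {"rec1": True, "rec2": False, "rec3": True, "rec4": False}
reviewer_2 = {"rec1": True, "rec2": True, "rec3": False, "rec4": False}
print(reconcile(reviewer_1, reviewer_2))  # (['rec1'], ['rec2', 'rec3'])
```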

Data extraction

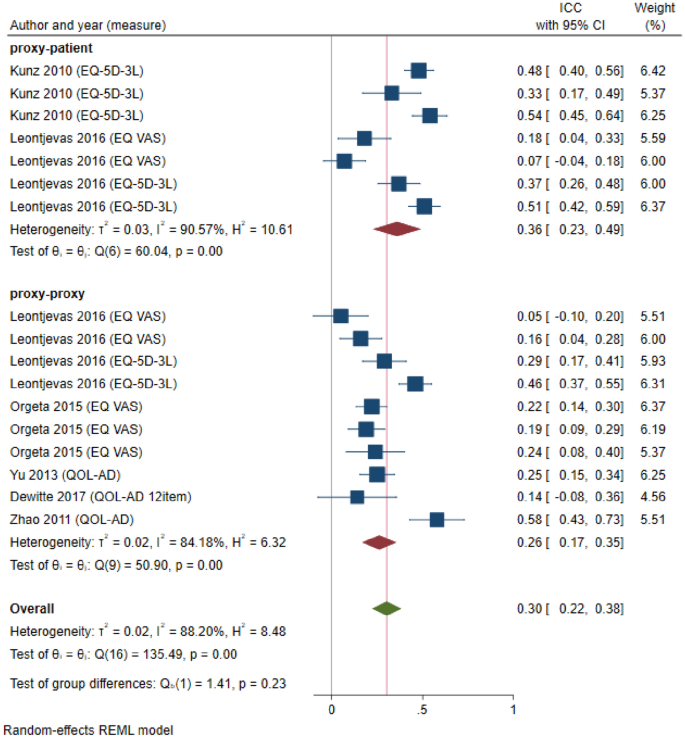

A data extraction template was created in Microsoft Excel. The following information was extracted if available: country, study design, study sample, study setting, dementia type, disease severity, Mini-Mental State Examination (MMSE) score details, proxy type, perspective, living arrangements, QoL assessment measure/instrument, self-reported scores (mean, SD), proxy-reported scores (mean, SD), and agreement statistics. If a study reported the mean (SD) for the total score as well as for specific QoL domains of the measure, we extracted both. If studies reported multiple scores across different time points or subgroups, we extracted all scores. For interventional studies, scores from both the intervention group and the control group were recorded. In determining the proxy perspective, we relied on the authors’ description in the article. If the perspective was not explicitly stated, we adopted the perspective of the instrument developers; where more than one perspective was possible (e.g., in the case of the EQ-5D measures) and the perspective was not explicitly stated, it was categorized as ‘undefined.’ For agreement, we extracted the Intraclass Correlation Coefficient (ICC), a reliability index that reflects both the degree of correlation and the agreement between measurements of continuous variables. There are different forms of ICC based on the model (1-way random effects, 2-way random effects, or 2-way fixed effects), the type (single rater/measurement or the mean of k raters/measurements), and the definition of relationship [ 31 ]. This level of detail was not extracted because the original studies provided insufficient information. ICC values range between 0 and 1 and are interpreted as indicating poor (less than 0.5), moderate (0.5–0.75), good (0.75–0.9), or excellent (greater than 0.9) reliability between raters [ 31 ].
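The ICC bands quoted above translate directly into a lookup. The sketch below is an illustration and is not code from the review. The handling of values exactly on a boundary is an assumption: 0.5 and 0.75 go to the higher band, while 0.9 stays "good" because "excellent" is defined as greater than 0.9.

```python
def interpret_icc(icc: float) -> str:
    """Label an ICC with the bands cited in the text.

    poor: < 0.5; moderate: 0.5 to < 0.75; good: 0.75 to 0.9; excellent: > 0.9.
    (Boundary assignment is an assumption of this sketch.)
    """
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.9:
        return "good"
    return "excellent"


print(interpret_icc(0.62))  # moderate
```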

Data synthesis and analysis

Characteristics of studies were summarized descriptively. Self-reported and proxy-reported means and SDs were extracted from the full texts, and the mean difference was calculated (or extracted if available) for each pair. Where studies reported median values instead of means, these were converted using the approach outlined by Wan et al. (2014) [ 32 ]. Missing SDs (5 studies, 20 observations) were derived from reported standard errors or confidence intervals following the Cochrane guidelines [ 33 ]. Missing SDs (6 studies, 29 observations) in studies that presented only the mean value without any additional summary statistics were imputed using the prognostic method [ 34 ]. That is, we predicted each missing SD as the average SD of the observed studies with full information for the respective measure and source (self-report versus proxy-report).
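The SD recovery steps can be sketched in Python under stated assumptions. The SE and CI conversions follow the standard Cochrane Handbook formulas. The CI version uses the large-sample divisor 3.92, which the Handbook replaces with a t-based value for small samples. For medians, only the quartile-based scenario of Wan et al. (2014) is shown. The text does not say which of their scenarios applied, and Wan et al. also give formulas for the minimum/maximum case. The last function mirrors the description of the prognostic method above. All function and field names are hypothetical.

```python
from collections import defaultdict
from math import sqrt
from statistics import NormalDist, mean


def sd_from_se(se, n):
    """SD of a group mean from its standard error: SD = SE * sqrt(n)."""
    return se * sqrt(n)


def sd_from_ci(lower, upper, n):
    """SD from a 95% CI of a mean, large-sample version: sqrt(n) * (upper - lower) / 3.92."""
    return sqrt(n) * (upper - lower) / 3.92


def mean_sd_from_quartiles(q1, median, q3, n):
    """Wan et al. (2014) estimates from the median, quartiles, and sample size."""
    est_mean = (q1 + median + q3) / 3
    eta = 2 * NormalDist().inv_cdf((0.75 * n - 0.125) / (n + 0.25))
    return est_mean, (q3 - q1) / eta


def impute_missing_sds(rows):
    """Fill each missing SD with the average observed SD for the same
    (measure, source) pair; raises StatisticsError if none was observed."""
    observed = defaultdict(list)
    for row in rows:
        if row["sd"] is not None:
            observed[(row["measure"], row["source"])].append(row["sd"])
    filled = []
    for row in rows:
        if row["sd"] is None:
            row = {**row, "sd": mean(observed[(row["measure"], row["source"])])}
        filled.append(row)
    return filled


rows = [
    {"measure": "QOL-AD", "source": "self", "sd": 4.0},
    {"measure": "QOL-AD", "source": "self", "sd": 6.0},
    {"measure": "QOL-AD", "source": "self", "sd": None},
]
print(impute_missing_sds(rows)[2]["sd"])  # 5.0
```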

A meta-analysis was performed in Stata 17.1 (StataCorp LLC, College Station, TX) to synthesize mean differences between self- and proxy-reported scores across different proxy perspectives. First, the pooled raw mean differences were calculated for each QoL measure separately, given differences in scales between measures. Second, we calculated the pooled standardized mean difference (SMD) for all studies, stratified by proxy type (family carers, formal carers, mixed), dementia severity (mild, moderate, severe), and living arrangement (residential/institutional care, mixed). The SMD accounts for the use of different measurement scales; effect sizes were estimated using Cohen’s d. Random-effects models based on the restricted maximum-likelihood (REML) estimator were used to allow for unexplained between-study variability. The percentage of variability attributed to heterogeneity between studies was assessed using the I² statistic. An I² of 0–40% represents possibly unimportant heterogeneity, 30–60% moderate heterogeneity, 50–90% substantial heterogeneity, and 75–100% considerable heterogeneity [ 35 ]. The chi-squared (χ²) test provided evidence of heterogeneity, with a p-value of 0.1 used as the significance level. For studies that reported agreement statistics based on the ICC, we also produced a forest plot stratified by study perspective. We also calculated the Q statistic (Cochran’s test of homogeneity), which assesses whether observed differences in results are compatible with chance alone.
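The following stdlib-only Python sketch shows the moving parts of the synthesis step. It computes Cohen's d with a pooled SD and the usual large-sample variance. It then pools effects with a random-effects model whose between-study variance τ² is estimated by REML through a fixed-point iteration, and reports Cochran's Q and I². This is not the authors' Stata code, and it rests on several assumptions:

- Self- and proxy-ratings are treated as independent groups, although the dyads are in fact paired.
- I² is the Q-based Higgins–Thompson version, which can differ from the τ²-based I² that some software reports.
- The p-value for Q, which needs a chi-squared distribution function, is omitted.

Function names are hypothetical.

```python
from math import sqrt


def cohens_d(mean_self, sd_self, n_self, mean_proxy, sd_proxy, n_proxy):
    """Cohen's d (self minus proxy) with a pooled SD, and its approximate
    sampling variance, treating the two sets of ratings as independent groups."""
    sd_pooled = sqrt(((n_self - 1) * sd_self**2 + (n_proxy - 1) * sd_proxy**2)
                     / (n_self + n_proxy - 2))
    d = (mean_self - mean_proxy) / sd_pooled
    var_d = (n_self + n_proxy) / (n_self * n_proxy) + d**2 / (2 * (n_self + n_proxy))
    return d, var_d


def pooled_mean(effects, weights):
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)


def random_effects_reml(effects, variances, tol=1e-10, max_iter=1000):
    """Random-effects pooled estimate with tau^2 from REML (fixed-point iteration),
    plus Cochran's Q and the Q-based I^2 in percent."""
    k = len(effects)
    # Cochran's Q uses inverse-variance (fixed-effect) weights.
    w_fixed = [1 / v for v in variances]
    mu_fixed = pooled_mean(effects, w_fixed)
    q = sum(w * (y - mu_fixed) ** 2 for w, y in zip(w_fixed, effects))
    i2 = 100 * max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0

    # REML fixed point: tau2 = sum(w^2 * ((y - mu)^2 - v)) / sum(w^2) + 1 / sum(w),
    # truncated at zero, with w = 1 / (v + tau2).
    tau2 = 0.0
    for _ in range(max_iter):
        w = [1 / (v + tau2) for v in variances]
        mu = pooled_mean(effects, w)
        numerator = sum(wi**2 * ((y - mu) ** 2 - v)
                        for wi, y, v in zip(w, effects, variances))
        new_tau2 = max(0.0, numerator / sum(wi**2 for wi in w) + 1 / sum(w))
        converged = abs(new_tau2 - tau2) < tol
        tau2 = new_tau2
        if converged:
            break

    w = [1 / (v + tau2) for v in variances]
    mu = pooled_mean(effects, w)
    se = sqrt(1 / sum(w))
    return {"estimate": mu, "ci95": (mu - 1.96 * se, mu + 1.96 * se),
            "tau2": tau2, "Q": q, "I2": i2}
```

As a sanity check, when all within-study variances are equal, the REML τ² reduces to the sample variance of the effects minus that common variance, truncated at zero.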

Risk of bias and quality assessment

The quality of studies was assessed using a checklist for assessing the quality of quantitative studies developed by Kmet et al. (2004) [ 36 ]. The checklist consists of 14 items, each scored as ‘2’ (yes, item sufficiently addressed), ‘1’ (item partially addressed), ‘0’ (no, not addressed), or ‘not applicable.’ A summary score was calculated for each study by summing the scores obtained across relevant items and dividing by the total possible score, with items that were not applicable excluded from the total possible score. Quality assessment was undertaken by one reviewer, with 25% of the papers assessed independently by a second reviewer.
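The Kmet et al. summary score is a simple ratio: total points divided by the maximum possible, which is 2 per applicable item. The minimal sketch below encodes "not applicable" as `None`; that encoding and the function name are assumptions of this illustration.

```python
def kmet_summary_score(item_scores):
    """Summary score = sum of applicable item scores / (2 * number of applicable items).

    Each of the 14 items is scored 2 (yes), 1 (partial), 0 (no), or None (not applicable).
    """
    if len(item_scores) != 14:
        raise ValueError("the quantitative checklist has 14 items")
    applicable = [s for s in item_scores if s is not None]
    if any(s not in (0, 1, 2) for s in applicable):
        raise ValueError("items are scored 0, 1, 2, or None (not applicable)")
    if not applicable:
        raise ValueError("at least one item must be applicable")
    return sum(applicable) / (2 * len(applicable))


score = kmet_summary_score([2] * 12 + [1, None])
print(f"{score:.0%}")  # 96%
```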

The PRISMA diagram in Fig. 1 shows that after the abstract and full-text screening, 38 studies from the database search and 58 studies from the Roydhouse et al. (2022) review were included in this review—a total of 96 studies. A list of all studies included and their characteristics can be found in Appendix 3.

PRISMA 2020 flow diagram

General study characteristics

The 96 articles included in the review were published between 1999 and 2023 from across the globe; the largest share of studies (36%) was conducted in Europe. People with dementia in these studies were living in the community (67%), residential/institutional care (15%), or mixed dwelling settings (18%). Most proxy-reports were provided by family carers (85%), and only 8 studies (8%) included formal carers. The mean MMSE score for dementia and Alzheimer’s participants was 18.77 (SD = 4.34; N = 85 studies), which corresponds to moderate cognitive impairment [ 37 ]. Further characteristics of studies included are provided in Table 1 . The quality of studies included (see Appendix 4) was generally very good, scoring on average 91% (SD: 9.1) with scores ranging from 50 to 100%.

Quality of life measure and proxy perspective used

A total of 635 observations were recorded from the 96 studies. The majority of studies and observations extracted assumed the proxy-proxy perspective (77 studies, 501 observations), followed by the proxy-patient perspective (18 studies, 62 observations), with 18 studies (72 observations) not clearly defining the perspective. Table 2 provides a detailed overview of the number of studies and observations across the respective QoL measures and proxy perspectives. Two studies (14 observations) adopted both perspectives within the same study design: one using the QOL-AD measure [ 5 ] and the second exploring the EQ-5D-3L and EQ VAS [ 38 ]. Overall, the QOL-AD was the most often used QoL measure, followed by the EQ-5D and DEMQOL. Mean scores for specific QoL domains were available for the DEMQOL and QOL-AD. However, only the QOL-AD provided domain-specific mean scores from both proxy perspectives.
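The percentages in the abstract can be reproduced from these observation counts with a quick Python check. Study counts are not used here. They sum to more than 96 because a study can contribute observations to more than one perspective category.

```python
# Observation counts by proxy perspective, as reported in the text.
observations = {"proxy-proxy": 501, "proxy-patient": 62, "undefined": 72}

total = sum(observations.values())
shares = {perspective: round(100 * count / total)
          for perspective, count in observations.items()}

print(total)   # 635
print(shares)  # {'proxy-proxy': 79, 'proxy-patient': 10, 'undefined': 11}
```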

Mean scores and mean differences by proxy perspective and QoL measure

The raw mean scores for self-reported and proxy-reported QoL are provided in Supplementary file 2. The pooled raw mean difference by proxy perspective and measure is shown in Table 3 . Regardless of the perspective adopted and the QoL instrument used, self-reported scores were higher (indicating better QoL) than proxy-reported scores, except for the DEMQOL, where proxies reported better QoL than people with dementia themselves. Most instruments were explored from one perspective, except for the EQ-5D-3L, EQ VAS, and QOL-AD, for which mean differences were available for both perspectives. For these three measures, mean differences were smaller when adopting the proxy-patient perspective than the proxy-proxy perspective, although mean scores for the QOL-AD were slightly lower from the proxy-proxy perspective. I² statistics indicate considerable heterogeneity (I² > 75%) between studies. Mean differences by specific QoL domains are provided in Appendix 5, but only for the QOL-AD measure, which was explored from both perspectives. Generally, mean differences appeared to be smaller for the proxy-proxy perspective than the proxy-patient perspective across all domains, except for ‘physical health’ and ‘doing chores around the house.’ However, results need to be interpreted carefully, as proxy-patient perspective scores were derived from only one study.

Standardized mean differences by proxy perspective, stratified by proxy type, dementia severity, and living arrangement

Table 4 provides the SMD by proxy perspective, which adjusts for the different QoL measurement scales. Findings suggest that adopting the proxy-patient perspective results in a lower SMD (SMD: 0.250; 95% CI 0.116; 0.384) than the proxy-proxy perspective (SMD: 0.532; 95% CI 0.456; 0.609). The largest SMD was recorded for studies that did not define the study perspective (SMD: 0.594; 95% CI 0.469; 0.718). A comparison by proxy type (formal carers, family carers, and mixed proxies) revealed mixed results. When adopting the proxy-proxy perspective, the largest SMD was found for family carers (SMD: 0.556; 95% CI 0.465; 0.646), compared with formal carers (SMD: 0.446; 95% CI 0.305; 0.586) and mixed proxies (SMD: 0.335; 95% CI 0.211; 0.459). However, the opposite relationship was found when the proxy-patient perspective was used: the smallest SMD was found for family carers compared with formal carers and mixed proxies. The SMD increased with dementia severity, suggesting greater disagreement at more severe stages. However, whereas self-reported scores were greater (i.e., better QoL) than proxy-reported scores across all dementia severity levels under the proxy-proxy perspective, the opposite was found under the proxy-patient perspective, where proxies reported better QoL than people with dementia themselves, except for the severe subgroup. No clear trend was observed across living settings, although the SMD appeared to be smaller for people with dementia living in residential care than for those living in the community.
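Because the instruments use different scales, each study's difference is divided by a pooled standard deviation before pooling. This section does not say which SMD estimator was used, so the sketch below assumes Hedges' g with the usual small-sample correction and its approximate variance, again treating the two sets of ratings as independent groups. The function name and inputs are hypothetical; by the convention used here, a positive value means self-rated QoL exceeds proxy-rated QoL.

```python
import math

def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Hedges' g for group 1 minus group 2, with its approximate variance.

    Treats the two groups as independent (an assumption for paired ratings).
    """
    # Pooled SD across both groups
    sd_pooled = math.sqrt(((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2))
    d = (mean_1 - mean_2) / sd_pooled              # Cohen's d
    j = 1 - 3 / (4 * (n_1 + n_2 - 2) - 1)          # small-sample correction factor
    var_d = (n_1 + n_2) / (n_1 * n_2) + d ** 2 / (2 * (n_1 + n_2))
    return j * d, j ** 2 * var_d

# Hypothetical self-report (group 1) vs. proxy-report (group 2) summaries
g, var_g = hedges_g(30.0, 5.0, 40, 27.0, 6.0, 40)
se_g = math.sqrt(var_g)
print(f"g = {g:.3f} (95% CI {g - 1.96 * se_g:.3f}; {g + 1.96 * se_g:.3f})")
```

Dividing by the pooled SD is what makes a difference on the QOL-AD comparable with one on the EQ VAS or DEMQOL.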

Direct proxy perspectives comparison studies

Two studies assessed both proxy perspectives within the same study design. Using the QOL-AD, Bosboom et al. (2012) found that proxy scores from the proxy-patient perspective (mean: 32.1; SD: 6.1) were closer to self-reported scores (mean: 34.7; SD: 5.3) than proxy scores from the proxy-proxy perspective (mean: 29.5; SD: 5.4) [ 5 ]. Leontjevas et al. (2016) reported similar findings using the EQ-5D-3L and the EQ VAS: the inter-rater gap between self-report (EQ-5D-3L: 0.609; EQ VAS: 65.37) and proxy-report was smaller when adopting the proxy-patient perspective (EQ-5D-3L: 0.555; EQ VAS: 65.15) than the proxy-proxy perspective (EQ-5D-3L: 0.492; EQ VAS: 64.42) [ 38 ].
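The direction of these two comparisons can be checked directly from the reported means. The short sketch below subtracts each proxy score from the corresponding self-report score; it uses only the values quoted above and ignores sampling variability, so it illustrates the size of the gaps rather than testing them.

```python
# Reported self- and proxy-rated scores from the two direct-comparison studies
studies = {
    "QOL-AD (Bosboom et al., 2012)": {"self": 34.7, "proxy_patient": 32.1, "proxy_proxy": 29.5},
    "EQ-5D-3L (Leontjevas et al., 2016)": {"self": 0.609, "proxy_patient": 0.555, "proxy_proxy": 0.492},
    "EQ VAS (Leontjevas et al., 2016)": {"self": 65.37, "proxy_patient": 65.15, "proxy_proxy": 64.42},
}

gaps = {}
for name, s in studies.items():
    # Self-report minus proxy-report: positive values mean proxies rated QoL lower
    gap_patient = s["self"] - s["proxy_patient"]
    gap_proxy = s["self"] - s["proxy_proxy"]
    gaps[name] = (round(gap_patient, 3), round(gap_proxy, 3))
    print(f"{name}: proxy-patient gap = {gap_patient:.3f}, proxy-proxy gap = {gap_proxy:.3f}")
```

In all three cases the proxy-patient gap is the smaller one, roughly half or less of the proxy-proxy gap.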

Inter-rater agreement (ICC) statistics

Six studies reported agreement statistics based on the ICC, from which we extracted 17 observations that were included in the meta-analysis. Figure 2 shows the study-specific and overall ICC estimates by study perspective. Heterogeneity between studies was high (I² = 88.20%; Q = 135.49, p < 0.001). While the overall ICC across the 17 observations was 0.30 (95% CI 0.22; 0.38), indicating low agreement, agreement was slightly better when adopting the proxy-patient perspective (ICC: 0.36, 95% CI 0.23; 0.49) than the proxy-proxy perspective (ICC: 0.26, 95% CI 0.17; 0.35).

Figure 2. Forest plot depicting study-specific and overall ICC estimates by study perspective
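I² expresses the share of variation across estimates that reflects between-study heterogeneity rather than chance. Under the Higgins-Thompson definition, I² = (Q - df)/Q with df = k - 1, truncated at zero. Plugging in the reported Q and k = 17 gives about 88.19%, close to the reported 88.20%; small differences of this kind can arise because some meta-analysis software derives I² from the estimated between-study variance rather than directly from Q. The function below is an illustrative sketch, not the authors' code.

```python
def i_squared(q, k):
    """Higgins-Thompson I^2 (in %) from Cochran's Q over k estimates (df = k - 1)."""
    if q <= 0:
        return 0.0
    df = k - 1
    # Share of variation beyond what chance alone (df) would produce, truncated at 0
    return max(0.0, (q - df) / q) * 100.0

# Values reported for the ICC meta-analysis: Q = 135.49 across 17 observations
print(f"I^2 = {i_squared(135.49, 17):.2f}%")
```

Values above 75%, as here and in the mean-difference analyses, are conventionally read as considerable heterogeneity.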

While previous studies highlighted a disagreement between self-rated and proxy-rated QoL in people living with dementia, this review is the first to assess the role of different proxy perspectives in explaining the inter-rater gap. Our findings align with the baseline hypothesis and indicate that QoL scores reported from the proxy-patient perspective are closer to self-reported QoL scores than those reported from the proxy-proxy perspective, suggesting that the proxy perspective does affect the inter-rater gap and should not be ignored. This finding was consistent across the different analyses conducted in this review (i.e., pooled raw mean difference, SMD, and ICC analysis) and confirms the results of two previous primary studies that adopted both proxy perspectives within the same study design [ 5 , 38 ]. Our findings emphasize the need for future studies to report transparently which proxy perspective was used, as it can affect results and their interpretation. This was also noted by the recent ISOQOL Proxy Task Force, which developed a checklist of considerations for using proxy-reporting [ 39 ]. While consistency in proxy-reports is desirable, each proxy perspective has a legitimate place in future research, depending on the study objectives. The two perspectives offer distinct insights: one captures the perspective of the person with dementia, and the other reflects the viewpoint of the proxy. Therefore, when self-report is unattainable due to advanced disease severity and the person's own view of their QoL is sought, the proxy-patient perspective is recommended. Conversely, if future research aims to capture the viewpoints of proxies, the proxy-proxy perspective is advisable. However, proxies may deviate from the instructed perspective, so future qualitative research is needed to examine how closely proxies adhere to it. Additionally, others have argued that proxy-reports should not substitute for self-reports and should serve only as supplementary sources alongside patient self-reports whenever possible [ 9 ].

This review considered various QoL instruments, but most instruments were explored from only one proxy perspective, limiting detailed analyses. QoL instruments differ in their scope (generic versus disease-specific) and in their coverage of QoL domains. The QOL-AD, an Alzheimer's disease-specific measure, was the most commonly used. Surprisingly, for this measure, the mean differences between self-reported and proxy-reported scores were smaller using the proxy-proxy perspective, contrary to the pattern observed with all other instruments. This may reflect the small number of studies reporting QOL-AD proxy scores from the proxy-patient perspective; notably, Bosboom et al. (2012), who compared both perspectives within one study, found the opposite [ 5 ]. Previous research has also suggested that the inter-rater gap depends on the QoL domain and that the risk of bias is greater for more ‘subjective’ (less observable) domains, such as emotions, feelings, and moods, than for observable, objective areas such as physical domains [ 8 , 40 ]. However, this review lacks sufficient observations to draw definitive conclusions about QoL dimensions and their impact on self-proxy differences, emphasizing the need for future research in this area.

With regard to proxy type, there is an observable trend suggesting a wider inter-rater gap when family proxies rather than formal proxies are used with the proxy-proxy perspective. This difference might be attributed to the use of distinct anchoring points: family proxies tend to assess the individual's QoL in relation to their past self before having dementia, while formal caregivers may draw comparisons with other individuals with dementia under their care [ 41 ]. However, the opposite was found when the proxy-patient perspective was used: family proxies' scores seemed to align more closely with self-reported scores, resulting in lower SMDs. This suggests that family proxies might be better able than formal proxies to empathize with the perspective of the person with dementia. Nonetheless, these findings should be interpreted cautiously, given the relatively small number of observations for formal caregiver reports. Additionally, other factors such as emotional connection, caregiver burden, and caregiver QoL, which were not explored in this review, may also affect reports by family proxies [ 14 , 16 ].

Our review found that the SMD between proxy-report and self-report increased with greater dementia severity, in contrast to a previous study showing that cognitive impairment was not the primary factor accounting for differences in QoL assessments between family proxies and the person with dementia [ 15 ]. However, studies used different interpretations and classifications to define mild, moderate, and severe dementia, which should be borne in mind. Most studies used the MMSE to define dementia severity levels. Given the MMSE’s role as a standard measure of cognitive function, the study findings are considered generalizable and clinically relevant for people with dementia across different severity levels. When examining the role of the proxy perspective by level of severity, we found that, whereas self-reported scores were greater than proxy-reported scores across all severity levels under the proxy-proxy perspective, the proxy-patient perspective yielded the opposite result: proxies reported better QoL than people with dementia themselves, except in the severe subgroup. It is possible that in the early stages of dementia, the person has a greater awareness of increasing deficits, coupled with denial and lack of acceptance, leading to a more critical view of their own QoL than proxies anticipate. However, further studies are warranted, given the small number of observations adopting the proxy-patient perspective in our review.

The heterogeneity among the included studies was high, supporting the use of random-effects meta-analysis. This is not surprising given the diverse designs of the included studies (e.g., RCTs, cross-sectional studies), differences in the population (e.g., people living in residential care versus community-dwelling people), mixed levels of dementia severity, and differences between instruments. While similar heterogeneity was observed in another review on a related topic [ 42 ], our presentation of findings stratified by proxy type, dementia severity, and living arrangement attempted to account for such differences across studies.
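This section does not name the between-study variance (τ²) estimator behind the random-effects model. As an illustration of how such a model absorbs heterogeneity, the sketch below assumes the DerSimonian-Laird estimator: it computes fixed-effect weights, Cochran's Q, and τ², then re-weights each study by 1/(vᵢ + τ²). The function name, effect sizes, and variances are hypothetical.

```python
import math

def dersimonian_laird(effects, variances):
    """Random-effects pooled estimate with the DerSimonian-Laird tau^2 estimator.

    Returns (pooled_effect, standard_error, tau_squared, cochran_q).
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("Need at least two estimates, each with a variance")
    # Fixed-effect (inverse-variance) weights and pooled estimate
    w = [1.0 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    # Method-of-moments estimate of between-study variance, truncated at zero
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Random-effects weights add tau^2 to each within-study variance
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return pooled, se, tau2, q

# Hypothetical study-level SMDs and their variances
effects = [0.2, 0.5, 0.8, 0.4]
variances = [0.01, 0.02, 0.015, 0.01]
pooled, se, tau2, q = dersimonian_laird(effects, variances)
print(f"pooled = {pooled:.3f} (95% CI {pooled - 1.96 * se:.3f}; {pooled + 1.96 * se:.3f}), tau^2 = {tau2:.4f}")
```

When Q exceeds its degrees of freedom, τ² becomes positive, the study weights become more equal, and the confidence interval widens relative to a fixed-effect analysis.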

Limitations and recommendations for future studies

Our review has some limitations. Firstly, proxy perspectives were categorized based on the authors' descriptions, but many papers did not explicitly state the perspective, so we had to make assumptions based on the perspective intended by the instrument developers. Some studies may also have modified the wording of the perspective without reporting it. Owing to limited resources, we did not contact the authors of the original studies to clarify the proxy perspective adopted. Among studies using the EQ-5D, which has two proxy versions (one for each perspective), some did not specify which proxy version was used, suggesting that self-report versions may have been administered to proxies. In such cases, the proxy perspective was categorized as undefined. Although we accounted for factors such as QoL measure, proxy type, setting, and dementia severity, we could not assess the impact of proxy characteristics (e.g., carer burden) or dementia type because the studies provided limited information. Limited information also prevented us from exploring the proxy perspective by QoL domain. Further, not all studies described the data collection process in full detail. For example, the proxy may have assisted the person with dementia with their self-report, which could have biased the estimates; future studies should consider blinding. Although we assessed the risk of bias of included studies, the checklist did not directly reflect the purpose of our study, which examined inter-rater agreement; no checklist for this purpose currently exists. Finally, because of resource constraints and a low rate of disagreement between the two assessors, quality appraisal by a second reviewer was conducted only for the first 25% of the studies. However, no index of agreement between reviewers was calculated for either full-text selection or risk of bias assessment.
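The limitations note that no agreement index between reviewers was calculated. Cohen's kappa is one common choice for two reviewers making categorical decisions such as include/exclude; the authors do not say which index they would have used, so this choice is an assumption. The sketch compares observed agreement with the agreement expected by chance from each reviewer's marginal proportions; the function name and the reviewer decisions are hypothetical.

```python
from collections import Counter

def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two reviewers rating the same items into categories."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("Both reviewers must rate the same non-empty set of items")
    n = len(ratings_a)
    # Proportion of items on which the two reviewers agree
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement from each reviewer's marginal category proportions
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if expected == 1.0:
        # Both reviewers used one identical category for every item;
        # kappa is undefined here, so treat it as perfect agreement.
        return 1.0
    return (observed - expected) / (1.0 - expected)

# Hypothetical full-text inclusion decisions for ten records
reviewer_1 = ["include", "include", "exclude", "exclude", "include",
              "exclude", "exclude", "include", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "include",
              "exclude", "exclude", "include", "exclude", "include"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"Cohen's kappa = {kappa:.3f}")
```

Here the reviewers agree on 8 of 10 records, but because about half of that agreement would be expected by chance, kappa is considerably lower than the raw 80%.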

This review demonstrates that the choice of proxy perspective affects the inter-rater gap: QoL scores from the proxy-patient perspective align more closely with self-reported scores than those from the proxy-proxy perspective. These findings contribute to the broader literature investigating factors influencing differences in QoL scores between proxies and individuals with dementia. While self-reported QoL is the gold standard, proxy-reports should be viewed as complements rather than substitutes. Both proxy perspectives offer unique insights, yet QoL assessment in people with dementia is complex. Differences between self- and proxy-reports can be influenced by various factors, and further research is needed before definitive results can inform care provision and policy.

Data availability

All data associated with the systematic literature review are available in the supplementary file.

Moyle, W., & Murfield, J. E. (2013). Health-related quality of life in older people with severe dementia: Challenges for measurement and management. Expert Review of Pharmacoeconomics & Outcomes Research, 13 (1), 109–122. https://doi.org/10.1586/erp.12.84

Sachdev, P. S., Blacker, D., Blazer, D. G., Ganguli, M., Jeste, D. V., Paulsen, J. S., & Petersen, R. C. (2014). Classifying neurocognitive disorders: The DSM-5 approach. Nature Reviews Neurology, 10 (11), 634–642. https://doi.org/10.1038/nrneurol.2014.181

The Lancet Regional Health – Europe. (2022). Challenges for addressing dementia. The Lancet Regional Health – Europe. https://doi.org/10.1016/j.lanepe.2022.100504

The WHOQOL Group. (1995). The World Health Organization quality of life assessment (WHOQOL): Position paper from the World Health Organization. Social Science & Medicine, 41 (10), 1403–1409. https://doi.org/10.1016/0277-9536(95)00112-K

Bosboom, P. R., Alfonso, H., Eaton, J., & Almeida, O. P. (2012). Quality of life in Alzheimer’s disease: Different factors associated with complementary ratings by patients and family carers. International Psychogeriatrics, 24 (5), 708–721. https://doi.org/10.1017/S1041610211002493

Scholzel-Dorenbos, C. J., Rikkert, M. G., Adang, E. M., & Krabbe, P. F. (2009). The challenges of accurate measurement of health-related quality of life in frail elderly people and dementia. Journal of the American Geriatrics Society, 57 (12), 2356–2357. https://doi.org/10.1111/j.1532-5415.2009.02586.x

Logsdon, R. G., Gibbons, L. E., McCurry, S. M., & Teri, L. (2002). Assessing quality of life in older adults with cognitive impairment. Psychosomatic Medicine, 64 (3), 510–519. https://doi.org/10.1097/00006842-200205000-00016

Hutchinson, C., Worley, A., Khadka, J., Milte, R., Cleland, J., & Ratcliffe, J. (2022). Do we agree or disagree? A systematic review of the application of preference-based instruments in self and proxy reporting of quality of life in older people. Social Science & Medicine, 305, 115046. https://doi.org/10.1016/j.socscimed.2022.115046

Smith, S. C., Hendriks, A. A. J., Cano, S. J., & Black, N. (2020). Proxy reporting of health-related quality of life for people with dementia: A psychometric solution. Health and Quality of Life Outcomes, 18 (1), 148. https://doi.org/10.1186/s12955-020-01396-y

Andrieu, S., Coley, N., Rolland, Y., Cantet, C., Arnaud, C., Guyonnet, S., Nourhashemi, F., Grand, A., Vellas, B., & group, P. (2016). Assessing Alzheimer’s disease patients’ quality of life: Discrepancies between patient and caregiver perspectives. Alzheimer’s & Dementia, 12 (4), 427–437. https://doi.org/10.1016/j.jalz.2015.09.003

Jönsson, L., Andreasen, N., Kilander, L., Soininen, H., Waldemar, G., Nygaard, H., Winblad, B., Jonhagen, M. E., Hallikainen, M., & Wimo, A. (2006). Patient- and proxy-reported utility in Alzheimer disease using the EuroQoL. Alzheimer Disease & Associated Disorders, 20 (1), 49–55. https://doi.org/10.1097/01.wad.0000201851.52707.c9

Zucchella, C., Bartolo, M., Bernini, S., Picascia, M., & Sinforiani, E. (2015). Quality of life in Alzheimer disease: A comparison of patients’ and caregivers’ points of view. Alzheimer Disease & Associated Disorders, 29 (1), 50–54. https://doi.org/10.1097/WAD.0000000000000050

Buckley, T., Fauth, E. B., Morrison, A., Tschanz, J., Rabins, P. V., Piercy, K. W., Norton, M., & Lyketsos, C. G. (2012). Predictors of quality of life ratings for persons with dementia simultaneously reported by patients and their caregivers: The Cache County (Utah) study. International Psychogeriatrics, 24 (7), 1094–1102. https://doi.org/10.1017/S1041610212000063

Schiffczyk, C., Romero, B., Jonas, C., Lahmeyer, C., Muller, F., & Riepe, M. W. (2010). Generic quality of life assessment in dementia patients: A prospective cohort study. BMC Neurology, 10, 48. https://doi.org/10.1186/1471-2377-10-48

Sousa, M. F., Santos, R. L., Arcoverde, C., Simoes, P., Belfort, T., Adler, I., Leal, C., & Dourado, M. C. (2013). Quality of life in dementia: The role of non-cognitive factors in the ratings of people with dementia and family caregivers. International Psychogeriatrics, 25 (7), 1097–1105. https://doi.org/10.1017/S1041610213000410

Arons, A. M., Krabbe, P. F., Scholzel-Dorenbos, C. J., van der Wilt, G. J., & Rikkert, M. G. (2013). Quality of life in dementia: A study on proxy bias. BMC Medical Research Methodology, 13, 110. https://doi.org/10.1186/1471-2288-13-110

Gomez-Gallego, M., Gomez-Garcia, J., & Ato-Lozano, E. (2015). Addressing the bias problem in the assessment of the quality of life of patients with dementia: Determinants of the accuracy and precision of the proxy ratings. The Journal of Nutrition, Health & Aging, 19 (3), 365–372. https://doi.org/10.1007/s12603-014-0564-7

Moon, H., Townsend, A. L., Dilworth-Anderson, P., & Whitlatch, C. J. (2016). Predictors of discrepancy between care recipients with mild-to-moderate dementia and their caregivers on perceptions of the care recipients’ quality of life. American Journal of Alzheimer’s Disease & Other Dementias, 31 (6), 508–515. https://doi.org/10.1177/1533317516653819

Crespo, M., Bernaldo de Quiros, M., Gomez, M. M., & Hornillos, C. (2012). Quality of life of nursing home residents with dementia: A comparison of perspectives of residents, family, and staff. The Gerontologist, 52 (1), 56–65. https://doi.org/10.1093/geront/gnr080

Griffiths, A. W., Smith, S. J., Martin, A., Meads, D., Kelley, R., & Surr, C. A. (2020). Exploring self-report and proxy-report quality-of-life measures for people living with dementia in care homes. Quality of Life Research, 29 (2), 463–472. https://doi.org/10.1007/s11136-019-02333-3

Pickard, A. S., & Knight, S. J. (2005). Proxy evaluation of health-related quality of life: A conceptual framework for understanding multiple proxy perspectives. Medical Care, 43 (5), 493–499. https://doi.org/10.1097/01.mlr.0000160419.27642.a8

Smith, S. C., Lamping, D. L., Banerjee, S., Harwood, R. H., Foley, B., Smith, P., Cook, J. C., Murray, J., Prince, M., Levin, E., Mann, A., & Knapp, M. (2007). Development of a new measure of health-related quality of life for people with dementia: DEMQOL. Psychological Medicine, 37 (5), 737–746. https://doi.org/10.1017/S0033291706009469

Brooks, R. (1996). EuroQol: The current state of play. Health Policy, 37 (1), 53–72. https://doi.org/10.1016/0168-8510(96)00822-6

Herdman, M., Gudex, C., Lloyd, A., Janssen, M., Kind, P., Parkin, D., Bonsel, G., & Badia, X. (2011). Development and preliminary testing of the new five-level version of EQ-5D (EQ-5D-5L). Quality of Life Research, 20 (10), 1727–1736. https://doi.org/10.1007/s11136-011-9903-x

Rand, S., Caiels, J., Collins, G., & Forder, J. (2017). Developing a proxy version of the adult social care outcome toolkit (ASCOT). Health and Quality of Life Outcomes, 15 (1), 108. https://doi.org/10.1186/s12955-017-0682-0

Engel, L., Bucholc, J., Mihalopoulos, C., Mulhern, B., Ratcliffe, J., Yates, M., & Hanna, L. (2020). A qualitative exploration of the content and face validity of preference-based measures within the context of dementia. Health and Quality of Life Outcomes, 18 (1), 178. https://doi.org/10.1186/s12955-020-01425-w

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hrobjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. British Medical Journal, 372, n71. https://doi.org/10.1136/bmj.n71

Roydhouse, J. K., Cohen, M. L., Eshoj, H. R., Corsini, N., Yucel, E., Rutherford, C., Wac, K., Berrocal, A., Lanzi, A., Nowinski, C., Roberts, N., Kassianos, A. P., Sebille, V., King, M. T., Mercieca-Bebber, R., ISOQOL Proxy Task Force, & the ISOQOL Board of Directors. (2022). The use of proxies and proxy-reported measures: A report of the International Society for Quality of Life Research (ISOQOL) Proxy Task Force. Quality of Life Research, 31 (2), 317–327. https://doi.org/10.1007/s11136-021-02937-8

The EndNote Team. (2013). EndNote (Version EndNote X9) [64 bit]. Philadelphia, PA: Clarivate.

Ouzzani, M., Hammady, H., Fedorowicz, Z., & Elmagarmid, A. (2016). Rayyan—A web and mobile app for systematic reviews. Systematic Reviews, 5 (1), 210. https://doi.org/10.1186/s13643-016-0384-4

Koo, T. K., & Li, M. Y. (2016). A guideline of selecting and reporting intraclass correlation coefficients for reliability research. Journal of Chiropractic Medicine, 15 (2), 155–163. https://doi.org/10.1016/j.jcm.2016.02.012

Wan, X., Wang, W., Liu, J., & Tong, T. (2014). Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Medical Research Methodology, 14, 135. https://doi.org/10.1186/1471-2288-14-135

Higgins, J. P. T., & Green, S. (Eds.). (2011). Cochrane handbook for systematic reviews of interventions (Version 5.1.0, updated March 2011). Retrieved 20 January 2023, from https://handbook-5-1.cochrane.org/chapter_7/7_7_3_2_obtaining_standard_deviations_from_standard_errors_and.htm

Ma, J., Liu, W., Hunter, A., & Zhang, W. (2008). Performing meta-analysis with incomplete statistical information in clinical trials. BMC Medical Research Methodology, 8, 56. https://doi.org/10.1186/1471-2288-8-56

Deeks, J. J., Higgins, J. P. T., Altman, D. G., & on behalf of the Cochrane Statistical Methods Group. (2023). Chapter 10: Analysing data and undertaking meta-analyses. In J. Higgins & J. Thomas (Eds.), Cochrane Handbook for Systematic Reviews of Interventions. Version 6.4.

Kmet, L. M., Cook, L. S., & Lee, R. C. (2004). Standard quality assessment criteria for evaluating primary research papers from a variety of fields: Health and technology assessment unit. Alberta Heritage Foundation for Medical Research.

Lewis, T. J., & Trempe, C. L. (2017). Diagnosis of Alzheimer’s: Standard-of-care. USA: Elsevier Science & Technology.

Leontjevas, R., Teerenstra, S., Smalbrugge, M., Koopmans, R. T., & Gerritsen, D. L. (2016). Quality of life assessments in nursing homes revealed a tendency of proxies to moderate patients’ self-reports. Journal of Clinical Epidemiology, 80, 123–133. https://doi.org/10.1016/j.jclinepi.2016.07.009

Lapin, B., Cohen, M. L., Corsini, N., Lanzi, A., Smith, S. C., Bennett, A. V., Mayo, N., Mercieca-Bebber, R., Mitchell, S. A., Rutherford, C., & Roydhouse, J. (2023). Development of consensus-based considerations for use of adult proxy reporting: An ISOQOL task force initiative. Journal of Patient-Reported Outcomes, 7 (1), 52. https://doi.org/10.1186/s41687-023-00588-6

Li, M., Harris, I., & Lu, Z. K. (2015). Differences in proxy-reported and patient-reported outcomes: Assessing health and functional status among Medicare beneficiaries. BMC Medical Research Methodology. https://doi.org/10.1186/s12874-015-0053-7

Robertson, S., Cooper, C., Hoe, J., Lord, K., Rapaport, P., Marston, L., Cousins, S., Lyketsos, C. G., & Livingston, G. (2020). Comparing proxy rated quality of life of people living with dementia in care homes. Psychological Medicine, 50 (1), 86–95. https://doi.org/10.1017/S0033291718003987

Khanna, D., Khadka, J., Mpundu-Kaambwa, C., Lay, K., Russo, R., Ratcliffe, J., & the Quality of Life in Kids: Key Evidence to Strengthen Decisions in Australia Project Team. (2022). Are we agreed? Self- versus proxy-reporting of paediatric health-related quality of life (HRQoL) using generic preference-based measures: A systematic review and meta-analysis. PharmacoEconomics, 40 (11), 1043–1067. https://doi.org/10.1007/s40273-022-01177-z

Open Access funding enabled and organized by CAUL and its Member Institutions. This study was conducted without financial support.

Author information

Authors and affiliations.

Monash University Health Economics Group, School of Public Health and Preventive Medicine, Monash University, Level 4, 553 St. Kilda Road, Melbourne, VIC, 3004, Australia

Lidia Engel & Valeriia Sokolova

School of Health and Social Development, Deakin University, Burwood, VIC, Australia

Ekaterina Bogatyreva & Anna Leuenberger

Contributions

LE contributed to the study conception and design. The original database search was performed by AL and later updated by VS. All authors were involved in the screening process, data extraction, and data analyses. Quality assessment was conducted by VS and LE. The first draft of the manuscript was written by LE and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Correspondence to Lidia Engel.

Ethics declarations

Competing interests.

Lidia Engel is a member of the EuroQol Group.

Ethical approval

Not applicable.

Consent to participate

Consent to publish, additional information, publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file1 (XLSX 67 KB)

Supplementary file2 (DOCX 234 KB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Engel, L., Sokolova, V., Bogatyreva, E. et al. Understanding the influence of different proxy perspectives in explaining the difference between self-rated and proxy-rated quality of life in people living with dementia: a systematic literature review and meta-analysis. Qual Life Res (2024). https://doi.org/10.1007/s11136-024-03660-w

Accepted: 27 March 2024

Published: 24 April 2024

DOI: https://doi.org/10.1007/s11136-024-03660-w

- Quality of Life

- Outcome measurement

An overview of methodological approaches in systematic reviews

Prabhakar Veginadu

1 Department of Rural Clinical Sciences, La Trobe Rural Health School, La Trobe University, Bendigo Victoria, Australia

Hanny Calache

2 Lincoln International Institute for Rural Health, University of Lincoln, Brayford Pool, Lincoln UK

Akshaya Pandian

3 Department of Orthodontics, Saveetha Dental College, Chennai Tamil Nadu, India

Mohd Masood

Associated Data

APPENDIX B: List of excluded studies with detailed reasons for exclusion

APPENDIX C: Quality assessment of included reviews using AMSTAR 2

The aim of this overview is to identify and collate evidence from existing published systematic review (SR) articles evaluating various methodological approaches used at each stage of an SR.

The search was conducted in five electronic databases from inception to November 2020 and updated in February 2022: MEDLINE, Embase, Web of Science Core Collection, Cochrane Database of Systematic Reviews, and APA PsycINFO. Title and abstract screening were performed in two stages by one reviewer, supported by a second reviewer. Full‐text screening, data extraction, and quality appraisal were performed by two reviewers independently. The quality of the included SRs was assessed using the AMSTAR 2 checklist.

The search retrieved 41,556 unique citations, of which 9 SRs were deemed eligible for inclusion in the final synthesis. The included SRs evaluated 24 unique methodological approaches used for defining the review scope and eligibility, literature searching, screening, data extraction, and quality appraisal in the SR process. Limited evidence supports the following: (a) searching multiple resources (electronic databases, handsearching, and reference lists) to identify relevant literature; (b) excluding non‐English, gray, and unpublished literature; and (c) using text‐mining approaches during title and abstract screening.

The overview identified limited SR‐level evidence on various methodological approaches currently employed during five of the seven fundamental steps in the SR process, as well as some methodological modifications currently used in expedited SRs. Overall, findings of this overview highlight the dearth of published SRs focused on SR methodologies and this warrants future work in this area.

1. INTRODUCTION

Evidence synthesis is a prerequisite for knowledge translation. 1 A well‐conducted systematic review (SR), often in conjunction with meta‐analyses (MA) when appropriate, is considered the “gold standard” of methods for synthesizing evidence related to a topic of interest. 2 The central strength of an SR is the transparency of the methods used to systematically search, appraise, and synthesize the available evidence. 3 Several guidelines, developed by various organizations, are available for the conduct of an SR; 4 , 5 , 6 , 7 among these, Cochrane is considered a pioneer in developing rigorous and highly structured methodology for the conduct of SRs. 8 The guidelines developed by these organizations outline seven fundamental steps required in the SR process: defining the scope of the review and eligibility criteria, literature searching and retrieval, selecting eligible studies, extracting relevant data, assessing risk of bias (RoB) in included studies, synthesizing results, and assessing certainty of evidence (CoE) and presenting findings. 4 , 5 , 6 , 7

The methodological rigor involved in an SR can require significant time and resources, which may not always be available. 9 As a result, modifications to the traditional SR process have proliferated, such as refining, shortening, bypassing, or omitting one or more steps. 10 , 11 Examples include limiting the number and type of databases searched; restricting the publication date, language, and types of studies included; and using a single reviewer, rather than two or more, to screen and select studies. 10 , 11 These methodological modifications are made to accommodate the needs and resource constraints of reviewers and stakeholders (e.g., organizations, policymakers, health care professionals, and other knowledge users). While such modifications save time and resources, they may introduce bias into the review process, reducing the usefulness of the review. 5

Substantial research has examined the various approaches used in standardized SR methodology and their impact on the validity of SR results. A number of published reviews examine the approaches or modifications corresponding to single 12 , 13 or multiple steps 14 of an SR. However, there is not yet a comprehensive summary of the SR‐level evidence for all seven fundamental steps. Such a holistic evidence synthesis would provide an empirical basis for confirming the validity of currently accepted practices in the conduct of SRs. Furthermore, a balance sometimes needs to be struck between available resources and the need to synthesize the evidence as well as possible within those constraints. This evidence base would also inform the choice of modifications to SR methods and indicate the potential impact of these modifications on SR results. An overview, in which the evidence is derived exclusively from SRs, is considered the approach of choice for summarizing existing evidence on a broad topic, directing the reader to evidence, or highlighting gaps in the evidence. 15 Therefore, this review used an overview approach to (a) identify and collate evidence from published SRs evaluating the methodological approaches employed in each of the seven fundamental steps of an SR and (b) highlight both the gaps in current research and potential areas for future research on the methods employed in SRs.

2. METHODS

An a priori protocol was developed for this overview but was not registered with the International Prospective Register of Systematic Reviews (PROSPERO), as the review was primarily methodological in nature and did not meet PROSPERO's eligibility criteria for registration. The protocol is available from the corresponding author upon reasonable request. This overview was conducted following the guidelines for overviews outlined in The Cochrane Handbook. 15 Reporting followed the Preferred Reporting Items for Systematic reviews and Meta‐analyses (PRISMA) statement. 3

2.1. Eligibility criteria

Only published SRs, with or without an associated MA, were included in this overview. We adopted the defining characteristics of SRs from The Cochrane Handbook. 5 Accordingly, a review was considered systematic if it (a) clearly stated its objectives and the eligibility criteria for study inclusion; (b) provided reproducible methodology; (c) included a systematic search to identify all eligible studies; (d) reported an assessment of the validity of the findings of included studies (e.g., RoB assessment of the included studies); and (e) systematically presented all the characteristics or findings of the included studies. 5 Reviews that did not meet all of these criteria were not considered SRs for this study and were excluded. MA‐only articles were included if they stated that the MA was based on an SR.
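The inclusion rule above is a conjunction: a review counts as systematic only when all five criteria hold, and an MA‐only article is admitted on a separate condition. The following is a minimal sketch of that decision logic, assuming each criterion has already been judged true or false by a reviewer; the `CandidateReview` record and its field names are hypothetical, invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class CandidateReview:
    """Hypothetical record of one screened review (field names are illustrative)."""
    states_objectives_and_criteria: bool  # (a) objectives and eligibility criteria stated
    reproducible_methods: bool            # (b) reproducible methodology
    systematic_search: bool               # (c) systematic search for all eligible studies
    assesses_validity: bool               # (d) e.g., RoB assessment of included studies
    systematic_presentation: bool         # (e) characteristics/findings presented systematically
    is_ma_only: bool = False
    ma_based_on_sr: bool = False


def is_systematic(r: CandidateReview) -> bool:
    # All five criteria must hold; failing any single one excludes the review.
    return all([
        r.states_objectives_and_criteria,
        r.reproducible_methods,
        r.systematic_search,
        r.assesses_validity,
        r.systematic_presentation,
    ])


def meets_design_criterion(r: CandidateReview) -> bool:
    # MA-only articles qualify when they state that the MA was based on an SR.
    if r.is_ma_only:
        return r.ma_based_on_sr
    return is_systematic(r)
```

Using `all()` makes the "every criterion, no exceptions" reading explicit; a review missing only an RoB assessment is excluded just as one missing a systematic search would be.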

SRs and/or MA of primary studies evaluating methodological approaches used in defining the review scope and study eligibility, literature search, study selection, data extraction, RoB assessment, data synthesis, and CoE assessment and reporting were included. The methodological approaches examined in these SRs and/or MA could also relate to substeps or elements of these steps; for example, limits on date or type of publication are elements of the literature search. Included SRs examined or compared various aspects of one or more methods and the associated factors, including but not limited to: precision or effectiveness; accuracy or reliability; impact on SR and/or MA results; reproducibility of SR steps or bias introduced; and time and/or resource efficiency. SRs were excluded if they assessed the methodological quality of SRs (e.g., adherence to reporting guidelines), evaluated techniques for building search strategies or the use of specific database filters (e.g., Boolean operators or search filters for randomized controlled trials), examined tools used for RoB or CoE assessment (e.g., ROBINS vs. the Cochrane RoB tool), or evaluated statistical techniques used in meta‐analyses. 14

2.2. Search

The search for published SRs was performed in the following databases, initially from inception to the third week of November 2020, and updated in the last week of February 2022: MEDLINE (via Ovid), Embase (via Ovid), Web of Science Core Collection, Cochrane Database of Systematic Reviews, and American Psychological Association (APA) PsycINFO. The search was restricted to English‐language publications. In line with the objectives of this study, study design filters within the databases were used, where available, to restrict the search to SRs and MA. The reference lists of included SRs were also searched for potentially relevant publications.

The search terms included keywords, truncations, and subject headings for the key concepts in the review question: SRs and/or MA, methods, and evaluation. Some of the terms were adopted from the search strategy of a previous review by Robson et al., which reviewed primary studies on methodological approaches used in the study selection, data extraction, and quality appraisal steps of the SR process. 14 A separate search strategy was developed for each database by combining the search terms with appropriate proximity and Boolean operators, along with the related subject headings, to identify SRs and/or MA. 16 , 17 A senior librarian was consulted in the design of the search terms and strategy. Appendix A presents the detailed search strategies for all five databases.
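The general pattern of combining concepts can be sketched in a few lines: synonyms for one concept are joined with OR, and the concept groups are then joined with AND. This is a minimal illustration only; the terms below are hypothetical placeholders, not the authors' strategy (which is in Appendix A), and database‐specific proximity operators and subject‐heading syntax are deliberately omitted because they differ between interfaces.

```python
def or_block(terms: list[str]) -> str:
    # Synonyms for a single concept are combined with OR and parenthesized.
    return "(" + " OR ".join(terms) + ")"


def build_query(concepts: dict[str, list[str]]) -> str:
    # Distinct concepts are intersected with AND.
    return " AND ".join(or_block(terms) for terms in concepts.values())


# Hypothetical terms for the three key concepts (SR/MA, methods, evaluation);
# "*" stands for truncation in many search interfaces.
concepts = {
    "review": ['"systematic review"', "meta-analy*"],
    "methods": ["method*", "approach*"],
    "evaluation": ["evaluat*", "compar*"],
}

print(build_query(concepts))
```

Running it prints `("systematic review" OR meta-analy*) AND (method* OR approach*) AND (evaluat* OR compar*)`. Keeping each concept in its own OR block makes it easy to adapt one concept at a time when translating the strategy between databases.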

2.3. Study selection and data extraction

Title and abstract screening was performed in three steps. First, one reviewer (PV) screened all titles and excluded obviously irrelevant citations, for example, articles on topics unrelated to SRs and non‐SR publications (such as randomized controlled trials, observational studies, and scoping reviews). Next, a random sample of 200 of the remaining titles and abstracts was screened against the predefined eligibility criteria by two reviewers (PV and MM) independently, in duplicate. Discrepancies were discussed and resolved by consensus. This step calibrated the two reviewers for consistency in applying the eligibility criteria during screening. Finally, all remaining titles and abstracts were reviewed by a single “calibrated” reviewer (PV) to identify potential full‐text records. Full‐text screening was performed independently by at least two authors (PV screened all records, with duplicate assessment by MM, HC, or MG), and discrepancies were resolved through discussion or by consulting a third reviewer.
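The calibration step amounts to drawing a random sample, collecting two independent sets of decisions, and isolating the disagreements for consensus discussion. A minimal sketch follows, assuming decisions are stored as a mapping from citation ID to a label; the function names, the fixed seed, and the label values are hypothetical choices for illustration, not details reported by the authors.

```python
import random


def calibration_sample(citation_ids, k=200, seed=2020):
    # A fixed seed (arbitrary here) makes the random sample reproducible.
    rng = random.Random(seed)
    return rng.sample(list(citation_ids), k)


def discrepancies(decisions_a, decisions_b):
    # Citations on which the two reviewers disagreed: these are the ones
    # discussed until consensus is reached.
    return sorted(cid for cid in decisions_a if decisions_a[cid] != decisions_b[cid])


# Hypothetical usage: 1,000 citations remain after the first title screen.
sample = calibration_sample(range(1, 1001))
reviewer_pv = {1: "include", 2: "exclude", 3: "exclude"}
reviewer_mm = {1: "include", 2: "include", 3: "exclude"}
print(discrepancies(reviewer_pv, reviewer_mm))
```

With the toy decisions shown, only citation 2 is flagged (`[2]`). Recording the seed means the same 200 citations can be regenerated if the calibration needs to be audited.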

Data related to review characteristics, results, key findings, and conclusions were extracted by at least two reviewers independently (PV performed data extraction for all the reviews and duplicate extraction was performed by AP, HC, or MG).

2.4. Quality assessment of included reviews

The quality of the included SRs was assessed using AMSTAR 2 (A MeaSurement Tool to Assess systematic Reviews), a 16‐item checklist addressing critical and noncritical domains. 18 For this study, the domain related to MA was reclassified from critical to noncritical, because SRs both with and without MA were included. The remaining six critical domains were applied according to the tool guidelines. 18 Two reviewers (PV and AP) independently answered each of the 16 checklist items with “yes,” “partial yes,” or “no.” Based on the ratings of the critical and noncritical domains, the overall quality of each review was rated as high, moderate, low, or critically low. 18 Disagreements were resolved through discussion or by consulting a third reviewer.
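AMSTAR 2 derives the overall rating from counts of critical flaws and noncritical weaknesses: high (no critical flaw, at most one noncritical weakness), moderate (no critical flaw, more than one noncritical weakness), low (one critical flaw), and critically low (more than one critical flaw). The sketch below encodes those rules under two stated assumptions: that item 11 (meta‐analysis methods) is the MA domain reclassified here, and that only a “no” counts as a flaw or weakness while “partial yes” is treated as satisfied. Neither assumption is confirmed by the text above, and AMSTAR 2 also allows reviewers to downgrade a review with many noncritical weaknesses, a judgment this sketch does not model.

```python
# Critical items listed in the AMSTAR 2 guidance are 2, 4, 7, 9, 11, 13, 15.
# Assumption: item 11 (MA methods) is the domain moved to noncritical here,
# leaving six critical domains.
CRITICAL_ITEMS = {2, 4, 7, 9, 13, 15}


def overall_rating(responses: dict[int, str]) -> str:
    """responses maps item number (1-16) to "yes", "partial yes", or "no"."""
    # Assumption: only "no" counts; "partial yes" is treated as satisfied.
    critical_flaws = sum(
        1 for item, r in responses.items() if r == "no" and item in CRITICAL_ITEMS
    )
    noncritical_weaknesses = sum(
        1 for item, r in responses.items() if r == "no" and item not in CRITICAL_ITEMS
    )
    if critical_flaws > 1:
        return "critically low"
    if critical_flaws == 1:
        return "low"
    if noncritical_weaknesses > 1:
        return "moderate"
    return "high"
```

For example, a review answering “yes” to every item except a “no” on item 7 is rated low, while the same review with a “no” only on item 11 stays high because that item is treated as noncritical under the reclassification.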

2.5. Data synthesis

To provide an understandable summary of existing evidence syntheses, the characteristics of the methods evaluated in the included SRs were examined, and key findings were categorized and presented according to the corresponding step in the SR process. The categories of key elements within each step were discussed and agreed upon by the authors. Results of the included reviews were tabulated and summarized descriptively, along with a discussion of any overlap in primary studies. 15 No quantitative analyses of the data were performed.

3. RESULTS

From 41,556 unique citations identified through literature search, 50 full‐text records were reviewed, and nine systematic reviews 14 , 19 , 20 , 21 , 22 , 23 , 24 , 25 , 26 were deemed eligible for inclusion. The flow of studies through the screening process is presented in Figure 1 . A list of excluded studies with reasons can be found in Appendix B .

Figure 1. Study selection flowchart

3.1. Characteristics of included reviews

Table 1 summarizes the characteristics of the included SRs. The majority of the included reviews (six of nine) were published after 2010. 14 , 22 , 23 , 24 , 25 , 26 Four of the nine included SRs were Cochrane reviews. 20 , 21 , 22 , 23 The number of databases searched ranged from 2 to 14; two reviews searched gray literature sources, 24 , 25 and seven reviews used a supplementary search strategy to identify relevant literature. 14 , 19 , 20 , 21 , 22 , 23 , 26 Three of the included SRs (all Cochrane reviews) included an integrated MA. 20 , 21 , 23

Table 1. Characteristics of included studies

SR = systematic review; MA = meta‐analysis; RCT = randomized controlled trial; CCT = controlled clinical trial; N/R = not reported.

The included SRs evaluated 24 unique methodological approaches (26 in total) used across five steps in the SR process; eight SRs evaluated 6 approaches, 19 , 20 , 21 , 22 , 23 , 24 , 25 , 26 while one review evaluated 18 approaches. 14 Exclusion of gray or unpublished literature 21 , 26 and blinding of reviewers for RoB assessment 14 , 23 were each evaluated in two reviews. The included SRs evaluated methods used in five different steps of the SR process: defining the scope of the review ( n = 3), literature search ( n = 3), study selection ( n = 2), data extraction ( n = 1), and RoB assessment ( n = 2) (Table 2 ).
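Two counting conventions are at work in this paragraph: the total counts every evaluation (so an approach assessed by two reviews counts twice), whereas the unique count keeps each distinct approach once; likewise, one review can cover several steps, which is why the step counts (3 + 3 + 2 + 1 + 2 = 11) exceed the nine included reviews. The sketch below illustrates both conventions on invented toy data; the review IDs and approach labels are hypothetical and do not reproduce the actual included SRs.

```python
from collections import Counter

# Hypothetical toy data: review ID -> (SR step, approach) pairs it evaluated.
evaluations = {
    "R1": [("literature search", "exclude gray literature")],
    "R2": [("literature search", "exclude gray literature")],
    "R3": [("study selection", "text mining"), ("RoB assessment", "reviewer blinding")],
    "R4": [("RoB assessment", "reviewer blinding")],
}

all_pairs = [pair for pairs in evaluations.values() for pair in pairs]
total_approaches = len(all_pairs)        # every evaluation, duplicates included
unique_approaches = len(set(all_pairs))  # each distinct approach counted once

# Each review is counted once per step it covers, so the step counts
# can sum to more than the number of reviews.
reviews_per_step = Counter(
    step for pairs in evaluations.values() for step in {s for s, _ in pairs}
)

print(total_approaches, unique_approaches, dict(reviews_per_step))
```

Here four reviews yield 5 evaluations of 3 unique approaches, and the step counts sum to 5, mirroring on a small scale how nine reviews produce 26 evaluations, 24 unique approaches, and step counts summing to 11.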

Table 2. Summary of findings from reviews evaluating systematic review methods

There was some overlap in the primary studies evaluated in the included SRs on the same topics: Schmucker et al. 26 and Hopewell et al. 21 ( n = 4), Hopewell et al. 20 and Crumley et al. 19 ( n = 30), and Robson et al. 14 and Morissette et al. 23 ( n = 4). There were no conflicting results between any of the identified SRs on the same topic.
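Overlap of this kind is the number of primary studies shared by a pair of reviews, which is a set intersection. A minimal sketch, assuming each review's included primary studies are available as a set of identifiers; the review names and study IDs below are hypothetical placeholders, not the studies in the included SRs.

```python
from itertools import combinations


def pairwise_overlap(primary_studies: dict[str, set[str]]) -> dict[tuple[str, str], int]:
    # Count the primary studies shared by every pair of reviews.
    return {
        (a, b): len(primary_studies[a] & primary_studies[b])
        for a, b in combinations(sorted(primary_studies), 2)
    }


# Hypothetical reviews and study identifiers.
reviews = {
    "Review A": {"s1", "s2", "s3", "s4", "s5"},
    "Review B": {"s2", "s3", "s6"},
    "Review C": {"s7"},
}

for pair, shared in pairwise_overlap(reviews).items():
    print(pair, shared)
```

In this toy example, Review A and Review B share two studies and Review C shares none. Identifying shared studies matters because counting the same primary study twice across reviews would overstate the amount of independent evidence.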

3.2. Methodological quality of included reviews

Overall, the quality of the included reviews was assessed as moderate at best (Table 2 ). The most common critical weakness in the reviews was failure to provide justification for excluding individual studies (four reviews). Detailed quality assessment is provided in Appendix C .

3.3. Evidence on systematic review methods

3.3.1. Methods for defining review scope and eligibility