- Survey Paper

- Open access

- Published: 31 March 2021

Review of deep learning: concepts, CNN architectures, challenges, applications, future directions

- Laith Alzubaidi ORCID: orcid.org/0000-0002-7296-5413 1 , 5 ,

- Jinglan Zhang 1 ,

- Amjad J. Humaidi 2 ,

- Ayad Al-Dujaili 3 ,

- Ye Duan 4 ,

- Omran Al-Shamma 5 ,

- J. Santamaría 6 ,

- Mohammed A. Fadhel 7 ,

- Muthana Al-Amidie 4 &

- Laith Farhan 8

Journal of Big Data volume 8, Article number: 53 (2021)

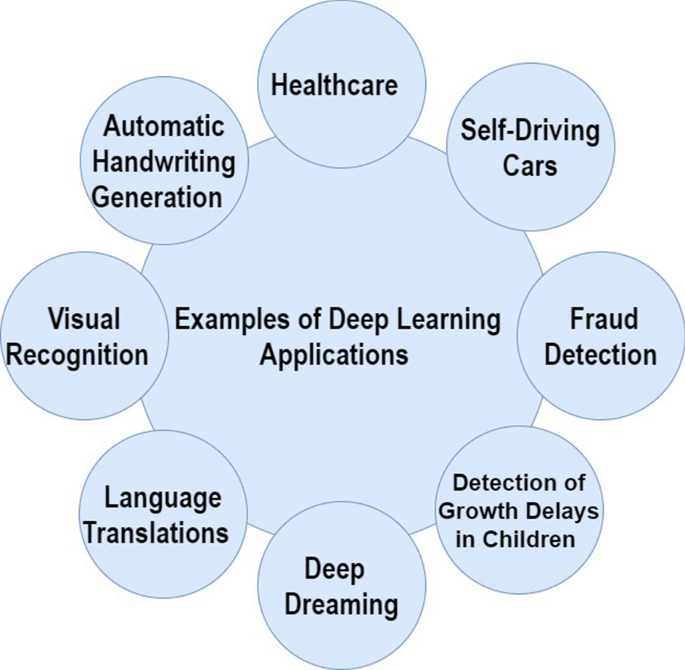

In the last few years, the deep learning (DL) computing paradigm has been deemed the gold standard in the machine learning (ML) community. Moreover, it has gradually become the most widely used computational approach in the field of ML, achieving outstanding results on several complex cognitive tasks, matching or even surpassing human performance. One of the benefits of DL is its ability to learn from massive amounts of data. The DL field has grown fast in the last few years and has been used extensively to address a wide range of traditional applications. More importantly, DL has outperformed well-known ML techniques in many domains, e.g., cybersecurity, natural language processing, bioinformatics, robotics and control, and medical information processing, among many others. Although several works have reviewed the state of the art in DL, each of them tackled only one aspect of DL, which leads to an overall lack of knowledge about it. Therefore, in this contribution, we propose a more holistic approach in order to provide a more suitable starting point from which to develop a full understanding of DL. Specifically, this review attempts to provide a more comprehensive survey of the most important aspects of DL, including those enhancements recently added to the field. In particular, this paper outlines the importance of DL and presents the types of DL techniques and networks. It then presents convolutional neural networks (CNNs), which are the most utilized DL network type, and describes the development of CNN architectures together with their main features, starting with the AlexNet network and closing with the High-Resolution network (HR.Net). Finally, we present the challenges and suggested solutions to help researchers understand the existing research gaps. This is followed by a list of the major DL applications. Computational tools including FPGA, GPU, and CPU are summarized along with a description of their influence on DL. The paper ends with the evolution matrix, benchmark datasets, and summary and conclusion.

Introduction

Recently, machine learning (ML) has become very widespread in research and has been incorporated in a variety of applications, including text mining, spam detection, video recommendation, image classification, and multimedia concept retrieval [ 1 , 2 , 3 , 4 , 5 , 6 ]. Among the different ML algorithms, deep learning (DL) is very commonly employed in these applications [ 7 , 8 , 9 ]. Another name for DL is representation learning (RL). The continuing appearance of novel studies in the fields of deep and distributed learning is due to both the unpredictable growth in the ability to obtain data and the amazing progress made in the hardware technologies, e.g. High Performance Computing (HPC) [ 10 ].

DL is derived from the conventional neural network but considerably outperforms its predecessors. Moreover, DL employs transformations and graph technologies simultaneously in order to build up multi-layer learning models. The most recently developed DL techniques have achieved outstanding performance across a variety of applications, including audio and speech processing, visual data processing, and natural language processing (NLP), among others [ 11 , 12 , 13 , 14 ].

Usually, the effectiveness of an ML algorithm is highly dependent on the integrity of the input-data representation. It has been shown that a suitable data representation provides improved performance when compared to a poor data representation. Thus, a significant research trend in ML for many years has been feature engineering, which has informed numerous research studies. This approach aims at constructing features from raw data. In addition, it is extremely field-specific and frequently requires sizable human effort. For instance, several types of features were introduced and compared in the computer vision context, such as histogram of oriented gradients (HOG) [ 15 ], scale-invariant feature transform (SIFT) [ 16 ], and bag of words (BoW) [ 17 ]. As soon as a novel feature is introduced and found to perform well, it becomes a new research direction that is pursued over multiple decades.

By contrast, DL algorithms perform feature extraction automatically. This encourages researchers to extract discriminative features using the smallest possible amount of human effort and field knowledge [ 18 ]. These algorithms have a multi-layer data representation architecture, in which the first layers extract the low-level features while the last layers extract the high-level features. Note that artificial intelligence (AI) originally inspired this type of architecture, which simulates the process that occurs in core sensorial regions within the human brain. Using different scenes, the human brain can automatically extract data representations. More specifically, the received scene information represents the input of this process, while the classified objects are its output. This process simulates the working methodology of the human brain and thus emphasizes the main benefit of DL.

In the field of ML, DL, due to its considerable success, is currently one of the most prominent research trends. In this paper, an overview of DL is presented that adopts various perspectives such as the main concepts, architectures, challenges, applications, computational tools and evolution matrix. The convolutional neural network (CNN) is one of the most popular and widely used DL networks [ 19 , 20 ]. Much of DL's current popularity is due to CNN. The main advantage of CNN compared to its predecessors is that it automatically detects the significant features without any human supervision, which has made it the most widely used. Therefore, we examine CNN in depth by presenting its main components. Furthermore, we elaborate in detail on the most common CNN architectures, starting with the AlexNet network and ending with the High-Resolution network (HR.Net).

Several DL review papers have been published in the last few years. However, each of them has addressed only one side, focusing on a single application or topic, such as a review of CNN architectures [ 21 ], DL for classification of plant diseases [ 22 ], DL for object detection [ 23 ], and DL applications in medical image analysis [ 24 ]. Although these reviews present good topics, they do not provide a full understanding of DL topics such as concepts, detailed research gaps, computational tools, and DL applications. It is first necessary to understand the aspects of DL, including concepts, challenges, and applications, before going deep into the applications. Achieving that requires extensive time and a large number of research papers. Therefore, we propose a deep review of DL to provide a more suitable starting point from which to develop a full understanding of DL from one review paper. The motivation behind our review was to cover the most important aspects of DL, including open challenges, applications, and computational tools. Furthermore, our review can be the first step towards other DL topics.

The main aim of this review is to present the most important aspects of DL to make it easy for researchers and students to have a clear image of DL from a single review paper. This review will further advance DL research by helping people discover more about recent developments in the field. Researchers will be able to decide on the most suitable direction of work in order to provide more accurate alternatives to the field. Our contributions are outlined as follows:

This is the first review that provides an almost complete survey of the most important aspects of deep learning. It helps researchers and students gain a good understanding from one paper.

We explain CNN, the most popular deep learning algorithm, in depth by describing its concepts, theory, and state-of-the-art architectures.

We review current challenges (limitations) of deep learning, including lack of training data, imbalanced data, interpretability of data, uncertainty scaling, catastrophic forgetting, model compression, overfitting, the vanishing gradient problem, the exploding gradient problem, and underspecification. We additionally discuss the proposed solutions tackling these issues.

We provide an exhaustive list of medical imaging applications of deep learning, categorized by task, starting with classification and ending with registration.

We discuss the computational approaches (CPU, GPU, FPGA) by comparing the influence of each tool on deep learning algorithms.

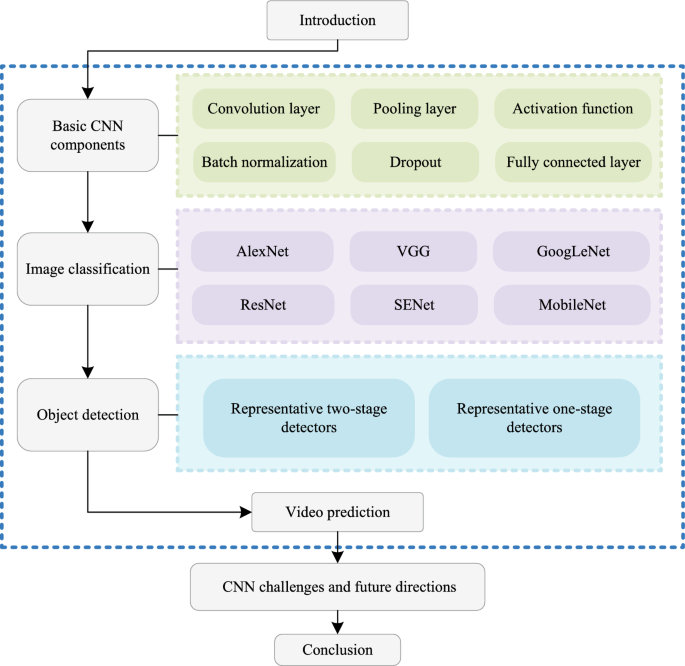

The rest of the paper is organized as follows: “ Survey methodology ” section describes the survey methodology. “ Background ” section presents the background. “ Classification of DL approaches ” section defines the classification of DL approaches. “ Types of DL networks ” section displays types of DL networks. “ CNN architectures ” section shows CNN architectures. “ Challenges (limitations) of deep learning and alternate solutions ” section details the challenges of DL and alternate solutions. “ Applications of deep learning ” section outlines the applications of DL. “ Computational approaches ” section explains the influence of computational approaches (CPU, GPU, FPGA) on DL. “ Evaluation metrics ” section presents the evaluation metrics. “ Frameworks and datasets ” section lists frameworks and datasets. “ Summary and conclusion ” section presents the summary and conclusion.

Survey methodology

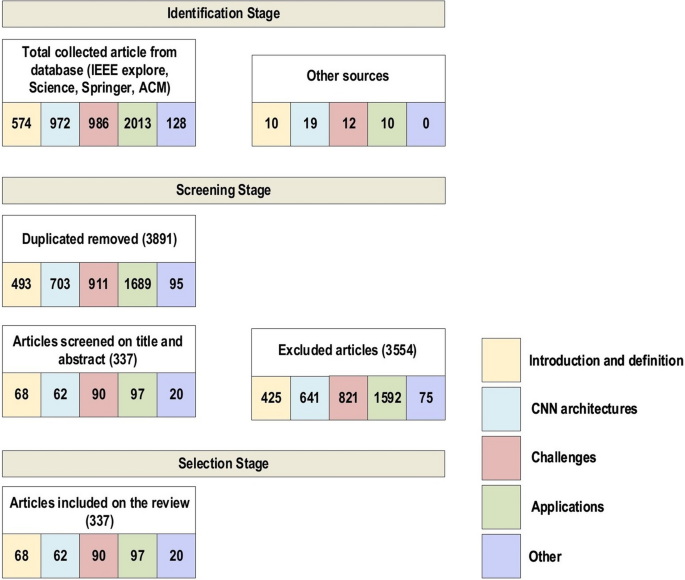

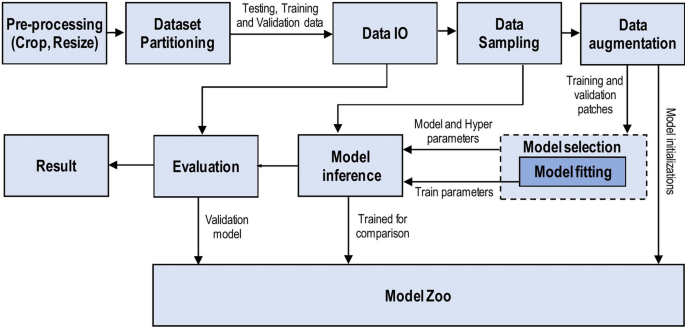

We have reviewed the significant research papers in the field published during 2010–2020, mainly from 2019 and 2020, with some papers from 2021. The main focus was on papers from the most reputed publishers such as IEEE, Elsevier, MDPI, Nature, ACM, and Springer. Some papers were selected from arXiv. We have reviewed more than 300 papers on various DL topics. There are 108 papers from the year 2020, 76 papers from the year 2019, and 48 papers from the year 2018. This indicates that this review focused on the latest publications in the field of DL. The selected papers were analyzed and reviewed to (1) list and define the DL approaches and network types, (2) list and explain CNN architectures, (3) present the challenges of DL and suggest the alternate solutions, (4) assess the applications of DL, and (5) assess computational approaches. The main keywords used as search criteria for this review paper are (“Deep Learning”), (“Machine Learning”), (“Convolution Neural Network”), (“Deep Learning” AND “Architectures”), ((“Deep Learning”) AND (“Image”) AND (“detection” OR “classification” OR “segmentation” OR “Localization”)), (“Deep Learning” AND “detection” OR “classification” OR “segmentation” OR “Localization”), (“Deep Learning” AND “CPU” OR “GPU” OR “FPGA”), (“Deep Learning” AND “Transfer Learning”), (“Deep Learning” AND “Imbalanced Data”), (“Deep Learning” AND “Interpretability of data”), (“Deep Learning” AND “Overfitting”), (“Deep Learning” AND “Underspecification”). Figure 1 shows the search structure of the survey paper. Table 1 presents the details of some of the journals that have been cited in this review paper.

Search framework

Background

This section presents the background of DL. We begin with a quick introduction to DL, followed by the difference between DL and ML. We then show the situations that require DL. Finally, we present the reasons for applying DL.

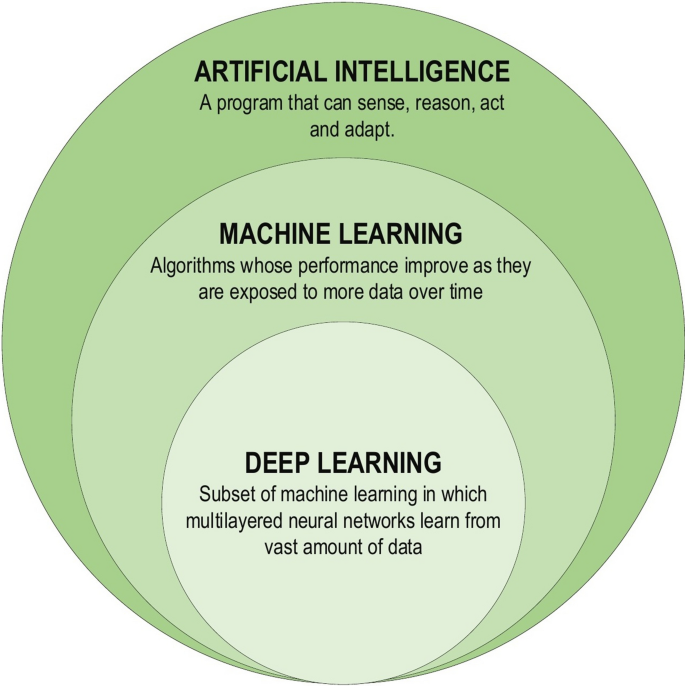

DL, a subset of ML (Fig. 2 ), is inspired by the information processing patterns found in the human brain. DL does not require any human-designed rules to operate; rather, it uses a large amount of data to map the given input to specific labels. DL is designed using numerous layers of algorithms (artificial neural networks, or ANNs), each of which provides a different interpretation of the data that has been fed to them [ 18 , 25 ].

Deep learning family

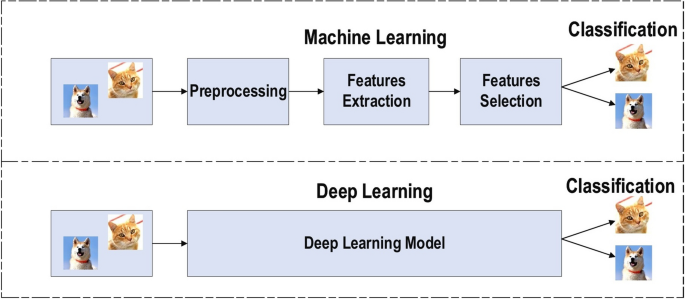

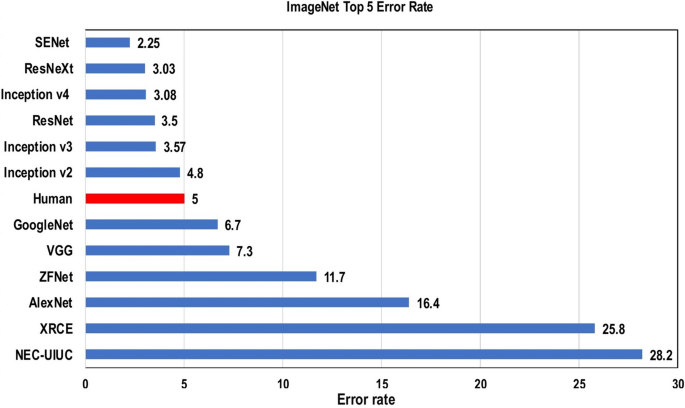

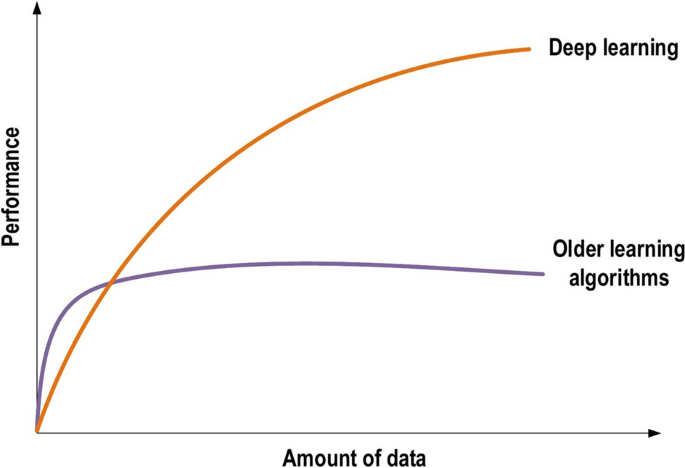

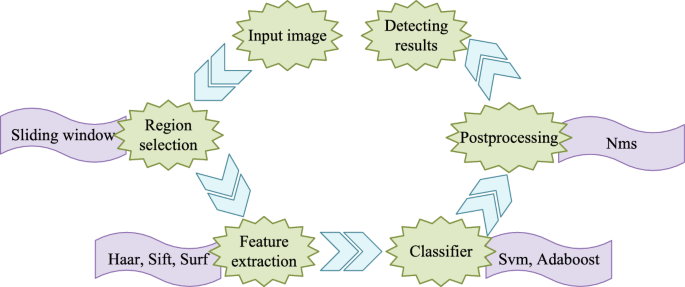

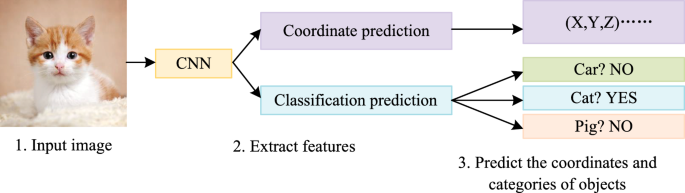

Achieving the classification task using conventional ML techniques requires several sequential steps, specifically pre-processing, feature extraction, careful feature selection, learning, and classification. Furthermore, feature selection has a great impact on the performance of ML techniques. Biased feature selection may lead to incorrect discrimination between classes. Conversely, DL has the ability to automate the learning of feature sets for several tasks, unlike conventional ML methods [ 18 , 26 ]. DL enables learning and classification to be achieved in a single shot (Fig. 3 ). DL has become an incredibly popular type of ML algorithm in recent years due to the huge growth and evolution of the field of big data [ 27 , 28 ]. It continues to reach new levels of performance on several ML tasks [ 22 , 29 , 30 , 31 ] and has facilitated progress in many learning fields [ 32 , 33 ], such as image super-resolution [ 34 ], object detection [ 35 , 36 ], and image recognition [ 30 , 37 ]. Recently, DL performance has come to exceed human performance on tasks such as image classification (Fig. 4 ).

The difference between deep learning and traditional machine learning

Deep learning performance compared to human

Nearly all scientific fields have felt the impact of this technology. Most industries and businesses have already been disrupted and transformed through the use of DL. The leading technology and economy-focused companies around the world are in a race to improve DL. Even now, human-level performance and capability cannot exceed the performance of DL in many areas, such as predicting the time taken to make car deliveries, decisions to certify loan requests, and predicting movie ratings [ 38 ]. The Turing Award, also known as the “Nobel Prize” of computing, was awarded in 2019 to three pioneers in the field of DL (Yann LeCun, Geoffrey Hinton, and Yoshua Bengio) [ 39 ]. Although a large number of goals have been achieved, there is further progress to be made in the DL context. In fact, DL has the ability to enhance human lives by providing additional accuracy in tasks such as estimating natural disasters [ 40 ], the discovery of new drugs [ 41 ], and cancer diagnosis [ 42 , 43 , 44 ]. Esteva et al. [ 45 ] found that a DL network trained on 129,450 images of 2032 diseases has the same ability to diagnose the disease as twenty-one board-certified dermatologists. Furthermore, in grading prostate cancer, US board-certified general pathologists achieved an average accuracy of 61%, while the Google AI [ 44 ] outperformed these specialists by achieving an average accuracy of 70%. In 2020, DL has been playing an increasingly vital role in early diagnosis of the novel coronavirus (COVID-19) [ 29 , 46 , 47 , 48 ]. DL has become the main tool in many hospitals around the world for automatic COVID-19 classification and detection using chest X-ray images or other types of images. We end this section with a quote from AI pioneer Geoffrey Hinton: “Deep learning is going to be able to do everything”.

When to apply deep learning

Machine intelligence is useful in many situations and, in some cases, equals or outperforms human experts [ 49 , 50 , 51 , 52 ], meaning that DL can be a solution to the following problems:

Cases where human experts are not available.

Cases where humans are unable to explain decisions made using their expertise (language understanding, medical decisions, and speech recognition).

Cases where the problem solution updates over time (price prediction, stock preference, weather prediction, and tracking).

Cases where solutions require adaptation based on specific cases (personalization, biometrics).

Cases where the size of the problem is extremely large and exceeds our limited reasoning abilities (sentiment analysis, matching ads on Facebook, calculating webpage ranks).

Why deep learning?

Several performance features may answer this question, e.g.:

Universal Learning Approach: Because DL has the ability to perform in approximately all application domains, it is sometimes referred to as universal learning.

Robustness: In general, precisely designed features are not required in DL techniques. Instead, the optimized features are learned in an automated fashion related to the task under consideration. Thus, robustness to the usual changes of the input data is attained.

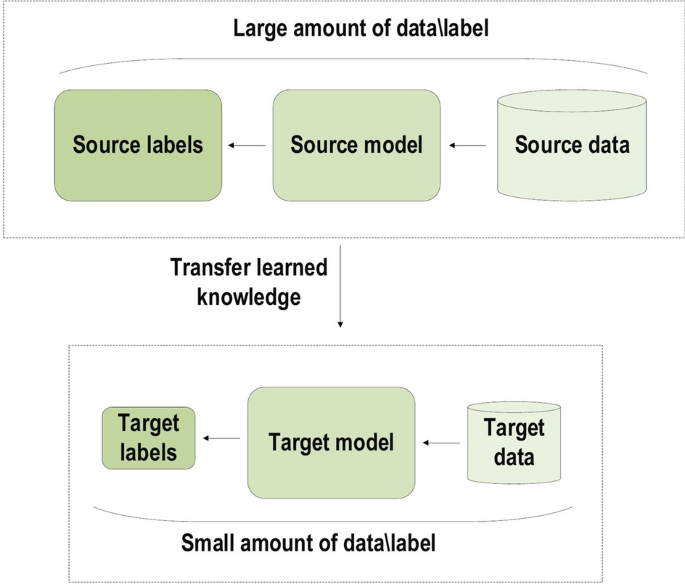

Generalization: Different data types or different applications can use the same DL technique, an approach frequently referred to as transfer learning (TL), which is explained in a later section. Furthermore, it is a useful approach in problems where data is insufficient.

Scalability: DL is highly scalable. ResNet [ 37 ], which was invented by Microsoft, comprises 1202 layers and is frequently applied at a supercomputing scale. Lawrence Livermore National Laboratory (LLNL), a large enterprise working on evolving frameworks for networks, adopted a similar approach, where thousands of nodes can be implemented [ 53 ].

Classification of DL approaches

DL techniques are classified into three major categories: unsupervised, partially supervised (semi-supervised) and supervised. Furthermore, deep reinforcement learning (DRL), also known as RL, is another type of learning technique, which is mostly considered to fall into the category of partially supervised (and occasionally unsupervised) learning techniques.

Deep supervised learning

Deep semi-supervised learning

In this technique, the learning process is based on semi-labeled datasets. Occasionally, generative adversarial networks (GANs) and DRL are employed in the same way as this technique. In addition, RNNs, which include GRUs and LSTMs, are also employed for partially supervised learning. One of the advantages of this technique is that it minimizes the amount of labeled data needed. On the other hand, one of its disadvantages is that irrelevant input features present in the training data could lead to incorrect decisions. Text document classification is one of the most popular examples of an application of semi-supervised learning. Because it is difficult to obtain a large amount of labeled text documents, semi-supervised learning is ideal for the text document classification task.

Deep unsupervised learning

This technique makes it possible to implement the learning process in the absence of available labeled data (i.e. no labels are required). Here, the agent learns the significant features or interior representation required to discover the unidentified structure or relationships in the input data. Techniques of generative networks, dimensionality reduction and clustering are frequently counted within the category of unsupervised learning. Several members of the DL family have performed well on non-linear dimensionality reduction and clustering tasks; these include restricted Boltzmann machines, auto-encoders and GANs as the most recently developed techniques. Moreover, RNNs, which include GRUs and LSTM approaches, have also been employed for unsupervised learning in a wide range of applications. The main disadvantages of unsupervised learning are that it cannot provide accurate information concerning data sorting and that it is computationally complex. One of the most popular unsupervised learning approaches is clustering [ 54 ].

Deep reinforcement learning

For solving a task, the selection of the type of reinforcement learning that needs to be performed is based on the space or the scope of the problem. For example, DRL is the best choice for problems involving many parameters to be optimized. By contrast, derivative-free reinforcement learning is a technique that performs well for problems with limited parameters. Some of the applications of reinforcement learning are business strategy planning and robotics for industrial automation. The main drawback of reinforcement learning is that parameters may influence the speed of learning. Here are the main motivations for utilizing reinforcement learning:

It assists you to identify which action produces the highest reward over a longer period.

It assists you to discover which situation requires action.

It also enables the agent to figure out the best approach for reaching large rewards.

Reinforcement Learning also gives the learning agent a reward function.

Reinforcement learning cannot be used in every situation, for example:

When there is sufficient data to resolve the issue with supervised learning techniques.

Reinforcement learning is computing-heavy and time-consuming, especially when the workspace is large.

Types of DL networks

The most famous types of deep learning networks are discussed in this section: these include recursive neural networks (RvNNs), recurrent neural networks (RNNs), and CNNs. RvNNs and RNNs are explained briefly, while CNNs are explained in depth due to the importance of this type; it is also the most widely used network across applications.

Recursive neural networks

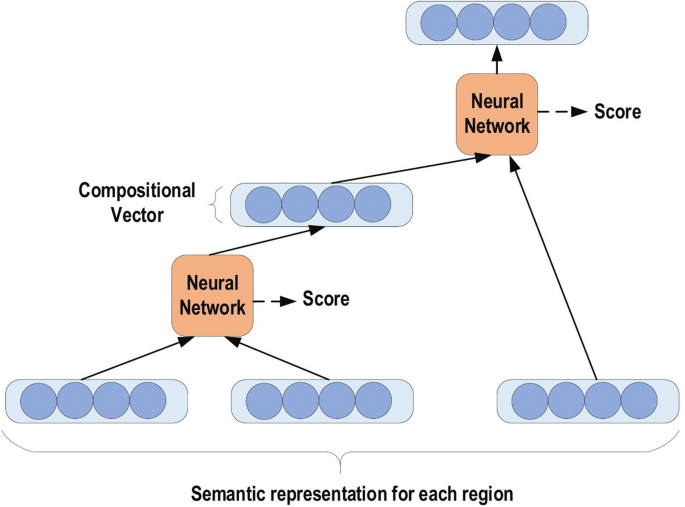

RvNN can make predictions over a hierarchical structure and also classify the outputs using compositional vectors [ 57 ]. Recursive auto-associative memory (RAAM) [ 58 ] is the primary inspiration for the RvNN development. The RvNN architecture is designed for processing objects that have arbitrarily shaped structures, such as graphs or trees. This approach generates a fixed-width distributed representation from a variable-size recursive data structure. The network is trained using the back-propagation through structure (BTS) learning system [ 58 ]. The BTS system follows the same technique as the general back-propagation algorithm and can support a tree-like structure. Auto-association trains the network to regenerate the input-layer pattern at the output layer. RvNN is highly effective in the NLP context. Socher et al. [ 59 ] introduced an RvNN architecture designed to process inputs from a variety of modalities. These authors demonstrate two applications, classifying natural language sentences and natural images: each sentence is split into words, and each image is separated into various segments of interest. RvNN scores candidate pairs of units for merging and constructs a syntactic tree. More precisely, it calculates a score related to the merge plausibility for every pair of units. Next, the pair with the largest score is merged into a composition vector. Following every merge, RvNN generates (a) a larger area of numerous units, (b) a compositional vector of the area, and (c) a label for the class (for instance, a noun phrase will become the class label for the new area if two units are noun words). The compositional vector for the entire area is the root of the RvNN tree structure. An example RvNN tree is shown in Fig. 5 . RvNN has been employed in several applications [ 60 , 61 , 62 ].

An example of RvNN tree
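The greedy merging procedure described above can be sketched in a few lines. This is only an illustration under stated assumptions: in an actual RvNN both the composition and the plausibility score are learned neural functions, whereas the `compose` and `merge_score` helpers below are hypothetical fixed stand-ins (an element-wise average and a negative squared distance), only adjacent units are considered for merging, and the class-label step is omitted.

```python
def compose(left, right):
    """Hypothetical composition: element-wise average of two vectors."""
    return [(a + b) / 2 for a, b in zip(left, right)]


def merge_score(left, right):
    """Hypothetical plausibility score: higher when the vectors are closer."""
    return -sum((a - b) ** 2 for a, b in zip(left, right))


def greedy_merge(units):
    """Merge the best-scoring adjacent pair until a single root remains.

    `units` is a list of (label, vector) pairs; the result is the root
    (nested label tuple, compositional vector).
    """
    nodes = list(units)
    while len(nodes) > 1:
        # Score every adjacent pair and pick the most plausible merge.
        best = max(
            range(len(nodes) - 1),
            key=lambda i: merge_score(nodes[i][1], nodes[i + 1][1]),
        )
        (l_label, l_vec), (r_label, r_vec) = nodes[best], nodes[best + 1]
        merged = ((l_label, r_label), compose(l_vec, r_vec))
        nodes[best:best + 2] = [merged]  # replace the pair with its parent
    return nodes[0]


# Illustrative word vectors (made up for this sketch).
units = [("the", [0.0, 1.0]), ("cat", [0.1, 0.9]), ("sat", [1.0, 0.0])]
tree, root_vector = greedy_merge(units)
```

With these made-up vectors, "the" and "cat" are merged first because they are closest, and the resulting node is then merged with "sat", so the root vector summarizes the whole sentence.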

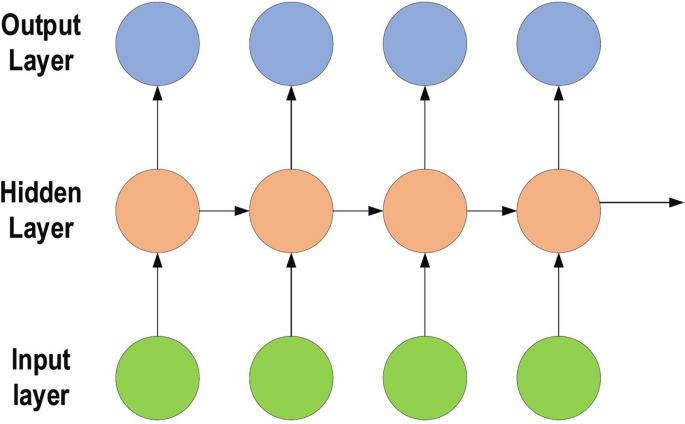

Recurrent neural networks

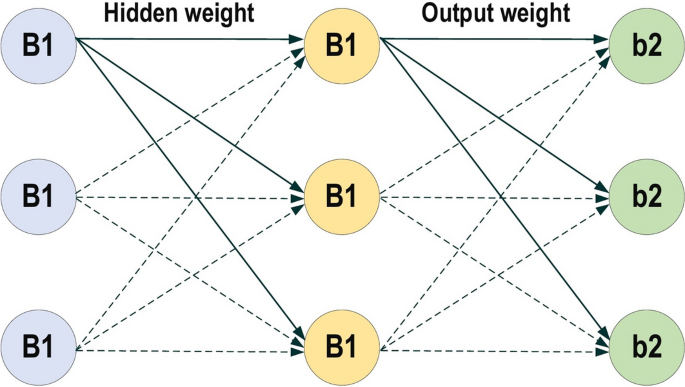

RNNs are a commonly employed and familiar algorithm in the discipline of DL [ 63 , 64 , 65 ]. RNN is mainly applied in speech processing and NLP contexts [ 66 , 67 ]. Unlike conventional networks, RNN uses sequential data in the network. Since the embedded structure in the sequence of the data delivers valuable information, this feature is fundamental to a range of different applications. For instance, it is important to understand the context of the sentence in order to determine the meaning of a specific word in it. Thus, it is possible to consider the RNN as a unit of short-term memory, where x represents the input layer, y is the output layer, and s represents the state (hidden) layer. For a given input sequence, a typical unfolded RNN diagram is illustrated in Fig. 6 . Pascanu et al. [ 68 ] introduced three different types of deep RNN techniques, namely “Hidden-to-Hidden”, “Hidden-to-Output”, and “Input-to-Hidden”. Based on these three techniques, they introduced a deep RNN that lessens the learning difficulty in the deep network and brings the benefits of a deeper RNN.

Typical unfolded RNN diagram
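To make the roles of x, s, and y concrete, the following is a minimal sketch of an unfolded RNN with scalar input, state, and output. The weights `u`, `w`, and `v` and the choice of `tanh` as the state nonlinearity are assumptions made for illustration; the text does not specify them.

```python
import math


def rnn_forward(xs, u, w, v, s0=0.0):
    """Unfold a scalar RNN over the input sequence `xs`.

    s_t = tanh(u * x_t + w * s_{t-1})   (state / hidden layer)
    y_t = v * s_t                       (output layer)
    """
    s = s0
    states, outputs = [], []
    for x in xs:
        s = math.tanh(u * x + w * s)  # new state depends on the previous one
        states.append(s)
        outputs.append(v * s)
    return states, outputs


# A single non-zero input followed by zeros: the state keeps a fading
# trace of the first input, which is the "short-term memory" effect.
states, outputs = rnn_forward([1.0, 0.0, 0.0], u=0.5, w=0.8, v=2.0)
```

Because the same weights are reused at every time step, the later states remain non-zero even though the later inputs are zero.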

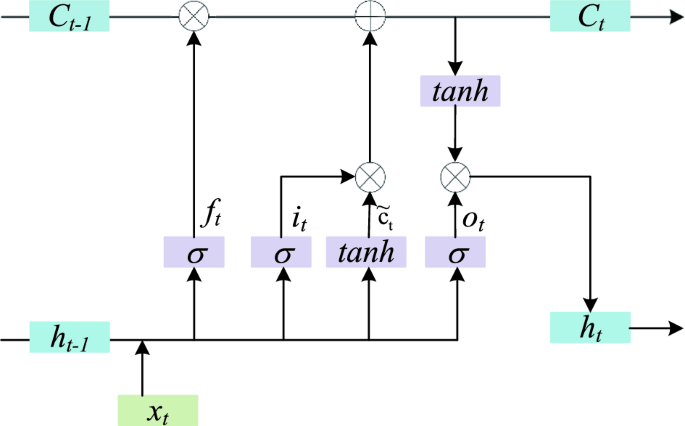

However, RNN’s sensitivity to the exploding and vanishing gradient problems represents one of the main issues with this approach [ 69 ]. More specifically, during the training process, the repeated multiplication of several large or small derivatives may cause the gradients to explode or decay exponentially. As new inputs arrive, the network stops taking the initial ones into account; its sensitivity to them therefore decays over time. This issue can be handled using LSTM [ 70 ]. This approach offers recurrent connections to memory blocks in the network. Every memory block contains a number of memory cells, which have the ability to store the temporal states of the network. In addition, it contains gated units for controlling the flow of information. In very deep networks [ 37 ], residual connections can also considerably reduce the impact of the vanishing gradient issue, which is explained in later sections. CNN is considered to be more powerful than RNN, and RNN offers less feature compatibility than CNN.
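The exponential behaviour of the gradient can be illustrated numerically. The sketch below assumes, purely for illustration, that every time step contributes the same derivative factor; real networks have a different factor at each step, but the compounding effect is the same.

```python
def gradient_magnitude(per_step_derivative, steps):
    """Multiply the same derivative factor once per time step."""
    grad = 1.0
    for _ in range(steps):
        grad *= per_step_derivative
    return grad


# Factors below 1 shrink the gradient towards zero (vanishing);
# factors above 1 blow it up (exploding).
vanishing = gradient_magnitude(0.5, steps=50)
exploding = gradient_magnitude(1.5, steps=50)
```

After 50 steps the first value is many orders of magnitude below one and the second is many orders of magnitude above it, which is why early inputs stop influencing the weight updates in the vanishing case.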

Convolutional neural networks

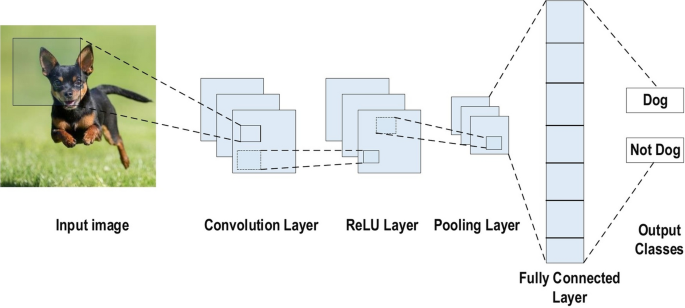

In the field of DL, the CNN is the most famous and commonly employed algorithm [ 30 , 71 , 72 , 73 , 74 , 75 ]. The main benefit of CNN compared to its predecessors is that it automatically identifies the relevant features without any human supervision [ 76 ]. CNNs have been extensively applied in a range of different fields, including computer vision [ 77 ], speech processing [ 78 ], face recognition [ 79 ], etc. The structure of CNNs was inspired by neurons in human and animal brains, similar to a conventional neural network. More specifically, in a cat’s brain, a complex sequence of cells forms the visual cortex; this sequence is simulated by the CNN [ 80 ]. Goodfellow et al. [ 28 ] identified three key benefits of the CNN: equivariant representations, sparse interactions, and parameter sharing. Unlike conventional fully connected (FC) networks, shared weights and local connections in the CNN are employed to make full use of 2D input-data structures like image signals. This operation utilizes an extremely small number of parameters, which both simplifies the training process and speeds up the network. This is the same as in the visual cortex cells. Notably, only small regions of a scene are sensed by these cells rather than the whole scene (i.e., these cells spatially extract the local correlation available in the input, like local filters over the input).

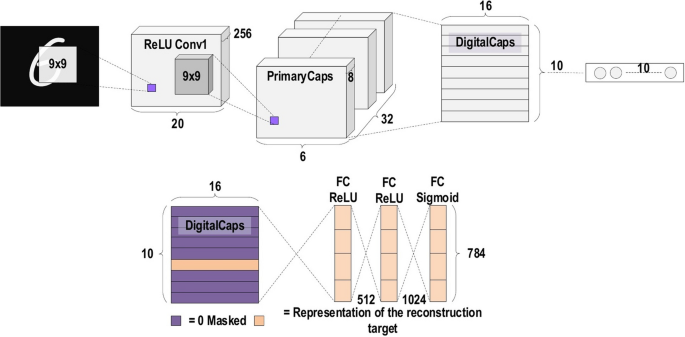

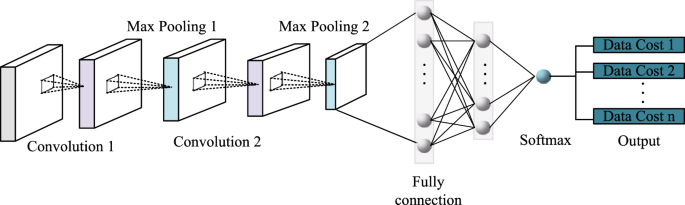

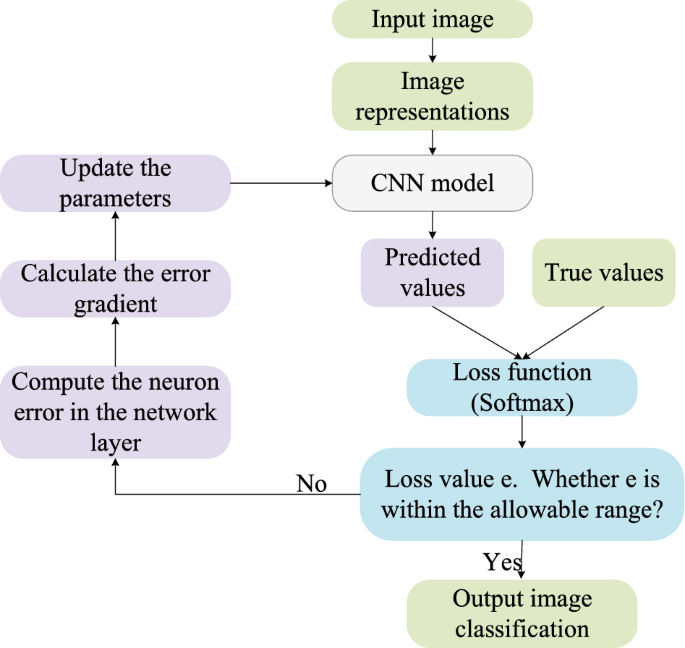

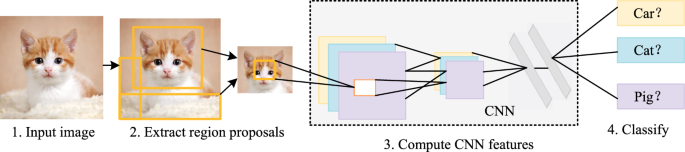

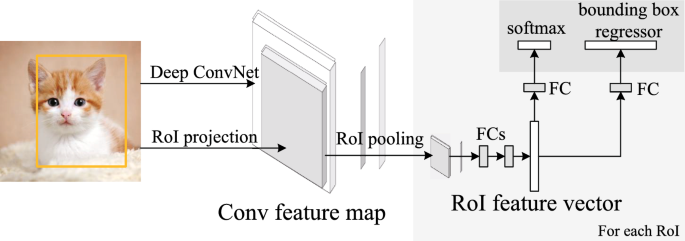

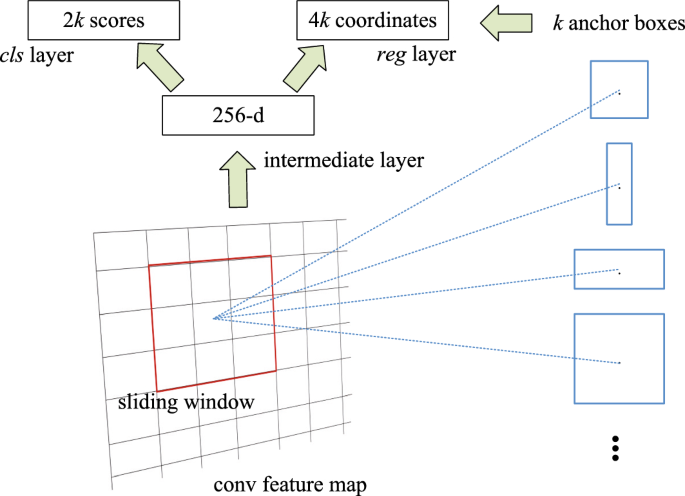

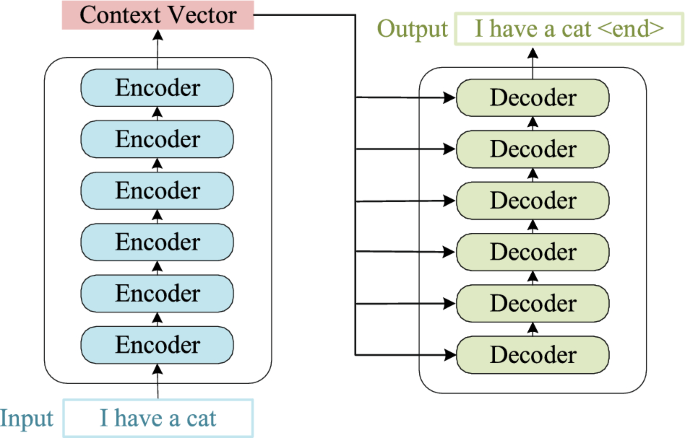

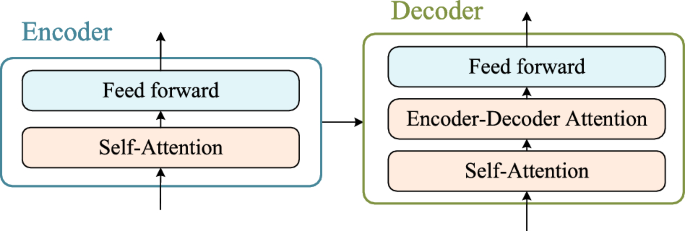

A commonly used type of CNN, which is similar to the multi-layer perceptron (MLP), consists of numerous convolution layers preceding sub-sampling (pooling) layers, while the ending layers are FC layers. An example of CNN architecture for image classification is illustrated in Fig. 7 .

An example of CNN architecture for image classification

The input x of each layer in a CNN model is organized in three dimensions: height, width, and depth, or \(m \times m \times r\) , where the height (m) is equal to the width. The depth is also referred to as the channel number. For example, in an RGB image, the depth (r) is equal to three. Each convolutional layer has several kernels (filters), denoted by k , which also have three dimensions ( \(n \times n \times q\) ), similar to the input image; here, however, n must be smaller than m , while q is either equal to or smaller than r . In addition, the kernels are the basis of the local connections, which share similar parameters (bias \(b^{k}\) and weight \(W^{k}\) ) for generating k feature maps \(h^{k}\) , each of size \(m-n+1\) , by convolution with the input, as mentioned above. The convolution layer calculates a dot product between its input and the weights as in Eq. 1 , similar to NLP, but the inputs are small regions of the original image. Next, by applying the nonlinearity or an activation function to the convolution-layer output, we obtain the following:

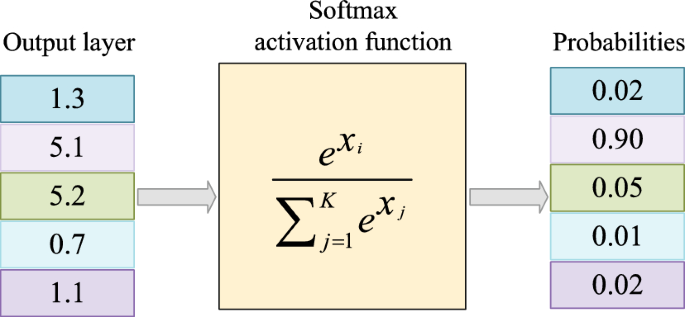
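Eq. 1 is not reproduced as text in this copy. A reconstruction from the symbols defined in the preceding paragraph, assuming that \(f\) denotes the activation function and \(*\) the convolution operator (neither symbol is named in the text, so both are assumptions), would read:

\[
h^{k} = f\left(W^{k} * x + b^{k}\right)
\]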

The next step is down-sampling every feature map in the sub-sampling layers. This leads to a reduction in the network parameters, which accelerates the training process and in turn helps to mitigate overfitting. For all feature maps, the pooling function (e.g. max or average) is applied to an adjacent area of size \(p \times p\) , where p is the kernel size. Finally, the FC layers receive the mid- and low-level features and create the high-level abstraction, which represents the last-stage layers as in a typical neural network. The classification scores are generated by the final layer [e.g. support vector machines (SVMs) or softmax]. For a given instance, every score represents the probability of a specific class.
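As a small illustration of how a softmax ending layer turns raw class scores into probabilities, consider the sketch below. The input scores are made-up values, and subtracting the maximum score is a common numerical-stability step rather than something stated in the text.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Three hypothetical class scores produced by the last FC layer.
probs = softmax([2.0, 1.0, 0.1])
```

The class with the largest raw score receives the largest probability, and the ordering of the scores is preserved.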

Benefits of employing CNNs

The benefits of using CNNs over other traditional neural networks in the computer vision environment are listed as follows:

The main reason to consider CNN is the weight sharing feature, which reduces the number of trainable network parameters and in turn helps the network to enhance generalization and to avoid overfitting.

Concurrently learning the feature extraction layers and the classification layer causes the model output to be both highly organized and highly reliant on the extracted features.

Large-scale network implementation is much easier with CNN than with other neural networks.

The CNN architecture consists of a number of layers (or so-called multi-building blocks). Each layer in the CNN architecture, including its function, is described in detail below.

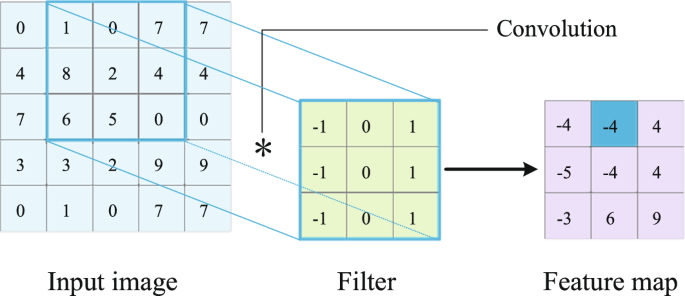

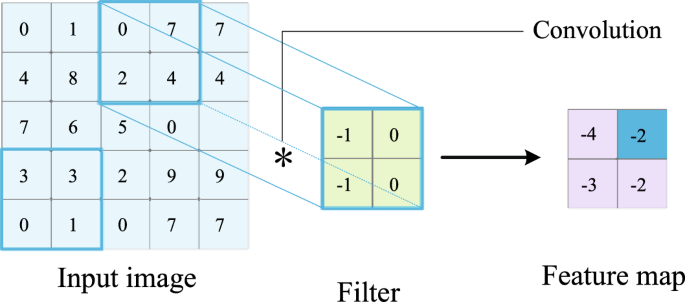

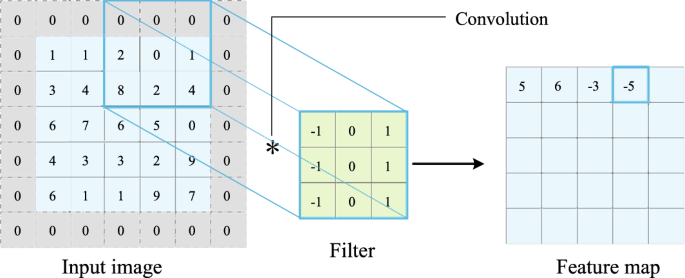

Convolutional Layer: In CNN architecture, the most significant component is the convolutional layer. It consists of a collection of convolutional filters (so-called kernels). The input image, expressed as N-dimensional matrices, is convolved with these filters to generate the output feature map.

Kernel definition: A grid of discrete numbers or values describes the kernel. Each value is called a kernel weight. Random numbers are assigned to act as the weights of the kernel at the beginning of the CNN training process. In addition, there are several different methods used to initialize the weights. Next, these weights are adjusted at each training epoch; thus, the kernel learns to extract significant features.

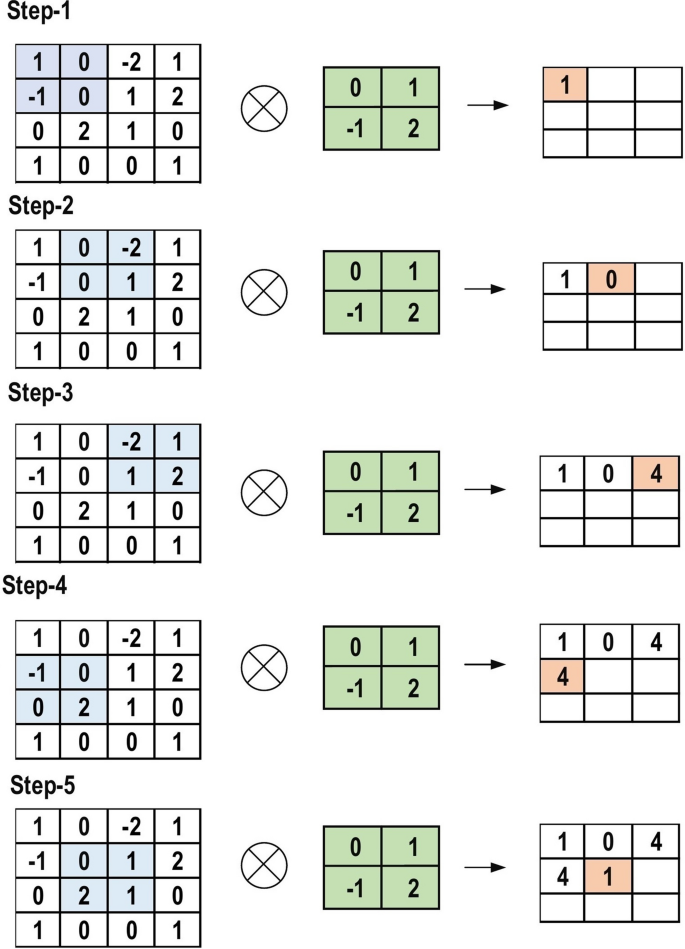

Convolutional Operation: We first describe the CNN input format. A traditional neural network takes a vector as input, while a CNN takes a multi-channeled image. For instance, a gray-scale image is single-channeled, while an RGB image is three-channeled. To understand the convolutional operation, let us take an example of a \(4 \times 4\) gray-scale image with a \(2 \times 2\) random weight-initialized kernel. First, the kernel slides over the whole image horizontally and vertically. At each position, the dot product between the kernel and the underlying image region is computed: their corresponding values are multiplied and then summed up to create a single scalar value. The whole process is then repeated until no further sliding is possible. Note that the calculated dot product values represent the feature map of the output. Figure 8 graphically illustrates the primary calculations executed at each step. In this figure, the light green color represents the \(2 \times 2\) kernel, while the light blue color represents the same-size area of the input image. Both are multiplied; the end result after summing up the resulting product values (marked in a light orange color) represents an entry value to the output feature map.

The primary calculations executed at each step of convolutional layer

In the previous example, no padding is applied to the input image, while a stride of one (the step size of the kernel over vertical or horizontal locations) is applied to the kernel. Note that it is also possible to use another stride value. Increasing the stride value yields a feature map of lower dimensions.
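The sliding dot product described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used by any DL framework; the function name `conv2d`, the example image, and the kernel values are assumptions chosen for readability.

```python
import numpy as np

def conv2d(image, kernel, stride=1, padding=0):
    """Slide `kernel` over a single-channel `image` and return the feature map."""
    if padding > 0:
        # Zero-padding enlarges the input on every side.
        image = np.pad(image, padding, mode="constant")
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            patch = image[r:r + kh, c:c + kw]
            # Multiply corresponding values and sum them into one scalar.
            out[i, j] = np.sum(patch * kernel)
    return out

# Hypothetical 4x4 gray-scale image and 2x2 kernel.
image = np.arange(16, dtype=float).reshape(4, 4)
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])

fmap_s1 = conv2d(image, kernel, stride=1)              # shape (3, 3)
fmap_s2 = conv2d(image, kernel, stride=2)              # shape (2, 2)
fmap_pad = conv2d(image, kernel, stride=1, padding=1)  # shape (5, 5)
```

With stride one and no padding, the \(4 \times 4\) input gives a \(3 \times 3\) feature map; a stride of two shrinks it to \(2 \times 2\), and zero-padding of one pixel enlarges it to \(5 \times 5\).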

Padding, on the other hand, is highly significant for preserving the border information of the input image. Without padding, the features at the border sides are washed away very quickly. By applying padding, the size of the input image will increase, and in turn, the size of the output feature map will also increase.

Core Benefits of Convolutional Layers

Sparse Connectivity: Each neuron of a layer in FC neural networks links with all neurons in the following layer. By contrast, in CNNs, only a few weights are available between two adjacent layers. Thus, the number of required weights or connections is small, and so is the memory required to store these weights; hence, this approach is memory-efficient. In addition, the matrix operation is computationally much more costly than the dot (.) operation in CNN.

Weight Sharing: In a CNN, no weights are allocated exclusively to a particular pair of neurons in neighboring layers; instead, the same set of weights operates on all pixels of the input matrix. Learning a single group of weights for the whole input significantly decreases the required training time and various costs, as it is not necessary to learn additional weights for each neuron.
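To make the two benefits above concrete, the following sketch counts the weights needed by an FC layer and by a convolutional layer. The layer sizes are hypothetical, and biases are ignored for simplicity.

```python
def fc_weight_count(n_inputs, n_outputs):
    """Every input neuron connects to every output neuron."""
    return n_inputs * n_outputs

def conv_weight_count(kernel_h, kernel_w, in_channels, out_channels):
    """One small kernel per (input channel, output channel) pair, shared over all positions."""
    return kernel_h * kernel_w * in_channels * out_channels

# Hypothetical case: a 32x32 gray-scale input mapped to a 32x32 single-channel output.
fc_weights = fc_weight_count(32 * 32, 32 * 32)
conv_weights = conv_weight_count(3, 3, 1, 1)

print(fc_weights, conv_weights)
```

Under these assumptions the FC layer needs 1,048,576 weights, while a shared \(3 \times 3\) kernel needs only 9.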

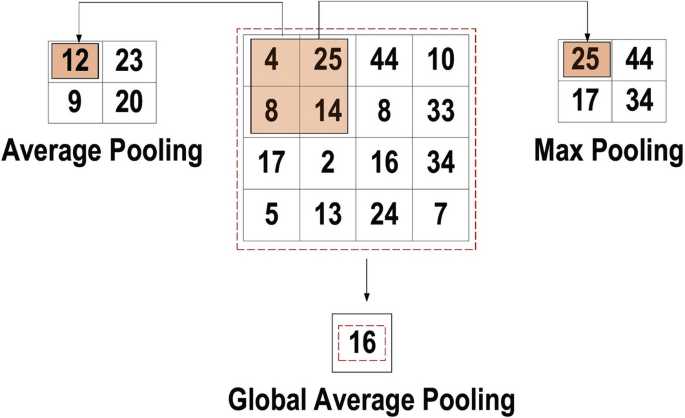

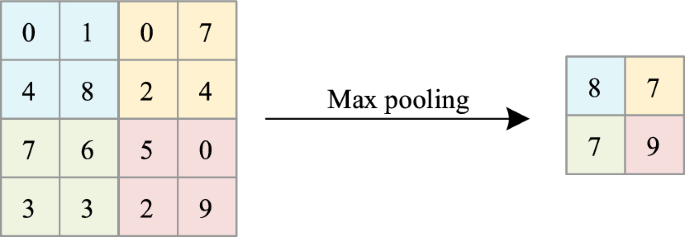

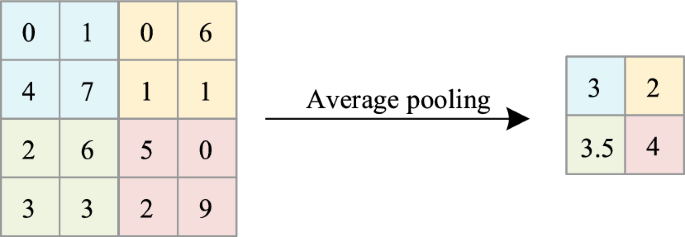

Pooling Layer: The main task of the pooling layer is the sub-sampling of the feature maps. These maps are generated by the preceding convolutional operations. In other words, this approach shrinks large-size feature maps to create smaller feature maps. Concurrently, it maintains the majority of the dominant information (or features) in every step of the pooling stage. In a similar manner to the convolutional operation, the sizes of both the stride and the kernel are assigned before the pooling operation is executed. Several types of pooling methods are available for utilization in various pooling layers. These methods include tree pooling, gated pooling, average pooling, min pooling, max pooling, global average pooling (GAP), and global max pooling. The most familiar and frequently utilized pooling methods are the max, min, and GAP pooling. Figure 9 illustrates these three pooling operations.

Three types of pooling operations
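The three pooling operations named above can be sketched as follows. The helper names `pool2d` and `global_average_pool`, the window size, and the example feature map are illustrative assumptions.

```python
import numpy as np

def pool2d(fmap, size=2, stride=2, mode="max"):
    """Sub-sample a 2-D feature map with max or min pooling."""
    reducers = {"max": np.max, "min": np.min}
    reduce_fn = reducers[mode]
    out_h = (fmap.shape[0] - size) // stride + 1
    out_w = (fmap.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            out[i, j] = reduce_fn(fmap[r:r + size, c:c + size])
    return out

def global_average_pool(fmap):
    """Collapse the whole feature map into a single average value."""
    return float(fmap.mean())

# Hypothetical 4x4 feature map.
fmap = np.array([[1.0, 3.0, 2.0, 4.0],
                 [5.0, 6.0, 1.0, 2.0],
                 [7.0, 2.0, 9.0, 0.0],
                 [1.0, 8.0, 3.0, 4.0]])

max_pooled = pool2d(fmap, mode="max")   # [[6, 4], [8, 9]]
min_pooled = pool2d(fmap, mode="min")   # [[1, 1], [1, 0]]
gap = global_average_pool(fmap)         # 3.625
```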

Sometimes, the overall CNN performance is decreased as a result; this represents the main shortfall of the pooling layer. This layer helps the CNN to determine whether or not a certain feature is present in the particular input image, but it does not preserve the exact location of that feature. Thus, the CNN model misses the relevant information.

Activation Function (non-linearity): Mapping the input to the output is the core function of all types of activation function in all types of neural network. The input value is determined by computing the weighted summation of the neuron input along with its bias (if present). This means that the activation function makes the decision as to whether or not to fire a neuron with reference to a particular input by creating the corresponding output.

Non-linear activation layers are employed after all layers with weights (so-called learnable layers, such as FC layers and convolutional layers) in CNN architecture. This non-linear behavior of the activation layers means that the mapping of input to output will be non-linear; moreover, these layers give the CNN the ability to learn more complicated things. The activation function must also be differentiable, which is an extremely significant feature, as it allows error back-propagation to be used to train the network. The following types of activation functions are most commonly used in CNN and other deep neural networks.

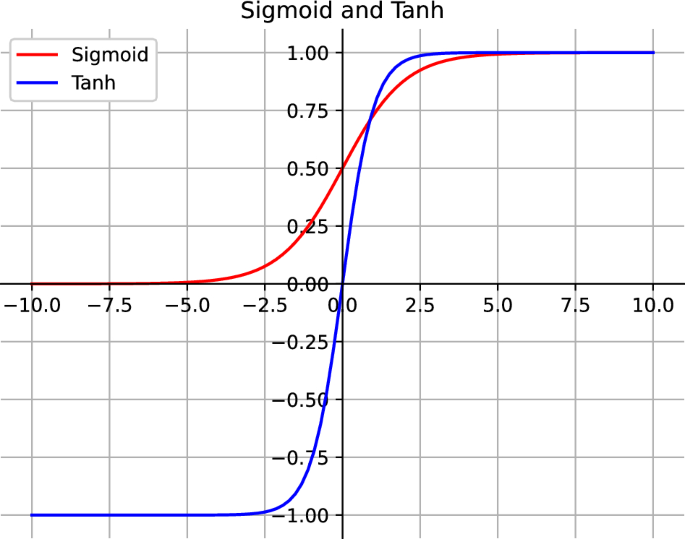

Sigmoid: The input of this activation function is real numbers, while the output is restricted to between zero and one. The sigmoid function curve is S-shaped and can be represented mathematically by Eq. 2 .

Tanh: It is similar to the sigmoid function, as its input is real numbers, but the output is restricted to between − 1 and 1. Its mathematical representation is in Eq. 3 .

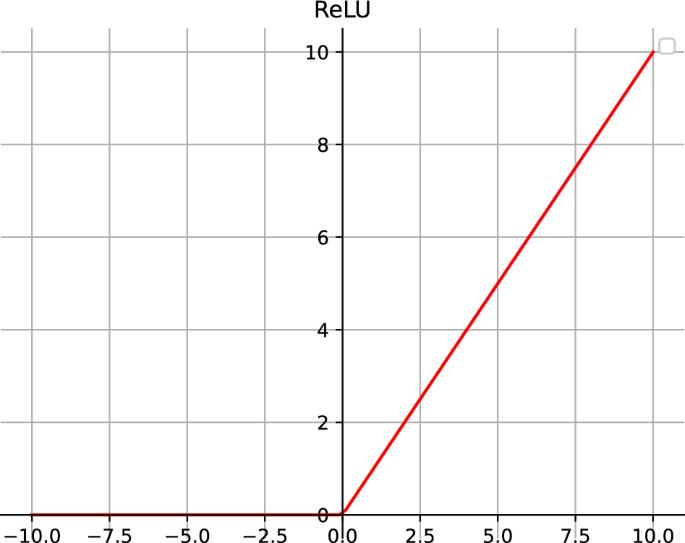

ReLU: The most commonly used function in the CNN context. It sets all negative input values to zero and leaves positive values unchanged. Lower computational load is the main benefit of ReLU over the others. Its mathematical representation is in Eq. 4 .

Occasionally, a few significant issues may occur during the use of ReLU. For instance, consider an error back-propagation algorithm with a large gradient flowing through it. Passing this gradient through the ReLU function may update the weights in a way that makes the neuron never activate again. This issue is referred to as “Dying ReLU”. Some ReLU alternatives exist to solve such issues. The following discusses some of them.

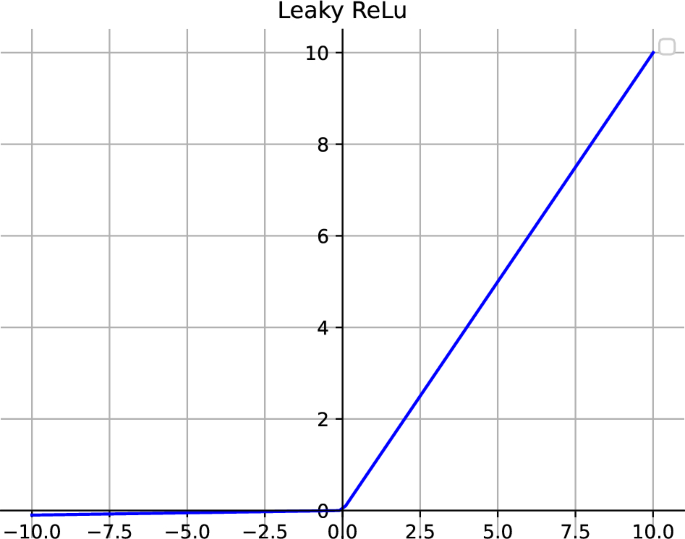

Leaky ReLU: Rather than zeroing out the negative inputs as ReLU does, this activation function scales them down by a small factor, which ensures these inputs are never ignored. It is employed to solve the Dying ReLU problem. Leaky ReLU can be represented mathematically as in Eq. 5 .

Note that the leak factor is denoted by m. It is commonly set to a very small value, such as 0.001.

Noisy ReLU: This function employs a Gaussian distribution to make ReLU noisy. It can be represented mathematically as in Eq. 6 .

Parametric Linear Units: This is mostly the same as Leaky ReLU. The main difference is that the leak factor in this function is updated through the model training process. The parametric linear unit can be represented mathematically as in Eq. 7 .

Note that the learnable weight is denoted as a.
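The activation functions described above can be sketched in NumPy as follows. The equations referenced in the text are not reproduced here, so the forms below are the commonly used definitions and should be read as assumptions; in particular, the noisy ReLU is assumed to be \(\max(0, x + Y)\) with Gaussian noise \(Y\) of standard deviation `sigma`, and all function names are illustrative.

```python
import numpy as np

def sigmoid(x):
    """Squash real inputs into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def tanh(x):
    """Squash real inputs into the range (-1, 1)."""
    return np.tanh(x)

def relu(x):
    """Zero out negative inputs, keep positive inputs unchanged."""
    return np.maximum(0.0, x)

def leaky_relu(x, m=0.001):
    """Scale negative inputs by the small leak factor m."""
    return np.where(x > 0, x, m * x)

def parametric_relu(x, a):
    """Same shape as leaky ReLU, but the slope a is learned during training."""
    return np.where(x > 0, x, a * x)

def noisy_relu(x, sigma, rng):
    """ReLU applied to the input plus zero-mean Gaussian noise (assumed form)."""
    noise = rng.normal(0.0, sigma, size=np.shape(x))
    return np.maximum(0.0, x + noise)

x = np.array([-2.0, 0.0, 3.0])
relu_out = relu(x)                    # [0, 0, 3]
leaky_out = leaky_relu(x)             # [-0.002, 0, 3]
prelu_out = parametric_relu(x, 0.25)  # [-0.5, 0, 3]
sigmoid_out = sigmoid(x)
noisy_out = noisy_relu(x, 0.1, np.random.default_rng(0))
```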

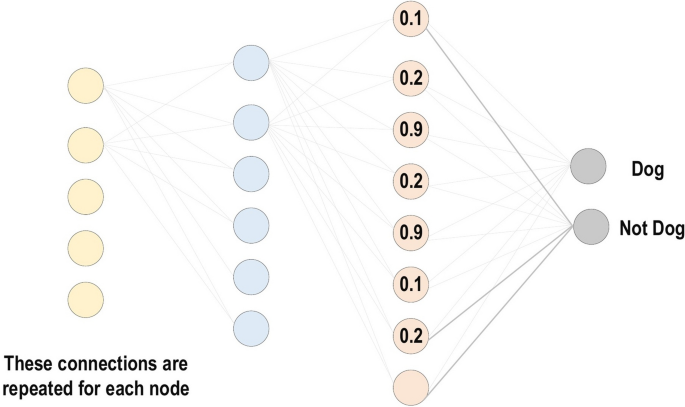

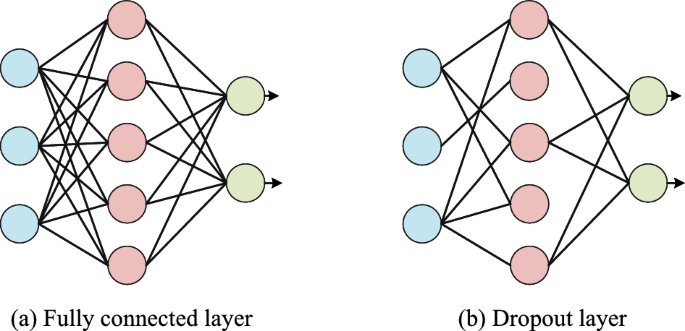

Fully Connected Layer: Commonly, this layer is located at the end of each CNN architecture. Inside this layer, each neuron is connected to all neurons of the previous layer, the so-called Fully Connected (FC) approach. It is utilized as the CNN classifier. It follows the basic method of the conventional multiple-layer perceptron neural network, as it is a type of feed-forward ANN. The input of the FC layer comes from the last pooling or convolutional layer. This input is in the form of a vector, which is created from the feature maps after flattening. The output of the FC layer represents the final CNN output, as illustrated in Fig. 10 .

Fully connected layer

Loss Functions: The previous section has presented various layer-types of CNN architecture. The final classification is achieved from the output layer, which represents the last layer of the CNN architecture. Loss functions are utilized in the output layer to calculate the prediction error across the training samples in the CNN model. This error reveals the difference between the actual output and the predicted one. It is then minimized through the CNN learning process.

The loss function uses two parameters to calculate the error. The CNN estimated output (referred to as the prediction) is the first parameter. The actual output (referred to as the label) is the second parameter. Several types of loss function are employed in various problem types. The following concisely explains some of the loss function types.

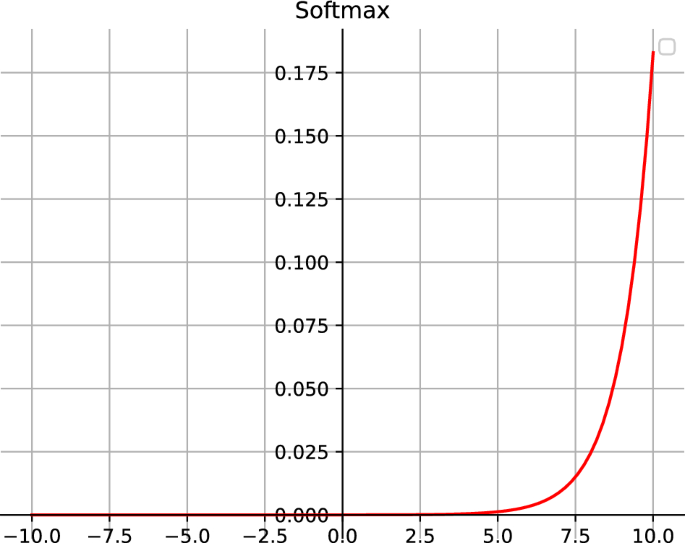

Cross-Entropy or Softmax Loss Function: This function is commonly employed for measuring the CNN model performance. It is also referred to as the log loss function. Its output is the probability \(p \in [0, 1]\). In addition, it is usually employed as a substitute for the square error loss function in multi-class classification problems. In the output layer, it employs the softmax activations to generate the output within a probability distribution. The mathematical representation of the output class probability is Eq. 8 .

Here, \(a_{i}\) represents the non-normalized output from the preceding layer (so that \(e^{a_{i}}\) is its exponential), while N represents the number of neurons in the output layer. Finally, the mathematical representation of the cross-entropy loss function is Eq. 9 .

Euclidean Loss Function: This function is widely used in regression problems. It is also known as the mean square error. The mathematical expression of the estimated Euclidean loss is Eq. 10 .

Hinge Loss Function: This function is commonly employed in problems related to binary classification. It relates to maximum-margin-based classification; this is particularly important for SVMs, which use the hinge loss function, wherein the optimizer attempts to maximize the margin between the two target classes. Its mathematical formula is Eq. 11 .

The margin m is commonly set to 1. Moreover, the predicted output is denoted as \(p_{i}\) , while the desired output is denoted as \(y_{i}\) .
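The loss functions above can be sketched as follows. Because the referenced equations are not reproduced in this text, the exact scaling and label conventions below are assumptions: the Euclidean loss is scaled by \(1/(2N)\), and the hinge loss assumes labels \(y_{i} \in \{0, 1\}\) that are mapped to \(\{-1, +1\}\). The symbols p, y, and m follow the text; the function names are illustrative.

```python
import numpy as np

def softmax(a):
    """Turn non-normalized outputs a into a probability distribution."""
    shifted = a - np.max(a)          # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

def cross_entropy(p, y):
    """Cross-entropy between predicted probabilities p and one-hot labels y."""
    return -np.sum(y * np.log(p + 1e-12))

def euclidean_loss(p, y):
    """Squared error scaled by 1/(2N) (one common convention)."""
    return np.sum((p - y) ** 2) / (2 * len(p))

def hinge_loss(p, y, m=1.0):
    """Hinge loss with margin m; labels in {0, 1} are mapped to {-1, +1}."""
    return np.sum(np.maximum(0.0, m - (2 * y - 1) * p))

probs = softmax(np.array([1.0, 1.0, 1.0]))            # uniform over 3 classes
ce = cross_entropy(probs, np.array([1.0, 0.0, 0.0]))  # equals log(3)
mse = euclidean_loss(np.array([1.0, 3.0]), np.array([1.0, 1.0]))  # 4 / (2 * 2) = 1.0
hinge = hinge_loss(np.array([2.0, -0.5]), np.array([1.0, 0.0]))   # 0 + 0.5 = 0.5
```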

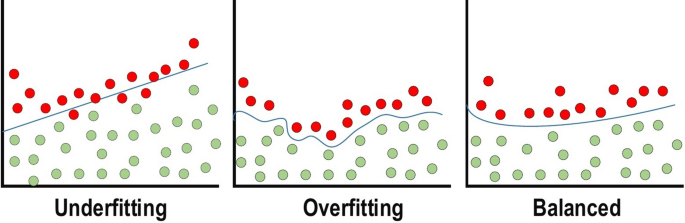

Regularization to CNN

For CNN models, over-fitting represents the central issue associated with obtaining well-behaved generalization. The model is said to be over-fitted in cases where it performs especially well on training data but does not succeed on test data (unseen data), as explained further in a later section. An under-fitted model is the opposite; this case occurs when the model does not learn a sufficient amount from the training data. The model is referred to as “just-fitted” if it performs well on both training and testing data. These three types are illustrated in Fig. 11 . Various intuitive concepts are used to help the regularization to avoid over-fitting; more details about over-fitting and under-fitting are discussed in later sections.

Dropout: This is a widely utilized technique for generalization. During each training epoch, neurons are randomly dropped. Doing this distributes the feature selection power equally across the whole group of neurons and forces the model to learn different independent features. During the training process, a dropped neuron will not be a part of back-propagation or forward-propagation. By contrast, the full-scale network is utilized to perform prediction during the testing process.

Drop-Weights: This method is highly similar to dropout. In each training epoch, the connections between neurons (weights) are dropped rather than dropping the neurons; this represents the only difference between drop-weights and dropout.
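The difference between dropout and drop-weights can be illustrated with random masks. This is a sketch under stated assumptions: it uses the common "inverted" scaling by the keep probability so that no rescaling is needed at test time, which is a convention not described in the text, and the function names are hypothetical.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Randomly zero whole neurons (activations) during training."""
    if not training or drop_prob == 0.0:
        return activations            # the full network is used at test time
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

def drop_weights(weights, drop_prob, rng, training=True):
    """Randomly zero individual connections (weights) instead of neurons."""
    if not training or drop_prob == 0.0:
        return weights
    keep_prob = 1.0 - drop_prob
    mask = rng.random(weights.shape) < keep_prob
    return weights * mask / keep_prob

rng = np.random.default_rng(0)
hidden = np.ones(1000)                       # hypothetical layer output
dropped = dropout(hidden, 0.5, rng)          # entries are either 0.0 or 2.0
at_test = dropout(hidden, 0.5, rng, training=False)
masked_w = drop_weights(np.ones((4, 3)), 0.5, rng)
```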

Data Augmentation: Training the model on a sizeable amount of data is the easiest way to avoid over-fitting. To achieve this, data augmentation is used. Several techniques are utilized to artificially expand the size of the training dataset. More details can be found in a later section, which describes the data augmentation techniques.

Batch Normalization: This method ensures that the output activations follow a unit Gaussian distribution [ 81 ]. Subtracting the mean and dividing by the standard deviation will normalize the output at each layer. While it is possible to consider this as a pre-processing task at each layer in the network, it is also differentiable and can be integrated with other networks. In addition, it is employed to reduce the “internal covariate shift” of the activation layers. In each layer, the variation in the activation distribution defines the internal covariate shift. This shift becomes very high due to the continuous weight updating through training, which may occur if the samples of the training data are gathered from numerous dissimilar sources (for example, day and night images). Thus, the model will consume extra time for convergence, and in turn, the time required for training will also increase. To resolve this issue, a layer representing the operation of batch normalization is applied in the CNN architecture.

The advantages of utilizing batch normalization are as follows:

It helps prevent the problem of vanishing gradient from arising.

It can effectively mitigate the effects of poor weight initialization.

It significantly reduces the time required for network convergence (for large-scale datasets, this will be extremely useful).

It tends to decrease the dependency of training on hyper-parameters.

Chances of over-fitting are reduced, since it has a minor regularizing effect.
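The normalization step described for batch normalization (subtract the mean, divide by the standard deviation) can be sketched as a training-time forward pass. The learnable scale `gamma` and shift `beta`, the small constant `eps`, and the example data are assumptions of this sketch and are not taken from the text.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Normalize each feature over the batch axis, then scale and shift."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)   # roughly zero mean, unit variance
    return gamma * x_hat + beta

rng = np.random.default_rng(0)
# Hypothetical batch of 64 samples with 4 features, far from unit Gaussian.
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))
out = batch_norm_forward(x, gamma=np.ones(4), beta=np.zeros(4))
```

With `gamma` set to one and `beta` set to zero, each output feature has approximately zero mean and unit standard deviation over the batch.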

Over-fitting and under-fitting issues

Optimizer selection

This section discusses the CNN learning process. Two major issues are included in the learning process: the first issue is the learning algorithm selection (optimizer), while the second issue is the use of many enhancements (such as AdaDelta, Adagrad, and momentum) along with the learning algorithm to enhance the output.

Minimizing a loss function, which depends on numerous learnable parameters (e.g., biases and weights) and measures the error (the variation between actual and predicted output), is the core purpose of all supervised learning algorithms. Gradient-based learning techniques are the usual choice for a CNN network. The network parameters should be updated through all training epochs, while the network should also look for the locally optimized answer in all training epochs in order to minimize the error.

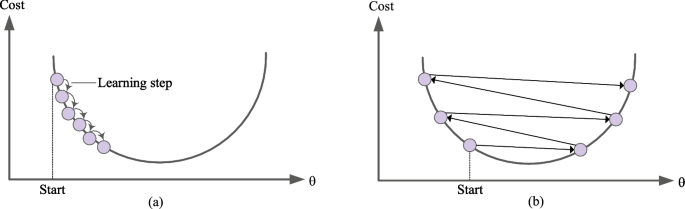

The learning rate is defined as the step size of the parameter updating. The training epoch represents a complete repetition of the parameter update that involves the complete training dataset at one time. Note that the learning rate is a hyper-parameter that needs to be selected wisely so that it does not adversely affect the learning process.

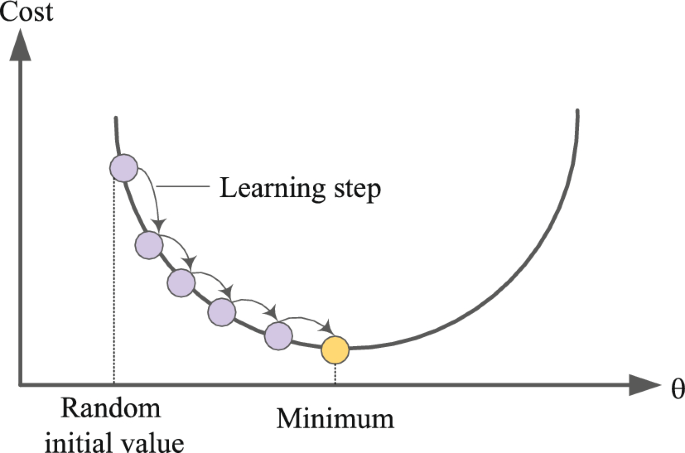

Gradient Descent or Gradient-based learning algorithm: To minimize the training error, this algorithm repetitively updates the network parameters through every training epoch. More specifically, to update the parameters correctly, it needs to compute the objective function gradient (slope) by applying a first-order derivative with respect to the network parameters. Next, the parameter is updated in the reverse direction of the gradient to reduce the error. The parameter updating process is performed through network back-propagation, in which the gradient at every neuron is back-propagated to all neurons in the preceding layer. The mathematical representation of this operation is given in Eq. 12 .

The final weight in the current training epoch is denoted by \(w_{ij}^{t}\) , while the weight in the preceding \((t-1)\) training epoch is denoted \(w_{ij}^{t-1}\) . The learning rate is \(\eta \) and the prediction error is E . Different alternatives of the gradient-based learning algorithm are available and commonly employed; these include the following:

Batch Gradient Descent: During the execution of this technique [ 82 ], the network parameters are updated only once after all training samples have been passed through the network. In more depth, it calculates the gradient of the whole training set and subsequently uses this gradient to update the parameters. For a small-sized dataset, the CNN model converges faster and creates a more stable gradient using BGD. Since the parameters are changed only once for every training epoch, and each change requires processing the whole training set, it requires a substantial amount of resources. By contrast, for a large training dataset, additional time is required for converging, and it could converge to a local optimum (for non-convex instances).

Stochastic Gradient Descent: The parameters are updated at each training sample in this technique [ 83 ]. It is preferable to randomly shuffle the training samples in every epoch in advance of training. For a large-sized training dataset, this technique is both more memory-efficient and much faster than BGD. However, because the parameters are updated so frequently, it takes extremely noisy steps in the direction of the answer, which in turn causes the convergence behavior to become highly unstable.

Mini-batch Gradient Descent: In this approach, the training samples are partitioned into several mini-batches, each of which is a small collection of samples with no overlap between them [ 84 ]. Next, parameter updating is performed following gradient computation on every mini-batch. The advantage of this method comes from combining the advantages of both BGD and SGD techniques. Thus, it has steady convergence, better computational efficiency, and better memory efficiency. The following describes several enhancement techniques in gradient-based learning algorithms (usually in SGD), which further enhance the CNN training process.
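Before turning to those enhancements, the three variants can be contrasted in one short sketch, since they differ only in how many samples contribute to each parameter update. The example fits a hypothetical noiseless line \(y = 2x + 1\) with a squared-error loss; the function name `train_linear`, the data, and the hyper-parameter values are illustrative assumptions rather than anything prescribed by the text.

```python
import numpy as np

def train_linear(x, y, batch_size, lr=0.1, epochs=500, seed=0):
    """Fit y = w * x + b by gradient descent on the mean squared error.

    batch_size == len(x) gives BGD, batch_size == 1 gives SGD,
    and anything in between gives mini-batch gradient descent.
    """
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    n = len(x)
    for _ in range(epochs):
        order = rng.permutation(n)           # shuffle the samples every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            err = (w * x[idx] + b) - y[idx]
            grad_w = np.mean(err * x[idx])   # gradient of 0.5 * mean(err ** 2)
            grad_b = np.mean(err)
            w -= lr * grad_w                 # step against the gradient
            b -= lr * grad_b
    return w, b

x = np.linspace(-1.0, 1.0, 20)
y = 2.0 * x + 1.0

w_bgd, b_bgd = train_linear(x, y, batch_size=len(x))  # one update per epoch
w_sgd, b_sgd = train_linear(x, y, batch_size=1)       # one update per sample
w_mb, b_mb = train_linear(x, y, batch_size=5)         # one update per mini-batch
```

On this small noiseless problem all three settings recover the same line; the differences discussed above (memory use, noise in the steps, speed) only become visible on large or noisy datasets.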

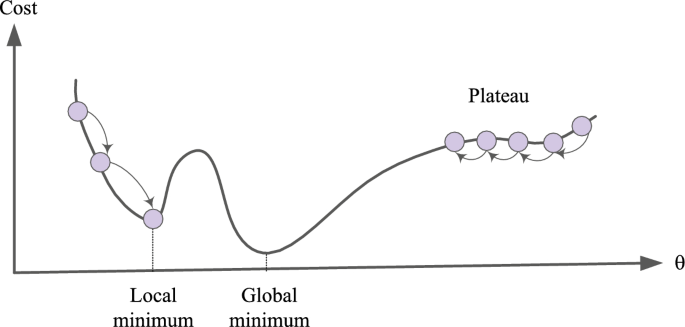

Momentum: For neural networks, this technique is employed in the objective function. It enhances both the accuracy and the training speed by adding the gradient computed at the preceding training step, weighted via a factor \(\lambda \) (known as the momentum factor). The motivation is that a gradient-based learning algorithm can easily become stuck in a local minimum rather than a global minimum, which represents its main disadvantage. Issues of this kind frequently occur if the problem has a non-convex surface (or solution space).

Together with the learning algorithm, momentum is used to solve this issue, which can be expressed mathematically as in Eq. 13 .

The weight increment in the current \(t\)th training epoch is denoted as \(\Delta w_{ij}^{t}\) , \(\eta \) is the learning rate, and \(\Delta w_{ij}^{t-1}\) is the weight increment in the preceding \((t-1)\)th training epoch. The momentum factor value is maintained within the range 0 to 1; in turn, the step size of the weight updating increases in the direction of the minimum to minimize the error. As the value of the momentum factor becomes very low, the model loses its ability to avoid local minima. By contrast, as the momentum factor value becomes high, the model develops the ability to converge much more rapidly. If a high value of the momentum factor is used together with a high learning rate (LR), then the model could miss the global minimum by crossing over it.

Moreover, when the gradient changes its direction continually throughout the training process, a suitable value of the momentum factor (which is a hyper-parameter) smooths the variations in the weight updates.
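The momentum update can be sketched as follows, assuming the commonly used form in which the weight increment is the learning rate times the current gradient plus the momentum factor times the previous increment. The quadratic error surface \(E(w) = (w - 3)^2\), the function name, and the hyper-parameter values are hypothetical.

```python
def momentum_step(w, grad, prev_delta, lr=0.1, momentum=0.9):
    """One weight update with momentum.

    delta = lr * grad + momentum * prev_delta, then w is moved against delta.
    """
    delta = lr * grad + momentum * prev_delta
    return w - delta, delta

# Hypothetical error surface E(w) = (w - 3)^2 with gradient 2 * (w - 3).
w, delta = 0.0, 0.0
for _ in range(300):
    grad = 2.0 * (w - 3.0)
    w, delta = momentum_step(w, grad, delta)

print(w)  # close to the minimum at w = 3
```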

Adaptive Moment Estimation (Adam): This is another widely used optimization technique or learning algorithm. Adam [ 85 ] represents the latest trends in deep learning optimization. Unlike second-order methods, which rely on the Hessian matrix of second-order derivatives, Adam uses only first-order gradients. Adam is a learning strategy that has been designed specifically for training deep neural networks. Higher memory efficiency and lower computational cost are two advantages of Adam. The mechanism of Adam is to calculate an adaptive LR for each parameter in the model. It integrates the pros of both Momentum and RMSprop: like RMSprop, it utilizes the squared gradients to scale the learning rate, and like momentum, it uses the moving average of the gradient. The equation of Adam is represented in Eq. 14 .
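A single Adam update can be sketched as below. The form follows the widely used formulation with bias-corrected moving averages of the gradient and of the squared gradient; since Eq. 14 is not reproduced in this text, the exact notation and the default values of `lr`, `beta1`, `beta2`, and `eps` are assumptions.

```python
import numpy as np

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for parameter w at step t (t starts at 1)."""
    m = beta1 * m + (1.0 - beta1) * grad        # moving average of the gradient
    v = beta2 * v + (1.0 - beta2) * grad ** 2   # moving average of the squared gradient
    m_hat = m / (1.0 - beta1 ** t)              # bias correction for the zero initialization
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v

# First step from w = 1.0 with a hypothetical gradient of 2.0.
w1, m1, v1 = adam_step(1.0, 2.0, m=0.0, v=0.0, t=1)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly `lr` regardless of the gradient magnitude; this is the per-parameter adaptive step size mentioned above.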

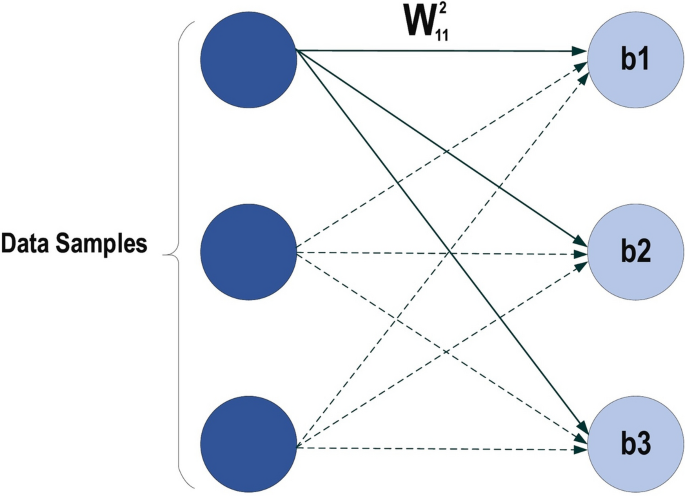

Design of algorithms (backpropagation)

Let’s start with a notation that refers to weights in the network unambiguously. We denote \({\varvec{w}}_{i j}^{h}\) to be the weight for the connection from the \(i\)th input (or neuron in the \((h-1)\)th layer) to the \(j\)th neuron in the \(h\)th layer. So, Fig. 12 shows the weight on a connection from the neuron in the first layer to another neuron in the next layer in the network.

MLP structure

Here, \(w_{11}^{2}\) represents the weight from the first neuron in the first layer to the first neuron in the second layer. Accordingly, the second weight for the same neuron is \(w_{21}^{2}\) , which is the weight from the second neuron in the previous layer to the first neuron in the next layer (the second layer in this network). The bias is not a connection between neurons of different layers, so it is handled easily: each neuron must have its own bias, although in some networks each layer has a single bias. It can be seen from the above network that each layer has its own bias. Each network has parameters such as the number of layers, the number of neurons in each layer, and the number of weights (connections) between the layers. The number of connections can be easily determined from the number of neurons in each layer; for example, if there are ten inputs fully connected with two neurons in the next layer, then the number of connections (weights) between them is \(10 \times 2 = 20\). To see how the error is defined and how the weights are updated, we will imagine that there are two layers in our neural network,

where \(\text {d}\) is the label of the individual \(\text {i}\)th input and \(\text {y}\) is the output for the same individual input. Backpropagation is about understanding how to change the weights and biases in a network based on the changes of the cost function (error). Ultimately, this means computing the partial derivatives \(\partial \text {E} / \partial \text {w}_{\text {ij}}^{h}\) and \(\partial \text {E} / \partial \text {b}_{\text {j}}^{h}\). To compute those, a local variable \(\delta _{j}^{1}\) is introduced, which is called the local error in the \(j\)th neuron in the \(h\)th layer. Based on that local error, backpropagation gives the procedure to compute \(\partial \text {E} / \partial \text {w}_{\text {ij}}^{h}\) and \(\partial \text {E} / \partial \text {b}_{\text {j}}^{h}\) for the two-layer neural network shown in Fig. 13 .

Neuron activation functions

Output error \(\delta _{\text {j}}^{1}\) for each \(1=1: \text {L}\) , where \(\text {L}\) is the number of neurons in the output layer

where \(\text {e}(\text {k})\) is the error of epoch \(\text {k}\) as shown in Eq. ( 2 ) and \(\varvec{\vartheta }^{\prime }\left( {\varvec{v}}_{j}({\varvec{k}})\right) \) is the derivative of the activation function for \(v_{j}\) at the output.

Backpropagate the error through all the remaining layers except the output layer

where \(\delta _{j}^{1}({\mathbf {k}})\) is the output error and \(w_{j l}^{h+1}(k)\) represents the weight in the layer following the one whose error needs to be obtained.

After finding the error at each neuron in each layer, now we can update the weight in each layer based on Eqs. ( 16 ) and ( 17 ).
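The procedure above (output error, back-propagated hidden error, weight update) can be sketched for a small two-layer network. Since the equations are not reproduced in this text, the sketch assumes a sigmoid activation and the squared error \(E = \frac{1}{2}\sum (d - y)^2\); the layer sizes and names are hypothetical. Note that the weight matrices here are stored with one row per receiving neuron, so `W[j, i]` plays the role of \(w_{ij}^{h}\).

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def forward(x, params):
    """Forward pass: input -> hidden layer -> output layer."""
    W1, b1, W2, b2 = params
    h = sigmoid(W1 @ x + b1)
    y = sigmoid(W2 @ h + b2)
    return h, y

def error(x, d, params):
    """Assumed cost: half the sum of squared differences between label and output."""
    _, y = forward(x, params)
    return 0.5 * np.sum((d - y) ** 2)

def backprop(x, d, params):
    """Return dE/dW1, dE/db1, dE/dW2, dE/db2 using local errors (deltas)."""
    W1, b1, W2, b2 = params
    h, y = forward(x, params)
    delta_out = (y - d) * y * (1.0 - y)             # output error times sigmoid derivative
    delta_hid = (W2.T @ delta_out) * h * (1.0 - h)  # error propagated back to the hidden layer
    return np.outer(delta_hid, x), delta_hid, np.outer(delta_out, h), delta_out

rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(3, 2)), rng.normal(size=3)   # 2 inputs -> 3 hidden neurons
W2, b2 = rng.normal(size=(1, 3)), rng.normal(size=1)   # 3 hidden neurons -> 1 output
x, d = np.array([0.5, -1.0]), np.array([1.0])

grads = backprop(x, d, (W1, b1, W2, b2))

# Gradient-descent update of every weight and bias with learning rate lr.
lr = 0.1
new_params = tuple(p - lr * g for p, g in zip((W1, b1, W2, b2), grads))
```

A finite-difference comparison against `error` is a simple way to check that the analytic gradients returned by `backprop` are correct.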

Improving performance of CNN

Based on our experiments in different DL applications [ 86 , 87 , 88 ], we can conclude that the most effective solutions that may improve the performance of CNN are:

Expand the dataset with data augmentation or use transfer learning (explained in later sections).

Increase the training time.

Increase the depth (or width) of the model.

Add regularization.

Increase hyperparameter tuning.

CNN architectures

Over the last 10 years, several CNN architectures have been presented [ 21 , 26 ]. Model architecture is a critical factor in improving the performance of different applications. Various modifications have been made to CNN architecture from 1989 until today. Such modifications include structural reformulation, regularization, parameter optimizations, etc. It should be noted, however, that the key upgrade in CNN performance occurred largely due to the processing-unit reorganization, as well as the development of novel blocks. In particular, the most novel developments in CNN architectures concerned the use of network depth. In this section, we review the most popular CNN architectures, beginning from the AlexNet model in 2012 and ending at the High-Resolution (HR) model in 2020. Studying the features of these architectures (such as input size, depth, and robustness) is the key to helping researchers choose the suitable architecture for their target task. Table 2 presents a brief overview of CNN architectures.

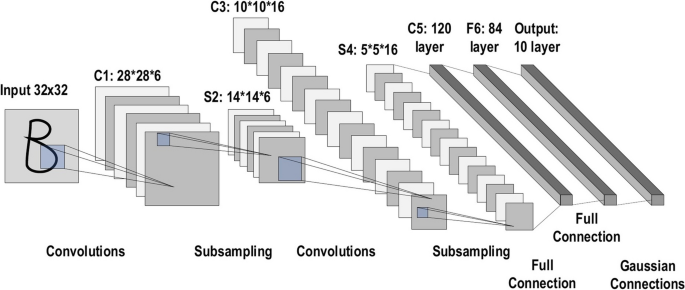

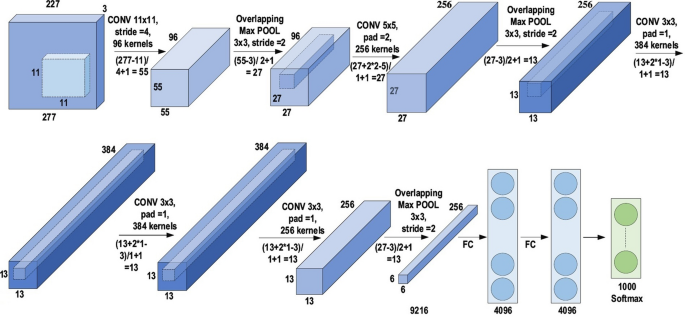

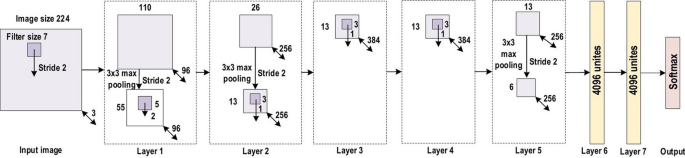

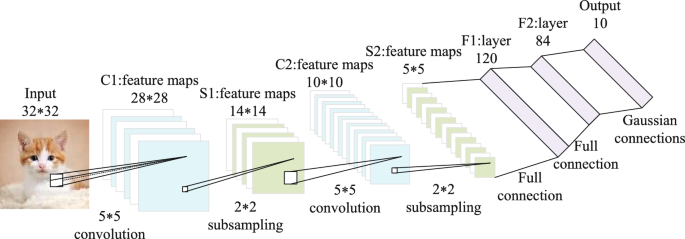

The history of deep CNNs began with the appearance of LeNet [ 89 ] (Fig. 14 ). At that time, CNNs were restricted to handwritten digit recognition tasks, which could not be scaled to all image classes. In deep CNN architecture, AlexNet is highly respected [ 30 ], as it achieved innovative results in the fields of image recognition and classification. Krizhevsky et al. [ 30 ] first proposed AlexNet and consequently improved the CNN learning ability by increasing its depth and implementing several parameter optimization strategies. Figure 15 illustrates the basic design of the AlexNet architecture.

The architecture of LeNet

The architecture of AlexNet

The learning ability of the deep CNN was limited at this time due to hardware restrictions. To overcome these hardware limitations, two GPUs (NVIDIA GTX 580) were used in parallel to train AlexNet. Moreover, in order to enhance the applicability of the CNN to different image categories, the number of feature extraction stages was increased from five in LeNet to seven in AlexNet. Although depth enhances generalization for several image resolutions, overfitting represented the main drawback related to the depth. Krizhevsky et al. used Hinton’s idea to address this problem [ 90 , 91 ]. To ensure that the features learned by the algorithm were more robust, Krizhevsky et al.’s algorithm randomly skips several transformational units throughout the training stage. Moreover, ReLU [ 92 ] was utilized as a non-saturating activation function to enhance the rate of convergence by reducing the vanishing gradient problem [ 93 ]. Local response normalization and overlapping subsampling were also performed to enhance the generalization by decreasing the overfitting. To improve on the performance of previous networks, other modifications were made by using large-size filters \((5\times 5 \; \text{and}\; 11 \times 11)\) in the earlier layers. AlexNet has considerable significance for the recent CNN generations, and it began an innovative research era in CNN applications.

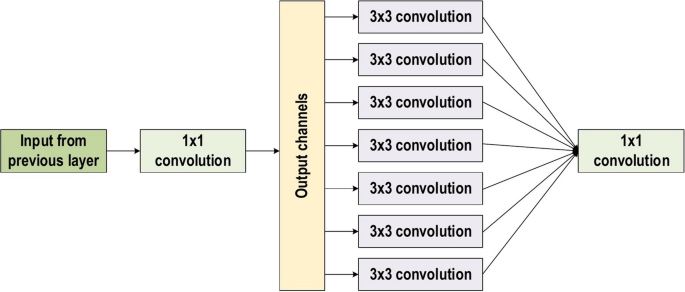

Network-in-network

This network model, which has some slight differences from the preceding models, introduced two innovative concepts [ 94 ]. The first was employing multilayer perceptron convolution. These convolutions are executed using a 1×1 filter, which supports the addition of extra nonlinearity in the networks. Moreover, this supports enlarging the network depth, which may later be regularized using dropout. For DL models, this idea is frequently employed in the bottleneck layer. The second novel concept is employing GAP as a substitute for an FC layer, which enables a significant reduction in the number of model parameters. In addition, GAP considerably changes the network architecture. When GAP is used on a large feature map, it is possible to generate a final low-dimensional feature vector without first reducing the dimensions of the feature maps [ 95 , 96 ]. Figure 16 shows the structure of the network.

The architecture of network-in-network

Before 2013, the CNN learning mechanism was basically constructed on a trial-and-error basis, which precluded an understanding of the precise reason behind an enhancement. This issue restricted the deep CNN performance on complex images. In response, Zeiler and Fergus introduced DeconvNet (a multilayer de-convolutional neural network) in 2013 [ 97 ]. This method later became known as ZefNet, which was developed in order to quantitatively visualize the network. Monitoring the CNN performance via understanding the neuron activation was the purpose of the network activity visualization. Earlier, Erhan et al. utilized this same concept to optimize deep belief network (DBN) performance by visualizing the features of the hidden layers [ 98 ]. Similarly, Le et al. assessed the deep unsupervised auto-encoder (AE) performance by visualizing the created classes of the image using the output neurons [ 99 ]. By reversing the operation order of the convolutional and pooling layers, DeconvNet operates like a forward-pass CNN. Reverse mapping of this kind projects the convolutional layer output backward to create visually observable image shapes that accordingly give the neural interpretation of the internal feature representation learned at each layer [ 100 ]. Monitoring the learning scheme through the training stage was the key concept underlying ZefNet. In addition, it utilized the outcomes to identify capacity issues in the model. This concept was experimentally validated on AlexNet by applying DeconvNet. This indicated that only certain neurons were active, while the others were inactive in the first two layers of the network. Furthermore, it indicated that the features extracted via the second layer contained aliasing artifacts. Thus, Zeiler and Fergus changed the CNN topology on the basis of these outcomes. In addition, they executed parameter optimization, and also improved the CNN learning by decreasing the stride and the filter sizes in order to retain all features of the initial two convolutional layers. An improvement in performance was accordingly achieved due to this rearrangement in CNN topology. This rearrangement suggested that the visualization of the features could be employed to identify design weaknesses and conduct appropriate parameter alteration. Figure 17 shows the structure of the network.

The architecture of ZefNet

Visual geometry group (VGG)

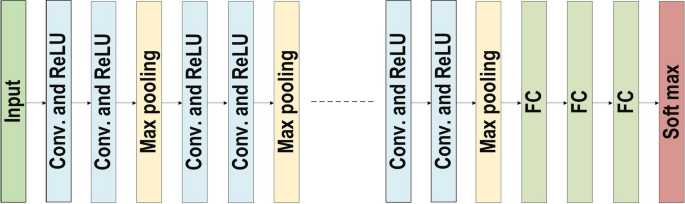

After CNN was determined to be effective in the field of image recognition, an easy and efficient design principle for CNN was proposed by Simonyan and Zisserman. This innovative design was called Visual Geometry Group (VGG). A multilayer model [ 101 ], it featured up to nineteen layers, more than ZefNet [ 97 ] and AlexNet [ 30 ], to explore the relation between depth and the representational capacity of the network. In the 2013-ILSVRC competition, ZefNet was the frontier network, which suggested that filters with small sizes could enhance the CNN performance. With reference to these results, VGG used a stack of layers of \(3\times 3\) filters rather than the larger \(5\times 5\) and \(11\times 11\) filters of earlier networks. This showed experimentally that stacking these small-size filters could produce the same effect as the large-size filters. In other words, these small-size filters made the receptive field as effective as that of the large-size filters \((7 \times 7 \; \text{and}\; 5 \times 5)\) . An extra advantage of using small-size filters was reducing the computational complexity by decreasing the number of parameters. These outcomes established a novel research trend for working with small-size filters in CNN. In addition, by inserting \(1\times 1\) convolutions in the middle of the convolutional layers, VGG regulates the network complexity. It learns a linear combination of the resulting feature maps. With respect to network tuning, a max pooling layer [ 102 ] is inserted following the convolutional layer, while padding is implemented to maintain the spatial resolution. In general, VGG obtained significant results for localization problems and image classification. While it did not achieve first place in the 2014-ILSVRC competition, it acquired a reputation due to its enlarged depth, homogeneous topology, and simplicity. However, VGG’s computational cost was excessive due to its utilization of around 140 million parameters, which represented its main shortcoming. Figure 18 shows the structure of the network.

The architecture of VGG
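The claim that stacked \(3\times 3\) filters match the receptive field of larger filters with fewer parameters can be checked with simple arithmetic. The sketch below assumes stride-one convolutions, the same number of input and output channels in every layer, and no biases; the channel count of 64 is a hypothetical choice.

```python
def stacked_receptive_field(kernel_size, num_layers):
    """Receptive field of num_layers stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)

def conv_weights(kernel_size, channels, num_layers=1):
    """Weights in num_layers conv layers with `channels` inputs and outputs each."""
    return num_layers * kernel_size * kernel_size * channels * channels

channels = 64  # hypothetical channel count

rf_two = stacked_receptive_field(3, 2)      # 5, same as one 5x5 filter
rf_three = stacked_receptive_field(3, 3)    # 7, same as one 7x7 filter

w_two_3x3 = conv_weights(3, channels, 2)    # 73,728
w_one_5x5 = conv_weights(5, channels)       # 102,400
w_three_3x3 = conv_weights(3, channels, 3)  # 110,592
w_one_7x7 = conv_weights(7, channels)       # 200,704
```

Under these assumptions, two stacked \(3\times 3\) layers cover a \(5\times 5\) region with about 28% fewer weights, and three stacked layers cover a \(7\times 7\) region with about 45% fewer weights.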

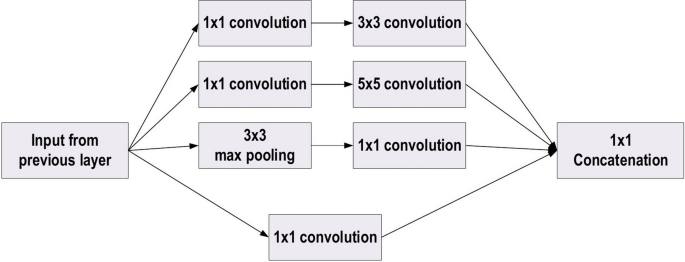

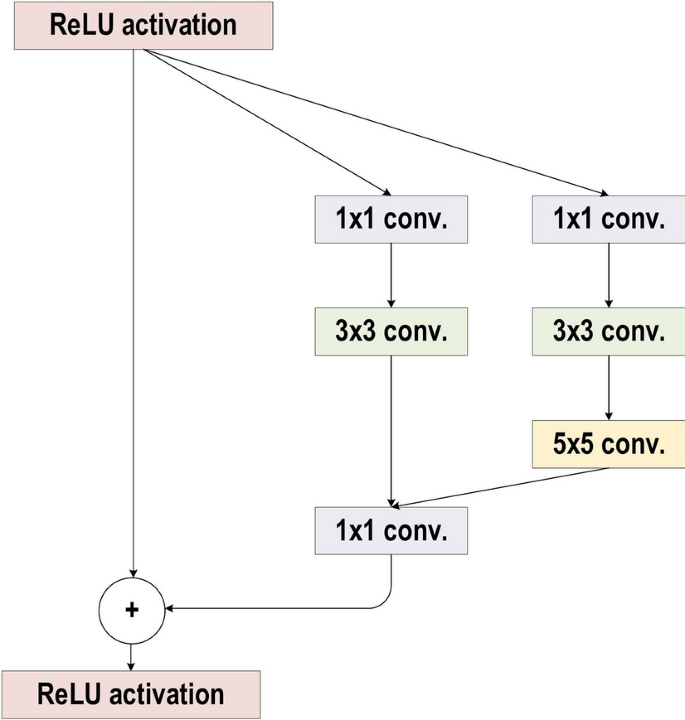

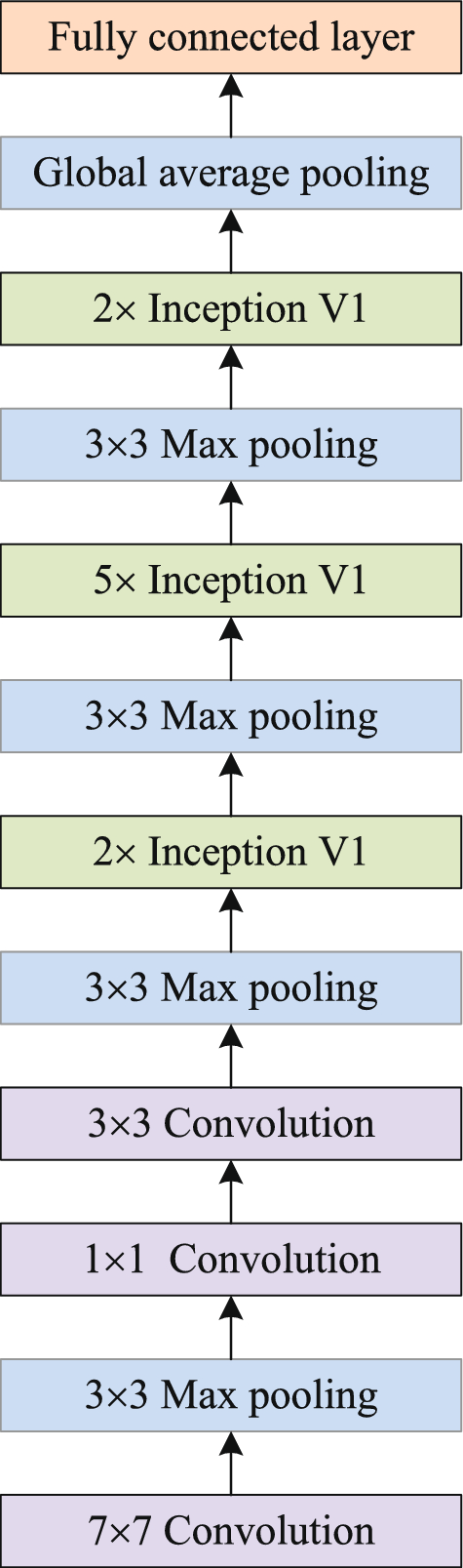

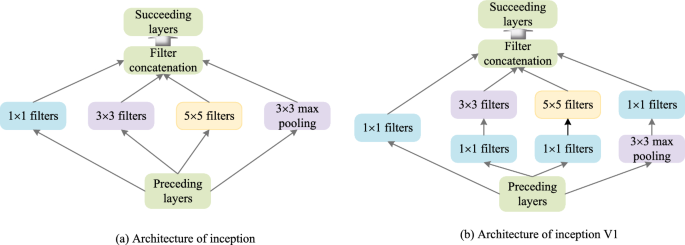

In the 2014-ILSVRC competition, GoogLeNet (also called Inception-V1) emerged as the winner [ 103 ]. The core aim of the GoogLeNet architecture is to achieve high accuracy at a reduced computational cost. It introduced the novel inception block (module) concept to the CNN context, combining multiple-scale convolutional transformations through split, transform, and merge functions for feature extraction. Figure 19 illustrates the inception block architecture. This architecture incorporates filters of different sizes ( \(5\times 5, 3\times 3, \; \text{and} \; 1\times 1\) ) to capture channel information together with spatial information at diverse ranges of spatial resolution. The conventional convolutional layer is replaced in GoogLeNet by small blocks, following the concept of the network-in-network (NIN) architecture [ 94 ], which replaced each layer with a micro-neural network. The split, transform, and merge concept helps to address the problem of learning the different types of variation that occur within the same class across several images. The motivation of GoogLeNet was to improve the efficiency of CNN parameters, as well as to enhance the learning capacity. In addition, it regulates the computation by inserting a \(1\times 1\) convolutional filter, as a bottleneck layer, ahead of the large-size kernels. GoogLeNet employed sparse connections to overcome the redundant information problem: only some of the input channels are connected to some of the output channels, which decreased the cost by neglecting the irrelevant channels. By employing a GAP layer as the end layer, rather than a FC layer, the density of connections was decreased. Owing to these parameter tunings, the number of parameters was also significantly decreased, from 40 million to 5 million. Additional regularization measures included the use of RmsProp as the optimizer and batch normalization [ 104 ]. 
Furthermore, GoogLeNet proposed the idea of auxiliary learners to speed up the rate of convergence. However, the main shortcoming of GoogLeNet was its heterogeneous topology, which requires customization from one module to another. Another shortcoming is the representation bottleneck, which substantially decreases the feature space in the following layer and in turn occasionally leads to the loss of valuable information.
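The saving obtained by placing a \(1\times 1\) bottleneck ahead of a large kernel can be illustrated by counting weights. This is a rough sketch under stated assumptions: the channel sizes below are hypothetical values chosen for the example, the function name is illustrative, and biases are ignored.

```python
def conv2d_weights(kernel, c_in, c_out):
    """Weight count (no biases) of a kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out


# Hypothetical channel sizes, chosen only for illustration.
c_in, c_mid, c_out = 192, 16, 32

# A 5x5 convolution applied directly to all input channels.
direct = conv2d_weights(5, c_in, c_out)

# A 1x1 bottleneck first reduces the channels, then the 5x5 convolution follows.
bottleneck = conv2d_weights(1, c_in, c_mid) + conv2d_weights(5, c_mid, c_out)

print("direct 5x5 weights:  ", direct)
print("1x1 then 5x5 weights:", bottleneck)
print("reduction factor:    ", round(direct / bottleneck, 1))
```

The smaller the intermediate channel count `c_mid`, the larger the saving, at the risk of the representation bottleneck described above.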

The basic structure of Google Block

Highway network

Increasing the network depth enhances its performance, mainly for complicated tasks. However, it also makes the network more difficult to train. The presence of many layers in deeper networks may result in small gradient values during the back-propagation of error to the lower layers. In 2015, Srivastava et al. [ 105 ] suggested a novel CNN architecture, called Highway Network, to overcome this issue. This approach is based on the cross-connectivity concept. The unhindered information flow in Highway Network is enabled by introducing two gating units inside the layer. The gate mechanism concept was motivated by LSTM-based RNN [ 106 , 107 ]. The information aggregation was conducted by merging the information of the \((i-k){\text{th}}\) layers with the next \(i{\text{th}}\) layer to generate a regularization effect, which makes the gradient-based training of deeper networks much easier. This enables the training of networks with more than 100 layers, and even of networks as deep as 900 layers, with the SGD algorithm. A Highway Network with a depth of fifty layers showed a better rate of convergence than both thin and deep architectures [ 108 ]. By contrast, [ 69 ] empirically demonstrated that plain Net performance declines when more than ten hidden layers are inserted. It should be noted that even a Highway Network 900 layers in depth converges much more rapidly than the plain network.
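The gating idea can be sketched in a few lines of plain Python. The sketch below assumes the commonly used formulation \(y = T(x)\cdot H(x) + (1 - T(x))\cdot x\), in which the carry gate is tied to the transform gate; the function names, the tanh transform, and the toy weights are illustrative assumptions rather than details taken from this review.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(weights, bias, x):
    """Compute W x + b for a square weight matrix given as nested lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def highway_layer(x, w_h, b_h, w_t, b_t):
    """y = T(x) * H(x) + (1 - T(x)) * x, applied element-wise."""
    h = [math.tanh(v) for v in affine(w_h, b_h, x)]  # candidate transform H(x)
    t = [sigmoid(v) for v in affine(w_t, b_t, x)]    # transform gate T(x)
    return [ti * hi + (1.0 - ti) * xi for ti, hi, xi in zip(t, h, x)]


x = [0.5, -1.0, 2.0]
zeros = [[0.0] * 3 for _ in range(3)]

# A strongly negative gate bias closes the transform gate,
# so the input is carried through almost unchanged.
carried = highway_layer(x, zeros, [0.0] * 3, zeros, [-20.0] * 3)

# A strongly positive gate bias opens the transform gate,
# so the output is dominated by H(x), which is zero for these toy weights.
transformed = highway_layer(x, zeros, [0.0] * 3, zeros, [20.0] * 3)

print(carried)
print(transformed)
```

Because a closed transform gate passes the input through unchanged, gradients have an unobstructed path through many layers, which is the property the text attributes to the architecture.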

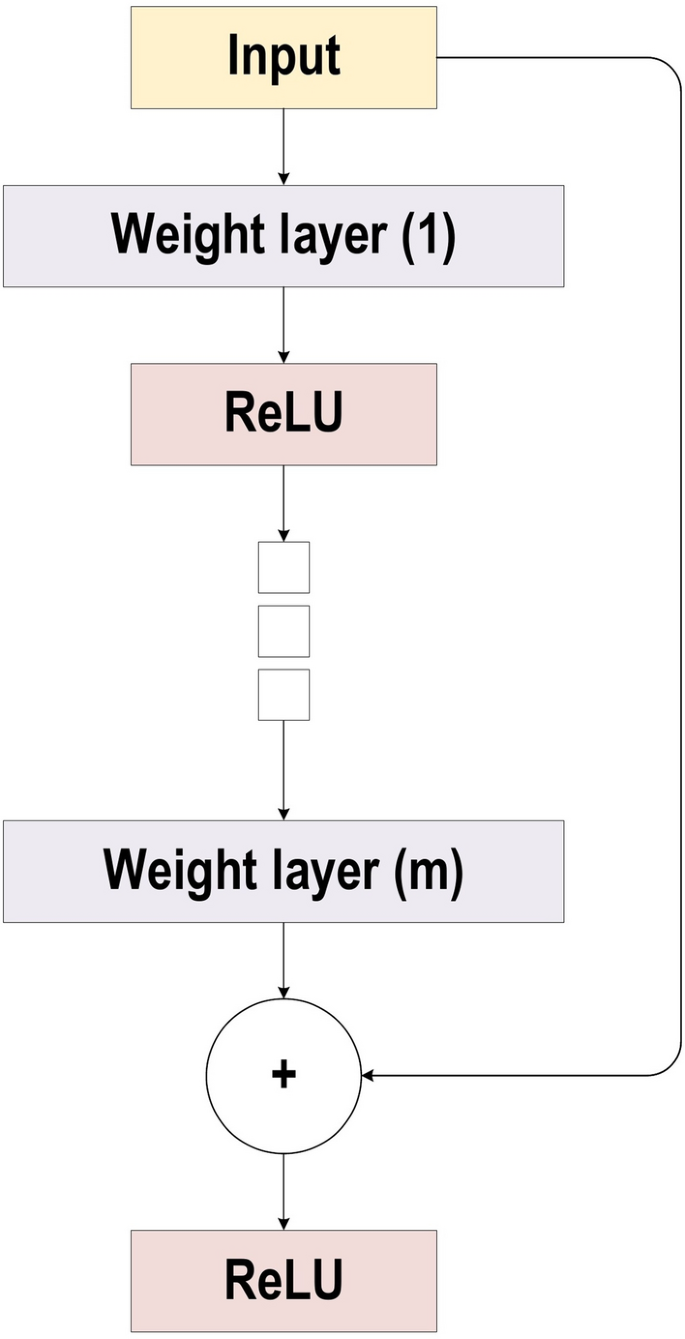

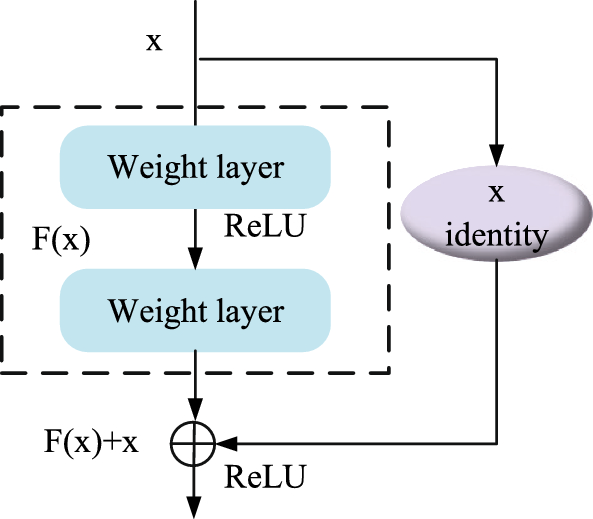

He et al. [ 37 ] developed ResNet (Residual Network), which was the winner of ILSVRC 2015. Their objective was to design an ultra-deep network free of the vanishing gradient issue that affected previous networks. Several types of ResNet were developed based on the number of layers (starting with 34 layers and going up to 1202 layers). The most common type was ResNet50, which comprised 49 convolutional layers plus a single FC layer. The overall number of network weights was 25.5 M, while the overall number of MACs was approximately 3.9 G. The novel idea of ResNet is its use of the bypass pathway concept, which had been employed in Highway Nets in 2015 to address the problem of training a deeper network. Figure 20 shows the fundamental ResNet block diagram: a conventional feedforward network plus a residual connection. The residual layer output is defined in terms of the output of the \((l - 1){\text{th}}\) layer, \(x_{l-1}\) , which is delivered from the preceding layer. After executing different operations on \(x_{l-1}\) [such as convolution using variable-size filters, or batch normalization, before applying an activation function like ReLU], the output is \(F(x_{l-1})\) . The final residual output is \(x_{l}\) , which can be mathematically represented as in Eq. 18 .

\(x_{l} = F(x_{l-1}) + x_{l-1} \qquad (18)\)

A residual network is built from numerous basic residual blocks. The operations inside the residual block vary according to the type of residual network architecture [ 37 ].
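The residual mapping of Eq. 18 can be illustrated with a toy block in plain Python. This is a simplified sketch under stated assumptions: \(F\) is taken to be two linear maps with a ReLU in between, convolution and batch normalization are omitted, and all names and weights are hypothetical.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def matvec(weights, x):
    """Multiply a matrix given as nested lists by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def residual_block(x_prev, w1, w2):
    """Return F(x_prev) + x_prev, with F(x) = W2 * relu(W1 * x)."""
    f = matvec(w2, relu(matvec(w1, x_prev)))
    return [fi + xi for fi, xi in zip(f, x_prev)]


x_prev = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]

# With zero weights F vanishes, so the shortcut returns the input unchanged.
print(residual_block(x_prev, zeros, zeros))

# With identity weights F(x) = relu(x), which is added to the input.
print(residual_block(x_prev, identity, identity))
```

The first call shows why a residual block can fall back to the identity mapping when the learned transformation contributes nothing, which is what keeps very deep stacks trainable.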

The block diagram for ResNet

In comparison to the highway network, ResNet introduced shortcut connections inside layers to enable cross-layer connectivity; these connections are parameter-free and data-independent. Note that the layers characterize non-residual functions when a gated shortcut is closed in the highway network. By contrast, the identity shortcuts in ResNet are never closed, so the residual information is always passed through. Furthermore, ResNet has the potential to prevent the vanishing gradient problem, as the shortcut connections (residual links) accelerate the convergence of deep networks. ResNet was the winner of the 2015-ILSVRC championship with 152 layers of depth; this represents 8 times the depth of VGG and 20 times the depth of AlexNet. In comparison with VGG, it has lower computational complexity, even with its increased depth.

Inception: ResNet and Inception-V3/4

Szegedy et al. [ 103 , 109 , 110 ] proposed Inception-ResNet and Inception-V3/4 as upgraded types of Inception-V1/2. The concept behind Inception-V3 was to minimize the computational cost without affecting the generalization of the deeper network. Thus, Szegedy et al. used asymmetric small-size filters ( \(1\times 5\) and \(1\times 7\) ) rather than large-size filters ( \( 7\times 7\) and \(5\times 5\) ); moreover, they utilized a bottleneck of \(1\times 1\) convolution prior to the large-size filters [ 110 ]. These changes make the operation of the traditional convolution very similar to cross-channel correlation. Previously, Lin et al. had exploited the potential of the \(1\times 1\) filter in the NIN architecture [ 94 ]. Subsequently, [ 110 ] utilized the same idea in an intelligent manner. By using the \(1\times 1\) convolutional operation in Inception-V3, the input data are mapped into three or four isolated spaces, which are smaller than the initial input space. Next, all of the correlations are mapped in these smaller spaces through common \(5\times 5\) or \(3\times 3\) convolutions. By contrast, in Inception-ResNet, Szegedy et al. bring together the inception block and the power of residual learning by replacing the filter concatenation with the residual connection [ 111 ]. Szegedy et al. empirically demonstrated that Inception-ResNet (Inception-V4 with residual connections) can achieve a generalization power similar to that of Inception-V4 with enlarged width and depth and without residual connections. This illustrates that using residual connections significantly accelerates the training of the Inception network. Figure 21 shows the basic block diagram for the Inception Residual unit.
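The benefit of asymmetric filters can be made concrete by counting weights. The sketch below assumes that an \(n\times n\) convolution is replaced by a \(1\times n\) convolution followed by an \(n\times 1\) convolution with the same number of channels; the function names and the channel count are hypothetical, and biases are ignored.

```python
def square_conv_weights(n, channels):
    """Weights (no biases) of one n x n convolution, channels -> channels."""
    return n * n * channels * channels


def asymmetric_conv_weights(n, channels):
    """Weights of a 1 x n convolution followed by an n x 1 convolution."""
    return 2 * n * channels * channels


C = 128  # hypothetical channel count, used only for illustration

for n in (5, 7):
    full = square_conv_weights(n, C)
    factored = asymmetric_conv_weights(n, C)
    print(f"{n}x{n}: {full} weights; 1x{n} + {n}x1: {factored} weights "
          f"({factored / full:.0%} of the original)")
```

Under this assumption the cost falls from \(n^2\) to \(2n\) weights per channel pair, so the saving grows with the filter size.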

The basic block diagram for Inception Residual unit

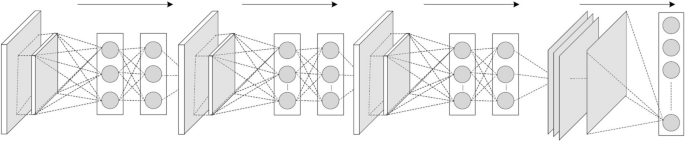

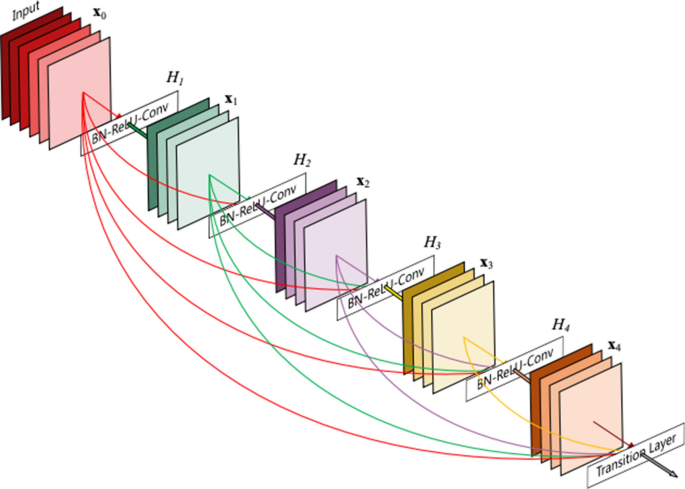

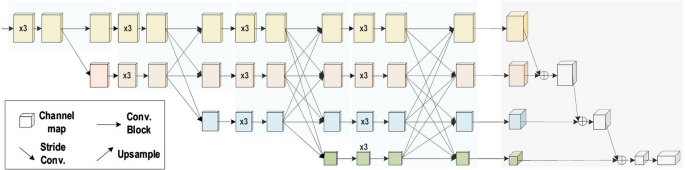

To solve the problem of the vanishing gradient, DenseNet was presented, following the same direction as ResNet and the Highway network [ 105 , 111 , 112 ]. One of the drawbacks of ResNet is that it explicitly preserves information by means of additive identity transformations, even though several layers contribute extremely little or no information. In addition, ResNet has a large number of weights, since each layer has its own separate group of weights. DenseNet employed cross-layer connectivity in an improved approach to address this problem [ 112 , 113 , 114 ]. It connected each layer to all subsequent layers in the network using a feed-forward approach. Therefore, the feature maps of every previous layer were used as input to all of the following layers. A traditional CNN with l layers has l connections, one between each layer and the next, while DenseNet has \(\frac{l(l+1)}{2}\) direct connections. DenseNet demonstrates the influence of cross-layer depth-wise convolutions. Thus, the network gains the ability to discriminate clearly between the added and the preserved information, since DenseNet concatenates the features of the preceding layers rather than adding them. However, due to its narrow layer structure, DenseNet becomes parametrically expensive as the number of feature maps increases. Giving all layers direct access to the gradients from the loss function enhances the information flow across the whole network. In addition, this has a regularizing effect, which reduces overfitting on tasks with small training sets. Figure 22 shows the architecture of the DenseNet network.
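The connection count and the effect of concatenation can be checked numerically. This is an illustrative sketch: the function names are hypothetical, and the initial channel count and the number of feature maps added per layer (called the growth rate in the DenseNet literature) are assumed values.

```python
def dense_connections(num_layers):
    """Direct connections when every layer feeds all later layers: l(l+1)/2."""
    return num_layers * (num_layers + 1) // 2


def dense_block_input_channels(c0, growth_rate, num_layers):
    """Input channels seen by each layer when all earlier feature maps
    are concatenated rather than added."""
    return [c0 + i * growth_rate for i in range(num_layers)]


L = 5  # hypothetical number of layers in the block

print("connections in a plain chain:", L)
print("connections in a dense block:", dense_connections(L))
print("input channels per layer:    ",
      dense_block_input_channels(c0=64, growth_rate=32, num_layers=L))
```

The steadily growing input width is the reason the text describes DenseNet as parametrically expensive despite its narrow layers.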

(adopted from [ 112 ])

The architecture of DenseNet Network

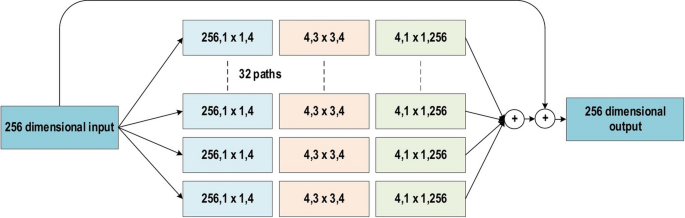

ResNext is an enhanced version of the Inception Network [ 115 ]. It is also known as the Aggregated Residual Transform Network. Cardinality, a new term introduced by [ 115 ], applies the split, transform, and merge topology in a simple and effective way. It denotes the size of the transformation set as an extra dimension [ 116 , 117 , 118 ]. The Inception network manages network resources efficiently and enhances the learning ability of the conventional CNN. However, its transformation branches use different spatial embeddings (e.g. \(5\times 5\) , \(3\times 3\) , and \(1\times 1\) ), so each layer must be customized separately. By contrast, ResNext derives its characteristic features from ResNet, VGG, and Inception. It combined the deep homogeneous topology of VGG with the basic architecture of GoogleNet by fixing the spatial resolution to \(3\times 3\) filters inside the split, transform, and merge blocks. Figure 23 shows the ResNext building blocks. ResNext utilized multiple transformations inside the split, transform, and merge blocks, and defined these transformations in terms of cardinality. As Xie et al. showed, the performance is significantly improved by increasing the cardinality. The complexity of ResNext was regulated by employing \(1\times 1\) filters (low embeddings) ahead of a \(3\times 3\) convolution. In addition, skip connections are used for optimized training [ 115 ].
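The way cardinality trades off against width can be sketched by counting the weights of a bottleneck block whose \(3\times 3\) convolution is split into groups. The function name and the channel settings below are assumptions chosen for illustration, and biases are ignored.

```python
def bottleneck_weights(c_in, width, cardinality=1):
    """Weights (no biases) of a 1x1 -> 3x3 -> 1x1 bottleneck block.

    The 3x3 convolution is split into `cardinality` groups, so each group
    maps width/cardinality channels to width/cardinality channels.
    """
    if width % cardinality != 0:
        raise ValueError("width must be divisible by cardinality")
    reduce_w = c_in * width                       # 1x1, c_in -> width
    transform_w = 9 * width * width // cardinality  # grouped 3x3
    expand_w = width * c_in                       # 1x1, width -> c_in
    return reduce_w + transform_w + expand_w


# Hypothetical settings: a single path versus 32 parallel paths.
print("cardinality 1,  width 64: ", bottleneck_weights(256, 64, 1))
print("cardinality 32, width 128:", bottleneck_weights(256, 128, 32))
```

With these assumed settings the two blocks have a nearly equal number of weights, which shows how cardinality can be increased without a large change in complexity.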

The basic block diagram for the ResNext building blocks

The feature reuse problem is the core shortcoming of deep residual networks, since certain feature blocks or transformations contribute very little to learning. Zagoruyko and Komodakis [ 119 ] accordingly proposed WideResNet to address this problem. These authors argued that depth has only a supplemental influence, while the residual units convey the core learning ability of deep residual networks. WideResNet exploited the power of the residual block by making ResNet wider instead of deeper [ 37 ]. It enlarged the width by introducing an extra factor, k, which controls the network width. In other words, it indicated that widening the layers is a more effective method of performance enhancement than deepening the residual network. While deep residual networks achieve enhanced representational capacity, they also have certain drawbacks, such as the exploding and vanishing gradient problems, the feature reuse problem (inactivation of several feature maps), and the time-intensive nature of the training. He et al. [ 37 ] tackled the feature reuse problem by including a dropout in each residual block to regularize the network in an efficient manner. In a similar manner, utilizing dropouts, Huang et al. [ 120 ] presented the stochastic depth concept to solve the slow learning and gradient vanishing problems. Earlier research was focused on increasing the depth; thus, any small enhancement in performance required the addition of several new layers. An experimental comparison showed that WideResNet has twice as many parameters as ResNet. Nevertheless, WideResNet offers an improved method of training relative to deep networks [ 119 ]. Note that most architectures prior to residual networks (including the highly effective VGG and Inception) were wider than ResNet. Thus, wider residual networks were established once this was determined. 
However, inserting a dropout between the convolutional layers (as opposed to within the residual block) made the learning more effective in WideResNet [ 121 , 122 ].
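The effect of the widening factor k on the size of a residual block can be illustrated with a small calculation. This sketch assumes a basic block of two \(3\times 3\) convolutions whose channel count is the base width multiplied by k; the function name and the base width are hypothetical, and biases are ignored.

```python
def basic_block_weights(base_channels, k=1):
    """Weights (no biases) of two 3x3 convolutions at width base_channels * k."""
    width = base_channels * k
    return 2 * 9 * width * width


base = 16  # hypothetical base channel count

for k in (1, 2, 4, 8):
    w = basic_block_weights(base, k)
    ratio = w // basic_block_weights(base)
    print(f"k={k}: width={base * k}, weights={w}, ratio to k=1: {ratio}")
```

Under this assumption the weights of a block grow with the square of k, so a wide network can match the parameter count of a much deeper one with far fewer layers.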

Pyramidal Net

The depth of the feature map increases in each succeeding layer due to the deep stacking of multiple convolutional layers, as seen in previous deep CNN architectures such as ResNet, VGG, and AlexNet. By contrast, the spatial dimension shrinks, since a sub-sampling step follows each convolutional layer. Thus, the richer feature representation is offset by the decreasing size of the feature map. The extreme expansion in the depth of the feature map, alongside the loss of spatial information, interferes with the learning ability of deep CNNs. ResNet obtained notable results in image classification. However, deleting a convolutional block in which both the number of channels and the spatial dimension vary (the channel depth enlarges, while the spatial dimension reduces) commonly results in decreased classifier performance. Accordingly, the stochastic ResNet enhanced the performance by decreasing the information loss that accompanies dropping a residual unit. Han et al. [ 123 ] proposed Pyramidal Net to address the ResNet learning interference problem. To counter the abrupt depth enlargement and extreme reduction in spatial width in ResNet, Pyramidal Net gradually enlarges the residual unit width to cover all feasible locations, rather than keeping the same spatial dimension inside all residual blocks until down-sampling occurs. It was named Pyramidal Net because the feature map depth grows gradually in a top-down manner. Factor l, which was determined by Eq. 19 , regulates the depth of the feature map.