4 Knowledge Representation

Arthur B. Markman, Department of Psychology, University of Texas, Austin, TX

- Published: 21 November 2012

Theories in psychology make implicit or explicit assumptions about the way people store and use information. The choice of a format for knowledge representation is crucial, because it influences what processes are easy or hard for a system to accomplish. In this chapter, I define the concept of a representation. Then, I review three broad types of representations that have been incorporated into many theories. Finally, I examine proposals for the role of body states in representation as well as proposals that the concept of knowledge representation has outlived its usefulness.

Introduction

Theories of psychological functioning routinely make assumptions about the type of information that people use to carry out a process. They also make proposals for the form in which that information is stored and the procedures by which it is used. The type, form, and use of information by psychological mechanisms are incorporated into psychological proposals about knowledge representation. This chapter aims to provide a broad introduction to knowledge representation (see Markman, 1999, for a more detailed discussion of these issues).

In this chapter, I start by defining what I mean by a representation. Then, I discuss some of the kinds of information that people have proposed to be central to people's mental representations. Next, I describe three proposals for the way that people's knowledge is structured. Finally, I explore some broader controversies within the field. For instance, I describe an antirepresentationalist argument that suggests that we can safely dispense with the notion of knowledge representation. I end with a call for a pluralist approach to representation.

Mental Representations

The modern notion of a mental representation emerged during the cognitive revolution of the 1950s, when the computational view of the mind ascended. The behaviorist approach to psychology that played a significant role in American psychology explicitly denied that the form and content of people's knowledge were legitimate objects of scientific study. Advances in the development of digital computers, however, provided a theoretical basis for thinking about how information could be stored and manipulated in a device.

On this computational view, minds are descriptions of the nature of the program that is implemented (in humans) by brains. Just as computers with very different hardware architectures could implement the same word-processing program (and thus make use of the same data structures and algorithms), different brains might compute the same sorts of functions and thus create representations (mental data structures) and processes (mental algorithms). Thus, proposals for knowledge representation are often stated at a level of description that abstracts across the details of what the brain is doing to implement that process yet nonetheless specifies in some detail how some functions are computed. Marr (1982) called this the algorithmic level of description. He called the abstract function being computed by an algorithm the computational level (see Griffiths, Tenenbaum, & Kemp, Chapter 3). What the brain is doing, which Marr (1982) called the implementational level of description, may provide some constraints on our understanding of these mental representations (see Morrison & Knowlton, Chapter 6; Green & Dunbar, Chapter 7), but not all of the details of an implementation are necessary to understand the way the mind works.
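The distinction between the computational and algorithmic levels can be illustrated with a small sketch. This is a hypothetical example, not one drawn from Marr: both functions below compute the same abstract function (the sum of the integers from 1 to n), which corresponds to the computational level, but they do so with different procedures, which corresponds to the algorithmic level. The function names are assumptions made for illustration.

```python
def sum_to_n_iterative(n: int) -> int:
    """One algorithm: accumulate the total one integer at a time."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


def sum_to_n_closed_form(n: int) -> int:
    """A different algorithm for the same function: use the formula n(n+1)/2."""
    return n * (n + 1) // 2


# Same computational-level description (same input-output mapping),
# different algorithmic-level descriptions (different steps).
agree = all(sum_to_n_iterative(n) == sum_to_n_closed_form(n) for n in range(100))
```

Either function could in turn run on very different hardware, which is the sense in which the implementational level is a further, separate description.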

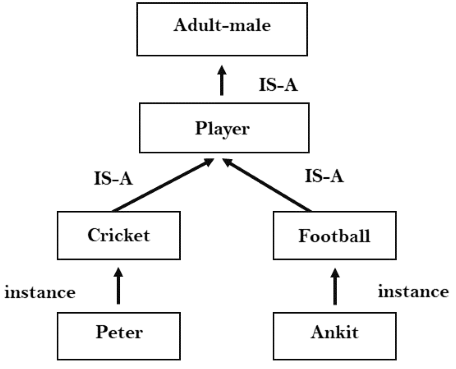

Figure 4.1. Example of the four key aspects of a definition of a representation. A representing world corresponds to a represented world through some set of representing relations. Processes must be specified that make use of the information in the representation.

Defining Mental Representation

To define the concept of representation, I draw from work by Palmer (1978) and Pylyshyn (1980). In order for something to qualify as a representation, four conditions have to hold. First, there has to be some representing world. The representing world is the domain serving as the representation. In theories of psychological processing, this representing world may be a collection of symbols or a multidimensional space. In Figure 4.1, mental symbols for the numbers 0–9 are used to represent numerical quantities.

There is also a represented world. The represented world is the domain or information that is to be represented. There is almost always more information in the world than can be captured by some representing world. Thus, the act of representing some world almost always leads to some loss of fidelity (Holland, Holyoak, Nisbett, & Thagard, 1986). In the example in Figure 4.1, representing the world of numbers with mental symbols will lose information, because the space of numbers is continuous, while this symbolic representation has discrete elements in it.

The third component of a representation is a set of representing relations that determines how the representing world stands in for the represented world. In Figure 4.1, these representing relations are shown as an arrow connecting the representing world to the represented world. There has to be some kind of relationship between the items that are used as the representation and the information being represented. These relations are what give the representation its meaning. I'll discuss this issue in more detail in the next section.

Finally, in order for something to function as a representation, there has to be some set of processes that use the information in the representation for some function. Without some processes that act on the representing world, the potential information in the representation is inert. In the case of the number representation in Figure 4.1, there need to be procedures that generate an ordering of the numbers and procedures that specify operations such as how to add or multiply or create other functions defined over numbers.

One reason why it is important to specify both the represented world and the processes is that it is tempting for readers to look at a representing world and have intuitions about the information that is captured in it. For example, you probably know a lot about numbers, and so you may bring that knowledge to bear when you see that the symbols in Figure 4.1 are associated with numbers. However, unless the system that is going to use this representation has procedures for manipulating these symbols, then the system that has this representing world does not have the same degree of knowledge that you do.
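The four components can be made concrete with a minimal sketch of the number example. Everything here is an assumption made for illustration: the names `SYMBOLS`, `MEANING`, `represent`, `precedes`, and `add` are hypothetical, and the nearest-symbol rule is just one simple way to show loss of fidelity.

```python
# Representing world: ten discrete symbols.
SYMBOLS = ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9"]

# Representing relations: the quantity each symbol stands for.
MEANING = {symbol: float(index) for index, symbol in enumerate(SYMBOLS)}


def represent(quantity: float) -> str:
    """Map a quantity from the (continuous) represented world to the closest symbol."""
    return min(SYMBOLS, key=lambda s: abs(MEANING[s] - quantity))


# Processes: without these, the symbols are inert marks.
def precedes(a: str, b: str) -> bool:
    """Ordering procedure: does symbol a stand for a smaller quantity than b?"""
    return MEANING[a] < MEANING[b]


def add(a: str, b: str) -> float:
    """Addition procedure defined over the quantities the symbols stand for."""
    return MEANING[a] + MEANING[b]


# Loss of fidelity: two distinct quantities collapse onto the same symbol.
collapsed = represent(3.2) == represent(2.9)
```

A system that held only `SYMBOLS`, with no `MEANING` table and no procedures such as `precedes` or `add`, would have the marks on the page but none of the knowledge about numbers that a human reader brings to them.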

The definition that I just gave does not distinguish between mental representations and other things that serve as representations. For example, I may take a digital photograph as a representation of a visual scene. The photograph represents color by having a variety of pixels bunched close together that take on the wavelength of light that struck a detector when the picture was taken. This lawful relationship is what allows this photo to serve as a representation of some scene. Furthermore, when you look at that photo, your visual and conceptual systems are able to interpret what is in the image, thereby serving as a process for extracting and using information from the representation.

In order for something to count as a mental representation, of course, the representing world needs to be inside the head. Humans use a variety of external representations, including photos, writing, and a variety of tools. The relationship between humans and the representations they put in their environment is interesting, and I discuss it a bit more at the end of this chapter.

Giving Meaning to Representations

In a computer program, data structures function to store the data that a program is going to manipulate in order to carry out some function. As I discussed earlier, a mental representation plays a role within cognitive systems that is analogous to the role of the data structure in a program. In order for this representation to serve effectively, though, there has to be some way to ensure that the representation captures the right information. We can think of the information that is captured by a particular representation as its meaning.

What are the sources of meaning in mental representations? Obviously, one source of meaning is the set of representing relations that relate the representing world to the represented world. These relations help to ground the representation. As a very simple example, consider a thermostat. One way to build a thermostat is to use a bimetal strip. The two metals that make up the strip expand and contract at different rates when exposed to heat. Thus, the curvature of the strip changes continuously with changes in temperature. In a typical thermostat, the bimetal strip is connected to a vial of conducting liquid (like mercury) that will close an electrical switch between two contacts when the right level of curvature is reached. Within this system, the lawful relationship between air temperature and the curvature of the strip provides a grounding for the representation of temperature.
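The thermostat can be sketched in a few lines. This is a toy model under stated assumptions: the linear relation between temperature and curvature and all three constants are invented for illustration, and the function names are hypothetical. The point is only that the switch never reads the temperature directly; it responds to the curvature, which is lawfully related to temperature.

```python
# Hypothetical constants chosen for illustration only.
CURVATURE_PER_DEGREE = 0.02  # assumed change in curvature per degree Celsius
FLAT_TEMP_C = 20.0           # assumed temperature at which the strip is flat
SWITCH_CURVATURE = -0.04     # assumed curvature at which the vial closes the switch


def strip_curvature(air_temp_c: float) -> float:
    """Representing relation: curvature varies lawfully with air temperature."""
    return CURVATURE_PER_DEGREE * (air_temp_c - FLAT_TEMP_C)


def switch_closed(air_temp_c: float) -> bool:
    """Process using the representation: it consults only the curvature."""
    return strip_curvature(air_temp_c) <= SWITCH_CURVATURE


cold_room = switch_closed(15.0)  # strip bends far enough to close the switch
warm_room = switch_closed(22.0)  # strip bends the other way; switch stays open
```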

Many mental representations that get (part of) their meaning through grounding are actually grounded in other representations. For example, research on vision focuses on how people detect the edges of objects in an image. Even these basic processes, however, are making use of other internal states. After all, light hits the retina at the back of the eye, and then the retina turns that light into electrical signals that are sent to the brain. From that point forward, all other computations are performed on information that is already internal to the cognitive system. In some cases, we can trace a chain of representations all the way back out to states in the world through the set of representing relations that link one representation to the next.

The ability to ground representations in actual perceptual states of the world, though, is complicated by the fact that people are able to represent things that are not present at the moment in the environment. That is, not only can I clearly see my dog in front of me when she is in the room, I can also imagine what my dog looks like when I am at work and she is at home. In this case, I may have some representations in my head that are grounded in others, but at some point, there are states of my cognitive system that are not lawfully related to any existing state of the world at that moment. For that matter, I can believe in all sorts of things like ghosts, unicorns, or the Tooth Fairy that do not exist and never have. Thus, there must be other sources of meaning in mental representations.

A proposal for dealing with the fact that not every concept can be defined solely in terms of a lawful representing relationship to another representation comes from philosophical work on conceptual role semantics (Fodor, 1981; Stich & Warfield, 1994). On this view, a mental representation gets its meaning as a result of the role that it plays within some broader representational system. This proposal acknowledges that the connections among representations may be crucial for their meaning. When I discuss semantic networks in the section on structured representations, we will see how the connections among representational elements influence meaning.

Obviously, not every mental representation in a cognitive system can derive its meaning from its connection to other representational elements (Searle, 1980). As an analogy, imagine that you had an English dictionary, but you didn't actually speak English. You could look up a word in the dictionary, and it would provide you the definition using other words. You could look up those words as well, but that would just give you additional definitions that use other words. Without having the sense of how some of the words relate to things that are not part of language (like knowing that "dog" refers to a certain class of four-legged creatures, or that "above" is a certain kind of relationship in the world), you would not really understand English. Likewise, at least some mental representations need to be grounded.
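The dictionary analogy can be sketched as a lookup over a toy vocabulary. The dictionary contents, the function names, and the idea of marking a word as grounded by placing it in a set are all hypothetical simplifications. The sketch shows that following definitions only ever yields more words, unless at least one word on the path is tied to something outside the dictionary.

```python
# Hypothetical toy dictionary: every definition consists only of other words.
DEFINITIONS = {
    "dog": ["animal", "pet"],
    "pet": ["animal", "home"],
    "animal": ["creature"],
    "creature": ["animal"],
    "home": ["place"],
    "place": ["home"],
}


def reachable_words(word, definitions):
    """Follow definitions starting from `word` and collect every word encountered."""
    seen = set()
    frontier = [word]
    while frontier:
        current = frontier.pop()
        if current in seen:
            continue
        seen.add(current)
        frontier.extend(definitions.get(current, []))
    return seen


def is_grounded(word, definitions, grounded):
    """True if lookup from `word` reaches a word tied to something outside language."""
    return any(w in grounded for w in reachable_words(word, definitions))


# With no grounded words, lookup just circulates among definitions.
ungrounded = is_grounded("dog", DEFINITIONS, grounded=set())
# Grounding even one word on the path gives the others an anchor.
anchored = is_grounded("dog", DEFINITIONS, grounded={"creature"})
```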

Types of Representations

There have been many different proposals for mental representations. In this section, I will catalog three key types of representations: mental spaces, featural representations, and structured representations. I discuss each of these representations in a separate section, but before that I want to discuss some general ways that proposals for representations differ from each other (see also Markman & Dietrich, 2000 ).

First, proposals for representations differ in the presence of discrete symbols. Some representations use continuous spaces. For example, an analog clock represents time using a circle. Every point on that circle has some meaning, though that meaning depends on whether the second hand, minute hand, or hour hand is pointing to that location. In contrast, a digital clock uses symbols to represent time. I will have more to say about symbols when discussing feature representations.

Second, representations differ in whether they include specific connections among the representational elements. Some representations (like the spatial and feature representations I discuss) involve collections of independent representational elements. In contrast, the semantic networks and structured representations discussed later specify relationships among the representational elements.

These two dimensions of difference are generally correlated with the type of representation. In addition, there are dimensions of difference that cross-cut the general proposals for types of representations. For example, representations differ in how enduring the states of the representation are intended to be. Some representations—particularly those that are involved in capturing basic visual information and states of the motor system—are meant to capture moment-by-moment changes in the environment. In contrast, others capture more enduring states.

A final dimension of difference is whether the representation is analog or symbolic. An analog representation is one in which the representing world has the same structure as the represented world. For example, I mentioned that watches represent time using a circle. These watches are analog representations, because both space and time are continuous. Increasing rotational distance in space is used to represent increasing differences in time up to the limit of the time span of the circle.

One advantage of an analog representation is that there are many aspects of the structure of the representing world that can be used to represent relationships in the represented world without having to define them explicitly. Let us return to the example of using angular distance to represent time, when distances are measured on an absolute scale. If one interval is represented by a 90-degree movement and a second is represented by a 180-degree movement, the first interval moves half the distance of the second. Without having to create any additional relations, we can also assume that the first time interval is half the length of the second.

It is rare to find a representing world that has structure that is similar enough to one in a represented world to warrant creating an analog representation. Thus, most representations are symbols: They have an arbitrary relationship between the representing world and the represented world. With symbolic representations, the representing world does not have any structure of its own, and so all of the relationships within the representing world have to be defined explicitly. For example, if we used numbers to represent time, then the representing world of symbols has to define all of the relationships among the symbols for the different numbers to capture relevant relations among numbers in the represented world. In particular, the ability to determine that one interval is half the length of another has to be defined into the system, rather than being a part of the structure of the representing world itself.
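The contrast between analog and symbolic representations can be made concrete with a small sketch. It assumes, purely for illustration, that angles on a dial stand in for the analog case and that a hypothetical table of arbitrary tokens (`SYMBOL_TO_HOURS`) stands in for the symbolic case; neither the function names nor the tokens come from the literature discussed here.

```python
def analog_ratio(angle_a, angle_b):
    """Compare two intervals directly from the angles that represent them.

    No extra definitions are needed: the ratio of the angles is already
    the ratio of the represented intervals.
    """
    return angle_a / angle_b


# Hypothetical symbol table: the tokens carry no structure of their own,
# so their relationship to time has to be written down explicitly.
SYMBOL_TO_HOURS = {"III": 3, "VI": 6}


def symbolic_ratio(symbol_a, symbol_b, table=SYMBOL_TO_HOURS):
    """Compare two intervals by looking up explicitly defined values."""
    return table[symbol_a] / table[symbol_b]


print(analog_ratio(90, 180))        # 0.5
print(symbolic_ratio("III", "VI"))  # 0.5
```

The analog comparison works for any pair of angles, whereas the symbolic comparison fails for any token that has not been defined in the table, which is the sense in which the relationships have to be built into a symbolic system.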

Finally, an important reason why there are so many different proposals for kinds of knowledge representations is that any choice of a representation makes some processes easy to perform and others difficult (see Doumas & Hummel, Chapter 5). There is good reason to believe that the cognitive system uses many different kinds of representations in order to provide systems that are optimized for particular tasks that must be carried out (Dale, Dietrich, & Chemero, 2009; Dove, 2009). Thus, when evaluating proposals about representations, it is probably best to think about what kinds of representations are best suited to a particular process rather than trying to find a way to account for all of cognition with a particular narrow set of representational assumptions. I return to this point at the end of the chapter.

Spatial Representations

Defining spaces.

The first type of representation on our tour uses space as the representing world (Gärdenfors, 2000 ). It might seem strange to think about a mental representation involving space. We clearly use physical spaces to help us represent things all the time. For example, a map creates a two-dimensional layout that we then look at to get information about the spatial relationships in the world. And I have already discussed analog clocks in which angular distance in space is used to represent time.

Space in the outside world has three dimensions. That means that you can put at most three independent (or orthogonal) lines into space. Once you have those three lines set up in your space, you can create an address for every object in the space using the distance along each of those three dimensions. Mathematically, though, a space can have any number of dimensions. In these high-dimensional spaces, each point has a coordinate for each dimension that determines its location, just as in the familiar three-dimensional space. Objects can then be represented by points or perhaps regions in space.

Once you have located points in space, it is straightforward to measure the distance between them using the formula

$$d(x, y) = \left( \sum_{i=1}^{N} \lvert x_i - y_i \rvert^{r} \right)^{1/r}$$

where $d(x, y)$ is the distance between points $x$ and $y$ (each with a coordinate for each dimension $i$, $x_i$ and $y_i$), $N$ is the number of dimensions, and $r$ is the parameter of the distance metric, sometimes called the Minkowski metric. When measuring distance in a space, the familiar Euclidean straight-line distance sets $r = 2$. When $r = 1$, then distance is measured using a “city block” metric in which the distance corresponds to the summed distances along the axes of the space.
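The distance calculation just described can be sketched in a few lines. The function name `minkowski_distance` and the sample points are my own choices for illustration; the computation itself is the standard Minkowski distance with parameter r.

```python
def minkowski_distance(x, y, r=2.0):
    """Distance between two points given as equal-length coordinate sequences.

    r = 2 gives Euclidean distance; r = 1 gives the city block metric.
    """
    if len(x) != len(y):
        raise ValueError("points must have the same number of dimensions")
    if r < 1:
        raise ValueError("r must be at least 1 for a proper distance metric")
    return sum(abs(xi - yi) ** r for xi, yi in zip(x, y)) ** (1.0 / r)


a = (0.0, 0.0)
b = (3.0, 4.0)
print(minkowski_distance(a, b, r=2))  # Euclidean: 5.0
print(minkowski_distance(a, b, r=1))  # city block: 7.0
```

The same function works unchanged for points with hundreds of coordinates, which is part of why distance is such a convenient operation on spatial representations.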

It is easy to calculate distance within a space, and so the distance between points becomes an important element in spatial representations. For example, Rips, Shoben, and Smith (1973) tried to map people's conceptual spaces for simple concepts like birds and animals (see Rips et al., Chapter 11). An example of these spaces is shown in Figure 4.2. The idea here is that pairs of similar birds (like robin and sparrow) are near in space, while pairs of dissimilar birds (like robin and goose) are far away in space. A cognitive process that uses a spatial representation can calculate the distance between concepts when it needs information about the similarity of the items. For example, Rips et al. found that the amount of time that it took to verify sentences like “A robin is a bird” increased with the distance between the points representing the concepts in the space. Consistent with this model, people are faster to verify that the sentence “A robin is a bird” is true than to verify that the sentence “A duck is a bird” is true.

One reason why mental space models of mental representation are appealing is that there are mathematical methods for generating spaces from data about the closeness of items in that space. One technique, called multidimensional scaling (MDS), is the one that Rips et al. used in their study (Shepard, 1962 ; Torgerson, 1965 ). Multidimensional scaling places points in a space based on information about the distances among those points. For example, if you were to give an MDS algorithm the distances among 15 European cities, you would get back a map of the locations of those cities in two dimensions.

Figure 4.2. A sample semantic space of a set of concepts representing various birds (taken from Rips, Shoben, & Smith, 1973).

This same technique can be used for mental distances. For example, Rips et al. had people rate the similarities among the pairs of concepts in Figure 4.2. Similarity ratings can be interpreted as a measure of mental closeness (see Goldstone & Son, Chapter 10). These similarity ratings can be fed into an MDS algorithm. The space that results from this algorithm is the one that best approximates the mental distances it was given. One difficulty with creating spaces like this is that they require a lot of effort from research participants. If there are X items in the space, then there are

$$\frac{X(X - 1)}{2}$$

distances among those points. That requires a lot of ratings from people, and so in practice it becomes difficult to generate spaces with more than 15 or 20 items in them.
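To see how quickly the rating burden grows, note that a set of X items has X(X − 1)/2 distinct pairs. The short sketch below checks that count against an explicit enumeration of pairs; the helper name `pair_count` and the four-bird list are illustrative choices of mine.

```python
from itertools import combinations


def pair_count(n_items):
    """Number of distinct unordered pairs among n_items items."""
    return n_items * (n_items - 1) // 2


birds = ["robin", "sparrow", "goose", "duck"]
pairs = list(combinations(birds, 2))  # every pair a participant would rate
print(len(pairs), pair_count(len(birds)))  # 6 6

for n in (5, 15, 20, 100):
    print(n, pair_count(n))  # 10, 105, 190, 4950 ratings respectively
```

A space with 20 items already requires 190 similarity ratings from each participant, and a space with 100 items would require 4,950.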

Other techniques have been developed that create very high-dimensional spaces among larger sets of items. A powerful set of techniques uses the co-occurrence relationships among words to develop high-dimensional semantic spaces from corpora of text (Burgess & Lund, 2000 ; Landauer & Dumais, 1997 ). These techniques take advantage of the fact that words with similar meanings often appear along with the same kinds of words in sentences. For example, in the sentence “The X jumped up the stairs” the words that can play the role of X are all generally animate beings.

These techniques can process millions of words from on-line databases of sentences from newspapers, magazines, blog entries, and Web sites. As a result, these techniques can create spaces with hundreds or thousands of dimensions that represent the relationships among thousands of words. The interested reader is invited to explore the papers cited in the previous paragraph for details about how these systems operate. For the present purposes, what is important is that after the space is generated, the high-dimensional distances among these concepts in the space are used to represent differences in meanings among the concepts.
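The core intuition behind these corpus-based methods can be illustrated with a toy sketch. It is not an implementation of any of the cited systems: it simply counts which words occur near each word in a four-sentence corpus that I made up, and then compares the resulting count vectors with cosine similarity. The cited methods work on vastly larger corpora and add steps, such as weighting and dimensionality reduction, that are omitted here.

```python
from collections import Counter, defaultdict
from math import sqrt


def cooccurrence_vectors(sentences, window=2):
    """Map each word to counts of the words appearing within `window` positions."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            lo = max(0, i - window)
            hi = min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = sqrt(sum(c * c for c in u.values()))
    norm_v = sqrt(sum(c * c for c in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Toy corpus (an assumption for illustration, not real data).
corpus = [
    "the dog jumped up the stairs",
    "the cat jumped up the stairs",
    "the dog chased the cat",
    "the stairs led to the attic",
]
vecs = cooccurrence_vectors(corpus)
print(cosine(vecs["dog"], vecs["cat"]))    # relatively high
print(cosine(vecs["dog"], vecs["attic"]))  # lower
```

Because dog and cat appear with the same kinds of neighboring words, their vectors point in nearly the same direction, whereas dog and attic share little context.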

Strengths and Weaknesses of Spatial Models

There are two key strengths of spatial models of representation. On the practical side, the availability of techniques like MDS and methods for generating high-dimensional semantic spaces from text provides modelers with a way of creating representations from the data obtained from subjects in studies. For the other types of representations described in this chapter, it is often more difficult to determine the information that ought to end up in the representations generated as parts of explanations of particular cognitive processes.

A second strength of spatial representations is that the mental operations that can be performed on a space are typically quite efficient. For example, there are very simple algorithms for calculating distance among points in a space, and so the processes that operate on spatial representations are quite easy to carry out. Thus, spatial representations are often used when comparisons have to be made among a large number of items.

Despite these strengths, there are also limitations of spatial representations. The core limitation is that the calculation of distance among points provides only a measure of the degree of proximity among points. It is also possible to generate vectors that measure a distance and direction between points. However, the underlying dimensions of a semantic space have no obvious meaning. A modeler looking at the representation may ascribe some meaning to that distance and direction, but the system itself has access only to the vector or distance.

People are also able to focus on specific commonalities and differences among pairs of items, and these properties influence people's assessments of similarity. For example, Tversky and his colleagues (Tversky, 1977 ; Tversky & Gati, 1982 ) explored the commonalities and differences that affect judgments of similarity. As an example of the kinds of items he used, I'll paraphrase a discussion by William James ( 1892 / 1985 ). When people compare the moon and a ball, they find a moderate degree of similarity, because both are round. When people compare the moon and a lamp, they find a moderate degree of similarity, because both are bright. However, when people compare a ball and a lamp, they find no similarity at all, because they don't share any properties. As Tversky ( 1977 ) pointed out, this pattern of similarities is incompatible with a space, because if two pairs of items in a real space are fairly close to each other, then the third pair of points must also be reasonably close. In the mathematical definition of a space, this aspect is called the triangle inequality . The observed pattern of similarity judgments by people reflects that they give a lot of weight to specific commonalities among pairs, though different pairs may focus on different commonalities. Thus, human similarity judgments frequently violate the triangle inequality.
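The incompatibility with a space can be checked mechanically. In the sketch below, the dissimilarity values for the moon, ball, and lamp are hypothetical numbers on a 0 to 1 scale that I chose to mirror the verbal description (moderate similarity for two pairs, none for the third); they are not data from any study. The function looks for triples in which the direct distance between two items exceeds the distance of a detour through a third item.

```python
from itertools import permutations


def triangle_violations(dissimilarity, items):
    """Return (a, b, c) triples where d(a, c) > d(a, b) + d(b, c)."""
    violations = []
    for a, b, c in permutations(items, 3):
        direct = dissimilarity[frozenset((a, c))]
        detour = dissimilarity[frozenset((a, b))] + dissimilarity[frozenset((b, c))]
        if direct > detour + 1e-12:
            violations.append((a, b, c))
    return violations


# Hypothetical dissimilarities (1.0 means "no similarity at all").
judged = {
    frozenset(("moon", "ball")): 0.4,  # both round
    frozenset(("moon", "lamp")): 0.4,  # both bright
    frozenset(("ball", "lamp")): 1.0,  # nothing in common
}
print(triangle_violations(judged, ["moon", "ball", "lamp"]))
```

With these values, the ball and the lamp are farther apart than the route from the ball to the moon to the lamp, so no arrangement of three points in a metric space can reproduce the judgments.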

One response to patterns of data that seem inconsistent with spatial representations is to add mechanisms that allow spaces or distances to be modified in response to context (Krumhansl, 1978 ; Nosofsky, 1986 ). However, these mechanisms tend to complicate the determination of distance. Because the simplicity of the computations in a space was one of the key strengths of spatial representations, many other theories have opted to use representations with explicit symbols in them to capture cognitive processes that involve a specific focus on particular representation elements. We turn to representations that consist of specific features in the next section.

Feature Representations

The reason why it is difficult to focus on particular commonalities and differences or particular properties in a spatial representation is that spaces are continuous. The core aspect of a feature representation is that it has discrete elements that make up the representation. These discrete elements allow processes to fix reference to particular items within the representation (Dietrich & Markman, 2003 ).

Typically, feature representations assume that some mental concept is represented by a collection or set of features, each of which corresponds to some property of the items. For example, early models of speech perception assumed that a set of features could be used to distinguish among the various phonemes that make up the sounds of a language (Jakobson, Fant, & Halle, 1963). On this view, the sound /b/ as in bog and the sound /d/ as in dog differ by the presence of a feature that marks where in the mouth the speech sound is produced. Linguists identified the particular features that distinguished phonemes by finding pairs of speech sounds that were as similar as possible except for particular features that led them to be distinguished. To return to the example of /b/ and /d/, these phonemes are similar in other properties like engaging the vocal cords (which would distinguish /b/ from /p/).

When this proposal for phoneme representation was being evaluated, a key aspect of the research program for understanding speech perception involved finding some mapping between the features of speech sounds and the speech signal itself (Blumstein & Stevens, 1981). On this view, the speech perception system would identify the features in a particular phoneme from aspects of the audio signal. A particular phoneme would be recognized when the collection of features associated with it had been identified. While this process is straightforward, a weakness of this particular approach to speech perception is that it has proven difficult to isolate particular aspects of the speech signal that reliably indicate particular phonetic features.

Featural models have also been used prominently to study similarity (see Goldstone & Son, Chapter 10). In particular, Tversky's (1977) contrast model assumed that objects could be represented as sets of features. Comparing a pair of representations then required elementary set operations. The intersection of the feature sets of a pair was the commonalities of that pair, while the set differences were the differences of the pair. Tversky proposed that people's judgments of similarity should increase with the size of the set of common features and decrease with the size of the sets of distinctive features. He provided support for this model by having people describe various objects by listing their features. He found that people's judgments of the similarity of various pairs were positively related to the number of features that the lists had in common and negatively related to the number of features that were unique to one of the lists.
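The set operations involved can be sketched directly. The function below follows the general form of the contrast model, in which similarity rises with the common features and falls with each set of distinctive features; as simplifying assumptions of mine, salience is measured by counting features, the weights `theta`, `alpha`, and `beta` are arbitrary, and the feature lists are hypothetical rather than taken from participants.

```python
def contrast_similarity(a, b, theta=1.0, alpha=0.5, beta=0.5):
    """Contrast-model similarity of feature sets a and b.

    theta weights the common features; alpha and beta weight the features
    unique to a and to b. Feature salience is approximated by set size.
    """
    common = a & b          # commonalities: set intersection
    only_a = a - b          # differences: features of a missing from b
    only_b = b - a          # differences: features of b missing from a
    return theta * len(common) - alpha * len(only_a) - beta * len(only_b)


# Hypothetical feature lists for illustration only.
moon = {"round", "bright"}
ball = {"round", "bounces"}
lamp = {"bright", "electric"}

print(contrast_similarity(moon, ball))  # 0.0  (one shared feature)
print(contrast_similarity(moon, lamp))  # 0.0  (one shared feature)
print(contrast_similarity(ball, lamp))  # -2.0 (no shared features)
```

Because each pair is compared on its own commonalities, nothing forces the ball and the lamp to be similar just because each shares a feature with the moon.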

Some proposals for feature representations augment the features with other information. For example, it is common to include information about the importance of particular features to a category in a representation. In a classic paper, Smith, Shoben, and Rips (1974) argued that people distinguish between core features of items and characteristic properties. For example, having feathers is a core feature of birds, while singing is typical of birds but is not central for something to be a bird.

They argued that when people classify an object, they look first at all of the properties of the object. If they are uncertain of the category that something belongs to based on its overall similarity, then they focus just on the core properties. That is why people have difficulty classifying objects like dolphins. Dolphins have many features that are characteristic of fish, but they also have features of mammals. Only when people focus on the core features of fish and mammals is it possible to classify a dolphin correctly as a mammal.

Some featural models also include information about the degree of belief in the feature. That is, there are some properties that someone may be certain are true of an object but others for which it is less clear. Some models have proposed that there are certainty factors that allow a system to keep track of the degree of belief in a particular property (see, e.g., Lenat & Guha, 1990 ; Shafer, 1996 ).

While people clearly need information about the degree of belief in a property or the likelihood that the information is true, it is not clear that some kind of marking on features is the best way to handle this kind of information. Often, we want to know more than just how strongly we believe something or how central it is to a category. We want to know why a particular fact is central or what it is that causes us to believe it. To support this kind of reasoning, it is useful to have representations that contain explicit connections among the representational elements. The following section discusses types of representations that capture relationships among representational elements.

Structured Representations

Feature representations do a good job of representing the properties of items but a poor job at representing relationships. Consider a variety of relationships that may exist in the world. Poodles are a kind of dog. John is taller than Mary. Sally outperformed Jack. These kinds of relationships are more than just properties of some item. The way that items are connected (or bound ) to the relationship also matters. Saying that poodles are a kind of dog is true, but saying that dogs are a type of poodle is not.

To capture these relational bindings, structured representations contain mechanisms for creating representations that take arguments that specify the scope of the representation. For example, we can use the notation kind-of(?x,?y) to denote that some item ?x is a kind of ?y. I precede the letter x with a question mark here to denote that it is a variable that can be filled in with some value. Thus, to specify a particular relation, we fill in values for the variables.

Representations of this type are often called predicates . Once the values are specified, the representation states a proposition , and in a logical system all propositions can be evaluated as true or false, though most proposals for using predicate representations in psychological models do not evaluate the logical form of these predicate structures.

The example kind-of(?x,?y) is a binary predicate, because it takes two arguments. A predicate that takes one argument, like red(?x), is often called an attribute, because it is typically used to describe the properties or attributes of objects. This type of predicate is particularly useful in situations in which there are multiple items in a scene, and it is necessary to bound the scope of the representation.
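A minimal sketch can show how predicates with variables differ from bare features. The `Predicate` type and the `bind` and `is_proposition` helpers are names I have introduced for illustration; they are not drawn from any particular model.

```python
from typing import Dict, NamedTuple, Tuple


class Predicate(NamedTuple):
    name: str
    args: Tuple[str, ...]  # arguments starting with "?" are unfilled variables


def bind(template: Predicate, bindings: Dict[str, str]) -> Predicate:
    """Fill in variables of a predicate, leaving other arguments unchanged."""
    return Predicate(template.name, tuple(bindings.get(a, a) for a in template.args))


def is_proposition(predicate: Predicate) -> bool:
    """A predicate states a proposition once no variables remain."""
    return not any(a.startswith("?") for a in predicate.args)


kind_of = Predicate("kind-of", ("?x", "?y"))  # binary predicate
red = Predicate("red", ("?x",))               # attribute (one argument)

poodle_dog = bind(kind_of, {"?x": "poodle", "?y": "dog"})
dog_poodle = bind(kind_of, {"?x": "dog", "?y": "poodle"})

print(is_proposition(kind_of), is_proposition(poodle_dog))  # False True
print(poodle_dog == dog_poodle)                             # False
```

The two bound predicates contain exactly the same elements, yet they are different propositions, because the order in which the arguments are bound matters.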

For example, consider the simple scenes at the top of Figure 4.3 . One depicts a circle on top of a square and the other shows a square on top of a circle. In the left-hand scene, one figure is shaded and another is striped. If we just had a collection of features (as shown in the middle of this figure), then it wouldn't be clear which object was striped and which one was shaded. Indeed, the same collection of features could be used to describe both the left- and right-hand scenes, even though they are clearly different.

Because attributes take arguments, though, it is possible to determine the scope of each representational element. The bottom section of Figure 4.3 shows a structured representation of the same pair of scenes drawn as a graph. The relation above (?x,?y) is presented as an oval with lines connecting the relation to its arguments. Likewise, the rounded rectangles are attributes that are connected to the objects they describe. Using this type of representation, it is possible to specify that it is the circle that is striped in the left-hand scene.

Figure 4.3. A simple pair of geometric scenes. The middle panel shows that the same set of features can represent each scene. At the bottom, the explicit connections between representations and their arguments allow the scope of each representational element to be defined.
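The difference between the featural and structured descriptions of these scenes can be sketched as follows. The text specifies that the circle is on top and striped in the left-hand scene; the assignment of striped and shaded in the right-hand scene is an assumption of mine, as are the tuple encoding and the helper name `features_of`.

```python
def features_of(structured_scene):
    """Flatten a structured scene into an unordered set of feature labels."""
    features = set()
    for fact in structured_scene:
        features.update(fact)  # relation name and its arguments all become features
    return features


# Each fact is (relation_or_attribute, argument, ...).
left_scene = {
    ("above", "circle", "square"),
    ("striped", "circle"),
    ("shaded", "square"),
}
right_scene = {
    ("above", "square", "circle"),
    ("striped", "circle"),  # assumption: the text does not say which object is striped here
    ("shaded", "square"),
}

print(features_of(left_scene) == features_of(right_scene))  # True: features cannot tell them apart
print(left_scene == right_scene)                            # False: the bindings differ
```

Once the arguments are discarded, both scenes reduce to the same five labels, which is exactly the ambiguity that the structured representation removes.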

The increase in expressive power that structured representations provide requires that there be processes that are sensitive to this structure. These structure-sensitive processes often require more computational effort than the processes that were specified for spatial and featural representations. In the next two sections, I discuss two different approaches to structured representations that use different processing assumptions to access the connections among representational elements.

Semantic Networks

An early use of structured representations in psychology was semantic networks (Collins & Loftus, 1975 ; Collins & Quillian, 1972 ; Quillian, 1968 ). In a semantic network, objects are represented by nodes in the network. These nodes are connected by links that represent the relations among concepts. The links are directed so that the first argument of a relation points to the second. For example, Figure 4.4 shows a part of a simple semantic network with nodes relating to the concepts vampire and hematologist. This network has a variety of relations in it such as drinks (vampire,blood) and studies (hematologist,blood).

One use of semantic networks was to make simple inferences (Collins & Quillian, 1972). One way to make inferences in a semantic network is to use marker passing. In this process, you seek a relationship between a pair of concepts by placing a marker at each of the concepts. The markers are labeled with the concept where they originated. At each step of the process, markers are placed on each node that can be reached from a link that points outward from a node that has a marker on it. When markers from each concept are placed at the same node, then the path is traced back to the original nodes, and that specifies the relationship between the concepts.

For example, in the network shown in Figure 4.4 , if I wanted to know the relationship between a vampire and a hematologist, I would start by placing markers at the vampire and hematologist nodes. At the first time step, a marker from vampire would be placed on the monster, cape, and blood nodes. A marker from hematologist would be placed at the doctor, lab coat, water, and blood nodes. The presence of markers from each of the starting concepts at the blood node would lead to the conclusion that vampires drink blood, while hematologists study blood. The amount of time that it takes to make the inference depends on the number of time steps it takes to find an intersection between paths emerging from each concept.
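The marker passing process in this example can be sketched as a search that expands outward from both concepts one time step at a time. The relations drinks(vampire, blood) and studies(hematologist, blood) come from the text; the labels on the other links (is-a, wears, and the hematologist's link to water) are guesses of mine, since only the node names are given. The function name and data layout are also illustrative.

```python
def marker_passing(network, start_a, start_b, max_steps=10):
    """Pass markers outward along directed links until both origins reach one node.

    Returns (steps, meeting_node, path_from_a, path_from_b), or None if the
    markers never meet. Each path is a list of (source, relation, target) links.
    """
    if start_a == start_b:
        return 0, start_a, [], []
    # paths[origin][node] records how the marker from `origin` reached `node`.
    paths = {start_a: {start_a: []}, start_b: {start_b: []}}
    frontier = {start_a: [start_a], start_b: [start_b]}
    for step in range(1, max_steps + 1):
        for origin in (start_a, start_b):
            next_frontier = []
            for node in frontier[origin]:
                for relation, target in network.get(node, []):
                    if target not in paths[origin]:
                        paths[origin][target] = paths[origin][node] + [(node, relation, target)]
                        next_frontier.append(target)
            frontier[origin] = next_frontier
        shared = [n for n in paths[start_a] if n in paths[start_b]]
        if shared:
            meet = shared[0]
            return step, meet, paths[start_a][meet], paths[start_b][meet]
        if not frontier[start_a] and not frontier[start_b]:
            break
    return None


# Directed links: node -> list of (relation, target). Most labels are hypothetical.
network = {
    "vampire": [("is-a", "monster"), ("wears", "cape"), ("drinks", "blood")],
    "hematologist": [("is-a", "doctor"), ("wears", "lab coat"),
                     ("drinks", "water"), ("studies", "blood")],
}
print(marker_passing(network, "vampire", "hematologist"))
```

Here the markers meet at the blood node after a single time step, and the traced paths recover the two relations that connect the concepts.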

A second process often used in semantic networks is spreading activation (e.g., Anderson, 1983 ; Collins & Loftus, 1975 ). Spreading activation theories assume that there are semantic networks consisting of nodes and links, though they do not require the links to be directed. Unlike the marker passing process, activation can spread in both directions along a link.

Each node has some level of activation that determines how accessible that concept is in memory. When a concept appears in the world or in a discourse or in a sentence, then the node for that concept temporarily gets a boost in its activation. That activation then spreads across the links and activates neighboring concepts. Models of this sort have been used to explain priming effects in which processing of one concept speeds processing of related concepts. For example, a classic finding in the priming literature is that seeing a word (e.g., doctor ) speeds the identification of semantically related words like nurse (Meyer & Schvaneveldt, 1971 ).

Figure 4.4. A simple semantic network showing concepts relating to vampires and hematologists (drawn after Markman, 1999).

Spreading activation theories have been augmented with a number of mechanisms to help them account for more subtle experimental results. For example, links in a network may vary in their strength, which is consistent with the idea that concepts differ in their strength of association. One interesting addition to spreading activation models is the concept of fan (Anderson, 1983 ). The fan of a node in a network is the number of links that leave it, which differs for each node. In some models, the total amount of activation that is spread from one node to others is divided by the number of links, so that nodes with a low fan provide more activation to neighboring nodes than do nodes with high fan. This mechanism captures the regularity that when a node has low fan, then the presence of one concept strongly predicts the presence of the small number of other concepts to which it is connected. In contrast, when a node has a high fan, the presence of one concept doesn't predict the presence of another concept all that strongly.
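The effect of fan can be illustrated with a single step of spreading activation. This is a deliberately simplified sketch under my own assumptions: links are undirected and equally strong, a fixed fraction of a node's activation (`spread_fraction`) is divided evenly among its neighbors, and the source node keeps its own activation. The small network, apart from the doctor and nurse pair, is hypothetical.

```python
from collections import defaultdict


def build_links(pairs):
    """Build an undirected adjacency map from (node, node) pairs."""
    links = defaultdict(set)
    for a, b in pairs:
        links[a].add(b)
        links[b].add(a)
    return links


def spread_once(links, activation, spread_fraction=0.5):
    """Spread activation one step, dividing each node's output by its fan."""
    updated = dict(activation)
    for node, level in activation.items():
        neighbors = links.get(node, ())
        if not neighbors or level <= 0:
            continue
        share = spread_fraction * level / len(neighbors)  # fan = number of links
        for neighbor in neighbors:
            updated[neighbor] = updated.get(neighbor, 0.0) + share
    return updated


links = build_links([
    ("doctor", "nurse"),                        # doctor has a fan of 1
    ("blood", "vampire"), ("blood", "hematologist"),
    ("blood", "red"), ("blood", "vein"),        # blood has a fan of 4
])
low_fan = spread_once(links, {"doctor": 1.0})
high_fan = spread_once(links, {"blood": 1.0})
print(low_fan["nurse"])     # 0.5
print(high_fan["vampire"])  # 0.125
```

The low-fan node passes four times as much activation to its single neighbor as the high-fan node passes to any one of its four neighbors.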

Semantic network models have been used primarily as models of the relationships among concepts in memory. These processing mechanisms are also shared with interactive activation models that have been used to account for a variety of psychological phenomena (see, e.g., McClelland & Rumelhart, 1981 ; Read & Marcus-Newhall, 1993 ; Thagard, 1989 , 2000 ). There is much more that can be done with the relational structure in representations than just passing markers or activation among nodes. I discuss some additional aspects of structured representations in the next section.

Structured Relational Representations

A variety of aspects of psychological functioning seem to rely on people's ability to represent and reason with relations. For example, language use seems to depend crucially on the ability to use relations. Verbs bind together the actors and objects that specify actions (e.g., Gentner, 1975 , 1978 ; Talmy, 1975 ). Prepositions allow people to talk about spatial relationships (e.g., Landau & Jackendoff, 1993 ; Regier, 1996 ; Talmy, 1983 ). For both verbs and prepositions, the objects that they relate can be specified in sentences that essentially fill in the arguments to these relations.

Related to our ability to talk about complex relations is the ability to reason about the causal structure of the world. Obviously, many verbs focus on how events in the world are caused and prevented (Wolff, 2007 ; Wolff & Song, 2003 ). These verbs reflect that people are adept at understanding why events occur. For example, Schank and colleagues (Schank, 1982 ; Schank & Abelson, 1977 ) proposed that people form scripts and schemas to represent complex events like going to a restaurant or going to a doctor's office. These knowledge structures contain relationships among the components of an event that suggest the order that things typically happen. They also have causal relations among the events that explain why they are performed (see Buehner & Cheng, Chapter 12 ).

These causal relations are particularly important for helping people to reason about situations that do not go as expected. For example, when visiting a restaurant, the waiter typically brings you a menu. Thus, you expect to get a menu when you are seated. You also know that you get a menu, because it is necessary to know what the restaurant serves so that you can order food. If you do not get a menu, then you might start to look around for other sources of information about what the restaurant serves like a board posted on a wall.

This range of relations creates an additional burden, because processes have to be developed to make use of this structure. To provide an example of both the great power of these processes as well as their computational burden, I describe work on analogical reasoning. The study of analogical reasoning is a great cognitive science success story, because there is widespread agreement on the general principles underlying analogy, even if there is disagreement about some of the fine details of how analogy is accomplished (Falkenhainer, Forbus, & Gentner, 1989 ; Gentner, 1983 ; Holyoak & Thagard, 1989 ; Hummel & Holyoak, 2003 ; Keane, 1990 ; see Holyoak, Chapter 13 ).

Analogies involve people's ability to find similarities between domains that are not similar on the surface. To find these nonliteral similarities, people seek commonalities in the relations in two domains. For example, a classic example of an analogy is the comparison that the atom is like the solar system. This analogy came out of the Rutherford Model of the atom, which was prominent in the early 20th century. The domain that people know most about is typically called the base or source (in this case, the solar system), while the domain that is less understood is called the target (in this case, the atom). Atoms are not like solar systems because of the way they look. Atoms are small, and solar systems are large. The nucleus of an atom is not hot like the sun. There are no electrons that support life like planets do. What is similar between these domains is that the electrons of the atom revolve around the nucleus in the same way that the planets revolve around the sun.

Theories of analogy assume that people represent information with structured relational representations. So we could think of the atom as being represented with some simple relations like

revolve-around(electron, nucleus) and greater(mass(nucleus), mass(electron))

which reflect that the electron revolves around the nucleus and that the mass of the nucleus is greater than the mass of the electron. Then, the solar system could be represented by a more elaborate relational system like

cause(greater(mass(sun), mass(planet)), revolve-around(planet, sun))

These representations might also have a lot more descriptive information about the attributes of the nucleus, electrons, sun, and planets.

The process of analogical mapping seeks parallel relational structures. It does so by first matching up relations that are similar. So the revolve-around(?x,?y) relation in the representation of the solar system would be matched to the revolve-around(?x,?y) relation in the representation of the atom. Once relations are matched, the arguments of those relations are also placed in correspondence. This constraint on analogy is called parallel connectivity (Gentner, 1983), and it is incorporated into many models of analogical reasoning (Falkenhainer et al., 1989; Holyoak & Thagard, 1989; Hummel & Holyoak, 1997; Keane, 1990). So the electron in the atom and the planet in the solar system are matched, because both revolve around something (i.e., they are the first argument in the matched revolve-around(?x,?y) relation). Similarly, the nucleus of the atom and the sun in the solar system are matched because both are being revolved around. As many matching relations between domains are found as possible, provided that each object in one domain is matched to at most one object in the other domain (the one-to-one mapping constraint; Gentner, 1983).

Analogical mapping processes also allow one domain to be extended based on the comparison to another. In this process of analogical inference, relations from the base that are consistent with the correspondence between the base and target can be carried over to the target. For example, in the simple representations of the atom and the solar system shown earlier, the match between the domains licenses the inference that the electron revolves around the nucleus because the nucleus is more massive than the electron. This example also demonstrates that inferences drawn from analogies may be plausible, but they need not be true.
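Analogical inference can be sketched as substitution. The example below assumes a hypothetical tuple encoding (relation name first, then arguments; objects as strings) and takes the object correspondences as given. The helper names `substitute` and `candidate_inferences` are invented for this illustration. Each base fact is rewritten in terms of target objects, and any rewritten fact that the target does not already contain is proposed as a candidate inference.

```python
def substitute(expr, mapping):
    """Rewrite a base expression using target objects; None if an object is unmapped."""
    if isinstance(expr, str):
        return mapping.get(expr)
    args = [substitute(arg, mapping) for arg in expr[1:]]
    if any(arg is None for arg in args):
        return None  # some object has no correspondence, so nothing is carried over
    return (expr[0], *args)


def candidate_inferences(base_facts, target_facts, mapping):
    """Project base facts into the target and keep those the target lacks."""
    inferences = []
    for fact in base_facts:
        projected = substitute(fact, mapping)
        if projected is not None and projected not in target_facts:
            inferences.append(projected)
    return inferences


solar_system = [("cause",
                 ("greater", ("mass", "sun"), ("mass", "planet")),
                 ("revolve-around", "planet", "sun"))]
atom = [("revolve-around", "electron", "nucleus"),
        ("greater", ("mass", "nucleus"), ("mass", "electron"))]
mapping = {"sun": "nucleus", "planet": "electron"}  # assumed result of mapping

inferred = candidate_inferences(solar_system, atom, mapping)
```

The single candidate produced here is the causal claim about the atom described above. Nothing in the procedure checks whether that claim is true, which is why such inferences are only plausible.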

One final point to make about analogy is that the structure in representations helps to define which information in a comparison is salient. In particular, some correspondences between domains may match up a series of independent relations that happen to be similar across domains. However, some relations take other relations as arguments. For example, the cause (?x,?y) relation in the solar system representation takes two other relations as arguments. Analogies can be based on similarities among entire systems of relations, some of which have embedded relations as arguments. Gentner's ( 1983 ) systematicity principle suggests that mappings that capture similarities in relational systems are considered to be particularly good analogies (Clement & Gentner, 1991 ).
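One rough way to make the idea of embedded relations concrete is to measure how deeply a relation is nested. The sketch below assumes a hypothetical tuple encoding (relation name first, then arguments; objects as strings). The functions `nesting_depth` and `deepest_first` are invented for this illustration, and ranking matches by depth is only a crude stand-in for systematicity, not the scoring used in any of the cited models.

```python
def nesting_depth(expr):
    """0 for an object; otherwise 1 plus the depth of the deepest argument."""
    if isinstance(expr, str):
        return 0
    return 1 + max(nesting_depth(arg) for arg in expr[1:])


def deepest_first(matches):
    """Order matched expressions so that the most deeply nested come first."""
    return sorted(matches, key=nesting_depth, reverse=True)


revolve = ("revolve-around", "planet", "sun")
greater = ("greater", ("mass", "sun"), ("mass", "planet"))
cause = ("cause", greater, revolve)

ranked = deepest_first([revolve, cause, greater])
```

In this encoding the cause relation is the most deeply nested, because both of its arguments are themselves relations. A match that includes it therefore captures a connected system of relations, whereas a match on revolve-around alone captures an isolated one.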

This discussion of analogy raises two important general points about structured representations. First, the structure mapping process is complex. Compared to the comparison processes for spatial and featural representations, there are more constraints that have to be specified to describe the structure mapping process. The representation provides few constraints on the nature of the process, and so significant effort has to be put into setting up appropriate processes that act on the representation.

Second, structural alignment is much more computationally intensive than either distance calculations or feature comparison (see Falkenhainer et al., 1989 for an analysis of the computational complexity of structure mapping). Generally speaking, structure-sensitive processes are more computationally intensive than those that can operate on spatial and featural representations. Thus, they are most appropriate for models of processes for which significant time and processing resources are available.

This concludes our brief tour of types of representations. It is not possible to do justice to all of these types of representations in a chapter of this length. I have discussed all of these representations in more detail elsewhere (Markman, 1999 , 2002 ).

Broader Issues About Representation

In the rest of this chapter, I focus on some broader issues and open questions in the area of knowledge representation in the field. I start with an exploration of the way that knowledge is treated in cognitive psychology and how that differs from the treatment of knowledge in other areas of psychology. Then, I discuss some approaches to representation that have focused on the importance of the physical body and of the context when thinking about mental representation. Finally, I discuss a stream of research in the field that has argued that the concept of representation has outlived its usefulness.

Content and Structure

One thing you may have noticed about this entire discussion about knowledge representation is that it has focused on the structure of the knowledge people have without regard to the content of what they know. Spatial representations, featural representations, and structured representations differ in their assumptions about how knowledge is organized. Models of this type have been used in theories that range from vision to memory to reasoning.

It is not logically necessary that the discussion of knowledge representation be organized around the structure of knowledge. Developmental psychology, for example, focuses extensively on the content of children's knowledge (e.g., Carey, 2010 ). It is quite common to see discussions within developmental psychology that focus on what information children possess and the ages at which they can use that knowledge to solve particular kinds of problems.

Cognitive psychology, however, emerged out of the cognitive revolution. This view of mind is dominated by computation. A computer does not care what it is reasoning about. Right now, my computer is executing the commands to power my word processor so that I can write this sentence. But the computer does not really care what this chapter is about. As long as the data structures within the program function seamlessly with the program, the word processor will do its job. Similarly, cognitive theories have focused on the format of mental data structures with little regard for the content of those structures.

In many areas, though, research in cognitive psychology will need to pay careful attention to content. Because most studies in the field have focused on college undergraduates, research has typically explored people with no particular expertise in the domain in which they are being studied. There is good reason to believe, though, that experts reason differently from novices in a variety of domains because of what they know (see, e.g., Klein, 2000; Bassok & Novick, Chapter 21). Thus, future progress on core aspects of thinking will require attention both to the content of people's knowledge and to its structure. There are some exceptions to this generalization, such as the work on pragmatic reasoning schemas (Cheng & Holyoak, 1985) and research that has been done on expertise, but studies incorporating the content of what people know are much more the exception than the rule in the field.

Embodied and Situated Cognition

The computational view of mind that dominated cognitive psychology had another key consequence. There has been a pervasive (if implicit) assumption that the sensory systems provide information to the mind and the mind in turn suggests actions that can be carried out by the motor system. More recently, this assumption has been challenged.

One approach challenges the assumption that cognition is somehow separate from perception and motor control (Barsalou, 1999 ; Glenberg, 1997 ; Wilson, 2002 ). This view, which is often called embodied cognition , suggests that understanding cognitive processing requires reorienting the view of mind from the assumption that the mind exists to process information to the assumption that cognition functions to guide action.

An early version of this view came from Gibson's ( 1986 ) work on vision. A dominant view of vision in the 1970s was that the goal of vision was to furnish the cognitive system with a veridical representation of the three-dimensional world (Marr, 1982 ). Gibson argued that the primary goal of vision was to support the goals of an organism. Consequently, vision should be biased toward information that suggests to an animal how it should act. He argued that people tend to see objects not in terms of their raw visual properties, but rather in terms of their affordances , that is, with respect to the actions that can be performed on an object. On his view, when seeing a chair, we immediately calculate whether we think we could sit on it, stand on it, or pick it up.

More recent approaches to embodied cognition have provided an important correction to the field by emphasizing the role of perception and action in cognition. This work demonstrates influences of perception on higher level thinking and also the influence of thinking on perception. For example, Wu and Barsalou (2009) gave people different perceptual contexts and showed how the context influenced their beliefs about the properties of objects. When people list properties of a lawn, they typically talk about the grass and the greenness of the lawn. When they talk about a “rolled-up lawn,” though, they also talk about the roots, even though the grass in a lawn must also have roots. Findings such as this one suggest that people are able to generate simulations of what something would look like and then to use that information in conceptual tasks.

Conceptual processing may also influence perception. A number of studies, for example, have demonstrated that people's perception of the slope of a hill is influenced by the perception of how hard it would be to climb up the hill. For example, people wearing a heavy backpack see a hill as steeper than those who are not wearing a heavy backpack (Proffitt, Creem, & Zosh, 2001 ). However, having a friend with you when wearing a heavy backpack makes the hill look less steep than it would look if you were alone. So your beliefs about the amount of social support you have can affect general perception.

The implication of this work on embodied cognition is that there is no clean separation between the mental representations involved in perception, cognition, and action. Instead, the cognitive system makes use of a variety of types of information. Even tasks that would seem on the surface to involve abstract reasoning often use perceptual and motor representations as well (see Goldin-Meadow & Cook, Chapter 32 , for a review of the role of gesture in thought).

A related area of work is called situated cognition (Hutchins, 1995 ; Suchman, 1987 ). Situated cognition takes as its starting point the recognition that human thinking occurs in particular contexts. The external world in that context also plays a role in people's thought processes. Humans use a variety of tools to help structure difficult cognitive tasks. For example, because human memory is fallible, we make lists to remind us of information we might forget otherwise. Hutchins ( 1995 ) examined the way that navigators aboard large navy ships navigate through tight harbors. He found that—while it is possible to think about the abstract process of navigation—the specific way that navigation teams work is structured by the variety of tools that they have to perform the task.