
Speech sounds

What are speech sounds?

Speech sounds are the sounds we use for talking. We use our tongue, lips, teeth, and other parts of our mouth to create different speech sounds. Speech sounds are not the same as letters. For example, the word ‘sheep’ has five letters, but only three sounds: ‘sh’ ‘ee’ ‘p’.
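To make the difference between letters and sounds concrete, here is a minimal Python sketch. The sound breakdowns are typed in by hand for three words (the 'sheep' example above, plus 'ship' and 'cat', chosen for illustration). It is not a pronunciation dictionary, and the name letters_vs_sounds is hypothetical.

```python
# Hand-entered sound breakdowns for illustration only; a real tool would
# need a full pronunciation dictionary.
WORDS = {
    "sheep": ["sh", "ee", "p"],
    "ship": ["sh", "i", "p"],
    "cat": ["k", "a", "t"],
}


def letters_vs_sounds(word: str) -> tuple[int, int]:
    """Return (number of letters, number of speech sounds) for a listed word."""
    return len(word), len(WORDS[word])


for word in WORDS:
    letters, sounds = letters_vs_sounds(word)
    print(f"{word}: {letters} letters, {sounds} sounds")
```

This prints "sheep: 5 letters, 3 sounds", "ship: 4 letters, 3 sounds" and "cat: 3 letters, 3 sounds": two extra letters in 'sheep' (the 'h' and the second 'e') do not add any extra sounds.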

When do children learn different speech sounds?

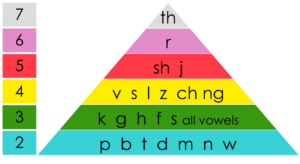

As children learn to talk, they gradually learn to make more and more speech sounds. This means that they cannot say all speech sounds straight away. Some sounds, like ‘m’, are easier to make than sounds like ‘th’. The chart below shows the ages by which children who speak English can usually say different sounds.

Children who can’t make these speech sounds by these ages might need extra help from a speech and language therapist. Children may lisp ‘s’ sounds until around age four or five. See our factsheet on lisps.

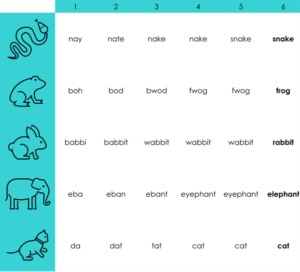

Even when children can make a speech sound by itself, it can be much trickier to use speech sounds when saying whole words and sentences! Children often find simpler ways to say words when they are finding a speech sound tricky. This chart shows examples of how your child might say words at different ages:

By five or six years old, nearly all words will be clear and easy to understand. Children who say words like a much younger child, or who say their words in an unusual or unpredictable way, might need extra help from a speech and language therapist.

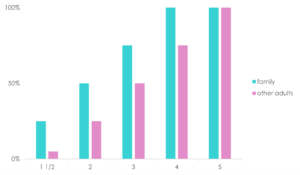

I can understand everything my child says, but other people can’t.

Families can often understand their child much better than other people. Families might be able to guess what their child is talking about and they learn to tune into how their child says words. It can be much harder for other people who don’t know your child! Here is a rough guide about how clear your child’s talking should be at different ages:

What causes challenges with speech sounds?

Children’s speech is often unclear when they first start to talk, but most children will get clearer in time. Some children, though, have particular challenges making or using speech sounds and will need extra help from a speech and language therapist to talk clearly. We often do not know why a child has challenges using speech sounds, but it can be linked to:

- Challenges with other areas of their communication skills, for example talking and understanding words

- Glue ear (see glue ear page for more information)

- Cleft palate

- Having other family members who needed help with their speech sounds.

What about dummies?

In the past, professionals usually warned against using a dummy after age one because it could cause problems with speech sounds. More recent studies suggest that this may not be entirely true: for most children, speech sound challenges are not caused by dummies. We have more information on the pros and cons of dummies here.

I am worried about my child’s speech sounds, what should I do?

If the information here has made you think that your child’s speech sounds are not as clear as other children their age, it is best to talk to your child’s nursery or school, or contact a speech and language therapist. Please see our ‘How do I find a speech and language therapist’ guide for more information.

We also have a free speech and language advice line, which is a confidential phone call with an experienced speech and language therapist. During the 30-minute call, you will have the opportunity to talk through your concerns and questions about your child’s development. You can book your phone call here.

What can I do to help my child at home?

- Focus on trying to understand what your child is saying, rather than how they are saying it. Turn off any background noise and get down to their level so you can see and hear each other easily.

- It is usually best not to correct your child or ask them to copy you saying tricky sounds or words.

- Model words. This means saying the word correctly to your child, but not making them repeat it after you. You can do this if you hear them make a mistake. Try to repeat the word a few times for your child. For example, if they say, ‘Mummy, there’s a tat!’, you could say, ‘Oh yes a cat! A ginger cat. What a lovely cat.’

- Show your child how to use other ways to communicate what they want and need. Our information on visual supports is a good starting point.

- Listening to and playing with sounds and music can help develop your child’s awareness of sounds. Things to try:

- Sing nursery rhymes or read rhyming books together. See if your child can finish the rhyme: Jack and Jill went up the… (hill).

- Sing, dance or clap along to their favourite music and songs.

- Play with musical instruments. Copy each other’s tune or rhythm.

- Drum together: beat out a rhythm together using drums or household objects like pans and spoons.

We don’t suggest trying mouth exercises such as practising blowing or sucking. Mouth exercises are not helpful for most children with speech sound challenges.

Useful websites:

BBC – Tiny Happy People

With thanks to our patron the late Queen Elizabeth II

The 44 sounds in English with examples

Do you want to learn more about American English sounds? You’ve come to the right place. In this guide, we discuss everything you need to know, starting with the basics.

Definition of Phonemes

- English Keywords

Phonics: The way sounds are spelled

English consonant letters and their sounds.

- Bringing it all together

The vowel chart shows the keyword, or quick reference word, for each English vowel sound. Keywords are used because vowel sounds are easier to hear within a word than when they are spoken in isolation. Memorizing keywords allows easier comparison between different vowel sounds.

Phonemic awareness is one of the best predictors of future reading ability.

Word origins: the English word "phoneme" dates back to the late 19th century and was borrowed from French phonème, which in turn comes from Greek phōnēma, "a sound". The 44 English sounds fall into two categories: consonants and vowels.

Below is a list of English phonemes, with their International Phonetic Alphabet symbols and some examples of their use. Note that there is no such thing as a definitive list of phonemes, because of accents, dialects, and the evolution of language itself. You may therefore find lists with more or fewer than these 44 sounds.

A consonant letter usually represents one consonant sound. Some consonant letters, for example, c, g, s, can represent two different consonant sounds.
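The c, g and s cases can be laid out as a small lookup table. This is a minimal sketch: the example words are hand-picked for illustration (not taken from the list above), the sounds are written in IPA between slashes, and the function name sounds_for is hypothetical.

```python
# Example words for consonant letters that can stand for two sounds.
# Word choices are illustrative; sounds are written in IPA between slashes.
LETTER_SOUNDS = {
    "c": {"/k/": ["cat", "cup"], "/s/": ["city", "cent"]},
    "g": {"/ɡ/": ["go", "bag"], "/dʒ/": ["gem", "giant"]},
    "s": {"/s/": ["sun", "bus"], "/z/": ["his", "dogs"]},
}


def sounds_for(letter: str) -> list[str]:
    """List the sounds a letter can represent in this table (empty if unlisted)."""
    return list(LETTER_SOUNDS.get(letter, {}))


for letter, sounds in LETTER_SOUNDS.items():
    for sound, examples in sounds.items():
        print(f"{letter} -> {sound}: {', '.join(examples)}")
```

A nested dictionary keeps the letter, each of its sounds, and the supporting examples together, so adding another two-sound letter is a one-line change.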

English vowel letters and their sounds

A vowel is a particular kind of speech sound made by changing the shape of the upper vocal tract, the area in the mouth above the tongue. In English, it is important to know that there is a difference between a vowel sound and a vowel letter in the alphabet. The English alphabet has five vowel letters.


Vowel sounds and syllable stress

Vowel sounds and syllables are closely related. Syllables are naturally occurring units of sound that create the rhythm of spoken English. Words with multiple syllables always have one syllable that is stressed (given extra emphasis).

Unstressed syllables often contain the schwa vowel /ə/, which can be spelled with almost any vowel letter. In addition, three consonant sounds, the n sound, the l sound, and the r sound (called 'schwa+r' /ɚ/ when it is syllabic), can form a syllable without an additional vowel sound. These are called syllabic consonants.
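Because every syllable needs a nucleus, either a vowel sound or a syllabic consonant, syllables can be counted from a transcription by counting nuclei. The Python sketch below is illustrative only and makes several assumptions. It expects a broad IPA transcription. It uses a hand-picked General American vowel set (including /ɚ/ and /ɝ/). It treats adjacent vowel symbols as one diphthong, so a vowel sequence spanning two syllables is undercounted unless a "." is written between them. It also assumes syllabic n and l carry the combining mark U+0329. The name count_syllables is hypothetical.

```python
# Assumed vowel symbols (a General American subset, including r-coloured
# /ɚ/ and /ɝ/); this is not the full IPA vowel chart.
VOWELS = set("iɪeɛæɑɔoʊuʌəɚɝa")
SYLLABIC_MARK = "\u0329"  # combining vertical line below, as in n̩ or l̩


def count_syllables(ipa: str) -> int:
    """Count syllable nuclei in a broad IPA transcription.

    Adjacent vowel symbols (a diphthong such as 'eɪ') count as one nucleus;
    a consonant carrying the syllabic mark counts as a nucleus of its own.
    """
    count = 0
    in_vowel_run = False
    for ch in ipa:
        if ch in VOWELS:
            if not in_vowel_run:
                count += 1  # start of a new vowel nucleus
            in_vowel_run = True
        else:
            in_vowel_run = False
            if ch == SYLLABIC_MARK:
                count += 1  # syllabic consonant, e.g. the n in 'button'
    return count


for word, ipa in [("cat", "kæt"), ("butter", "ˈbʌtɚ"),
                  ("button", "ˈbʌtn\u0329"), ("tomato", "təˈmeɪtoʊ")]:
    print(word, count_syllables(ipa))
```

Here 'button' comes out as two syllables even though it has only one vowel symbol, because the syllabic n supplies the second nucleus.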


Eriberto Do Nascimento

Eriberto Do Nascimento has a Ph.D. in Speech Intelligibility and Artificial Intelligence and is the founder of English Phonetics Academy.

Speech in Linguistics


- Ph.D., Rhetoric and English, University of Georgia

- M.A., Modern English and American Literature, University of Leicester

- B.A., English, State University of New York

In linguistics, speech is a system of communication that uses spoken words (or sound symbols).

The study of speech sounds (or spoken language) is the branch of linguistics known as phonetics. The study of how sounds pattern and change within a language is phonology. For a discussion of speeches in rhetoric and oratory, see Speech (Rhetoric).

Etymology: From the Old English, "to speak"

Studying Language Without Making Judgements

- "Many people believe that written language is more prestigious than spoken language--its form is likely to be closer to Standard English, it dominates education and is used as the language of public administration. In linguistic terms, however, neither speech nor writing can be seen as superior. Linguists are more interested in observing and describing all forms of language in use than in making social and cultural judgements with no linguistic basis." (Sara Thorne, Mastering Advanced English Language, 2nd ed. Palgrave Macmillan, 2008)

Speech Sounds and Duality

- "The very simplest element of speech--and by 'speech' we shall henceforth mean the auditory system of speech symbolism, the flow of spoken words--is the individual sound, though, . . . the sound is not itself a simple structure but the resultant of a series of independent, yet closely correlated, adjustments in the organs of speech." (Edward Sapir, Language: An Introduction to the Study of Speech, 1921)

- "Human language is organized at two levels or layers simultaneously. This property is called duality (or 'double articulation'). In speech production, we have a physical level at which we can produce individual sounds, like n, b and i. As individual sounds, none of these discrete forms has any intrinsic meaning. In a particular combination such as bin, we have another level producing a meaning that is different from the meaning of the combination in nib. So, at one level, we have distinct sounds, and, at another level, we have distinct meanings. This duality of levels is, in fact, one of the most economical features of human language because, with a limited set of discrete sounds, we are capable of producing a very large number of sound combinations (e.g. words) which are distinct in meaning." (George Yule, The Study of Language, 3rd ed. Cambridge University Press, 2006)
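Yule's point about economy can be checked by brute force. This minimal Python sketch treats his three sounds as the letters b, i and n (as written in the quotation) and lists every ordering; it then counts consonant-vowel-consonant shapes from a hypothetical mini-inventory of four consonants and three vowels. Only some of these sequences are real English words, which is the point: meaning belongs to the combination, not to the individual sound.

```python
from itertools import permutations, product

# Yule's three sounds, written as in the quotation above.
sounds = ("b", "i", "n")
orderings = ["".join(p) for p in permutations(sounds)]
print(orderings)  # six orderings, including 'bin' and 'nib'

# A hypothetical mini-inventory of 4 consonants and 3 vowels.
consonants = ["b", "n", "p", "t"]
vowels = ["i", "a", "u"]
cvc = ["".join(s) for s in product(consonants, vowels, consonants)]
print(len(cvc))  # 4 * 3 * 4 = 48 consonant-vowel-consonant shapes
```

Even this tiny inventory yields 48 three-sound shapes; with the roughly 44 sounds of English and longer words, the number of possible combinations grows very quickly.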

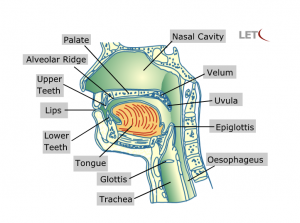

Approaches to Speech

- "Once we decide to begin an analysis of speech, we can approach it on various levels. At one level, speech is a matter of anatomy and physiology: we can study organs such as tongue and larynx in the production of speech. Taking another perspective, we can focus on the speech sounds produced by these organs--the units that we commonly try to identify by letters, such as a 'b-sound' or an 'm-sound.' But speech is also transmitted as sound waves, which means that we can also investigate the properties of the sound waves themselves. Taking yet another approach, the term 'sounds' is a reminder that speech is intended to be heard or perceived and that it is therefore possible to focus on the way in which a listener analyzes or processes a sound wave." (J. E. Clark and C. Yallop, An Introduction to Phonetics and Phonology. Wiley-Blackwell, 1995)

Parallel Transmission

- "Because so much of our lives in a literate society has been spent dealing with speech recorded as letters and text in which spaces do separate letters and words, it can be extremely difficult to understand that spoken language simply does not have this characteristic. . . . [A]lthough we write, perceive, and (to a degree) cognitively process speech linearly--one sound followed by another--the actual sensory signal our ear encounters is not composed of discretely separated bits. This is an amazing aspect of our linguistic abilities, but on further thought one can see that it is a very useful one. The fact that speech can encode and transmit information about multiple linguistic events in parallel means that the speech signal is a very efficient and optimized way of encoding and sending information between individuals. This property of speech has been called parallel transmission." (Dani Byrd and Toben H. Mintz, Discovering Speech, Words, and Mind. Wiley-Blackwell, 2010)

Oliver Goldsmith on the True Nature of Speech

- "It is usually said by grammarians, that the use of language is to express our wants and desires; but men who know the world hold, and I think with some show of reason, that he who best knows how to keep his necessities private is the most likely person to have them redressed; and that the true use of speech is not so much to express our wants, as to conceal them." (Oliver Goldsmith, "On the Use of Language." The Bee, October 20, 1759)

Pronunciation: SPEECH


- Apr 28, 2020

What are the different types of speech sounds?

Updated: Mar 24, 2023

Did you know there are different types of speech sounds? Have you worked with a child in the past who has focused on back sounds, or flow sounds, and wondered what that means?

Well, we humans are clever things and utilise different parts of our mouth and throat, controlling airflow in particular ways to produce a range of sounds. As a native English speaker, I’ll focus on English sounds, but some of the theory may carry over to sounds in any other language you are using with your child.

Voice, Place, Manner

This is the foundation phrase Speech and Language Therapists use when referring to speech sounds. Some sounds can be loud, like a D or V sound, and others can be quiet or whispered, like a T or H. This refers to the use of voice: utilising the voice box for the louder sounds and switching it off (not vibrating the vocal folds) for the quieter sounds.

When looking at place, this refers to where in the mouth the sound is made, i.e. at the front or the back, with the tongue, teeth or lips.

The manner of articulation describes the airflow: whether a speech sound is made by stopping the airflow, by letting it flow out a little, or by letting the air flow out through the nose (as when producing an M sound).

Different places of Articulation

We make speech sounds in a few different places in our mouth and throat.

Lip sounds - these sounds are made by using the lips in some sort of way. P, B and M are made with both lips pressed together, W is made with lips rounded and F and V are made with the bottom lip tucked under the top front teeth.

Alveolar sounds - these are made at the alveolar ridge, the hard ridge just behind your top teeth. Here we make T, D, N, S, Z and L; SH, CH and J are made just behind the ridge. These are also referred to as front sounds.

Back sounds - sounds made towards the back of the mouth include K, G and NG. Other languages make further sounds here, for example the Spanish ‘j’, Greek ‘γ’ and German ‘ch’.

Glide sounds - these are made when articulators move, R, L, W, Y.

Stop and flow sounds

Some of the sounds that we produce are made when the airflow is stopped and then released. Think of the ‘p’ sound. We produce this sound by pressing our lips together and letting air come out of our lungs but stopping it with our closed lips. Then we release the lips and the ‘puh’ sound is made.

The same thing happens for the ‘t’ sound, except this time we use the tongue tip on the ridge behind the teeth (the alveolar ridge) to stop the air flow and then release to make the ‘tuh’ sound. Try it.

The technical term for these ‘stoppy sounds’ is plosive. Stop sounds used in English are P, B, T, D, K, G.

Other speech sounds, like the ‘s’ sound, are made by letting the air flow through our articulators. For this sound we hover the tongue tip on the ridge behind the top teeth and let the air flow through to make the ‘sss’ sound.

Now try with the ‘f’ sound. For this we need to trap the bottom lip under the top teeth gently so that we can let the air flow to make this sound. This is one of my favourite sounds to help children with because we can make a rabbit face.

The technical term for ‘flowy sounds’ is fricative. Flow sounds in English are F, V, TH, S, Z, SH, H.

How can we tell there is a problem?

When speech sound difficulties occur, it is usually because a child is replacing one type of speech sound with another. For example, they may be replacing a front sound T with a back sound K/C (saying key instead of tea). Or they may replace a flow sound S with a stop sound D (saying dock for sock).
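The two examples above can be described by comparing the sound groups in this post. The Python sketch below encodes the front/back/lips and stop/flow groups as described here, using informal letter labels rather than IPA, and covers only the sounds mentioned in this post. The pattern names "backing", "fronting" and "stopping" are standard clinical terms that this post does not itself use; the function name replacement_patterns is hypothetical, and a real assessment looks at many words, not single swaps.

```python
# Sound groups as described in this post (lips/front/back, stop/flow).
# Labels are informal spellings, not IPA; only sounds discussed above.
PLACE = {
    "p": "lips", "b": "lips", "m": "lips", "w": "lips", "f": "lips", "v": "lips",
    "t": "front", "d": "front", "n": "front", "s": "front", "z": "front",
    "l": "front", "sh": "front", "ch": "front", "j": "front",
    "k": "back", "g": "back", "ng": "back",
}
MANNER = {
    "p": "stop", "b": "stop", "t": "stop", "d": "stop", "k": "stop", "g": "stop",
    "f": "flow", "v": "flow", "th": "flow", "s": "flow", "z": "flow",
    "sh": "flow", "h": "flow",
}


def replacement_patterns(target: str, produced: str) -> list[str]:
    """Name the pattern(s) when a child says `produced` in place of `target`."""
    patterns = []
    target_place, produced_place = PLACE.get(target), PLACE.get(produced)
    if target_place == "front" and produced_place == "back":
        patterns.append("backing")   # e.g. 'key' for 'tea'
    if target_place == "back" and produced_place == "front":
        patterns.append("fronting")  # e.g. 'tar' for 'car'
    if MANNER.get(target) == "flow" and MANNER.get(produced) == "stop":
        patterns.append("stopping")  # e.g. 'dock' for 'sock'
    return patterns


print(replacement_patterns("t", "k"))  # the 'key' for 'tea' example
print(replacement_patterns("s", "d"))  # the 'dock' for 'sock' example
```

Returning a list rather than a single label allows one swap to show two patterns at once, for example S replaced by K is both a change of place and a change from flow to stop.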

A Speech and Language Therapist will be able to use samples of a child’s speech, usually with a picture naming assessment, to figure out what sounds a child can say, what sounds are missing or replaced, if the child has any particular difficulty with saying a type of sound and then decide on the best way to help make these difficulties better.

If this all sounds interesting to you, I go into much more depth and theory in my online course Speech Sounds: Steps to Success . We look at types of speech sound difficulties and common replacement patterns, as well as the development of speech sounds and the age children are expected to be able to produce certain types of sounds. Then we go on to look at fun ways to remedy speech sounds at different stages of their journey, from producing single sounds to being able to use their target sounds in sentences.

There's also my Speech Sounds Games & Activities eBook (available as an instant download), which contains my favourite games to play when working on speech sound difficulties, using target sound pictures, and games where you don’t need pictures.

I have also created a FREE information sheet of the ages and stages of speech sound development, perfect to help you work out what sounds your child should be making at which age. Click here to get the FREEBIE!

If you’d like more advice, tips and freebies relating to working with children who have speech and language difficulties, make sure you’re signed up to receive my regular newsletters. Click here to join.


Chapter 3: Phonetics (The Sounds of Speech)

Learning outcomes.

After studying this chapter, you should be able to discuss:

- the three aspects of speech that make up phonetics

- the International Phonetic Alphabet

- the theories of speech perception

Introduction

Think about how you might describe the pronunciation of the English word cat (cat pictured below). If you had to tell someone what’s the first sound of the word, what would you tell them?

Read this poem out loud:

“Hints on pronunciation for foreigners”

by Anonymous (see note)

I take it you already know of tough and bough and cough and dough. Others may stumble, but not you, On hiccough, thorough, lough* and through. Well done! And now you wish, perhaps, To learn of less familiar traps.

Beware of heard, a dreadful word That looks like beard and sounds like bird. And dead-it’s said like bed, not bead. For goodness sake, don’t call it deed! Watch out for meat and great and threat. They rhyme with suite and straight and debt.

A moth is not a moth in mother, Nor both in bother, broth in brother, And here is not a match for there, Nor dear and fear for pear and bear. And then there’s dose and rose and lose Just look them up–and goose and choose. And cork and work and card and ward. And font and front and word and sword. And do and go, then thwart and cart. Come, come I’ve hardly made a start.

A dreadful language? Man alive, I’d mastered it when I was five!

*Laugh has been changed to lough, which is pronounced “lock” and is suggested as the original spelling here.

NOTE: For interesting tidbits about the origins of this poem, see the comments on this blog post.

What does this poem show us about the need for phonetic description?

In addition to variations in the pronunciation of the same groups of letters, the same word with the same spelling can be pronounced differently by different people (for example, because of different dialects). The point here is that English spelling does not clearly and consistently represent the sounds of the language. In fact, English writing is infamous for that problem. There are lots of subtle differences between pronunciations, and these can’t always be captured by casual, everyday descriptions. We need a very systematic and formal way to describe how every sound is made. That’s what phonetics is for.

What is Phonetics?

Phonetics looks at human speech from three distinct but interdependent viewpoints:

- Articulatory phonetics (The Production of Speech): studies how speech sounds are produced.

- Auditory phonetics (The Perception of Speech): studies the way in which humans perceive sounds.

- Acoustic phonetics (The Physics of Speech): studies the physical properties of speech sounds.

Articulatory Phonetics

When people play a clarinet or similar instrument, they can make different sounds by closing the tube in different places or different ways. Human speech works the same way: we make sound by blowing air through our tube (from the lungs, up the throat, and out the mouth and/or nose), and we change sounds by changing the way the air flows and/or closing the tube in different places or in different ways.

There are three basic ways we can change a sound, and they correspond to three basic phonetic “features”. (The way I am categorizing features here may be different than what’s presented in some readings; there are lots of different theories about how to organize phonetic features.) They are as follows:

- We can change the way the air comes out of our lungs in the first place, by letting our vocal folds vibrate or not vibrate. This is called voicing. (Voicing is also closely related to aspiration, although they are realized in different ways. The complex relationship between voicing and aspiration is beyond the scope of this subject; for our purposes, you can just treat them as the same thing, and you can use the terms “voiced” and “unaspirated” interchangeably, and use the terms “voiceless” and “aspirated” interchangeably.)

- We can change the way that we close the tube. For example, completely closing the tube will create one kind of sound, whereas just narrowing it a little to make the air hiss will create a different kind of sound. This aspect of how we make sound is called manner of articulation.

- We can change the place where we close the tube. For example, putting our two lips together creates a “closure” further up the tube than touching our tongue to the top of our mouth does. This aspect of how we make sound is called place of articulation (“articulation” means movement, and we close our tube by moving something, such as moving the lips to touch each other or moving the tip of the tongue to touch the top of the mouth, so “place of articulation” means “the place that you move to close the tube”).
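The three features can be treated as a small record per consonant, so that any two sounds can be compared feature by feature. This Python sketch is illustrative: it covers only a dozen English consonants, and the place and manner labels (bilabial, labiodental, alveolar, velar; stop, fricative, nasal) are standard phonetic terms assumed here rather than taken from this chapter. The names Consonant, describe and differing_features are hypothetical.

```python
from typing import NamedTuple


class Consonant(NamedTuple):
    voicing: str  # "voiced" or "voiceless"
    place: str    # where the tube is closed or narrowed
    manner: str   # how it is closed or narrowed


# A handful of English consonants (an illustrative subset, not the full chart).
CONSONANTS = {
    "p": Consonant("voiceless", "bilabial", "stop"),
    "b": Consonant("voiced", "bilabial", "stop"),
    "t": Consonant("voiceless", "alveolar", "stop"),
    "d": Consonant("voiced", "alveolar", "stop"),
    "k": Consonant("voiceless", "velar", "stop"),
    "ɡ": Consonant("voiced", "velar", "stop"),
    "f": Consonant("voiceless", "labiodental", "fricative"),
    "v": Consonant("voiced", "labiodental", "fricative"),
    "s": Consonant("voiceless", "alveolar", "fricative"),
    "z": Consonant("voiced", "alveolar", "fricative"),
    "m": Consonant("voiced", "bilabial", "nasal"),
    "n": Consonant("voiced", "alveolar", "nasal"),
}


def describe(symbol: str) -> str:
    """Give the conventional 'voicing place manner' label for a consonant."""
    c = CONSONANTS[symbol]
    return f"{c.voicing} {c.place} {c.manner}"


def differing_features(a: str, b: str) -> list[str]:
    """List which of the three features distinguish two consonants."""
    return [field for field in Consonant._fields
            if getattr(CONSONANTS[a], field) != getattr(CONSONANTS[b], field)]


print(describe("d"))                 # voiced alveolar stop
print(differing_features("p", "b"))  # only voicing differs
```

Pairs such as [p] and [b] differ in voicing alone, while [t] and [s] differ only in manner, which is why phoneticians find it useful to keep the three features separate.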

Articulatory phonetics investigates how speech sounds are produced. This involves some basic understanding of

- The anatomy of speech i.e. the lungs, the larynx and the vocal tract;

- Airstream mechanisms, that is, the mechanisms involved in initiating and producing the types of airstreams used for speech.

Adopting anatomical and physiological criteria, phoneticians define segmental features (i.e. the individual sounds of speech) and suprasegmental features (e.g. tonal phenomena).

The Anatomy of Speech

Three central mechanisms are responsible for the production of speech:

- Respiration: The lungs produce the necessary energy in the form of a stream of air.

- Phonation: The larynx serves as a modifier to the airstream and is responsible for phonation.

- Articulation: The vocal tract modifies and modulates the airstream by means of several articulators.

Respiration

Before any sound can be produced at all, there has to be some form of energy. In speech, the energy takes the form of a stream of air normally coming from the lungs. Lung air is referred to as pulmonic air.

The respiratory system is used in normal breathing and in speech and is contained within the chest or thorax. Within the thoracic cavity are the lungs, which provide the reservoir for pulmonic airflow in speech.

The lungs are connected to the trachea by two bronchial tubes, which join at the base of the trachea. At the lower end of the thoracic cavity we find the dome-shaped diaphragm, which is responsible for thoracic volume changes during respiration. The diaphragm separates the lungs from the abdominal cavity and lower organs.

The larynx consists of a number of cartilages which are interconnected by complex joints and move about these joints by means of muscular and ligamental force. The larynx has several functions:

- the protective function

- the respiratory function

- the function in speech

The primary biological function of the larynx is to act as a valve, by closing off air from the lungs or preventing foreign substances from entering the trachea. The principal example of this protective function of the larynx is the glottal closure, during which the laryngeal musculature closes the airway while swallowing.

During respiration, the larynx controls the airflow from the subglottal to the supraglottal regions. Normally, humans breathe about 15 times per minute (2 seconds inhaling, 2 seconds exhaling). Breathing for speech follows a different pattern: speaking may require a deeper, fuller breath than regular inhalation, taken at different intervals. A speaker’s breathing rate is no longer a regular pattern of fifteen to twenty breaths a minute, but is sporadic and irregular, with quick inhalation and a long, drawn-out, controlled exhalation (exhaling can last 10 to 15 seconds).

In speech production, the larynx modifies the airflow from the lungs in such a way as to produce an acoustic signal. The results are various types of phonation.

- Normal voice

The most important effect of vocal fold action is the production of audible vibration – a buzzing sound, known as voice or vibration. Each pulse of vibration represents a single opening and closing movement of the vocal folds. The number of cycles per second depends on age and sex. Average male voices vibrate at 120 cycles per second, women’s voices average 220 cycles per second.
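Since each cycle is one opening and closing of the vocal folds, the average frequencies above translate directly into how long a single cycle lasts (period = 1 / frequency). This short Python sketch does that arithmetic; the 0.5-second vowel used for the cycle count is an assumed duration for illustration, and the function names are hypothetical.

```python
def period_ms(frequency_hz: float) -> float:
    """Duration of one open-close cycle of the vocal folds, in milliseconds."""
    return 1000.0 / frequency_hz


def cycles_in(duration_s: float, frequency_hz: float) -> float:
    """How many vocal-fold cycles fit in a stretch of voicing."""
    return duration_s * frequency_hz


for label, f0 in [("average male", 120), ("average female", 220)]:
    print(f"{label}: {f0} Hz -> {period_ms(f0):.2f} ms per cycle, "
          f"{cycles_in(0.5, f0):.0f} cycles in a 0.5 s vowel")
```

At 120 cycles per second each cycle lasts about 8.33 ms (60 cycles in half a second); at 220 cycles per second it lasts about 4.55 ms (110 cycles).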

Articulation

Once the air passes through the trachea and the glottis, it enters a long tubular structure known as the vocal tract. Here, the airstream is affected by the action of several mobile organs, the active articulators. Active articulators include the lower lip, tongue, and glottis. They are actively involved in the production of speech sounds.

The active articulators are supported by a number of passive articulators, i.e. specific organs or locations in the vocal tract which are involved in the production of speech sounds but do not move. These passive articulators include the palate, alveolar ridge, upper and lower teeth, nasal cavity, velum, pharynx, epiglottis, and trachea.

The production of speech sounds through these organs is referred to as articulation.

Articulation of Consonants in North American English

Introduction to Articulatory Phonetics licensed CC BY.

Articulation of Vowel Sounds in North American English

International Phonetic Alphabet (IPA)

The International Phonetic Alphabet ( IPA ) is an alphabetic system of phonetic notation based primarily on the Latin script. It was devised by the International Phonetic Association in the late 19th century as a standardized representation of speech sounds in written form. The IPA is used by lexicographers, foreign language students and teachers, linguists, speech–language pathologists, singers, actors, constructed language creators and translators.

The IPA is designed to represent those qualities of speech that are part of sounds in oral language: phones, phonemes, intonation, and the separation of words and syllables.

IPA symbols are composed of one or more elements of two basic types, letters and diacritics. For example, the sound of the English letter ⟨t⟩ may be transcribed in IPA with a single letter, [t] , or with a letter plus diacritics, [t̺ʰ] , depending on how precise one wishes to be. Slashes are used to signal phonemic transcription; thus /t/ is more abstract than either [t̺ʰ] or [t] and might refer to either, depending on the context and language.
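Moving from a narrow transcription such as [t̺ʰ] to a broader one such as [t] amounts to dropping diacritics. This Python sketch does that under stated assumptions: diacritics are written as separate combining characters (Unicode category "Mn", such as U+033A in [t̺ʰ]), plus a small hand-picked set of superscript modifier letters (ʰ ʷ ʲ ˠ ˤ). Precomposed letters such as ç are kept as they are. It does not attempt the final step to a phonemic /t/, since that depends on the phoneme inventory of the particular language. The name broaden is hypothetical.

```python
import unicodedata

# Superscript modifier letters used as IPA diacritics. A small, hand-picked
# subset (an assumption): aspiration, labialization, palatalization,
# velarization, pharyngealization.
MODIFIER_DIACRITICS = {"\u02b0", "\u02b7", "\u02b2", "\u02e0", "\u02e4"}


def broaden(narrow: str) -> str:
    """Turn a narrow transcription such as '[t̺ʰ]' into a broad one such as '[t]'.

    Assumes diacritics are separate combining characters, so letters typed
    as one precomposed character (like 'ç') are left untouched.
    """
    core = narrow.strip("[]")
    kept = [
        ch for ch in core
        if unicodedata.category(ch) != "Mn" and ch not in MODIFIER_DIACRITICS
    ]
    return "[" + "".join(kept) + "]"


print(broaden("[t\u033a\u02b0]"))  # the [t̺ʰ] example from the text
```

Filtering character by character keeps the sketch short; a fuller tool would work from a complete list of IPA diacritics rather than this subset.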

This website shows the sounds from American English represented with the IPA. In addition, you can type in any English word and get the phonetic conversion!

Suprasegmental Features

Vowels and consonants are the basic segments of speech. Together, they form syllables, larger units, and eventually utterances. Superimposed on the segments are a number of additional features known as suprasegmental or prosodic features. They do not characterize a single segment but a succession of segments. The most important suprasegmental features are:

In a spoken utterance, the syllables are never all produced with the same intensity. Some syllables are unstressed (weaker), others stressed (stronger).

A stressed syllable is produced by an increase in respiratory activity, i.e. more air is pushed out of the lungs.

The video below suggests that chimpanzees can speak. Is that true? Hint: Think about anatomical reasons that chimpanzees may or may not be able to speak.

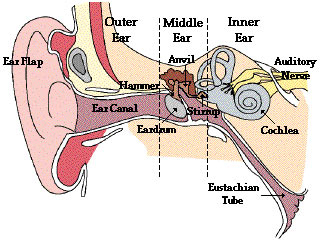

Auditory Phonetics

Auditory phonetics investigates the processes underlying human speech perception. The starting point for any auditory analysis of speech is the study of the human hearing system, i.e. the anatomy and physiology of the ear and the brain.

Since the hearing system cannot react to all features present in a sound wave, it is essential to determine what we perceive and how we perceive it. This enormously complex field is referred to as speech perception.

This area is not only of interest to phonetics but is also the province of experimental psychology.

The Auditory System

The auditory system consists of three central components:

- The outer ear – modifies the incoming sound signal and amplifies it at the eardrum.

- The middle ear – improves the signal and transfers it to the inner ear.

- The inner ear – converts the signal from mechanical vibrations into nerve impulses and transmits it to the brain via the auditory nerve.

The outer ear consists of the visible part, known as the auricle or pinna, and of the interior part. The auricle helps to focus sound waves into the ear, and supports our ability to locate the source of a sound.

From here, the ear canal, a tube about 2.5 cm long, leads to the eardrum. The main function of the ear canal is to keep tiny particles from reaching the eardrum. Furthermore, it amplifies certain sound frequencies (especially between 3,000 and 4,000 Hz) and protects the eardrum from changes in temperature as well as from damage.
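A common simplification, which is an assumption here rather than part of the source, models the ear canal as a uniform tube that is open at the outer end and closed by the eardrum. Such a tube resonates most strongly when its length is a quarter of the wavelength, so f = c / (4L). The Python sketch below applies that formula to the 2.5 cm length given in the text, assuming a speed of sound of about 343 m/s; the result, roughly 3.4 kHz, falls inside the 3,000 to 4,000 Hz range mentioned.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: speed of sound in air at about 20 degrees C


def quarter_wave_resonance_hz(tube_length_m, speed_of_sound=SPEED_OF_SOUND_M_PER_S):
    """Lowest resonance f = c / (4 * L) of a tube closed at one end (simplified ear-canal model)."""
    if tube_length_m <= 0:
        raise ValueError("tube length must be positive")
    return speed_of_sound / (4.0 * tube_length_m)


ear_canal_length_m = 0.025  # 2.5 cm, the length given in the text
print(f"estimated ear-canal resonance: {quarter_wave_resonance_hz(ear_canal_length_m):.0f} Hz")
```

Real ear canals are curved and end in a flexible eardrum, so this figure is only a first approximation.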

Behind the eardrum lies the middle ear, a cavity which is filled with air via the Eustachian tube (which is linked to the back of the nose and throat).

The primary function of the middle ear is to convert the sound vibrations at the eardrum into mechanical movements. This is achieved by a system of three small bones, known as the auditory ossicles. They are named after their shape:

- the malleus (hammer)

- the incus (anvil)

- the stapes (stirrup)

When the eardrum vibrates in response to the varying air pressure of the sound waves, it sets the ossicles moving back and forth. These three bones transmit the vibrations to the membrane-covered oval window of the inner ear. Together, the ossicles act as a lever system, so that by the time the vibrations reach the inner ear they have been amplified by more than 30 dB.
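Decibels express ratios on a logarithmic scale. A minimal Python sketch of the standard conversions (20·log10 for sound pressure, 10·log10 for intensity) shows what a gain of 30 dB means in linear terms: roughly a 32-fold increase in pressure, or a 1,000-fold increase in intensity. The function names are illustrative.

```python
import math


def db_to_pressure_ratio(gain_db):
    """Sound-pressure ratio for a gain in dB (dB = 20 * log10(p2 / p1))."""
    return 10 ** (gain_db / 20.0)


def db_to_intensity_ratio(gain_db):
    """Intensity (power) ratio for a gain in dB (dB = 10 * log10(I2 / I1))."""
    return 10 ** (gain_db / 10.0)


def pressure_ratio_to_db(ratio):
    """Inverse of db_to_pressure_ratio."""
    return 20.0 * math.log10(ratio)


gain_db = 30.0
print(f"{gain_db:.0f} dB = {db_to_pressure_ratio(gain_db):.1f}x pressure "
      f"= {db_to_intensity_ratio(gain_db):.0f}x intensity")
```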

The inner ear contains the vestibular organ with the semi-circular canals, which control our sense of balance, and the cochlea, a coiled cavity about 35 mm long, resembling a snail’s shell. The cochlea is responsible for converting sounds that enter the ear canal from mechanical vibrations into electrical signals. The mechanical vibrations are transmitted to the oval window of the inner ear via the stapes (stirrup). The conversion process, known as transduction, is performed by specialized sensory cells within the cochlea. The electrical signals, which code the sound’s characteristics, are carried to the brain by the auditory nerve.

The cochlea is divided lengthwise into three chambers. The upper chamber is the scala vestibuli and the bottom chamber is the scala tympani; both are filled with a clear fluid called perilymph. Between these two chambers lies the cochlear duct (scala media), which is filled with endolymph and is separated from the scala tympani by the basilar membrane.

The organ of Corti rests on the basilar membrane. It contains a systematic arrangement of hair cells that pick up the pressure movements along the membrane. Different sound frequencies are mapped onto different sites along the membrane from apex to base, with high frequencies near the base and low frequencies near the apex.

The hair cells are bent by the wave-like movements of the fluid and set off nerve impulses, which then pass through the auditory nerve to the hearing center of the brain. Short hair cell fibres respond to high frequencies and longer fibres respond to lower frequencies.
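The frequency-to-place map along the basilar membrane is often approximated by Greenwood's function, F = A(10^(a·x) − k), where x is the relative distance from the apex (0) to the base (1). This function is not part of the source; the Python sketch below uses commonly cited human parameters (A = 165.4, a = 2.1, k = 0.88), which should be treated as assumptions, together with the 35 mm cochlear length given in the text. It shows low frequencies near the apex and high frequencies near the base, spanning roughly 20 Hz to 20 kHz.

```python
def greenwood_frequency_hz(x, A=165.4, a=2.1, k=0.88):
    """Approximate characteristic frequency at relative position x on the basilar membrane.

    x = 0 is the apex and x = 1 is the base. A, a and k are commonly cited human
    parameters for Greenwood's function and are assumptions here.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A * (10 ** (a * x) - k)


COCHLEA_LENGTH_MM = 35.0  # approximate length given in the text

for distance_from_apex_mm in (0.0, 8.75, 17.5, 26.25, 35.0):
    x = distance_from_apex_mm / COCHLEA_LENGTH_MM
    print(f"{distance_from_apex_mm:5.2f} mm from apex: {greenwood_frequency_hz(x):8.0f} Hz")
```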

Speech Perception

Two questions have dominated research on speech perception:

- Acoustic cues: Does the speech signal contain specific perceptual cues?

- Theories of Speech Perception: How can the process of speech perception be modeled?

A further important issue in speech perception, which is also the province of experimental psychology, is whether it is a continuous or – as often assumed – a categorical process.

Acoustic Cues

The speech signal presents us with far more information than we need in order to recognize what is being said. Yet, our auditory system is able to focus our attention on just the relevant auditory features of the speech signal – features that have come to be known as acoustic cues:

- Voice Onset Time (VOT): the acoustic cue for the voiceless/voiced distinction

- F2 transition: the acoustic cue for place of articulation

- Frequency cues

The importance of these small auditory events has led to the assumption that speech perception is by and large not a continuous process, but rather a phenomenon that can be described as discontinuous or categorical perception.

Voice Onset Time

The voice onset time (VOT) is the interval between the release of a stop closure and the onset of vocal fold vibration. It is crucial for discriminating between syllables such as [pa] and [ba]. It is a well-established fact that gradually increasing the VOT does not lead to a gradual shift between voiced and voiceless consonants. Rather, a VOT value of around 30 ms acts as the category boundary. In other words:

- If VOT is shorter than about 30 ms, we hear a voiced sound, such as [ba].

- If VOT is longer than about 30 ms, the perceptual result is the voiceless [pa].
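Categorical perception of VOT can be sketched as a labelling rule: longer VOT values are heard as voiceless [pa] and shorter ones as voiced [ba], with the switch near the 30 ms boundary given in the text. The Python sketch also includes a logistic identification curve; its slope parameter is hypothetical, chosen only to show how responses jump from one category to the other over a narrow VOT range instead of changing gradually.

```python
import math

VOT_BOUNDARY_MS = 30.0  # category boundary taken from the text


def classify_bilabial(vot_ms, boundary_ms=VOT_BOUNDARY_MS):
    """Categorical labelling rule for a bilabial stop followed by [a]."""
    # Long-lag VOT (voicing starts well after the release) is heard as voiceless [pa].
    return "pa" if vot_ms > boundary_ms else "ba"


def prob_pa(vot_ms, boundary_ms=VOT_BOUNDARY_MS, slope_per_ms=0.5):
    """Hypothetical logistic identification curve: probability of reporting [pa].

    slope_per_ms is an assumed value; a steeper slope means more categorical responses.
    """
    return 1.0 / (1.0 + math.exp(-slope_per_ms * (vot_ms - boundary_ms)))


# A VOT continuum in 10 ms steps, similar in spirit to an identification experiment.
for vot in range(0, 61, 10):
    print(f"VOT {vot:2d} ms -> [{classify_bilabial(vot)}], P([pa]) = {prob_pa(vot):.2f}")
```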

F2 Transition

The formant pattern of vowels in isolation differs considerably from that of vowels embedded in a consonantal context. If a consonant precedes a vowel, as in [ka] or [ba], the second formant (F2) seems to emerge from a certain frequency region, the so-called F2 locus, and then moves towards the F2 value of the vowel. Speech perception appears to be sensitive to this F2 transition, which is an important cue in the perception of speech. In other words, the direction and extent of the F2 transition into the vowel help the listener identify the place of articulation of the initial consonant.
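The idea of an F2 locus can be illustrated by interpolating F2 from a consonant's locus towards the vowel's F2 target. In this Python sketch the locus values (720 Hz and 1800 Hz) and vowel targets (2300 Hz and 1200 Hz) are illustrative assumptions, as is the straight-line 50 ms transition; real transitions are curved and vary between speakers. The point is that the same vowel can be approached by a rising or a falling F2 depending on the consonant, which gives the listener a cue to place of articulation.

```python
def f2_trajectory(locus_hz, vowel_f2_hz, duration_ms=50, step_ms=10):
    """Straight-line F2 path from the consonant locus to the vowel target.

    Real transitions are curved; a straight line is a simplifying assumption.
    """
    steps = duration_ms // step_ms
    return [locus_hz + (vowel_f2_hz - locus_hz) * i / steps for i in range(steps + 1)]


def transition_direction(locus_hz, vowel_f2_hz):
    """Describe whether F2 rises, falls or stays flat on its way into the vowel."""
    if vowel_f2_hz > locus_hz:
        return "rising"
    if vowel_f2_hz < locus_hz:
        return "falling"
    return "flat"


# Illustrative values only (assumptions, not measurements from the text).
LOCUS_HZ = {"b": 720.0, "d": 1800.0}
VOWEL_F2_HZ = {"i": 2300.0, "a": 1200.0}

for consonant, locus in LOCUS_HZ.items():
    for vowel, target in VOWEL_F2_HZ.items():
        path = f2_trajectory(locus, target)
        print(f"[{consonant}{vowel}]: {transition_direction(locus, target)}, "
              f"F2 {path[0]:.0f} -> {path[-1]:.0f} Hz")
```

With these numbers, [ba] and [da] share the vowel target but differ in transition direction (rising versus falling).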

Frequency Cues

The frequency of certain parts of the sound wave helps to identify a large number of speech sounds. Fricative consonants, such as [s], for example, involve a partial closure of the vocal tract, which produces turbulence in the air flow and results in a noisy sound without clear formant structure spreading over a broad frequency range. This friction noise is relatively unaffected by the context in which the fricative occurs and may thus serve as a nearly invariant cue for its identification.

However, the value of frequency cues is only relative since the perception of fricatives is also influenced by the fricative’s formant transitions.

Theories of Speech Perception

Speech perception begins with a highly complex, continuously varying, acoustic signal and ends with a representation of the phonological features encoded in that signal. There are two groups of theories that model this process:

- Passive theories: This group views the listener as relatively passive and speech perception as primarily sensory. The message is filtered and mapped directly onto the acoustic-phonetic features of language.

- Active theories: This group views the listener as more active and postulates that speech perception involves some aspects of speech production; the signal is sensed and analysed by reference to how the sounds in the signal are produced.

Passive Theories

Passive theories of speech perception emphasize the sensory side of the perceptual process and relegate the process of speech production to a minor role. They postulate the use of stored neural patterns which may be innate. Two influential passive theories have emerged:

- The Theory of Template Matching: Templates are innate recognition devices that are rudimentary at birth and tuned as language is acquired.

- The Feature Detector Theory: Feature detectors are specialized neural receptors necessary for the generation of auditory patterns.

Active Theories

Active theories assume that the process of speech perception involves some sort of internal speech production, i.e. the listener applies his articulatory knowledge when he analyzes the incoming signal. In other words: the listener acts not only when he produces speech, but also when he receives it.

Two influential active theories have emerged:

- The Motor Theory of Perception: According to the motor theory, reference to your own articulatory knowledge is manifested via direct comparison with articulatory patterns.

- The Analysis-by-Synthesis Theory: The analysis-by-synthesis theory postulates that the reference to your own articulation is via neurally generated auditory patterns.

The McGurk Effect

The McGurk effect is a perceptual phenomenon that demonstrates an interaction between hearing and vision in speech perception. The illusion occurs when the auditory component of one sound is paired with the visual component of another sound, leading to the perception of a third sound; in the classic example, hearing [ba] while watching a mouth say [ga] is often perceived as [da]. What a person sees when watching someone speak changes the way they hear the sound. If the auditory information is of poor quality but the visual information is good, a person may be more likely to experience the McGurk effect.

You are invited to participate in a little experiment on perception. In the video below you see a mouth speaking four items. Your tasks are the following:

- Watch the mouth closely, but concentrate on what you hear.

- Now close your eyes. Play the clip again.

- What did you perceive when you saw and heard the video clip? What did you perceive when you just heard the items?

Acoustic Phonetics

Acoustic phonetics studies the physical properties of the speech signal. This includes the physical characteristics of human speech, such as frequencies, friction noise, etc.

There are numerous factors that complicate the straightforward analysis of the speech signal, for example, background noises, anatomical and physiological differences between speakers etc.

These and other aspects contributing to the overall speech signal are studied under the heading of acoustic phonetics.

Sound Waves

Sound originates from the motion or vibration of a sound source, e.g. from a tuning fork. The result of this vibration is known as a simple sound wave, which can be mathematically modeled as a sine wave. Most sources of sounds produce complex sets of vibrations. They arise from the combination of a number of simple sound waves.

Speech involves the use of complex sound waves because it results from the simultaneous use of many sound sources in the vocal tract.

The vibration of a sound source is normally intensified by the body around it. This intensification is referred to as resonance. Depending on the material and the shape of this body, several resonance frequencies are produced.

Simple sound waves are produced by a simple source, e.g. the vibration of a tuning fork. They are regular in motion and are referred to as periodic. Two properties are central to the measurement of simple sound waves: the frequency and the amplitude.
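A simple periodic wave can be written as A·sin(2πft), where A is the amplitude and f the frequency in hertz. The Python sketch below samples such a wave; the 200 Hz frequency, 0.5 amplitude and 8 kHz sampling rate are arbitrary example values, not taken from the source. The wave peaks at its amplitude and repeats every 1/f seconds (here 5 ms, i.e. 40 samples).

```python
import math


def sine_wave(freq_hz, amplitude, sample_rate_hz=8000, n_samples=80):
    """Sample A * sin(2 * pi * f * t) at a fixed sampling rate."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]


freq_hz, amplitude = 200.0, 0.5       # arbitrary example values
wave = sine_wave(freq_hz, amplitude)
period_samples = int(8000 / freq_hz)  # one period = 1/f s = 40 samples at 8 kHz

print(f"peak value: {max(wave):.3f}")  # the sampled crest equals the amplitude
print(f"period: {1000.0 / freq_hz:.1f} ms ({period_samples} samples)")
```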

Practically every sound we hear is not a pure tone but a complex tone; its wave form is not simple but complex. Complex wave forms are synthesized from a sufficient number of simple sound waves. There are two types of complex wave forms:

- periodic complex sound waves

- aperiodic complex sound waves
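A complex periodic wave can be built by adding simple sine waves. In this Python sketch (example values, not from the source), a 100 Hz fundamental is combined with components at 200 Hz and 300 Hz. Because every component completes a whole number of cycles in 1/100 s, the sum is no longer a pure sine wave but still repeats with the period of the fundamental.

```python
import math


def complex_wave(components, sample_rate_hz=8000, n_samples=160):
    """Add simple sine waves; components is a list of (frequency_hz, amplitude) pairs."""
    return [
        sum(a * math.sin(2 * math.pi * f * n / sample_rate_hz) for f, a in components)
        for n in range(n_samples)
    ]


# Example values: a 100 Hz fundamental plus components at 200 Hz and 300 Hz.
components = [(100.0, 1.0), (200.0, 0.5), (300.0, 0.25)]
wave = complex_wave(components)

period_samples = 8000 // 100  # 1/100 s = 80 samples at 8 kHz
mismatch = max(abs(wave[n] - wave[n + period_samples]) for n in range(len(wave) - period_samples))
print(f"largest difference between one period and the next: {mismatch:.2e}")
```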

Speech makes use of both kinds. Vowels, for example, are basically periodic, whereas consonants range from periodic to aperiodic:

- the vowel [ a ], periodic

- the consonant [ n ], periodic

- the consonant [ s ], aperiodic

- the consonant [ t ], aperiodic
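One common technique for telling periodic from aperiodic signals (an illustration, not a method named in the source) is autocorrelation: a periodic wave closely matches a copy of itself shifted by one period, whereas noise does not match any shifted copy. The Python sketch compares a synthetic two-component periodic wave (a stand-in for a vowel) with seeded random noise (a stand-in for the friction noise of [s]); both signals are hypothetical test data.

```python
import math
import random


def normalized_autocorrelation(signal, lag):
    """Correlation between the signal and a copy of itself shifted by `lag` samples."""
    mean = sum(signal) / len(signal)
    x = [s - mean for s in signal]
    head, tail = x[:len(x) - lag], x[lag:]
    num = sum(a * b for a, b in zip(head, tail))
    den = math.sqrt(sum(a * a for a in head) * sum(b * b for b in tail))
    return num / den if den else 0.0


def periodicity_strength(signal, min_lag, max_lag):
    """Best autocorrelation over candidate periods; near 1.0 means strongly periodic."""
    return max(normalized_autocorrelation(signal, lag) for lag in range(min_lag, max_lag + 1))


SAMPLE_RATE_HZ = 8000
# Hypothetical test signals: a 100 Hz two-component periodic wave (vowel-like)
# and seeded Gaussian noise (a stand-in for friction noise).
periodic = [math.sin(2 * math.pi * 100 * n / SAMPLE_RATE_HZ)
            + 0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE_HZ) for n in range(800)]
rng = random.Random(0)
aperiodic = [rng.gauss(0.0, 1.0) for _ in range(800)]

print(f"periodic:  {periodicity_strength(periodic, 20, 200):.2f}")
print(f"aperiodic: {periodicity_strength(aperiodic, 20, 200):.2f}")
```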

The basic rate at which a sound source vibrates is referred to as the fundamental frequency or F0 (US: “F zero”).

On a musical instrument, F0 is the result of the vibration of a string or a piece of reed. In speech, it is the result of vocal fold vibration.

In both cases, the source produces a complex sound wave whose components, the harmonics, are whole-number multiples of F0. This wave is filtered (intensified and damped) by numerous parts of the resonating body. The resulting bands of intensified frequencies are called formants and are numbered F1, F2 and so on.

In speech, these formants can be associated with certain parts of the vocal tract. On a musical instrument the same principle applies: on an oboe, for example, F0 is the result of the vibration of the reed, and the resonating body intensifies (and damps) the harmonics that are created as multiples of F0.
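The relationship between F0, its harmonics and a resonance can be sketched in a few lines of Python. The harmonics are whole-number multiples of F0; a single resonance peak, whose centre frequency, bandwidth and bell shape are all hypothetical, then boosts the harmonics near it. Assuming all harmonics start out equally strong, the harmonic closest to the resonance comes out strongest, which is the basic idea behind a formant.

```python
def harmonics(f0_hz, max_hz):
    """Whole-number multiples of F0 up to and including max_hz."""
    count = int(max_hz // f0_hz)
    return [f0_hz * k for k in range(1, count + 1)]


def resonance_gain(freq_hz, center_hz, bandwidth_hz):
    """Hypothetical bell-shaped resonance: 1.0 at the centre, smaller further away."""
    return 1.0 / (1.0 + ((freq_hz - center_hz) / bandwidth_hz) ** 2)


f0_hz = 100.0
source = harmonics(f0_hz, 1000.0)       # 100, 200, ..., 1000 Hz, assumed equally strong
formant_hz, bandwidth_hz = 500.0, 80.0  # assumed single resonance of the body
output = {h: resonance_gain(h, formant_hz, bandwidth_hz) for h in source}

strongest = max(output, key=output.get)
print(f"harmonics: {[int(h) for h in source]}")
print(f"strongest after filtering: {strongest:.0f} Hz")
```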

Attributions:

Content adapted from the following:

VLC102 – Speech Science by Jürgen Handke, Peter Franke, Linguistic Engineering Team under CC BY 4.0

“ International Phonetic Alphabet ” licensed under CC BY SA .

“ McGurk Effect ” licensed under CC BY SA .

Introduction to Linguistics by Stephen Politzer-Ahles . CC-BY-4.0 .

More than Words: The Intersection of Language and Culture Copyright © 2022 by Dr. Karen Palmer is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


August 19, 2022

Speech Sound Development

Children learn to speak at a very young age, and while speech sound development often follows a predictable pattern, each child’s speech development will vary. This speech sound development chart can help caregivers and speech-language pathologists determine if a child’s speech is on track and help make decisions about when a child needs speech therapy.


Recently, in a cross-linguistic review, McLeod and Crowe (2018) analyzed 64 studies from 31 countries to examine the development of speech sounds across multiple languages.

Further analysis of this data was done across 15 studies of 18,907 children for English in the United States (Crowe and McLeod, 2020). The analysis reported the following:

Children are able to produce English speech sounds relatively early, with most sounds acquired by 4 years of age, and almost all speech sounds acquired by the age of 7.

Here’s a visual breakdown of when specific consonant speech sounds are acquired.

What Speech Sounds Develop at What Ages?

Most speech sounds develop between the ages of 2 and 7 (though speech can develop before and after these ages as well). The 24 English consonant speech sounds can be broken up into early, middle, and late-developing sounds.

Early sounds are typically easy for a young child to produce and are learned by age 4. Middle sounds are acquired next and are learned by age 5. Finally, four speech sounds are considered late-developing sounds, and these usually aren’t acquired until between the ages of 5 and 7.

Early 13 Sounds (Ages 2-4)

The first “early 13” speech sounds that children learn include:

- B, N, M, P, H, W, D, G, K, F, T, NG, and Y.

Middle 7 Sounds (Ages 4-5)

The next “middle 7” speech sounds that children learn include:

- V, J, S, CH, L, SH, and Z.

Late 4 Sounds (Ages 5-7)

Finally, the last “late 4” speech sounds that children learn include:

- R, Voiced TH, ZH, and Voiceless TH.

Speech Sound Development By Age

To get an even more specific view of speech sound development by age, let’s break down these speech sounds further by each year of development.

The following sections include the average age at which 90% of children acquire English speech sounds. Keep in mind that there is variability in when children acquire and subsequently master speech sounds. This list should be used as a basic guide to help determine if a child’s speech is developing appropriately.

A quick note on intelligibility: The intelligibility noted below is how much speech (in sentences) an unfamiliar listener can understand. An unfamiliar listener is someone who does not listen to the child on a regular basis.

2-3 Years (24-35 months)

Toddlers are often difficult to understand, as they are learning the different speech sounds and how to communicate with others. Two-year-olds still make many speech errors, and these are considered developmentally appropriate, but they should be able to say relatively simple speech sounds.

Intelligibility: Children should be at least 15% intelligible at 3 years old.

By the time a child turns 3 years old, they should have acquired the following sounds:

- P. (As in pay, open, and cup.)

- B. (As in boy, robot, and tub.)

- D. (As in dog, radio, and sad.)

- M. (As in mop, lemon, and jam.)

- N. (As in nose, pony, and pin.)

- H. (As in ham, behind, and forehead.)

- W. (As in web, awake, and cow.)

3-4 Years (36-47 months)

Young preschoolers are continuing to learn many speech sounds, and they are becoming easier to understand. Speech errors are still common and appropriate at this age, but by their fourth birthday they should be able to say 13 speech sounds in total.

Intelligibility: Children should be 50% intelligible at 4 years old.

By the time a child turns 4 years old, they should have acquired the following sounds:

- T. (As in top, water, and hat.)

- K. (As in kiss, baker, and book.)

- G. (As in gum, wagon, and log.)

- NG. (As in hanger and swing.)

- F. (As in fan, sofa, and leaf.)

- Y. (As in yes, canyon, and royal.)

4-5 Years (48-59 months)

An older preschooler’s speech should include most speech sounds, though a few errors are still developmentally appropriate (like saying “wabbit” for “rabbit” or “fumb” for “thumb”).

Intelligibility: Children should be 75% intelligible at 5 years old.

By the time a child turns 5 years old, they should have acquired the following sounds:

- V. (As in van, seven, and five.)

- S. (As in sun, messy, and yes.)

- Z. (As in zoo, lizard, and buzz.)

- SH. (As in shoe, washing, and fish.)

- CH. (As in chin, teacher, and peach.)

- J. (As in jump, banjo, and cage.)

- L. (As in lid, salad, and hill.)

5-6 Years (60-71 months)

As a child enters grade school, their speech continues to improve and correctly saying speech sounds will be important to their success in school and their ability to read. Few errors in speech are now considered developmentally appropriate.

Intelligibility: Children should be 80% intelligible at 6 years old.

By the time a child turns 6 years old, they should have acquired the following sounds:

- Voiced TH. (As in they, weather, and smooth.)

- ZH. (As in measure, vision, and garage.)

- R. (As in run, zero, and far.)

6-7 Years (72-83 months)

A child’s speech at this age should keep improving, with one final sound learned during this year. By the time a child reaches their 7th birthday, most will have acquired all speech sounds, and you should be able to understand almost everything they say.

Intelligibility: Children should be 90% intelligible a little past 7 years old.

By the time a child turns 7 years old, they should have acquired the following sound:

- Voiceless TH. (As in think, python, and math.)
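The age milestones in this section can be collected into a small lookup table. The Python sketch below is a hypothetical helper, not a screening or diagnostic tool: it lists the sounds given in this section as acquired by a given birthday and which of them a caregiver has not yet heard the child use. These ages describe when about 90% of children have acquired a sound, not cut-off ages.

```python
# Sounds listed in the text as acquired by each birthday (age in years).
ACQUIRED_BY_AGE = {
    3: ["P", "B", "D", "M", "N", "H", "W"],
    4: ["T", "K", "G", "NG", "F", "Y"],
    5: ["V", "S", "Z", "SH", "CH", "J", "L"],
    6: ["voiced TH", "ZH", "R"],
    7: ["voiceless TH"],
}


def expected_sounds(age_years):
    """All sounds listed as acquired by the given birthday (cumulative)."""
    return [sound
            for age, sounds in sorted(ACQUIRED_BY_AGE.items()) if age <= age_years
            for sound in sounds]


def not_yet_noted(age_years, sounds_heard):
    """Hypothetical helper: expected sounds a caregiver has not yet heard the child use."""
    heard = set(sounds_heard)
    return [s for s in expected_sounds(age_years) if s not in heard]


for age in sorted(ACQUIRED_BY_AGE):
    print(age, len(expected_sounds(age)))

print(not_yet_noted(4, ["P", "B", "D", "M", "N", "H", "W", "T", "K"]))
```

The cumulative counts (7, 13, 20, 23 and 24 sounds) match the early, middle and late groupings described earlier.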

Speech Sound Development Chart

This speech sound development chart is a helpful visual guide to gauge how “on track” children are with developing speech in comparison to other children their same age.


Concerned About Your Child’s Speech Development?

These milestones are a good place to start to help track your child’s speech development. If your child hasn’t acquired speech sounds at the ages specified above and you are concerned about their speech, reach out to a speech-language pathologist.

Remember! These speech sound milestones are to be used as a general guide to help you determine if your child’s speech is developing appropriately. These norms are only one piece of the puzzle in determining whether a child’s speech is delayed and whether they may benefit from speech therapy. They are not set-in-stone “cut-off” ages for when your child should be perfectly saying each speech sound in conversation, as children continue to “master” these sounds after the ages specified above.

If you have concerns about your child’s speech, please contact us or speak with a local speech-language pathologist for further information and recommendations.


Crowe, K., & McLeod, S. (2020). Children’s English consonant acquisition in the United States: A review. American Journal of Speech-Language Pathology. https://doi.org/10.1044/2020_AJSLP-19-00168

Hustad, K. C., Mahr, T. J., Natzke, P., & Rathouz, P. J. (2021). Speech development between 30 and 119 months in typical children I: Intelligibility growth curves for single-word and multiword productions. Journal of Speech, Language, and Hearing Research. https://doi.org/10.1044/2021_JSLHR-21-00142

McLeod, S., & Crowe, K. (2018). Children’s consonant acquisition in 27 languages: A cross-linguistic review. American Journal of Speech-Language Pathology. https://doi.org/10.1044/2018_AJSLP-17-0100


© Copyright 2022

Speech Sounds

Octavia E. Butler

Welcome to the LitCharts study guide on Octavia E. Butler's Speech Sounds . Created by the original team behind SparkNotes, LitCharts are the world's best literature guides.


- Full Title: Speech Sounds

- When Written: 1983

- Where Written: Pasadena, CA

- When Published: 1983

- Literary Period: Post-modernism

- Genre: Science fiction, speculative fiction, Afrofuturism

- Setting: Between Los Angeles and Pasadena (California) in an imagined future, sometime in the 1980s

- Climax: Rye discovers two young children who are able to speak

- Antagonist: While the story has no single antagonist, Rye often finds herself struggling against people who have been most impaired by the illness. The illness itself could also be considered the story’s antagonist.

- Point of View: Third-person omniscient

Extra Credit for Speech Sounds

Prizewinner. In 1984, “Speech Sounds” won Octavia Butler her first Hugo Award for Best Short Story. This award helped her rise to prominence as a writer.

That Sounds Right: Hearing Objects Helps Us Recognize Them More Quickly


When we imagine a typical scene—for example, a kitchen—we tend to recall not just what a place looks like, but the sounds we associate with it. These audio associations can help us recognize objects more quickly, wrote Jamal R. Williams (University of California, San Diego) and Viola S. Störmer (Dartmouth College) in Psychological Science , such that we may identify a photo of a tea kettle more quickly when we hear the sound of water boiling or an “audio scene” of someone working in their kitchen.

“Despite the intuitive feeling that our visual experience is coherent and comprehensive, the world is full of ambiguous and indeterminate information,” wrote Williams and Störmer. “Our study reveals the importance of audiovisual interactions to support meaningful perceptual experiences in naturalistic settings.”

In the first of four experiments, Williams and Störmer tasked 20 undergraduate students with identifying obfuscated images of various objects while listening to congruent or incongruent sounds. In one trial, for example, participants might view an obscured image of a car that faded into view while listening to the congruent sound of a car driving by or the incongruent sound of a tea kettle boiling. Participants were instructed to press the space bar as soon as they were confident they could identify the image, at which point they were asked to identify which of two images had appeared in the task: the car or a random unrelated object like a cash register.

The researchers found that participants were able to identify objects more quickly and just as accurately while listening to the sound made by that object versus an unrelated sound. Similarly, in their second study of 30 students, Williams and Störmer found that participants also identified images of conceptually related but silent objects more quickly while listening to audio scenes commonly associated with that object—for example, identifying a park bench while listening to sounds from a park.

“This, for the first time, demonstrates that, even when the information is indirectly related to possibly hundreds of objects and not directly related to a single object, people can still leverage this information to facilitate their sensory processing,” Williams said in an interview with APS.


In their third experiment, Williams and Störmer put 30 students to the test with a slightly more difficult version of the same task: This time, instead of being asked to choose between two unrelated objects during the identification stage of the task (a car or a cash register), participants were presented with two objects from the same category (the car that appeared while the sound was played or a different car). As in the previous studies, participants were able to identify the correct object more quickly when a congruent sound was played while it faded into view. In their fourth study, Williams and Störmer found that a group of 30 students were able to distinguish between similar objects more quickly when the image aligned with the audio scenes played for them during the task.

This suggests that sounds can help us make fine-grained discriminations between objects, not just categorical ones, Williams said in an interview.

“Our perceptual system excels at navigating the complexities of the world by integrating information from different senses,” Williams and Störmer wrote. “Our results suggest that perception integrates contextual information at various levels of processing and can leverage general, gist-like information across sensory modalities to facilitate visual object perception.”

Participants’ ability to quickly integrate novel audiovisual information suggests that this process occurs relatively automatically, without volitional intent, but more research is needed to determine the extent to which automatic processes contribute to object recognition, the researchers concluded.

“These audiovisual interactions between naturalistic sounds and visual objects may be driven by a lifetime of experience and the computational architecture that gives rise to them is an automatic feature of a probabilistic mind,” Williams and Störmer wrote.


Reference

Williams, J. R., & Störmer, V. S. (in press). Cutting through the noise: Auditory scenes and their effects on visual object processing. Psychological Science.


Title: Separate in the Speech Chain: Cross-Modal Conditional Audio-Visual Target Speech Extraction

Abstract: The integration of visual cues has revitalized the performance of the target speech extraction task, elevating it to the forefront of the field. Nevertheless, this multi-modal learning paradigm often encounters the challenge of modality imbalance. In audio-visual target speech extraction tasks, the audio modality tends to dominate, potentially overshadowing the importance of visual guidance. To tackle this issue, we propose AVSepChain, drawing inspiration from the speech chain concept. Our approach partitions the audio-visual target speech extraction task into two stages: speech perception and speech production. In the speech perception stage, audio serves as the dominant modality, while visual information acts as the conditional modality. Conversely, in the speech production stage, the roles are reversed. This transformation of modality status aims to alleviate the problem of modality imbalance. Additionally, we introduce a contrastive semantic matching loss to ensure that the semantic information conveyed by the generated speech aligns with the semantic information conveyed by lip movements during the speech production stage. Through extensive experiments conducted on multiple benchmark datasets for audio-visual target speech extraction, we showcase the superior performance achieved by our proposed method.
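The abstract does not give the formula for its contrastive semantic matching loss. As a generic illustration only, the Python sketch below implements an InfoNCE-style contrastive loss, a widely used form that may differ from the paper's: each speech embedding should be most similar to the lip-movement embedding at the same index and less similar to the others. The embeddings and the temperature value are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce_loss(speech_embs, lip_embs, temperature=0.1):
    """Generic InfoNCE loss: speech_embs[i] should match lip_embs[i] (not the paper's exact loss)."""
    n = len(speech_embs)
    total = 0.0
    for i in range(n):
        logits = [cosine_similarity(speech_embs[i], lip_embs[j]) / temperature for j in range(n)]
        peak = max(logits)  # subtract the maximum for a numerically stable log-sum-exp
        log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_sum_exp - logits[i]  # cross-entropy with the matching pair as target
    return total / n


# Hypothetical 2-D embeddings for two utterances.
speech = [[1.0, 0.0], [0.0, 1.0]]
lips_matched = [[0.9, 0.1], [0.1, 0.9]]
lips_swapped = [[0.1, 0.9], [0.9, 0.1]]
print(f"matched: {info_nce_loss(speech, lips_matched):.3f}")
print(f"swapped: {info_nce_loss(speech, lips_swapped):.3f}")
```

A lower loss for the matched pairs is what such an objective rewards during training.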


RFK Jr. speaks candidly about his gravelly voice: ‘If I could sound better, I would’


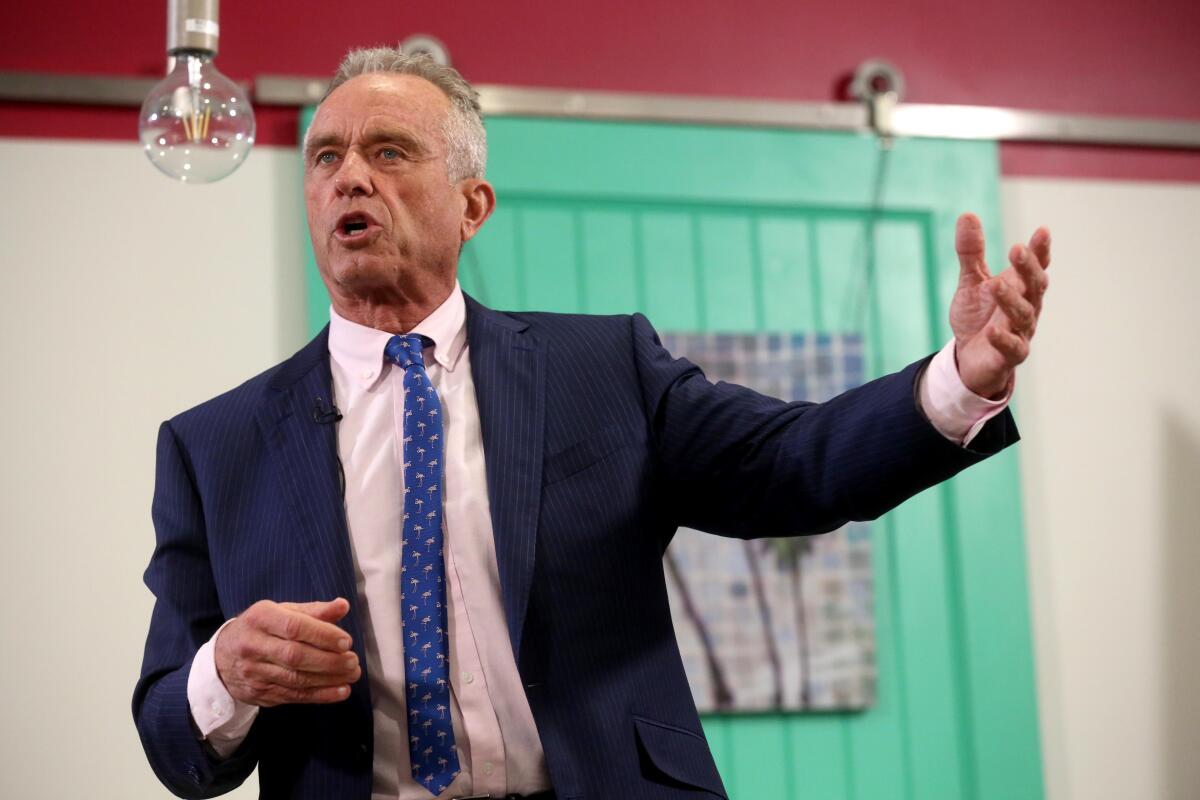


There was a time before the turn of the millennium when Robert F. Kennedy Jr. gave a full-throated accounting of himself and the things he cared about. He recalls his voice then as “unusually strong,” so much so that he could fill large auditoriums with his words. No amplification needed.

The independent presidential candidate recounts those times somewhat wistfully, telling interviewers that he “can’t stand” the sound of his voice today — sometimes choked, halting and slightly tremulous.

The cause of RFK Jr.’s vocal distress? Spasmodic dysphonia, a rare neurological condition in which an abnormality in the brain’s neural network causes involuntary spasms of the muscles that open or close the vocal cords.


“I feel sorry for the people who have to listen to me,” Kennedy said in a phone interview with The Times, his voice as strained as it sounds in his public appearances. “My voice doesn’t really get tired. It just sounds terrible. But the injury is neurological, so actually the more I use the voice the stronger it tends to get.”

Since declaring his bid for the presidency a year ago, the 70-year-old environmental lawyer has discussed his frayed voice only on occasion, usually when asked by a reporter. He told The Times: “If I could sound better, I would.”

SD, as it’s known, affects about 50,000 people in North America, although that estimate may be off because of undiagnosed and misdiagnosed cases, according to Dysphonia International, a nonprofit that organizes support groups and funds research.

As with Kennedy, cases typically arise in midlife, though increased recognition of SD has led to more people being diagnosed at younger ages. The disorder, also known as laryngeal dystonia, hits women more often than men.

Internet searches for the condition have spiked, as Kennedy and his gravelly voice have become staples on the news. When Dysphonia International posted an article answering the query, “What is wrong with RFK Jr.’s voice?,” it got at least 10 times the traffic of other items.

Those with SD usually have healthy vocal cords. Because of this, and the fact that it makes some people sound like they are on the verge of tears, some doctors once believed that the croaking or breathy vocalizations were tied to psychological trauma. They often prescribed treatment by a psychotherapist.

But in the early 1980s, researchers, including Dr. Herbert Dedo of UC San Francisco, recognized that SD was a condition rooted in the brain.

Researchers have not been able to find the cause or causes of the disorder. There is speculation that a genetic predisposition might be set off by some event — physical or emotional — that triggers a change in neural networks.

Some who live with SD say the spasms came out of the blue, seemingly unconnected to other events, while others report that it followed an emotionally devastating personal setback, an injury accident or a severe infection.

Kennedy said he was teaching at Pace University School of Law in White Plains, N.Y., in 1996 when he noticed a problem with his voice. He was 42.

His campaigns for clean water and other causes in those days meant that he traveled the country, sometimes appearing in court or giving speeches. He lectured, of course, in his law school classes and co-hosted a radio show. Asked whether it was hard to hear his voice gradually devolve, Kennedy said: “I would say it was ironic, because I made my living on my voice.”

“For years people asked me if I had any trauma at that time,” he said. “My life was a series of traumas … so there was nothing in particular that stood out.”


Kennedy was just approaching his 10th birthday when his uncle, President John F. Kennedy, was assassinated. When Kennedy was 14, his father, Robert F. Kennedy, was fatally shot in Los Angeles on the night he won California’s 1968 Democratic presidential primary.

RFK Jr. also lost two younger brothers: David died at age 28 of a heroin overdose in 1984 and Michael died in 1997 in a skiing accident in Aspen, Colo., while on the slopes with family members, including then-43-year-old RFK Jr.

It was much more recently, and two decades after the speech disorder cropped up, that Kennedy came up with a theory about a possible cause. Like many of his highly controversial and oft-debunked pronouncements in recent years, it involved a familiar culprit — a vaccine.

Kennedy said that while he was preparing litigation against the makers of flu vaccines in 2016, his research led him to the written inserts that manufacturers package along with the medications. He said he saw spasmodic dysphonia on a long list of possible side effects. “That was the first I ever realized that,” he said.

Although he acknowledged there is no proof of a connection between the flu vaccines he once received annually and SD, he told The Times he continues to view the flu vaccine as “at least a potential culprit.”


Kennedy said he no longer has the flu vaccine paperwork that triggered his suspicion, but his campaign forwarded a written disclosure for a later flu vaccine. The 24-page document lists commonly recognized adverse reactions, including pain, swelling, muscle aches and fever.

It also lists dozens of less common reactions that users said they experienced. “Dysphonia” is on the list, though the paperwork adds that “it is not always possible to reliably estimate their frequency or establish a causal relationship to the vaccine.”

Public health experts have slammed Kennedy and his anti-vaccine group, Children’s Health Defense, for advancing unsubstantiated claims, including that vaccines cause autism and that COVID-19 vaccines caused a spike of sudden deaths among healthy young people.

Dr. Timothy Brewer, a professor of medicine and epidemiology at UCLA, said an additional study cited by the Kennedy campaign to The Times referred to reported adverse reactions that were unverified and extremely rare.

“We shouldn’t minimize risks or overstate them,” Brewer said. “With these influenza vaccines there are real benefits that so far outweigh the potential harm cited here that it’s not worth considering those types of reactions further.”

Anyone with concerns about influenza vaccine side effects should consult their physician, he said.

So what does research suggest about SD?

“We just don’t know what brings it on,” said Dr. Michael Johns, director of the USC Voice Center and an authority on spasmodic dysphonia. “Intubation, emotional trauma, physical trauma, infections and vaccinations are all things that are incredibly common. And it’s very hard to pin causation on something that is so common when this is a condition that is so rare.”

No two SD sufferers sound the same. For some, spasms push the vocal cords too far apart, causing breathy and nearly inaudible speech. For others, such as Kennedy, the larynx muscles push the vocal cords closer together, creating a strained or strangled delivery.

“I would say it was a very, very slow progression,” Kennedy said last week. “I think my voice was getting worse and worse.”

There were times when mornings were especially difficult.

“When I opened my mouth, I would have no idea what would come out, if anything,” he said.

One of the most common treatments for the disorder is injecting Botox into the muscles that bring the vocal cords together.

Kennedy said he received Botox injections every three or four months for about 10 years. But he called the treatment “not a good fit for me,” because he was “super sensitive to the Botox.” He recalled losing his voice entirely after the injections, before it would return days later, somewhat smoother.

Looking for a surgical solution, Kennedy traveled to Japan in May 2022. Surgeons in Kyoto implanted a titanium bridge between his vocal cords (also known as vocal folds) to keep them from pressing together.

He told a YouTube interviewer last year that his voice was getting “better and better,” an improvement he credited to the surgery and to alternative therapies, including chiropractic care.

The procedure has not been approved by regulators in the U.S.

Johns cautioned that titanium bridge surgeries haven’t been consistently effective or durable and said there have been reports of the devices fracturing, despite being implanted by reputable doctors. He suggested that the more promising avenue for breakthroughs will be in treating the “primary condition, which is in the brain.”

Researchers are now trying to find the locations in the brain that send faulty signals to the larynx. Once those neural centers are located, doctors might use deep brain stimulation, which works like a pacemaker for the brain, to block the abnormal signals that cause vocal spasms. (Deep brain stimulation is used to treat patients with Parkinson’s disease and other afflictions.)


Long and grueling presidential campaigns have stolen the voice of many candidates. But Kennedy said he is not concerned, since his condition is based on a neural disturbance, not one in his voice box.

“Actually, the more I use the voice, the stronger it tends to get,” he said. “It warms up when I speak.”

Kennedy was asked whether the loss of his full voice felt particularly frustrating, given his family’s legacy of ringing oratory. He replied, his voice still raspy, “Like I said, it’s ironic.”


James Rainey has covered multiple presidential elections, the media and the environment, mostly at the Los Angeles Times, which he first joined in 1984. He was part of Times teams that won three Pulitzer Prizes.


Jewish NYU professor sounds off on double standard for anti-Israel protesters

Jewish New York University professor Scott Galloway criticized anti-Israel protesters and antisemitism on college campuses, arguing there was a double standard when it came to hate speech directed at Jewish people.